A Case of Mobile App Reviews as a Crowdsource

Authors: Mubasher Khalid, Usman Shehzaib, Muhammad Asif

Journal: International Journal of Information Engineering and Electronic Business (IJIEEB)

Issue: vol. 7, no. 5, 2015.


Crowdsourcing is a well-known technique for obtaining innovative ideas and soliciting contributions from a large online community, particularly in e-business. It is changing current business techniques and practices, and it is equally popular in the analysis and design of m-business services. Mobile app stores give their users an opportunity to participate and contribute to the growth of the mobile app market. App reviews usually contain active, heterogeneous, real-life user experience with a mobile app, which can be used to improve the app's quality. To the best of our knowledge, the strength of mobile app reviews as a crowdsource is not yet fully recognized or understood by the community. In this paper, we analyze a crowdsourcing reference model to find out which features of a crowdsource are present in, and related to, our case of mobile app reviews as a crowdsource. We analyze and discuss each construct of the reference model from the perspective of mobile app reviews. Moreover, we discuss app reviews as a crowdsourcing technique using the four pillars of the reference model: the crowd, the crowdsourcer, the crowdsourced task, and the crowdsourcing platform. We also identify and partially validate certain constructs of the model and highlight the significance of app reviews as a crowdsource based on existing literature. Only one crowdsourcing reference model is used in this study, which is a limitation. Future work can compare the results with other crowdsourcing reference models to gain better insight into app reviews as a crowdsource. We believe that understanding app reviews as a crowdsourcing technique can lead to further development of the mobile app market and open new research opportunities.

Keywords: App reviews, crowdsourcing, crowd capital, user experience, m-business


Published online September 2015 in MECS. DOI: 10.5815/ijieeb.2015.05.06

Crowdsourcing is a developing concept with diverse application domains. It provides a model in which a large number of people contribute to an activity, especially online. The activity can be solving a distributed problem [1], generating innovative ideas [2], or contributing to bring about some result. Both the crowd and the crowdsourcer can be individuals or groups of people. Upon completing a task, the crowd may receive a reward in various forms, such as money or other incentives. The model can reduce the time and cost previously required to solve a problem [1].

There are various definitions of crowdsourcing [1]. Estellés-Arolas and González-Ladrón-de-Guevara [3] define crowdsourcing as follows:

“Crowdsourcing is a type of participative online activity in which an individual, an institution, a non-profit organization, or company proposes to a group of individuals of varying knowledge, heterogeneity, and number, via a flexible open call, the voluntary undertaking of a task. The undertaking of the task, of variable complexity and modularity, and in which the crowd should participate bringing their work, money, knowledge and/or experience, always entails mutual benefit. The user will receive the satisfaction of a given type of need, be it economic, social recognition, self-esteem, or the development of individual skills, while the crowdsourcer will obtain and utilize to their advantage that what the user has brought to the venture, whose form will depend on the type of activity undertaken.”

Crowdsourcing has been used in a variety of domains to harness dispersed knowledge and the wisdom of the crowd. Wikipedia is the most famous example of a crowdsourcing application [4]. Other domains include medicine [5, 6], marketing and business [2, 7], and environmental sciences [8, 9]. Crowdsourcing has become a popular method for assembling the efforts of IT-mediated individuals [10]. In the past few years, it has also been used for the analysis and design of information systems, where users are involved in evaluating and improving software [11-14].

Mobile app markets are growing rapidly in terms of both apps and users, and millions of app reviews are being generated through app stores. App stores (Google Play Store, iTunes App Store, Microsoft Store) provide a software distribution model and a platform where users give feedback in the form of ratings and reviews. User behavior has changed from passive to active: users now share their real experiences in reviews and ratings. This kind of feedback can help other users decide whether or not to use an app.

Crowdsourcing also provides a way for open innovation and value co-creation. We think that app stores provide a crowdsourcing platform that can be used in a variety of ways. We posit that mobile app reviews are a crowdsourcing technique that can be used to structure diverse knowledge resources contributed by scattered individuals and mediated through app stores. A mobile app store provides a platform where a large number of individual contributors build hard and planned sources of data about real-life experience with mobile apps. In this study, we analyze and discuss how mobile app reviews can be used as a crowdsource to obtain innovative ideas, requirements, and useful feedback from the users of mobile apps.

Mobile app reviews have been studied as a source for understanding user requirements. Groen et al. [15] proposed a crowd-based requirements engineering approach that collects feedback through direct interfaces and social collaboration and analyzes it with data mining techniques.

Prpić et al. [16] classified crowdsourcing tasks into four types: crowd voting, micro-task, idea, and solution crowdsourcing. App reviews fall into two of these types: idea and solution crowdsourcing. Public review systems in which users convey their satisfaction are common in other domains, but visible crowdsourced opinions are relatively new to the software distribution model, and their effects are still not well understood [17].

In this paper, we discuss a crowdsourcing reference model [18] and analyze how it can be used to understand mobile app reviews as a crowdsourcing technique. We discuss each construct of the reference model from the perspective of mobile app reviews and analyze whether specific features of the model apply in our case.


II. Crowdsource Reference Model

In this model [18], the authors reviewed the crowdsourcing literature and developed a taxonomy of four pillars: the crowd, the crowdsourcer, the crowdsourced task, and the crowdsourcing platform. The taxonomy presents a variety of views rather than trying to reach consensus. The authors also elaborate on the meaning of the features and on the challenges these features introduce when developing crowdsourcing platforms. In our study, we use this reference model to present mobile app reviews as a crowdsourcing technique, applying it and assessing its applicability to the case of mobile app reviews.

The reference model has the following four pillars:

A. The crowd: the group of people who participate in a crowdsourcing task.

B. The crowdsourcer: the entity, such as a person, institution, or organization, that draws on the heterogeneous knowledge and wisdom of the crowd for an activity.

C. The crowdsourced task: the crowdsourcing activity that the crowdsourcer requires from the crowd.

D. The crowdsourcing platform: the system where the task is performed. This system may or may not involve software.

III. The Four Elements of the Crowdsourcing Reference Model: A Case of App Reviews

This section analyzes app reviews from the perspective of the crowdsourcing reference model [18].

Crowdsourcing is a well-known technique used in different domains for purposes such as co-creation and open innovation. It also affects information systems development, and this process can be further improved. Prahalad and Ramaswamy [21] introduced the idea of co-creation of value and meaning, and found that users can exert influence in every part of the business system and interact with service providers to co-create value. Guzman and Maalej [22] discussed how user reviews can play a vital role in eliciting user requirements. They also proposed an approach to analyze explicit user feedback submitted as informal text. The approach helps app analysts and developers identify useful feedback, quantitatively evaluate opinions about individual features, and group popular feature requests. According to the authors, it can support the crowdsourcing of requirements.
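As a rough illustration of what quantitatively evaluating opinions about individual features can look like, the Python sketch below counts how many reviews mention each feature and averages their star ratings. It is a naive sketch under stated assumptions, not the approach of Guzman and Maalej [22]: the `Review` type, the hand-made keyword list, and the use of star ratings in place of sentiment scores are hypothetical choices made for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Review:
    text: str
    stars: int  # star rating from 1 to 5


def summarize_features(reviews, feature_keywords):
    """Return {feature: (mention_count, mean_stars)}, most mentioned first.

    feature_keywords maps a feature name to keywords that signal it.
    The keyword list is a hypothetical, hand-made input; it stands in
    for the automatic feature extraction used in published approaches.
    """
    stars_by_feature = defaultdict(list)
    for review in reviews:
        text = review.text.lower()
        for feature, keywords in feature_keywords.items():
            # A review counts at most once per feature.
            if any(keyword.lower() in text for keyword in keywords):
                stars_by_feature[feature].append(review.stars)
    summary = {
        feature: (len(stars), round(sum(stars) / len(stars), 2))
        for feature, stars in stars_by_feature.items()
    }
    # Sort by mention count, descending; ties keep first-seen order.
    return dict(sorted(summary.items(), key=lambda item: item[1][0], reverse=True))


if __name__ == "__main__":
    sample = [
        Review("Please add a search feature for airports", 4),
        Review("Search is slow and the alarm never rings", 2),
        Review("Love the alarm, works great", 5),
    ]
    print(summarize_features(sample, {"search": ["search"], "alarm": ["alarm"]}))
```

Star ratings are only a coarse proxy for opinion: the second sample review mentions both features, so its low rating lowers both averages even if it criticizes only one of them. Feature-level sentiment analysis is meant to resolve this kind of ambiguity.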

By analyzing app reviews, useful information can be extracted from the crowd of users. User feedback in the form of app reviews can help in understanding the requirements for the next update of an app. Pagano and Maalej [13] discussed that the number of users who rate and review apps in app stores is increasing and that this feedback affects the number of downloads. Other information that can be obtained from reviews includes bug reports, user experience, and feature requests; this information can help developers crowdsource requirements.

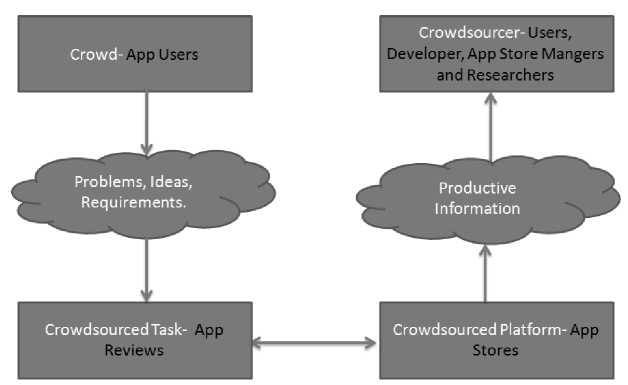

Figure 1 shows a flowchart of the complete crowdsourcing process with its four pillars. We discuss the four pillars construct by construct.

Fig. 1. The process of crowdsourcing app reviews

A. The Crowd- Users

The crowd is a group of people who participate in a crowdsourcing task. In the case of mobile app reviews, the crowdsourcing task is performed by app users who write comments and give star ratings from 1 to 5. Millions of app users write reviews and participate in this crowdsourcing activity; on Google Play, this requires a valid Google+ account.

• Diversity: Diversity is the state of being different or varied. In our case, mobile app reviews are collected without restricting the location or background of users, which demonstrates spatial diversity. App reviews are not selected on the basis of gender or age, so they exhibit gender and age diversity. App reviews range from very informal to formal and may contain only sentiment, a bug report, or an innovative idea, written by users with varied experience; they therefore exhibit expertise diversity.

• Unknown-ness: Unknown-ness is not present in our case, because app reviews are posted publicly. Anyone can learn about the crowd taking part in the crowdsourcing process; on Google Play, members of the crowd can be contacted through their Google+ profiles.

• Largeness: Largeness means high magnitude or large numbers. Approximately 1.4 million apps are available on Google Play Store [23], and a single app, WhatsApp, has more than 25 million reviews. Because a user is allowed to review an app only once, each review corresponds to a unique app user. This shows how large the crowd for a single app can be; largeness is clearly exhibited by the number of reviews available for an app.

• Undefined-ness: Undefined-ness means having no fixed limits or boundaries. In crowdsourcing of app reviews, the crowd is random, with no selection criteria, and heterogeneous regardless of ability, workplace, or any other defining attribute. Undefined-ness is therefore present in mobile app reviews.

• Suitability: Suitability means being appropriate for some purpose or condition. In crowdsourcing app reviews, crowd suitability concerns whether the crowd is appropriate for the review task and what motivates it. Competence is not essential for writing a review, but a review from a committed user can add value for the crowdsourcer. Collaboration within the crowd is not required, because each user writes a separate review and only one review per app. Volunteer reviewers are needed to write comments and give star ratings that contribute to the improvement of mobile apps. Motivation is present whenever a user writes a review. Platzer [24] discussed 16 basic desires and the corresponding intrinsic feelings that motivate users to write reviews. Mental satisfaction is present when a review contains a complaint about a mobile app. Fu et al. [25] analyzed over 13 million app reviews to understand why users like or dislike mobile apps. Guzman and Maalej [22] performed sentiment analysis of app reviews to find out what users want to express about mobile apps. Users' sentiments drive them to perform this crowdsourcing task for mental satisfaction, knowledge sharing, and love of the community. Because the crowd can contribute substantially to app development, self-esteem is partially present when users are confident that app developers read their reviews. Generally, writing app reviews does not help reviewers develop any particular skill.

Table 1 presents an overview of the features of crowd that are present or not present in our case of app reviews.

B. The Crowdsourcer: Users, Developers, App Store Owners and Researchers

The crowdsourcer is the body that benefits from the crowdsourcing task. In our case of app reviews, four main stakeholders can benefit from the crowdsourcing activity:

a) Users: App users can obtain crowdsourced information about apps, such as different star ratings and

Table 1. Features of Crowd present in App reviews case. Yes/No/Partially

b) Developers: Developers can use the information in app reviews to improve their apps through better updates: removing bugs, adding new features, and learning about users' concerns [28]. Developers need crowdsourced information from reviews to enhance app quality and generate more revenue.

c) App Store Owners: App store owners such as Google, Apple, and Microsoft can use reviews to filter out malicious and otherwise problematic apps [25]. This kind of market is called a user-driven market.

d) Researchers: Researchers can use app reviews to better understand users' perspectives and to help developers avoid mistakes [29]. Researchers can also provide detailed analyses of reviews and classifications of the crowdsourced information in app stores.

• Incentive Provision: An incentive is something that encourages someone to perform a task or to increase their effort. A crowdsourcer may offer the crowd different kinds of incentives. In crowdsourcing, financial incentives are well known, but they are not present in the app reviews case. Social incentives can be a reason to write a review: a review gains public recognition when other users give it a thumbs-up. Some apps ask users to give a five-star rating and in return offer a kind of entertainment incentive [30].

• Open Call: An open call means that anyone who wishes to perform a task can attempt it. In crowdsourcing, an open call is a task open to everyone regardless of background. App store owners issue an open call to app users to give feedback and thereby participate in the crowdsourcing task.

• Ethicality provision: This means conducting the activity morally and ethically. Three provisions are expected for a crowdsourcing activity to be ethical. First, the crowd has the right to stop the activity whenever it wishes. This feature is present in the app reviews case, since users can edit or delete a review at any time, and such opting out does not affect the crowdsourcing because a sufficient number of reviews are present on app stores. Second, the app store owner, as a crowdsourcer, shares the results of the crowdsourcing activity with the crowd in the form of star ratings and comments. Third, writing an app review is safe and causes no harm to the crowd.

Table 2 summarizes the features of the crowdsourcer that are present or not present in our case of app reviews.

Table 2. Features of Crowdsourcer present in App reviews case. Yes/No/Partially

| The Crowdsourcer | Present |
|---|---|
| Incentives Provision | Yes |
| • Financial Incentives | No |
| • Social Incentives | Partially |
| • Entertainment Incentives | Partially |
| Open Call | Yes |
| Ethicality Provision | No |
| • Opt-out Procedure | Yes |
| • Feedback to Crowd | Yes |
| • No Harm to Crowd | Yes |

C. The Crowdsourced Task- Reviews

The crowdsourced task in this case is the collection of reviews through the crowdsourcing platform. The platform invites the crowd of users to share their experience by writing feedback and by giving a quantified rating. The task requires users to have first-hand experience with the app and draws on their innovative ideas and suggestions.

quantity; hence this activity of reviews can’t be outsourced.

• Modularity: Modularity is the extent to which a task can be subdivided into manageable, standard micro-tasks. Writing a review is an atomic task that cannot be divided into micro-tasks, because it belongs to an individual. Each individual writes a review independently, contributing one atomic task.

• Complexity: Complexity is the state of being complicated or hard to understand. Writing a review, however, is a simple task that requires no particular skill.

• Automation Characteristics: Automation is the operation of a process by self-operating devices, without human effort. A crowdsourced task is generally one that is expensive or difficult to automate. In the case of app reviews, the task cannot be automated because it requires user feedback about an app, and a machine cannot share a user's experience.

• User-driven: An activity managed by users is called user-driven. The crowdsourced task of writing reviews is purely user-driven. Feedback in the form of reviews provides user-driven quality evaluation and marketing [13]. Developers welcome app reviews for finding bugs and evaluating an app for improved updates. The crowd of app users has shown the ability to identify bugs, evaluate apps, and solve developers' problems. A user acting as a crowdsourcer may skim reviews to decide which app to install based on other users' experience [31]. Innovative ideas can be extracted from creative reviews and used to develop improved versions of an app [13, 22]. App users can take part in value co-creation when they write about their real-life experience, helping the app development process. Chen et al. [32] discussed how informative content in raw app store reviews can help developers improve their apps in future updates.

Table 3 summarizes the features of crowdsourcing tasks that are present or not present in our case of app reviews.

D. The Crowdsourcing Platform- App Store

The crowdsourcing platform is the place where the task actually occurs. The vast majority of crowd-engaging IT uses a web-based platform, a mobile platform, or a combination of both for crowdsourcing [16]. The following features of the crowdsourcing platform are identified and discussed:

• Crowd-related Interactions: These are the interactions between the crowd and the platform where the crowdsourcing activity is performed. The reference model lists some of these interactions, but they are not limited to those listed. The crowd is not specifically enrolled to perform crowdsourcing.

Table 3. Features of Crowdsourced Task present in App reviews case. Yes/No/Partially

• Crowdsourcer-related interactions: Interactions between the crowdsourcer and the crowdsourcing platform can be of different types. In our case, there are four stakeholders: users, developers, app store managers, and researchers. Some features of the reference model apply to our case of app reviews and some do not. Developers are enrolled, but users, app store managers, and researchers are not, so this feature is partially present. The crowdsourcer does not authenticate users to perform the task on the app store. Users write reviews by their own choice; the task is not broadcast. The crowdsourcing platform assists only developers, by letting them view various statistics about their own apps; Google Play Developer Console, for example, provides this facility. Other stakeholders can view limited statistics on the app store, such as rating statistics. There is no time limit for the crowdsourcing task; users can write reviews whenever they wish. Because no financial incentives are given, there is no price negotiation. Results can be verified on app stores, where any stakeholder can easily view star rating statistics and comments. In the case of Android, the platform gives the crowdsourcer feedback about the activities in which the crowd participates through Google Play Developer Console.

• Task-Related Facilities: Some of the facilities a crowdsourcing platform can provide are discussed here; the list is not exhaustive. Results of the crowdsourcing task, in the form of reviews, are aggregated on app stores to show an app's overall rating [29]. Results are not hidden; anyone can view them on the app store. The history of the crowdsourced task is saved on the platform, and a person can write only one review per app, although that review can be edited at any time. There is no quality or quantity threshold for reviews; users can write whatever they want for their own satisfaction and for app improvement.

• Platform-related facilities: A crowdsourcing platform provides facilities so that the task can be performed smoothly and properly. App stores provide an online environment in which users write reviews, and they apply checks to prevent the platform from being misused; fake and inappropriate reviews can influence an app's placement in Google Play [30]. The app store provides feasible interaction between

Table 4. Features of Crowdsourcing Platform present in App reviews case. Yes/No/Partially

Table 4 summarizes the features of the crowdsourcing platform that are present or not present in our case of app reviews. The facilities provided by the app store platform are not limited to the features mentioned here.
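The platform rules described in this section (at most one review per user per app, reviews that can be edited or deleted at any time, and ratings aggregated into an overall score and rating statistics) can be illustrated with a small in-memory model. The Python sketch below is hypothetical and does not describe how Google Play or any other app store is actually implemented; in particular, stores do not publish their exact aggregation method, so the plain arithmetic mean and the star histogram used here are assumptions.

```python
from collections import Counter


class ReviewStore:
    """Hypothetical in-memory review store: one review per (user, app) pair."""

    def __init__(self):
        # Maps (user_id, app_id) -> (stars, comment).
        self._reviews = {}

    def write(self, user_id, app_id, stars, comment=""):
        """Create the user's single review of an app, or edit it if it exists."""
        if not 1 <= stars <= 5:
            raise ValueError("star rating must be between 1 and 5")
        self._reviews[(user_id, app_id)] = (stars, comment)

    def delete(self, user_id, app_id):
        """Opt-out procedure: remove the user's review if there is one."""
        self._reviews.pop((user_id, app_id), None)

    def _stars_for(self, app_id):
        return [
            stars
            for (_user, app), (stars, _comment) in self._reviews.items()
            if app == app_id
        ]

    def average_rating(self, app_id):
        """Aggregated result visible to everyone: mean stars, or None if unrated."""
        stars = self._stars_for(app_id)
        return round(sum(stars) / len(stars), 1) if stars else None

    def histogram(self, app_id):
        """Rating statistics: number of reviews at each star level 1-5."""
        counts = Counter(self._stars_for(app_id))
        return {level: counts.get(level, 0) for level in range(1, 6)}


if __name__ == "__main__":
    store = ReviewStore()
    store.write("u1", "app", 5, "great")
    store.write("u2", "app", 2, "crashes on start")
    store.write("u1", "app", 4, "still good after the update")  # an edit
    print(store.average_rating("app"), store.histogram("app"))
```

Because `write` overwrites the stored entry for the same (user, app) pair, editing never inflates the review count, which mirrors the one-review-per-user rule behind the largeness argument in Section III.A.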


IV. Discussion

Some of the significant features identified in the case of mobile app reviews are discussed here and shown in Figure 2.

• The Crowd: Anyone can be a member of the crowd, irrespective of gender, ethnicity, religion, or skill level in a specific area, which makes the crowd diverse. There is no limit on how many people can participate in this crowdsourcing activity. There is also no constraint on selecting the crowd, and no prior knowledge is required to perform the task. Members of the crowd like to share their experience and knowledge and are motivated to guide others. Although others may criticize them and challenge their knowledge, they are willing to face this because they gain satisfaction from sharing.

• The Crowdsourcer: The crowdsourcer gives an open call to participants to contribute at any time. Users can give feedback and share their real-life experience after downloading an app. Feedback in the form of reviews is used by people from different fields, who act as crowdsourcers. Buggy apps can cause trouble on the app store, and crowdsourced app reviews can be used to check which apps request unnecessary permissions or engage in other suspicious activities. This task demands that ethics be followed, but our findings suggest that ethics are not implemented in their true spirit: some reviews violate the code of ethics by insulting others with humiliating comments on their shared experience. However, reviews cannot be used for personal promotion or business campaigns.

• The Crowdsourced Task: The crowdsourced task of mobile app reviews consists of atomic, simple tasks: giving a rating and writing a review. This task cannot be performed by computers, because it requires natural intelligence and real-life experience. App users can participate in value co-creation when they write about their real-life experience, helping the app development process.

• The Crowdsourcing Platform: The crowdsourcing platform can authenticate a user before the user reviews an installed app, and each user can review an app only once. The results of reviews are aggregated automatically and shown on the platform. App stores provide an online environment that is attractive and interactive for both the crowd and the crowdsourcer.

[Figure 2 panels. The Crowd: Diversity, Largeness, Undefined-ness, Motivation. The Crowdsourcer: Open Call, Ethicality Provision. The Crowdsourced Task: Atomic Tasks, Simple Tasks, Complex for Computers, User-driven. The Crowdsourcing Platform: Crowd Authentication, Aggregate Results, Online Interactive and Attractive Environment.]

Fig. 2. Some of the important features for mobile app reviews.

Crowdsourcing activities performed by app users. The following is a brief overview of a few crowdsourcing activities that an app user can perform:

A. Feature Request

Users can make feature requests, which can be useful to other users and can help developers improve their apps.

“Loved the previous version, but the fact I can't place normal calls anymore makes using this app really annoying. When on the go VoIP call feature isn't handy at all! Please let the user switch to Normal call!”

“Please add a search feature for Country, Airline Name and Airport Name for easily find something without scrolling one by one.”

“Would be nice to have an alarm that would let me know when the game is starting. I did not find this type of feature.”

B. Recommendation for Users

Many users give recommendations to other users based on their experience with an app. This makes the download decision easier for new users.

“Supported by many popular forums, Forum Runner is the essential application for anyone whom spends their spare time chatting to other users online about a topic of interest. Highly recommended!”

“I passed my test today! This app was great as its interactive rather than just reading a book. I would highly recommend downloading this app!”

“I will advice all those who want to download this app shouldn't because it does not even work. All those graphics there is not featured in the app. I regret downloading this app.”

C. Problem Spotting

App users spot problems, which helps developers fix bugs and informs other users about the app's quality.

“Not good at all. This app doesn't show the description of any product. You can see the description. Using your browser but this doesn't show it please fix this problem”

“This app is giving me way too many problems. It worked perfectly fine on my iPod and now that I've brought it on GS3 it's been terrible. Not adding the filters on the picture, the picture disappearing and giving me a blank screen, or just crashing, over and over again. I'm tired of it. Please fix this”

D. Suggestion for Developer

App users give developers suggestions on how to improve their app, for example by adding or removing features.

“Great! It works great! A suggestion for improvement would be to let the user choose frequency and intensity freely with two numbers instead of tapping on a grid!”

“I have been using this launcher for years. No reason to ever switch. I do have a suggestion. I would really like to add shortcuts to an app group in my app drawer to home”

Identifying which issues occur in an app and how to improve it in new updates is a difficult task. Developers need to find out what users require, such as restoring a feature that was present in a previous version but removed in a new update, or adding a newly requested feature. Iacob and Harrison [31] analyzed app reviews to find out which reviews request features. Understanding app reviews as a crowdsource can therefore play a key role in addressing design issues related to the future development of personalized mobile services [32].
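The four activity types above (feature requests, recommendations, problem spotting, and suggestions) can often be told apart, at least roughly, by the wording users choose. The Python sketch below assigns a review to one of these categories using hand-picked cue phrases. The cue lists and their order are hypothetical, and this rule-based approach is only an illustration; it is not the method of Iacob and Harrison [31], and practical classifiers are usually trained on labelled reviews.

```python
import re

# Hypothetical cue patterns per category, checked in this order.
CATEGORY_CUES = [
    ("feature request", [r"\bplease add\b", r"\bwould be nice\b", r"\bfeature\b"]),
    ("suggestion for developer", [r"\bsuggestion\b", r"\bwould really like\b"]),
    ("problem spotting", [r"\bfix\b", r"\bcrash", r"\bdoesn't\b", r"\bproblems?\b"]),
    ("recommendation for users", [r"\brecommend", r"\badvi[cs]e\b"]),
]


def categorize(review_text):
    """Return the first category whose cue patterns match, or 'other'."""
    text = review_text.lower()
    for category, patterns in CATEGORY_CUES:
        if any(re.search(pattern, text) for pattern in patterns):
            return category
    return "other"


if __name__ == "__main__":
    for review in [
        "Please add a search feature for Country, Airline Name and Airport Name",
        "I do have a suggestion. I would really like to add shortcuts",
        "This app doesn't show the description. Please fix this problem",
        "I would highly recommend downloading this app!",
    ]:
        print(categorize(review), "<-", review)
```

Because categories are checked in a fixed order, a review that both reports a bug and asks for a feature receives only the first matching label; a multi-label classifier would be needed to capture both.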

V. Conclusions

Crowdsourcing has become crucial to the design, development, and innovation of the user-driven mobile app market. App stores provide a platform for app distribution and user feedback, and user reviews are studied and analyzed by researchers and practitioners for a variety of purposes. However, the understanding of mobile app reviews as a crowdsource is limited. In this study, we used a crowdsourcing framework to analyze mobile app reviews as a crowdsource. We discussed different constructs of the framework in the context of mobile app reviews and assessed the framework's suitability for this case. In our view, app reviews can be a valuable crowdsource for the stakeholders of the mobile app market. In future work, we intend to develop a crowdsourcing framework for better understanding and use of mobile app reviews.

References

[1] Brabham, D.C., Crowdsourcing as a model for problem solving: an introduction and cases. Convergence: The International Journal of Research into New Media Technologies, 2008. 14(1): p. 75-90.

[2] Chanal, V. and M.-L. Caron-Fasan, How to invent a new business model based on crowdsourcing: the Crowdspirit® case. In EURAM (Ljubljana, Slovenia), 2008.

[3] Estellés-Arolas, E. and F. González-Ladrón-de-Guevara, Towards an integrated crowdsourcing definition. Journal of Information Science, 2012. 38(2): p. 189-200.

[4] Wikipedia. http://www.wikipedia.org/.

[5] Foncubierta Rodríguez, A. and H. Müller, Ground truth generation in medical imaging: a crowdsourcing-based iterative approach. In Proceedings of the ACM Multimedia 2012 Workshop on Crowdsourcing for Multimedia. 2012. ACM.

[6] Yu, B., et al., Crowdsourcing participatory evaluation of medical pictograms using Amazon Mechanical Turk. Journal of Medical Internet Research, 2013. 15(6): p. e108.

[7] Whitla, P., Crowdsourcing and its application in marketing activities. Contemporary Management Research, 2009. 5(1).

[8] Gao, H., et al., Harnessing the crowdsourcing power of social media for disaster relief. 2011, DTIC Document.

[9] Fraternali, P., et al., Putting humans in the loop: Social computing for Water Resources Management. Environmental Modelling & Software, 2012. 37: p. 68-77.

[10] Prpić, J., A. Taeihagh, and J. Melton, A Framework for Policy Crowdsourcing. 2014.

[11] Ali, R., et al., Social adaptation: when software gives users a voice. 2012.

[12] Gebauer, J., Y. Tang, and C. Baimai, User requirements of mobile technology: results from a content analysis of user reviews. Information Systems and e-Business Management, 2008. 6(4): p. 361-384.

[13] Pagano, D. and W. Maalej, User feedback in the appstore: An empirical study. In Requirements Engineering Conference (RE), 2013 21st IEEE International. 2013. IEEE.

[14] Pagano, D. and B. Bruegge, User involvement in software evolution practice: a case study. In Proceedings of the 2013 International Conference on Software Engineering. 2013. IEEE Press.

[15] Groen, E.C., J. Doerr, and S. Adam, Towards Crowd-Based Requirements Engineering: A Research Preview. In Requirements Engineering: Foundation for Software Quality. 2015, Springer. p. 247-253.

[16] Prpić, J., et al., How to work a crowd: Developing crowd capital through crowdsourcing. Business Horizons, 2015. 58(1): p. 77-85.

[17] Hoon, L., et al., A preliminary analysis of vocabulary in mobile app user reviews. In Proceedings of the 24th Australian Computer-Human Interaction Conference. 2012. ACM.

[18] Hosseini, M., et al., The four pillars of crowdsourcing: A reference model. In Research Challenges in Information Science (RCIS), 2014 IEEE Eighth International Conference on. 2014. IEEE.

[19] Zhao, Y. and Q. Zhu, Evaluation on crowdsourcing research: Current status and future direction. Information Systems Frontiers, 2014. 16(3): p. 417-434.

[20] Ross, J., et al., Who are the crowd workers? Shifting demographics in Mechanical Turk. In CHI '10 Extended Abstracts on Human Factors in Computing Systems. 2010. ACM.

[21] Prahalad, C.K. and V. Ramaswamy, Co-creating unique value with customers. Strategy & Leadership, 2004. 32(3): p. 4-9.

[22] Guzman, E. and W. Maalej, How do users like this feature? A fine grained sentiment analysis of app reviews. In Requirements Engineering Conference (RE), 2014 IEEE 22nd International. 2014. IEEE.

[23] Google Play. https://play.google.com/store/apps/.

[24] Platzer, E., Opportunities of automated motive-based user review analysis in the context of mobile app acceptance. Proc. CECIIS, 2011: p. 309-316.

[25] Fu, B., et al., Why people hate your app: Making sense of user feedback in a mobile app store. In Proceedings of the 19th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. 2013. ACM.

[26] Vasa, R., et al., A preliminary analysis of mobile app user reviews. In Proceedings of the 24th Australian Computer-Human Interaction Conference. 2012. ACM.

[27] Finkelstein, A., et al., App Store Analysis: Mining App Stores for Relationships between Customer, Business and Technical Characteristics. RN, 2014. 14: p. 10.

[28] McIlroy, S., et al., Analyzing and automatically labeling the types of user issues that are raised in mobile app reviews. Empirical Software Engineering, 2015: p. 1-40.

[29] Khalid, H., On identifying user complaints of iOS apps. In Software Engineering (ICSE), 2013 35th International Conference on. 2013. IEEE.

[30] Google, Developer Console Help: Fake ratings & reviews. https://support.google.com/googleplay/android-developer/answer/2985810?hl=en.

[31] Iacob, C. and R. Harrison, Retrieving and analyzing mobile apps feature requests from online reviews. In Mining Software Repositories (MSR), 2013 10th IEEE Working Conference on. 2013. IEEE.

[32] Asif, M. and J. Krogstie, Research issues in personalization of mobile services. International Journal of Information Engineering and Electronic Business (IJIEEB), 2012. 4(4): p. 1.