A Comparative Study of Feature Extraction Methods in Images Classification

Author: Seyyid Ahmed Medjahed

Journal: International Journal of Image, Graphics and Signal Processing (IJIGSP) @ijigsp

Article in issue: 3 vol.7, 2015.

Free access

Feature extraction is an important step in image classification: it aims to represent the content of an image as faithfully as possible. In this paper, we present a comparison protocol for several feature extraction techniques under different classifiers. We evaluate the performance of the feature extraction techniques in the context of image classification, using both binary and multiclass classification. Performance is analyzed in terms of classification accuracy rate, recall, precision, f-measure and other evaluation measures. The aim of this research is to identify the feature extraction technique that improves the classification accuracy rate and provides the most implicit classification data. We analyze the models obtained by each feature extraction method under each classifier.

Feature extraction, Image classification, Models evaluation, Support vector machine

Short URL: https://sciup.org/15013533

IDR: 15013533

Article text: A Comparative Study of Feature Extraction Methods in Images Classification

Published Online February 2015 in MECS

Feature extraction is one of the most important fields in artificial intelligence. It consists of extracting the most relevant features of an image so that the image can be assigned a label. In image classification, the crucial step is to analyze the properties of the image features and to organize the numerical features into classes. In other words, an image is classified according to its content [1, 2, 3, 4, 5, 6].

The performance of the classification model and the classification accuracy rate depend largely on the numerical properties of the various image features, which form the data of the classification model. In recent years, many feature extraction techniques have been developed, and each technique has its strengths and weaknesses [7, 8, 9, 10]. A good feature extraction technique provides relevant features.

In this paper, we evaluate the performance of the data models obtained by different feature extraction techniques in the context of binary and multiclass classification, using different classifiers. The experiments were carried out on the public image dataset “Caltech 101”. We use several performance metrics, such as the classification accuracy rate, precision and recall.

The remainder of the paper is organized as follows. Section 2 gives an overview of feature extraction techniques. Section 3 describes some classifiers. Section 4 discusses the experimental results. Finally, Section 5 concludes our work and gives some perspectives.

II. Feature Extraction Techniques

A. Color Features

In image classification and image retrieval, color is the most important feature [11, 12]. The color histogram is the most common method for extracting color features. It describes the distribution of colors in the image. The effectiveness of the color feature lies in the fact that it is insensitive to the size, rotation and zoom of the image [13, 14].
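The idea of a color histogram can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the implementation used in the study: the function name `color_histogram`, the choice of 8 bins per channel and the RGB `uint8` input format are all hypothetical. Normalizing the counts is what makes the descriptor independent of image size, and counting pixels regardless of position is what makes it insensitive to rotation.

```python
import numpy as np

def color_histogram(image, bins=8):
    """Concatenate normalized per-channel histograms of an RGB image.

    image: uint8 array of shape (height, width, 3).
    bins:  number of bins per channel (an illustrative choice).
    """
    features = []
    for channel in range(image.shape[2]):
        counts, _ = np.histogram(image[:, :, channel], bins=bins, range=(0, 256))
        # Dividing by the pixel count removes the dependence on image size.
        features.append(counts / counts.sum())
    return np.concatenate(features)

# Random toy image standing in for a real photograph.
rng = np.random.default_rng(0)
toy = rng.integers(0, 256, size=(6, 4, 3), dtype=np.uint8)

hist = color_histogram(toy)
# Rotating the image by 90 degrees only moves pixels around,
# so the histogram stays the same.
rotated_hist = color_histogram(np.rot90(toy))
```

The resulting vector has `3 * bins` entries and can be fed directly to any of the classifiers discussed later.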

B. Texture Features

Texture feature extraction is a very robust technique for large images that contain repetitive regions. A texture is a group of pixels that share certain characteristics. Texture feature methods fall into two categories: spatial texture feature extraction and spectral texture feature extraction [14, 15, 16].

C. Shape Features

Shape features are widely used in the literature (in object recognition and shape description). Shape feature extraction techniques are classified as region based or contour based [14, 17]. Contour methods compute the features from the boundary of the shape and ignore its interior, while region methods compute the features from the entire region.

D. Feature Extraction Methods Used in this Study

In this work, we selected fourteen feature extraction methods that are among the most widely used in the literature.

Table 1 summarizes the feature extraction methods used in our research.

Table 1. Some Feature Extraction Techniques

| Name | Method |
|---|---|
| PHOG | Pyramid Histogram of Oriented Gradients [27, 49] |
| CONTRAST | Contrast Features [28] |
| FITELLIPSE | Ellipse Features [29] |
| FOURIER | Fourier Features [30] |
| FOURIERDES | Fourier Descriptors [31, 32] |
| GABOR | Gabor Features [33, 34] |
| GUPTA | Three Gupta Moments Features [35] |
| HARALICK | Haralick Texture Features [36, 37] |
| HUGEO | The Seven Hu Moments Features [38, 39, 40] |
| HUINT | The Hu Moments with Intensity Features [38, 39, 40] |
| LBP | Local Binary Patterns Features [41, 42, 43] |
| MOMENTS | Moments and Central Moments Features [44] |
| BASICGEO | Geometric Features |
| BASICINT | Basic Intensity Features [33] |

III. Classifier Systems

A. Support Vector Machine

Introduced by Vapnik [18], the Support Vector Machine (SVM) is a binary classifier that classifies a dataset by finding an optimal hyperplane. The basic idea of SVM is to find the optimal hyperplane that separates the instance space [19]. It has been shown that there is a unique optimal hyperplane that maximizes the margin between the instances and the separating hyperplane. Finding this optimal hyperplane is equivalent to solving a quadratic optimization problem [20].
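For reference, the quadratic optimization problem mentioned above is usually written as follows in the linearly separable (hard-margin) case. The notation is an assumption introduced here and does not come from the paper: $x_i$ are the training instances, $y_i \in \{-1, +1\}$ their labels, $w$ the normal vector of the hyperplane and $b$ its offset.

```latex
\min_{w,\,b}\ \frac{1}{2}\,\lVert w \rVert^{2}
\quad \text{subject to} \quad
y_i \left( w^{\top} x_i + b \right) \ge 1, \qquad i = 1, \dots, n .
```

Under these constraints the margin equals $2 / \lVert w \rVert$, so minimizing $\lVert w \rVert^{2}$ maximizes the margin. The objective is quadratic and the constraints are linear, which is why the problem is a quadratic program.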

Several variants of SVM have been proposed to address the problem of hyperparameter selection or to solve the quadratic optimization problem more quickly. Among these variants are SVM-SMO, LS-SVM and ν-SVM.

In this study we use SVM-SMO, which uses Sequential Minimal Optimization (SMO) to solve the quadratic optimization problem. SMO is a very robust and fast method [21, 22, 23]. We also use LS-SVM as a second variant of SVM. In LS-SVM, the classifier is found by solving a set of linear equations instead of a quadratic programming problem [24, 25, 26].
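To make the "set of linear equations" concrete, here is a minimal NumPy sketch of an LS-SVM classifier with a Gaussian kernel, following the standard formulation of Suykens and Vandewalle [25]. It is an illustration only, not the toolbox code used in the study. The function names, the toy data and the values of `gamma` (the regularization constant, playing the role of C) and `sigma` (the kernel width) are assumptions made for this example.

```python
import numpy as np

def rbf_kernel(A, B, sigma):
    """Gaussian kernel matrix between the rows of A and the rows of B."""
    sq_dist = ((A[:, None, :] - B[None, :, :]) ** 2).sum(axis=2)
    return np.exp(-sq_dist / (2.0 * sigma ** 2))

def lssvm_train(X, y, gamma=10.0, sigma=1.0):
    """Solve the LS-SVM linear system for the bias b and multipliers alpha."""
    n = len(y)
    omega = np.outer(y, y) * rbf_kernel(X, X, sigma)
    # Block system: [[0, y^T], [y, Omega + I/gamma]] [b, alpha]^T = [0, 1]^T
    A = np.zeros((n + 1, n + 1))
    A[0, 1:] = y
    A[1:, 0] = y
    A[1:, 1:] = omega + np.eye(n) / gamma
    rhs = np.concatenate(([0.0], np.ones(n)))
    solution = np.linalg.solve(A, rhs)
    return solution[0], solution[1:]

def lssvm_predict(X_train, y_train, b, alpha, X_new, sigma=1.0):
    """Sign of the kernel expansion sum_i alpha_i y_i K(x, x_i) + b."""
    scores = rbf_kernel(X_new, X_train, sigma) @ (alpha * y_train) + b
    return np.sign(scores)

# Toy two-class problem with labels in {-1, +1} (hypothetical data).
X = np.array([[0.0, 0.0], [0.2, 0.1], [2.0, 2.0], [2.1, 1.9]])
y = np.array([-1.0, -1.0, 1.0, 1.0])
b, alpha = lssvm_train(X, y)
pred = lssvm_predict(X, y, b, alpha, X)
```

Training reduces to one call to `np.linalg.solve`, which is the practical appeal of LS-SVM over a quadratic programming solver. The price is that every training instance receives a non-zero multiplier, so the solution is not sparse.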

B. K Nearest Neighbor

K nearest neighbor (k-NN) is a supervised learning method that has proven its performance in many applications. The k-NN method takes the k training instances that are nearest to the input instance. The nearest instances are determined by computing a distance such as the Euclidean distance or the city block distance. The label assigned to the input instance is the label most represented among its k nearest neighbors.
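The voting rule just described can be sketched as follows. This is a minimal illustration with hypothetical two-dimensional feature vectors and a hypothetical function name `knn_predict`. It uses the Euclidean distance and k = 5, the setting reported in the experimental section.

```python
from collections import Counter

import numpy as np

def knn_predict(X_train, y_train, x, k=5):
    """Return the majority label among the k training points nearest to x."""
    distances = np.linalg.norm(X_train - x, axis=1)  # Euclidean distance
    nearest = np.argsort(distances)[:k]
    votes = Counter(y_train[int(i)] for i in nearest)
    return votes.most_common(1)[0][0]

# Hypothetical feature vectors for two categories.
X_train = np.array([[0, 0], [0, 1], [1, 0], [1, 1],
                    [5, 5], [5, 6], [6, 5]], dtype=float)
y_train = ["crab", "crab", "crab", "crab",
           "crayfish", "crayfish", "crayfish"]

label_a = knn_predict(X_train, y_train, np.array([0.5, 0.5]))
label_b = knn_predict(X_train, y_train, np.array([5.5, 5.5]))
```

Here `label_a` is decided by four "crab" votes against one, and `label_b` by three "crayfish" votes against two. With an odd k and two classes a tie cannot occur; with more classes a tie-breaking rule would have to be chosen.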

IV. Experiment Results

In this section, we present the experimental results of applying different feature extraction methods in the context of image classification. Our experiments were implemented using MATLAB R2011a and the Balu Toolbox [46].

All the experiments were conducted on the Caltech 101 image dataset [45]. We use the following image sets for binary classification:

• Airplanes VS Car Side
• Cougar Face VS Cougar Body
• Crab VS Crayfish
• Crocodile VS Crocodile Head
• Flamingo VS Flamingo Head
• Water Lilly VS Sunflower

We selected these sets of images in order to create some ambiguity for the classifiers. This allows us to show the power of the feature extraction methods and whether a method can represent the content of the image as relevantly as possible. For example, Flamingo VS Flamingo Head involves two closely related classes: if the feature extraction method provides relevant features, the classification improves; otherwise, we obtain a low classification accuracy rate.

The performance measures used to evaluate and analyze the results are: classification accuracy rate, precision, recall, F_measure, G_mean, AUC and the ROC curve.

Table 2 presents the number of images in each category.

Table 2. Number of Images in Each Category Used for this Work

| Category | Number of images |
|---|---|
| Airplanes | 800 |
| Car Side | 123 |
| Cougar Face | 69 |
| Cougar Body | 47 |
| Crab | 73 |
| Crayfish | 70 |
| Crocodile | 50 |
| Crocodile Head | 51 |
| Flamingo | 67 |
| Flamingo Head | 45 |
| Water Lilly | 37 |
| Sunflower | 85 |

For each classifier system, we split the dataset into two parts: a training set and a test set. In this study, 60% of the instances are used in the training phase and the remaining 40% constitute the test set.
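A 60/40 split of this kind can be sketched as below. The paper does not say whether the split was drawn per class or over the pooled images, nor how fractional counts were rounded. The per-class (stratified) split, the rounding rule and the random seed in this sketch are therefore assumptions. The class sizes are those of Airplanes and Car Side in Table 2.

```python
import random

def split_indices(labels, train_fraction=0.6, seed=0):
    """Split instance indices into train/test, class by class (assumed scheme)."""
    rng = random.Random(seed)
    by_class = {}
    for index, label in enumerate(labels):
        by_class.setdefault(label, []).append(index)

    train, test = [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        cut = round(train_fraction * len(indices))  # rounding is an assumption
        train.extend(indices[:cut])
        test.extend(indices[cut:])
    return train, test

# Class sizes taken from Table 2: 800 Airplanes and 123 Car Side images.
labels = ["Airplanes"] * 800 + ["Car Side"] * 123
train_idx, test_idx = split_indices(labels)
```

With these sizes the sketch puts 480 Airplanes and 74 Car Side images in the training set and leaves 320 and 49 for testing, so the strong class imbalance of the pair is preserved in both parts.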

We use the Linear SVM, SVM with Gaussian kernel, Least Squares SVM (LS-SVM) and k-nearest neighbor for the classification. For SVM with Gaussian kernel, the parameters σ and C are selected by experimentation. We use the same parameters for LS-SVM with Gaussian kernel.

The Euclidean distance is used for k-nearest neighbor with k=5.

A. Application in Binary Classification

The numerical results are illustrated in the following tables.

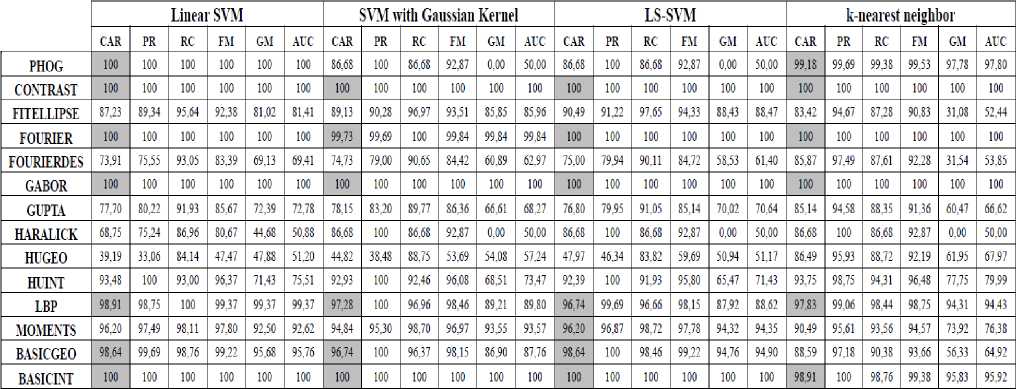

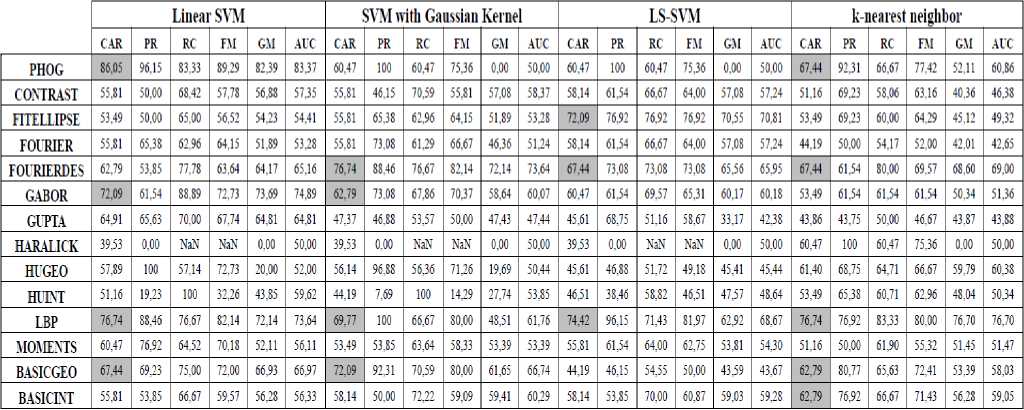

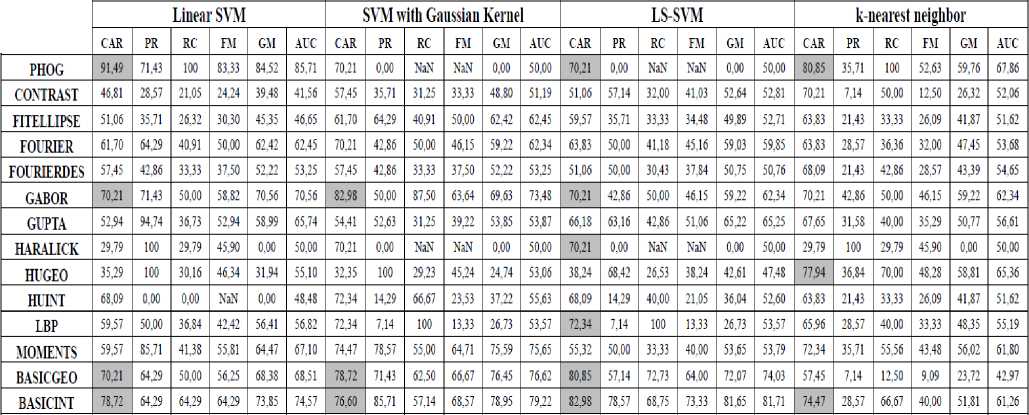

Table 3. The Numerical Results Obtained by the Feature Extraction Methods under Different Classifiers in the Image Sets: Airplanes VS Car Side

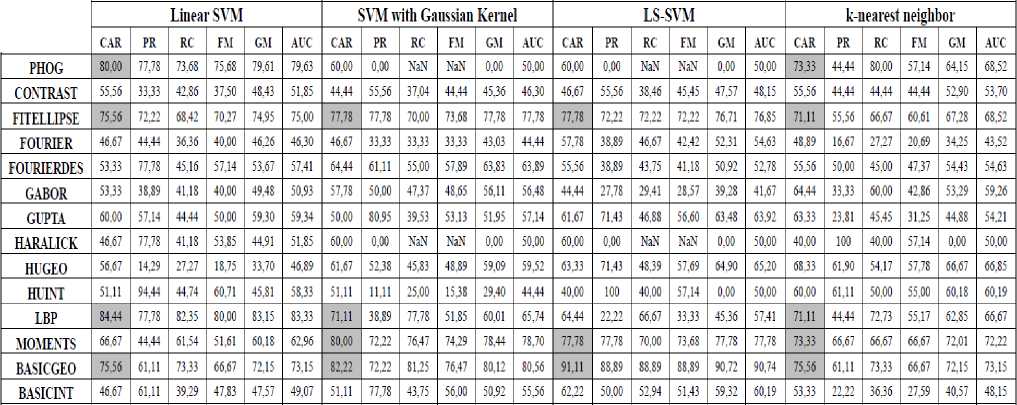

Table 4. The Numerical Results Obtained by the Feature Extraction Methods under Different Classifiers in the Image Sets: Cougar Face VS Cougar Body

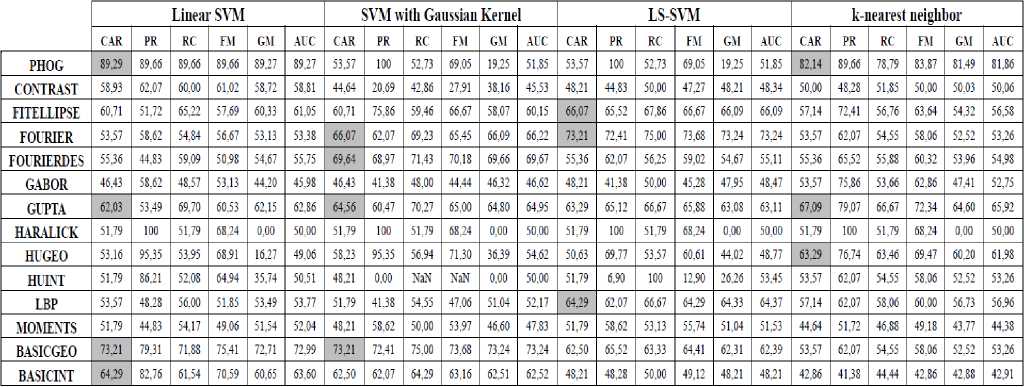

Table 5. The Numerical Results Obtained by the Feature Extraction Methods under Different Classifiers in the Image Sets: Crab VS Crayfish

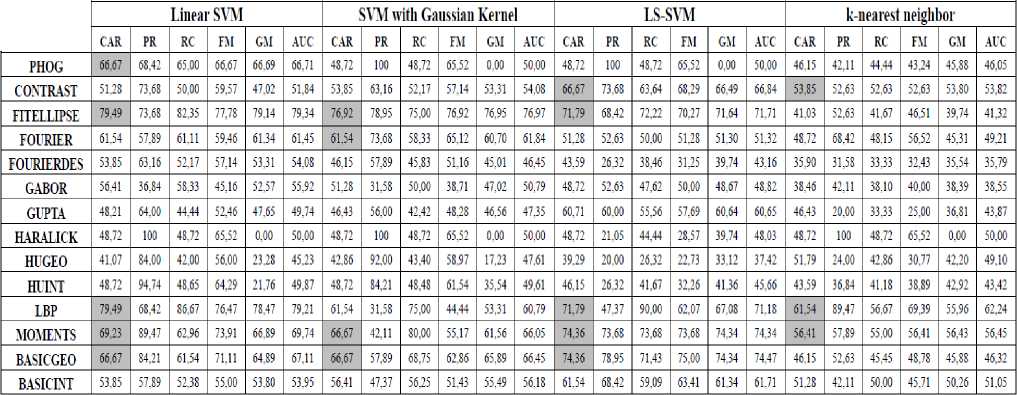

Table 6. The Numerical Results Obtained by the Feature Extraction Methods under Different Classifiers in the Image Sets: Crocodile VS Crocodile Head

Table 7. The Numerical Results Obtained by the Feature Extraction Methods under Different Classifiers in the Image Sets: Flamingo VS Flamingo Head

Table 8. The Numerical Results Obtained by the Feature Extraction Methods under Different Classifiers in the Image Sets: Water Lilly VS Sunflower

Tables 3, 4, 5, 6, 7 and 8 report the numerical results of the classification models obtained with the different feature extraction methods for all the image sets used in binary classification.

The experiments are conducted with three variants of SVM (Linear SVM, SVM with Gaussian kernel and LS-SVM) and with the k-nearest neighbor classifier.

Each classifier column contains six sub-columns:

• CAR: Classification Accuracy Rate
• PR: Precision
• RC: Recall
• FM: F_Measure
• GM: G_Mean
• AUC: Area Under the Curve
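As an illustration of the last column, the AUC can be computed without drawing the ROC curve, because it equals the probability that a randomly chosen positive instance receives a higher classifier score than a randomly chosen negative one (ties counting one half). The sketch below uses this rank-based definition. The paper does not state how its AUC values were computed, and the scores and labels here are made up for the example.

```python
def auc(scores, labels):
    """Area under the ROC curve via pairwise comparison of scores.

    scores: classifier outputs, higher meaning "more likely positive".
    labels: 1 for the positive class, 0 for the negative class.
    """
    positives = [s for s, l in zip(scores, labels) if l == 1]
    negatives = [s for s, l in zip(scores, labels) if l == 0]
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # a tie counts as half a correct ordering
    return wins / (len(positives) * len(negatives))

# Hypothetical scores: one positive (0.35) is ranked below a negative (0.6).
example_auc = auc([0.9, 0.8, 0.35, 0.6, 0.2], [1, 1, 1, 0, 0])
perfect_auc = auc([0.9, 0.8, 0.7, 0.2, 0.1], [1, 1, 1, 0, 0])
```

In the first example five of the six positive/negative pairs are ordered correctly, giving an AUC of 5/6; a classifier that ranks every positive above every negative reaches 1.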

We evaluate the models obtained by each feature extraction method under each classifier.

The rows of the tables represent the feature extraction methods employed in our research.

The filled cells indicate the high classification accuracy rates of the classification models.

The analysis of the results shows that PHOG, BASICGEO and LBP reach a high classification accuracy rate compared to the other methods under the Linear SVM classifier. We record a 100% correct rate on the dataset Airplanes VS Car Side.

With the SVM with Gaussian kernel, the feature extraction method that gives good results is LBP. Under the LS-SVM classifier, satisfactory results are observed for PHOG, FITELLIPSE and LBP.

With k-nearest neighbor, PHOG and LBP achieve a high classification accuracy rate.

It is clear that the LBP and PHOG feature extraction methods give satisfactory results and attain a high classification accuracy rate, with an advantage for the LBP method. The PHOG and LBP methods also give very good results under the Linear SVM classifier.

A high classification accuracy rate does not by itself mean that the model is precise, and it gives little information about the classifier and the data. That is why we need to evaluate the model with other measures.

In terms of precision and recall, we record the best results for PHOG and LBP. A perfect model has precision and recall values close to 1. We remark that the models created by the PHOG method for Water Lilly VS Sunflower and Cougar Face VS Cougar Body have a high classification accuracy rate but are not precise (0 for precision and recall). In this case, we can say that these models are noisy, imprecise and inefficient.

Generally, a precise model is a powerful model, but it is possible to obtain a precise model (precision close to 1) that is not very efficient (recall close to 0). In our results, PHOG and LBP give very precise and very efficient models, with a good compromise between recall and precision.

We also compute the f_measure, which is the harmonic mean of precision and recall. It measures the ability of the system to return all the relevant solutions and reject the others. A model that reaches high precision and recall has a good f_measure, and the converse also holds; in other words, the f_measure describes the performance of the model.

The analysis of the f_measure obtained by our models shows that LBP and PHOG perform very well under the four classifiers.

A very interesting performance metric is the geometric mean of sensitivity and specificity (G_mean), which was used by Kubat et al. [47]. In our study, favorable G_mean results are recorded for PHOG and LBP.
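The measures discussed above all follow from the four cells of a binary confusion matrix. The sketch below computes them and reproduces the situation described earlier, where accuracy looks acceptable while precision and recall are 0. The counts are hypothetical (a test set of 15 positives and 34 negatives on which the classifier predicts "negative" for every image), and returning 0 when a denominator is empty is a convention assumed here.

```python
import math

def binary_metrics(tp, fp, tn, fn):
    """Accuracy, precision, recall, F-measure and G-mean from confusion counts."""
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    precision = tp / (tp + fp) if tp + fp else 0.0    # convention: 0 if nothing predicted positive
    recall = tp / (tp + fn) if tp + fn else 0.0       # also called sensitivity
    specificity = tn / (tn + fp) if tn + fp else 0.0
    if precision + recall:
        f_measure = 2 * precision * recall / (precision + recall)  # harmonic mean
    else:
        f_measure = 0.0
    g_mean = math.sqrt(recall * specificity)          # geometric mean
    return {"CAR": accuracy, "PR": precision, "RC": recall,
            "FM": f_measure, "GM": g_mean}

# Hypothetical degenerate model: every test image is assigned to the negative class.
degenerate = binary_metrics(tp=0, fp=0, tn=34, fn=15)

# Hypothetical balanced model for comparison.
balanced = binary_metrics(tp=40, fp=10, tn=40, fn=10)
```

For the degenerate model the accuracy is 34/49 (about 69%) while precision, recall, F-measure and G-mean are all 0, which is why accuracy alone is not enough on imbalanced pairs. For the balanced model all five measures equal 0.8.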

We note that the other feature extraction methods have given results fairly close to those of PHOG and LBP. BASICGEO and GABOR have provided perfect results.

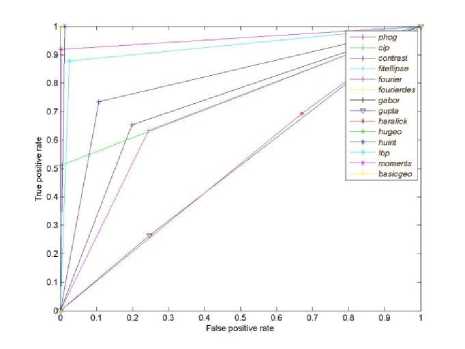

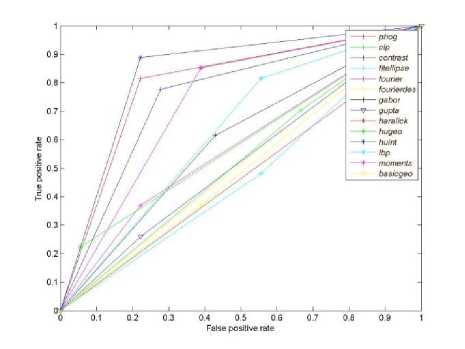

The following figures illustrate the ROC curves of the models obtained by the feature extraction methods under the Linear SVM classifier. We plot only the ROC curves of the Linear SVM classifier because it is the classifier system that has given good results.

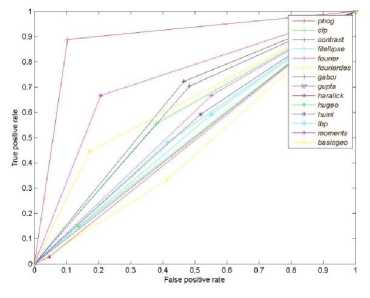

Fig.1. The ROC Curve for obtained by Linear SVM on the binary classification of image sets: Airplanes VS Car Side.

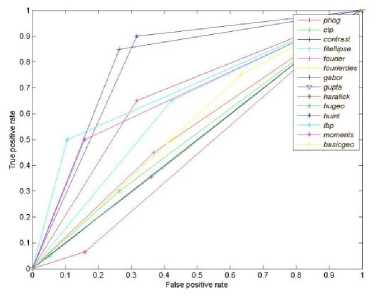

Fig.2. The ROC Curve for obtained by Linear SVM on the binary classification of image sets: Cougar Face VS Cougar Body.

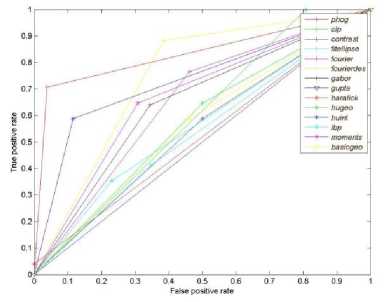

Fig.3. The ROC Curve for obtained by Linear SVM on the binary classification of image sets: Crab VS Crayfish.

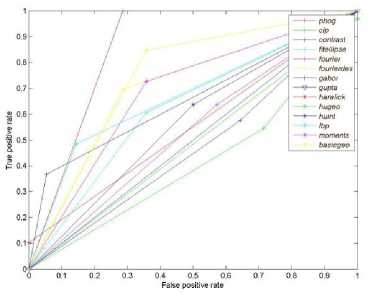

The figure 1, 2, 3, 4, 5 and 6 represent the ROC Curve of linear SVM classification for all the models obtained by using feature extraction method. The ROC Curve allows a good visual assessment and the AUC is used to evaluate the ROC Curve.

We show that PHOG method has a good performance.

-

B. Application in Multi-Class Classification

In this section, we evaluate the models obtained by the different feature extraction techniques in multi class classification. We use the same image sets defined above and we add two other classes:

Fig.4. The ROC Curve for obtained by Linear SVM on the binary classification of image sets: Crocodile VS Crocodile Head.

-

• Airplanes

-

• Car Side

-

• Cougar Face

-

• Cougar Body

-

• Crab

-

• Crayfish

-

• Crocodile

-

• Crocodile Head

-

• Flamingo

-

• Flamingo Head

-

• Lobster

-

• Scorpion

Fig.5. The ROC Curve for obtained by Linear SVM on the binary classification of image sets: Flamingo VS Flamingo Head.

The number of classes is 14. Our system extracts the features by using each method described above (table 1). Then, each dataset obtained by these feature extraction method will be used under the four classifiers. The numerical results are described in the table 9.

Fig.6. The ROC Curve for obtained by Linear SVM on the binary classification of image sets: Water Lilly VS Sunflower.

Table 9. The Classification Accuracy Rate in Multi-Class Classification for the 14 Models Obtained by Feature Extraction Methods

| Name | Linear SVM | SVM with Gaussian Kernel | LS-SVM | KNN |
|---|---|---|---|---|
| PHOG | 71.56% | 49.30% | 49.30% | 7.73% |
| CONTRAST | 32.15% | 37.87% | 39.88% | 7.57% |
| FITELLIPSE | 39.57% | 52.09% | 54.56% | 7.57% |
| FOURIER | 44.98% | 56.26% | 57.19% | 7.57% |
| FOURIERDES | 33.08% | 43.12% | 43.12% | 7.57% |
| GABOR | 67.54% | 60.12% | 59.81% | 7.57% |
| GUPTA | 39.76% | 38.91% | 41.33% | 9.09% |
| HARALICK | 6.65% | 7.57% | 5.10% | 7.57% |
| HUGEO | 13.58% | 14.79% | 22.55% | 9.21% |
| HUINT | 7.57% | 23.18% | 52.86% | 7.73% |
| LBP | 61.05% | 54.87% | 54.40% | 7.73% |
| MOMENTS | 55.18% | 51.93% | 54.40% | 7.88% |
| BASICGEO | 58.73% | 62.29% | 63.21% | 7.57% |
| BASICINT | 28.44% | 40.80% | 42.53% | 7.57% |

The models obtained by PHOG, GABOR and LBP under Linear SVM reach a high classification accuracy rate compared with the models obtained by the other feature extraction methods.

PHOG under Linear SVM achieves the highest accuracy (71.56%) of all the feature extraction methods in multi-class classification.

PHOG is a variant of HOG that uses the pyramid levels technique. Its power and efficiency lie in the fact that the HOG descriptor operates on localized cells, so the method upholds invariance to geometric and photometric transformations, except for object orientation [48].

V. Conclusion

In this paper, we presented a comparison protocol for different image classification models obtained by different feature extraction techniques. We evaluated the performance on both binary and multi-class classification. The experiments were assessed in terms of classification accuracy rate, precision, recall, f_measure, g_mean and AUC. We also used the ROC curve as a visual evaluation. We applied this study to the Caltech 101 image dataset.

The results show that the PHOG, GABOR and LBP methods reach a high classification accuracy rate and are very precise and efficient. PHOG shows an advantage in terms of precision and recall. In multi-class classification, PHOG, GABOR and LBP also give favorable results.

In future work, other public image datasets should be tested to select the relevant models in image classification. We also propose to use feature selection methods to choose the relevant features instead of using all the extracted features.

References

- S. Amin Seyyedi, N. Ivanov, "Statistical Image Classification for Image Steganographic Techniques", International Journal of Image, Graphics and Signal Processing (IJIGSP), vol. 6, no. 8, pp. 19-24, 2014. DOI: 10.5815/ijigsp.2014.08.03.

- D. Choudhary, A. K. Singh, S. Tiwari, V. P. Shukla, "Performance Analysis of Texture Image Classification Using Wavelet Feature", International Journal of Image, Graphics and Signal Processing (IJIGSP), vol. 5, no. 1, pp. 58-63, 2013. DOI: 10.5815/ijigsp.2013.01.08.

- Z. Akata, F. Perronnin, Z. Harchaoui and C. Schmid, "Good Practice in Large-Scale Learning for Image Classification," IEEE Transactions on Pattern Analysis and Machine Intelligence, 2013.

- S. Maji, A. C. Berg and J. Malik, "Efficient Classification for Additive Kernel SVMs," IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 35, no. 1, pp. 66-77, 2013.

- M. Luo and K. Zhang, "A hybrid approach combining extreme learning machine and sparse representation for image classification", Engineering Applications of Artificial Intelligence, vol. 27, no. 1, pp. 228-235, 2014.

- A. Morales-González, N. Acosta-Mendoza, A. Gago-Alonso, E. B. García-Reyes and J. E. Medina-Pagola, "A new proposal for graph-based image classification using frequent approximate subgraphs", Pattern Recognition, vol. 47, no. 1, pp. 169-177, 2014.

- R. J. Mullen, D. N. Monekosso and P. Remagnino, "Ant algorithms for image feature extraction", Expert Systems with Applications, vol. 40, no. 11, pp. 4315-4332, 2013.

- J. Qian, J. Yang and G. Gao, "Discriminative histograms of local dominant orientation (D-HLDO) for biometric image feature extraction", Pattern Recognition, vol. 46, no. 10, pp. 2724-2739, 2013.

- K. Xiao, A. L. Liang, H. B. Guan and A. E. Hassanien, "Extraction and application of deformation-based feature in medical images", Neurocomputing, vol. 120, pp. 177-184, 2013.

- C. Li, Q. Liu, J. Liu and H. Lu, "Ordinal regularized manifold feature extraction for image ranking", Signal Processing, vol. 93, no. 6, pp. 1651-1661, 2013.

- P. L. Stanchev, D. Green Jr. and B. Dimitrov. "High level colour similarity retrieval", International Journal of Information Theories and Applications, vol. 10, no. 3, pp. 363-369, 2003.

- J. Huang, S. Kumar, M. Mitra, W. Zhu et al., "Image indexing using colour correlogram", In Proc. Computer Vision and Pattern Recognition, pp. 762-765, 1997.

- M. K. Swain and D. H. Ballard, "Color indexing". International Journal of Computer Vision, vol. 7, no. 1, pp. 11-32, 1991.

- D. P. Tian and B. Shaanxi, "A Review on Image Feature Extraction and Representation Techniques", International Journal of Multimedia and Ubiquitous Engineering, vol. 8, no. 4, pp. 385-396, 2013.

- L. Li and P. W. Fieguth, "Texture Classification from Random Features," IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 34, no. 3, pp. 574-586, 2012.

- T. Yu, V. Muthukkumarasamy, B. Verma and M. Blumenstein, "A Texture Feature Extraction Technique Using 2D-DFT and Hamming Distance,", Fifth International Conference on Computational Intelligence and Multimedia Applications (ICCIMA'03), 2003.

- D. Zhang and G. Lu, "Review of shape representation and description techniques", Pattern Recognition, vol. 37, no. 1, pp. 1-19, 2004.

- C. Cortes and V. Vapnik, "Support-vector networks," Machine Learning, vol. 20, no. 3, pp. 273-297, 1995.

- P. Bartlett and J. Shawe-taylor, "Generalization performance of support vector machines and other pattern classifiers," Advances in Kernel Methods - Support Vector Learning, pp. 43-54, 1998.

- J. Ye and T. Xiong, "Svm versus least squares svm," Journal of Machine Learning Research, vol. 2, pp. 644-651, 2007.

- J. C. Platt, "Improvements to platt's smo algorithm for svm classifier design," Neural Computation, vol. 13, no. 3, pp. 637-649, 2001.

- J. C. Platt, B. Schölkopf, C. Burges, and A. Smola, "Fast training of support vector machines using sequential minimal optimization," Advances in Kernel Methods - Support Vector Learning, pp. 185-208, 1999.

- G. W. Flake and S. Lawrence, "Efficient svm regression training with smo," Machine Learning, vol. 46, no. 3, pp. 271-290, 2002.

- J. A. K. Suykens, T. V. Gestel, J. D. Brabanter, B. D. Moor, and J. Vandewalle, "Least squares support vector machines," World Scientific Pub. Co., Singapore, 2002.

- J. A. K. Suykens and J. Vandewalle, "Least squares support vector machine classifiers," Neural Processing Letters, vol. 9, no. 3, pp. 293-300, 1999.

- L. Jiao, L. Bo, and L. Wang, "Fast sparse approximation for least squares support vector machine," IEEE Transactions on Neural Networks, vol. 19, no. 3, pp. 685-697, 2007.

- A. Bosch, A. Zisserman, and X. Munoz, "Representing shape with a spatial pyramid kernel," CIVR '07 Proceedings of the 6th ACM international conference on Image and video retrieval, 2007.

- D. Mery, "Classification of Potential Defects in Automated Inspection of Aluminium Castings Using Statistical Pattern Recognition", In Proceedings of 8th European Conference on Non-Destructive Testing (ECNDT 2002) Barcelona, Spain, pp. 17-21, 2002.

- A. Fitzgibbon, M. Pilu and R. B. Fisher, "Direct Least Square Fitting Ellipses", IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 21, no. 5, pp. 476-480, 1999.

- W. D. Stromberg and T. G. Farr, "A Fourier-Based Textural Feature Extraction Procedure", IEEE Transactions on Geoscience and Remote Sensing, vol. 25, no. 5, pp. 722 – 731, 1986.

- C. Zahn and R. Roskies, "Fourier Descriptors for Plane Closed Curves", IEEE Transactions on Computers, vol. 21, no. 3, pp. 269-281, 1972.

- G. Zhang, Z. Ma, L. Niu and C. Zhang, "Modified Fourier descriptor for shape feature extraction", Journal of Central South University, vol. 19, no. 2, pp. 488-495, 2012.

- A. Kumar and G. K. H. Pang, "Defect detection in textured materials using Gabor filters", IEEE Transactions on Industry Applications, vol. 38, no. 2, pp. 425-440, 2002.

- L. Shen and L. Bai, "Gabor Feature Based Face Recognition Using Kernel Methods", Sixth IEEE International Conference on Automatic Face and Gesture Recognition, 2004.

- L. Gupta and M. D. Srinath, "Contour sequence moments for the classification of closed planar shapes", Pattern Recognition, vol. 20, pp. 267-272, 1987.

- Haralick, "Statistical and Structural Approaches to Texture", Proc. IEEE, vol. 67, no. 5, pp. 786-804, 1979.

- A. Porebski, N. Vandenbroucke and L. Macaire, "Haralick feature extraction from LBP images for color texture classification", Published in: Image Processing Theory, Tools and Applications, pp. 1-8, 2008.

- M. K. Hu, "Visual Pattern Recognition by Moment Invariants", IRE Transaction on Information Theory IT-8, pp. 179-187, 1962.

- M. Mercimek, K. Gulez and T. V. Mumcu, "Real object recognition using moment invariants", Sadhana, vol. 30, no. 6, pp. 765–775, 2005

- P. Bhaskara Rao, D.Vara Prasad and Ch.Pavan Kumar, "Feature Extraction Using Zernike Moments", International Journal of Latest Trends in Engineering and Technology, vol. 2, no. 2, pp. 228-234, 2013.

- T. Ojala, M. Pietikainen and T. Maenpaa, "Multiresolution gray-scale and rotation invariant texture classification with local binary patterns", IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 24, pp. 971-987, 2002.

- Mu, Y. et al, "Discriminative Local Binary Patterns for Human Detection in Personal Album", CVPR-2008, 2008.

- T. Ahonen, A. Hadid and M. Pietikäinen, "Face Description with Local Binary Patterns: Application to Face Recognition," IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 28, no. 12, pp. 2037-2041, 2006.

- R. J. Ramteke, "Invariant Moments Based Feature Extraction for Handwritten Devanagari Vowels Recognition", International Journal of Computer Applications, vol. 1, no. 18, pp. 1-5, 2010.

- L. Fei-Fei, R. Fergus and P. Perona, "Learning generative visual models from few training examples: an incremental Bayesian approach tested on 101 object categories", IEEE. CVPR 2004, Workshop on Generative-Model Based Vision, 2004.

- D. Mery, "BALU: A toolbox Matlab for computer vision, pattern recognition and image processing", http://dmery.ing.puc.cl/index.php/balu, 2011.

- M. Kubat, R. Holte and S. Matwin, "Learning when negative examples abound", In ECML-97, pp. 146-153, 1997.

- N. Dalal and B. Triggs, "Histograms of oriented gradients for human detection", Proceedings of the Conference on Computer Vision and Pattern Recognition, vol. 1, pp. 886-893, 2005.

- H. Zhang and Z. Sha, "Product Classification based on SVM and PHOG Descriptor", International Journal of Computer Science and Network Security, vol. 13, no. 9, pp. 1-4, 2013.