A Comprehensive Survey on Human Skin Detection

Authors: Mohammad Reza Mahmoodi, Sayed Masoud Sayedi

Journal: International Journal of Image, Graphics and Signal Processing (IJIGSP)

Issue: No. 5, Vol. 8, 2016

Free access

Human skin detection is one of the most widely used algorithms in the vision literature and has been exploited, both directly and indirectly, in a wide range of applications. The topic has received a great deal of attention, specifically in face analysis and human detection/tracking/recognition systems. It faces several challenges, mainly emanating from nonlinear illumination, camera characteristics, imaging conditions, and intra-personal features. Over the last twenty years, researchers have worked to overcome these challenges, resulting in hundreds of published papers. The aim of this paper is to survey applications, color spaces, methods and their performance, compensation techniques, and benchmarking datasets for human skin detection, covering the related research of more than the last two decades. The difficulties and challenges involved in finding skin pixels are discussed. Because skin segmentation algorithms are mainly based on color information, the effectiveness of different color spaces is discussed in depth. In addition, using standard evaluation metrics and datasets makes the comparison of methods both possible and reasonable; these databases and metrics are investigated and suggested for future studies. Reviewing most existing techniques will not only ease future studies but also help in developing better methods; these methods are classified and illustrated in detail. A variety of applications in which skin detection has been either fully or partially used is also provided.

Skin Detection, Skin Classification, Skin Segmentation, Face Detection, Standard Skin Database

Short URL: https://sciup.org/15013973

IDR: 15013973

Published Online May 2016 in MECS DOI: 10.5815/ijigsp.2016.05.01

With the advancement of technology and the emergence of automatic systems, image processing plays an indispensable role, as countless electronic systems based on image analysis are built every day. Automatic door control systems are often designed around motion detection; face recognition algorithms are employed extensively in surveillance; object tracking algorithms are directly involved in accident-avoidance systems and driverless cars; and human-computer interaction (HCI) technology relies heavily on human segmentation and biometrics. Among these applications, extracting a feature of the human body is one of the most fundamental topics of interest, mainly because of its enormous number of applications.

A glance at the statistics on drowsy driving reported by the National Sleep Foundation's 2005 Sleep in America poll calls for a serious response. According to the poll, 60% of adult drivers (about 168 million people) admitted that they had driven a vehicle while feeling drowsy in the past year, and more than one-third (37%, or 103 million people) had actually fallen asleep at the wheel [1]. More than one-tenth of those who had nodded off said they had done so at least once a month, and four percent of them stated that they had had an accident or near accident because they were too tired to drive. In addition, the National Highway Traffic Safety Administration has estimated that 100,000 police-reported crashes each year are the direct result of driver fatigue, resulting in an estimated 1,550 deaths, 71,000 injuries, and $12.5 billion in monetary losses [1]. Fatigue directly affects the driver's reaction time, and drivers are often too tired to realize their level of inattention. Thus, a driver monitoring system [2,3] is a promising solution to this serious problem. In such systems, several cues, including signs and gestures of the eyes or head such as repeated yawning, heavy eyes, and slow reactions, are employed as indicators of drowsiness. However, an essential step before facial feature extraction in many such systems is the detection of the human face or skin. Because the head pose or rotation may vary frequently, using the skin color cue to detect the face and facial features can be a promising choice. Skin segmentation is also a major concern in many other applications, including content-based retrieval [4], identifying and indexing multimedia information [5], gesture recognition [6,7], robotics [8], sign language recognition [9,10], gaming interfaces [11], human-computer interaction [12,13], and filtering objectionable URLs [14].

Skin classification is the act of discriminating between skin and non-skin pixels in an arbitrary image. Detecting pixels related to human skin is a prevalent task because it allows high-speed processing [15,16] and is not sensitive to changes of posture and facial expression. It is invariant to rotation [15,17] and geometry [16,18]; robust to partial occlusion [15,17], scaling [19], and shape [20]; and somewhat person-independent [18]. Skin detectors are easy to implement and usually impose a low computational cost [21,22].

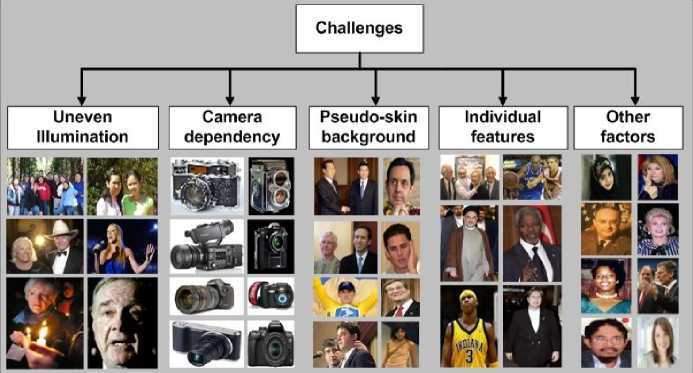

Fig.1. Challenging Factors Involved in Skin Detection at a Glance

Although skin detection seems an effortless task for humans, several factors limit its performance, some of which are common to other image processing problems, especially biometrics and object analysis. Fig. 1 presents these factors with examples.

A. Uneven, inconsistent and nonlinear illumination

Without exaggeration, the factor that degrades skin detection performance the most is the color constancy problem [17,23]. More specifically, the intensity of a pixel in an image depends on both reflection and illumination. Reflection is related to the imaged scene, while illumination is the effect of the light source, i.e. light fixtures or natural daylight. The appearance of a scene varies when the spectra, source distribution, and lighting conditions change [24], and this is difficult to handle because the behavior is nonlinear and unpredictable (uncontrollable). Two strategies have been investigated for this problem. Applying color correction and illumination cancellation algorithms before segmentation significantly improves performance [25,26]. Dynamic adaptation approaches [27,28] have also been employed; however, in many cases the generality of these systems is doubtful.

B. Complex, pseudo skin background

One important challenge in skin segmentation is that a pixel with a given intensity in the components of a color space can fall into either the skin or the non-skin class under different conditions [15,29]. There are numerous skin-like objects in the background, such as walls, wood, and brick, and separating real skin pixels from the pseudo-skin pixels associated with them is not a straightforward task. A good skin detector must account for this effect; otherwise its output will not be satisfactory. Most color-based, pixel-based methods have difficulty dealing with this phenomenon. Complex backgrounds also mainly affect the performance of approaches based on shape, texture, and spatial features.

C. Imaging equipment and camera dependency

Camera characteristics (sensor response, lenses, device settings) [24] are very influential, because the distribution of human skin color varies from device to device [17,30]; this degrades the performance of detectors designed around the distribution of the skin color cluster in one specific color space. Some methods attempt to use nonlinear color space transformations [31], while others use regression analyses to estimate device functions, e.g. [32]. However, the former is not applicable to all devices, and the latter relies on assumptions that do not always hold.

D. Individual and intra-personal characteristics

Although skin color seems to be a discriminative cue, it is not stable across individuals [17,30]. Several factors are influential: age, since human skin color changes slowly over a lifetime; sex, since the distribution of skin color differs considerably between males and females (and cosmetics widen the difference); and health, since skin color changes with specific illnesses. Ethnicity is also one of the most important factors, as the skin cluster varies slightly among dark, yellowish, and light skin tones. It has frequently been observed that methods designed on specific training images (i.e. related to one particular ethnicity) do not deal well with other ethnicities.

E. Other factors

Other less important but still influential factors are image quality [23], traditional scarves or clothing [33], computational inaccuracies and the continuous nature of color transformations [34], object movement and blurring [25], significant overlap between the skin and non-skin clusters [29], and reflection from water and glass [35]. Note that the aforementioned challenges relate to algorithms based on visible-spectrum imaging. For infrared systems, the most common problems are expensive equipment [17], tedious setup procedures, and temperature dependency. Nevertheless, despite all existing limitations and challenges, new algorithms, variations of traditional methods, and hybrid systems are proposed each year.

A robust skin detector has several features. First, as these systems are typically employed for filtering (reduction purposes), a skin detector needs not only a high detection rate but also low false rejection and false detection rates [36]. A robust system should be general, i.e. not designed for a particular condition or a specific ethnicity. It should also be relatively fast and able to operate with uncalibrated cameras, arbitrary viewing geometry, and unknown but commonly encountered illumination.

While a huge number of methods have been proposed, to the best of the authors' knowledge there are only two surveys on this topic. The first, authored by Vezhnevets et al. [38], covered a remarkable number of works published before 2003. The second, by Kakumanu et al. [17] in 2006, contains relatively more information on the different types of methods and previously published works. The first, though coherent and broad, excludes the new methods developed in the last decade. Vezhnevets et al. mainly discussed pixel-based methods, i.e. algorithms that operate on each pixel of an image individually and independently of other pixels, relying on the color feature. Because most methods at the time were based on statistical analysis of training datasets, their paper naturally emphasizes statistical methods, both parametric and non-parametric. The authors did not discuss standard evaluation and training datasets or evaluation metrics, although an evaluation comparison is included at the end. They concluded that parametric methods are better suited to classifiers with limited datasets, whereas for large datasets, methods that depend less on the skin cluster shape and automatic classification rules are more promising. Another important result was that excluding luminance from the classification process does not lead to better performance. This was also noted and investigated, both mathematically and practically, in [39,40,41]. Unfortunately, many methods developed since then have not taken it into account [42,43,44]. In addition, Vezhnevets et al. stated that one cannot judge how good a color space is from the shape of the skin cluster (as also claimed in [45]), mainly because the performance of different skin modeling schemes varies too much across color spaces.

Kakumanu et al. provided a much more comprehensive survey: they classified and illustrated different color spaces and explained the features, advantages, and disadvantages of most of them. In addition, several skin classifiers, such as MLP, SOM, and MaxEnt classifiers, and previously developed statistical models were investigated. A novel aspect of their paper was its discussion of illumination adaptation algorithms, which proved influential for later studies, since many authors began using color correction algorithms afterwards. Skin color constancy approaches such as Gray World and White Patch, along with a brief comment on neural-network-based illumination adaptation methods, were also included. Kakumanu et al. not only evaluated skin detection modeling approaches but also investigated two standard datasets.
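To make the Gray World idea mentioned above concrete, the following is a minimal sketch rather than the formulation used in any of the surveyed papers. It assumes an 8-bit RGB image stored as a NumPy array of shape (H, W, 3); the function name `gray_world` is hypothetical. Each channel is rescaled so that its mean matches the overall mean intensity, which is the usual statement of the gray-world assumption.

```python
import numpy as np

def gray_world(image):
    """Gray-world color correction (illustrative sketch).

    Assumes `image` is an (H, W, 3) RGB array with values in [0, 255].
    """
    img = np.asarray(image, dtype=np.float64)
    # Mean of each color channel over all pixels.
    channel_means = img.reshape(-1, 3).mean(axis=0)
    # Under the gray-world assumption the scene average should be gray,
    # so every channel is scaled toward the common mean.
    gray = channel_means.mean()
    gains = gray / np.maximum(channel_means, 1e-12)  # guard against division by zero
    return np.clip(img * gains, 0.0, 255.0)
```

In a skin detection pipeline, a correction of this kind would typically be applied before the pixels are passed to the skin classifier.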

Many novel methods developed in recent years are not covered in [17,38]. These include high-performance spatial methods, multispectral systems, numerous local and global adaptation techniques, and many evaluation and comparison papers. This paper addresses a number of issues, some missing from the former works and some included for the sake of completeness. First, a discussion of skin segmentation challenges is provided. Understanding the difficulties is one of the first steps in finding a solution to a problem, and investigating the different challenges is critical because it directs future algorithms toward an effective solution rather than merely a solution. Furthermore, a variety of color spaces and their impact on the performance of models are discussed. This discussion of the choice of color space responds to the many researchers who claim that color space X has the best performance or Y the worst (claims that often contradict each other). Color spaces are evaluated and compared based on their performance with a variety of methods. Another contribution of this paper is to provide the most up-to-date report on as many published skin modeling methods as possible. Most of these works were developed in the last five years, after both former surveys. As already mentioned, illumination is one of the most important factors in reducing the detection rate. However, several comprehensive review papers discussing illumination correction techniques in general have recently been published [46-50]; to save space, interested readers are referred to those papers. A final contribution of this paper is a summary of skin detection applications.

The rest of the paper is organized as follows. In the next section, standard databases for both the training and evaluation phases are presented, followed by a description of evaluation metrics. In Section 3, different color spaces are explained and their performance is compared based on results from the literature. In Section 4, skin segmentation techniques are explained in detail, followed by a fair performance evaluation in Section 5. Finally, skin segmentation applications and conclusions are presented in Sections 6 and 7, respectively.

II. Standard Datasets and Evaluation Metrics

A complete skin database is required in both the training and evaluation phases. A large number of skin and non-skin pixels ought to be used to develop an optimal classifier in the training phase. In evaluation, too, an exhaustive dataset of images, together with their manually annotated ground truth images, is required to appraise the performance of a system effectively. Thus, it is essential to investigate the characteristics of the currently available datasets in order to encourage future studies to use them. Most skin detection datasets were originally developed for face detection, hand tracking, and face recognition problems. Several important issues hinder fair and accurate evaluation of different methods. First, although a great number of skin detectors have been proposed, many have not been tested on standard datasets; in fact, most papers rely on arbitrary collections of personal or publicly available online images. In addition, the reported experimental results are based on different databases. Furthermore, many of the available databases are neither standard nor specifically designed to measure how effectively an algorithm deals with a particular condition or challenge. Finally, different datasets are used to train skin classifiers, which significantly affects the performance of the detectors. Nonetheless, recent and commonly used benchmark datasets are discussed in this section.

The most important group of skin databases are those designed especially for training and assessing skin classifiers. Compaq [45] is the first large skin dataset and perhaps the most widely used; it consists of 9,731 images containing skin pixels and 8,965 images containing no skin. The entire database includes approximately 2 billion skin and non-skin pixels collected by crawling the Web. The skin regions of 4,675 skin images have been segmented, which, together with the non-skin images, yields 1 billion pixels. Many skin classifiers have been trained and evaluated on this database [16,51,52,53]. The ground truth images were generated with an automatic software tool, which led to imprecise annotations. Fig. 2 shows a set of images from the Compaq dataset with their ground truths (GTs). This database is no longer available for public use [54,55].

In contrast to the poor image quality and semi-supervised ground truth of Compaq, the ECU skin and face dataset [41] is compiled from nearly 4,000 high-quality color images with relatively accurate ground truth. ECU images are diverse in terms of background scenes, lighting conditions, and skin types. The lighting conditions include indoor and outdoor lighting, and the skin types include whitish, brownish, yellowish, and darkish skin. The skin dataset provided by Schmugge et al. [56] consists of 845 images and is composed of 4.9 million skin pixels and 13.7 million non-skin pixels. This dataset is very general, as it contains images with different facial expressions, illumination levels, and camera calibrations. The MCG-skin database [57] contains 1,000 images randomly sampled from social network websites, captured under variable ambient light and confusing backgrounds, with a diversity of human races and various resolutions and levels of visual quality. This dataset contains 38,868,720 skin pixels and 139,091,233 non-skin pixels. Its ground truth images are not accurately labeled: eyes, eyebrows, etc. are counted as skin, and pixels around edges are not marked carefully. Ling et al. [58] used the MCG-skin dataset and additional images collected from the Web to construct a dataset of 37.5 million skin pixels and 135.58 million non-skin pixels. They used half of this dataset to train their SOM-based classifier and the other half for evaluation.

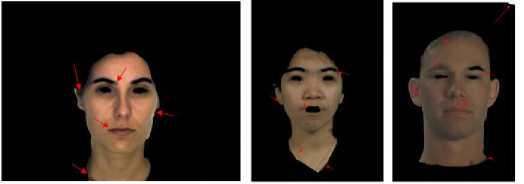

Kawulok et al. compiled the HGR database [59] of images for hand gesture recognition (HGR). The dataset is organized into three series acquired under different conditions, with different individuals, sizes, and backgrounds, for a total of 1,558 images. The skin dataset in the UCI Machine Learning Repository [60] consists of skin pixels collected by randomly sampling B, G, R values from images of various age groups, races, and genders derived from face detection databases. The total learning sample size is 245,057, of which 50,859 are skin samples and 194,198 are non-skin samples. This database is only applicable for training purposes. Castai et al. [61] created the SFA dataset based on the FERET (876 images) [62] and AR (242 images) [63] databases. SFA consists of 3,354 skin samples and 5,590 non-skin samples with dimensions ranging from 1×1 to 35×35 pixels. A comparison between UCI and SFA, based on the best topology and threshold of a neural-network-based skin classifier, indicated that SFA is slightly more accurate than UCI for evaluating skin detectors [61]. However, SFA mainly includes passport-style images, which are not suitable for evaluation purposes. Fig. 3 depicts several GT images from SFA, with red arrows showing erroneous points.

The Db-skin dataset [64] contains 103 skin images annotated by hand with relative care. In some images, the eyes and the boundaries between skin and non-skin are not marked precisely, but the images were taken under different lighting conditions and against complex backgrounds. In a recent study, Montenegro et al. [65] compiled a dataset to compare the performance of 5 common color spaces. This dataset contains 705 RGB images of 47 Mexican subjects of different ages and distinct skin tones. Unlike the former databases, all images were acquired using a single Kinect sensor under completely controlled conditions. The dataset is not publicly available. R. Khan et al. [15] developed the Feeval dataset, consisting of 8,991 images from 25 online videos plus manually generated per-pixel ground truths. Most of the videos are of low quality, and the diversity offered by only 25 videos is questionable. TDSD [66] and the Sigal dataset [67] have also been developed for skin segmentation; the ground truth labeling of both databases was not performed by hand. The former contains 554 images including 24 million skin pixels and 75 million non-skin pixels. Table 1 summarizes the characteristics of the aforementioned skin datasets.

Face detection and recognition datasets are also used for skin detection purposes. Razmjooy et al. [68] used the Bao test bed for training and evaluation. Ding et al. [69] used Caltech with their own ground truth to measure the effectiveness of their classifier. Miguel et al. [70] used nearly 290 images from each of several public human activity recognition datasets, such as EDds, LIRIS, SSG, UT, and AMI, as evaluation sets. These 87,000 skin pixels cover a wide range of situations and illumination levels. Naji et al. [71] constructed a database of 125 images collected from LFW, CVL, and the Web. Tan et al. [72] used the Pratheepan and ETHZ PASCAL datasets to present quantitative results.

Fig.2. A Set of Images from Compaq Dataset and Corresponding GTs

Fig.3. Ground Truth Images in SFA

Table 1. Common Datasets in Skin Segmentation

| Dataset | No. of skin (S) images | No. of non-skin (NS) images | No. of S pixels | No. of NS pixels | GT qualification |
|---|---|---|---|---|---|
| Compaq [45] | 4,675 | 8,965 | 1 billion (S and NS combined) | | Annotated imprecisely |
| ECU [41] | 4,000 | 2,000 | 209.4 million | 901.8 million | Relatively precisely annotated |
| MCG [57] | 1,000 | | 39 million | 139 million | Annotated imprecisely |
| Schmugge [56] | 845 | | 4.9 million | 13.7 million | Carefully annotated (ternary marking) |
| Ling et al. [58] | 1,000 | | 37.5 million | 135.58 million | Unavailable |
| HGR [59] | 1,558 | | - | - | Precisely annotated |
| UCI [60] | - | | 50,859 | 194,198 | Unknown |
| SFA [61] | 1,118 | | - | - | Precisely annotated |
| Db-skin [64] | 103 | | - | - | Relatively precisely annotated |
| Montenegro [65] | 705 | | - | - | Unavailable |
| Feeval [15] | 8,991 | | - | - | Annotated imprecisely |
| TDSD [66] | 554 | | 24 million | 75 million | Annotated imprecisely |

A. Evaluation measurement

Evaluation metrics are critical for assessing a classifier's power. Several performance measures are employed in general classification problems, including Recall, Accuracy, Precision, Kappa, F-measure, AUC (Area Under Curve), G-mean, Informedness, and Markedness [73,74]. Note that, depending on the application, one of these metrics may be more suitable than another. The 2×2 confusion (contingency) matrix for a skin detection problem is given in Table 2.

Table 2. Confusion Matrix

| | Classified Skin | Classified Non-Skin |
|---|---|---|
| Ground Truth Skin | True Positive (TP) | False Negative (FN) |
| Ground Truth Non-Skin | False Positive (FP) | True Negative (TN) |

Empirical and theoretical evidence demonstrates that these measures are biased with respect to data imbalance and the proportions of correct and incorrect classifications [75]. These shortcomings have motivated a search for new metrics based on simple indices, such as the True Positive Rate (TPR), the percentage of skin pixels correctly classified as skin, and the True Negative Rate (TNR), the percentage of non-skin pixels correctly classified as non-skin. Precision and Recall are two other important metrics for evaluating a skin detection algorithm [16,70]. Precision (P) is the percentage of pixels labeled as skin that are actually skin, whereas Recall (R) is the true positive rate:

$$P = \frac{TP}{TP + FP}$$

$$R = \frac{TP}{TP + FN}$$

The F-score integrates both Recall and Precision into a measure. It is defined as:

$$F_{score} = \frac{(1 + b^2)\,(R \cdot P)}{b^2 P + R}$$

The non-negative real number b is a parameter that controls the relative weight of Recall and Precision. Typically, b is set to 1, yielding an F-score in the range [0,1] that can be viewed as the harmonic mean of Recall and Precision [73]. The Receiver Operating Characteristic (ROC) curve is another popular technique for assessing classifiers [76,77]; it plots the true positive rate (Recall) against the false positive rate as a tunable parameter varies. Correct detections and false detections trade off against each other in skin segmentation, as Precision and Recall often do, and the ROC curve is a very useful tool for showing this tradeoff. The ROC curve represents the effectiveness of a classifier independently of tunable parameters (e.g. a threshold value or the number of training samples). The Area Under Curve (AUC) is the quantitative summary of the ROC curve [78]. In [56,79], the authors employed ROC and AUC as measures to test some doubtful premises in skin segmentation. For skin cluster boundary models, and whenever there is only one run, AUC is calculated as the average of TPR and TNR. The CDR (Correct Detection Rate, or Accuracy) represents the probability that a pixel is correctly classified, while the FDR (False Detection Rate) represents the probability that a pixel is wrongly classified [80,81]:

$$CDR = \frac{TP + TN}{TP + TN + FP + FN} \qquad (3)$$

$$FDR = 1 - CDR \qquad (4)$$
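The measures defined so far can be computed directly from a predicted binary mask and its ground truth. The sketch below is only illustrative: the function names `confusion_counts` and `skin_metrics` are hypothetical, the masks are assumed to be arrays in which nonzero means skin, and returning 0 for an undefined ratio is a convention chosen here, not one taken from the cited works.

```python
import numpy as np

def confusion_counts(pred, truth):
    """Return (TP, FP, FN, TN) for two binary masks (nonzero = skin)."""
    pred = np.asarray(pred).astype(bool)
    truth = np.asarray(truth).astype(bool)
    tp = int(np.sum(pred & truth))
    fp = int(np.sum(pred & ~truth))
    fn = int(np.sum(~pred & truth))
    tn = int(np.sum(~pred & ~truth))
    return tp, fp, fn, tn

def skin_metrics(tp, fp, fn, tn, b=1.0):
    """Precision, Recall (TPR), TNR, F-score, CDR, FDR and one-run AUC."""
    def ratio(num, den):
        # Assumed convention: an undefined ratio is reported as 0.
        return num / den if den else 0.0

    precision = ratio(tp, tp + fp)
    recall = ratio(tp, tp + fn)
    tnr = ratio(tn, tn + fp)
    f_score = ratio((1 + b * b) * precision * recall, b * b * precision + recall)
    cdr = ratio(tp + tn, tp + fp + fn + tn)
    return {
        "precision": precision,
        "recall": recall,
        "tnr": tnr,
        "f_score": f_score,
        "cdr": cdr,
        "fdr": 1.0 - cdr,
        # Single-run AUC as described in the text: average of TPR and TNR.
        "auc_single_run": (recall + tnr) / 2.0,
    }
```

For example, `skin_metrics(*confusion_counts(pred, truth))` evaluates one output mask against its ground truth; per-image results can then be averaged or the counts accumulated over a whole test set.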

Aibinu et al. [82] used Mean Square Error (MSE) and Correlation Coefficient (CC) in addition to the previous measures to represent the performance of their skin detector. Both MSE and CC are calculated from the difference between the binary mask and the ground truth. The former indicates the closeness of the output image to the ground truth, applying the same penalty to false detection and false rejection. However, it cannot generally be used as a comparison factor, since the result depends on the size of the image. The latter quantifies the same idea but with a normalized value in the range [-1,+1], where -1 indicates extreme dissimilarity and +1 a perfect detection. Taking $S_M$ as the binary mask and $S_G$ as the ground truth:

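$$CC = \frac{\sum_{x=1}^{M}\sum_{y=1}^{N}\left(S_M(x,y)-\bar{S}_M\right)\left(S_G(x,y)-\bar{S}_G\right)}{\sqrt{\sum_{x=1}^{M}\sum_{y=1}^{N}\left(S_M(x,y)-\bar{S}_M\right)^2\,\sum_{x=1}^{M}\sum_{y=1}^{N}\left(S_G(x,y)-\bar{S}_G\right)^2}}$$

$$MSE = \frac{1}{MN}\sum_{x=1}^{M}\sum_{y=1}^{N}\left(S_M(x,y)-S_G(x,y)\right)^2$$

Here $\bar{S}_M$ and $\bar{S}_G$ denote the mean values of the mask and the ground truth over the $M \times N$ image.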
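As a rough illustration of these two measures, the sketch below computes MSE and CC for a pair of equally sized masks. The function names are hypothetical, and returning 0 when one of the masks is constant (where CC is undefined) is an assumption made here for convenience.

```python
import numpy as np

def mean_square_error(mask, truth):
    """MSE between a binary output mask and the ground truth mask."""
    m = np.asarray(mask, dtype=np.float64)
    g = np.asarray(truth, dtype=np.float64)
    return float(np.mean((m - g) ** 2))

def correlation_coefficient(mask, truth):
    """Correlation coefficient between the output mask and the ground truth."""
    m = np.asarray(mask, dtype=np.float64)
    g = np.asarray(truth, dtype=np.float64)
    dm = m - m.mean()  # deviation of the mask from its mean
    dg = g - g.mean()  # deviation of the ground truth from its mean
    denom = np.sqrt(np.sum(dm ** 2) * np.sum(dg ** 2))
    if denom == 0:
        # Assumed convention: CC is undefined for a constant mask, report 0.
        return 0.0
    return float(np.sum(dm * dg) / denom)
```

A mask identical to its ground truth gives CC = +1 and MSE = 0, while a fully inverted mask gives CC = -1.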

The Matthews correlation coefficient is a metric that takes FP, FN, TP, and TN into account [77]. Montenegro et al. [65] used this measure to compare the performance of different color spaces with a Gaussian model.

$$C = \frac{(TP \times TN) - (FP \times FN)}{\sqrt{(TP + FP)(TN + FP)(TN + FN)(TP + FN)}} \qquad (7)$$

C is a measure in the range [-1,+1], where +1 expresses perfect agreement and -1 indicates total false detection and rejection. This coefficient is not very useful for assessment in skin segmentation, particularly in cases with large background areas: the correct detection of such regions, which most classifiers segment easily, distorts the result. In other words, the coefficient cannot provide a fair evaluation for imbalanced classification problems in which one class heavily outnumbers the other. In addition, when an algorithm gives a very low FP and at the same time a very low TP, C will be unfairly high [77].
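For completeness, the Matthews coefficient can be evaluated from the four confusion-matrix counts as in the short sketch below. The function name is hypothetical, and returning 0 when the denominator vanishes is an assumed convention.

```python
import math

def matthews_correlation(tp, fp, fn, tn):
    """Matthews correlation coefficient from confusion-matrix counts."""
    denom = math.sqrt((tp + fp) * (tn + fp) * (tn + fn) * (tp + fn))
    if denom == 0:
        # Assumed convention: undefined when a row or column of the matrix is empty.
        return 0.0
    return (tp * tn - fp * fn) / denom
```

With TP = 2, FP = 1, FN = 1 and TN = 4, the numerator is 7 and the denominator is 15, so C is about 0.47.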

B. Discussion

This section reviewed the most common existing datasets and evaluation metrics in the skin detection literature. The impact of both the number and the variety of training and test sets on classifier performance is well known; however, this factor is often ignored when different works are evaluated. Most existing works report statistical results based on one of the abovementioned datasets or on self-collected images, without considering the effect of the size and diversity of the image sets. The second factor is execution and training time. Although a few works have reported training and evaluation times, most have not considered the importance of evaluation time. Training time is an indispensable factor, particularly for dynamic (online) approaches. Skin detection is often employed as a preprocessing step, which makes real-time operation necessary. Furthermore, authors have still not settled on an exact definition of skin. For example, when labeling ground truth images, some authors have treated lips, mouth, etc. as skin while others have excluded them, and this definitely affects the results. From another point of view, many authors have reported their performance using TP, FP, P, R, and other non-graphical measures. However, skin detection is in a sense a fuzzy problem in which each method has an optimal threshold that is not constant across images. In some cases, the F-score, ROC curve, and AUC have been reported to be strong criteria for describing the performance of an algorithm. In fact, the ROC curve represents the stability of a detector independently of the choice of threshold. An associated numerical measure is the AUC, described above. Unfortunately, few authors have used AUC, even though it is very simple to calculate using curve fitting techniques.
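To show how little is needed to obtain a ROC curve and its AUC, the sketch below sweeps a threshold over per-pixel skin scores and integrates the resulting curve with the trapezoidal rule. This is one simple choice and not necessarily the curve fitting procedure the text refers to. The function names are hypothetical, and the scores are assumed to be skin likelihoods with both classes present in the ground truth.

```python
import numpy as np

def roc_points(scores, truth, thresholds):
    """Return (FPR, TPR) arrays, one point per threshold.

    A pixel is labeled skin when its score is >= the threshold.
    Assumes `truth` contains at least one skin and one non-skin pixel.
    """
    scores = np.asarray(scores, dtype=np.float64).ravel()
    truth = np.asarray(truth).astype(bool).ravel()
    n_pos = np.sum(truth)
    n_neg = np.sum(~truth)
    fpr, tpr = [], []
    for t in thresholds:
        pred = scores >= t
        tpr.append(np.sum(pred & truth) / n_pos)
        fpr.append(np.sum(pred & ~truth) / n_neg)
    return np.array(fpr), np.array(tpr)

def auc_trapezoid(fpr, tpr):
    """Area under the ROC curve by the trapezoidal rule."""
    order = np.lexsort((tpr, fpr))  # sort by FPR, ties broken by TPR
    # Anchor the curve at (0, 0) and (1, 1).
    x = np.concatenate(([0.0], fpr[order], [1.0]))
    y = np.concatenate(([0.0], tpr[order], [1.0]))
    return float(np.sum(np.diff(x) * (y[1:] + y[:-1]) / 2.0))
```

A typical call would be `auc_trapezoid(*roc_points(scores, truth, np.linspace(0, 1, 101)))`, where the number of thresholds controls how finely the curve is sampled.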

In summary, there are two major steps in fairly measuring the performance of a skin classifier. The first concerns the metrics: ROC and AUC seem very promising for comparison purposes. The second concerns the training and test sets. To address the issues above, a new skin detection dataset (SDD) has recently been compiled and made publicly available for future studies. In developing SDD, the limitations of former databases were addressed from several points of view. SDD contains 21,000 images, 15,000 of which are photos without any skin pixels. All images are divided into 4 sets. Images were captured under different illumination conditions, with a variety of imaging devices, and cover a diversity of skin tones from people all around the world. Some images are snapshots from online videos and movies, while others are static images taken from popular face recognition/tracking/detection datasets and the skin datasets cited above. Table 3 shows the statistics of SDD. The first section of the database is intended for training and mainly comprises single-face images under different lighting conditions. Sections 2-4 in the table contain evaluation photos with manually annotated GTs.

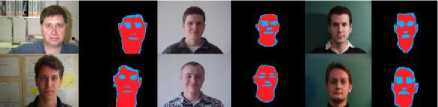

GTs have been marked with careful attention to ensuring that no pixel is mislabeled, i.e. all pixels annotated as skin are actual skin pixels and all pixels annotated as non-skin are not. Every image contains certain pixels whose skinness is either questionable (such as the regions around eyebrows and eyes, lips, nostrils, etc.) or hard to determine because they lie on the boundary between skin and non-skin, where in some images it is difficult to discriminate them and in others annotating them takes a lot of time. Thus, GT images are divided into 3 non-overlapping regions: skin pixels, non-skin pixels, and pixels that are ignored in both evaluation and training. Fig. 4 and Fig. 5 show a set of training images and a set of evaluation images, respectively. Black points indicate non-skin regions, red points are skin pixels, and blue points mark ignored pixels. Using this database allows precise measurement of the performance of skin detection methods. In addition, authors can use the different test sections to check whether their results are independent of the test set. Also, as the number of pixels in both training and evaluation increases, the results become more reliable.
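The ternary ground truth described above changes how the confusion matrix is counted, since ignored pixels must be left out entirely. The following sketch illustrates one way to do this. It assumes the GT image is an (H, W, 3) RGB array in which red marks skin, blue marks ignored pixels, and everything else (black) is non-skin; the threshold of 127, the label constants, and the function names are all hypothetical and not taken from the SDD documentation.

```python
import numpy as np

SKIN, NON_SKIN, IGNORED = 1, 0, -1  # hypothetical label codes

def decode_ground_truth(gt_rgb):
    """Map a color-coded GT image to per-pixel labels.

    Assumed encoding: red = skin, blue = ignored, otherwise non-skin.
    """
    gt = np.asarray(gt_rgb)
    r, g, b = gt[..., 0], gt[..., 1], gt[..., 2]
    labels = np.full(gt.shape[:2], NON_SKIN, dtype=np.int8)
    labels[(r > 127) & (g <= 127) & (b <= 127)] = SKIN
    labels[(b > 127) & (r <= 127) & (g <= 127)] = IGNORED
    return labels

def masked_confusion(pred, labels):
    """Return (TP, FP, FN, TN), skipping pixels labeled IGNORED."""
    pred = np.asarray(pred).astype(bool)
    skin = labels == SKIN
    non_skin = labels == NON_SKIN
    tp = int(np.sum(pred & skin))
    fn = int(np.sum(~pred & skin))
    fp = int(np.sum(pred & non_skin))
    tn = int(np.sum(~pred & non_skin))
    return tp, fp, fn, tn
```

Because ignored pixels appear in none of the four counts, a detector is neither rewarded nor penalized for its decision on ambiguous regions such as lips or skin boundaries.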

Table 3. Statistics of the SDD

| Dataset section | Goal | No. of S (skin) images | No. of NS (non-skin) images |
|---|---|---|---|
| 1 | Training | 2000 | 4000 |
| 2 | Test | 1000 | 4000 |
| 3 | Test | 1000 | 4000 |
| 4 | Test | 1000 | 4000 |

Fig.4. A Set of Training Images in SDD

Fig.5. A Set of Test Images in SDD


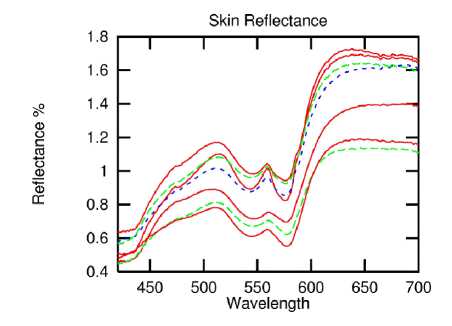

III. Study of different Color Spaces

Color is the perceptual result of light in the visible region of the spectrum, having wavelengths between 400 nm and 700 nm, incident upon the retina [83]. A color space is a three-dimensional geometric space with axes appropriately defined so that symbols for all possible color perceptions of humans fit into it in a psychological order [84]. One large group of color spaces depends directly on the imaging device rather than on the way humans perceive color. They are often exploited for digital applications, representations and computations. Imaging equipment often uses disparate color spaces with a specific response to environmental conditions due to its particular features, e.g. printers often use CMY whereas TVs utilize the popular orthogonal (YCbCr) color spaces. However, to ensure proper color rendition across devices, device-independent color spaces such as the CIE (Commission Internationale de l'Éclairage) color models are required to serve as an interchange standard. CIE colors are the colorimetric spaces that are device-independent.

Although color is not directly utilized in some skin detection approaches, it is one of the most decisive factors affecting the performance of skin detection algorithms. Both device-dependent and device-independent color spaces have been used in skin classification tasks, with different frequencies. Albiol et al. [5] mathematically proved that the optimum performance of skin classifiers is independent of the selected color space; in practice, however, the performance of most skin detectors depends significantly on the color space choice. Therefore, widely used color spaces are discussed in this section.


A. Device dependent color spaces

Device dependent color spaces have gained extensive popularity for skin detection. RGB, the most common color space, is expressed in terms of primary colors, i.e. colors are encoded as a linear weighted addition of red, green and blue components. Several algorithms such as [7,78,85,86] are based on the RGB color space. In addition, it has been stated in [40] that RGB is the best choice for human skin detection according to particular criteria. However, there are several issues associated with the RGB color space. First of all, the R, G and B components are highly correlated. Moreover, luminance (intensity) is linearly related to these components, which means that a change in luminance, which happens easily, leads to high variation in the RGB values [38,76]. This can be observed in the stretched skin cluster in RGB color space. Normalized RGB is the normalized version of the RGB color space in which the sum of the r, g and b components is unity. This color space is often considered to be more robust against lighting variations and ethnicity in specific conditions and to exhibit a tighter cluster [38,17]. Normalized rgb is a popular color space utilized in [87,88,89] for skin segmentation in different approaches.
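As an illustration of the normalization described above, the following minimal sketch divides each component by the sum R+G+B. The function name `normalize_rgb` and the handling of pure black (mapped to equal thirds) are assumptions made here, not part of the cited works.

```python
def normalize_rgb(r, g, b):
    """Map an RGB triple to normalized rgb, where r + g + b = 1."""
    total = r + g + b
    if total == 0:
        # Assumption: pure black has no defined chromaticity,
        # so it is mapped to the neutral point (1/3, 1/3, 1/3).
        return (1 / 3, 1 / 3, 1 / 3)
    return (r / total, g / total, b / total)
```

Scaling all three components by the same factor, which is a crude model of an intensity change, leaves the normalized values unchanged; for example, (200, 100, 100) and (100, 50, 50) both map to (0.5, 0.25, 0.25).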

One large branch of device dependent color spaces is utilized in TV transmission and digital photography systems. The orthogonal color space family includes well-known color spaces such as YCbCr, YCgCr, YDbDr, YPbPr, YIQ, YUV, and YES. YIQ is employed in NTSC TV broadcasting [90], whereas YCbCr is utilized for JPEG, H.263 and MPEG compression tasks since it reduces the redundancy in the RGB channels [20,91]. In addition, the YUV color model is exploited by the PAL (Phase Alternating Line) and SECAM (Séquentiel Couleur À Mémoire) color TV systems [76]. In these color spaces, unlike RGB, the luminance channel is separated from the chrominance channels, yielding a very tight pseudo-elliptical skin cluster. Due to the particular features of these color spaces, such as the separation of luminance and chrominance channels, relatively simple conversion from RGB, and a relatively tight skin cluster, orthogonal color models have been frequently used in skin segmentation [92-95].
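To illustrate how simple the conversion from RGB is, the sketch below uses the full-range BT.601 coefficients adopted by JPEG/JFIF. The survey does not state which YCbCr variant each cited work uses, so this particular choice and the function name `rgb_to_ycbcr` are assumptions.

```python
def rgb_to_ycbcr(r, g, b):
    """Convert 8-bit RGB to full-range YCbCr (JFIF / BT.601 coefficients)."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return y, cb, cr
```

Any gray pixel (R = G = B) maps to Cb = Cr = 128, which shows that the two chrominance channels carry no intensity information for neutral colors.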

Perceptual color spaces such as HSI, HSV, HSB, HSL and TSL are very attractive color spaces in the skin detection literature. In these color models, each pixel is represented by Hue (tint or color), Intensity (lightness) and Saturation, which relate to human color perception [96]. As in the orthogonal color spaces, luminance and chrominance properties are separated; in addition, the intuitiveness of these color spaces and their invariance to white light sources make them favorable for skin segmentation [97,98]. However, the nonlinear conversion from RGB is relatively expensive to compute, and the hue component is discontinuous. Furthermore, the cyclic nature of the H component prevents the use of parametric skin color models [38]. The HS components can, however, be represented in Cartesian rather than polar coordinates, which not only solves this problem but also forms a tighter skin cluster. Similar to HSV, TSL is a normalized chrominance-luminance transformation of normalized RGB with more intuitive values [38]. Baskan et al. [99], Zahir et al. [100], Sanmiguel et al. [70], Vishwakarma et al. [96] and Prasertsakul et al. [81] made use of perceptual color spaces for skin segmentation and illumination compensation.
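The Cartesian representation of the HS components mentioned above can be sketched as follows, treating hue as an angle and saturation as a radius. This is a minimal illustration using Python's standard `colorsys` module; the function name `hs_cartesian` is an assumption, and the cited works may use a different HSV definition or scaling.

```python
import colorsys
import math


def hs_cartesian(r, g, b):
    """Return (x, y) = (S cos H, S sin H) for an 8-bit RGB pixel."""
    # colorsys returns hue, saturation and value, each in [0, 1].
    h, s, _ = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
    angle = 2 * math.pi * h  # hue as an angle in radians
    return s * math.cos(angle), s * math.sin(angle)
```

Two reddish pixels such as (255, 0, 10) and (255, 10, 0) have hue values near 1 and near 0 respectively, yet their Cartesian coordinates are close to each other, so the wrap-around of the hue axis no longer splits the cluster.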

There are also less frequently used color models which are obtained through either linear or nonlinear transformations of other color spaces. In addition, there are variations in which components of different color spaces are combined for skin segmentation. Several logarithmic variations of RGB are applied in skin detectors since they compensate for illumination change [72,101,102,103]. In [23], RGB, HS and CbCr were combined, whereas YCgCr and YIQ were used simultaneously in [104].


B. Device independent color spaces

CIE-XYZ, CIE-xy, CIE-Lab, CIE-Luv and CIE-Lch are colorimetric models defined and standardized by the CIE committee. CIE-XYZ, which is both the foundation of the other spaces and the oldest model, is based on measurements of human visual perception. It is obtained using a linear transformation from RGB [90]. Y represents the luminance component, while X and Z are related to the chrominance values. CIE-xy is the chromaticity-normalized version of CIE-XYZ. In both of them, color differences are not perceived equally throughout the space. CIE-Lab attempts to make the luminance scale more perceptually uniform. It describes all the colors visible to the human eye. The chromaticity of CIE-Luv is different from that of CIE-Lab, but the luminance, i.e. L, is identical. Since RGB is device dependent, there is no straightforward conversion to device-independent color spaces; however, using an absolute color space (e.g. sRGB, which is used in many printers), RGB is first transformed using a device-dependent adjustment, and then CIE-Lab can be obtained. This complexity of transformation is a noticeable disadvantage of device-independent color models, making them less suitable for skin classification algorithms. Nevertheless, Lee et al. [105], Shin et al. [40], Ravi et al. [106] and Lindner et al. [107] built skin classifiers based on device-independent color spaces.
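The two-step conversion described above can be sketched for the specific case of sRGB input: the values are first linearized and then mapped to CIE-XYZ with a fixed matrix, after which the CIE-xy chromaticity follows by normalization. The sRGB transfer function and the D65 matrix are the standard published ones; assuming that the input is sRGB, and the function names used here, are choices made for this illustration only.

```python
def srgb_to_linear(c8):
    """Undo the sRGB transfer function for one 8-bit channel value."""
    c = c8 / 255
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def srgb_to_xyz(r, g, b):
    """Convert 8-bit sRGB to CIE-XYZ (D65 white point, Y of white = 1)."""
    rl, gl, bl = (srgb_to_linear(v) for v in (r, g, b))
    x = 0.4124564 * rl + 0.3575761 * gl + 0.1804375 * bl
    y = 0.2126729 * rl + 0.7151522 * gl + 0.0721750 * bl
    z = 0.0193339 * rl + 0.1191920 * gl + 0.9503041 * bl
    return x, y, z


def xyz_to_xy(x, y, z):
    """Chromaticity normalization: CIE-xy from CIE-XYZ."""
    s = x + y + z
    if s == 0:
        return 0.0, 0.0  # assumption: black is mapped to the origin
    return x / s, y / s
```

For white input (255, 255, 255) the resulting chromaticity is approximately (0.3127, 0.3290), the D65 white point.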


C. Color space comparison

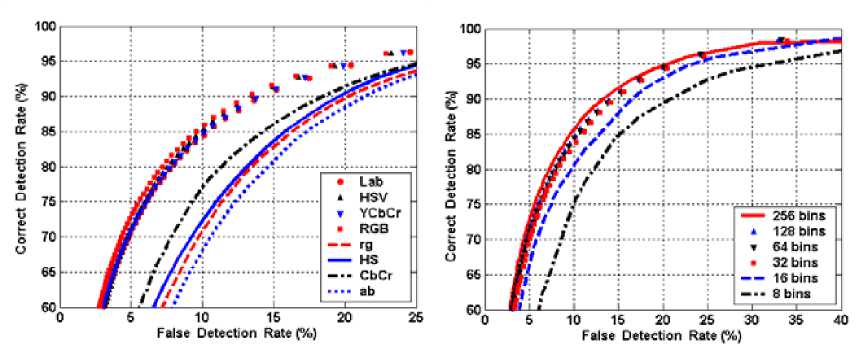

Color spaces undoubtedly affect the performance of skin detectors, and different authors have reached different conclusions about the influence of color space choice on skin segmentation algorithms. In [108], it is claimed that TSL is the best color space for skin detection, particularly when applied in conjunction with Gaussian and mixture of Gaussian models. Khan et al. [15] stated that IHLS has the best performance in almost all methods except the histogram LUT (look-up table), and that RGB, independent of the method, has the worst performance. A comparison of a variety of color spaces with a single Gaussian model concluded that YCbCr has the best performance [76]. On the other hand, Montenegro et al. compared RGB, HSV, YCbCr, CIE-Lab and CIE-Luv on the SFA database. Using MCC as the evaluation metric and a Gaussian classifier as the method, they concluded that CIE-Lab performs best while normalized RGB has the lowest performance [65]. Based on a comparative study in [109], HSV seems to yield a more robust skin detector than other color spaces. Moreover, it has been concluded in [82] that the performance of RGB-based techniques lessens when the distinction between skin pixels and objects increases, but that overall, using YCbCr yields better results.

Littman et al. [110] compared the performance of RGB, YIQ, and YUV in their hand recognition and detection approach on a small subset of evaluation images, concluding that the system is independent of the choice of color space. The authors of [40] also evaluated the dependency of skin detectors on the selected color model. They performed an exhaustive experiment on different color spaces, reporting that the RGB color space provides the best separability between skin and non-skin patches. In another work [111], a comparison study is carried out using Gaussian and histogram approaches on a dataset of 805 color images. It is claimed that the choice of color space significantly changes the performance, and that the HSI color space combined with a histogram model outperforms the other cases. In addition, the authors stated that skin color modeling has a greater impact than the color space transformation.

The work presented in [41] compares the performance of HSI, RGB, CIE-Lab and YCbCr. The best performance in terms of classification error is obtained with the HSI and YCbCr models. However, according to [56], HSI should be employed with the histogram technique to produce acceptable results. The authors of [112] stated that HSI is the best color space and used it in an explicitly defined method. In a recent study [106], the use of both explicitly defined models and a single Gaussian distribution showed that YPbPr outperforms other models. González et al. [114] compared the performance of 10 common color spaces based on the k-means clustering algorithm on 15 images from the AR database with manually annotated ground truths. They concluded that HSV, YCgCr, and YDbDr are the best color spaces. Nalepa et al. [115] showed that, statistically, the combination of RGB and HSV outperforms other color spaces in a recall-oriented system using an explicitly defined method. In [116], an artificial neural network was applied to skin segmentation in a variety of color spaces, leading to the conclusion that YIQ is the best choice. The idea of adapting an optimal chrominance color space rather than a segmentation model has been suggested in [117]. Here, a nonlinear transformation between YUV and a new TSL* color space is used to boost the segmentation.


D. Discussion

A review of the utilization of different color spaces in skin detection was presented, together with a comparison based on results from the literature. To make such comparisons realistic, some points should be considered. First, as stated before, there are certain factors which affect the performance of detectors but are not reported in the studies, e.g. the size of the training database. In some cases the method used and the evaluation set are also very decisive. In neural networks, the performance of the classifier depends directly on the number of neurons, as well as on the initial guess of the weights. A similar logic applies to other approaches. Some databases are based on general images with different illumination and imaging conditions, while others are compiled from images taken in controlled conditions, which can change the results in favor of some specific color spaces. In addition, some methods [8,94,95,118,119] have omitted the luminance component in order to diminish the role of illumination. Several authors investigated the validity of this notion and found that using luminance always boosts the performance of detectors [36,39,40,41,111]. Nevertheless, a very straightforward conclusion is that the performance of a color space is associated with several factors, including the method used. In order to compare the performance of one classifier using different color models, all other factors which may influence the performance should be considered. Also, from a wider perspective, it is evident that different authors utilizing similar methods have reported inconsistent results. This corroborates the fact that the training sets, evaluation datasets and calibration factors used in each method are also decisive. The effort to find the optimum color space for skin segmentation has been redirected toward adapting optimal skin detection models and illumination compensation algorithms, as performance comparisons show that the effect of the segmentation technique far outweighs that of the color model.


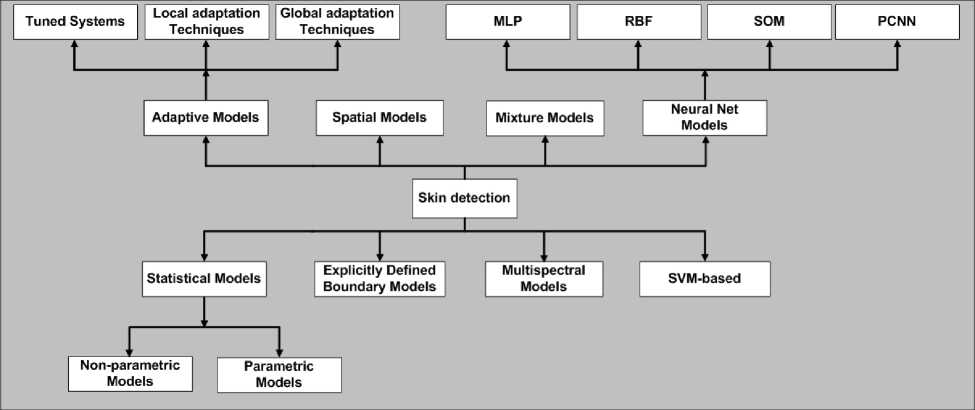

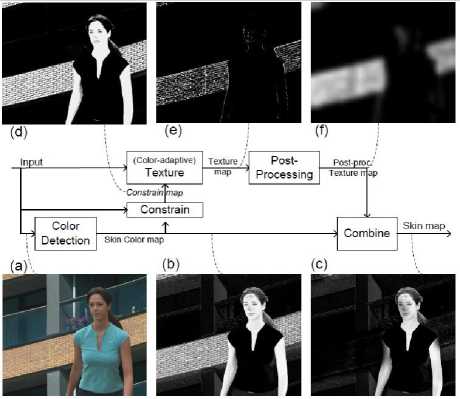

IV. Skin Detection Methods

In this section, most techniques used in the segmentation of skin pixels are elucidated. Skin detection approaches can be classified into 8 groups, which are not necessarily mutually exclusive. Explicitly defined methods are based on observation of the skin cluster in a particular color space, and the idea is to design segmentation rules based on the boundary of the skin cluster. Statistical methods are based on statistical information extracted from histograms of training skin and non-skin pixels. Non-parametric or parametric models are developed with the aim of obtaining the probability that a pixel belongs to the skin or non-skin class. Artificial neural networks (ANNs) are very useful tools for either estimating the skin distribution or directly classifying the pixels. ANNs with a variety of structures have been utilized in skin segmentation of color images based on both color and texture information. Spatial analysis methods, which have been developed recently, do not concentrate only on in-pixel information but often exploit neighboring pixels to boost classification performance. Adaptive methods often reach higher accuracies at the cost of computation, mostly in images and videos with specific features. These methods often improve the performance of the former methods by dynamically updating the classifier's parameters based on global or local information in the test image. Non-visible spectral models are based on relatively expensive tools working in different spectral bands. SVM-based systems are also used for classification of skin pixels in specific applications. Finally, mixture techniques are based on combinations of the former methods. Fig. 6 shows the classification of skin detection methods.

Fig.6. Skin Detection Approaches


A. Explicitly Defined Boundary Models

Among different people, the main difference in skin color lies in its intensity (brightness) rather than its chrominance (color) [24,114,116]. This observation has been a leading factor in the development of a very common and simple approach to skin classification. Explicitly defined methods are based on a set of rules derived from the skin locus in 2D or 3D color spaces. These methods have a pixel-based processing scheme in which, for any given pixel, the rules are checked to decide on the class of that pixel. They are very popular mainly due to their simple and quick training, low-cost implementation and fast processing. However, several factors degrade the performance of explicit classifiers, including their static nature, high dependence on the training images, the effectiveness of the rules, and their inability to deal with most skin detection challenges. If the training set is too general, the classifier gives a high false positive rate, and if it consists of images taken under specific conditions, the classifier is not applicable to many images. Nevertheless, explicitly defined boundary models, with their low cost and fast computation, are suitable for many applications in which lower accuracy is acceptable.

Kovac et al. [120] proposed an explicitly defined boundary model in RGB color space for two conditions, daylight and flashlight, which has been reused in [16,85,86,119]. Here, a pixel is considered skin in uniform daylight conditions if it satisfies R>95, G>40, B>20, max{R,G,B}-min{R,G,B}>15, |R-G|>15, R>G and R>B. In flashlight illumination, however, a pixel is assumed to be skin if R>220, G>210, B>170, |R-G|<15, R>G and R>B. Zhang et al. [121] transform the 3D color space to a polar 2D color space, and by means of an explicitly defined method on the radius and phase of any pixel in the polar space, the image is segmented into skin and non-skin regions. The boundary defined in [122,123] is based on the normalized rgb color space. For a given pixel, upper and lower boundaries for the “g” channel are determined based on the value of the “r” component. Also, the sRGB color space has been employed in [124,125] using very simple rules on sR, sG and sB values. Other variations of this approach based on RGB and related color spaces are proposed in [87,101].
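The RGB rules attributed to Kovac et al. can be written directly as two predicates. The sketch below transcribes the thresholds exactly as they are listed in the paragraph above; the function names are assumptions introduced here, and the code is an illustration rather than the original implementation.

```python
def is_skin_daylight(r, g, b):
    """Explicit RGB rule for uniform daylight, as listed in the text."""
    return (
        r > 95 and g > 40 and b > 20
        and max(r, g, b) - min(r, g, b) > 15
        and abs(r - g) > 15
        and r > g and r > b
    )


def is_skin_flashlight(r, g, b):
    """Explicit RGB rule for flashlight illumination, as listed in the text."""
    return (
        r > 220 and g > 210 and b > 170
        and abs(r - g) < 15
        and r > g and r > b
    )
```

Because each pixel is tested independently with a handful of comparisons, the rule needs no training data at run time, which is the source of the low cost noted for this family of methods.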

Orthogonal color spaces are frequently used for explicitly defined boundary models. Sagheer et al. [33] exploited two cases: normal lighting (Cb ∈ [110, 120] and Cr ∈ [135, 150]) and varying lighting conditions (Cb ∈ [110, 160] and Cr ∈ [120, 150]). In order to detect faces, the rules in [126,127] are dynamically reconfigured based on the pixel's value. A pixel's skinness is investigated based on its luminance value:

$$
\text{if } Y > 128:\quad a_1 = -2 + \frac{256 - Y}{16},\quad a_2 = 20 - \frac{256 - Y}{16},\quad a_3 = 6,\quad a_4 = -8
$$

$$
\text{if } Y \le 128:\quad a_1 = 6,\quad a_2 = 12,\quad a_3 = 2 + \frac{Y}{32},\quad a_4 = -16 + \frac{Y}{16}
$$

In this case, a pixel is classified as skin if it satisfies the following conditions:

$$
C_r > -2(C_b + 24),\quad C_r > -(C_b + 17),\quad C_r > -4(C_b + 32),\quad C_r > 2.5(C_b + a_1)
$$

$$
C_r > a_3,\quad C_r > 0.5(a_4 - C_b),\quad C_r < \frac{220 - C_b}{6},\quad C_r < \frac{4}{3}(a_2 - C_b)
$$

In [31,34,128,129], different elliptical boundaries are estimated based on the fact that the skin locus in the CbCr plane resembles an ellipse; in evaluation, only pixels enclosed by the ellipse are considered skin. An FPGA implementation of a face detector based on an explicitly defined boundary model in YCbCr color space is proposed in [21]. Similar classification works (using orthogonal color models) based on boundary models whose parameters vary with the training sets are presented in [12,118,130,131,132].

Many works employed perceptual color spaces such as HSV to define explicit rules. Zahir et al. [100] proposed a simple boundary model using HSV color space for indoor and outdoor conditions. Pitas et al. [133] utilized HSV color space with the following rules to detect skin pixels:

$$
V > 40,\quad 0.2 < S < 0.6,\quad \left(0^\circ < H < 25^\circ \;\lor\; 335^\circ < H < 360^\circ\right)
$$

Garcia et al. [134] designed more sophisticated rules based on the same color space:

$$
\begin{aligned}
& V > 40,\quad H < -0.4V + 75,\quad 10 < S < (-H - 0.1V + 110) \\
& \text{if } H > 0:\quad S < 0.08(100 - V)H + 0.5V \\
& \text{if } H < 0:\quad S < 0.5H + 35
\end{aligned}
\tag{11}
$$

In [112,135,136,137], boundary models based on skin locus in other perceptual color spaces are proposed.

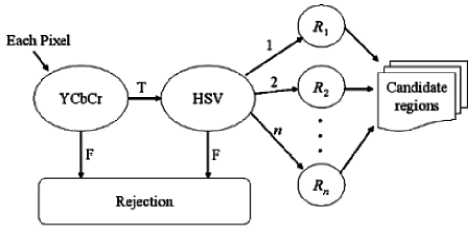

Combining color spaces has also been effective in boosting the performance of explicitly defined boundary models. Thakur et al. [23] employed the RGB, CbCr and HS color models. Rules are set independently for the three color spaces and the results are simply fused to make the final decision. An algorithm for tattoo detection based on a combination of YCbCr and HSV is proposed in [138]. Kim et al. [139] proposed a technique based on the fusion of the RGB, HSV and YCbCr color spaces. A skin candidate region T(x,y) is calculated as:

$$
T(x,y) = D_1(x,y)\,W_1 + D_2(x,y)\,W_2 + D_3(x,y)\,W_3 \tag{12}
$$

where Wi=TPi/FPi (computed on a set of 50 training images) and Di(x,y) represents the detected skin pixel in color space i. Subsequently, a threshold is applied to decide whether or not the pixel located at (x,y) is skin. Furthermore, fusing the results of different color spaces to reduce false positives has been a common procedure; some examples are: RGB and YCbCr [140], YCbCr and YUV [8], HSV and YUV [109], HSV and YCbCr [141,142], RGB and YUV [143], and HSV and YCgCr [144]. Gasparini et al. [98] used a genetic algorithm to find accurate boundaries of the skin cluster. They compared the method with some former methods including [120,133]. The results showed better performance for the genetic algorithm.
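The weighted fusion of Eq. 12 can be sketched as follows for binary detection masks stored as nested lists. The weights follow the stated definition Wi = TPi/FPi; the function names and the threshold value used in the usage note are hypothetical, since the text does not give the threshold of [139].

```python
def fusion_weights(true_positives, false_positives):
    """Weights W_i = TP_i / FP_i, one per color space."""
    return [tp / fp for tp, fp in zip(true_positives, false_positives)]


def fuse(masks, weights, threshold):
    """Fuse per-color-space binary masks D_i into one decision map.

    masks:     list of 2D lists with entries 0 or 1 (same size for all i)
    weights:   list of W_i values
    threshold: hypothetical decision threshold applied to T(x, y)
    """
    height, width = len(masks[0]), len(masks[0][0])
    decision = []
    for y in range(height):
        row = []
        for x in range(width):
            # T(x, y) = sum_i D_i(x, y) * W_i, as in Eq. 12
            t = sum(w * m[y][x] for w, m in zip(weights, masks))
            row.append(t > threshold)
        decision.append(row)
    return decision
```

For example, with TP counts (90, 80, 60) and FP counts (10, 20, 30) the weights are 9, 4 and 2, so a pixel detected only by the first color space outweighs one detected by the other two together.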


B. Statistical Models

Skin detection is a probabilistic problem and many techniques based on general distribution of skin color, each in a particular color model, have been developed. Based on an enormous training set, these methods estimate the probability that an observed pixel is associated with skin. Unlike parametric methods which are based on parameterized families of probability distributions, nonparametric methods are based on descriptive and inferential statistics. In the following subsections, these methods are explained.


1) Non-parametric (histogram based) models

There is no explicit definition of a probability density function in non-parametric techniques. Instead, a point-to-point mapping between the color distribution and the quantized color model is provided, i.e., a skinness probability value is assigned to each discrete point of the entire color model. The single histogram based Look-Up Table (LUT) model is a common approach to modeling the skin color cluster. In this technique, the distribution of skin pixels in a particular color space is obtained from a set of training skin pixels. Considering RGB as a color space at the finest possible resolution (i.e. 256 bins per channel), the 3D RGB histogram (or LUT) is constructed from 256×256×256 cells, each representing the skin probability of one possible RiGiBi value. The learning process is simple, but populating the histogram requires a massive skin dataset. The final probability for each possible RiGiBi value is obtained as:

$$
P(R_iG_iB_i) = \frac{\text{No. of occurrences of } R_iG_iB_i}{\text{total counts}} \tag{13}
$$

This simply constructs an RGB-based LUT of size $256^3$. The resulting huge storage requirement, which is the main weakness of the histogram model, can be addressed by using coarser bins and 2D color models.
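A single-histogram LUT following Eq. 13 can be sketched with a sparse dictionary instead of a dense array. The coarser bin count of 32 per channel, the requirement that it divides 256, and the function names are assumptions made for this illustration.

```python
from collections import Counter


def build_lut(skin_pixels, bins=32):
    """Build a sparse skin LUT from (R, G, B) training pixels.

    bins is the number of bins per channel and is assumed to divide 256.
    Returns (lut, step), where lut maps a quantized cell to its probability.
    """
    step = 256 // bins
    counts = Counter((r // step, g // step, b // step) for r, g, b in skin_pixels)
    total = sum(counts.values())
    # Eq. 13: occurrences of the cell divided by the total count
    lut = {cell: n / total for cell, n in counts.items()}
    return lut, step


def skin_probability(lut, step, r, g, b):
    """Look up the probability of a pixel; unseen cells get 0."""
    return lut.get((r // step, g // step, b // step), 0.0)
```

With 32 bins per channel the table has at most 32³ = 32,768 cells rather than 256³, which illustrates the storage saving from coarser quantization.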

Several authors have utilized LUT-based skin color modeling. Jones et al. [45] employed this technique for both person detection and adult image recognition. In order to reduce the false positives of a face detector, a set of LUTs in different color spaces such as RGB and YUV is used in [145]. Liu et al. [18] utilized the HS color model in conjunction with a LUT with 256×256 bins to build a skin classifier. They used morphological and blob analysis for further enhancement. Yoo et al. [146] also used a 32×32 LUT (1024 bins in total) in the same color model for face tracking. Ibraheem et al. [147] adopted a mixture of histogram models in RGB, YCbCr and HSV for the recognition of hand gestures. The overall model is the linear weighted summation of all 3 histograms. Chen et al. [148] employed the normalized histogram of skin color in HSV color space to filter out many non-face regions.

Contrary to the former LUT approach, the Bayesian classifier considers two histograms, one of skin and one of non-skin pixels. Due to the obvious overlap between skin and non-skin pixels in different color spaces (Jones et al. [45] showed that in their study, 97.2% of colors occurred both as skin and as non-skin), P(RiGiBi) in the above equation is in fact a conditional probability for which it is already assumed that the observed pixel belongs to the skin class. Hence, according to the Bayes rule:

$$
P(\text{skin} \mid R_iG_iB_i) = \frac{P(R_iG_iB_i \mid \text{skin})\,P(\text{skin})}{P(R_iG_iB_i \mid \text{skin})\,P(\text{skin}) + P(R_iG_iB_i \mid \text{non-skin})\,P(\text{non-skin})} \tag{14}
$$

Using the skin and non-skin histograms, P(RiGiBi|skin) and P(RiGiBi|non-skin) are calculated, respectively. In designing a Bayesian classifier, two assumptions for the prior probabilities are possible [25]. The ML (Maximum Likelihood) approach assumes P(skin)=P(non-skin), while under the MAP (Maximum a Posteriori) assumption, P(skin) and P(non-skin) are both estimated from a training set. Zarit et al. [97] compared the performance of Bayesian classifiers using both the ML and MAP premises in 5 different color spaces. It is concluded that the ML-based classifier performs significantly better. However, it is possible to use the following detection rule to eliminate the effect of the prior probabilities [38]:

$$
\frac{P(R_iG_iB_i \mid \text{skin})}{P(R_iG_iB_i \mid \text{non-skin})} > \theta, \qquad \theta = K\,\frac{P(\text{non-skin})}{P(\text{skin})} \tag{15}
$$

where K is a tunable parameter that removes the dependency of the detector's behavior on the prior probabilities. The optimum value of θ is obtained from the ROC curve, depending on the application. In [45], it is proved that for any choice of prior probabilities, the resulting ROC is the same.
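The posterior of the Bayes rule and the likelihood-ratio test of Eq. 15 can be sketched with two sparse histograms. The bin width `step`, the small constant `eps` that avoids division by zero for unseen cells, and all function names are assumptions introduced for this illustration.

```python
from collections import Counter


def histogram(pixels, step=8):
    """Normalized sparse histogram over quantized (R, G, B) cells."""
    counts = Counter((r // step, g // step, b // step) for r, g, b in pixels)
    total = sum(counts.values())
    return {cell: n / total for cell, n in counts.items()}


def posterior_skin(p_c_skin, p_c_non, p_skin):
    """Bayes rule: P(skin | c) from the two likelihoods and the skin prior."""
    num = p_c_skin * p_skin
    den = num + p_c_non * (1.0 - p_skin)
    return num / den if den else 0.0


def is_skin(pixel, h_skin, h_non, theta=1.0, step=8, eps=1e-9):
    """Likelihood-ratio rule of Eq. 15: skin if P(c|skin)/P(c|non-skin) > theta.

    eps is an assumed smoothing constant for cells missing from a histogram.
    """
    cell = tuple(v // step for v in pixel)
    ratio = (h_skin.get(cell, 0.0) + eps) / (h_non.get(cell, 0.0) + eps)
    return ratio > theta
```

Varying `theta` trades false positives against false negatives, and sweeping it is one way to trace the ROC curve from which an operating point is chosen.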

In [149,35], a Bayesian classifier based on YCbCr color space is employed. Erdem et al. [16] employed a Bayesian-based post-filtering method to reduce the false positives of the Viola-Jones face detector. In [150], the adequate number of quantization levels is found by minimizing an objective function, namely the sum of the false acceptance rate and the false rejection rate, over histograms with $2^k$ bins. The Bayesian classifier is then used together with the AdaBoost method for detecting faces. Due to the precision of the Bayesian technique in estimating skinness probability, this model has been adopted in several other skin and face detectors [12,51,103].

Zarit et al. [25] conducted a comparison study on the performance of histogram models in Fleck HS, HSV, rgb, CIE Lab and YCbCr. For training, 48 images were used, and evaluation was done on 64 other images. It is concluded that Fleck HS and HSV outperform the other color spaces. Also, between the two resolutions of 128×128 and 64×64, the performance did not change significantly. Another work by Phung et al. [36,41] examined the tradeoff between the number of bins per channel and the detection rate. The performance of Bayesian classifiers with different numbers of bins is compared in five color spaces, namely RGB, CIE XYZ, HSV, YCbCr and CIE Lab, using the ECU dataset. There was no significant difference in performance between color spaces at large histogram sizes (128 and 256 bins per channel). In contrast to the other color spaces, in which the performance degrades rapidly when the histogram size is less than 64, the performance in RGB color space remains unaffected as long as the histogram size is not less than 32. However, performance always lessens if only chrominance channels are utilized, and there are significant performance variations between different choices of chrominance channels.

There are several advantages and disadvantages associated with non-parametric techniques. The ease of training and implementation, fast training, and independence from the shape of the cluster [38] are the main advantages. In addition, histogram based methods often exhibit a higher detection rate compared with parametric ones, as there is no fitting error in their probability estimation procedure. However, one main drawback of these techniques is their dependency on the training set, which requires gathering a large number of skin pixels. In addition, large memories [38,41,58] are required to implement histogram based models, particularly when fine resolutions are needed.


2) Parametric models

Parametric models such as single Gaussian models (SGMs), Gaussian mixture models (GMMs), cluster of Gaussian models (CGMs), elliptical models (EMs), etc., are developed to compensate for LUT shortcomings such as the high storage requirement. In addition, they generalize very well with a relatively small training set [17,38]. Care should be taken in using these models: the goodness of fit is a very important property of such techniques, as it specifies how well the PDF represents the real distribution [151]. In an SGM, there should be a smooth Gaussian distribution around the mean vector. However, in general conditions the distribution is more complex. In a controlled environment, using an elliptical Gaussian joint PDF for particular color spaces, the multivariate normal distribution of an m-dimensional color vector C can be modeled as:

$$
P(C; \mu, \Lambda) = \frac{1}{(2\pi)^{m/2}\,|\Lambda|^{0.5}} \exp\!\left(-\frac{1}{2}(C - \mu)^T \Lambda^{-1} (C - \mu)\right) \tag{16}
$$

The distribution parameters, i.e. the diagonal covariance matrix (Λ) and the mean vector (μ), are calculated with the ML (Maximum Likelihood) approach using the N skin color vectors $C_j$ of the training dataset:

$$
\mu = \frac{1}{N}\sum_{j=1}^{N} C_j, \qquad \Lambda = \frac{1}{N-1}\sum_{j=1}^{N} (C_j - \mu)(C_j - \mu)^T \tag{17}
$$

Either the probability value [152,153] or the Mahalanobis Distance (MD) [154] between the mean vector and the observation vector C can represent the skinness of the color vector [155]. The decision about any given pixel is made by comparing either the probability or the MD with an empirical hard threshold, or by a hysteresis (double thresholding) method [52], chosen with regard to the ROC. MD is defined as [38]:

$$
\lambda_c(C_j) = (C_j - \mu)^T \Lambda^{-1} (C_j - \mu) \tag{18}
$$

The result of applying the threshold to Eq. 18 is an ellipsoid in 3D color spaces. This is logically valid if the distribution of non-skin pixels is assumed to be uniform. For a non-uniform distribution, the use of unimodal Gaussians for both the skin and non-skin distributions is investigated in [41]. In this case, the MD from the color vector to both the centroid of the skin cluster and that of the non-skin cluster should be considered:

$$
\lambda_c(C_j) = (C_j - \mu)^T \Lambda^{-1} (C_j - \mu) - (C_j - \mu_{ns})^T \Lambda_{ns}^{-1} (C_j - \mu_{ns}) \tag{19}
$$

where “ns” indicates a non-skin related parameter. Ketenci et al. [76] compared the performance of SGM in rgb, YCbCr, HSV, YIQ and YUV without considering a goodness of fit test. However, there are certain color spaces in which the distribution of skin color approximately follows a Gaussian. The YCbCr color space has been widely employed in SGM based techniques. Zhu et al. [156] employed SGM in the YCbCr color model for both skin detection and lip segmentation. The most significant features of SGMs are their training simplicity, negligible storage requirement, and relatively low cost of computation. In [43,93,94], SGM is also employed using C=(cb,cr)T as the color vector. Subban et al. [106] compared the performance of single Gaussian models in different color spaces, reporting that YPbPr outperforms CIE-XYZ, YCC and YDbDr. In [65], a single Gaussian model is developed in the RGB, HSV, YCbCr, CIE-Lab, and CIE-Luv color spaces based on the authors' own database. Using MCC as the evaluation metric, they conclude that CIE-Lab has the best performance while normalized rgb has the lowest. In [157,158], the SGM classifier is constructed in the rgb color space and applied to remove regions that are unlikely to be regions of interest.
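The single Gaussian model of Eqs. 17 and 18 can be sketched for a two-dimensional color vector such as C = (Cb, Cr). The sketch estimates the full 2×2 sample covariance of Eq. 17 and evaluates the squared Mahalanobis distance with a closed-form inverse; the function names are assumptions, and at least two non-degenerate samples are assumed.

```python
def fit_gaussian_2d(samples):
    """Estimate mean and covariance (Eq. 17) from 2D color vectors."""
    n = len(samples)
    mx = sum(s[0] for s in samples) / n
    my = sum(s[1] for s in samples) / n
    sxx = sum((s[0] - mx) ** 2 for s in samples) / (n - 1)
    syy = sum((s[1] - my) ** 2 for s in samples) / (n - 1)
    sxy = sum((s[0] - mx) * (s[1] - my) for s in samples) / (n - 1)
    return (mx, my), ((sxx, sxy), (sxy, syy))


def mahalanobis_sq(c, mean, cov):
    """Squared Mahalanobis distance of Eq. 18 for a 2D vector c."""
    (a, b), (_, d) = cov
    det = a * d - b * b  # assumed non-zero (non-degenerate covariance)
    dx, dy = c[0] - mean[0], c[1] - mean[1]
    # (C - mu)^T Lambda^{-1} (C - mu) with the closed-form 2x2 inverse
    return (d * dx * dx - 2 * b * dx * dy + a * dy * dy) / det
```

A pixel would then be labelled skin when this distance falls below a chosen threshold, which corresponds to an elliptical boundary in the CbCr plane.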

GMM is developed to model more complex distributions as a normalized weighted sum of Gaussian PDFs [159]. It compensates for the inability of SGM to deal with uncontrolled conditions in a general skin segmentation problem, as well as for the fact that SGM cannot approximate the actual distribution because of the asymmetry of the distribution around its peak [105]. The mixture PDF is the sum of weighted Gaussian kernels, defined as:

$$
P(C; \mu, \Lambda, w, N) = \sum_{j=1}^{N} w_j\, G_j(C; \mu_j, \Lambda_j) \tag{20}
$$

where each $w_j$ is the weight of the corresponding kernel and N is the total number of single Gaussian components. The learning process in GMMs is quite different from that of SGMs; here, an iterative method called EM (Expectation Maximization) is often applied to estimate the PDF's parameters. EM is a two-stage algorithm which tries to maximize the log-likelihood function so that the model approximates the real distribution as closely as possible. In order to use it, the number of Gaussian components N and the other parameters should be initialized, for which the k-means clustering algorithm can be employed [160]. The evaluation process is similar to that of SGMs: either the probability itself or the Bayes rule may be used for segmentation.
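Evaluating the mixture of Eq. 20 for already trained parameters can be sketched as below. EM training is not shown, and for brevity each component is assumed to have a diagonal covariance given as a list of per-channel variances; this simplification and the function names are assumptions of the sketch.

```python
import math


def gaussian_diag(c, mean, var):
    """Gaussian PDF with diagonal covariance (per-channel variances)."""
    m = len(c)
    norm = (2 * math.pi) ** (m / 2) * math.sqrt(math.prod(var))
    expo = -0.5 * sum((ci - mi) ** 2 / vi for ci, mi, vi in zip(c, mean, var))
    return math.exp(expo) / norm


def gmm_pdf(c, weights, means, variances):
    """Mixture density of Eq. 20: weighted sum of Gaussian kernels."""
    return sum(
        w * gaussian_diag(c, mu, var)
        for w, mu, var in zip(weights, means, variances)
    )
```

Evaluation costs one exponential per component and the model stores only the weights, means and variances, which reflects the low memory cost of parametric models compared with a LUT.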

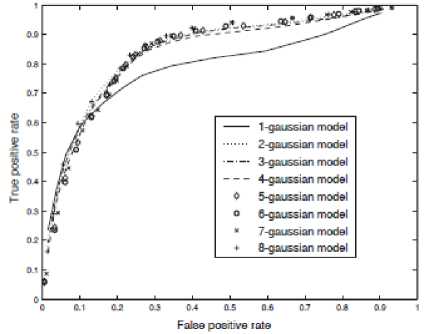

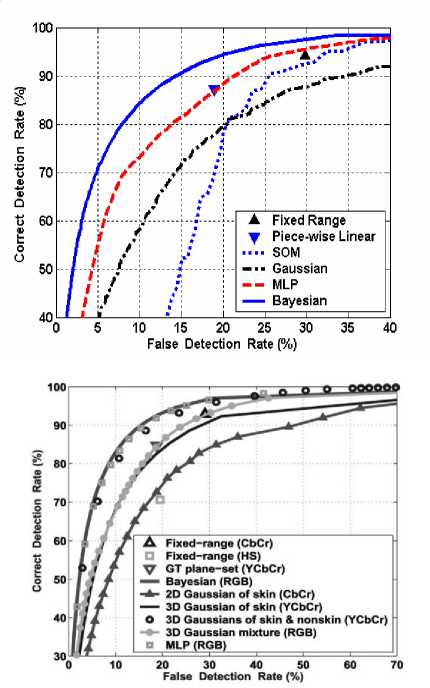

The most appealing features of GMMs are their simple evaluation process and low memory cost. The training process, however, is much longer than that of the former methods. A comparative study of single and mixture Gaussian distributions in [161] showed that mixture models improve performance only in a relevant operating region (high true positive rates). In addition, the authors concluded that increasing the number of kernels is not very effective.

Xie et al. [68] employed GMM in the RGB color space for hand detection. In [45], 16 kernels, and in [159], 2 Gaussian components are employed for construction of the GMM. Terrillon et al. [108] claimed that k=8 is an appropriate tradeoff between computation cost and goodness of fit. The GMM technique was also utilized by Hossain et al. [162], who adopted different GMMs for different values of luminance in two conditions, bright and normal.

Fig.7. ROC for SGM and GMM [161]

Other less common approaches are clusters of Gaussians and the Bivariate Pearson Mixture Model (BPMM). Clusters of Gaussians have been employed for distribution estimation on the idea that the skin clusters of different ethnicities (whitish, darkish and yellowish) or of different illumination conditions (high, medium and low) form separate Gaussian patches in a particular color space. Phung et al. [39] used 3 Gaussian clusters, each characterized by its centroid and covariance matrix, obtained using k-means clustering. Each cluster is related to one level of luminance, defined as low, medium and high. In evaluation, they utilized the minimum MD of the color vector to the clusters. Zou et al. [164] also applied 3 Gaussian clusters to model different ethnicities. BPMM has also been applied to skin segmentation [165,166]. Here, BPMM type IIaα (Bivariate Beta) is used to model skin color in the HS color space. The authors claimed that this model is more precise: the false rejection rate of BPMM was 5% lower than that of GMM.

Another way to address the limitations of SGM and GMM was proposed in [105]. Here, the skin distribution is estimated based on the observation that the skin cluster has a semi-elliptical shape in some color spaces. For this case, Lee et al. [298] defined the elliptical boundary model as P(C; μ, Λ) where:

$$P(C; \mu, \Lambda) = (C - \mu)^T \Lambda^{-1} (C - \mu) \qquad (21)$$

From the training point of view, this model is computationally lighter and faster than GMM, and its parameters are simply obtained as:

$$\mu = \frac{1}{n}\sum_{j=1}^{n} C_j, \qquad \Lambda = \frac{1}{N}\sum_{j=1}^{n} f_j (C_j - \Omega)(C_j - \Omega)^T, \qquad \Omega = \frac{1}{N}\sum_{j=1}^{n} f_j C_j \qquad (22)$$

In the above equations, n is the number of distinct training chrominance vectors $C_j$, $f_j$ is the number of samples with chrominance $C_j$, $N = \sum_j f_j$ is the total number of samples, and Ω is the mean of the chrominance vectors. Given an input chrominance vector C, the pixel is classified as skin or non-skin by hard thresholding of P(C; μ, Λ) [105]. The model has been tested in the rg, CIE-uv, CIE-ab, CIE-xy, IQ, and CbCr color models, with the conclusion that it outperforms SGM and a 6-kernel GMM in detection rate in all color spaces. Computationally, the elliptical boundary model is almost as fast as SGM and faster than GMM. Xu et al. [167] also leveraged both the elliptical boundary model and depth data (from a Kinect sensor) to perform hand and face localization.
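The training and decision steps of the elliptical boundary model can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the implementation of [105]: it assumes that the training data are given as distinct 2-D chrominance vectors with their occurrence counts, that μ is the unweighted mean of the distinct vectors while Ω and Λ are count-weighted, and that a pixel is accepted as skin when the elliptical distance falls below a threshold `theta`. The function names, the sample values and `theta` are hypothetical.

```python
def fit_elliptical_boundary(samples):
    """samples: dict mapping a distinct chrominance vector (c1, c2) to its count f_j."""
    n = len(samples)                       # number of distinct chrominance vectors
    total = sum(samples.values())          # N, total number of training samples
    mu = tuple(sum(c[k] for c in samples) / n for k in range(2))
    omega = tuple(sum(f * c[k] for c, f in samples.items()) / total for k in range(2))
    sxx = sxy = syy = 0.0
    for (c1, c2), f in samples.items():
        dx, dy = c1 - omega[0], c2 - omega[1]
        sxx += f * dx * dx
        sxy += f * dx * dy
        syy += f * dy * dy
    cov = ((sxx / total, sxy / total), (sxy / total, syy / total))
    return mu, cov

def elliptical_distance(c, mu, cov):
    """(C - mu)^T Lambda^{-1} (C - mu) with the closed-form 2x2 inverse (Eq. 21)."""
    (a, b), (_, d) = cov
    det = a * d - b * b
    dx, dy = c[0] - mu[0], c[1] - mu[1]
    return (d * dx * dx - 2.0 * b * dx * dy + a * dy * dy) / det

def is_skin(c, mu, cov, theta):
    """Hard threshold: inside the ellipse means skin. theta is a hypothetical tuning value."""
    return elliptical_distance(c, mu, cov) < theta

# Hypothetical training counts in a (Cb, Cr)-like plane.
training = {(100, 150): 5, (102, 152): 3, (98, 149): 2, (101, 148): 4}
mu, cov = fit_elliptical_boundary(training)
```

Because training reduces to two means and one 2x2 covariance, no iterative estimation is needed, which is consistent with the training cost being lower than that of a GMM.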

Entropy-based models have also been utilized for skin segmentation as well as several other tasks, including face detection [24] and speech recognition [168]. However, they soon lost their appeal in skin classification, mainly because of their high computational load and negligible performance improvement compared with the former parametric and non-parametric models. Generally, a model is inferred from a set of training data and then used for classification. After choosing several relevant features, their histograms are calculated on the training pixels, and the model parameters are obtained based on the MaxEnt model [169]. A variety of models have been developed based on the maximum entropy model (MaxEnt), with constraints concerning marginal distributions. In one model, the baseline model, it is assumed that pixels are independent in color [170]. The MaxEnt solution to this model was obtained using Lagrange multipliers. The second model, the Hidden Markov Model (HMM), provided better detection results as it is not as loose as the baseline one [171]. This model is obtained by constraining the baseline to a model in which skin zones are not considered completely random but are made of compact patches. Thus, a constraint is added to the prior P(Y) (Y is the skin probability map (SPM) of the image) using the 4 adjacent neighbors of each pixel. In this case, the MaxEnt model follows a Gibbs distribution [172]. In the First Order Model (FOM), another constraint is imposed on the two-pixel marginal of the posterior, i.e., $P(y_s, y_t \mid x_s, x_t)$, in which s and t are neighboring pixels, x is the color vector and y is the skinness probability. Using Lagrange multipliers, the solution to the MaxEnt problem in the FOM is [172]:

$$P(Y \mid X) \approx \exp\Big[ \sum_{s,t} \lambda(s, t, x_s, x_t, y_s, y_t) \Big] \qquad (23)$$

where $\lambda(y_s, y_t, x_s, x_t) > 0$ are the parameters of the distribution. The total number of parameters is $256^3 \times 256^3 \times 2 \times 2$ for a 24-bit RGB color image.
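To give a sense of scale, the parameter count can be worked out explicitly, reading it as the product of two 24-bit colors ($256^3$ values each) and two binary labels for a pair of neighboring pixels:

```latex
256^{3} \times 256^{3} \times 2 \times 2
  = 2^{24} \cdot 2^{24} \cdot 2^{2}
  = 2^{50}
  \approx 1.13 \times 10^{15}
```

A table of this size cannot be estimated or stored directly, which is consistent with the high computational load attributed to these models.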

Markov Random Fields (MRF) and Conditional Random Fields (CRF) are other statistical approaches that have been successfully applied to segmentation tasks, with concepts similar to those of the previous methods. The former is a graphical model of the joint probability distribution, built on a Bayesian framework, that takes the spatial connections between pixels into account, while the latter directly models the conditional PDF [173]. For an MRF, the spatial relationship between pixels is first integrated directly, and the model is then inferred using the Bayesian concept. The label distribution is computed by maximizing the probability of the MRF model as:

$$x^{*} = \arg\max_{x} \{ P(x \mid y) \} \qquad (24)$$

Using Bayes' rule and the fact that the prior probability of y, i.e. P(y), is independent of x, the above equation is rewritten as:

$$x^{*} = \arg\max_{x} \{ P(y \mid x)\, P(x) \} \qquad (25)$$
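For completeness, the intermediate step between Eq. 24 and Eq. 25 can be written out. It uses only Bayes' rule and the fact that P(y) is a positive constant with respect to x:

```latex
x^{*} = \arg\max_{x} P(x \mid y)
      = \arg\max_{x} \frac{P(y \mid x)\, P(x)}{P(y)}
      = \arg\max_{x} P(y \mid x)\, P(x)
```

Dividing by a positive constant does not change the location of the maximum, so P(y) can be dropped.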

In [79,340], an initial segmentation is performed using the elliptical boundary model, and then, by means of an iterative method, other skin pixels are annexed to the initially annotated ones. The iterative algorithm exploits the Ising model (a two-class problem with interacting particles, where pixels are arranged in a planar grid) and the Hammersley-Clifford Theorem (HCT) to compute the probability in terms of total energy. In each iteration, the total energy of the system is computed based on the Ising model, and the probability of the configuration is then calculated using HCT. The process continues until either the temperature reaches a small value, or the difference in the number of detected skin pixels between two successive results is less than 1 percent of the total size of the image. The temperature is a parameter of the Gibbs distribution that is updated in each iteration. The experimental results in [79,174] show the effectiveness of the method compared with the elliptical boundary model proposed in [105]. In some skin-like color regions, however, the algorithm exhibited poor results.
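The flavor of such an iterative refinement can be conveyed by a small Python sketch. It is a deliberately simplified stand-in and not the algorithm of the cited works: instead of temperature-driven sampling from a Gibbs distribution, it uses a deterministic greedy update (iterated conditional modes) with an Ising-style pairwise penalty, and it starts from a thresholded skin probability map rather than from an elliptical boundary model. The function name, `beta`, `threshold` and `max_sweeps` are hypothetical; only the 1 percent stopping rule is taken from the description above.

```python
import math

def refine_skin_labels(skin_prob, beta=1.0, threshold=0.5, max_sweeps=20):
    """skin_prob: 2-D list of per-pixel skin probabilities in (0, 1)."""
    height, width = len(skin_prob), len(skin_prob[0])
    # Initial segmentation (assumption: simple threshold on the probability map).
    labels = [[1 if p >= threshold else 0 for p in row] for row in skin_prob]
    eps = 1e-6
    for _ in range(max_sweeps):
        skin_before = sum(map(sum, labels))
        for i in range(height):
            for j in range(width):
                p = min(max(skin_prob[i][j], eps), 1.0 - eps)
                neighbors = [labels[a][b]
                             for a, b in ((i - 1, j), (i + 1, j), (i, j - 1), (i, j + 1))
                             if 0 <= a < height and 0 <= b < width]
                energy = []
                for lab in (0, 1):
                    # Data term: negative log-likelihood of the candidate label.
                    data_term = -math.log(p if lab == 1 else 1.0 - p)
                    # Ising-style term: penalty for each disagreeing 4-neighbor.
                    pair_term = beta * sum(1 for nb in neighbors if nb != lab)
                    energy.append(data_term + pair_term)
                labels[i][j] = 1 if energy[1] < energy[0] else 0
        skin_after = sum(map(sum, labels))
        # Stop when the skin pixel count changes by less than 1% of the image size.
        if abs(skin_after - skin_before) < 0.01 * height * width:
            break
    return labels
```

With a uniform high-probability patch containing one ambiguous pixel, the pairwise term pulls that pixel to the skin class, which is the annexation behavior described above; the same term can also absorb skin-like background pixels adjacent to true skin.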

The CRF model, first presented in [173,175], also combines the information of different color spaces and models the spatial relationship between image pixels. Here, Eq. 24 is rewritten under the assumption that the detection results of k classifiers, i.e. $D^{(i)}$, are available. The aim is to combine the high-level information obtained by each $D^{(i)}$ with the low-level information in the image to take the final decision:

$$x^{*} = \arg\max_{x} \{ P(x \mid y, D^{(1)}, D^{(2)}, \ldots, D^{(k)}) \} \qquad (26)$$

CRF is a discriminative model which defines the conditional probability as:

$$P(x \mid y, D^{(1)}, D^{(2)}, \ldots, D^{(k)}) = \frac{1}{Z(y, D^{(1)}, D^{(2)}, \ldots, D^{(k)})} \prod_{C_p \in C} \; \prod_{\psi_c \in C_p} \exp\Big( \sum_{q=1}^{Q(p)} \lambda_{pq} \, f_{pq}(x_{\psi_c}, y, D^{(1)}, D^{(2)}, \ldots, D^{(k)}) \Big)$$

where $Z(y, D^{(1)}, D^{(2)}, \ldots, D^{(k)})$ is the normalization constant, $C_p$ is a clique template from the clique set C, $f_{pq}$ is the q-th real-valued feature function defined on the clique template $C_p$, and $\lambda_{pq}$ is the model parameter. Ahmadi et al. [173,175] utilized a pseudo-likelihood algorithm for parameter estimation as an approximation to ML estimation. For simplicity, in [175] they consider two simple explicit boundary models as $D^{(1)}$ and $D^{(2)}$, and in [173] they boosted the detection performance by using the explicit boundary model in more color spaces.

A Bayesian network (or probabilistic directed acyclic graphical model) is a probabilistic graphical model that encodes a set of random variables and their conditional dependencies [176]. Formally, Bayesian networks are directed acyclic graphs (DAGs) in which edges represent conditional dependencies, and nodes that are not connected are conditionally independent. Each node represents a random variable $X_i$ with conditional probability $P(X_i \mid \Pi_i)$, where $\Pi_i$ is the parent of $X_i$ in the graph. Variables can be observable quantities, latent variables, unknown parameters or hypotheses. Each node is associated with a probability function that takes a particular set of values of the node's parent variables as input and gives the probability of the variable represented by the node [176]. The structure of a network, S', is correct when it is possible to find a distribution P(C,X|S') that matches the actual distribution; otherwise, it is incorrect. ML estimation is utilized to learn the network parameters [177]. Two approaches are often used to construct Bayesian classifiers: either selecting a structure and specifying the dependencies among variables, or determining the distribution of the features. Both determine the parameter set required to calculate the decision function. Popular Bayesian network classifiers are the Naive Bayes (NB) classifier, in which the features are assumed independent given the class, and the Tree-Augmented Naive Bayes (TAN) classifier, which was proposed to improve on the simple Naive Bayes classifier. In the structure of the TAN classifier, the class variable is the parent of all the features and each feature has at most one other feature as a parent, such that the resulting graph of the features forms a tree. To learn the TAN classifier, the structure that maximizes the likelihood function over all possible TAN structures is sought using the training data. The learning method proposed by Sebe et al. [177] is stochastic structure search (SSS), which improved the performance on the portion of the Compaq dataset they used.
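The Naive Bayes case is simple enough to sketch in Python. The example below is a minimal illustration under stated assumptions, not the TAN or SSS method of [177]: each of the three color channels is quantized into a hypothetical number of bins, the per-channel class-conditional histograms are treated as independent given the class, and Laplace smoothing (an added assumption) avoids zero probabilities. All function names and pixel values are hypothetical.

```python
import math
from collections import Counter

def train_naive_bayes(pixels, labels, bins=8, levels=256):
    """pixels: list of 3-channel tuples; labels: 1 for skin, 0 for non-skin."""
    step = levels // bins
    counts = {0: [Counter() for _ in range(3)], 1: [Counter() for _ in range(3)]}
    totals = Counter(labels)
    for px, y in zip(pixels, labels):
        for ch, value in enumerate(px):
            counts[y][ch][value // step] += 1   # per-class, per-channel histogram
    return {"counts": counts, "totals": totals, "bins": bins, "step": step}

def log_posterior(model, px, y):
    """log P(y) + sum over channels of log P(x_ch | y), up to a shared constant."""
    total = model["totals"][y]
    lp = math.log(total / sum(model["totals"].values()))
    for ch, value in enumerate(px):
        c = model["counts"][y][ch][value // model["step"]]
        lp += math.log((c + 1) / (total + model["bins"]))   # Laplace smoothing
    return lp

def classify(model, px):
    """Return 1 (skin) if the skin class has the larger posterior, else 0."""
    return 1 if log_posterior(model, px, 1) > log_posterior(model, px, 0) else 0

# Hypothetical toy training set; both classes must be present.
train_pixels = [(200, 150, 130), (210, 160, 140), (190, 140, 120),
                (20, 30, 40), (10, 200, 30), (40, 50, 220)]
train_labels = [1, 1, 1, 0, 0, 0]
model = train_naive_bayes(train_pixels, train_labels)
```

A TAN classifier would relax the independence assumption by letting each channel depend on at most one other channel in addition to the class.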


3) Discussion on statistical methods

Several statistical skin detection techniques have been elucidated in this subsection, all categorized into the two classes of non-parametric and parametric approaches. Non-parametric methods are based on histograms of skin and non-skin pixels in a predefined training set. The size of the training set has a direct impact on the performance of the detectors. Jones et al. [45] employed a database more than twice the size of $256^3$ to train their LUT system. Such an enormous training set is required to produce remarkable results. Histogram models are also unable to generalize and interpolate the training data [17,38]. The large storage requirements make these methods an unfavorable choice, particularly on embedded micro-system platforms. However, compared with parametric models, histogram models are independent of the shape of the cluster and are trained simply and fast. In evaluation, too, they need only the few clock cycles per pixel required for memory access. In parametric methods, the fit of the model to the real distribution needs to be verified using goodness-of-fit measures. In addition, the choice of color space is decisive, as the performance depends strongly on the shape of the skin cluster. Training complexity varies among parametric methods, but overall both the training time and its complexity are unfavorable compared with histogram models. Parametric and non-parametric models have been compared throughout this section, and it was generally concluded that non-parametric models often outperform parametric ones at the cost of high storage requirements.


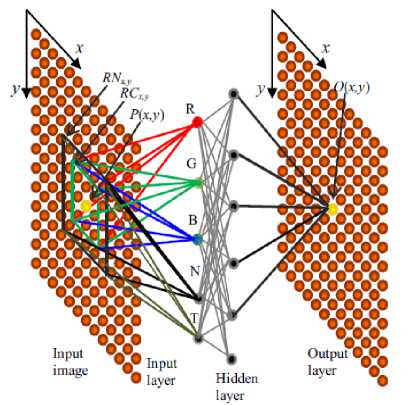

C. Neural Net Models

Artificial neural networks (ANNs) are mathematical models representing a function F: X → Y, with a distribution over X or over both X and Y, that simulate the function of the brain and are inspired by the human nervous system. They are generally presented as systems of interconnected neurons. Like other machine learning methods, neural networks have been used to solve a wide variety of tasks. In skin detection, ANNs have been utilized for different purposes and with different structures. A variety of ANNs, such as MLP, SOM and PCNN, are exploited in illumination compensation, in dynamic models, in combination with other techniques, and in direct classification.