A computer vision based lane detection approach

Authors: Md. Rezwanul Haque, Md. Milon Islam, Kazi Saeed Alam, Hasib Iqbal, Md. Ebrahim Shaik

Journal: International Journal of Image, Graphics and Signal Processing

Published in: Vol. 11, No. 3, 2019.

Open access

Automatic lane detection to assist the driver is an important topic in the development of Advanced Driver Assistance Systems (ADAS) and higher-level applications because of its importance for the safety of drivers and pedestrians on roads. It remains a challenging problem because lane detection systems must cope with ambiguous lane patterns, perspective effects, low visibility of lane lines, shadows, partial occlusions, brightness, and light reflection. The proposed system detects lane boundary lines using computer vision techniques. In this paper, we introduce a system that can efficiently identify lane lines on a smooth road surface. Gradient and HLS thresholding form the core of the method and are applied to identify the lane lines in binary images. The colored lane is estimated by a sliding window search technique that visualizes the lanes. The performance of the proposed system is evaluated on the KITTI road dataset. The experimental results show that the proposed method detects the lane on the road surface accurately under several brightness conditions.

Lane Detection, Computer Vision, Gradient Thresholding, HLS Thresholding

Short URL: https://sciup.org/15016039

IDR: 15016039 | DOI: 10.5815/ijigsp.2019.03.04

Full text: A computer vision based lane detection approach

According to [1], about 70% of all reported road accidents in Great Britain result from driver error or slow reaction time. Recently, many researchers have been working on intelligent vehicles to reduce road accidents and ensure safe driving. With advances in technology, numerous techniques have been developed to notify drivers about a possible lane departure or collision, the lane structure, and the positions of other vehicles in the lanes. Lane detection on the road surface has become a prominent issue because it provides important cues about the surroundings of an intelligent vehicle. Detecting the lane is challenging because the input image becomes noisy as the environment varies.

In recent years, much of the research on lane detection has been based on sensors, cameras, lidar, and similar devices. Clanton et al. [2] proposed a multi-sensor lane departure warning technique that used a road map with a GPS receiver for lane detection on the road surface. The authors in [3] suggested a method using lidar with a monocular camera that was able to detect the lane in a real-time environment. The main benefits of these techniques are that the lidar or sensors obtain data directly from the environment and do not depend on weather conditions. However, the resolution of GPS is 10-15 m, and the cost of lidar is comparatively high, which are significant disadvantages of these modalities.

With the rapid growth of computer vision technology, the camera has become more popular because it can capture the environment in any direction. Among the vision-based techniques, the one in [4] achieved good results on the Caltech lane dataset. It introduced three main steps for lane detection: inverse perspective mapping (IPM), which eliminates the perspective effect; image filtering, which eliminates noise during candidate lane generation; and lane model fitting, which detects the lines in the road images. However, this technique concentrates on model fitting and neglects the reliability and efficiency of the system. Previously proposed techniques also have difficulty detecting lanes that are not fully visible. Some systems are based on edge detection [5, 6, 18], and their accuracy is reduced by lane-like noise caused by lens flare. The techniques in [7], based on color cues, worked well even when the illumination changed rapidly. Optical flow [8] can solve the problems of the preceding techniques, but it needs high computational power and does not work well for roads without texture.

Furthermore, some techniques use the concept of a vanishing point for lane detection on road surfaces. Gabor filters with texture orientation were used in [9] to detect lanes, with an adaptive soft voting technique that estimated a vanishing point and defined the confidence level of the texture orientation. A method using vanishing point estimation was introduced in [10] to measure the performance of lane detection on structured and unstructured roads. However, the vanishing point cannot be estimated accurately when the location changes, which is the major drawback of these methods.

In this paper, we introduce a computer vision-based technique that can efficiently detect lanes in any ambient environment. We mainly use gradient and HLS thresholding for lane detection. For proper mapping, a perspective transform is applied after thresholding. The remainder of the paper is organized as follows. Section II presents ongoing and previous work in this area. Section III explains the proposed lane detection technique. Section IV presents the experimental results with inputs and outputs. Section V concludes the paper.

II. Related Works

Several computer vision studies on lane detection have been proposed in recent years, and many institutions have long been working on efficient lane detection systems. The research related to this area is briefly reviewed as follows.

Lee and Moon [11] proposed a real-time lane detection algorithm with a Region of Interest (ROI) that can cope with high noise levels and responds quickly. The system uses a Kalman filter and a least-squares approximation of linear movement for lane tracking, so it both detects and tracks the lane. Song et al. [12] presented a system that detects and classifies lanes using stereo vision for Advanced Driver Assistance Systems (ADAS). They detect the lane using an ROI and, for classification, use a Convolutional Neural Network (CNN) trained on the KITTI dataset to distinguish the right lane from the left lane. However, the system failed to detect the lanes when the disparity image was noisy.

Wu et al. [13] designed a lane detection and departure warning scheme that defines the ROI in the region near the vehicle. The ROI is divided into non-overlapping blocks, and two simple masks are used to obtain the block gradients and block angles, which reduces the computational complexity. The driving situations are classified into four classes, and the departure warning system is built on the lane detection results. The experiments show an average lane detection rate of 96.12% and a departure warning rate of 98.60%. However, the method takes comparatively long to process because it computes the vertical and horizontal gradients.

Yoo et al. [14] presented a lane detection technique based on vanishing point estimation. The system first uses a probabilistic voting technique to detect the vanishing points of the lane segments. The actual lane segments are then determined by thresholding the vanishing points and the line direction. In addition, a real-time inter-frame similarity scheme was proposed to reduce the false detection rate. This scheme rests on the assumption that the lane geometry does not vary significantly between frames. However, the system does not work for unstructured roads.

Ozgunalp et al. [15] introduced a vanishing point based lane detection technique for multiple curved lanes. The system combines disparity information with a lane marking technique and is able to estimate the PVP for non-flat road conditions. Redundant information from obstacles is removed by comparing the actual and fitted disparity values. However, the PVP estimate is affected by outliers in the least squares fitting, and lane detection sometimes fails because of the selection of the plus-minus peak values. Piao et al. [16] developed a lane marking technique based on binary blob analysis. To eliminate the perspective effect from the road surface, the system uses vanishing point detection and inverse perspective mapping. Binary blob filtering and blob verification are proposed to improve the effectiveness of the lane detection scheme. The results show an average detection rate of 97.7% on the multi-lane dataset. However, the system failed to perform in a real-time environment.

Jung et al. [17] developed a lane marking method using spatiotemporal images collected from video. A spatiotemporal image is created by accumulating, along the time axis, the pixels extracted from a horizontal scan line at a fixed location in every frame. The Hough transform is applied to these images to detect lanes. The system is very effective against short-term noise such as missing lanes or occlusion by vehicles, and it achieves both computational efficiency and a high detection rate. Borkar et al. [18] proposed a lane detection technique based on inverse perspective mapping (IPM). An adaptive threshold converts the input image to a binary image, and predefined lane templates are used to select the lane marker candidates. RANSAC eliminates the outliers, and a Kalman filter tracks the lanes on the road surface.

Kang et al. [19] introduced a multi-lane detection technique based on the ridge feature and inverse perspective mapping (IPM). The extracted features have four local maxima because the same lane is distributed with a small variance along the x-axis in the IPM image. Clustering is used to detect the lanes by grouping the lane features near each local maximum.

III. The Proposed Methodology for Lane Detection

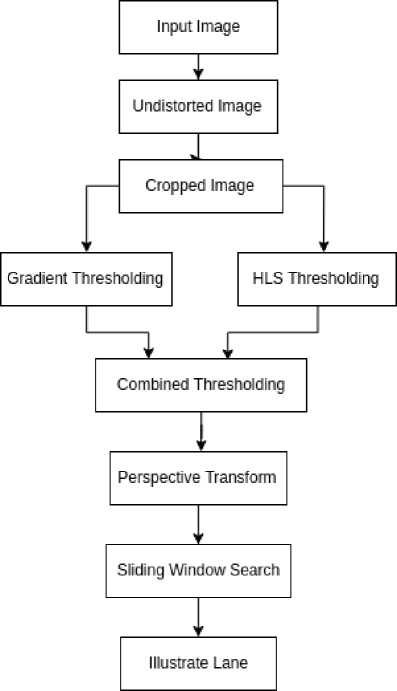

This section explains the proposed lane detection technique. The overall working procedure of the proposed system is presented in Fig. 1. As Fig. 1 shows, image preprocessing steps are applied to remove noise from the images. Gradient and HLS thresholding are used to detect the lane lines in the images. The perspective transform then gives a clear view of the lane lines. The lane detection data are taken from the KITTI ROAD dataset [20], which contains 289 images. Each step is described in detail below.

Fig.1. The overall process of the proposed system for lane detection.

A. Preprocessing

Preprocessing plays a significant role in lane line marking. Its main purpose is to increase the contrast, eliminate noise, and generate an edge image for the corresponding input image.

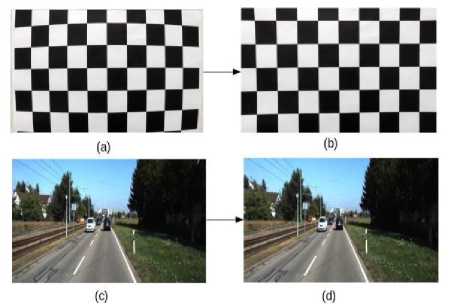

In this stage, the images are undistorted to restore the straightness of lines, which helps to identify lane lines. The difference between the distorted (original) and undistorted images is clear: the curved lines become straight. The camera matrix and distortion coefficients are calculated in OpenCV from chessboard images. This is done by finding the inner corners of the chessboard in each image and using that information to undistort the image. The detected corners are coordinates in the 2D image, while the object points represent the real-world coordinates of those image points in 3D space (with the z-axis, or depth, equal to 0 for the chessboard images). These mappings make it possible to work out how to remove distortion from images accurately. The preprocessing steps with the input image for the lane detection system are illustrated in Fig. 2. From Fig. 2, it can be seen that the undistorted image is brighter than the original image as it is noise free.

Fig.2. The preprocessing steps with input image for the lane detection system. (a) Distorted chess board (b) Undistorted chessboard (c) Original image (d) Undistorted image.
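The 3D side of the chessboard mapping described above can be sketched in a few lines. This is a minimal illustration, assuming a hypothetical board with 9 x 6 inner corners and a unit square size (the paper states neither); the helper name `chessboard_object_points` is ours. In OpenCV, points like these are typically paired with the corners returned by `cv2.findChessboardCorners` and passed to `cv2.calibrateCamera`, whose camera matrix and distortion coefficients are then given to `cv2.undistort`.

```python
def chessboard_object_points(cols=9, rows=6, square_size=1.0):
    """Return real-world (x, y, z) coordinates of the inner chessboard corners.

    The board is flat, so every corner has z = 0.
    cols, rows and square_size are illustrative defaults, not values from the paper.
    """
    return [
        (c * square_size, r * square_size, 0.0)
        for r in range(rows)
        for c in range(cols)
    ]


points = chessboard_object_points()
print(len(points))   # number of inner corners on the assumed board
print(points[:3])    # first corners along the top row
```

The same list of object points is reused for every calibration image, because the physical board does not change between shots; only the detected 2D corners differ.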


B. Cropped Image

Fig.3. Cropped image focusing on the lane region. (a) Undistorted image (b) Cropped image.

C. Thresholding

Thresholding [21] is generally used for image segmentation. It separates objects by converting grayscale images into binary images, and it is most appropriate for images with high contrast. The thresholding procedure can be stated as:

$$T = T[a, b, p(a, b), f(a, b)] \tag{1}$$

where T represents the threshold value, (a, b) are the coordinates of the threshold point, and p(a, b) and f(a, b) are the grayscale image pixels.

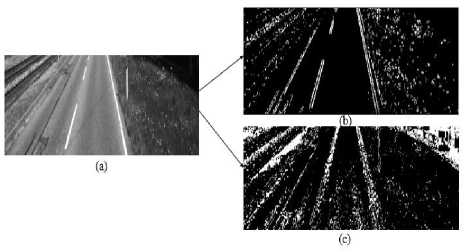

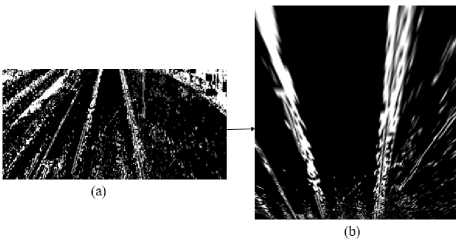

(i) Gradient Thresholding

The Sobel kernel is used for gradient thresholding [22] along both the x- and y-axes. Since the lane lines are likely to be nearly vertical, more weight is given to the gradient in the y direction. For proper scaling, the absolute gradient values are taken and normalized. The outcome after applying gradient thresholding is illustrated in Fig. 4 (b).
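The gradient thresholding step can be sketched with NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the weights `wx` and `wy`, the threshold range `lo`/`hi`, and the helper names are hypothetical, since the paper gives no numeric values. The 3x3 Sobel kernels are applied directly so that the example has no OpenCV dependency.

```python
import numpy as np

SOBEL_X = np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], dtype=float)
SOBEL_Y = SOBEL_X.T


def filter3x3(image, kernel):
    """Correlate a 2D image with a 3x3 kernel, replicating the border pixels."""
    padded = np.pad(image.astype(float), 1, mode="edge")
    h, w = image.shape
    out = np.zeros((h, w), dtype=float)
    for dy in range(3):
        for dx in range(3):
            out += kernel[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out


def gradient_threshold(gray, wx=0.5, wy=0.5, lo=50, hi=255):
    """Binary mask from a weighted, normalized absolute Sobel response.

    wx, wy, lo and hi are hypothetical parameters, not values from the paper.
    """
    gx = np.abs(filter3x3(gray, SOBEL_X))
    gy = np.abs(filter3x3(gray, SOBEL_Y))
    mag = wx * gx + wy * gy
    peak = mag.max()
    # Scale to 0..255 so the thresholds do not depend on image contrast.
    scaled = np.zeros_like(mag) if peak == 0 else 255.0 * mag / peak
    return ((scaled >= lo) & (scaled <= hi)).astype(np.uint8)


# Synthetic 6x8 road patch: dark asphalt with a bright vertical stripe.
gray = np.zeros((6, 8))
gray[:, 3:5] = 200
mask = gradient_threshold(gray)
print(mask)
```

In this synthetic patch only the columns on either side of the stripe edges respond, which is the behavior the binary lane image relies on.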


(ii) HLS thresholding

The HLS (Hue, Lightness, Saturation) [23] color space is used to handle cases in which the road color is too bright or too light. The L (lightness) channel threshold suppresses edges formed by shadows in the frame. The S (saturation) channel threshold emphasizes white or yellow lanes. The H (hue) channel threshold targets the line colors. The outcome after applying HLS thresholding is depicted in Fig. 4(c).
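A per-pixel version of the color thresholding idea can be sketched with the standard-library `colorsys` module. This is only an illustration: the thresholds `l_white`, `l_min`, and `s_min` are hypothetical (the paper reports no values), the hue channel is not used here, and the rule "very light, or saturated and reasonably light" is our assumption about how the L and S channels might be combined.

```python
import colorsys


def hls_threshold(rgb_image, l_white=0.85, l_min=0.45, s_min=0.5):
    """Return a binary mask (nested lists of 0/1) for an RGB image.

    rgb_image is a nested list of (r, g, b) tuples with values in 0..255.
    All three thresholds are hypothetical, not values from the paper.
    """
    mask = []
    for row in rgb_image:
        mask_row = []
        for r, g, b in row:
            _hue, light, sat = colorsys.rgb_to_hls(r / 255.0, g / 255.0, b / 255.0)
            is_white = light >= l_white                        # bright white paint
            is_colored_paint = sat >= s_min and light >= l_min  # e.g. yellow paint
            mask_row.append(1 if (is_white or is_colored_paint) else 0)
        mask.append(mask_row)
    return mask


# One row of sample pixels: asphalt, white paint, yellow paint, shadow, dark red.
row = [(90, 90, 90), (250, 250, 250), (255, 200, 0), (30, 30, 40), (60, 0, 0)]
print(hls_threshold([row]))
```

The dark red pixel is fully saturated but is still rejected, which shows how a lightness floor keeps dark, shadowed regions out of the mask.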

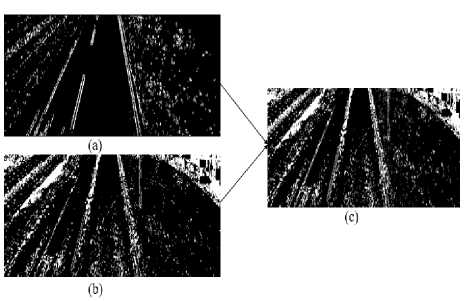

We combine the gradient and HLS (color) thresholding into one final binary image, which improves the overall results of the lane detection process. The output of the combined gradient and HLS thresholding is shown in Fig. 5(c).

Fig.4. Thresholding applied to the cropped image. (a) Cropped image (b) Gradient thresholding (c) HLS thresholding.

Fig.5. Combining the gradient and HLS thresholding to detect lanes in the binary image. (a) Gradient thresholding (b) HLS thresholding (c) Combined thresholding.
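Combining the two binary images can be as simple as a pixel-wise union. The sketch below assumes the masks are merged with a logical OR; the paper does not state the exact rule, and the function name `combine_masks` is ours.

```python
def combine_masks(gradient_mask, color_mask):
    """Pixel-wise union of two equally sized binary masks (nested lists of 0/1).

    Using OR is an assumption; the paper does not specify the combination rule.
    """
    return [
        [1 if (g or c) else 0 for g, c in zip(g_row, c_row)]
        for g_row, c_row in zip(gradient_mask, color_mask)
    ]


gradient_mask = [[0, 1, 0, 0],
                 [0, 1, 0, 0]]
color_mask = [[0, 0, 1, 0],
              [0, 1, 1, 0]]
combined = combine_masks(gradient_mask, color_mask)
print(combined)
```

A union keeps lane pixels that only one of the two detectors found, at the cost of also keeping the false positives of both.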


D. Perspective Transform

The perspective transformation [24] is used to convert the 3D world image into a 2D image. While undistorting and thresholding help to isolate the crucial information, we can isolate that information further by looking only at the part of the image that shows the road surface. To focus on the road part of the image, we shift the viewpoint to a top-down view of the road. While we do not obtain any more information from this step, it is much easier to isolate lane lines and measure quantities such as curvature from this perspective. The perspective transform of the combined thresholding image is shown in Fig. 6.

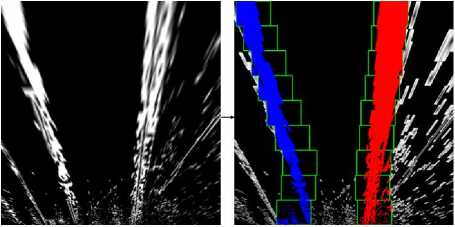

E. Sliding Window Search

Because the lane lines have already been detected in an earlier frame, that information is used to place sliding windows around the line centers and to detect and track the lane lines from the bottom to the top of the image. The result of the sliding window search is shown in Fig. 7. This allows a highly restricted search and saves a lot of processing time. To recognize the left and right lane line pixels, their x and y pixel positions are used to fit a second-order polynomial curve:

$$f(y) = ay^2 + by + c \tag{2}$$

f(y) is used rather than f(x) because the lane lines in the warped image are approximately vertical and may have the same x value for more than one y value.
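The window search and the polynomial fit can be sketched together with NumPy. This is a simplified illustration under stated assumptions: it follows a single lane line, takes the starting center `x_start` as given (for example from an earlier frame), and uses hypothetical values for `n_windows` and `margin`. It assumes the image height is divisible by the number of windows.

```python
import numpy as np


def sliding_window_fit(binary, x_start, n_windows=4, margin=3):
    """Follow one lane line from the bottom to the top of a top-down binary
    image and fit x = a*y**2 + b*y + c to the pixels that were collected.

    n_windows and margin are hypothetical settings, not values from the paper.
    """
    height = binary.shape[0]
    window_height = height // n_windows
    ys, xs = np.nonzero(binary)
    x_center = x_start
    picked = []
    for i in range(n_windows):
        y_high = height - i * window_height   # lower image edge of the window (exclusive)
        y_low = y_high - window_height        # upper image edge of the window (inclusive)
        inside = np.flatnonzero(
            (ys >= y_low) & (ys < y_high) & (np.abs(xs - x_center) <= margin)
        )
        picked.append(inside)
        if inside.size > 0:
            # Re-center the next window on the mean x position found here.
            x_center = int(round(xs[inside].mean()))
    idx = np.concatenate(picked)
    if idx.size < 3:
        raise ValueError("need at least three lane pixels for a quadratic fit")
    coeffs = np.polyfit(ys[idx], xs[idx], 2)  # x as a function of y
    return coeffs, xs[idx], ys[idx]


# Synthetic 12x20 top-down image with one curved lane line and a stray pixel.
binary = np.zeros((12, 20), dtype=np.uint8)
for y in range(12):
    binary[y, int(round(0.05 * y * y + 4))] = 1
binary[10, 1] = 1  # noise far from the lane, outside every window
coeffs, lane_x, lane_y = sliding_window_fit(binary, x_start=10)
print(coeffs)
```

Because each window is re-centered on the pixels found in the window below it, the search follows the curve while ignoring the stray pixel far from the line.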

Fig.6. Perspective transform to obtain a top-down view of the road. (a) Combined thresholding (b) Perspective transform.

Fig.7. Sliding window search for the initial detection of lanes in the perspective-transformed image. (a) Perspective transform image (b) Sliding window search on the perspective transform image.

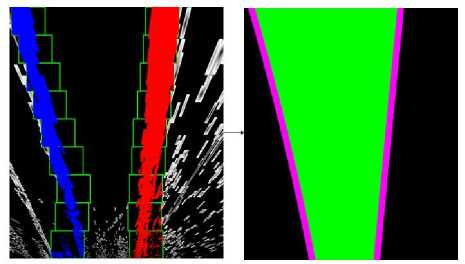

F. Illustrate Lane

From the top-down view, the lane lines are easily recognized. A sliding window search identifies the lane lines. The green boxes represent the windows in which the lane lines are colored. As the windows move upward, they re-center on the mean pixel position so that they follow the lines. The colored lines are then projected back onto the original image. The result of illustrating the lanes from the sliding window search image is shown in Fig. 8.

Fig.8. Lane illustrated from the sliding window search image. (a) Sliding window search image (b) Illustrated lanes with the space between them colored green.

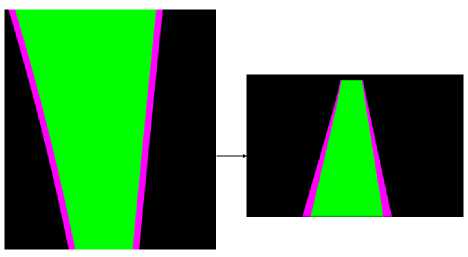

After illustrating the lane lines, warp and crop operations are performed for proper visualization of the image. Warping applies a 3x3 transformation matrix. Straight lines remain straight after the transformation. To find this transformation matrix, four points on the input image and the corresponding points on the output image are needed, and no three of those four points may be collinear. The images are then cropped because, when recognizing lane lines, we only need to focus on the regions where the lanes are likely to appear. The result of the warped and cropped image is presented in Fig. 9.

Fig.9. Warped and cropped illustrated lane image. (a) Illustrated lane image (b) Warped and cropped version of the previous image.
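How four point pairs determine the 3x3 matrix can be sketched by solving the eight linear equations directly. The coordinates below are hypothetical (the paper does not list its source and destination points), and the helper names are ours; in OpenCV the same matrix is normally obtained with `cv2.getPerspectiveTransform` and applied with `cv2.warpPerspective`.

```python
import numpy as np


def perspective_matrix(src, dst):
    """Solve for the 3x3 matrix H (normalized so H[2, 2] = 1) that maps each
    of the four src points (x, y) onto its dst point (u, v)."""
    rows, rhs = [], []
    for (x, y), (u, v) in zip(src, dst):
        # u = (h11*x + h12*y + h13) / (h31*x + h32*y + 1), and likewise for v.
        rows.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        rows.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        rhs.extend([u, v])
    h = np.linalg.solve(np.array(rows, dtype=float), np.array(rhs, dtype=float))
    return np.append(h, 1.0).reshape(3, 3)


def warp_point(H, x, y):
    """Apply H to a single point using homogeneous coordinates."""
    px, py, pw = H @ np.array([x, y, 1.0])
    return px / pw, py / pw


# Hypothetical road trapezoid in the camera image and its top-down rectangle.
src = [(45, 60), (55, 60), (90, 100), (10, 100)]
dst = [(10, 0), (90, 0), (90, 100), (10, 100)]
H = perspective_matrix(src, dst)

# The midpoint of the left trapezoid edge lands on the left rectangle edge,
# illustrating that straight lines stay straight under the transformation.
print(warp_point(H, 27.5, 80.0))
```

If three of the four points were collinear, the linear system would become singular and `np.linalg.solve` would fail, which is why that configuration must be avoided.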

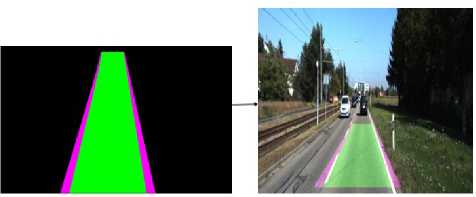

Finally, we take all this information and draw the results back onto the original image. The two pink lines recognized above are the lane lines, and the space between them is colored green to mark the road surface. Although the pipeline is described for a single image, it can easily be applied to many images to detect the lane lines on the road surface. The final result of the proposed lane detection system is shown in Fig. 10.

Fig.10. The final result of lane detection. (a) Warped and cropped image (b) Detection of lanes.

IV. Experimental Results Analysis


A. Experimental Setup

The proposed system is implemented in Python on a desktop with an Intel Core i5-5200U CPU @ 2.20GHz x 4. We used the OpenCV library with Python on Ubuntu 18.04, with Spyder as the integrated development environment.

B. Results Analysis

This section presents the experimental results on images taken from the KITTI dataset [20], where lane detection is verified under dense traffic and shadows. Fig. 11 shows the result of lane detection using shape and color features. The detected lanes are drawn as straight lines in pink. Some failures occur because of shadows or broken lane markings on the road surface. Fig. 11 (e) → (f) shows a small deviation of the detected lane lines from the road lane lines because of a shadow on the road. The proposed system also shows some error when the width of the lane changes unexpectedly in the spatiotemporal image due to pitch angle changes, which violates the temporal consistency assumption. The proposed algorithm generates satisfactory results with sharp curvature, sharp lane changes, obstacles, and lens flare because it uses the temporal consistency of lane width on each scan line. The experimental results show that the proposed technique identifies the lane accurately under different environmental circumstances such as weather conditions and varying illumination. To quantify the robustness of the system, its accuracy is also estimated on 175 frames from the KITTI dataset. Among the 175 frames, 147 frames are correctly detected and 9 frames are incorrectly detected. The performance of the proposed system is summarized in Table 1. The accuracy and false positive rate obtained by the system are 84% and 6.12%, respectively.

Table 1. Performance on the KITTI lanes dataset.

| Metric | Value |
| --- | --- |
| Total number of all visible lines frames | 175 |
| Number of detected lines frames | 147 |
| Accuracy (%) | 84 |
| Number of false detections | 9 |
| False positive rate (%) | 6.12 |
| Processing speed (avg. f/s) | 314.75 |

$$\text{Accuracy} = \frac{\text{number of detected lines frames}}{\text{total number of all visible lines frames}}$$

$$\text{False Positive Rate} = \frac{\text{number of false detections}}{\text{number of detected lines frames}}$$
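As a quick check, the two ratios defined above can be evaluated with the frame counts reported in Table 1. The function names are ours; the counts (175, 147, and 9) come from the paper.

```python
def accuracy(detected_frames, total_frames):
    """Share of frames with visible lines in which the lines were detected."""
    return detected_frames / total_frames


def false_positive_rate(false_detections, detected_frames):
    """False detections relative to the number of detected frames."""
    return false_detections / detected_frames


acc = accuracy(147, 175)
fpr = false_positive_rate(9, 147)
print(f"Accuracy: {acc:.2%}")             # 84.00%
print(f"False positive rate: {fpr:.2%}")  # 6.12%
```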

Fig.11. Experimental results using the KITTI data set as the inputs

V. Conclusion

This paper presents a lane detection system that uses computer vision techniques to detect lanes on the road efficiently. Techniques such as preprocessing, thresholding, and perspective transform are combined in the proposed system. Gradient and HLS thresholding efficiently detect the lane lines in binary images. A sliding window search is used to recognize the left and right lanes on the road. Cropping restricts processing to the region that contains the lane lines. The experimental results indicate that the system detects lanes efficiently under a range of environmental conditions. The system can be applied to any road with well-marked lines and implemented on an embedded system to support Advanced Driver Assistance Systems and to help visually impaired people navigate and stay on the proper track. In the future, a real-time system with a hardware implementation will be developed that captures images from real-time scenes, detects the lanes with the proposed technique, and generates a warning for the people concerned (drivers or visually impaired people).

References

[1] "Reported road casualties Great Britain 2009," Stationery Office Dept. Transp., London, U.K., 2010.

[2] J. M. Clanton, D. M. Bevly, and A. S. Hodel, "A Low-Cost Solution for an Integrated Multisensor Lane Departure Warning System," in IEEE Transactions on Intelligent Transportation Systems, vol. 10, no. 1, pp. 47-59, Mar. 2009.

[3] Q. Li, L. Chen, M. Li, S. Shaw and A. Nüchter, "A Sensor-Fusion Drivable-Region and Lane-Detection System for Autonomous Vehicle Navigation in Challenging Road Scenarios," in IEEE Transactions on Vehicular Technology, vol. 63, no. 2, pp. 540-555, Feb. 2014.

[4] M. Aly, "Real-time detection of lane markers in urban streets," in Proc. IEEE Intelligent Vehicles Symposium, Eindhoven, pp. 7-12, 2008.

[5] H. Yoo, U. Yang, and K. Sohn, "Gradient-enhancing conversion for illumination-robust lane detection," in IEEE Transactions on Intelligent Transportation Systems, vol. 14, no. 3, pp. 1083-1094, Sep. 2013.

[6] J. Deng, J. Kim, H. Sin, and Y. Han, "Fast lane detection based on the B-Spline fitting," International Journal of Research in Engineering and Technology, vol. 2, no. 4, pp. 134-137, 2013.

[7] Tsung-Ying Sun, Shang-Jeng Tsai, and V. Chan, "HSI color model based lane-marking detection," in Proc. IEEE Intelligent Transportation Systems Conference, Toronto, Ont., pp. 1168-1172, 2006.

[8] R. Ke, Z. Li, J. Tang, Z. Pan and Y. Wang, "Real-Time Traffic Flow Parameter Estimation From UAV Video Based on Ensemble Classifier and Optical Flow," in IEEE Transactions on Intelligent Transportation Systems, no. 99, pp. 1-11, Mar. 2018.

[9] H. Kong, J. Audibert and J. Ponce, "General Road Detection From a Single Image," in IEEE Transactions on Image Processing, vol. 19, no. 8, pp. 2211-2220, Aug. 2010.

[10] P. Moghadam and J. F. Dong, "Road direction detection based on vanishing-point tracking," in Proc. IEEE/RSJ International Conference on Intelligent Robots and Systems, Vilamoura, pp. 1553-1560, 2012.

[11] C. Lee and J. H. Moon, "Robust Lane Detection and Tracking for Real-Time Applications," in IEEE Transactions on Intelligent Transportation Systems, vol. no. 99, pp. 1-6, Feb. 2018.

[12] W. Song, Y. Yang, M. Fu, Y. Li and M. Wang, "Lane Detection and Classification for Forward Collision Warning System Based on Stereo Vision," in IEEE Sensors Journal, vol. 18, no. 12, pp. 5151-5163, Jun. 2018.

[13] C. Wu, L. Wang and K. Wang, "Ultra-low Complexity Block-based Lane Detection and Departure Warning System," in IEEE Transactions on Circuits and Systems for Video Technology, Feb. 2018.

[14] J. H. Yoo, S. Lee, S. Park and D. H. Kim, "A Robust Lane Detection Method Based on Vanishing Point Estimation Using the Relevance of Line Segments," in IEEE Transactions on Intelligent Transportation Systems, vol. 18, no. 12, pp. 3254-3266, Dec. 2017.

[15] U. Ozgunalp, R. Fan, X. Ai and N. Dahnoun, "Multiple Lane Detection Algorithm Based on Novel Dense Vanishing Point Estimation," in IEEE Transactions on Intelligent Transportation Systems, vol. 18, no. 3, pp. 621-632, Mar. 2017.

[16] J. Piao and H. Shin, "Robust hypothesis generation method using binary blob analysis for multi-lane detection," in IET Image Processing, vol. 11, no. 12, pp. 1210-1218, Dec. 2017.

[17] S. Jung, J. Youn, and S. Sull, "Efficient Lane Detection Based on Spatiotemporal Images," in IEEE Transactions on Intelligent Transportation Systems, vol. 17, no. 1, pp. 289-295, Jan. 2016.

[18] A. Borkar, M. Hayes, and M. T. Smith, "A novel lane detection system with efficient ground truth generation," in IEEE Transactions on Intelligent Transportation Systems, vol. 13, no. 1, pp. 365-374, Mar. 2012.

[19] S. Kang, S. Lee, J. Hur and S. Seo, "Multi-lane detection based on accurate geometric lane estimation in highway scenarios," in Proc. IEEE Intelligent Vehicles Symposium, Dearborn, MI, pp. 221-226, 2014.

[20] J. Fritsch, T. Kühnl and A. Geiger, "A new performance measure and evaluation benchmark for road detection algorithms," in Proc. 16th International IEEE Conference on Intelligent Transportation Systems, pp. 1693-1700, 2013.

[21] M. Sezgin and B. Sankur, "Survey over image thresholding techniques and quantitative performance evaluation," Journal of Electronic Imaging, vol. 13, no. 1, pp. 146-165, 2004.

[22] G. P. Balasubramanian, E. Saber, V. Misic, E. Peskin, and M. Shaw, "Unsupervised color image segmentation using a dynamic color gradient thresholding algorithm," in Proc. SPIE Human Vis. Electron. Imag. XIII, pp. 68061H-1-68061H-9, Feb. 2008.

[23] J. H. Yoo, D. Hwang, and K. Y. Moon, "Human body segmentation based on background estimation in modified HLS color space," in Proc. 9th WSEAS International Conference on Signal, Speech and Image Processing (SSIP'09), pp. 152-155, 2009.

[24] Z. Liu, S. Wang and X. Ding, "ROI perspective transform based road marking detection and recognition," in Proc. International Conference on Audio, Language and Image Processing, Shanghai, pp. 841-846, 2012.