A Model based on Deep Learning for COVID-19 X-rays Classification

Authors: Eman I. Abd El-Latif, Nour Eldeen Khalifa

Journal: International Journal of Image, Graphics and Signal Processing

Issue: vol. 15, no. 1, 2023.


Since the start of the COVID-19 pandemic in 2019, patients have overwhelmed hospitals and emergency health care units to check their health status. Health care systems were burdened by the increased number of patients, and there was a need to speed up the diagnosis of this disease using computer algorithms. In this paper, an integrated model based on deep and machine learning for COVID-19 X-ray classification is presented. The integration is built upon two phases. The first phase is feature extraction using deep transfer models such as Alexnet, Resnet18, VGG16, and VGG19. The second phase is classification using machine learning algorithms such as Support Vector Machine (SVM), Decision Trees, and an Ensemble algorithm. The selected dataset consists of three classes (COVID-19, Viral pneumonia, and Normal) and is available online under the name COVID-19 Radiography database. More than 30 experiments were conducted to select the optimal integration of machine and deep learning models. The integration of VGG19 and SVM achieved the highest testing accuracy, 98.61%. Performance indicators such as Recall, Precision, and F1 Score support this finding. The proposed model consumes less time and fewer resources in the training process than deep transfer models. Comparative results are presented at the end of the research, and the proposed model outperforms related works that used the same dataset in terms of testing accuracy.

COVID-19, X-rays, Deep Transfer Learning, VGG19, Classification, Machine learning, Support Vector Machine (SVM).

Short URL: https://sciup.org/15018743

IDR: 15018743 | DOI: 10.5815/ijigsp.2023.01.04


COVID-19 is a disease caused by the SARS-CoV-2 virus [1]. It is extremely infectious and has rapidly spread to many countries. COVID-19 attacks the lungs and respiratory system, and it most commonly causes respiratory symptoms that can resemble those of the flu or pneumonia. Rapid recognition of symptoms is one of the first steps of protection against this disease [2].

Artificial intelligence (AI) and radiology imaging (chest CT and chest X-ray scans) offer promising solutions for COVID-19 diagnosis. In recent years, deep learning-based techniques [3] have been used to solve many computer-vision problems, particularly in medical fields such as bacterial colony classification [4, 5], disease identification [6], and the detection of lung abnormalities related to COVID-19. These techniques have shown good results on image classification problems in terms of effectiveness and prediction accuracy.

On the other hand, deep learning (DL) algorithms require powerful GPU hardware and a huge number of training examples. To overcome this problem, various pre-trained models (Alexnet [7], SqueezeNet [8], VGGNet [9], GoogleNet [10], and ResNet [11]) can be reused to train DL networks through transfer learning. These models have played a significant role in the classification of viral and bacterial pneumonia [12, 13] and in detecting most thoracic infections [14, 15]. A review of existing algorithms for classifying chest X-ray images found that they achieve relatively low accuracy. The challenge is to build a model that classifies patients with COVID-19 with higher accuracy and lower false-positive and false-negative rates.

Several research projects have been dedicated to various COVID-19-related challenges using computer science techniques, such as tracking geographical COVID-19 infections [16], exploring the role of emerging technologies in fighting COVID-19 [17], exploring the effects of COVID-19 on the oil and electricity industry [18], classifying possible COVID-19 treatments [19], and more. Chest X-ray (CXR) image classification for COVID-19 is the primary focus of most existing research [20-22].

The main objective of the proposed technique is to classify chest X-ray images into COVID-19, normal, and viral pneumonia images. Features are extracted with four different deep transfer learning (DTL) models (Alexnet, VGG16, VGG19, and Resnet18). After feature extraction, different classifiers, namely SVM, decision trees (DT), and an ensemble algorithm, are used for the classification task.

The remainder of this paper is organized as follows: Section 2 reviews related algorithms for COVID-19 classification; Section 3 describes the dataset; Section 4 explains the proposed model architecture; and Section 5 presents the experimental results and their discussion. Finally, the conclusion and future work are presented at the end of the paper.

2. Related Works

DTL models play a fundamental role in the medical field because they outperform traditional methods in image classification.

Pham [23] presented a model for classifying X-ray images into two classes (COVID-19 and healthy) and three classes (COVID-19, healthy, and viral pneumonia). Three pre-trained models, Alexnet, GoogLeNet, and SqueezeNet, are applied to three public chest X-ray databases for training and testing.

The work described in [24] used eight pre-trained models to detect COVID-19. Of these, VGG16 and MobileNet both achieved an accuracy of 98.72%, but VGG16 obtained a higher F-score than MobileNet.

A deep convolutional generative adversarial network (DCGAN) model is presented in [25] to classify CXR images into three classes (normal, pneumonia, and COVID-19) using four different publicly available chest X-ray datasets. The DCGAN contains eight convolution layers, three max-pooling layers, and two fully connected layers. It achieves accuracies of 94.8%, 96.6%, 98.5%, and 98.6% on the four datasets, respectively.

Khan et al. [26] proposed CoroNet to detect COVID-19 from chest X-ray images. It is based on the Xception CNN architecture. The network was employed for three-class classification as well as four-class classification (COVID-19, bacterial pneumonia, viral pneumonia, and normal).

The model described in [27] classifies pneumonia in X-ray images. Six DTL models are used for feature extraction, after which KNN, SVM, Random Forest, and Naive Bayes classifiers are applied for classification.

In [28], two CNN models, Xception and VGG16, are used to diagnose pneumonia from chest X-ray images. VGG16 and Xception achieve accuracies of 87% and 82%, respectively. The test results show that the Xception network is more effective at identifying pneumonia cases than VGG16, whereas VGG16 is more effective at identifying normal images.

The model in [29] includes four steps, data pre-processing, augmentation, feature extraction, and an ensemble classifier, to classify pneumonia from chest X-ray images. In pre-processing and augmentation, all images are resized to 224 × 224 × 3, and three augmentation techniques are then applied. AlexNet, DenseNet121, Inception V3, GoogLeNet, and ResNet18 are used to extract features. Finally, the ensemble classifier combines the outputs of all pre-trained models, achieving an accuracy of 96.4% with a recall of 99.62%.

In [30], a model that classifies pneumonia, confirmed COVID-19, and normal medical images using transfer learning models is presented. The deep CNN models MobileNet and InceptionV3 are used. In the first step, images are resized to 224 × 224 for MobileNet and 299 × 299 for InceptionV3. The models are combined with ensemble learning techniques for feature extraction, after which a fully connected layer and a softmax layer are used for classification.

3. Materials and Methods

In this work, the COVID-19 Radiography database [31] is used. Researchers from Qatar University collaborated with medical doctors to build a database of chest X-ray images of COVID-19, normal, and viral pneumonia cases. The first release contains 219 COVID-19 images, 1341 normal images, and 1345 viral pneumonia images, whereas the second update consists of 3616 COVID-19, 10129 normal, and 1345 viral pneumonia images. All images are PNG files with dimensions of 256 × 256 pixels. Fig. 1 shows samples of the chest X-ray images: COVID-19 (first row), viral pneumonia (second row), and normal (third row).

Fig. 1. Samples from COVID-19 Radiography Database

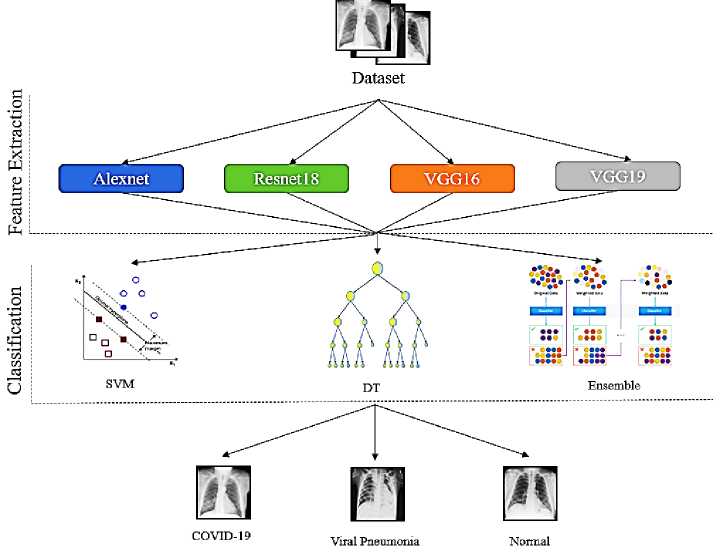

4. Proposed Model Architecture

The proposed model architecture consists of two phases; the first phase is the feature extraction while the second phase is the classification. Fig. 2 illustrates the proposed model architecture.

The feature extraction phase includes four deep transfer models: Alexnet [7], Resnet18 [11], VGG16 [9], and VGG19 [9]. They were selected because they contain fewer layers than other, deeper transfer learning models. The following paragraphs describe each of these DTL models.

In the classification phase, three machine learning algorithms were selected: Support Vector Machine [32], Decision Trees [33], and an Ensemble algorithm [34]. These algorithms were selected because they are among the most commonly used in machine learning and have been used in many studies, such as [35, 36, 37].

Fig. 2. The proposed model architecture

Alexnet, presented by Alex Krizhevsky in 2012, is used in the proposed model experiments. The model consists of five convolution layers, three max-pooling layers, and three fully connected layers. Each convolution layer is followed by a ReLU (rectified linear unit) activation function, which speeds up the training process. Two dropout layers are used to prevent the model from over-fitting. The input image size is 227 × 227 × 3, and the filter sizes used in the model are 11 × 11, 5 × 5, and 3 × 3.
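To make the 227 × 227 × 3 input size concrete, the sketch below traces the spatial size of the feature maps through AlexNet's convolution and max-pooling layers using the standard output-size formula ⌊(W − K + 2P)/S⌋ + 1. The strides and paddings listed are assumptions taken from the original AlexNet configuration [7]; they are not stated in this paper.

```python
def conv_output_size(size: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Output size along one spatial dimension of a convolution or pooling layer."""
    return (size - kernel + 2 * padding) // stride + 1


# (layer name, kernel size, stride, padding): assumed standard AlexNet settings,
# not taken from the paper itself.
ALEXNET_SPATIAL_LAYERS = [
    ("conv1", 11, 4, 0),
    ("pool1", 3, 2, 0),
    ("conv2", 5, 1, 2),
    ("pool2", 3, 2, 0),
    ("conv3", 3, 1, 1),
    ("conv4", 3, 1, 1),
    ("conv5", 3, 1, 1),
    ("pool5", 3, 2, 0),
]


def trace_spatial_sizes(input_size: int, layers) -> list:
    """Return (layer name, output size) after each layer, starting from input_size."""
    sizes = []
    size = input_size
    for name, kernel, stride, padding in layers:
        size = conv_output_size(size, kernel, stride, padding)
        sizes.append((name, size))
    return sizes


if __name__ == "__main__":
    for name, size in trace_spatial_sizes(227, ALEXNET_SPATIAL_LAYERS):
        print(f"{name}: {size} x {size}")
```

Under these assumptions, the 227 × 227 input shrinks to 55 × 55 after the first 11 × 11 convolution and to 6 × 6 after the last max-pooling layer, which is the map that is flattened into the fully connected layers.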

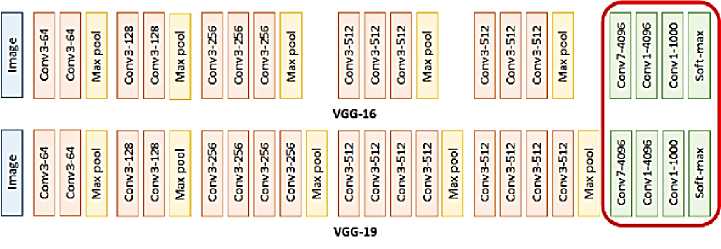

The VGG (Visual Geometry Group) network, presented by Simonyan and Zisserman in 2014, is also used in the experiments. It has two versions, VGG16 and VGG19, with 16 and 19 weight layers, respectively, as shown in Fig. 3. VGG16 involves 13 convolutional layers and three fully connected layers, whereas VGG19 contains three more convolutional layers than VGG16. The input image size is 224 × 224 × 3, and the convolutional part of the network consists of five blocks. Each of the first two blocks contains two convolution layers and one max-pooling layer. Each of the remaining three blocks contains three convolution layers (four in VGG19) and one max-pooling layer.

Fig. 3. The architecture of the VGG16 and VGG19 network
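The VGG layer counts can be checked with a short sketch. It encodes the number of 3 × 3 convolution layers per block for each variant, adds the three fully connected layers, and assumes, as in the original VGG design [9], that the padded convolutions preserve spatial size and that each block ends with a 2 × 2, stride-2 max-pooling layer.

```python
# Convolution layers in each of the five blocks (from the VGG configurations).
VGG_CONV_LAYERS_PER_BLOCK = {
    "VGG16": [2, 2, 3, 3, 3],
    "VGG19": [2, 2, 4, 4, 4],
}
FULLY_CONNECTED_LAYERS = 3


def weight_layer_count(variant: str) -> int:
    """Convolution layers plus fully connected layers (the number in the model's name)."""
    return sum(VGG_CONV_LAYERS_PER_BLOCK[variant]) + FULLY_CONNECTED_LAYERS


def final_feature_map_size(input_size: int = 224, num_blocks: int = 5) -> int:
    """Spatial size after the five blocks; assumes the padded 3x3 convolutions keep
    the size and each block's 2x2, stride-2 max-pooling halves it."""
    size = input_size
    for _ in range(num_blocks):
        size //= 2
    return size


if __name__ == "__main__":
    for variant, blocks in VGG_CONV_LAYERS_PER_BLOCK.items():
        print(variant, "conv layers:", sum(blocks), "weight layers:", weight_layer_count(variant))
    print("final feature map:", final_feature_map_size(), "x", final_feature_map_size())
```

This gives 13 + 3 = 16 layers for VGG16 and 16 + 3 = 19 layers for VGG19, and a 224 × 224 input is reduced to a 7 × 7 map before the fully connected layers.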

ResNet was introduced by He et al. in 2015. ResNet has several versions, such as ResNet18, ResNet34, and ResNet50, where the number after the name denotes the number of layers in the model. The network takes an input image of 224 × 224 × 3. ResNet18 is used in the proposed algorithm. It contains 17 convolution layers and one fully connected layer. Only two pooling layers are used in the network: max-pooling at the beginning and average pooling at the end.
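The count of 17 convolution layers plus one fully connected layer follows from the standard ResNet18 layout. The sketch below assumes (this is not stated in the paper) the original design: a 7 × 7, stride-2 stem convolution, a stride-2 max-pooling layer, and four stages of two basic blocks, each block holding two 3 × 3 convolutions, with the first block of stages 2 to 4 downsampling by 2. The 1 × 1 projection shortcuts are conventionally not counted.

```python
STEM_CONV_LAYERS = 1         # 7x7, stride-2 convolution (assumed standard layout)
STAGES = 4                   # four residual stages
BASIC_BLOCKS_PER_STAGE = 2   # ResNet18 uses [2, 2, 2, 2] basic blocks
CONVS_PER_BASIC_BLOCK = 2    # two 3x3 convolutions per basic block
FULLY_CONNECTED_LAYERS = 1


def resnet18_layer_counts() -> tuple:
    """Return (convolution layers, convolution + fully connected layers)."""
    conv = STEM_CONV_LAYERS + STAGES * BASIC_BLOCKS_PER_STAGE * CONVS_PER_BASIC_BLOCK
    return conv, conv + FULLY_CONNECTED_LAYERS


def resnet18_spatial_sizes(input_size: int = 224) -> list:
    """Feature-map size after the stem convolution, the max-pooling and each stage,
    assuming stride 2 in the stem, the max-pooling and the first block of stages 2-4."""
    sizes = []
    size = input_size // 2      # stem convolution, stride 2
    sizes.append(size)
    size //= 2                  # max-pooling, stride 2
    sizes.append(size)
    for stage in range(STAGES):
        if stage > 0:
            size //= 2          # first block of stages 2-4 downsamples
        sizes.append(size)
    return sizes                # global average pooling then reduces the last map to 1x1


if __name__ == "__main__":
    print(resnet18_layer_counts())
    print(resnet18_spatial_sizes())
```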

Three different classifiers are used in the proposed model: SVM, DT, and an ensemble classifier. They are supervised algorithms that can be used for classification as well as regression. SVM classifies training images into distinct class labels by finding the hyperplane that maximizes the margin separating two classes. Let the training set $\{(x_t, y_t)\}$, where $x_t \in \mathbb{R}^d$ and $y_t \in \{-1, 1\}$, be separable by a hyperplane with margin $\rho$. Then there is a linear hyperplane, represented by a weight vector $w$ and a bias $b$, that separates the two classes without errors:

$$w \cdot x + b = 0$$

Each point is then classified as positive or negative according to the sign of the decision function:

$$
y_t =
\begin{cases}
+1, & w \cdot x_t + b > 0 \\
-1, & w \cdot x_t + b < 0
\end{cases}
$$
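The decision rule above can be illustrated with a minimal pure-Python sketch of a binary linear SVM. The training routine is an assumption for illustration only: it minimises the regularised hinge loss with Pegasos-style stochastic subgradient steps and folds the bias $b$ into $w$ through a constant feature, which is not necessarily how MATLAB (used in the paper) trains its SVMs. The three-class problem in this paper also needs a multiclass scheme (for example one-vs-one), which is not shown.

```python
import random


def decision_value(w, b, x):
    """Compute w . x + b."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def predict(w, b, x):
    """Sign rule: +1 if w . x + b > 0, otherwise -1."""
    return 1 if decision_value(w, b, x) > 0 else -1


def train_linear_svm(xs, ys, lam=0.01, epochs=100, seed=0):
    """Pegasos-style stochastic subgradient descent on
    lam/2 * ||w||^2 + mean(max(0, 1 - y * (w . x + b))).
    Assumption: the bias is an extra weight on a constant feature 1, so it is
    regularised together with w (a common simplification)."""
    rng = random.Random(seed)
    data = [(list(x) + [1.0], y) for x, y in zip(xs, ys)]  # append constant feature
    w = [0.0] * len(data[0][0])
    t = 0
    for _ in range(epochs):
        rng.shuffle(data)
        for x, y in data:
            t += 1
            eta = 1.0 / (lam * t)                             # Pegasos step size
            margin = y * sum(wi * xi for wi, xi in zip(w, x))
            w = [(1.0 - eta * lam) * wi for wi in w]          # regulariser step
            if margin < 1.0:                                  # hinge loss is active
                w = [wi + eta * y * xi for wi, xi in zip(w, x)]
    return w[:-1], w[-1]                                      # split back into (w, b)


if __name__ == "__main__":
    # Hypothetical, linearly separable 2-D toy data.
    xs = [(2, 2), (3, 1), (1, 3), (2.5, 2.5), (-2, -2), (-3, -1), (-1, -3), (-2.5, -2.5)]
    ys = [1, 1, 1, 1, -1, -1, -1, -1]
    w, b = train_linear_svm(xs, ys)
    print(w, b, [predict(w, b, x) for x in xs])
```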

Training an SVM on explicitly mapped higher-dimensional vectors leads to extremely high computational costs. To overcome this problem, a kernel function is used, which computes inner products in the higher-dimensional space without constructing the mapped vectors explicitly. SVM is efficient compared with traditional classification techniques: it is suitable for small datasets and learns efficiently [38]. Ensemble learning combines several base learners (e.g., decision trees or neural networks) into a single classifier model [39].
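The kernel idea can be checked numerically. For the degree-2 polynomial kernel $k(x, z) = (x \cdot z)^2$ on two-dimensional inputs, the kernel value equals the inner product of the explicit feature vectors $\phi(x) = (x_1^2, \sqrt{2}\,x_1 x_2, x_2^2)$, yet it is computed without building $\phi$. The radial basis function (RBF) kernel is included as another common choice; which kernel the authors used is not stated here, so both kernels and the value of `gamma` are illustrative assumptions.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def poly2_kernel(x, z):
    """Degree-2 homogeneous polynomial kernel (x . z)^2, computed in the input space."""
    return dot(x, z) ** 2


def poly2_feature_map(x):
    """Explicit feature map for 2-D inputs whose inner product equals poly2_kernel."""
    x1, x2 = x
    return (x1 * x1, math.sqrt(2) * x1 * x2, x2 * x2)


def rbf_kernel(x, z, gamma=0.5):
    """Radial basis function kernel exp(-gamma * ||x - z||^2); gamma is an assumed value."""
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(x, z)))


if __name__ == "__main__":
    x, z = (1.0, 2.0), (3.0, 4.0)
    print(poly2_kernel(x, z))                               # kernel trick: 121.0
    print(dot(poly2_feature_map(x), poly2_feature_map(z)))  # explicit mapping, about 121.0
    print(rbf_kernel(x, z))
```

The explicit map grows quickly with the input dimension and polynomial degree (and is infinite-dimensional for the RBF kernel), which is why the kernel form is preferred.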

DT is an inductive learning algorithm used to create a set of classification rules. Information gain (IG) and entropy are among the criteria used to build decision trees from the training features. IG measures how much information (reduction in entropy) a feature provides for making further decisions, and it helps determine the order in which features are used. For a dataset with $n$ classes, the entropy is calculated using the following equation [40]:

$$\text{Entropy} = -\sum_{i=1}^{n} p_i \log_2 p_i$$

where $p_i$ is the probability (proportion of samples) of class $i$.
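The entropy formula and the information gain used to rank features can be sketched as follows. The toy labels and the binary feature in the example are hypothetical and only illustrate the computation; in the paper, the decision trees operate on the extracted deep features.

```python
import math
from collections import Counter


def entropy(labels):
    """Entropy = -sum_i p_i * log2(p_i), where p_i is the fraction of samples in class i."""
    n = len(labels)
    return -sum((count / n) * math.log2(count / n) for count in Counter(labels).values())


def information_gain(labels, feature_values):
    """Entropy of the parent node minus the size-weighted entropy of the child nodes
    obtained by splitting on each distinct feature value."""
    n = len(labels)
    children = {}
    for label, value in zip(labels, feature_values):
        children.setdefault(value, []).append(label)
    weighted_child_entropy = sum(len(c) / n * entropy(c) for c in children.values())
    return entropy(labels) - weighted_child_entropy


if __name__ == "__main__":
    labels = ["COVID-19", "COVID-19", "Normal", "Normal", "Viral Pneumonia", "Viral Pneumonia"]
    feature = ["high", "high", "low", "low", "low", "low"]  # hypothetical binary feature
    print(f"entropy = {entropy(labels):.3f}")           # log2(3) = 1.585 for 3 balanced classes
    print(f"IG = {information_gain(labels, feature):.3f}")  # 1.585 - 4/6 * 1.0 = 0.918
```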

5. Experimental Results

All experiments were run on a computer with 32 GB of RAM, an Intel Xeon CPU (2 GHz), and an NVIDIA TITAN Xp graphics card. The experiments were implemented in the MATLAB R2021b software package with GPU support. The proposed model follows Algorithm 1, and the following specifications were selected for the experiments:

• Four deep transfer learning models for feature extraction (Alexnet, Resnet18, VGG16, and VGG19).

• Three different classifiers are evaluated (Support Vector Machine, Decision Trees, and Ensemble algorithm).

The dataset was divided into two parts: 70% of the data for training and 30% for testing in experiment 1, and 90% for training and 10% for testing in experiment 2.

The selected performance measures are testing accuracy, precision, recall, and F1 score, given in Equations (4) to (7), together with the time consumed during training.

$$\text{Testing Accuracy} = \frac{TruePos + TrueNeg}{(TruePos + FalsePos) + (TrueNeg + FalseNeg)} \tag{4}$$

$$\text{Precision } (P) = \frac{TruePos}{TruePos + FalsePos} \tag{5}$$

$$\text{Recall } (R) = \frac{TruePos}{TruePos + FalseNeg} \tag{6}$$

$$\text{F1 Score} = 2 \cdot \frac{P \cdot R}{P + R} \tag{7}$$

where TruePos is the number of true-positive samples, FalsePos is the number of false-positive samples, TrueNeg is the number of true-negative samples, and FalseNeg is the number of false-negative samples from a confusion matrix.

• The training time is measured and compared with that of the DTL model used as a classifier.
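Equations (4) to (7) are written for a single positive class. For the three-class problem, a common approach, assumed here rather than confirmed by the paper, is to take the counts for each class in a one-vs-rest fashion from the confusion matrix and macro-average precision and recall. Applying Eq. (7) to those averaged values is consistent with the F Score entries reported in the results (for example, Precision 0.9729 and Recall 0.9755 for VGG19 + SVM in Table 1 give 0.9742). The confusion matrix in the example is hypothetical.

```python
def one_vs_rest_counts(cm, k):
    """(TruePos, FalsePos, FalseNeg, TrueNeg) for class k, where cm[i][j] counts
    samples of true class i that were predicted as class j."""
    total = sum(sum(row) for row in cm)
    true_pos = cm[k][k]
    false_pos = sum(row[k] for row in cm) - true_pos
    false_neg = sum(cm[k]) - true_pos
    true_neg = total - true_pos - false_pos - false_neg
    return true_pos, false_pos, false_neg, true_neg


def binary_accuracy(tp, fp, fn, tn):
    return (tp + tn) / ((tp + fp) + (tn + fn))  # Eq. (4)


def precision(tp, fp):
    return tp / (tp + fp) if tp + fp else 0.0  # Eq. (5)


def recall(tp, fn):
    return tp / (tp + fn) if tp + fn else 0.0  # Eq. (6)


def f1_score(p, r):
    return 2 * p * r / (p + r) if p + r else 0.0  # Eq. (7)


def overall_accuracy(cm):
    """Multiclass accuracy: correctly classified samples (diagonal) over all samples."""
    return sum(cm[i][i] for i in range(len(cm))) / sum(sum(row) for row in cm)


def macro_scores(cm):
    """Macro-averaged precision and recall, and Eq. (7) applied to those averages
    (an assumed averaging scheme)."""
    n = len(cm)
    ps, rs = [], []
    for k in range(n):
        tp, fp, fn, _ = one_vs_rest_counts(cm, k)
        ps.append(precision(tp, fp))
        rs.append(recall(tp, fn))
    p, r = sum(ps) / n, sum(rs) / n
    return p, r, f1_score(p, r)


if __name__ == "__main__":
    cm = [[8, 1, 1],   # hypothetical counts: rows = true class, columns = predicted
          [0, 9, 1],
          [1, 0, 9]]
    print(overall_accuracy(cm))                 # 26 / 30
    print(macro_scores(cm))
    print(round(f1_score(0.9729, 0.9755), 4))   # 0.9742, as in Table 1 (VGG19 + SVM)
```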

Algorithm 1: The Proposed Model Algorithm

Input: COVID-19 Radiography Database

Output: Classification of the input X-ray image into one of three classes {COVID-19, Viral Pneumonia, Normal} with the highest possible accuracy

1. Download the weights of the DTL models: Alexnet, Resnet18, VGG16, and VGG19

2. Train the proposed model with 70% of the data for experiment one and 90% for experiment two

3. For each image in the dataset

4. Resize the input image to the default dimension of the DTL model

5. Feed the image to the DTL model for feature extraction

6. Replace the last fully connected layers with the three classifiers: SVM, DT, and Ensemble

7. End

8. Test the proposed model with 30% of the data for experiment one and 10% for experiment two using the performance metrics
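The control flow of Algorithm 1 can be sketched in a framework-neutral way. This is illustrative only: `extract_features` stands in for a pre-trained DTL model and `fit_classifier` for SVM, DT, or Ensemble training, and both are hypothetical callables. The split uses a seeded random shuffle, which is an assumption because the paper does not say how the 70/30 and 90/10 splits were drawn. A toy nearest-centroid classifier is used only so that the example runs; it is not one of the paper's classifiers.

```python
import random


def train_test_split(items, labels, train_fraction, seed=0):
    """Shuffle indices with a fixed seed (assumed) and cut them at train_fraction."""
    indices = list(range(len(items)))
    random.Random(seed).shuffle(indices)
    cut = round(train_fraction * len(indices))
    train, test = indices[:cut], indices[cut:]
    return ([items[i] for i in train], [labels[i] for i in train],
            [items[i] for i in test], [labels[i] for i in test])


def run_experiment(images, labels, extract_features, fit_classifier, train_fraction):
    """Mirrors Algorithm 1: features for every image (steps 3-5), a classifier fitted
    on the training split in place of the DTL model's last fully connected layers
    (steps 2 and 6), and accuracy on the held-out split (step 8)."""
    features = [extract_features(image) for image in images]
    x_train, y_train, x_test, y_test = train_test_split(features, labels, train_fraction)
    predict = fit_classifier(x_train, y_train)
    correct = sum(predict(x) == y for x, y in zip(x_test, y_test))
    return correct / len(y_test)


def fit_nearest_centroid(xs, ys):
    """Toy stand-in classifier (NOT the paper's SVM, DT or Ensemble)."""
    sums, counts = {}, {}
    for x, y in zip(xs, ys):
        acc = sums.setdefault(y, [0.0] * len(x))
        for j, value in enumerate(x):
            acc[j] += value
        counts[y] = counts.get(y, 0) + 1
    centroids = {y: [v / counts[y] for v in s] for y, s in sums.items()}

    def predict(x):
        return min(centroids, key=lambda y: sum((a - b) ** 2 for a, b in zip(x, centroids[y])))

    return predict


if __name__ == "__main__":
    # Hypothetical 2-D "images" in three well-separated clusters.
    centers = {"COVID-19": (0.0, 0.0), "Viral Pneumonia": (0.0, 10.0), "Normal": (10.0, 10.0)}
    images, labels = [], []
    for label, (cx, cy) in centers.items():
        for i in range(10):
            images.append((cx + 0.1 * i, cy - 0.1 * i))
            labels.append(label)
    for fraction in (0.7, 0.9):  # experiment one and experiment two
        print(fraction, run_experiment(images, labels, list, fit_nearest_centroid, fraction))
```

Passing the feature extractor and the classifier as parameters keeps the twelve DTL/classifier combinations of the experiments as a simple loop over pairs of callables.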

5.1 Experiment Number 1

In experiment number one, the dataset was split into 70% of the data for training and 30% for testing. Table 1 presents the Recall, Precision, F1 Score, and Testing Accuracy of the different deep transfer models (Alexnet, Resnet18, VGG16, and VGG19) as feature extractors combined with the different machine learning models (Support Vector Machine, Decision Trees, and Ensemble algorithm) as classifiers.

Table 1. Experiment Number 1 Results

| ML | DTL | Recall | Precision | F Score | Testing Accuracy |
|---|---|---|---|---|---|
| SVM | Alexnet | 0.9698 | 0.9684 | 0.9691 | 0.9745 |
| SVM | Resnet18 | 0.9273 | 0.9254 | 0.9263 | 0.9364 |
| SVM | VGG16 | 0.9754 | 0.9715 | 0.9734 | 0.9784 |
| SVM | VGG19 | 0.9755 | 0.9729 | 0.9742 | 0.9795 |
| DT | Alexnet | 0.8045 | 0.8098 | 0.8071 | 0.852 |
| DT | Resnet18 | 0.7409 | 0.737 | 0.7389 | 0.7876 |
| DT | VGG16 | 0.813 | 0.8221 | 0.8175 | 0.8575 |
| DT | VGG19 | 0.8157 | 0.8207 | 0.8182 | 0.8575 |
| Ensemble | Alexnet | 0.9035 | 0.9286 | 0.9159 | 0.9349 |
| Ensemble | Resnet18 | 0.8442 | 0.898 | 0.8703 | 0.8918 |
| Ensemble | VGG16 | 0.9276 | 0.9439 | 0.9357 | 0.9514 |
| Ensemble | VGG19 | 0.9284 | 0.9458 | 0.937 | 0.947 |

Table 1 shows that VGG19 as the feature extractor and SVM as the classifier achieved the highest testing accuracy. The other performance measures reinforce this result, as the integration of VGG19 and SVM achieved 0.9755, 0.9729, and 0.9742 in Recall, Precision, and F Score, respectively.

Another metric showing the performance of the selected model is the testing accuracy for each class. Table 2 lists the per-class testing accuracy of the different models.

Table 2. Testing accuracy for every class for different DTL and machine learning algorithms for experiment one

| ML | DTL | COVID-19 (%) | Viral Pneumonia (%) | Normal (%) | Total Accuracy (%) |
|---|---|---|---|---|---|
| SVM | Alexnet | 95.5 | 96.8 | 98.1 | 97.4 |
| SVM | Resnet18 | 89.1 | 93.2 | 95.3 | 93.6 |
| SVM | VGG16 | 96.9 | 96.1 | 98.4 | 97.8 |
| SVM | VGG19 | 97 | 96.3 | 98.5 | 97.9 |
| DT | Alexnet | 74.4 | 78.6 | 89.9 | 85.2 |
| DT | Resnet18 | 61.5 | 73.8 | 85.9 | 78.7 |
| DT | VGG16 | 76 | 80.8 | 89.8 | 85.7 |
| DT | VGG19 | 75.2 | 80.8 | 90.2 | 87.7 |
| Ensemble | Alexnet | 89.1 | 94.6 | 94.9 | 93.4 |
| Ensemble | Resnet18 | 86.5 | 93.5 | 89.4 | 89.1 |
| Ensemble | VGG16 | 92.2 | 94.8 | 96.2 | 95.1 |
| Ensemble | VGG19 | 91.6 | 96.6 | 95.5 | 94.7 |

Table 2 shows that VGG19 with the SVM model achieved the highest accuracy for the COVID-19 and Normal classes. This is a good indicator of its ability to distinguish between these two classes, since the objective of the proposed model is to detect the COVID-19 class with the highest possible accuracy.

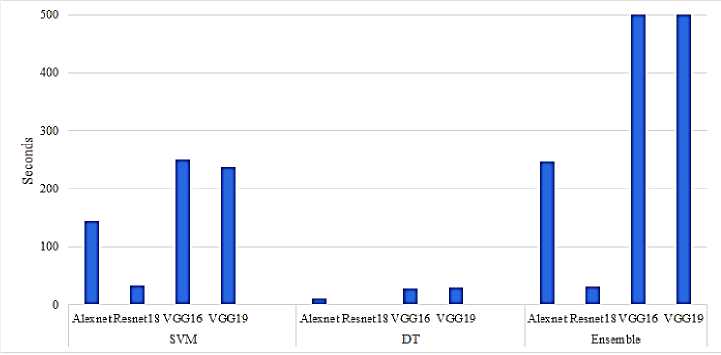

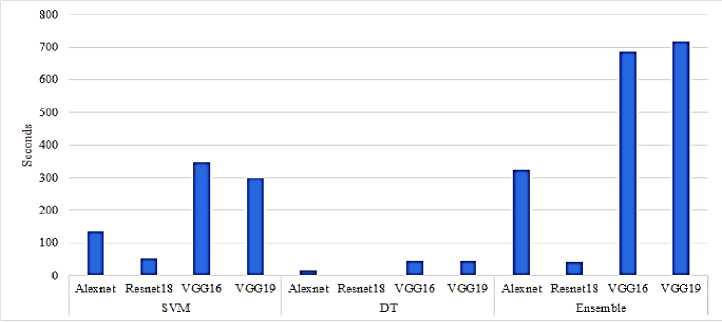

Another metric to be measured is the training time. Although it is unrelated to classification accuracy, it indicates how lightweight or heavy the model is in terms of computational complexity. Fig. 4 illustrates the training time of the different models for experiment number one.

Fig. 4 shows that the DT classifier required the least training time, as this classifier involves little mathematical complexity. The Ensemble algorithm required the most training time, because it trains and combines multiple learners that fit the data in order to achieve the highest possible accuracy. The SVM classifier required a moderate training time but achieved the highest accuracy. With every classifier, VGG19 required the longest training time, since it is the deepest of the four DTL models and requires the most computation to extract features.

Fig. 4. Spent time of the training for the different models for experiment one


5.2 Experiment Number 2

In experiment number two, the dataset was split into 90% of the data for training and 10% for testing. This split was chosen to allow comparison of the proposed model with other related works. Table 3 presents the Recall, Precision, F1 Score, and Testing Accuracy of the different deep transfer models (Alexnet, Resnet18, VGG16, and VGG19) as feature extractors combined with the different machine learning models (Support Vector Machine, Decision Trees, and Ensemble algorithm) as classifiers.

Table 3 shows that the SVM classifier with VGG19 as the feature extractor achieved the highest testing accuracy, 0.9861, with 0.9837, 0.9787, and 0.9812 in Recall, Precision, and F Score, respectively. Table 3 also shows that neither DT nor Ensemble performed as well in terms of testing accuracy, and that VGG19 as the feature extractor achieved the highest testing accuracy with every classifier (tied with Alexnet, at 0.9564, for the Ensemble algorithm).

Table 3. Experiment Number 2 Results

| ML | DTL | Recall | Precision | F Score | Testing Accuracy |
|---|---|---|---|---|---|
| SVM | Alexnet | 0.9766 | 0.9760 | 0.9763 | 0.9822 |
| SVM | Resnet18 | 0.9349 | 0.9278 | 0.9313 | 0.9446 |
| SVM | VGG16 | 0.9812 | 0.9778 | 0.9795 | 0.9835 |
| SVM | VGG19 | 0.9837 | 0.9787 | 0.9812 | 0.9861 |
| DT | Alexnet | 0.7950 | 0.7993 | 0.7971 | 0.8495 |
| DT | Resnet18 | 0.7476 | 0.7434 | 0.7455 | 0.8046 |
| DT | VGG16 | 0.7967 | 0.8043 | 0.8005 | 0.8548 |
| DT | VGG19 | 0.8478 | 0.8526 | 0.8502 | 0.8832 |
| Ensemble | Alexnet | 0.9292 | 0.9519 | 0.9404 | 0.9564 |
| Ensemble | Resnet18 | 0.8611 | 0.9117 | 0.8857 | 0.9129 |
| Ensemble | VGG16 | 0.9258 | 0.9466 | 0.9361 | 0.9505 |
| Ensemble | VGG19 | 0.9396 | 0.9549 | 0.9472 | 0.9564 |

Another metric for evaluating a model's performance is the testing accuracy for each class. Table 4 summarizes the testing accuracy for the various models in each class.

Table 4. Testing accuracy for every class for different DTL and machine learning algorithms for experiment two

| ML | DTL | COVID-19 (%) | Viral Pneumonia (%) | Normal (%) | Total Accuracy (%) |
|---|---|---|---|---|---|
| SVM | Alexnet | 97 | 97 | 98.8 | 98.22 |
| SVM | Resnet18 | 89.1 | 92.5 | 96.7 | 94.46 |
| SVM | VGG16 | 96.5 | 97.8 | 99.1 | 98.35 |
| SVM | VGG19 | 97.3 | 97 | 99.3 | 98.61 |
| DT | Alexnet | 76.3 | 74 | 89.4 | 84.95 |
| DT | Resnet18 | 65.9 | 69 | 88 | 80.46 |
| DT | VGG16 | 75.9 | 75 | 90.4 | 85.48 |
| DT | VGG19 | 79.4 | 84.1 | 92.2 | 88.32 |
| Ensemble | Alexnet | 93.2 | 95.9 | 96.5 | 95.64 |
| Ensemble | Resnet18 | 88.5 | 93 | 92 | 91.29 |
| Ensemble | VGG16 | 92 | 96 | 96 | 95.05 |
| Ensemble | VGG19 | 93.3 | 96.9 | 96.3 | 95.64 |

Table 4 shows that VGG19 with the SVM model achieved the highest accuracy for the COVID-19 and Normal classes. This is a good indicator of its ability to distinguish between these two classes, since the objective of the proposed model is to detect the COVID-19 class with the highest possible accuracy.

As in experiment one, the training time is also measured, since it indicates how lightweight or heavy the model is in terms of computational complexity. Fig. 5 illustrates the training time of the different models for experiment number two.

Fig. 5. Spent time of the training for the different models for experiment two

Fig. 5 shows that the DT classifier consumed the least training time and the Ensemble algorithm consumed the most. This was expected, because the Ensemble algorithm is much more complex than DT and SVM. For the SVM classifier with VGG19 as the feature extractor, the training time was moderate compared with the other models.


5.3 Results Discussion

From experiments one and two, the following can be concluded:

• The integration of VGG19 as feature extractor and SVM as classifier achieved the highest testing accuracy regardless of whether the data was divided 70%-30% or 90%-10% for training and testing, with 0.9795 and 0.9861, respectively.

• The performance metrics corroborate the findings of both experiments.

• In per-class accuracy, the integration of VGG19 as feature extractor and SVM as classifier achieved the highest accuracy for the COVID-19 class, with 97% and 97.3% in experiments one and two, respectively.

• The training time of the integration of VGG19 as feature extractor and SVM as classifier was moderate compared with DT and the Ensemble algorithm, which means that its computational complexity is also moderate.

A question that may be raised here is how much training time is needed, and what testing accuracy is achieved, when VGG19 is used as both the feature extractor and the classifier.

Table 5 shows the training time and testing accuracy of the VGG19 model used as both feature extractor and classifier, and of the proposed integration of VGG19 and the SVM classifier.

Table 5. Training time and testing accuracy for the proposed integrated model and VGG19 model

| | Training Time, VGG19 + SVM (h:mm:ss) | Training Time, VGG19 (h:mm:ss) | Testing Accuracy, VGG19 + SVM | Testing Accuracy, VGG19 |
|---|---|---|---|---|
| Experiment 1 | 0:04:33 | 0:49:22 | 0.9795 | 0.9560 |
| Experiment 2 | 0:05:30 | 1:12:38 | 0.9861 | 0.9756 |

Table 5 shows that the proposed integration of VGG19 and SVM required much less training time than the VGG19 model. In experiment one, it consumed only 4 minutes and 33 seconds of training, whereas the VGG19 model consumed 49 minutes and 22 seconds, and the testing accuracy was 0.9795 for the proposed model versus 0.9560 for the VGG19 model. The same behavior occurred in experiment two (5 minutes and 30 seconds versus 1 hour, 12 minutes and 38 seconds, with testing accuracies of 0.9861 and 0.9756), which indicates that the proposed model is less computationally demanding than the VGG19 model.
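The training-time gap in Table 5 can be expressed as a speed-up factor by converting the h:mm:ss values to seconds. The small helper below only performs this arithmetic on the reported times; the function names are illustrative.

```python
def to_seconds(hms: str) -> int:
    """Convert an 'h:mm:ss' string to seconds."""
    hours, minutes, seconds = (int(part) for part in hms.split(":"))
    return 3600 * hours + 60 * minutes + seconds


def speedup(fast: str, slow: str) -> float:
    """How many times longer the slow training run took than the fast one."""
    return to_seconds(slow) / to_seconds(fast)


# Training times from Table 5: (VGG19 + SVM, VGG19 alone)
TABLE_5_TIMES = {
    "Experiment 1": ("0:04:33", "0:49:22"),
    "Experiment 2": ("0:05:30", "1:12:38"),
}

if __name__ == "__main__":
    for experiment, (proposed, vgg19) in TABLE_5_TIMES.items():
        print(f"{experiment}: {speedup(proposed, vgg19):.1f}x faster training")
```

This gives roughly 10.8 times faster training for experiment one (273 s versus 2962 s) and 13.2 times for experiment two (330 s versus 4358 s).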

The last part of this research is dedicated to a comparison with other related works that used the same dataset. Table 6 compares the proposed integrated model of VGG19 with SVM against other related works.

Table 6. Comparative results with other related works

References

- S. Law, A. W. Leung, and C. Xu, “Severe acute respiratory syndrome (SARS) and coronavirus disease-2019 (COVID-19): From causes to preventions in Hong Kong,” International Journal of Infectious Diseases, vol. 94, pp. 156–163, May 2020, doi: 10.1016/j.ijid.2020.03.059.

- P.-I. Lee and P.-R. Hsueh, “Emerging threats from zoonotic coronaviruses-from SARS and MERS to 2019-nCoV,” Journal of Microbiology, Immunology and Infection, vol. 53, no. 3, pp. 365–367, Jun. 2020, doi: 10.1016/j.jmii.2020.02.001.

- D. Han, Q. Liu, and W. Fan, “A new image classification method using CNN transfer learning and web data augmentation,” Expert Systems with Applications, vol. 95, pp. 43–56, Apr. 2018, doi: 10.1016/j.eswa.2017.11.028.

- R. H. Abiyev and M. K. S. Ma’aitah, “Deep Convolutional Neural Networks for Chest Diseases Detection,” Journal of Healthcare Engineering, vol. 2018, pp. 1–11, Aug. 2018, doi: 10.1155/2018/4168538.

- B. Zieliński, A. Plichta, K. Misztal, P. Spurek, M. Brzychczy-Włoch, and D. Ochońska, “Deep learning approach to bacterial colony classification,” PLoS ONE, vol. 12, no. 9, p. e0184554, Sep. 2017, doi: 10.1371/journal.pone.0184554.

- O. K. Oyedotun, E. O. Olaniyi, A. Helwan, and A. Khashman, “Hybrid auto encoder network for iris nevus diagnosis considering potential malignancy,” in 2015 International Conference on Advances in Biomedical Engineering (ICABME), Beirut, Lebanon, Sep. 2015, pp. 274–277. doi: 10.1109/ICABME.2015.7323305.

- A. Krizhevsky, I. Sutskever, and G. E. Hinton, “ImageNet classification with deep convolutional neural networks,” Commun. ACM, vol. 60, no. 6, pp. 84–90, May 2017, doi: 10.1145/3065386.

- F. N. Iandola, S. Han, M. W. Moskewicz, K. Ashraf, W. J. Dally, and K. Keutzer, “SqueezeNet: AlexNet-level accuracy with 50x fewer parameters and <0.5MB model size,” arXiv:1602.07360 [cs], Nov. 2016, Accessed: Jan. 18, 2022. [Online]. Available: http://arxiv.org/abs/1602.07360

- K. Simonyan and A. Zisserman, “Very Deep Convolutional Networks for Large-Scale Image Recognition,” arXiv:1409.1556 [cs], Apr. 2015, Accessed: Jan. 18, 2022. [Online]. Available: http://arxiv.org/abs/1409.1556

- J. Qiu et al., “Going Deeper with Embedded FPGA Platform for Convolutional Neural Network,” in Proceedings of the 2016 ACM/SIGDA International Symposium on Field-Programmable Gate Arrays, Monterey California USA, Feb. 2016, pp. 26–35. doi: 10.1145/2847263.2847265.

- H. Qin, R. Gong, X. Liu, X. Bai, J. Song, and N. Sebe, “Binary neural networks: A survey,” Pattern Recognition, vol. 105, p. 107281, Sep. 2020, doi: 10.1016/j.patcog.2020.107281.

- D. S. Kermany et al., “Identifying Medical Diagnoses and Treatable Diseases by Image-Based Deep Learning,” Cell, vol. 172, no. 5, pp. 1122-1131.e9, Feb. 2018, doi: 10.1016/j.cell.2018.02.010.

- S. Rajaraman, S. Candemir, I. Kim, G. Thoma, and S. Antani, “Visualization and Interpretation of Convolutional Neural Network Predictions in Detecting Pneumonia in Pediatric Chest Radiographs,” Applied Sciences, vol. 8, no. 10, p. 1715, Sep. 2018, doi: 10.3390/app8101715.

- A. K. Jaiswal, P. Tiwari, S. Kumar, D. Gupta, A. Khanna, and J. J. P. C. Rodrigues, “Identifying pneumonia in chest X-rays: A deep learning approach,” Measurement, vol. 145, pp. 511–518, Oct. 2019, doi: 10.1016/j.measurement.2019.05.076.

- P. Rajpurkar et al., “CheXNet: Radiologist-Level Pneumonia Detection on Chest X-Rays with Deep Learning,” arXiv:1711.05225 [cs, stat], Dec. 2017, Accessed: Jan. 18, 2022. [Online]. Available: http://arxiv.org/abs/1711.05225

- K. Ahmed, S. Abdelghafar, A. Salama, N. E. M. Khalifa, A. Darwish, and A. E. Hassanien, “Tracking of COVID-19 Geographical Infections on Real-Time Tweets.” 2021.

- A. A. Abd El-Aziz, N. E. M. Khalifa, A. Darwish, and A. E. Hassanien, “The role of emerging technologies for combating COVID-19 pandemic,” in Digital Transformation and Emerging Technologies for Fighting COVID-19 Pandemic: Innovative Approaches, Springer, 2021, pp. 21–41.

- A. A. Abd El-Aziz, N. E. M. Khalifa, and A. E. Hassanien, “Exploring the Impacts of COVID-19 on Oil and Electricity Industry,” in The Global Environmental Effects During and Beyond COVID-19, Springer, 2021, pp. 149–161.

- N. E. Khalifa, G. Manogaran, M. H. N. Taha, and M. Loey, “The classification of possible Coronavirus treatments on a single human cell using deep learning and machine learning approaches,” Journal of Theoretical and Applied Information Technology, vol. 99, no. 21, 2021.

- N. E. M. Khalifa, G. Manogaran, M. H. N. Taha, and M. Loey, “A deep learning semantic segmentation architecture for COVID-19 lesions discovery in limited chest CT datasets,” Expert Systems, p. e12742, 2021.

- N. E. M. Khalifa, F. Smarandache, G. Manogaran, and M. Loey, “A study of the neutrosophic set significance on deep transfer learning models: An experimental case on a limited covid-19 chest x-ray dataset,” Cognitive Computation, pp. 1–10, 2021.

- N. E. M. Khalifa, M. H. N. Taha, A. E. Hassanien, and S. H. N. Taha, “The Detection of COVID-19 in CT Medical Images: A Deep Learning Approach,” in Big Data Analytics and Artificial Intelligence against COVID-19: Innovation Vision and Approach, Springer, 2020, pp. 73–90.

- T. D. Pham, “Classification of COVID-19 chest X-rays with deep learning: new models or fine tuning?,” Health Inf Sci Syst, vol. 9, no. 1, p. 2, Dec. 2021, doi: 10.1007/s13755-020-00135-3.

- M. M. Taresh, N. Zhu, T. A. A. Ali, A. S. Hameed, and M. L. Mutar, “Transfer Learning to Detect COVID-19 Automatically from X-Ray Images Using Convolutional Neural Networks,” International Journal of Biomedical Imaging, vol. 2021, pp. 1–9, May 2021, doi: 10.1155/2021/8828404.

- S. V J and J. F. D, “Deep Learning Algorithm for COVID-19 Classification Using Chest X-Ray Images,” Computational and Mathematical Methods in Medicine, vol. 2021, pp. 1–10, Nov. 2021, doi: 10.1155/2021/9269173.

- A. I. Khan, J. L. Shah, and M. M. Bhat, “CoroNet: A deep neural network for detection and diagnosis of COVID-19 from chest x-ray images,” Computer Methods and Programs in Biomedicine, vol. 196, p. 105581, Nov. 2020, doi: 10.1016/j.cmpb.2020.105581.

- D. Varshni, K. Thakral, L. Agarwal, R. Nijhawan, and A. Mittal, “Pneumonia Detection Using CNN based Feature Extraction,” in 2019 IEEE International Conference on Electrical, Computer and Communication Technologies (ICECCT), Coimbatore, India, Feb. 2019, pp. 1–7. doi: 10.1109/ICECCT.2019.8869364.

- E. Ayan and H. M. Unver, “Diagnosis of Pneumonia from Chest X-Ray Images Using Deep Learning,” in 2019 Scientific Meeting on Electrical-Electronics & Biomedical Engineering and Computer Science (EBBT), Istanbul, Turkey, Apr. 2019, pp. 1–5. doi: 10.1109/EBBT.2019.8741582.

- V. Chouhan et al., “A Novel Transfer Learning Based Approach for Pneumonia Detection in Chest X-ray Images,” Applied Sciences, vol. 10, no. 2, p. 559, Jan. 2020, doi: 10.3390/app10020559.

- F. Ahmad, A. Farooq, and M. U. Ghani, “Deep Ensemble Model for Classification of Novel Coronavirus in Chest X-Ray Images,” Computational Intelligence and Neuroscience, vol. 2021, pp. 1–17, Jan. 2021, doi: 10.1155/2021/8890226.

- “COVID-19 Radiography Database.” Accessed: Jan. 18, 2022. [Online]. Available: https://www.kaggle.com/tawsifurrahman/covid19-radiography-database

- Y. Zhang, “Support Vector Machine Classification Algorithm and Its Application,” in Information Computing and Applications, vol. 308, C. Liu, L. Wang, and A. Yang, Eds. Berlin, Heidelberg: Springer Berlin Heidelberg, 2012, pp. 179–186. doi: 10.1007/978-3-642-34041-3_27.

- L. A. Breslow and D. W. Aha, “Simplifying decision trees: A survey,” The Knowledge Engineering Review, vol. 12, no. 01, pp. 1–40, Jan. 1997, doi: 10.1017/S0269888997000015.

- X. Dong, Z. Yu, W. Cao, Y. Shi, and Q. Ma, “A survey on ensemble learning,” Front. Comput. Sci., vol. 14, no. 2, pp. 241–258, Apr. 2020, doi: 10.1007/s11704-019-8208-z.

- S. Guhathakurata, S. Kundu, A. Chakraborty, and J. S. Banerjee, “A novel approach to predict COVID-19 using support vector machine,” in Data Science for COVID-19, Elsevier, 2021, pp. 351–364. doi: 10.1016/B978-0-12-824536-1.00014-9.

- V. Singh et al., “Prediction of COVID-19 corona virus pandemic based on time series data using support vector machine,” Journal of Discrete Mathematical Sciences and Cryptography, vol. 23, no. 8, pp. 1583–1597, Nov. 2020, doi: 10.1080/09720529.2020.1784535.

- S. H. Yoo et al., “Deep Learning-Based Decision-Tree Classifier for COVID-19 Diagnosis From Chest X-ray Imaging,” Front. Med., vol. 7, p. 427, Jul. 2020, doi: 10.3389/fmed.2020.00427.

- J. Cervantes, F. Garcia-Lamont, L. Rodríguez-Mazahua, and A. Lopez, “A comprehensive survey on support vector machine classification: Applications, challenges and trends,” Neurocomputing, vol. 408, pp. 189–215, Sep. 2020, doi: 10.1016/j.neucom.2019.10.118.

- O. Sagi and L. Rokach, “Ensemble learning: A survey,” WIREs Data Mining Knowl Discov, vol. 8, no. 4, Jul. 2018, doi: 10.1002/widm.1249.

- S. R. Safavian and D. Landgrebe, “A survey of decision tree classifier methodology,” IEEE Trans. Syst., Man, Cybern., vol. 21, no. 3, pp. 660–674, Jun. 1991, doi: 10.1109/21.97458.

- M. Umair et al., “Detection of COVID-19 Using Transfer Learning and Grad-CAM Visualization on Indigenously Collected X-ray Dataset,” Sensors, vol. 21, no. 17, p. 5813, Aug. 2021, doi: 10.3390/s21175813.