A More Robust Mean Shift Tracker on Joint Color-CLTP Histogram

Authors: Pu Xiaorong, Zhou Zhihu

Journal: International Journal of Image, Graphics and Signal Processing (IJIGSP)

Issue: vol. 4, no. 12, 2012.


A more robust mean shift tracker based on a joint color and Completed Local Ternary Pattern (CLTP) histogram is proposed. CLTP is a generalization of the Local Binary Pattern (LBP) that yields texture features which are more discriminant and less sensitive to noise. The target representation based on the joint color-CLTP histogram exploits the structural information of the target efficiently. To reduce the interference of the background in target localization, a corrected background-weighted histogram and a background update mechanism are adapted to decrease the weights of both prominent background color and texture features similar to the target object. Comparative experimental results on various challenging videos demonstrate that the proposed tracker performs favorably against several variants of state-of-the-art mean shift trackers when heavy occlusions and complex background changes exist.

Keywords: Mean shift, object tracking, Completed Local Ternary Pattern, joint color-CLTP histogram

Short URL: https://sciup.org/15012511

IDR: 15012511


Many efficient object tracking algorithms [1] have been proposed in computer vision in recent years, mostly addressing changing appearance patterns of both the object and the scene, non-rigid object structures, and object-to-object and object-to-scene occlusions. Among the various object tracking methods, the mean shift tracker has attracted the attention of many researchers [2]-[9] due to its simplicity and efficiency.

By using a color histogram to represent the target object, the mean shift tracking algorithm has been shown to be robust to scale changes, rotation and partial occlusion [2] [3]. However, it tends to fail when some of the target features are also present in the background. Comaniciu et al. [3] proposed a background-weighted histogram (BWH) to decrease background interference in the target representation. However, Ning et al. [4] demonstrated that the BWH-based mean shift tracker is equivalent to the conventional mean shift tracker [2], and proposed a corrected background-weighted histogram (CBWH) that actually reduces the interference of the background in target localization. Additionally, modeling the target object with a color histogram alone has the disadvantage that the spatial information of the target object is lost. Therefore, other features, such as edge features [10] and Local Binary Pattern (LBP) texture features [5], have been combined with color. Among these feature-combined mean shift trackers, the joint color-texture histogram proposed by Ning et al. [5] achieves better tracking performance with fewer mean shift iterations and higher robustness.

The Local Binary Pattern (LBP), first introduced by Ojala et al. [11], is a simple yet efficient operator for describing local image patterns. It has achieved great success in computer vision and pattern recognition, for example in face recognition [12], texture classification [13] [14], unsupervised texture segmentation [15] and dynamic texture recognition [16]. However, some issues remain to be investigated. Tan and Triggs [17] proposed Local Ternary Patterns (LTP), which quantize the difference between a pixel and its neighbors into three levels and are therefore less sensitive to noise in near-uniform image regions such as cheeks and foreheads. Guo et al. [18] proposed the Completed Local Binary Pattern (CLBP), in which a local region is represented by its center pixel and a local difference sign-magnitude transform. CLBP preserves the difference magnitude information, which is important for texture classification.

In this paper, a more robust mean shift tracker is presented. We propose a novel texture descriptor, named Completed Local Ternary Pattern (CLTP), which is more discriminant and less sensitive to noise. CLTP is first applied to represent the target texture features and then combined with color to form a distinctive and effective target representation called the joint color-CLTP histogram. To reduce the interference of the background in target localization, the corrected background-weighted histogram and background update mechanism of [4] are adapted to decrease the weights of both prominent background color and texture features similar to the target. Especially under heavy occlusions and complex background changes, the proposed tracker is more robust than several state-of-the-art variants of the mean shift tracker.

The rest of the paper is organized as follows. Section II briefly reviews the conventional mean shift algorithm. Section III discusses the Local Binary Pattern and the Completed Local Ternary Pattern. Section IV presents the mean shift tracker on the joint color-CLTP texture histogram. Section V gives comparative experimental results of the proposed tracker and several state-of-the-art mean shift trackers. Section VI concludes the paper.

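II. CONVENTIONAL MEAN SHIFT ALGORITHM

A. Target Representation

In this section, the target representation based on the color histogram in the conventional mean shift tracker [2] is reviewed. Assume that the target object being tracked is defined by a rectangular or ellipsoidal region in a video frame.

The target model $\hat{q}$ of the tracked object is obtained as

$$\hat{q} = \{\hat{q}_u\}_{u=1,\dots,m}, \qquad \hat{q}_u = C \sum_{i=1}^{n} k\left(\|x_i^*\|^2\right) \delta\left[b(x_i^*) - u\right] \tag{1}$$

where $\hat{q}_u$ represents the probability of feature $u$ in the target model $\hat{q}$, $m$ is the number of feature spaces, $\delta$ is the Kronecker delta function, $\{x_i^*\}_{i=1,\dots,n}$ are the normalized pixel positions in the target region centered at the origin, $b(x_i^*)$ maps the pixel $x_i^*$ to its histogram bin, $k(x)$ is an isotropic kernel profile and the constant $C$ is a normalization factor defined by

$$C = \frac{1}{\sum_{i=1}^{n} k\left(\|x_i^*\|^2\right)} \tag{2}$$

Analogously, the target candidate model $\hat{p}(y)$ of the candidate region is computed as

$$\hat{p}(y) = \{\hat{p}_u(y)\}_{u=1,\dots,m}, \qquad \hat{p}_u(y) = C_h \sum_{i=1}^{n_h} k\left(\left\|\frac{y - x_i}{h}\right\|^2\right) \delta\left[b(x_i) - u\right] \tag{3}$$

$$C_h = \frac{1}{\sum_{i=1}^{n_h} k\left(\left\|\frac{y - x_i}{h}\right\|^2\right)} \tag{4}$$

where $\hat{p}_u(y)$ represents the probability of feature $u$ in the candidate model $\hat{p}(y)$, $\{x_i\}_{i=1,\dots,n_h}$ are the pixel positions in the target candidate region centered at $y$, $h$ is the bandwidth and the constant $C_h$ is a normalization factor.

The Bhattacharyya coefficient is used to measure the similarity between the target model and the candidate model:

$$\rho\left[\hat{p}(y), \hat{q}\right] = \sum_{u=1}^{m} \sqrt{\hat{p}_u(y)\, \hat{q}_u} \tag{5}$$

The distance between the target model and the candidate model is defined as:

$$d\left[\hat{p}(y), \hat{q}\right] = \sqrt{1 - \rho\left[\hat{p}(y), \hat{q}\right]} \tag{6}$$

B. Mean Shift Tracking

Minimizing the distance (6) is equivalent to maximizing the Bhattacharyya coefficient (5). A Taylor expansion around $\hat{p}_u(\hat{y}_0)$ linearly approximates (5) as follows:

$$\rho\left[\hat{p}(y), \hat{q}\right] \approx \frac{1}{2} \sum_{u=1}^{m} \sqrt{\hat{p}_u(\hat{y}_0)\, \hat{q}_u} + \frac{C_h}{2} \sum_{i=1}^{n_h} \omega_i\, k\left(\left\|\frac{y - x_i}{h}\right\|^2\right) \tag{7}$$

where $\hat{y}_0$ is the target location in the previous frame, and

$$\omega_i = \sum_{u=1}^{m} \sqrt{\frac{\hat{q}_u}{\hat{p}_u(\hat{y}_0)}}\, \delta\left[b(x_i) - u\right] \tag{8}$$

The first term in (7) is independent of $y$, so minimizing the distance (6) amounts to maximizing the second term of (7). In the iterative optimization process, the location moves from $\hat{y}_0$ to the new position $\hat{y}_1$ defined as:

$$\hat{y}_1 = \frac{\sum_{i=1}^{n_h} x_i\, \omega_i\, g\left(\left\|\frac{\hat{y}_0 - x_i}{h}\right\|^2\right)}{\sum_{i=1}^{n_h} \omega_i\, g\left(\left\|\frac{\hat{y}_0 - x_i}{h}\right\|^2\right)} \tag{9}$$

where $g(x) = -k'(x)$. For simplicity, the Epanechnikov profile is chosen; its derivative is constant inside the kernel support, so (9) reduces to

$$\hat{y}_1 = \frac{\sum_{i=1}^{n_h} x_i\, \omega_i}{\sum_{i=1}^{n_h} \omega_i} \tag{10}$$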

Iterating (10), the estimated position converges to a fixed point, which is the candidate region most similar to the target.
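The localization loop of (1)-(10) can be illustrated with a short NumPy sketch. It is not the authors' implementation: it assumes the pixels are already quantized into histogram bin indices (the role of $b(\cdot)$), uses the Epanechnikov profile so that (9) reduces to (10), and runs on a synthetic two-bin label image. The helper names, the 17×17 test object, the bandwidth $h = 8$ and the stopping threshold are illustrative choices; the cap of 15 iterations mirrors the default used later in Section IV.

```python
import numpy as np


def epanechnikov_profile(r2):
    """Epanechnikov profile k(x) = 1 - x for x <= 1, else 0 (constant factor dropped)."""
    return np.where(r2 <= 1.0, 1.0 - r2, 0.0)


def kernel_histogram(bins, coords, center, h, m):
    """Kernel-weighted histogram normalized to sum to 1, as in (1)-(4)."""
    r2 = np.sum(((coords - center) / h) ** 2, axis=1)
    hist = np.bincount(bins, weights=epanechnikov_profile(r2), minlength=m)
    return hist / hist.sum()


def bhattacharyya(p, q):
    """Bhattacharyya coefficient, eq (5)."""
    return float(np.sum(np.sqrt(p * q)))


def mean_shift_step(bins, coords, y0, h, q):
    """One iteration: pixel weights omega_i from (8), new location from (10)."""
    p = kernel_histogram(bins, coords, y0, h, len(q))
    ratio = np.zeros_like(p)
    nonzero = p > 0
    ratio[nonzero] = np.sqrt(q[nonzero] / p[nonzero])
    inside = np.sum(((coords - y0) / h) ** 2, axis=1) <= 1.0  # kernel support
    omega = ratio[bins] * inside
    if omega.sum() == 0:  # no target evidence inside the window
        return y0
    return (coords * omega[:, None]).sum(axis=0) / omega.sum()


def track(bins, coords, y0, h, q, eps=0.1, max_iter=15):
    """Iterate (10) until the shift is below eps or max_iter steps were taken."""
    y = np.asarray(y0, dtype=float)
    for _ in range(max_iter):
        y_new = mean_shift_step(bins, coords, y, h, q)
        if np.linalg.norm(y_new - y) < eps:
            return y_new
        y = y_new
    return y


# Synthetic frame: background pixels in bin 0, a 17x17 object in bin 1 centred at (29, 39).
labels = np.zeros((60, 60), dtype=int)
labels[21:38, 31:48] = 1
rows, cols = np.indices(labels.shape)
coords = np.stack([rows.ravel(), cols.ravel()], axis=1).astype(float)
bins = labels.ravel()

h = 8.0
center = np.array([29.0, 39.0])
q = kernel_histogram(bins, coords, center, h, m=2)  # target model
start = np.array([24.0, 34.0])                      # location from the previous frame
y_final = track(bins, coords, start, h, q)
rho_start = bhattacharyya(kernel_histogram(bins, coords, start, h, 2), q)
rho_final = bhattacharyya(kernel_histogram(bins, coords, y_final, h, 2), q)
print(y_final, round(rho_start, 3), round(rho_final, 3))
```

Because this toy target model puts all of its mass in the object bin, background pixels get zero weight in (8), so each step moves the window toward the centroid of the object pixels it covers; with real color histograms the weights are graded rather than all-or-nothing.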

III. LOCAL BINARY PATTERN AND COMPLETED LOCAL TERNARY PATTERN

A. Brief Review of Local Binary Pattern and Its Variants

The LBP operator [14] labels each pixel of an image by thresholding its neighborhood with the center value and reading the result as a binary number (binary pattern). The general version of the LBP operator is defined as:

$$LBP_{P,R}(x_c, y_c) = \sum_{p=0}^{P-1} s(g_p - g_c)\, 2^p$$

$$s(x) = \begin{cases} 1, & x \ge 0 \\ 0, & x < 0 \end{cases}$$

where $g_c$ is the gray value of the central pixel $(x_c, y_c)$, $g_p$ is the gray value of its neighbors, $P$ is the total number of involved neighbors and $R$ is the radius of the neighborhood.

The "uniform" LBP patterns, denoted by $LBP^{u2}$, are characterized by a uniformity measure $U$, defined as the number of bitwise 0/1 transitions in the circular pattern; a pattern is uniform if $U \le 2$:

$$U(LBP_{P,R}) = \left| s(g_{P-1} - g_c) - s(g_0 - g_c) \right| + \sum_{p=1}^{P-1} \left| s(g_p - g_c) - s(g_{p-1} - g_c) \right|$$
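A minimal sketch of the LBP operator, its sign function and the uniformity measure $U$ for $P = 8$, $R = 1$. To stay short it samples the 3×3 square neighborhood directly instead of interpolating points on a circle, and the neighbor order and the toy block are assumptions. It also implements the standard rotation invariant uniform mapping ($LBP^{riu2}$: a uniform pattern is labeled by its number of 1-bits, every other pattern by $P + 1$) so that the label counts used in this section can be checked: 58 uniform patterns for $P = 8$ (hence $P(P-1)+3 = 59$ outputs for $LBP^{u2}$, with one shared label for all non-uniform patterns) and $P + 2 = 10$ riu2 labels.

```python
import numpy as np

# Neighbour offsets for P = 8, R = 1 on the square 3x3 grid (no interpolation),
# in circular order starting from the right-hand neighbour (assumed ordering).
OFFSETS = [(0, 1), (-1, 1), (-1, 0), (-1, -1), (0, -1), (1, -1), (1, 0), (1, 1)]


def lbp_bits(block):
    """Sign bits s(g_p - g_c): 1 if g_p >= g_c, else 0."""
    gc = block[1, 1]
    return [1 if block[1 + dr, 1 + dc] >= gc else 0 for dr, dc in OFFSETS]


def lbp_code(bits):
    """LBP_{P,R} = sum_p s(g_p - g_c) * 2^p."""
    return sum(b << p for p, b in enumerate(bits))


def uniformity(bits):
    """U: number of 0/1 transitions in the circular bit string."""
    # For p = 0, bits[p - 1] wraps around to bits[P - 1].
    return sum(bits[p] != bits[p - 1] for p in range(len(bits)))


def lbp_riu2(bits):
    """Rotation invariant uniform label: number of 1-bits if U <= 2, else P + 1."""
    return sum(bits) if uniformity(bits) <= 2 else len(bits) + 1


block = np.array([[6, 5, 2],
                  [7, 6, 1],
                  [9, 8, 7]])
bits = lbp_bits(block)
print(bits, lbp_code(bits), uniformity(bits), lbp_riu2(bits))

# Label counts over all 256 patterns for P = 8.
all_bits = [[(c >> p) & 1 for p in range(8)] for c in range(256)]
n_uniform = sum(uniformity(b) <= 2 for b in all_bits)
n_riu2_labels = len({lbp_riu2(b) for b in all_bits})
print(n_uniform, n_riu2_labels)
```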

Recent years, many variants of LBP have emerged, such as Local Tenary Patterns (LTP) [17], Completed LBP [18], Dominant LBP [19], MONOGENIC-LBP [20], Multimodal LBP [21], Sobel-LBP [22]. Among numerous variants of LBP, CLBP and LTP are outstanding because of its significantly improved texture classification accuracy. CLBP has three parts: CLBP_Center (CLBP_C), CLBP-Sign (CLBP_S) and CLBP_Magnitude (CLBP_M). CLBP_C is formed by converting the center pixel into a binary code after global thresholding, CLBP_S is obtained by converting the local difference signs into an 8-bit binary code, and CLBP_M is gained by coding the difference magnitudes as an 8-bit binary code. Experimentally, the CLBP could achieve much better rotation invariant texture classification results than conventional LBP based schemes. More details of CLBP can be referred in [18]. LTP quantizes the difference between a pixel and its neighbours into three levels, and then each ternary pattern is split into its positive and negative halves. More details of LTP can be seen in [17].

-

B. Completed Local Tenary Patterns

Given a central pixel g , its P circularly and evenly spaced neighbors gp (p = [0,1,-•♦,P-1]), and its radius of the neighborhood R . The local difference between g, and gp is denoted by dp = gp - g, , which can be viewed as two components: dp = 5p * mp ,

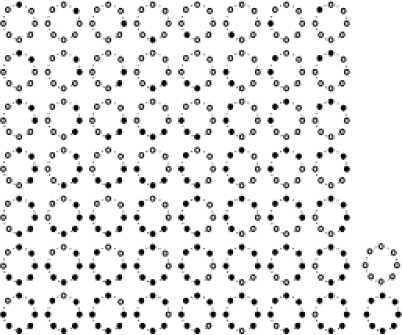

It has p* ( p - 1 ) + 3 distinct output values. Fig.1 shows all uniform patterns for P=8.

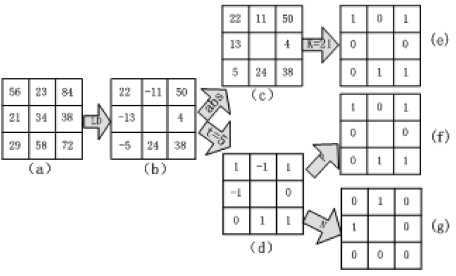

where s

' 1, d p > ° \- 1, d p < °

and m

. To construct

Figure 1.Uniform LBP patterns when P=8. The black and white dots represent the bit values of 1 and 0 in the 8-bit output of the LBP operator

Completed Local Ternary Patterns, s is rewritten as:

- 1 , dp <- 1

As ref. [17], a coding scheme that splits each ternary pattern into its positive and negative halves is applied. Thus, CLTP operator consists of three operators: CLTP_P (CLTP Positive operator), CLTP_N (CLTP Negative operator), and CLTP_M (CLTP Magnitude operator). They are defined as follows, respectively:

EP—\ f 1, x > к n 5. (mB, к)2p, 5. (x, к) = , (16)

p = ° 1 p 1 [ °, otherwise

Further, a locally rotation invariant“uniform” pattern is defined as:

CLTP _ P p , R = 2 P - ° 5 2 ( d p , t )2 p , 5 2 ( X , t ) =

1, X > t

0, otherwise

LBP Pr , iuR 2

/2 P = ° 5 ( g p - g , )ifU ( LBP p , R ) < 2

P + 1 otherwise

CLTP _ N P , R

= 2 P - ° 5 з ( d p , t )2 p , 5 3 ( X , t ) =

1, X <- 1

0, otherwise

which has P + 2 distinct output values.

where t is a threshold value, and к is an adaptively determined threshold as the mean value of m .
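The three CLTP codes can be computed directly from a vector of local differences. The sketch below, a NumPy illustration rather than the authors' code, applies (15)-(18) to the differences of the Fig. 2 example with $t = 5$ and writes the bits from $p = 0$ on the left, which is the order that reproduces the codes quoted for Fig. 2. The exact mean magnitude is $\kappa = 20.875$, which the Fig. 2 caption rounds to 21; both values give the same magnitude code here. The function names are hypothetical.

```python
import numpy as np


def cltp(diffs, t=5):
    """CLTP bits of one neighbourhood from its local differences d_p = g_p - g_c."""
    d = np.asarray(diffs, dtype=float)
    m = np.abs(d)                                          # magnitude components m_p
    kappa = m.mean()                                       # adaptive threshold: mean of m_p
    ternary = np.where(d > t, 1, np.where(d < -t, -1, 0))  # eq (15)
    mag = (m >= kappa).astype(int)                         # s_1, eq (16)
    pos = (d > t).astype(int)                              # s_2, eq (17)
    neg = (d < -t).astype(int)                             # s_3, eq (18)
    return ternary, mag, pos, neg, kappa


def code_value(bits):
    """Decimal code sum_p bit_p * 2^p, the weighting used in (16)-(18)."""
    return int(np.dot(bits, 2 ** np.arange(len(bits))))


def bit_string(bits):
    """Bits written left to right starting at p = 0."""
    return "".join(str(b) for b in bits)


# Local differences of the Fig. 2 example (central pixel 25), threshold t = 5.
ternary, mag, pos, neg, kappa = cltp([4, 38, 24, -5, -13, 22, -11, 50], t=5)
print(ternary.tolist(), kappa)
print(bit_string(mag), bit_string(pos), bit_string(neg))
print(code_value(mag), code_value(pos), code_value(neg))
```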

equation (1):

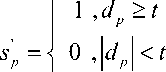

Fig.2 shows an example of the CLTP. Fig.2(a) is the original 3 x 3 local sample block with central pixel being 25. The difference vector is [ 4,38,24, - 5, - 13,22, - 11,50 ] , as shown in Fig.2(b). Absolute value of the difference vector is the local magnitude vector [ 4, 38,24,5,13,22,11,50 ] , as shown in Fig.2(c). By equation (15) with t = 5, Ternary code vector [ 0,1,1,0, - 1,1, - 1,1 ] (Fig.2(d)) is obtained, and then each ternary pattern is split into positive halves named cltp p ositive code of 01100101 (f ig .2( f )) and negative halves named CLTP Negative code of 00001010 (f ig .2( g )). f inally , cltp m agnitude code of 01100101 (f ig .2( e )) can be obtained by equation(16).

C w ={ C u. } w uw

Cuw = CZ k (llx* I ) 5 [bw (xi ) - uw ] =1

i = 1

Figure 2.CLTP(P=8,R=1): (a) a 3 X 3 sample block ;(b) the local differences; (c) magnitude components;(d) ternary code; (e) CLTP Magnitude code;(f) CLTP Positive code (g) CLTP Negative code. LD means local difference operator, abs represents absolute operator, P and N means positive operator and negative operator respectively, threshold of CLTP Magnitude (к = 21) is the mean value of magnitude components p (p =[0,1, ,7]) and set the threshold as t = 5 .

where w = 1,2,3 . q represents the probabilities of w feature u in target model qˆ (i.e. qˆ , qˆ and qˆ corresponds to joint RGB-CLTP_M histogram, joint RGB-CLTP_P histogram, joint RGB-CLTP_N histogram respectively). b(x*) maps the pixel x* to the corresponding histogram bin, m = N x Nr x N, x N is the number of joint

RGBp feature spaces , NR x NG x NB represents the quantized bins of color channels and N represents the bins of the CLTP texture features.

Similarly, the target candidate model pˆ ( y )

corresponding to the candidate region is given by:

p w ( У ) = { p . . ( y ) } = 1

nh puw (У) = Ch V k i=1

5 [ b w ( x i ) - u w

B. Corrected Background-Weighted Histogram and Background Model Updating Mechanism

Similar to the definitions of LBPriu 2 and

CLTP M riu 2 , both CLTP Priu 2 and CLTP N riu 2

P,R P,R P,R can also be defined to achieve rotation invariant classification.

The idea of corrected background-weighted histogram and background model updating mechanism in [4] is adapted to reduce the interference of background that has color and texture features similar to the tracked target. The background is represented as { o)u } with ( V m 5 = 1, w = 1,2,3 ) and it is

1 w uw =1“ m ^-^г-1 w calculated by the surrounding area of the target. Transformation between the representations of target model and target candidate model is defined by:

{ vM = min ( <) * /<) ,1 ) } uww uw

-

IV. Mean Shift Tracking with Joint of Color and CLTP Texture Histogram

-

A. Target Representation Using Joint of Color and CLTP Texture Histogram

To model the target efficiently, rotation invariant

CLTP patterns ( CLTP _ Mriu 2 , CLTP _ Priu 2 and

P , R P , R

CLTP _ N riu 2 ) are firstly obtained. Then joint RGB-

CLTP_M histogram, joint RGB-CLTP_P histogram and joint RGB-CLTP_N histogram are reaped by rewritten

* where o

w

is the minimal non-zero value in

Hence the target model is defined as:

c w={cu,J , w uw =1“ m

{ o u w } u w

.

1 m

<) u w = C w V u w Z k (| x i ) 5 [ b w ( X ) - u w ]

i = 1

where C ' w

nm

Z k (|| x *| ) Z v u „ 5 [ b w ( x i ) - u w ] i = 1 X ’ u = 1

and then a new weight formula is computed as:

m toW,i = Z 71 'wjpuw (У)5 [bw (x ) - Uw ] (23)

w . = 1

In the tracking process, the background will often change due to the variations of illumination, viewpoint, occlusion and scene content etc. Therefore, it is necessary to dynamically update the background model for robust tracking. Here, a simple background model updating mechanism is proposed. Assume that we have acquired the background features {oi } and v . ) w. =1«« m

{ v w . } . ™

=1

the previous frame. The background w.=1-- ml features {0) } v . w.. =1-- m

w and {vU. } , w.=1

are firstly obtained

in the current frame. Then Bhattacharyya similarity between {0) } and the old background model v u—ww =1“ m w the target object in the new frame accurately and robustly. The scheme of the approach can be designed as:

-

1) Initialize the position y of the target candidate region and set the iteration number k = 0 , the maximum iteration number N = 15 , the

position error threshold ^ (default value: 0.1 ) and the background model update threshold ^ (default value: 0.5 ).

Calculate the target model by (19) and the background-weighted histogram {<5 } , v w. ) w. =1«« m then compute {vu } by (21) and

-

7 w. =1-- m

transformed target model qˆ ' by (22) in the previous frame.

-

3) In the current frame, calculate the distribution of the target candidate model pˆ ( y ) .

'

-

4) Calculate the weights ®vi via (23).

- } w .

is computed by : 1 m

-

5) Calculate the new position y of the target candidate region using (26) and (27).

m p- = Z Vow?w. (24)

w . = 1

p = Цд + Л ( ap 2 + a p 3 )) (25)

If p is smaller than a threshold ^ when there are considerable changes in the background, {5} should be updated by {) } and v U — w. =1“ m v . ) w. =1“ ml

{ v w . } be replaced by { v w . } .

-

7 w. =1-- m 7 w. =1-- m

C. Tracking Using Joint of Color and CLTP Texture Histogram

In the iterative process of tracking, the estimated target moves from y to a new position y is defined as:

-

6) Set d ^| \ y.w - У o|| , y 0 ^ У пе. , k ^ k + 1 .

If d < ^ or k > N

Calculate { 0) У and { v,. } of tracked

1 w. J w w = 1- m t w.)

. w.=1 ml object in the current frame, and then obtain p based on (24) and (25). If p is smaller than ^ , then update

{ 0- - } w . = 1- ^ 1 0w =W • { ^' u . } w.. ^

{ v w „,} , and update <) . by (22).

7 w. =1-- m

Stop and go to Step 7.

else go to step(3)

-

7) Set the next frame as the current frame with initial location y and go to Step 1.

yw

Z i = ,

x ® .

Z i = 1

®

w , i

У пе. = ( ^ 1 У 1 + ^ 2 ( а 1 У 2 + a 2 У 3 )) (27)

where the parameters ^ , ^ , a and a i" equation (27) are set by the same way as in equation(23).

By equation (27), the proposed approach can explore
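Steps 2 and 6 rely on the background weighting of Section IV.B. The NumPy sketch below, using toy four-bin histograms, illustrates (21), the transformed model of (22), the background similarity (24) and the update decision for a single feature channel; in the tracker, $\rho$ combines the three channels via (25) before being compared with $\varepsilon_2$. Because $\hat{q}_{u_w}$ already contains the kernel sum of (19), (22) is equivalent to multiplying $\hat{q}_{u_w}$ by $v_{u_w}$ and renormalizing, which is what the hypothetical helper `transformed_model` does. Empty background bins get $v_{u_w} = 1$, which follows from the minimum in (21); all helper names are assumptions.

```python
import numpy as np


def cbwh_weights(o):
    """v_u = min(o*/o_u, 1) from (21); bins with o_u = 0 get v_u = 1."""
    o = np.asarray(o, dtype=float)
    o_star = o[o > 0].min()  # minimal non-zero background bin value
    ratio = np.divide(o_star, o, out=np.full_like(o, np.inf), where=o > 0)
    return np.minimum(ratio, 1.0)


def transformed_model(q, v):
    """q'_u proportional to v_u * q_u, renormalized; equivalent to (22)."""
    qv = np.asarray(v, dtype=float) * np.asarray(q, dtype=float)
    return qv / qv.sum()


def background_similarity(o_old, o_new):
    """Bhattacharyya similarity between old and new background histograms, (24)."""
    return float(np.sum(np.sqrt(np.asarray(o_old) * np.asarray(o_new))))


def maybe_update(rho, eps2, old, new):
    """Return (model, updated): the new background model is adopted when rho < eps2."""
    return (new, True) if rho < eps2 else (old, False)


o = np.array([0.5, 0.25, 0.25, 0.0])  # toy background histogram of one channel
q = np.array([0.4, 0.3, 0.2, 0.1])    # toy target histogram of the same channel
v = cbwh_weights(o)
q_prime = transformed_model(q, v)
print(v, q_prime)

o_new = np.array([0.0, 0.5, 0.5, 0.0])  # background observed in the current frame
rho_w = background_similarity(o, o_new)
state, updated = maybe_update(rho_w, 0.5, old=(o, v), new=(o_new, cbwh_weights(o_new)))
print(round(rho_w, 4), updated)
```

The most prominent background bin (bin 0) is down-weighted by half, so the target model shifts its mass toward features that are rare in the background.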

V. Comparative Experiments with State-of-the-Art Mean Shift Algorithms

To evaluate the effectiveness of the proposed method, we carried out a series of experiments on five challenging public video sequences: the Table Tennis sequence from [4], the Camera sequence from the PETS2001 database [23], the Bird2 sequence from [24], the Walking Woman sequence from [25] and the Panda sequence from [26]. We compare the proposed tracker with three state-of-the-art mean shift trackers: the mean shift tracker with background-weighted histogram (MS_BWH) [3], the mean shift tracker with corrected background-weighted histogram (MS_CBWH) [4] and the mean shift tracker using the joint color-LBP texture histogram (MS_RGBLBP) [5]. For comparison, the objects tracked by MS_BWH, MS_CBWH, MS_RGBLBP and the proposed approach are marked by white, yellow, red and green rectangles, respectively.

In the proposed approach and MS_RGBLBP, we set $N_R \times N_G \times N_B \times N_P = 8 \times 8 \times 8 \times 10$. In MS_BWH and MS_CBWH, we set $N_R \times N_G \times N_B = 8 \times 8 \times 8$.
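Section V fixes the number of joint bins but not how a pixel is mapped to one of them. The sketch below shows one possible $b_w(\cdot)$ for (19) under assumptions the paper does not state: 8-bit RGB values are quantized uniformly into 8 levels per channel, the texture map already holds $\mathrm{CLTP}^{riu2}$ labels $0, \dots, 9$ ($N_P = P + 2 = 10$ for $P = 8$), and bins are laid out in row-major (R, G, B, texture) order, giving $8 \times 8 \times 8 \times 10 = 5120$ bins. Kernel weights as in (19) can be passed through the optional `weights` argument; the function names are hypothetical.

```python
import numpy as np

N_R = N_G = N_B = 8  # color levels per channel (Section V)
N_P = 10             # CLTP riu2 labels for P = 8, i.e. P + 2


def joint_bin(r, g, b, texture):
    """Joint RGB-texture bin index; uniform quantization and row-major layout are assumptions."""
    qr = np.asarray(r, dtype=np.int64) * N_R // 256  # cast first: uint8 * 8 would overflow
    qg = np.asarray(g, dtype=np.int64) * N_G // 256
    qb = np.asarray(b, dtype=np.int64) * N_B // 256
    return ((qr * N_G + qg) * N_B + qb) * N_P + np.asarray(texture, dtype=np.int64)


def joint_histogram(rgb, texture, weights=None):
    """Normalized joint color-texture histogram with N_R * N_G * N_B * N_P bins."""
    idx = joint_bin(rgb[..., 0], rgb[..., 1], rgb[..., 2], texture).ravel()
    w = None if weights is None else np.asarray(weights, dtype=float).ravel()
    hist = np.bincount(idx, weights=w, minlength=N_R * N_G * N_B * N_P)
    return hist / hist.sum()


rng = np.random.default_rng(0)
rgb = rng.integers(0, 256, size=(20, 20, 3)).astype(np.uint8)  # toy image patch
tex = rng.integers(0, N_P, size=(20, 20))                       # toy CLTP riu2 labels
hist = joint_histogram(rgb, tex)
print(hist.shape, joint_bin(255, 255, 255, 9))
```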

The increment of the search region size is 5 pixels, and the threshold $t$ in CLTP is manually set to 5. The background region is three times the size of the target. Since Guo et al. [18] showed mathematically that the local difference reconstruction errors made by using $s_p$ and $m_p$ are $E_s = X^2$ and $E_m = 4X^2$, respectively, we set $\lambda_1 = 0.2$, $\lambda_2 = 0.8$ and $\alpha_1 = \alpha_2 = 0.5$.
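With these settings, (25) and (27) are the same convex combination: weight 0.2 for the magnitude channel and $0.8 \times 0.5 = 0.4$ each for the positive and negative channels. A small sketch with made-up per-channel positions and similarities, using $\varepsilon_2 = 0.5$ from Step 1; the helper name `fuse` is hypothetical.

```python
import numpy as np

LAMBDA1, LAMBDA2 = 0.2, 0.8  # magnitude channel vs. sign (positive/negative) channels
ALPHA1, ALPHA2 = 0.5, 0.5    # positive vs. negative channel


def fuse(x_m, x_p, x_n):
    """lambda1 * x_M + lambda2 * (alpha1 * x_P + alpha2 * x_N), the form of (25) and (27)."""
    return LAMBDA1 * np.asarray(x_m, dtype=float) + LAMBDA2 * (
        ALPHA1 * np.asarray(x_p, dtype=float) + ALPHA2 * np.asarray(x_n, dtype=float))


# Made-up positions from the RGB-CLTP_M, RGB-CLTP_P and RGB-CLTP_N histograms, eq (26).
y_new = fuse([10.0, 20.0], [12.0, 20.0], [14.0, 24.0])  # eq (27)
rho = fuse(0.9, 0.4, 0.5)                                # eq (25), made-up rho_w values
update_background = rho < 0.5                            # epsilon_2 default from Step 1
print(y_new, rho, update_background)
```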


A. Comparative results on Table Tennis sequence (simple background, no occlusions).

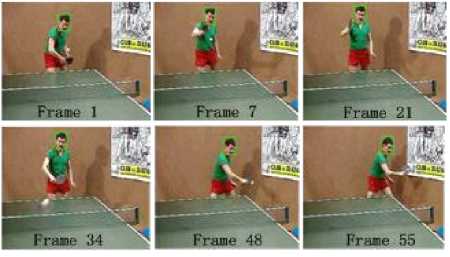

The target to be tracked is the moving head of the player. Tracking results of the proposed approach are shown in Fig.3. All trackers can locate the target accurately, since the color differences between the target and the background are distinctive and the background has simple structural information.

The Table Tennis entries of Table 1 list the total and average numbers of mean shift iterations of the four methods. The proposed method needs the fewest iterations (167 in total, 2.93 per frame on average), slightly fewer than MS_CBWH (172 and 3.02).

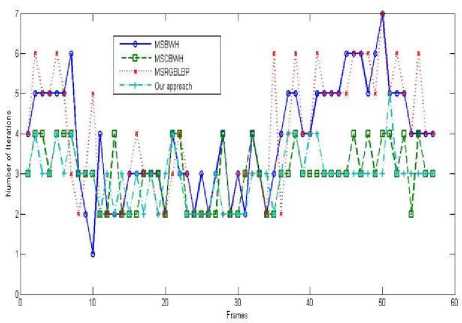

Fig. 4 compares the number of iterations in every frame. In most frames the proposed approach needs fewer iterations than MS_BWH and MS_RGBLBP, and it performs as well as MS_CBWH.

Figure 3. Tracking results of the proposed method on the Table Tennis video sequence. Frames 1, 7, 21, 34, 48 and 55 are displayed.

Figure 4. Number of iterations on the Table Tennis sequence.


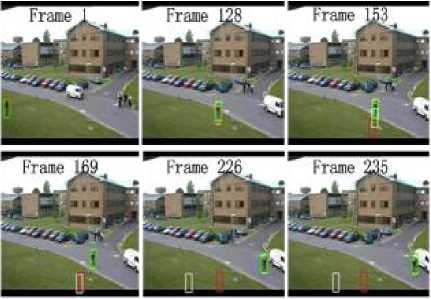

B. Comparative results on Camera sequence (background change, no occlusions).

The target is a walking man, a small and blurred object of interest, and the background changes during the sequence. Tracking results of the four methods are shown in Fig. 5. All the methods accurately locate the target in the first 150 frames. However, when the background changes from grass to road, neither MS_BWH nor MS_RGBLBP can locate the target, while both MS_CBWH and the proposed approach still track it accurately.

The Camera entries of Table 1 list the total and average numbers of mean shift iterations of the four methods: the proposed approach needs fewer iterations than MS_BWH and MS_RGBLBP (454 versus 492 and 509) and only slightly more than MS_CBWH (428).

Figure 5. Tracking results of the four methods on the Camera video sequence. Frames 1, 128, 153, 169, 226 and 235 are displayed.


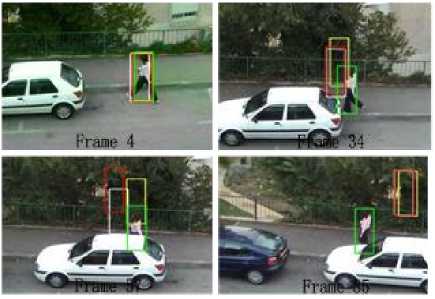

C. Comparative results on Bird2 video sequence (no background change, heavy occlusions)

The target is a flying bird that undergoes heavy occlusions. Fig. 6 shows the tracking results of the four methods. MS_BWH and MS_RGBLBP drift away from the target into background regions, whereas MS_CBWH and the proposed approach can still locate the target when heavy occlusion occurs. Moreover, the proposed approach is more stable and accurate than MS_CBWH when occlusion appears in frames 46 and 64.

The Bird2 entries of Table 1 give the numbers of iterations of MS_CBWH and the proposed approach (no counts are reported for MS_BWH and MS_RGBLBP); the proposed approach needs slightly fewer iterations (350 versus 357).

Figure 6. Tracking results of the four methods on the Bird2 video sequence. Frames 4, 16, 46 and 93 are displayed.


D. Comparative results on Walking Woman video sequence (complex background changes, heavy occlusions)

The target is a walking woman who undergoes heavy occlusions amid complex background changes. Tracking results of the four methods are shown in Fig. 7. Because the background changes and heavy occlusion occurs during tracking, only the proposed approach keeps tracking the target, while MS_BWH, MS_CBWH and MS_RGBLBP all lose it. This suggests that the joint color-CLTP histogram is more discriminant and exploits the target structural information more efficiently than the color histogram and the joint color-LBP histogram.

TABLE 1. Numbers of mean shift iterations of the four methods

| Video Sequence | Frames | Method | Total Number of Iterations | Average Number of Iterations |
|---|---|---|---|---|
| Table Tennis | 58 | MS_BWH | 220 | 3.86 |
| | | MS_CBWH | 172 | 3.02 |
| | | MS_RGBLBP | 222 | 3.89 |
| | | Our approach | 167 | 2.93 |
| Camera | 145 | MS_BWH | 492 | 3.42 |
| | | MS_CBWH | 428 | 2.97 |
| | | MS_RGBLBP | 509 | 3.53 |
| | | Our approach | 454 | 3.15 |
| Bird2 | 99 | MS_BWH | n/a | n/a |
| | | MS_CBWH | 357 | 3.64 |
| | | MS_RGBLBP | n/a | n/a |
| | | Our approach | 350 | 3.57 |
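The averages in Table 1 are consistent with dividing each total by the number of frames minus one, presumably because the first frame only initializes the target model; this is an inference from the numbers, not something the text states. A quick check:

```python
# (sequence, frames, method, total iterations, reported average) from Table 1
TABLE_1 = [
    ("Table Tennis", 58, "MS_BWH", 220, 3.86),
    ("Table Tennis", 58, "MS_CBWH", 172, 3.02),
    ("Table Tennis", 58, "MS_RGBLBP", 222, 3.89),
    ("Table Tennis", 58, "Our approach", 167, 2.93),
    ("Camera", 145, "MS_BWH", 492, 3.42),
    ("Camera", 145, "MS_CBWH", 428, 2.97),
    ("Camera", 145, "MS_RGBLBP", 509, 3.53),
    ("Camera", 145, "Our approach", 454, 3.15),
    ("Bird2", 99, "MS_CBWH", 357, 3.64),
    ("Bird2", 99, "Our approach", 350, 3.57),
]

for seq, frames, method, total, reported in TABLE_1:
    per_frame = total / frames          # divide by all frames
    per_tracked = total / (frames - 1)  # exclude the initial frame
    print(f"{seq:<12} {method:<13} {per_frame:.2f} {per_tracked:.2f} reported {reported:.2f}")

# True when every reported average equals total / (frames - 1) to two decimals.
matches = all(abs(total / (frames - 1) - reported) < 0.005
              for _, frames, _, total, reported in TABLE_1)
print(matches)
```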


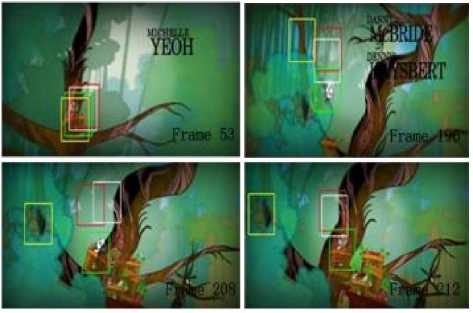

E. Comparative results on Panda video sequences (background changes, heavy occlusions, large in-plane rotations and fast movement)

The target is a cartoon panda that undergoes heavy occlusions, background changes, large in-plane rotations and fast movement. Tracking results of the four methods are shown in Fig. 8. Because of the rotation invariant target models and the fast convergence of mean shift, all the methods can handle large in-plane rotations and fast movement when there is no occlusion. However, as seen in frames 196, 208 and 212, the other three trackers drift away after the target undergoes heavy occlusion and background changes at the same time, whereas the proposed method performs well throughout the sequence. This also suggests that the joint color-CLTP histogram is more powerful than the color histogram and the joint color-LBP histogram.

Figure 7. Tracking results of the four methods on the Walking Woman video sequence. Frames 4, 34, 57 and 85 are displayed.

Figure 8. Tracking results of the four methods on the Panda cartoon video sequence. Frames 53, 196, 208 and 212 are displayed.

VI. Conclusion

In this paper, a more robust mean shift tracker based on a more distinctive and effective target model is proposed. First, a novel texture descriptor, the Completed Local Ternary Pattern (CLTP), which is more discriminant and less sensitive to noise, is proposed to represent the target structural information. Then, a new target representation, the joint color-CLTP histogram, is presented to effectively distinguish the foreground target from its background. Finally, the corrected background-weighted histogram and background updating mechanism are adapted to reduce the interference of background regions whose color and texture features are similar to those of the target object. Experimental results on five challenging video sequences demonstrate that the proposed tracker performs favorably against existing variants of state-of-the-art mean shift trackers.

Acknowledgment

The authors thank the National Science Foundation of China for its support of the project "Vision Cognition and Intelligent Computation on Facial Expression" under Grant 60973070.

References

[1] A. Yilmaz, O. Javed and M. Shah, "Object Tracking: A Survey," ACM Computing Surveys, vol. 38, no. 4, Article 13, 2006.

[2] D. Comaniciu, V. Ramesh and P. Meer, "Real-Time Tracking of Non-Rigid Objects Using Mean Shift," Proc. IEEE Conf. Computer Vision and Pattern Recognition, pp. 142-149, 2000.

[3] D. Comaniciu, V. Ramesh and P. Meer, "Kernel-Based Object Tracking," IEEE Trans. Pattern Analysis and Machine Intelligence, vol. 25, no. 5, pp. 564-577, 2003.

[4] J. Ning, L. Zhang, D. Zhang and C. Wu, "Robust Mean Shift Tracking with Corrected Background-Weighted Histogram," IET Computer Vision, 2010.

[5] J. Ning, L. Zhang, D. Zhang and C. Wu, "Robust Object Tracking Using Joint Color-Texture Histogram," International Journal of Pattern Recognition and Artificial Intelligence, vol. 23, no. 7, pp. 1245-1263, 2009.

[6] G. Bradski, "Computer Vision Face Tracking for Use in a Perceptual User Interface," Intel Technology Journal, Q2, 1998.

[7] J. Ning, L. Zhang, D. Zhang and C. Wu, "Scale and Orientation Adaptive Mean Shift Tracking," IET Computer Vision, 2011.

[8] Q. A. Nguyen, A. Robles-Kelly and C. Shen, "Enhanced Kernel-Based Tracking for Monochromatic and Thermographic Video," Proc. IEEE Conf. Video and Signal Based Surveillance, pp. 28-33, 2006.

[9] C. Yang, R. Duraiswami and L. Davis, "Efficient Mean-Shift Tracking via a New Similarity Measure," Proc. IEEE Conf. Computer Vision and Pattern Recognition, pp. 176-183, 2005.

[10] I. Haritaoglu and M. Flickner, "Detection and Tracking of Shopping Groups in Stores," Proc. IEEE Conf. Computer Vision and Pattern Recognition, Kauai, Hawaii, pp. 431-438, 2001.

[11] T. Ojala, M. Pietikäinen and T. Mäenpää, "Multiresolution Gray-Scale and Rotation Invariant Texture Classification with Local Binary Patterns," IEEE Trans. Pattern Analysis and Machine Intelligence, vol. 24, no. 7, pp. 971-987, 2002.

[12] T. Ahonen, A. Hadid and M. Pietikäinen, "Face Description with Local Binary Patterns: Application to Face Recognition," IEEE Trans. Pattern Analysis and Machine Intelligence, vol. 28, no. 12, pp. 2037-2041, 2006.

[13] T. Ojala, M. Pietikäinen and D. Harwood, "A Comparative Study of Texture Measures with Classification Based on Feature Distributions," Pattern Recognition, vol. 29, no. 1, pp. 51-59, 1996.

[14] T. Ojala, T. Mäenpää, M. Pietikäinen, J. Viertola, J. Kyllönen and S. Huovinen, "Outex - New Framework for Empirical Evaluation of Texture Analysis Algorithms," Proc. Int'l Conf. Pattern Recognition, pp. 701-706, 2002.

[15] T. Ojala and M. Pietikäinen, "Unsupervised Texture Segmentation Using Feature Distributions," Pattern Recognition, vol. 32, pp. 477-486, 1999.

[16] G. Zhao and M. Pietikäinen, "Dynamic Texture Recognition Using Local Binary Patterns with an Application to Facial Expressions," IEEE Trans. Pattern Analysis and Machine Intelligence, vol. 29, no. 6, pp. 915-928, 2007.

[17] X. Tan and B. Triggs, "Enhanced Local Texture Feature Sets for Face Recognition Under Difficult Lighting Conditions," IEEE Trans. Image Processing, vol. 19, no. 6, pp. 1635-1650, 2010.

[18] Z. Guo, L. Zhang and D. Zhang, "A Completed Modeling of Local Binary Pattern Operator for Texture Classification," IEEE Trans. Image Processing, vol. 19, no. 6, pp. 1657-1663, June 2010.

[19] S. Liao, M. K. Law and A. S. Chung, "Dominant Local Binary Patterns for Texture Classification," IEEE Trans. Image Processing, vol. 18, no. 5, pp. 1107-1118, May 2009.

[20] L. Zhang, L. Zhang, Z. Guo and D. Zhang, "Monogenic-LBP: A New Approach for Rotation Invariant Texture Classification," Proc. Int'l Conf. Image Processing, pp. 2677-2680, 2010.

[21] R. M. N. Sadat and S. W. Teng, "Texture Classification Using Multimodal Invariant Local Binary Pattern," Proc. IEEE Workshop on Applications of Computer Vision, pp. 315-320, 2011.

[22] S. Zhao, Y. Gao and B. Zhang, "Sobel-LBP," Proc. 15th IEEE Int'l Conf. Image Processing, pp. 2144-2147, 2008.

[23] PETS2001, http://www.cvg.rdg.ac.uk/pets2001/

[24] S. Wang, H. Lu, F. Yang and M. Yang, "Superpixel Tracking," Proc. 13th Int'l Conf. Computer Vision, pp. 1323-1330, 2011.

[25] A. Adam, E. Rivlin and I. Shimshoni, "Robust Fragments-Based Tracking Using the Integral Histogram," Proc. IEEE Conf. Computer Vision and Pattern Recognition, pp. 798-805, 2006.

[26] W. Zhong, H. Lu and M. H. Yang, "Robust Object Tracking via Sparsity-Based Collaborative Model," Proc. IEEE Conf. Computer Vision and Pattern Recognition, 2012.