A Unified Framework for Systematic Evaluation of ABET Student Outcomes and Program Educational Objectives

Author: Imtiaz Hussain Khan

Journal: International Journal of Modern Education and Computer Science (IJMECS)

Issue: vol. 11, no. 11, 2019.


Assessment and evaluation of Program Educational Objectives (PEOs) and Student Outcomes (SOs) is a challenging task. In this paper, we present a unified framework, developed over a period of more than eight years, for the systematic assessment and evaluation of PEOs and SOs. The proposed framework is based on a balanced sampling approach that thoroughly covers PEO/SO assessment and evaluation while minimizing human effort. The framework is general; to demonstrate its effectiveness, we present a case study in which it was successfully adopted by the undergraduate computer science program in the Department of Computer Science at King Abdulaziz University, Jeddah. The robustness of the framework is supported by an independent evaluation by ABET, which awarded the program full six-year accreditation without any comments or concerns. The most significant value of the proposed framework is that it provides a balanced sampling mechanism for the assessment and evaluation of PEOs/SOs that can be adapted by any program seeking ABET accreditation.

Keywords: Program Educational Objectives, Learning Outcomes, Outcome Assessment, Outcome Attainment, Assessment Tools, ABET Evaluation

DOI: 10.5815/ijmecs.2019.11.01

I. Introduction

One of the primary concerns of any educational institution or program is its quality assurance mechanism. The quality assurance of a program depends mainly on effective assessment and evaluation processes for monitoring students' performance [1, 2, 3, 4, 5, 6, 7, 8]. In recent years, the focus of accreditation processes for educational programs has shifted towards outcome-based assessment and evaluation. This shift first required identifying a set of characteristics that an educational program intends to develop in its students. One of the world's leading accreditation bodies, the Accreditation Board for Engineering and Technology (ABET) [9], specifies two primary criteria pertaining to developing such characteristics in the graduates of a program: Program Educational Objectives (PEOs) and Student Outcomes (SOs). In ABET parlance, PEOs are "broad statements that describe what graduates are expected to attain within a few years of graduation", whereas SOs describe "what students are expected to know and be able to do by the time of graduation. These relate to the knowledge, skills, and behaviors that students acquire as they progress through the program". Both PEOs and SOs require a robust evaluation mechanism [10], and their attainment must be ensured in a meaningful manner [11, 12]. In our undergraduate computer science program, we have adopted the eleven well-known ABET SOs (a)-(k) and also defined our own PEOs (details follow). Of these eleven outcomes, (a), (b), (c), (i), (j) and (k) relate to technical skills, while (d)-(h) cover soft skills [13, 14]. It has generally been observed that soft-skill SOs, especially those pertaining to lifelong learning, are more difficult to assess than technical-skill SOs [15].

This paper proposes a unified framework for the systematic assessment and evaluation of PEOs and SOs, which has been implemented in the Faculty of Computing and Information Technology (FCIT), King Abdulaziz University, Saudi Arabia. ABET's independent evaluation attests to the framework's effectiveness: our program received full six-year accreditation (2013-2019) and then retained it for another six years (2019-2025) without any comments or concerns.


II. Process for Review of the Program Educational Objectives

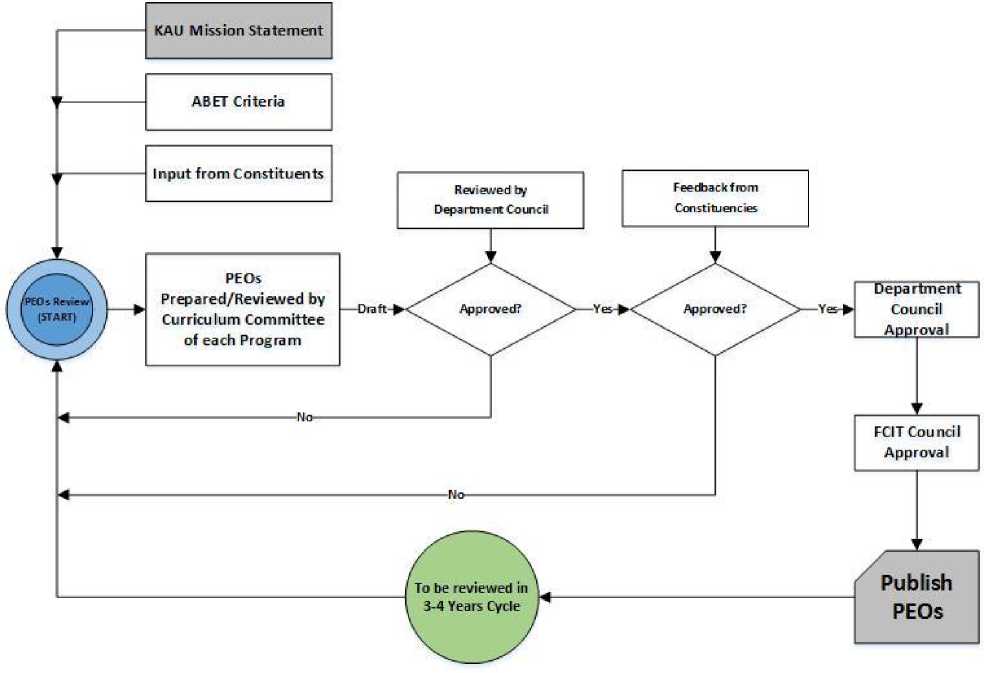

At FCIT, a formal process for formulating and reviewing the PEOs is in place. Fig. 1 depicts the process used to review the PEOs periodically so that they remain consistent with the university mission, the needs of the program's constituencies, and the ABET-CAC criteria. Its salient elements are described below. The program faculty reviews the PEOs, and may draft new ones, under the following circumstances:

Fig. 1. Establishment and review process of PEOs

• A change in the university mission.
• A request for change coming from members of one or more of the program’s main constituencies.
• A change in the ABET-CAC criteria affecting the PEOs.
• A change in the vision of national and international professional societies regarding computing-related practices.
• Aside from such circumstances, a periodic major review of the PEOs is conducted every three to four years.

Any change to the PEOs is subject to formal approval by the department and college councils. This process was applied in establishing our current PEOs, which cover three classic objectives: professional practice expectations (PEO-1), societal context, i.e., how graduates fulfil their expected role in society (PEO-2), and academic/professional growth (PEO-3). The PEOs, which are publicly available on the department website, are stated as follows:

PEO-1: Have a successful career in the practice of computer science and related applications built on their understanding of formal and applied methods for problem-solving, design of secure and dependable computer systems, and development of effective software systems and algorithmic applications.

PEO-2: Advance in responsibility and leadership and contribute as active partners in the economic growth and the sustainable development of the Saudi society.

PEO-3: Engage in professional development and/or graduate studies to pursue flexible career paths amid future technological changes.


III. Relationship of Program Courses and Outcomes

Each SO is assessed within the academic program. Achieving the SOs contributes to, and is a necessary condition for, achieving the PEOs, so the results of SO assessment and evaluation are used to improve the program. The SOs of our program are the measurable effects of a curriculum that delivers the content needed to satisfy, in the larger context, the PEOs. Because the SOs are closely related to the overarching PEOs, they are carefully mapped to the PEOs. Courses are likewise mapped to SOs; these mappings are identified by the teams of instructors who designed each course. Because SOs are relatively broad statements, each course has also identified around 15 specific, measurable course learning outcomes (CLOs), articulated using Bloom's taxonomy [16]. This constitutes a constructive alignment [17, 18] of CLOs, learning activities, assessment tools, and SOs, and the course-SO mapping is carried out for every course. The mapping facilitates the assessment and evaluation of SOs because exam questions are mapped directly to these specific CLOs rather than to the SOs, which are broad and hence difficult to assess directly. Although all CLOs can easily be evaluated, we adopted a sampling mechanism whereby a subset of five CLOs, called high-value CLOs (henceforth HVCLOs), is used for reporting purposes. Similarly, instead of reporting exhaustively on all assessment tools, a subset of two to four tools that together give reasonable coverage of all targeted HVCLOs (and hence SOs) has been identified for each course. The HVCLO data are reported in end-of-semester reports, written by the respective course teams, that drive the continuous improvement process; any actions to improve course performance are based on these data.
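The paper states that two to four tools are chosen per course so that they cover all targeted HVCLOs, but it does not say how the choice is made. One simple way to make such a choice reproducible is a greedy set-cover heuristic, sketched below in Python. The tool names, CLO labels, and the `select_tools` function are hypothetical, and the greedy rule is an illustrative assumption, not necessarily the procedure used at FCIT.

```python
from typing import Dict, List, Set


def select_tools(tool_coverage: Dict[str, Set[str]],
                 hvclos: Set[str],
                 max_tools: int = 4) -> List[str]:
    """Greedily choose assessment tools until every HVCLO is covered.

    Hypothetical helper: at each step it picks the tool that covers the most
    still-uncovered HVCLOs (ties broken alphabetically for determinism).
    """
    uncovered = set(hvclos)
    chosen: List[str] = []
    while uncovered and len(chosen) < max_tools:
        best = max(sorted(tool_coverage),
                   key=lambda tool: len(tool_coverage[tool] & uncovered))
        gain = tool_coverage[best] & uncovered
        if not gain:
            break  # no remaining tool assesses the uncovered HVCLOs
        chosen.append(best)
        uncovered -= gain
    if uncovered:
        raise ValueError(f"HVCLOs left uncovered: {sorted(uncovered)}")
    return chosen


# Illustrative (invented) tool-to-CLO mapping for one course with five HVCLOs.
course_tools = {
    "final_exam": {"CLO1", "CLO2", "CLO3"},
    "midterm": {"CLO1", "CLO2"},
    "project": {"CLO4", "CLO5"},
    "lab_work": {"CLO3", "CLO5"},
    "quiz_1": {"CLO1"},
}
print(select_tools(course_tools, {"CLO1", "CLO2", "CLO3", "CLO4", "CLO5"}))
# ['final_exam', 'project']
```

Greedy set cover is only a heuristic: it does not always find the smallest cover, and this sketch raises an error rather than exceed `max_tools`, so a course team would still review the selection.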


IV. Proposed Framework

An appropriate assessment and evaluation scheme is necessary for the successful attainment of the SOs [19]. In ABET terminology, "assessment is one or more processes that identify, collect, and prepare data to evaluate the attainment of student outcomes", whereas "evaluation is one or more processes for interpreting the data and evidence accumulated through assessment processes". We use various processes to assess the extent to which SOs are being attained.

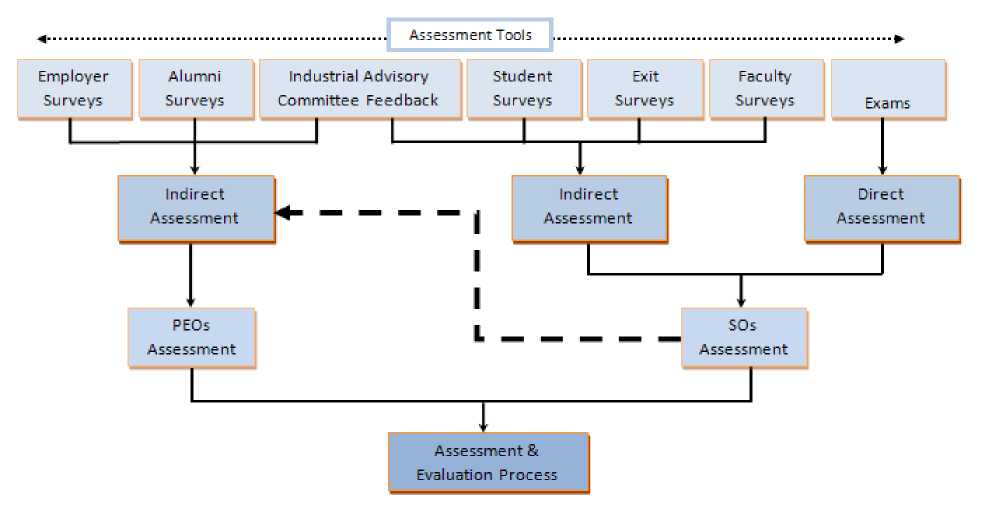

The data-collection processes for PEOs and SOs follow the generic scheme depicted in Fig. 2. SO data are collected through both direct and indirect methods. For the objectives, indirect assessment is carried out through surveys of alumni and employers and through feedback from members of the industrial advisory board; PEO attainment can also be inferred, albeit only weakly, from the mapping between PEOs and SOs. Direct assessment of the outcomes usually relies on coursework, including final exams, midterm tests, quizzes, homework, laboratory work, assignments, practicals, projects, presentations, and class interaction. Indirect assessment of the outcomes is based on several surveys, such as student course evaluation surveys, exit surveys, and faculty evaluations, as well as on feedback from the Industrial Advisory Board. For SOs, these data are gathered every semester, whereas the PEO surveys remain open for a period of three years. The collected data are carefully analysed to inform the continuous improvement process in the program.

Fig. 2. Assessment and evaluation framework


V. Results Analysis

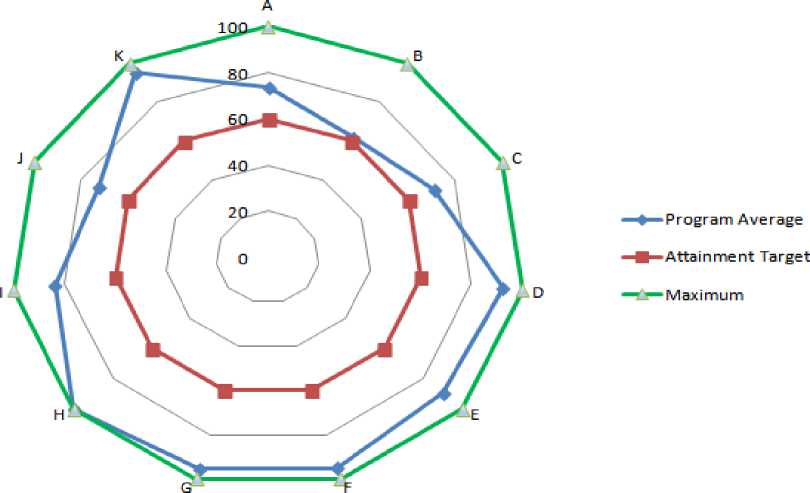

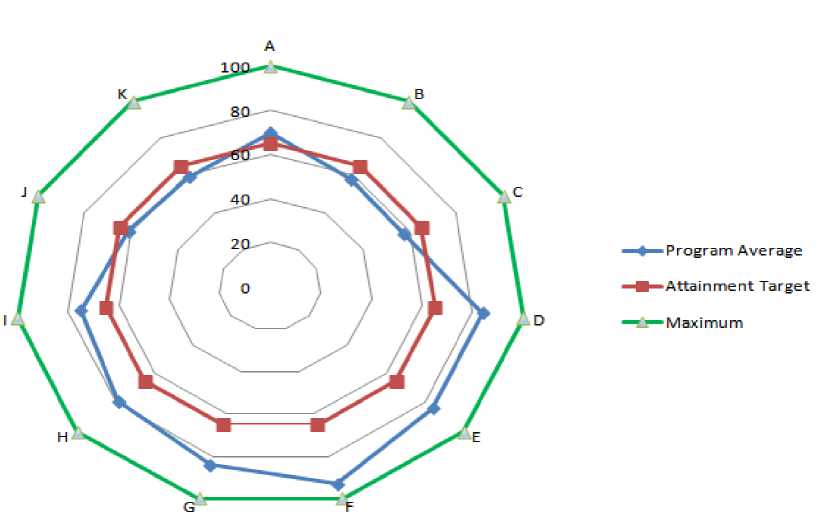

Here we present, as a case study, the results of our direct assessment of SOs to demonstrate the effectiveness of the framework. The SO data were obtained only from the twenty-four core (mandatory) courses offered in our program. These courses collectively enrolled approximately 300 students, a reasonable sample size from which to draw meaningful conclusions. Because SOs are mapped to PEOs, attainment of the SOs is taken as an indication that the related PEOs are being attained as well. We consider an SO achieved if at least 80% of students score at least 60%. We follow the same assessment and evaluation process every semester to gather SO attainment scores; for brevity, only the data for the two most recently completed semesters are presented here. The overall attainment of a given SO is the average of the attainment scores of all courses contributing to it. For instance, SO (a) is assessed in 12 different courses, so its attainment is averaged over those 12 courses; the other SOs are treated analogously. Figs. 3 and 4 show the SO attainment scores computed in this way for Fall 2017 and Spring 2018, respectively.
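The attainment rule above can be written as a short computation. The Python sketch below assumes that a course's attainment score for an SO is the percentage of its students who score at least 60% on the assessments mapped to that SO, and that the SO counts as achieved when the average over its contributing courses reaches 80%; the paper does not spell out this exact formula, and the course codes and scores are invented for illustration.

```python
from statistics import mean
from typing import Dict, List

PASS_SCORE = 60.0    # minimum individual score (%), from the stated criterion
TARGET_SHARE = 80.0  # minimum share of students (%), from the stated criterion


def course_attainment(scores: List[float], pass_score: float = PASS_SCORE) -> float:
    """Percentage of a course's students whose SO score reaches pass_score."""
    if not scores:
        raise ValueError("a course needs at least one student score")
    passed = sum(1 for s in scores if s >= pass_score)
    return 100.0 * passed / len(scores)


def so_attainment(scores_by_course: Dict[str, List[float]]) -> float:
    """Average the course-level attainment over all courses that assess the SO."""
    return mean(course_attainment(scores) for scores in scores_by_course.values())


def so_achieved(scores_by_course: Dict[str, List[float]],
                target: float = TARGET_SHARE) -> bool:
    """Assumed rule: the SO is achieved if its averaged attainment meets the target."""
    return so_attainment(scores_by_course) >= target


# Hypothetical scores (%) for SO (a) in two of its contributing courses.
so_a = {
    "CS-101": [72, 65, 58, 90, 81],  # 4 of 5 students reach 60 -> 80.0
    "CS-202": [60, 75, 88, 92, 95],  # 5 of 5 students reach 60 -> 100.0
}
print(so_attainment(so_a), so_achieved(so_a))  # 90.0 True
```

Averaging course-level percentages weights every course equally regardless of enrolment, which matches the description of averaging SO (a) over its 12 courses; a student-weighted average would be an alternative design.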

Fig. 3. Fall 2017 Results

Fig. 4. Spring 2018 Results

Figs. 3 and 4 show that, at the program level, all SOs were achieved. The results may therefore give the impression that practically all outcomes reached their threshold levels. While this holds at the program level, it does not necessarily hold at the course level: when our accreditation committee examined each course individually, it found several courses whose attainment levels remained below the preset threshold. Such shortcomings are examined further by the course instructors, who recommend remedies and improvements. The corrective measures are incorporated in the following semester and are then assessed in turn; this cycle drives continuous improvement and is a salient feature of our assessment and evaluation scheme.
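The gap between program-level and course-level results can be made concrete with a small check. The Python sketch below uses hypothetical course codes and assumes the same 80% target as the attainment rule; it reports the program-level average for one SO together with the courses that fall short, which are the ones that would be sent to their instructors for remedial recommendations.

```python
from statistics import mean
from typing import Dict, List, Tuple


def review_so(attainment_by_course: Dict[str, float],
              target: float = 80.0) -> Tuple[float, bool, List[str]]:
    """Return (program-level average, achieved?, courses below target).

    attainment_by_course maps a course code to the percentage of its students
    who met the SO threshold in that course (hypothetical inputs).
    """
    program_level = mean(attainment_by_course.values())
    below_target = sorted(course for course, value in attainment_by_course.items()
                          if value < target)
    return program_level, program_level >= target, below_target


# The SO passes at program level even though CS-202 misses the target.
print(review_so({"CS-101": 95.0, "CS-202": 70.0, "CS-303": 90.0}))
# (85.0, True, ['CS-202'])
```

Returning the flagged courses alongside the average keeps the program-level verdict from hiding course-level shortfalls.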


VI. Discussion

Developing an appropriate framework to assess and evaluate students' learning is itself a continuous improvement process. Different assessment and evaluation processes can be implemented to monitor students' performance; the challenge is that most of them are gruelling and require intensive data gathering [20]. In this study, we presented a robust assessment and evaluation framework aimed at enhancing students' outcome-based learning, together with a case study of an ABET-accredited undergraduate computer science program in which the framework has been successfully implemented.

Arguably, no assessment and evaluation plan can be optimal [21]; every plan has its own advantages and disadvantages. Covering all aspects of students' learning and attainment therefore requires a comprehensive analysis of data gathered through different assessment tools. On the one hand, reporting on very few assessment tools is risky, as it may not shed light on different aspects of outcome attainment. On the other hand, an exhaustive approach in which data from all assessment tools are reported is, arguably, very time-consuming and laborious. The main feature of our proposed framework is a sampling scheme that balances these two extremes: we sample both the assessment tools and the CLOs. Our student and faculty surveys suggest that such sampling generally works well for both students and faculty members. Employer survey results are also encouraging: employers expressed greater confidence in our graduates after the framework was implemented. The value of this study is thus that it may encourage similar programs, especially those seeking ABET accreditation, to adapt the same framework.

Some interesting questions came to the fore during this study. For example, we noticed that students' grades do not necessarily correlate with their outcome attainment: we observed many cases in which students earned high passing grades but failed to attain an HVCLO/SO. Another important question is whether accreditation from a world-leading accreditation body assures a program's quality, or whether accreditation places extra pressures that may be detrimental or counterproductive to an institution's larger goal of enforcing and enhancing quality assurance. Accreditation provides checks and balances for quality assurance. Students' direct-assessment results indicate that accreditation contributed positively to students' performance, whereas student and faculty survey results indicate otherwise. The survey data reveal that various quality-assurance processes have generally been streamlined; however, these processes place an extra burden on faculty, reducing their focus on innovation and their teaching freedom. Faculty generally believe that the assessment-centric accreditation processes are very time-consuming: most of their precious time is consumed by planning, report writing, preparing assessment questionnaires, data gathering, and data analysis. Such laborious processes generally leave faculty members little time for other activities, such as research.

An independent evaluation by ABET supports the utility of the proposed framework. However, it may still be too early to draw a final conclusion about the worth and applicability of the framework before it has been evaluated over a longer period. Additional assessment data may inform further enhancements to the existing framework.


VII. Conclusion and Future Work

In this paper, we presented a unified framework for the systematic assessment and evaluation of the PEOs (program educational objectives) and SOs (student outcomes) of our computer science program. The framework is informed by assessment and evaluation data collected over more than eight years, as well as by the experiences and feedback of different stakeholders, including students, faculty members, and the industrial advisory board. The framework has been fully implemented and its effectiveness thoroughly examined. One of its salient features is the sampling plan for the assessment and evaluation of SOs, which samples both CLOs and assessment tools. ABET's evaluations attest to the framework's efficacy: our program first received full six-year ABET accreditation in 2013 and then retained it for six more years in 2019. The results indicate that the proposed framework is robust and particularly relevant to programs seeking ABET accreditation.

In future work, we intend to enhance the assessment and evaluation plan for soft-skill SOs. Our existing framework uses similar tools to assess technical and soft skills, which may not be appropriate. It has generally been observed that, whereas technical skills are relatively easy to assess, soft skills are difficult to assess [22, 23]. To this end, we will explore more innovative methods for assessing and evaluating soft skills in a more systematic manner.

ACKNOWLEDGEMENT

I thank the AIMS team at the Faculty of Computing and Information Technology, KAU, for compiling the SO assessment data. I also thank Adnan Nayfeh (ABET consultant) and Syed Hamid Hassan for their insights in developing the PEO and SO evaluation framework.

REFERENCES

[1] H. Braun, A. Kanjee, E. Bettinger and M. Kremer, Improving Education Through Assessment, Innovation, and Evaluation, Cambridge: American Academy of Arts and Sciences, 2006.

[2] L. Jin, “A Research on the Quality Assessment in Higher Education Institutions,” in Proceedings of E-Product, E-Service and E-Entertainment Conference, Henan, China, 2010.

[3] S. Barney, M. Khurum, K. Petersen, M. Unterkalmsteiner and R. Jabangwe, “Improving Students With Rubric-Based Self-Assessment and Oral Feedback,” IEEE Transactions on Education, vol. 55, no. 3, pp. 319-325, 2012.

[4] P. B. Crilly and R. J. Hartnett, “Ensuring Attainment of ABET Criteria 4 and Maintaining Continuity for Programs with Moderate Faculty Turnover,” in Proceedings of the 2015 ASEE Northeast Section Conference, Boston, 2015.

[5] H. Wimmer, L. Powell, L. Kilgus and C. Force, “Improving Course Assessment via Web-based Homework,” International Journal of Online Pedagogy and Course Design, vol. 7, no. 2, pp. 1-19, 2017.

[6] T.-S. Chou, “Course Design and Project Evaluation of a Network Management Course Implemented in On-Campus and Online Classes,” International Journal of Online Pedagogy and Course Design, vol. 8, no. 2, pp. 44-56, 2018.

[7] C. Robles, “Evaluating the Use of Toondoo for Collaborative E-Learning of Selected Pre-Service Teachers,” International Journal of Modern Education and Computer Science, vol. 9, no. 11, pp. 25-32, 2017.

[8] Rajak, A. K. Shrivastava, S. Bhardwaj and A. K. Tripathi, “Assessment and Attainment of Program Educational Objectives for Post Graduate Courses,” International Journal of Modern Education and Computer Science, vol. 11, no. 2, pp. 26-32, 2019.

[9] ABET, “Criteria for Accrediting Computing Programs,” 2019. [Online]. Available: https://www.abet.org/accreditation/accreditation-criteria/criteria-for-accrediting-computing-programs-2018-2019/. [Accessed June 2019].

[10] J. P. Somervell, “Assessing Student Outcomes with Comprehensive Examinations: A Case Study,” in Proceedings of Frontiers in Education: CS and CE, Las Vegas, NV, USA, 2015.

[11] J. König, S. Blömeke, L. Paine, W. H. Schmidt and F.-J. Hsieh, “General Pedagogical Knowledge of Future Middle School Teachers: On the Complex Ecology of Teacher Education in the United States, Germany, and Taiwan,” Journal of Teacher Education, vol. 62, no. 2, pp. 188-201, 2011.

[12] J. König, R. Ligtvoet, S. Klemenz and M. Rothland, “Effects of Opportunities to Learn in Teacher Preparation on Future Teachers’ General Pedagogical Knowledge: Analyzing Program Characteristics and Outcomes,” Studies in Educational Evaluation, vol. 53, pp. 122-133, 2017.

[13] E. Smerdon, “An Action Agenda for Engineering Curriculum Innovation,” in 11th IEEE-USA Biennial Careers Conference, San Jose, 2000.

[14] L. J. Shuman, M. Besterfield-Sacre and J. McGourty, “The ABET Professional Skills - Can They Be Taught? Can They Be Assessed?,” Journal of Engineering Education, vol. 94, pp. 41-55, 2005.

[15] M. Danaher, K. Schoepp and A. A. Kranov, “A New Approach for Assessing ABET’s Professional Skills in Computing,” World Transactions on Engineering and Technology Education, vol. 14, no. 3, pp. 355-360, 2016.

[16] B. S. Bloom, M. D. Engelhart, E. J. Furst, W. H. Hill and D. R. Krathwohl, Taxonomy of Educational Objectives: The Classification of Educational Goals. Handbook I: Cognitive Domain, New York: David McKay Company, 1956.

[17] J. Biggs, “Aligning Teaching and Assessment to Curriculum Objectives,” Imaginative Curriculum Project, Learning and Teaching Support Network, Generic Centre, 2003.

[18] J. Biggs and C. Tang, Teaching for Quality Learning at University, Maidenhead: McGraw-Hill and Open University Press, 2011.

[19] R. M. van der Lans, W. J. van de Grift, K. van Veen and M. Fokkens-Bruinsma, “Once is Not Enough: Establishing Reliability Criteria for Feedback and Evaluation Decisions Based on Classroom Observations,” Studies in Educational Evaluation, vol. 50, pp. 88-95, 2016.

[20] K. D. Stephan, “All This and Engineering Too: A History of Accreditation Requirements,” IEEE Technology and Society Magazine, vol. 21, no. 3, pp. 8-15, 2002.

[21] Damaj and J. Yousafzai, “Simple and Accurate Student Outcomes Assessment: A Unified Approach Using Senior Computer Engineering Design Experiences,” in IEEE Global Engineering Education Conference, 2016.

[22] S. Gibb, “Soft Skills Assessment: Theory Development and the Research Agenda,” International Journal of Lifelong Education, vol. 33, no. 4, 2014.

[23] A. Aworanti, M. B. Taiwo and O. I. Iluobe, “Validation of Modified Soft Skills Assessment Instrument (MOSSAI) for Use in Nigeria,” Universal Journal of Educational Research, vol. 3, no. 11, pp. 847-861, 2015.