Aerial Video Processing for Land Use and Land Cover Mapping

Authors: Ashoka Vanjare, S.N. Omkar, Akhilesh Koul, Devesh

Journal: International Journal of Image, Graphics and Signal Processing (IJIGSP) @ijigsp

Article in issue: 8 vol.5, 2013.

Free access

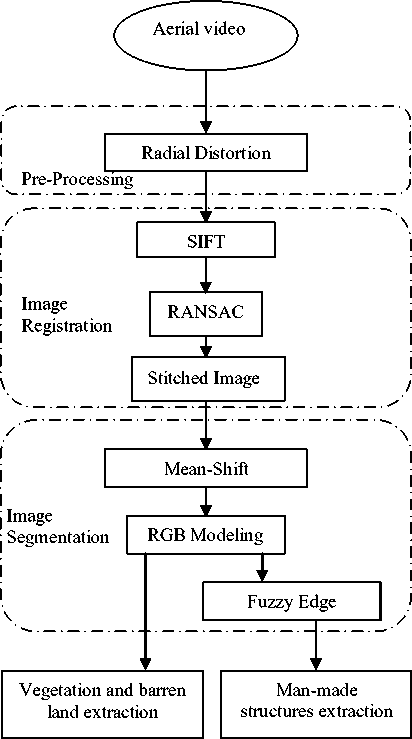

In this paper, we propose an automatic aerial video processing system for analyzing land surface features. The aerial video is analyzed in three steps: a) image pre-processing, b) image registration and c) image segmentation. Using the proposed system, we identify land features such as vegetation, man-made structures and barren land. These features are identified and differentiated from each other so that their respective areas can be calculated. The most important feature of this system is that it is an instantaneous video acquisition and processing system. In the first step, radial distortions of the image are corrected using a fish-eye correction algorithm. In the second step, image features are matched and the images are stitched using the Scale Invariant Feature Transform (SIFT) followed by the Random Sample Consensus (RANSAC) algorithm. In the third step, the stitched images are segmented using Mean Shift Segmentation and different structures are identified using the RGB model. A hybrid system combining Fuzzy Edge Extraction with Mean Shift Segmentation is used to identify man-made structures. The results are compared with ground truth data to evaluate the performance of the system. The proposed system is implemented using Intel's OpenCV.

Keywords: Radial Distortion, Image Stitching, Image Segmentation, Identify Land Features

Short URL: https://sciup.org/15013022

IDR: 15013022

Text of the scientific article: Aerial Video Processing for Land Use and Land Cover Mapping

Unmanned Aerial Vehicles (UAVs) are playing an increasingly prominent role in urban planning [1], agricultural analysis [2][3][4], environmental monitoring [5][6], traffic surveillance [7] and texture classification [8], owing to their low cost and safe operation compared with manned aircraft. The data are acquired using a video sensor in digital format, which makes them convenient to process. Earlier work has addressed differentiating buildings from other terrain [9][10], rooftop detection in aerial images [11], road extraction from aerial images [12][13] and shadow detection in aerial images [14]. Color image segmentation [15], which quantizes the colors into several representative classes, is often used to extract information about buildings from aerial images. However, automatic detection of buildings in monocular aerial images without elevation information is hard. Buildings cannot be identified reliably from color information alone because, unlike barren land or vegetation, they do not have characteristic RGB values. Another approach, employing the Active Contour Model (ACM) or snake model [16], has been used for automatic building boundary extraction, but some buildings were not extracted because their radiometric characteristics were similar to those of the image background. A rapid man-made object segmentation method based on the watershed algorithm, which creates homogeneous regions, has also been developed [17]. We therefore propose a system for identifying man-made structures, vegetation and barren land from a given aerial video.

In this paper, we propose a system for the identification, extraction and classification of objects from a given aerial video. As shown in Figure 3, the aerial images are initially affected by radial distortion, so we use a fish-eye correction algorithm to reduce it. The distortion-free images are aligned and stitched using the Scale Invariant Feature Transform (SIFT) and Random Sample Consensus (RANSAC) techniques. The stitched images are segmented by the Mean Shift Segmentation method to extract vegetation, man-made structures and barren land. The extracted features are compared with ground truth data to evaluate the segmentation performance. The advantages of the developed system over existing methods are its ease of use, efficiency and robustness for video processing, and instantaneous automatic object recognition. We have successfully classified three entities, viz. man-made structures, vegetation and barren land, with an overall accuracy of 94%.


II. Study Area

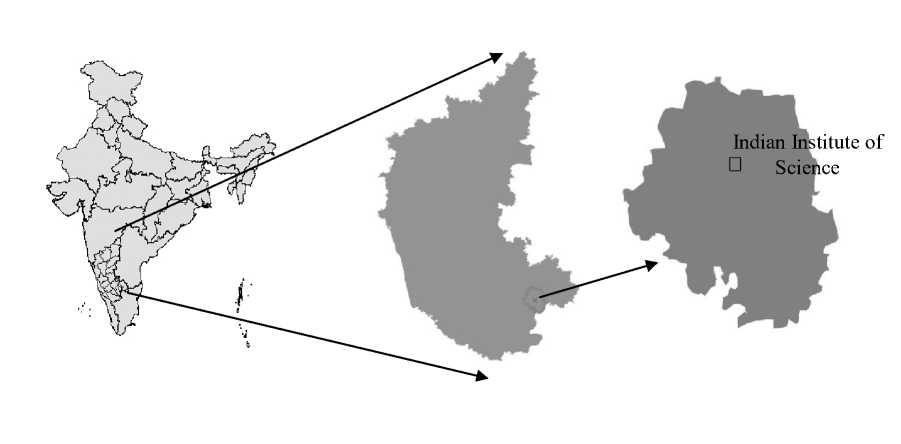

The study area, shown in Figure 1, is the Indian Institute of Science in Bangalore (13°01'35"N, 77°33'56"E). The Institute spreads over 400 acres of land in the heart of Bangalore city. It is covered with a wide variety of trees and lies at an altitude of about 920 m above sea level.

The UAV is flown above the study area and video is acquired. The acquired aerial video is about 3 minutes long. From this video, five different image frames of the same area are extracted for our study.

Figure 1. Study Area


III. Data Acquisition

In this section, we describe the aerial video acquired from the UAV. The UAV was designed to the specifications listed in Table 1, which also gives the camera specifications; the UAV itself is shown in Figure 2. The video camera is mounted under the UAV and records the aerial video. The flying altitude of the UAV during data acquisition was 1030 m above sea level, and the image resolution obtained is about 3 cm per pixel. The UAV used is a medium-altitude surveillance vehicle.


IV. Methodology

This section describes the methods used in the aerial video processing system. The system is divided into three major steps: 1) pre-processing, 2) image registration and 3) image segmentation.


A. Pre-Processing

The camera used to capture the land surface features has a fish-eye lens, which causes radial distortion in the images. This distortion is rectified using fish-eye correction [18]. Fish-eye lenses have a larger field of view (FOV), which covers a greater area but leads to cylindrical distortion.

Table 1. Specifications

| UAV Specifications | | Camera Specifications | |
|---|---|---|---|
| Wing span | 120 cm (wing span = 120 cm, wing chord 17 cm) | Camera name | HD-PRO camera with field of view 170° |
| Length | 87 cm | | |
| Payload weight | 1.1 kg total: 1 kg (module weight) + 120 g (payload) | Video HD resolutions | 1080p: 1920×1080, 30 frames per second (FPS) |
| Engine | Electronic type Myn is brushless DC motor [1000Kv outrunner] | | |
| Fuel tank | Runs on battery [3-cell LiPo, 2200 mAh] | Field of view (FOV) | Wide 170° FOV, medium 127° FOV |
| Flight time | 10 minutes | | |
| Propeller | 9 x 4.7E | | |

Figure 2. a) UAV designed for aerial video surveillance, b) UAV while takeoff and c) UAV while flying

Figure 3. System Overview

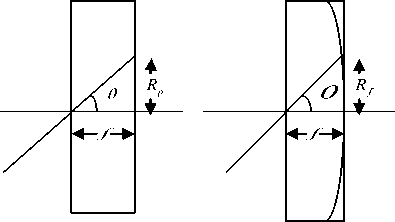

The images corrected for radial distortion are shown in Figure 5. Let $x_p$ and $y_p$ be the x and y coordinates, respectively, of a target pixel in the output rectilinear perspective image, and similarly let $x_f$ and $y_f$ be those of a source pixel in the input fisheye image. Let $R_f$ (as shown in Figure 4) be the radial position of a point in the fisheye image and $R_p$ the radial position of a point in the perspective image. Then, assuming an equisolid-angle mapping for the fisheye image, the equations below hold.

Figure 4. Projection of Non-fisheye and Projection of fisheye camera

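$$R_f = 2 f \sin(\theta / 2)$$

$$R_p = f \tan(\theta)$$

$$\frac{x_p}{x_f} = \frac{y_p}{y_f} = \frac{R_p}{R_f}$$

$$x_f = \frac{2 x_p \sin\left(\tan^{-1}(\lambda) / 2\right)}{\lambda}$$

$$y_f = \frac{2 y_p \sin\left(\tan^{-1}(\lambda) / 2\right)}{\lambda}$$

$$\lambda = \frac{\sqrt{x_p^2 + y_p^2}}{f}$$

$$f = \frac{\text{image\_width}}{4 \sin(FOV_{horz} / 2)}$$

Here $\theta$ is the angle between the incoming ray and the optical axis, and $f$ is the focal length expressed in pixels.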
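The mapping above can be sketched in a few lines of Python. This is only an illustrative sketch, not the OpenCV implementation used in the system: the function names `focal_length` and `perspective_to_fisheye` are hypothetical, and coordinates are assumed to be measured relative to the image centre.

```python
import math

def focal_length(image_width, fov_horz_deg):
    """Focal length in pixels: f = image_width / (4 sin(FOV_horz / 2))."""
    return image_width / (4.0 * math.sin(math.radians(fov_horz_deg) / 2.0))

def perspective_to_fisheye(xp, yp, f):
    """Map a target pixel (xp, yp) of the rectilinear image to the source
    pixel (xf, yf) of the fisheye image (equisolid-angle model).
    Coordinates are assumed to be relative to the image centre."""
    lam = math.hypot(xp, yp) / f           # lambda = sqrt(xp^2 + yp^2) / f
    if lam == 0.0:
        return 0.0, 0.0                    # the centre maps onto itself
    scale = 2.0 * math.sin(math.atan(lam) / 2.0) / lam
    return xp * scale, yp * scale

# Example with the camera values of Table 1 (1920 pixels wide, 170 degree FOV).
f = focal_length(1920, 170)
xf, yf = perspective_to_fisheye(100.0, 50.0, f)
```

In a full correction, each pixel of the output image would be filled by sampling the fisheye image at the computed $(x_f, y_f)$, typically with interpolation.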


B. Image Registration

In this step, the images corrected for radial distortion are aligned, transformed and stitched. First, image features are detected and matched with the features of the other image using SIFT. RANSAC is then applied to reduce the number of outliers, and finally the images are stitched.


1) Image Registration

The SIFT algorithm, proposed by David G. Lowe [19], operates in four major stages to detect and describe local features, or key points, in an image: a) scale-space extrema detection, b) key point localization, c) orientation assignment and d) key point descriptor. For image matching and recognition, SIFT features are first extracted from a set of reference images and stored in a database. A new image is matched by individually comparing each feature from the new image to this database and finding candidate matching features based on the Euclidean distance between their feature vectors. Using the scale-space function, we can detect locations that are invariant to scale and orientation. It has been shown by Koenderink [20] and Lindeberg [21] that, under a variety of reasonable assumptions, the only possible scale-space kernel is the Gaussian function.
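The matching step described above can be illustrated with a minimal nearest-neighbour search. The sketch assumes that descriptors are plain sequences of numbers; the function name `match_features` and the toy 2-D descriptors are hypothetical (real SIFT descriptors are 128-dimensional), and no further filtering of candidate matches is shown.

```python
import math

def match_features(new_descriptors, database):
    """For each descriptor of the new image, return the index of the closest
    database descriptor and the Euclidean distance to it."""
    matches = []
    for d in new_descriptors:
        best = min(range(len(database)), key=lambda j: math.dist(d, database[j]))
        matches.append((best, math.dist(d, database[best])))
    return matches

# Toy example: two reference descriptors and two query descriptors.
database = [(0.0, 0.0), (10.0, 10.0)]
queries = [(1.0, 0.0), (9.0, 10.0)]
matches = match_features(queries, database)
```

The candidate matches produced this way still contain outliers, which is why RANSAC is applied afterwards.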

The scale space (8) of an image is defined as a function, $L(x, y, k\sigma)$, that is produced from the convolution of a variable-scale Gaussian, $G(x, y, k\sigma)$, with an input image, $I(x, y)$.

$$L(x, y, k\sigma) = G(x, y, k\sigma) * I(x, y) \qquad (8)$$

$$G(x, y, \sigma) = \frac{1}{2\pi\sigma^2} e^{-(x^2 + y^2) / (2\sigma^2)} \qquad (9)$$

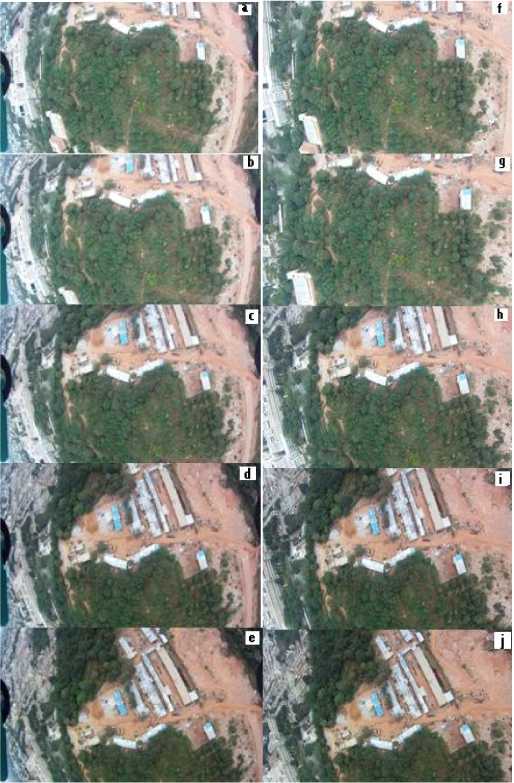

Figure 5. Five Image frames (a-e) are read from the given aerial video are corrected for radial distortion by using fish-eye correction algorithm which is shown in figure (f-j)

The Difference-of-Gaussian (DoG) function convolved with the image, $D(x, y, \sigma)$, can be used to detect stable key point locations in scale space.

$$D(x, y, \sigma) = L(x, y, k_i\sigma) - L(x, y, k_j\sigma) \qquad (10)$$

Hence the DoG of an image between scales $k_i\sigma$ and $k_j\sigma$ is just the difference of the Gaussian-blurred images at those two scales (10). In order to detect the local maxima and minima of $D(x, y, \sigma)$, each sample point is compared to its eight neighbors in the current image and to its nine neighbors in each of the scales above and below. It is selected only if it is larger than all of these neighbors or smaller than all of them. By assigning a consistent orientation to each key point based on local image properties, the key point descriptor can be represented relative to this orientation and therefore achieves invariance to image rotation. The scale of the key point is used to select the Gaussian-smoothed image, $L$, with the closest scale, so that all computations are performed in a scale-invariant manner. For each image sample, $L(x, y)$, at this scale, the gradient magnitude, $m(x, y)$, and orientation, $\theta(x, y)$, are precomputed using pixel differences.

$$m(x, y) = \sqrt{(L(x+1, y) - L(x-1, y))^2 + (L(x, y+1) - L(x, y-1))^2} \qquad (11)$$

$$\theta(x, y) = \tan^{-1}\left(\frac{L(x, y+1) - L(x, y-1)}{L(x+1, y) - L(x-1, y)}\right)$$
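As a small worked example, the pixel-difference gradient can be computed as follows. The sketch assumes the smoothed image is stored as a list of rows indexed as `L[y][x]`, and it uses `math.atan2` instead of a plain arctangent so that the quadrant is resolved and a zero horizontal difference causes no division by zero; both choices are assumptions made for illustration.

```python
import math

def gradient(L, x, y):
    """Gradient magnitude and orientation of the smoothed image L at (x, y),
    computed from pixel differences. L is indexed as L[y][x]."""
    dx = L[y][x + 1] - L[y][x - 1]
    dy = L[y + 1][x] - L[y - 1][x]
    magnitude = math.sqrt(dx * dx + dy * dy)
    orientation = math.atan2(dy, dx)   # radians, in (-pi, pi]
    return magnitude, orientation

# 3x3 toy image; the gradient is evaluated at the centre pixel.
image = [
    [0, 0, 0],
    [1, 2, 3],
    [4, 4, 4],
]
m, theta = gradient(image, 1, 1)
```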

After the matched pairs are obtained, RANSAC [22] robust estimation is used to compute the 2D homography. The Random Sample Consensus (RANSAC) algorithm is a resampling technique that generates candidate solutions by using the minimum number of observations (data points) required to estimate the underlying model parameters. Each of the N iterations consists of the following steps:

1) Randomly select the minimum number of points required to determine the model parameters.

2) Solve for the parameters of the model.

3) Determine how many points from the set of all points fit the model within a predefined tolerance.

4) If the fraction of inliers over the total number of points in the set exceeds a predefined threshold, re-estimate the model parameters using all the identified inliers and terminate.
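The four steps can be sketched as follows. To keep the example short, the model is simplified to a pure 2D translation between matched points, for which a single pair is the minimal sample; the system described in this paper estimates a homography instead. The function names, the tolerance, the inlier ratio and the fixed random seed are all assumptions chosen for illustration.

```python
import random

def fits(pair, tx, ty, tolerance):
    """True if the translation (tx, ty) maps the first point onto the second."""
    (x, y), (xp, yp) = pair
    return abs(x + tx - xp) <= tolerance and abs(y + ty - yp) <= tolerance

def ransac_translation(pairs, tolerance=2.0, inlier_ratio=0.6, max_iters=100, seed=0):
    rng = random.Random(seed)
    for _ in range(max_iters):
        # 1) Randomly select the minimal sample (one matched pair).
        (x, y), (xp, yp) = rng.choice(pairs)
        # 2) Solve for the model parameters.
        tx, ty = xp - x, yp - y
        # 3) Count the points that fit within the tolerance.
        inliers = [p for p in pairs if fits(p, tx, ty, tolerance)]
        # 4) If enough points agree, re-estimate from all inliers and stop.
        if len(inliers) / len(pairs) > inlier_ratio:
            tx = sum(q[0] - p[0] for p, q in inliers) / len(inliers)
            ty = sum(q[1] - p[1] for p, q in inliers) / len(inliers)
            return (tx, ty), inliers
    return None, []

# Eight consistent matches (shift of +5, -3) and two gross outliers.
pairs = [((i, 2 * i), (i + 5, 2 * i - 3)) for i in range(8)]
pairs += [((0, 0), (50, 40)), ((1, 1), (-30, 20))]
model, inliers = ransac_translation(pairs)
```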

Procedure:

Let $u$ represent the probability that any selected data point is an inlier, and let $v = 1 - u$ be the probability of observing an outlier. N iterations of the minimum number of points, denoted $m$, are required, where $p$ is the desired probability that at least one of the random samples contains only inliers:

$$1 - p = (1 - u^m)^N$$

and thus, with some manipulation,

$$N = \frac{\log(1 - p)}{\log(1 - (1 - v)^m)}$$
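As a worked example of this formula, the number of iterations can be computed directly. The values used here (success probability 0.99, outlier probability 0.5, and four point pairs as the minimal sample for a homography) are assumptions for illustration and are not reported in this paper.

```python
import math

def ransac_iterations(p, v, m):
    """Number of iterations N such that, with probability p, at least one
    random sample of m points contains no outlier (outlier probability v)."""
    return math.ceil(math.log(1.0 - p) / math.log(1.0 - (1.0 - v) ** m))

n_iterations = ransac_iterations(p=0.99, v=0.5, m=4)
print(n_iterations)  # 72
```

The count grows quickly with the outlier probability and with the sample size, which is why the minimal sample is used in each iteration.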

One important step in stitching is image warping (Figure 6), which merges several images into a complete 2D mosaic image. In stitching, the transformation between images is often not known beforehand. In this example, two images are merged, and the transformation is estimated from the matched pairs of corresponding points that RANSAC retains in the two images.

Let $X = [x \; y \; 1]^T$ denote a position in the original 2D image $I$ and $X' = [x' \; y' \; 1]^T$ denote the corresponding position in the warped image $I'$,

$$\begin{bmatrix} x' \\ y' \\ 1 \end{bmatrix} = \begin{bmatrix} s\cos\alpha & s\sin\alpha & T_x \\ -s\sin\alpha & s\cos\alpha & T_y \\ 0 & 0 & 1 \end{bmatrix} \begin{bmatrix} x \\ y \\ 1 \end{bmatrix} \qquad (15)$$

where $s$ is the scaling factor, $T_x$ and $T_y$ are the horizontal and vertical translations, respectively, and $\alpha$ is the rotation angle. Since we are dealing with aerial images, the whole image should be warped about four points. This transformation is called a perspective transformation. So $X$ and $X'$ are replaced by

$$X = \begin{bmatrix} x_1 & x_2 & x_3 & x_4 \\ y_1 & y_2 & y_3 & y_4 \\ 1 & 1 & 1 & 1 \end{bmatrix}, \qquad X' = \begin{bmatrix} x_1' & x_2' & x_3' & x_4' \\ y_1' & y_2' & y_3' & y_4' \\ 1 & 1 & 1 & 1 \end{bmatrix}$$

The transformation matrix, denoted by $T$, satisfies

$$X' = T X$$
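To make the use of the transformation matrix concrete, the sketch below builds the matrix of equation (15) and applies a 3x3 matrix to a point in homogeneous coordinates. The function names are hypothetical; the division by the third homogeneous coordinate is included so that the same helper also works for a general perspective matrix, for which that coordinate is not necessarily 1.

```python
import math

def similarity_matrix(s, alpha, tx, ty):
    """3x3 matrix of equation (15): scale s, rotation alpha, translation (tx, ty)."""
    c, sn = s * math.cos(alpha), s * math.sin(alpha)
    return [[c, sn, tx], [-sn, c, ty], [0.0, 0.0, 1.0]]

def apply_transform(T, x, y):
    """Compute X' = T X for the point (x, y) and return Cartesian coordinates."""
    xh = T[0][0] * x + T[0][1] * y + T[0][2]
    yh = T[1][0] * x + T[1][1] * y + T[1][2]
    w = T[2][0] * x + T[2][1] * y + T[2][2]
    return xh / w, yh / w

# Scale by 2 and shift by (3, 4): the point (1, 1) moves to (5, 6).
T = similarity_matrix(2.0, 0.0, 3.0, 4.0)
x_new, y_new = apply_transform(T, 1.0, 1.0)
```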

Figure 6. Warped Image

Finally, the aerial images are stitched by applying the proposed algorithm recursively to frames extracted sequentially from the video. The final step of stitching is to project the input images onto a common 2D coordinate system. The warped images are projected with respect to a reference frame. To apply the proposed algorithm to video, the first two images are warped by applying a perspective transformation to the second image with respect to the first, and stitched on the 2D coordinate system. Then the third image is warped with respect to the second image, and so on. The final stitched image is shown in Figure 7.

Figure 7. Stitched Image


C. Image Segmentation

Image segmentation is the process of partitioning an image into multiple segments. The goal of segmentation is to simplify and/or change the representation of an image into something that is more meaningful and easier to analyze. Image segmentation is typically used to locate objects and boundaries in images. More precisely, image segmentation is the process of assigning a label to every pixel in an image such that pixels with the same label share certain visual characteristics. There are various techniques for image segmentation based on different approaches. In this paper we have employed Mean Shift Segmentation and Fuzzy Edge Detection.


1) Image Segmentation

Mean Shift [23] is a robust, non-parametric iterative algorithm. It associates each data point with the nearby peak of the dataset's probability density function. For each data point, mean shift defines a window around it and computes the mean of the data points inside that window. It then shifts the center of the window to the mean and repeats the procedure until it converges. With each iteration, the window shifts to a denser region of the dataset. After all the iterations, we obtain the final mean-shift segmented image shown in Figure 8.

Figure 8. Mean Shift Segmentation

Let a kernel function $K(x_i - x)$ be given. This function determines the weight of nearby points for re-estimation of the mean. Typically we use the Gaussian kernel on the distance to the current estimate.

$$K(x_i - x) = e^{-c \|x_i - x\|^2}$$

The weighted mean of the density in the window determined by $K$ is:

$$m(x) = \frac{\sum_{x_i \in N(x)} K(x_i - x)\, x_i}{\sum_{x_i \in N(x)} K(x_i - x)}$$

where $N(x)$ is the neighborhood of $x$, a set of points for which $K(x_i - x) \neq 0$. The mean-shift algorithm now sets $x \leftarrow m(x)$ and repeats the estimation until $m(x)$ converges.
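The iteration can be illustrated on one-dimensional data. This is a minimal sketch under stated assumptions: the function name `mean_shift_point`, the kernel constant `c`, the tolerance and the sample points are all hypothetical, and because a Gaussian kernel is never exactly zero, the neighborhood here is simply the whole data set.

```python
import math

def mean_shift_point(x, points, c=1.0, tol=1e-6, max_iters=500):
    """Shift x to the weighted mean m(x) repeatedly until it stops moving."""
    for _ in range(max_iters):
        weights = [math.exp(-c * (xi - x) ** 2) for xi in points]
        m = sum(w * xi for w, xi in zip(weights, points)) / sum(weights)
        if abs(m - x) < tol:
            return m
        x = m   # x <- m(x)
    return x

# Two clusters, around 1.0 and 5.0; each start converges to the nearer mode.
points = [0.9, 1.0, 1.1, 4.9, 5.0, 5.1]
mode_a = mean_shift_point(0.8, points)
mode_b = mean_shift_point(5.3, points)
```

For image segmentation, the same update is applied in a joint space of pixel position and color, and pixels that converge to the same mode are grouped into one segment.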


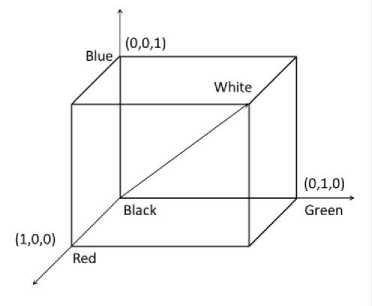

2) RGB Model

The RGB color model is an additive color model in which red, green, and blue light are added together in various ways to reproduce a broad array of colors. The name of the model comes from the initials of the three additive primary colors: red, green, and blue. Different terrains and structures are distinguished on the basis of their R, G and B values.

Here, we use the fact that each type of terrain has a characteristic set of RGB values, and we differentiate objects on the basis of their respective RGB values. For example, vegetation has a set of RGB values corresponding to green, whereas barren land has a different set of RGB values.
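A rule of this kind could look like the sketch below. The decision rules are purely hypothetical placeholders chosen for illustration (green-dominant pixels as vegetation, brownish pixels with R > G > B as barren land); the actual RGB ranges used by the system are not given in this paper.

```python
def classify_rgb(r, g, b):
    """Assign a coarse land-cover label to one segment colour.
    The rules below are illustrative assumptions, not the paper's values."""
    if g > r and g > b:
        return "vegetation"   # green-dominant colour
    if r > g > b:
        return "barren"       # brownish colour
    return "other"

labels = [classify_rgb(40, 120, 50), classify_rgb(170, 130, 90), classify_rgb(128, 128, 128)]
```

Applying such a rule to the mean color of each mean-shift segment, rather than to individual pixels, makes the labeling less sensitive to noise.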

Figure 9. RGB Color Space


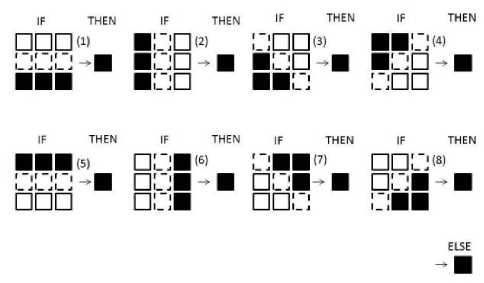

3) Fuzzy Edge Detection

We can use RGB values to identify vegetation or barren land because of their characteristic values, but man-made structures have no specific RGB values. We have therefore incorporated a hybrid model that identifies man-made structures by combining Fuzzy Inference Ruled by Else-Action (FIRE), designed by Russo and Ramponi [24], with the RGB model.

An input image has luminance values [25] from {0, 1, ..., 255}. For pixel $P_{ij}$, let

$$W_{ij} = \{P_{(i-e)(j-f)}, \ldots, P_{(i+e)(j+f)}\}$$

where $e, f \in \{0, \ldots, \lfloor K/2 \rfloor\}$ and $K$ is the window size, be the set of pixels in the neighborhood of $P_{ij}$ with the exception of $P_{ij}$ (hence, $|W_{ij}| = K \cdot K - 1$).

The inputs to the fuzzy edge extractor are the luminance differences between the pixels in $W_{ij}$ and $P_{ij}$,

$$X_{(ij,m)} = W_{(ij,m)} - P_{ij}, \qquad 1 \le m \le (K \cdot K - 1) \qquad (23)$$

where $W_{(ij,m)}$ is the $m$-th element in the window centered at $P_{ij}$.
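The inputs of equation (23) can be computed as in the following sketch. It assumes a grayscale image stored as a list of rows and a pixel far enough from the border for the whole window to fit; the function name `luminance_differences` is hypothetical and border handling is left out.

```python
def luminance_differences(image, i, j, K=3):
    """Return the K*K - 1 differences W_(ij,m) - P_ij for the window
    centred at pixel (i, j), scanning the window row by row."""
    half = K // 2
    p = image[i][j]
    diffs = []
    for di in range(-half, half + 1):
        for dj in range(-half, half + 1):
            if di == 0 and dj == 0:
                continue                     # the centre pixel is excluded
            diffs.append(image[i + di][j + dj] - p)
    return diffs

# A 3x3 patch with a bright region below and to the right of the centre.
patch = [
    [10, 10, 10],
    [10, 100, 200],
    [200, 200, 200],
]
x = luminance_differences(patch, 1, 1)
```

Each difference lies in {-255, ..., 255}, and large positive or negative values on opposite sides of the center pixel are what the fuzzy rules interpret as an edge.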

Figure 10. The eight rules in the fuzzy rule base.

All subsequent operations performed on $X_{(ij,m)}$ are independent of all other pixels; therefore, $X_{(ij,m)}$ will be notationally reduced to $X_m$ to simplify the indexing, as all discussion is about a specific $P_{ij}$ and its $W_{ij}$. The domain of $X_m$ is the set {-255, ..., 255}.

A simplified rule is: if a pixel lies upon an edge, then make it black; else make it white.

Eight unique cases (Figure 10), representing an edge at every 45 degrees of rotation, are each represented as a rule. Each rule has six antecedents, coinciding with six of the eight pixels in $X$. Fuzzy sets are used to represent the linguistic terms negative and positive. Similarly, the linguistic uncertainties of the consequent terms white and black are also modeled as fuzzy sets.

For each pixel, each of the eight rules, consisting of six antecedents, is fired using the values in $X$. For each rule, the average of the six confidences is used as the total antecedent firing $f_i$, where $1 \le i \le 8$. In this algorithm, it is assumed that a given pixel can belong to at most one of the eight cases. Therefore, the rule with the largest firing,

$$f = \max(f_1, \ldots, f_8)$$

is used as the antecedent firing strength to the fuzzy consequent set representing black.

In addition, we set a threshold so that only the edges of man-made structures are detected: an edge is reported only if $f$ is greater than the threshold.


V. Results And Discussions

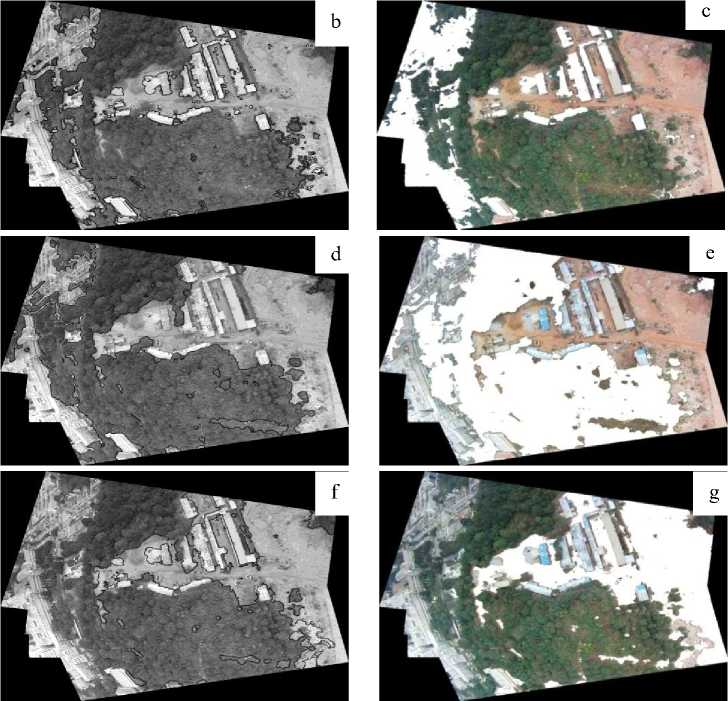

The correction of radial distortion is important for efficient processing. Figure 5 shows the radially distorted and the corrected images. The corrected images are then matched and stitched using SIFT followed by RANSAC. The quality of the stitching depends on the number of matched features, which was high in our experiments; RANSAC then reduces the number of outliers. The stitched image is segmented using Mean Shift Segmentation, followed by RGB modeling for vegetation and barren land extraction. For man-made structures, we employ a hybrid method that combines fuzzy edge detection with Mean Shift Segmentation. In Mean Shift Segmentation, the quality of the results depends on three parameters: the radius of the space variables (spatial radius), the radius of the color variables (color radius) and the max level, which is the number of scale pyramid levels used for segmentation. The best results were observed at spatial radius = 10, color radius = 20 and max level = 2; moving away from these values degrades the results. The segmented image obtained with these parameters is shown in Figure 8. For fuzzy edge detection, the threshold on the difference in luminance between two adjoining pixels is significant in determining the accuracy of the results. We set a threshold of 100 to achieve the best results. To check the correctness of the system, we calculated the user's accuracy and producer's accuracy of the segmented images by comparing the processed results with the ground truth data. The respective accuracies are given in Table 2 for the segmented images shown in Figure 11. The overall accuracy of the system is 94.02%, as shown in Table 2. The inability of the system to achieve 100% accuracy is attributed to differing camera calibrations and flight angles during the UAV flight.

For better results, the nadir point of the camera must coincide with the principal point of the lens. When the UAV is inclined at a large angle, this condition is hard to satisfy, so the quality of the acquired images drops and accuracy is lost. Moreover, the relatively lower accuracy in building extraction is due to the fact that, unlike vegetation cover and barren land, which have consistent RGB values, buildings have varying RGB values.

Figure 11. a) Stitched Image, b), d) and f) Contours drawn for Man-Made Structures, Vegetation and Barren Land respectively, c), e) and g) Area extracted (white regions) for Man-Made Structures, Vegetation and Barren Land respectively

Table 2. User Accuracy and Producer Accuracy of the proposed system by identifying and extracting different entities.

*data in pixels

| | Aerial Data | | | | | |
|---|---|---|---|---|---|---|
| | Man-Made Structures | Vegetation | Barren | Other | Total | Producer Accuracy |
| Man-Made Structures | 107797* | 5083 | 1547 | 2931 | 117358 | 91.85% |
| Vegetation | 5810 | 292625 | 589 | 0 | 299024 | 97.86% |
| Barren | 1615 | 428 | 142480 | 4388 | 148911 | 95.68% |
| Other | 3476 | 6494 | 2533 | 5468 | 17971 | 30.42% |
| Total | 118698 | 304630 | 147149 | 12787 | | |
| User Accuracy | 90.81% | 96.06% | 96.83% | 42.76% | | |
| Overall Accuracy | 94.02% | | | | | |
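The accuracy figures can be recomputed from the pixel counts of Table 2, as the following sketch shows. It follows the layout of the table (per-row accuracy in the "Producer Accuracy" column, per-column accuracy in the "User Accuracy" row); the function name `accuracies` is hypothetical, and recomputed percentages may differ from the printed ones in the last digit because of rounding.

```python
CLASSES = ["Man-Made Structures", "Vegetation", "Barren", "Other"]

# Pixel counts of Table 2 (rows and columns in the order of CLASSES).
MATRIX = [
    [107797, 5083, 1547, 2931],
    [5810, 292625, 589, 0],
    [1615, 428, 142480, 4388],
    [3476, 6494, 2533, 5468],
]

def accuracies(matrix):
    """Return (per-row accuracy, per-column accuracy, overall accuracy)."""
    n = len(matrix)
    row_totals = [sum(row) for row in matrix]
    col_totals = [sum(matrix[r][c] for r in range(n)) for c in range(n)]
    diagonal = [matrix[k][k] for k in range(n)]
    producer = [diagonal[k] / row_totals[k] for k in range(n)]   # last column of Table 2
    user = [diagonal[k] / col_totals[k] for k in range(n)]       # "User Accuracy" row
    overall = sum(diagonal) / sum(row_totals)
    return producer, user, overall

producer, user, overall = accuracies(MATRIX)
print(f"Overall accuracy: {overall * 100:.2f}%")  # Overall accuracy: 94.02%
```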


VI. Conclusions

The tasks of image stitching and image segmentation have been accomplished with the procedure described above. The method has proved to be an efficient and versatile approach to segmentation, as we have applied it to different sample images and compared the results. The system has shown high accuracy in the classification and extraction of vegetation and man-made structures. Considering that this is an instantaneous image processing system, the results are good. Although the methodology developed in this paper has been applied successfully, some limitations were detected. The main limitations are related to flight angle and camera calibration. They can be addressed by using a pre-defined path for the UAV, so that the inclination stays below the threshold beyond which processing of the acquired images is no longer efficient.

Acknowledgment

This work is supported by the Space Technology Cell, Indian Institute of Science, and the Aeronautics Research and Development Board (ARDB). We thank the reviewers for their thorough reading and for suggesting many important improvements. All the suggestions were very useful in revising our article.

References

- Zongjian L. UAV for mapping—low altitude photogrammetric survey.International Archives of Photogrammetry and Remote Sensing, Beijing, China, 2008.

- Grenzdörffer G. J., Engel A., Teichert B. The photogrammetric potential of low-cost UAVs in forestry and agriculture. The International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, 2008, 31(B3) , 1207-1214.

- Laliberte A. S., Rango A. Texture and scale in object-based analysis of subdecimeter resolution unmanned aerial vehicle (UAV) imagery. Geoscience and Remote Sensing, IEEE Transactions on, 2009, 47(3), 761-770.

- Herwitz, S. R., Johnson, L. F., Dunagan, S. E., Higgins R. G., Sullivan D. V., Zheng J., Brass J. A. Imaging from an unmanned aerial vehicle: agricultural surveillance and decision support. Computers and Electronics in Agriculture, 2004, 44(1), 49-61.

- Elfes A., Siqueira Bueno S., Bergerman M., Ramos Jr J. G. A semi-autonomous robotic airship for environmental monitoring missions. In Robotics and Automation, 1998. Proceedings. 1998 IEEE International Conference on Vol. 4, 3449-3455.

- Koo V. C., Chan Y. K., Vetharatnam G., Chua M. Y., Lim C. H., Lim C. S., Sew B. C. A new unmanned aerial vehicle synthetic aperture radar for environmental monitoring. Progress In Electromagnetics Research, 2012, 122, 245-268.

- Srinivasan S., Latchman H., Shea J., Wong T., McNair J. Airborne traffic surveillance systems: video surveillance of highway traffic. In Proceedings of the ACM 2nd international workshop on Video surveillance & sensor networks, ACM, 2004, October, 131-135.

- Lipowezky U. Grayscale aerial and space image colorization using texture classification. Pattern recognition letters, 2006, 27(4), 275-286.

- Solka J. L., Marchette D. J., Wallet B. C., Irwin V. L., Rogers, G. W. Identification of man-made regions in unmanned aerial vehicle imagery and videos. Pattern Analysis and Machine Intelligence, IEEE Transactions on, 1998, 20(8), 852-857.

- Huertas A., Nevatia R. Detecting changes in aerial views of man-made structures. IEEE, In Computer Vision, Sixth International Conference on 1998, January, 73-80.

- Maloof M. A., Langley P., Binford T. O., Nevatia R., Sage S. Improved rooftop detection in aerial images with machine learning. Machine Learning, 2003, 53(1-2), 157-191.

- Treash K., Amaratunga, K. Automatic road detection in grayscale aerial images. Journal of computing in civil engineering, 2000, 14(1), 60-69.

- Tournaire O., Paparoditis N. A geometric stochastic approach based on marked point processes for road mark detection from high resolution aerial images. ISPRS Journal of Photogrammetry and Remote Sensing, 2009, 64(6), 621-631.

- Suzuki A., Shio A., Arai H., Ohtsuka S. Dynamic shadow compensation of aerial images based on color and spatial analysis. IEEE, In Pattern Recognition, 2000, Proceedings. 15th International Conference on Vol. 1, 317-320.

- Deng Y., Manjunath B. S., Shin, H. Color image segmentation. IEEE, In Computer Vision and Pattern Recognition, 1999. Computer Society Conference on. Vol. 2.

- Peng J., Zhang D., Liu Y. An improved snake model for building detection from urban aerial images. Pattern Recognition Letters, 2005, 26(5), 587-595.

- Wei W., Xin, Y. Rapid, man-made object morphological segmentation for aerial images using a multi-scaled, geometric image analysis. Image and Vision Computing, 2010, 28(4), 626-633.

- Cameras R. V. White Paper A Flexible Architecture for Fisheye Correction in Automotive Rear-View Cameras.

- Lowe D. G. Distinctive image features from scale-invariant keypoints. International journal of computer vision, 2004, 60(2), 91-110.

- Koenderink J. J. The structure of images. Biological cybernetics, 1984, 50(5), 363-370.

- Lindeberg T. Scale-space theory: A basic tool for analyzing structures at different scales. Journal of applied statistics, 1994, 21(1-2), 225-270.

- Fischler M. A., Bolles R. C. Random sample consensus: a paradigm for model fitting with applications to image analysis and automated cartography. Communications of the ACM, 1981, 24(6), 381-395.

- Comaniciu D., Meer P. Mean shift analysis and applications. In Computer Vision, 1999. The Proceedings of the Seventh IEEE International Conference on Vol. 2, 1197-1203.

- Russo F., Ramponi G. Edge extraction by FIRE operators. In Fuzzy Systems, 1994. IEEE World Congress on Computational Intelligence., Proceedings of the Third IEEE Conference on 249-253.

- Luke R. H., Anderson D. T., Keller J. M., Coupland, S. Fuzzy Logic-Based Image Processing Using Graphics Processor Units. Proc. IFSA-EUSFLAT, 2009.