Age Dependent Face Recognition using Eigenface

Author: Hlaing Htake Khaung Tin

Journal: International Journal of Modern Education and Computer Science (IJMECS)

Article in issue: No. 9, vol. 5, 2013.

Face recognition is the most successful form of human surveillance. Face recognition technology, which is being used to improve human efficiency in recognizing faces, is one of the fastest-growing fields in the biometric industry. In the first stage of the proposed process, age is classified into eleven categories that indicate how old a person is. In the second stage, face recognition is performed based on the predicted age. Age prediction has considerable potential applications in human-computer interaction and multimedia communication. This paper proposes an eigenface-based age estimation algorithm that estimates the age of a face image from the database. Eigenfaces have proven to be a useful and robust cue for age prediction, age simulation, face recognition, localization and tracking. The scheme is based on an information-theory approach that decomposes face images into a small set of characteristic feature images called eigenfaces, which may be thought of as the principal components of the initial training set of face images. The eigenface approach used in this scheme has advantages over other face recognition methods in its speed, simplicity, learning capability and robustness to small changes in the face image.

Keywords: Age prediction, face recognition, gender prediction, eigenface, eigenvectors

Short URL: https://sciup.org/15014585

IDR: 15014585

Full text of the article: Age Dependent Face Recognition using Eigenface

Published Online October 2013 in MECS. DOI: 10.5815/ijmecs.2013.09.06

Biometric identification is the technique of automatically identifying or verifying an individual by a physical characteristic or personal trait. The term “automatically” means the biometric identification system must identify or verify a human characteristic or trait quickly with little or no intervention from the user. Biometric technology was developed for use in high-level security systems and law enforcement markets. The key element of biometric technology is its ability to identify a human being and enforce security [1].

Biometric characteristics and traits are divided into behavioural and physical categories. Behavioural biometrics encompasses behaviours such as signature and typing rhythm. Physical biometric systems use the eye, finger, hand, voice and face for identification.

Recognition of most facial variations, such as identity, expression and gender, has been extensively studied, whereas automatic age estimation has rarely been explored. In contrast to other facial variations, aging variations present several unique characteristics that make age estimation a challenging task. Since human faces provide a lot of information, many topics have drawn attention and have been studied intensively. The most prominent of these is face recognition; other research topics include predicting future faces and classifying gender and expression from facial images. However, very few studies have addressed age classification or age estimation. In this research, we try to show that a computer can estimate or classify human age from features extracted from facial images using eigenfaces.

Humans are not perfect at predicting a subject's age from facial information. The accuracy of age prediction by humans depends on various factors, such as the ethnic origin of the person shown in an image, the overall conditions under which the face is observed, and the observer's actual ability to perceive and analyse facial information. The aim of this experiment was to obtain an indication of human accuracy in age estimation, so that human performance can be compared with the performance achieved by machines.


II. Literature Review

Accurate age prediction is one of the most important issues in human communication and an essential part of human-computer interaction. With the advancement of technology, one concern for the whole world, and especially for developing countries, is the tremendous growth in population. With such rapid growth, it is becoming difficult to recognize every individual, because records (in digital or hard-copy format) would have to be maintained for each individual at different periods of his or her life. Sometimes a database holds the required information about a particular individual, but it is of no use because it is obsolete. As a person ages, his or her facial features change, and it becomes difficult to identify the person from images taken at two different ages.

Age prediction from human faces is a challenging problem with a host of applications in forensics, security, biometrics, electronic customer relationship management, entertainment and cosmetology. The main challenge is the huge heterogeneity of facial changes due to aging across different people. Determining the facial changes associated with age is hard, because they are related not only to gender and genetic properties but also to a number of external factors such as health, living conditions and weather exposure. Gender plays a role as well, since aging patterns and features differ between males and females. Age prediction from face images is an interesting yet challenging research topic that has emerged in recent years. Some earlier works aimed at simulating the aging effects on human faces, which is the inverse of age estimation.

Horng et al. [2] proposed an approach for classifying age groups based on facial features. The system is composed of three phases: location, feature extraction and age classification. Two back-propagation neural networks were constructed. The first uses geometric features to decide whether a facial image is a baby; if it is not, the second network uses wrinkle features to classify the image into one of three adult groups. The approach achieves a 99.1% verification rate for the first network and 78.49% for the second. Fukai et al. [3] proposed an age estimation system on AIBO, the autonomous entertainment robot produced by Sony. They used the HOIP face image database and images captured by AIBO, selected important features with Genetic Algorithms (GA) as the optimization approach, and then trained the neural network with the Self-Organizing Map (SOM) algorithm. The age estimation error of this approach is 7.46%, which is considered reasonable.

Geng et al. [4] proposed a subspace approach named AGES (Aging pattern Subspace) for automatic age estimation. The basic idea of AGES is to model the aging pattern, defined as a sequence of a particular individual's face images sorted in time order, by constructing a representative linear subspace. The proper aging pattern for a previously unseen face image is determined by the projection in the subspace that reconstructs the face image with minimum reconstruction error, and the position of the face image in that aging pattern then indicates its age. The Mean Absolute Error (MAE) of this approach is 6.77 years, which is better than the AIBO age estimation system; moreover, the AIBO system covers ages 15 to 64, whereas AGES covers ages 0 to 69. Geng et al. [5] extended their previous linear method, AGES. To match the nonlinear nature of human aging, they proposed a new algorithm named KAGES, based on a nonlinear subspace trained on the aging patterns (sequences of individual face images sorted in time order). In their experiments, the MAE is 6.18 years, better than all compared algorithms but only slightly better than AGES. Luu et al. [6] introduced an age estimation technique that combines Active Appearance Models (AAMs) and Support Vector Machines (SVMs) to improve accuracy substantially over the state-of-the-art techniques of the time. AAMs interpret characteristics of the facial input images as feature vectors, which are used to discriminate between childhood and adulthood prior to age estimation. Ages range from 0 to 69, and the MAE is 4.37 years, better than all previous works. It is worth noting that none of these approaches uses the age-range classification we plan to adopt in this work.


III. Age Prediction


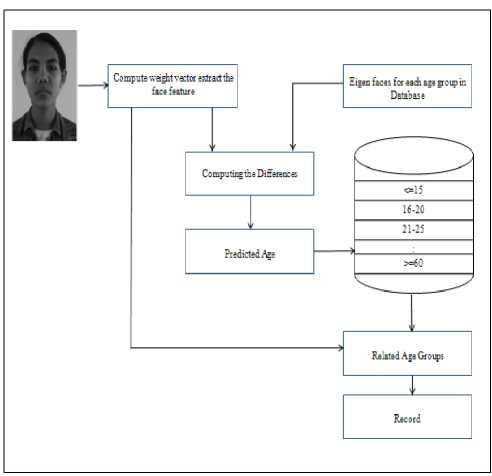

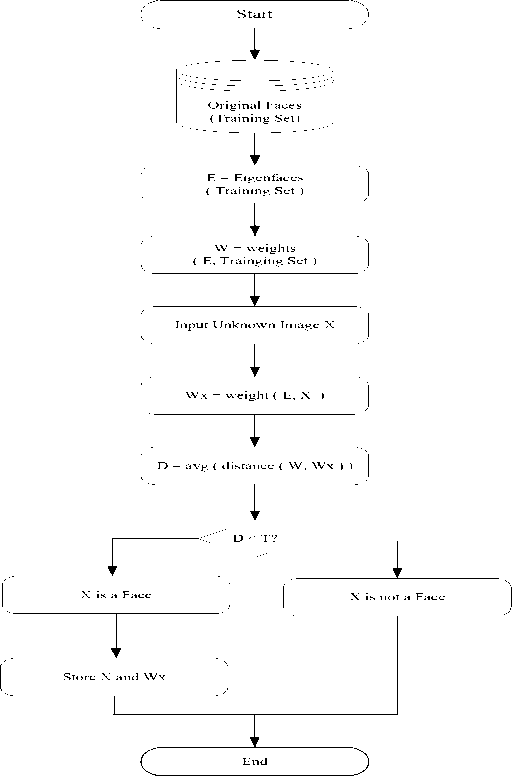

Figure 1. System Flow Diagram of the Age Prediction

First, the face region is extracted from a real image, and noise filtering and image adjustment are performed to enhance it. The face database contains eleven age groups. Within the database, the weight vectors of all persons in the same age group are averaged together to create a “face class”. When a new image comes in, its weight vector is created by projecting it onto the face space, and it is compared with each face class by Euclidean distance. A ‘hit’ occurs if the image matches its own face class. The age group that gives the minimum Euclidean distance is taken as the age of the input image, and the record of the corresponding person is then obtained by comparison within the estimated age group. An overview of the proposed system is illustrated in Fig. 1.
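The face-class matching described above can be sketched as a nearest-class-mean classifier. This is a minimal NumPy sketch, assuming each training face has already been projected to an eigenface weight vector; the function names, the toy two-dimensional weights and the age-group labels "20-25" and "40-45" are hypothetical illustrations, not the author's code or data.

```python
import numpy as np


def build_face_classes(weights, age_groups):
    """Average the weight vectors of all training faces in each age group.

    Each averaged vector is one "face class" (one per age group)."""
    classes = {}
    for group in sorted(set(age_groups)):
        members = [w for w, g in zip(weights, age_groups) if g == group]
        classes[group] = np.mean(members, axis=0)
    return classes


def predict_age_group(probe_weight, classes):
    """Return (age group, distance) of the face class nearest to the probe
    in Euclidean distance."""
    best_group, best_dist = None, np.inf
    for group, centre in classes.items():
        dist = float(np.linalg.norm(probe_weight - centre))
        if dist < best_dist:
            best_group, best_dist = group, dist
    return best_group, best_dist


# Toy example with hypothetical 2-D weight vectors and age-group labels.
weights = np.array([[0.0, 0.0], [0.2, 0.1], [5.0, 5.0], [5.2, 4.9]])
groups = ["20-25", "20-25", "40-45", "40-45"]
classes = build_face_classes(weights, groups)
print(predict_age_group(np.array([4.8, 5.1]), classes))
```

In the paper's setting there would be eleven classes, and the weight vectors would have as many components as eigenfaces kept.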


IV. Face Recognition

Since the beginning of the 1990s, the face recognition problem has become a major issue in computer vision, mainly because of the important real-world applications of face recognition. On the theoretical side, face recognition is a specific and hard case of object recognition. Faces are very specific objects whose most common appearance (frontal faces) looks roughly alike; subtle changes make the faces different.

[Figure 2 stages: Input Face Image; Preprocessing (noise reduction, scaling, cropping, etc.)]

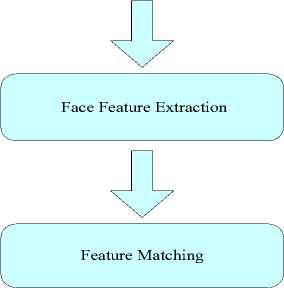

Figure 2. Steps in Face Recognition

In recent years, many researchers have proposed face recognition systems with large variations in preprocessing, feature extraction, inter-pattern representation and, of course, classification. Face recognition as developed over the past years can be organized into two types: frontal view and profile (side) view. In frontal view, both eyes and both lip corners are visible. In profile or side view, at least one eye or one corner of the mouth becomes self-occluded because of the head turn. A typical face recognition system includes the following steps:

(i) Extract the human face area from images, i.e., detect and locate the face,

(ii) Find a suitable representation of the face region, and

(iii) Classify the representations.

In general, the face recognition process takes an input face image and then preprocesses it, for example by noise removal, scaling and cropping. The general steps in face recognition are shown in Fig. 2.


A. Image Preprocessing

One of the major issues in solving the face recognition problem is the wide variety of appearances a face can take when viewed under different conditions, such as illumination, shadows, pose or expression. The aim of pre-processing in a face recognition system is to remove these variant factors from a face image so that the later stages of the process work more effectively. Many face detection algorithms are very sensitive to properties such as illumination, so pre-processing is a very important factor in the overall face detection system. The pre-processing step reduces irrelevant information, eliminates noise and analyses the input image.


1) Removal of Noise: There are many ways to add noise to an image, such as 'gaussian' for Gaussian white noise, 'localvar' for zero-mean Gaussian white noise with an intensity-dependent variance, 'poisson' for Poisson noise, 'salt & pepper' for "on and off" pixels, and 'speckle' for multiplicative noise. This system uses the 'salt & pepper' method to add noise to the training images, as shown in Fig. 3(a). Median filtering is a good method for removing this noise: it is a nonlinear operation often used in image processing to reduce salt-and-pepper noise, as shown in Fig. 3(b). Median filtering is more effective than convolution when the goal is to reduce noise and preserve edges at the same time.
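The salt-and-pepper corruption and its removal can be illustrated as follows. The noise-type names above match the options of MATLAB's `imnoise` function; the sketch below is instead a plain NumPy re-implementation under our own assumptions (noise density 0.05, a 3x3 window, edge-replicated borders), not the author's implementation. The function names are hypothetical.

```python
import numpy as np


def add_salt_and_pepper(image, density=0.05, seed=0):
    """Set roughly `density` of the pixels to 0 ("pepper") or 255 ("salt")."""
    rng = np.random.default_rng(seed)
    noisy = image.copy()
    mask = rng.random(image.shape)
    noisy[mask < density / 2] = 0
    noisy[(mask >= density / 2) & (mask < density)] = 255
    return noisy


def median_filter3x3(image):
    """3x3 median filter; borders are handled by edge replication."""
    padded = np.pad(image, 1, mode="edge")
    h, w = image.shape
    # Stack the 9 shifted views so each pixel sees its 3x3 neighbourhood,
    # then take the median along the stacking axis.
    stack = np.stack([padded[dy:dy + h, dx:dx + w]
                      for dy in range(3) for dx in range(3)])
    return np.median(stack, axis=0).astype(image.dtype)


face = np.full((64, 64), 128, dtype=np.uint8)   # hypothetical flat grey image
noisy = add_salt_and_pepper(face, density=0.05)
restored = median_filter3x3(noisy)
```

Because an isolated outlier is only one of the nine values in each window, the median discards it, while a mean (convolution) filter would smear it into its neighbours.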

Figure 3. (a) Face with ‘Salt and Pepper’ Noise (b) Removing ‘Salt and Pepper’ Noise by Median Filtering


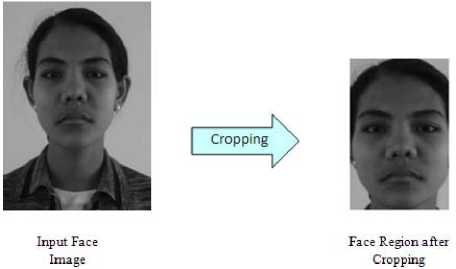

2) Cropping: Cropping creates a new image containing only the required region. After gray-level conversion and noise removal, the image is cropped to remove unnecessary regions and the background, so that only the face area remains. Fig. 4 illustrates the original face images and their cropped versions.

Figure 4. Cropping the Face Area from the Input Face Image


3) Resize: After cropping, the face images are resized to a uniform size. In this research, the training images are fixed at 200x300 pixels.
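Cropping and resizing reduce to array slicing and resampling. This is a minimal NumPy sketch, assuming the face bounding box is already known (the `top`, `left`, `height` and `width` arguments are hypothetical; the paper does not say how the box is found) and reading "200x300" as 200 pixels wide by 300 pixels high, which is our assumption. Nearest-neighbour interpolation is a swapped-in choice, since the paper does not name the interpolation method.

```python
import numpy as np


def crop(image, top, left, height, width):
    """Keep only the face region given by a known bounding box."""
    return image[top:top + height, left:left + width]


def resize_nearest(image, out_h, out_w):
    """Nearest-neighbour resize of a 2-D image to out_h rows x out_w columns."""
    in_h, in_w = image.shape
    rows = np.arange(out_h) * in_h // out_h   # source row for each output row
    cols = np.arange(out_w) * in_w // out_w   # source column for each output column
    return image[rows[:, None], cols[None, :]]


# Hypothetical 400x500 grey-level image and face box.
image = np.arange(400 * 500, dtype=np.int64).reshape(400, 500)
face = crop(image, top=50, left=60, height=150, width=100)
normalised = resize_nearest(face, out_h=300, out_w=200)   # 300 high, 200 wide
print(normalised.shape)
```

A fixed output size matters because every image must become a vector of the same length before the eigenface computation.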


B. Eigenface

In mathematical terms, the eigenface approach seeks the principal components of the distribution of faces, that is, the eigenvectors of the covariance matrix of the set of face images, treating an image as a point (or vector) in a very high-dimensional space. The eigenvectors are ordered, each one accounting for a different amount of the variation among the face images.

These eigenvectors can be thought of as a set of features that together characterize the variation among face images. Each image contributes some amount to each eigenvector, so that each eigenvector formed from an ensemble of face images appears as a sort of ghostly face image, referred to as an eigenface. Each eigenface deviates from uniform gray where some facial feature differs among the set of training faces; collectively, they form a map of the variations between faces.

Figure 5. High-level functioning Principle of the Eigenface-based Face Recognition Algorithm

The one-time initialization operations are:

• Acquire an initial set of face images (the training set).

• Calculate the eigenfaces from the training set, keeping only the M eigenfaces that correspond to the highest eigenvalues. These M images define the face space.

• Calculate the corresponding location or distribution in M-dimensional weight space for each known individual by projecting his or her face images (from the training set) onto the “face space”.

These operations can also be performed occasionally to update or recalculate the eigenfaces as new faces are encountered. Having initialized the system, the following steps are then used to recognize new face images:

• Calculate a set of weights based on the input image and the M eigenfaces by projecting the input image onto each of the eigenfaces.

• Classify the weight pattern as either a known person or not.

• (Optional) Update the eigenfaces and/or weight patterns.


C. Calculating Eigenface

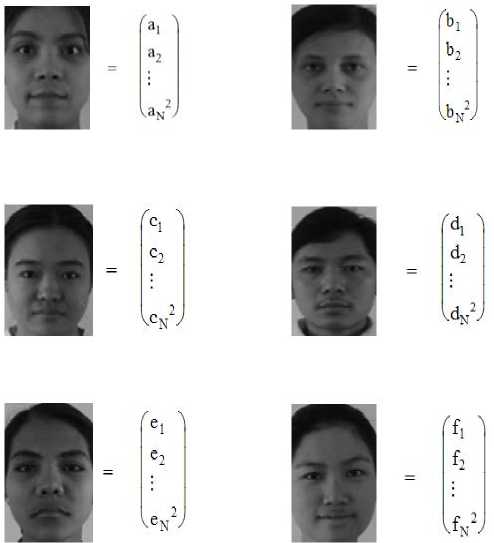

Let a face image I(x, y) be a two-dimensional N by N array of (8-bit) intensity values. Such an image may also be considered as a vector of dimension $N^2$; likewise, a typical image of size 200 by 300 becomes a vector of dimension 200 × 300 = 60,000, that is, a point in a 60,000-dimensional space. An ensemble of images maps to a collection of points in this huge space.

Images of faces, being similar in overall configuration, will not be randomly distributed in this huge image space and thus can be described by a relatively low-dimensional subspace. The main idea of principal component analysis (or the Karhunen-Loève expansion) is to find the vectors that best account for the distribution of face images within the entire image space. These vectors define the subspace of face images called “face space”. Each vector is of length $N^2$, describes an N by N image, and is a linear combination of the original face images. Because these vectors are the eigenvectors of the covariance matrix corresponding to the original face images, and because they are face-like in appearance, they are referred to as “eigenfaces.”

m

'a l + a 2 + ...+ a м

1 b l + b 2 + — + bM

M ;

V f I + f 2 +----- + fN )

where M is the number of image in database.

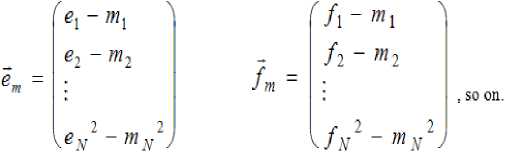

Each face differs vector Ti = фi — m

from the average by the where Ti is am , bm , — and so on.

- 1 M , m = M ^ *N ™

Figure 6. Examples of Some Training Faces Images

and then calculate the matrix A which is N 2 by M

- --

A = [ ambmcmdmemfm.....](3)

The covariance matrix which is N 2 by N 2

Cov = AAT(4)

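The matrix Cov, however, is $N^2$ by $N^2$, and determining its $N^2$ eigenvectors and eigenvalues is an intractable task for typical image sizes.

Instead, compute the M by M matrix $L = A^T A$ and find its eigenvectors $V$. If $Lv = \mu v$, then $AA^T(Av) = A(A^T A v) = \mu (Av)$, so each $Av$ is an eigenvector of Cov with the same eigenvalue. The eigenvectors of Cov are therefore linear combinations of the mean-subtracted training images, weighted by the eigenvectors of L:

$$ U = AV $$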
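The $L = A^T A$ argument can be checked numerically. The sketch below is a minimal NumPy version, assuming small random arrays as stand-in "faces" and k ≤ M - 1 (mean subtraction leaves at most M - 1 non-zero eigenvalues); it builds A, solves the M by M problem, maps the eigenvectors back with U = AV and normalises them to unit length. The function name `eigenfaces` is hypothetical.

```python
import numpy as np


def eigenfaces(images, k):
    """Top-k eigenfaces of equally sized 2-D images via the M x M matrix L = A^T A.

    Assumes k <= M - 1: after mean subtraction, A has at most M - 1
    non-zero singular values, so further columns of U would be zero."""
    # Each image becomes one column (length N^2) of the data matrix.
    X = np.stack([img.ravel().astype(float) for img in images], axis=1)
    m = X.mean(axis=1, keepdims=True)      # average face, shape (N^2, 1)
    A = X - m                              # columns are Gamma_i = Phi_i - m
    L = A.T @ A                            # M x M instead of N^2 x N^2
    mu, V = np.linalg.eigh(L)              # eigh returns ascending eigenvalues
    order = np.argsort(mu)[::-1][:k]       # indices of the k largest
    U = A @ V[:, order]                    # U = AV maps back to image space
    U /= np.linalg.norm(U, axis=0)         # normalise each eigenface
    return m, U, mu[order]


# Stand-in "faces": six random 8x8 arrays (N^2 = 64, M = 6).
rng = np.random.default_rng(1)
faces = [rng.random((8, 8)) for _ in range(6)]
m, U, mu = eigenfaces(faces, k=3)
A = np.stack([f.ravel() for f in faces], axis=1) - m
Cov = A @ A.T
print(np.allclose(Cov @ U, U * mu))   # checks Cov u = mu u for each kept column
```

For 200 by 300 images, Cov would be 60,000 by 60,000, whereas L is only M by M, which is what makes the computation tractable.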

The eigenvectors represent the variation among faces. For each training face, compute its projection onto the face space:

$$ \Omega_1 = U^T a_m,\quad \Omega_2 = U^T b_m,\quad \Omega_3 = U^T c_m,\quad \Omega_4 = U^T d_m,\quad \Omega_5 = U^T e_m,\quad \Omega_6 = U^T f_m,\ \ldots $$

The issue of choosing how many eigenfaces to keep for recognition involves a tradeoff between recognition accuracy and processing time. Each additional eigenface adds to the computation involved in classifying and locating a face. This is not vital for small databases, but as the size of the database increases it becomes relevant.
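Recognition with a truncated set of k eigenfaces can be sketched as below; each projection costs work proportional to k, which is the trade-off just described. This is a minimal NumPy sketch, not the author's code: the names `train` and `classify` are hypothetical, a single threshold θ is used for both distances as in the threshold rules of this subsection, and the branch for ε ≥ θ (reported as "not a face") is our assumption following the usual eigenface convention, since the paper only states the ε < θ cases.

```python
import numpy as np


def train(images, k):
    """Average face m and the top-k unit eigenfaces U (via L = A^T A)."""
    X = np.stack([img.ravel().astype(float) for img in images], axis=1)
    m = X.mean(axis=1)
    A = X - m[:, None]
    mu, V = np.linalg.eigh(A.T @ A)
    U = A @ V[:, np.argsort(mu)[::-1][:k]]
    return m, U / np.linalg.norm(U, axis=0)


def classify(image, m, U, class_weights, theta):
    """Return ("known face", i), ("new face", None) or ("not a face", None)."""
    r_m = image.ravel().astype(float) - m        # subtract the average face
    omega = U.T @ r_m                            # Omega = U^T r_m
    eps = np.linalg.norm(r_m - U @ omega)        # distance to its reconstruction
    eps_i = np.linalg.norm(class_weights - omega, axis=1)  # distance to each class
    if eps >= theta:                             # assumed: far from face space
        return "not a face", None
    i = int(np.argmin(eps_i))
    if eps_i[i] < theta:
        return "known face", i
    return "new face", None


rng = np.random.default_rng(0)
faces = [rng.random((6, 6)) for _ in range(5)]   # hypothetical stand-in faces
m, U = train(faces, k=4)
W = np.stack([U.T @ (f.ravel() - m) for f in faces])  # one class per face here
print(classify(faces[2], m, U, W, theta=1e-6))
```

The tiny θ only suits this noise-free toy data; with real images θ would have to be tuned on held-out faces.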

The input image is changed into the matrix form for recognizing a face as shown in Fig7.

results of the eigenface representation over a small number of face images (as few as one) of each individual.

Then reconstruct the face from the eigenface from the following equation,

----*-

^ = U Q

After that compute the distance between the face and its reconstruction

^ = II r m - ^ II 2 (10)

We can distinguish between a known face or not by using the thread hole value 9 ,

If ^ < 9 and f i > 9 , where i = 1,2,3,, ,M

Then it is a new face.

If ^ < 9 and { f i } < 9

Then it is a known face.

f r l1 r2

:

"

„ 2

V rN )

Figure 7. Face Image for Classify

And then subtract the average face from the above image matrix.

-

V. Conclusions

It is important to point out that, in the case of age prediction by humans, the whole face image (including the hairline) was presented to observers, whereas in the case of age prediction by machines, in effect only information from the internal facial area was utilized. Also we will work on improvement of the luminosity normalized method, and must aim at improvement in accuracy.

( r1- m l ^

r2 - m 2

'

V rN2 - mN2 )

-

VI. Result of Age Prediction

A new face image ( ф ) is transformed into its eigenface components r m . Compute its projection on to the face space.

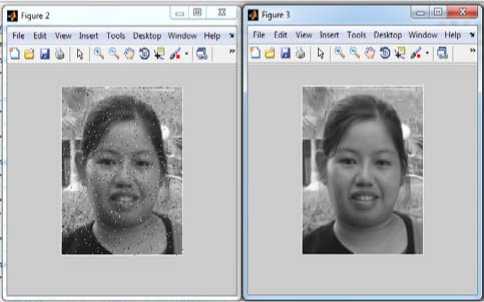

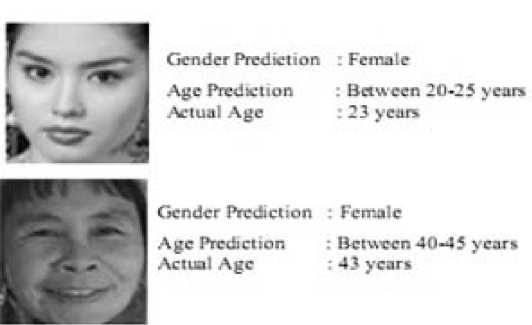

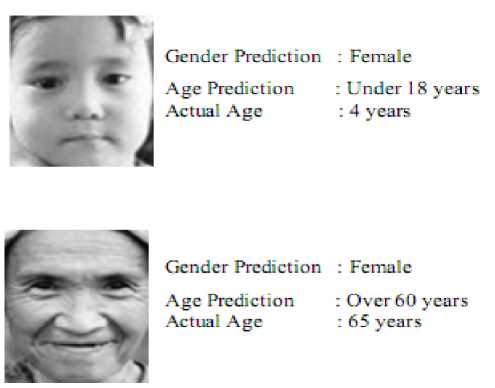

The following figures show the prediction results of different age groups by using testing image samples. The first prediction result is between 20-25 years and the actual age is 23 years. The second actual age is 43 year-old female and her prediction result is between 40-45 years. According to the following results, more accurate and precise prediction age can be known for any unseen faces.

n = U T ( r m )

The simplest method for determining which face class provides the best description of an input face image or compute the distance in the face space between the face and all known faces from the following equations,

f i2 = || O-Q i ||2 , for i = 1,2,., M (8)

Where Qt is a vector describing the itth face class.

The face classes Q i are calculated by averaging the

Figure 8. Some Results of Age Prediction

Mandalay, Myanmar in 2006. She is currently pursuing her Ph.D. at the University of Computer Studies, Yangon, Myanmar. Her research interests mainly include face aging modeling, face recognition, and perception of human faces.

Acknowledgment

The author is grateful to her family, who offered strong moral and physical support, care and kindness.

References

[1] P. Phillips, "The FERET database and evaluation procedure for face recognition algorithms," Image and Vision Computing, vol. 16, no. 5, pp. 295-306, 1998.

[2] W. Horng, C. Lee and C. Chen, "Classification of Age Groups Based on Facial Features," Journal of Science and Engineering, vol. 4, no. 3, pp. 183-192, 2001.

[3] H. Fukai, Y. Nishie, K. Abiko, Y. Mitsukura, M. Fukumi and M. Tanaka, "An Age Estimation System on the AIBO," International Conference on Control, Automation and Systems, pp. 2551-2554, 2008.

[4] X. Geng, Z. Zhou and K. Smith-Miles, "Automatic Age Estimation Based on Facial Aging Patterns," IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 29, no. 12, pp. 2234-2240, December 2007.

[5] X. Geng, K. Smith-Miles and Z. Zhou, "Facial Age Estimation by Nonlinear Aging Pattern Subspace," Proceedings of the 16th ACM International Conference on Multimedia, pp. 721-724, 2008.

[6] K. Luu, K. Ricanek Jr. and C. Y. Suen, "Age Estimation using Active Appearance Models and Support Vector Machine Regression," Proceedings of the IEEE International Conference on Biometrics: Theory, Applications and Systems, pp. 314-318, 2009.