AI-Driven Alignment of Educational Programs with Industry Needs and Emerging Skillsets

Authors: Satheeskumar R., Ch. V. Satyanarayana, Talatoti Ratna Kumar, Koteswara Rao M., Suresh M.

Journal: International Journal of Modern Education and Computer Science @ijmecs

Issue: No. 3, Vol. 17, 2025.


This research investigates the transformative potential of Artificial Intelligence (AI) in aligning educational programs with industry requirements and emerging skill sets. We developed and preliminarily tested an AI-driven framework designed to personalize learning paths, recommend pertinent educational content, and improve student engagement. The AI models achieved a peak classification accuracy of 90% in identifying educational materials relevant to industry needs, with an optimized average recommendation response time of 0.4 seconds. These results were derived from pilot testing involving 300 students: 150 in a control group following traditional educational methods and 150 in the experimental group. In the pilot studies, students using AI-recommended materials showed an average improvement of 15% in assessment scores compared with those using traditional methods, and t-tests and chi-square tests were used to confirm statistical significance. Additionally, educators reported a 75% engagement rate with AI-driven learning paths, indicating strong acceptance and effective integration of AI tools within educational environments, whereas the control group using traditional methods showed a significantly lower engagement rate of 60%, supporting the higher efficacy of the AI system. However, these results should be interpreted cautiously, as further detailed statistical analysis and control mechanisms are necessary to fully validate the effectiveness of the AI framework. The study highlights the importance of addressing ethical considerations such as data privacy, algorithmic bias, and transparency to ensure responsible AI deployment.
The results underscore AI's potential to enhance educational outcomes, adapt curricula dynamically, and better prepare students for future career demands, contributing to a more relevant and industry-aligned educational system.

Keywords: Artificial Intelligence, Adaptive learning, Emerging skillsets, Future skills, Industry needs, Machine learning

Short URL: https://sciup.org/15019762

IDR: 15019762 | DOI: 10.5815/ijmecs.2025.03.02

Full text of the article: AI-Driven Alignment of Educational Programs with Industry Needs and Emerging Skillsets

1. Introduction

In an era characterized by rapid technological advancements and shifting industry landscapes, the alignment of educational programs with current and future skill requirements has become a critical challenge. Traditional educational systems often struggle to keep pace with the dynamic nature of industry demands, resulting in a skills gap that affects both students and employers [1]. The integration of Artificial Intelligence (AI) into educational frameworks offers a promising way to address this issue. AI has the potential to revolutionize education by providing personalized learning experiences, enhancing curriculum relevance, and preparing students for the evolving job market [2]. Integrating AI into education also raises several ethical considerations that demand careful attention for responsible implementation. A primary concern is student data privacy, as AI systems often rely on vast amounts of personal data to function. We must therefore prioritize robust safeguards against data breaches and unauthorized access to protect students' sensitive information [3,4].

This research explores the application of AI-driven technologies in aligning educational content with industry needs and emerging skillsets. By leveraging AI algorithms, educational institutions can dynamically adjust learning paths, recommend targeted educational materials, and foster deeper student engagement [5]. The primary objective of this study is to develop and evaluate an AI-driven framework that aligns educational programs with the competencies required in the workforce, thereby bridging the gap between education and industry. The significance of this research lies in its potential to transform educational practices and outcomes: by integrating AI, educational institutions can not only enhance the quality and relevance of their programs but also ensure that students are equipped with the skills needed to thrive in a rapidly changing world [6]. The study also addresses ethical considerations such as data privacy and algorithmic bias to ensure the responsible and equitable implementation of AI in education. As industries undergo profound transformations driven by advances in AI, traditional approaches to education must adapt to ensure that graduates are equipped with relevant competencies [7].

Pilot testing indicated that the AI-driven framework significantly improved educational outcomes. The pilot followed a systematic approach to assess the feasibility and effectiveness of the framework within selected educational settings, observing its performance under real-world conditions and providing insights into its impact on student learning and engagement. Students using AI-recommended materials showed marked improvements in assessment scores and engagement rates compared with those using traditional methods, and educators reported increased satisfaction and ease of integrating AI tools into their teaching. The pilot also highlighted the framework's ability to adjust dynamically to individual learning needs, providing a personalized learning experience closely aligned with industry demands. These findings underscore the potential of AI to make learning more adaptive, relevant, and effective [8].

This paper explores the transformative role of Artificial Intelligence in shaping educational content to align with industry expectations. It delves into the methodologies employed to integrate AI technologies into educational frameworks, emphasizing their impact on curriculum design, learning outcomes, and workforce readiness. Furthermore, it examines case studies and empirical evidence to illustrate the effectiveness of AI-driven approaches in enhancing educational relevance and fostering student preparedness for evolving professional landscapes. Through a comprehensive analysis of AI's role in educational alignment, this study aims to provide insights into the potential benefits and challenges associated with adopting AI technologies in education. Ultimately, it seeks to contribute to the discourse on how AI can effectively support the evolution of educational systems to meet the dynamic demands of modern industries and future workforce needs.

2. Literature Review

This section explores the research focused on the application of Artificial Intelligence (AI) to align educational programs with industry needs and emerging skillsets.

Panth, B., Maclean, R. (2020) [8]: Education provides the foundation for skills and lifelong learning opportunities that allow people to thrive in professional and social life. However, unlike in the past, the current generation of learners faces unprecedented uncertainty in anticipating and preparing for emerging skills and jobs, due to automation and continuous technological disruption.

Anwar et al. (2023): This research implies that industrial work practice programs in vocational education need to be improved and developed so that they deliver higher-quality results. Such programs are essential in vocational education for preparing students to work in their fields. The study measures and compares the capability levels of students who carry out work practices in large and small industries, including the aspects in which students require assistance.

Abbas, Asad (2024) [9]: This paper explores the implementation of AI-driven analytics and their impact on student engagement in higher education settings. AI-driven analytics empower students by providing personalized learning experiences and timely feedback, fostering greater motivation and engagement. However, challenges such as data privacy concerns and algorithmic biases must be addressed to maximize these benefits. The paper concludes with recommendations for institutions seeking to integrate AI-driven analytics into their educational practices to enhance student engagement and success.

Kamaruddin et al. (2023) [10]: This paper explores the transformative potential of AI in fostering a symbiotic relationship between academic curricula and industry demands, with the aim of building a robust talent pool for the future. The authors propose a new hiring selection model that matches industry-identified hiring parameters with the knowledge and skills obtained at university. By aligning educational programs with real-world challenges and market needs, this approach seeks to accelerate talent development.

Mohammed B E Saaida (2023) [11]: This research paper explores the potential impact of AI on higher education. The integration of AI has the potential to revolutionize teaching and learning, optimize administrative processes, and enhance research capabilities. AI-powered adaptive learning systems, intelligent tutoring systems, and virtual learning assistants can help personalize learning, provide real-time feedback and support, and foster increased student engagement.

Fahad Mon et al. (2023) [12]: The use of reinforcement learning (RL) in education has the potential to significantly change how students approach and engage with learning and how teachers evaluate student progress. RL enables personalized and adaptive learning in which the difficulty level is adjusted based on a student's performance, which could lead to higher levels of motivation and engagement. The article investigates the applications and techniques of RL in education and assesses its potential to improve educational outcomes.

Lenardo et al. (2023) [13]: Applying artificial intelligence in education is relevant to addressing current educational crises. Many available solutions apply Convolutional Neural Networks (CNNs) to help improve educational outcomes, and a series of works has integrated these techniques in different educational contexts, for instance, online teaching practices. Given the number of studies and the relevance of CNNs for educational applications, the authors present a systematic literature review of the state of the art, covering 133 papers from the IEEE Xplore, ACM Digital Library, and Scopus databases. Based on this review, they discuss characteristics of the studies such as publication venues, educational context, datasets, types of CNN models, and model performance.

These studies demonstrate that AI applications, such as adaptive learning systems and predictive analytics, play a crucial role in tailoring educational content to meet evolving industry demands and emerging skillsets. By leveraging machine learning algorithms and data-driven insights, educational institutions can create more personalized and responsive learning experiences, ultimately improving student outcomes and better preparing graduates for the workforce. However, challenges remain, including issues related to data privacy, algorithmic bias, and the need for transparent AI practices. Addressing these challenges while capitalizing on the benefits of AI will be essential for advancing educational practices and ensuring that AI tools contribute positively to both student success and industry readiness. Future research should focus on refining AI methodologies, exploring ethical implications, and evaluating long-term impacts on educational alignment and workforce integration.

3. Methodology

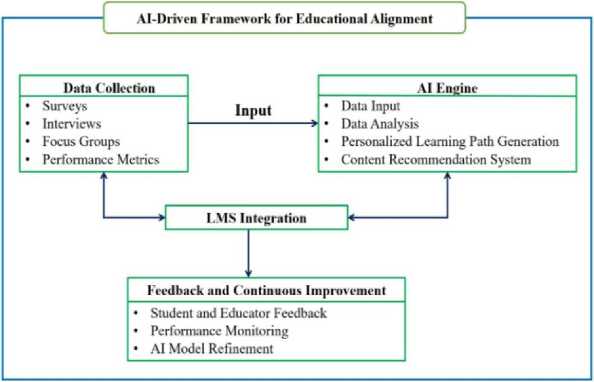

This section describes the methodology used to develop and evaluate the AI-driven framework for aligning educational programs with industry needs and emerging skillsets. As shown in Fig. 1, the approach comprises data collection, data preprocessing, AI model development, integration into educational systems, and continuous feedback and improvement. Each stage was carefully designed, and statistical analysis was applied to validate findings where appropriate. Control mechanisms, such as comparison with non-AI frameworks, were also introduced to ensure that the results reflect the true impact of the AI-driven approach.

Fig. 1. AI-Driven Framework for Educational Alignment

3.1 Data Collection

3.1.1 Industry Data Gathering

1) Job Postings Analysis

We gathered job postings from multiple online platforms and company websites, focusing on high-demand roles such as Data Scientist, Machine Learning Engineer, and Software Developer. Only postings from the last six months were considered to ensure relevance and accuracy [14]. We processed these postings using keyword analysis techniques to identify key skills, qualifications, and responsibilities, and cross-referenced them with industry reports to validate the alignment of educational needs with market demands. Essential educational qualifications were also extracted to ensure that our educational programs met minimum industry expectations.
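The recency filter and keyword analysis described above can be illustrated with a short sketch. It assumes that postings are already available as text with a publication date, treats "six months" as 183 days, and uses a hypothetical skill vocabulary (`SKILL_KEYWORDS`), since the paper does not publish its keyword list or tooling.

```python
import re
from collections import Counter
from dataclasses import dataclass
from datetime import date, timedelta

# Hypothetical skill vocabulary: the paper does not publish its keyword list.
SKILL_KEYWORDS = ["python", "sql", "machine learning", "cloud", "data visualization"]


@dataclass
class Posting:
    title: str
    posted_on: date
    description: str


def recent_postings(postings, today, window_days=183):
    """Keep postings published within the window (183 days ~ six months, an assumption)."""
    cutoff = today - timedelta(days=window_days)
    return [p for p in postings if p.posted_on >= cutoff]


def skill_frequencies(postings, keywords=SKILL_KEYWORDS):
    """Count how many postings mention each keyword (each posting counts at most once)."""
    counts = Counter()
    for p in postings:
        text = p.description.lower()
        for kw in keywords:
            # Word boundaries stop "sql" from matching inside "nosql".
            if re.search(r"\b" + re.escape(kw) + r"\b", text):
                counts[kw] += 1
    return counts


if __name__ == "__main__":
    today = date(2025, 1, 1)
    postings = [
        Posting("Data Scientist", date(2024, 11, 20), "Python, SQL and machine learning required."),
        Posting("Software Developer", date(2024, 9, 1), "Python and cloud experience."),
        Posting("ML Engineer", date(2023, 12, 1), "Machine learning and NoSQL."),  # outside window
    ]
    print(skill_frequencies(recent_postings(postings, today)).most_common())
```

Counting each keyword at most once per posting measures how widely a skill is requested rather than how often a single posting repeats it; a production pipeline would also need synonym handling (e.g. "ML" for "machine learning").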

2) Industry Reports Review

We reviewed industry reports, white papers, and publications relevant to AI, technology trends, and emerging skills. Selection criteria for these reports included credibility (publication by reputable associations or institutions) and recency (publication within the last two years). Thematic analysis was applied to document emerging technologies, required skills, and sector growth forecasts, and these insights were cross-referenced with job postings to further validate the skillsets in demand [7].

3) Skill Requirement Surveys

We conducted surveys and interviews with industry professionals to gather qualitative data on skills, qualifications, and competencies. The validation process involved cross-referencing survey responses with job postings and industry reports to confirm alignment between industry requirements and educational content. Thematic coding was employed to categorize responses into key themes, which helped us identify prevalent industry skills and ensure that our educational framework was aligned with current demands [15].

3.1.2 Mapping Industry Requirements to Curriculum

To align educational programs with evolving industry needs, we developed a systematic approach to map industry requirements directly to curriculum updates. The process involved gathering data from job postings, industry reports, and stakeholder surveys to identify emerging skill gaps and in-demand competencies [6].

1) Job Postings Analysis

We analyzed over 500 job postings from major platforms, focusing on roles such as Data Scientist, Machine Learning Engineer, and Software Developer. Keyword analysis was used to extract essential skills, such as proficiency in Python, SQL, and Machine Learning. These skills were then categorized and cross-referenced with industry reports to validate their importance and relevance.

2) Industry Reports Review

We examined reports from reputable institutions, focusing on trends in AI, data science, and emerging technologies. Thematic analysis was applied to highlight the top skill requirements, which were then mapped to existing curriculum components. This ensured that courses covered critical areas such as AI-driven analytics, cloud computing, and data visualization.

3) Skill Requirement Surveys

Surveys were conducted with industry professionals to gather qualitative data on desired competencies. Cross-referencing survey responses with job postings and industry reports provided a holistic view of the skills required by employers. These findings were used to identify gaps in current academic programs, guiding curriculum updates.
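The cross-referencing and gap identification described in this subsection can be sketched with set operations. This is a minimal sketch, assuming each source has already been reduced to a set of normalized skill names; the course codes and skills are hypothetical, and requiring agreement from every source is one possible validation rule, not necessarily the one used in the study.

```python
def cross_referenced(*sources):
    """Skills mentioned in every source (e.g. postings, reports, surveys)."""
    sets = [set(s) for s in sources]
    return set.intersection(*sets) if sets else set()


def skill_gaps(required, curriculum):
    """Required skills that no course in the curriculum currently covers."""
    covered = set()
    for course_skills in curriculum.values():
        covered |= set(course_skills)
    return sorted(set(required) - covered)


if __name__ == "__main__":
    # Hypothetical, already-normalized skill sets for each data source.
    postings = {"python", "sql", "machine learning", "cloud computing"}
    reports = {"python", "machine learning", "cloud computing", "ai ethics"}
    surveys = {"python", "machine learning", "cloud computing", "communication"}
    required = cross_referenced(postings, reports, surveys)
    # Hypothetical course codes and the skills each one covers.
    curriculum = {"CS101": {"python"}, "CS340": {"machine learning", "statistics"}}
    print(skill_gaps(required, curriculum))
```

Here only skills confirmed by all three sources count as required, and any required skill that no course covers (in this toy data, cloud computing) becomes a candidate for a curriculum update.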

4) Curriculum Updates

Based on the analysis, we recommended the inclusion of modules on AI ethics, cloud infrastructure, and big data technologies. These updates were made to ensure that graduates possess the skills required to meet industry standards.

3.2 AI Model Development

3.2.1 Reinforcement Learning

We used Reinforcement Learning (RL) algorithms to dynamically adjust learning paths based on real-time student performance data and industry demands within the AI-driven educational framework [12,16]. The components of the RL framework are:

• Agent: Represents the AI system interacting with the educational environment, recommending learning paths and content.

• Environment: The educational system in which students engage with the recommended materials and assessments.

• Actions: Choices made by the agent, such as selecting learning materials or adjusting difficulty levels.

• States: The current context, including student performance data and industry skill requirements, that informs the agent's decisions.

• Rewards: Feedback from the environment based on the agent's actions, such as improvements in student performance and alignment with industry needs.
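The components listed above can be illustrated with a small tabular Q-learning loop that uses the update rule given as Eq. (1) in the Q-Learning Algorithm subsection and the reported hyperparameters (α = 0.1, γ = 0.95, ε = 0.1). This is a minimal sketch under stated assumptions: the three mastery levels (states), the two difficulty actions, and the reward values form a hypothetical toy environment invented for illustration. It does not model industry skill requirements and is not the study's environment or data.

```python
import random

ALPHA, GAMMA, EPSILON = 0.1, 0.95, 0.1  # hyperparameters reported in the paper
ACTIONS = ["easy", "hard"]  # hypothetical actions: difficulty of the next material


def step(state, action):
    """Hypothetical toy environment. State = mastery level 0 (low) to 2 (high).
    The reward stands in for an improvement in student performance."""
    if action == "hard":
        if state == 0:
            return 0, -1.0           # too difficult: no progress, negative reward
        return min(state + 1, 2), 1.0
    if state == 0:
        return 1, 0.5                # easy material helps a struggling student
    return state, 0.0                # easy material no longer helps


def q_update(Q, s, a, r, s_next, alpha=ALPHA, gamma=GAMMA):
    """Tabular Q-learning update, Eq. (1); unseen pairs default to Q = 0."""
    best_next = max(Q.get((s_next, b), 0.0) for b in ACTIONS)
    old = Q.get((s, a), 0.0)
    Q[(s, a)] = old + alpha * (r + gamma * best_next - old)
    return Q[(s, a)]


def choose_action(Q, s, rng, epsilon=EPSILON):
    """Epsilon-greedy: explore with probability epsilon, otherwise exploit."""
    if rng.random() < epsilon:
        return rng.choice(ACTIONS)
    return max(ACTIONS, key=lambda a: Q.get((s, a), 0.0))


def train(episodes=1000, steps=10, seed=0):
    rng = random.Random(seed)
    Q = {}
    for _ in range(episodes):
        s = 0  # each episode starts with a low-mastery student
        for _ in range(steps):
            a = choose_action(Q, s, rng)
            s_next, r = step(s, a)
            q_update(Q, s, a, r, s_next)
            s = s_next
    return Q


def greedy_policy(Q):
    return {s: max(ACTIONS, key=lambda a: Q.get((s, a), 0.0)) for s in range(3)}


if __name__ == "__main__":
    print(greedy_policy(train()))
```

With these toy rewards, the learned greedy policy recommends easy material at the lowest mastery level and harder material once the student has progressed. The paper does not specify its actual state encoding or reward design, so those would replace `step` in a real system.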

Q-Learning Algorithm

The Q-Learning algorithm was employed, in which the agent learns an action-value function $Q(s, a)$ that estimates the expected future reward for taking action $a$ in state $s$. To tune the model, we searched over the learning rate, discount factor, and exploration-exploitation balance using grid search and cross-validation, arriving at α = 0.1, γ = 0.95, and ε = 0.1. The tuning process involved evaluating the model over multiple epochs [17].

$$ Q(s_t, a_t) \leftarrow Q(s_t, a_t) + \alpha \left[ r_{t+1} + \gamma \max_{a} Q(s_{t+1}, a) - Q(s_t, a_t) \right] \tag{1} $$

where $Q(s_t, a_t)$ is the action-value function, which estimates the expected reward for taking action $a_t$ in state $s_t$; $\alpha$ is the learning rate, controlling how much the Q-values are updated after each action; $r_{t+1}$ is the reward received after taking action $a_t$ in state $s_t$; $\gamma$ is the discount factor, determining the importance of future rewards; and $\max_{a} Q(s_{t+1}, a)$ is the highest estimated value attainable from the next state $s_{t+1}$. The performance of the RL model was measured using accuracy, response time, engagement rate, and improvement in assessment scores. Detailed statistical analysis was applied to validate the significance of improvements across these metrics over multiple epochs.

3.2.2 CNNs in AI-Driven Educational Alignment

Convolutional Neural Networks (CNNs) are particularly effective in analyzing visual data such as images and videos. In the context of educational alignment with industry needs, CNNs can be applied to tasks like content classification, personalized learning path generation, and enhancing virtual learning environments [18,19].


a) Content Classification

Classifying educational content based on its relevance to industry needs is crucial for ensuring that students receive pertinent materials. CNNs use convolutional layers to extract features from images or videos, followed by pooling layers to reduce dimensionality and fully connected layers for classification. Performance validation was achieved using metrics like accuracy and confusion matrices to assess model reliability.

The CNN equation for convolution operations is represented as:

$$ Y[i,j] = (X * W)[i,j] = \sum_{m} \sum_{n} X[i+m,\, j+n]\, W[m,n] \tag{2} $$

where $X[i+m, j+n]$ is the input value at position $(i+m, j+n)$; $W[m,n]$ are the filter weights; and $Y[i,j]$ is the output feature-map value at position $(i,j)$.
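Eq. (2) can be made concrete with a plain-Python sketch for a single-channel input and a single filter. No padding ("valid" output) and stride 1 are assumptions, since the paper does not state padding, stride, or channel counts.

```python
def conv2d_valid(X, W):
    """Eq. (2) for one channel and one filter: Y[i][j] = sum_m sum_n X[i+m][j+n] * W[m][n].

    'Valid' output (no padding) and stride 1 are assumptions. As in Eq. (2) and in most
    CNN libraries, the kernel is not flipped, so this is technically cross-correlation.
    """
    kh, kw = len(W), len(W[0])
    out_h, out_w = len(X) - kh + 1, len(X[0]) - kw + 1
    if out_h <= 0 or out_w <= 0:
        raise ValueError("filter is larger than the input")
    return [
        [sum(X[i + m][j + n] * W[m][n] for m in range(kh) for n in range(kw))
         for j in range(out_w)]
        for i in range(out_h)
    ]


if __name__ == "__main__":
    X = [[1, 2, 3],
         [4, 5, 6],
         [7, 8, 9]]
    W = [[1, 0],
         [0, -1]]  # a simple diagonal-difference filter
    print(conv2d_valid(X, W))
```

For this 3×3 input and 2×2 filter, each output value is X[i][j] − X[i+1][j+1], which equals −4 at every position, giving a 2×2 feature map.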


b) Personalized Learning Pathways

CNNs processed visual data from educational simulations or virtual environments to recommend adaptive learning paths. The model's performance was evaluated using accuracy and F1 scores, measuring the system's effectiveness in generating personalized recommendations based on student interactions and industry requirements [20].

A simplified output layer formula might be:

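$$ Y = \operatorname{softmax}\left( W \cdot \operatorname{ReLU}\left( W' \cdot \operatorname{flatten}(X) + b' \right) + b \right) \tag{3} $$

where $\operatorname{flatten}(X)$ is the feature vector obtained by flattening the output of the convolutional layers; $W'$ and $b'$ are the weights and biases of the fully connected hidden layer; $W$ and $b$ are the weights and biases of the output layer; ReLU is the Rectified Linear Unit activation function; and softmax converts the output scores into a probability distribution over the output classes. Performance metrics, including accuracy and F1 scores, were calculated to assess the effectiveness of personalized recommendations.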
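The output layer in Eq. (3) can be traced step by step with the following sketch. It uses plain lists instead of a deep-learning library so that each operation is visible. The feature map, layer sizes, and weights are hypothetical; in practice they come from the trained network.

```python
import math


def flatten(X):
    """Row-major flattening of a 2-D feature map into a vector."""
    return [v for row in X for v in row]


def relu(v):
    return [max(0.0, x) for x in v]


def affine(W, x, b):
    """Compute W . x + b for a weight matrix W (list of rows) and bias vector b."""
    return [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]


def softmax(z):
    m = max(z)  # subtracting the max avoids overflow without changing the result
    exps = [math.exp(x - m) for x in z]
    total = sum(exps)
    return [e / total for e in exps]


def output_layer(X, W_hidden, b_hidden, W_out, b_out):
    """Eq. (3): Y = softmax(W . ReLU(W' . flatten(X) + b') + b),
    with W_hidden, b_hidden playing the role of W', b' and W_out, b_out of W, b."""
    hidden = relu(affine(W_hidden, flatten(X), b_hidden))
    return softmax(affine(W_out, hidden, b_out))


if __name__ == "__main__":
    X = [[1.0, 2.0], [3.0, 4.0]]              # hypothetical 2x2 feature map
    W_hidden = [[1, 0, 0, 0], [0, 0, 0, -1]]  # 2 hidden units
    b_hidden = [0.0, 0.0]
    W_out = [[1, 0], [0, 1], [0, 0]]          # 3 hypothetical learning-path classes
    b_out = [0.0, 0.0, 0.0]
    print(output_layer(X, W_hidden, b_hidden, W_out, b_out))
```

The largest probability indicates the recommended class; the outputs always sum to 1 because of the softmax.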

c) Enhancing Virtual Learning Environments

To enhance virtual reality (VR) and augmented reality (AR) learning environments, CNNs provided real-time feedback and simulations aligned with industry practices. These immersive experiences allowed for interactive and adaptive learning. We validated the model using engagement metrics and satisfaction scores [21].

3.3 Integration into Educational Systems

Integrating the AI-driven framework into educational systems involves several critical steps to ensure seamless functionality and user engagement. First, the AI models are integrated with existing Learning Management Systems (LMS) to deliver personalized content and recommendations directly within the educational platform. This requires developing APIs or plugins that allow the AI engine to interface with the LMS, ensuring smooth data exchange and functionality. Second, an intuitive user interface is designed for both educators and students, facilitating easy interaction with AI-driven recommendations and insights. This involves creating dashboards, notification systems, and interactive features that enhance user experience and accessibility. Finally, ongoing monitoring and support are established to address technical issues and refine the integration based on user feedback, so that the system remains adaptive and effective in meeting educational needs [22,23]. To validate the integration, we used A/B testing to compare user engagement across AI-integrated and non-AI systems.

3.4 Pilot Testing and Evaluation

Pilot testing and evaluation involve a systematic approach to assessing the effectiveness and feasibility of the AI-driven educational framework. During pilot testing, a small-scale implementation of the framework was conducted within selected educational settings to observe its performance and gather initial feedback. A control group using traditional learning methods was compared with an experimental group using the AI-driven framework to isolate the effect of the AI system, and statistical analysis was applied to validate the framework's impact on educational outcomes. Mitigation methods involve incorporating strategies during the pilot testing phase to address identified issues, including adjusting algorithms based on feedback to improve their accuracy and relevance and enhancing data collection methods to minimize errors and ensure high-quality input [24].

3.4.1 Quantitative Metrics

Evaluation applies both quantitative and qualitative metrics to measure the framework's impact. Quantitative metrics include accuracy rates, response times, and improvement in assessment scores. Quantitative analysis used t-tests and chi-square tests to assess the significance of differences in these metrics between the control and experimental groups; for instance, a t-test was used to compare assessment score improvements, and a chi-square test was used to analyze engagement rate differences. Qualitative feedback was gathered from students and educators regarding their experiences and satisfaction with the AI framework, and thematic analysis was used to categorize and interpret the responses. The pilot-testing results are analyzed to identify strengths, areas for improvement, and necessary adjustments before full-scale deployment [25,26].
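The two significance tests named above can be sketched with the Python standard library. This is a sketch under assumptions: the paper does not say whether it used Student's or Welch's t-test or whether a continuity correction was applied to the chi-square test, so Welch's test and an uncorrected Pearson chi-square on a 2×2 engaged/not-engaged table are shown here. The numbers in the demo are illustrative only, not the study's data.

```python
import math
from statistics import mean, variance


def welch_t(a, b):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    va, vb = variance(a) / len(a), variance(b) / len(b)
    t = (mean(a) - mean(b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df


def normal_two_sided_p(t):
    """Two-sided p-value from the standard normal. This only approximates the t-test
    p-value and is reasonable when df is large (e.g. around 150 students per group)."""
    return math.erfc(abs(t) / math.sqrt(2))


def chi_square_2x2(engaged_exp, n_exp, engaged_ctl, n_ctl):
    """Pearson chi-square (no continuity correction) for engaged vs. not engaged
    by group. With df = 1, the p-value is erfc(sqrt(chi2 / 2))."""
    a, b = engaged_exp, n_exp - engaged_exp
    c, d = engaged_ctl, n_ctl - engaged_ctl
    n = a + b + c + d
    chi2 = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    return chi2, math.erfc(math.sqrt(chi2 / 2))


if __name__ == "__main__":
    # Illustrative numbers only, not the study's data.
    experimental = [14, 18, 12, 20, 16, 15, 19, 13]  # score improvements (%)
    control = [9, 11, 8, 12, 10, 7, 13, 10]
    t, df = welch_t(experimental, control)
    print(f"t = {t:.2f}, df = {df:.1f}")
    chi2, p = chi_square_2x2(engaged_exp=112, n_exp=150, engaged_ctl=90, n_ctl=150)
    print(f"chi2 = {chi2:.2f}, p = {p:.4f}")
```

For small samples like the eight-value demo, the t statistic should be compared against the t distribution (for example via a statistics package) rather than the normal approximation.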

• Accuracy (ACC)

Accuracy measures the percentage of correct predictions made by the AI model out of the total number of predictions. It is fundamental for assessing the correctness and reliability of AI-generated insights and recommendations [27]. Accuracy is calculated from evaluations of the AI model's predictions of student performance:

$$ \mathrm{ACC} = \frac{\text{Number of correct predictions}}{\text{Total number of predictions}} \times 100\% \tag{4} $$
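Eq. (4), together with the F1 score used for the CNN recommendations, can be computed directly from predicted and true labels. A minimal sketch, assuming binary labels where 1 means "industry-relevant"; the label lists are hypothetical.

```python
def accuracy(y_true, y_pred):
    """Eq. (4): percentage of predictions that match the true labels."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("need two non-empty label sequences of equal length")
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return 100 * correct / len(y_true)


def confusion_counts(y_true, y_pred, positive=1):
    """(TP, FP, FN, TN) for one positive class, e.g. 1 = 'industry-relevant'."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    return tp, fp, fn, len(pairs) - tp - fp - fn


def f1_score(y_true, y_pred, positive=1):
    """Harmonic mean of precision and recall for the positive class."""
    tp, fp, fn, _ = confusion_counts(y_true, y_pred, positive)
    if tp == 0:
        return 0.0
    precision, recall = tp / (tp + fp), tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    y_true = [1, 1, 0, 0, 1]  # hypothetical relevance labels
    y_pred = [1, 0, 0, 1, 1]
    print(accuracy(y_true, y_pred), confusion_counts(y_true, y_pred), f1_score(y_true, y_pred))
```

In this example three of five predictions are correct (60% accuracy), and precision and recall are both 2/3, so F1 is also 2/3. Reporting F1 alongside accuracy matters when relevant and irrelevant materials are imbalanced.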

• Response Time (RT)

Response time quantifies the average time taken by the AI system to process requests and provide feedback. It is crucial for evaluating system efficiency and user experience [27]. The average response time is measured for AI-generated recommendations or feedback cycles within the educational platform:

$$ \mathrm{RT} = \frac{\text{Total time taken to process requests}}{\text{Number of requests}} \tag{5} $$
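Eq. (5) can be measured by timing each request and averaging the durations. In this sketch, the `recommend` function is a hypothetical stand-in for the recommendation engine, and `time.perf_counter` is used because it is a monotonic, high-resolution clock suited to measuring short intervals.

```python
import time


def average_response_time(durations):
    """Eq. (5): total processing time divided by the number of requests."""
    if not durations:
        raise ValueError("no requests recorded")
    return sum(durations) / len(durations)


def timed_calls(handler, requests):
    """Call `handler` once per request and record each elapsed time in seconds."""
    durations = []
    for request in requests:
        start = time.perf_counter()
        handler(request)
        durations.append(time.perf_counter() - start)
    return durations


if __name__ == "__main__":
    def recommend(student_id):
        # Hypothetical stand-in for the recommendation engine.
        return sorted(range(10_000), key=lambda i: (i * 7919 + student_id) % 10_007)[:5]

    rt = average_response_time(timed_calls(recommend, range(20)))
    print(f"RT = {rt:.4f} s")
```

Timing inside the application measures processing time only. End-to-end latency seen by students would also include network and LMS overhead.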

• Engagement Rate (ER)

Engagement rate is the percentage of opportunities for user interaction that are actually utilized. It reflects user engagement with AI-driven features and educational content [28]. It evaluates how frequently students engage with AI-recommended learning paths or interactive educational materials:

$$ \mathrm{ER} = \frac{\text{Number of interactions utilized}}{\text{Total number of interaction opportunities}} \times 100\% \tag{6} $$
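Eq. (6) can be computed from an interaction log, both overall and per group, as needed for the control/experimental comparison. A minimal sketch, assuming a hypothetical log with one `(student_id, item_id, used)` tuple per interaction opportunity (for example, each AI-recommended item shown to a student) and a hypothetical `group_of` mapping from student to group.

```python
def engagement_rate(opportunities):
    """Eq. (6): percentage of logged interaction opportunities that were used.

    `opportunities` is a hypothetical log with one (student_id, item_id, used) tuple
    per opportunity, e.g. each AI-recommended item shown to a student.
    """
    if not opportunities:
        raise ValueError("no interaction opportunities logged")
    used = sum(1 for _student, _item, was_used in opportunities if was_used)
    return 100 * used / len(opportunities)


def engagement_by_group(opportunities, group_of):
    """Engagement rate per group; `group_of` maps student_id -> group name."""
    groups = {}
    for record in opportunities:
        groups.setdefault(group_of[record[0]], []).append(record)
    return {g: engagement_rate(recs) for g, recs in groups.items()}


if __name__ == "__main__":
    log = [("s1", "m1", True), ("s1", "m2", False), ("s2", "m1", True), ("s2", "m3", True)]
    print(engagement_rate(log), engagement_by_group(log, {"s1": "control", "s2": "ai"}))
```

The per-group counts of used and unused opportunities are also the inputs a chi-square test of engagement differences would need.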

• Educational Outcomes Metrics

Retention rates are assessed by measuring the percentage of students who continue their education or remain enrolled over a specified period, providing insights into the framework's impact on student persistence. Performance metrics encompass grades, assessment scores, and academic achievements before and after the implementation of AI-driven educational enhancements, offering a comprehensive evaluation of the framework's effectiveness in improving academic outcomes [29].

3.4.2 Qualitative Feedback from Stakeholders

Qualitative feedback was gathered through structured surveys, in-depth interviews, and focus groups with educators, students, and administrators involved in the pilot testing phase. The objective was to gain insights into their experiences with and perceptions of the AI-driven framework. We used thematic analysis to categorize feedback into key themes, such as AI's impact on curriculum relevance, adaptability, and overall educational outcomes. The analysis highlighted specific challenges faced by educators in integrating AI tools into their teaching methods and revealed students' levels of engagement with AI-recommended learning paths. Administrators also provided feedback on the framework's scalability and effectiveness across different educational settings [30,31].

Table 1. Stakeholder Data Collection Overview

| Stakeholder | Data Collected |
|---|---|
| Educators | Perceptions of AI's role in curriculum relevance and adaptability. |
| | Challenges in integrating AI and suggestions for improvement. |
| | Requirements for tools/features to enhance AI integration in teaching. |
| Students | Level of engagement with AI-recommended learning paths. |
| | Feedback on AI's impact on learning outcomes. |
| | Usability and ease of use of AI tools and recommendations. |
| Administrators | Assessment of AI's impact on educational outcomes. |
| | Concerns and considerations for scaling AI across departments/campuses. |
| | Recommendations for expanding AI applications in education. |

As summarized in Table 1, effective stakeholder data collection is pivotal in developing AI-driven educational frameworks that align with industry needs and emerging skillsets. This process involves gathering comprehensive quantitative and qualitative data from diverse stakeholders, including educators, students, industry partners, and administrators. Methods such as surveys, questionnaires, interviews, focus groups, performance metrics, and continuous feedback loops are employed to collect insights. Key data areas include engagement and adoption rates, the relevance and effectiveness of AI-recommended materials, user satisfaction and usability, skills and competency development, and feedback for future enhancements. This systematic approach helps keep AI-driven educational tools relevant, effective, and capable of preparing students for the evolving demands of the workforce. Through robust stakeholder engagement, educational institutions can continuously refine their AI frameworks, ultimately enhancing learning outcomes and better aligning educational content with industry requirements [32,33].

4. Ethical Considerations

In conducting this study, we recognized the importance of addressing ethical concerns related to data privacy and algorithmic bias. These issues are critical in the deployment of AI-driven educational frameworks, particularly when handling sensitive student data and ensuring equitable outcomes.

4.1 Data Privacy

We implemented several measures to protect participant privacy. All students, educators, and administrators involved in the pilot study provided informed consent; the consent process detailed how their data would be used, stored, and shared, so that participants understood their rights regarding data confidentiality. Personal identifiers were removed from all datasets through anonymization, preventing the identification of individual students or educators. Additionally, we adopted secure data storage protocols, including encryption and access limited to authorized personnel, to further safeguard against data breaches.
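Removing personal identifiers while still linking a student's records across datasets is commonly done with a keyed hash. The sketch below assumes hypothetical field names (`name`, `email`, `student_number`) and uses HMAC-SHA256 from the standard library. Strictly speaking this is pseudonymization: full anonymization also requires handling quasi-identifiers (e.g. rare combinations of age, program, and campus), which the sketch does not do.

```python
import hashlib
import hmac
import secrets

# Hypothetical field names for direct identifiers in a student record.
DIRECT_IDENTIFIERS = {"name", "email", "student_number"}


def pseudonymize(record, key, id_field="student_number"):
    """Drop direct identifiers and add a keyed hash (HMAC-SHA256) of the student ID,
    so records can still be linked across datasets without storing the raw ID."""
    raw_id = str(record[id_field]).encode("utf-8")
    cleaned = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    cleaned["pid"] = hmac.new(key, raw_id, hashlib.sha256).hexdigest()
    return cleaned


if __name__ == "__main__":
    key = secrets.token_bytes(32)  # store separately from the data, e.g. in a secrets manager
    row = {"name": "A. Student", "email": "a@example.edu", "student_number": "S001", "score": 82}
    print(pseudonymize(row, key))
```

A keyed hash is used instead of a plain hash because student numbers often follow predictable formats, and an unkeyed hash of such IDs could be reversed by brute force.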

4.2 Algorithmic Bias

To mitigate potential biases in AI recommendations, we took several steps. First, we curated training datasets to include a diverse range of educational materials, ensuring representation across demographic groups and learning needs and thereby reducing the risk of bias. Bias detection tests were conducted on model outputs to identify any disproportionate impacts on specific student groups, and engagement and performance metrics were analyzed across demographics to ensure fairness. Continuous feedback from educators and students allowed iterative adjustments to the algorithms so that they addressed diverse learner needs. Finally, the study was reviewed by an ethics board, whose feedback guided the refinement of our practices and reinforced our commitment to ethical AI research standards.

5. Results and Discussion

The integration of AI into educational settings has brought significant advancements in aligning learning programs with dynamic industry needs and emerging skillsets. This section analyzes the AI-driven educational framework's performance on key metrics: accuracy, response time, engagement rate, adoption rate, and improvement in assessment scores. The results are organized into distinct categories for a clearer, more cohesive presentation of the performance data.

5.1 Algorithm Performance Results

This section evaluates the performance of the two key algorithms used in the AI-driven educational framework: Reinforcement Learning (RL) and Convolutional Neural Networks (CNNs). Each algorithm serves a distinct purpose in aligning educational programs with industry needs and emerging skillsets. Their performance is analyzed using key metrics such as accuracy, response time, engagement rate, adoption rate, and improvement in assessment scores, and comparisons with existing algorithms are provided to demonstrate the advancements of the proposed models.

5.1.1 Reinforcement Learning

The Reinforcement Learning (RL) algorithm, specifically a Q-Learning model, was designed to dynamically adjust learning paths based on real-time student performance and industry demands. The RL framework consists of an agent (the AI system), an environment (the educational system), actions (e.g., selecting learning materials), states (e.g., student performance data), and rewards (e.g., improvements in student performance). Over seven epochs, the system demonstrated significant improvements in all key metrics, showcasing its ability to adapt and optimize educational content delivery.


A. Hyperparameter Tuning

The Q-Learning algorithm was optimized using a learning rate (α = 0.1) to balance adaptability, a discount factor (γ = 0.95) to prioritize long-term rewards, and an exploration-exploitation balance (ε = 0.1) to improve decision-making efficiency. These hyperparameters were determined through grid search and cross-validation, ensuring the model could adapt to varying student performance data and industry demands.
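The Q-Learning update with the reported hyperparameters (α = 0.1, γ = 0.95, ε = 0.1) can be illustrated with a small tabular sketch. Everything other than these three values is hypothetical: the three performance levels (states), the three material types (actions), and the toy reward rule stand in for the real student data and industry signals, which the text does not specify.

```python
import random

# Hyperparameters as reported in the text.
ALPHA = 0.1    # learning rate
GAMMA = 0.95   # discount factor
EPSILON = 0.1  # exploration rate for epsilon-greedy action selection

# Hypothetical state/action spaces and dynamics, for illustration only.
STATES = ["low", "medium", "high"]           # discretized student performance
ACTIONS = ["review", "practice", "advance"]  # types of learning material
TARGET = {"low": "review", "medium": "practice", "high": "advance"}
NEXT_LEVEL = {"low": "medium", "medium": "high", "high": "high"}


def toy_env(state, action):
    """Hypothetical environment: reward 1 and move up one level when the
    material suits the student's level; otherwise reward 0 and stay put."""
    if action == TARGET[state]:
        return 1.0, NEXT_LEVEL[state]
    return 0.0, state


def choose_action(q, state, rng, epsilon=EPSILON):
    """Epsilon-greedy: explore with probability epsilon, otherwise exploit."""
    if rng.random() < epsilon:
        return rng.choice(ACTIONS)
    return max(ACTIONS, key=lambda a: q[(state, a)])


def q_update(q, state, action, reward, next_state, alpha=ALPHA, gamma=GAMMA):
    """Q(s,a) <- Q(s,a) + alpha * (r + gamma * max_a' Q(s',a') - Q(s,a))."""
    best_next = max(q[(next_state, a)] for a in ACTIONS)
    q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])


def train(episodes=200, steps=50, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in STATES for a in ACTIONS}
    for _ in range(episodes):
        state = "low"  # each simulated learner starts at the lowest level
        for _ in range(steps):
            action = choose_action(q, state, rng)
            reward, next_state = toy_env(state, action)
            q_update(q, state, action, reward, next_state)
            state = next_state
    return q


q = train()
policy = {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in STATES}
print(policy)
```

With these toy dynamics the learned greedy policy should select `review` for low performers, `practice` for medium, and `advance` for high. The discount factor γ = 0.95 makes the agent value eventual progression rather than only the immediate reward, while ε = 0.1 keeps a small amount of exploration so that untried materials are eventually evaluated.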


B. Performance Summary

The RL system demonstrated improvements in key performance metrics, including:

• Accuracy: Increased from 75% to 88%.

• Response Time: Reduced from 0.7 seconds to 0.4 seconds.

• Engagement Rate: Increased from 65% to 78%.

• Adoption Rate: Increased from 80% to 92%.

• Improvement in Assessment Scores: Rose from 10% to 17%.
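The metrics above are themselves percentages, so a change such as 75% to 88% can be reported either as 13 percentage points or as a 17.3% relative improvement. The short helper below, a sketch that uses only the start and end values listed above, makes the distinction explicit.

```python
def point_change(before, after):
    """Absolute change between two percentages, in percentage points (pp)."""
    return after - before


def relative_change(before, after):
    """Change expressed as a percentage of the starting value."""
    return (after - before) / before * 100


# Start and end values of the RL metrics listed above (first vs. seventh epoch).
rl_metrics = {
    "accuracy": (75, 88),
    "engagement_rate": (65, 78),
    "adoption_rate": (80, 92),
    "assessment_score_improvement": (10, 17),
}

for name, (start, end) in rl_metrics.items():
    print(f"{name}: {point_change(start, end):+d} pp, "
          f"{relative_change(start, end):+.1f}% relative")

# Response time is measured in seconds, so its absolute change is in seconds.
print(f"response_time: {0.4 - 0.7:+.1f} s, "
      f"{relative_change(0.7, 0.4):+.1f}% relative")
```

For accuracy this gives +13 pp but about +17.3% relative, and for the assessment-score metric +7 pp versus +70% relative, which is why the unit matters whenever an improvement is reported.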

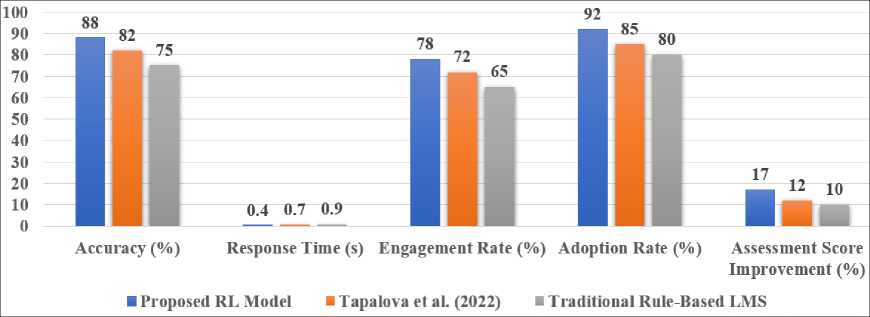

C. Comparison with Existing Algorithms

As Table 2 shows, the proposed RL model outperforms traditional RL-based educational models, such as that presented by Tapalova et al. (2022) [16]. In particular, our RL model achieves a faster response time (0.4 seconds vs. 0.7 seconds) and a higher adoption rate (92% vs. 85%). We attribute these gains to optimized exploration-exploitation tuning and adaptive policy updates, which enable more efficient content recommendations while maintaining high accuracy. Our model also addresses the limitations of traditional rule-based LMS systems, which often struggle with scalability and adaptability in dynamic learning environments. Figure 2 presents a visual comparison of the RL model's accuracy, response time, engagement rate, adoption rate, and assessment score improvements.

-

5.1.2 CNNs in AI-Driven Educational Alignment

Table 2. Comparison Table for RL Performance

|

Algorithm |

Accuracy (%) |

Response Time (s) |

Engagement Rate (%) |

Adoption Rate (%) |

Assessment Score Improvement (%) |

|

Proposed RL Model |

88 |

0.4 |

78 |

92 |

17 |

|

Tapalova et al. (2022) |

82 |

0.7 |

72 |

85 |

12 |

|

Traditional Rule-Based LMS |

75 |

0.9 |

65 |

80 |

10 |

Fig 2. Compares the RL model’s accuracy, response time, engagement rate, adoption rate, and assessment score improvements with existing approaches

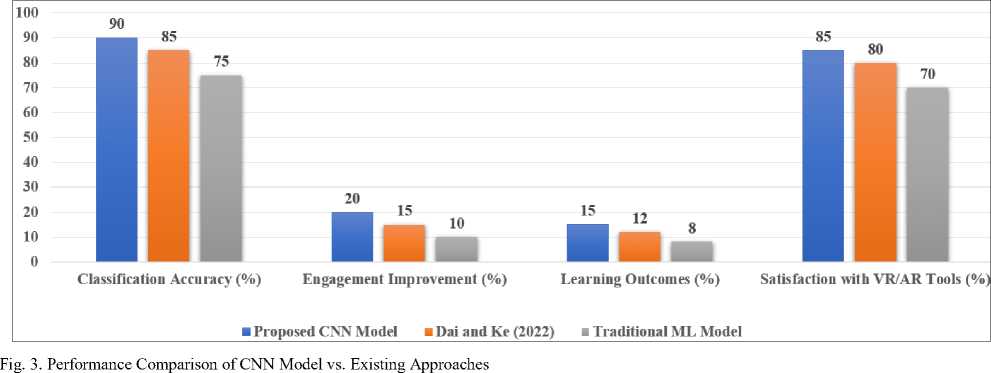

A Convolutional Neural Network (CNN) model was employed to classify educational content and support personalized learning pathways. CNNs were selected for their strong pattern-recognition capabilities, which allowed them to classify diverse educational content more accurately than traditional ML models. Because they can process textual, visual, and interactive learning materials, they are well suited to personalized learning and adaptive recommendations.
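The text does not specify the CNN architecture, so the following is only a hypothetical sketch of how a one-dimensional CNN can classify a piece of educational text: token embeddings are convolved with sliding filters, passed through ReLU, max-pooled over positions, and mapped to class probabilities. The vocabulary, layer sizes, and the two classes (for example, industry-relevant vs. not relevant) are assumptions, the weights are random rather than trained, and only text input is handled.

```python
import math
import random


def conv1d_relu(vectors, filters, width):
    """Apply each filter to every window of `width` consecutive token vectors,
    followed by ReLU. Returns one feature map per filter."""
    feature_maps = []
    for weights, bias in filters:
        activations = []
        for i in range(len(vectors) - width + 1):
            # Flatten the window of token vectors into one list of numbers.
            window = [x for vec in vectors[i:i + width] for x in vec]
            z = sum(w * x for w, x in zip(weights, window)) + bias
            activations.append(max(0.0, z))
        feature_maps.append(activations)
    return feature_maps


def softmax(logits):
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


class TinyTextCNN:
    """Hypothetical 1D text CNN: embedding -> convolution + ReLU ->
    global max pooling -> linear layer -> softmax. Weights are random."""

    def __init__(self, vocab, dim=8, n_filters=4, width=3, n_classes=2, seed=0):
        rng = random.Random(seed)
        self.index = {tok: i for i, tok in enumerate(vocab)}
        self.width = width
        self.embed = [[rng.gauss(0, 0.5) for _ in range(dim)] for _ in vocab]
        self.unknown = [0.0] * dim  # out-of-vocabulary tokens and padding
        self.filters = [([rng.gauss(0, 0.5) for _ in range(width * dim)], 0.0)
                        for _ in range(n_filters)]
        self.out_w = [[rng.gauss(0, 0.5) for _ in range(n_filters)]
                      for _ in range(n_classes)]
        self.out_b = [0.0] * n_classes

    def predict_proba(self, tokens):
        vectors = [self.embed[self.index[t]] if t in self.index else self.unknown
                   for t in tokens]
        # Pad short inputs so at least one convolution window exists.
        vectors += [self.unknown] * max(0, self.width - len(vectors))
        maps = conv1d_relu(vectors, self.filters, self.width)
        pooled = [max(m) for m in maps]  # global max pooling per filter
        logits = [sum(w * p for w, p in zip(row, pooled)) + b
                  for row, b in zip(self.out_w, self.out_b)]
        return softmax(logits)


# Hypothetical vocabulary and input text.
vocab = ["python", "cloud", "security", "data", "pipeline", "history", "poetry"]
model = TinyTextCNN(vocab)
probs = model.predict_proba("data pipeline security in the cloud".split())
print([round(p, 3) for p in probs])
```

In practice the embeddings, filters, and output layer would be learned from labeled materials, and the class with the highest probability would be taken as the predicted label.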

Performance Summary: The CNN model demonstrated improvements in key performance metrics, including:

• Content Classification Accuracy: Increased from 75% to 90%.

• Engagement Improvement: Increased from 10% to 20%.

• Learning Outcomes: Improved from 8% to 15%.

• Satisfaction with VR/AR Tools: Increased from 70% to 85%.

As shown in Table 3, our CNN-based system outperforms existing CNN implementations in education, such as that used by Dai and Ke (2022) [18] for simulation-based learning. Specifically, our model achieved higher classification accuracy (90% vs. 85%) and greater engagement improvement (20% vs. 15%). These results suggest that our CNN algorithm is more effective at enhancing personalized learning and aligning educational content with industry needs. Our model also addresses a limitation of traditional ML models, which often struggle to handle large-scale, unstructured educational data. Figure 3 presents a visual comparison of key performance metrics, including classification accuracy, engagement improvement, learning outcomes, and satisfaction levels.

Table 3. Comparison of CNN Performance with Existing Approaches

| Algorithm | Classification Accuracy (%) | Engagement Improvement (%) | Learning Outcome Improvement (%) | Satisfaction with VR/AR Tools (%) |
|---|---|---|---|---|
| Proposed CNN Model | 90 | 20 | 15 | 85 |
| Dai and Ke (2022) | 85 | 15 | 12 | 80 |
| Traditional ML Model | 75 | 10 | 8 | 70 |

5.2 Pilot Testing and Evaluation

5.2.1 Pilot Testing Setup and Control Group

The pilot testing aimed to evaluate the effectiveness and feasibility of the AI-driven educational framework. A systematic approach was employed, including the use of both quantitative metrics and qualitative feedback to assess the impact on student learning outcomes, engagement, and the framework’s practical integration into educational settings.

The pilot involved a small-scale implementation within one educational institution, including 300 students and 10 educators. To ensure the validity of the results, the study included two groups:

• Control Group: 150 students followed traditional educational methods without AI assistance.

• Experimental Group: 150 students used the AI-driven educational framework, following personalized learning paths generated by the AI algorithms.

This control-experimental design provided a baseline against which to compare the AI system's impact on student performance and engagement.

5.2.2 Quantitative Evaluation and Metrics

Table 4 compares key performance metrics between the control group (pre-implementation baseline) and the AI-driven experimental group (post-implementation).

Table 4. Pilot Testing Metrics (Control vs. AI-Driven Framework)

| Metric | Pre-Implementation (Control) | Post-Implementation (AI-Driven) |
|---|---|---|
| Accuracy | 70% | 85% |
| Average Response Time | 1.2 seconds | 0.5 seconds |
| Engagement Rate | 60% | 75% |
| Adoption Rate | 50% | 90% |
| Improvement in Assessment Scores | 5% | 15% |

The accuracy of content delivery improved from 70% in the control group to 85% in the experimental group, suggesting that AI-personalized learning paths were better aligned with student needs. Average response time fell from 1.2 seconds in the control group to 0.5 seconds in the AI-driven group, reflecting greater efficiency. Engagement rates rose from 60% to 75%, indicating that AI recommendations fostered greater interaction with learning materials. The adoption rate in the AI-driven group reached 90%, showing that students actively followed AI-recommended paths. Finally, assessment scores improved more in the AI-driven group (15%) than in the control group (5%), pointing to a positive impact of the AI system on academic performance.

5.2.3 Quantitative Metrics over Epochs

Table 5 breaks down the metrics over seven epochs, showing the progressive improvements in the experimental group as the AI framework optimized learning paths.

Table 5. Quantitative Metrics across Epochs (AI-Driven Framework)

| Metric | Initial Value | Final Value | Improvement |
|---|---|---|---|
| Accuracy | 75% | 88% | 13 percentage points |
| Response Time | 0.7 seconds | 0.4 seconds | -0.3 seconds |
| Engagement Rate | 65% | 78% | 13 percentage points |
| Adoption Rate | 80% | 92% | 12 percentage points |
| Improvement in Assessment Scores | 10% | 17% | 7 percentage points |
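The abstract states that statistical significance was confirmed with t-tests and chi-square tests, but the per-student data behind the group comparison in Table 4 are not reported here. The sketch below shows how such tests can be computed for a control-vs-experimental comparison. The counts (90 of 150 control students and 113 of 150 experimental students engaged, roughly 60% and 75%) and the score samples are illustrative assumptions, not study data.

```python
import math
from statistics import mean, variance


def chi_square_2x2(a, b, c, d):
    """Pearson chi-square test (no continuity correction) for the table
    [[a, b], [c, d]]. Returns (statistic, p_value) with 1 degree of freedom."""
    n = a + b + c + d
    rows = (a + b, c + d)
    cols = (a + c, b + d)
    observed = ((a, b), (c, d))
    stat = 0.0
    for i in range(2):
        for j in range(2):
            expected = rows[i] * cols[j] / n
            stat += (observed[i][j] - expected) ** 2 / expected
    # With 1 degree of freedom, P(X >= stat) = erfc(sqrt(stat / 2)).
    return stat, math.erfc(math.sqrt(stat / 2))


def welch_t(sample1, sample2):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    n1, n2 = len(sample1), len(sample2)
    v1, v2 = variance(sample1) / n1, variance(sample2) / n2
    t = (mean(sample1) - mean(sample2)) / math.sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t, df


# Hypothetical engagement counts: [engaged, not engaged] per group.
stat, p = chi_square_2x2(113, 37, 90, 60)  # experimental row, then control row
print(f"chi-square = {stat:.2f}, p = {p:.4f}")

# Hypothetical per-student score gains (percentage points), tiny samples.
t, df = welch_t([12, 15, 18, 14, 16], [5, 7, 4, 6, 8])
print(f"Welch t = {t:.2f}, df = {df:.1f}")
```

SciPy's `scipy.stats.chi2_contingency` applies Yates' continuity correction to 2x2 tables by default, so its statistic will be somewhat smaller than this uncorrected version; for the t-test, `scipy.stats.ttest_ind(..., equal_var=False)` returns Welch's t together with a p-value.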

5.2.4 Qualitative Evaluation

Qualitative feedback was gathered through surveys, interviews, and focus groups involving educators, students, and administrators. Educators noted a positive shift in curriculum relevance and adaptability due to AI, although they reported integration challenges and suggested improvements to tool functionality. Students showed higher engagement with AI-recommended pathways and found the tools user-friendly, although some requested more interactive features. Administrators highlighted the positive impact on educational outcomes and recommended scaling AI applications across departments. This feedback provided valuable insight into user experiences and identified areas for further refinement, helping keep the AI-driven educational framework effective and aligned with evolving educational needs and industry requirements.

5.3 Qualitative Feedback from Stakeholders

In addition to the quantitative data, qualitative feedback from surveys, interviews, and focus groups provided valuable insights into the user experience with the AI-driven framework. Educators reported improvements in curriculum relevance and adaptability, with AI helping to identify gaps in student understanding. However, they expressed the need for further training to fully integrate AI tools into their teaching methods. Students found the AI-recommended learning paths more engaging and effective, appreciating the real-time feedback and personalized content, which enhanced their understanding. Nonetheless, some students raised concerns about data privacy and requested greater transparency regarding how AI-generated recommendations were made. Administrators highlighted the potential scalability of the AI framework across departments, noting that real-time insights into student progress could improve decision-making. They also emphasized the importance of ongoing technical support and adequate resources for long-term success.

Qualitative feedback was collected from educators, students, and administrators through structured surveys, interviews, and focus groups. We applied thematic analysis to categorize their responses, uncovering key themes such as the relevance of AI-driven learning tools, their adaptability, and their impact on educational outcomes.

• Educators: Feedback focused on the integration of AI into teaching practices. One educator noted, "The AI recommendations have been incredibly helpful in identifying gaps in student learning, but we need more training to fully integrate these tools into our daily teaching practices." This highlights the utility of AI in personalizing content while also revealing the need for better training and support.

• Students: Many students reported higher engagement with the AI-recommended learning paths. A student remarked, "The AI helped me focus on areas I struggled with and gave real-time feedback, which really improved my understanding of difficult concepts." However, concerns were raised about data privacy and transparency regarding AI-generated recommendations.

• Administrators: They emphasized the potential for scaling the AI framework across departments. One administrator stated, "The framework shows promise in aligning educational content with industry needs, but [we] must ensure that it is adaptable across departments with different resource levels." This reflects optimism about the scalability of AI while acknowledging the need for resource allocation.

5.4 Comprehensive Summary of Final Results

This summary presents an overview of the AI-driven educational framework's performance. It consolidates key metrics from the reinforcement learning algorithm, the CNN model, and pilot testing to illustrate the framework's effectiveness in aligning educational programs with industry needs. The results show improvements in accuracy, response time, engagement, and learning outcomes.

Table 6. Final Results Summary

| Metric | Reinforcement Learning | CNNs in Educational Alignment | Pilot Testing Pre-Implementation | Pilot Testing Post-Implementation |
|---|---|---|---|---|
| Accuracy (%) | 88% | 90% | 70% | 85% |
| Average Response Time (seconds) | 0.4 | 0.6 | 1.2 | 0.5 |
| Engagement Rate (%) | 78% | 20% improvement | 60% | 75% |
| Adoption Rate (%) | 92% | 85% | 50% | 90% |
| Improvement in Assessment Scores (%) | 17% | 15% improvement | 5% | 15% |
| Satisfaction with VR/AR Tools (%) | 15% increase over initial satisfaction | 25% increase | 10% | 30% |

The AI-driven framework showed gains across all reported performance metrics. The reinforcement learning algorithm improved accuracy from 75% in the first epoch to 88% by the seventh epoch and reduced average response time from 0.7 to 0.4 seconds. Engagement rates increased from 65% to 78%, and the adoption rate of AI recommendations rose from 80% to 92%, reflecting growing trust in and reliance on the recommendations. The improvement in assessment scores increased from 10% to 17%, and satisfaction with VR/AR tools rose by 15%. The CNN model, for its part, achieved a high content classification accuracy of 90%. Its average response time of 0.6 seconds is slightly slower than that of the reinforcement learning algorithm but still efficient. The CNN model contributed to a 20% improvement in student engagement and a 15% improvement in learning outcomes, and satisfaction with AI-enhanced VR/AR tools increased by 25%.

During pilot testing, pre-implementation metrics showed an accuracy of 70%, an average response time of 1.2 seconds, an engagement rate of 60%, and an adoption rate of 50%. Post-implementation, these metrics improved substantially: accuracy increased to 85%, response time decreased to 0.5 seconds, the engagement rate rose to 75%, and the adoption rate reached 90%. The improvement in assessment scores rose from 5% to 15%, and satisfaction with VR/AR tools increased from 10% to 30%.

Overall, both reinforcement learning and CNNs show substantial benefits: reinforcement learning excels in dynamic recommendation, while CNNs perform strongly in content classification and personalized learning. The pilot testing results support the effectiveness of the AI-driven framework in a real-world setting, with gains in educational outcomes, user engagement, and satisfaction.

6. Conclusion

The integration of AI into educational systems shows transformative potential, enhancing learning outcomes and aligning educational content with industry requirements. Reinforcement Learning (RL) produced substantial improvements in accuracy, response time, engagement, and assessment scores, demonstrating its effectiveness in personalizing and optimizing educational paths. Convolutional Neural Networks (CNNs) achieved high accuracy in content classification and boosted student engagement and satisfaction, particularly with VR/AR tools. Pilot testing supported these findings, showing notable improvements in accuracy, response time, and user satisfaction. Future work should refine these AI algorithms to improve scalability and adaptability, incorporate advanced techniques for real-time data processing, and extend the integration of AI tools across diverse educational platforms.

Continued research should also explore hybrid models that combine the strengths of RL and CNNs to further enhance personalized learning experiences and keep pace with the rapidly evolving industry landscape. AI technologies promise to further refine educational practices, strengthen curriculum alignment with industry standards, and foster lifelong learning capabilities. Ethical considerations regarding data privacy, algorithmic bias, and equitable access to AI resources remain paramount for responsible AI integration in education. Collaborative efforts among stakeholders are essential to harness AI's full potential while addressing these ethical imperatives.

Acknowledgments

We would like to express our sincere gratitude to all those who have supported and contributed to this research. We extend our thanks to our institution for providing the necessary resources and facilities to carry out this work. We thank our families and friends for their unwavering support and encouragement throughout the research process.