An Interactive Approach for Retrieval of Semantically Significant Images

Authors: Pranoti P. Mane, Amruta B. Rathi, Narendra G. Bawane

Journal: International Journal of Image, Graphics and Signal Processing (IJIGSP)

Article in issue: no. 3, vol. 8, 2016.


Content-based image retrieval (CBIR) is the process of retrieving images on the basis of their primitive features, such as texture, color and shape. The main challenge in this type of retrieval is the gap between low-level primitive features and high-level semantic concepts, known as the semantic gap. This paper proposes an interactive approach for narrowing the semantic gap. The primitive features used are the HSV histogram, the local binary pattern histogram, and the color coherence vector histogram. The mapping between the primitive features of an image and its semantic concepts is achieved by involving the user in the feedback loop. The proposed primitive feature extraction method shows improved image retrieval results (average precision 73.1%) over existing methods. We also propose an innovative relevance feedback technique that introduces the concept of prominent features: when relevance feedback is applied, only the prominent features, i.e., those with maximum similarity, are used. This reduces the feature length and increases efficiency. The proposed interactive relevance feedback approach is not only computationally simple and fast, but also retrieves more semantically meaningful relevant images as the number of iterations increases.

Keywords: Semantic gap, content-based image retrieval, relevance feedback, HSV histogram, local binary pattern, color coherence vector

Short URL: https://sciup.org/15013962

IDR: 15013962


Published Online March 2016 in MECS

I. Introduction

We live in a digital world in which almost everything is available in digital form. Nowadays, people prefer to store images digitally rather than print them, so digital image databases are growing rapidly. This creates a need to find an image in such a database as quickly as possible, and a number of algorithms exist for retrieving similar images. Retrieval that uses primitive content features such as texture, shape and color is known as content-based image retrieval (CBIR). Text-based image retrieval, by contrast, identifies similar images from the textual keywords linked with them, but assigning keywords to every image is lengthy manual labor. In CBIR, the retrieved images often do not match the user's intention; this gap between the retrieved images and what the user has in mind is the semantic gap. To reduce this gap, we involve the user in the system through feedback. This approach is known as the interactive approach [1-3].

The proposed methodology works at two levels. At the first level, the images are ranked by their distances to the query image; this level comprises feature extraction using the local binary pattern (LBP), HSV histogram (HSVH) and color coherence vector (CCV) [4]. At the second level, an interactive approach based on relevance feedback involves the user in the feedback loop: after every retrieval cycle, the user labels three relevant images, which are used to redefine the query and retrieve similar images. The retrieval results of this methodology are compared with those of another feature extraction method [5], and we observed that our approach to primitive feature extraction [4] performs better than the other approaches. The proposed relevance feedback technique is simple and computationally fast, and the retrieval of semantically meaningful images improves with increasing iterations.

The rest of the paper is organized as follows. Section II presents the literature survey, Section III explains the methodology, Section IV describes the experiments, Section V discusses the results, and Section VI concludes the paper.

-

II. Literature Survey

Rui et al. [1] observed that the CBIR approaches proposed at the time had limited application because they did not explore an important characteristic of the retrieval process, namely the semantic gap. They proposed a relevance feedback (RF) based CBIR system that uses the primitive features of the high-level query given by the user; in every feedback loop, the feature weights are updated according to the user's feedback. Experiments on a large database gave promising results. In [2], an RF model for CBIR is proposed in which the user assigns weights to image features; these are captured by the model and then used for retrieval. This approach gives a good interpretation of the data. In addition, negative examples are integrated when formulating the query, which improves both retrieval speed and accuracy.

Grigorova et al. [3] model a feature adaptation concept based on relevance feedback. The feature weights and query parameters are adjusted dynamically, together with the number and type of images, to obtain semantically meaningful images. The authors report an improvement in accuracy over existing RF techniques.

Hong et al. [6] used both positive and negative feedback for CBIR, with support vector machines (SVMs) classifying the images into positive and negative classes. In this approach, the user is not required to provide preference weights for each positive example. Zhou and Huang [7] designed an edge-based structural feature extraction system for CBIR using the water-filling algorithm, and experimented on pure texture images and real-world photos. Their features are appropriate for images with significant or prominent edge structure, for which texture features such as wavelet moments do not yield good results, and they are useful for extracting distinctive edge patterns, termed edge-based structural features. The authors also proposed a generalized RF technique for tile-based regional image matching; however, it failed to give promising results because of the intrinsic position-variant property and the fixed shape and size of the tiles.

Han and Guo [8] proposed a method that seamlessly combines primitive or low-level image features (LLIFs) with semantics, using classification techniques and RF to reduce the gap between low-level image features and high-level human semantics. A Bayesian learning technique is used to classify images, while the color coherence histogram, Gabor filters and the edge direction coherence histogram are used for feature extraction, and the matching degree between keywords is used as the similarity measure. In [9], a Riemannian manifold learning algorithm is used to reduce the semantic gap in CBIR. The authors proposed an RF technique in which the user marks positive (relevant) and negative (irrelevant) images during feedback. They precompute the cost adjacency matrix and its eigenvectors corresponding to the smallest eigenvalues, and then apply Riemannian manifold learning to estimate the boundary between positive and negative images. Boury-Brisset [10] presented an ontology-based approach to the mapping between high-level and low-level information and applied it to heterogeneous information integration in the design of a knowledge server supporting Situation/Threat Assessment and Resource Management (STA/RM) processes. Building the ontology is time-consuming and should be supported by methodologies and tools.

In [11], the local binary pattern is used as a tool to capture facial expressions in images, and it plays an important role in conveying the required facial expression information. In this paper, LBP is used as one of the primitive features.

A survey of different low-level feature extraction techniques is presented in [12]. The author concludes that Gabor features, the pyramid histogram of oriented gradients (PHOG) and LBP are efficient and give high precision and classification accuracy.

Xu et al. [13] developed a new RF method that uses short-term memory (STM) to store the feedback information, with image selection performed with the help of the STM. The authors also proposed a novel weight-updating method based on the feedback information. This method was found to be highly efficient for the retrieval of shape-based medical images.

-

III. Methodology

-

A. Preprocessing of Image

The image is preprocessed to bring it into the form required by the subsequent operations. All database images are resized to 256×256 pixels for ease of operation, and each RGB image is then converted to the HSV format [14-16]. This conversion is needed because recorded RGB color varies significantly with the camera direction, surface orientation, position of the illumination source, illumination spectrum and the interaction of light with the object, and this variability must be dealt with in one way or another. Moreover, human color perception is complex, and considerable effort has gone into capturing perceptual similarity. The HSV color model is commonly used because of its invariance properties: the hue component does not vary with the illumination changes caused by camera viewpoint and object orientation, and it is therefore widely used for object retrieval.
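The preprocessing step can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' code: the text does not say which interpolation is used when resizing to 256×256, so nearest-neighbor sampling is assumed, and the helper names `resize_nearest`, `rgb_to_hsv` and `preprocess` are hypothetical. The HSV formulas are the standard hexcone conversion (the same color model implemented by Python's `colorsys.rgb_to_hsv`), with all three channels scaled to [0, 1].

```python
import numpy as np

def resize_nearest(img: np.ndarray, size: int = 256) -> np.ndarray:
    """Resize an H x W x C image to size x size by nearest-neighbor sampling (assumed)."""
    h, w = img.shape[:2]
    rows = np.arange(size) * h // size   # source row for each output row
    cols = np.arange(size) * w // size   # source column for each output column
    return img[rows[:, None], cols[None, :]]

def rgb_to_hsv(img: np.ndarray) -> np.ndarray:
    """Convert a uint8 RGB image (H x W x 3) to float HSV, each channel in [0, 1]."""
    rgb = img.astype(np.float64) / 255.0
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    v = rgb.max(axis=-1)                       # value: largest channel
    c = v - rgb.min(axis=-1)                   # chroma
    s = np.where(v > 0, c / np.where(v > 0, v, 1.0), 0.0)
    safe_c = np.where(c > 0, c, 1.0)           # avoid dividing by zero on grey pixels
    h = np.where(v == r, ((g - b) / safe_c) % 6.0,
        np.where(v == g, (b - r) / safe_c + 2.0,
                         (r - g) / safe_c + 4.0))
    h = np.where(c > 0, h / 6.0, 0.0)          # grey pixels get hue 0 by convention
    return np.stack([h, s, v], axis=-1)

def preprocess(img: np.ndarray) -> np.ndarray:
    """Resize to 256 x 256, then convert RGB to HSV."""
    return rgb_to_hsv(resize_nearest(img, 256))
```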

-

B. Feature Extraction

We use color features [17, 18], namely the color histogram and the color coherence vector, together with the local binary pattern texture feature, to measure the similarity between images. Each feature is briefly described below.

For a given color space, the color histogram [1, 19-22] records how often each color occurs in an image: it plots color on one axis against the corresponding number of pixels on the other. The color histogram is invariant to translation and to rotation about an axis perpendicular to the image, and it changes only slowly with rotation about other axes, occlusion and change of distance to the object.
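A possible way to compute the 24-value color histogram is shown below. The text gives only the total of 24 values, not how they are divided among H, S and V; this sketch assumes, hypothetically, 8 bins per channel, with each channel's histogram normalized by the number of pixels. The input is an HSV array with values in [0, 1], such as the output of the preprocessing step; the function name is hypothetical.

```python
import numpy as np

def hsv_histogram(hsv: np.ndarray, bins_per_channel: int = 8) -> np.ndarray:
    """Concatenate normalized per-channel histograms of an HSV image (values in [0, 1]).

    ASSUMPTION: 8 bins for each of H, S and V, giving the 24 values quoted in the text.
    """
    n_pixels = hsv.shape[0] * hsv.shape[1]
    blocks = []
    for ch in range(3):
        counts, _ = np.histogram(hsv[..., ch], bins=bins_per_channel, range=(0.0, 1.0))
        blocks.append(counts / n_pixels)      # fraction of pixels falling in each bin
    return np.concatenate(blocks)
```

Because the histogram only counts pixels, a circular shift of the image or a 90-degree rotation in the image plane leaves it unchanged, which illustrates the translation and rotation invariance described above.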

A color coherence vector [23, 24] indicates whether each pixel belongs to a large, similarly colored region. We refer to these regions as coherent regions; they are important in characterizing images. The color coherence vector classifies each pixel as coherent or incoherent according to a user-specified criterion: a pixel is coherent if it is part of a large connected component. We use an area threshold of 50 pixels, i.e., connected components with an area greater than 50 are considered large, and those with a smaller area are considered small. The color coherence vector is computed in this way.
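The coherence test can be sketched as a flood fill over connected regions of equal quantized color. The 50-pixel threshold is taken from the text; everything else is an assumption: the image is taken to be already quantized into color indices (the quantization scheme is not stated), 8-connectivity is used to define regions, and the 48 CCV values are read as 24 quantized colors × (coherent, incoherent) pixel counts. The function name `color_coherence_vector` is hypothetical.

```python
import numpy as np
from collections import deque

def color_coherence_vector(labels: np.ndarray, n_colors: int, tau: int = 50) -> np.ndarray:
    """Return [alpha_0, beta_0, alpha_1, beta_1, ...] for a color-quantized image.

    labels: 2-D integer array of quantized color indices in [0, n_colors).
    alpha_c: pixels of color c lying in connected regions larger than tau (coherent).
    beta_c:  remaining pixels of color c (incoherent).
    """
    h, w = labels.shape
    seen = np.zeros((h, w), dtype=bool)
    ccv = np.zeros((n_colors, 2), dtype=np.int64)
    for sr in range(h):
        for sc in range(w):
            if seen[sr, sc]:
                continue
            color = labels[sr, sc]
            # Breadth-first flood fill over 8-connected pixels of the same color.
            queue = deque([(sr, sc)])
            seen[sr, sc] = True
            size = 0
            while queue:
                r, c = queue.popleft()
                size += 1
                for dr in (-1, 0, 1):
                    for dc in (-1, 0, 1):
                        nr, nc = r + dr, c + dc
                        if (0 <= nr < h and 0 <= nc < w
                                and not seen[nr, nc] and labels[nr, nc] == color):
                            seen[nr, nc] = True
                            queue.append((nr, nc))
            # The whole region counts as coherent (column 0) or incoherent (column 1).
            ccv[color, 0 if size > tau else 1] += size
    return ccv.ravel()
```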

LBP [25, 26] is a suitable feature for texture classification. It assigns each pixel a new value based on the intensity levels of its neighborhood: the neighborhood of each pixel is thresholded at the value of the center pixel, and the result is read as a binary number. LBP is widely used in CBIR applications because of its discriminative power, computational simplicity and robustness to monotonic intensity variations caused by illumination changes.
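The thresholding idea can be illustrated with a small NumPy sketch. The text reports 16 LBP values but does not name the LBP variant; this sketch assumes, hypothetically, the 4-neighbor operator (up, right, down, left), whose codes run from 0 to 15 and therefore fill exactly 16 bins, applied to a single grey-level channel such as V. Border pixels are skipped for simplicity, and the function name is hypothetical.

```python
import numpy as np

def lbp4_histogram(gray: np.ndarray) -> np.ndarray:
    """Normalized 16-bin histogram of 4-neighbor LBP codes on a 2-D grey-level image.

    Each interior pixel is compared with its up, right, down and left neighbors;
    a neighbor >= center sets the corresponding bit, giving codes 0..15.
    """
    g = gray.astype(np.int64)
    center = g[1:-1, 1:-1]
    neighbors = [g[:-2, 1:-1],   # up    -> bit 0
                 g[1:-1, 2:],    # right -> bit 1
                 g[2:, 1:-1],    # down  -> bit 2
                 g[1:-1, :-2]]   # left  -> bit 3
    codes = np.zeros_like(center)
    for bit, nb in enumerate(neighbors):
        codes |= (nb >= center).astype(np.int64) << bit
    hist = np.bincount(codes.ravel(), minlength=16).astype(np.float64)
    return hist / hist.sum()
```

Because each bit only records whether a neighbor is at least as bright as the center, multiplying the image by a positive constant and adding an offset leaves the histogram unchanged, which is the robustness to monotonic intensity changes mentioned above.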

-

C. Relevance Feedback

Primitive features alone are not sufficient to retrieve the best results; there is always a gap between primitive features and high-level human semantics. To reduce this semantic gap, we involve the user in the system through relevance feedback [1, 6, 27, 28].

The low-level features [17], namely the HSV histogram (HH), color coherence vector (CCV) and local binary pattern (LBP), are calculated, and all feature values are stored in a matrix $F_d$, as shown in Eq. (1).

There are 24 color histogram values, 16 local binary pattern values, and 48 color coherence vector values, so the feature vector of the query image has dimension 1×88. We created a database of 800 images, so the feature matrix $F_d$ of the database images has dimension 800×88; the query feature vector $F_q$ is shown in Eq. (2).

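$$F_d = \begin{bmatrix} x_{1,1} & x_{1,2} & \cdots & x_{1,88} \\ x_{2,1} & x_{2,2} & \cdots & x_{2,88} \\ \vdots & \vdots & \ddots & \vdots \\ x_{800,1} & x_{800,2} & \cdots & x_{800,88} \end{bmatrix}_{800 \times 88} \qquad (1)$$

where $x_{i,j}$ is feature $j$ of database image $i$, with $i = 1, \ldots, 800$ and $j = 1, \ldots, 88$.

$$F_q = \begin{bmatrix} y_{1,1} & y_{1,2} & \cdots & y_{1,88} \end{bmatrix}_{1 \times 88} \qquad (2)$$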
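The bookkeeping behind Eqs. (1) and (2) amounts to concatenating the three feature blocks of each image and stacking one row per database image. The sketch below assumes the block order HSV histogram, LBP, CCV, which the text does not fix, and the names are hypothetical. The text also does not say whether the blocks are normalized to a common scale before distances are computed: if the CCV block holds raw pixel counts, it will dominate the Euclidean distance unless it is normalized as well.

```python
import numpy as np

BLOCK_SIZES = (24, 16, 48)   # HSV histogram, LBP histogram, CCV (sizes from the text)

def feature_vector(hsv_hist, lbp_hist, ccv) -> np.ndarray:
    """Concatenate the three feature blocks into one 1 x 88 row (the shape of F_q, Eq. 2)."""
    blocks = [np.asarray(b, dtype=np.float64).ravel() for b in (hsv_hist, lbp_hist, ccv)]
    sizes = tuple(b.size for b in blocks)
    if sizes != BLOCK_SIZES:
        raise ValueError(f"expected block sizes {BLOCK_SIZES}, got {sizes}")
    return np.concatenate(blocks)[np.newaxis, :]

def feature_matrix(rows) -> np.ndarray:
    """Stack per-image 1 x 88 rows into F_d (Eq. 1), one row per database image."""
    return np.vstack(rows)
```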

When the user enters a query image, we compute its feature vector $F_q$ as in Eq. (2), compare it with the feature matrix $F_d$ of the database images, and compute the corresponding Euclidean distances $D_E$, as shown in Eq. (3). The indices of the 20 images with the smallest distances are taken from the sorted distance vector, and these images are displayed. This is called the first iteration.

$$D_E = \begin{bmatrix} d_{1,1} \\ d_{2,1} \\ \vdots \\ d_{800,1} \end{bmatrix}_{800 \times 1}, \qquad d_{i,1} = \sqrt{\sum_{j=1}^{88} \left( x_{i,j} - y_{1,j} \right)^2} \qquad (3)$$

After the first iteration, the system asks the user whether he or she is satisfied with the results. If not, the system asks the user to select the three images most relevant to his or her intention.
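The first iteration, i.e. Eq. (3) followed by sorting, can be sketched with NumPy broadcasting. The function names are hypothetical; `k = 20` matches the number of images displayed in the first iteration. A stable sort is used so that ties keep database order, a detail the text does not specify.

```python
import numpy as np

def euclidean_distances(F_d: np.ndarray, F_q: np.ndarray) -> np.ndarray:
    """D_E of Eq. (3): distance from the 1 x n query row F_q to each row of F_d (N x n)."""
    return np.sqrt(((F_d - F_q) ** 2).sum(axis=1))

def first_iteration(F_d: np.ndarray, F_q: np.ndarray, k: int = 20) -> np.ndarray:
    """Indices of the k database images closest to the query, nearest first."""
    D_E = euclidean_distances(F_d, F_q)
    return np.argsort(D_E, kind="stable")[:k]
```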

These three images are then taken as the query, and all features are extracted again for them. Here we introduce the concept of prominent features: prominent features are the features with the minimum difference, i.e., the maximum similarity. Out of the 88 primitive features, 30 prominent features are selected, and the feature matrix $F_{PR}$ is computed as shown in Eq. (4).

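$$F_{PR} = \begin{bmatrix} p_{1,1} & p_{1,2} & \cdots & p_{1,30} \\ p_{2,1} & p_{2,2} & \cdots & p_{2,30} \\ \vdots & \vdots & \ddots & \vdots \\ p_{800,1} & p_{800,2} & \cdots & p_{800,30} \end{bmatrix}_{800 \times 30} \qquad (4)$$

where $s = 1, \ldots, 800$ indexes the rows and $t = 1, \ldots, 30$ indexes the prominent features.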
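The text defines prominent features only as the features with the minimum difference, without stating which values are compared. The sketch below adopts one plausible reading, labeled here as an assumption: for each of the 88 features, take the spread (maximum minus minimum) across the feature vectors of the three images the user selected, and keep the 30 features with the smallest spread. $F_{PR}$ is then the database matrix restricted to those columns, as in Eq. (4). The function names are hypothetical.

```python
import numpy as np

def prominent_feature_indices(selected: np.ndarray, t: int = 30) -> np.ndarray:
    """Pick the t feature columns whose values differ least across the selected images.

    selected: array of shape (3, 88), the feature rows of the user's three choices.
    ASSUMPTION: 'difference' is measured as max - min per feature column.
    """
    spread = selected.max(axis=0) - selected.min(axis=0)
    return np.sort(np.argsort(spread, kind="stable")[:t])

def prominent_matrix(F_d: np.ndarray, idx: np.ndarray) -> np.ndarray:
    """F_PR of Eq. (4): the database feature matrix restricted to the prominent columns."""
    return F_d[:, idx]
```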

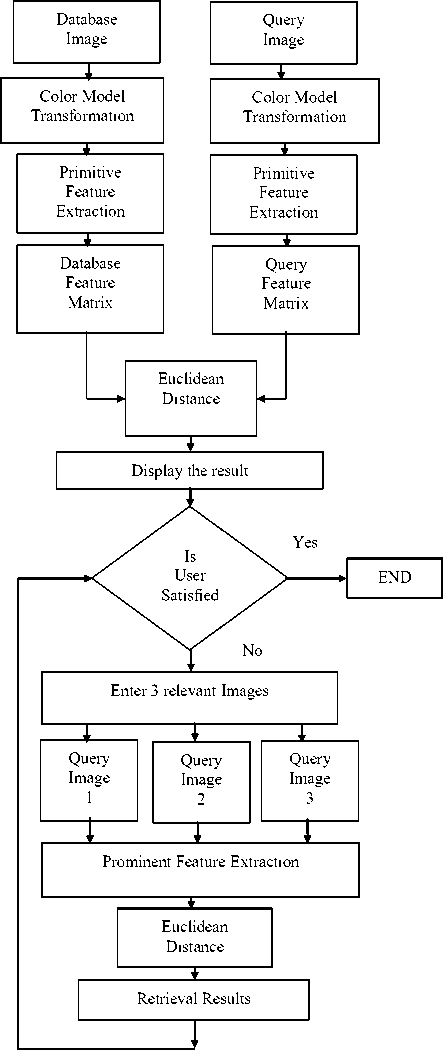

In the second iteration, only the prominent features are used to calculate the Euclidean distance, so the feature vector of each new query has length 30. Each of the three images selected by the user is used as a query to retrieve 10 images; when the second query image is processed, images already retrieved for the first are discarded, and the same procedure is applied to the third query image. The corresponding results are displayed, after which the system again asks the user for the three most relevant images, and the process repeats until the user is satisfied. This interaction between the user and the system constitutes the relevance feedback. We observed that the system gives improved results in the second or third iteration. The complete flow of the system is shown in Fig. 1.

Fig.1. System Flowchart.
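One feedback iteration as described above can be sketched as follows. This is an illustration under assumptions, not the authors' implementation: each selected image is used in turn as a query over the prominent feature columns only, images already returned for an earlier query in the same round are skipped, and each query's list is filled up to 10 new images (the text does not say whether discarded duplicates are replaced). `feedback_round` and its parameters are hypothetical.

```python
import numpy as np

def feedback_round(F_d, selected_ids, prominent_idx, per_query=10):
    """One relevance-feedback iteration over the prominent feature columns.

    F_d: N x 88 database feature matrix; selected_ids: row indices of the images
    the user picked; prominent_idx: column indices of the prominent features.
    Returns one list of new image indices per selected query image.
    """
    F_pr = F_d[:, prominent_idx]               # database restricted to prominent features
    shown = set()                              # images already returned in this round
    results = []
    for q in selected_ids:
        dist = np.sqrt(((F_pr - F_pr[q]) ** 2).sum(axis=1))
        ranked = (int(i) for i in np.argsort(dist, kind="stable"))
        new = [i for i in ranked if i not in shown][:per_query]   # discard common images
        shown.update(new)
        results.append(new)
    return results
```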

-

D. System Algorithm

-

1. Accept the input query image.
2. Convert the RGB image to HSV format.
3. Compute the low-level features of the query image and form the feature vector.
4. Compare the query feature vector with the database feature vectors.
5. Calculate the Euclidean distances.
6. Display the top 20 images with the minimum distance.
7. Ask the user whether he or she is satisfied with the results; if yes, go to step 12.
8. If not, obtain the three most relevant images, ranked, from the user.
9. Calculate the prominent features for the selected images, and compute the Euclidean distance using only these prominent features.
10. Display 10 images per query, discarding the common images.
11. Go to step 7.
12. End.

-

IV. Experimentation

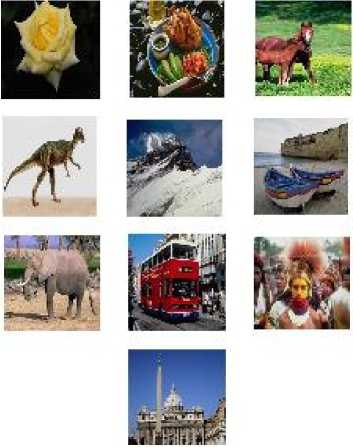

The database used is a subset of the Corel database [29]. It consists of 1000 images from 10 different classes: buildings, elephants, Africans, beach, bus, dinosaurs, horses, snowy mountains, roses and food. Sample images from each category are shown in Fig. 2. The database is split in a 20:80 ratio: 20 images of each category are used for testing, and the remaining 80 images of each category are used to create the retrieval database.
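The 20:80 split can be sketched as below. The text does not state how the 20 test images of each category were chosen, so a seeded random sample is assumed here; the function name and the dictionary layout (class mapped to a list of image ids) are hypothetical.

```python
import random

def split_per_class(ids_by_class, n_test=20, seed=0):
    """Split each class into n_test query images and the remaining database images.

    ASSUMPTION: the test images are a seeded random sample; the text does not say
    how they were chosen.
    """
    rng = random.Random(seed)
    test, database = {}, {}
    for cls, ids in ids_by_class.items():
        chosen = set(rng.sample(list(ids), n_test))
        test[cls] = [i for i in ids if i in chosen]
        database[cls] = [i for i in ids if i not in chosen]
    return test, database
```

With 10 classes of 100 images each, this yields 200 query images and an 800-image retrieval database, matching the 800×88 feature matrix used in Section III.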

In the first level of experimentation, the image features were extracted using two primitive feature extraction methods. Method 1 uses a hybrid approach [28] based on shape and texture. Method 2, the proposed method, integrates global basic color features with features that explore spatial relationships, i.e., LBP and CCV; it captures many orientations while keeping the feature size comparatively low.

The performance of the proposed algorithm is assessed using two measures, precision and recall. Precision is based on the number of relevant images retrieved and the total number of images retrieved; a precision of 1.0 indicates that every image retrieved by the system was relevant. Let x be the number of relevant images retrieved and y the total number of images retrieved. Precision is then given by Eq. (5).

$$\text{Precision} = \frac{x}{y} \qquad (5)$$

Fig.2. Sample images from COREL database.

Recall is based on the number of relevant images retrieved and the total number of relevant images in the database; a perfect recall of 1.0 indicates that all relevant images were retrieved. Recall thus describes how effectively the CBIR system retrieves all the relevant images for a particular query image. Let z be the total number of relevant images in the image database. Recall is then given by Eq. (6).

$$\text{Recall} = \frac{x}{z} \qquad (6)$$
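Eqs. (5) and (6) translate directly into code. A minimal sketch for a single query (the function name is hypothetical; it assumes at least one image is retrieved and at least one relevant image exists):

```python
def precision_recall(retrieved, relevant):
    """Precision x / y (Eq. 5) and recall x / z (Eq. 6) for one query."""
    retrieved = list(retrieved)
    relevant = set(relevant)
    x = sum(1 for i in retrieved if i in relevant)   # relevant images retrieved
    y = len(retrieved)                               # total images retrieved
    z = len(relevant)                                # relevant images in the database
    return x / y, x / z
```

Under the settings above, the first iteration returns 20 images while each class has 80 relevant images in the database, so even a perfect first-iteration result has precision 1.0 but recall only 20/80 = 25%. This is consistent with the 25% recall reported for the Dinosaurs class in Table 1.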

-

V. Results And Discussion

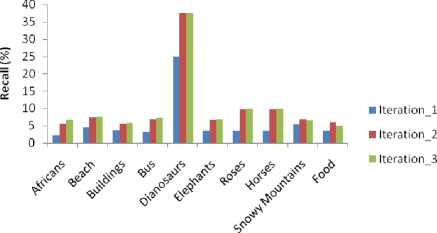

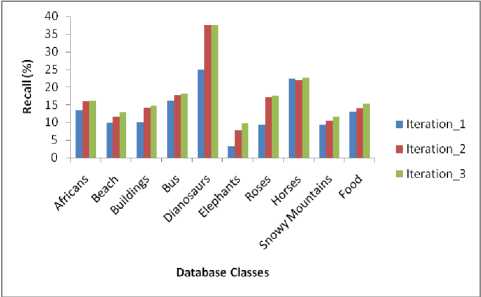

Table 1 gives the average recall of Method 1 and of the proposed LLIF extraction method (Method 2) without relevance feedback (RF). There is a large difference between the recall of the two methods. Apart from the Dinosaurs class, the recall of Method 1 lies between 2.20% and 5.35%. For the Dinosaurs class, both methods reach the highest recall of 25%: images of this class are dominated by shape, with a single object against the background, so both methods successfully retrieve the correct images. This is not the case for the other classes. Even so, the proposed method, which integrates the global basic color features (color histogram) with features that explore spatial and structural relationships (LBP and CCV), retrieves a much larger number of relevant images. With Method 2, the recall ranges from 3.25% to 25%, and the average recall is more than 13%, well above that of Method 1.

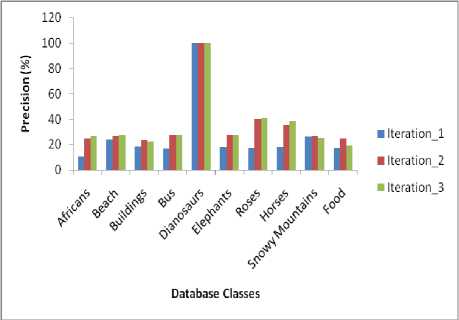

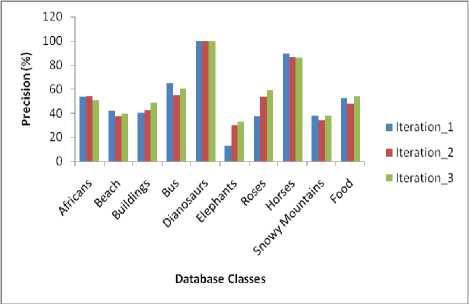

Applying RF improves the retrieval results significantly. Table 2 gives the average precision with RF for Method 1 and Method 2. The results show that, after RF is applied, the average precision generally increases from one iteration to the next: as the number of iterations grows, the number of relevant images retrieved increases, apart from some exceptional cases. This depends on the kind of query fed back to the system. A precision of 100% is observed for the Dinosaurs class.

Table 1. Results (Average Recall) for LLIF extraction for CBIR without relevance feedback

| Category | Method 1 (%) | Proposed method (Method 2) (%) |
|---|---|---|
| Africans | 2.20 | 13.44 |
| Beach | 4.55 | 9.94 |
| Buildings | 3.65 | 10.00 |
| Bus | 3.15 | 16.25 |
| Dinosaurs | 25.00 | 25.00 |
| Elephants | 3.55 | 3.25 |
| Roses | 3.45 | 9.31 |
| Horses | 3.55 | 22.38 |
| Snowy mountains | 5.35 | 9.30 |
| Food | 3.45 | 13.00 |
| Average recall for all test images | 5.79 | 13.19 |

With Method 1, the average precision rises from 26.63% in the first iteration to 35.63% in the second and is 35.42% in the third. Category-wise results are shown in Fig. 3 and Fig. 4. Table 2 also gives the average precision of the proposed method, which performs well from the first iteration onward (53.08%); for classes such as Africans, Buses and Horses, the precision is already very good in the first iteration. After relevance feedback is applied, the precision improves only slightly in the second iteration (54.10%). In the third iteration, the precision ranges from a minimum of 30% to a maximum of 100%, and the average precision is 57.03%. The results may be improved further by increasing the number of iterations, since more semantically similar images are retrieved as the iterations proceed.

Table 2. Average Precision with Relevance Feedback

| Method for CBIR | 1st iteration (%) | 2nd iteration (%) | 3rd iteration (%) |
|---|---|---|---|
| Method 1 | 26.63 | 35.63 | 35.42 |
| Proposed method (Method 2) | 53.08 | 54.10 | 57.03 |

|

Table 3. Average Recall with Relevance Feedback

| Method for CBIR | 1st iteration (%) | 2nd iteration (%) | 3rd iteration (%) |
|---|---|---|---|
| Method 1 | 5.8 | 10.2 | 10.3 |
| Proposed method (Method 2) | 13.2 | 16.8 | 17.6 |

|

Table 3 gives the average recall with RF for both primitive feature extraction methods. Category-wise results are shown in Fig. 5 and Fig. 6. Recall increases with the number of iterations. For Method 1, the average recall rises from 5.8% to 10.3%. Recall may be improved further by performing more iterations.

Fig.3. Retrieval Results (% Precision) by proposed RF algorithm. (LLIFs extraction using method M-1)

For retrieval with the proposed LLIF extraction method, the recall values are good from the start and improve further with increasing iterations, ranging from a minimum of 10% to a maximum of 37%. The average recall is 17.6% in the third iteration. The proposed method (Method 2) is therefore more effective at returning semantically significant results. Moreover, the results in Tables 1 to 3 show that the proposed relevance feedback approach significantly improves the retrieval of semantically significant images, irrespective of which LLIF extraction technique is used.

Fig.7. Query Image

Fig.4. Retrieval Results (% Precision) of proposed RF algorithm. (LLIFs feature extraction using proposed method)

Fig.8. Results of first iteration by proposed approach


Fig.5. Retrieval Results (% Recall) by proposed RF algorithm. (LLIFs feature extraction using method M-1)

Fig.9. Results of 2nd iteration by proposed RF approach (Rank 1 to 9)

Fig.6. Retrieval Results (% Recall) of proposed RF algorithm. (LLIFs extraction using proposed method)


Fig.10. Results of 2nd iteration by proposed RF approach (Rank 10 to

-

VI. Conclusion

This study explored a CBIR framework using RF, in which the prominent features of the new query images labeled by the user are used to enhance retrieval performance. Different feature extraction methods and RF strategies were reviewed, and, after identifying the limitations of existing methods, we presented our own methodology.

One of the main contributions of this paper is the combination of global color features with features that explore structural connections, integrating information on orientation, texture and color distribution within the images.

We also proposed reducing the feature vector size during re-querying to make retrieval faster, and discussed the results. With this approach, feature weights need not be decided when applying RF; we observed that a simpler RF retrieval algorithm works better for narrowing the semantic gap in CBIR. Our approach led to a fast, accurate and simple RF system that could be applied to large and varied databases. Although the semantic gap has been reduced considerably, there is always room for improvement, and further efforts could reduce it even more, for example by using a neural network approach.

Acknowledgment

The authors wish to thank Dr. Manesh B. Kokare for his valuable guidance. The authors would also like to thank the anonymous reviewers for their valued suggestions in improving the standard of this paper.

References

- Y. Rui, T. S. Huang, M. Ortega and S. Mehrotra, "Relevance Feedback: A Power Tool for Interactive Content-Based Image Retrieval", IEEE Trans. Circuits and Systems for Video Technology, vol. 8, no. 5, pp. 644-655, 1998.

- Kherfi, M.L.; Ziou, D., "Relevance feedback for CBIR: a new approach based on probabilistic feature weighting with positive and negative examples," IEEE Transactions on Image Processing, vol. 15, no. 4, pp. 1017-1030, April 2006.

- Grigorova, A.; De Natale, F.G.B.; Dagli, C.; Huang, T.S., "Content-Based Image Retrieval by Feature Adaptation and Relevance Feedback," IEEE Transactions on Multimedia, vol. 9, no. 6, pp. 1183-1192, Oct. 2007.

- Pranoti P. Mane, Narendra G. Bawane, "Image Retrieval by Utilizing Structural Connections within an Image", I.J. Image, Graphics and Signal Processing, 2015, in press.

- Saju, A.; Thusnavis, B.M.I.; Vasuki, A.; Lakshmi, P.S., "Reduction of semantic gap using relevance feedback technique in image retrieval system", Fifth International Conference on the Applications of Digital Information and Web Technologies (ICADIWT), pp. 148-153, 17-19 Feb. 2014.

- Hong, Pengyu, Qi Tian, and Thomas S. Huang, "Incorporate support vector machines to content-based image retrieval with relevance feedback", International Conference on Image Processing, Vol. 3. IEEE, 2000.

- Zhou, Xiang Sean, and Thomas S. Huang, "Image retrieval: feature primitives, feature representation, and relevance feedback", IEEE Workshop on Content-based Access of Image and Video Libraries, IEEE, 2000.

- Han, JunWei, and Lei Guo, "A new image retrieval system supporting query by semantics and example", International Conference on Image Processing, Vol. 3. IEEE, 2002.

- Pushpa B. Patil, Manesh B. Kokare, "Content Based Image Retrieval with Relevance Feedback using Riemannian Manifolds", Fifth International Conference on Signals and Image Processing, pp. 26-29, 8-10 Jan. 2014.

- Boury-Brisset, Anne-Claire, "Ontology-based approach for information fusion", Proceedings of the Sixth International Conference on Information Fusion, 2003.

- Gorti Satyanarayana Murty, J Sasi Kiran, V. Vijaya Kumar, "Facial Expression Recognition Based on Features Derived From the Distinct LBP and GLCM", IJIGSP, vol. 6, no. 2, pp. 68-77, 2014.

- S. A. Medjahed, "A Comparative Study of Feature Extraction Methods in Images Classification", International Journal of Image Graphics and Signal Processing (IJIGSP), 7: 16-23 (2015).

- Xiaoqian Xu, Dah-Jye Lee, Sameer K. Antani, L. Rodney Long, James K. Archibald, "Using relevance feedback with short-term memory for content-based spine X-ray image retrieval", Neurocomputing, 72 (2009), pp. 2259-2269.

- Plataniotis, Konstantinos N. and Anastasios N. Venetsanopoulos, "Color image processing and applications", Springer Science and Business Media, 2000.

- Xiaojun Qi; Ran Chang, "A fuzzy statistical correlation-based approach to content based image retrieval", IEEE International Conference on Multimedia and Expo, pp. 1265-1268, 2008.

- Peng-Yeng Yin; Bhanu, B.; Kuang-Cheng Chang; Anlei Dong, "Integrating relevance feedback techniques for image retrieval using reinforcement learning", IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 27, Issue 10, pp. 1536-1551, Oct. 2005.

- Yang Mingqiang, Kpalma Kidiyo and Ronsin Joseph, "A Survey of Shape Feature Extraction Techniques", in Pattern Recognition Techniques, Technology and Applications, book edited by Peng-Yeng Yin, pp. 626, November 2008.

- M.J. Swain, D.H. Ballard, "Color indexing", International Journal of Computer Vision, vol. 7, Issue 1, pp. 11-32, 1991.

- Dacheng Tao; Xiaoou Tang; Xuelong Li; Xindong Wu, "Asymmetric bagging and random subspace for support vector machines-based relevance feedback in image retrieval", IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 28, Issue 7, pp. 1088-1099, July 2006.

- Sangoh Jeong, Chee Sun Won, Robert M. Gray, "Image retrieval using color histograms generated by Gauss mixture vector quantization", Computer Vision and Image Understanding, Volume 94, Issues 1-3, pp. 44-66, 2004.

- Jing Li; Allinson, N.; Dacheng Tao; Xuelong Li, "Multitraining Support Vector Machine for Image Retrieval", IEEE Transactions on Image Processing, vol. 15, Issue 11, pp. 3597-3601, Nov. 2006.

- Ying Liu, Dengsheng Zhang, Guojun Lu, Wei-Ying Ma, "A survey of content-based image retrieval with high-level semantics", Pattern Recognition, Volume 40, Issue 1, Pages 262-282, January 2007.

- Greg Pass, Ramin Zabih, Justin Miller, "Comparing images using color coherence vectors", The Fourth ACM International Multimedia Conference, Boston, MA, USA, pp. 65-73, 1996.

- Takala, Valtteri, Timo Ahonen, and Matti Pietikäinen, "Block-based methods for image retrieval using local binary patterns", Image Analysis, Springer Berlin Heidelberg, pp. 882-891, 2005.

- Mäenpää, Topi, "The Local Binary Pattern Approach to Texture Analysis: Extensions and Applications", Oulun yliopisto, 2003.

- Karam, Omar, Ahmad Hamad, and Mahmoud Attia, "Exploring the Semantic Gap in Content-Based Image Retrieval: with application to Lung CT", ICGST-Graphics Vision and Image Processing Conference (GVIP 05 Conference), 2005.

- Traina, Agma J M, Joselene Marques, and Caetano Traina Jr., "Fighting the semantic gap on cbir systems through new relevance feedback techniques", 19th IEEE International Symposium on Computer-Based Medical Systems, IEEE, 2006.

- P. P. Mane, N. G. Bawane and A. Rathi, "Image retrieval using primitive feature extraction with hybrid approach", National Conference ACCET-15, India, pp. 99–102, 2015.

- J. Li and James Z. Wang, "Automatic linguistic indexing of pictures by a statistical modeling approach", IEEE Transactions on Pattern Analysis and Machine Intelligence, Pages 1075-1088, 2003.