An Obstacle Detection Scheme for Vehicles in an Intelligent Transportation System

Authors: Vidhi R. Shah, Sejal V. Maru, Rutvij H. Jhaveri

Journal: International Journal of Computer Network and Information Security (IJCNIS)

Issue: no. 10, vol. 8, 2016.


Road obstacles cause serious accidents that severely affect driver safety, traffic flow efficiency, and vehicles. Detecting obstacles is important to prevent or reduce such accidents and fatalities. However, detection is difficult because of problems such as shadows, environmental changes, or the sudden action of a moving object (e.g., a car overtaking or an animal crossing). This paper therefore designs an obstacle detection technique based on (i) moving cameras and (ii) moving objects. These methods are applied in the obstacle detection phase to identify different obstacles (e.g., potholes, animals, stop signs, bumps, road cracks) by considering road dimensions. A new technique is introduced for detecting obstacles from a moving camera and moving objects, which overcomes several limitations of stationary cameras and moving/stationary objects. Further, the paper reviews recent research trends in obstacle detection for moving cameras and moving objects, discussing the key points and limitations of each approach. Finally, the results show that the proposed method is more robust and reliable than previous approaches based on stationary cameras.

Keywords: Image Processing, Obstacle Detection, Intelligent Transportation System, Safety, VANET (Vehicular Ad-hoc Network)

Short URL: https://sciup.org/15011591

IDR: 15011591


Published online October 2016 in MECS. DOI: 10.5815/ijcnis.2016.10.03

I. Introduction

Obstacle detection technologies are an increasingly popular choice for driver assistance systems. Their evident safety benefit is that drivers can interact with other drivers through a GPS system and can also be made aware of upcoming obstacles in advance, without having to visually search the road for them.

Road accident deaths have become a serious issue in every country. In 2013, 32 countries reported a 4.3% decrease in road fatalities from 2012 and a 7.9% decrease from 2010. Provisional fatality data for 2014 show mixed results compared with 2013: eight countries saw an increase in fatalities, 15 countries managed to reduce their road death toll, and the remaining countries showed no significant change. Table 1 shows recent road fatality figures for selected countries and the change from 2013 to 2014.

Table 1. Road Fatalities (Recent Data) in Some Countries for 2013 and 2014.

| Country | 2013 | 2014 | Change 2013-2014 |
|---|---|---|---|
| Japan | 5152 | 5012 | -6.0% |
| Korea | 5092 | 4762 | -6.5% |
| Chile | 2110 | 2119 | +0.4% |
| Italy | 3385 | 3213 | -5.0% |
| Poland | 3357 | 3202 | -4.6% |
| United Kingdom | 1769 | 1807 | +2.1% |
| Germany | 3339 | 3368 | +0.9% |
| Netherlands | 570 | 570 | No change |

A number of research studies have examined the impact of obstacles on driver safety. While driving, the driver must interact with the GPS (Global Positioning System) continuously. This distraction may cause a serious accident if an obstacle appears that the driver has to react to. The present approach is designed to further investigate the impact of this system on driver safety and performance. The primary questions that arise are:

1) What changes are made to the vehicle's system for interaction over GPS?

2) How are these changes reflected in the driver's visual behavior during interaction?

3) What happens if the system fails and is unable to detect obstacles?

Several critical considerations shaped the design of the approach. Interaction is user-friendly through a small LCD/LED screen mounted in the vehicle, so drivers do not have to look away from the road to find obstacles; all they have to do is watch the screen for upcoming obstacles. Given the importance of obstacle detection, the main goals were to reduce road accidents and improve driver safety. The paper is organized as follows. Section II outlines related work, and Section III describes our approach in detail. Experimental results are given in Section IV. Section V concludes the paper and provides a perspective on future research in this area.

II. Related Work

In this section, we describe existing methods for detecting moving objects and obstacles in Intelligent Transportation Systems.

Wang et al. [1] describe a moving object detection technique based on temporal information. It works mainly in three phases, the first two of which are: 1) Motion saliency generation: a temporal saliency map is generated from consecutive frames by symmetric differencing. 2) Attention seed selection: a threshold calculated with the maximum entropy sum method selects the candidate area with maximum saliency. A limitation of this approach is that consecutive objects cannot be recognized.
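The two phases summarized above can be illustrated with a minimal NumPy sketch. It assumes grayscale frames stored as 2-D `uint8` arrays, takes the symmetric difference as the pixel-wise minimum of the backward and forward absolute differences, and picks the threshold with Kapur's maximum-entropy-sum criterion. The function names are hypothetical, and this is not Wang et al.'s implementation.

```python
import numpy as np

def temporal_saliency(prev, cur, nxt):
    """Symmetric difference: a pixel is salient only if it changed w.r.t. both neighbouring frames."""
    prev, cur, nxt = (f.astype(np.int16) for f in (prev, cur, nxt))
    backward = np.abs(cur - prev)
    forward = np.abs(nxt - cur)
    return np.minimum(backward, forward).astype(np.uint8)

def max_entropy_threshold(img):
    """Kapur's criterion: choose t maximising the summed entropy of the classes <= t and > t."""
    hist = np.bincount(img.ravel(), minlength=256).astype(float)
    best_t, best_h = 0, -np.inf
    for t in range(255):
        n0, n1 = hist[: t + 1].sum(), hist[t + 1 :].sum()
        if n0 == 0 or n1 == 0:
            continue  # one class is empty, so its entropy is undefined
        a = hist[: t + 1][hist[: t + 1] > 0] / n0  # class probabilities below/at t
        b = hist[t + 1 :][hist[t + 1 :] > 0] / n1  # class probabilities above t
        h = -(a * np.log(a)).sum() - (b * np.log(b)).sum()
        if h > best_h:
            best_t, best_h = t, h
    return best_t

def candidate_mask(prev, cur, nxt):
    """Binary map of the candidate moving area in the middle frame."""
    saliency = temporal_saliency(prev, cur, nxt)
    return saliency > max_entropy_threshold(saliency)
```

A square that moves steadily across three frames leaves only its middle-frame position in the mask, because the forward and backward differences overlap there.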

Bhaskar et al. [2] propose an image processing based vehicle detection and tracking method that extracts traffic data automatically. Foreground is separated from background using two methods: a Gaussian mixture model detects the background of the frame, and blob detection traces the movement of an object across frames. The foreground detector detects objects through binary computations, and each detected object is marked by a rectangular region. Morphological operations are applied to the result to remove noise. In dense traffic, the computation time increases.
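The post-processing described for [2], morphological clean-up followed by blob bounding boxes, can be sketched in NumPy as follows. It assumes a binary foreground mask has already been produced by the background model; the Gaussian mixture model itself is not reproduced, and the 3x3 structuring element, 4-connectivity, and function names are illustrative choices.

```python
import numpy as np
from collections import deque

def erode(mask):
    """3x3 binary erosion: keep a pixel only if its whole 3x3 neighbourhood is set."""
    p = np.pad(mask, 1, constant_values=False)
    h, w = mask.shape
    out = np.ones_like(mask, dtype=bool)
    for dy in range(3):
        for dx in range(3):
            out &= p[dy:dy + h, dx:dx + w]
    return out

def dilate(mask):
    """3x3 binary dilation: set a pixel if any pixel in its 3x3 neighbourhood is set."""
    p = np.pad(mask, 1, constant_values=False)
    h, w = mask.shape
    out = np.zeros_like(mask, dtype=bool)
    for dy in range(3):
        for dx in range(3):
            out |= p[dy:dy + h, dx:dx + w]
    return out

def blobs(mask, min_area=1):
    """Opening, then 4-connected components -> (row0, col0, row1, col1) boxes, inclusive."""
    clean = dilate(erode(mask.astype(bool)))  # opening removes isolated noise pixels
    seen = np.zeros_like(clean)
    h, w = clean.shape
    boxes = []
    for r in range(h):
        for c in range(w):
            if clean[r, c] and not seen[r, c]:
                queue = deque([(r, c)])
                seen[r, c] = True
                rows, cols = [r], [c]
                while queue:  # breadth-first flood fill of one blob
                    y, x = queue.popleft()
                    for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                        if 0 <= ny < h and 0 <= nx < w and clean[ny, nx] and not seen[ny, nx]:
                            seen[ny, nx] = True
                            queue.append((ny, nx))
                            rows.append(ny)
                            cols.append(nx)
                if len(rows) >= min_area:
                    boxes.append((min(rows), min(cols), max(rows), max(cols)))
    return boxes
```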

Xia Dong et al. [3] detect moving objects and their shadows with a method based on the RGB color space and the edge ratio. Their experiments show that each pixel can be classified into one of three types: object, background, or shadow. Like most such methods, it applies only to stationary cameras.
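The three-way pixel classification described for [3] can be sketched with a brightness/chromaticity rule: a changed pixel that is a darker version of the background color is labeled shadow, and any other changed pixel is labeled object. This rule is a common stand-in and an assumption on our part; the edge-ratio test of [3] is not modeled, and all thresholds are hypothetical.

```python
import numpy as np

BACKGROUND, SHADOW, OBJECT = 0, 1, 2

def classify_pixels(frame, background, diff_thr=30.0, ratio_lo=0.4, ratio_hi=0.9, chroma_thr=10.0):
    """Label each pixel of an (H, W, 3) RGB frame as background, shadow or object (thresholds hypothetical)."""
    I = frame.astype(float)
    B = background.astype(float)
    # brightness ratio: projection of the pixel colour onto the background colour vector
    alpha = (I * B).sum(axis=-1) / np.maximum((B * B).sum(axis=-1), 1e-9)
    # chromatic distortion: distance between the pixel and the scaled background colour
    chroma = np.linalg.norm(I - alpha[..., None] * B, axis=-1)
    diff = np.linalg.norm(I - B, axis=-1)
    labels = np.full(alpha.shape, OBJECT, dtype=np.uint8)
    labels[diff < diff_thr] = BACKGROUND
    # a changed pixel that is darker but keeps the background chromaticity is treated as shadow
    shadow = (diff >= diff_thr) & (alpha >= ratio_lo) & (alpha <= ratio_hi) & (chroma < chroma_thr)
    labels[shadow] = SHADOW
    return labels
```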

Liu Gang et al. [4] present an improved moving object detection algorithm. The difference between two consecutive frames is calculated to detect the moving object; similarly, the three-frame differencing method subtracts pairs of frames taken from three consecutive images. The edges of the image are found by edge detection, and a dilation operation merges all background points in contact with the object into the object. The method is not suitable when the environment contains strong light, so it does not work in all types of environment.
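The three-frame differencing and dilation steps of [4] can be sketched as below, assuming grayscale `uint8` frames and an illustrative threshold of 25; the edge-detection step is omitted and the function names are hypothetical.

```python
import numpy as np

def three_frame_difference(f1, f2, f3, thr=25):
    """Moving-object mask for the middle frame f2 (grayscale uint8 arrays)."""
    a, b, c = (f.astype(np.int16) for f in (f1, f2, f3))
    d12 = np.abs(b - a) > thr  # change between frames 1 and 2
    d23 = np.abs(c - b) > thr  # change between frames 2 and 3
    return d12 & d23           # AND keeps pixels that changed in both pairs

def dilate(mask, iterations=1):
    """3x3 binary dilation that merges neighbouring background points into the object."""
    out = mask.astype(bool)
    h, w = out.shape
    for _ in range(iterations):
        p = np.pad(out, 1)  # pads with False
        out = np.zeros((h, w), dtype=bool)
        for dy in range(3):
            for dx in range(3):
                out |= p[dy:dy + h, dx:dx + w]
    return out
```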

Hassannejad et al. [5] present a system for detecting moving objects in roundabouts based on a monocular system. The first step, which covers image alignment and video stabilization, is feature matching: features and their descriptors are extracted from several images, and pairs of matching features are determined. AdaBoost with a sliding window is used for pattern recognition. There are three main phases of implementation:

1) Feature detection: using a baseline algorithm, the moving features of the image are identified.

2) Clustering: objects are detected from the clustered independent features.

3) Pattern recognition: because an object's moving features are difficult to detect in every case, all traceable patterns are detected by pattern recognition.

The method is limited in speed: it is not applicable to roundabouts traversed at high speed. Moreover, shadows under vehicles are also detected as objects.
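A sliding-window scan of the kind used with the AdaBoost recognizer in [5] can be sketched as follows. The `classify` callable is a hypothetical stand-in for a trained classifier, and the window size and step are illustrative; scanning at multiple scales is not shown.

```python
def sliding_windows(image, win_h, win_w, step):
    """Yield (row, col, patch) for every window position on a regular grid."""
    h, w = image.shape[:2]
    for r in range(0, h - win_h + 1, step):
        for c in range(0, w - win_w + 1, step):
            yield r, c, image[r:r + win_h, c:c + win_w]

def detect(image, classify, win=(24, 24), step=8):
    """Return (row, col, height, width) for every window the classifier accepts."""
    win_h, win_w = win
    return [(r, c, win_h, win_w)
            for r, c, patch in sliding_windows(image, win_h, win_w, step)
            if classify(patch)]
```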

Choi et al. [6] present a moving object detection technique that is robust against fast illumination changes. Two methods are used to identify the effect of illumination change: 1) the Chromaticity Difference Model (CDM) and 2) the Brightness Ratio Model (BRM). Both methods are used to detect regions such as a dark background (a shadowed road) or a bright background (sunlight illumination). The CDM checks for illumination change across frames: pixels without illumination change are considered foreground pixels, and the remaining pixels are considered false foreground pixels. The BRM is used only to determine false foreground pixels. However, the technique can handle only limited illumination changes and does not work well when the brightness of the whole scene changes.
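The brightness-ratio idea in [6] can be sketched under an explicit assumption: a fast global illumination change scales background brightness by a roughly common factor, so candidate foreground pixels whose ratio to the background matches the dominant ratio are likely false foreground. Using the median as the dominant ratio and a relative tolerance is our choice, not necessarily Choi et al.'s formulation; the chromaticity difference model is not shown.

```python
import numpy as np

def false_foreground_by_ratio(frame, background, fg_mask, tol=0.1):
    """Flag candidate foreground pixels whose brightness ratio matches the dominant ratio.

    Assumption: illumination changes scale background pixels by a common factor,
    while genuine objects do not share that factor.
    """
    I = frame.astype(float)
    B = np.maximum(background.astype(float), 1.0)  # avoid division by zero
    ratio = I / B
    if not fg_mask.any():
        return np.zeros_like(fg_mask, dtype=bool)
    dominant = np.median(ratio[fg_mask])  # hypothetical estimate of the illumination factor
    return fg_mask & (np.abs(ratio - dominant) <= tol * dominant)
```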

Olaverri-Monreal et al. [8] present the See-Through System (STS): traffic in the opposite lane is captured as video so that the STS can assist a vehicle that wants to overtake. It is designed to improve the driver's visibility, safety, and overtaking decisions. It is implemented with two systems: a realistic driving simulator and DSRC. The STS is automatically disabled where there is a "no overtaking" traffic sign. Once the overtaking maneuver is complete, the video streaming ends. Communication delay is minimal because the vehicles communicate directly; this requires the vehicle in front to be nearby and fully within line of sight. OpenSceneGraph is used to define the placement and angle of view.

Huijuan Zhang et al. [9] present an algorithm for moving target detection in dynamic scenes, which requires the video to be preprocessed. Shadows and some background regions are a bottleneck: because their colors match the target, the frame yields false detections, which must be prevented to obtain better results. To do so, the length-to-width ratio of each detected rectangular region can be used, since the results can be described by a rectangular contour model. The main disadvantage of this approach is that it cannot eliminate flutter noise or detect multiple moving objects.
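The length-to-width ratio check used in [9] amounts to a simple filter over candidate rectangles. A minimal sketch, assuming boxes given as `(x, y, width, height)` tuples and hypothetical ratio bounds:

```python
def filter_by_aspect_ratio(boxes, min_ratio=0.3, max_ratio=3.0):
    """Keep (x, y, width, height) boxes whose width/height ratio is plausible for the target."""
    kept = []
    for x, y, w, h in boxes:
        if w <= 0 or h <= 0:
            continue  # degenerate box, no meaningful ratio
        ratio = w / h
        if min_ratio <= ratio <= max_ratio:
            kept.append((x, y, w, h))
    return kept
```

The bounds would in practice be chosen from the expected shape of the target; the values above are placeholders.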

Jinhai Xiang et al. [10] present a technique for moving object detection and shadow removal under changing illumination conditions. The foreground is detected using a Gaussian mixture model, which differentiates foreground objects from the background. Shadows are then removed from the detected object using the contour of the moving object. However, this method is limited to small objects; for large objects, its assumptions do not hold.

Toth et al. [13] describe query-based image processing in VANETs. Given a request or a specific query, the required object is detected automatically in the image. The input can be of three types: term condition, model, and request. The query is created either by a person or by an application interested in the object, and is then sent to other vehicles. The image processing is applied to the current image or to a video sequence taken from the camera. The functions used for detecting objects include GETIMAGE, FINDIMAGE, QUERYINFO, spatial operations, and set operations.

JiuYue Hao et al. [15] present spatio-temporal traffic scene modeling for object motion detection. Their spatio-temporal model is based on kernel density estimation (KDE), which handles dynamic backgrounds and is used to detect moving foreground objects. A Gaussian foreground model captures the spatial correlation of foreground pixels, and the computation time is also reduced. An algorithm called frame fusion is used for a robust updating stage.
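The KDE background idea behind [15] can be illustrated per pixel: the density of the current intensity is estimated from recent background samples with a Gaussian kernel, and low-density pixels are labeled foreground. This sketch covers only that part; the spatial Gaussian foreground model and the frame-fusion update are not reproduced, and the bandwidth and threshold are hypothetical.

```python
import numpy as np

def kde_foreground(samples, frame, bandwidth=10.0, threshold=1e-3):
    """Per-pixel KDE background test.

    samples: (N, H, W) recent background intensities; frame: (H, W).
    A pixel is foreground if its estimated background density is below `threshold`.
    """
    s = samples.astype(float)
    x = frame.astype(float)[None, ...]
    z = (x - s) / bandwidth
    kernel = np.exp(-0.5 * z ** 2) / (np.sqrt(2 * np.pi) * bandwidth)  # Gaussian kernel
    density = kernel.mean(axis=0)  # average over the N background samples
    return density < threshold
```

Because the samples keep several past values per pixel, a background pixel that alternates between values (for example, swaying foliage) is still explained by the model.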

A. P. Shukla et al. [16] use three techniques for segmenting and tracking moving objects in vehicle detection: the background subtraction method, the feature-based method, and the frame differencing and motion-based method. The background subtraction method extracts moving foreground objects by comparing the image with a stored background image or with an image series containing the background. The frame differencing method subtracts two consecutive image frames to identify the moving target object. Region-based tracking tracks the regions of moving objects, and contour tracking methods track the vehicle boundaries.
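The background subtraction method reviewed in [16] compares each frame with a stored background. A minimal sketch, assuming grayscale frames and a running-average background that is updated only at background pixels (a common simple variant; the exact model in [16] is not specified here). The class name and parameters are hypothetical.

```python
import numpy as np

class RunningBackground:
    """Background subtraction with an exponentially updated background image."""

    def __init__(self, first_frame, learning_rate=0.05, threshold=25):
        self.bg = first_frame.astype(float)
        self.lr = learning_rate
        self.thr = threshold

    def apply(self, frame):
        """Return the foreground mask for `frame` and update the background model."""
        f = frame.astype(float)
        fg = np.abs(f - self.bg) > self.thr  # compare with the stored background
        # update only background pixels so that objects are not absorbed quickly
        self.bg[~fg] = (1 - self.lr) * self.bg[~fg] + self.lr * f[~fg]
        return fg
```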

Dipali Shahare et al. [17] address the limitation of fixed cameras by presenting moving object detection with both fixed and moving cameras for automated video analysis. Feature points are extracted from the current frame using the optical flow method, and an improved Expectation-Maximization (EM) algorithm is used in consecutive frame subtraction. The algorithm computes the difference between neighboring frames and then separates the moving region from the background. In the background subtraction method, each input frame is compared with a background model obtained from the previous frames, which yields the foreground and the background. However, the method is not robust against illumination changes, and it cannot detect shadows cast by moving objects.

III. Proposed Approach

The proposed approach detects obstacles in the path of a vehicle while the vehicle is moving. The aim of the system is to detect the obstacle so that the user can be notified and action can be taken to prevent accidents. The overall system aims at the security of VANETs in an Intelligent Transportation System. The following sections describe how the algorithm works, what operations are performed, and how the result is obtained. The obstacle detection algorithm makes the following assumptions:

• The camera is fixed on the vehicle, so the camera moves with the vehicle.

• The system detects obstacles whose aspect ratio is constant or does not vary much.

• The system needs many positive images and a few negative images for training, so it requires prior knowledge of the type of obstacle.

• Positive image: an image that contains the obstacle.

• Negative image: an image that does not contain the obstacle (an image with no object).

The whole algorithm is divided into two main phases: system training and obstacle detection.

a. Obstacle detection algorithm

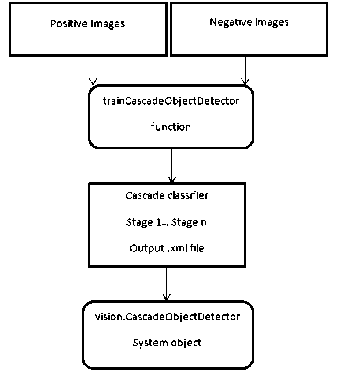

The full system algorithm, including both phases, is described in Fig. 1.

Obstacle Detection Algorithm

Input: video, set of positive images, set of negative images

Output: image containing the obstacle with a bounding box

1. Train the system.

2. Read the xml file generated as output from step 1.

3. Read the video.

4. Index the frames of the video as 0, 1, ..., n.

5. for i = 0 ... n

6. Read video frame i.

7. if an obstacle is detected

8. Draw a bounding box.

9. else discard the image frame.

10. endif

11. endfor

Fig.1. Obstacle Detection Algorithm
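Steps 5 to 11 of the algorithm in Fig. 1 can be sketched in Python as a loop over frames. The `detector` callable is a hypothetical stand-in for the trained classifier loaded from the xml file; it is assumed to return a list of `(x, y, width, height)` boxes, empty when no obstacle is found.

```python
from typing import Callable, Iterable, List, Sequence, Tuple

Box = Tuple[int, int, int, int]  # (x, y, width, height)

def run_detection(frames: Iterable, detector: Callable[[object], Sequence[Box]]) -> List[Tuple[int, List[Box]]]:
    """Apply the trained detector to every frame (steps 5-11 of Fig. 1).

    Frames with at least one detection are returned with their boxes, i.e. the
    frames on which a bounding box would be drawn; all other frames are discarded.
    """
    hits = []
    for i, frame in enumerate(frames):  # for i = 0 ... n
        boxes = list(detector(frame))   # is an obstacle detected?
        if boxes:
            hits.append((i, boxes))     # draw bounding box / notify the user
        # else: discard the frame and continue with the next one
    return hits
```

In an OpenCV-based variant (an assumption, not the authors' MATLAB setup [25]), `detector` could wrap the `detectMultiScale` method of a `cv2.CascadeClassifier` loaded from an OpenCV cascade xml file, with frames read by `cv2.VideoCapture` and boxes drawn with `cv2.rectangle`.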

Fig.2. System Training for Obstacle Detection [25]


b. Train the system

To identify objects, we first train the system. The Computer Vision System Toolbox™ [25] provides a function to train a classifier, as shown in Fig. 2.

The training stage is required only once; after it is performed, the resulting xml file can be used for detection. The algorithm starts by training the system. The proposed algorithm is based on the Viola-Jones algorithm. For training, 70 to 80 positive images are provided. A few negative images, around 10 to 20, are also provided so that the system produces error-free output. The paths of the folder containing positive images and of the folder containing negative images are given as arguments to the function.

Each training stage generates a number of positive samples, fewer than the number of positive images provided by the user, as well as some negative samples. The result of each stage is forwarded to the next stage so that falsely generated results can be discarded. Every stage is a group of weak learners and is trained using boosting, which takes a weighted average of the decisions of the weak learners.
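The stage-by-stage rejection described above can be sketched as follows. Each stage is a weighted vote of weak learners compared with a stage threshold, and a window that fails any stage is rejected immediately. The learners and thresholds here are toy values; real detectors evaluate Haar-like or similar features on image windows, and the toolbox internals [25] are not reproduced.

```python
from typing import Callable, List, Sequence, Tuple

WeakLearner = Tuple[float, Callable[[object], int]]  # (alpha, h) with h(x) in {-1, +1}
Stage = Tuple[List[WeakLearner], float]               # (weak learners, stage threshold)

def stage_score(learners: Sequence[WeakLearner], x) -> float:
    """Weighted vote of one stage's weak learners."""
    return sum(alpha * h(x) for alpha, h in learners)

def cascade_accepts(stages: Sequence[Stage], x) -> bool:
    """A window is reported as an obstacle only if every stage accepts it."""
    for learners, threshold in stages:
        if stage_score(learners, x) < threshold:
            return False  # rejected early: later stages are never evaluated
    return True
```

Early rejection is what makes the cascade fast: most windows contain no obstacle and are discarded by the first, cheapest stages.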

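Suppose there are $N$ positive and negative image frames with labels $(x_i, y_i)$ [26].

• If image $i$ contains an obstacle, then $y_i = 1$; otherwise $y_i = -1$. (1)

• The initial weight of image $i$ is

$$w_i^{1} = \frac{1}{N} \qquad (2)$$

• For each feature $f_j$, $j = 1, \dots, M$, renormalize the weights so that they sum to 1.

• Apply the feature to the training set and find the optimal threshold $\theta_j$ and polarity $s_j$ that minimize the weighted classification error:

$$(\theta_j, s_j) = \arg\min_{\theta, s} \sum_{i=1}^{N} w_i^{j}\, \varepsilon_i^{j}(\theta, s), \qquad \varepsilon_i^{j}(\theta, s) = \begin{cases} 0 & \text{if } y_i = h_j(x_i, \theta, s) \\ 1 & \text{otherwise} \end{cases} \qquad (3)$$

• The algorithm constructs a strong classifier from a weighted combination of the weak classifiers:

$$h(x) = \operatorname{sign}\left(\sum_{j=1}^{M} \alpha_j\, h_j(x)\right) \qquad (4)$$

where $\alpha_j$ is the weight assigned to weak classifier $h_j$ (larger for a lower weighted error), and each weak classifier is a threshold function based on feature $f_j$:

$$h_j(x) = \begin{cases} -s_j & \text{if } f_j(x) < \theta_j \\ s_j & \text{otherwise} \end{cases} \qquad (5)$$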
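Equations (1) to (5) can be turned into a small NumPy sketch of discrete AdaBoost with threshold stumps. The matrix `F` is a hypothetical stand-in for precomputed feature values $f_j(x_i)$. The weight $\alpha = \tfrac12 \ln((1-\epsilon)/\epsilon)$ and the exponential weight update are the standard discrete-AdaBoost choices, assumed here because the text does not specify them.

```python
import numpy as np

def train_adaboost(F, y, rounds):
    """Discrete AdaBoost over threshold stumps of the form in Eq. (5).

    F: (N, M) array, F[i, j] = value of feature f_j on image i.
    y: (N,) labels in {-1, +1} as in Eq. (1).
    Returns a list of (alpha, j, theta, s).
    """
    N, M = F.shape
    w = np.full(N, 1.0 / N)  # Eq. (2)
    model = []
    for _ in range(rounds):
        w = w / w.sum()  # renormalise the weights to sum to 1
        best = None
        for j in range(M):
            for theta in np.unique(F[:, j]):
                for s in (1, -1):
                    pred = np.where(F[:, j] < theta, -s, s)  # Eq. (5)
                    err = w[pred != y].sum()                 # weighted error, Eq. (3)
                    if best is None or err < best[0]:
                        best = (err, j, theta, s, pred)
        err, j, theta, s, pred = best
        err = min(max(err, 1e-10), 1 - 1e-10)    # keep the logarithm finite
        alpha = 0.5 * np.log((1 - err) / err)    # lower error -> larger weight
        w = w * np.exp(-alpha * y * pred)        # shrink weights of correct images
        model.append((alpha, j, theta, s))
    return model

def predict(model, F):
    """Strong classifier of Eq. (4): sign of the weighted vote."""
    votes = sum(a * np.where(F[:, j] < t, -s, s) for a, j, t, s in model)
    return np.where(votes >= 0, 1, -1)
```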

The number of training stages, from five to ten, is given as an argument, and the system trains only that many stages. We use five training stages. Training may take from several seconds to about a minute. Each stage uses and identifies a different number of positive and negative images, so the time taken by each stage may differ.
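The number of stages matters because the stage rates multiply. If every stage passes a fraction $d$ of obstacle windows and a fraction $f$ of non-obstacle windows, a cascade of $K$ stages has an overall true-positive rate of about $d^K$ and a false-alarm rate of about $f^K$ (treating stages as independent). The per-stage values 0.995 and 0.5 below are illustrative assumptions, not values reported in this paper; $K = 5$ matches the five stages used here.

```python
def cascade_rates(stage_tpr: float, stage_far: float, num_stages: int):
    """Overall true-positive and false-alarm rates of a cascade, assuming independent stages."""
    return stage_tpr ** num_stages, stage_far ** num_stages

tpr, far = cascade_rates(0.995, 0.5, 5)
print(f"TPR = {tpr:.4f}, FAR = {far:.5f}")  # TPR = 0.9752, FAR = 0.03125
```

Adding stages lowers the false-alarm rate geometrically while the true-positive rate decays only slowly, which is why a few extra stages can noticeably reduce false detections.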

Once training is done, the system stores the generated xml file as its output. The output xml file contains the parameters of the obstacle whose positive images were provided during training. By using this xml file for obstacle detection, the system can detect the obstacle.

c. Detect obstacle

The second phase of the algorithm is obstacle detection, which depends mainly on the first phase, system training. This phase reads the video and loads it into the system. Since a video is a sequence of images, it is divided into sequential frames, and the training result is applied to each frame.

If an obstacle is found in the current frame, a bounding box is drawn around it, and the image with the bounding box is shown to the user so that the user is notified.

Otherwise, no obstacle is found, so the system discards the frame and checks the next frame in the sequence.

IV. Experimental Results

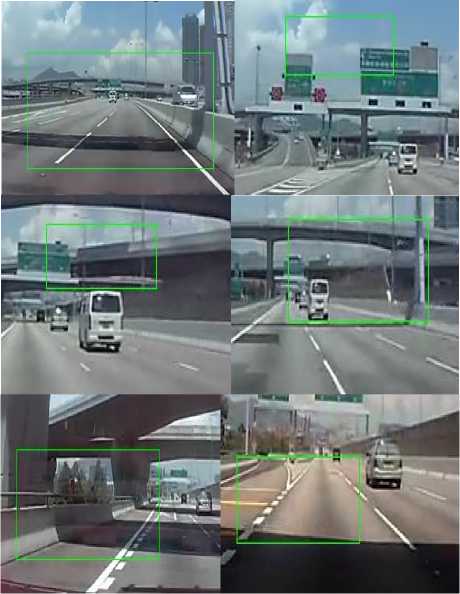

In this section, we describe the experiments performed on the system and the results it produced. The system was tested on different videos recorded in different weather conditions, by day and by night, and in the morning and evening. The videos were recorded at various places, such as highways and city roads.

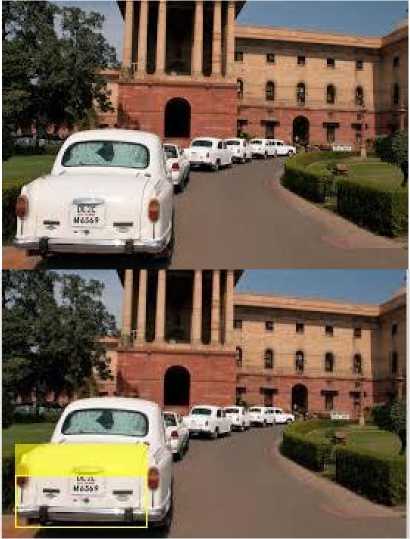

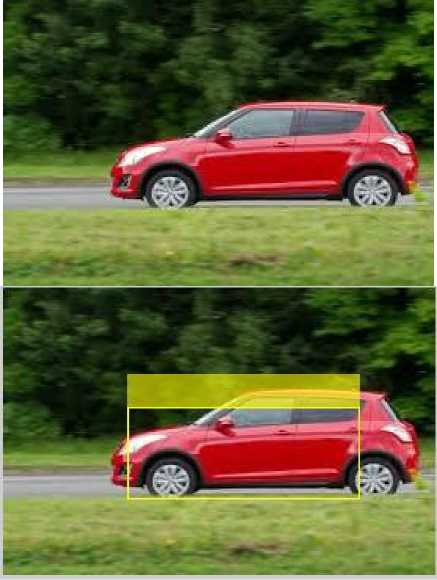

We observed that the result may vary depending on the conditions. The result may also vary with the speed of the vehicle, because higher speeds cause blur in the input video. Fig. 3 and Fig. 4 show the results when the system is applied to images, and Fig. 5 shows the result when the system is applied to video.

The results obtained from images are as follows.

Fig.3. Detected Car as Obstacle

Fig.4. Detected Car

The result obtained from the video is as follows.

Fig.5. Detected Obstacle from The Video

V. Conclusion

In this paper, we proposed an algorithm to detect various obstacles in the path of a moving vehicle. The system aims to prevent accidents and is a step toward fully automated driving for VANETs in Intelligent Transportation Systems. The algorithm works well for obstacles on which the system has previously been trained; thus, the set of detectable obstacle types is finite. Building on the Viola-Jones algorithm, we designed the approach using a number of training images of each obstacle whose parameters are known to the system.

There is still room for improvement in the system. Since the set of obstacles the system can detect is finite, the number of detectable obstacle types can be increased. The system's accuracy can be improved for all types of conditions. The system should also provide promising results for obstacles whose aspect ratio is not constant.

References

[1] Zhihu Wang, Kai Liao, Jiulong Xiong, and Qi Zhang, "Moving Object Detection Based on Temporal Information," IEEE Signal Processing Letters, vol. 21, no. 11, pp. 1403-1407, Nov. 2014.

[2] Bhaskar, P. K., and Suet-Peng Yong, "Image processing based vehicle detection and tracking method," 2014 International Conference on Computer and Information Sciences (ICCOINS), pp. 1-5, 3-5 June 2014.

[3] Dong, Xia, Kedian Wang, and Guohua Jia. "Moving object and shadow detection based on RGB color space and edge ratio." In 2nd International Congress on Image and Signal Processing (CISP'09), pp. 1-5. IEEE, 2009.

[4] Gang, Liu, Ning Shangkun, You Yugan, Wen Guanglei, and Zheng Siguo. "An improved moving objects detection algorithm." In 2013 International Conference on Wavelet Analysis and Pattern Recognition (ICWAPR), pp. 96-102. IEEE, 2013.

[5] Hassannejad, Hamid, Paolo Medici, Elena Cardarelli, and Pietro Cerri. "Detection of moving objects in roundabouts based on a monocular system." Expert Systems with Applications 42, no. 9 (2015): 4167-4176.

[6] Choi, JinMin, Hyung Jin Chang, Yung Jun Yoo, and Jin Young Choi. "Robust moving object detection against fast illumination change." Computer Vision and Image Understanding 116, no. 2 (2012): 179-193.

[7] Patel, Chirag, Atul Patel, and Dipti Shah. "A Novel Approach for Detecting Number Plate Based on Overlapping Window and Region Clustering for Indian Conditions." (2015).

[8] Olaverri-Monreal, Cristina, Pedro Gomes, Ricardo Fernandes, Fausto Vieira, and Michel Ferreira. "The See-Through System: A VANET-enabled assistant for overtaking maneuvers." In 2010 IEEE Intelligent Vehicles Symposium (IV), pp. 123-128. IEEE, 2010.

[9] Zhang, Huijuan, and Hanmei Zhang. "A moving target detection algorithm based on dynamic scenes." In 2013 8th International Conference on Computer Science & Education (ICCSE), pp. 995-998. IEEE, 2013.

[10] Xiang, Jinhai, Heng Fan, Honghong Liao, Jun Xu, Weiping Sun, and Shengsheng Yu. "Moving Object Detection and Shadow Removing under Changing Illumination Condition." Mathematical Problems in Engineering 2014 (2014).

[11] Kanungo, Anurag, Ashok Sharma, and Chetan Singla. "Smart traffic lights switching and traffic density calculation using video processing." In 2014 Recent Advances in Engineering and Computational Sciences (RAECS), pp. 1-6. IEEE, 2014.

[12] Gomes, Pedro, Cristina Olaverri-Monreal, and Michel Ferreira. "Making vehicles transparent through V2V video streaming." IEEE Transactions on Intelligent Transportation Systems 13, no. 2 (2012): 930-938.

[13] Toth, Stefan, Jan Janech, and Emil Kršák. "Query Based Image Processing in the VANET." In 2013 Fifth International Conference on Computational Intelligence, Communication Systems and Networks (CICSyN), pp. 256-260. IEEE, 2013.

[14] Badura, Stefan, and Anton Lieskovsky. "Intelligent traffic system: Cooperation of MANET and image processing." In 2010 First International Conference on Integrated Intelligent Computing (ICIIC), pp. 119-123. IEEE, 2010.

[15] Hao, JiuYue, Chao Li, Zuwhan Kim, and Zhang Xiong. "Spatio-temporal traffic scene modeling for object motion detection." IEEE Transactions on Intelligent Transportation Systems 14, no. 1 (2013): 295-302.

[16] Shukla, A. P., and Mona Saini. "Moving Object Tracking of Vehicle Detection." International Journal of Signal Processing, Image Processing and Pattern Recognition 8, no. 3 (2015).

[17] Shahare, Dipali, and Ranjana Shende. "Moving Object Detection with Fixed Camera and Moving Camera for Automated Video Analysis." International Journal of Computer Applications Technology and Research 3, no. 5 (2014): 277-283.

[18] Labayrade, Raphael, Didier Aubert, and Jean-Philippe Tarel. "Real time obstacle detection in stereovision on non flat road geometry through 'v-disparity' representation." In IEEE Intelligent Vehicle Symposium, 2002, vol. 2, pp. 646-651. IEEE, 2002.

[19] Byun, Jaemin, Ki-in Na, Beom-su Seo, and Myungchan Roh. "Drivable Road Detection with 3D Point Clouds Based on the MRF for Intelligent Vehicle." In Field and Service Robotics, pp. 49-60. Springer International Publishing, 2015.

[20] Kaur, Amandeep, and Tanupreet Singh. "A Comparative Analysis of Lane Detection Techniques." International Journal of Computer Applications 112, no. 3 (2015).

[21] Bello-Salau, H., A. M. Aibinu, E. N. Onwuka, J. J. Dukiya, and A. J. Onumanyi. "Image processing techniques for automated road defect detection: A survey." In 2014 11th International Conference on Electronics, Computer and Computation (ICECCO), pp. 1-4. IEEE, 2014.

[22] Ding, Feng, Yibing Zhao, Lie Guo, Mingheng Zhang, and Linhui Li. "Obstacle Detection in Hybrid Cross-Country Environment Based on Markov Random Field for Unmanned Ground Vehicle." Discrete Dynamics in Nature and Society 2015 (2015).

[23] Aly, Mohamed. "Real time detection of lane markers in urban streets." In 2008 IEEE Intelligent Vehicles Symposium, pp. 7-12. IEEE, 2008.

[24] Boroujeni, Nasim Sepehri, S. Ali Etemad, and Anthony Whitehead. "Fast obstacle detection using targeted optical flow." In 2012 19th IEEE International Conference on Image Processing (ICIP), pp. 65-68. IEEE, 2012.

[25] MATLAB and Statistics Toolbox Release 2012b, The MathWorks, Inc., Natick, Massachusetts, United States.

[26] Viola–Jones object detection framework. (2016, February 26). In Wikipedia, The Free Encyclopedia. Retrieved 12:40, March 25, 2016, from https://en.wikipedia.org/w/index.php?title=Viola%E2%80%93Jones_object_detection_framework&oldid=707070330

[27] P. Viola and M. Jones, "Rapid object detection using a boosted cascade of simple features," Proceedings of the 2001 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR 2001), vol. 1, pp. I-511-I-518, 2001. doi: 10.1109/CVPR.2001.990517.