Analysis of multi-modal biometrics system for gender classification using face, iris and fingerprint images

Authors: Abhijit Patil, Kruthi R., Shivanand Gornale

Journal: International Journal of Image, Graphics and Signal Processing (IJIGSP)

Issue: No. 5, vol. 11, 2019.


Several researchers have used uni-modal biometric traits for gender classification. Such systems have many limitations, which can be mitigated by including multiple sources of biometric information to identify or classify a user. Intuitively, multimodal systems are a more reliable and viable solution, because multiple independent characteristics of different modalities are fused together. The objective of this work is to infer gender by combining different biometric traits, namely the face, iris and fingerprints, of the same subject. In the proposed work, feature-level fusion is used to obtain robustness in gender determination, and an accuracy of 99.8% was achieved on the homologous multimodal biometric database SDUMLA-HMT (Group of Machine Learning and Applications, Shandong University). The results demonstrate that feature-level fusion in a multimodal biometric system greatly improves gender classification performance and that our approach outperforms the state-of-the-art techniques reported in the literature.

Gender Identification, Biometrics, Multimodal, MB-LBP, BSIF, KNN, SVM

Short URL: https://sciup.org/15016053

IDR: 15016053 | DOI: 10.5815/ijigsp.2019.05.04


Published Online May 2019 in MECS DOI: 10.5815/ijigsp.2019.05.04

Biometrics is a technology that measures the features or traits of individuals based on their physiological and behavioral characteristics (physiometrics and behaviometrics). Humans also exhibit soft biometric characteristics such as gender, age, ethnicity, weight, height, gestures, gait, accent, ear shape, length of legs and arms, skin color and hair color, which provide natural ways to distinguish between people. Classifying gender from biometric traits is an important step in forensic anthropology, where it is used to identify the gender of a criminal in order to narrow down the list of suspects. Only a few researchers have worked on gender classification using fingerprints [1,2,3,4,5,6,7,8,9,10,11,12,13,14,15], iris [16,17,18,19,54], palmprint [20,21,22,24,57,58], face [23,26,27,28,29,30,31,32,33,53,55,59,60], speech [34,35,36,37,38] and gait [42], and they have obtained competitive results. However, systems that rely on a single biometric identifier often do not meet the requirements satisfactorily, because any uni-modal biometric system suffers from a variety of problems, including distorted data, intra-class variations, inter-user similarity, limited degrees of freedom, non-comprehensiveness, spoofing, obfuscation (masking one's own identity by altering the trait) and unacceptable error rates, as shown in Fig. 1(a) to (g).

Fig.1. (a) Distorted data, (b) intra-class variation, (c) non-comprehensiveness, (d) spoofing attack, (e) effect of ageing, (f) inter-user similarity, (g) obfuscation

These limitations can be overcome by fusing multiple modalities, which makes the results more robust than those of any uni-modal system. Fusion [43, 51, 52] can be performed at four different levels: sensor level, feature level, score level and decision level.

Multimodal gender identification systems have attracted attention in recent times because of ethical and reliability concerns. Gender identification based on multiple biometrics is an emerging trend with many practical, real-world applications such as human-computer interaction, gender-based advertising, forensics and surveillance. In this work, we present a multimodal biometric gender identification system that integrates features from three different modalities: face, iris and fingerprints. The paper is organized as follows: Section II reviews the literature. The proposed methodology is described in Section III. Experimental results are discussed in Section IV, a comparative analysis is presented in Section V, and conclusions are drawn in the last section.


II. Related Work

Previous research has shown that it is possible to authenticate an individual using multiple modalities. However, the literature shows that limited work has been carried out on identifying a person's gender using multimodal biometrics. This section reviews the studies reported on gender identification using multimodal biometrics. Xiong Li et al. [39] performed multimodal gender identification by combining local binary pattern and bag-of-words features with decision-level fusion on the face and fingerprint traits of an internal database of 397 volunteers of Han nationality, and obtained an accuracy of 94% using a Bayesian hierarchical model. Mohamed A. et al. [40] performed multimodal gender identification by combining binary features, eigenvalues, syntactic complexity, response length, shallow and deep syntax, and mean heart rate max-min difference features from five different modalities (visual, linguistic, physiological, thermal and acoustic) on a self-created database of 51 males and 53 females, and obtained an overall accuracy of 80.6% using a decision tree classifier. Abdenour H. et al. [41] used three different unimodal face and video databases, namely CRIM, VidTIMIT and Cohn-Kanade, which were combined to form a multimodal (face and video) dataset; using local binary patterns and a support vector machine, they obtained an overall accuracy of 96.3%. Caifeng Shan et al. [42] performed multimodal gender identification by combining the face and gait modalities of the CASIA Gait-B dataset, from which frontal face images of 119 subjects were extracted and combined with the gait videos. An AdaBoost-based face detector and background subtraction were used for the face and gait features, respectively, and an overall accuracy of 97.2% was achieved with a support vector machine classifier.

The literature identifies four levels of fusion: sensor level, feature level, match score level and decision level. Among these, this work uses feature-level fusion to address issues such as efficiency, robustness, applicability and universality.
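To make the distinction between fusion levels concrete, the minimal NumPy sketch below contrasts feature-level fusion (the level used in this work) with score-level and decision-level fusion. All feature vectors, scores and decisions are made-up toy values, and the mean (sum) rule and majority vote are generic textbook choices used only for illustration, not methods from the paper. Sensor-level fusion, which combines raw sensor data before any features are extracted, is not shown.

```python
import numpy as np

# Toy per-modality outputs for one subject (hypothetical values).
face_feat = np.array([0.2, 0.5, 0.3])
iris_feat = np.array([0.1, 0.9])
finger_feat = np.array([0.4, 0.4, 0.2])

# Feature level: concatenate the feature vectors before any classifier sees them.
fused_features = np.concatenate([face_feat, iris_feat, finger_feat])

# Score level: each modality has its own classifier producing a "probability
# of male" score; the scores are combined, here with the mean (sum) rule.
scores = np.array([0.7, 0.4, 0.8])
fused_score = scores.mean()
score_decision = int(fused_score >= 0.5)  # 1 = male, 0 = female

# Decision level: each classifier outputs a hard label; take a majority vote.
decisions = np.array([1, 0, 1])
vote_decision = int(decisions.sum() > len(decisions) / 2)

print(fused_features.shape, round(float(fused_score), 3), score_decision, vote_decision)
```

Feature-level fusion keeps the most information, because the classifier sees all raw descriptors jointly, but it produces a longer vector; score- and decision-level fusion discard more information in exchange for letting each modality use its own classifier.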


III. Proposed Methodology

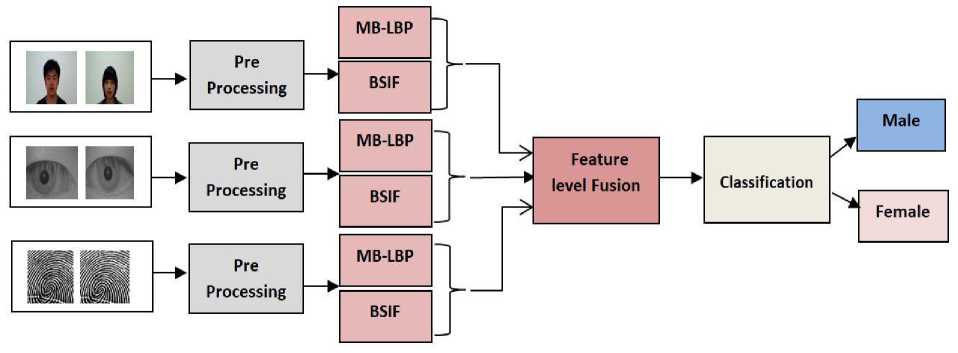

Gender classification using multimodal biometrics generally involves three steps: pre-processing, feature extraction and classification. The first task is pre-processing, and the pre-processing techniques are application dependent. The feature computation step extracts textural information by fusing multi-block local binary patterns (MB-LBP) and BSIF filter responses.

Finally, the method is evaluated with different binary classifiers. The block diagram of the proposed methodology is shown in Fig. 2.

Fig.2. Block Diagram of Proposed Methodology


A. Dataset

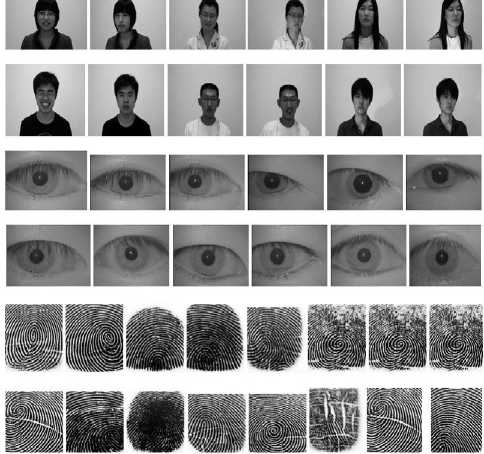

The authors used the publicly available SDUMLA-HMT dataset, collected by the Machine Learning and Applications Lab of Shandong University [25]. The dataset includes real multimodal data from 106 individuals, of whom 59 are male and 47 are female volunteers. The face images were collected with different poses, expressions and accessories. The iris images were captured from both eyes, with volunteers given proper directions. Likewise, the fingerprint images were acquired with FT-2BU sensors; from each subject, images of the thumb, index and middle fingers of both hands were collected after giving the necessary directions. Sample male and female images from the dataset are shown in Fig. 3.

Fig.3. Sample of SDUMLA-HMT Database


B. Pre-Processing

Pre-processing is a very significant step and is application dependent. Each fingerprint image is normalized, and then its background is eliminated. The iris images are normalized by contrast limited adaptive histogram equalization (CLAHE). The face images are resized to 250 × 250 pixels and normalized. Finally, CLAHE is applied.
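The paper does not specify how fingerprints are normalized or how the background is removed. The minimal NumPy sketch below assumes two common choices: global mean/variance normalization in the style of Hong, Wan and Jain, and block-wise variance thresholding to separate the ridge area from the background. The target mean and variance, the block size, the threshold and the white fill value are illustrative assumptions, not the paper's settings. For the CLAHE step, OpenCV's `cv2.createCLAHE` is one readily available implementation.

```python
import numpy as np

def normalize_mean_variance(img, target_mean=100.0, target_var=100.0):
    """Map a grayscale image to a chosen global mean and variance."""
    img = img.astype(np.float64)
    mean, var = img.mean(), img.var()
    if var == 0:  # flat image: nothing to stretch
        return np.full_like(img, target_mean)
    dev = np.sqrt(target_var * (img - mean) ** 2 / var)
    return np.where(img > mean, target_mean + dev, target_mean - dev)

def foreground_mask(img, block=16, var_threshold=50.0):
    """True for blocks whose gray-level variance suggests ridges, False for background."""
    h, w = img.shape
    mask = np.zeros((h, w), dtype=bool)
    for y in range(0, h, block):
        for x in range(0, w, block):
            patch = img[y:y + block, x:x + block]
            mask[y:y + block, x:x + block] = patch.var() > var_threshold
    return mask

def preprocess_fingerprint(img, block=16, var_threshold=50.0):
    """Normalize, then paint background blocks white (255 is an arbitrary fill value)."""
    norm = normalize_mean_variance(img)
    mask = foreground_mask(norm, block, var_threshold)
    return np.where(mask, norm, 255.0), mask

# Toy image: vertical "ridges" on the left half, flat background on the right half.
img = np.full((64, 64), 128.0)
img[:, :32] = 0.0
img[:, :32:2] = 255.0
out, mask = preprocess_fingerprint(img)
print(mask[:, :32].all(), mask[:, 32:].any())  # ridge blocks kept, flat blocks dropped
```

Variance thresholding works because ridge/valley alternation produces high local variance while an empty sensor area is nearly uniform; real images usually need the threshold tuned per sensor.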


C. Feature Extraction

In this step, discriminative features are extracted from each image so that a simple classification module can achieve high recognition accuracy. In this work, feature-level fusion of two well-known texture descriptors, multi-block local binary patterns (MB-LBP) and binarized statistical image features (BSIF), is implemented. From the available literature and from empirical testing, it was observed that this fusion scheme significantly improves gender classification accuracy and leads to promising performance.


1) Multi-Block Local Binary Patterns

The local binary pattern (LBP) [45] captures micro-level, local information from an image. MB-LBP processing is very similar to the LBP operation, except that sums over blocks of pixels are used instead of individual pixel values for each neighboring sub-region. Sixteen equal-sized, non-overlapping rectangular blocks are used to compute the features, and the sum of the pixel intensities is computed within each rectangle. The operator compares the average intensity $g_c$ of the central block with the average intensities $g_k$ of its neighboring blocks and outputs a binary sequence as the MB-LBP value.

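$$\text{MB-LBP} = \sum_{k=0}^{P-1} s(g_k - g_c)\,2^{k} \qquad (1)$$

where $g_c$ is the average intensity of the central block and $g_k$ ($k = 0, \dots, P-1$) are the average intensities of the $P$ neighboring blocks, and

$$s(x) = \begin{cases} 1, & x \ge 0 \\ 0, & x < 0 \end{cases} \qquad (2)$$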

The binary patterns obtained from the non-overlapping blocks are then combined to form the feature vector. Compared with the original LBP, MB-LBP captures the macro-structure of an image, which represents the image more precisely, and its representation is more compact than that of the original LBP.
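The description above leaves the exact block layout open (the text mentions 16 blocks and a 160-dimensional MB-LBP vector). The minimal NumPy sketch below therefore assumes the standard MB-LBP formulation of Liao et al. (2007): a 3 × 3 grid of s × s blocks, the eight neighboring block means compared with the central one using s(x) = 1 for x ≥ 0, and a single 256-bin histogram over the whole image. It illustrates the operator; it does not reproduce the paper's 160 features.

```python
import numpy as np

def block_means(img, s):
    """Mean of every s x s block, for every top-left position (via an integral image)."""
    ii = np.pad(img.astype(np.float64), ((1, 0), (1, 0))).cumsum(0).cumsum(1)
    sums = ii[s:, s:] - ii[:-s, s:] - ii[s:, :-s] + ii[:-s, :-s]
    return sums / (s * s)

def mb_lbp_codes(img, s=3):
    """8-bit MB-LBP code for each 3s x 3s window (3 x 3 grid of s x s blocks)."""
    m = block_means(img, s)
    h = m.shape[0] - 2 * s
    w = m.shape[1] - 2 * s
    center = m[s:s + h, s:s + w]  # g_c for every window
    # Neighboring blocks g_0..g_7, clockwise from the top-left block.
    offsets = [(0, 0), (0, s), (0, 2 * s), (s, 2 * s),
               (2 * s, 2 * s), (2 * s, s), (2 * s, 0), (s, 0)]
    codes = np.zeros((h, w), dtype=np.int64)
    for k, (dy, dx) in enumerate(offsets):
        neighbor = m[dy:dy + h, dx:dx + w]
        codes |= (neighbor >= center).astype(np.int64) << k  # s(g_k - g_c) * 2^k
    return codes

def mb_lbp_histogram(img, s=3):
    """Normalized 256-bin histogram of MB-LBP codes (one global region, for simplicity)."""
    codes = mb_lbp_codes(img, s)
    hist = np.bincount(codes.ravel(), minlength=256).astype(np.float64)
    return hist / hist.sum()

rng = np.random.default_rng(0)
face = rng.integers(0, 256, size=(64, 64))
feat = mb_lbp_histogram(face, s=3)
print(feat.shape)  # (256,)
```

The integral image makes every block sum cost four lookups, which is why MB-LBP stays cheap even for large block sizes s.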


2) Binarized Statistical Image Features

Binarized statistical image features (BSIF) encode textural information from image sub-regions. BSIF generalizes local binary patterns and local phase quantization [10] and is based on the statistics of natural images: the filter basis is learned by independent component analysis (ICA) [44] from patches of natural images, and local image patches are projected onto this subspace. Each filter response is thresholded, and the resulting bits form a binary code for every pixel. For an image patch $I(m, n)$ and a filter $W_i^{K\times K}$, the response is

$$r_i = \sum_{m,n} I(m,n)\, W_i^{K\times K}(m,n) \qquad (3)$$

i.e., the filter is convolved with the image patch, whose size equals the filter size. Here $i = 1, 2, \dots, L$ indexes the $L$ filters ($L$ is the length of the binary code), and $K \times K$ is the size of the BSIF filters.

$$b_i = \begin{cases} 1, & r_i > 0 \\ 0, & \text{otherwise} \end{cases} \qquad (4)$$

Likewise, for a given pixel $(m, n)$ with the corresponding binary responses $b_i(m, n)$, the BSIF code is calculated as

$$\mathrm{BSIF}_{K\times K}(m,n) = \sum_{i=1}^{L} b_i(m,n)\, 2^{i-1} \qquad (5)$$

BSIF features are computed as a histogram of the pixels' binary codes for each image sub-region to characterize its texture properties. In this experiment, an 11 × 11 filter size and an 8-bit code length were fixed empirically, which captures sufficient information from an image. With these settings, each image yields a feature vector of 256 (= 2^8) elements.
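A minimal NumPy sketch of the BSIF encoding in Eqs. (3) to (5), assuming the 11 × 11, 8-bit configuration described above. The genuine descriptor uses the pre-learned ICA filters distributed with Kannala and Rahtu's reference implementation [44]; those are not reproduced here, so the random zero-mean filters below are hypothetical stand-ins that only demonstrate the mechanics. Border handling is also simplified: only positions where the whole filter fits inside the image ("valid" region) are encoded, and the filter is applied as a correlation (a convolution with a flipped filter, which does not matter for random filters).

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def bsif_histogram(img, filters):
    """Normalized BSIF histogram; filters has shape (L, K, K)."""
    L, K, _ = filters.shape
    patches = sliding_window_view(img.astype(np.float64), (K, K))  # (H-K+1, W-K+1, K, K)
    # r_i = sum_{m,n} I(m,n) W_i(m,n) for every patch, Eq. (3).
    responses = np.einsum('yxmn,imn->iyx', patches, filters)
    bits = (responses > 0).astype(np.int64)          # b_i, Eq. (4)
    weights = (2 ** np.arange(L)).reshape(L, 1, 1)   # 2^(i-1) for i = 1..L
    codes = (bits * weights).sum(axis=0)             # Eq. (5)
    hist = np.bincount(codes.ravel(), minlength=2 ** L).astype(np.float64)
    return hist / hist.sum()

# Hypothetical stand-in filters: 8 random zero-mean 11x11 filters (NOT the ICA filters).
rng = np.random.default_rng(0)
filters = rng.standard_normal((8, 11, 11))
filters -= filters.mean(axis=(1, 2), keepdims=True)
img = rng.integers(0, 256, size=(64, 64))
feat = bsif_histogram(img, filters)
print(feat.shape)  # (256,)
```

With L = 8 filters the codes range over 0 to 255, which is where the 256-element BSIF vector comes from.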


3) Feature Fusion

In this work, feature-level fusion is accomplished by concatenating the feature vectors obtained from the face, iris and fingerprint modalities of a single subject. Let $FA_i = \{fa_1, fa_2, \dots, fa_n\}$ denote the features of the face modality, $IR_i = \{ir_1, ir_2, \dots, ir_n\}$ the features of the iris modality and $Fg_i = \{fg_1, fg_2, \dots, fg_n\}$ the features of the fingerprint modality of a volunteer. After concatenation, a new feature vector $FA_iIR_iFg_i = \{fa_1, \dots, fa_n, ir_1, \dots, ir_n, fg_1, \dots, fg_n\}$ is obtained that represents the individual. The purpose is to combine the $FA_i$, $IR_i$ and $Fg_i$ features to perform gender identification using multimodal biometrics. Two local feature extraction techniques are used, MB-LBP and BSIF: the 160 MB-LBP features are fused with the 256 BSIF features, and the final feature vectors are generated by the concatenation rule. The resulting feature vector contains 416 features for each male and female image. These features are stored and used to train and test the system to classify male and female subjects. For this, the system was trained with different parametric and non-parametric classifiers to evaluate the performance of well-established classification techniques.
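A minimal NumPy sketch of the concatenation rule. The feature values are random placeholders, and the sizes follow the text (160 MB-LBP + 256 BSIF = 416 values per image). The text does not spell out how the three modalities' vectors enter the final classifier input, so the sketch assumes, hypothetically, that each modality contributes its own 416-dimensional vector, which concatenation turns into 3 × 416 = 1248 values per subject.

```python
import numpy as np

def descriptor_vector(mb_lbp_feats, bsif_feats):
    """Concatenate MB-LBP (160) and BSIF (256) features of one image -> 416 values."""
    return np.concatenate([mb_lbp_feats, bsif_feats])

def fuse_modalities(face_vec, iris_vec, finger_vec):
    """Concatenation rule: FA_i, IR_i, Fg_i -> one row vector for the subject."""
    return np.concatenate([face_vec, iris_vec, finger_vec])

rng = np.random.default_rng(0)
# Placeholder feature values standing in for real descriptor outputs.
face = descriptor_vector(rng.random(160), rng.random(256))
iris = descriptor_vector(rng.random(160), rng.random(256))
finger = descriptor_vector(rng.random(160), rng.random(256))
subject = fuse_modalities(face, iris, finger)
print(face.shape, subject.shape)  # (416,) (1248,)
```

Because distance-based classifiers such as K-NN weight every dimension equally, the concatenated blocks are often rescaled (for example z-scored) first so that no modality dominates; the paper does not say whether this was done.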


D. Classifiers

Linear Discriminant Analysis (LDA): LDA is a basic classifier with low computational complexity that is also commonly used for dimensionality reduction [9]. It separates the classes by maximizing the ratio of between-class variance to within-class variance [10]. It is a linear classifier and is optimal for two-class problems when both classes are Gaussian with a shared covariance matrix. In this particular instance, LDA predicts either class label 1 for male or class label 0 for female based on the class variances [9].
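A minimal NumPy sketch of the two-class Fisher discriminant described above, assuming equal class priors so that the decision threshold sits midway between the projected class means. The small ridge added to the within-class scatter matrix is an implementation choice that keeps the matrix invertible when there are many feature dimensions; the paper does not specify it. The data in the usage example are synthetic.

```python
import numpy as np

class FisherLDA:
    """Two-class Fisher linear discriminant (labels: 1 = male, 0 = female)."""

    def fit(self, X, y):
        X0, X1 = X[y == 0], X[y == 1]
        mu0, mu1 = X0.mean(axis=0), X1.mean(axis=0)
        # Within-class scatter S_w; the ridge term keeps it invertible.
        Sw = (np.cov(X0, rowvar=False) * (len(X0) - 1)
              + np.cov(X1, rowvar=False) * (len(X1) - 1))
        Sw += 1e-6 * np.eye(X.shape[1])
        # w = S_w^{-1} (mu1 - mu0) maximizes between- over within-class variance.
        self.w = np.linalg.solve(Sw, mu1 - mu0)
        # Equal-prior threshold: midpoint of the projected class means.
        self.threshold = self.w @ (mu0 + mu1) / 2
        return self

    def predict(self, X):
        return (X @ self.w > self.threshold).astype(int)

rng = np.random.default_rng(0)
X = np.vstack([rng.normal(0.0, 1.0, (50, 3)), rng.normal(3.0, 1.0, (50, 3))])
y = np.array([0] * 50 + [1] * 50)
model = FisherLDA().fit(X, y)
acc = (model.predict(X) == y).mean()
print(acc)
```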

K-Nearest Neighbor (K-NN) classifier: K-NN assigns a class label by measuring the distance between the test and training data. K-NN [46, 48, 49] uses a suitable value of K to find the nearest neighbors and assigns a class label to the unlabeled image. Depending on the type of problem, a variety of distance measures can be used. In this work, the city-block, cosine, correlation and Euclidean distances are considered, with K = 3 fixed empirically throughout the experiment. K-NN is non-parametric; it finds the minimum distance $d$ between a training sample $M$ and a test pattern $N$, both $n$-dimensional column vectors, using

$$d_{\text{cityblock}}(M,N) = \sum_{j=1}^{n} \lvert M_j - N_j \rvert$$

$$d_{\text{cosine}}(M,N) = 1 - \frac{M^{\top} N}{\sqrt{(M^{\top} M)(N^{\top} N)}}$$

$$d_{\text{Euclidean}}(M,N) = \sqrt{(M - N)^{\top}(M - N)}$$

$$d_{\text{correlation}}(M,N) = 1 - \frac{(M - \bar{M})^{\top}(N - \bar{N})}{\sqrt{(M - \bar{M})^{\top}(M - \bar{M})}\,\sqrt{(N - \bar{N})^{\top}(N - \bar{N})}}$$

where $\bar{M}$ and $\bar{N}$ are the means of the elements of $M$ and $N$, subtracted from every element.
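A minimal NumPy sketch of the K-NN classifier with the four distance measures above and K = 3. The correlation distance is computed as the cosine distance between mean-centred vectors, which is algebraically the same as the correlation formula. With binary labels and an odd K, the majority vote cannot tie. Zero vectors (or constant vectors, for the correlation distance) would make the cosine-based distances undefined; the sketch does not guard against that. The training data are toy values.

```python
import numpy as np

def pairwise_distance(test, train, metric):
    """Distances between each test row N and each training row M."""
    if metric == "cityblock":
        return np.abs(test[:, None, :] - train[None, :, :]).sum(axis=2)
    if metric == "euclidean":
        return np.sqrt(((test[:, None, :] - train[None, :, :]) ** 2).sum(axis=2))
    if metric == "correlation":  # cosine distance of mean-centred vectors
        test = test - test.mean(axis=1, keepdims=True)
        train = train - train.mean(axis=1, keepdims=True)
        metric = "cosine"
    if metric == "cosine":
        num = test @ train.T
        den = np.linalg.norm(test, axis=1)[:, None] * np.linalg.norm(train, axis=1)[None, :]
        return 1.0 - num / den
    raise ValueError(f"unknown metric: {metric}")

def knn_predict(train_X, train_y, test_X, k=3, metric="cityblock"):
    """Majority vote among the k nearest training samples (binary labels 0/1)."""
    d = pairwise_distance(test_X, train_X, metric)
    nearest = np.argsort(d, axis=1)[:, :k]
    votes = train_y[nearest].sum(axis=1)
    return (votes > k / 2).astype(int)

train_X = np.array([[0.0, 0.1, 0.2], [0.1, 0.0, 0.3], [0.2, 0.2, 0.1],
                    [5.0, 5.5, 4.0], [5.2, 4.8, 6.0], [4.9, 5.1, 5.5]])
train_y = np.array([0, 0, 0, 1, 1, 1])
test_X = np.array([[0.1, 0.2, 0.1], [5.0, 5.0, 5.0]])
for m in ("cityblock", "euclidean"):
    print(m, knn_predict(train_X, train_y, test_X, k=3, metric=m))
```

Cosine and correlation distances ignore vector magnitude (and, for correlation, offset), so they behave differently from city-block and Euclidean distances on histogram features whose overall scale varies.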

Support Vector Machine (SVM): The SVM maps the original input space into a high-dimensional feature space through a non-linear transformation, in which an optimal separating hyperplane can be found [47]. In this work, the SVM separates the feature vectors into the two class labels. For a feature vector $Y_i$, the discriminant function

$$F(x) = W^{\top} Y_i - Z$$

splits the data into two classes, with the training samples satisfying $W^{\top} Y_i - Z \ge 1$ for one class and $W^{\top} Y_i - Z \le -1$ for the other. Here, $Y_i$ is assigned either class label 0 for female or class label 1 for male.

Precision, recall and accuracy are computed from the numbers of true positives ($T_p$), true negatives ($T_n$), false positives ($F_p$) and false negatives ($F_n$) using the following equations [50]:

$$\text{Precision} = \frac{T_p}{T_p + F_p} \times 100$$

$$\text{Recall} = \frac{T_p}{T_p + F_n} \times 100$$

$$\text{Accuracy} = \frac{T_p + T_n}{T_p + T_n + F_p + F_n} \times 100$$
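Tables 1 to 3 report an SVM labelled "SVM Quad", presumably an SVM with a quadratic (degree-2 polynomial) kernel. The NumPy sketch below, which assumes the common kernel form k(x, y) = (1 + xᵀy)², illustrates the non-linear mapping mentioned above: the kernel value equals an ordinary dot product in an explicitly expanded, higher-dimensional feature space, so the separating hyperplane W can live in that space without the space ever being constructed. It is an illustration of the kernel idea, not a full SVM trainer.

```python
import numpy as np
from itertools import combinations

def quadratic_kernel(x, y):
    """Degree-2 polynomial kernel k(x, y) = (1 + x.y)^2."""
    return (1.0 + x @ y) ** 2

def quadratic_feature_map(x):
    """Explicit map phi with phi(x).phi(y) == (1 + x.y)^2."""
    cross = [np.sqrt(2.0) * x[i] * x[j] for i, j in combinations(range(len(x)), 2)]
    return np.concatenate([[1.0], np.sqrt(2.0) * x, x ** 2, cross])

x = np.array([0.5, -1.0, 2.0])
y = np.array([1.5, 0.25, -0.5])
k_val = quadratic_kernel(x, y)
explicit = quadratic_feature_map(x) @ quadratic_feature_map(y)
print(k_val, explicit)
```

With d input features the explicit map has 1 + 2d + d(d − 1)/2 dimensions (87,153 for a 416-dimensional input), which is why SVM solvers work with the kernel values instead.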


IV. Results and Discussion

In this work, the gender discrimination ability of multimodal biometrics is investigated by fusing MB-LBP and BSIF features. The best overall result is obtained by fusing MB-LBP and BSIF rather than using either descriptor alone, because MB-LBP gathers fine, local appearance information from each block, while BSIF encodes textural information over a wider range of scales. The algorithmic steps are as follows:

Input: Multimodal images of SDUMLA-HMT database.

Output: Gender Identification using Multimodal biometrics.

Step 1: Images from the SDUMLA-HMT dataset are used for the experiments.

Step 2: Images are normalized according to the requirements of each modality; the background is then eliminated and contrast limited adaptive histogram equalization is applied.

Step 3: Features are extracted with two well-known texture descriptors, multi-block local binary patterns and binarized statistical image features, for feature-level fusion.

Step 4: Final feature vectors are generated by the concatenation rule.

Step 5: The fused features are used to train and test various parametric and non-parametric binary classifiers.

The experiments were carried out using 10-fold cross-validation with different binary classifiers, i.e., LDA, K-NN and SVM, on the publicly available SDUMLA-HMT database, in which each volunteer contributes 10 images for each modality. Further, the chi-square test for goodness of fit [56] was performed to check whether there is any correlation between the modalities, and the modalities were observed to be correlated. After computing the individual BSIF and MB-LBP feature sets and the combined BSIF+MB-LBP feature set, the classification results were divided into four categories: false positives ($F_p$), false negatives ($F_n$), true positives ($T_p$) and true negatives ($T_n$).
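The 10-fold protocol can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions: folds are stratified by gender so that every fold keeps the 59:47 male/female ratio, a 1-nearest-neighbor city-block classifier stands in for the classifiers above to keep the block self-contained, and the 20-dimensional features are synthetic. The paper does not state whether its folds were stratified or whether all images of one subject were kept in the same fold; this sketch splits at the image level.

```python
import numpy as np

def stratified_folds(y, n_folds=10, seed=0):
    """Assign each sample a fold index 0..n_folds-1, spreading each class evenly."""
    rng = np.random.default_rng(seed)
    folds = np.empty(len(y), dtype=int)
    for label in np.unique(y):
        idx = rng.permutation(np.flatnonzero(y == label))
        folds[idx] = np.arange(len(idx)) % n_folds
    return folds

def nn_cityblock(train_X, train_y, test_X):
    """1-NN with city-block distance (stand-in classifier)."""
    d = np.abs(test_X[:, None, :] - train_X[None, :, :]).sum(axis=2)
    return train_y[d.argmin(axis=1)]

def cross_validate(X, y, n_folds=10):
    """Pooled confusion counts (Tp, Fp, Fn, Tn) over all folds; 1 = male is positive."""
    folds = stratified_folds(y, n_folds)
    tp = fp = fn = tn = 0
    for f in range(n_folds):
        test, train = folds == f, folds != f
        pred = nn_cityblock(X[train], y[train], X[test])
        truth = y[test]
        tp += int(np.sum((pred == 1) & (truth == 1)))
        fp += int(np.sum((pred == 1) & (truth == 0)))
        fn += int(np.sum((pred == 0) & (truth == 1)))
        tn += int(np.sum((pred == 0) & (truth == 0)))
    return tp, fp, fn, tn

# Synthetic stand-in data: 590 "male" and 470 "female" vectors (59 and 47 subjects x 10).
rng = np.random.default_rng(42)
X = np.vstack([rng.normal(0.0, 1.0, (590, 20)), rng.normal(1.0, 1.0, (470, 20))])
y = np.array([1] * 590 + [0] * 470)
tp, fp, fn, tn = cross_validate(X, y)
print(tp, fp, fn, tn, tp + fp + fn + tn)
```

Every sample is tested exactly once, so the pooled counts always sum to 1060, matching the totals of the confusion matrices in Tables 1 to 3.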

The detailed experimental results, along with the confusion matrices, are shown in Tables 1 to 3.

Table 1. Confusion matrix and results of MB-LBP

| Classifier | Male | Female | Precision (%) | Recall (%) | Accuracy (%) |
|---|---|---|---|---|---|
| LDA | 529 | 61 | 89.66 | 90.89 | 89.2 |
| | 53 | 417 | | | |
| KNN Cityblock | 555 | 35 | 94.06 | 91.88 | 92.1 |
| | 49 | 421 | | | |
| KNN Cosine | 536 | 54 | 90.84 | 90.38 | 89.5 |
| | 57 | 413 | | | |
| KNN Euclidean | 554 | 36 | 93.89 | 89.35 | 90.4 |
| | 66 | 404 | | | |
| KNN Correlation | 539 | 51 | 91.35 | 90.43 | 89.8 |
| | 57 | 413 | | | |
| SVM Quad | 566 | 24 | 95.93 | 96.25 | 95.7 |
| | 22 | 448 | | | |

From Table 1, it is observed that the SVM classifier achieves the highest accuracy of 95.7%, while linear discriminant analysis gives the lowest accuracy of 89.2%. With K-NN (K = 3, fixed empirically), the city-block distance achieves an accuracy of 92.1%, while the cosine distance yields a lower accuracy of 89.5%. Similarly, the Euclidean distance gives an accuracy of 90.4% and the correlation distance 89.8%.
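As a worked check of the precision, recall and accuracy definitions, the short snippet below recomputes the LDA row of Table 1. The mapping of table cells to $T_p$, $F_p$, $F_n$ and $T_n$ is an assumption: the first row of each classifier is read as ($T_p$, $F_p$) and the second row as ($F_n$, $T_n$), which is the reading that reproduces the reported 89.66, 90.89 and 89.2.

```python
def metrics(tp, fp, fn, tn):
    """Precision, recall and accuracy in percent, as defined in Section III."""
    precision = tp / (tp + fp) * 100
    recall = tp / (tp + fn) * 100
    accuracy = (tp + tn) / (tp + tn + fp + fn) * 100
    return precision, recall, accuracy

# LDA row of Table 1: first row = 529, 61; second row = 53, 417.
p, r, a = metrics(tp=529, fp=61, fn=53, tn=417)
print(f"{p:.2f} {r:.2f} {a:.1f}")  # 89.66 90.89 89.2
```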

Table 2. Confusion matrix and results of BSIF

| Classifier | Male | Female | Precision (%) | Recall (%) | Accuracy (%) |
|---|---|---|---|---|---|
| LDA | 525 | 65 | 88.98 | 88.38 | 87.4 |
| | 69 | 401 | | | |
| KNN Cityblock | 567 | 23 | 96.10 | 95.77 | 95.5 |
| | 25 | 445 | | | |
| KNN Cosine | 568 | 22 | 96.27 | 94.19 | 94.6 |
| | 35 | 435 | | | |
| KNN Euclidean | 568 | 22 | 96.27 | 94.98 | 95.1 |
| | 30 | 440 | | | |
| KNN Correlation | 568 | 22 | 96.27 | 93.72 | 94.3 |
| | 38 | 432 | | | |
| SVM Quad | 586 | 4 | 99.32 | 99.15 | 99.1 |
| | 5 | 466 | | | |

From Table 2, it is observed that the SVM classifier achieves the highest accuracy of 99.1%, while linear discriminant analysis gives the lowest accuracy of 87.4%. With K-NN (K = 3, fixed empirically), the city-block distance achieves an accuracy of 95.5% and the cosine distance a lower 94.6%; likewise, the Euclidean distance gives an accuracy of 95.1% and the correlation distance 94.3%. BSIF therefore gives noticeably better results than MB-LBP with the K-NN and SVM classifiers. Next, feature-level fusion is carried out, and the results are presented in Table 3.

Table 3. Confusion matrix and result analysis of fusion (MB-LBP+BSIF)

| Classifier | Male | Female | Precision (%) | Recall (%) | Accuracy (%) |
|---|---|---|---|---|---|
| LDA | 543 | 47 | 92.03 | 92.50 | 91.4 |
| | 44 | 426 | | | |
| KNN Cityblock | 567 | 23 | 96.10 | 97.08 | 96.2 |
| | 17 | 453 | | | |
| KNN Cosine | 561 | 29 | 95.08 | 95.24 | 94.6 |
| | 28 | 442 | | | |
| KNN Euclidean | 560 | 30 | 94.91 | 95.07 | 94.4 |
| | 29 | 441 | | | |
| KNN Correlation | 561 | 29 | 95.08 | 94.92 | 94.4 |
| | 30 | 440 | | | |
| SVM Quad | 589 | 1 | 99.83 | 99.49 | 99.8 |
| | 3 | 467 | | | |

From Table 3, it is observed that the SVM classifier achieves the best result, an accuracy of 99.8%, while linear discriminant analysis gives the lowest accuracy of 91.4%. With K-NN (K = 3, fixed empirically), the city-block distance achieves an accuracy of 96.2% and the cosine distance a lower 94.6%, while the Euclidean and correlation distances perform similarly, each yielding an accuracy of 94.4%.


V. Comparative Analysis

To assess the effectiveness of the proposed method, the authors compared it with similar works found in the literature. The major drawbacks of the reported works are their reliance on self-created databases, unsuitable acquisition setups and a limited number of modalities. In contrast, the proposed method, which fuses MB-LBP and BSIF features with an SVM classifier on the publicly available SDUMLA-HMT multimodal database, outperformed the other methods in the literature with an accuracy of 99.8%; the comparison is reported in Table 4 and Fig. 4.


VI. Conclusion

Biometrics provides vital cues for identifying gender from the face or other human traits. Each biometric trait can be characterized as better or worse depending on the quality of the individual's data acquired for identification or authentication. In this paper, we analyzed different biometric traits for identifying gender using multimodal biometrics. Multimodal biometrics has emerged as a preferred choice for securing systems because combining different modalities yields better accuracy: the limitations of unimodal systems can be overcome with multiple modalities, which enhance matching performance, increase population coverage by reducing the failure-to-enroll rate, and improve resistance to spoofing. In this experiment, the MB-LBP and BSIF feature descriptors were fused to extract features from multiple biometric traits, i.e., fingerprints, face and iris. The proposed approach was tested on the SDUMLA-HMT multimodal dataset and compared with the state of the art in terms of accuracy. The experimental results illustrate that this approach achieves better performance for gender classification based on a multimodal biometric system. This work can be extended to the fusion of other modalities and feature sets to address the issues faced in implementing biometric systems for gender classification.

Conflict of Interest

The authors declare that there is no conflict of interest regarding the publication of this work/paper.

Acknowledgement

References

- S. S Gornale and Kruti R, ”Analysis of fingerprint image for gender classification using spatial and frequency domain analysis” American International Journal of Research in Science, Technology, Engineering & Mathematics (AIJRSTEM), ISSN (Print): 2328-3491, ISSN (Online): 2328-3580, ISSN (CD-ROM): 2328-3629. Issue 3, Volume 1, pp. 46-50, June-August, 2013.

- S.S Gornale and Kruti R “Fusion of Fingerprint and Age Biometric for Gender Classification Using Frequency and Texture Analysis” Signal & Image Processing: An International Journal (SIPIJ) Vol.5, No.6, December 2014. DOI: 10.5121/sipij.2014.5606, pp.: 76-85, ISSN 0976-710X ( online); 2229-3922(print).

- S.S Gornale, “Fingerprint Based Gender Classification for Biometric Security: A State-Of-The-Art Technique”, International Journal of Research in Science, Technology, Engineering & Mathematics ISSN (Print): 2328-3491, ISSN (Online): 2328-3580, ISSN (CD-ROM): 2328-3629, Vol.4 No. Issue- 3, pp.: 39-49.Dec-2014- Feb-2015.

- S.S Gornale, “Fingerprint Based Gender Classification Using Minutiae Extraction”, International Journal of Advanced Research in Computer Science (IJARCS), ISSN 0976-5697, pp.: 72-77, Volume 6, No. 1, January-February 2015.

- S.S Gornale ,Basavanna M and Kruthi R ,“Gender Classification Using Fingerprints Based On Support Vector Machines (SVM) With 10-Cross Validation Technique”, International Journal of Scientific & Engineering Research (ISSN 2229-5518) Volume 6, Issue 7, July 2015

- S.S Gornale, Malikarjun Hangarge, Rajmohan P, Kruthi R, “Haralick Feature Descriptors for Gender Classification Using Fingerprints: A Machine Learning Approach”, International Journal of Advanced Research in Computer Science and Software Engineering”, Volume 5, Issue 9, September 2015 ISSN: 2277 128X, pp.:72-78.

- S.S Gornale, Abhijit Patil and Veersheety C, “Fingerprint based Gender Identification using Discrete Wavelet Transform and Gabor Filters”, International Journal of Computer Applications (0975 – 8887) Volume 152 – No.4, pp.:34-37, October-2016.

- S.S Gornale, Basavanna M, and Kruti R, “Fingerprint Based Gender Classification Using Local Binary Pattern”, International Journal of Computational Intelligence Research ISSN 0973-1873 Volume 13, Number 2 (2017),pp.:261-271 © Research India Publications http://www.ripublication.com

- S.S Gornale, Abhijit Patil and Kruti R , “Fusion of Gabor Wavelet and Local Binary Patterns(LBP) Features sets for Gender Identification using Palm prints”, International Journal of Imaging Science and Engineering(IJISE), Published by International Technology Foundation of Research (ITFR) ISSN: 1934-9955 Vol. 10, Issue-2, pp.:1-6.June-2018

- Kruti R , Abhijit Patil and S.S Gornale ,”Fusion of Local Binary Pattern and Local Phase Quantization features set for Gender Classification using Fingerprints”, International Journal of Computer Sciences and Engineering,vol.7 ,issue 1, pp.:22-29.Feb -2019.

- S .S Gornale and Abhijit Patil,” Statistical Features Based Gender Identification Using SVM”, International Journal for Scientific Research and Development, issue 8, pp.:241-244, ISSN: 2321 0613, 2016.

- K.S. Arun and K.S. Sarath,” A machine learning approach for fingerprint based gender identification, 2011 IEEE Recent Advances in Intelligent Computational Systems, ISBN: 978-1-4244-9478-1, pp.:22-24 Sept. 2011.

- Rijo Jackson Tom, T.Arulkumara,” Fingerprint Based Gender Classification Using 2D Discrete Wavelet Transforms and Principal Component Analysis” International Journal of Engineering Trends and Technology- Volume4Issue 2 2013.

- Mangesh K. Shinde ; S.A. Annadate,” Analysis of Fingerprint Image for Gender Classification or Identification: Using Wavelet Transform and Singular Value Decomposition”, International Conference on Computing Communication Control and Automation, IEEE DOI 10.1109.

- Zs. M. Kovács-Vajna, R. Rovatti, and M. Frazzoni, “Fingerprint ridge distance computation methodologies”, Pattern Recognition, vol. 1, pp. 69–80, 2000.

- Tapia J.E., Perez C.A., Bowyer K.W. (2015) “Gender Classification from Iris Images Using Fusion of Uniform Local Binary Patterns. In: Agapito L., Bronstein M., Rother C. (eds) Computer Vision - ECCV 2014 Workshops. ECCV 2014. Lecture Notes in Computer Science, vol. 8926. Springer, Cham.

- Zhang H., Sun Z., Tan T., Wang J. (2011) “Ethnic Classification Based on Iris Images”, In: Sun Z., Lai J., Chen X., Tan T. (eds) Biometric Recognition. CCBR 2011. Lecture Notes in Computer Science, Vol 7098. Springer, Berlin, Heidelberg.

- S. Aryanmehr, Fanny Dufossé, Farsad Zamani Boroujeni, “CVBL IRIS Gender Classification Database Image Processing and Biometric Research, Computer Vision and Biometric Laboratory (CVBL)”, 2018 IEEE 3rd International Conference on Image, Vision and Computing (ICIVC), 2018.

- Shruti Nagpal , Mayank Vatsa ; Richa Singh ; Afzel Noore ; Angshul Majumdar Maneet Singh , “Gender and ethnicity classification of Iris images using deep class-encoder”, 2017 IEEE International Joint Conference on Biometrics (IJCB), DOI: 10.1109/BTAS.2017.8272755, ISSN: 2474-9699, Denver, CO, USA.2017.

- W Ming and Y Yuan, “Gender Classification Based on Geometrical Features of Palmprint Images”, The Scientific World Journal vol.2014.Article Id: 734564, pp.: 7 2014.

- Gholamreza Amayeh, George Bebis and Mircea Nicolescu, “Gender Classification from Hand Shapes”, 2008 IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops, 2008, pp. 1-7, DOI: 10.11.09/ Computer Vision and Pattern Recognition workshop.

- S.S Gornale ,Abhijit Patil and Kruti R ,” Fusion Of Gabor Wavelet And Local Binary Patterns (LBP) Features Sets For Gender Identification Using Palmprints”, International journal of Imaging Science and Engineering,Vol.10,Issue No.2,April2018.

- G. Azzopardi, A. Greco, A. Saggese and M. Vento, "Fast gender recognition in videos using a novel descriptor based on the gradient magnitudes of facial landmarks," 2017 14th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Lecce, 2017, pp. 1-6, doi: 10.1109/AVSS.2017.8078525.

- Zhihuai Xie, Zhenhua G and Chengshan Q,” Palmprint gender classification by Convolution neural network”, IET Computer Vision, Vol 12, Issue 4 pp.:476-483 ,2018,

- Yilong Yin, Lili Liu, and Xiwei Sun,” SDUMLA-HMT: A Multimodal Biometric Database”, The 6th Chinese Conference on Biometric Recognition (CCBR 2011), LNCS 7098, pp.: 260-268, Beijing, China, 2011.

- Paul Viola and Michael J. Jones, “Robust Real-Time Face Detection”, International Journal of Computer Vision, vol. 57, no. 2, pp. 137–154, 2004.

- Len Bui ; Dat Tran ; Xu Huang ; Girija Chetty, “Classification of gender and face based on gradient faces”, 3rd European Workshop on Visual Information Processing, 10.1109/EuVIP.2011.6045544 Paris, France -July 2011.

- Arnulf B. A. Graf and Felix A. Wichmann, “Gender Classification of Human Faces”, Biologically Motivated Computer Vision, eds. H.H. B¨ulthoff, S.-W. Lee, T.A. Poggio and C. Wallraven, LNCS 2525, pp.: 491-501, 2002, Springer Verlag, Heidelberg.

- S.Ravi, S.Wilson, “Face Detection with Facial Features and Gender Classification Based On Support Vector Machine”, 2010 Special Issue - International Journal of Imaging Science and Engineering,

- Abul Hasnat ; Santanu Haider ; Debotosh Bhattacharjee ; Mita Nasipuri , “A proposed system for gender classification using lower part of face image:, 2015 International Conference on Information Processing (ICIP), DOI: 10.1109/INFOP.2015.7489451, ISBN: 978-1-4673-7758-4, Pune, India.

- Burhan Ergen and Serdar Abut, "Gender Recognition Using Facial Images" In proceedings of International Conference on Agriculture and Biotechnology IPCBEE vol.60 IACSIT Press, Singapore,V.60. 22, pp.: 112-117, (2013).

- M. Castrillón, J. Lorenzo-Navarro, and E. Ramón-Balmaseda, “Descriptors and regions of interest fusion for in- and cross-database gender classification in the wild”, Image and Vision Computing, vol. 57, pp. 15-24, January 2017.

- A. Jain,J. Huang and S. Fang, "Gender identification using frontal facial images," 2005 IEEE International Conference on Multimedia and Expo, 2005.

- Jakub Walczak, Adam Wojciechowski, " Improved gender classification using Discrete Wavelet Transform and hybrid Support Vector Machine", Machine GRAPHICS & VISION vol. 25, no. pp.: 27-34, April,2016

- Hadi Harb , Liming Chen ,” Voice-Based Gender Identification in Multimedia Applications“, Journal of Intelligent Information Systems, vol.24: pp.: 179, 2005.

- Rafik Djemili ; Hocine Bourouba ; Mohamed Cherif Amara Korba,” A speech signal based gender identification system using four classifiers”, 2012 International Conference on Multimedia Computing and Systems ,ISBN no.978-1-4673-1520-3, 2012.

- Daniel Reid, Sina Samangooei, Cunjian Chen, Mark Nixon, and Arun Ross, “Soft biometrics for surveillance: an overview”, Machine Learning: Theory and Applications, Elsevier, pp. 327–352, 2013.

- L Walawalkar, M. Yeasin, A. Narasimhamurthy, and R. Sharma. Support vector learning for gender classification using audio and visual cues. International Journal of Pattern Recognition and Artificial Intelligence, Vol.17 issue 3 pp.:417–439, 2003.

- X. Li, X. Zhao, H. Liu, Y. Fu and Y. Liu, “Multimodality gender estimation using Bayesian hierarchical model”, 2010 IEEE International Conference on Acoustics, Speech and Signal Processing, Dallas, TX, pp.: 5590-5593, 2010, DOI: 10.1109/ICASSP.2010.5495242.

- Mohamed Abouelenien, Veronica Perez-Rosas, Rada Mihalcea and Mihai Burzo, “Multimodal Gender Detection”, in Proceedings of ICMI ’17, Glasgow, UK, pp.: 302-311, November 2017, ISBN: 978-1-4503-5543-8.

- A. Hadid and M. Pietikainen, “Combining motion and appearance for gender classification from video sequences”, 2008 19th International Conference on Pattern Recognition, Tampa, Florida, pp.: 1-4, 2008, DOI: 10.1109/ICPR.2008.4760995.

- Caifeng Shan, Shaogang Gong and Peter W. McOwan, “Learning Gender from Human Gaits and Faces”, 2007 IEEE Conference on Advanced Video and Signal Based Surveillance, Washington, DC, USA, pp.: 505-510, 2007, ISBN: 978-1-4244-1695-0.

- G. Prabhu and Poornima S., “Minimize Search Time through Gender Classification from Multimodal Biometrics”, 2nd International Symposium on Big Data and Cloud Computing (ISBCC’15), Procedia Computer Science, Vol. 50, pp.: 289-294, 2015.

- Juho Kannala and E. Rahtu, “BSIF: Binarized statistical image features”, International Conference on Pattern Recognition (ICPR), pp.: 1363-1366, November 2012.

- Timo Ojala, Matti Pietikainen and Topi Maenpaa, “Multi-resolution gray-scale and rotation invariant texture classification with local binary patterns”, IEEE Transactions on Pattern Analysis and Machine Intelligence, Vol. 24, issue 7, pp.: 971-987, 2002.

- S. S. Gornale, Pooja U. Patravali, Kiran S. Marathe and Prakash Hiremath, “Determination of Osteoarthritis using Histogram of Oriented Gradients and Multiclass SVM”, International Journal of Image, Graphics and Signal Processing (IJIGSP), Vol. 9, no. 12, pp.: 41-49, 2017, DOI: 10.5815/ijigsp.2017.12.05.

- Shivananda V. Seeri, J. D. Pujari and P. S. Hiremath, “Text Localization and Character Extraction in Natural Scene Images using Contourlet Transform and SVM Classifier”, International Journal of Image, Graphics and Signal Processing (IJIGSP), Vol. 8, issue 5, pp.: 36-42, May 2016, DOI: 10.5815/ijigsp.2016.05.02.

- S. S. Gornale, Pooja U. Patravali, Archana M. Uppin and Prakash S. Hiremath, “Study of Segmentation Techniques for Assessment of Osteoarthritis in Knee X-ray Images”, International Journal of Image, Graphics and Signal Processing (IJIGSP), Vol. 11, issue 2, pp.: 48-57, 2019, DOI: 10.5815/ijigsp.2019.02.06.

- S. S. Gornale, Ashvini K. Babaleshwar and Pravin L. Yannawar, “Detection and Classification of Signage’s from Random Mobile Videos Using Local Binary Patterns”, International Journal of Image, Graphics and Signal Processing (IJIGSP), Vol. 10, issue 2, pp.: 52-59, 2018, DOI: 10.5815/ijigsp.2018.02.06.

- Radha ShankarMani and Vijayalakshmi, “Big Data Analytics”, 2nd Edition, Wiley India Pvt. Ltd., 2017, ISBN: 978-81-265-6575-7.

- Ramadan Gad, Ayman El-Sayed, Nawal El-Fishawy and M. Zorkany, “Multi-Biometric Systems: A State of the Art Survey and Research Directions”, International Journal of Advanced Computer Science and Applications (IJACSA), Vol. 6, issue 6, 2015.

- Maneet Singh, Richa Singh and Arun Ross, “A Comprehensive Overview of Biometric Fusion”, Information Fusion, February 11, 2019.

- Chunyu Zhang, Hui Ding, Yuanyuan Shang et al., “Gender Classification based on Multi scale Facial Fusion Features”, Mathematical Problems in Engineering (Hindawi), Vol. 2018, Article ID 1924151, 6 pages, 2018, DOI: https://doi.org/10.1155/2018/1924151.

- V. Thomas, N. V. Chawla, K. W. Bowyer and P. J. Flynn, “Learning to predict gender from Iris Images”, Proceedings of the 2007 First IEEE International Conference on Biometrics: Theory, Applications, and Systems, Crystal City, VA, USA, pp.: 1-5, September 2007.

- V. Singh, V. Shokeen and M. B. Singh, “Comparison of Feature Extraction Algorithms for Gender Classification from Face Images”, International Journal of Engineering Research and Technology, Vol. 2, issue 5, pp.: 1313-1318, 2013.

- Linda N. Groat and David Wang, “Architectural Research Methods”, 2nd Edition, Wiley Publishing House, April 2013, ISBN: 978-0-470-90855-6.

- S. S. Gornale, Abhijit Patil, Mallikarjun Hangarge and R. Pardesi, “Automatic Human Gender Identification Using Palmprint”, in: Luhach A., Hawari K., Mihai I., Hsiung P.A., Mishra R. (eds), Smart Computational Strategies: Theoretical and Practical Aspects, Springer, Singapore, pp.: 49-58, 22 March 2019, ISBN: 978-981-13-6295-8, DOI: https://doi.org/10.1007/978-981-13-6295-8_5.

- S. S. Gornale, Abhijit Patil and Mallikarjun Hangarge, “Binarized Statistical Image Feature set for Palmprint based Gender Identification”, Book of Abstracts, International Conference on Machine Learning, Image Processing, Network Security and Data Sciences (MIND-2019), NIT Kurukshetra, India, pp.: 61, 3-4 March 2019.

- G. Azzopardi, A. Greco, A. Saggese and M. Vento, “Fusion of Domain-Specific and Trainable Features for Gender Recognition from Face Images”, IEEE Access, Vol. 6, pp.: 24171-24183, 2018, DOI: 10.1109/ACCESS.2018.2823378.

- Vijayalakshmi G. V. Mahesh and Alex Noel Joseph Raj, “Zernike Moments and Machine Learning Based Gender Classification Using Facial Images”, in: Abraham A., Cherukuri A., Madureira A., Muda A. (eds), Proceedings of the Eighth International Conference on Soft Computing and Pattern Recognition (SoCPaR 2016), Advances in Intelligent Systems and Computing, Vol. 614, Springer, Cham, pp.: 398-408, ISBN: 978-3-319-60618-7, DOI: https://doi.org/10.1007/978-3-319-60618-7_39.