Body and Face Animation Based on Motion Capture

Authors: Xiaoting Wang, Lu Wang, Guosheng Wu

Journal: International Journal of Information Engineering and Electronic Business (IJIEEB)

Issue: no. 2, vol. 3, 2011.


This paper introduces motion capture technology and its use in computer animation. Motion capture is a powerful aid in computer animation and a supplement to traditional key-frame animation. We use professional cameras to record the body motion and facial expressions of an actor and then process the data in software to eliminate occlusion and confusion errors. For data that is still unsatisfactory, we use a data filter to smooth the motion by cutting out distorted frames. We then import the captured data into Motionbuilder to adjust the motion and preview the real-time animation. Finally, in Maya we combine the motion data with the character model, let the captured data drive the character, add the scene model and music, and export the complete animation. Using this method, we designed animations of military boxing, basketball playing, folk dancing and facial expressions.

Keywords: Motion capture, computer animation, Maya, Motionbuilder


Published Online March 2011 in MECS

I. Introduction

In a performance animation system, the motion capture system records the actor's motion and expressions as required by the drama [1]. These motions and expressions then drive a character model in animation software, so that the model performs the same motion and expression as the actor. The task of motion capture is to detect and record the trajectories of the actor's body or facial muscles and convert them into digital form. Motion capture is now the most important and most complex part of performance animation, but it is still immature and leaves considerable room for improvement: capture systems are expected to become more precise, more adaptable to complex environments, and more intelligent in assisting decisions.

A motion capture system does not need to record the motion of every point on the actor, which would impose a huge computational load; it records only key points that represent the movement as a whole. The key points are mostly on the body joints or the facial muscles [2], and they determine the locations of the other points during the movement. From the trajectories of the key points, combined with physical and physiological constraints, we can compose the final 3D motion.
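As a minimal illustration of how a few key points can determine a pose, the sketch below computes the angle at a joint from three marker positions, for example hip, knee and ankle. The function name and the marker coordinates are hypothetical, and a real system solves a whole skeleton rather than isolated angles.

```python
import math


def joint_angle(proximal, joint, distal):
    """Return the angle in degrees at `joint` between the segments
    joint->proximal and joint->distal; each point is an (x, y, z) tuple."""
    u = [p - j for p, j in zip(proximal, joint)]
    v = [d - j for d, j in zip(distal, joint)]
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    # Clamp to [-1, 1] so rounding noise cannot push acos out of its domain.
    cos_theta = max(-1.0, min(1.0, dot / (norm_u * norm_v)))
    return math.degrees(math.acos(cos_theta))


# Hypothetical hip, knee and ankle markers (metres) for a straight leg.
hip, knee, ankle = (0.0, 1.0, 0.0), (0.0, 0.5, 0.0), (0.0, 0.0, 0.0)
print(round(joint_angle(hip, knee, ankle), 1))  # 180.0
```

Tracking such angles frame by frame is one way to see why a sparse set of markers is enough to reconstruct the motion of a limb.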

Performance animation systems include body motion capture and facial expression capture; some are real-time systems while others work off-line. According to the underlying technology, motion capture can be divided into four types: mechanical, acoustic, electromagnetic and optical. A typical motion capture system includes sensors, a signal capture device, a data transmission device and a data processing device.

In this work, we use an optical motion capture system based on computer vision to capture motion and expressions. In principle, a point in 3D space can be located by two different cameras, and its trajectory can be obtained from high-speed continuous image sequences. The advantages of optical motion capture are that the actor can perform freely, without the restriction of cables or mechanical devices, and that its high sampling rate meets the needs of most high-speed motions. The disadvantages are that post-processing is computationally expensive, that ambient lighting and reflections affect the capture result, and that nearby markers can be confused or occluded when complex motions are captured.
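The two-camera principle can be sketched with midpoint triangulation, a simple textbook method chosen here only for illustration. It assumes each camera is already calibrated, so that its centre and the viewing ray through the marker's image are known in world coordinates. The paper does not say which reconstruction method Blade uses, and practical systems typically combine every camera that sees a marker in a least-squares solve. The function name and coordinates are hypothetical.

```python
def triangulate_midpoint(c1, d1, c2, d2):
    """Locate a 3D point from two viewing rays by midpoint triangulation.

    Ray i starts at camera centre ci and points along direction di.
    Returns the midpoint of the shortest segment joining the two rays,
    which equals their intersection when the rays actually meet.
    """
    def sub(a, b):
        return [x - y for x, y in zip(a, b)]

    def add(a, b):
        return [x + y for x, y in zip(a, b)]

    def scale(a, s):
        return [x * s for x in a]

    def dot(a, b):
        return sum(x * y for x, y in zip(a, b))

    w0 = sub(c1, c2)
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w0), dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("viewing rays are parallel; the point cannot be located")
    s = (b * e - c * d) / denom  # parameter of the closest point on ray 1
    t = (a * e - b * d) / denom  # parameter of the closest point on ray 2
    p1 = add(c1, scale(d1, s))
    p2 = add(c2, scale(d2, t))
    return scale(add(p1, p2), 0.5)


# Two hypothetical cameras 2 m apart, both seeing a marker at (1, 1, 0).
print(triangulate_midpoint((0, 0, 0), (1, 1, 0), (2, 0, 0), (-1, 1, 0)))  # [1.0, 1.0, 0.0]
```

With noisy rays that do not quite intersect, the midpoint is a compromise between the two closest points, and the gap between them can serve as a residual for rejecting bad matches.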

The remainder of this paper is organized as follows: Section II introduces the history and applications of motion capture; Section III describes our experimental environment; Section IV outlines the steps of motion capture; Section V explains the detailed capture and processing procedure; and the final part presents some examples.

II. Background

Motion capture technology appeared in animation production in the 1970s, when Disney tried to copy continuous photographs of actors to improve the animation. Although the resulting motion was very realistic, the animation lacked drama and cartoon quality. In the 1980s, university laboratories began research on motion capture, which attracted growing attention from researchers and businesses, and the technology gradually moved from an experimental study to a practical tool. With the rapid development of computer hardware and software and the demands of animation, motion capture has now reached a practical stage, and a number of manufacturers have introduced commercial motion capture equipment, such as Vicon [3], Motion Analysis [4] and Polhemus [5]. Its applications have also extended beyond performance animation into virtual reality, games, ergonomics, simulation training and biomechanics research.

Motion capture is well suited to highly realistic animated films and games because it can make an avatar move like a real person. It can greatly improve the efficiency of animation production, reduce cost, make animation more realistic and raise its overall quality. Motion capture also provides a new means of human-machine interaction [6]: gestures and expressions obtained by motion capture can serve as input for controlling a computer. The technology is also important in virtual reality [7]. To realize interaction between a human and a virtual environment, the locations and orientations of the head, hands or body must be determined and sent back to the display and control system. In robotic control [8], a robot in a dangerous environment can reproduce the motion performed by an engineer in a safe environment, enabling remote control. In interactive games, captured motion can drive game characters and give players a new sense of participation, as with Kinect for Xbox 360 [9]. Motion capture is also very useful in athletic training, bringing traditional training based only on experience into the digital era: an athlete's motion can be recorded and analyzed quantitatively on a computer and combined with human physiology and physics to improve training techniques [10].

III. Capture Environment

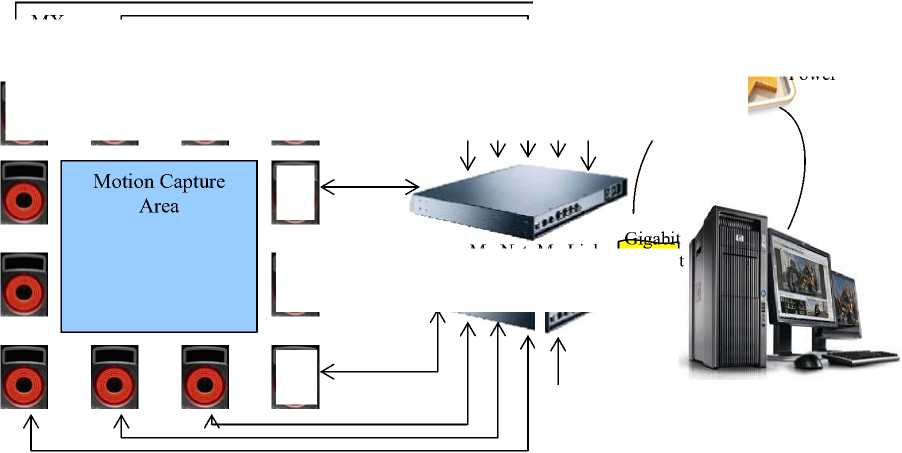

In our experimental environment, we use 8 VICON MX T40 cameras (4 megapixels) and 4 VICON MX T160 cameras (16 megapixels) mounted on the ceiling of a 10 m × 10 m square area. Fig. 1 shows the architecture of the motion capture system.

The effective capture area is 7 m × 7 m. The high-precision T160 cameras are used to capture facial expressions, and all 12 cameras are used to capture body motion. Besides the MX cameras, the motion capture system includes MX units, MX software, a workstation, MX cables and MX peripherals. The MX units are MX Net and MX Link, which create a distributed architecture for the 12 MX cameras. The MX software is Blade and the workstation is an HP Z800. Proprietary MX cables connect the system components and carry power, Ethernet communication, synchronization signals, video signals and data. MX peripherals include a calibration kit, suits and markers.

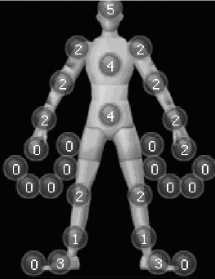

The cameras can identify each marker accurately because every marker produces its own grayscale image (Fig. 2) that distinguishes it from other markers, much as different people have different faces. Each camera takes sequential images of all markers, and the 3D locations of all markers are then reconstructed from the images of the cameras.

Figure 2. Marker’s grayscale image.
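The paper does not describe how Blade processes these grayscale images. As an illustration only, a common first step in optical systems is to reduce each marker's grayscale blob to a sub-pixel 2D centre by intensity-weighted averaging; the 2D centres from several cameras are then what triangulation combines. The function name and the `threshold` parameter are hypothetical.

```python
def weighted_centroid(patch, threshold=0):
    """Return the (row, col) intensity-weighted centroid of a grayscale patch.

    `patch` is a list of rows of pixel intensities. Pixels at or below
    `threshold` are treated as background and ignored.
    """
    total = 0.0
    sum_r = 0.0
    sum_c = 0.0
    for r, row in enumerate(patch):
        for c, value in enumerate(row):
            if value > threshold:
                total += value
                sum_r += r * value
                sum_c += c * value
    if total == 0:
        raise ValueError("no pixels above threshold")
    return sum_r / total, sum_c / total


# A hypothetical, symmetric 3 x 3 marker blob centred on the middle pixel.
blob = [
    [0, 10, 0],
    [10, 40, 10],
    [0, 10, 0],
]
print(weighted_centroid(blob))  # (1.0, 1.0)
```

Weighting by intensity lets the centre fall between pixels, which is why such blobs are used instead of just the brightest pixel.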

IV. Schedule of Motion Capture

Before motion capture, users invite a director and actors and supply the storyboard of the drama. The director explains the content and the performance to the actors, who practice until they meet the requirements. Because of the special characteristics of motion capture, actors sometimes need to perform differently from how they would in a live-action film, and the director has to guide them based on his or her experience.

Figure 1. Motion capture system (MX cameras connected through MX Net and MX Link, with power and Ethernet connections).

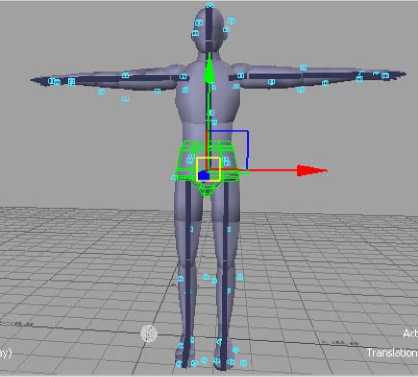

A. Character model

Character models (Fig. 3) suitable for the drama are provided by users and can be designed in Maya or other software. In animations that pursue realism, the model should resemble the performer whose motion will be captured as closely as possible. The captured points then fit the model well, so the motion performed by the character model shows little distortion. In imaginative animations, however, the models are fanciful and do not match the performer, so the performer must adjust his or her motion to imitate how the fanciful character would move.

Figure 3. Character model in Maya.

B. Storyboard

Storyboarding is an important part of animation production. Users invite a director and actors, the director hands out the storyboard of the drama, and the actors practice the performance with the director's help. A storyboard is a series of illustrations or images displayed in sequence to pre-visualize a motion picture, animation, motion graphic, interactive media sequence or website interaction. Storyboard software helps users turn an idea into a visual story that will become a complete production. Planning ahead saves considerable time and effort during production, and additional planning of the storyboard further streamlines the workflow.

C. Motion capture and data manipulation

1) Capture the actors' motion (Fig. 4) as required by the director. The capture process, described in detail in the next section, includes calibrating the capture environment, preparing the actor, capturing the preparatory motion and capturing the actual performance.

2) Process the captured motion data and export it in the appropriate data format. Errors in the captured data are unavoidable. Some are caused by occluded points and some by the effect of lighting; in other cases the points are found but cannot be labeled correctly. Some errors can be eliminated by manual labeling, and others can be smoothed by frame interpolation.

Figure 4. Motion capture in our experiment environment.
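The paper does not specify which interpolation Blade or Motionbuilder applies. As a minimal sketch, assuming one marker coordinate per frame with None for frames where the marker was lost, linear interpolation across each interior gap could look like this (the function name is hypothetical):

```python
def fill_gaps_linear(values):
    """Fill interior runs of None by linear interpolation between the
    nearest valid frames on either side.

    Leading and trailing gaps are left as None because there is no valid
    frame on one side to interpolate from.
    """
    filled = list(values)
    n = len(filled)
    i = 0
    while i < n:
        if filled[i] is None:
            start = i - 1  # last valid frame before the gap (-1 if none)
            end = i
            while end < n and filled[end] is None:
                end += 1  # first valid frame after the gap (n if none)
            if start >= 0 and end < n:
                v0, v1 = filled[start], filled[end]
                span = end - start
                for k in range(start + 1, end):
                    filled[k] = v0 + (v1 - v0) * (k - start) / span
            i = end
        else:
            i += 1
    return filled


# Hypothetical x coordinate of one marker, lost for two frames.
print(fill_gaps_linear([0.0, None, None, 3.0]))  # [0.0, 1.0, 2.0, 3.0]
```

Each of x, y and z would be filled separately. Linear interpolation is only adequate for short gaps; for longer ones the manual labeling mentioned above remains necessary.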

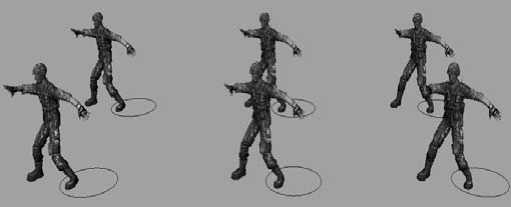

D. Using captured data to drive the model

Figure 5. Motion capture data driven characters.

The captured data drive the character model so that it performs the same motion as the actor (Fig. 5). At this step, a prototype of the motion capture animation can be seen. The result depends on both the quality of the captured motion and the model we created.

V. The Process of Our System

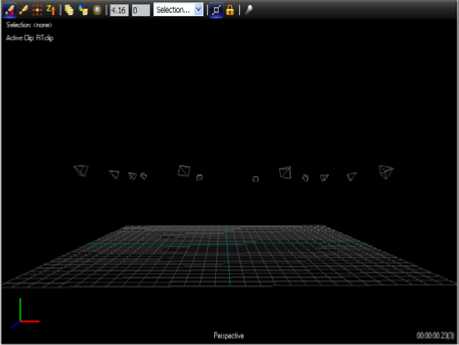

A. Calibrate environment

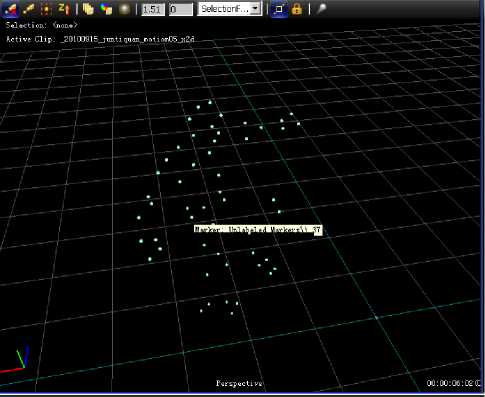

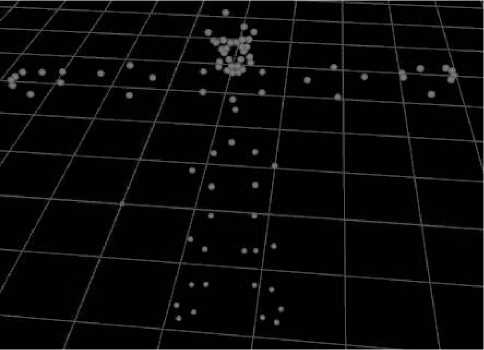

First, we calibrate the environment. This step is important because any calibration error will cause faults no matter how good the post-processing is. Calibration includes camera calibration and calibration of the volume origin and axes, which determines the floor and the capture volume of the environment. The step is successful when the scene displayed on screen (Fig. 6) matches the actual environment.

Figure 6. Experiment environment.
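How Blade sets the volume origin and axes internally is not described in the paper. As an illustrative sketch only, assuming three markers of a calibration object are placed on the floor (one at the desired origin, one along the +X axis and one on the +Y side), an orthonormal frame can be built from them and reconstructed points expressed in it. This covers only the origin-and-axes step, not camera calibration, and all names are hypothetical.

```python
import math


def _sub(a, b):
    return [x - y for x, y in zip(a, b)]


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def _cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]


def _unit(a):
    n = math.sqrt(_dot(a, a))
    return [x / n for x in a]


def volume_frame(origin, x_point, plane_point):
    """Build the capture-volume axes from three floor markers.

    origin      -> the volume origin
    x_point     -> a marker on the +X axis
    plane_point -> a marker on the +Y side of the floor (assumption),
                   so that Z = X cross Y points up, away from the floor
    """
    x_axis = _unit(_sub(x_point, origin))
    z_axis = _unit(_cross(x_axis, _sub(plane_point, origin)))
    y_axis = _cross(z_axis, x_axis)  # unit length because Z and X are orthonormal
    return origin, (x_axis, y_axis, z_axis)


def to_volume_coords(point, frame):
    """Express a reconstructed point in the calibrated volume coordinates."""
    origin, axes = frame
    offset = _sub(point, origin)
    return [_dot(offset, axis) for axis in axes]


frame = volume_frame((1.0, 1.0, 0.0), (2.0, 1.0, 0.0), (1.0, 2.0, 0.0))
print(to_volume_coords((3.0, 4.0, 5.0), frame))  # [2.0, 3.0, 5.0]
```

Checking that a marker resting on the floor comes out with a Z coordinate near zero is a simple way to confirm, in the spirit of the on-screen comparison above, that the floor has been set correctly.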

B. Actor preparation

At the same time, the actor (Fig. 7) puts on the tight-fitting suit, and markers are attached to the suit or face according to the selected template. You can design your own template if the capture has special requirements. The markers on the suit or face must match those on the template.

Figure 7. Actor with markers.
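Checking that the attached markers agree with the template amounts to comparing two sets of labels. A minimal sketch follows; the function and the marker names are hypothetical and are not taken from any real template.

```python
def check_against_template(template_labels, attached_labels):
    """Compare the markers attached to the actor with the selected template.

    Returns the template markers that are missing and any attached markers
    the template does not define; both lists are empty when they agree.
    """
    template, attached = set(template_labels), set(attached_labels)
    return {
        "missing": sorted(template - attached),
        "unexpected": sorted(attached - template),
    }


# Hypothetical marker labels, for illustration only.
template = ["head_front", "left_knee", "right_knee", "left_ankle"]
attached = ["head_front", "left_knee", "left_ankle", "chest"]
print(check_against_template(template, attached))
# {'missing': ['right_knee'], 'unexpected': ['chest']}
```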

C. Capture the preparatory motion

The aim of capturing the preparatory motion is to identify as many markers as possible and obtain the actor's skeleton. Because the skeleton is used in the following steps, the actor should perform the motion in a standard way so that a correct skeleton is obtained.
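The paper does not describe how Blade solves the skeleton from the preparatory motion. To illustrate the physical constraint involved, namely that markers at the two ends of a rigid segment stay a roughly constant distance apart, the hypothetical function below estimates a segment length as the median inter-marker distance over the trial. A real skeleton solver fits a full kinematic model, so treat this only as a sketch.

```python
import math
import statistics


def segment_length(markers_a, markers_b):
    """Estimate a bone length as the median distance between two markers
    over all frames of the preparatory motion in which both are visible.

    Each argument is a per-frame list of (x, y, z) tuples, with None for
    frames where the marker was not reconstructed.
    """
    distances = [
        math.dist(a, b)
        for a, b in zip(markers_a, markers_b)
        if a is not None and b is not None
    ]
    if not distances:
        raise ValueError("the two markers are never visible in the same frame")
    # The median tolerates a few mislabeled or noisy frames better than the mean.
    return statistics.median(distances)


# Hypothetical knee and ankle markers over four frames (metres);
# the last frame contains a mislabeled ankle marker.
knee = [(0.0, 0.50, 0.0), (0.1, 0.50, 0.0), None, (0.2, 0.50, 0.0)]
ankle = [(0.0, 0.08, 0.0), (0.1, 0.09, 0.0), (0.3, 0.10, 0.0), (0.2, 0.60, 0.0)]
print(segment_length(knee, ankle))  # approximately 0.41
```

This also shows why the actor should move in a standard way during the preparatory motion: frames in which markers are well separated and correctly identified make such estimates reliable.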

D. Capture and edit motion

After a satisfactory skeleton is obtained, the capture process is simple: we just record the motion. However, some markers may well not be identified correctly (Fig. 8). If markers are missing in only a few frames, the defect will not affect the whole motion; if they are missing over a long enough run of consecutive frames, it causes serious problems. In that case we must assign the correct names to the missing markers manually to ensure correct labeling and a correct skeleton.

Figure 8. Captured motion with missing marker.
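To make the distinction between a few missing frames and a long run concrete, the sketch below finds each run of consecutive frames in which a marker is missing and flags runs longer than a threshold for manual labeling. The paper gives no threshold; the `max_gap` default of 5 frames and the function names are hypothetical.

```python
def missing_runs(present):
    """Return (start_frame, length) for each run of consecutive frames in
    which a marker is missing; `present` holds one boolean per frame."""
    runs = []
    start = None
    for frame, ok in enumerate(present):
        if not ok and start is None:
            start = frame  # a gap begins
        elif ok and start is not None:
            runs.append((start, frame - start))  # the gap ends
            start = None
    if start is not None:  # gap still open at the last frame
        runs.append((start, len(present) - start))
    return runs


def needs_manual_labeling(present, max_gap=5):
    """Runs longer than `max_gap` frames (hypothetical threshold) are flagged."""
    return [run for run in missing_runs(present) if run[1] > max_gap]


# Hypothetical visibility of one marker: a 1-frame gap and a 7-frame gap.
present = [True, False, True] + [False] * 7 + [True]
print(needs_manual_labeling(present))  # [(3, 7)]
```

Short runs can be left to interpolation, while the flagged runs are the ones where the missing markers must be named by hand.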

E. Motion capture data used in Motionbuilder

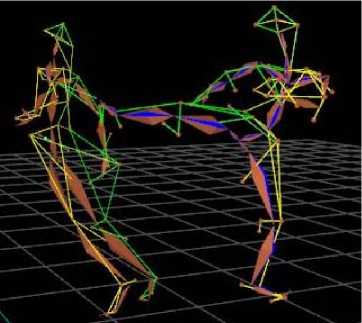

Import the captured data into Motionbuilder to view the real-time animation and perform motion editing and data cleanup. First we fit the actor model in Motionbuilder to the captured markers (Fig. 9a); then we connect the reference points on the actor to the captured markers one by one (Fig. 9b). We let the captured motion drive the actor, and if the result is satisfactory, we bind the motion data to the skeleton for the next step.

Figure 9. Motion capture data driven actor in Motionbuilder: a) actor model fitted to the captured markers; b) actor connected to the captured markers.

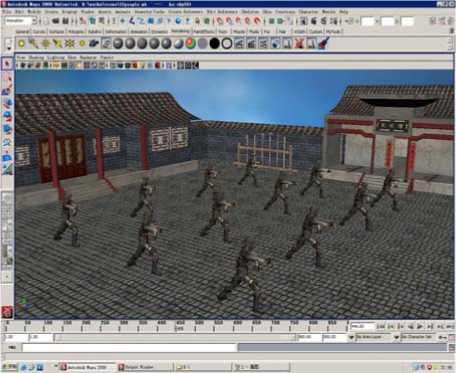

F. Motion in Maya

In this phase, the captured data, the skeleton and the character designed in Maya are combined. We finish the animation and add the scene in Maya, then add music and special effects and export the final animation (Fig. 10).

Figure 10. Military boxing.

G. Capture facial expression

As with body capture, we can capture facial expressions. With tiny markers attached to the face (Fig. 11), facial expressions are captured by the high-resolution T160 cameras. The number and locations of the markers can be arranged into a face template according to the actual capture requirements. Fig. 12 shows the animation of the mouth and eyes.

Figure 12. Facial expression. a) Mouth closed. b) Mouth open. c) Grin. d) Eyes open. e) Eyes closed.
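One way to quantify mouth states such as those in Fig. 12 is to divide the vertical gap between an upper-lip and a lower-lip marker by the mouth width between the two corner markers, which removes the effect of face size. This is an illustrative assumption rather than the authors' method; the marker roles, function names and the 0.3 threshold are hypothetical.

```python
import math


def mouth_opening_ratio(upper_lip, lower_lip, left_corner, right_corner):
    """Vertical lip gap divided by mouth width; each marker is (x, y, z).

    Normalizing by the width makes the value comparable across faces.
    """
    return math.dist(upper_lip, lower_lip) / math.dist(left_corner, right_corner)


def mouth_state(ratio, open_threshold=0.3):
    """Classify the mouth as open or closed (threshold is hypothetical)."""
    return "open" if ratio > open_threshold else "closed"


# Hypothetical lip and corner markers (centimetres) for an open mouth.
ratio = mouth_opening_ratio((0.0, 1.0, 0.0), (0.0, -1.0, 0.0),
                            (-2.0, 0.0, 0.0), (2.0, 0.0, 0.0))
print(ratio, mouth_state(ratio))
```

A per-frame ratio like this could also drive a blend between "mouth closed" and "mouth open" shapes on the character's face.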

H. Capture the full body

If the motion focuses on finger movement, we can also capture the fingers together with the body. Fig. 13 shows the markers on a glove and Fig. 14 shows full-body movement with the face; all of them can be captured together. The hand template can also be changed to use more or fewer markers.

Figure 11. Markers on face.

Figure 13. Markers on glove.

Figure 14. Markers on full body and face.

I. Multi-person interaction

If the animation requires interaction, we can capture the motions of two or more people together (Fig. 15). However, the more people there are in the scene, the more markers occlude one another, which causes more errors and requires more correction.

Figure 15. Multi-person motion capture.

VI. Samples

We plan to capture more kinds of motion and set up an online motion library. So far we have captured walking, football, basketball (Fig. 16), folk dancing (Fig. 17) and Chinese kung fu series.

Figure 16. Basketball playing.

VII. Conclusion and Future Work

This paper describes the use of body and face motion capture in computer animation and gives some examples. Our experience shows that applying motion capture to animated characters produces vivid motion and saves more time than traditional key-frame animation. In future work we plan to combine motion capture with key-frame animation, taking advantage of both to produce animation efficiently. As more kinds of data are captured, we will enrich our motion capture library and provide the data to researchers who do not have a motion capture system.

Acknowledgment

This work was partly supported by Shandong Provincial Natural Science Foundation, China (ZR2010FQ011).

References

[1] A. Menache, Understanding Motion Capture for Computer Animation and Video Games, Morgan Kaufmann, 1999.

[2] E. Plenge, Facial Motion Capture with Sparse 2D-to-3D Active Appearance Models, Master's thesis, 2008.

[3] Vicon, http://www.vicon.com/

[4] Motion Analysis, http://www.motionanalysis.com/

[5] Polhemus, http://www.polhemus.com/

[6] Q. Wu, M. Kazakevich, R. Taylor and P. Boulanger, "Interaction with a Virtual Character through Performance Based Animation", Lecture Notes in Computer Science, vol. 6133, 2010, pp. 285-288.

[7] E. Granum, B. Holmqvist, S. Kolstrup, K. Halskov Madsen and L. Qvortrup, Virtual Interaction: Interaction in Virtual Inhabited 3D Worlds, 1st ed., Springer, 2000.

[8] A. Ohta and N. Amano, "Vision-Based Motion Capture for Human Support Robot in Action", SICE-ICASE International Joint Conference, 2006.

[9] Kinect for Xbox 360, http://www.xbox.com/en-US/kinect

[10] Z. Wang, S. Xia, X. Qiu, Y. Wei, L. Liu and H. Huang, "Digital 3D Trampoline Simulating System – VHTrampoline", Chinese Journal of Computers, vol. 30, no. 3, Mar. 2007.