Comprehensive method for multimodal data analysis based on optimization approach

Authors: Ivanov I.A., Brester C. Yu., Sopov E.A.

Journal: Siberian Aerospace Journal

Section: Mathematics, Mechanics, Computer Science

Issue: Vol. 18, No. 4, 2017.


In this work we propose a comprehensive method for solving multimodal data analysis problems. The method involves multimodal data fusion techniques, a multi-objective approach to feature selection and neural network ensemble optimization, and convolutional neural networks trained with a hybrid learning algorithm that consecutively applies a genetic optimization algorithm and the back-propagation algorithm. The method aims to use the different available channels of information and to fuse them at the data level and the decision level in order to achieve better classification accuracy on the target problem. We tested the proposed method on the emotion recognition problem. The SAVEE (Surrey Audio-Visual Expressed Emotions) database was used as the raw input data, providing a visual markers dataset, an audio features dataset and the combined audio-visual dataset. The following parameters were varied during the experiments: the multi-objective optimization algorithm: SPEA (Strength Pareto Evolutionary Algorithm), NSGA-2 (Non-dominated Sorting Genetic Algorithm), VEGA (Vector Evaluated Genetic Algorithm), SelfCOMOGA (Self-configuring Co-evolutionary Multi-Objective Genetic Algorithm); the classifier ensemble output fusion scheme: voting, averaging class probabilities, meta-classification; and the resolution of the images used as input for the convolutional neural network. The highest emotion recognition accuracy achieved with the proposed method is 65.8 % on visual markers data, 52.3 % on audio features data and 71 % on audio-visual data. Overall, the SelfCOMOGA algorithm and the meta-classification fusion scheme proved to be the most effective components of the proposed comprehensive method. Using the combined audio-visual data improved the emotion recognition rate compared to using only visual or only audio data.

Keywords: multimodal data analysis, multi-objective optimization, feature selection, neural network ensemble, convolutional neural network, evolutionary optimization algorithms

Short URL: https://sciup.org/148177755

IDR: 148177755 | UDC: 004.93


Introduction. Nowadays, data powers most artificial intelligence applications used to solve a wide range of practical problems, from handwritten digit recognition to medical image analysis. However, most data generated in the real world has many modalities or, in other words, channels of perception. For example, humans perceive the surrounding world through images, sounds, smells, tastes and touch.

Machines, on the other hand, are capable of registering many more modalities, which can ultimately be represented in a quantitative format. The key question is how to make all these modalities useful to machines in their goal of better perceiving the surrounding world. More specifically, the goal is to develop algorithms that combine all the available multimodal data and solve practical problems more efficiently [1].

In this work we describe a comprehensive approach to multimodal data analysis and test it on the problem of automatic human emotion recognition. This problem involves three data modalities: separate frames of a video sequence depicting the speaker's face, marker coordinates of the speaker's main facial landmarks, and audio features of the speaker's voice. The purpose of this work is to test empirically whether combining multimodal data helps increase the effectiveness of automated human emotion recognition.

The rest of the paper is organized as follows. Section 2 gives an overview of significant related work on multimodal data fusion for machine learning problems. Section 3 describes the proposed comprehensive approach. Section 4 provides information on the dataset used. Section 5 presents the results of testing the proposed approach on the emotion recognition problem. Finally, Section 6 summarizes the results and outlines future work.

Significant related work. Constructing ensembles of base learners is a popular idea among machine learning researchers today. This can partly be explained by the decreasing cost of computation. In many cases, combining several base learners into an ensemble has been shown to improve overall system performance.

For example, neural networks have proved effective for practical supervised and reinforcement learning problems when combined into ensembles [2; 3]. The use of optimization algorithms, including stochastic optimization, for neural network training and optimal structure selection has also been surveyed [4].

The problem of dimensionality reduction is also vital for building efficient machine learning systems. Apart from the standard methods like principal component analysis (PCA) [5], feature selection methods have become equally effective and practically applicable to a wide range of problems [6]. Specifically, wrapper methods based on single-objective and multi-objective optimization algorithms are used [7].

The emotion recognition problem can be formulated in different ways based on the practical needs:

1. Two emotion classes, “angry” and “not angry”. Practical usage: automated call centers, surveillance systems.
2. Determination of the user's degree of agitation. Practical usage: automated “awake”/“asleep” classification of drivers in order to prevent car accidents.
3. Seven emotion classes: neutral, happiness, anger, sadness, surprise, fear, disgust. Practical usage: automatic data collection for sentiment analysis.

The problem of multimodal data fusion for automatic emotion recognition has been widely studied [9–11]. Researchers use various data modalities, such as facial markers, voice audio features, raw facial images, EEG (electroencephalogram) data and eye movement data [12; 13]. Nevertheless, the emotion recognition problem has not yet been completely solved.

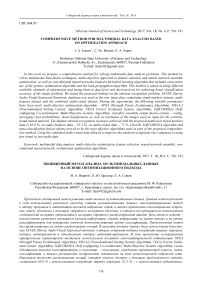

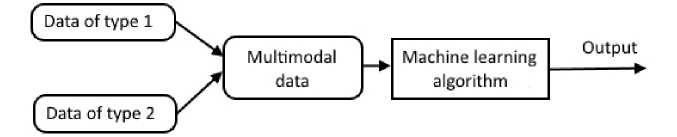

Methodology. The proposed comprehensive approach for multimodal data analysis includes two types of multimodal information fusion – data-level (fig. 1) and decision-level (fig. 2). Data-level fusion simply combines several unimodal datasets into the multimodal dataset, whereas decision-level fusion involves training several machine learning algorithms on unimodal datasets and then combining them into an ensemble and fusing their outputs.

Fig. 1. Data-level multimodal information fusion


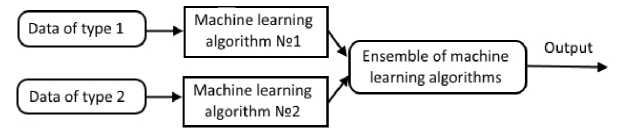

The diagram of the proposed comprehensive approach in terms of the emotion recognition problem is presented in fig. 3. The input data consists of the audio features extracted from a speech audio signal, facial marker coordinates, and separate video frames extracted from a video sequence. These data modalities are thoroughly described in Section 4.

Audio features and facial markers are fused at the data level and provided as input to the multi-objective feature selection procedure described in Subsection 3.1. The output of this procedure is a set of feature subsets optimized according to two criteria: maximizing the emotion recognition rate and minimizing the number of features used.

The feature set that provided the best emotion recognition rate is selected and passed on to the multi-objective design of the neural network (NN) ensemble, discussed in detail in Subsection 3.2. Both steps, feature selection and NN ensemble design, are performed with the same multi-objective optimization algorithm.

The output of this step is a set of neural networks with optimized parameter values: the number of hidden neurons and the number of training iterations.

The third data modality, the video frames, is passed to a convolutional neural network (CNN) [14] trained with a hybrid learning algorithm, briefly described in Subsection 3.3.

The output of the CNN is combined at the decision level with the outputs of the optimized neural networks described earlier. The final output is constructed using one of the following schemes:

1. Voting: selects the class predicted by the majority of the classifiers.
2. Averaging class probabilities: the posterior probabilities of each class are averaged over all base classifiers, and the class with the highest average probability is selected as the output.
3. Meta-classification: an additional meta-classification layer takes the class probabilities predicted by the base learners as its input. A support vector machine (SVM) was used as the meta-classifier.
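To make the three fusion schemes concrete, here is a minimal Python sketch; it is an illustration, not the authors' implementation. The function names are hypothetical, voting ties are broken toward the smallest class index (the paper does not specify tie handling), and for meta-classification only the construction of the meta-classifier's input vector is shown, since the SVM itself would normally come from a library.

```python
from collections import Counter
from typing import List, Sequence


def argmax(values: Sequence[float]) -> int:
    """Index of the largest value (first one on ties)."""
    return max(range(len(values)), key=lambda i: values[i])


def vote(predictions: Sequence[int]) -> int:
    """Voting: the class predicted by most base classifiers wins.
    Ties go to the smallest class index (an assumption)."""
    counts = Counter(predictions)
    best = max(counts.values())
    return min(c for c, k in counts.items() if k == best)


def average_probabilities(probas: Sequence[Sequence[float]]) -> int:
    """Averaging: mean posterior of each class over all base classifiers, then argmax."""
    n_classes = len(probas[0])
    means = [sum(p[c] for p in probas) / len(probas) for c in range(n_classes)]
    return argmax(means)


def meta_features(probas: Sequence[Sequence[float]]) -> List[float]:
    """Meta-classification input: all base learners' class probabilities
    concatenated into one vector for a second-level classifier
    (an SVM in the paper; not implemented here)."""
    return [p for row in probas for p in row]


# Hypothetical outputs of three base learners for one instance, three classes.
probas = [[0.80, 0.10, 0.10],   # one confident learner
          [0.30, 0.60, 0.10],
          [0.35, 0.40, 0.25]]
preds = [argmax(p) for p in probas]                # [0, 1, 1]
print(vote(preds), average_probabilities(probas))  # 1 0
print(len(meta_features(probas)))                  # 9
```

The example probabilities are chosen so that the two simple schemes disagree: two weakly confident learners outvote a single confident one, whereas averaging lets the confident learner prevail. A trained meta-classifier can, in principle, learn how much to trust each base learner instead of weighting them equally.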

Fig. 2. Decision-level multimodal information fusion


Fig. 3. Diagram of the comprehensive approach for solving multimodal data analysis problems, using the emotion recognition problem as an example


Multi-objective approach to feature selection. The essence of the multi-objective approach to feature selection is to formulate feature selection as a multi-objective optimization problem and to solve it with a multi-objective optimization algorithm.

In this formulation, there are two conflicting optimization criteria:

1. Classification rate, to be maximized. This criterion is calculated according to the wrapper scheme: a selected subset of features is passed to a classifier with fixed parameter values (a neural network in our case), which is trained on the correspondingly reduced dataset, and the classification rate is then calculated by the standard formula

$$R = \frac{n}{N} \cdot 100\,\%, \qquad (1)$$

where R is the classification rate, n is the number of correctly classified instances, and N is the total number of instances.
2. Number of selected features, to be minimized. Fewer features generally lead to simpler models that generalize better, so smaller feature subsets are preferable.

The input variables of this optimization problem are binary vectors indicating which features are selected (marked as 1) and which are not (marked as 0).
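The wrapper evaluation of one binary vector can be sketched as follows. This is a sketch under stated assumptions: a nearest-centroid classifier stands in for the neural network used in the paper, the data are a hypothetical toy set, and an empty feature subset is scored as 0 %. The function returns the pair of criteria: the rate R from formula (1) and the number of selected features.

```python
from typing import List, Sequence, Tuple


def classification_rate(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Formula (1): R = n / N * 100 %, n = correctly classified, N = all instances."""
    n = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return n / len(y_true) * 100.0


def nearest_centroid_predict(X_train, y_train, X_test) -> List[int]:
    """Stand-in classifier with fixed settings (the paper uses a neural network)."""
    classes = sorted(set(y_train))
    centroids = {}
    for c in classes:
        rows = [x for x, y in zip(X_train, y_train) if y == c]
        centroids[c] = [sum(col) / len(rows) for col in zip(*rows)]

    def sq_dist(a, b):
        return sum((u - v) ** 2 for u, v in zip(a, b))

    return [min(classes, key=lambda c: sq_dist(x, centroids[c])) for x in X_test]


def evaluate_mask(mask, X_train, y_train, X_test, y_test) -> Tuple[float, int]:
    """Wrapper evaluation of one binary chromosome. Returns
    (classification rate to maximize, number of selected features to minimize)."""
    selected = [i for i, bit in enumerate(mask) if bit == 1]
    if not selected:
        return 0.0, 0  # empty subset scored as 0 % (assumption)

    def project(X):
        return [[row[i] for i in selected] for row in X]

    y_pred = nearest_centroid_predict(project(X_train), y_train, project(X_test))
    return classification_rate(y_test, y_pred), len(selected)


# Hypothetical toy data: feature 0 separates the classes, feature 1 is noise.
X_train = [[0.0, 5.0], [0.2, 1.0], [1.0, 4.0], [1.2, 0.0]]
y_train = [0, 0, 1, 1]
X_test = [[0.1, 0.5], [1.1, 4.5]]
y_test = [0, 1]
for mask in ([1, 0], [0, 1], [1, 1]):
    print(mask, evaluate_mask(mask, X_train, y_train, X_test, y_test))
# [1, 0] (100.0, 1) / [0, 1] (0.0, 1) / [1, 1] (0.0, 2)
```

On this toy data the noise feature actually lowers the rate, which illustrates why a smaller feature subset is not necessarily a less accurate one.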

The class of evolutionary algorithms was chosen for solving the formulated multi-objective optimization problem, specifically SPEA (Strength Pareto Evolutionary Algorithm) [15], VEGA (Vector Evaluated Genetic Algorithm) [16], NSGA-2 (Non-dominated Sorting Genetic Algorithm) [17] and SelfCOMOGA (Self-configuring Co-evolutionary Multi-Objective Genetic Algorithm) [18]. These algorithms were chosen because they require no prior knowledge about the optimized function, which makes them a good choice for complex multi-objective optimization problems in which the objective functions are not explicitly defined. Moreover, all of these algorithms return an estimate of the Pareto set as their result, which is useful for the next step of the approach, the design of neural network ensembles.
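The notion of a Pareto set estimate rests on the dominance relation between solutions. The following generic filter, a minimal sketch with hypothetical objective values, keeps the non-dominated (rate, feature count) pairs; it does not reproduce the selection or ranking mechanisms specific to SPEA, NSGA-2, VEGA or SelfCOMOGA.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, int]  # (classification rate in %, number of features)


def dominates(a: Point, b: Point) -> bool:
    """True if a is no worse than b on both criteria (rate maximized,
    feature count minimized) and strictly better on at least one."""
    no_worse = a[0] >= b[0] and a[1] <= b[1]
    strictly_better = a[0] > b[0] or a[1] < b[1]
    return no_worse and strictly_better


def pareto_front(points: Sequence[Point]) -> List[Point]:
    """Keep only the non-dominated points: an estimate of the Pareto set."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


# Hypothetical (rate, #features) values for five candidate feature subsets.
candidates = [(60.0, 40), (65.0, 50), (58.0, 20), (62.0, 60), (55.0, 25)]
front = pareto_front(candidates)
print(front)                           # [(60.0, 40), (65.0, 50), (58.0, 20)]
print(max(front, key=lambda p: p[0]))  # (65.0, 50)
```

The last line mirrors the next step of the approach, where the feature set with the highest recognition rate is taken from the Pareto set estimate and passed on to ensemble design. This quadratic filter is fine for illustration; the cited algorithms use their own, more elaborate ranking schemes.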

Multi-objective approach to the design of a neural network ensemble. The design of a neural network ensemble was formulated as a multi-objective optimization problem, in the same manner as the feature selection step. However, the optimized criteria in this case are:

1. Classification rate, to be maximized.
2. Number of hidden neurons, to be minimized. The idea is that neural networks with fewer neurons generally have a simpler structure and are therefore more robust.

The input variables in this optimization formulation are:

1. Number of hidden neurons.
2. Number of network training iterations.

The optimized subset of features found at the feature selection step is passed to the neural networks trained at this step. The same evolutionary multi-objective optimization algorithms were used for neural network optimization; moreover, in every experimental run the same algorithm was used for both steps, feature selection and neural network optimization.
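Since the genetic algorithms operate on chromosomes, the two integer input variables have to be encoded somehow. The paper does not describe the encoding; the sketch below assumes a plain binary encoding with 6 bits per variable and hypothetical bounds, and shows how a chromosome maps to the pair of criteria. `train_and_score` is a hypothetical callback standing in for training and testing a network on the selected features.

```python
from typing import Callable, Sequence, Tuple


def decode_int(bits: Sequence[int], low: int, high: int) -> int:
    """Map a binary gene linearly onto an integer in [low, high]."""
    value = int("".join(str(b) for b in bits), 2)
    return low + round(value * (high - low) / (2 ** len(bits) - 1))


def decode_network(chromosome: Sequence[int], n_bits: int = 6) -> Tuple[int, int]:
    """Split a chromosome into (hidden neurons, training iterations).
    The bounds 1..64 and 10..500 are hypothetical."""
    hidden = decode_int(chromosome[:n_bits], 1, 64)
    iterations = decode_int(chromosome[n_bits:2 * n_bits], 10, 500)
    return hidden, iterations


def objectives(chromosome: Sequence[int],
               train_and_score: Callable[[int, int], float]) -> Tuple[float, int]:
    """(classification rate to maximize, hidden neurons to minimize).
    train_and_score is a hypothetical callback returning the rate R in %."""
    hidden, iterations = decode_network(chromosome)
    return train_and_score(hidden, iterations), hidden


print(decode_network([0] * 12))                # (1, 10)
print(decode_network([1] * 12))                # (64, 500)
print(decode_network([1, 0, 0, 0, 0, 0] * 2))  # (33, 259)
```

Note that the number of hidden neurons is both an input variable and a criterion, while the number of iterations only influences the criteria through the trained network's rate.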

Hybrid learning algorithm for training convolutional neural networks. The hybrid learning algorithm for training convolutional neural networks (CNN) involves the consecutive use of a co-evolutionary genetic optimization algorithm (GA) and the back-propagation (BP) algorithm.

The idea is to combine two types of search: stochastic and gradient-based. First, the GA, which uses a stochastic optimization strategy, is applied to locate a promising subspace that potentially contains the global optimum in the space of CNN weights. The solution found by the GA is then used as the starting point for BP, a gradient-based procedure, which finalizes the search within that region, ideally reaching the global optimum.
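The two-stage idea can be illustrated on a toy problem. In this sketch, which is not the authors' algorithm, a plain real-coded GA stands in for the co-evolutionary GA, the one-dimensional Rastrigin function stands in for the CNN loss over its weights, and gradient descent with an analytic gradient stands in for back-propagation. All parameter values are illustrative.

```python
import math
import random
from typing import Callable


def rastrigin(x: float) -> float:
    """1-D Rastrigin function: many local minima, global minimum f(0) = 0."""
    return x * x + 10.0 * (1.0 - math.cos(2.0 * math.pi * x))


def rastrigin_grad(x: float) -> float:
    return 2.0 * x + 20.0 * math.pi * math.sin(2.0 * math.pi * x)


def genetic_search(f: Callable[[float], float], low: float, high: float,
                   pop_size: int = 40, generations: int = 60, seed: int = 0) -> float:
    """Stage 1, stochastic: a plain real-coded GA looks for a promising region."""
    rng = random.Random(seed)
    pop = [rng.uniform(low, high) for _ in range(pop_size)]
    for _ in range(generations):
        pop.sort(key=f)
        parents = pop[: pop_size // 4]  # truncation selection; parents survive (elitism)
        children = []
        while len(parents) + len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            w = rng.random()
            # Blend crossover followed by Gaussian mutation, clipped to the bounds.
            child = w * a + (1.0 - w) * b + rng.gauss(0.0, 0.3)
            children.append(min(high, max(low, child)))
        pop = parents + children
    return min(pop, key=f)


def gradient_descent(grad: Callable[[float], float], x0: float,
                     lr: float = 1e-3, steps: int = 500) -> float:
    """Stage 2, gradient-based: refine the GA's solution (BP's role for a CNN)."""
    x = x0
    for _ in range(steps):
        x -= lr * grad(x)
    return x


x_ga = genetic_search(rastrigin, -5.12, 5.12)
x_final = gradient_descent(rastrigin_grad, x_ga)
print(f"GA:            f({x_ga:.4f}) = {rastrigin(x_ga):.4f}")
print(f"GA + gradient: f({x_final:.6f}) = {rastrigin(x_final):.6f}")
```

With a step size below 2/L, where L = 2 + 40π² ≈ 397 bounds the second derivative, each gradient step cannot increase the function value. The second stage therefore only refines the point found by the GA; whether the result is the global minimum depends on whether the GA has already reached its basin.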

Experiments demonstrated the effectiveness of this hybrid learning algorithm on the emotion recognition problem and on the MNIST handwritten digit recognition problem [19].

Dataset and feature description. The SAVEE database was used for solving the emotion recognition problem in this work. It consists of 480 videos of 4 male English speakers pronouncing a set of predetermined phrases while portraying 7 basic emotions: anger, disgust, fear, happiness, neutral, sadness and surprise. The classes are nearly balanced: each speaker has 15 cases per emotion, except for the neutral emotion, which is represented by 30 cases per speaker.
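The class counts stated above are consistent with the total of 480 videos, as a few lines of arithmetic show. The same numbers imply that neutral makes up a quarter of the data, so a trivial classifier that always predicts “neutral” would score about 25 % and uniform random guessing about 1/7 ≈ 14.3 %; these baselines are our own derivation, not figures reported by the authors.

```python
SPEAKERS = 4
# Cases per speaker and emotion, as stated in the text.
per_speaker = {"anger": 15, "disgust": 15, "fear": 15, "happiness": 15,
               "neutral": 30, "sadness": 15, "surprise": 15}

total = SPEAKERS * sum(per_speaker.values())
share = {e: SPEAKERS * n / total for e, n in per_speaker.items()}

print(total)             # 480
print(share["neutral"])  # 0.25
print(share["anger"])    # 0.125
```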

This database was selected for several reasons:

1. It was specifically designed for research on the effectiveness of audio-visual data fusion.
2. It contains videos of emotions expressed by ordinary people rather than professional actors, which brings it closer to real-life conditions.
3. All 7 basic emotion classes are represented, with equal numbers of cases for the six non-neutral emotions.

Three data modalities were extracted from this dataset:
1. Video frames: 5 frames were selected from each video, and the final class output for the entire video was determined by voting across these frames.
2. Audio features: extracted using the openSMILE software toolkit [20]. The extracted features include pitch, energy, duration and spectral features of the speaker's voice, 930 features in total. PCA was applied to them, and the 50 most informative principal components were used as the input data for the classifiers.
3. Facial markers: 60 markers were drawn on the speakers' faces at the main facial landmarks (fig. 4). The marker on the tip of the nose was chosen as the central point, and the coordinates of all markers were tracked and registered for each video frame. The coordinates of each marker were averaged across the entire video, and their standard deviation was computed, giving 240 features per video. PCA was applied in the same manner as for the audio features, and the 50 most informative components were selected.
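The construction of the 240 facial-marker features (60 markers × 2 coordinates × 2 statistics) can be sketched as follows, assuming each frame is given as a list of (x, y) coordinates for all 60 markers. Whether the authors used the population or the sample standard deviation, and in which order the features were arranged, is not stated; both are assumptions here, and the subsequent PCA step is omitted.

```python
import statistics
from typing import List, Sequence, Tuple

Frame = Sequence[Tuple[float, float]]  # (x, y) of every marker in one video frame


def marker_features(frames: Sequence[Frame], nose_index: int) -> List[float]:
    """Per-video features: for each marker and each axis, the mean and the
    population standard deviation over all frames of the coordinate
    measured relative to the nose-tip marker."""
    features: List[float] = []
    for m in range(len(frames[0])):
        for axis in (0, 1):
            series = [frame[m][axis] - frame[nose_index][axis] for frame in frames]
            features.append(statistics.mean(series))
            features.append(statistics.pstdev(series))
    return features


# Hypothetical 3-frame video with 60 markers; marker 0 plays the nose tip.
video = [[(float(m + t), float(2 * m)) for m in range(60)] for t in range(3)]
feats = marker_features(video, nose_index=0)
print(len(feats))  # 240
print(feats[4:8])  # marker 1: [1.0, 0.0, 2.0, 0.0]
```

Measuring coordinates relative to the nose tip removes global head translation, so the statistics describe facial deformation rather than where the face sits in the frame.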

In addition, the audio features and facial markers were combined to form the audio-visual multimodal dataset with 100 features.
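Data-level fusion, as used here, amounts to concatenating the per-video feature vectors of the two modalities, 50 + 50 = 100 features. A minimal sketch with placeholder values and a hypothetical function name:

```python
from typing import List, Sequence


def fuse_data_level(*modalities: Sequence[Sequence[float]]) -> List[List[float]]:
    """Concatenate per-instance feature vectors from several modalities that
    describe the same instances in the same order."""
    n = len(modalities[0])
    if any(len(m) != n for m in modalities):
        raise ValueError("all modalities must describe the same instances")
    return [[v for m in modalities for v in m[i]] for i in range(n)]


audio = [[0.1] * 50, [0.2] * 50]    # 50 PCA components per video (placeholder values)
markers = [[1.0] * 50, [2.0] * 50]  # 50 PCA components per video (placeholder values)
fused = fuse_data_level(audio, markers)
print(len(fused), len(fused[0]))    # 2 100
```

The only real requirement is that rows from different modalities refer to the same video; the length check catches the most obvious misalignment.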

Experiments setup and results. The experiments were conducted across four evolutionary multi-objective optimization algorithms applied to feature selection and neural network ensemble design, three decision-level fusion schemes, and four sizes of the video frames passed to the CNN.

The evolutionary optimization algorithms include:
1. SPEA – Strength Pareto Evolutionary Algorithm.
2. NSGA-2 – Non-dominated Sorting Genetic Algorithm.
3. VEGA – Vector Evaluated Genetic Algorithm.
4. SelfCOMOGA – Self-configuring Co-evolutionary Multi-Objective Genetic Algorithm.

The decision-level fusion schemes include:
1) voting;
2) averaging class probabilities;
3) SVM meta-classification.

The video frame sizes were 40×40, 50×50, 70×70 and 100×100 pixels. In addition, the experiments were conducted across different multimodal input data:
1. Visual markers + video frames.
2. Audio features + video frames.
3. Visual markers + audio features + video frames.

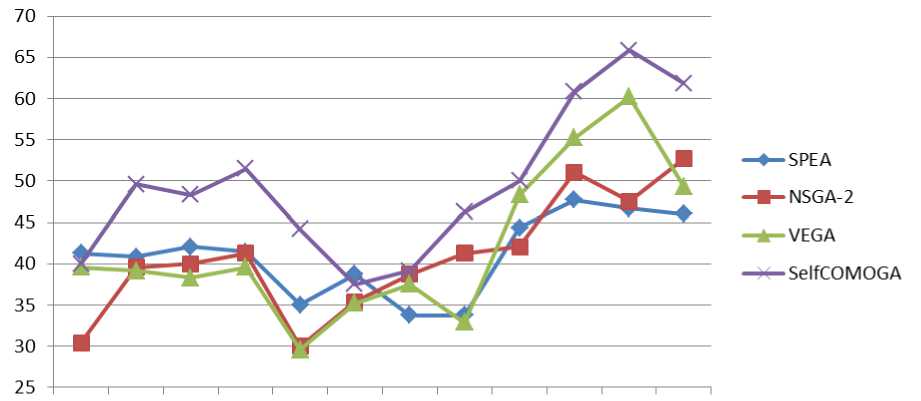

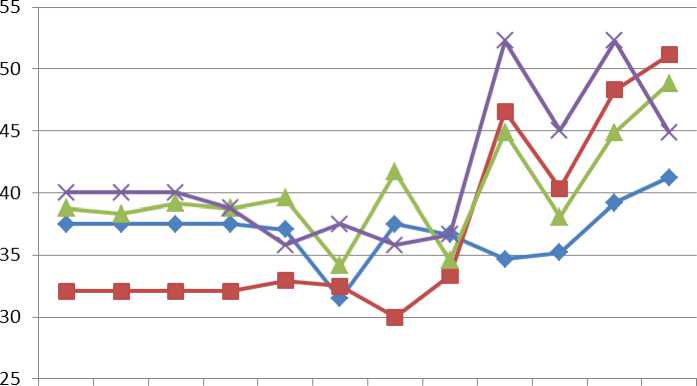

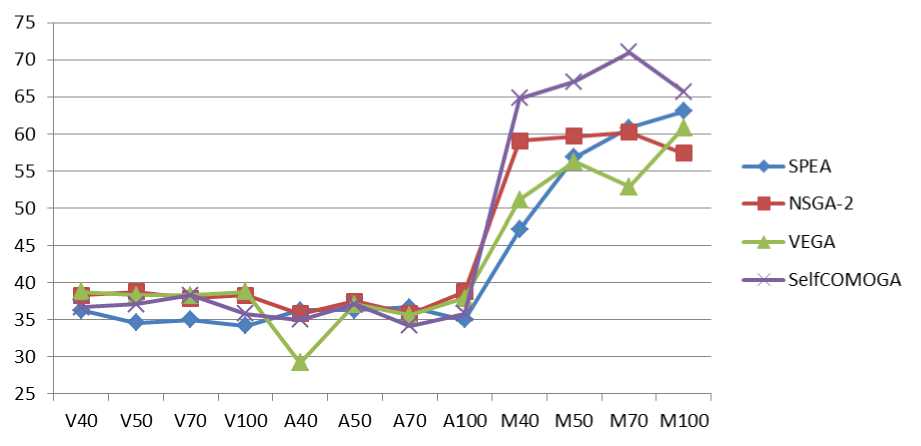

Emotion classification was performed in a speaker-independent formulation, in which the training and test sets contain instances belonging to different speakers. The classification rate was used as the criterion for comparing the variants of the developed comprehensive approach. The results are presented in fig. 5–7.
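The paper does not state how the four speakers were divided between training and test sets. Leave-one-speaker-out cross-validation, sketched below, is one common speaker-independent protocol and is an assumption here, as are the speaker labels.

```python
from typing import Iterator, List, Sequence, Tuple


def leave_one_speaker_out(speakers: Sequence[str]) -> Iterator[Tuple[str, List[int], List[int]]]:
    """Yield (held-out speaker, train indices, test indices); each fold tests on
    one speaker and trains on all others, so no speaker is in both sets."""
    for held_out in sorted(set(speakers)):
        train = [i for i, s in enumerate(speakers) if s != held_out]
        test = [i for i, s in enumerate(speakers) if s == held_out]
        yield held_out, train, test


# Hypothetical speaker labels: 4 speakers x 120 videos, matching the SAVEE counts.
speakers = [s for s in ("S1", "S2", "S3", "S4") for _ in range(120)]
for held_out, train, test in leave_one_speaker_out(speakers):
    assert not set(train) & set(test)
    print(held_out, len(train), len(test))  # e.g. S1 360 120
```

A speaker-independent split is stricter than a random split over videos: the classifier never sees the test speaker's face or voice during training.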

Fig. 4. Example of a video frame from the SAVEE database; the speaker's face is covered with markers of the main facial landmarks



Fig. 5. Emotion classification rate (%), facial markers + video frames input; fusion schemes: V – voting, A – averaging class probabilities, M – meta-classification; video frame resolutions (n × n): n = 40, 50, 70, 100


[Plot legend: SPEA, NSGA-2, VEGA, SelfCOMOGA; x-axis: fusion scheme and frame size, V40 to M100]

Fig. 6. Emotion classification rate (%), audio features + video frames input; fusion schemes: V – voting, A – averaging class probabilities, M – meta-classification; video frame resolutions ( n × n ): n = 40, 50, 70, 100


Fig. 7. Emotion classification rate (%), facial markers + audio features + video frames input; fusion schemes: V – voting, A – averaging class probabilities, M – meta-classification; video frame resolutions ( n × n ): n = 40, 50, 70, 100


According to the results, the following empirical conclusions can be made regarding the effectiveness of the proposed comprehensive approach applied to the emotion recognition problem:

1. Overall, the SVM meta-classification fusion scheme proved more effective than voting and averaging.
2. The SelfCOMOGA algorithm proved to be the most effective for feature selection and NN ensemble design, especially when coupled with the meta-classification fusion scheme.
3. The size of the input video frames passed to the CNN does not significantly affect the effectiveness of the overall approach.

The best emotion recognition rate achieved using visual markers together with video frames is 65 %, and using audio features together with video frames, 52 %. Fusing audio features and visual markers together with video frames increased the best achieved emotion recognition rate to 71 %. Therefore, the proposed approach, which uses all available channels of information, proved effective on the test emotion recognition problem.

Summary and future work. In this work we described the comprehensive approach for solving multimodal data analysis problems and tested it on the emotion recognition problem.

The advantage of the proposed approach is that it makes it possible to use all available channels of input information jointly. Moreover, the approach is customizable: different optimization algorithms can be applied in its core procedures (multi-objective feature selection and classifier ensemble design), different classification algorithms can serve as base learners of the ensemble, and different decision-level fusion schemes can be applied.

According to the experimental results, the proposed approach proved effective for solving the emotion recognition problem, which involves three channels of input data. The best emotion recognition rate achieved in the speaker-independent formulation is 71 %. Using all three channels of input information outperformed the cases in which only a subset of the input information was used.

More research needs to be done to check the effectiveness of the proposed approach on other machine learning problems with multiple data modalities.

Acknowledgements. The reported study was funded by the Russian Foundation for Basic Research, the Government of Krasnoyarsk Territory and the Krasnoyarsk Region Science and Technology Support Fund, research project № 16-41-243036.

References

- Poria S., Cambria E., Gelbukh A. F. Deep convolutional neural network textual features and multiple kernel learning for utterance-level multimodal sentiment analysis//Proc. of the Conference on Empirical Methods in Natural Language Processing. 2015. P. 2539-2544.

- Fausser S., Schwenker F. Selective neural network ensembles in reinforcement learning: taking the advantage of many agents//Neurocomputing, 2015. № 169. P. 350-357.

- Urban traffic flow forecasting through statistical and neural network bagging ensemble hybrid modeling/F. Moretti //Neurocomputing. 2015. № 167. P. 3-7.

- Zhang L., Suganthan P. N. A survey of randomized algorithms for training neural networks//Information Sciences. 2016. № 364. P. 146-155.

- Wold S., Esbensen K., Geladi P. Principal component analysis//Chemometrics and intelligent laboratory systems. 1987. № 2(1-3). P. 37-52.

- Chandrashekar G., Sahin F. A survey on feature selection methods//Computers & Electrical Engineering. 2014. № 40(1). P. 16-28.

- Han M., Ren W. Global mutual information-based feature selection approach using single-objective and multi-objective optimization//Neurocomputing. 2015. № 168. P. 47-54.

- Haq S., Jackson P. J. B. Speaker-dependent audio-visual emotion recognition//International Conference on Audio-Visual Speech Processing. 2009. P. 53-58.

- Analysis of emotion recognition using facial expressions, speech and multimodal information/C. Busso //Proceedings of the 6th International Conf. on multimodal interfaces. 2004. P. 205-211.

- Soleymani M., Pantic M., Pun T. Multimodal emotion recognition in response to videos//IEEE transactions on affective computing. 2012. № 3(2). P. 211-223.

- Enhanced semi-supervised learning for multimodal emotion recognition/Z. Zhang //IEEE Intern. Conf. on Acoustics, Speech and Signal Processing. 2016. P. 5185-5189.

- Multimodal emotion recognition from expressive faces, body gestures and speech/G. Caridakis //IFIP Intern. Conf. on Artificial Intelligence Applications and Innovations. 2007. P. 375-388.

- Emotion recognition based on joint visual and audio cues/N. Sebe //18th International Conf. on Pattern Recognition (ICPR’06). 2006. № 1. P. 1136-1139.

- Backpropagation applied to handwritten zip code recognition/Y. LeCun //Neural computation. 1989. № 1(4). P. 541-551.

- Zitzler E., Thiele L. An evolutionary algorithm for multiobjective optimization: the strength Pareto approach//Technical Report № 43, Computer Engineering and Communication Networks Lab. 1998. 40 p.

- Schaffer J. D. Multiple objective optimization with vector evaluated genetic algorithms//Proceedings of the 1st International Conference on Genetic Algorithms and Their Applications. 1985. P. 93-100.

- A fast and elitist multiobjective genetic algorithm: NSGA-II/K. Deb //IEEE transactions on evolutionary computation. 2002. № 6(2). P. 182-197.

- Ivanov I. A., Sopov E. A. Self-configuring genetic algorithm for solving multi-criteria choice support problems//Vestnik SibGAU. 2013. № 1(47). P. 30-35. (In Russ.)

- LeCun Y., Cortes C., Burges C. J. C. The MNIST database of handwritten digits. 1998.

- Eyben F., Wöllmer M., Schuller B. openSMILE: the Munich versatile and fast open-source audio feature extractor//Proceedings of the 18th ACM International Conference on Multimedia. 2010. P. 1459-1462.