Content based image retrieval using multi motif co-occurrence matrix

Authors: A. Obulesu, V. Vijay Kumar, L. Sumalatha

Journal: International Journal of Image, Graphics and Signal Processing (IJIGSP)

Issue: vol. 10, no. 4, 2018.


In this paper, two extended versions of the motif co-occurrence matrix (MCM) are derived and concatenated for efficient content-based image retrieval (CBIR). The image is divided into 2 x 2 grids. Each 2 x 2 grid is replaced with two different Peano scan motif (PSM) indexes: one initiated from the top left most pixel and the other initiated from the bottom right most pixel. This transforms the entire image into two different images, and co-occurrence matrices are derived on these two transformed images: the first is named the “motif co-occurrence matrix initiated from top left most pixel (MCMTL)” and the second the “motif co-occurrence matrix initiated from bottom right most pixel (MCMBR)”. The proposed method concatenates the feature vectors of MCMTL and MCMBR to derive the multi motif co-occurrence matrix (MMCM) features. Experiments are carried out on the Corel-1k, Corel-10k, MIT-VisTex, Brodatz and CMU-PIE image databases, and the results are compared with other well-known CBIR methods. The results indicate that the proposed MMCM is more effective than the other methods, in particular the MCM [19] method.

Keywords: Peano scan, Left most, Bottom right most, Concatenation

Short URL: https://sciup.org/15015957

IDR: 15015957 | DOI: 10.5815/ijigsp.2018.04.07


Published Online April 2018 in MECS DOI: 10.5815/ijigsp.2018.04.07

I. Introduction

Extraction of powerful features from the visual content of an image plays a significant role in many image processing and pattern recognition applications. Content based image retrieval (CBIR) plays a vital role in the fields of pattern recognition, image processing and artificial intelligence. The steady increase in online access to massive collections of remotely sensed images, the availability of low-cost mass storage devices, the widespread availability of inexpensive high-bandwidth internet, and the everyday use of images for all sorts of communication have drastically increased the need for more accurate CBIR. The earliest form of image retrieval was text based: each image is manually indexed or annotated with text descriptors. This process requires a lot of human interaction, and its success rate depends heavily on how humans interpret and index the images.

In CBIR methods, low level visual features are extracted from database images and stored as image features or indexes. The query image is indexed with the same visual features as the database images, and the query indexes are compared with the database indexes using distance measures. The retrieved images are then ranked by their distance (or similarity) to the query image, closest first. This process does not require any semantic labeling [1, 2] because the visual indexes are derived directly from the image content. The accuracy of a CBIR system therefore depends on extracting the most important and discriminative attributes of the images. Many approaches have been proposed in the literature for CBIR that extract physical features such as texture [3], color [4, 5], shape or structure [6, 7], or a combination of two or more such features. Color indexing [4] and the color histogram [5] lack spatial information and therefore produce false positives. Spatial information was later captured by the color correlogram [8], an extension of the basic color histogram [5]. A normalized version of the color correlogram is derived from the color co-occurrence histogram [9]. Texture is the most important characteristic of an image, and texture-based features extract powerful, discriminative visual features. Many methods have been proposed in the literature to extract texture features; popular ones are those of Haralick et al. [10], Chen et al. [11], Zhang et al. [12] and Haralick [13].
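The claim that a global histogram lacks spatial information can be checked with a minimal NumPy sketch. Grayscale instead of color, the 8-bin quantization and the helper name `gray_histogram` are illustrative assumptions, not part of any cited method: shuffling the pixel positions of an image destroys its spatial structure, yet both images produce exactly the same histogram, so a histogram-only index cannot tell them apart.

```python
import numpy as np

def gray_histogram(image, bins=8):
    """Global histogram of gray levels: counts only, no pixel positions (illustrative helper)."""
    hist, _ = np.histogram(image, bins=bins, range=(0, 256))
    return hist

rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(16, 16))
# Same pixel values, randomly moved to new positions: all spatial structure is lost.
shuffled = rng.permutation(image.ravel()).reshape(image.shape)

print(np.array_equal(gray_histogram(image), gray_histogram(shuffled)))  # True
```

The same argument applies per channel to a color histogram, which is why correlogram and co-occurrence based descriptors add pairwise spatial statistics.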

Later, extended GLCM texture descriptors [14] and local extrema co-occurrence patterns (LECoP) [15] were proposed. To achieve better accuracy in CBIR, color features are combined with texture features. Popular methods include the multi-texton histogram (MTH) [16], the modified color motif co-occurrence matrix [17], wavelets and color vocabulary trees [18], MCM [19], the k-means color histogram (CHKM) [20] and the integrated color and intensity co-occurrence matrix (ICICM) [21]. The discrete wavelet transform (DWT) is used to extract directional texture features in three directions (horizontal, vertical and diagonal) [22]. Rotated wavelet filters, which capture image features in various directions, have been used for image retrieval [23-25]. Many popular biomedical image retrieval systems are based on texture features [26, 27, 28]. The rest of the paper is organized as follows: related work is presented in Section 2, the proposed method is explained in Section 3, experimental results and discussions are given in Section 4, and Section 5 concludes the paper.

-

II. Related Work

Various approaches based on space filling curves have been studied in the literature [29, 30] for applications such as CBIR [31, 32], data compression [33], texture analysis [34, 35] and computer graphics [36]. Peano scans, also known as space filling curves, are sequences of connected points spanning a bounded region; the points may lie in two or more dimensions. A space filling curve passes through each and every point of a bounded subspace or grid exactly once, in a connected manner. Peano scans are particularly useful in pipelined computations where scalability is the main performance objective [37-39]. Another advantage is that they provide a framework for handling higher-dimensional data, which is not easily possible with conventional methods [40].
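To illustrate the "every grid point exactly once, in a connected manner" property, the following Python sketch generates the Hilbert curve, a classic space filling curve built from recursive 2 x 2 subdivisions (Peano's original curve uses 3 x 3 subdivisions). It is an illustration only, not the scan used in [29-40]; the function name `hilbert_d2xy` is hypothetical, and it follows the well-known iterative conversion from a position along the curve to grid coordinates.

```python
def hilbert_d2xy(n: int, d: int) -> tuple[int, int]:
    """Map position d along a Hilbert curve to (x, y) on an n x n grid (n a power of two)."""
    x = y = 0
    s, t = 1, d
    while s < n:
        rx = 1 & (t // 2)
        ry = 1 & (t ^ rx)
        if ry == 0:  # rotate/flip the current quadrant so the sub-curves join up
            if rx == 1:
                x, y = s - 1 - x, s - 1 - y
            x, y = y, x
        x += s * rx
        y += s * ry
        t //= 4
        s *= 2
    return x, y

n = 4
path = [hilbert_d2xy(n, d) for d in range(n * n)]
print(path[:4])  # [(0, 0), (1, 0), (1, 1), (0, 1)]: the first 2 x 2 block
# Every cell is visited exactly once...
assert len(set(path)) == n * n
# ...and consecutive cells are 4-neighbours, i.e. the scan is connected.
assert all(abs(x1 - x2) + abs(y1 - y2) == 1 for (x1, y1), (x2, y2) in zip(path, path[1:]))
```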

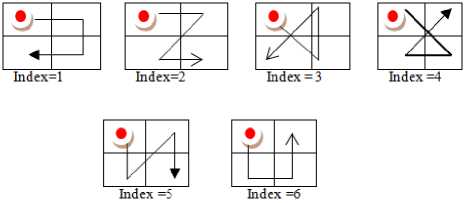

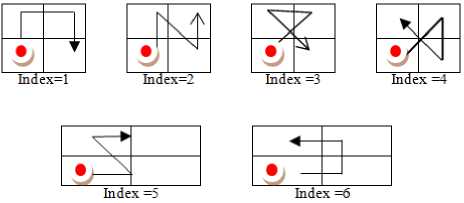

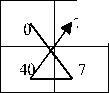

To capture the low level semantics of space filling curves on a 2 x 2 grid, a set of six Peano scan motifs (PSM) was recently proposed [19]. The authors of [19] considered only the top left corner of the 2 x 2 grid as the initial scan position and defined six different motifs. Each of these six motifs represents a distinctive scan shape over the pixels of the 2 x 2 grid, starting from the top left corner, as shown in Fig.1. The construction of these motifs is similar to a breadth first traversal of the Z-tree. Traversing the 2 x 2 neighborhood in all possible ways yields a compound string of all six motifs; based on the texture contrast over the 2 x 2 grid, one particular motif out of these six is selected. Each motif on the 2 x 2 grid is represented with an index value (Fig.1), which transforms the image into an image of motif indexes with six possible values. A co-occurrence matrix derived on this motif index image is called the motif co-occurrence matrix (MCM) [19], and it is used for CBIR.
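Since every PSM of [19] starts at the top left pixel and then visits the remaining three pixels once, the six motifs are exactly the 3! orderings of those three pixels. A minimal Python sketch, assuming the corner labels TL, TR, BL, BR and a hypothetical numbering in generation order (the real index values are the ones drawn in Fig.1); `scan_motifs` is an illustrative name:

```python
from itertools import permutations

CORNERS = ("TL", "TR", "BL", "BR")  # pixel positions of a 2 x 2 grid

def scan_motifs(start: str) -> list[tuple[str, ...]]:
    """Every scan that starts at `start` and visits the other three pixels exactly once."""
    others = [c for c in CORNERS if c != start]
    return [(start, *rest) for rest in permutations(others)]

tl_motifs = scan_motifs("TL")
# Hypothetical numbering 1..6 (generation order); Fig.1 defines the actual index values.
for index, motif in enumerate(tl_motifs, start=1):
    print(index, " -> ".join(motif))
```

Calling `scan_motifs` for each of the four corners yields 4 x 3! = 24 motifs, the total discussed in Section III.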

Fig.1. PSM initiated from top left most pixel on a 2 x 2 grid.

Fig.2. PSM initiated from top right most pixel on a 2 x 2 grid.

Fig.3. PSM initiated from bottom left most pixel on a 2 x 2 grid.

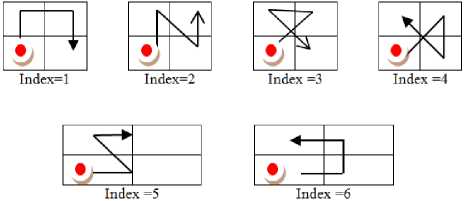

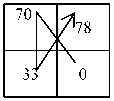

Fig.4. PSM initiated from bottom right most pixel on a 2 x 2 grid.

-

III. Derivation of Proposed Multi Motif Co-Occurrence Matrix (MMCM) Descriptor

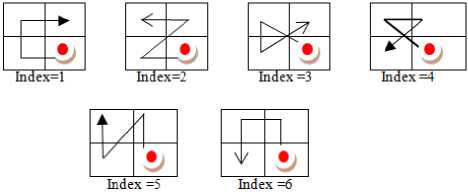

The framework of the proposed image retrieval system is given in Fig.5.

This paper divides the texture image into non-overlapping 2 x 2 grids, and the PSMs are derived on these 2 x 2 grids. On a 2 x 2 grid one can generate four types of PSMs, starting from: i) the top left most pixel (Fig.1), ii) the top right most pixel (Fig.2), iii) the bottom left most pixel (Fig.3), and iv) the bottom right most pixel (Fig.4). This paper derives the optimal PSM based on the incremental difference in the intensities of the 2 x 2 neighborhood. Each type of PSM generates six motifs over a 2 x 2 window, which results in a total of twenty four (4 x 6) different motifs when all four starting points specified above are considered. N. Jhanwar et al. [19] considered in their work on CBIR only the motifs that start from the top left corner of the 2 x 2 window (Fig.1), which reduces the number of motifs to six. However, these six motifs do not capture the full texture information: one needs to consider different directions, or different initial points, to derive powerful texture information on a 2 x 2 grid, and this is missing in [19]. To improve the performance, the present paper computes two different types of motifs on the same 2 x 2 grid: the first type starts from the top left most corner (Fig.1) and the second type starts from the bottom right most corner (Fig.4).

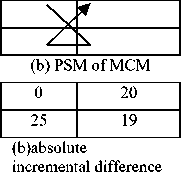

This paper first computes the absolute intensity differences between the initial point of the PSM and the other pixels of the 2 x 2 grid, as shown in Fig.6(b) and Fig.7(b). The motif is then derived from these absolute incremental intensity differences with respect to the initial point, and the corresponding motif code/index is assigned to the 2 x 2 grid. Fig.6(c) and Fig.7(c) show the formation of different motifs for the same 2 x 2 grid with two different initial scan points, which are shown in red.
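The motif selection described above can be sketched as follows, under stated assumptions: the remaining three pixels are visited in increasing order of their absolute intensity difference from the initial pixel, ties are broken by the fixed order TL, TR, BL, BR (the paper does not specify tie handling), and the grid layout [[85, 14], [125, 92]] is read from Fig.6(a). `psm_order` is a hypothetical helper name, and the mapping from scan order to the motif indexes of Fig.6(d) and Fig.7(d) is not reproduced here.

```python
import numpy as np

POS = {"TL": (0, 0), "TR": (0, 1), "BL": (1, 0), "BR": (1, 1)}  # (row, col) in the 2 x 2 grid

def psm_order(grid, start):
    """Scan order from `start`: remaining pixels sorted by absolute intensity difference
    from the start pixel. Assumption: ties keep the order TL, TR, BL, BR (stable sort)."""
    ref = int(grid[POS[start]])
    diffs = {c: abs(int(grid[POS[c]]) - ref) for c in POS if c != start}
    return (start, *sorted(diffs, key=diffs.get)), diffs

grid = np.array([[85, 14],
                 [125, 92]])  # grid of Fig.6(a) / Fig.7(a), layout assumed

order_tl, diffs_tl = psm_order(grid, "TL")
order_br, diffs_br = psm_order(grid, "BR")
print(diffs_tl, order_tl)  # {'TR': 71, 'BL': 40, 'BR': 7} ('TL', 'BR', 'BL', 'TR')
print(diffs_br, order_br)  # {'TL': 7, 'TR': 78, 'BL': 33} ('BR', 'TL', 'BL', 'TR')
```

For this grid the two initial points give different scan orders, which is the extra information the second motif type contributes.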

Fig.5. The framework of the proposed MMCM descriptor (final stage: concatenation of the GLCM features of MCMTL and MCMBR into the MMCM descriptor).

Fig.6. (a) 2 x 2 grid with pixel values 85, 14 (top row) and 125, 92 (bottom row); (b) absolute differences with respect to the initial scan point (top left most): |85-85|, |85-14|, |85-125|, |85-92|; (c) formation of motif; (d) motif index.

Fig.7. (a) 2 x 2 grid (same grid as Fig.6(a)); (b) absolute differences with respect to the initial scan point (bottom right most): |92-85|, |92-125|, |92-14|, |92-92|; (c) formation of motif; (d) motif index.

This process derives two different motif-transformed images from the original image: the motif indexed image initiated from the top left most corner (MIITL) and the motif indexed image initiated from the bottom right most corner (MIIBR). Both MIITL and MIIBR have dimensions N/2 x M/2, where N x M is the size of the original texture image, and their values range from 1 to 6. The present paper derives two co-occurrence matrices, MCMTL and MCMBR, on the two transformed images MIITL and MIIBR respectively. Both MCMTL and MCMBR are of size 6 x 6.
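The transformation into MIITL and MIIBR can be sketched with NumPy: each non-overlapping 2 x 2 block is replaced by one motif index, so an N x M image becomes an N/2 x M/2 index image. The ordering rule (increasing absolute difference from the initial pixel), the tie-breaking, the index numbering 1..6 in permutation order, and the function names are all assumptions, so the output is not expected to reproduce the values of Fig.8; for odd N or M the last row or column is simply dropped.

```python
from itertools import permutations
import numpy as np

POS = {"TL": (0, 0), "TR": (0, 1), "BL": (1, 0), "BR": (1, 1)}

def motif_table(start):
    """Assumed numbering 1..6 of the scan orders beginning at `start` (generation order)."""
    others = [c for c in POS if c != start]
    return {(start, *rest): k for k, rest in enumerate(permutations(others), start=1)}

def motif_index(block, start, table):
    """Order the other pixels by |intensity - intensity at start| and look up the motif."""
    ref = int(block[POS[start]])
    others = sorted((c for c in POS if c != start),
                    key=lambda c: abs(int(block[POS[c]]) - ref))  # stable: ties keep TL, TR, BL, BR
    return table[(start, *others)]

def motif_indexed_image(image, start):
    """Replace each non-overlapping 2 x 2 block by its motif index: N x M -> N/2 x M/2."""
    n, m = image.shape
    table = motif_table(start)
    out = np.empty((n // 2, m // 2), dtype=np.int64)
    for i in range(n // 2):
        for j in range(m // 2):
            out[i, j] = motif_index(image[2 * i:2 * i + 2, 2 * j:2 * j + 2], start, table)
    return out

rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(8, 8))
mii_tl = motif_indexed_image(image, "TL")  # MIITL
mii_br = motif_indexed_image(image, "BR")  # MIIBR
print(mii_tl.shape, mii_br.shape)  # (4, 4) (4, 4)
```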

The transformation of an 8 x 8 texture image into the MIITL and MIIBR images is displayed in Fig.8. The transformed images are of size 4 x 4, with values ranging from 1 to 6.

(a) 8 x 8 texture image:

$$
\begin{bmatrix}
22 & 53 & 19 & 54 & 25 & 55 & 55 & 24 \\
78 & 55 & 84 & 52 & 57 & 90 & 86 & 50 \\
19 & 68 & 35 & 28 & 10 & 38 & 36 & 55 \\
83 & 29 & 10 & 68 & 54 & 31 & 44 & 82 \\
76 & 52 & 47 & 43 & 47 & 53 & 15 & 56 \\
45 & 38 & 61 & 45 & 40 & 62 & 40 & 76 \\
50 & 86 & 85 & 88 & 20 & 11 & 87 & 17 \\
90 & 96 & 14 & 14 & 55 & 59 & 51 & 66
\end{bmatrix}
$$

(b) MIITL image for Fig.8(a):

$$
\begin{bmatrix}
4 & 6 & 3 & 3 \\
6 & 3 & 6 & 2 \\
3 & 6 & 3 & 2 \\
3 & 3 & 3 & 5
\end{bmatrix}
$$

(c) MIIBR image for Fig.8(a):

$$
\begin{bmatrix}
3 & 3 & 2 & 6 \\
6 & 6 & 4 & 3 \\
2 & 2 & 4 & 3 \\
2 & 2 & 1 & 1
\end{bmatrix}
$$

Fig.8. Transformation of texture image into motif indexed image.

The two MCMs, MCMTL and MCMBR, are constructed on the transformed images; the (p, q, r) entry of each represents the probability of finding a motif p at a distance r from the motif q. MCMTL and MCMBR are also constructed for the query image Q. The main intuition behind using both MCMTL and MCMBR is to find the common objects between the query and database images, i.e., the motifs of the same grid obtained from two different initial positions.
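A motif co-occurrence matrix can be sketched as a normalized 6 x 6 count of motif pairs at a fixed displacement: here the (p, q) entry counts motif p found at `offset` from motif q, and the counts are divided by the number of valid pairs so they form probabilities. The helper name `motif_cooccurrence` and the (row, column) offset convention are illustrative assumptions; the example uses the MIITL values listed in Fig.8(b), read row by row, with a horizontal distance of 1.

```python
import numpy as np

def motif_cooccurrence(mii, offset, levels=6):
    """Entry [p-1, q-1] = probability that motif p lies at `offset` (row, col) from motif q."""
    dr, dc = offset
    h, w = mii.shape
    counts = np.zeros((levels, levels))
    for r in range(h):
        for c in range(w):
            r2, c2 = r + dr, c + dc
            if 0 <= r2 < h and 0 <= c2 < w:    # skip pairs that fall off the image
                q = mii[r, c]                   # reference motif
                p = mii[r2, c2]                 # motif at the displacement
                counts[p - 1, q - 1] += 1
    total = counts.sum()
    return counts / total if total else counts  # normalize counts to probabilities

# MIITL values of Fig.8(b), read row by row
mii_tl = np.array([[4, 6, 3, 3],
                   [6, 3, 6, 2],
                   [3, 6, 3, 2],
                   [3, 3, 3, 5]])
mcm = motif_cooccurrence(mii_tl, offset=(0, 1))  # horizontal neighbour, distance 1
print(mcm.shape)  # (6, 6)
print(mcm[2, 5])  # 0.25: motif 3 follows motif 6 in 3 of the 12 horizontal pairs
```

The matrix size depends only on the number of motifs, not on the image size, which is why MCMTL and MCMBR stay 6 x 6.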

This paper computes GLCM features on MCMTL and MCMBR in four directions, 0°, 45°, 90° and 135°, for different values of the distance d. For each d value, the feature values of the four directions are averaged, and these averages form the feature vector. The present paper concatenates the feature vectors of MCMTL and MCMBR to derive the multi MCM (MMCM). The feature vector size of MMCM is 2 x V, where V is the feature vector size of each of MCMTL and MCMBR. The spatial relationships that MMCM captures between query and database images make it highly effective for image retrieval. The proposed MMCM is well suited to the CBIR problem because it captures low level texture properties efficiently, and the dimensions of each of MCMTL and MCMBR are only 6 x 6 for every color plane, irrespective of the image size and the grey level range of the original image.
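The feature extraction step can be sketched as follows. The paper does not list which GLCM features or which d values it uses, so contrast, energy, homogeneity and entropy, and d in {1, 2}, are assumptions; with these choices V = 4 x 2 = 8 and the MMCM vector has 2 x V = 16 entries. The 0°, 45°, 90° and 135° offsets follow the usual (row, column) convention with rows pointing down, and all function names are hypothetical. The example uses the MIITL and MIIBR values of Fig.8(b) and Fig.8(c).

```python
import numpy as np

def cooccurrence(mii, offset, levels=6):
    """Normalized levels x levels co-occurrence matrix of motif indexes 1..levels."""
    dr, dc = offset
    h, w = mii.shape
    counts = np.zeros((levels, levels))
    for r in range(max(0, -dr), min(h, h - dr)):      # keep r + dr inside the image
        for c in range(max(0, -dc), min(w, w - dc)):  # keep c + dc inside the image
            counts[mii[r + dr, c + dc] - 1, mii[r, c] - 1] += 1
    total = counts.sum()
    return counts / total if total else counts

def glcm_features(p):
    """Assumed feature set: contrast, energy, homogeneity, entropy."""
    i, j = np.indices(p.shape)
    contrast = np.sum(p * (i - j) ** 2)
    energy = np.sum(p ** 2)
    homogeneity = np.sum(p / (1.0 + np.abs(i - j)))
    nz = p[p > 0]
    entropy = -np.sum(nz * np.log2(nz))
    return np.array([contrast, energy, homogeneity, entropy])

def direction_offsets(d):
    """(row, col) offsets for 0, 45, 90 and 135 degrees at distance d."""
    return [(0, d), (-d, d), (-d, 0), (-d, -d)]

def mcm_features(mii, distances=(1, 2)):
    """For each d, average the features over the four directions; then stack the d's."""
    per_d = []
    for d in distances:
        per_dir = [glcm_features(cooccurrence(mii, off)) for off in direction_offsets(d)]
        per_d.append(np.mean(per_dir, axis=0))
    return np.concatenate(per_d)

def mmcm_features(mii_tl, mii_br, distances=(1, 2)):
    """MMCM = MCMTL features followed by MCMBR features (length 2 x V)."""
    return np.concatenate([mcm_features(mii_tl, distances), mcm_features(mii_br, distances)])

mii_tl = np.array([[4, 6, 3, 3], [6, 3, 6, 2], [3, 6, 3, 2], [3, 3, 3, 5]])  # Fig.8(b)
mii_br = np.array([[3, 3, 2, 6], [6, 6, 4, 3], [2, 2, 4, 3], [2, 2, 1, 1]])  # Fig.8(c)
vec = mmcm_features(mii_tl, mii_br)
print(vec.shape)  # (16,)
```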

Further, in the proposed MCMTL and MCMBR, the absolute differences between the initial point of the PSM and the other pixels of the 2 x 2 grid are computed first, which differs from MCM as shown in Fig.20. This absolute incremental difference derives the PSMs more precisely than the motifs used in MCM, as shown in Fig.9.

Fig.9. Difference of MCM and proposed MCMTL indexing: (a) 2 x 2 grid; (c) PSM of MCMTL; (d) motif index of MCM and motif index of MCMTL.

-

IV. Results and Discussions

In this paper, the proposed MMCM is tested on popular benchmark CBIR databases, namely Corel-1k [41], Corel-10k [42], MIT-VisTex [43], Brodatz [44] and CMU-PIE [45]. These databases are used in many image processing applications. Their images are captured under varying lighting conditions and with different backgrounds. Of these databases, the Brodatz textures are in grey scale and the rest are in color. The CMU-PIE database contains facial images captured under varying illumination, pose, lighting and expression. The Corel databases contain natural images of humans, scenery, birds, animals, etc. The five databases differ in their total number of images, image sizes, textures, image contents and classes, where each class consists of a set of similar images. Table 1 gives an overall summary of the five databases, and sample images are shown in Fig.10 to Fig.14.

Fig.10. Corel-1K database sample images.

Fig.11. Corel-10K database sample images.

Table 1. Summary of the image databases.

| No. | Name of the database | Image size | Number of categories/classes/subsections | Number of images per category | Total number of images |
|---|---|---|---|---|---|
| 1 | Corel-1k [41] | 384x256 | 10 | 100 | 1000 |
| 2 | Corel-10k [42] | 120x80 | 80 | Varies | 10800 |
| 3 | MIT-VisTex [43] | 128x128 | 40 | 16 | 640 |
| 4 | Brodatz 640 [44] | 128x128 | 40 | 16 | 640 |
| 5 | CMU-PIE [45] | 640x486 | 15 | Varies | 702 |

Fig.12. Sample textures from the Brodatz database.

Fig.13. Sample textures from the MIT-VisTex database.

The query image is denoted as Q. After feature extraction with MMCM, the n-dimensional feature vector of Q is represented as $V_Q = (V_{Q_1}, V_{Q_2}, \ldots, V_{Q_n})$. Similarly, the n-dimensional MMCM feature vector of each image in the database is represented as $V_{DB_i} = (V_{DB_{i1}}, V_{DB_{i2}}, \ldots, V_{DB_{in}})$, $i = 1, 2, \ldots, |DB|$. The aim of a CBIR method is to select from the database the images that most closely resemble the query image. To accomplish this, the distance between the feature vector of the query and that of each image in the database DB is computed, and the top images with the least distance are selected. This paper uses the distance measure given in equation (1):

$$D(Q, DB_i) = \sum_{j=1}^{n} \frac{\left| f_{DB_{ij}} - f_{Q_j} \right|}{1 + f_{DB_{ij}} + f_{Q_j}} \qquad (1)$$

where $f_{DB_{ij}}$ is the jth feature of the ith image in the database DB and $f_{Q_j}$ is the jth feature of the query image Q.

Fig.14. The sample facial images from CMU-PIE database.
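A sketch of the ranking step: it implements the distance of equation (1), i.e. the sum over features of |f_DB - f_Q| / (1 + f_DB + f_Q), and returns the database images with the least distance. The function names and toy feature vectors are hypothetical; the measure assumes non-negative features (true for co-occurrence statistics such as contrast, energy and homogeneity), so the denominator never drops below 1.

```python
import numpy as np

def d1_distance(f_query, f_db):
    """Equation (1) for every database row i: sum_j |f_DB[i,j] - f_Q[j]| / (1 + f_DB[i,j] + f_Q[j]).
    Assumes non-negative features so the denominator is always >= 1."""
    f_query = np.asarray(f_query, dtype=float)
    f_db = np.asarray(f_db, dtype=float)
    return np.sum(np.abs(f_db - f_query) / (1.0 + f_db + f_query), axis=1)

def retrieve(f_query, f_db, top):
    """Indices of the `top` database images with the least distance to the query."""
    return np.argsort(d1_distance(f_query, f_db), kind="stable")[:top]

db = np.array([[0.90, 0.10, 0.40],   # toy feature vectors, one row per database image
               [0.20, 0.80, 0.50],
               [0.85, 0.15, 0.35]])
query = np.array([0.88, 0.12, 0.38])
print(retrieve(query, db, top=2))  # [0 2]
```

Dividing each term by 1 + f_DB + f_Q damps the contribution of features with large magnitudes, so no single feature dominates the sum.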

To measure the performance of the proposed MMCM-based CBIR method, this paper computes the two most widely used quality measures: the average retrieval precision (ARP) and the average retrieval rate (ARR), i.e., the average recall. For a query image $I_q$, precision and recall are defined as follows:

$$P(I_q) = \frac{\text{Number of relevant images retrieved}}{\text{Total number of images retrieved}} \qquad (2)$$

$$\mathrm{ARP} = \frac{1}{|DB|} \sum_{i=1}^{|DB|} P(I_i) \qquad (3)$$

$$R(I_q) = \frac{\text{Number of relevant images retrieved}}{\text{Total number of relevant images in the database}} \qquad (4)$$

$$\mathrm{ARR} = \frac{1}{|DB|} \sum_{i=1}^{|DB|} R(I_i) \qquad (5)$$
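The precision, recall, ARP and ARR definitions can be sketched in a few lines of NumPy. The sketch assumes that every database image is used once as a query, that a retrieved image is relevant when it shares the query's class label, and that the query itself is counted among the retrieved and relevant images (a common convention in CBIR evaluations, not stated explicitly in the text). The function names and the four-image toy example are hypothetical.

```python
import numpy as np

def precision_recall(retrieved_labels, query_label, n_relevant):
    """Precision and recall of one query; relevant = same class label as the query."""
    hits = int(np.sum(np.asarray(retrieved_labels) == query_label))
    return hits / len(retrieved_labels), hits / n_relevant

def arp_arr(labels, rankings, top):
    """Average precision (ARP) and recall (ARR) over all |DB| images used as queries.
    rankings[i] lists database indices sorted by distance to image i (closest first)."""
    labels = np.asarray(labels)
    precisions, recalls = [], []
    for i, ranking in enumerate(rankings):
        retrieved = labels[np.asarray(ranking[:top])]
        n_relevant = int(np.sum(labels == labels[i]))  # includes the query itself
        p, r = precision_recall(retrieved, labels[i], n_relevant)
        precisions.append(p)
        recalls.append(r)
    return float(np.mean(precisions)), float(np.mean(recalls))

labels = [0, 0, 1, 1]  # toy database: two classes of two images each
rankings = [[0, 1, 2, 3], [1, 2, 0, 3], [2, 3, 0, 1], [3, 0, 2, 1]]
print(arp_arr(labels, rankings, top=2))  # (0.75, 0.75)
```

Sweeping `top` from 10 to 100 and plotting the two averages gives curves of the kind shown in Fig.15 to Fig.19.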

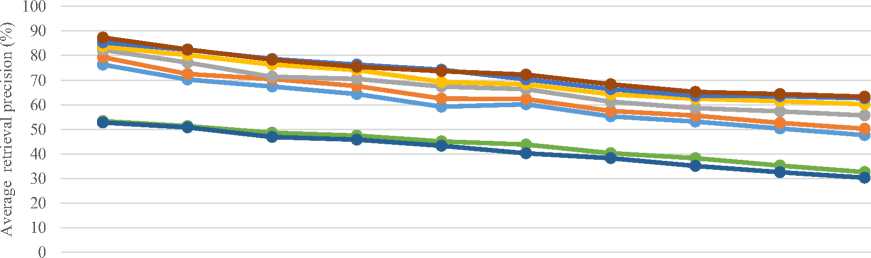

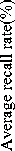

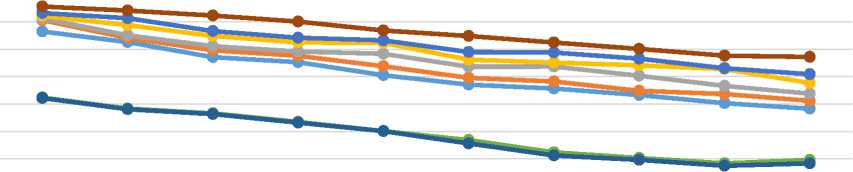

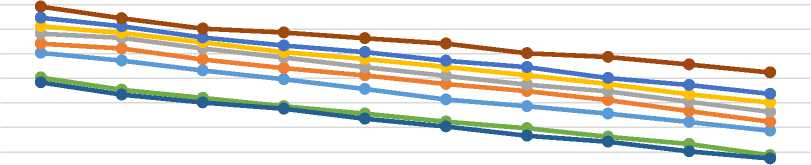

The proposed MMCM, MCMTL and MCMBR descriptors are compared with the retrieval results of recent descriptors: local binary pattern (LBP) [35], local ternary pattern (LTP) [46], semi-structure local binary pattern (SLBP) [47], local derivative pattern (LDP) [48] and local tetra pattern (LTrP) [49].

(a) ARP for Corel-1k database; (b) ARR for Corel-1k database (horizontal axis: number of retrieved images, 10 to 100; curves: SLBP [47], LBP [35], LDP [48], LTP [46], LTrP [49], proposed MCMTL, MCMBR and MMCM).

Fig.15. Comparison of proposed MCMTL, MCMBR and MMCM descriptors with SLBP, LBP, LDP, LTP, LTrP over Corel-1k database using (a) ARP (b) ARR.

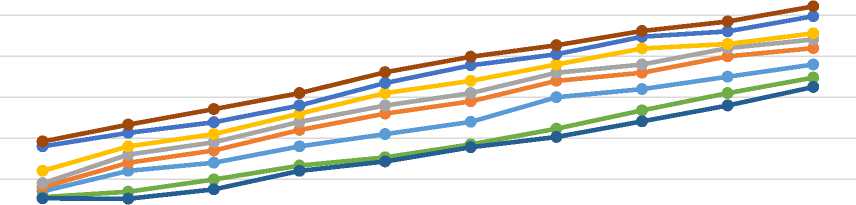

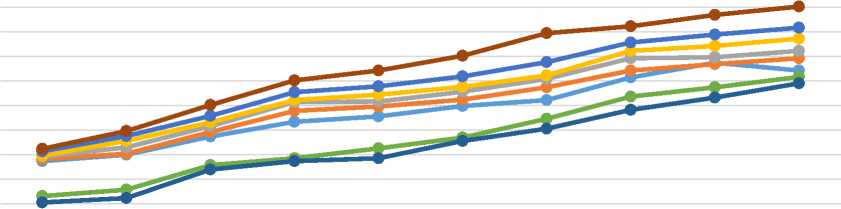

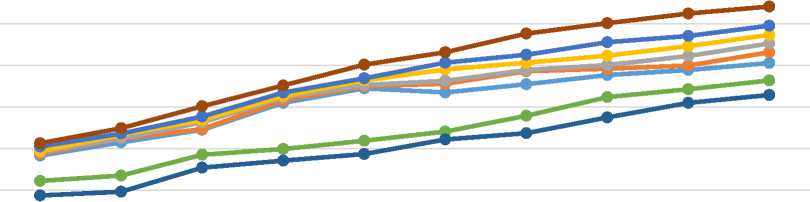

(a) ARP for Corel-10k database; (b) ARR for Corel-10k database (horizontal axis: number of retrieved images; curves: SLBP [47], LBP [35], LDP [48], LTP [46], LTrP [49], proposed MCMTL, MCMBR and MMCM).

Fig.16. Comparison of proposed MCMTL, MCMBR and MMCM descriptors with SLBP, LBP, LDP, LTP, LTrP over Corel-10k database using (a) ARP (b) ARR.

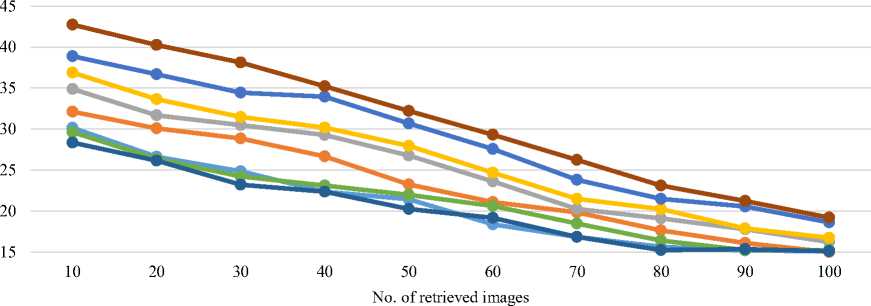

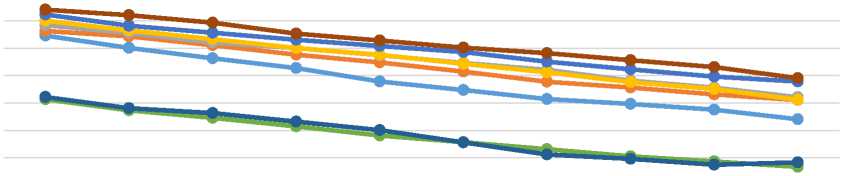

(a) ARP for MIT-VisTex texture database; (b) ARR for MIT-VisTex texture database (vertical axes: average retrieval precision and average recall rate; horizontal axis: number of retrieved images, 10 to 100; curves: SLBP [47], LBP [35], LDP [48], LTP [46], LTrP [49], proposed MCMTL, MCMBR and MMCM).

Fig.17. Comparison of proposed MCMTL, MCMBR and MMCM descriptors with SLBP, LBP, LDP, LTP, LTrP over MIT-VisTex database using (a) ARP (b) ARR.

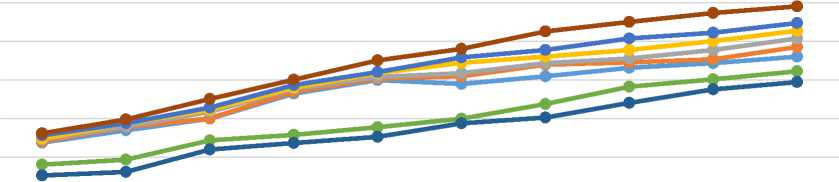

Average recall rate (%) Average retrieval precision (%)

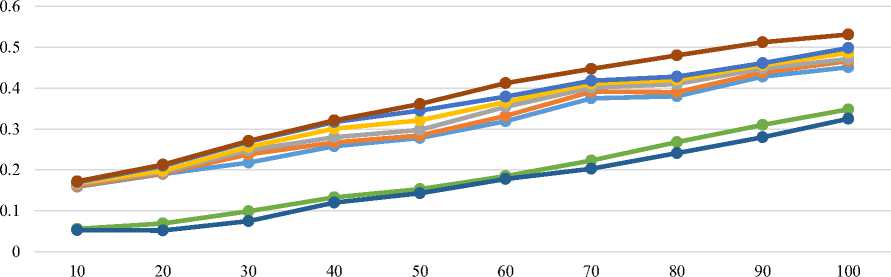

0.6

0.5

0.4

0.3

0.2

0.1

30 40 50 60 70 80 90 100

No. of retrieved images

-

• SLBP[47] • LBP[35] • LDP[48]

-

• LTP[46] ^*—LTrP[49] • Proposed MCMTL

-

• Proposed MCMBR • Proposed MMCM method

(a) ARP for Brodtaz texture database.

10 20 30 40 50 60 70 80 90 100

No. of retrieved images

-

• SLBP[47] • LBP[35] • LDP[48]

-

• LTP[46] —0=-LTrP[49] • Proposed MCMTL

—^Proposed MCMBR —^Proposed MMCM method

-

(b) ARR for Brodtaz texture database.

0.6

Fig.18. Comparison of proposed MCM TL , MCM BR and MMCM descriptors with SLBP, LBP, LDP, LTP, LTrP over Brodtaz database using (a) ARP (b) ARR.

(a) ARP for CMU-PIE database; (b) ARR for CMU-PIE database (vertical axes: average retrieval precision and average recall rate; horizontal axis: number of retrieved images, 10 to 100; curves: SLBP [47], LBP [35], LDP [48], LTP [46], LTrP [49], proposed MCMTL, MCMBR and MMCM).

Fig.19. Comparison of proposed MCMTL, MCMBR and MMCM descriptors with SLBP, LBP, LDP, LTP, LTrP over CMU-PIE database using (a) ARP (b) ARR.

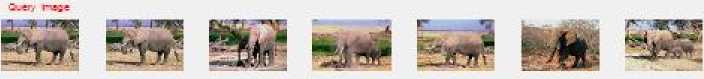

(a) Corel-1k database; (b) Corel-10k database.

Table 2. Dimensions of the descriptors.

| S.No | Descriptor | Dimensions |
|---|---|---|
| 1 | SLBP | 256 |
| 2 | LBP | 256 |
| 3 | LTP | 2x256 |
| 4 | LDP | 4x256 |
| 5 | LTrP | 13x256 |
| 6 | Proposed MCMTL | 6x6 |
| 7 | Proposed MCMBR | 6x6 |
| 8 | Proposed MMCM | 2x(6x6) |

The dimension of each compared descriptor and of the proposed MMCM is summarized in Table 2. The dimension of MMCM is lower than that of all the recent and state-of-the-art descriptors considered, while its retrieval rate is higher.

-

V. Conclusions

We have presented an extended version of MCM for image retrieval. The proposed MMCM captures more discriminative texture information than MCM by deriving Peano scan motifs in two different directions, which transforms the image into two motif images. From these, the present paper derives MMCM based on two MCMs, namely the “motif co-occurrence matrix initiated from top left most pixel (MCMTL)” and the “motif co-occurrence matrix initiated from bottom right most pixel (MCMBR)”. MCMTL and MCMBR capture third order neighborhood statistics of the image. Each of them is only 6 x 6, so GLCM features are easy to compute on them. MCMTL and MCMBR are easy to understand and implement, and are efficient in terms of storage requirements and computational time. The feature vectors derived from MCMTL and MCMBR are concatenated to form the MMCM features, which are used for image retrieval. The MMCM features therefore reduce the computation time of the similarity measure, and their dimension, 2 x (6 x 6), is the lowest among the compared descriptors (Table 2). The MMCM method is invariant to any monotonic mapping of individual color planes, such as gain adjustment, contrast stretching and histogram equalization.

References

- F. Monay, D. Gatica-Perez, Modeling semantic aspects for cross-media image indexing, IEEE Transactions on Pattern Analysis and Machine Intelligence 29 (10) (2007) 1802–1817.

- Pranoti P. Mane, Amruta B. Rathi, Narendra G. Bawane, An Interactive Approach for Retrieval of Semantically Significant Images, I.J. Image, Graphics and Signal Processing, 2016, 3, 63–70.

- R.W. Picard, F. Liu, A new ordering for image similarity, in: Proceedings of IEEE Conference on ASSP, April (1994) 129–132.

- M.J. Swain, D.H. Ballard, Color indexing, International Journal of Computer Vision 7 (1) (1991).

- G. Pass, R. Zabih, J. Miller, Comparing images using color coherence vectors, in: The Fourth ACM International Multimedia Conference, November (1996) 18–22.

- Lakhdar Belhallouche, Kamel Belloulata, Kidiyo Kpalma, A New Approach to Region Based Image Retrieval using Shape Adaptive Discrete Wavelet Transform, I.J. Image, Graphics and Signal Processing, 2016, 1, 1–14.

- Pranoti P. Mane, Narendra G. Bawane, Image Retrieval by Utilizing Structural Connections within an Image, I.J. Image, Graphics and Signal Processing, 2016, 1, 68–74.

- J. Huang, R. Kumar, M. Mitra, W. Zhu, R. Zabih, Image indexing using color correlogram, in: IEEE Conference on Computer Vision and Pattern Recognition, San Juan, Puerto Rico, June (1997) 762–768.

- P. Chang, J. Krumm, Object recognition with color co-occurrence histogram, in: Proceedings of the IEEE International Conference on CVPR, Fort Collins, CO (1997) 498–504.

- R. Haralick, K. Shanmugam, I. Dinstein, Textural features for image classification, IEEE Trans. Syst. Man Cybern. 3 (6) (1973) 610–622.

- J. Chen, S. Shan, C. He, G. Zhao, M. Pietikäinen, X. Chen, W. Gao, WLD: a robust local image descriptor, IEEE Trans. Pattern Anal. Mach. Intell. 32 (9) (2009) 1705–1720.

- L. Zhang, Z. Zhou, H. Li, Binary Gabor pattern: an efficient and robust descriptor for texture classification, in: 19th IEEE International Conference on Image Processing (ICIP), 2012, pp. 81–84.

- R. Haralick, Statistical and structural approaches to texture, Proc. IEEE 67 (5) (1979) 786–804.

- F.R. Siqueira, W.R. Schwartz, H. Pedrini, Multi-scale gray level co-occurrence matrices for texture description, Neurocomputing 120 (2013) 336–345.

- M. Verma, B. Raman, S. Murala, Local extrema co-occurrence pattern for color and texture image retrieval, Neurocomputing 165 (2015) 255–269.

- C.H. Liu, Z. Lei, Y.K. Hou, Z.Y. Li, J.Y. Yang, Image retrieval based on multi-texton histogram, Pattern Recognit. 43 (2010) 2380–2389.

- S. Murala, Q.M. Jonathan, R.P. Maheshwari, R. Balasubramanian, Modified color motif co-occurrence matrix for image indexing and retrieval, Comput. Electr. Eng. 39 (2013) 762–774.

- S. Murala, R.P. Maheshwari, R. Balasubramanian, Expert system design using wavelet and color vocabulary trees for image retrieval, Int. J. Expert Syst. Appl. 39 (2012) 5104–5114.

- N. Jhanwar, S. Chaudhuri, G. Seetharaman, B. Zavidovique, Content-based image retrieval using motif co-occurrence matrix, Image Vis. Comput. 22 (2004) 1211–1220.

- C.H. Lin, R.T. Chen, Y.K. Chan, A smart content-based image retrieval system based on color and texture feature, Image Vis. Comput. 27 (2009) 658–665.

- A. Vadivel, S. Sural, A.K. Majumdar, An integrated color and intensity co-occurrence matrix, Pattern Recognit. Lett. 28 (2007) 974–983.

- M.N. Do, M. Vetterli, Wavelet-based texture retrieval using generalized Gaussian density and Kullback–Leibler distance, IEEE Trans. Image Process. 11 (2) (2002) 146–158.

- K. Prasanthi Jasmine, P. Rajesh Kumar, Color and Rotated M-Band Dual Tree Complex Wavelet Transform Features for Image Retrieval, I.J. Image, Graphics and Signal Processing, 2014, 9, 1–10.

- T.V. Madhusudhana Rao, S. Pallam Setty, Y. Srinivas, An Efficient System for Medical Image Retrieval using Generalized Gamma Distribution, I.J. Image, Graphics and Signal Processing, 2015, 6, 52–58.

- M. Kokare, P.K. Biswas, B.N. Chatterji, Texture image retrieval using rotated wavelet filters, J. Pattern Recognit. Lett. 28 (2007) 1240–1249.

- J.C. Felipe, A.J.M. Traina, C.J. Traina, Retrieval by content of medical images using texture for tissue identification, in: Proceedings of the 16th IEEE Symposium on Computer-Based Medical Systems, New York, USA, 2003, pp. 175–180.

- W. Liu, H. Zhang, Q. Tong, Medical image retrieval based on nonlinear texture features, Biomed. Eng. Instrum. Sci. 25 (1) (2008) 35–38.

- B. Ramamurthy, K.R. Chandran, V.R. Meenakshi, V. Shilpa, CBMIR: content based medical image retrieval system using texture and intensity for dental images, Commun. Comput. Inform. Sci. 305 (2012) 125–134.

- L. Nanni, S. Brahnam, A. Lumini, A local approach based on a Local Binary Patterns variant texture descriptor for classifying pain states, Expert Syst. Appl. 37 (12) (2010) 7888–7894.

- L. Nanni, A. Lumini, S. Brahnam, Local binary patterns variants as texture descriptors for medical image analysis, Artif. Intell. Med. 49 (2) (2010) 117–125.

- Abbas H. Hassin Alasadi, Saba Abdual Wahid, Effect of Reducing Colors Number on the Performance of CBIR System, I.J. Image, Graphics and Signal Processing (IJIGSP), 2016, 9, 10–16.

- Abdelhamid Abdesselam, Edge Information for Boosting Discriminating Power of Texture Retrieval Techniques, I.J. Image, Graphics and Signal Processing (IJIGSP), 2016, 4, 16–28.

- A. Lempel, J. Ziv, Compression of two-dimensional data, IEEE Transactions on Information Theory 32 (1) (1986) 2–8.

- T. Ojala, M. Pietikainen, D. Harwood, A comparative study of texture measures with classification based on feature distributions, Pattern Recogn. 29 (1) (1996) 51–59.

- T. Ojala, M. Pietikäinen, T. Mäenpää, Multiresolution gray-scale and rotation invariant texture classification with local binary patterns, IEEE Trans. Pattern Anal. Mach. Intell. 24 (7) (2002) 971–987.

- A. Oliver, X. Lladó, J. Freixenet, J. Martí, False positive reduction in mammographic mass detection using local binary patterns, in: Proceedings of the Medical Image Computing and Computer Assisted Intervention (MICCAI 2007), Brisbane, Australia: Springer, Lecture Notes in Computer Science (LNCS) 4791, pp. 286–293, 2007.

- G. Seetharaman, B. Zavidovique, Image processing in a tree of Peano-coded images, in: Proceedings of the IEEE Workshop on Computer Architecture for Machine Perception, Cambridge, CA (1997).

- G. Seetharaman, B. Zavidovique, Z-trees: adaptive pyramid algorithms for image segmentation, in: Proceedings of the IEEE International Conference on Image Processing, ICIP98, Chicago, IL, October (1998).

- R. Giancarlo, D. Scaturro, F. Utro, Textual data compression in computational biology: a synopsis, Bioinformatics 25 (2009) 1575–1586.

- A.R. Butz, Space filling curves and mathematical programming, Information and Control 12 (1968) 314–330.

- Corel Photo Collection Color Image Database, online available on http://wang.ist.psu.edu/docs/related.shtml

- Corel database: http://www.ci.gxnu.edu.cn/cbir/Dataset.aspx

- MIT Vision and Modeling Group, Cambridge, “Vision Texture”, http://vismod.media.mit.edu/pub/.

- P. Brodatz, Textures: A Photographic Album for Artists and Designers, Dover, New York, NY, USA, 1999.

- T. Sim, S. Baker, M. Bsat, The CMU pose, illumination, and expression database, IEEE Trans. Pattern Anal. Mach. Intell. 25 (12) (2003) 1615–1618.

- X. Tan, B. Triggs, Enhanced local texture feature sets for face recognition under difficult lighting conditions, IEEE Trans. Image Process. 19 (6) (2010) 1635–1650.

- K. Jeong, J. Choi, G. Jang, Semi-local structure patterns for robust face detection, IEEE Signal Processing Letters 22 (9) (2015) 1400–1403.

- B. Zhang, Y. Gao, S. Zhao, J. Liu, Local derivative pattern versus local binary pattern: face recognition with high-order local pattern descriptor, IEEE Transactions on Image Processing 19 (2) (2010) 533–544.

- S. Murala, R.P. Maheshwari, R. Balasubramanian, Local tetra patterns: a new feature descriptor for content-based image retrieval, IEEE Transactions on Image Processing 21 (5) (2012) 2874–2886.