Convolutional Neural Network (CNN-SA) based Selective Amplification Model to Enhance Image Quality for Efficient Fire Detection

Authors: Sagnik Sarkar, Aditya Sunil Menon, Gopalakrishnan T, Anil Kumar Kakelli

Journal: International Journal of Image, Graphics and Signal Processing (IJIGSP)

Issue: Vol. 13, No. 5, 2021.


Fires spread quickly, are extremely difficult to contain, and cause a great deal of damage to people and property. Current domestic systems for detecting outbreaks of fire, such as smoke detectors, are prone to reliability issues and would benefit greatly from a secondary system that confirms the presence of a fire on the premises. In this paper, we propose a novel image pre-processing algorithm called Selective Amplification. It enhances images that are to be used in Convolutional Neural Networks, which are then trained on the pre-processed images to detect fires with high accuracy. The efficacy of the technique is verified by training two identical Convolutional Neural Network models on the same dataset of images, with the proposed model trained on a version of the dataset pre-processed using Selective Amplification. The proposed model improves real-time fire detection accuracy by 12.85 percentage points (best-case validation accuracy) compared with an identical model trained on the dataset without any pre-processing.

Keywords: Selective Amplification, Convolutional Neural Network, Image Processing, Real-time Fire Detection, Image Enhancement, Pattern Recognition

Short URL: https://sciup.org/15017819

IDR: 15017819 | DOI: 10.5815/ijigsp.2021.05.05


Published Online October 2021 in MECS

1. Introduction

The devices most commonly used to detect an outbreak of fire are smoke alarms, which rely on ionization or optical sensing, and carbon monoxide detectors. However, these devices have quantifiable failure rates and would benefit greatly from a secondary means of detecting and confirming the presence of fire within a vicinity. A solution that deploys such a secondary fire detection measure is therefore proposed. It analyses the footage from existing CCTV networks in real time, requiring little to no additional hardware for a surveillance network that has already been established.

Our major contributions include:

• Selective Amplification algorithm: an algorithm used to pre-process images before they are used as training and validation data for the Convolutional Neural Network.

• Convolutional Neural Network and a performance comparison between two such models, one trained on the pre-processed dataset and the other trained on the raw dataset.

2. Related Work

Previous research has proposed a variety of methods for detecting fire in real time, including analysis in the LUV color space [1], texture analysis of the smoke rising from a flame [2], and thermal imaging [3]. While these methods have shown promising results, we believe our approach can further improve their performance, because our algorithm simply “selectively amplifies” the luminosity of certain regions in the image. It works on the principle of making “bright regions brighter and dark regions darker”. This approach is meant to augment, not replace, the existing methods by which fire is detected in surveillance footage.

In this paper, the related work on fire detection using Computer Vision and Machine Learning is first discussed and its merits weighed. The development of the Selective Amplification algorithm and the CNN architecture are then discussed in detail. Two such CNNs are then trained on two datasets that are identical except for one distinguishing factor: one model is trained on the dataset after all the images have been pre-processed using Selective Amplification, while the other is trained on the raw dataset. The models have been trained on a dataset of over 2000 images, some of which contain a fire, while the others are control images taken in various settings, lighting conditions, and times of day.

Fire is an environmental as well as a life hazard. It can go out of control in urban areas as well as in dense forests. Fires in urban areas tend to be caused by negligence or oversight, whereas forest fires, which cause extreme damage to the natural reserve of flora and fauna, tend to be caused by environmental and climatic effects. Such forest fires can be predicted [18] or detected in real time to minimize losses, so there is a need to automate their detection and the response to them. Various methods, ranging from heat sensors, smoke detectors, and gas sensors [17] to Computer Vision, have been used and tested to automate fire detection. Though Computer Vision is not yet a widespread approach to fire detection, it has been gaining popularity recently, as elaborated upon by [4, 5, 18]. [4] gave us the idea of employing a Convolutional Neural Network as our model of choice, while [6, 7, 5] gave us a solid use case and some first-glance characteristics to look out for, such as frame-to-frame changes in brightness and color, the boundary of the light source, and flickering. This further helped us distinguish between a flame and an artificial source of light. [3] allowed us to further cut down on false positives and improve our detection accuracy. When visual feedback is used for fire detection, many algorithms have targeted different components of the fire. For example, [2] considers smoke textures. This is an innovative way of detecting a fire from the smoke it emits, since the flame may be hidden by dense smoke, leaving the smoke as the only evidence of fire. Another way is to analyze the features of the flame itself when it is visible.

The two approaches complement each other. Take, for example, a gas burner: it releases little smoke, so a smoke detection system cannot detect it, and the flame itself is the most obvious evidence of fire. In [8], a temporal analysis of the flame is performed, and the fire alarm is triggered only when a certain pattern of movement or shape of the fire has been detected. This is an effective way of confirming the presence of a fire, but its greatest downside is that temporal analysis delays detection, which in some cases lets the fire grow out of control and cause greater damage to life and property. [9] proposes a similar method based on color and motion pattern analysis and therefore has similar flaws.

Early in our attempts, we came across a fundamental problem: distinguishing between an actual fire and artificial sources of light. [1] helped us tackle this problem to a degree by proposing to identify a fire from the color of its flame while tracking the flame’s motion frame by frame. When this was combined with other cues such as smoke detection, edge detection, and temporal analysis, the rate of false positives was dramatically reduced.


[10] proposes using the YCbCr color model for the detection process. Compared with color spaces such as RGB, YCbCr separates luminance from chrominance more effectively. [10] proposes a method that separates not only fire edge pixels but also high-temperature fire-center pixels, using statistical parameters of the fire image in YCbCr color space such as the mean and standard deviation.
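The luminance/chrominance separation mentioned above can be illustrated with the standard full-range RGB to YCbCr conversion. This is a minimal sketch assuming the JPEG (JFIF) coefficients derived from ITU-R BT.601; it only shows the color-space conversion and does not reproduce the fire-pixel rules or statistics used in [10], whose exact variant of the conversion may differ.

```python
def rgb_to_ycbcr(r: float, g: float, b: float) -> tuple:
    """Full-range 8-bit RGB -> YCbCr using the JFIF / BT.601 weights (assumed variant)."""
    y = 0.299 * r + 0.587 * g + 0.114 * b                # luminance (brightness)
    cb = 128.0 - 0.168736 * r - 0.331264 * g + 0.5 * b   # blue-difference chrominance
    cr = 128.0 + 0.5 * r - 0.418688 * g - 0.081312 * b   # red-difference chrominance
    return y, cb, cr


if __name__ == "__main__":
    for pixel in [(40, 40, 40), (200, 200, 200), (230, 120, 30)]:
        print(pixel, "->", tuple(round(c, 1) for c in rgb_to_ycbcr(*pixel)))
```

Grey pixels of any brightness come out with Cb = Cr = 128, so all of their brightness variation lands in Y, whereas a flame-colored pixel such as (230, 120, 30) has Cr well above Cb. This is the kind of separation that lets color-based rules treat brightness and hue independently.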

-

3. Methodology

-

3.1. Pre-processing using Proposed Selective Amplification Algorithm:

At times smoke is the only thing that is visible in a fire or at times only the flame is visible, [11] therefore proposes a method of detecting and tracking both the fire and smoke. A Region of Interest (RoI) is first proposed and then passed through two classifiers: one for the smoke and the other one for the fire. Two classifiers increase the computation time because of the traditional bulky classifiers. To rectify this drawback, the network needs to be simplified, thus sacrificing performance. The performance can be improved with minimal computation if the image is pre-processed to highlight the essential features. The extraction of these features will reduce the burden of the classifier. [12] uses another approach combining multiple concepts including moving object detection, image feature extraction, and classifier recognition. The moving object detection separates the foreground from the background thus separating the fire, a moving entity from the remaining background. Feature extraction and classification do the remaining job of detecting fire. This is an efficient method of detection and localization in forest fires [13]. In residential areas, an immediate response from the network about the presence of fire anywhere in the image is necessary, and thus a balance between speed and accuracy needs to be maintained. Other methods to detect a flame include the detection of edges as in [14];

the major drawback of this is when smoke is released along with the flame, the edges are not well defined to map, and the edges get distorted. This limits the performance of this approach to contained fires and smokeless flames.

These conclusions from the existing literature have helped in understanding that the best way to tackle this problem is to make the prediction model lighter and making extraction of mainly the fire region a priority. In the proposed method a relatively lighter Convolutional Neural Network (a modified version of LeNet-5) [15] is employed with an image enhancement algorithm, as described in this paper. This is to extract only the necessary parts of the image thus aiding the model in the process of Feature Extraction.

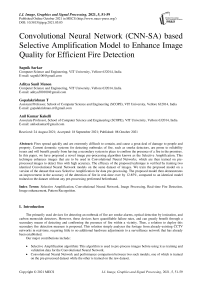

In this section, the proposed algorithm, preprocessing phase, and the architecture of the CNN are elaborated upon. Subsection 3.1 discusses the derivation and the intuition behind the Selective Amplification algorithm. The following subsection 3.2 provides a summary of the CNN model used for benchmarking the performance of the proposed algorithm. The next subsection 3.3 elaborates upon the training paradigm used. The final subsection 3.4 deals with the augmentation of the dataset to discourage overfitting and incentivize proper feature detection and training. This section thus provides an insight into the thought process behind the development of the algorithm and model and elaborates upon the components of the proposed model that is used for comparison in section IV.

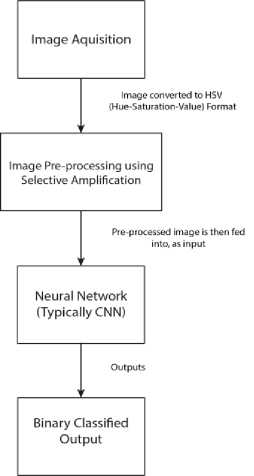

The main characteristic of a fire is that it is a source of light, so the region of an image containing a fire can be expected to be brighter than its surroundings. Keeping this in mind, a function is developed that increases the brightness of the parts of the image whose intensity is above a certain threshold and decreases the brightness of the parts whose intensity is below it. This requires a function whose concavity changes sign, preferably at the mid-intensity value: for an 8-bit image the minimum intensity is 0 and the maximum is 255, so the flip should occur at the midpoint, 127.5.

A well-known curve that is point-symmetric about a point and has opposite concavity on either side of it is the simple cubic (1). This equation needs to be transformed so that its point of symmetry lies at the midpoint of the intensity range and the range of output intensities is unchanged (which limits data loss). The minimum and maximum intensities are normalized to 0 and 1 respectively, which makes it possible to scale the result to any desired range.

$$y = x^3 \tag{1}$$

The curve y = x³ meets the line y = x at (−1, −1) and (1, 1) (as well as at the origin), so the region of interest spans an interval of length 2. This must be scaled to the interval [0, 1], whose length is 1. Therefore, x and y are replaced by 2x and 2y (2), which shrinks (1) by a factor of 2 along both the x and y directions.

Fig.1. A high-level overview of the way the algorithm is intended to be applied to neural network models.

$$2y = (2x)^3 \tag{2}$$

With the required range obtained, the point of symmetry (the origin) is translated to (0.5, 0.5), the midpoint of the intensity range, by replacing x with x − 0.5 and y with y − 0.5 in (2). Upon simplification, (3) is obtained.

$$2y - 1 = (2x - 1)^3 \tag{3}$$

(3) maps the minimum input intensity to the minimum output intensity and the maximum input intensity to the maximum output intensity. However, its concavity on either side of the midpoint is the opposite of what is desired: it compresses intensities towards the midpoint instead of pushing them apart (for example, x = 0.75 gives y = 0.5625). Interchanging x and y, which reflects the curve about the line y = x, reverses the concavity and yields (4).

$$2x - 1 = (2y - 1)^3 \tag{4}$$

Finally, (4) is solved for y to give a function (5) of the input intensity that can be applied during pre-processing. The final function is represented in Fig.2.

$$y = \frac{\sqrt[3]{2x - 1} + 1}{2} \tag{5}$$

Here, x represents the input intensity and y the output intensity, both normalized to [0, 1], and the cube root is the real cube root (negative for x < 0.5). If a k-bit image is considered, the maximum value of intensity M is given by (6).

$$M = 2^k - 1 \tag{6}$$

Therefore, the range of intensity is 0 ≤ x ≤ M. To extend the equation to this range, x and y in (5) are divided by the maximum possible value, i.e., replaced by x/M and y/M. This gives (7) and, after substituting (6), (8).

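$$y = M \cdot \frac{\sqrt[3]{\frac{2x}{M} - 1} + 1}{2}, \qquad 0 \le x, y \le M \tag{7}$$

$$y = (2^k - 1) \cdot \frac{\sqrt[3]{\frac{2x}{2^k - 1} - 1} + 1}{2}, \qquad 0 \le x, y \le 2^k - 1 \tag{8}$$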

Thus, (8), named the “Selective Amplification” algorithm, is employed as the pre-processing step that enhances both the dataset images on which the neural network is trained and any sample image presented to it.
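Equation (8) translates directly into code. The sketch below is a minimal, pure-Python illustration; the function names and the lookup-table helper are hypothetical, not the authors' implementation. It makes the real cube root explicit, because Python's `**` operator returns a complex number for a negative base raised to 1/3.

```python
import math


def selective_amplification(x: float, k: int = 8) -> float:
    """Map an intensity x in [0, M] through Eq. (8), with M = 2**k - 1 (Eq. (6))."""
    m = 2 ** k - 1                      # maximum intensity of a k-bit image
    if not 0 <= x <= m:
        raise ValueError(f"intensity {x} is outside [0, {m}]")
    t = 2.0 * x / m - 1.0               # normalized position in [-1, 1]; 0 at the midpoint
    # Real cube root: for t < 0, t ** (1/3) would give a complex number in Python 3.
    root = math.copysign(abs(t) ** (1.0 / 3.0), t)
    return m * (root + 1.0) / 2.0       # map back to [0, M]


def build_lut(k: int = 8) -> list[int]:
    """Precompute the rounded output for every integer k-bit input value."""
    m = 2 ** k - 1
    return [min(m, max(0, round(selective_amplification(v, k)))) for v in range(m + 1)]


if __name__ == "__main__":
    lut = build_lut()
    for v in (0, 64, 127, 128, 192, 255):
        print(v, "->", lut[v])
```

For 8-bit images, 0 and 255 map to themselves and the midpoint 127.5 is fixed, while inputs of 127 and 128 map to about 107 and 148 respectively. The derivative of (8) is unbounded at the midpoint, so values just either side of it are pushed apart sharply. Precomputing a 256-entry table is a common way to apply such a point transform cheaply to every pixel.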


Fig.2. A visualization of the Selective Amplification Algorithm’s effect on images during pre-processing

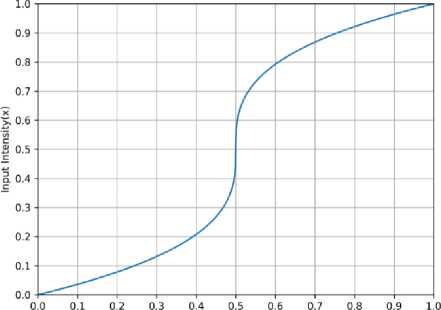

Fig.3. (a) are the images that contain a fire within the frame. (b) are the corresponding pre-processed versions of (a). (c) are the images without fire in the frame. (d) are the corresponding pre-processed versions of (c).

In Fig.3, (a) contains instances of an outbreak of fire and (b) contains the corresponding pre-processed images, while (c) and (d) show the same for scenes without fire. The figure shows that the algorithm depicted in Fig.2 enhances the input images and filters much of the unnecessary information out of them before the model is trained, complementing existing methods of detection.
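According to the training description, the images are converted to HSV and only the Value (brightness) channel is transformed. The per-pixel sketch below shows that idea with Python's standard `colorsys` module. The helper names are hypothetical, and a real pipeline would vectorize this step or apply a lookup table rather than loop in pure Python.

```python
import colorsys
import math


def amplify_unit(v: float) -> float:
    """Eq. (5) on a normalized intensity v in [0, 1], using the real cube root."""
    t = 2.0 * v - 1.0
    return (math.copysign(abs(t) ** (1.0 / 3.0), t) + 1.0) / 2.0


def amplify_pixel(r: int, g: int, b: int) -> tuple:
    """Convert one 8-bit RGB pixel to HSV, amplify only V, and convert back."""
    h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
    # Hue and saturation are left untouched; only brightness is remapped.
    r2, g2, b2 = colorsys.hsv_to_rgb(h, s, amplify_unit(v))
    return tuple(round(c * 255.0) for c in (r2, g2, b2))


def amplify_image(pixels: list) -> list:
    """Apply amplify_pixel to an image stored as rows of (r, g, b) tuples."""
    return [[amplify_pixel(*px) for px in row] for row in pixels]


if __name__ == "__main__":
    print(amplify_image([[(60, 60, 60), (200, 120, 30), (255, 255, 255)]]))
```

With hue and saturation fixed, all three RGB channels of a pixel scale by the same factor, so a dim grey pixel is darkened and a moderately bright orange one is brightened without its color shifting.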

3.2. Network Architecture:

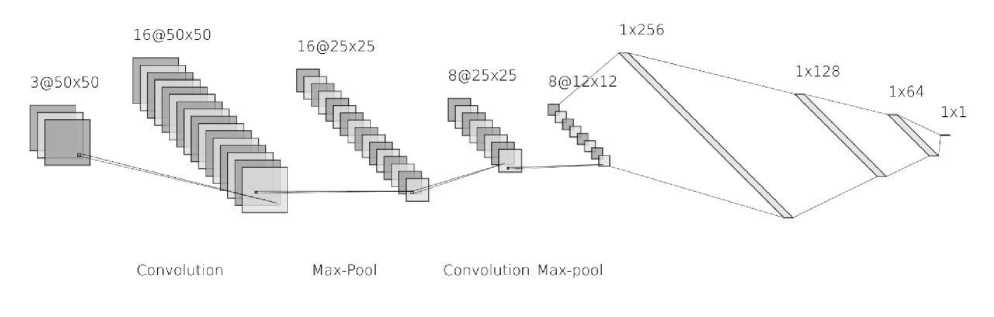

Fig.4. The network architecture of the Selective Amplification-Convolutional Neural Network (SA-CNN)

The network architecture is illustrated in Fig.4. This network is a modified version of the classic LeNet-5 architecture proposed by [15]. The lightweight network can be divided into two conceptual blocks: the convolution block and the deep neural network block. The convolution block consists of two convolutional layers, each followed by a sigmoid activation and a max-pooling layer. The convolutional layers use a typical 3x3 kernel with a stride of 1, and the output of the convolutional layers is padded with the adjacent pixel values. The max-pooling layers have a 2x2 pool window and a stride of 2; they summarize neighboring features and halve each spatial dimension of the feature map. The final feature maps are flattened and passed to the deep neural network block, which consists of 3 dense layers of sizes 128, 64, and 1, the output of the final layer being the binary prediction for the image. The convolution block specifically extracts the features characteristic of fires, a step the network needs in order to classify the image after the selective amplification process. The extracted features are then processed by the deep neural network block, which reduces their dimensionality step by step. This step-wise reduction lessens the effect of an information bottleneck and provides reliable predictions. A small, lightweight network is purposely selected to reduce the time taken for both training and prediction.
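The description gives kernel sizes, strides, pooling, and dense-layer widths but not the input resolution or the number of filters per convolutional layer. The shape and parameter count below therefore assumes, purely for illustration, a 64×64 RGB input, 6 and 16 filters (the counts used by the first two convolutional layers of the original LeNet-5), and "same" padding so that each convolution preserves spatial size. These are hypothetical values, not the authors' configuration.

```python
def conv3x3_same(shape, filters):
    """3x3 convolution, stride 1, 'same' padding: height/width unchanged."""
    h, w, c_in = shape
    params = (3 * 3 * c_in + 1) * filters  # kernel weights + one bias per filter
    return (h, w, filters), params


def max_pool_2x2(shape):
    """2x2 max-pooling with stride 2: each spatial dimension halved (floor)."""
    h, w, c = shape
    return ((h - 2) // 2 + 1, (w - 2) // 2 + 1, c), 0


def dense(n_in, units):
    """Fully connected layer: weights + one bias per unit."""
    return units, (n_in + 1) * units


def summarize(input_shape=(64, 64, 3), filters=(6, 16), units=(128, 64, 1)):
    """Return (layer name, output shape, parameter count) rows and the total.

    input_shape and filters are hypothetical; the text does not specify them.
    """
    rows, total, shape = [], 0, input_shape
    for f in filters:
        shape, p = conv3x3_same(shape, f)
        rows.append((f"conv3x3-{f} + sigmoid", shape, p))
        total += p
        shape, p = max_pool_2x2(shape)
        rows.append(("maxpool2x2", shape, p))
    n = shape[0] * shape[1] * shape[2]
    rows.append(("flatten", (n,), 0))
    for u in units:
        n, p = dense(n, u)
        rows.append((f"dense-{u}", (n,), p))
        total += p
    return rows, total


if __name__ == "__main__":
    rows, total = summarize()
    for name, shape, params in rows:
        print(f"{name:22s} {str(shape):16s} {params:>8d}")
    print("total parameters:", total)
```

Under these assumptions the network has about 534k parameters, roughly 524k of which sit in the first dense layer. The flattened feature-map size, and hence the unstated input resolution, therefore dominates the model's size far more than the convolutional layers do.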

3.3. Training of Neural Network on Pre-processed Dataset:

The model is trained on images of fire and on negative images, i.e., images that do not contain fire. The fire images feature fires of various origins, colors, sizes, and natures; this wide variety is intended to train the model to recognize all kinds of fire. The negative images contain light sources other than fire, including bulbs, lamps, and outdoor ambient light. The greater the variety of light sources, the better the classifier becomes at avoiding false positives. Examples of the pre-processed images are illustrated in Fig.3. The images are converted to the HSV (Hue-Saturation-Value) color space, and the Value component, which represents brightness, is transformed using the Selective Amplification function. The model is then trained on the resulting images. The same transformation is applied at prediction time, before an image is passed through the model.

3.4. Augmentation of training data:

Data augmentation is a crucial part of the fire detection process, because a fire can be of any size and may originate from any direction; during early testing, simply training our model on images of fires breaking out at ground level proved insufficient. Augmenting the images by rotating, shearing, and zooming them provides the required variety. Augmentation also significantly increases the number of training images, since the same image, once rotated or zoomed into, provides a ‘new image’ for the neural network to train on. In this way the dataset is expanded and the model is trained to be more accurate across more diverse use cases and scenarios.

4. Results and Discussion

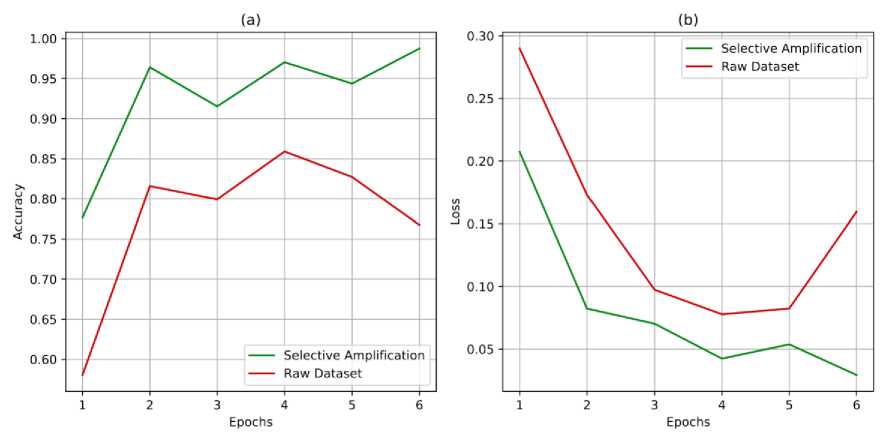

Fig.5. Performance comparison between the model trained on the dataset pre-processed using the proposed algorithm and the model trained on the raw dataset. (a) Model accuracy comparison. (b) Model loss comparison.

Table 1. Comparing the performance of both models for each epoch.

| Epoch | With SA: Loss | With SA: Accuracy (%) | Without SA: Loss | Without SA: Accuracy (%) |
|---|---|---|---|---|
| 1 | 0.2073 | 77.66 | 0.2898 | 58.04 |
| 2 | 0.0823 | 96.42 | 0.1729 | 81.58 |
| 3 | 0.0703 | 91.53 | 0.0974 | 79.93 |
| 4 | 0.0424 | 97.04 | 0.0778 | 85.90 |
| 5 | 0.0538 | 94.37 | 0.0824 | 82.72 |
| 6 | 0.0293 | 98.75 | 0.1596 | 76.75 |
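The 12.85 figure quoted in the abstract and conclusion can be reproduced from Table 1 as the gap between the best epoch of each run (98.75% at epoch 6 versus 85.90% at epoch 4). It is a difference in percentage points; expressed as a relative increase over the baseline's best accuracy it would be about 15%. The small script below (helper names are illustrative) recomputes this gap, the gap at the final epoch, and the number of epochs at which the SA-CNN leads.

```python
# Validation accuracy (%) per epoch, transcribed from Table 1.
ACC_WITH_SA = [77.66, 96.42, 91.53, 97.04, 94.37, 98.75]
ACC_WITHOUT_SA = [58.04, 81.58, 79.93, 85.90, 82.72, 76.75]


def best_case_gain(with_sa, without_sa):
    """Best epoch of one run minus best epoch of the other, in percentage points."""
    return max(with_sa) - max(without_sa)


def final_epoch_gain(with_sa, without_sa):
    """Gap at the last recorded epoch, in percentage points."""
    return with_sa[-1] - without_sa[-1]


def epochs_won(with_sa, without_sa):
    """Number of epochs at which the first run has the higher accuracy."""
    return sum(a > b for a, b in zip(with_sa, without_sa))


if __name__ == "__main__":
    print(f"best-case gain:   {best_case_gain(ACC_WITH_SA, ACC_WITHOUT_SA):.2f} pp")
    print(f"final-epoch gain: {final_epoch_gain(ACC_WITH_SA, ACC_WITHOUT_SA):.2f} pp")
    print(f"epochs won: {epochs_won(ACC_WITH_SA, ACC_WITHOUT_SA)} of {len(ACC_WITH_SA)}")
```

Running it reports a best-case gain of 12.85 points, a final-epoch gain of 22.00 points, and an SA-CNN lead at all 6 epochs.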

The performance of the two models, one trained on the dataset pre-processed using the proposed Selective Amplification algorithm and the other trained directly on the raw dataset images, is compared. Both models are trained using the Adam optimizer with a binary cross-entropy loss. Both were trained for 6 epochs and their loss and accuracy were recorded; the results are listed in Table 1 and plotted in Fig.5. For the proposed Selective Amplification algorithm to be deemed a viable pre-processing algorithm, the proposed model should achieve a higher accuracy and a lower loss than the baseline. The dataset was split into training and validation sets, and both models are evaluated on the same validation set so that the comparison is fair. Both models were trained in the same setup under similar system conditions, on an NVIDIA RTX 2070 GPU, and were built using TensorFlow 2.0.
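The two quantities tracked per epoch, binary cross-entropy loss and accuracy, can be written out as below. This is a minimal sketch for illustration; the clipping constant `eps` and the 0.5 decision threshold are assumptions, not settings reported by the authors or taken from TensorFlow's internals.

```python
import math


def binary_cross_entropy(y_true, y_prob, eps=1e-7):
    """Mean BCE: -[y*log(p) + (1-y)*log(1-p)], with p clipped to avoid log(0)."""
    total = 0.0
    for y, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1.0 - eps)
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(y_true)


def binary_accuracy(y_true, y_prob, threshold=0.5):
    """Fraction of images whose thresholded prediction matches the label."""
    correct = sum((p > threshold) == bool(y) for y, p in zip(y_true, y_prob))
    return correct / len(y_true)


if __name__ == "__main__":
    labels = [1, 1, 0, 0]            # 1 = fire, 0 = no fire
    probs = [0.9, 0.6, 0.2, 0.4]     # sigmoid outputs of the final dense layer
    print(binary_cross_entropy(labels, probs), binary_accuracy(labels, probs))
```

The two metrics can move independently: a model can classify every image correctly yet still have a noticeable loss if its probabilities sit close to the threshold, which is why both are reported in Table 1.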

It should be noted that the loss and accuracy values plotted in Fig.5 and listed in Table 1 are the validation loss and accuracy of each model after each successive epoch. At every epoch, the model trained on raw images has both a higher loss and a lower accuracy than the SA-CNN. This indicates that applying the pre-processing algorithm both to the training data and to new images at validation time yields higher accuracy for the same training budget. The metrics also show that both models train broadly as expected, with accuracy rising and loss falling over the early epochs. In addition, the SA-CNN tends towards better performance at the end of training: while the accuracy of the traditional model plateaus and then drops in epoch 6 (with its loss rising to 0.1596), the SA-CNN reaches its best accuracy in the final epoch and could potentially improve further with longer training.

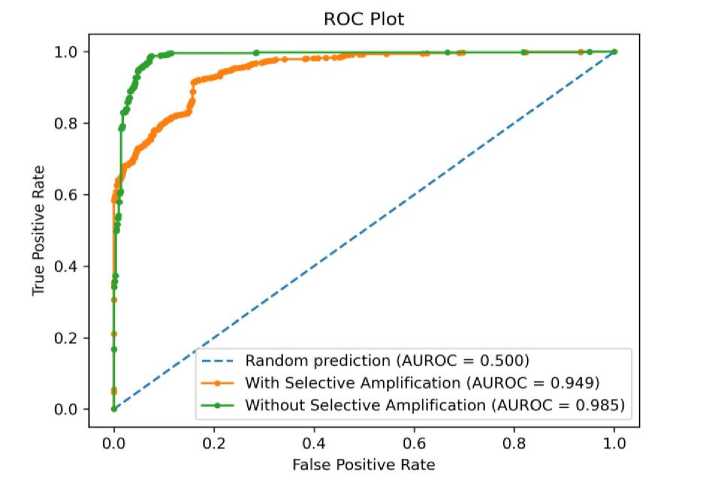

Fig.6. Model metrics comparison for Model 1 (Orange) and Model 2 (Blue). (a) Model accuracy comparison. (b) Model loss comparison.

Table 2. Confusion matrices depicting the counts of true and false positives and negatives obtained while testing both CNNs (without and with the proposed Selective Amplification applied beforehand, respectively).

| Actual condition | Without SA: Fire predicted | Without SA: No fire predicted | With SA: Fire predicted | With SA: No fire predicted |
|---|---|---|---|---|
| Fire | 1007 | 369 | 1336 | 40 |
| No Fire | 42 | 741 | 55 | 728 |
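Standard rates follow directly from the counts in Table 2, with "Fire" treated as the positive class. The helper below is a small illustrative sketch that recomputes them for both models.

```python
def confusion_metrics(tp, fn, fp, tn):
    """Common rates from a binary confusion matrix (positive class = fire)."""
    total = tp + fn + fp + tn
    return {
        "accuracy": (tp + tn) / total,
        "recall": tp / (tp + fn),                 # fires that were detected
        "precision": tp / (tp + fp),              # alarms that were real fires
        "false_positive_rate": fp / (fp + tn),    # non-fire scenes flagged as fire
        "false_negative_rate": fn / (tp + fn),    # fires that were missed
    }


# Counts transcribed from Table 2.
WITHOUT_SA = confusion_metrics(tp=1007, fn=369, fp=42, tn=741)
WITH_SA = confusion_metrics(tp=1336, fn=40, fp=55, tn=728)

if __name__ == "__main__":
    for name in WITHOUT_SA:
        print(f"{name:20s} {WITHOUT_SA[name]:.4f} -> {WITH_SA[name]:.4f}")
```

On the 2159 test images this gives an accuracy of about 81.0% without and 95.6% with Selective Amplification, and a recall of about 73.2% versus 97.1%. The false-positive rate rises slightly, from about 5.4% to 7.0%, while precision stays near 96% for both models. The gain therefore comes almost entirely from missing far fewer fires.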

Upon examination of the ROC curve in Fig.6, it can be deduced that the model using the proposed Selective Amplification algorithm outperforms the model trained on the raw dataset. The ROC curve of the SA-CNN lies closer to the ideal top-left (0, 1) corner (zero false-positive rate, full true-positive rate) and encloses a larger area under the curve than that of the traditional CNN, signifying more reliable fire detection. The confusion matrices in Table 2 show the actual results obtained on a manually collected sample dataset. The fire images in this dataset vary in the color, shape, and orientation of the fire, while the non-fire images contain outdoor and indoor lighting with light sources of varied colors and shapes. With Selective Amplification, true positives rise from 1007 to 1336 and false negatives fall from 369 to 40, sharply reducing the risk of a fire going unnoticed. This comes at the cost of a small increase in false alarms, from 42 to 55 false positives (728 rather than 741 true negatives).


5. Conclusion

In this paper, a novel approach to improving existing methods of real-time fire detection using surveillance footage is presented. The improvement is achieved with the proposed ‘Selective Amplification’ algorithm, which is used to pre-process images before they are used as training and testing data for a Convolutional Neural Network (CNN). The Selective Amplification function is based on the principle of making “brighter pixels brighter and darker pixels darker”. It is a continuous function that is odd about the midpoint of the intensity range, with a first-order derivative that increases up to the point of inflection and decreases after it. The function in this work is derived from a simple cubic equation; versions based on other odd-degree polynomials are expected to yield similar results. This type of function makes the illumination, one of the most important features of a fire, prominent and easily distinguishable by feature extraction methods such as CNNs. Selective Amplification extends existing feature extraction work in fire detection systems and can potentially increase the performance of these algorithms. The merits of detecting an outbreak of fire in real time through surveillance footage are discussed and evaluated, and the development of and intuition behind the proposed algorithm are elaborated upon, along with the CNN architecture used to implement it. The results show that the CNN trained and tested on data pre-processed using Selective Amplification achieves a best-case validation accuracy 12.85 percentage points higher than the model without it (98.75% versus 85.90%). Dynamically assigning the intensity values used by the function could improve performance across a wider spectrum of scenarios, and the function could be further improved by using other base functions to obtain a transformation curve suited to the scenario.

These results suggest that the proposed algorithm can be used to augment most existing methods of real-time fire detection from surveillance footage using Computer Vision. Selective Amplification can be used as an additional layer in the feature extraction stage of existing real-time fire detection systems, potentially increasing their reliability and performance. Alternatively, it can be used in marine and forest surveillance systems to detect intruders based on luminescent activity.

References

[1] D. Pritam, J. H. Dewan, Detection of fire using image processing techniques with LUV color space, in: 2017 2nd International Conference for Convergence in Technology (I2CT), IEEE, 2017, pp. 1158–1162.

[2] Y. Chunyu, Z. Yongming, F. Jun, W. Jinjun, Texture analysis of smoke for real-time fire detection, in: 2009 Second International Workshop on Computer Science and Engineering, volume 2, IEEE, 2009, pp. 511–515.

[3] S.-Y. Jeong, W.-H. Kim, Thermal imaging fire detection algorithm with minimal false detection, KSII Transactions on Internet and Information Systems (TIIS) 14 (2020) 2156–2170.

[4] Y. Cai, Y. Guo, Y. Li, H. Li, J. Liu, Fire detection method based on improved deep convolution neural network, in: Proceedings of the 2019 8th International Conference on Computing and Pattern Recognition, 2019, pp. 466–470.

[5] P. V. K. Borges, E. Izquierdo, A probabilistic approach for vision-based fire detection in videos, IEEE Transactions on Circuits and Systems for Video Technology 20 (2010) 721–731.

[6] N. M. Dung, S. Ro, Algorithm for fire detection using a camera surveillance system, in: Proceedings of the 2018 International Conference on Image and Graphics Processing, 2018, pp. 38–42.

[7] B. U. Töreyin, Y. Dedeoğlu, U. Güdükbay, A. E. Cetin, Computer vision-based method for real-time fire and flame detection, Pattern Recognition Letters 27 (2006) 49–58.

[8] S. Vijayalakshmi, S. Muruganand, Fire alarm based on spatial temporal analysis of fire in video, in: 2018 2nd International Conference on Inventive Systems and Control (ICISC), IEEE, 2018, pp. 104–109.

[9] H. Kim, J. Park, H. Park, J. Paik, et al., Fire flame detection based on color model and motion estimation, in: 2016 IEEE International Conference on Consumer Electronics-Asia (ICCE-Asia), IEEE, 2016, pp. 1–2.

[10] C. E. Premal, S. Vinsley, Image processing based forest fire detection using YCbCr colour model, in: 2014 International Conference on Circuits, Power and Computing Technologies [ICCPCT-2014], IEEE, 2014, pp. 1229–1237.

[11] H. Wang, Z. Pan, Z. Zhang, H. Song, S. Zhang, J. Zhang, Deep learning based fire detection system for surveillance videos, in: International Conference on Intelligent Robotics and Applications, Springer, 2019, pp. 318–328.

[12] K. Muhammad, J. Ahmad, I. Mehmood, S. Rho, S. W. Baik, Convolutional neural networks based fire detection in surveillance videos, IEEE Access 6 (2018) 18174–18183.

[13] S. Jin, X. Lu, Vision-based forest fire detection using machine learning, in: Proceedings of the 3rd International Conference on Computer Science and Application Engineering, 2019, pp. 1–6.

[14] T. Qiu, Y. Yan, G. Lu, An autoadaptive edge-detection algorithm for flame and fire image processing, IEEE Transactions on Instrumentation and Measurement 61 (2011) 1486–1493.

[15] Y. LeCun, et al., LeNet-5, convolutional neural networks, URL: http://yann.lecun.com/exdb/lenet 20 (2015) 14.

[16] K. C. Manjunatha, H. S. Mohana, P. A. Vijaya, Implementation of computer vision based industrial fire safety automation by using neuro-fuzzy algorithms, International Journal of Information Technology and Computer Science (IJITCS) 7 (4) (2015) 14–27. DOI: 10.5815/ijitcs.2015.04.0.

[17] R. H. Khan, Z. A. Bhuiyan, S. S. Rahman, S. Khondaker, A smart and cost-effective fire detection system for developing country: an IoT based approach, International Journal of Information Engineering and Electronic Business (IJIEEB) 11 (3) (2019) 16–24. DOI: 10.5815/ijieeb.2019.03.03.

[18] Y. Shi, A probability model for occurrences of large forest fires, International Journal of Engineering and Manufacturing (IJEM) 1 (1) (2012) 1–7.