Copy move forgery detection using key point localized super pixel based on texture features

Authors: C. Rajalakshmi, Dr. M. Germanus Alex, Dr. R. Balasubramanian

Journal: Computer Optics

Section: Image processing, pattern recognition

Issue: Vol. 43, No. 2, 2019.


The most important challenge in image forensics is to devise a forgery detection method that can detect a copied region even when it has undergone rotation, scaling, reflection, compression, or a combination of these. The traditional SIFT method does not yield good enough results, because its matching accuracy is limited. To improve accuracy in copy move forgery detection, this paper proposes a forgery detection method specifically for copy move attacks using Key Point Localized Super Pixel (KLSP). The proposed approach combines Super Pixel Segmentation using Lazy Random Walk (LRW) with Scale Invariant Feature Transform (SIFT) based key point extraction. The experimental results indicate that the proposed KLSP approach performs better than previous well-known approaches.

Keywords: copy move, segmentation, SIFT, KLSP

Short URL: https://sciup.org/140243289

IDR: 140243289 | DOI: 10.18287/2412-6179-2019-43-2-270-276


Photo manipulation involves transforming or altering a photograph using various techniques to achieve a desired result. Image tampering is a digital art that requires an understanding of image properties and good visual creativity. Images may be tampered with for various reasons, either for the fun of creating incredible photos or to falsify evidence. Cheaply available software makes it possible to create photorealistic computer graphics, so that viewers may be unable to distinguish tampered images from genuine ones. Among the various forgery techniques, copy move forgery is prominent. Although copy move forgeries have already received a lot of attention and inspired a large number of papers, detecting this kind of attack after scaling, rotation, reflection, or a combination of these remains a challenging problem. When a region is copied and pasted, a geometric transformation is often applied to it. If copy move tampering has taken place, it is therefore essential to recover the parameters of the transformation used, such as horizontal and vertical translation, scaling factors and rotation angle. Technology is therefore needed to identify image tampering. In this paper, copy move forgery in digital images is detected by the proposed KLSP (LRW+SIFT) method.

1. Related work

There are two main classes of tampering detection algorithms: block-wise division algorithms and key point extraction algorithms [1]. The method based on the DCT (Discrete Cosine Transform), which is used to describe blocks [1], is the best example of a block-wise division algorithm. SIFT [2] and Speeded Up Robust Features (SURF) [3] are the most widely used key point based algorithms. In [4] the authors proposed a novel methodology based on SIFT that makes it possible both to determine whether a copy move attack has occurred and to recover the geometric transformation used to perform the cloning. They showed that the technique is effective with respect to composite processing and multiple cloning. Their future work was to be dedicated mainly to improving the detection phase for cloned image patches with highly uniform texture, and to extending the clustering phase by means of an image segmentation procedure.

Computer Optics 2019; 43(2): 270-276.

To find the duplicated parts of an image, regions of uniform pixels have to be identified first. Super pixels, which group neighbouring uniform pixels into compact regions, have been widely used in image segmentation [5]. In [5] the authors presented a novel super pixel segmentation approach using the Lazy Random Walk (LRW) algorithm, which segments the input image into small compact regions with homogeneous appearance. This paper proposes a new detection method that integrates super pixel segmentation using LRW with key point extraction using SIFT. The rest of the paper is structured as follows. Section 2 reviews the features of super pixel segmentation and of the SIFT algorithm, Section 3 describes the proposed KLSP method, Section 4 presents the experimental results, and Section 5 compares them with conventional methods. Finally, conclusions are drawn in the final section.

2. Background study

2.1. Features of super pixel segmentation

A super pixel is an image patch that is better aligned with intensity edges than a rectangular patch. Super pixels can be extracted with any segmentation algorithm. Super pixel segmentation algorithms are roughly categorized as watershed-based, density-based, contour evolution, path-based, clustering-based and energy optimization methods [6–13]. In [5] the authors presented a super pixel segmentation approach using LRW and an energy optimization algorithm, and demonstrated that it achieves better performance than previous well-known approaches. Super pixel segmentation using LRW begins by initializing the seed positions and running the LRW algorithm on the input image to obtain the probability of each pixel. The boundaries of the initial super pixels are then obtained from these probabilities and the commute time. In the proposed method, the input image is segmented with the LRW algorithm, which was shown experimentally in [5] to outperform previous super pixel methods. The segmented image patches are then compared on the basis of SIFT key point occurrence to find copy move forgery.

2.2. Features of SIFT algorithm

In [14] the authors reviewed existing tampering detection techniques and concluded that SIFT-based forensic techniques are the best for copy move forgery detection. Most algorithms proposed in the literature for detecting and describing local visual features require two steps. The first is the detection step, in which interest points are localized; in the second step, robust local descriptors are built so as to be invariant with respect to orientation and scale and, to some extent, affine transformations. In [4], [14] and [15] the authors surveyed and confirmed that SIFT features are a good solution because of their good performance. The SIFT method can be summarized in four steps:

1. Scale space extrema detection.
2. Key point localization.
3. Assignment of one (or more) canonical orientations.
4. Generation of key point descriptors.

The next sections describe the proposed method, a hybrid of image segmentation using LRW and the SIFT method, named the Key Point Localized Super Pixel (KLSP) method.

3. Proposed key point localized super pixels (KLSP) method

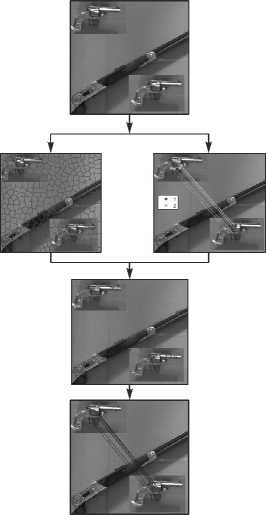

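The proposed KLSP method is a hybrid of LRW super pixel segmentation and SIFT. It proceeds in three stages: super pixel segmentation by LRW, SIFT-based key point detection, and texture feature extraction of the key point localized super pixels. The outline of the proposed method is shown in Fig. 1.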

Fig. 1. The outline of the proposed method (flowchart stages: input image; super pixel segmentation by LRW; SIFT-based key point detection)

3.1. Super pixel segmentation by LRW

3.1.1. Segmentation algorithm 1

The input image is segmented into small compact regions with homogeneous appearance using LRW-based super pixel initialization and optimization. The first step obtains the initial super pixels with the LRW algorithm, starting from the initial seed points. In the second step, the initial super pixels are optimized with a new energy function to improve their quality.

Input: the input image I(x_i) and the number of initial seeds K.

Step 1: Define the adjacency matrix $W = [w_{ij}]_{N \times N}$, where N is the number of pixels and $w_{ij}$ is the weight of the edge between neighbouring pixels $x_i$ and $x_j$.

Step 2: Construct the matrix $S = D^{-1/2} W D^{-1/2}$, where D is the diagonal degree matrix with $d_{ii} = \sum_j w_{ij}$.

Step 3: Compute $f_{l_k} = (I - \alpha S)^{-1} y_{l_k}$,

where I is the N × N identity matrix (not the input image), $f_{l_k}$ is an N × 1 vector and $y_{l_k}$ is the N × 1 column vector $[0, \ldots, 0, 1, 0, \ldots, 0]^T$ whose elements are all zero except the one at the seed pixel, which is 1. α is a control parameter in the range (0, 1).

Step 4: Compute $R(x_i) = \arg\min_{l_k} CT(c_{l_k}, x_i)$ and assign the label $R(x_i)$ to each pixel $x_i$. CT denotes the normalized commute time, which is small when a pixel is strongly connected to a center, and $c_{l_k}$ denotes the center of the super pixel with label $l_k$. The labels $R(x_i)$ define the boundaries of the super pixels.

Step 5: Obtain the super pixels $S_{l_k} = \{x_i \mid R(x_i) = l_k\}$, $i = 1, \ldots, N$, $k = 1, \ldots, K$.

Output: the initial super pixels $S_{l_k}$.
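Steps 1 to 5 can be tried out on a toy image. The following NumPy sketch is illustrative only and rests on assumptions that the paper does not state: the edge weights $w_{ij}$ are Gaussian in the gray-level difference between 4-connected neighbours (with a hypothetical width `sigma`), and, instead of computing the normalized commute time of [5], each pixel simply takes the label of the seed with the largest LRW score $f_{l_k}$. The function name `lrw_initial_superpixels` is hypothetical.

```python
import numpy as np


def lrw_initial_superpixels(img, seeds, alpha=0.99, sigma=0.1):
    """Simplified sketch of Steps 1-5 of Algorithm 1 on a small grayscale image.

    img   : 2-D float array (gray values in [0, 1])
    seeds : list of (row, col) seed positions, one per initial super pixel
    Assumptions (not from the paper): 4-connected Gaussian edge weights, and each
    pixel is labeled with the seed whose LRW score f is largest, used here as a
    stand-in for the minimum normalized commute time.
    """
    h, w = img.shape
    n = h * w
    g = img.ravel()
    # Step 1: N x N adjacency matrix with Gaussian weights between 4-neighbours
    W = np.zeros((n, n))
    for r in range(h):
        for c in range(w):
            i = r * w + c
            for dr, dc in ((0, 1), (1, 0)):
                rr, cc = r + dr, c + dc
                if rr < h and cc < w:
                    j = rr * w + cc
                    W[i, j] = W[j, i] = np.exp(-((g[i] - g[j]) ** 2) / (2 * sigma**2))
    # Step 2: S = D^{-1/2} W D^{-1/2}, with D the diagonal degree matrix
    d_inv_sqrt = 1.0 / np.sqrt(W.sum(axis=1))
    S = d_inv_sqrt[:, None] * W * d_inv_sqrt[None, :]
    # Step 3: f_lk = (I - alpha S)^{-1} y_lk, solved for all seeds at once
    Y = np.zeros((n, len(seeds)))
    for k, (r, c) in enumerate(seeds):
        Y[r * w + c, k] = 1.0
    F = np.linalg.solve(np.eye(n) - alpha * S, Y)
    # Steps 4-5: label every pixel and reshape the labels back to image form
    return F.argmax(axis=1).reshape(h, w)
```

Dense matrices keep the sketch short; because W has only a few nonzero entries per row, a practical implementation would use sparse matrices and a sparse solver.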

3.1.2. Algorithm 2

The initial super pixels are improved by minimizing the following energy function:

$$E = \sum_{l} \left( \mathrm{Area}(S_l) - \mathrm{Area}(\bar{S}) \right)^2 + \sum_{l} \sum_{x \in S_l} W_x \, CT(c_l, x)^2, \qquad (1)$$

where $\mathrm{Area}(S_l)$ is the area of super pixel $S_l$, $\mathrm{Area}(\bar{S})$ is the average area of the super pixels, and $W_x$ is a penalty function for super pixel label inconsistency.

Input: the initial super pixels S and the user-defined number of super pixels $N_{SP}$.

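Step 1: Relocate the center of each super pixel to

$$c_l^{n} = \frac{\sum_{x \in S_l} W_x \left( CT(c_l^{n-1}, x) / \lVert x - c_l^{n-1} \rVert \right) x}{\sum_{x \in S_l} W_x \left( CT(c_l^{n-1}, x) / \lVert x - c_l^{n-1} \rVert \right)},$$

where n is the iteration number.

Fig. 2. Segmentation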

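Step 2: The energy favours splitting large super pixels with abundant texture information into smaller super pixels in the optimization stage, which makes the final super pixels more homogeneous in regions of complicated texture. We adopt the Local Binary Pattern (LBP) to measure texture information. The LBP value of pixel i is defined as

$$LBP_i = \sum_{p=0}^{q-1} s(g_p - g_i) \, 2^p, \qquad s(z) = \begin{cases} 1, & z \ge 0, \\ 0, & z < 0, \end{cases} \qquad (2)$$

where q is the number of neighbouring sample points, r is the radius of the circle centred on pixel i on which these points lie, $g_i$ is the gray value of pixel i and $g_p$ is the gray value of its p-th neighbour.

We set q = 8, r = 1.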
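Eq. (2) with q = 8 and r = 1 can be sketched in plain Python as below. As assumptions, the eight neighbours are taken from the 3 × 3 neighbourhood (the diagonal samples are the corner pixels rather than points interpolated on the unit circle), they are numbered counter-clockwise starting from the right, and border pixels reuse the nearest edge pixel; the paper specifies none of these details. `lbp_8_1` is a hypothetical name.

```python
def lbp_8_1(img):
    """LBP code of every pixel with q = 8 neighbours at radius r = 1 (Eq. (2)).

    img: list of lists of gray values. Border pixels replicate the nearest
    edge pixel (an assumption, not specified in the paper).
    """
    h, w = len(img), len(img[0])
    # neighbour offsets (dr, dc) for p = 0..7, counter-clockwise from the right
    offsets = [(0, 1), (-1, 1), (-1, 0), (-1, -1), (0, -1), (1, -1), (1, 0), (1, 1)]
    out = [[0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            g_i = img[r][c]
            code = 0
            for p, (dr, dc) in enumerate(offsets):
                rr = min(max(r + dr, 0), h - 1)
                cc = min(max(c + dc, 0), w - 1)
                if img[rr][cc] >= g_i:  # s(g_p - g_i) = 1 when g_p >= g_i
                    code |= 1 << p      # add 2^p
            out[r][c] = code
    return out
```

A flat patch gives the code 255 (all eight bits set), while a pixel brighter than all its neighbours gives 0; textured regions therefore produce a spread of codes, which is what the texture-based area below accumulates.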

Based on the LBP texture feature, the area of a super pixel is

$$\mathrm{Area}(S_l) = \sum_{i \in S_l} LBP_i, \qquad (3)$$

$$\mathrm{Area}(\bar{S}) = \frac{\sum_{i \in I} LBP_i}{N_{SP}}. \qquad (4)$$
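Eqs. (3) and (4), together with the test $\mathrm{Area}(S_l)/\mathrm{Area}(\bar{S}) \ge$ Threshold described in the next paragraph, reduce to a few sums. In this sketch the value 1.2 for Threshold is an assumption picked from the stated range (1.0, 1.4), the inputs are a label map and an LBP map of the same size, and the function names are hypothetical.

```python
def texture_areas(labels, lbp, n_sp):
    """Eqs. (3) and (4): texture-based area of every super pixel and their average."""
    areas = {}
    for lab_row, lbp_row in zip(labels, lbp):
        for lab, code in zip(lab_row, lbp_row):
            areas[lab] = areas.get(lab, 0) + code  # Area(S_l) = sum of LBP_i over S_l
    mean_area = sum(areas.values()) / n_sp      # Area(S_bar) = image-wide sum / N_SP
    return areas, mean_area


def superpixels_to_split(labels, lbp, n_sp, threshold=1.2):
    """Labels l with Area(S_l) / Area(S_bar) >= threshold (threshold value assumed)."""
    areas, mean_area = texture_areas(labels, lbp, n_sp)
    if mean_area == 0:
        return []  # no texture anywhere: nothing to split
    return sorted(l for l, a in areas.items() if a / mean_area >= threshold)
```

For example, with two super pixels whose LBP sums are 1020 and 1, the average area is 510.5 and only the first (ratio about 2.0) is selected for splitting.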

Here $\mathrm{Area}(\bar{S})$ is the average area of the super pixels and $N_{SP}$ is the user-defined number of super pixels. The area of a super pixel is large when it contains much texture information. The parameter Threshold, in the range (1.0, 1.4), controls the number of iterations of the super pixel optimization. When $\mathrm{Area}(S_l) / \mathrm{Area}(\bar{S}) \ge$ Threshold, the large super pixel is divided into smaller super pixels: Principal Component Analysis (PCA) is used to split it along the direction with the largest variation of commute time and super pixel shape. The splitting procedure yields two new super pixels, whose centers $c_{l,\mathrm{new}1}$ and $c_{l,\mathrm{new}2}$ are

$$c_{l,\mathrm{new}1} = \frac{\sum_{\{x \in S_l \mid (x - c_l) \cdot s > 0\}} W_x \left( CT(c_l, x) / \lVert x - c_l \rVert \right) x}{\sum_{\{x \in S_l \mid (x - c_l) \cdot s > 0\}} W_x \left( CT(c_l, x) / \lVert x - c_l \rVert \right)}, \qquad (5)$$

$$c_{l,\mathrm{new}2} = \frac{\sum_{\{x \in S_l \mid (x - c_l) \cdot s \le 0\}} W_x \left( CT(c_l, x) / \lVert x - c_l \rVert \right) x}{\sum_{\{x \in S_l \mid (x - c_l) \cdot s \le 0\}} W_x \left( CT(c_l, x) / \lVert x - c_l \rVert \right)}, \qquad (6)$$

where s is the principal (splitting) direction obtained by PCA.
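Eqs. (5) and (6) are a pair of weighted means over the two halves of $S_l$ separated by the line through $c_l$ perpendicular to s; the center relocation of Step 1 is the same weighted mean taken over the whole super pixel. The NumPy sketch below assumes that PCA is applied to the pixel coordinates only (the paper also mentions the variation of commute time, which is not modelled here) and that $W_x$ and $CT(c_l, x)$ are already available as arrays; `split_superpixel` is a hypothetical name.

```python
import numpy as np


def split_superpixel(coords, center, weights, ct):
    """Split one super pixel in two along its principal direction (Eqs. (5)-(6)).

    coords  : (m, 2) pixel positions x of the super pixel S_l
    center  : (2,) current center c_l
    weights : (m,) penalty weights W_x
    ct      : (m,) commute times CT(c_l, x)
    Assumption: PCA uses the pixel coordinates only.
    """
    coords = np.asarray(coords, dtype=float)
    diff = coords - np.asarray(center, dtype=float)
    # principal direction s = eigenvector of the covariance with the largest eigenvalue
    eigvals, eigvecs = np.linalg.eigh(np.cov(diff, rowvar=False))
    s = eigvecs[:, np.argmax(eigvals)]
    dist = np.linalg.norm(diff, axis=1)
    dist[dist == 0] = 1.0  # avoid dividing by zero at the center pixel (assumption)
    a = np.asarray(weights, dtype=float) * np.asarray(ct, dtype=float) / dist
    positive = diff @ s > 0  # (x - c_l) . s > 0 selects the first half

    def weighted_center(mask):
        # sum of a(x) * x over the half, divided by the sum of a(x)
        return (a[mask, None] * coords[mask]).sum(axis=0) / a[mask].sum()

    return weighted_center(positive), weighted_center(~positive)
```

With uniform weights the two new centers are simply the centroids of the two halves, for example (0, 2) and (0, 7) for a horizontal run of ten pixels centred at (0, 4.5).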

The labeled boundaries of the super pixels are then obtained from the commute time as $R(x_i) = \arg\min_{l_k} CT(c_{l_k}, x_i)$.

Step 3: Repeat Steps 1 and 2 until convergence.

Output: the final optimized super pixels.

3.2. SIFT-based key point detection

Christlein et al. [16] compared SIFT with 13 other image feature extraction methods and showed that SIFT performs more consistently and better. Therefore, in our proposed algorithm we chose SIFT to extract the feature points from each image block, and each block is characterized by the SIFT feature points extracted within it. Each block feature thus contains the irregular block region information and the extracted SIFT feature points. To summarize, for a given image I this procedure ends with a list of N key points, each of which is completely described by the following information:

X = (x, y, σ, o, f),

where (x, y) – coordinates in the image plane;

σ – scale of the key point;

o – canonical orientation;

f – final SIFT descriptor.

Following are the major stages of computation used to generate the set of image features.

I) Detection of scale space extrema

The scale space is created by repeatedly blurring the original image with Gaussian filters of increasing scale. The original image is then resized to half its size and blurred again in the same way; each set of blurred images of the same size forms an octave. Rather than computing the Laplacian of Gaussian directly, SIFT approximates it by the difference of Gaussians (DoG), obtained by subtracting a less blurred version of the image from a more blurred version of the same image, i.e. the difference between two consecutive scales.

Key points for matching are then located in the image. Such points typically lie on corners, blobs or ridges. The first step in finding them is to select the pixels of each DoG image that are a maximum or minimum compared with all their neighbours: the 8 neighbours at the same scale and the 9 neighbours in each of the two adjacent scales.
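A minimal NumPy sketch of one octave of the DoG scale space and of the 26-neighbour extremum test is shown below. The scale step k = √2 between levels is an assumption chosen for simplicity (Lowe [2] uses a base scale of 1.6, which is kept here), the Gaussian kernel is truncated at 3σ, and only a single octave is built, without downsampling; the function names are hypothetical.

```python
import numpy as np


def gaussian_blur(img, sigma):
    """Separable Gaussian blur with edge replication (kernel truncated at 3 sigma)."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-(x**2) / (2 * sigma**2))
    kernel /= kernel.sum()
    padded = np.pad(img, radius, mode="edge")
    rows = np.apply_along_axis(np.convolve, 1, padded, kernel, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, kernel, mode="valid")


def dog_octave(img, sigma0=1.6, levels=5):
    """Gaussian images at sigma0 * k**i and the differences of consecutive levels."""
    k = np.sqrt(2.0)  # scale step between levels (assumed)
    blurred = [gaussian_blur(img, sigma0 * k**i) for i in range(levels)]
    return [blurred[i + 1] - blurred[i] for i in range(levels - 1)]


def scale_space_extrema(dogs):
    """(scale, row, col) of DoG pixels larger or smaller than all 26 neighbours."""
    stack = np.stack(dogs)
    found = []
    for s in range(1, stack.shape[0] - 1):
        for r in range(1, stack.shape[1] - 1):
            for c in range(1, stack.shape[2] - 1):
                cube = stack[s - 1:s + 2, r - 1:r + 2, c - 1:c + 2].ravel()
                others = np.delete(cube, 13)  # index 13 is the centre of the 3x3x3 cube
                v = stack[s, r, c]
                if v > others.max() or v < others.min():
                    found.append((s, r, c))
    return found
```

For a bright Gaussian blob of width about 2.7 pixels, the blob centre appears as a minimum of the second DoG level, because the DoG response at the centre is strongest at the scale that best matches the blob size.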

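II) Key point localization

Key points that lie on edges must be eliminated, because the DoG responds strongly along edges even where the position along the edge is poorly determined. For this, an idea similar to the Harris corner detector is used: a 2×2 Hessian matrix H computed at the key point gives the principal curvatures, and if the ratio of the larger to the smaller principal curvature is greater than a threshold, the key point is removed.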
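The curvature ratio can be tested without computing eigenvalues: if r is the ratio of the larger to the smaller principal curvature, then $(\mathrm{Tr}\,H)^2/\mathrm{Det}\,H = (r+1)^2/r$, so a key point is kept only when $(\mathrm{Tr}\,H)^2/\mathrm{Det}\,H < (r+1)^2/r$. The sketch below estimates H from finite differences of the DoG image and uses r = 10, the value suggested by Lowe [2]; the function name `passes_edge_test` is hypothetical.

```python
def passes_edge_test(dog, r, c, edge_ratio=10.0):
    """True if the key point at (r, c) is not edge-like.

    dog: 2-D list of DoG values; (r, c) must not lie on the image border.
    """
    d = dog
    # finite-difference estimates of the second derivatives (entries of H)
    dxx = d[r][c + 1] + d[r][c - 1] - 2 * d[r][c]
    dyy = d[r + 1][c] + d[r - 1][c] - 2 * d[r][c]
    dxy = (d[r + 1][c + 1] - d[r + 1][c - 1] - d[r - 1][c + 1] + d[r - 1][c - 1]) / 4.0
    trace = dxx + dyy
    det = dxx * dyy - dxy * dxy
    if det <= 0:  # curvatures of opposite sign (or zero): reject
        return False
    return trace * trace / det < (edge_ratio + 1) ** 2 / edge_ratio
```

An isolated peak (equal curvature in both directions) gives a ratio of 4 and is kept, whereas a ridge whose curvature across it is 20 times the curvature along it gives about 22 and is rejected.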

III) Orientation assignment

The gradient magnitude and orientation are calculated for all pixels around the key point and accumulated into an orientation histogram. The highest peak of the histogram gives the orientation of the key point, and any other peak higher than 80% of the highest peak is also considered, creating a key point with that orientation.
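The orientation histogram step can be sketched as follows. Following Lowe [2], it uses 36 bins of 10°; as simplifications, the magnitudes are not Gaussian-weighted, peak positions are not interpolated (each orientation is reported as the lower edge of its bin), and the window radius of 3 pixels is an assumption. The function name is hypothetical.

```python
import math


def dominant_orientations(img, r, c, radius=3, bins=36, peak_ratio=0.8):
    """Orientations (degrees, lower bin edge) of histogram peaks around (r, c).

    img: 2-D list of gray values; the window plus one pixel must fit in the image.
    """
    hist = [0.0] * bins
    width = 360.0 / bins
    for y in range(r - radius, r + radius + 1):
        for x in range(c - radius, c + radius + 1):
            dx = img[y][x + 1] - img[y][x - 1]
            dy = img[y + 1][x] - img[y - 1][x]
            angle = math.degrees(math.atan2(dy, dx)) % 360.0
            hist[int(angle // width) % bins] += math.hypot(dx, dy)  # magnitude-weighted vote
    top = max(hist)
    result = []
    for b, v in enumerate(hist):
        is_peak = v >= hist[b - 1] and v >= hist[(b + 1) % bins]  # circular neighbours
        if v > 0 and is_peak and v >= peak_ratio * top:
            result.append(b * width)
    return result
```

On a horizontal intensity ramp every gradient points along 0°, so a single orientation of 0° is returned; a diagonal ramp gives the bin starting at 40° (gradients at 45°).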

IV) Key point descriptor

After the key points are detected, the relative orientation and magnitude of the gradients are computed in a 16×16 neighbourhood around each key point. This 16×16 window is divided into sixteen 4×4 windows, and within each 4×4 window the gradient magnitudes are accumulated into an 8-bin orientation histogram, giving a 4 × 4 × 8 = 128-element descriptor. These descriptors are then used to match the key points.
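A simplified version of the 128-element descriptor is sketched below with NumPy. It omits parts of Lowe's full method [2] (rotating the window to the canonical orientation, Gaussian weighting and trilinear interpolation between bins) but keeps the normalize, clip at 0.2, renormalize step; the key point is assumed to lie at least 9 pixels from the image border, and the function name is hypothetical.

```python
import numpy as np


def sift_like_descriptor(img, r, c):
    """128-D descriptor: 16x16 window -> 4x4 cells x 8 orientation bins."""
    img = np.asarray(img, dtype=float)
    patch = img[r - 9:r + 9, c - 9:c + 9]       # 18x18 so the 16x16 core has neighbours
    gy, gx = np.gradient(patch)                  # derivatives along rows, columns
    gy, gx = gy[1:17, 1:17], gx[1:17, 1:17]      # keep the central 16x16 window
    magnitude = np.hypot(gx, gy)
    angle = np.degrees(np.arctan2(gy, gx)) % 360.0
    bin_index = (angle // 45.0).astype(int) % 8  # 8 orientation bins of 45 degrees
    cells = []
    for i in range(0, 16, 4):
        for j in range(0, 16, 4):
            b = bin_index[i:i + 4, j:j + 4].ravel()
            m = magnitude[i:i + 4, j:j + 4].ravel()
            cells.append(np.bincount(b, weights=m, minlength=8))
    v = np.concatenate(cells)
    norm = np.linalg.norm(v)
    if norm > 0:
        v = np.minimum(v / norm, 0.2)  # limit the influence of large gradients
        v = v / np.linalg.norm(v)
    return v
```

For a horizontal ramp, each of the 16 cells puts all its weight in the 0° bin, so the unit-length descriptor has exactly 16 nonzero entries.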

Fig. 3. Feature points (FPs) of the image

V) Key point matching

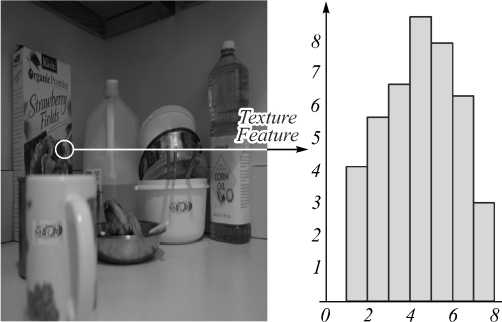
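The nearest-neighbour matching used in this step can be sketched as follows. Two substitutions are made and should be read as assumptions: an exhaustive search replaces the approximate Best Bin First (BBF) search, and a match is accepted only when the nearest distance is below 0.8 times the second-nearest distance (Lowe's ratio test [2]). Because the duplicated regions of a copy move forgery lie in the same image, each descriptor is matched against the other descriptors of that image. `match_keypoints` is a hypothetical name.

```python
import math


def match_keypoints(descriptors, ratio=0.8):
    """Index pairs (i, j), i < j, of descriptors that pass the distance ratio test."""
    matches = set()
    n = len(descriptors)
    for i in range(n):
        # Euclidean distances to every other descriptor, nearest first
        dists = sorted(
            (math.dist(descriptors[i], descriptors[j]), j) for j in range(n) if j != i
        )
        if len(dists) >= 2 and dists[0][0] < ratio * dists[1][0]:
            j = dists[0][1]
            matches.add((min(i, j), max(i, j)))
    return sorted(matches)
```

Only distinctive matches survive: two nearly identical descriptors are paired, while a descriptor that is roughly equidistant from several others is left unmatched.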

The best candidate match for each key point is found by identifying its nearest neighbour in the database of key points from the training images, that is, the key point whose invariant descriptor vector has the minimum Euclidean distance to it. We used the Best Bin First (BBF) algorithm for this nearest-neighbour search.

Fig. 4. Key point matching

3.3. Texture feature extraction of key point localized super pixel (KLSP)

In the next step of the proposed method, the matched key points are taken together with the segmented regions that contain them, and the textures of these regions are compared to identify the forged region. In the proposed system, the input image I is fed into the LRW algorithm for super pixel segmentation, and simultaneously the key points are extracted using the SIFT algorithm. The LBP texture histogram is computed for every super pixel segment that contains at least one key point, which gives a texture feature for every key point localized super pixel. A chi-square distance based histogram matching algorithm is then used to measure the similarity between these super pixels, and copy move forgery is detected from this similarity.

Algorithm for the proposed KLSP

Step 1: Let I be the input image.

Step 2: Apply the LRW algorithm to I to find its super pixels.

Step 3: Obtain the super pixel segmentation I(sup) of I.

Step 4: Find the key points Kp of I using the SIFT algorithm.

Step 5: Create an empty fake list: FList = ∅.

Step 6: For each kp(i) ∈ Kp, compute the LBP texture histogram of the super pixel in which kp(i) occurs.

Step 7: for i = 1 to |Kp| − 1, for j = i + 1 to |Kp|:

Step 8: Compute the chi-square histogram distance d_ij = chisquare(Hist(kp_i), Hist(kp_j)).

Step 9: If d_ij < Threshold, a forgery has occurred:

Step 10: FList = FList ∪ {kp_i, kp_j}; add super pixel regions i and j to the fake list FList.

end

Step 11: If FList = ∅, forgery is not found;

else

forgery is found.
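Steps 5 to 11 can be turned into the plain-Python sketch below, given the super pixel labels, the LBP codes and the key point positions produced by the earlier steps. Assumptions not fixed by the paper: histograms have 256 bins and are normalized, the chi-square distance is $\frac{1}{2}\sum (a-b)^2/(a+b)$, key points falling in the same super pixel are not compared with each other (otherwise their distance would always be zero), and the fake list stores super pixel labels. All function names are hypothetical.

```python
def lbp_histogram(lbp, labels, region, bins=256):
    """Normalized histogram of the LBP codes inside one super pixel."""
    counts = [0] * bins
    for lbp_row, lab_row in zip(lbp, labels):
        for code, lab in zip(lbp_row, lab_row):
            if lab == region:
                counts[code] += 1
    total = sum(counts)
    return [n / total for n in counts] if total else counts


def chi_square(h1, h2, eps=1e-10):
    """Chi-square histogram distance, 0.5 * sum (a - b)^2 / (a + b)."""
    return 0.5 * sum((a - b) ** 2 / (a + b + eps) for a, b in zip(h1, h2))


def klsp_fake_list(labels, lbp, keypoints, threshold):
    """Steps 5-11 of the KLSP algorithm; returns the set of suspected super pixels.

    labels    : 2-D list of super pixel labels (output of the LRW step)
    lbp       : 2-D list of LBP codes of the same size
    keypoints : list of (row, col) SIFT key point positions
    """
    fake = set()                                    # Step 5: FList = empty
    regions = [labels[r][c] for r, c in keypoints]  # super pixel of each key point
    hist = {reg: lbp_histogram(lbp, labels, reg) for reg in set(regions)}  # Step 6
    for i in range(len(keypoints) - 1):             # Step 7
        for j in range(i + 1, len(keypoints)):
            if regions[i] == regions[j]:
                continue  # assumption: never compare a super pixel with itself
            d = chi_square(hist[regions[i]], hist[regions[j]])  # Step 8
            if d < threshold:                       # Step 9
                fake.update((regions[i], regions[j]))  # Step 10
    return fake  # Step 11: an empty set means no forgery was found
```

Because each histogram is computed once per super pixel rather than once per key point pair, the cost of the double loop is dominated by the histogram comparisons.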

4. Experimental results

4.1. Image database

In this section, we evaluate the proposed methodology on the MICC-F220 image set [17]. This dataset consists of 220 images: 110 tampered and 110 original. The image resolution varies from 722 × 480 to 800 × 600 pixels, and the forged patch covers on average 1.2% of the whole image.

4.2. Evaluation

4.3. Experimental results

The feasibility of the proposed method is evaluated using three metrics, sensitivity, specificity and accuracy, as defined in [17]; they are summarized in Table 1.

Table 1. Experimental parameters

| S. No. | Evaluation metric | Formula |
|---|---|---|
| 1 | Sensitivity or TPR (True Positive Rate) | TP/(TP+FN) |
| 2 | Specificity or TNR (True Negative Rate) | TN/(TN+FP) |
| 3 | Accuracy (correctly classified images / total number of images) | (TP+TN)/(TP+TN+FP+FN) |

Abbreviations: TP (True Positive): tampered image identified as forged. FN (False Negative): tampered image identified as authentic. FP (False Positive): authentic image identified as forged. TN (True Negative): authentic image identified as authentic.
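As a worked example of the formulas in Table 1, the counts below are the ones implied by the KLSP percentages reported in Tables 4–6, assuming the 110 forged and 110 authentic images of MICC-F220 (108 of 110 forged images detected, 103 of 110 authentic images accepted); they are derived here, not reported in the paper. `detection_metrics` is a hypothetical name.

```python
def detection_metrics(tp, fn, tn, fp):
    """Sensitivity, specificity and accuracy from Table 1, in percent."""
    sensitivity = 100.0 * tp / (tp + fn)                # TPR
    specificity = 100.0 * tn / (tn + fp)                # TNR
    accuracy = 100.0 * (tp + tn) / (tp + tn + fp + fn)  # correct / all images
    return sensitivity, specificity, accuracy


# Counts implied by the KLSP results (derived from the reported percentages)
sens, spec, acc = detection_metrics(tp=108, fn=2, tn=103, fp=7)
print(f"{sens:.2f} {spec:.2f} {acc:.2f}")  # 98.18 93.64 95.91
```

Because the dataset is balanced, accuracy is simply the mean of sensitivity and specificity here.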

To test the accuracy of the proposed method, its detection rate was computed in terms of sensitivity, specificity and accuracy. Complexity is measured as the average running time on a desktop PC equipped with an 8-core Intel 3.4 GHz processor and 4 GB RAM. The time taken to analyse an image is shown in Table 2.

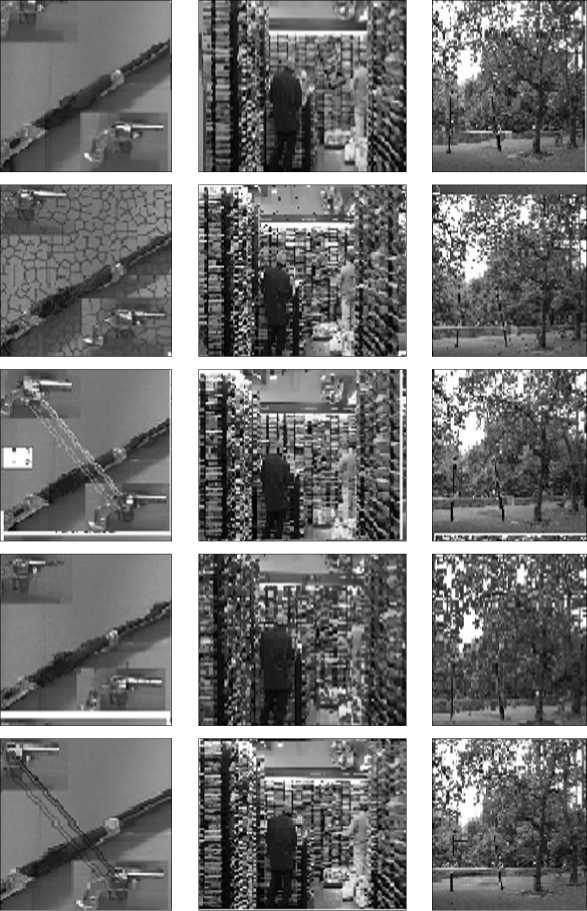

The performance of the proposed KLSP method was evaluated on the MICC-F220 dataset. Example images from MICC-F220 and the corresponding results are shown in Table 3.

Table 2. Time complexity

| S. No. | Average time to complete segmentation of an image (s) | Average time to find matching points in an image (s) | Average total time taken by KLSP (s) |
|---|---|---|---|
| 1 | 3.894 | 0.140 | 4.034 |

Table 3. Example results of KLSP. Columns: (a) original image; (b) segmented image; (c) SIFT matching points: SIFT finds matching points in the first two images but fails in the other two; (d) key point detection using KLSP; (e) KLSP matching points: KLSP finds matching points in all four images.

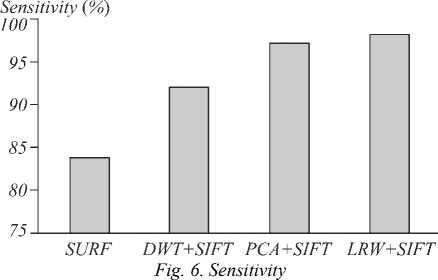

5. Comparison with conventional methods

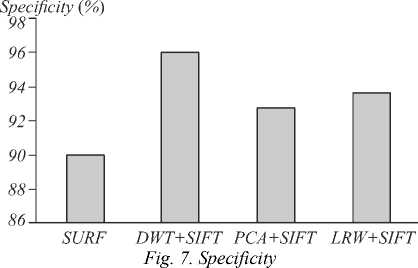

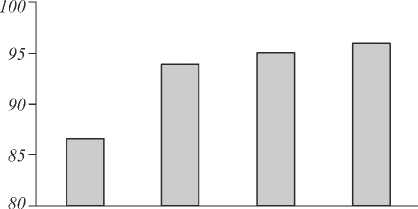

The performance of the proposed method is compared with conventional methods [18]–[20] in terms of sensitivity, specificity and accuracy, as shown in Tables 4–6. The detection rates recorded on the MICC-F220 dataset suggest that the proposed method outperforms the conventional hybrid methods. Sensitivity, specificity and accuracy were measured for SURF [18], DWT+SIFT [19], PCA+SIFT [20] and the proposed KLSP (LRW+SIFT) method, and are tabulated below. The experimental results show that KLSP achieves higher sensitivity and accuracy than SURF, DWT+SIFT and PCA+SIFT, and higher specificity than SURF and PCA+SIFT (DWT+SIFT has the highest specificity, 96%). Hence, on this dataset, KLSP is a better hybrid copy move forgery detection technique than the methods compared.

Table 4. Sensitivity

| Method | Sensitivity (%) |
|---|---|
| SURF | 83.63 |
| DWT + SIFT | 92 |
| PCA + SIFT | 97.27 |
| LRW + SIFT (KLSP) | 98.18 |

Table 5. Specificity

| Method | Specificity (%) |
|---|---|
| SURF | 90 |
| DWT + SIFT | 96 |
| PCA + SIFT | 92.72 |
| LRW + SIFT (KLSP) | 93.64 |

Table 6. Accuracy

| Method | Accuracy (%) |
|---|---|
| SURF | 86.81 |
| DWT + SIFT | 94 |
| PCA + SIFT | 95 |
| LRW + SIFT (KLSP) | 95.91 |

Conclusion

Many new algorithms have been created for the detection of digital image forgery. In this paper, a new hybrid method was proposed and compared with other well-known approaches. Experimental results show that the proposed KLSP (LRW+SIFT) method achieves higher sensitivity and accuracy, and hence a lower false negative rate (FNR), than the other approaches. The proposed KLSP algorithm therefore performs better than previous well-known approaches in detecting digital image forgery on the MICC-F220 dataset.

Fig. 8. Accuracy of SURF, DWT+SIFT, PCA+SIFT and LRW+SIFT (KLSP)

Overall, we expect the method to be useful to the research community and hope that it sparks new ideas for hybrid detection approaches.

References

- Luo, W. Robust detection of region duplication forgery in digital image/W. Luo, J. Huang, G. Qiu//18th International Conference on Pattern Recognition (ICPR'06). -2006. -Vol. 4. -P. 746-749.

- Lowe, D.G. Distinctive image features from scale-invariant keypoints/D.G. Lowe//International Journal of Computer Vision. -2004. -Vol. 60, Issue 2. -P. 91-110.

- Bay, H. SURF: Speeded up robust features/H. Bay, A. Ess, T. Tuytelaars, L. Van Gool//Computer Vision and Image Understanding. -2008. -Vol. 110, Issue 3. -P. 346-359.

- Amerini, I. A SIFT based forensic method for copy move attack detection and transformation recovery/I. Amerini, L. Ballan, R. Caldelli, A. Del Bimbo//IEEE Transactions on Information Forensics and Security. -2011. -Vol. 6, Issue 3. -P. 1099-1110.

- Shen, J. Lazy Random walks for Superpixel segmentation/J. Shen, Y. Du, W. Wang, X. Li//IEEE Transactions on Image Processing. -2014. -Vol. 23, Issue 4. -P. 1451-1462.

- Moore, A. Superpixel lattices/A. Moore, S. Prince, J. Warrell, U. Mohammed, G. Jones//2008 IEEE Conference on Computer Vision and Pattern Recognition. -2008. -P. 1-8.

- Levinshtein, A. Turbopixels: Fast superpixels using geometric flows/A. Levinshtein, A. Stere, K. Kutulakos, D.J. Fleet, S. Dickinson, K. Siddiqi//IEEE Transactions on Pattern Analysis and Machine Intelligence. -2009. -Vol. 31, Issue 12. -P. 2290-2297.

- Watkins, D.S. Fundamentals of Matrix Computations/D.S. Watkins. -3rd ed. -New York, NY: Wiley, 2010.

- Ren, X. Learning a classification model for segmentation/X. Ren, J. Malik//Proceedings 9th IEEE International Conference on Computer Vision. -2003. -P. 10-17.

- Veksler, O. Superpixels and supervoxels in an energy optimization framework/O. Veksler, Y. Boykov, P. Mehrani//Proceedings of the 11th European conference on Computer vision. -2010. -Part V. -P. 211-224.

- Achanta, R. SLIC superpixels/R. Achanta, A. Shaji, K. Smith, A. Lucchi, P. Fua, S. Süsstrunk. -EPFL Technical Report no. 149300. -Lausanne, Switzerland: 2010.

- Xiang, S. TurboPixel segmentation using eigen-images/S. Xiang, C. Pan, F. Nie, C. Zhang//IEEE Transactions on Image Processing. -2010. -Vol. 19, Issue 11. -P. 3024-3034.

- Yang, X. User-friendly interactive image segmentation through unified combinatorial user inputs/X. Yang, J. Cai, J. Zheng, J. Luo//IEEE Transactions on Image Processing. -2010. -Vol. 19, Issue 9. -P. 2470-2479.

- Rajalakshmi, C. Study of image tampering and review of tampering detection techniques/C. Rajalakshmi, M. Germanus Alex, R. Balasubramanian//International Journal of Advanced Research in Computer Science. -2017. -Vol. 8, No. 7. -P. 963-967.

- Lowe, D.G. Distinctive image features from scale-invariant keypoints/D.G. Lowe//International Journal of Computer Vision. -2004. -Vol. 60, Issue 2. -P. 91-110.

- Christlein, V. An evaluation of popular copy move forgery detection approaches/V. Christlein, Ch. Riess, J. Jordan, C. Riess, E. Angelopoulou//IEEE Transactions on Information Forensics and Security. -2012. -Vol. 7, Issue 6. -P. 1841-1854.

- Kaur, H. Simulative comparison of copy move forgery detection methods for digital images/H. Kaur, J. Saxena, S. Singh//International Journal of Electronics, Electrical and Computational System. -2015. -Vol. 4, special issue. -P. 62-66.

- Xu, B. Image copy move forgery detection based on SURF/B. Xu, J. Wang, G. Liu, Y. Dai//International Conference on Multimedia Information Networking and Security (MINES). -2010.

- Hashmi, M.F. Copy move forgery detection using DWT and SIFT features/M.F. Hashmi, A.R. Hambarde, A.G. Keskar//IEEE 13th International Conference on Intelligent Systems Design and Applications (ISDA). -2013. -P. 188-193.

- Li, K. Detection of Image Forgery Based on Improved PCA-SIFT/K. Li, H. Li, B. Yang, Q. Meng, Sh. Luo//Proceedings of the International Conference on Computer Engineering and Network. -2013. -P. 679-686.