Correcting Multi-focus Images via Simple Standard Deviation for Image Fusion

Author: Firas A. Jassim

Journal: International Journal of Image, Graphics and Signal Processing (IJIGSP)

Issue: No. 12, Vol. 5, 2013.

Image fusion is one of the recent trends in image registration, which is an essential field of image processing. The basic principle of this paper is to fuse multi-focus images using the simple statistical standard deviation. First, the simple standard deviation of each k×k window inside each of the multi-focus images is computed. The contribution of this paper comes from the idea that the focused part of an image contains more detail than the unfocused part. Hence, the dispersion between pixels inside the focused part is higher than the dispersion inside the unfocused part. Second, the standard deviations of corresponding k×k windows in the multi-focus images are compared. The window with the highest standard deviation among all the multi-focus images is treated as the optimal one and placed in the fused image. The experimental visual results show that the proposed method produces very satisfactory results in spite of its simplicity.

Image fusion, Multi-focus, Multi-sensor, Standard deviation


Published Online October 2013 in MECS (http://www.

I. INTRODUCTION

Image fusion is the process of integrating two or more images into a single, highly informative fused image. Even with the development of technology and the invention of various image-capturing devices, it is not always possible to obtain a detailed image that contains all the required information. During the past few years, image fusion has gained importance in many applications, especially remote sensing, computer vision, medical imaging, military applications, and microscopic imaging [27]. In remote sensing, medical imaging, and machine vision, multi-sensor data may consist of multiple images of the same scene, each providing different information. In machine vision, because of the limited depth of focus of the optical lenses used with charge-coupled device (CCD) sensors, it is not possible to obtain a single image in which all objects are in focus. A complete in-focus picture is not always feasible, since the optical lenses of imaging sensors, especially those with long focal lengths, have only a limited depth of field [9]. The basic objective of image fusion is to extract the required information from images captured by various sources and sensors, and then to combine these images into a single fused (composite), enhanced image [14]. The images captured by ordinary camera sensors have uneven characteristics and carry different information. The essential usefulness of image fusion is therefore its ability to reveal features that cannot be captured with a single traditional sensor, which enhances human visual perception. The fused image contains more information about the scene than any one of the individual source images alone [7]. Image fusion thus helps to obtain an image with all the information, and the fusion process must preserve all relevant information in the fused image.

This paper discusses the fusion of multi-focus images using the simple statistical standard deviation over a k×k window. Section II reviews recent work and the variety of approaches that have been proposed. The proposed fusion technique is presented in Section III. The experimental results and performance assessment are discussed in Section IV. Finally, Section V presents the main conclusions of this paper.


II. IMAGE FUSION PRELIMINARIES

The roots of image fusion go back to the 1950s and 1960s, when practical methods were first sought for combining images from different sensors into a composite image that better represents the natural scene. The field of image fusion is known by many terms, such as merging, combination, and integration [26].

Nowadays, there are two approaches to image fusion: spatial-domain fusion and transform-domain fusion. Pixel-based methods, in which a composite image is synthesized from several input images, are currently the most widely used technique [1][21]. A multi-focus image fusion algorithm based on the ratio of blurred and original image intensities was proposed in [19]. A generic categorization considers the fusion process at the signal, pixel, feature, and symbolic levels [15]. The application of artificial neural networks to the multi-focus image fusion problem was discussed in [14]. Graph cuts were applied to spine image fusion in [16]. Compressive-sensing image fusion was discussed in [23]. A tutorial on wavelet-based image fusion can be found in [18], and complex wavelets and their application to image fusion in [11]. Image fusion using principal component analysis (PCA) in the compressive sampling domain can be found in [28]. A new Intensity-Hue-Saturation (IHS) approach to image fusion with a tradeoff parameter was discussed in [3]. The Ripplet transform was applied to medical image fusion in [6]. An excellent comparative analysis of image fusion methods can be found in [26], and a comparison of different image fusion techniques for individual tree crown identification using QuickBird images in [20].

To compare the proposed technique with common image fusion techniques, this paper uses the wavelet transform and principal component analysis (PCA), two of the most widely implemented methods in image fusion [28]. The wavelet transform has become a very useful tool for image fusion. Wavelet-based fusion techniques have been found to outperform standard fusion techniques in spatial and spectral quality, especially in minimizing color distortion. Schemes that combine standard methods (IHS or PCA) with wavelet transforms produce better results than either standard methods or simple wavelet-based methods alone; the tradeoff, however, is higher complexity and cost [18]. Principal component analysis, on the other hand, is a statistical technique for dimension reduction. It projects the data from its original space onto its eigenspace, where the components are uncorrelated and ordered by variance, and it retains the components corresponding to the largest eigenvalues while discarding the others. PCA thus reduces redundant information and highlights the components with the greatest influence [26]. Furthermore, many small and large modifications of the wavelet method have been researched by various authors. One of these contributions is the wavelet packet approach to image fusion introduced by [2]. Another is the Dual-Tree Complex Wavelet Transform (DT-CWT) [10][12]. Curvelet-based image fusion was presented in [4][17]. Other fusion methods include the contourlet transform [8][22] and the Non-subsampled Contourlet Transform (NSCT) [5].
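For readers who want to reproduce a PCA baseline, the sketch below shows one common PCA weighting rule for two registered grayscale images: the fusion weights are the normalized components of the principal eigenvector of the 2×2 covariance matrix of the two images. The paper does not specify its PCA implementation, so this rule and the hypothetical function name `pca_fusion` are assumptions, not the exact setup behind Tables 1 and 2.

```python
import numpy as np


def pca_fusion(img_a, img_b):
    """Hypothetical PCA-weighted fusion of two registered grayscale images.

    Assumed rule: the weights are the normalized components of the principal
    eigenvector of the 2x2 covariance matrix. Returns (fused image, weights).
    """
    a = np.asarray(img_a, dtype=np.float64)
    b = np.asarray(img_b, dtype=np.float64)
    # Each image is one variable; each pixel position is one observation.
    data = np.vstack([a.ravel(), b.ravel()])   # shape (2, N)
    cov = np.cov(data)                          # 2x2 covariance matrix
    eigvals, eigvecs = np.linalg.eigh(cov)      # symmetric matrix, ascending eigenvalues
    principal = np.abs(eigvecs[:, np.argmax(eigvals)])  # remove the arbitrary sign
    weights = principal / principal.sum()       # weights sum to 1
    return weights[0] * a + weights[1] * b, weights


if __name__ == "__main__":
    left = np.array([[10.0, 20.0], [30.0, 40.0]])
    right = 2.0 * left
    fused, w = pca_fusion(left, right)
    print("weights:", w)
```

Taking the absolute value of the eigenvector assumes the two inputs are positively correlated, which is the usual situation for two views of the same scene.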


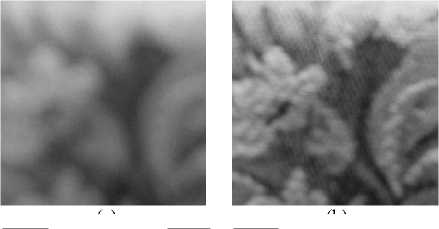

III. PROPOSED TECHNIQUE

The proposed technique is based on computing the simple standard deviation over windows of each input image to be fused. First, a brief description of the statistical standard deviation is in order. The standard deviation measures how spread out a set of numbers is, that is, the dispersion of the data around its mean: the more spread apart the data, the higher the standard deviation. It has proven to be an extremely useful measure of spread, in part because it is mathematically tractable. The essential contribution of this paper, used as a novel image fusion technique, rests on the observation that the focused part of an image contains more detail than the corresponding part of an unfocused image. Hence, the dispersion between pixels, and therefore the standard deviation, is higher inside the focused part than inside the unfocused part. The standard deviation is thus computed for each k×k window in all the input multi-focus images, and the highest of the standard deviations computed at the same window position across all input images is treated as the optimal standard deviation. In Fig. 1, the standard deviation of an arbitrary 2×2 window was computed to show that the input image with the highest standard deviation is the one with the most detail. The computed standard deviations for the four input images in Figs. 1(a), 1(b), 1(c), and 1(d) were 0.5, 1.7078, 3.304, and 2.63, respectively. The highest value, 3.304, belongs to the third image (Fig. 1(c)), which has sharp edges, whereas the other images have blurred or semi-blurred edges. Consequently, the k×k window with the highest standard deviation among all the input images is selected as the optimal window and placed in the fused image.
The window size k should be as small as possible; in this article, k = 2 is recommended as giving the best results.
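The selection rule described above can be sketched in a few lines of Python with NumPy. This is a minimal sketch under stated assumptions, not the author's implementation: the hypothetical function `std_fusion` tiles the images into non-overlapping k×k windows (the paper does not say whether windows overlap), uses the population standard deviation, and breaks ties in favor of the first input. Because all candidate windows at a given position have the same size, switching to the sample standard deviation would not change which window is selected. The function accepts any number of registered inputs.

```python
import numpy as np


def std_fusion(images, k=2):
    """Hypothetical block-wise fusion: keep the k x k window with the largest std.

    images: sequence of registered 2-D grayscale arrays with identical shapes.
    k: side length of the square window (non-overlapping tiling is assumed).
    """
    stack = np.stack([np.asarray(img, dtype=np.float64) for img in images])
    n_images, rows, cols = stack.shape
    fused = np.empty((rows, cols), dtype=np.float64)
    for r in range(0, rows, k):
        for c in range(0, cols, k):
            # Same window position in every input; windows at the right and
            # bottom borders are smaller when the size is not a multiple of k.
            blocks = stack[:, r:r + k, c:c + k]
            sigmas = blocks.reshape(n_images, -1).std(axis=1)  # population std
            best = int(np.argmax(sigmas))  # ties go to the first input image
            fused[r:r + k, c:c + k] = blocks[best]
    return fused


if __name__ == "__main__":
    # Toy inputs: image A is detailed on the left half, image B on the right half.
    a = np.array([[0, 10, 5, 5],
                  [10, 0, 5, 5]])
    b = np.array([[5, 5, 0, 10],
                  [5, 5, 10, 0]])
    print(std_fusion([a, b], k=2))
```

In the toy example the fused result takes the left 2×2 window from A and the right 2×2 window from B, since those are the windows with non-zero spread.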

Figure 1. Four multi-focus images (a), (b), (c), and (d), each with its own standard deviation

Figure 2. Blurred Block from Clock (Blur)

| | | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|
| 188 | 188 | 186 | 185 | 186 | 184 | 182 | 180 | 179 | 178 |
| 188 | 187 | 186 | 185 | 184 | 183 | 182 | 182 | 181 | 179 |
| 187 | 188 | 187 | 186 | 184 | 183 | 182 | 182 | 181 | 179 |
| 188 | 188 | 187 | 187 | 186 | 184 | 182 | 180 | 179 | 178 |
| 188 | 187 | 187 | 186 | 185 | 184 | 181 | 179 | 176 | 175 |
| 187 | 186 | 185 | 184 | 182 | 182 | 180 | 178 | 174 | 172 |
| 186 | 185 | 183 | 182 | 181 | 180 | 178 | 176 | 173 | 171 |
| 186 | 185 | 183 | 181 | 181 | 180 | 177 | 174 | 172 | 170 |
| 184 | 183 | 182 | 181 | 179 | 177 | 174 | 173 | 171 | 168 |
| 180 | 180 | 179 | 178 | 177 | 174 | 171 | 170 | 167 | 164 |

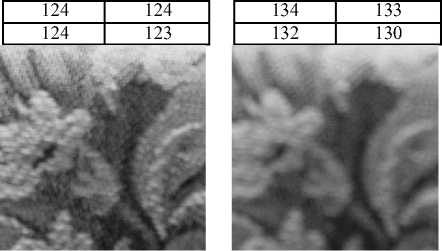

Figure 3. Original Block from Clock (Sharp)

| | | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|
| 189 | 189 | 188 | 186 | 185 | 183 | 181 | 182 | 183 | 182 |
| 190 | 190 | 190 | 189 | 186 | 183 | 181 | 182 | 182 | 181 |
| 188 | 188 | 188 | 188 | 187 | 184 | 182 | 181 | 181 | 181 |
| 188 | 187 | 187 | 187 | 188 | 186 | 183 | 182 | 182 | 182 |
| 189 | 188 | 189 | 189 | 187 | 186 | 184 | 182 | 182 | 182 |
| 188 | 187 | 187 | 188 | 186 | 185 | 184 | 182 | 181 | 181 |
| 187 | 186 | 185 | 185 | 185 | 185 | 184 | 183 | 182 | 181 |
| 188 | 187 | 187 | 187 | 185 | 186 | 186 | 184 | 182 | 181 |
| 186 | 187 | 188 | 188 | 187 | 183 | 182 | 183 | 182 | 181 |
| 184 | 184 | 185 | 186 | 185 | 181 | 182 | 182 | 179 | 176 |

Figure 4. Blurred Block from Clock (Blur)

As another example, to examine a larger window size, a 10×10 window was taken from the clock multi-focus image (Fig. 2); the window is indicated by wide dark borders. The computed standard deviations of this 10×10 window were 3.2489 for the sharp image and 1.8803 for the blurred image (small clock). Once again, the image with sharp edges has a higher standard deviation than the blurred image. The 10×10 windows from the sharp and blurred images are presented in Figures 3 and 4, respectively.
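The ordering claimed for the clock window can be checked directly against the pixel values tabulated in the two 10×10 blocks above. The sketch below assumes that the first tabulated block is the sharp window (Fig. 3) and the second the blurred one (Fig. 4), which is consistent with captions placed below their tables, and it uses NumPy's sample standard deviation (`ddof=1`) over all 100 pixels. With this plain definition the values come out at roughly 5.4 and 2.9 rather than the reported 3.2489 and 1.8803, so the exact convention behind the reported figures is unclear; the ordering, which is all the selection rule depends on, is the same.

```python
import numpy as np

# First tabulated 10x10 block, assumed to be the sharp window (Fig. 3).
sharp = np.array([
    [188, 188, 186, 185, 186, 184, 182, 180, 179, 178],
    [188, 187, 186, 185, 184, 183, 182, 182, 181, 179],
    [187, 188, 187, 186, 184, 183, 182, 182, 181, 179],
    [188, 188, 187, 187, 186, 184, 182, 180, 179, 178],
    [188, 187, 187, 186, 185, 184, 181, 179, 176, 175],
    [187, 186, 185, 184, 182, 182, 180, 178, 174, 172],
    [186, 185, 183, 182, 181, 180, 178, 176, 173, 171],
    [186, 185, 183, 181, 181, 180, 177, 174, 172, 170],
    [184, 183, 182, 181, 179, 177, 174, 173, 171, 168],
    [180, 180, 179, 178, 177, 174, 171, 170, 167, 164],
], dtype=np.float64)

# Second tabulated 10x10 block, assumed to be the blurred window (Fig. 4).
blurred = np.array([
    [189, 189, 188, 186, 185, 183, 181, 182, 183, 182],
    [190, 190, 190, 189, 186, 183, 181, 182, 182, 181],
    [188, 188, 188, 188, 187, 184, 182, 181, 181, 181],
    [188, 187, 187, 187, 188, 186, 183, 182, 182, 182],
    [189, 188, 189, 189, 187, 186, 184, 182, 182, 182],
    [188, 187, 187, 188, 186, 185, 184, 182, 181, 181],
    [187, 186, 185, 185, 185, 185, 184, 183, 182, 181],
    [188, 187, 187, 187, 185, 186, 186, 184, 182, 181],
    [186, 187, 188, 188, 187, 183, 182, 183, 182, 181],
    [184, 184, 185, 186, 185, 181, 182, 182, 179, 176],
], dtype=np.float64)

s_sharp = sharp.std(ddof=1)      # sample standard deviation over all 100 pixels
s_blurred = blurred.std(ddof=1)
print(f"sharp window:   {s_sharp:.4f}")
print(f"blurred window: {s_blurred:.4f}")
print("sharp window selected:", s_sharp > s_blurred)
```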


IV. EXPERIMENTAL RESULTS

This section presents and discusses experimental results that support the proposed technique. Several standard test images were used as inputs, and the fused images were obtained with the proposed technique, that is, by selecting the window with the highest standard deviation. The visual results show that the proposed technique produces quite satisfactory results compared with other methods such as the wavelet transform and principal component analysis (PCA). Many performance measures are used to evaluate image fusion techniques, such as the Root Mean Square Error (RMSE), Peak Signal-to-Noise Ratio (PSNR), Image Quality Index (IQI) [24], and Structural Similarity Index (SSIM) [25][29]. In this paper, SSIM was used because of its high reliability [13].
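SSIM as defined in [25] is computed over local (typically Gaussian-weighted 11×11) windows and then averaged over the image. As a simplified illustration of the underlying formula only, the sketch below evaluates it once over the whole image (a single global window), with the commonly used constants K1 = 0.01 and K2 = 0.03 and an assumed dynamic range of 255 for 8-bit images. The function name `global_ssim` is hypothetical, and this simplification will not reproduce the values in Table 1; library implementations such as scikit-image's `structural_similarity` compute the windowed version.

```python
import numpy as np


def global_ssim(x, y, data_range=255.0, k1=0.01, k2=0.03):
    """Simplified SSIM over one global window (not the windowed mean SSIM)."""
    x = np.asarray(x, dtype=np.float64)
    y = np.asarray(y, dtype=np.float64)
    c1 = (k1 * data_range) ** 2   # stabilizes the luminance term
    c2 = (k2 * data_range) ** 2   # stabilizes the contrast/structure term
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = np.mean((x - mu_x) * (y - mu_y))
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return float(numerator / denominator)


if __name__ == "__main__":
    img = np.arange(64, dtype=np.float64).reshape(8, 8) * 4.0
    print(global_ssim(img, img))        # identical images give 1
    print(global_ssim(img, img / 2.0))  # darker, lower-contrast copy gives less than 1
```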

TABLE 1. COMPARISON OF SSIM BETWEEN WAVELET, PCA, AND THE PROPOSED TECHNIQUE

| Image | Wavelet | PCA | Proposed |
|---|---|---|---|
| Lena | 0.8278 | 0.8274 | 0.7260 |
| Clocks | 0.9224 | 0.9222 | 0.8807 |
| Peppers (V) | 0.8168 | 0.8166 | 0.7051 |
| Peppers (D) | 0.8193 | 0.8190 | 0.7079 |

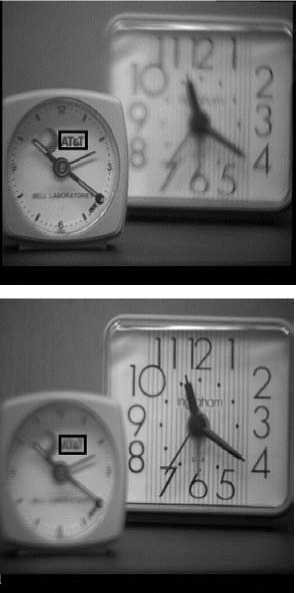

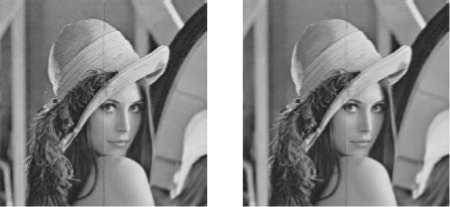

According to Table 1, the SSIM values of the proposed technique are lower than those of both the wavelet and PCA methods. It must be noted, however, that SSIM measures the structural similarity between the fused image and the input images. Since the blurred region in every test image used in this paper covers approximately half of the input image, SSIM reflects the similarity to both the blurred and the sharp parts of the input images. Therefore, the smaller SSIM value of the proposed technique does not mean that its result is worse; it means only that the fused image constructed by the proposed technique is less similar to the partly blurred input images than the images produced by wavelet and PCA. This interpretation is supported by the visual results in Figures 5 to 8, where the images obtained by the proposed technique show sharper details than those of the other methods (wavelet and PCA).

As another performance measure, PSNR was used to test the quality of the constructed image. Unlike SSIM, which was computed here between the fused image and the input images, PSNR can only be computed when the original (all-in-focus) image is available. This is not always the case, because multi-focus images are obtained at different zoom settings, times, and angles for the same scene; in practice, there is often no perfect image to compare with. Hence, in this paper, PSNR could not be evaluated for all the test images, but only for the three test images whose original images are available: Lena, Peppers (V), and Peppers (D), where V stands for vertical and D for diagonal blurring direction.
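For reference, PSNR compares a fused image with the original image through the mean squared error, PSNR = 10·log10(MAX² / MSE). The sketch below assumes a peak value MAX = 255 for 8-bit images and reports the result in decibels; the paper does not state the peak value or units used for Table 2, and the function name `psnr` is illustrative.

```python
import math

import numpy as np


def psnr(reference, test, peak=255.0):
    """Peak signal-to-noise ratio in dB between a reference and a test image."""
    reference = np.asarray(reference, dtype=np.float64)
    test = np.asarray(test, dtype=np.float64)
    mse = float(np.mean((reference - test) ** 2))
    if mse == 0.0:
        return math.inf  # identical images
    return 10.0 * math.log10(peak ** 2 / mse)


if __name__ == "__main__":
    original = np.full((4, 4), 100.0)
    fused = original + 1.0   # every pixel off by one grey level, so MSE = 1
    print(f"{psnr(original, fused):.2f} dB")
```

The usage example prints 48.13 dB, since an MSE of 1 gives 10·log10(255²).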

TABLE 2. COMPARISON OF PSNR BETWEEN WAVELET, PCA, AND THE PROPOSED TECHNIQUE

| Image | Wavelet | PCA | Proposed |
|---|---|---|---|
| Lena | 5.1918 | 5.1955 | 5.7523 |
| Peppers (V) | 5.0185 | 4.9843 | 5.2417 |
| Peppers (D) | 4.6687 | 4.6724 | 5.2405 |

According to Table 2, the PSNR values of the proposed technique are slightly higher than those of both the wavelet and PCA methods. This means that the difference between the image constructed by the proposed technique and the original image is smaller than for wavelet and PCA. This result supports the earlier claim that the proposed technique produces quite satisfactory results compared with common fusion methods such as wavelet and PCA.

Figure 5. (a) Blurred image (left), (b) Blurred image (right), (c) Wavelet, (d) PCA, (e) Proposed

Figure 6. (a) Blurred image (left), (b) Blurred image (right), (c) Wavelet, (d) PCA, (e) Proposed

Figure 7. (a) Blurred image (left), (b) Blurred image (right), (c) Wavelet, (d) PCA, (e) Proposed

Figure 8. (a) Blurred image (left), (b) Blurred image (right), (c) Wavelet, (d) PCA, (e) Proposed


V. CONCLUSION

The main conclusion drawn from the proposed technique is its simplicity and its performance compared with more complicated methods, which produce almost the same results using highly complicated mathematical formulas and time-consuming transformations. The only disadvantage of the proposed technique is that the fused image is not always perfect; hence, a better criterion for selecting the best standard deviation among several standard deviations is needed. This may be addressed in future work. Further, only two input images were used in this article, but the technique can be generalized to more than two input images, which is why it is described as a multi-focus image fusion technique.

The results obtained by the highest-standard-deviation technique have demonstrated its adequacy and efficiency in constructing a fused image from multi-focus images taken with different zoom settings and at different times by the camera sensor. Finally, the visual results and the statistical measures indicate that the performance of the new method is better than that of its competitors in the field.

Additionally, one recommendation for future work is to apply the proposed technique to color images rather than the grayscale images used in this article. This may pave the way for applying the proposed technique to video streams.

References

[1] Ben Hamza A., He Y., Krim H., and Willsky A., “A multiscale approach to pixel-level image fusion”, Integrated Computer-Aided Engineering, Vol. 12, pp. 135–146, 2005.

[2] Cao W., Li B. C., and Zhang Y. A., “A remote sensing image fusion method based on PCA transform and wavelet packet transform”, International Conference on Neural Networks and Signal Processing, Vol. 2, pp. 976–981, 2003.

[3] Choi M., “A New Intensity-Hue-Saturation Fusion Approach to Image Fusion With a Tradeoff Parameter”, IEEE Transactions on Geoscience and Remote Sensing, Vol. 44, No. 6, June 2006.

[4] Choi M., Kim R. Y., and Nam M. R., “Fusion of multi-spectral and panchromatic satellite images using the curvelet transform”, IEEE Geoscience and Remote Sensing Letters, Vol. 2, pp. 136–140, 2005.

[5] Cunha A. L., Zhou J. P., and Do M. N., “The non-subsampled contourlet transform: Theory, design and applications”, IEEE Transactions on Image Processing, Vol. 15, pp. 3089–3101, 2006.

[6] Das S., Chowdhury M., and Kundu M. K., “Medical Image Fusion Based On Ripplet Transform Type-I”, Progress In Electromagnetics Research B, Vol. 30, pp. 355–370, 2011.

[7] Delleji T., Zribi M., and Ben Hamida A., “Multi-Source Multi-Sensor Image Fusion Based on Bootstrap Approach and SEM Algorithm”, The Open Remote Sensing Journal, Vol. 2, pp. 1–11, 2009.

[8] Do M. N. and Vetterli M., “The contourlet transform: An efficient directional multi-resolution image representation”, IEEE Transactions on Image Processing, Vol. 14, pp. 2091–2106, 2005.

[9] Flusser J., Sroubek F., and Zitová B., “Image Fusion: Principles, Methods, and Applications”, Lecture notes, Tutorial EUSIPCO 2007.

[10] Hill P. R., Bull D. R., and Canagarajah C. N., “Image fusion using a new framework for complex wavelet transforms”, International Conference on Image Processing, Vol. 2, pp. 1338–1341, 2005.

[11] Hill P., Canagarajah N., and Bull D., “Image fusion using complex wavelets”, Proceedings of the 13th British Machine Vision Conference, University of Cardiff, pp. 487–497, 2002.

[12] Ioannidou S. and Karathanassi V., “Investigation of the dual-tree complex and shift-invariant discrete wavelet transforms on Quickbird image fusion”, IEEE Geoscience and Remote Sensing Letters, Vol. 4, pp. 166–170, 2007.

[13] Klonus S. and Ehlers M., “Performance of evaluation methods in image fusion”, 12th International Conference on Information Fusion, Seattle, WA, USA, July 6–9, 2009, pp. 1409–1416.

[14] Li S., Kwok J. T., and Wang Y., “Multifocus image fusion using artificial neural networks”, Pattern Recognition Letters, Vol. 23, pp. 985–997, 2002.

[15] Luo R. and Kay M., “Data fusion and sensor integration: state of the art in 1990s”, in Data Fusion in Robotics and Machine Intelligence, M. Abidi and R. Gonzalez, Eds., Academic Press, San Diego, 1992.

[16] Miles B., Ben Ayed I., Law M. W. K., Garvin G., Fenster A., and Li S., “Spine Image Fusion via Graph Cuts”, IEEE Transactions on Biomedical Engineering, Vol. PP, No. 99, 2013.

[17] Nencini F., Garzelli A., and Baronti S., “Remote sensing image fusion using the curvelet transform”, Information Fusion, Vol. 8, pp. 143–156, 2007.

[18] Pajares G. and De la Cruz J. M., “A wavelet-based image fusion tutorial”, Pattern Recognition, Vol. 37, pp. 1855–1872, 2004.

[19] Qiguang M., Baoshu W., Ziaur R., and Robert A. S., “Multi-Focus Image Fusion Using Ratio of Blurred and Original Image Intensities”, Proceedings of SPIE, Visual Information Processing XIV, Orlando, Florida, USA, 29–30 March 2005.

[20] Riyahi R., Kleinn C., and Fuchs H., “Comparison of Different Image Fusion Techniques for Individual Tree Crown Identification Using Quickbird Images”, Proceedings of the ISPRS Hannover Workshop 2009, 2009.

[21] Rockinger O. and Fechner T., “Pixel-Level Image Fusion: The Case of Image Sequences”, Proceedings of SPIE, Vol. 3374, No. 1, pp. 378–388, 1998.

[22] Song H., Yu S., and Song L., “Fusion of multi-spectral and panchromatic satellite images based on contourlet transform and local average gradient”, Optical Engineering, Vol. 46, pp. 1–3, 2007.

[23] Wan T., Canagarajah N., and Achim A., “Compressive Image Fusion”, Proceedings of the IEEE International Conference on Image Processing (ICIP), pp. 1308–1311, San Diego, California, USA, October 2008.

[24] Wang Z. and Bovik A. C., “A universal image quality index”, IEEE Signal Processing Letters, Vol. 9, No. 3, pp. 81–84, 2002.

[25] Wang Z. and Bovik A. C., “Image Quality Assessment: From Error Visibility to Structural Similarity”, IEEE Transactions on Image Processing, Vol. 13, No. 4, pp. 600–612, 2004.

[26] Wang Z., Ziou D., Armenakis C., Li D., and Li Q., “A Comparative Analysis of Image Fusion Methods”, IEEE Transactions on Geoscience and Remote Sensing, Vol. 43, No. 6, pp. 1391–1402, 2005.

[27] Yuan J., Shi J., Tai X.-C., and Boykov Y., “A Study on Convex Optimization Approaches to Image Fusion”, Lecture Notes in Computer Science, Vol. 6667, pp. 122–133, 2012.

[28] Zebhi S., Aghabozorgi Sahaf M. R., and Sadeghi M. T., “Image Fusion Using PCA in CS Domain”, Signal and Image Processing: An International Journal, Vol. 3, No. 4, 2012.

[29] Zhang L., Zhang L., Mou X., and Zhang D., “FSIM: A Feature SIMilarity Index for Image Quality Assessment”, IEEE Transactions on Image Processing, Vol. 20, No. 8, pp. 2378–2386, 2011.