Depth based Occlusion Detection and Localization from 3D Face Image

Authors: Suranjan Ganguly, Debotosh Bhattacharjee, Mita Nasipuri

Journal: International Journal of Image, Graphics and Signal Processing (IJIGSP) @ijigsp

Article in issue: 5 vol.7, 2015.

Open access

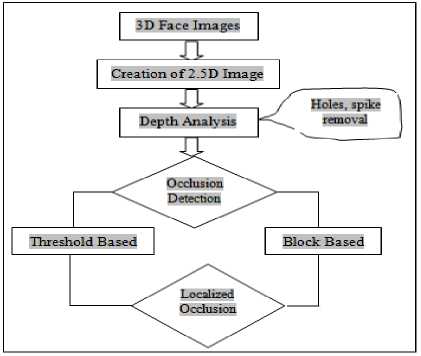

In this paper, the authors propose two novel techniques for detecting occlusion in a given 3D face image and then localizing the occluded section when occlusion is present. For both methods, 2.5D (range) face images are first created from the input 3D face images. Two approaches are then followed for detecting occluded faces: block based and threshold based. Both methods have been investigated individually on the Bosphorus database, which contains the different types of occlusion considered during this research work. Compared with 2D images, 3D images provide more reliable and accurate information within the digitized data. In a 2D image each point, called a pixel, is represented by a single value on a 2D grid: one byte for gray scale images and three bytes for color images. In a 3D image there is no concept of a 2D grid; each point is represented by three values, namely X, Y and Z. Unlike the pixel values of a 2D image, the 'Z' value over the X-Y plane does not contain reflected light energy; the set of Z values holds the depth data of the facial surface. The threshold (or cutoff) based technique detects occluded faces with an accuracy of 91.79%, and the block based approach detects them with a success rate of 99.71%. The accuracy of the proposed occlusion detection scheme has been measured as a qualitative parameter based on subjective fidelity criteria.

3D image, 2.5D image, Bosphorus database, Occlusion detection, Range image, Face recognition

Short URL: https://sciup.org/15013869

IDR: 15013869

Text of the scientific article: Depth based Occlusion Detection and Localization from 3D Face Image

Published Online April 2015 in MECS DOI: 10.5815/ijigsp.2015.05.03

Nowadays people are very conscious about their own security, and this is reflected in daily life. Tasks ranging from ATM access and automatic door opening to daily attendance and criminal identification are carried out either in the classical way or by biometric based approaches. Classical methods have many drawbacks, including the difficulty of remembering usernames and passwords, which can also be stolen or duplicated very easily. Biometric based methods, on the other hand, have many advantages: there is nothing to be stolen, snooped or memorized, and biometric traits possess the property of uniqueness. Hence, biometric based authentication has become preferable to the classical approach. However, this approach (especially automatic human face recognition) poses some challenging tasks to researchers regarding the robustness of algorithms that must recognize faces in an uncontrolled environment.

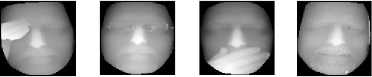

Variations in expression, illumination and pose, different types of occlusion, and face deformation due to age are some of the well known challenges for automatic face recognition. Among the devices available for face image acquisition, namely the 2D optical camera, thermal camera, video recorder and 3D sensor, thermal and 3D face images are free from illumination variation [1]. Expressions can also be handled with an effective algorithm. Faces rotated along yaw, pitch and roll can now easily be registered by the various available face registration mechanisms [2] [15]. But handling occlusion in automated security applications remains a very complicated task. Due to occlusion, some portions of the face surface become inaccessible, and thus much less information is available for processing by the algorithms. In figure 1, some occluded 2D visual (or optical) face images from the Bosphorus database [3] are shown.

Fig.1. Different types of occlusions from Bosphorus database

(a) Approaches of disguise

(b) A typical approach for face disguise

Fig.2. Approaches used in disguise

Occlusion differs from a similar challenge for face recognition, namely disguise. Disguise might be considered a superset of occlusion. A person disguises himself/herself (especially the face) intentionally, either to hide from the surroundings or to present a different appearance to society, whereas occlusion may be intentional or may arise naturally. A human face can be disguised in different ways. A change of hair style, a hat or cap, a combination of hat and glasses, and a combination of glasses, mustache, beard and cap are some examples. In figure 2, some of these combinations are shown. Hence, it is evident that disguise hides some facial data, but not as much as occlusion does.

Occlusion means that the image is not clear due to some obstacle. Occlusion during face image acquisition can be caused intentionally, accidentally or naturally. A face surface covered by a hand is assumed to be intentionally occluded. Accidental occlusion, on the contrary, occurs when some unwanted object from the surroundings comes between the image acquisition system and the face. Surveillance systems, especially CCTV footage based person identification systems, suffer the most from this type of challenge. Due to various customs as well as human nature, occlusions play a crucial role in automatic face recognition. Wearing glasses and having facial hair (mustache, beard, etc.) can be considered natural occlusion. Plastic surgery is another type of occlusion (or disguise) for face recognition: reshaping any facial portion (lip, nose, etc.) also hinders recognition of a person from face images. Some of these occlusions are shown in figure 1.

Occlusions can further be grouped into three categories, namely permanent, temporal and partial. In the case of permanent occlusion, faces are occluded by beard, mustache, etc. Temporal occlusion indicates that spectacles or hands are the reason for the occlusion. Partial occlusion means that some part of the human face is not captured correctly or is missing and has not been restored during face registration.

In the context of this paper, authors have investigated and proposed depth based approaches for automatic occlusion detection and localization from 2.5D range face images [1]. Occlusion detection is a prior step for recognizing face images correctly. For more robust identification, the occluded faces must first be detected and then pre-processed for restoration before they are passed to the recognition algorithm. 2.5D face images hold the depth information of the face surface rather than the reflected light (as in 2D), which is sampled and quantized during the digitization process. Thus, surface depth is preserved better in a 3D (or 2.5D) image than in a 2D image.

The proposed algorithm is based on face depth. Face depth values from 3D images are not the same as intensity values from 2D images. The intensity values captured by a camera to form a 2D image [4] follow equation 1. Cameras mainly record the reflectance characteristic of the scene or object (here, the human face), whereas scanners measure the depth of the face surface over the X-Y plane to create a 3D face image.

$$F(x, y) = I(x, y) \, R(x, y) \qquad (1)$$

In this equation, I(x, y) is the illumination component and R(x, y) is the reflectance (or transmissivity) component of the intensity value F(x, y) in the X-Y plane. These two components are determined by the illumination source and the characteristics of the human face. Depth values, on the other hand, are represented as a series of points along three axes (X, Y and Z) stored in three individual sets. Due to the acquisition procedure [1], 3D images have the inherent property of being free from illumination variation. The light source used for capturing the face images (whether a laser scanner or structured light) serves to measure the depth of the surface, and the surrounding light plays no role during depth measurement. Thus, depth values are more valuable for automatic face recognition. This observation has inspired the authors to design and develop a depth based occlusion detection methodology for 3D face images.
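The contrast between intensity and depth data can be made concrete with a small sketch. It assumes the product form F(x, y) = I(x, y) · R(x, y) that the symbol definitions suggest; the function name `form_intensity` and all toy values are illustrative and not taken from the paper.

```python
def form_intensity(illumination, reflectance):
    """Pixel-wise product F = I * R (assumed form of the image formation model)."""
    return [[i * r for i, r in zip(i_row, r_row)]
            for i_row, r_row in zip(illumination, reflectance)]


# Toy 2x2 scene: fixed surface reflectance and fixed surface depth (made-up values).
reflectance = [[0.5, 0.25], [1.0, 0.5]]
depth = [[10.0, 12.0], [15.0, 11.0]]

dim_light = [[100.0, 100.0], [100.0, 100.0]]
bright_light = [[200.0, 200.0], [200.0, 200.0]]

f_dim = form_intensity(dim_light, reflectance)
f_bright = form_intensity(bright_light, reflectance)

# The recorded intensities change with the lighting ...
intensity_changed = f_dim != f_bright
# ... while the depth map does not depend on the ambient illumination at all.
```

Doubling the illumination doubles every intensity value, whereas `depth` is never touched by the lighting term, which is the property the depth based approach relies on.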

The paper is organized as follows. Section 2 presents a literature survey. Section 3 describes the proposed algorithm. Section 4 discusses the experimental results. Conclusions and future scope are given in section 5.

II. State Of The Art

Alessandro Colombo et al. [5] have proposed an occlusion detection methodology for range images. They developed a face model using the eigenfaces approach for detecting occluded faces: a sufficiently large distance between an input face and the face space reveals the presence of occlusion. With this methodology, 83.8% of occluded faces were detected from the UND occluded face database. In [6], authors have described another approach to occlusion detection, in which the difference between a generic face model and the input range face image is used to detect the occlusion. In [7], authors have identified occluded faces by comparing the input 3D face with a model. The templates or models derived from the training faces are non-occluded, frontal and of neutral expression. The comparison is performed iteratively using ICP. In each iteration, the points whose distance is greater than a selected threshold value are omitted. Thus, the occluded section is identified and removed.

In contrast to the work described above, authors have proposed two algorithms for detecting occlusion. One is a block based technique, and the other is a threshold based approach. No generic face model is used for detecting partially occluded face images. Instead, the depths of the 2.5D face images are processed to detect the outward sections. For localizing the occluded part, an ERFI (Energy Range Face Image) model is used. It is neither an eigenface space nor an average face model. It preserves the depth energy of all frontal range face images of a particular subject. This model is expression invariant.

III. Proposed Algorithm

The proposed method has four steps, namely (i) 3D face image acquisition, (ii) range image creation, (iii) depth analysis to make the final decision on occlusion for 3D face images from their range images, and (iv) localization of the occlusion.

Fig.3. Pipelined structure of proposed algorithm


A. 3D Face Images

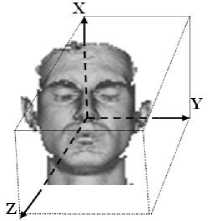

3D face images carry more information than 2D face images. A 2D image is formed from a 2D array of intensity values with a particular image header and footer. A 3D image consists of metadata about the scanner along with the Z values (i.e. depth values) of the object over the X-Y plane. Researchers can develop any kind of image representation, such as a spin image [8], range image [1], mesh image [1] or triangular mesh, according to their requirements. In figure 4, a typical 3D face image is shown. It is chosen from the Frav3D database [9]. 3D face images from the Frav3D database are of VRML type [1], and images from the Bosphorus database are in '.bnt' format [1].

The research work on occlusion detection is carried out on the Bosphorus database [3]. Along with frontal faces and pose and expression variations, this database also contains different occluded face images. Thus, it covers all the challenging issues of face recognition. The database contains 3D face images of 105 subjects in total, of whom 60 are male and the remaining 45 are female. The face images have been captured by a structured light scanner (SLS) [1] based acquisition system.

Fig.4. A typical 3D face image representation in X-Y-Z axes


B. 2.5D Image from 3D Image

The proposed technique operates on range images, which can also be termed 2.5D images. In a range image, instead of a pixel intensity value, the depth value ('Z') of each point is stored in a 2D array with the X-Y co-ordinates as its indices. The range images have been generated by the algorithm proposed by S. Ganguly et al. in [1], which is further modified by the authors: only the points that are not equal to -1000000000.000000 are kept. The same constraint is applied to all three components (X, Y and Z) of each point in the point cloud. Thus, the unwanted points and non-face regions highlighted in figure 5(a) are excluded from the created 2.5D range face image shown in figure 5(b).
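The filtering of invalid points can be sketched as follows. This is a minimal illustration, not the algorithm of [1]: the grid resolution, the linear mapping of X-Y co-ordinates to array indices, the shift of depths so that the farthest valid point becomes 0, and the name `make_range_image` are all assumptions made for the example.

```python
INVALID = -1000000000.0  # sentinel value marking points without a measurement


def make_range_image(xs, ys, zs, width, height):
    """Project valid (x, y, z) points onto a height x width grid of depth values."""
    # Keep a point only if none of its three components is the sentinel.
    valid = [(x, y, z) for x, y, z in zip(xs, ys, zs)
             if x != INVALID and y != INVALID and z != INVALID]
    image = [[0.0] * width for _ in range(height)]
    if not valid:
        return image

    min_x, max_x = min(p[0] for p in valid), max(p[0] for p in valid)
    min_y, max_y = min(p[1] for p in valid), max(p[1] for p in valid)
    min_z = min(p[2] for p in valid)
    span_x = (max_x - min_x) or 1.0  # avoid division by zero for degenerate clouds
    span_y = (max_y - min_y) or 1.0

    for x, y, z in valid:
        col = round((x - min_x) / span_x * (width - 1))
        row = round((max_y - y) / span_y * (height - 1))  # image rows grow downward
        # Assumed convention: larger Z means closer to the scanner, so when
        # several points fall into one cell the most outward one is kept.
        image[row][col] = max(image[row][col], z - min_z)
    return image


# Four toy points; the last one has no depth measurement and is dropped.
xs = [0.0, 1.0, 0.0, 1.0]
ys = [0.0, 0.0, 1.0, 1.0]
zs = [5.0, 7.0, 6.0, INVALID]
range_image = make_range_image(xs, ys, zs, width=2, height=2)
```

Cells that receive no valid point keep the background value 0, which corresponds to the excluded non-face regions.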

(a) Visual Image

(b) Range Image

Fig.5. Created only RoI as range face image

With this modified algorithm, the RoI (Region of Interest) is easily extracted. The algorithm is applied to the 3D face images of a randomly selected person from the Bosphorus database, and the generated range images are shown in figure 6. The corresponding 2D visual images are shown in figure 7. These images are described in table 1.

Fig.6. Generated range face images from Bosphorus database, panels (a)-(j)

Fig.7. Visual face images from Bosphorus database, panels (a)-(j)

Table 1. Description of the Face Images

| Panel | Description |
|---|---|
| (a) | Face image with 'occluded eye by hand' |
| (b) | Face image with 'occluded by eyeglass' |
| (c) | Face image with 'occluded mouth by hand' |
| (d) | Face image with 'disgust emotion' |
| (e)-(f) | Face image with 'pose change-lower face variation' |
| (g)-(h) | Face image with 'pose variation along X-axis' |
| (i)-(j) | Face image with 'pose variation along Y-axis' |

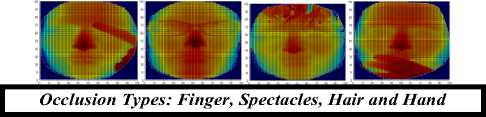

Fig.8. 2.5D surface representation of the occluded face images from Bosphorus database


C. Depth Analysis

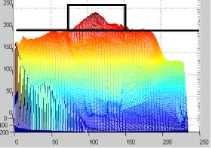

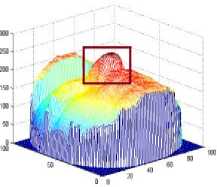

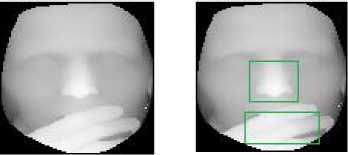

The depth values of the facial surface that are kept in 2.5D range images are not available from a 2D image. Thus, a 2.5D range face image can reveal the obtrude (most outward) facial section far better than the intensity values of a 2D image. It is noticed that the nose is detected at the highest depth, and it is considered the most obtrude section of the face image. In figure 9, the most obtrude section is localized at the nose region of a 3D face image.

Fig.9. The nose as an obtrude section

Because the nose region (especially the nose tip) is the closest point to the scanner, it belongs to the obtrude region of the face. If the face image is occluded (as shown in figure 9), there should be another obtrude region besides the nose region. This observation has inspired authors to design the depth based occlusion detection methodologies for the face surface.

It is further observed that 'makeup' plays a significant role in face recognition. Here, the term makeup covers coloring, blushing, applying fairness cream, etc. on the face, but not plastic surgery. Some typical examples of face makeup are illustrated in figure 10. For researchers focused on 2D visible face image based recognition, makeup becomes an important and challenging issue.

(a) (b) (c) (d)

Fig.10. Synthesized examples of typical facial makeup from Bosphorus database

Due to makeup, some face sections may become brighter or darker, or the skin color may even change. Thus, disguise due to makeup is also a great threat in an uncontrolled environment. With 2D thermal face images, this problem can be solved. In the thermal face recognition process, minutia points are extracted from blood perfusion data [10] and then recognized. But some makeup may also block heat radiation, so that the blood perfusion data are wiped out from the face region. In all these situations, however, the depth data remain the same. The change of face or skin color due to makeup does not affect the depth values. During the acquisition process [1], the scanner collects three co-ordinates for each point, along the X, Y and Z axes, irrespective of texture information. Among them, the 'Z' values are the depth data (as shown in figure 4) used to create the range face image. Thus, the disguise problem due to makeup is automatically avoided in the 3D face image based recognition [16] process.

• Preprocessing

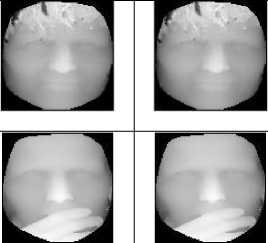

Before applying the proposed algorithm to 2.5D range face images, all images have been preprocessed by a max filter followed by an adaptive median filter. Authors have also implemented a median filter (shown in equation 2) with a 3×3 filtering mask for comparison. The outcomes of these filters are shown in figure 11.

Panel titles: Original Image; Filtered Image (3×3 mask)

(a) After adaptive median filter

(b) After median filter

Fig.11. Comparison of outcomes from max, adaptive median filter, and 3×3 median filters

The max filter (expressed in equation 3) [4] [11] is used to remove holes from the input 2.5D face image. The image may contain holes, i.e. points within the face region whose depth values are near 0 (zero). To remove these holes, a 3×3 window is used to obtain the maximum depth value among the center pixel and its 8 neighbours. Thus, a hole with zero depth value is replaced by the maximum depth value of its neighbours. After the holes are removed, authors have processed these filtered range face images again using the adaptive median filter [12]. Thus, spikes and holes are removed from the range face images, and finally the images are smoothed. The final normalized, smoothed range face image after applying the max and adaptive median filters is shown in figure 12.

$$f(x', y') = \underset{(x, y) \in W(x, y)}{\operatorname{median}} \{ f(x, y) \} \qquad (2)$$

$$f(x', y') = \max_{(x, y) \in W(x, y)} \{ f(x, y) \} \qquad (3)$$

In these equations, W(x, y) is the 3×3 window of the median or max filter, and f(x, y) is the area of the original range face image R(x, y) covered by the window.
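Equations 2 and 3 can be illustrated with a short pure-Python sketch. The border handling (clipping the window at the image edges) and the helper names are assumptions of this example; the paper does not state how borders are treated.

```python
from statistics import median


def window_values(image, row, col, radius=1):
    """Values inside the (2*radius+1)-square window centred on (row, col), clipped at borders."""
    rows, cols = len(image), len(image[0])
    return [image[r][c]
            for r in range(max(0, row - radius), min(rows, row + radius + 1))
            for c in range(max(0, col - radius), min(cols, col + radius + 1))]


def apply_filter(image, reducer, radius=1):
    """Replace every pixel by reducer(window) as in equations 2 and 3."""
    return [[reducer(window_values(image, r, c, radius))
             for c in range(len(image[0]))]
            for r in range(len(image))]


def max_filter(image):
    return apply_filter(image, max)      # equation 3: fills zero-depth holes


def median_filter(image):
    return apply_filter(image, median)   # equation 2: suppresses isolated spikes


holed = [[5, 5, 5], [5, 0, 5], [5, 5, 5]]     # hole (depth 0) at the centre
spiked = [[5, 5, 5], [5, 90, 5], [5, 5, 5]]   # spike (depth 90) at the centre

filled = max_filter(holed)
despiked = median_filter(spiked)
```

In the toy inputs the hole is replaced by the largest neighbouring depth and the spike by the window median, so both outputs become uniform surfaces of depth 5.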

Fig.12. Filtered range face image

The adaptive median filter [4] has several advantages. It is capable of filtering out both bipolar and unipolar impulse noise, so spikes are also removed from the range face image. It also preserves detail while smoothing, which the median filter fails to do. Therefore, authors have chosen the adaptive median filter over the median filter in this research work. A 3×3 window based median filter (described in equation 2) has also been developed to compare the performance of the median filter and the adaptive median filter, as shown in figure 11. Authors have designed the adaptive median filter with an initial mask size of 3×3, which is gradually increased to 5×5, 7×7 and 9×9.
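A minimal sketch of an adaptive median filter with a window growing from 3×3 to 9×9 is given below. It follows the common textbook two-stage formulation and is not necessarily identical to the authors' implementation; the border clipping and the name `adaptive_median_filter` are assumptions.

```python
from statistics import median


def adaptive_median_filter(image, max_size=9):
    """Textbook-style adaptive median filter; window grows 3, 5, ... up to max_size."""
    rows, cols = len(image), len(image[0])
    out = [row[:] for row in image]
    for r in range(rows):
        for c in range(cols):
            for size in range(3, max_size + 1, 2):
                radius = size // 2
                window = [image[i][j]
                          for i in range(max(0, r - radius), min(rows, r + radius + 1))
                          for j in range(max(0, c - radius), min(cols, c + radius + 1))]
                z_min, z_max, z_med = min(window), max(window), median(window)
                if z_min < z_med < z_max:
                    # The median is not an impulse: keep the pixel unless the
                    # pixel itself equals a window extreme (a likely impulse).
                    if not (z_min < image[r][c] < z_max):
                        out[r][c] = z_med
                    break
            else:
                # No reliable median found up to max_size: fall back to the last median.
                out[r][c] = z_med
    return out


# Smooth toy depth ramp with one spike (99) in the middle.
noisy = [[10, 11, 12, 13, 14],
         [11, 12, 13, 14, 15],
         [12, 13, 99, 15, 16],
         [13, 14, 15, 16, 17],
         [14, 15, 16, 17, 18]]
cleaned = adaptive_median_filter(noisy)
```

The spike is replaced by the local median (14), while interior pixels that lie strictly between their window extremes, such as the values at (1, 1) and (3, 3), are left untouched.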

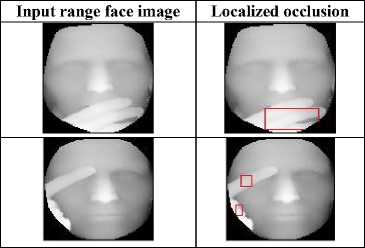

• Occlusion Detection

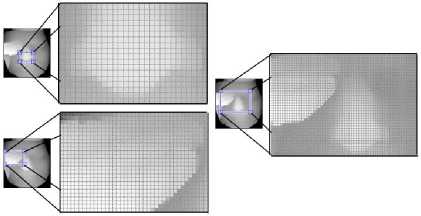

For the occlusion detection phase, authors have proposed two different methods: a 'threshold based' approach and a 'block based' approach. In both approaches, the main focus is to distinguish the obtrude sections. It is observed that if the face is occluded, there must be more than one obtrude section (i.e. one other than the nose area). In figure 13, the obtrude sections of neutral and occluded face images are shown.

(a) Neutral frontal face image

(b) Occluded face image

Fig.13. Description of obtrude section

After the pre-processing stage, the normalized face images should be free of spikes and holes. Thus, the depth values are sufficient to detect occlusions, rather than detecting them from pixel intensity values.

• Threshold Based Occlusion Detection

Bit-plane slicing [4] [13] is one of the crucial techniques in the domain of image processing. It is very useful for identifying which bits hold the significant information of the overall image. Authors have applied this method to analyze the depth values of range face images. It is noticed that, as the depth of the surface varies, the corresponding depth values in the range image also vary. The outcomes of the bit-plane slicing technique for occluded as well as neutral (i.e. frontal non-occluded) range face images are illustrated in figures 14 and 15.
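Bit-plane slicing of an 8-bit range image can be sketched as below. The bit numbering (1 = least significant, 8 = most significant) and the linear rescaling of depths to 0..255 are assumptions of this example, since the text does not state them.

```python
def normalize_to_uint8(depth):
    """Linearly rescale depth values to integers in 0..255 (assumed preprocessing)."""
    lo = min(min(row) for row in depth)
    hi = max(max(row) for row in depth)
    span = (hi - lo) or 1
    return [[int(round((value - lo) / span * 255)) for value in row] for row in depth]


def bit_plane(image, bit):
    """Binary image of one bit plane; bit 1 is the least significant, bit 8 the most."""
    return [[(value >> (bit - 1)) & 1 for value in row] for row in image]


gray = [[0, 64], [128, 255]]
plane_8 = bit_plane(gray, 8)   # set only for values >= 128
plane_7 = bit_plane(gray, 7)   # set where the 64s bit is on
plane_1 = bit_plane(gray, 1)   # least significant bit
```

Higher planes capture the coarse depth structure, which is why a single high-order plane can separate the most outward regions from the rest of the face.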

(a) From 1st Bit (b) From 2nd Bit (c) From 3rd Bit (d) From 4th Bit

(e) From 5th Bit (f) From 6th Bit (g) From 7th Bit (h) From 8th Bit

Fig.14. Bit-plane sliced occluded range face images

(a) From 1st Bit (b) From 2nd Bit (c) From 3rd Bit (d) From 4th Bit

(e) From 5th Bit (f) From 6th Bit (g) From 7th Bit (h) From 8th Bit

Fig.15. Bit-plane sliced neutral range face images

From the bit-plane sliced images, it is observed that the 7th bit-plane slice of an occluded face always contains an extra 'component' or 'obtrude section' compared to the same bit-plane of a non-occluded range face image. Because the range images have been pre-processed, there should not be any noise. The outward components can then be detected using neighborhood connectivity (either 4- or 8-connectivity) on the depth values.

Thus, for investigation purposes, authors have implemented Otsu's multilevel threshold method [14] and applied it to the depth values of range images. Otsu's algorithm is iterative in nature, and it is also an unsupervised technique [4] [14]. At threshold level 7, the two components of an occluded face are adequately identified, whereas at the same threshold level neutral (i.e. non-occluded) range face images have only a single obtrude section. This study is illustrated in figure 16.
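The idea behind Otsu's method can be sketched with its single-threshold form, which picks the level that maximizes the between-class variance of the depth histogram. This is a simplified stand-in for the multilevel variant used in the paper; the 8-bit integer input and the function names are assumptions.

```python
def otsu_threshold(image, levels=256):
    """Single-threshold Otsu: the level maximizing between-class variance."""
    hist = [0] * levels
    for row in image:
        for value in row:
            hist[value] += 1
    total = sum(hist)
    weighted_total = sum(level * count for level, count in enumerate(hist))

    best_level, best_variance = 0, -1.0
    count_low, sum_low = 0, 0
    for level in range(levels):
        count_low += hist[level]
        sum_low += level * hist[level]
        count_high = total - count_low
        if count_low == 0 or count_high == 0:
            continue  # one class is empty, variance undefined
        mean_low = sum_low / count_low
        mean_high = (weighted_total - sum_low) / count_high
        variance = count_low * count_high * (mean_low - mean_high) ** 2
        if variance > best_variance:
            best_level, best_variance = level, variance
    return best_level


def binarize(image, level):
    """Foreground (1) where the depth exceeds the threshold level."""
    return [[1 if value > level else 0 for value in row] for row in image]


# Toy range image: low depths (10) and a strongly outward region (200).
toy_depth = [[10, 10, 200], [10, 200, 200]]
level = otsu_threshold(toy_depth)
mask = binarize(toy_depth, level)
```

The resulting binary mask marks the outward region, whose connected components can then be counted to decide how many obtrude sections are present.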

(a) From occluded face: (i) Pre-processed image, (ii) Detected two obtrude sections, (iii) Corresponding boundary, (iv) Isolated facial component

(b) From non-occluded face: (i) Pre-processed image, (ii) Detected obtrude section, (iii) Corresponding boundary, (iv) Isolated facial component

Fig.16. Examination of the algorithm

For robustness, a ‘cut mechanism’ is also incorporated for localizing the components. The input 2.5D range image is the source, and isolated regions are the final outcome of the proposed methodology for detecting the occlusion. In figure 17, a schematic diagram of the implemented cut mechanism is described.

Binary image before cutmechanism

.

ormalized image after cu mechanism

D

Facial obtrude section

2.5D Range Image

Binary image before cut-

mechanism

Normalized image after cutmechanism

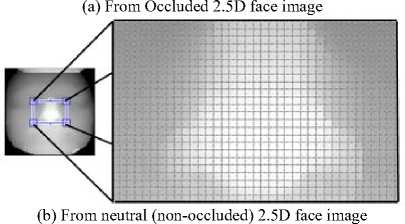

Fig. 18. Analyzed depth density from 2.5D images

Facial obtrude sections

Fig.17. Outcome of the proposed ‘cut mechanism’

Normalization is the step immediately before the final decision on whether a face is occluded. During this image normalization step, noise and unwanted regions are omitted. The presence of heavy impulse noise, or of holes due to missing depth values from irregular 3D scanning, may reduce the detection of facial occlusion and in turn cause face recognition to fail. To introduce such challenges, authors have manually corrupted the range images with holes. Consequently, more than one component (for a non-occluded face) or more than two components (for an occluded face) are detected from the synthesized range face images, as shown in figure 17.

The 'cut mechanism' is also an iterative process. In each iteration, the number of white (foreground) pixels in each component is counted. A threshold value 'T' is then used to detect the small noisy regions in the images taken from the synthesized dataset. The regions that satisfy this criterion are filled with black (background) pixels. The remaining regions are used to detect the occluded faces.

Faces whose logical (binary) images contain two components as obtrude sections with high depth density are detected as occluded 3D face images from the 2.5D images.
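The cut mechanism and the final decision can be sketched as connected-component labelling followed by an area filter. The sketch is a single-pass simplification of the iterative process described above; the criterion "fewer than T foreground pixels", the 8-connectivity and all function names are assumptions.

```python
from collections import deque


def label_components(binary):
    """List of components, each a list of (row, col) foreground pixels (8-connectivity)."""
    rows, cols = len(binary), len(binary[0])
    seen = [[False] * cols for _ in range(rows)]
    components = []
    for r in range(rows):
        for c in range(cols):
            if binary[r][c] and not seen[r][c]:
                seen[r][c] = True
                queue, pixels = deque([(r, c)]), []
                while queue:
                    y, x = queue.popleft()
                    pixels.append((y, x))
                    for ny in (y - 1, y, y + 1):
                        for nx in (x - 1, x, x + 1):
                            if (0 <= ny < rows and 0 <= nx < cols
                                    and binary[ny][nx] and not seen[ny][nx]):
                                seen[ny][nx] = True
                                queue.append((ny, nx))
                components.append(pixels)
    return components


def cut_mechanism(binary, threshold_t):
    """Blank out components smaller than threshold_t; return cleaned image and survivors."""
    cleaned = [row[:] for row in binary]
    kept = 0
    for pixels in label_components(binary):
        if len(pixels) < threshold_t:
            for y, x in pixels:
                cleaned[y][x] = 0   # fill the noisy region with background
        else:
            kept += 1
    return cleaned, kept


def is_occluded(binary, threshold_t):
    """Decision rule: two or more surviving obtrude components means occlusion."""
    return cut_mechanism(binary, threshold_t)[1] >= 2


# Toy mask: two large regions plus one isolated noisy pixel at (1, 4).
toy_mask = [[1, 1, 0, 0, 0, 0],
            [1, 1, 0, 0, 1, 0],
            [0, 0, 0, 0, 0, 0],
            [0, 0, 0, 1, 1, 1],
            [0, 0, 0, 1, 1, 1]]
normalized, survivors = cut_mechanism(toy_mask, threshold_t=3)
```

With `threshold_t=3` the isolated pixel is removed and two components survive, so the toy mask is classified as occluded; a mask with a single large component would not be.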


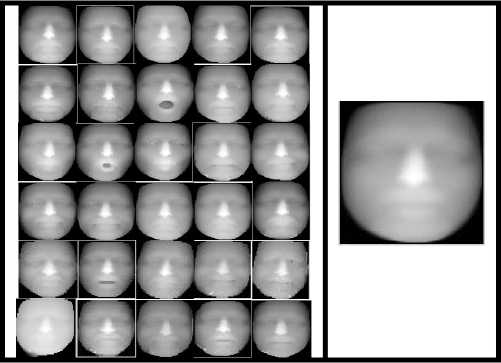

• Block Based Occlusion Detection

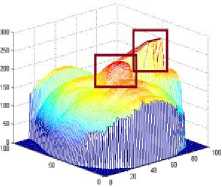

During the depth value analysis phase, authors have noted that obtrude sections have a higher depth density than any other part of the facial surface. It is also observed that non-occluded faces have only the nose region as an outward part, whereas occluded faces, as shown in figure 1, have more than one region of noticeable depth density. This outcome is represented in figure 18.

In this methodology, two blocks of size 3×3 and 5×5 are rolled over the 2.5D face images in row-major order [15], and the depth values of the 2.5D image under these windows are counted. With this mechanism, the outward facial regions are identified and occluded faces are detected: face images with more than one outward region are detected as occluded faces. Two different block sizes have been considered in this research work for further validation.

This approach has certain advantages over the threshold technique. The method is useful when the spikes are removed using the median filter instead of the adaptive median filter. Unlike the previous procedure, there is no need to select any cut or threshold value for occlusion detection, and there is no normalization phase. Thus, the time complexity of this process is lower than that of the previous approach. However, in the presence of a vast number of spikes and holes, this algorithm may not be useful. The variations in block size are also tested for the robustness of this algorithm. The outcome of detected obtrude sections using the block based method on range face images is shown in figure 19.
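One possible reading of the block based idea is sketched below: a k×k block is rolled over the range image in row-major order, the mean depth under the block is taken as its depth density, and connected groups of high-density blocks are counted. The text does not spell out how a block is judged outward, so the parameter-free midpoint rule used here, like every name in the code, is only a placeholder assumption.

```python
def block_density(depth, size):
    """Mean depth under each size x size block, visited in row-major order."""
    rows, cols = len(depth), len(depth[0])
    return [[sum(depth[r + i][c + j] for i in range(size) for j in range(size)) / (size * size)
             for c in range(cols - size + 1)]
            for r in range(rows - size + 1)]


def count_outward_regions(depth, size=3):
    """Count connected groups of blocks whose density lies above the min/max midpoint."""
    density = block_density(depth, size)
    lo = min(min(row) for row in density)
    hi = max(max(row) for row in density)
    cutoff = (lo + hi) / 2   # placeholder rule, not taken from the paper
    mask = [[value > cutoff for value in row] for row in density]
    regions = 0
    for r in range(len(mask)):
        for c in range(len(mask[0])):
            if mask[r][c]:
                regions += 1
                mask[r][c] = False
                stack = [(r, c)]
                while stack:   # flood fill one region (8-connectivity)
                    y, x = stack.pop()
                    for ny in (y - 1, y, y + 1):
                        for nx in (x - 1, x, x + 1):
                            if 0 <= ny < len(mask) and 0 <= nx < len(mask[0]) and mask[ny][nx]:
                                mask[ny][nx] = False
                                stack.append((ny, nx))
    return regions


def bump_map(rows, cols, bumps, height=9.0):
    """Toy range image: flat surface with 3x3 raised bumps at the given top-left corners."""
    depth = [[0.0] * cols for _ in range(rows)]
    for top, left in bumps:
        for r in range(top, top + 3):
            for c in range(left, left + 3):
                depth[r][c] = height
    return depth


neutral_like = bump_map(5, 11, [(1, 1)])            # one outward region (e.g. the nose)
occluded_like = bump_map(5, 11, [(1, 1), (1, 7)])   # a second outward region
```

On these toy maps both block sizes report one region for `neutral_like` and two for `occluded_like`, mirroring the rule that more than one outward region indicates occlusion.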

(a) Occluded 2.5D face image

(b) 2.5D face image with expression (non-occluded)

Fig.19. Outcome of the proposed ‘block based technique.’


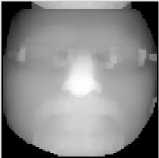

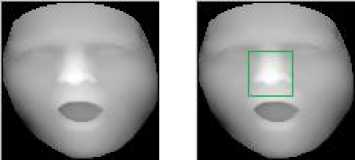

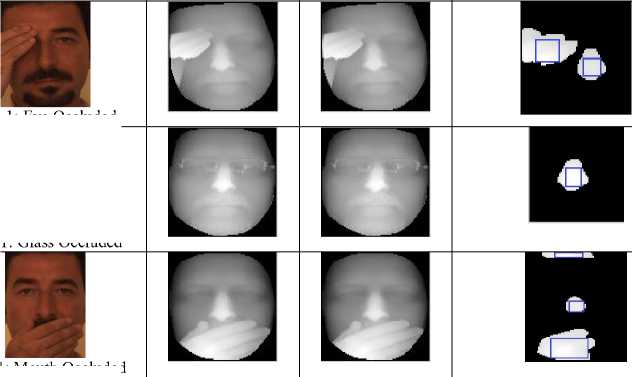

D. Occlusion Localization

For the localization of occlusion, an ERFI model [16] of each subject is developed by the authors. This model preserves all the depth energies of the frontal posed range face images. It is expression as well as illumination invariant. The frontal range face images of a subject and the resulting ERFI model are illustrated in figure 20.

(a)Frontal range face images

(b) ERFI model

Fig.20. ERFI model from randomly selected subject from database

The randomly selected subject from the Bosphorus database has 48 3D face images with different pose and expression variations. Among them, approximately 30 face images are frontal with variations of expression. These 30 range face images have been used to create the ERFI model of that subject in order to localize the occluded section of the face surface. Following occlusion detection and ERFI model development, the absolute difference between the ERFI model and the input occluded range face image is computed using equation 4.

$$D_i = | X_{ERFI} - Y_O | \qquad (4)$$

In this equation, $X_{ERFI}$ is the ERFI model and $Y_O$ is the occluded range face image. The absolute differences between the two range images are computed in row-major order. Following this procedure, the global maximum differences on the face surface are localized as the occluded section. The localized part for a randomly selected subject from the dataset is shown in table 2.
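The localization step of equation 4 can be sketched as a row-major scan over the absolute differences. The sketch assumes that the ERFI model is already available and that both images have the same size and are registered; how the ERFI model itself is built is not covered, and the function name is illustrative.

```python
def localize_occlusion(erfi_model, occluded):
    """Return the difference map |X_ERFI - Y_O| and the position of its global maximum."""
    difference = [[abs(m - o) for m, o in zip(m_row, o_row)]
                  for m_row, o_row in zip(erfi_model, occluded)]
    best_value, best_position = -1.0, None
    for r, row in enumerate(difference):       # row-major traversal
        for c, value in enumerate(row):
            if value > best_value:
                best_value, best_position = value, (r, c)
    return difference, best_position, best_value


# Toy data: the occluded image deviates from the model only at (1, 1).
toy_model = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]
toy_occluded = [[1.0, 2.0, 3.0], [4.0, 9.0, 6.0]]
diff_map, peak_position, peak_value = localize_occlusion(toy_model, toy_occluded)
```

In practice a region around the largest differences, rather than a single pixel, would be reported as the occluded section.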

Table 2. Localized Occluded Section

-

IV. Experimental Results And Discussions

The basic target of the proposed algorithms is to detect the obtrude components for both occlusion detection and localization. These components are the most outward sections, such as the nose or an area occluded by glasses, hand or hair. The faces with two outward or obtrude regions are detected as occluded face images. The dataset considered contains facial hair such as beards along with different types of occlusion, such as spectacles, mustache and beard, as discussed in figure 8. This makes occlusion detection a challenging task.

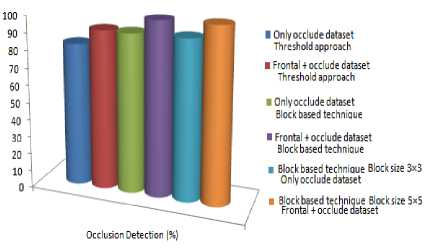

During the investigation phase, the proposed methodologies have been tested in several ways. First, two datasets have been created from the original Bosphorus database. The first dataset contains all the frontal non-occluded face images (across expressions) along with the occluded face images. The second dataset consists of only occluded face images, covering the variations of occlusion. On these datasets, two different approaches have been tested. In the first experiment, the proposed threshold technique is applied. In the second, blocks of size 3×3 and 5×5 are applied. The reason for splitting the original database into two sub-sets is to highlight the robustness of the proposed scheme. If, on the first dataset, the algorithm reports occluded regions for non-occluded as well as occluded faces, then it is not as effective as intended, whereas if the detection algorithm is able to detect all the occluded faces in the second dataset, then the algorithm is robust.

In tables 3 and 4, the occluded face images detected using the threshold technique and the block based approach are reported for randomly selected subjects from the database. Detection and subsequent localization of occlusion are performed on a set of non-occluded as well as occluded range face images to demonstrate the efficiency of the algorithm.

In this experimental section, two subjects are presented for discussion. In these tables, 'RSS i: [facial action]' is an abbreviation of 'Randomly Selected Subject' (i = 1 or 2) with the given facial action. The success rate of automatic occlusion detection is compared with manually detected occluded face images. The measured success of detecting occluded range face images from the Bosphorus database is given in table 5. The comparison of these results is also represented in figure 21.

Table 5. Performance of the Proposed Methodology (success rate of occlusion detection, %)

| Proposed method | Block size | Only occluded dataset | Frontal + occluded dataset |
|---|---|---|---|
| Threshold approach | - | 82.85 | 91.79 |
| Block based technique | 3×3 | 91.43 | 99.71 |
| Block based technique | 5×5 | 91.43 | 99.71 |

This analysis has been validated by qualifying the output using subjective fidelity criteria [4]. For this investigation, 18 volunteers have been involved. The rating scale is shown in table 6. Four subjective evaluations are used: {Occluded-Detected; Occluded-Not detected; Non Occluded-Detected; Non Occluded-Not detected}. Due to the efficiency of the proposed algorithm, no non-occluded face has been detected as occluded. Therefore, the reported performance rate is based entirely on whether the occluded faces have been successfully detected or not.

Fig.21. Comparative study of the proposed algorithms

Table 6. Rating scale of the occlusion detection study

| Subjective evaluation | Description |
|---|---|
| Occluded-Detected | The face is occluded, and it has successfully been detected |
| Occluded-Not detected | The face is occluded but not detected |
| Non Occluded-Detected | The face is not occluded, and it has successfully been detected |
| Non Occluded-Not detected | The face is not occluded but detected as occluded |

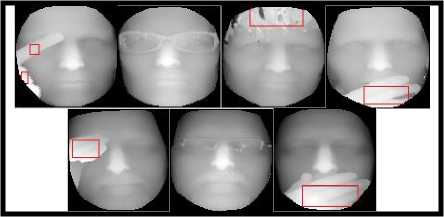

In figure 22, the occlusion localized on the face surface by the proposed algorithm is shown. Once the occluded faces are detected, the achieved occlusion localization rate on the face surface is 95%.

Fig.22. Localized occluded section from face surface

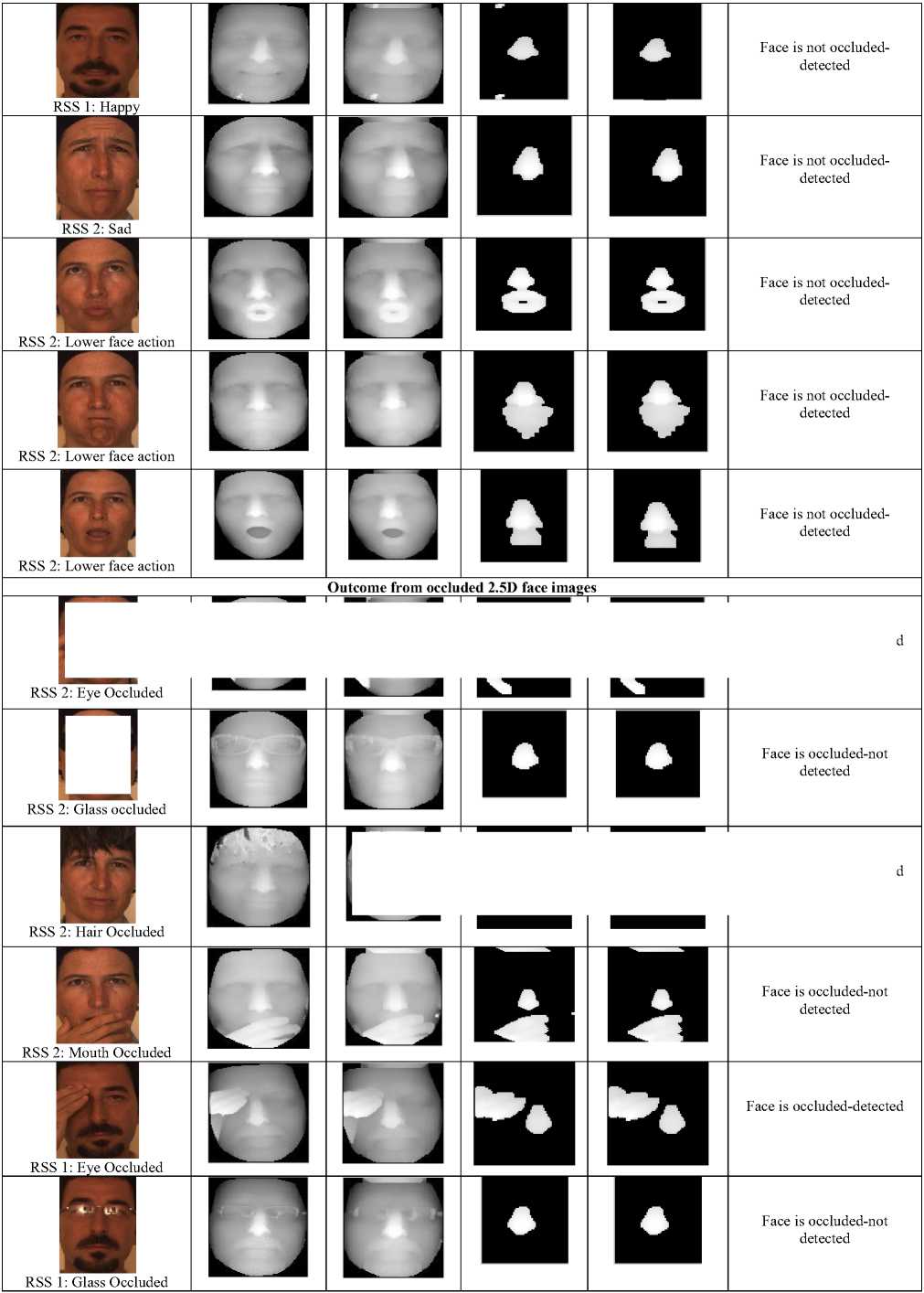

Table 3. Some Experimental Outcomes of the Threshold Based Technique

Columns: 2D visual image | 2.5D image from 3D | Pre-processed image | Isolated components | Normalized images | Remarks

RSS 1: Anger

Outcome from non-occluded 2.5D face images


Face is not occluded-detected

Face is occluded-detected

Face is occluded-detected

RSS 1: Mouth Occluded

Face is occluded-not detected

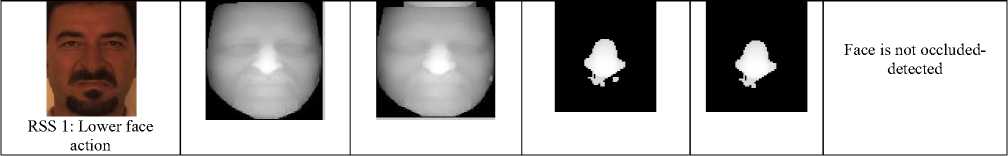

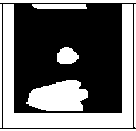

Table 4. Some Experimental Outcomes of the Block Based Approach

| 2D visual image | 2.5D image from 3D | Pre-processed image | Detected outward components | Remarks |
|---|---|---|---|---|
| Outcome from non-occluded 2.5D face images | | | | |
| RSS 1: Anger | | | | Face is not occluded-detected |
| RSS 1: Lower face action | | | | Face is not occluded-detected |
| RSS 1: Happy | | | | Face is not occluded-detected |
| RSS 2: Sad | | | | Face is not occluded-detected |
| RSS 2: Lower face action | | | | Face is not occluded-detected |
| RSS 2: Lower face action | | | | Face is not occluded-detected |
| RSS 2: Lower face action | | | | Face is not occluded-detected |
| Outcome from occluded 2.5D face images | | | | |
| RSS 2: Eye Occluded | | | | Face is occluded-detected |

Further occluded samples shown: RSS 2: Glass Occluded, RSS 2: Hair Occluded, RSS 2: Mouth Occluded, RSS 1: Mouth Occluded, RSS 1: Eye Occluded, RSS 1: Glass Occluded. Remarks listed for these samples: Face is occluded-detected (four samples), Face is occluded-not detected (two samples).

V. Conclusions And Future Scope

Face recognition considered in isolation is a comparatively simple challenge for biometric applications. For robust performance in unconstrained environments, however, many situations must be taken into account, including variations in pose, expression, illumination, and occlusion.

In this paper, the authors have proposed novel techniques for detecting occluded faces and then localizing the occluded section in the input range face images. This is a crucial step towards high-performance face recognition: not only frontal range face images but also occluded faces can then be considered for robust face recognition. The authors are now working on reconstructing the facial surface (i.e. recreating the depth values in the localized occluded sections) for automatic recognition.

Despite the advantages described earlier, the proposed algorithms are not capable of detecting faces occluded by spectacles.

Acknowledgment

References

- S. Ganguly, D. Bhattacharjee, and M. Nasipuri, "2.5D Face Images: Acquisition, Processing and Application," In ICC-2014 Computer Networks and Security, Publisher: Elsevier Science and Technology, Pages: 36-44, Proceedings of 1st International Conference on Communication and Computing (ICC-2014), ISBN: 978-93-5107-244-7, June 12th-14th, 2014.

- L. Spreeuwers, "Fast and Accurate 3D Face Recognition Using registration to an Intrinsic Coordinate System and Fusion of Multiple Region Classifiers," In Int J Comput Vis (2011) 93: 389–414, DOI 10.1007/s11263-011-0426-2.

- Bosphorus Database, URL: http://bosphorus.ee.boun.edu.tr/default.aspx

- R. C. Gonzalez and R. E. Woods, "Digital Image Processing," 3rd Edition, ISBN-13: 978-0131687288 ISBN-10: 013168728X

- A. Colombo, C. Cusano, and R. Schettini, "Recognizing Faces in 3D Images Even In Presence of Occlusions," 978-1-4244-2730-7/08, IEEE, 2008.

- N. Alyüz, B. Gökberk, L. Spreeuwers, R. Veldhuis, and L. Akarun, "Robust 3D Face Recognition in the Presence of Realistic Occlusions," 978-1-4673-0397-2/12, IEEE, pp. 111-118.

- Hassen Drira, Boulbaba Ben Amor, Anuj Srivastava, Mohamed Daoudi, and Rim Slama. 3D Face Recognition under Expressions, Occlusions and Pose Variations, In IEEE Transactions on Pattern Analysis and Machine Intelligence, 2010.

- Z. Zhang, S. Heng Ong, K. Foong, "Improved Spin Images for 3D Surface Matching Using Signed Angles," pp 537-540, 978-1-4673-2533-2/12/$26.00 ©2012 IEEE, ICIP 2012.

- S. Ganguly, D. Bhattacharjee, and M. Nasipuri, "3D Face Recognition from Range Images Based on Curvature Analysis," In ICTACT Journal on Image and Video Processing, February 2014, Volume: 04, Issue: 03, pages 748-753, ISSN Number (Print): 0976-9099, ISSN Number (Online): 0976-9102.

- A. Seal, S. Ganguly, D. Bhattacharjee, M. Nasipuri, D. K. Basu, "Minutiae Based Thermal Human Face Recognition using Label Connected Component Algorithm," Procedia Technology, In 2nd International Conference on Computer, Communication, Control and Information Technology (C3IT-2012), February 25 - 26, 2012.

- H. N. Bui, I. S. Na, S. H. Kim, "De-Noising Model for Weberface-Based and Max-Filter-Based Illumination Invariant Face Recognition," In Ubiquitous Information Technologies and Applications, Lecture Notes in Electrical Engineering Volume 280, 2014, pp 373-380.

- H. Zhang, W. Zuo, K. Wang, D. Zhang and Y. Chen. "Median Filtering-Based Quotient Image Model for Face Recognition with Varying Lighting Conditions," In First International Workshop on Education Technology and Computer Science, DOI 10.1109/ETCS.2009.485, 2009.

- T. Srinivas, P. S. Mohan, R. S. Shankar, Ch. S. Reddy, P. V. Naganjaneyulu, "Face Recognition Using PCA and Bit-Plane Slicing," In Proceedings of the Third International Conference on Trends in Information, Telecommunication and Computing, Lecture Notes in Electrical Engineering Volume 150, 2013, pp 515-523.

- N. Otsu, "A Threshold Selection Method from Gray-Level Histograms," In IEEE Transactions on Systems, Man, and Cybernetics, Vol. SMC-9, No. 1, January 1979.

- S. Ganguly, D. Bhattacharjee, and M. Nasipuri, "Range Face Image Registration using ERFI from 3D Images," In Proceedings of the 3rd International Conference on Frontiers of Intelligent Computing: Theory and Applications (FICTA) 2014, Series of 'Advances in Intelligent and Soft Computing; Volume 328', Publisher: Springer International Publishing, Pages: 323-333, Vol. 2, Print ISBN: 978-3-319-12011-9, Online ISBN: 978-3-319-12012-6, DOI: 10.1007/978-3-319-12012-6_36, November, 2014.

- P. S. Hiremath, M. Hiremath, "Depth and Intensity Gabor Features Based 3D Face Recognition Using Symbolic LDA and AdaBoost", International Journal of Image, Graphics and Signal Processing (IJIGSP) ISSN: 2074-9074(Print), ISSN: 2074-9082 (Online) DOI: 10.5815/ijigsp.