Enhancing navigation of autonomous mobile robots through permanent federated learning

Authors: Al-khafaji I.M.A., Alisawi W.Ch., Ibraheem M.Kh.I., Djuraev H.A., Panov A.V.

Section: Computer Science and Engineering

Published in issue 3, 2023.

Open access

Deep learning (DL) is crucial for advancing artificial intelligence in robotics: it enables autonomous robots to perceive and control their environment. Federated learning (FL) allows distributed robots to train models while maintaining privacy. This paper focuses on using FL for vision-based obstacle avoidance in mobile robot navigation. We evaluate FL's performance in both simulated and real-world environments. We compare multiple image classifiers trained with FL against cloud-based centralized learning on existing data. We also implement a continuous learning system on mobile robots with autonomous data generation. Training models in both simulation and reality improves accuracy and enables continuous model updates.

Federated learning, robot navigation, continuous learning, obstacle avoidance, EfficientNet, lifelong learning, privacy

Short URL: https://sciup.org/148327118

IDR: 148327118 | UDC: 004.89 | DOI: 10.18137/RNU.V9187.23.03.P.119


Al-Khafaji Israa M. Abdalameer, postgraduate student, MIREA – Russian Technological University, Moscow; assistant lecturer, Mustansiriyah University, Baghdad. Research interests: information technology, deep learning, machine learning, and software development. Author of two published scientific papers.

[…]ing the gap between human and robotic learning. Multiple robots with diverse tasks are distributed across different locations, including simulators, within the FL-based lifelong learning system. The performance of EfficientNet and ResNet is evaluated using centralized and federated learning methods. The model's accuracy is assessed using various datasets and environmental variables. New data is acquired by a mobile robot autonomously navigating through a novel environment. DL enables robots to build models based on real-world environments, complementing their environment detection capabilities. To address challenges in multiple-robot systems, a collective model for sharing environmental knowledge is proposed. The approach combines FL and Sim-to-Real techniques, integrating a real-world simulator and lifelong learning.

The research aims to achieve unified and continuous learning by analyzing the effectiveness of neural networks in obstacle avoidance, assessing Sim-to-Real transfer, and integrating lidar-based navigation for data collection. The paper reviews related research, describes the methodology, presents experimental results, and concludes with future research directions. The study contributes to robotics by establishing unified and continuous learning methodologies, enabling vision-based obstacle avoidance through FL and sim-to-real transfer, and enhancing the capabilities of autonomous robots in complex environments [1–3].

Related Work

Enabling collaboration and cooperation among diverse robots and deep learning (DL) systems is crucial for multi-robot systems. Previous research has focused on learning from real-world experiences, transferring simulations to reality, and continuous learning algorithms. Centralized learning, which relies on cloud-based infrastructure [4], and federated learning, which focuses on privacy and efficient use of network resources, are important approaches to collaborative learning. Lifelong and continuous learning in robotics have primarily been studied in simulated environments. Real-world applications face challenges such as limited knowledge, navigation difficulties, and obstacle collisions. Lifelong Learning Navigation offers a promising approach for robots to continuously learn and improve their navigation performance. Vision-based deep learning for obstacle recognition and avoidance using cameras is an inexpensive alternative to lidar data [5]. Sharing and cooperation enable robots to learn about different environments. Deep reinforcement learning (DRL) models and sim-to-real transfer techniques contribute to the development of autonomous behaviors and to vision-based obstacle avoidance in challenging environments [6].

Methodology

This section outlines the robot system components, the use of two deep neural networks for obstacle avoidance, and the navigation settings for continuous learning.

A. Data Collection for Federated Learning: Data collection involved Nvidia Isaac Sim, environment data, and various data sources. Simulated environments included hospital, warehouse, and office settings, while real-world rooms were used for validation. Training data from different sources were denoted as Si for simulated environments and HS or HR for Husky robot data.

B. Deep Neural Networks for Obstacle Avoidance: Two distinct deep neural networks were employed for obstacle avoidance. Their architectures and parameters are described in [7]. These networks were trained on the collected datasets to enable effective obstacle avoidance based on visual inputs.

C. Navigation Settings for Continuous Learning: Navigation algorithms and strategies were designed to enable continuous learning. These settings ensured smooth and efficient robot movement while maintaining safe obstacle avoidance. By combining data collection, deep neural networks, and navigation settings, a robust framework was established for training and improving obstacle avoidance in both simulated and real-world environments.

D. Vision-Based Obstacle Avoidance Models: Vision-based obstacle avoidance was trained using two deep learning (DL) models: EfficientNet and ResNet50 [8–10]. These models were convolutional neural networks (CNNs) used for binary classification of visual obstacles into movable and immovable classes. The choice of these architectures was based on their proven success in various domains and their suitability for the task given the low complexity and size of the data. The purpose of these models was to assess the performance of continuous learning and its ability to transfer knowledge from simulated to real environments. This approach focused on distinguishing between different types of visual obstacles in various settings, rather than on object detection methods. The primary objective of the study was to develop a continuous learning method for obstacle avoidance.

E. Lifelong Learning with Lidar-Based Navigation: To implement lifelong learning for obstacle avoidance based on federated learning, a lidar sensor was integrated into the Husky Navigation System. This FL-based system enabled the robots to continuously learn and navigate while avoiding visual obstacles. The lidar sensor detected areas with obstacles and areas without visual data, facilitating the navigation process.

The robots collected a sufficient amount of data and independently trained their own models, ensuring privacy and autonomy. These trained models were then sent to a server for aggregation, resulting in a comprehensive model representing the collective knowledge and experience of the robots. This unified model could be deployed on different robot types to evaluate its effectiveness in various scenarios.
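The server-side aggregation step described above can be sketched as follows. The text does not name the aggregation rule, so this example assumes a FedAvg-style weighted average in which each robot's parameters are weighted by the number of images it trained on. The parameter names (`fc.weight`, `fc.bias`), the flat-list representation of weights, and the sample counts are hypothetical and chosen only for illustration.

```python
from typing import Dict, List

Weights = Dict[str, List[float]]


def federated_average(local_models: List[Weights], sample_counts: List[int]) -> Weights:
    """Weighted average of per-robot parameters (FedAvg-style, assumed here)."""
    if not local_models or len(local_models) != len(sample_counts):
        raise ValueError("need exactly one sample count per local model")
    total = sum(sample_counts)
    if total <= 0:
        raise ValueError("total sample count must be positive")

    merged: Weights = {}
    for name in local_models[0]:
        length = len(local_models[0][name])
        # Each coordinate is the sample-weighted mean across robots.
        merged[name] = [
            sum(model[name][i] * n for model, n in zip(local_models, sample_counts)) / total
            for i in range(length)
        ]
    return merged


# Hypothetical local models from two robots; only weights leave the robot, never images.
robot_a = {"fc.weight": [0.0, 2.0], "fc.bias": [1.0]}
robot_b = {"fc.weight": [4.0, 6.0], "fc.bias": [3.0]}

global_model = federated_average([robot_a, robot_b], sample_counts=[100, 300])
print(global_model)  # {'fc.weight': [3.0, 5.0], 'fc.bias': [2.5]}
```

Because robot B contributes three times as many samples, the merged parameters sit three quarters of the way from robot A's values toward robot B's.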

For prototyping and experiments, the Clearpath Husky was used in both simulated and real-world environments. Equipped with an Ouster OS0-128 lidar sensor, the custom Husky robotic platform allowed for autonomous mobility. When the robot approached an area where the distance was below a specified threshold, a dedicated camera captured images. These images were classified to determine the presence of obstacles in the robot’s path. Sufficient images were collected to train local models based on the environmental data. FL methods were then employed to integrate these models into a unified model used for real-world obstacle avoidance.
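The distance-triggered image capture described above might look roughly like the sketch below. The 1.0 m threshold, the list-of-ranges scan format, and the `capture` callback are assumptions made for illustration; the text does not state the threshold value or the sensor interface used on the Husky.

```python
import math
from typing import Callable, List, Sequence


def should_capture(ranges_m: Sequence[float], threshold_m: float) -> bool:
    """True when any valid lidar return is closer than the threshold."""
    # Discard non-returns (inf/NaN) and zero readings, which carry no range information.
    valid = [r for r in ranges_m if math.isfinite(r) and r > 0.0]
    return bool(valid) and min(valid) < threshold_m


def collect_images(
    scans: Sequence[Sequence[float]],
    capture: Callable[[], str],
    threshold_m: float,
) -> List[str]:
    """Call the camera once for every scan that reports a nearby obstacle."""
    images = []
    for scan in scans:
        if should_capture(scan, threshold_m):
            images.append(capture())
    return images


def make_fake_camera() -> Callable[[], str]:
    """Stand-in for the real camera: returns sequential frame names."""
    count = 0

    def capture() -> str:
        nonlocal count
        name = f"frame_{count}"
        count += 1
        return name

    return capture


# Four hypothetical scans; only the second and fourth contain a return under 1.0 m.
scans = [[5.0, 4.2], [0.8, 3.0], [float("inf"), 0.0], [0.5]]
frames = collect_images(scans, make_fake_camera(), threshold_m=1.0)
print(frames)  # ['frame_0', 'frame_1']
```

The captured frames would then be labeled and added to the robot's local training set before the next round of local training.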

Simulator data was used in the continuous learning process because continuous real-world data is difficult to obtain. In the next section, the performance of the FL model is evaluated using both simulated and real-world data distributions.

Experimental Results

This section presents the experimental results obtained using environmental data from both simulators and the real world. We explore different knowledge-sharing approaches for obstacle avoidance using the EfficientNet and ResNet50 models and assess the transferability of knowledge from simulation to reality. We also show that federated learning (FL) simplifies lifelong learning, as illustrated by the navigation capabilities of the Husky robot in both simulation and real-world scenarios.


Centralized Training vs. Federated Learning

We compare federated learning against traditional centralized training on pooled data. Our analysis evaluates performance using both real-world and simulated data. Building upon previous work [7], we extend our study to include AlexNet and ResNet50 models to observe performance variations between centralized and FL-based training using different deep learning models.

To conduct the experiments, we use datasets collected from simulated environments, including a university building (St0), office (St1), and warehouse (St2). We train the models individually on each dataset and on their combinations (St0,1, St0,2, St1,2, St0,1,2) and validate them on dataset Sv_i, where i ∈ {0,1,2}. This approach simulates a collaborative learning paradigm in which diverse subsets of robots learn without directly sharing their underlying data. Only the models are shared, and the global model is updated periodically.
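The training configurations listed above are all non-empty subsets of the three environments, each validated on every validation set. A small sketch of how that experiment grid could be enumerated is given below; the helper names `training_subsets` and `label` are hypothetical, and the labels simply reproduce the St/Sv notation used in the text.

```python
from itertools import combinations
from typing import List, Tuple


def training_subsets(n_envs: int) -> List[Tuple[int, ...]]:
    """All non-empty subsets of environment indices, smallest subsets first."""
    return [
        subset
        for size in range(1, n_envs + 1)
        for subset in combinations(range(n_envs), size)
    ]


def label(prefix: str, subset: Tuple[int, ...]) -> str:
    """Format a subset in the paper's notation, e.g. (0, 2) -> 'St0,2'."""
    return prefix + ",".join(str(i) for i in subset)


# Seven training configurations for the three simulated environments.
sim_plan = [label("St", s) for s in training_subsets(3)]
print(sim_plan)  # ['St0', 'St1', 'St2', 'St0,1', 'St0,2', 'St1,2', 'St0,1,2']

# Every configuration is validated on each of Sv_0, Sv_1, Sv_2.
runs = [(train, f"Sv_{i}") for train in sim_plan for i in range(3)]
print(len(runs))  # 21
```

The same enumeration with the prefixes `Rt` and `Rv_` would cover the real-world datasets.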

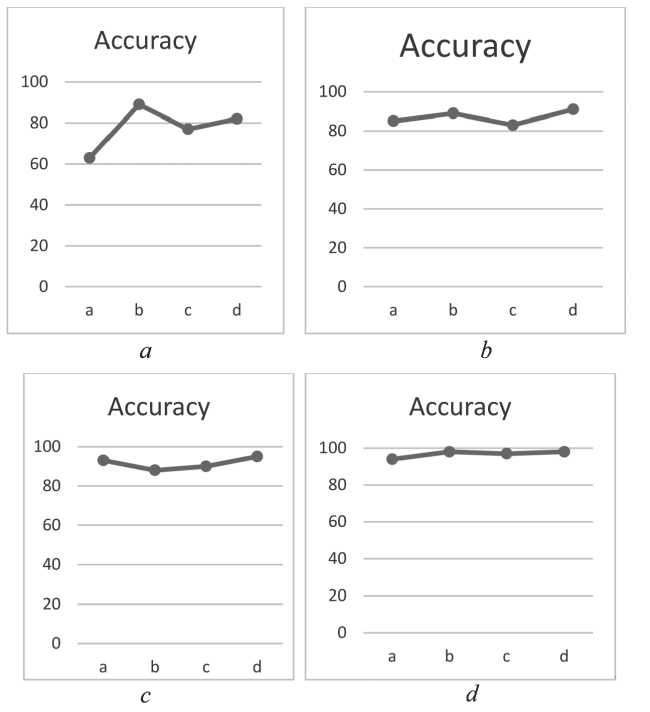

For real-world data, we repeat the process using datasets collected from rooms, offices, and labs (Rt0, Rt1, Rt2) and their combinations (Rt0,1, Rt0,2, Rt1,2, Rt0,1,2). The corresponding validation data is denoted as Rv_i, where i ∈ {0,1,2}. The accuracy of the ResNet50 and EfficientNet models for centralized and FL-based learning is depicted in Figure 1 and Figure 2, respectively.

Figure 1 (a–d). Accuracy of models for ResNet50

Figure 2 (a–d). Accuracy of models for EfficientNet

The results show that the FL-based approach achieves competitive accuracy in both simulated and real-world scenarios compared to traditional centralized training methods for vision-based obstacle avoidance tasks.

In addition to accuracy, we evaluate the area under the ROC curve (AUC) for each training strategy. The AUC values for the EfficientNet and ResNet50 models are presented in the accompanying Table. The AUC provides insight into the models' performance and reliability. In robotic navigation, false positives significantly reduce navigation speed, whereas occasional false negatives rarely lead to collisions, because the robot observes the same obstacle from different angles as it approaches. False negatives therefore do not accumulate over time and are corrected before a collision occurs.
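For readers unfamiliar with the metric, the AUC can be computed directly from classifier scores as the probability that a randomly chosen positive example is scored above a randomly chosen negative one, with ties counted as one half. The sketch below uses this pairwise definition; the labels and scores are made-up illustrative values, not data from the experiments.

```python
from typing import Sequence


def roc_auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """AUC as the fraction of (positive, negative) pairs ranked correctly."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("AUC needs at least one positive and one negative example")

    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0   # positive ranked above negative
            elif p == n:
                wins += 0.5   # ties count as half a correct ranking
    return wins / (len(positives) * len(negatives))


# Hypothetical obstacle scores: label 1 = obstacle present, 0 = free path.
labels = [1, 1, 1, 0, 0, 0]
scores = [0.9, 0.8, 0.4, 0.7, 0.3, 0.2]

auc = roc_auc(labels, scores)
print(round(auc, 3))  # 0.889
```

Here eight of the nine positive/negative pairs are ordered correctly; the one misordered pair (0.4 versus 0.7) corresponds to an obstacle that would be missed at any threshold that also rejects the 0.7 false alarm.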

Table

Area under ROC curve (AUC) values

| a | 63 | 93 | 85 | 94 |
| b | 89 | 88 | 89 | 98 |
| c | 77 | 90 | 83 | 97 |
| d | 82 | 95 | 91 | 98 |

| a | 81 | 93 | 86 | 99 |
| b | 84 | 92 | 88 | 98 |
| c | 88 | 90 | 85 | 98 |
| d | 86 | 95 | 90 | 99 |


By presenting these experimental results, we demonstrate the effectiveness of different learning approaches and highlight the potential of federated learning in lifelong learning scenarios for robot navigation tasks.

Experimental Results

This section presents the experimental results obtained from simulated and real-world data for the EfficientNet and ResNet50 models. We compare the performance of federated learning methods to the centralized learning approach, where data is aggregated into a single training set.

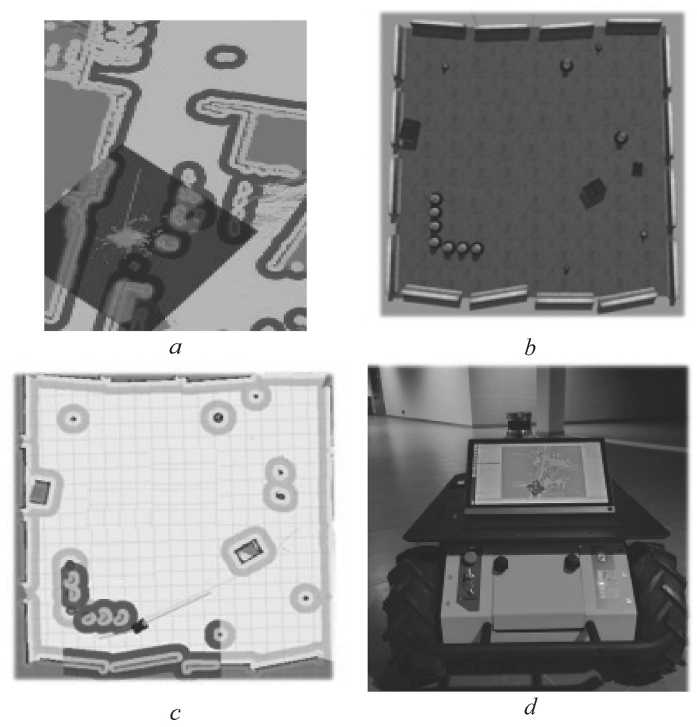

For the simulator, we followed the methodology outlined in [7] and evaluated the obstacle avoidance capabilities of the models using a real-world dataset. Figure 3 shows the performance of the EfficientNet and ResNet50 models in a vision-based scenario. The results indicate that the FL approach outperforms the centrally trained models in terms of sim-to-real performance and clustering. The EfficientNet model proves better suited to obstacle avoidance on this dataset [9; 10].

Figure 3 (a–d). Husky Navigation

For the EfficientNet model, incorporating models from simulation or the real world increased vision-based obstacle avoidance accuracy. On the other hand, the ResNet50 model performed better when local models from the simulator were fused. Combining models from both sources improved accuracy, demonstrating the benefits of collaborative learning.

Discussion and Conclusion

This study introduces an FL-based perpetual learning approach for vision-based obstacle avoidance in various mobile robots operating in simulated and real-world environments. By using two different deep neural networks, we enhance the generalizability of the results. FL achieves competitive accuracy compared to centralized learning in both simulated and real-world scenarios, while promoting connectivity and data privacy for collaboration.

The FL approach also demonstrates effective and stable transfer of obstacle knowledge from simulation to reality. Aggregating models from local agents in simulated or real-world environments improves obstacle avoidance performance.

Future work involves extending the FL-based lifelong learning system to other robotic navigation tasks to enhance sim-to-real capabilities. Dynamic adaptation of simulated environments based on real-world robot experiences, such as introducing new obstacles, is also considered [11].

In conclusion, this study highlights the effectiveness of FL in perpetual learning for vision-based obstacle avoidance in diverse robotic systems. FL has the potential to enhance performance, facilitate collaboration, and transfer knowledge between simulated and real-world environments [12; 13].

References

1. Mateus Mendes, A. P. Coimbra, and M. M. Crisóstomo (2018) Assis-cicerone robot with visual obstacle avoidance using a stack of odometric data. IAENG Int. J. Comput. Sci., 45:219–227.

2. Harry A. Pierson and Michael S. Gashler (2017) Deep learning in robotics: a review of recent research. Advanced Robotics, 31(16):821–835.

3. Wenshuai Zhao, Jorge Peña Queralta, and Tomi Westerlund (2020) Sim-to-real transfer in deep reinforcement learning for robotics: a survey. In IEEE Symposium Series on Computational Intelligence. IEEE, 2020.

4. Boyi Liu, Lujia Wang, Xinquan Chen, Lexiong Huang, Dong Han, and Cheng-Zhong Xu (2021) Peer-assisted robotic learning: a data-driven collaborative learning approach for cloud robotic systems. In 2021 IEEE International Conference on Robotics and Automation (ICRA), pp. 4062–4070. IEEE, 2021.

5. Ying Li, Lingfei Ma, Zilong Zhong, Fei Liu, Michael A. Chapman, Dongpu Cao, and Jonathan Li (2020) Deep learning for lidar point clouds in autonomous driving: a review. IEEE Transactions on Neural Networks and Learning Systems, 32(8):3412–3432.

6. Lingping Gao, Jianchuan Ding, Wenxi Liu, Haiyin Piao, Yuxin Wang, Xin Yang, and Baocai Yin (2021) A vision-based irregular obstacle avoidance framework via deep reinforcement learning. In IEEE/RSJ International Conference on Intelligent Robots and Systems, 2021.

7. Xianjia Yu, Jorge Peña Queralta, and Tomi Westerlund (2022) Federated learning for vision-based obstacle avoidance in the internet of robotic things. arXiv preprint arXiv:2204.06949.

8. Alex Krizhevsky, Ilya Sutskever, and Geoffrey E. Hinton (2012) ImageNet classification with deep convolutional neural networks. Advances in Neural Information Processing Systems, 25:1097–1105.

9. Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun (2016) Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2016.

10. Abhik Singla, Sindhu Padakandla, and Shalabh Bhatnagar (2021) Memory-based deep reinforcement learning for obstacle avoidance in UAV with limited environment knowledge. IEEE Transactions on Intelligent Transportation Systems, 22(1):107–118.

11. Bo Liu, Xuesu Xiao, and Peter Stone (2021) A lifelong learning approach to mobile robot navigation. IEEE Robotics and Automation Letters, 6(2):1090–1096.

12. Ahmed Imteaj, Urmish Thakker, Shiqiang Wang, Jian Li, and M. Hadi Amini (2021) A survey on federated learning for resource-constrained IoT devices. IEEE Internet of Things Journal, 9(1):1–24.

13. S. Hamidreza Kasaei, Jorik Melsen, Floris van Beers, Christiaan Steenkist, and Klemen Voncina (2021) The state of lifelong learning in service robots. Journal of Intelligent & Robotic Systems, 103(1):1–31.