Face recognition based texture analysis methods

Author: Marwa Y. Mohammed

Journal: International Journal of Image, Graphics and Signal Processing (IJIGSP)

Issue: Vol. 11, No. 7, 2019.


A unimodal biometric system based on Local Binary Pattern (LBP) and Gray Level Co-occurrence Matrix (GLCM) is developed to recognize the faces of 40 subjects. The matching process is implemented using three classifiers: Euclidean distance, Manhattan distance, and Cosine distance. The maximum accuracy (100%) is achieved when GLCM and LBP are applied with Euclidean distance. The accuracy of these two methods exceeds the recognition rates of Principal Component Analysis (PCA) and Fourier Descriptors (FDs). The ORL database is used to construct the proposed biometric system.

Keywords: Face recognition, LBP, GLCM, Euclidean distance, Cosine distance, Manhattan distance

Short URL: https://sciup.org/15016063

IDR: 15016063 | DOI: 10.5815/ijigsp.2019.07.01

Full text of the article: Face recognition based texture analysis methods

Published Online July 2019 in MECS DOI: 10.5815/ijigsp.2019.07.01

A biometric system is an efficient technique that uses human biological traits for identification. It overcomes the limitations of traditional systems because human traits cannot be stolen, forgotten, or forged [1]. Human traits can be divided into two types: physiological and behavioral. The face, iris, hand geometry, fingerprint, etc. are physiological traits, while behavioral traits rely on human actions such as gait, voice, signature, and keystroke [2,3].

Face recognition can operate in two modes: verification and identification. Verification is a one-to-one matching operation, while identification is a one-to-many matching operation in which the system attempts to answer the question “Who is this person?” [4,5].
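The difference between the two modes can be sketched as follows. This is a minimal illustration, assuming each face is already represented by a numeric feature template and compared with Euclidean distance; the functions `verify` and `identify`, the gallery layout, and the threshold value are hypothetical and not taken from the paper.

```python
import numpy as np

def euclidean(a, b):
    """Euclidean distance between two feature templates."""
    return float(np.linalg.norm(np.asarray(a, float) - np.asarray(b, float)))

def verify(probe, claimed_template, threshold):
    """Verification (1:1): accept the identity claim if the distance is within the threshold."""
    return euclidean(probe, claimed_template) <= threshold

def identify(probe, gallery):
    """Identification (1:N): return the label of the closest enrolled template."""
    labels = list(gallery)
    dists = [euclidean(probe, gallery[label]) for label in labels]
    return labels[int(np.argmin(dists))]
```

For example, with a gallery `{"s1": [0, 0], "s2": [10, 10]}` and probe `[1, 1]`, `identify` answers "s1", while `verify` against "s1" with a threshold of 2.0 accepts the claim and against "s2" rejects it.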

This paper presents a new face recognition approach using two texture description methods: Local Binary Pattern (LBP) and Gray Level Co-occurrence Matrix (GLCM). For comparison, two other feature extraction methods are also considered: Principal Component Analysis (PCA) and Fourier Descriptors (FDs). In the classification step, three algorithms are applied: Euclidean distance, Manhattan distance, and Cosine distance. The paper is organized as follows: Section I is the introduction, and Section II reviews the most closely related works. Section III explains the construction of the proposed system, and Section IV presents the experimental results of the feature extraction methods. Finally, Section V concludes by identifying the best-performing method.

-

II. Related Works

In 2013, Agarwal and Prakash proposed a new face recognition technique that uses Improved Principal Component Analysis (IPCA) to extract facial features. The Wavelet Transform (WT) with a Haar filter was applied to decompose the face image into multiple levels, and IPCA was then applied to the sub-bands to extract the features. IPCA is based on eigenvalues and eigenvectors. A Back Propagation Neural Network (BPNN) was used in the classification phase. The authors state that combining the two algorithms in one approach improves system security [6]. Bakshi and Singhal used PCA to extract face features. The Discrete Cosine Transform (DCT) is first applied to compress the images and reduce computational time, and PCA is then used to extract the features and reduce dimensionality. In the classification phase, a Self-Organizing Map (SOM) neural network was used. The database was collected by the authors and contains 4 persons with 4 images each, showing different facial expressions. The method achieved a 97.5% accuracy rate [7].

In 2016, Dan Zou et al. proposed a Two-Dimensional Linear Discriminant Analysis (2DLDA) method for extracting face image features. A 2D wavelet transform (2DWT) was applied to decompose the face image into four bands, and the low-frequency components were used in the next step. 2DLDA was then performed to extract the most important features from these components. Particle Swarm Optimization (PSO) was used to select the parameters of the Support Vector Machine (SVM) used for classification. This method achieved a 98% accuracy rate on the ORL database [8].

In 2017, Kavitha et al. applied an SVM algorithm to detect faces and recognize their expressions. First, the face images were resized to 64 × 64 pixels. A fuzzy Univalue Segment Assimilating Nucleus (USAN) area approach was used for feature extraction, and the SVM was used to detect the face region in 125 images. The accuracy rate was 90%, with an error rate of around 16% [9].

In 2019, Muthana H. Hamd and Marwa Y. Mohammed used the LBP, GLCM, FDs, and PCA methods with a fusion technique. Fusion is performed at the feature level between the face and iris traits, after the feature template of each trait has been generated separately. Euclidean distance is used in the classification stage, and three databases are used: ORL, CASIA-V1, and MMU-1. The technique achieved a 100% accuracy rate with the LBP and GLCM methods, while the PCA and FDs methods achieved 97.5% [10].

This work evaluates twelve method-classifier combinations, pairing each of the four feature extraction methods with each of the three classifiers.

-

III. Proposed System

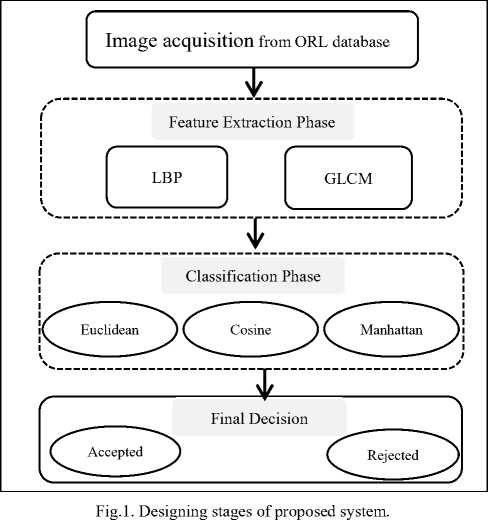

The proposed work is implemented in three major steps: image acquisition, feature extraction, and classification for making the final decision. Fig.1 summarizes the system design steps as a flowchart.

-

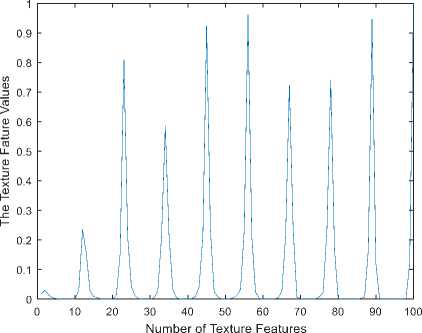

A. Feature extraction based on LBP

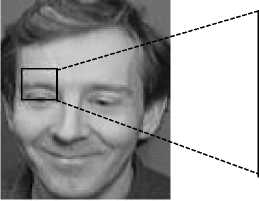

This method compares each of the eight neighbors with the center pixel, whose value serves as the threshold for its neighbors. The comparison result is 1 if the neighbor value is greater than or equal to the threshold and 0 otherwise, as given by (1) and (2). The final LBP code is created by concatenating the comparison results of the eight neighbors using (3). Fig.2 shows the basic operation of the LBP method [11,12].

$$s(g_p - g_c) = 1, \quad \text{if } g_p \ge g_c \qquad (1)$$

$$s(g_p - g_c) = 0, \quad \text{if } g_p < g_c \qquad (2)$$

$$LBP_{P,R}(x_c, y_c) = \sum_{p=0}^{P-1} s(g_p - g_c)\, 2^{p} \qquad (3)$$

where $g_p$ is the gray value of the p-th neighbor and $g_c$ is the gray value of the center pixel. P is the number of neighbors, p is the index of the current neighbor, and $(x_c, y_c)$ are the coordinates of the center pixel.
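Equations (1) to (3) can be sketched for the basic 3 × 3 case as follows. This is an illustrative implementation, not the author's code. It assumes the neighbors are read clockwise starting at the top-left, with the first neighbor as the most significant bit (i.e. (3) with p numbered so that the top-left neighbor carries weight 2^7); this ordering reproduces the code 218 shown in Fig.2. The helper names and the use of a 256-bin histogram of codes as the feature vector are assumptions.

```python
import numpy as np

# Clockwise from the top-left neighbor (assumed ordering; it reproduces Fig.2).
NEIGHBORS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]

def lbp_code(patch):
    """LBP code of the center pixel of a 3x3 patch, following eqs (1) to (3)."""
    patch = np.asarray(patch)
    g_c = patch[1, 1]
    code = 0
    for dy, dx in NEIGHBORS:
        s = 1 if patch[1 + dy, 1 + dx] >= g_c else 0  # threshold at the center value
        code = (code << 1) | s                          # first neighbor ends up as the MSB
    return code

def lbp_image(img):
    """Apply lbp_code to every interior pixel (border pixels are skipped)."""
    img = np.asarray(img)
    h, w = img.shape
    out = np.zeros((h - 2, w - 2), dtype=np.uint8)
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            out[y - 1, x - 1] = lbp_code(img[y - 1:y + 2, x - 1:x + 2])
    return out

def lbp_histogram(img):
    """256-bin histogram of the LBP codes, used here as a feature vector (assumption)."""
    return np.bincount(lbp_image(img).ravel(), minlength=256)
```

Applied to the neighborhood of Fig.2, `lbp_code([[10, 12, 4], [2, 6, 8], [9, 1, 6]])` gives 218 (binary 11011010).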

The basic LBP can be extended to more than eight neighbors by placing P sampling points on a circle of radius R around the center pixel [12].
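A sketch of the circular neighborhood: the p-th sample lies at angle 2πp/P on a circle of radius R, and samples that fall between pixel centers are estimated by bilinear interpolation. Bilinear interpolation is the usual choice in the LBP literature but is an assumption here, since the paper does not say how off-grid samples are obtained. Bit p gets weight 2^p as in (3); the function names are hypothetical.

```python
import numpy as np

def bilinear(img, y, x):
    """Bilinearly interpolate img at the real-valued position (row y, column x)."""
    h, w = img.shape
    y0, x0 = int(np.floor(y)), int(np.floor(x))
    y1, x1 = min(y0 + 1, h - 1), min(x0 + 1, w - 1)  # clamp; weight is 0 when on-grid
    dy, dx = y - y0, x - x0
    top = (1 - dx) * img[y0, x0] + dx * img[y0, x1]
    bottom = (1 - dx) * img[y1, x0] + dx * img[y1, x1]
    return (1 - dy) * top + dy * bottom

def circular_lbp_code(img, yc, xc, P=8, R=1.0):
    """LBP_{P,R} code of pixel (yc, xc), eq (3), with P samples on a circle of radius R."""
    img = np.asarray(img, dtype=float)
    g_c = img[yc, xc]
    code = 0
    for p in range(P):
        angle = 2 * np.pi * p / P
        # Round away floating-point noise so on-grid samples land exactly on pixels.
        x = round(xc + R * np.cos(angle), 9)
        y = round(yc - R * np.sin(angle), 9)  # rows grow downward, so subtract
        g_p = bilinear(img, y, x)
        code += int(g_p >= g_c) << p          # s(g_p - g_c) * 2^p
    return code
```

With P = 4 and R = 1 the samples fall exactly on the right, top, left, and bottom neighbors, so no interpolation is needed; larger P or R produce genuinely off-grid samples.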

A uniform-pattern mapping is applied in this work. It is based on the number of bitwise transitions between 0 and 1 when the LBP code is read circularly: a code with no transitions, or with exactly two transitions (from 0 to 1 and from 1 to 0), is called a uniform pattern.

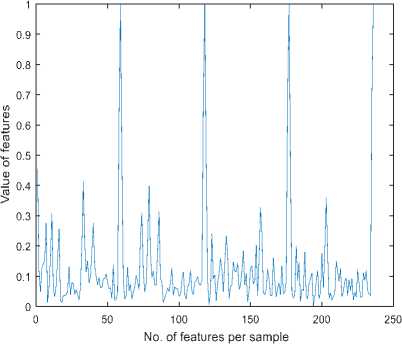

This mapping keeps the most important texture primitives, such as spots, edges, and corners. It also reduces the length of the feature vector from $2^P$ to $P(P-1)+3$, which saves memory [12]; for P = 8 this is 59 bins instead of 256. Fig.3 shows the result of applying the LBP method to a face image.
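The uniform mapping can be sketched by counting circular transitions. This is an illustration under stated assumptions: every uniform code gets its own bin (labels assigned in increasing code order, an arbitrary choice) and all non-uniform codes share one final bin, which yields the P(P−1)+3 bins quoted above. The function names are hypothetical.

```python
import numpy as np

def transitions(code, P=8):
    """Number of 0/1 changes when the P-bit code is read as a circle."""
    bits = [(code >> i) & 1 for i in range(P)]
    return sum(bits[i] != bits[(i + 1) % P] for i in range(P))

def uniform_mapping(P=8):
    """Map each of the 2**P codes to a bin; non-uniform codes share the last bin."""
    mapping = np.empty(2 ** P, dtype=np.int64)
    label = 0
    for code in range(2 ** P):
        if transitions(code, P) <= 2:      # uniform pattern
            mapping[code] = label
            label += 1
    nonuniform_bin = label
    for code in range(2 ** P):
        if transitions(code, P) > 2:
            mapping[code] = nonuniform_bin
    return mapping, nonuniform_bin + 1

def uniform_histogram(codes, P=8):
    """Histogram of LBP codes after the uniform mapping."""
    mapping, n_bins = uniform_mapping(P)
    return np.bincount(mapping[np.asarray(codes).ravel()], minlength=n_bins)
```

For P = 8 there are 58 uniform codes plus one shared bin, giving 59 = 8 × 7 + 3. The code 218 from Fig.2 (11011010) has six transitions, so it falls into the shared non-uniform bin.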

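| Row | Input 3 × 3 neighborhood | After thresholding at the center value 6 |
|---|---|---|
| Top | 10, 12, 4 | 1, 1, 0 |
| Middle | 2, 6 (center), 8 | 0, (center), 1 |
| Bottom | 9, 1, 6 | 1, 0, 1 |

Binary (bits read clockwise starting from the top-left neighbor): 11011010, Decimal: 218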

Fig.2. The basic LBP operation.

Fig.3. The representation of face features using LBP

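• Euclidean distance

$$D(X,Y) = \sqrt{\sum_{i=1}^{n} (X_i - Y_i)^2} \qquad (4)$$

• Manhattan distance

$$D(X,Y) = \sum_{i=1}^{n} |X_i - Y_i| \qquad (5)$$

• Cosine distance

$$D(X,Y) = 1 - \frac{\sum_{i=1}^{n} X_i Y_i}{\sqrt{\sum_{i=1}^{n} X_i^2}\,\sqrt{\sum_{i=1}^{n} Y_i^2}} \qquad (6)$$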
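Equations (4) to (6) translate directly into NumPy. A minimal sketch, assuming each template is a one-dimensional feature vector of length n; the function names are illustrative.

```python
import numpy as np

def euclidean_distance(x, y):
    """Eq (4): square root of the summed squared differences."""
    x, y = np.asarray(x, float), np.asarray(y, float)
    return float(np.sqrt(np.sum((x - y) ** 2)))

def manhattan_distance(x, y):
    """Eq (5): sum of absolute differences."""
    x, y = np.asarray(x, float), np.asarray(y, float)
    return float(np.sum(np.abs(x - y)))

def cosine_distance(x, y):
    """Eq (6): one minus the cosine of the angle between the two vectors."""
    x, y = np.asarray(x, float), np.asarray(y, float)
    return float(1.0 - np.sum(x * y) / (np.sqrt(np.sum(x ** 2)) * np.sqrt(np.sum(y ** 2))))
```

For X = (1, 2, 3) and Y = (4, 6, 3), the Euclidean distance is 5.0 and the Manhattan distance is 7.0. The Cosine distance depends only on direction, so parallel vectors such as (1, 2) and (2, 4) are at distance 0 (up to rounding) and orthogonal vectors at distance 1.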

-

B. Feature extraction based on GLCM

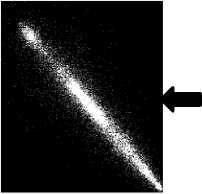

The GLCM method is based on counting pixel pairs: for every pair of gray levels, it counts how many times a pixel with the first value occurs at a specific offset (distance and direction) from a pixel with the second value across the whole image, and records the counts in a matrix. The result is a two-dimensional matrix that describes the texture; its number of rows and columns equals the number of gray levels [13]. The concept of the GLCM method is illustrated by the simple example in Fig.4, and the facial texture features extracted with the GLCM method are shown in Fig.5.

The direction angle determines the spatial relationship between the pixel pairs: horizontal (0°), vertical (90°), and diagonal (45° and 135°). From the GLCM, four features can be computed: contrast, homogeneity, correlation, and energy [13,14,15].
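A sketch of the GLCM and the four features, written with plain NumPy. It is not the author's code; the offset convention for each angle, the normalization of the matrix to probabilities, and the feature formulas (standard Haralick-style definitions, with energy taken as the angular second moment, whereas some texts and libraries report its square root) are assumptions. Reversing the offset direction gives the same symmetric matrix.

```python
import numpy as np

# (row offset, column offset) for distance 1; rows grow downward (assumed convention).
OFFSETS = {0: (0, 1), 45: (-1, 1), 90: (-1, 0), 135: (-1, -1)}

def glcm(img, levels, angle=0, distance=1, symmetric=True):
    """Count co-occurring gray-level pairs (i, j) at the given angle and distance."""
    img = np.asarray(img)
    dy, dx = (distance * o for o in OFFSETS[angle])
    h, w = img.shape
    m = np.zeros((levels, levels), dtype=np.int64)
    for y in range(h):
        for x in range(w):
            y2, x2 = y + dy, x + dx
            if 0 <= y2 < h and 0 <= x2 < w:
                m[img[y, x], img[y2, x2]] += 1
    if symmetric:
        m = m + m.T  # count each pair in both orders
    return m

def glcm_features(m):
    """Contrast, homogeneity, correlation, and energy of a co-occurrence matrix."""
    p = m / m.sum()                      # normalize counts to probabilities
    i, j = np.indices(p.shape)
    mu_i, mu_j = np.sum(i * p), np.sum(j * p)
    sd_i = np.sqrt(np.sum(p * (i - mu_i) ** 2))
    sd_j = np.sqrt(np.sum(p * (j - mu_j) ** 2))
    return {
        "contrast": float(np.sum(p * (i - j) ** 2)),
        "homogeneity": float(np.sum(p / (1.0 + (i - j) ** 2))),
        "correlation": float(np.sum(p * (i - mu_i) * (j - mu_j)) / (sd_i * sd_j)),
        "energy": float(np.sum(p ** 2)),
    }
```

Run on the 4 × 4 input image of Fig.4 at 0° and D = 1, this counting gives 2 rather than 1 for entries (1, 3) and (3, 1), because the last row (0 1 3 1) contains both the pair (1, 3) and the pair (3, 1). A symmetric 0°, D = 1 matrix for a 4 × 4 image holds 2 × 4 × 3 = 24 counts, while the matrix reproduced in Fig.4 sums to 22.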

-

C. Classification

In this stage, 400 facial images of 40 subjects (10 samples per subject) were used. The images were divided into two subsets: training and testing. The training subset contains 9 images for each of the 40 subjects, while the testing subset contains one facial sample for each subject (40 samples). The Olivetti Research Laboratory (ORL) database is used in this work. Its face images are captured in frontal view with different facial expressions, such as smiling, closed eyes, and neutral.

Three algorithms are implemented to perform the matching operation: Euclidean distance, Manhattan distance, and Cosine distance. Each algorithm measures the distance between the test feature vector and every vector stored in the training subset, and selects the stored vector with the minimum distance. The three measures are given by equations (4), (5), and (6), where:

n: the number of features in one template.

X_i: the i-th feature of the tested face template; Y_i: the i-th feature of a face template stored in the database.
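The matching rule described above (compare the test vector with every stored vector, keep the minimum) can be sketched as a nearest-neighbor search. A minimal sketch, assuming templates are stored as a list of vectors with a parallel list of subject labels; `match` is a hypothetical name, and any of the three distances can be passed as `distance`.

```python
import numpy as np

def euclidean(x, y):
    """Eq (4); Manhattan or Cosine distance can be substituted."""
    return float(np.sqrt(np.sum((np.asarray(x, float) - np.asarray(y, float)) ** 2)))

def match(probe, templates, labels, distance=euclidean):
    """Compare the probe with every stored template; return (label, distance) of the closest."""
    dists = [distance(probe, t) for t in templates]
    best = int(np.argmin(dists))
    return labels[best], dists[best]
```

With 9 training templates per subject, `templates` would hold 360 vectors for the 40 ORL subjects, and each of the 40 test samples is assigned the label of its closest template.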

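Input image (gray levels 0 to 3):

| Row | Col 0 | Col 1 | Col 2 | Col 3 |
|---|---|---|---|---|
| 0 | 1 | 2 | 0 | 1 |
| 1 | 2 | 2 | 0 | 1 |
| 2 | 3 | 0 | 2 | 1 |
| 3 | 0 | 1 | 3 | 1 |

GLCM operation →

| j \ k | 0 | 1 | 2 | 3 |
|---|---|---|---|---|
| 0 | 0 | 3 | 3 | 1 |
| 1 | 3 | 0 | 2 | 1 |
| 2 | 3 | 2 | 2 | 0 |
| 3 | 1 | 1 | 0 | 0 |

GLCM matrix with direction 0°, distance D = 1, symmetric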

Fig.4. The operation of GLCM method.

Fig.5. The representation of face feature using GLCM.

-

IV. Experimental Results

The proposed face biometric system is implemented in three steps. In the first step, the facial images are acquired from the ORL database, which includes 40 subjects with 10 samples each (9 for training and 1 for testing). Fig.6 shows different facial expressions from the ORL database. In the second step, the texture features of these images are extracted using the GLCM and LBP methods. In the final step, the decision is made using three classifiers: Euclidean, Cosine, and Manhattan distance.

The performance of the proposed system is evaluated using three measures: False Rejection Rate (FRR), False Acceptance Rate (FAR), and accuracy rate, as defined in (7), (8), and (9).

$$FRR = \frac{\text{Number of rejected genuine attempts}}{\text{Total number of genuine attempts assessed}} \times 100 \qquad (7)$$

$$FAR = \frac{\text{Number of accepted impostor attempts}}{\text{Total number of impostor attempts assessed}} \times 100 \qquad (8)$$

$$\text{Accuracy rate (\%)} = \frac{N_G}{N_T} \times 100 \qquad (9)$$

where $N_G$ is the number of genuine (correctly recognized) samples and $N_T$ is the total number of samples.

Table 1 presents the FAR, FRR, and accuracy rates of the LBP and GLCM methods. For LBP, the maximum accuracy rate is achieved with the Euclidean distance classifier, while for GLCM both the Euclidean and Cosine distances achieve the maximum accuracy rate. Table 2 compares the accuracy of the four methods (GLCM, LBP, FDs, and PCA) with each of the three classifiers. The maximum accuracy rate (100%) is obtained by GLCM with the Euclidean and Cosine classifiers, while LBP achieves it with the Euclidean distance only. Conversely, the lowest accuracy rate (72.5%) is obtained by FDs with the Manhattan distance.
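Equations (7) to (9) can be computed from match scores as sketched below. This assumes the scores are distances, so an attempt is accepted when its distance is at or below the decision threshold; the paper does not state this convention, and the function names are hypothetical.

```python
def frr(genuine_distances, threshold):
    """Eq (7): % of genuine comparisons rejected (distance above the threshold)."""
    rejected = sum(1 for d in genuine_distances if d > threshold)
    return 100.0 * rejected / len(genuine_distances)

def far(impostor_distances, threshold):
    """Eq (8): % of impostor comparisons accepted (distance within the threshold)."""
    accepted = sum(1 for d in impostor_distances if d <= threshold)
    return 100.0 * accepted / len(impostor_distances)

def accuracy(predicted, true):
    """Eq (9): N_G correctly recognized samples out of N_T samples, in %."""
    n_g = sum(1 for p, t in zip(predicted, true) if p == t)
    return 100.0 * n_g / len(true)
```

With one test image per subject, a single misidentified subject out of 40 gives 39/40 × 100 = 97.5%, the value that appears in Tables 1 and 2.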

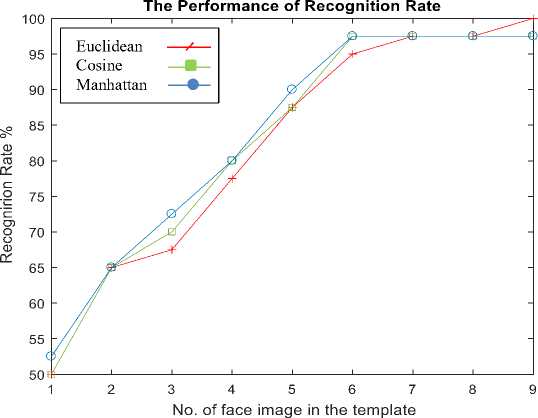

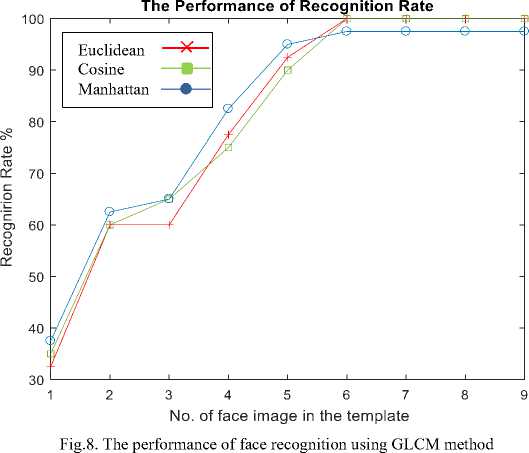

An additional comparison is made among the three classifiers in which the number of training images is varied from 1 to 9. Fig.7 shows the results of the LBP method: the Euclidean distance reaches its maximum accuracy rate with 9 training images, whereas the other two classifiers (Cosine and Manhattan distance) reach their maximum accuracy rate with 6 images. Fig.8 shows the results of the GLCM method for 1 to 9 training images; all three classifiers reach their maximum accuracy rate with 6 images.
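The training-size experiment can be sketched as a loop over k = 1 to 9. The paper does not say which images are used when fewer than 9 are kept for training; this sketch assumes the first k samples of each subject form the gallery and the last (10th) sample is always the probe. The function names and the array layout (subjects × samples × features) are hypothetical.

```python
import numpy as np

def euclidean(x, y):
    return float(np.linalg.norm(np.asarray(x, float) - np.asarray(y, float)))

def accuracy_vs_training_size(features, distance=euclidean, max_train=9):
    """features: array of shape (n_subjects, n_samples, n_features).

    For k = 1..max_train, the first k samples of every subject form the gallery
    and the last sample of every subject is the probe (assumed split).
    Returns {k: accuracy in %}.
    """
    features = np.asarray(features, float)
    n_subjects, n_samples, n_features = features.shape
    results = {}
    for k in range(1, max_train + 1):
        gallery = features[:, :k, :].reshape(-1, n_features)
        labels = np.repeat(np.arange(n_subjects), k)  # subject-major, matches gallery rows
        correct = 0
        for s in range(n_subjects):
            probe = features[s, n_samples - 1]
            dists = [distance(probe, g) for g in gallery]
            correct += int(labels[int(np.argmin(dists))] == s)
        results[k] = 100.0 * correct / n_subjects
    return results
```

Plotting `results` against k for each distance function gives curves of the kind shown in Fig.7 and Fig.8.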

Fig.6. Samples of ORL database.

Table 1. The performance of the LBP and GLCM methods for 40 persons.

| Method | Matching algorithm | FAR | FRR | Accuracy rate (%) |
|---|---|---|---|---|
| LBP | Euclidean | 0.0317 | 0 | 100 |
| LBP | Manhattan | 0.0309 | 0.0309 | 97.5 |
| LBP | Cosine | 0.0309 | 0.0309 | 97.5 |
| GLCM | Euclidean | 0.0317 | 0 | 100 |
| GLCM | Cosine | 0.0317 | 0 | 100 |
| GLCM | Manhattan | 0.0309 | 0.0309 | 97.5 |

Table 2. Comparison of the performance of the four feature extraction methods for 40 persons.

| Method | Euclidean accuracy (%) | Cosine accuracy (%) | Manhattan accuracy (%) |
|---|---|---|---|
| GLCM | 100 | 100 | 97.5 |
| LBP | 100 | 97.5 | 97.5 |
| PCA | 92.5 | 95 | 90 |
| FDs | 90 | 90 | 72.5 |

Fig.7. The performance of face recognition using LBP method.

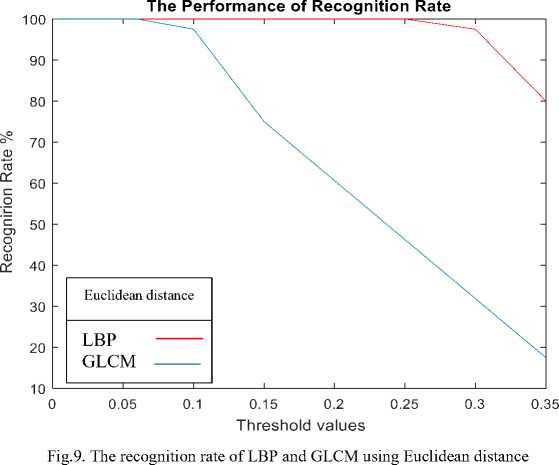

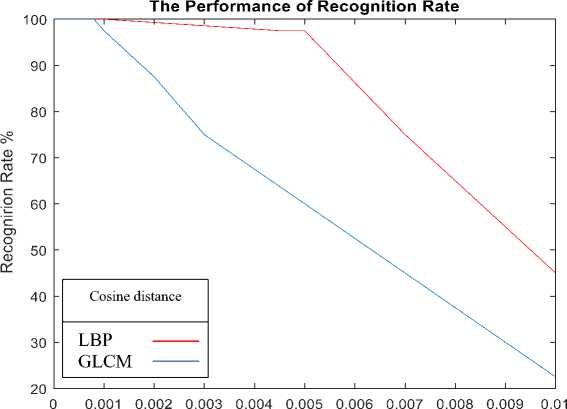

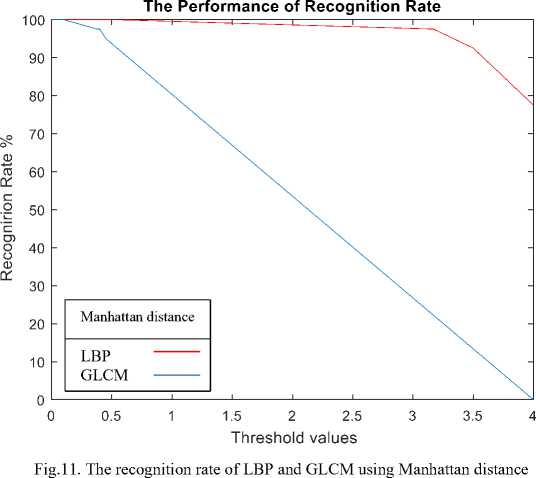

The relationship between the recognition rate and the threshold is shown for each classifier. With the Euclidean distance (Fig.9), LBP reaches its maximum recognition rate at a threshold of 0.25, while GLCM reaches it at 0.065. With the Cosine distance (Fig.10), GLCM reaches its maximum accuracy rate at a threshold of 0.0008, while LBP reaches it at 0.0012. Finally, with the Manhattan distance (Fig.11), LBP reaches its maximum recognition rate at a threshold of 0.5, while GLCM reaches it at 0.1.
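A sketch of how recognition-rate-versus-threshold curves such as those in Fig.9 to Fig.11 could be produced. The paper does not define the curve precisely; this assumes a probe counts as recognized only when its nearest template has the correct identity and the distance to it does not exceed the threshold. The function name and arguments are hypothetical.

```python
import numpy as np

def recognition_rate_vs_threshold(best_distances, correct, thresholds):
    """Recognition rate (%) for each threshold.

    best_distances[i]: distance from probe i to its nearest template.
    correct[i]: True if that nearest template belongs to the right subject.
    """
    d = np.asarray(best_distances, float)
    ok = np.asarray(correct, bool)
    return [float(100.0 * np.mean(ok & (d <= t))) for t in thresholds]
```

Because the raw distances of each method and classifier live on different scales, the threshold at which the curve saturates differs from one combination to another, which is consistent with the very different threshold values reported above.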


Fig.10. The recognition rate of LBP and GLCM using Cosine distance

-

V. Conclusion

A face recognition biometric system was developed to recognize 40 individuals from the ORL database. GLCM achieved the maximum accuracy rate (100%) with both the Euclidean and Cosine distance classifiers, while LBP achieved 100% accuracy with the Euclidean distance classifier only. The LBP and GLCM texture analysis methods outperformed the PCA and FDs feature extraction methods.

References

[1] Sarah Benziane, Abdelkader Benyettou, "An Introduction to Biometrics", International Journal of Computer Science and Information Security (IJCSIS), Vol. 9, No. 4, 2011.

[2] Marcos Faundez-Zanuy, "Biometric Security Technology", IEEE Aerospace and Electronic Systems Magazine, Vol. 21, No. 6, pp. 15-26, June 2006.

[3] Ramadan Gad, Ayman El-Sayed, Nawal El-Fishawy, M. Zorkany, "Multi-Biometric Systems: A State of the Art Survey and Research Directions", International Journal of Advanced Computer Science and Applications (IJACSA), Vol. 6, No. 6, 2015.

[4] Anil K. Jain, Arun Ross, Salil Prabhakar, "An Introduction to Biometric Recognition", IEEE Transactions on Circuits and Systems for Video Technology, Vol. 14, No. 1, January 2004.

[5] H. Proenca, "Towards Non-Cooperative Biometric Iris Recognition", Ph.D. Thesis, University of Beira Interior, October 2006.

[6] Prachi Agarwal, Naveen Prakash, "Modular Approach for Face Recognition System using Multilevel Haar Wavelet Transform, Improved PCA and Enhanced Back Propagation Neural Network", International Journal of Computer Applications, Vol. 75, No. 7, August 2013.

[7] Urvashi Bakshi, Rohit Singhal, "A New Approach of Face Recognition Using DCT, PCA, and Neural Network in Matlab", International Journal of Emerging Trends & Technology in Computer Science (IJETTCS), Vol. 3, Issue 3, 2014.

[8] Dan Zou, Hong Zhang, "Face Recognition Method Based On 2DLDA And SVM Optimated By PSO Algorithm", Advances in Intelligent Systems Research, Vol. 130, 2016.

[9] R. Kavitha, V. Nisha, "Analysis Of Face Recognition Using Support Vector Machine", International Conference on Emerging Trends in Engineering, Science and Sustainable Technology (ICETSST), 2017.

[10] Muthana H. Hamd, Marwa Y. Mohammed, "Multimodal Biometric System Based Face-Iris Feature Level Fusion", International Journal of Modern Education and Computer Science (IJMECS), Vol. 11, No. 5, 2019.

[11] S. Marcel, Y. Rodriguez, G. Heusch, "On the Recent Use of Local Binary Patterns for Face Authentication", International Journal of Image and Video Processing, Special Issue on Facial Image Processing, May 2007.

[12] Md. Abdur Rahim, Md. Najmul Hossain, Tanzillah Wahid, Md. Shafiul Azam, "Face Recognition using Local Binary Patterns (LBP)", Global Journal of Computer Science and Technology: Graphics & Vision, Vol. 13, Issue 4, 2013.

[13] Redouan Korchiynel, Sidi Mohamed Farssi, Abderrahmane Sbihi, Rajaa Touahni, Mustapha Tahiri Alaoui, "A Combined Method of Fractal and GLCM Features for MRI and CT Scan Images Classification", Signal & Image Processing: An International Journal (SIPIJ), Vol. 5, No. 4, August 2014.

[14] Emrullah Acar, "Extraction of Texture Features from Local Iris Areas by GLCM and Iris Recognition System Based on KNN", European Journal of Technic, Vol. 6, No. 1, 2016.

[15] Girisha A. B., M. C. Chandrashekhar, M. Z. Kurian, "Texture Feature Extraction of Video Frames Using GLCM", International Journal of Engineering Trends and Technology (IJETT), Vol. 4, Issue 6, June 2013.