Finger gesture detection and application using hue saturation value

Authors: Joy Mazumder, Laila Naznin Nahar, Md. Moin Uddin Atique

Journal: International Journal of Image, Graphics and Signal Processing @ijigsp

Issue: No. 8, Vol. 10, 2018.

Open access

In this paper, we develop a mathematical model for finger gesture identification using a two-colored glove. The glove is designed so that the wristband and the middle finger are marked in blue and the other fingers are marked in red. The HSV values of these colors are used to identify the red and blue regions. After the colors are detected, two measures are employed to identify the fingers. The first is the angle formed at the wristband center between the middle finger and any other finger. The second is the ratio between the wristband-to-middle-finger distance and the projection of the wristband-to-finger distance onto the line joining the wrist and the middle finger. For both measures, the middle finger must be present in order to identify the other fingers. After the finger gestures are identified using both methods, an application of finger detection is presented: changing a PowerPoint slide. The model was tested under several conditions and achieved an accuracy of more than 82%.

Finger gesture identification, two color glove, angle detection, ratio detection

Short URL: https://sciup.org/15015986

IDR: 15015986 | DOI: 10.5815/ijigsp.2018.08.04

Full text of the article: Finger gesture detection and application using hue saturation value

Hand gesture recognition (HGR) has become a significant part of human-computer interaction (HCI). Nowadays, communication between humans and computers is a familiar idea [1], and gesture recognition methods and applications have become popular all over the world. A gesture is defined here as a movement of the hand made to communicate with the computer: a specific combination of hand movement, orientation, and flexion observed at a certain time. Gesture recognition is the process by which a system understands what the gesture is intended to perform [2]; it is used to interpret the movement of a body part as a meaningful command. In a gesture recognition system, the hand or fingertips can be detected with or without color-banded gloves.

Previous works on finger identification using gloves were highly background dependent. We therefore propose a novel method of finger gesture identification that is less susceptible to the background and has lower computational complexity. This paper is based on a dynamic hand gesture recognition system in which fingertips are detected in real time. The user wears a glove in which the middle finger carries a blue band and the other fingers carry red bands. All bands are placed near the top of the fingers, at an equal distance from the fingertip. Another blue band is placed on the wrist. A simple method is used to track the positions of the fingertips, and hence their movement, using the hue-saturation-value (HSV) representation of the red and blue colors. The HSV color space is well suited to color-based image segmentation. Each webcam frame is converted from the RGB to the HSV color space, and by setting a lower and an upper threshold for each glove color, all bands of the corresponding color can be detected in the frame. After the colors of the fingers are detected, an angle and a ratio are measured. Each angle is formed at the blue wristband between the middle fingertip (blue band) and one of the red fingertips, measured one at a time. The ratio is obtained by dividing the wristband-to-middle-finger distance by the projection of the wristband-to-finger distance onto the line joining the wrist and the middle finger. Since fingertip identification relies on the angle and ratio between the red- and blue-banded fingertips, the blue band must be present in every measurement; otherwise, the system cannot determine the angle and ratio, and the result will be erroneous. One application of finger detection is shown here: if the system detects the index finger, it changes a PowerPoint slide automatically. The algorithm was tested on several samples, and the correct detection rate is higher than 82% for each finger.


II. Related Work

During the last few decades, many researchers have been interested in detecting hands and fingertips using hand gesture recognition systems based on HSV values. One of the first gesture recognition systems using a glove with fingertip markers was reported by Davies et al. (1994), who used colored markers on the fingertips and a grayscale camera to track fingertip movement and orientation and thereby determine hand gestures. This system was a strong competitor to wired-glove techniques for gesture recognition. Two years later, Iwai et al. (1996) proposed a colored-glove technique in which ten fingertips were identified. They used different colors to mark different parts of the fingers and sections of the palm in order to avoid the occlusion problem, in which certain parts of the hand or fingers are hidden and the camera is unable to interpret the gestures accurately. Their method automatically recognizes a limited number of gestures [9]. In recent years, more research has concentrated on vision-based hand gesture recognition. Yang et al. analyzed the hand configuration to select fingertip candidates, detected peaks in their spatial distribution, and optimized the local variance to locate the fingertip [10]. J.M. Kim and W.K. Lee [11] and Sanghi et al. [12] used directionally variant templates to detect fingertips. Other methods rely on specialized instruments and setups: K. Oka et al. used an infrared camera [13], Ying et al. used a stereo camera [14], J.L. Crowley et al. used a fixed background [15], and F.K.H. Quek et al. used markers on the hand [16]. M. Dharani, K.T. Anjan and K.S. Kandarpa suggested a robust and efficient algorithm in which gloves are used for tracking fingertips based on HSV values [17]. R.Y. Wang and J. Popović developed a method of tracking hands for 3D articulated user input; their approach tracks a hand with a single camera while the user wears an ordinary cloth glove imprinted with a custom pattern [18]. More recently, Monuri Hemantha and M.V. Srikanth described a simulation of real-time hand gesture recognition for physically impaired persons, using sign language to communicate with speech-impaired people [19]. A vision-based approach is employed for the recognition, and the method provides a human-machine interface for gesture recognition. This system does not require any sensor or marker attached to the user and allows unrestricted symbol or character input from any location.


III. Gloves Design

To achieve better performance, it is necessary to design a glove with colored fingertips. Two band colors, blue and red, were chosen for the glove. A blue band is placed on the middle finger and on the wrist; all other fingers carry red bands. The distance between the blue fingertip band and the wristband is approximately 17.5 cm, measured from the center of the middle-finger band to the center of the wristband. The measurement was taken with an ordinary scale, so it may not be precise. Each colored band is placed on the upper portion of a finger with a gap of 0.6 cm from the top of the finger. Fig. 1 shows the basic design of the colored glove for the proposed model. Because the picture was taken with an ordinary camera, it has been slightly edited to improve contrast, and the background is kept white for clarity.

Fig.1. Designed gloves for proposed model


IV. Proposed Model

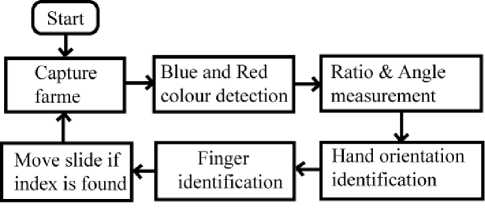

The proposed model for finger detection and tracking is shown in Fig. 2.

Fig.2. Flowchart for the proposed model


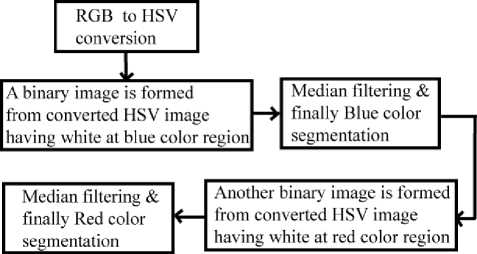

A. Blue and Red color detection

Fig.4. HSV cylindrical color representation

To convert from the RGB color space to the HSV color space, normalized versions of the R, G and B values, ranging from 0 to 1, are used. For a pixel value [R, G, B], $C_{max}$ and $C_{min}$ are defined by

$$C_{max} = \max(R', G', B'), \qquad C_{min} = \min(R', G', B')$$

where

$$R' = R/255, \qquad G' = G/255, \qquad B' = B/255$$

The difference between $C_{max}$ and $C_{min}$ is denoted by $\Delta$, so

$$\Delta = C_{max} - C_{min}$$

Hue is calculated by the following equation:

$$H = \begin{cases} 0^\circ, & \Delta = 0 \\ 60^\circ \times \left( \dfrac{G' - B'}{\Delta} \bmod 6 \right), & C_{max} = R' \\ 60^\circ \times \left( \dfrac{B' - R'}{\Delta} + 2 \right), & C_{max} = G' \\ 60^\circ \times \left( \dfrac{R' - G'}{\Delta} + 4 \right), & C_{max} = B' \end{cases}$$

The following equation is used for the saturation calculation:

$$S = \begin{cases} 0, & C_{max} = 0 \\ \dfrac{\Delta}{C_{max}}, & C_{max} \neq 0 \end{cases}$$

The value is calculated as follows:

$$V = C_{max}$$
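The conversion described by the hue, saturation and value equations can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the function names `rgb_to_hsv` and `to_8bit_hsv` are hypothetical, and the rescaling to a hue range of 0 to 180 with 8-bit saturation and value is an assumption, suggested by the threshold triples quoted later in this section.

```python
def rgb_to_hsv(r, g, b):
    """Convert 8-bit R, G, B to (H in degrees, S in [0, 1], V in [0, 1])."""
    rp, gp, bp = r / 255.0, g / 255.0, b / 255.0   # R', G', B'
    c_max = max(rp, gp, bp)
    c_min = min(rp, gp, bp)
    delta = c_max - c_min

    if delta == 0:
        h = 0.0
    elif c_max == rp:
        h = 60.0 * (((gp - bp) / delta) % 6)
    elif c_max == gp:
        h = 60.0 * ((bp - rp) / delta + 2)
    else:  # c_max == bp
        h = 60.0 * ((rp - gp) / delta + 4)

    s = 0.0 if c_max == 0 else delta / c_max
    v = c_max
    return h, s, v


def to_8bit_hsv(h, s, v):
    """Rescale to H in [0, 180), S and V in [0, 255] (assumed threshold scale)."""
    return int(h / 2) % 180, round(s * 255), round(v * 255)


if __name__ == "__main__":
    print(rgb_to_hsv(0, 0, 255))                 # pure blue
    print(to_8bit_hsv(*rgb_to_hsv(0, 0, 255)))   # same pixel on the 8-bit scale
```

Python's `%` operator returns a non-negative result for a positive modulus, so the `mod 6` term keeps the hue in the range 0° to 360° even when G' is smaller than B'.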

A captured RGB image I(x,y) and converted HSV H(x,y) Image are shown in Fig. 5.

Fig.5. (a) Captured RGB image I(x,y) (b) Converted HSV image H(x,y)

In order to detect blue color from the converted HSV image, a binary image B(x,y) is defined which has the dimensions equal to I(x,y). A pixel value of B(x,y) will be white if the pixel value of the corresponding position lies in blue color range in H(x,y). Otherwise, the pixel value of B(x,y) will be black. The mathematical equation of B(x,y) is given below-

$$B(x, y) = \begin{cases} 1, & \text{if } [\alpha_1, \beta_1, \gamma_1] \le H(x, y) \le [\alpha_2, \beta_2, \gamma_2] \\ 0, & \text{otherwise} \end{cases}$$

Here, α1 and α2 are the lower and upper values for hue. Similarly, β1, β2 and γ1, γ2 are the lower and upper values for saturation and value, respectively. For the detection of blue, the range from [100,50,50] to [112,255,255] is used in this paper. The resulting binary image is shown in Fig. 6(a).
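The thresholding rule for B(x,y) amounts to a per-channel range test on every HSV pixel. The sketch below assumes the image is stored as a nested list of (H, S, V) tuples on the same scale as the quoted range; the names `in_range` and `threshold_mask` are hypothetical.

```python
# Blue range quoted in the text: lower [α1, β1, γ1], upper [α2, β2, γ2].
BLUE_LOWER = (100, 50, 50)
BLUE_UPPER = (112, 255, 255)


def in_range(pixel, lower, upper):
    """True when every channel of the pixel lies within its bounds."""
    return all(lo <= p <= hi for p, lo, hi in zip(pixel, lower, upper))


def threshold_mask(hsv_image, lower, upper):
    """Binary image: 1 (white) where the pixel is in range, else 0 (black)."""
    return [[1 if in_range(px, lower, upper) else 0 for px in row]
            for row in hsv_image]


if __name__ == "__main__":
    # Tiny 2x2 example image with made-up pixel values.
    hsv = [[(105, 200, 200), (120, 255, 255)],
           [(100, 50, 50), (112, 49, 255)]]
    print(threshold_mask(hsv, BLUE_LOWER, BLUE_UPPER))
```

The same function applies to the red bands by passing the red range instead of the blue one.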

The converted binary images are noisy, so a median filter is applied to reduce the noise. The median filter is a nonlinear spatial filter whose response is based on ordering the pixels contained in the image area encompassed by the filter. In this filtering process, the central pixel of the kernel window is replaced by the median of the pixel values in the window. The median filter works well in the presence of unipolar and bipolar impulse noise. It is also effective at reducing salt-and-pepper noise, that is, black pixels in white regions or white pixels in black regions [19]. It blurs the image less than linear smoothing filters of similar size, and since it is a spatial filter, there is no ringing effect. The filtered images are shown in Fig. 6(b). After filtering, the blue regions in I(x,y) are tracked by taking their positions from the binary image. The image after tracking is shown in Fig. 6(c).
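As an illustration of the filtering step, the following sketch applies a square median filter to a binary image stored as a nested list. The window size of 3 and the edge-replication border handling are assumptions; the paper does not state either, and `median_filter` is a hypothetical name.

```python
def median_filter(image, size=3):
    """Replace each pixel by the median of its size x size neighbourhood.

    Pixels outside the image are taken from the nearest edge pixel
    (edge replication), which is an assumed border policy.
    """
    height, width = len(image), len(image[0])
    radius = size // 2
    out = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            window = []
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    yy = min(max(y + dy, 0), height - 1)
                    xx = min(max(x + dx, 0), width - 1)
                    window.append(image[yy][xx])
            window.sort()
            out[y][x] = window[len(window) // 2]  # middle of the ordered values
    return out


if __name__ == "__main__":
    noisy = [[0] * 5 for _ in range(5)]
    noisy[2][2] = 1  # a single isolated white pixel ("salt" noise)
    for row in median_filter(noisy):
        print(row)
```

An isolated white pixel never forms the majority of any 3x3 window, so it is removed, while a solid region larger than the window keeps its interior.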

Since the blue region of the wristband is much larger than the blue mark on the middle finger, the region with the largest area is taken as the wristband and the other region as the middle finger.
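One way to realize this area-based rule is to label the connected white regions of the filtered blue mask and sort them by size. The sketch below is only an assumption about how this could be done: it uses 4-connectivity, takes the two largest regions (smaller leftover specks are ignored), and uses the region centroid as the band center. All function names are hypothetical.

```python
from collections import deque


def connected_regions(mask):
    """Return the white regions of a binary mask as lists of (x, y) pixels."""
    height, width = len(mask), len(mask[0])
    seen = [[False] * width for _ in range(height)]
    regions = []
    for y in range(height):
        for x in range(width):
            if not mask[y][x] or seen[y][x]:
                continue
            seen[y][x] = True
            queue = deque([(x, y)])
            pixels = []
            while queue:  # breadth-first flood fill, 4-connectivity
                cx, cy = queue.popleft()
                pixels.append((cx, cy))
                for nx, ny in ((cx + 1, cy), (cx - 1, cy), (cx, cy + 1), (cx, cy - 1)):
                    if 0 <= nx < width and 0 <= ny < height \
                            and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            regions.append(pixels)
    return regions


def centroid(pixels):
    """Mean (x, y) position of a region."""
    n = len(pixels)
    return (sum(x for x, _ in pixels) / n, sum(y for _, y in pixels) / n)


def wrist_and_middle(blue_mask):
    """Largest blue region -> wristband, second largest -> middle finger."""
    regions = sorted(connected_regions(blue_mask), key=len, reverse=True)
    if len(regions) < 2:
        return None  # the middle finger (or the wristband) is not visible
    return centroid(regions[0]), centroid(regions[1])
```

Returning `None` when fewer than two blue regions are found reflects the requirement that the blue middle-finger band must be present for any measurement.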

Fig.6. (a) Binary image with the blue region white and other regions black (b) Binary image after filtering (c) Blue color tracking

For the detection of red, another binary image R(x,y) is defined with the same dimensions as I(x,y). The pixel values of R(x,y) are found from the following equation:

$$R(x, y) = \begin{cases} 1, & \text{if } [\alpha_3, \beta_3, \gamma_3] \le H(x, y) \le [\alpha_4, \beta_4, \gamma_4] \\ 0, & \text{otherwise} \end{cases}$$

Here α3, β3, γ3 are the lower values and α4, β4, γ4 are the upper values for red in the HSV space. The red range used in this paper is from [174,50,50] to [180,255,255]. The thresholded image is shown in Fig. 7(a). The same filtering technique is used here to reduce noise. After filtering and taking the positions from the filtered image, the final tracked image is shown in Fig. 7(c).

Fig.7. (a) Binary image with the red region white and other regions black (b) Binary image after filtering (c) Red color tracking


B. Angle and ratio measurement

If the center coordinate of the wristband is $(w_x, w_y)$, that of the middle finger is $(m_x, m_y)$, and that of any other finger is $(f_x, f_y)$, then the distances are calculated as follows:

$$a = \sqrt{(w_x - m_x)^2 + (w_y - m_y)^2}$$

$$b = \sqrt{(w_x - f_x)^2 + (w_y - f_y)^2}$$

$$c = \sqrt{(f_x - m_x)^2 + (f_y - m_y)^2}$$

This calculation is depicted in Fig. 8.

Fig.8. Angle detection using cosine rule

From these distances, the angle formed at the wristband is calculated using the cosine rule:

$$\theta = \cos^{-1}\left( \frac{a^2 + b^2 - c^2}{2ab} \right)$$
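The cosine-rule computation can be sketched directly from the three band centers. The function name `wrist_angle` is hypothetical, and the clamping of the cosine to [-1, 1] is an added safeguard against floating-point rounding rather than part of the paper's description.

```python
import math


def wrist_angle(wrist, middle, finger):
    """Angle in degrees at the wristband between the middle finger and a finger."""
    a = math.dist(wrist, middle)   # wristband to middle finger
    b = math.dist(wrist, finger)   # wristband to the other finger
    c = math.dist(finger, middle)  # other finger to middle finger
    if a == 0 or b == 0:
        raise ValueError("band centers must not coincide with the wristband center")
    cos_theta = (a * a + b * b - c * c) / (2 * a * b)
    cos_theta = max(-1.0, min(1.0, cos_theta))  # guard against rounding error
    return math.degrees(math.acos(cos_theta))


if __name__ == "__main__":
    # Made-up pixel coordinates: wrist at the origin, middle finger straight up.
    print(wrist_angle((0, 0), (0, 100), (20, 95)))
```

`math.dist` requires Python 3.8 or later.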

For various positions of hand, the measured angles for index finger are shown in Fig. 9.

Fig.9. Measured angles for the index finger

The angle ranges for the various fingers are shown in the table below.

Table 1. Angle variation for various fingers

| Finger name | Angle range |
|---|---|
| Thumb finger | 18° < θ < 36° |
| Index finger | 7.6° < θ < 15.4° |
| Ring finger | 10° < θ < 19° |
| Pinkie finger | 16° < θ < 28° |

The ratio between the wristband-to-middle-finger distance and the projection of the wristband-to-finger distance differs from one finger to another. If the distance between the wristband and a finger is ‘b’, its projection onto ‘a’ is ‘b*cosθ’. The variation of ‘a/(b*cosθ)’ for various positions of the hand is shown for the index finger in Fig. 10.

Fig.10. ‘a’ and ‘b*cosθ’ ratio for several orientations of the index finger

The variation of the a/(b*cosθ) ratio for different fingers is given in the table below.

Table 2. Ratio variations for different fingers

| Finger name | a/(b*cosθ) lower value | a/(b*cosθ) upper value |
|---|---|---|
| Thumb finger | 1.40 | 2.40 |
| Index finger | 1.02 | 1.08 |
| Ring finger | 1.07 | 1.12 |
| Pinkie finger | 1.14 | 1.26 |
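The two measures can be combined into a simple lookup against the angle and ratio ranges. The sketch below is one possible reading, not the authors' exact decision rule: it reads the angle entries of Table 1 as degrees (for example 7.6° to 15.4° for the index finger), treats a finger as a candidate only when both its angle range and its ratio range contain the measurement, and returns infinity for the ratio when the projection is not positive. The names `angle_and_ratio` and `candidates` are hypothetical.

```python
import math

# Ranges as read from Table 1 (degrees) and Table 2 (dimensionless ratio).
ANGLE_RANGES = {"thumb": (18.0, 36.0), "index": (7.6, 15.4),
                "ring": (10.0, 19.0), "pinkie": (16.0, 28.0)}
RATIO_RANGES = {"thumb": (1.40, 2.40), "index": (1.02, 1.08),
                "ring": (1.07, 1.12), "pinkie": (1.14, 1.26)}


def angle_and_ratio(wrist, middle, finger):
    """Return (theta in degrees, a / (b * cos(theta))) for one finger."""
    a = math.dist(wrist, middle)
    b = math.dist(wrist, finger)
    c = math.dist(finger, middle)
    if a == 0 or b == 0:
        raise ValueError("band centers must not coincide with the wristband center")
    cos_theta = max(-1.0, min(1.0, (a * a + b * b - c * c) / (2 * a * b)))
    theta = math.degrees(math.acos(cos_theta))
    # The projection b*cos(theta) is only meaningful when it is positive.
    ratio = a / (b * cos_theta) if cos_theta > 0 else math.inf
    return theta, ratio


def candidates(theta, ratio):
    """Fingers whose angle range AND ratio range both contain the measurement."""
    return [name for name in ANGLE_RANGES
            if ANGLE_RANGES[name][0] < theta < ANGLE_RANGES[name][1]
            and RATIO_RANGES[name][0] < ratio < RATIO_RANGES[name][1]]


if __name__ == "__main__":
    theta, ratio = angle_and_ratio((0, 0), (0, 100), (20, 95))  # made-up points
    print(theta, ratio, candidates(theta, ratio))
```

Because the tabulated ranges of neighboring fingers overlap slightly, `candidates` can return more than one name; some further rule, such as the relative position of the finger with respect to the middle finger, is then needed to decide.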


C. Hand angle identification & relative positions of other fingers with respect to the middle finger

Fig.11. Hand orientation identification

The angle is found using this formula:

$$\tan\alpha = \frac{m_y - w_y}{m_x - w_x}$$
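A small sketch of the orientation computation follows. It uses `math.atan2` instead of a plain arctangent so that a vertical hand, where the middle finger and wristband share the same x coordinate, does not cause a division by zero. The second function, which reports on which side of the wrist-to-middle-finger line a finger lies via the sign of a 2D cross product, is an assumption about how the relative position could be determined; the paper does not give this step, and both function names are hypothetical.

```python
import math


def hand_angle(wrist, middle):
    """Orientation alpha (degrees) of the wrist-to-middle-finger line."""
    wx, wy = wrist
    mx, my = middle
    return math.degrees(math.atan2(my - wy, mx - wx))


def side_of_middle(wrist, middle, finger):
    """Return +1, -1 or 0 for the side of the wrist-middle line the finger is on.

    The sign comes from the 2D cross product of (middle - wrist) and
    (finger - wrist). In image coordinates the y axis points down, so which
    sign corresponds to which anatomical side depends on the camera setup.
    """
    wx, wy = wrist
    mx, my = middle
    fx, fy = finger
    cross = (mx - wx) * (fy - wy) - (my - wy) * (fx - wx)
    return (cross > 0) - (cross < 0)
```

For example, with the wristband at (0, 0) and the middle finger at (0, 10), a finger at (5, 8) and a finger at (-5, 8) receive opposite signs.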


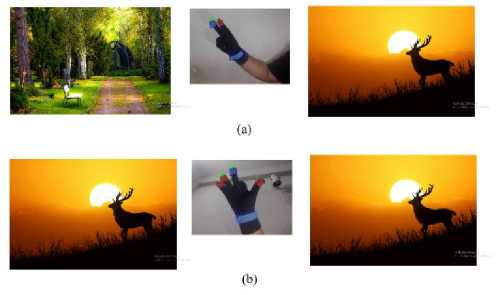

D. Final finger detection and PowerPoint slide movement

In a real-time frame, a finger is identified successfully if the conditions described above are satisfied for it. Each frame is checked to see whether the index finger is found. If it is, the PowerPoint slide is changed; otherwise it is not. A minimum gap of 5 s is required between two successive slide changes.
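The 5 s gap between slide changes can be sketched as a small rate limiter that is called once per frame. The class name `SlideChanger`, the `on_change` callback and the injectable `clock` are hypothetical; how the slide is actually advanced (for example by sending a key press to the presentation program) is left to the callback, since the paper does not describe it.

```python
import time


class SlideChanger:
    """Trigger a slide change at most once every `min_gap_s` seconds."""

    def __init__(self, on_change, min_gap_s=5.0, clock=time.monotonic):
        self._on_change = on_change    # called when a slide change is accepted
        self._min_gap_s = min_gap_s
        self._clock = clock            # injectable for testing
        self._last_change = None       # time of the last accepted change

    def update(self, index_found):
        """Call once per frame; returns True when the slide was changed."""
        if not index_found:
            return False
        now = self._clock()
        if self._last_change is not None and now - self._last_change < self._min_gap_s:
            return False               # too soon after the previous change
        self._last_change = now
        self._on_change()
        return True


if __name__ == "__main__":
    changer = SlideChanger(lambda: print("next slide"))
    changer.update(True)   # first detection changes the slide
    changer.update(True)   # ignored: less than 5 s since the last change
```

Using a monotonic clock avoids spurious triggers if the system time is adjusted while the program is running.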

Fig.12. (a) PowerPoint slide movement when the index is found (b) No slide movement when the index is not found


V. Results and limitations

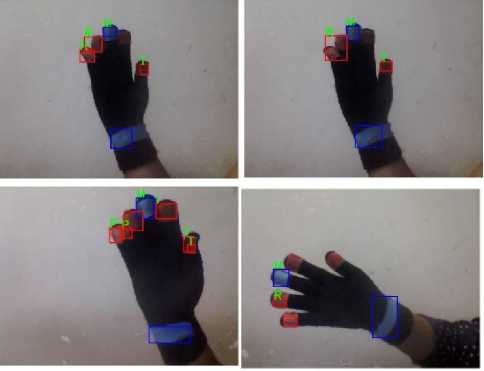

The real-time finger detection experiment was conducted on images captured by a webcam. To evaluate the method, a total of 200 frames were used for finger detection. The frame size captured by the webcam is 640×480 px. The images were captured against both uniform and non-uniform backgrounds and under various lighting conditions. Some of the results are shown in Fig. 13.

Fig.13. Various Finger detection result

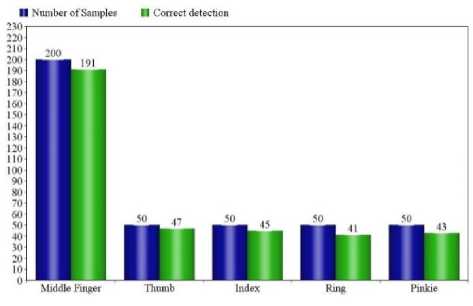

The number of samples and the number of correct detections for each finger are shown in Fig. 14.

Fig.14. Number of samples and correct detection for each finger

The accuracy of the detection for every finger is greater than 82%. The accuracy of each finger is shown in Fig. 15.

Fig.15. Graphical representation of accuracy for each finger.

From the chart, it is clear that the accuracy for the ring and pinkie fingers is lower than for the other fingers. The index finger and the thumb have the same relative position with respect to the middle finger, but the angles they form at the wristband are different and do not overlap. For the ring and pinkie fingers, however, the lower angle values for the pinkie overlap with the upper values for the ring finger, so a cutoff value of 17° is used to resolve this ambiguity. Because of the overlapping angles and relatively close ratios, the ring finger is sometimes detected as the pinkie finger or vice versa.
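The cutoff rule for the ring and pinkie fingers reduces to a single comparison. In the sketch below, the function name `ring_or_pinkie` is hypothetical, and assigning an angle exactly equal to the cutoff to the pinkie finger is an assumption, since the text does not say which side the boundary value belongs to.

```python
def ring_or_pinkie(theta_deg, cutoff_deg=17.0):
    """Resolve the overlapping ring/pinkie angle ranges with a fixed cutoff."""
    return "ring" if theta_deg < cutoff_deg else "pinkie"


if __name__ == "__main__":
    for theta in (16.5, 18.0):
        print(theta, ring_or_pinkie(theta))
```

A fixed cutoff removes the ambiguity in the lookup, but a finger whose true angle falls on the wrong side of 17° is still misclassified, which is consistent with the lower accuracy reported for these two fingers.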

This method has some other limitations too. If the background contains any detectable red or blue object, the model may give anomalous results. Contour overlap also occasionally produces erroneous results: when the contours of two fingers overlap, those fingers are tracked as a single finger. Sometimes one of the fingers cannot be detected at all. The main reasons for this are variations in lighting conditions, background noise, and vertical rotation of the hand. These scenarios are shown in Fig. 16.

Fig.16. Problems in identifying of fingers using angle


VI. Conclusion

References

- A. Chaudhary, J.L. Raheja, K. Das, S. Raheja, "Fingers' Angle Calculation using Level-Set Method", Published in Computer Vision and Image Processing in Intelligent Systems and Multimedia Technologies, IGI USA, pp.191-202, 2014.

- E.S. Nielsen, L.A. Canalís, and M.H. Tejera, “Hand Gesture Recognition for Human-Machine Interaction”, Journal of WSCG, vol.12, pp.1-3, 2004.

- V. Nayakwadi, N.B. Pokale, “Natural Hand Gestures Recognition System for Intelligent HCI: A Survey”, International Journal of Computer Applications Technology and Research, ISSN: 2319–8656, vol.3, Issue-1, pp.10 – 19, 2013.

- J. Thampi, M. Nuhas, M. Rafi, A. Shaheed, “Vision based hand gesture recognition: medical and military applications”, Advances in Parallel Distributed Computing Communications in Computer and Information Science, vol. 203, pp 270-281, 2011.

- S. Sharma, M.R. Manchanda, “Implementation of Hand Sign Recognition for Non-Linear Dimensionality Reduction based on PCA”, I.J. Image, Graphics and Signal Processing, vol-2, pp.37-45, 2017.

- D. Stotts, J. Smith, M.K. Gyllstrom, “Facespace: Endo- and Exo-Spatial Hypermedia in the Transparent Video Facetop”, In: Proc. of the Fifteenth ACM Conf. on Hypertext & Hypermedia. ACM Press, pp.48–57, 2004.

- W. Freeman, K. Tanaka, J. Ohta, & K. Kyuma, “Computer Vision for Computer Games”, Tech. Rep. and International Conference on Automatic Face and Gesture Recognition, 1996.

- S. Bilal, R. Akmeliawati, M. J. El Salami, A. A. Shafie, “Vision-Based Hand Posture Detection and Recognition for sign Language - A study”, IEEE 4th international conference on Mechatronics, ICOM, pp. 1-6, 2011.

- P. Premaratne, Human Computer Interaction Using Hand Gestures, Springer, pp.174, 2014. http://www.springer.com/978-981-4585-68-2.

- D. Yang, L.W. Jin, J. Yin, and Others, “An Effective Robust Fingertip Detection Method for Finger Writing Character Recognition System”, Proceedings of the Fourth International Conference on Machine Learning and Cybernetics, Guangzhou, China, pp.4191–4196, 2005.

- J.M. Kim, and W.K. Lee, “Hand Shape Recognition using Fingertips”, In the Proceedings of Fifth International Conference on Fuzzy Systems and Knowledge Discovery, Jinan, Shandong, China, pp.44-48, 2008.

- A. Sanghi, H. Arora, K. Gupta, Vats, V.B., “A Fingertip Detection and Tracking System as a Virtual Mouse, a Signature Input Device and an Application Selector”, Seventh International Conference on Devices, Circuits and Systems, Cancun, Mexico, pp.1–4, 2008.

- K. Oka, Y. Sato, and H. Koike, “Real Time Fingertip Tracking and Gesture Recognition”, Computer Graphics and Applications, IEEE, vol. 22(6), pp.64–71, Dec, 2002.

- H. Ying, J. Song, X. Ren and W. Wang, “Fingertip Detection and Tracking Using 2D and 3D Information”, Proceedings of the Seventh World Congress on Intelligent Control and Automation, Chongqing, China, pp.1149–1152, 2008.

- J.L. Crowley, F. Bérard and J. Coutaz, “Finger Tracking as an Input Device for Augmented Reality”, Proceedings of International Workshop on Automatic Face and Gesture Recognition, Zurich, Switzerland, pp.195–200, 1995.

- F.K.H Quek, T. Mysliwiec, and M. Zhao, “Finger Mouse: A Free Hand Pointing Computer Interface”, Proceedings of International Workshop on Automatic Face and Gesture Recognition, Zurich, Switzerland, pp.372–377, 1995.

- M. Dharani, K.T. Anjan and K.S. Kandarpa, “A Colored Finger Tip-Based Tracking Method for Continuous Hand Gesture Recognition”, International Journal of Electronics Signals and Systems (IJESS), ISSN: 2231- 5969, vol-3, Iss-1, pp.71-75, 2013.

- R.Y. Wang, J. Popović, “Real-Time Hand-Tracking with a Color Glove”, ACM Transactions on Graphics (TOG) - Proceedings of ACM SIGGRAPH, vol.28, Issue-3, Article No. 63, 2009.

- Monuri Hemantha et al, “Simulation of Real Time Hand Gesture Recognition for physically impaired”, International journal of Advanced Research in computer and communication Engineering vol. 2, issue 11, November, 2013

- Anand G Buddhikot, Nitin. M. Kulkarni, Arvind.D. Shaligram," Hand Gesture Interface based on Skin Detection Technique for Automotive Infotainment System", International Journal of Image, Graphics and Signal Processing(IJIGSP), Vol.10, No.2, pp. 10-24, 2018.DOI: 10.5815/ijigsp.2018.02.02

- https://en.wikipedia.org/wiki/HSL_and_HSV

- Rafael C. Gonzalez & Richard E. Woods, Digital image processing, 3rd edition, Pearson, Education, pp.156