Fingerprint Image Fusion: A Cutting-edge Perspective on Gender Classification via Rotational Invariant Features

Authors: Shivanand Gornale, Abhijit Patil, Khang Wen Goh, Sathish Kumar, Kruthi R.

Journal: International Journal of Image, Graphics and Signal Processing (IJIGSP)

Issue: No. 4, Vol. 16, 2024.


In this cutting-edge technological milieu, fingerprints have become an alternative expression for biometric systems. The fingerprint is one of the most perceptible biometric modalities and is predominantly used in almost all security and real-life applications. Fingerprints have many inherent rotational features that are mostly used for person recognition, and these features can also be used for gender classification. The proposed work is therefore a novel algorithm that identifies the gender of an individual from a fingerprint. Image fusion and feature-level fusion techniques are applied to fingerprints using rotation-invariant features. Experiments were carried out on four state-of-the-art datasets and yielded promising results that outperform earlier reported outcomes.

Biometrics, Fingerprint, Feature Fusion, Gender Classification, Image Fusion

Short URL: https://sciup.org/15019462

IDR: 15019462 | DOI: 10.5815/ijigsp.2024.04.04

1. Introduction

Biometrics is a discipline that exploits the innate traits of individuals to differentiate them from one another. According to the International Organization for Standardization (ISO) [1], biometrics refers to the use of biological characteristics to verify and authenticate the identity of individuals [2]. Numerous biometric methods can be employed to classify gender, including but not limited to fingerprint examination [3], facial identification [4], iris scanning [5], palm print analysis [6], signatures [7], gait analysis, keystroke analysis, and voice/speech analysis [8].

Among physiological biometrics, the fingerprint is the most widely used trait. Fingerprints are unique imprint patterns that appear on the fingertips. The fingerprint is a significant biometric trait because it is externally visible, non-invasive for the user, and remains constant throughout an individual's lifetime. According to fingerprint analysis experts [9], fingerprint patterns differ between individuals, even in the case of twins. Although the size and shape of fingerprints may change with aging and other environmental factors, the basic patterns remain unchanged. This stability motivated the use of these patterns to develop a generalized algorithm for gender classification.

Gender is soft biometric information. Classifying it from a fingerprint is a binary problem: determining whether a fingerprint belongs to a male or a female. Gender classification provides auxiliary information that helps reduce the average search space and search time. It has been used in a wide range of applications, such as digital forensics, demographic statistics, passive surveillance, access control, and the collection of consumer statistics for advertising and marketing [10].

The significance of this work lies in three steps. First, fusion techniques are applied to fingerprints. Next, the performance of several well-known rotation-invariant features is evaluated with machine learning and deep learning approaches. Finally, the results are compared with numerous gender classification techniques.

o The study addresses challenges related to gender classification, offering insights into how biometric data can be leveraged for such purposes.

o It fosters a deeper understanding of the complexities involved in gender classification using fingerprint data.

o The proposed model can be used in various fields, including security, forensics, and identification systems.

The key contributions of the proposed work are as follows:

o An innovative approach to fingerprint image fusion by incorporating rotational invariant features to optimize the performance of our system.

o Integrating rotational invariant features with advanced image fusion techniques improves the accuracy of gender classification.

o This contribution enhances the system's robustness, ensuring accurate gender classification regardless of the finger's rotation during image acquisition.

The paper is organized as follows. Section 2 provides an overview of previous studies on gender classification using fingerprints. Section 3 outlines the methodology proposed in this research. Section 4 examines the results obtained from the experiments. Section 5 presents a comparative analysis between the proposed approach and existing methods. Finally, Section 6 draws conclusions and outlines potential avenues for future research.

2. Related Work

Since fingerprints are a widely used biometric trait, a number of past studies have addressed person identification and recognition. However, work on gender classification from fingerprints is limited. This section presents a comprehensive review of related studies in this domain.

Emanuel M. et al. [11] used texture features such as Local Binary Patterns (LBP) and Local Phase Quantization (LPQ) on a database of 494 users and obtained an accuracy of 88.7% for gender categorization. S.S Gornale et al. [12] used Fourier transform, eccentricity, and major-axis-length features on 1000 left-hand thumb fingerprint images and, by thresholding, achieved 80% accuracy. In [13], discrete wavelet transform features were extracted from 600 fingerprint images and classified with a Support Vector Machine (SVM), giving an accuracy of 91%.

In [14], the authors evaluated different variants of local binary patterns (LBP) on 640 fingerprint images for gender identification, and rotation-invariant LBP features with a KNN classifier obtained an accuracy of 95.8%. S.S Gornale et al. [15] used the Discrete Wavelet Transform, the Discrete Cosine Transform, and the Fourier transform on 4320 fingerprint images. With thresholding, they obtained accuracies of 77% for males and 78% for females. Shivanand. S Gornale et al. [16] fused wavelet features, namely Gabor wavelet and discrete wavelet transform features, on a dataset of 740 fingerprint image samples. They obtained an accuracy of 97% with simple quadratic discriminant analysis (QDA). In [17], basic statistical features on 740 fingerprint images of the same dataset gave an average accuracy of 89% with an SVM classifier. Kruti R et al. [18] fused grey-level texture descriptors, namely local binary patterns and local phase quantization. They obtained accuracies of 84.13% and 97% on a self-created fingerprint dataset and the SDUMLA fingerprint database, respectively. Kruti R et al. [3] fused texture features and combined classifiers using a voting strategy over four databases. Combining KNN, SVM, and Decision Tree classifiers improved the results, giving accuracies of 98.9%, 98.6%, 99%, and 95.1% for SDUMLA, KVK, and two self-created databases, respectively.

S.S Gornale et al. [2] used Haralick descriptors on a small dataset of 740 fingerprint image samples and reported an accuracy of 94%. Shadab Alam et al. [19] used the discrete wavelet transform and singular value decomposition on a small self-created fingerprint database of 42 subjects. They performed gender classification with a KNN classifier and obtained an accuracy of 91%.

3. Proposed Methodology3.1 Pre-Processing

Deshmukh D.K et al. [20] have performed minutia mapping, Gabor wavelet and Discrete wavelet features for gender classification on a relatively small dataset of 100 male and 100 female fingerprint images and noted an accuracy of 70%. Rim et al. [21] have performed gender classification using deep learning approach on self-created 8000fingerprints of male and female images. With Training a network like VGG-19 and ResNet-50 from starch achieved to get accuracy of 97%. Qi, Y et al. [22] have performed gender classification from fingerprints using ResNet-Auto-Encoder network on self-created 6000 fingerprint of 98 male and 102 female images samples and achieved an accuracy of 96.5%.

In accordance with the general literature, researchers have used fingerprint biometrics for gender classification systems using various techniques, but the reported studies are limited to a specific age-group, regional areas, as well as their experimental setup is on a limited database. However, there is still room for developing a system for gender classification that is more general and nonspecific.

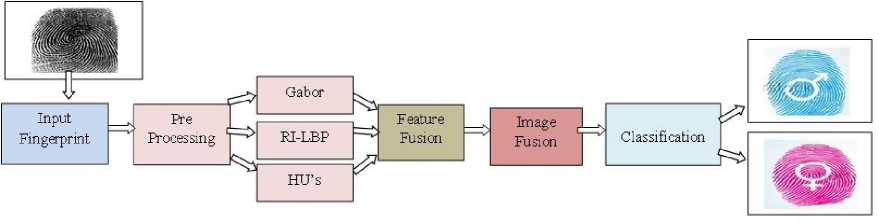

Gender classification using fingerprints is a relatively new issue that has emerged as an appealing research area in machine learning. The below figure (Fig.1.) illustrates, general steps involved in gender classification using fingerprint.

Fig. 1. Proposed Methodology

Preprocessing is a vital step used to enhancing the input image for better feature extraction from all 4 datasets; undergoes preprocessing steps by normalizing each image to a size of 250 x 250 then background is eliminated and lastly enhancement is performed by contrast limited adaptive histogram algorithm. The method is illustrated in Fig.2.

Fig. 2. (a) Input Fingerprint (b) Resized Image (c) Normalized Image
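A minimal NumPy sketch of the preprocessing in Section 3.1 follows. It is not the authors' implementation. Several choices are assumptions made to keep the example dependency-free: nearest-neighbour resizing, a block-variance background mask (the block size of 10 and the threshold of 100 are hypothetical), and global histogram equalization as a simplified stand-in for CLAHE. OpenCV's `cv2.createCLAHE` would be the closer match for the final step.

```python
import numpy as np

TARGET = (250, 250)  # output size stated in Section 3.1


def resize_nearest(img, size=TARGET):
    """Nearest-neighbour resize (a simple stand-in for library resizing)."""
    h, w = img.shape
    rows = (np.arange(size[0]) * h / size[0]).astype(int)
    cols = (np.arange(size[1]) * w / size[1]).astype(int)
    return img[rows[:, None], cols[None, :]]


def foreground_mask(img, block=10, var_thresh=100.0):
    """Mark blocks whose grey-level variance exceeds a threshold as fingerprint.

    Ridges give high local variance while a flat background does not.
    The block size and threshold are hypothetical values.
    """
    h, w = img.shape
    mask = np.zeros((h, w), dtype=bool)
    for r in range(0, h, block):
        for c in range(0, w, block):
            patch = img[r:r + block, c:c + block].astype(np.float64)
            mask[r:r + block, c:c + block] = patch.var() > var_thresh
    return mask


def equalize(values):
    """Global histogram equalization of uint8 values (simplified stand-in for CLAHE)."""
    hist = np.bincount(values, minlength=256)
    cdf = np.cumsum(hist).astype(np.float64)
    cdf_min = cdf[np.flatnonzero(hist)[0]]
    denom = max(cdf[-1] - cdf_min, 1.0)
    lut = np.clip(np.round((cdf - cdf_min) / denom * 255), 0, 255).astype(np.uint8)
    return lut[values]


def preprocess(img):
    """Resize to 250 x 250, set the background to 0, and equalize the foreground."""
    img = resize_nearest(np.asarray(img, dtype=np.uint8))
    mask = foreground_mask(img)
    out = np.zeros_like(img)
    if mask.any():
        out[mask] = equalize(img[mask])
    return out
```

Setting the background to 0 is likewise an assumption; a pipeline could equally set it to white.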

3.2 Feature Extraction

Fingerprints are naturally subject to various rotations due to pressure, finger placement, and surface angle. Without rotation-invariant features, a fingerprint gender identification system would treat each rotated version of a fingerprint as a distinct fingerprint, resulting in a high false rejection rate. To address this problem, this work extracts rotation-invariant features from the fingerprints.

During image acquisition, fingerprint images can be registered or recorded in any direction and orientation. Rotation-invariant features have therefore been used to obtain comprehensive information about the fingerprint. The proposed work employs three well-known rotation-invariant feature extraction approaches: rotational invariant local binary patterns, Gabor wavelets, and Hu's invariant moments. Moreover, existing research and empirical assessments have shown that fusion schemes can significantly improve gender classification accuracy and lead to promising performance.

3.2.1 Gabor wavelet:

Gabor wavelets are biologically inspired representations of natural image information. Gabor features are rotation and scale invariant in nature, and they closely resemble the processing in the visual cortex of the human brain [23]. A Gabor filter is defined as follows:

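$$G(k, l; f, \theta) = \exp\left\{-\frac{1}{2}\left[\frac{k'^2}{\sigma_k^2} + \frac{l'^2}{\sigma_l^2}\right]\right\}\cos\left(2\pi f k'\right) \tag{1}$$

$$k' = k\sin\theta + l\cos\theta \tag{2}$$

$$l' = k\cos\theta - l\sin\theta \tag{3}$$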

Gabor wavelets offer multi-resolution and multi-orientation features [23], and they can even be used to discover an image's spatial frequencies. Consequently, images are characterized using Gabor filters, which extract energy information from images and employ it to perform recognition tasks [24].

Here, $\sigma_k^2$ and $\sigma_l^2$ are the variances of the Gaussian envelope along the $k'$ and $l'$ axes, respectively, and they control its spatial extent. The variable $f$ is the frequency of interest in the underlying image. The values of $k$ and $l$ range from $0$ to $K-1$ and from $0$ to $L-1$, respectively. The variable $\theta$ is the orientation of the filter with respect to the x-axis. By varying $f$ and $\theta$, features at various orientations and frequencies can be evaluated from an image.
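The filter of equations (1) to (3) and a small filter bank can be sketched in NumPy as follows. Several choices are hypothetical: the 17 × 17 kernel size, the σ values, and the bank of five frequencies × six orientations. The features are the mean and standard deviation of each response magnitude. This bank happens to produce 60 values, the Gabor feature count reported in Section 4.1, but this section does not state the authors' configuration.

```python
import numpy as np


def gabor_kernel(f, theta, sigma_k=3.0, sigma_l=3.0, half=8):
    """Real Gabor kernel following equations (1)-(3); size and sigmas are assumptions."""
    # l indexes rows and k indexes columns of a (2*half+1)^2 grid centred on 0.
    l, k = np.mgrid[-half:half + 1, -half:half + 1].astype(np.float64)
    k_rot = k * np.sin(theta) + l * np.cos(theta)   # equation (2)
    l_rot = k * np.cos(theta) - l * np.sin(theta)   # equation (3)
    envelope = np.exp(-0.5 * (k_rot**2 / sigma_k**2 + l_rot**2 / sigma_l**2))
    return envelope * np.cos(2 * np.pi * f * k_rot)  # equation (1)


def gabor_features(img, freqs=(0.05, 0.08, 0.1, 0.125, 0.15), n_orient=6):
    """Mean and std of |response| for every (f, theta) pair of a hypothetical bank."""
    img = np.asarray(img, dtype=np.float64)
    spectrum = np.fft.fft2(img)
    feats = []
    for f in freqs:
        for i in range(n_orient):
            theta = i * np.pi / n_orient
            kernel = gabor_kernel(f, theta)
            # Circular convolution via the FFT; the kernel is zero-padded to the image size.
            response = np.real(np.fft.ifft2(spectrum * np.fft.fft2(kernel, s=img.shape)))
            energy = np.abs(response)
            feats.extend([energy.mean(), energy.std()])
    return np.array(feats)
```

The FFT route avoids a SciPy dependency. The circular wrap-around only shifts the response spatially, so it does not change the mean and standard deviation of the response magnitude.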

3.2.2 Rotational Invariant Local Binary Patterns (RI-LBP):

The Local Binary Pattern (LBP) was first introduced by Ojala et al. [25] in 1996 as a powerful texture descriptor that is sensitive to grey-level transitions. RI-LBP extends the original LBP by examining the intensity pattern around each pixel [7] so that rotated versions of the same local pattern receive the same code. Otherwise it works in the same way: the input image is divided into N × N local regions, and a binary pattern is assigned by comparing each central pixel with its neighboring pixels [26]. For a pixel at coordinates $(x_c, y_c)$, the LBP code is typically computed as

$$LBP(x_c, y_c) = \sum_{n=0}^{N-1} s(i_n - i_c)\, 2^{n} \tag{4}$$

where $i_n$ is one of the $N$ neighboring pixels around the central pixel $i_c$, and $s(x) = 1$ if $x \ge 0$ (that is, $i_n \ge i_c$) and $0$ otherwise [14].

3.2.3 Hu’s Feature:

Hu's moments were introduced by Hu [27] in 1962. They are invariant to geometric transformations and have a natural capability for rotation and scale invariance [28]. Translation invariance is obtained by shifting the coordinates of the image $f(x, y)$ [28] and the polynomial bases of order $(p + q)$ to the centroid $(m_{10}/m_{00},\, m_{01}/m_{00})$ [29] [25]. The central moments are then calculated as

$$\mu_{pq} = \int_{-\infty}^{\infty}\int_{-\infty}^{\infty} (x - \bar{x})^{p} (y - \bar{y})^{q} f(x, y)\, dx\, dy \tag{5}$$

where $\bar{x} = m_{10}/m_{00}$ and $\bar{y} = m_{01}/m_{00}$, and $m_{00}$ is the mass of the image. The central moments of equation (5) are translation invariant. Scale invariance [30] is obtained by normalizing them as given in equation (6):

$$\eta_{pq} = \frac{\mu_{pq}}{\mu_{00}^{\gamma}} \tag{6}$$

where $\gamma = \frac{p+q}{2} + 1$ for $p + q = 2, 3, \ldots$. The seven Hu invariant moments [27] [31], which are invariant to rotation, scaling, and translation (RST) [32], are then calculated as follows.

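$$\phi_1 = \eta_{20} + \eta_{02} \tag{7}$$

$$\phi_2 = (\eta_{20} - \eta_{02})^2 + 4\eta_{11}^2 \tag{8}$$

$$\phi_3 = (\eta_{30} - 3\eta_{12})^2 + (3\eta_{21} - \eta_{03})^2 \tag{9}$$

$$\phi_4 = (\eta_{30} + \eta_{12})^2 + (\eta_{21} + \eta_{03})^2 \tag{10}$$

$$\phi_5 = (\eta_{30} - 3\eta_{12})(\eta_{30} + \eta_{12})\left[(\eta_{30} + \eta_{12})^2 - 3(\eta_{21} + \eta_{03})^2\right] + (3\eta_{21} - \eta_{03})(\eta_{21} + \eta_{03})\left[3(\eta_{30} + \eta_{12})^2 - (\eta_{21} + \eta_{03})^2\right] \tag{11}$$

$$\phi_6 = (\eta_{20} - \eta_{02})\left[(\eta_{30} + \eta_{12})^2 - (\eta_{21} + \eta_{03})^2\right] + 4\eta_{11}(\eta_{30} + \eta_{12})(\eta_{21} + \eta_{03}) \tag{12}$$

$$\phi_7 = (3\eta_{21} - \eta_{03})(\eta_{30} + \eta_{12})\left[(\eta_{30} + \eta_{12})^2 - 3(\eta_{21} + \eta_{03})^2\right] + (3\eta_{12} - \eta_{30})(\eta_{21} + \eta_{03})\left[3(\eta_{30} + \eta_{12})^2 - (\eta_{21} + \eta_{03})^2\right] \tag{13}$$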
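The two descriptors of Sections 3.2.2 and 3.2.3 can be sketched in NumPy as below. For RI-LBP, the rotation-invariant uniform ("riu2") variant with 8 neighbours at radius 1 is an assumption. It was chosen because it yields the 10 features per image reported in Section 4.1. Square neighbours are used instead of interpolated circular ones for simplicity. The Hu function replaces the integrals of equation (5) with sums over pixels, which is the usual discrete form.

```python
import numpy as np

# Circular order of the 8 neighbours at radius 1 (row offset, column offset).
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def ri_lbp_hist(img):
    """Rotation-invariant uniform LBP (riu2, P=8, R=1) histogram with 10 bins."""
    f = np.asarray(img, dtype=np.float64)
    h, w = f.shape
    centre = f[1:h - 1, 1:w - 1]
    # bits[n] = s(i_n - i_c) from equation (4): 1 when the neighbour >= centre.
    bits = np.stack([f[1 + dr:h - 1 + dr, 1 + dc:w - 1 + dc] >= centre
                     for dr, dc in OFFSETS]).astype(np.int64)
    ones = bits.sum(axis=0)
    # Number of 0/1 changes around the circle; <= 2 means a "uniform" pattern.
    transitions = (bits != np.roll(bits, -1, axis=0)).sum(axis=0)
    labels = np.where(transitions <= 2, ones, 9)  # uniform -> 0..8, otherwise 9
    hist = np.bincount(labels.ravel(), minlength=10).astype(np.float64)
    return hist / hist.sum()


def hu_moments(img):
    """Seven Hu invariants from equations (5)-(13), with sums in place of integrals."""
    f = np.asarray(img, dtype=np.float64)
    ys, xs = np.mgrid[:f.shape[0], :f.shape[1]]
    m00 = f.sum()
    xbar, ybar = (xs * f).sum() / m00, (ys * f).sum() / m00

    def eta(p, q):
        mu = ((xs - xbar) ** p * (ys - ybar) ** q * f).sum()  # equation (5)
        return mu / m00 ** ((p + q) / 2 + 1)                 # equation (6), mu00 = m00

    n20, n02, n11 = eta(2, 0), eta(0, 2), eta(1, 1)
    n30, n03, n21, n12 = eta(3, 0), eta(0, 3), eta(2, 1), eta(1, 2)
    a, b = n30 + n12, n21 + n03
    return np.array([
        n20 + n02,                                                       # (7)
        (n20 - n02) ** 2 + 4 * n11 ** 2,                                 # (8)
        (n30 - 3 * n12) ** 2 + (3 * n21 - n03) ** 2,                     # (9)
        a ** 2 + b ** 2,                                                 # (10)
        (n30 - 3 * n12) * a * (a ** 2 - 3 * b ** 2)
        + (3 * n21 - n03) * b * (3 * a ** 2 - b ** 2),                   # (11)
        (n20 - n02) * (a ** 2 - b ** 2) + 4 * n11 * a * b,               # (12)
        (3 * n21 - n03) * a * (a ** 2 - 3 * b ** 2)
        + (3 * n12 - n30) * b * (3 * a ** 2 - b ** 2),                   # (13)
    ])
```

Both functions return the same values for an image and its 90° rotation, which is a quick way to check the rotation-invariance claims numerically.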

3.3 Feature Selection

Gabor wavelets, Hu moments, and rotation-invariant Local Binary Patterns (LBP) were selected for gender classification because they capture key characteristics of fingerprint patterns while mitigating the impact of rotational variations. Each of these feature extraction methods has specific advantages:

• Gabor Wavelets:

Gabor wavelets are well suited to capturing localized frequency information in images, which makes them exceptionally effective at representing the intricate details of fingerprint patterns. Their orientation selectivity is crucial for handling variations arising from diverse finger placements and orientations [33].

• Hu Moments:

Hu moments are known for their invariance to image translation, rotation, and scaling. These moments offer a concise representation of an object's shape, which makes them well suited to characterizing the distinctive and invariant features of fingerprint patterns, irrespective of their orientation.

• Local Binary Patterns (LBP) with Rotational Invariance:

Local Binary Patterns (LBP) excel at capturing texture information by encoding the local patterns of pixel intensities. Rotational invariance makes the extracted features robust to fingerprint rotation, which is frequent in real-world situations.

The combination of these feature extraction methods is designed to enhance the accuracy and reliability of gender classification in fingerprint analysis.

3.4 Fusion

Fusion can be achieved through four distinct methods: image-based, feature-based, score-based, and classifier-based fusion [2]. This study focuses on feature-level and image-level fusion techniques applied to fingerprint images for gender classification.

3.4.1 Feature level Fusion (FF):

A fingerprint has a rich set of features, so feature-level fusion is intuitively expected to be more effective for gender identification [23]. In this work, several rotation-invariant features, namely RI-LBP, Hu's moments, and Gabor wavelets, are extracted from the fingerprints and fused by the concatenation rule [15].

3.4.2 Image Fusion (IF):

Image fusion has several applications, such as medical imaging [28], remote sensing [34], and surveillance [35]. Image fusion is the process of combining the information of two or more images obtained from the same or different sensors. It reduces the amount of data, and the resulting image is more informative and better suited to human and machine perception tasks.

Image fusion techniques can be adapted to different application tasks. In this work, a spatial-domain technique with a simple averaging scheme is used. This scheme was chosen because some fingerprint images in the four databases have low brightness and contrast. This traditional fusion technique combines images by averaging their pixels, which results in an image with higher brightness and contrast [28]. The technique treats all regions of the image equally and works well when the images are of the same kind. Fig. 3 illustrates the image fusion technique.

Fig. 3. Working of Image Fusion Technique
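Both fusion rules are simple enough to sketch directly. The example below assumes that the images to be fused, for example the 10 preprocessed fingerprints of one person described in Section 4.1, are already aligned and share the same size. The function names are hypothetical. With the 10 RI-LBP, 60 Gabor, and 7 Hu features reported in Section 4.1, concatenation gives a 77-dimensional vector.

```python
import numpy as np


def image_fusion(images):
    """Spatial-domain fusion by simple pixel averaging of same-sized images."""
    stack = np.stack([np.asarray(im, dtype=np.float64) for im in images])
    return np.clip(np.round(stack.mean(axis=0)), 0, 255).astype(np.uint8)


def feature_fusion(*feature_vectors):
    """Feature-level fusion by concatenating the individual descriptors."""
    return np.concatenate([np.ravel(v) for v in feature_vectors])
```

The three descriptors have very different numeric ranges. Standardizing each feature, for example to zero mean and unit variance on the training folds, before concatenation is a common refinement. This section does not say whether it was applied.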

3.5 Machine Learning Classifiers

A machine learning classifier sorts samples, here fingerprint feature vectors, into a predetermined number of distinct classes based on the attributes computed for each one. This work examines several binary classifiers that may lead to a higher classification rate for gender identification.

3.5.1 Nearest Neighbor Classifier:

The nearest neighbor classifier is a supervised learning method that assigns class labels based on the distances to neighboring instances and the chosen value of k. It examines the training points closest to an unlabeled sample, with their number set by the user, and assigns the sample a label accordingly [16]. The city-block distance between two feature vectors $M$ and $N$ of length $n$ is

$$D_{\text{city-block}}(M, N) = \sum_{j=1}^{n} \lvert M_j - N_j \rvert \tag{14}$$

3.5.2 Decision Tree:

The decision tree algorithm builds a model from the training data that predicts the class through decision rules [2]. It is a supervised machine learning technique that generates a decision tree from the provided training data [18]. The algorithm first evaluates all attributes and selects the most significant one as the root, and the remaining attributes are used at the lower nodes. This process is repeated for each subset until every branch ends in a leaf node [32]. The Gini diversity index measures node impurity:

$$\mathrm{GDI} = 1 - \sum_{n} c(n)^2 \tag{15}$$

where $c(n)$ is the fraction of the observations reaching the node that belong to class $n$.

3.5.3 Support Vector Machine:

SVMs are a statistical learning method [2] that assigns labels based on learned functions [23]. An SVM is a binary classifier that searches for the optimal hyperplane separating a set of n input vectors according to their labels $Y_i$ [36].

Here, $Y_i$ is either the male or the female class, predicted by the discriminant function $F(X)$.
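Equations (14) and (15) can be made concrete with a short NumPy sketch. Several details are assumptions for illustration: the default k = 1, the stable tie-breaking by training-set order, and the string class labels. The section does not state which k was used.

```python
import numpy as np


def cityblock(m, n):
    """Equation (14): sum of absolute coordinate differences."""
    diff = np.asarray(m, dtype=np.float64) - np.asarray(n, dtype=np.float64)
    return float(np.abs(diff).sum())


def knn_predict(train_x, train_y, x, k=1):
    """Majority label among the k training vectors nearest to x (city-block)."""
    train_x = np.asarray(train_x, dtype=np.float64)
    dists = np.abs(train_x - np.asarray(x, dtype=np.float64)).sum(axis=1)
    nearest = np.argsort(dists, kind="stable")[:k]  # ties keep training order
    labels, counts = np.unique(np.asarray(train_y)[nearest], return_counts=True)
    return labels[np.argmax(counts)]


def gini_index(node_labels):
    """Equation (15): 1 minus the sum of squared class fractions at a node."""
    _, counts = np.unique(node_labels, return_counts=True)
    frac = counts / counts.sum()
    return float(1.0 - np.sum(frac ** 2))
```

A node holding equal numbers of male and female samples has the maximum two-class impurity of 0.5, and a pure node has 0.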

4. Experimental Analysis

The proposed method is summarized in the following algorithm:

Input: Raw fingerprint image samples

Output: Gender class (male or female) of each fingerprint image

Start

Step 1: Input a fingerprint image.

Step 2: Perform pre-processing: convert the image to binary, resize and normalize it to 250 × 250 pixels, and finally apply CLAHE.

Step 3: Compute the Rotational Invariant Local Binary Pattern, Hu’s moment, and Gabor wavelet features individually.

Step 4: Perform image fusion and feature fusion of the Rotational Invariant Local Binary Pattern, Hu’s moment, and Gabor wavelet features.

Step 5: Classify the computed features using various classifiers.

End

4.1 Dataset and Evaluation Protocol

From the existing work, it has been observed that the available standard databases do not provide gender annotations for their images. This motivated us to create our own databases (i.e., the third and fourth datasets), which can be made publicly available if required. The experiments were conducted on four different state-of-the-art databases comprising 9290 fingerprint images in total. These images were collected from people of assorted age groups, demographics, and ethnicities, which makes the classification task more challenging. The focus of the work is to check the robustness and novelty of the gender classification algorithm. The databases are described in detail below.

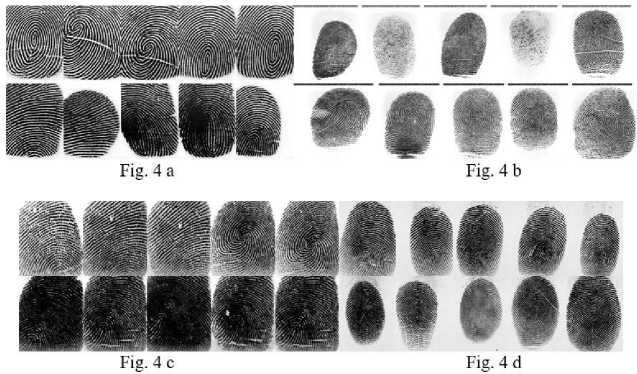

The first dataset is the publicly available SDUMLA fingerprint dataset, compiled and kept up to date by Shandong University's machine learning and application lab [37]. It comprises real multimodal data from 106 individuals of different ethnicities and age groups, of whom 59 were male and 47 were female. After the necessary instructions were given, thumb, index, and middle finger images were collected from each volunteer with an FT-2BU sensor. Sample male and female fingerprint images from the dataset are shown in Fig. 4(a).

The second dataset is the KVK fingerprint dataset, maintained by researchers at the KVK Multimodal Biometric Lab in Aurangabad, India [38]. For this work, we use the fingerprints from this multimodal database. The dataset contains real multimodal data gathered from 48 people of different age groups, 39 of whom were male and 9 female. The images were captured with a Futronic fingerprint sensor, and fingerprints of both hands were taken for each subject. Sample male and female images from the dataset are shown in Fig. 4(b).

The third and fourth databases are self-prepared. Both were prepared in phases, with fingerprint images collected from the northern and southern parts of Karnataka, India. For both databases, fingerprints were collected from real persons of different age groups, from both rural and urban areas, using a Fingkey Hamster 2nd generation scanner. The fingerprints were captured at 512 DPI resolution with a size of 250 × 250 pixels. In the first phase, fingerprints were collected from people aged 15 to 20, and in the second phase from people aged 21 to 60.

The third database, captured from people in the northern part of Karnataka, contains left- and right-hand fingerprints of 348 individuals (183 male and 165 female), for a total of 3480 images. This dataset can be made available for additional comparisons. Sample male and female fingerprint images from the dataset are shown in Fig. 4(c).

The fourth dataset contains fingerprints captured from 427 healthy subjects in the southern part of Karnataka, India: 157 male and 270 female volunteers. After preliminary instructions were given, 10 fingerprint images were obtained from each volunteer, 5 from the right hand and 5 from the left. Thus, database4 contains 4270 fingerprints in total. This dataset can also be made available for further comparisons if required. Sample male and female fingerprint images are shown in Fig. 4(d).

Fig. 4. Sample image of male and female fingerprint a) SDUMLA-fingerprint dataset, b) KVK-Fingerprint dataset, c) Self-created database1, d) Self-created database2

First, the RI-LBP, Gabor wavelet, and Hu's features are each evaluated independently. For each image, RI-LBP yields 10 features, the Gabor wavelet produces 60 features, and Hu's invariants yield 7 features. These features are stored and classified independently with different binary classifiers. Next, feature-level fusion of the RI-LBP, Gabor wavelet, and Hu's features is evaluated by concatenating the obtained features. In each case, the computed features are classified with K-NN, Decision Tree, and SVM binary classifiers using 10-fold cross validation.
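The 10-fold cross-validation protocol can be sketched as follows. Two details are assumptions: stratifying the folds by gender and the fixed random seed. The text only states that 10-fold cross validation is used. The example uses 470 female and 590 male samples, the row totals of most SDUMLA confusion matrices in Table 1. With these counts, every test fold holds 47 female and 59 male samples.

```python
import numpy as np


def stratified_kfold(labels, n_splits=10, seed=0):
    """Yield (train_idx, test_idx) pairs with each class spread evenly over folds."""
    labels = np.asarray(labels)
    rng = np.random.default_rng(seed)
    fold_of = np.empty(len(labels), dtype=int)
    for cls in np.unique(labels):
        idx = np.flatnonzero(labels == cls)
        rng.shuffle(idx)
        fold_of[idx] = np.arange(len(idx)) % n_splits  # deal samples round-robin
    for fold in range(n_splits):
        yield np.flatnonzero(fold_of != fold), np.flatnonzero(fold_of == fold)
```

Every sample appears in exactly one test fold. Summing the per-fold confusion matrices therefore counts each image once, which appears to match the way the matrices in Tables 1 to 4 add up to the dataset sizes.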

Further, to check the robustness of the proposed algorithm, a novel approach combining image-level fusion (IF) and feature-level fusion (FF) of RI-LBP, Gabor wavelet, and Hu's features has been tested on the four databases. Image fusion uses the simple spatial averaging scheme to fuse all 10 fingerprint images of a person into one image, which is then used for gender classification. The experiment was carried out with 10-fold cross validation using different binary classifiers on the four state-of-the-art databases. The results are presented as confusion matrices in Tables 1 to 4.

Table 1. Result of SDUMLA-Fingerprint Database (i.e., Database1)

| Classifier | Actual | RI-LBP Pred. F | RI-LBP Pred. M | RI-LBP Acc. (%) | Gabor Pred. F | Gabor Pred. M | Gabor Acc. (%) | Hu's Pred. F | Hu's Pred. M | Hu's Acc. (%) | Feature fusion Pred. F | Feature fusion Pred. M | Feature fusion Acc. (%) | Image fusion Pred. F | Image fusion Pred. M | Image fusion Acc. (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| KNN | Female | 372 | 98 | 85.18 | 481 | 52 | 91 | 325 | 145 | 77.7 | 422 | 48 | 92.1 | 45 | 2 | 93.3 |
| | Male | 59 | 531 | | 43 | 547 | | 91 | 499 | | 35 | 555 | | 5 | 53 | |
| SVM | Female | 361 | 109 | 83.8 | 450 | 20 | 96.3 | 40 | 430 | 55.4 | 462 | 8 | 98.8 | 47 | 0 | 100 |
| | Male | 62 | 528 | | 19 | 571 | | 42 | 548 | | 4 | 586 | | 0 | 58 | |
| Decision Tree | Female | 432 | 38 | 92.35 | 447 | 23 | 95.7 | 427 | 43 | 91.6 | 450 | 20 | 95.8 | 42 | 5 | 94.2 |
| | Male | 43 | 547 | | 22 | 568 | | 45 | 545 | | 24 | 566 | | 1 | 57 | |

For the SDUMLA fingerprint database (Database1), Table 1 shows the following. In the independent analysis, Gabor wavelet features with the SVM classifier give the highest accuracy of 96.3%, and Hu's invariant features with the SVM classifier give the lowest accuracy of 55.4%. With feature fusion, the SVM classifier achieves the highest accuracy of 98.8% and the KNN classifier the lowest, 92.1%. With image fusion and feature-level fusion, the SVM classifier attains the highest accuracy of 100.0% and the KNN classifier the lowest, 93.3%.
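Each accuracy in Tables 1 to 4 is the share of samples on the diagonal of the corresponding 2 × 2 confusion matrix. The short check below closely reproduces two cells of Table 1. The computed 85.19% for KNN with RI-LBP, against the tabulated 85.18, suggests that the tables truncate rather than round. This is an inference; the text does not state it.

```python
import numpy as np


def accuracy_from_confusion(cm):
    """Percentage of samples on the diagonal of a confusion matrix."""
    cm = np.asarray(cm, dtype=np.float64)
    return float(100.0 * np.trace(cm) / cm.sum())


knn_ri_lbp = [[372, 98], [59, 531]]      # Table 1, KNN with RI-LBP
svm_image_fusion = [[47, 0], [0, 58]]    # Table 1, SVM with image fusion
print(round(accuracy_from_confusion(knn_ri_lbp), 2))        # 85.19
print(round(accuracy_from_confusion(svm_image_fusion), 2))  # 100.0
```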

Table 2. Result of KVK-Fingerprint Database (i.e., Database2)

| Classifier | Actual | RI-LBP Pred. F | RI-LBP Pred. M | RI-LBP Acc. (%) | Gabor Pred. F | Gabor Pred. M | Gabor Acc. (%) | Hu's Pred. F | Hu's Pred. M | Hu's Acc. (%) | Feature fusion Pred. F | Feature fusion Pred. M | Feature fusion Acc. (%) | Image fusion Pred. F | Image fusion Pred. M | Image fusion Acc. (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| KNN | Female | 52 | 38 | 88.5 | 63 | 27 | 90.6 | 34 | 56 | 84.7 | 71 | 19 | 92.9 | 5 | 4 | 91.6 |
| | Male | 17 | 373 | | 18 | 372 | | 17 | 373 | | 15 | 375 | | 0 | 39 | |
| SVM | Female | 79 | 11 | 97 | 84 | 6 | 97.9 | 64 | 26 | 51.8 | 89 | 1 | 99 | 7 | 2 | 95.8 |
| | Male | 3 | 387 | | 4 | 386 | | 205 | 185 | | 1 | 389 | | 0 | 39 | |
| Decision Tree | Female | 77 | 13 | 94.7 | 84 | 6 | 96.8 | 66 | 24 | 92.5 | 88 | 2 | 97.8 | 9 | 0 | 97.9 |
| | Male | 12 | 378 | | 9 | 381 | | 12 | 378 | | 9 | 381 | | 1 | 38 | |

Similarly, Table 2 shows the following for the KVK fingerprint database. In the independent analysis, RI-LBP with the SVM classifier obtained the highest accuracy of 97%, and Hu's invariants with the SVM classifier obtained the lowest accuracy of 51.8%. With feature fusion, the highest accuracy of 99% is obtained by the SVM classifier and the lowest, 92.9%, by the KNN classifier. With image fusion and feature fusion, the highest accuracy of 97.9% is obtained by the decision tree classifier and the lowest, 91.6%, by the KNN classifier.

Table 3. Result of Fingerprint Database3

| Classifier | Actual | RI-LBP Pred. F | RI-LBP Pred. M | RI-LBP Acc. (%) | Gabor Pred. F | Gabor Pred. M | Gabor Acc. (%) | Hu's Pred. F | Hu's Pred. M | Hu's Acc. (%) | Feature fusion Pred. F | Feature fusion Pred. M | Feature fusion Acc. (%) | Image fusion Pred. F | Image fusion Pred. M | Image fusion Acc. (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| KNN | Female | 1541 | 109 | 93.4 | 1556 | 94 | 94.3 | 1203 | 447 | 75.6 | 1596 | 54 | 96.2 | 158 | 6 | 94.2 |
| | Male | 118 | 1712 | | 102 | 1728 | | 400 | 1430 | | 77 | 1753 | | 14 | 168 | |
| SVM | Female | 1470 | 180 | 89.6 | 1589 | 61 | 95.5 | 1644 | 6 | 47.2 | 1605 | 45 | 97.7 | 164 | 0 | 100 |
| | Male | 181 | 1649 | | 93 | 1737 | | 1829 | 1 | | 32 | 1798 | | 0 | 182 | |
| Decision Tree | Female | 1568 | 82 | 94.8 | 1590 | 60 | 96.4 | 1482 | 168 | 90.8 | 1602 | 48 | 96.8 | 159 | 5 | 96.5 |
| | Male | 96 | 1734 | | 63 | 1767 | | 150 | 1680 | | 62 | 1768 | | 7 | 175 | |

Similarly, Table 3 shows the following for the self-created database (Database3). In the independent analysis of features, Gabor wavelet features with the decision tree classifier obtained the highest accuracy of 96.4%, and Hu's invariant features with the support vector classifier obtained the lowest accuracy of 47.2%. With feature fusion, the highest accuracy of 97.7% is observed with the support vector classifier and the lowest, 96.2%, with the KNN classifier. With image-level and feature-level fusion, the support vector classifier achieves a preeminent accuracy of 100% and the KNN classifier the lowest, 94.2%.

Table 4. Result of Fingerprint Database 4

| Classifier | Actual | RI-LBP Pred. F | RI-LBP Pred. M | RI-LBP Acc. (%) | Gabor Pred. F | Gabor Pred. M | Gabor Acc. (%) | Hu's Pred. F | Hu's Pred. M | Hu's Acc. (%) | Feature fusion Pred. F | Feature fusion Pred. M | Feature fusion Acc. (%) | Image fusion Pred. F | Image fusion Pred. M | Image fusion Acc. (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| KNN | Female | 2428 | 282 | 85.7 | 2500 | 210 | 86 | 2387 | 323 | 78.3 | 2511 | 199 | 87.8 | 266 | 4 | 94.6 |
| | Male | 328 | 1242 | | 388 | 1182 | | 603 | 967 | | 321 | 1249 | | 19 | 137 | |
| SVM | Female | 2189 | 521 | 74.4 | 2614 | 96 | 92.5 | 2705 | 5 | 63.5 | 2625 | 85 | 93.9 | 269 | 1 | 99 |
| | Male | 574 | 996 | | 223 | 1347 | | 1556 | 14 | | 173 | 1397 | | 3 | 153 | |
| Decision Tree | Female | 2593 | 117 | 93.2 | 2631 | 79 | 95.5 | 2492 | 218 | 89.8 | 2666 | 44 | 96 | 265 | 5 | 98.1 |
| | Male | 171 | 1399 | | 113 | 1457 | | 218 | 1352 | | 125 | 1445 | | 3 | 153 | |

Correspondingly, Table 4 shows the following for the self-created fingerprint dataset (Database4). In the independent analysis of features, Gabor wavelet features with the decision tree classifier give the highest accuracy of 95.51%, and Hu's invariant features with the support vector classifier give the lowest accuracy of 63.5%. With feature fusion, the highest accuracy of 96.05% is observed with the decision tree classifier and the lowest, 87.85%, with the KNN classifier. With image-level and feature-level fusion, the best accuracy of 99% is observed with the support vector machine classifier and the lowest, 94.6%, with the KNN classifier.

5. Deep Learning Using Pre-trained Network

Several rotation-invariant feature extraction techniques have been applied to the fingerprint images. To further check the robustness of the image fusion technique, deep learning with a pre-trained model has been implemented. Deep learning has recently gained much attention in machine learning and pattern recognition. It is a newer extension of machine learning inspired by the biological neurons of the human brain. The network accepts the whole image as input, automatically extracts the meaningful features required for the gender classification task, and finally classifies the gender.

We fine-tuned AlexNet on the fused fingerprint images for gender classification. AlexNet was chosen because it is a well-established model that can be trained with a limited amount of data, and empirical evaluation showed that it is able to extract many abstract, high-level features even from the fused fingerprint images.
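When AlexNet is fine-tuned for a two-class problem, the usual practice (assumed here) is to keep the pre-trained convolutional stack and replace the final 1000-way ImageNet output layer with a two-neuron layer. The sketch below traces feature-map shapes through AlexNet for a 224×224×3 input, showing where the 11×11, 5×5 and 3×3 kernels act and what the new two-way layer is connected to. The strides, paddings and channel counts follow torchvision's AlexNet definition; they are an assumption, since the paper does not list layer hyperparameters, and the names `conv_out`, `FEATURES` and `trace_alexnet` are hypothetical.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output side length of a square convolution or max-pooling window."""
    return (size + 2 * padding - kernel) // stride + 1


# Assumed layer settings, taken from torchvision's AlexNet definition.
# (name, kernel, stride, padding, output channels; None = pooling keeps channels)
FEATURES = [
    ("conv1 + ReLU", 11, 4, 2, 64),
    ("maxpool1", 3, 2, 0, None),
    ("conv2 + ReLU", 5, 1, 2, 192),
    ("maxpool2", 3, 2, 0, None),
    ("conv3 + ReLU", 3, 1, 1, 384),
    ("conv4 + ReLU", 3, 1, 1, 256),
    ("conv5 + ReLU", 3, 1, 1, 256),
    ("maxpool5", 3, 2, 0, None),
]


def trace_alexnet(size=224, channels=3, num_classes=2):
    """Print feature-map shapes and return (flattened size, FC layer sizes)."""
    for name, kernel, stride, padding, out_channels in FEATURES:
        size = conv_out(size, kernel, stride, padding)
        if out_channels is not None:
            channels = out_channels
        print(f"{name:>13}: {channels} x {size} x {size}")
    flat = channels * size * size
    # Classifier: in torchvision, dropout precedes each of the first two FC
    # layers; fine-tuning swaps the last 4096 -> 1000 layer for 4096 -> num_classes.
    head = [(flat, 4096), (4096, 4096), (4096, num_classes)]
    for fan_in, fan_out in head:
        print(f"{'fc':>13}: {fan_in} -> {fan_out}")
    return flat, head


flat, head = trace_alexnet()
```

For a 224-pixel input the side length goes 55, 27, 27, 13, 13, 13, 13 and 6, so the two-neuron output sits on top of a 256 × 6 × 6 = 9,216-dimensional feature vector followed by two 4,096-unit layers.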

Gender classification from fused fingerprint images starts with resizing. Since AlexNet requires a fixed 224×224 colour (three-channel) input, the greyscale images were converted to colour by duplicating the grey channel. The feature computation step extracts feature information through meaningful feature maps, produced by convolutional layers with window sizes of 11×11, 5×5 and 3×3 pixels, each followed by a ReLU activation [39], and by fully connected layers. A dropout layer placed after the ReLU activation reduces overfitting in the network. Finally, the output is evaluated with different binary classifiers; the final layer has two neurons that identify class membership, predicting whether a fused fingerprint image belongs to a male or a female.
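The resizing and channel-duplication step can be sketched with NumPy as follows. The function name `to_alexnet_input` is hypothetical, and nearest-neighbour interpolation is an assumption made to keep the sketch dependency-light: the paper does not say how the images were resized, and a bilinear resize from an image library would be a common alternative.

```python
import numpy as np


def to_alexnet_input(grey, size=224):
    """Resize a 2-D greyscale image to size x size and stack it into
    three identical channels, giving an H x W x 3 array."""
    grey = np.asarray(grey)
    if grey.ndim != 2:
        raise ValueError("expected a single-channel (2-D) image")
    h, w = grey.shape
    # Nearest-neighbour sampling: map every output row/column to a source index.
    rows = np.arange(size) * h // size
    cols = np.arange(size) * w // size
    resized = grey[rows[:, None], cols[None, :]]
    # Duplicate the grey channel so the image matches AlexNet's colour input.
    return np.stack([resized, resized, resized], axis=-1)


# Example with a random stand-in for a fused fingerprint image.
fused = np.random.default_rng(0).integers(0, 256, size=(300, 260), dtype=np.uint8)
x = to_alexnet_input(fused)
print(x.shape, x.dtype)  # (224, 224, 3) uint8
```

Because all three channels are identical, the network receives no extra colour information; the duplication only satisfies the pre-trained model's three-channel input requirement.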

Deep learning with a fine-tuned network was evaluated on four different fingerprint databases, and the results are presented as confusion matrices in Table 5. The highest accuracy, 100%, is observed on the KVK database with the decision tree classifier, and 99% accuracy is attained on the SDUMLA database, also with the decision tree classifier. For self-created database1 (Database3 in Table 5), the highest accuracy of 99% is obtained with the SVM classifier, and for self-created database2 (Database4), the highest accuracy of 99% is obtained with the decision tree classifier.

Table 5. Confusion Matrices with Accuracy for Different Fingerprint Databases

| Classifier | KVK | | Acc. (%) | SDUMLA | | Acc. (%) | Database3 | | Acc. (%) | Database4 | | Acc. (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| KNN | 8 | 1 | 91.7 | 45 | 2 | 89.52 | 155 | 9 | 97.1 | 256 | 14 | 93.4 |
| | 3 | 36 | | 9 | 49 | | 1 | 181 | | 14 | 142 | |
| SVM | 2 | 7 | 84.4 | 45 | 2 | 95.2 | 163 | 1 | 99 | 269 | 1 | 98.6 |
| | 0 | 39 | | 3 | 55 | | 0 | 182 | | 5 | 151 | |
| Decision Tree | 9 | 0 | 100 | 46 | 1 | 99 | 162 | 2 | 98 | 269 | 1 | 99 |
| | 0 | 39 | | 1 | 58 | | 3 | 179 | | 0 | 156 | |

6. Comparative Analysis

To assess the effectiveness of the proposed method, the authors compared it with similar works in the literature; the comparison is presented in Table 6.

Table 6. Comparative Analysis

References

- Ma, J., Ma, Y., & Li, C. (2019). Infrared and visible image fusion methods and applications: A survey. Information Fusion, 45, 153–178. https://doi.org/10.1016/j.inffus.2018.02.004

- Gornale, S. S., Hangarge, M., Pardeshi, R., & Kruthi, R. (2015). Haralick feature descriptors for gender classification using fingerprints: A machine learning approach. International Journal of Advanced Research in Computer Science and Software Engineering, 5(9), 72-78.

- Kruthi, R., Patil, A., & Gornale, S. (2019). Fusion of Features and Synthesis Classifiers for Gender Classification using Fingerprints. International Journal of Computer Sciences and Engineering, 7(5), 526–533. https://doi.org/10.26438/ijcse/v7i5.526533

- De-La-Torre, M., Granger, E., Radtke, P. V. W., Sabourin, R., & Gorodnichy, D. O. (2015). Partially-supervised learning from facial trajectories for face recognition in video surveillance. Information Fusion, 24, 31–53. https://doi.org/10.1016/j.inffus.2014.05.006

- Tapia, J. E., Perez, C. A., & Bowyer, K. W. (2015). Gender classification from iris images using fusion of uniform local binary patterns. In Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics) (Vol. 8926, pp. 751–763). Springer Verlag. https://doi.org/10.1007/978-3-319-16181-5_57

- Gornale, S., Patil, A., & Hangarge, M. (2021). Palmprint Biometric Data Analysis for Gender Classification Using Binarized Statistical Image Feature Set (pp. 157–167). https://doi.org/10.1007/978-981-16-1681-5_1

- Gornale, S. S., Kumar, S., Patil, A., & Hiremath, P. S. (2021). Behavioral Biometric Data Analysis for Gender Classification Using Feature Fusion and Machine Learning. Frontiers in Robotics and AI, 8. https://doi.org/10.3389/frobt.2021.685966

- Djemili, R., Bourouba, H., & Korba, M. C. A. (2012). A speech signal based gender identification system using four classifiers. In Proceedings of 2012 International Conference on Multimedia Computing and Systems, ICMCS 2012 (pp. 184–187). https://doi.org/10.1109/ICMCS.2012.6320122

- Mayhew, S. (2015). History of Biometrics. Biometric Update. Retrieved from http://www.biometricupdate.com/201501/history-of-biometrics

- Brunelli, R., & Falavigna, D. (1995). Person Identification Using Multiple Cues. IEEE Transactions on Pattern Analysis and Machine Intelligence, 17(10), 955–966. https://doi.org/10.1109/34.464560

- Marasco, E., Lugini, L., & Cukic, B. (2014). Exploiting quality and texture features to estimate age and gender from fingerprints. In Biometric and Surveillance Technology for Human and Activity Identification XI (Vol. 9075, p. 90750F). SPIE. https://doi.org/10.1117/12.2048125

- Gornale, S. S. (2015). Fingerprint based gender classification for biometric security: A state-of-the-art technique. AIJRSTEM, 9(1), 39-49.

- Gornale, S. S., Basavanna, M., & Kruthi, R. (2015). Gender classification using fingerprints based on support vector machines (SVM) with 10-cross validation technique. International Journal of Scientific & Engineering Research, 6(7), 588-593.

- Gornale, S. (2017). Fingerprint Based Gender Classification Using Local Binary Pattern. International Journal of Computational Intelligence Research, 13(2), 261–271.

- Gornale, S. S., & Kruthi, R. (2014). Fusion of Fingerprint and Age Biometric for Gender Classification Using Frequency and Texture Analysis. Signal & Image Processing: An International Journal, 5(6), 75–85. https://doi.org/10.5121/sipij.2014.5606

- Gornale, S., Patil, A., & C., V. (2016). Fingerprint based Gender Identification using Discrete Wavelet Transform and Gabor Filters. International Journal of Computer Applications, 152(4), 34–37. https://doi.org/10.5120/ijca2016911794

- Gornale, S. S., & Patil, A. (2016). Statistical Features Based Gender Identification Using SVM. International Journal for Scientific Research and Development, (8), 241-244.

- Kruthi, R., Patil, A., & Gornale, S. (2019). Fusion of Local Binary Pattern and Local Phase Quantization features set for Gender Classification using Fingerprints. International Journal of Computer Sciences and Engineering, 7(1), 22–29. https://doi.org/10.26438/ijcse/v7i1.2229

- Alam, S., Dipti, Dua, M., & Gupta, A. (2019). A comparative study of gender classification using fingerprints. In Proceedings of the 2019 6th International Conference on Computing for Sustainable Global Development, INDIACom 2019 (pp. 880–884). Institute of Electrical and Electronics Engineers Inc.

- Deshmukh, D. K., & Patil, S. S. (2020). Fingerprint-based gender classification by using neural network model. In Applied Computer Vision and Image Processing: Proceedings of ICCET 2020, Volume 1 (pp. 318-325). Springer Singapore.

- Rim, B., Kim, J., & Hong, M. (2020). Gender Classification from Fingerprint-images using Deep Learning Approach. In ACM International Conference Proceeding Series (pp. 7–12). Association for Computing Machinery. https://doi.org/10.1145/3400286.3418237

- Qi, Y., Qiu, M., Jiang, H., & Wang, F. (2022). Extracting Fingerprint Features Using Autoencoder Networks for Gender Classification. Applied Sciences (Switzerland), 12(19). https://doi.org/10.3390/app121910152

- Tom, R. J., & Arulkumaran, T. (2013). Fingerprint Based Gender Classification Using 2D Discrete Wavelet Transforms and Principal Component Analysis. International Journal of Engineering Trends and Technology, 4(2), 199–203.

- Tan, X., & Triggs, B. (2007, October). Fusing Gabor and LBP feature sets for kernel-based face recognition. In International workshop on analysis and modeling of faces and gestures (pp. 235-249). Berlin, Heidelberg: Springer Berlin Heidelberg.

- Gornale, S. S., Patravali, P. U., & Hiremath, P. S. (2020). Automatic Detection and Classification of Knee Osteoarthritis Using Hu’s Invariant Moments. Frontiers in Robotics and AI, 7. https://doi.org/10.3389/frobt.2020.591827

- Ojala, T., Pietikäinen, M., & Harwood, D. (1996). A comparative study of texture measures with classification based on feature distributions. Pattern Recognition, 29(1), 51–59. https://doi.org/10.1016/0031-3203(95)00067-4

- Gornale, S. S., Patil, A., & Kruthi, R. (2019). Multimodal biometrics data based gender classification using machine vision. International Journal of Innovative Technology and Exploring Engineering, 8(11), 1356–1363. https://doi.org/10.35940/ijitee.J9673.0981119

- Baskar, A., Rajappa, M., Vasudevan, S. K., & Murugesh, T. S. (2023). Digital Image Processing. Digital Image Processing (pp. 1–193). CRC Press. https://doi.org/10.1201/9781003217428

- Gornale, S. S., Patil, A., & Hangarge, M. (2019, March). Binarized Statistical Image Feature set for Palmprint based Gender Identification. In Book of Abstracts, International Conference on Machine Learning, Image Processing, Network Security and Data Sciences (MIND-2019) (Vol. 61, pp. 3–4).

- Ojala, T., Pietikainen, M., & Maenpaa, T. (2002). Multiresolution grayscale and rotation invariant texture classification with local binary patterns. IEEE Transactions on pattern analysis and machine intelligence, 24(7), 971-987.

- Huang, Z., & Leng, J. (2010). Analysis of Hu’s moment invariants on image scaling and rotation. In ICCET 2010 - 2010 International Conference on Computer Engineering and Technology, Proceedings (Vol. 7). https://doi.org/10.1109/ICCET.2010.5485542

- Hu, M. K. (1962). Visual Pattern Recognition by Moment Invariants. IRE Transactions on Information Theory, 8(2), 179–187. https://doi.org/10.1109/TIT.1962.1057692

- Karimi-Ashtiani, S., & Jay Kuo, C. C. (2008). A robust technique for latent fingerprint image segmentation and enhancement. In Proceedings - International Conference on Image Processing, ICIP (pp. 1492–1495). https://doi.org/10.1109/ICIP.2008.4712049

- James, A. P., & Dasarathy, B. V. (2014). Medical image fusion: A survey of the state of the art. Information Fusion, 19(1), 4–19. https://doi.org/10.1016/j.inffus.2013.12.002

- Liu, Y., Wang, L., Cheng, J., Li, C., & Chen, X. (2020). Multi-focus image fusion: A Survey of the state of the art. Information Fusion, 64, 71–91. https://doi.org/10.1016/j.inffus.2020.06.013

- Grigor’eva, M. A. (2019). Human gender determination based on the measurements of handprints that are devoid of dermatoglyphic features. Sudebno-Meditsinskaya Ekspertiza, 62(4), 22–29. https://doi.org/10.17116/sudmed20196204122

- Yin, Y., Liu, L., & Sun, X. (2011). SDUMLA-HMT: A multimodal biometric database. In Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics) (Vol. 7098 LNCS, pp. 260–268). https://doi.org/10.1007/978-3-642-25449-9_33

- Siddiqui, A. M., Telgad, R. L., Lothe, S. A., & Deshmukh, P. D. (2019). Development of Secure Multimodal Biometric System for Person Identification Using Feature Level Fusion: Fingerprint and Iris. In Recent Trends in Image Processing and Pattern Recognition: Second International Conference, RTIP2R 2018, Solapur, India, December 21–22, 2018, Revised Selected Papers, Part II (pp. 406–432). Springer Singapore.

- Gornale, S. S., Patil, A., & Ramchandra, K. (2020). Multimodal Biometrics Data Analysis for Gender Estimation Using Deep Learning. International Journal of Data Science and Analysis, 6(2), 64. https://doi.org/10.11648/j.ijdsa.20200602.11