Frame work for expression invariant face recognition system using warping technique

Authors: Deepti Ahlawat, Vijay Nehra, Darshana Hooda

Journal: International Journal of Image, Graphics and Signal Processing @ijigsp

Article in issue: no. 7, vol. 10, 2018.


Facial expressions usually have an adverse effect on the performance of a face recognition system. In this investigation, an expression-invariant face recognition algorithm is presented that converts an input face image with an arbitrary expression into its corresponding neutral facial image. A deep learning algorithm is used to train classifiers for reference key-points: the key-points are located and a deep neural network is trained so that the system can locate the landmarks in a test image. An intermediate triangular mesh is created from the test and reference images, both images are warped to it using an affine transform, and the average of the normalized faces is taken. The scale-invariant feature transform (SIFT) is then used to extract features from the resulting image. Finally, the results are compared and the recognition accuracy is determined for different expressions. The work is tested on three databases: JAFFE, Cohn-Kanade (CK) and Yale. Experimental results show that the expression-invariant face recognition method is robust to a variety of expressions, with recognition accuracies of 97.8%, 96.8% and 95.7% for the CK, JAFFE and Yale databases, respectively.

Keywords: Expression-invariant face recognition, warping, Scale-Invariant Feature Transform

Short link: https://sciup.org/15015980

IDR: 15015980 | DOI: 10.5815/ijigsp.2018.07.06

Text of the scientific article: Frame work for expression invariant face recognition system using warping technique

Published Online July 2018 in MECS DOI: 10.5815/ijigsp.2018.07.06

Face recognition is an efficient biometric technique for recognizing a person from an image or a video. It is non-intrusive and can be used without the subject's knowledge. Despite more than thirty years of intensive research, the performance of many state-of-the-art face recognition systems is still greatly affected by variations in the facial region. The human brain recognizes faces easily, but recognition remains a challenging task for computer-based systems, especially when pose, illumination, expression and age vary [1-2]. Great effort has been devoted to automating facial recognition, and research has made continuous, visible progress, but the problem remains challenging.

Face recognition is used in applications such as surveillance, automated attendance management, public identification, verification of credit cards and driver's licenses, criminal justice and forensics, and law enforcement and security, to name only a few [3-7]. Typical applications of face recognition are shown in Table 1.

Table 1. Typical applications of face recognition [7]

| Area | Application |
|---|---|
| Smart cards | Driver's licenses, entitlement programs, smart cards, immigration, national ID, passports, voter registration, welfare fraud |
| Information security | TV parental control, personal device logon, desktop logon |
| Entertainment | Human-robot interaction, human-computer interaction, video games, virtual reality, training programs |
| Law enforcement and surveillance | Secure trading terminals, law enforcement, advanced video surveillance, CCTV control and surveillance, portal control, post-event analysis, shoplifting, suspect tracking and investigation |

Today, one of the main problems in recognizing a person is the variation of the face caused by emotions and verbal communication. Expressions reduce system accuracy and cause many face recognition algorithms to fail, because the reference databases, such as ID cards, passports and driver's licenses, generally contain neutral faces without expressions. Many researchers have investigated methods that improve face recognition by removing facial expressions to obtain a neutral face, i.e. by making the face expression-invariant [10-15]. Hence, developing a robust face recognition algorithm that is insensitive to expression variations is one of the greatest challenges in this field.

In the present investigation, an expression-invariant face recognition system is devised to address the issue of expression variations.

The rest of the paper is organized as follows: Section 2 describes previous work related to expression-invariant techniques. Section 3 summarizes the framework of the present investigation. Section 4 briefly describes the databases used in the present work. Section 5 presents the results and discussion, followed by the conclusion in Section 6.


II. Related Work

This section reviews prominent earlier research related to this study, focusing mainly on expression-invariant techniques. Table 2 gives a brief overview of the work related to the present study.

Table 2. Overview of state-of-art of Expression Invariant techniques

| Techniques | Researchers |
|---|---|
| Expression-invariant | Martinez et al. (2003), Ramachandran et al. (2005), Liu et al. (2003), Li et al. (2006), Lee et al. (2008), Hua-Chun et al. (2008), Tasai et al. (2009), Hsieh et al. (2009), Z. Riaz et al. (2009), Petpairote et al. (2013), Varma et al. (2014), Biswas et al. (2014), Murty et al. (2014), Patil et al. (2016), Patil H. et al. (2016), Juneja et al. (2018) |

Earlier work on expression-invariant techniques is briefly summarized as follows:

Facial expressions make it difficult for a system to recognize a person's face. Martinez et al. [8] investigated various issues faced by face recognition systems. Ramachandran et al. [9] proposed a method that converts a smiling face into a neutral face to improve face recognition. In their method, a triangular mesh model called Candide is registered on the smiling face image, and the appearance of the smiling face is changed with a piecewise affine warp that moves the triangles to the neutral state. With PCA and LDA on the FERET database, the face recognition rate increased from 67.55% to 73.5%.
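The per-triangle step of a piecewise affine warp, as used by Ramachandran et al. [9], can be sketched as follows. This is a minimal NumPy illustration, not code from [9]: it assumes each triangle is given as three (x, y) vertices, and the function names are hypothetical. Three point correspondences determine a 2 × 3 affine matrix exactly, provided the source triangle is not degenerate.

```python
import numpy as np

def triangle_affine(src_tri, dst_tri):
    """Return the 2x3 affine matrix A with A @ [x, y, 1] = dst vertex for each src vertex."""
    src = np.asarray(src_tri, dtype=float)
    dst = np.asarray(dst_tri, dtype=float)
    # One homogeneous row [x, y, 1] per source vertex (3x3 system).
    src_h = np.hstack([src, np.ones((3, 1))])
    # Solve src_h @ A.T = dst; raises LinAlgError if the source triangle is degenerate.
    return np.linalg.solve(src_h, dst).T

def apply_affine(a, points):
    """Map an (m, 2) array of points with a 2x3 affine matrix."""
    pts = np.asarray(points, dtype=float)
    return np.hstack([pts, np.ones((len(pts), 1))]) @ a.T

# Hypothetical triangles: scale x by 2, then translate by (1, 1).
src = [[0, 0], [10, 0], [0, 10]]
dst = [[1, 1], [21, 1], [1, 11]]
A = triangle_affine(src, dst)
print(apply_affine(A, [[5, 5]]))  # maps (5, 5) to (11, 6)
```

To warp a whole image, each output pixel is assigned to its destination triangle and mapped back with that triangle's inverse transform; scikit-image's `PiecewiseAffineTransform` packages this step.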

Liu et al. [10] applied a facial asymmetry method (AsymFaces) to 2D images for person identification. AsymFaces was claimed to be invariant to changes in facial expression, and PCA was applied to the asymmetry features for dimension reduction. Lee and Kim [11] presented a method that transforms an input face image with an arbitrary expression into its corresponding neutral facial expression image through direct and indirect facial expression transformations. An Active Appearance Model (AAM) was used both to extract facial features from the input image and for facial expression recognition. Using the direct and indirect transformations, the expression feature vector was transformed into the corresponding neutral facial expression vector. Finally, face recognition was performed with a nearest neighbor classifier and LDA, and the results were found to be appropriate and practical when the number of training subjects was limited [11].

Tasai et al. [12] used the AdaBoost algorithm for classification, based on the differences in distance between 18 manually marked fiducial points on neutral and pseudo-neutral faces. With AdaBoost in the classification stage, the recognition rate increased to 96%. The pseudo-neutral images obtained by converting the facial expression were close to realistic images, but did not fully look like real face images [12].

Hsieh et al. [13] computed optical flow between expression-variant input faces and neutral reference faces, and used elastic image warping to remove the expression from the face image before recognizing the subject. The results improved under expression variations, but the optical flow computation was sensitive to illumination variations [13].

Petpairote and Madarasmi [14] proposed a Thin Plate Spline Warping (TPSW) method that warps expression faces to neutral faces, with a significant improvement in recognition accuracy. Face recognition experiments on the MUG-FED and AR-Face databases showed a significant improvement in recognition rate for the Principal Component Analysis (PCA) [15], Linear Discriminant Analysis (LDA) [16, 17] and Local Binary Pattern (LBP) [18] methods after the expression face was warped to a neutral face. This 2D approach to warping and recognition is computationally less expensive than 3D approaches. Varma et al. [19] developed an efficient face recognition system that was invariant to expression variations. A Self-Organizing Map (SOM) based network was used for feature extraction, and a k-NN ensemble method was used to classify faces and find an accurate match. The system was tested on the IITK and FERET databases, and the recognition rate was more than 91% [19].


A. Gap in Study

The review of related work in the previous section and of the state of the art shows that warping processes take considerable computation time and that the resulting images do not look realistic; the method presented in this work aims to overcome these limitations. The face is a popular attribute for automatic recognition, but variations due to facial expression produce large distortions in its appearance. To overcome this problem, an approach that improves the recognition rate is presented, in which an image with an expression is warped to create a neutral face. In a nutshell, the present investigation explores a technique that makes a face recognition system expression-invariant.


B. Objective of Study

The present investigation aims to devise an efficient expression-invariant technique for face recognition. The specific objectives of the present investigation are as follows:

1. To present an efficient expression-invariant technique for face recognition.

2. To compare face recognition results on the JAFFE, Yale and CK databases, which involve drastic expression variations.

Having outlined the objectives of the study, the next section presents the methodology adopted to carry it out.


III. Material and Method

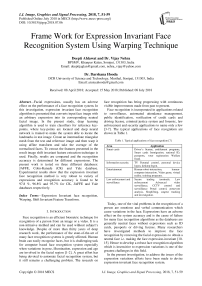

The major steps of the present investigation are depicted in Figure 1. The steps involved in implementing the system architecture are as follows:

Fig.1. Flow Diagram of Present Investigation


A. Image Acquisition

First, images of different persons with varying expressions are acquired from the selected databases. The system presented in this investigation uses images of a number of subjects with different expressions from the JAFFE [20], Yale [41] and CK [42] databases.


B. Image Preprocessing

Pre-processing is required to enhance the performance of the system. In the present study, an input test image may differ in size from the reference set, so for each input image the face is cropped, normalized and scaled.
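The crop, scale and normalize step can be sketched as below. The paper does not state its output size or normalization scheme, so this minimal NumPy sketch assumes a face bounding box (x, y, w, h) already known from detection, a hypothetical 128 × 128 output size, nearest-neighbour rescaling and zero-mean, unit-variance intensity normalization.

```python
import numpy as np

def preprocess_face(image, box, out_size=(128, 128)):
    """Crop a face box, rescale it to out_size, and normalize its intensities.

    image    : 2-D grayscale array
    box      : (x, y, w, h) face bounding box in pixels (assumed inside the image)
    out_size : (height, width) of the normalized face (assumed value)
    """
    x, y, w, h = box
    face = np.asarray(image, dtype=float)[y:y + h, x:x + w]
    out_h, out_w = out_size
    # Nearest-neighbour rescaling: choose a source row/column for every output pixel.
    rows = np.arange(out_h) * h // out_h
    cols = np.arange(out_w) * w // out_w
    face = face[rows[:, None], cols[None, :]]
    # Zero-mean, unit-variance normalization (guards against a flat patch).
    std = face.std()
    return (face - face.mean()) / (std if std > 0 else 1.0)

img = np.arange(256 * 256).reshape(256, 256) % 251   # synthetic 256 x 256 image
face = preprocess_face(img, box=(50, 40, 100, 120))
print(face.shape)  # (128, 128)
```

Bilinear or area interpolation would give smoother results; nearest-neighbour sampling keeps the sketch dependency-free.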


C. Face Detection

After the preprocessing stage, face detection is applied to the image. Face detection can be defined as a two-class classification problem in which an image region is classified as either 'face' or 'not-a-face'. The detector developed by Viola and Jones in the 2000s is one of the most successful face detectors and can run in real time [21]. Given a test image, the goal of a face detection algorithm is to determine whether a face is present; if it is, the algorithm returns the location of the face [22]. In this study, the basic AdaBoost-based Viola-Jones algorithm is used for face detection, as it is one of the fastest and most successful approaches for real-time systems. The three main contributions of the Viola-Jones face detector [21] are:

• Haar-like features: The detector introduces a new image representation, the 'integral image', which enables fast feature computation. The integral image can be computed from the input image with a few operations per pixel.

• AdaBoost learning algorithm: Haar-like features yield a very large feature set. To ensure fast classification, AdaBoost is used to build a classifier from a small set of critical features.

• Cascade classifier: Classifiers of increasing complexity are combined in a cascade, which increases the speed of the detector. The simple early classifiers quickly discard background regions, and the more complex later classifiers concentrate computation on promising face regions.
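The integral image and a Haar-like feature from the first bullet can be sketched in a few lines of NumPy. This is an illustration of the idea in [21], not the detector implementation used in this work; the function names and the left-minus-right feature layout are illustrative assumptions. After one pass of cumulative sums, any rectangle sum costs four look-ups regardless of its size.

```python
import numpy as np

def integral_image(img):
    """ii[r, c] = sum of img[:r, :c]; a zero row/column is padded in for easy look-ups."""
    ii = np.zeros((img.shape[0] + 1, img.shape[1] + 1), dtype=np.int64)
    ii[1:, 1:] = np.cumsum(np.cumsum(img, axis=0), axis=1)
    return ii

def rect_sum(ii, r, c, h, w):
    """Sum of the h x w rectangle whose top-left pixel is (r, c): four look-ups."""
    return ii[r + h, c + w] - ii[r, c + w] - ii[r + h, c] + ii[r, c]

def haar_two_rect(ii, r, c, h, w):
    """Two-rectangle Haar-like feature: left h x w block minus the adjacent right block."""
    return rect_sum(ii, r, c, h, w) - rect_sum(ii, r, c + w, h, w)

img = np.arange(16).reshape(4, 4)
ii = integral_image(img)
print(rect_sum(ii, 1, 1, 2, 2))       # 5 + 6 + 9 + 10 = 30
print(haar_two_rect(ii, 0, 0, 4, 2))  # 52 - 68 = -16
```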

The Viola-Jones approach is used in this investigation because it detects faces at high speed and the system is easy to implement.
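Much of the detector's speed comes from early rejection in the cascade: most background windows fail one of the first, cheap stages, so the expensive later stages run only on face-like windows. A minimal sketch of that control flow, in which each stage is a hypothetical (score function, threshold) pair; these toy stages stand in for the boosted classifiers of [21] and are not a trained detector.

```python
from typing import Callable, List, Sequence, Tuple

Stage = Tuple[Callable[[Sequence[float]], float], float]

def cascade_classify(window: Sequence[float], stages: List[Stage]) -> Tuple[bool, int]:
    """Run stages in order; reject as soon as a stage score falls below its threshold.

    Returns (is_face, number_of_stages_evaluated).
    """
    for i, (score, threshold) in enumerate(stages, start=1):
        if score(window) < threshold:
            return False, i  # early rejection: later stages are never evaluated
    return True, len(stages)

# Hypothetical stages on a toy window of feature values, cheapest test first.
stages: List[Stage] = [
    (lambda w: w[0], 0.5),          # stage 1: one feature
    (lambda w: w[0] + w[1], 1.2),   # stage 2: two features
    (lambda w: sum(w), 2.0),        # stage 3: all features
]

print(cascade_classify([0.1, 0.9, 0.9], stages))  # (False, 1): rejected at stage 1
print(cascade_classify([0.8, 0.6, 0.9], stages))  # (True, 3): passes every stage
```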


D. Detection of landmark points

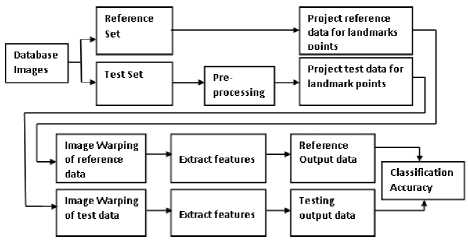

Landmark points, or fiducial points, are pixel coordinates that define the geometry of the face. They are a set of salient facial points, usually located at the corners and outer mid-points of the lips, the corners of the eyebrows, the corners of the eyes, the corners of the nostrils, the tip of the nose and the tip of the chin [23]. In previous works, model-based face recognition algorithms generated a grid over the face, which required a number of reference points (on average 64-80) that researchers marked manually or semi-automatically with the Elastic Bunch Graph Matching algorithm [24]. In this investigation, 81 landmark points are generated. The chosen feature points should represent the most important characteristics of the face and should be easy to extract.

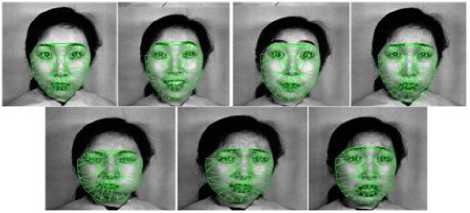

In this study, 81 landmark points are chosen on each facial image: 19 for the chin, 18 for the lips (including the mouth corners, upper lip and lower lip), 10 for the nose, 18 for the two eyes and 16 for the two eyebrows, as illustrated in Figure 2. As an example, JAFFE images are shown with the 81 feature points [20]. Figure 3 shows the fiducial points on different face images of Subject 1.
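One way to organize the 81 landmarks is as contiguous index ranges per facial region, so that a region can be sliced out of an (81, 2) coordinate array. Only the per-region counts below come from the text; the region ordering and the names `REGION_COUNTS` and `region_slices` are assumptions for illustration.

```python
# Per-region landmark counts from the text; the ordering of regions is an assumption.
REGION_COUNTS = [
    ("chin", 19),
    ("lips", 18),      # mouth corners, upper lip, lower lip
    ("nose", 10),
    ("eyes", 18),      # both eyes
    ("eyebrows", 16),  # both eyebrows
]

def region_slices(counts):
    """Map each region name to a slice into an (81, 2) landmark array."""
    slices, start = {}, 0
    for name, n in counts:
        slices[name] = slice(start, start + n)
        start += n
    return slices, start

SLICES, TOTAL = region_slices(REGION_COUNTS)
print(TOTAL)           # 81
print(SLICES["nose"])  # slice(37, 47, None)
```

With a NumPy array `landmarks` of shape (81, 2), `landmarks[SLICES["lips"]]` would then return the 18 lip points.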

Fig.2. 81 landmark points on reference face image

Fig.3. Facial landmark points on reference and test face images of JAFFE data set


E. Extract facial region from image

Once the set of facial landmarks is defined, the landmarks on the face boundary are used to extract the facial region by forming a polygon and masking the pixel values outside it. This refines the face image by removing bias from the background and the hair.
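The masking step can be sketched with the even-odd (ray-casting) point-in-polygon rule. This minimal NumPy sketch assumes the boundary landmarks are supplied in order around the face as (x, y) pixel coordinates; `polygon_mask` and `mask_face` are hypothetical helper names, and the square used below stands in for real boundary landmarks.

```python
import numpy as np

def polygon_mask(shape, polygon):
    """Boolean mask of pixels whose centres lie inside a polygon (even-odd rule).

    shape   : (height, width) of the image
    polygon : (k, 2) array of (x, y) vertices listed in order around the face
    """
    h, w = shape
    ys, xs = np.mgrid[0:h, 0:w]
    px, py = xs + 0.5, ys + 0.5  # test the centre of each pixel
    poly = np.asarray(polygon, dtype=float)
    inside = np.zeros(shape, dtype=bool)
    x0, y0 = poly[-1]
    for x1, y1 in poly:
        # Does edge (x0, y0)-(x1, y1) straddle the horizontal line through the pixel centre?
        straddles = (y1 > py) != (y0 > py)
        with np.errstate(divide="ignore", invalid="ignore"):
            x_cross = x1 + (py - y1) * (x0 - x1) / (y0 - y1)
            # Toggle pixels whose rightward ray crosses this edge.
            inside ^= straddles & (px < x_cross)
        x0, y0 = x1, y1
    return inside

def mask_face(image, polygon):
    """Set every pixel outside the face polygon to zero (gray or colour images)."""
    img = np.asarray(image, dtype=float)
    mask = polygon_mask(img.shape[:2], polygon)
    if img.ndim == 3:
        mask = mask[..., None]  # broadcast over colour channels
    return np.where(mask, img, 0.0)

square = [(2, 2), (6, 2), (6, 6), (2, 6)]   # hypothetical boundary landmarks
print(polygon_mask((10, 10), square).sum())  # 16 pixels (a 4 x 4 block)
```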


F. Facial structure: Triangular mesh

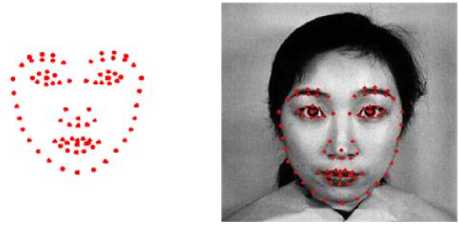

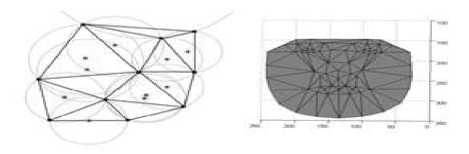

A set of triangles connecting the facial landmarks is used to pre-compute a triangular mesh for all the faces, as shown in Figure 4.

Fig.4. Resulting triangular facial mesh

This mesh is created using Delaunay triangulation, a construction in which no landmark lies inside the circumcircle of any triangle (the triangulation is unique when no four landmarks lie on a common circle). Among all triangulations of the landmarks, it maximizes the smallest angle, so it tends to avoid thin, skinny triangles. Once a general facial mesh has been generated, a corresponding mesh can be created for every face by applying the same triangles to that face's landmarks. Figure 5 shows the triangular facial mesh on JAFFE faces with different expression variations.
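The empty-circumcircle property can be checked directly, which gives a small brute-force triangulator for illustration. This sketch assumes points in general position (no three collinear, no four cocircular) and costs O(n^4), so it is only for small examples; for 81 landmarks in practice, `scipy.spatial.Delaunay`, whose `simplices` attribute lists triangle vertex indices, is the usual choice. The function names are illustrative.

```python
from itertools import combinations
import numpy as np

def orient(a, b, c):
    """Twice the signed area of triangle abc (positive if counter-clockwise)."""
    return (b[0] - a[0]) * (c[1] - a[1]) - (b[1] - a[1]) * (c[0] - a[0])

def in_circumcircle(a, b, c, p):
    """True if p lies strictly inside the circumcircle of triangle abc."""
    m = np.array([
        [q[0] - p[0], q[1] - p[1], (q[0] - p[0]) ** 2 + (q[1] - p[1]) ** 2]
        for q in (a, b, c)
    ], dtype=float)
    # The determinant is positive for a point inside a counter-clockwise triangle's
    # circumcircle; multiplying by the orientation makes the test orientation-free.
    return np.linalg.det(m) * orient(a, b, c) > 0

def delaunay_brute_force(points):
    """All triangles whose circumcircle contains no other point (small n only)."""
    pts = [tuple(map(float, p)) for p in points]
    tris = []
    for i, j, k in combinations(range(len(pts)), 3):
        a, b, c = pts[i], pts[j], pts[k]
        if orient(a, b, c) == 0:
            continue  # collinear triple: not a triangle
        others = (pts[m] for m in range(len(pts)) if m not in (i, j, k))
        if not any(in_circumcircle(a, b, c, p) for p in others):
            tris.append((i, j, k))
    return tris

pts = [(0, 0), (4, 0), (0, 3), (5, 4), (2, 1)]  # four hull points, one interior point
tris = delaunay_brute_force(pts)
print(len(tris))  # 4 triangles, all sharing the interior point
```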

Fig.5. Triangular mesh created between facial landmarks on JAFFE database


G. Interpolation between landmark points

The facial meshes formed in the previous step on the reference image and on the test image can now be compared. To morph between the two resulting meshes, an intermediate facial mesh is interpolated and both the test and reference faces are warped to it. The intermediate facial mesh is generated by weighting between the two sets of facial landmarks using equation (1):

For all landmarks $i$,

$$ l_{m,i} = (1 - t)\, l_{r,i} + t\, l_{t,i} \qquad (1) $$

where $l_{m,i}$ is the $i$th interpolated mid-point landmark, $l_{r,i}$ is the $i$th reference landmark, $l_{t,i}$ is the $i$th test image landmark, and $t$ is a weight between 0 and 1, where $t = 0$ gives the reference image's facial landmarks and $t = 1$ gives the test image's landmark points.
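Equation (1) is a per-landmark linear interpolation and vectorizes directly. A minimal sketch, assuming the reference and test landmarks are stored as corresponding (n, 2) arrays; the default t = 0.5 (a midway mesh) and the function name are assumptions, since the text only requires t in [0, 1].

```python
import numpy as np

def intermediate_landmarks(ref_pts, test_pts, t=0.5):
    """Equation (1): l_m,i = (1 - t) * l_r,i + t * l_t,i for every landmark i.

    ref_pts, test_pts : (n, 2) arrays of corresponding (x, y) landmarks
    t                 : weight in [0, 1]; 0 -> reference landmarks, 1 -> test landmarks
    """
    if not 0.0 <= t <= 1.0:
        raise ValueError("t must lie in [0, 1]")
    ref = np.asarray(ref_pts, dtype=float)
    test = np.asarray(test_pts, dtype=float)
    if ref.shape != test.shape:
        raise ValueError("landmark arrays must have the same shape")
    return (1.0 - t) * ref + t * test

ref = [[10, 20], [30, 40]]    # hypothetical reference landmarks
test = [[14, 20], [30, 48]]   # hypothetical test landmarks
print(intermediate_landmarks(ref, test))  # midway mesh: [[12, 20], [30, 44]]
```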


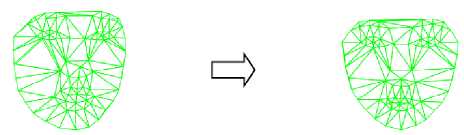

H. Warping towards intermediate mesh

After the intermediate facial mesh is calculated, the reference and test images are warped using a thin plate spline based warping technique. The thin plate spline (TPS) method was popularized by Fred L. Bookstein in the context of biomedical image analysis [25]. The term "thin plate spline" refers to the physical analogy of bending a thin sheet of metal. Using thin plate splines for image warping involves finding the transformation, over a set of given nodes or landmark points, that minimizes a bending energy function. When an image is warped, all landmark points are used to compute this transformation, which is then used to interpolate and transform the pixels into the warped image. The method gives good results for scattered, sparse features such as facial landmark points. Figure 6 shows the geometry change when a facial expression (fear) is warped to a reference face. The purpose of the algorithm is to transform an expression face image into a neutral face by mapping the landmarks of the expression face onto the landmarks of a preset standard neutral face.

Fig.6. Geometry change when warping an expression (fear) to a neutral face
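The TPS fit described above can be sketched in NumPy following Bookstein's formulation [25], as written out in equations (3)-(9) of this section: build K and P from the source landmarks, solve the (n + 3) × (n + 3) system L once per output coordinate, and evaluate z(x, y). This is a minimal sketch, not the authors' implementation. The optional parameter `lam`, which adds λ to the diagonal of K, is the standard way of solving the smoothed energy of equation (10) and is included here as an assumption; the function names are illustrative.

```python
import numpy as np

def tps_kernel(r2):
    """U = r^2 log(r^2) from equation (3), given squared distances r2; U(0) = 0."""
    r2 = np.asarray(r2, dtype=float)
    out = np.zeros_like(r2)
    nz = r2 > 0
    out[nz] = r2[nz] * np.log(r2[nz])
    return out

def fit_tps(src, dst, lam=0.0):
    """Solve L [W | d1 dx dy]^T = [V | 0 0 0]^T for the x and y outputs at once.

    src, dst : (n, 2) corresponding landmarks (n >= 3, not all collinear)
    lam      : smoothing weight added to K's diagonal (0 = exact interpolation)
    Returns (src, params); params is (n + 3, 2): W on top, then d1, dx, dy.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    n = len(src)
    d2 = ((src[:, None, :] - src[None, :, :]) ** 2).sum(axis=-1)  # r_ij^2
    K = tps_kernel(d2) + lam * np.eye(n)                          # equation (5)
    P = np.hstack([np.ones((n, 1)), src])                         # equation (4)
    L = np.zeros((n + 3, n + 3))                                  # equation (7); Z block stays 0
    L[:n, :n], L[:n, n:], L[n:, :n] = K, P, P.T
    Y = np.vstack([dst, np.zeros((3, 2))])                        # (V | 0 0 0) per coordinate
    return src, np.linalg.solve(L, Y)                             # equation (8)

def eval_tps(model, pts):
    """Equation (9): d1 + dx*x + dy*y + sum_i w_i U(|(x_i, y_i) - (x, y)|)."""
    src, params = model
    pts = np.asarray(pts, dtype=float)
    n = len(src)
    d2 = ((pts[:, None, :] - src[None, :, :]) ** 2).sum(axis=-1)
    W, d = params[:n], params[n:]
    return tps_kernel(d2) @ W + np.hstack([np.ones((len(pts), 1)), pts]) @ d

# Hypothetical landmarks: the corners stay fixed while the centre point moves.
src = [[0, 0], [1, 0], [0, 1], [1, 1], [0.5, 0.5]]
dst = [[0, 0], [1, 0], [0, 1], [1, 1], [0.6, 0.4]]
model = fit_tps(src, dst)
print(eval_tps(model, [[0.5, 0.5], [0.25, 0.25]]))
```

With `lam=0` the spline passes exactly through every landmark, and a purely affine landmark displacement yields zero weights W. To warp an image, a common approach is to fit the mapping from the target (neutral or intermediate) landmarks back to the source landmarks and sample the source image at the mapped position of every output pixel, which avoids holes in the result.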

TPSW method estimates the new coordinates that minimizes energy between expression points and the neutral reference points given by the energy function in (10) that includes the smoothing term as given in equation (2):

•(=Mg^(^($\^ (2)

The basis for solving the algorithm is given by a kernel function U using equation (3):

У = r2 log ( r2 ) , where r = (xa - xb) + (ya - yb)2 (3)

Given a set of source landmark points, we define P as a matrix of (3 * n) where n is the number of landmark points as depicted in equation (4):

■ 1 Х1

1 Х2

У 1

У 2

,3 * n

. 1 ^3 Уз.

Using kernel function, let K be a n*n matrix defined by equation (5) :

■ 0

^i)

. U(rui)

U(T 12 )

U (Г1„)"

U02n)

,n*n

U^) ... 0

Let Z is a 3*3 zero matrix as shown in equation (6):

z=lo 0 ol, 3*3 (6)

Finally, a matrix L is defined by combination of К, P, PT and Z as given in equation (7):

Where t is the weight between 0 and 1,1r is the matrix of reference image projected to the intermediate triangular mesh, 1£ is the matrix of target image projected to the intermediate triangular mesh. Finally, we obtain a synthesized neutral face.

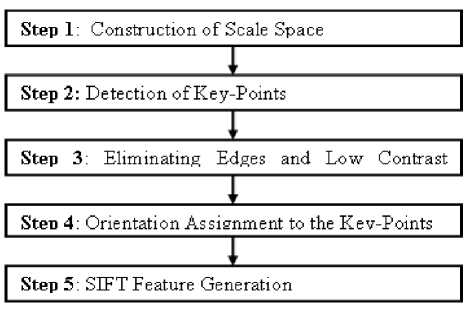

I. Feature Extraction and Classification

Feature extraction involves reduction of amount of resources required to explain a large set of data. Also initial data is suspected to be redundant, then it can be reduced to form a set of features. In the previous step, after obtaining a synthesized face, SIFT [26] is used for feature extraction with the advantage that SIFT features posses a strong robustness to illumination, pose and occlusion variations. It is widely and effectively used method for face recognition as it out performs PCA [15], Independent Analysis component (ICA) [27], LDA [16], Gabor transform [17] etc. The steps for feature extraction using SIFT is depicted in figure 7.

L = [К P], (n + 3) * (n + 3) (7)

Where, PT is the matrix transpose operator and L is a (n + 3) * (n + 3) matrix

The matrix L allows us to solve the bending energy equation. Inversing the matrix L on to another matrix У, which is defined as У = (V|0 0 0)T, which is a column vector of length (n + 3) where V is defined as any n-vector V = (v 1 ,17 2 , vn) . We derive a vector of n weights as W = (w 1 ,w2,..wn) and the coefficients d 1 , dx, dy of affine transformation as shown in equation (8). The affine transformation is a composition of translation, rotation, dilation and shear transformations.

Fig.7. Steps for feature extraction using SIFT

L-1y = (W|d1 dx dy)T (8)

To define z ( x, y), the elements of L 1 У can be used everywhere in the plane, which is shown by equation (9):

z(x,y) = di + dx + dy + Zn=i(w/U(|P/ - (x,y)|))

Every test image is matched with the corresponding database reference image individually and the matching results are used to arrive at a final decision. Cosine similarity measure [28] finds its application in data mining, for searching a required structure in huge databases is used for matching the image feature vectors. The database image with maximum matching score is a match. The present system is tested on databases with expression variations like: anger, fear, disgust, surprise, sad.

The final smoothing TPSW is obtained by minimizing the energy function given in equation (10) where parameter X is smoothness constraint weight and 1 ^ is smoothing term given in equation (2).

^TPS = En=i(li —/(Xi,yi)2) + A1^ (10)

After obtaining an intermediate mesh from both reference and test faces, we interpolate between the faces to obtain a mixed face using equation (11):

morp/ace = (1 — t) * 1r + t * 1£ (11)
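The matching rule, scoring every reference image by cosine similarity and taking the maximum, can be sketched as below. This minimal NumPy sketch assumes each face has already been reduced to a single fixed-length feature vector (for example by pooling its SIFT descriptors, a step the text does not detail); the gallery layout and subject labels are hypothetical.

```python
import numpy as np

def cosine_similarity(a, b):
    """cos(a, b) = a.b / (|a| |b|); returns 0.0 if either vector is all zeros."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return float(a @ b / denom) if denom > 0 else 0.0

def best_match(query, gallery):
    """Return (label, score) of the gallery vector most similar to the query."""
    scores = {label: cosine_similarity(query, vec) for label, vec in gallery.items()}
    label = max(scores, key=scores.get)
    return label, scores[label]

gallery = {"subject_1": [1.0, 0.0, 1.0], "subject_2": [0.0, 1.0, 0.0]}  # hypothetical
print(best_match([0.9, 0.1, 1.1], gallery))  # subject_1 has the highest score
```

Cosine similarity ignores the overall magnitude of a feature vector, so a uniform scaling of the descriptors does not change which reference image wins.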

IV. Database Used

The aim of this section is to determine the effectiveness of the proposed work on publicly available databases with large facial variations. The experiments were conducted on three facial expression databases, namely JAFFE, Yale and Cohn-Kanade. The performance of the proposed method is compared with other state-of-the-art techniques available in the literature. A brief description of each dataset is presented in this section.


A. JAFFE database

The Japanese Female Facial Expression (JAFFE) database was planned and assembled by Michael Lyons, Miyuki Kamachi and Jiro Gyoba at the Psychology Department of Kyushu University [20]. It contains 213 grayscale images of 10 Japanese female models with 7 facial expressions (6 facial expressions + 1 neutral face). Each image is 256 × 256 pixels. Figure 8 shows sample images of the 10 subjects in the database.

Fig.8. Facial images of 10 subjects of JAFFE Database


B. Yale Database

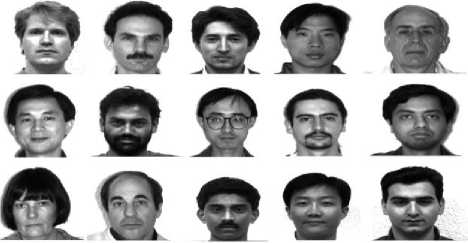

The Yale Face Database [41] contains 165 grayscale images of 15 individuals in GIF format. There are 11 images per subject, one for each facial expression or configuration: center-light, with glasses, happy, left-light, without glasses, normal, right-light, sad, sleepy, surprised and wink. Each image is 320 × 243 pixels. Figure 9 shows sample images of the 15 subjects in the database.

Fig.9. Facial images of 15 subjects of Yale Database


C. Cohn-Kanade database

The CK database [42] includes 486 sequences from 97 posers. Each sequence begins with a neutral expression and proceeds to a peak expression. The peak expression of each sequence is fully FACS (Facial Action Coding System) coded and given an emotion label. Figure 10 shows sample images of the CK database.

Fig.10. Sample images of CK database

Table 3 summarizes the databases used in the present study, which are freely available online for research purposes.

Table 3. Brief description of face databases

| S. No. | Database | Description | Link |
|---|---|---|---|
| 1 | JAFFE [20] | Face images of 10 subjects, with 6 different facial expressions and 1 neutral expression per subject; 213 grayscale images with a resolution of 256 × 256. | org/ |
| 2 | Yale [41] | 15 individuals, with 5 different expressions and a neutral image per subject; 165 grayscale images with a resolution of 320 × 243. | .edu/datasets/yale_face_dataset_original/ |
| 3 | CK [42] | Images of 97 individuals with six expressions; each sequence begins with a neutral expression and proceeds to a peak expression. | u/ckagree/ |

The aim of this study is to design a single-image-based face recognition system that converts an expression face into a neutral face and recognizes the individual to whom the face belongs.


V. Results and Discussion


A. Experimental Results

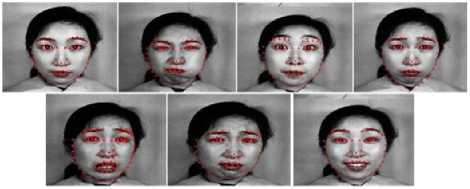

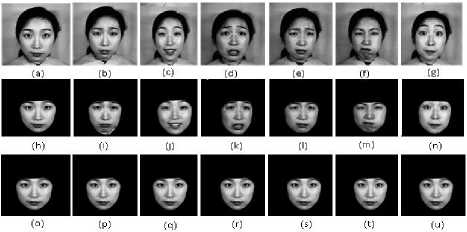

The test images of the database are first normalized to the same scale, position and rotation, and landmark points are marked on the neutral face and the test face as shown in Figure 3. The expression face image is then transformed into a neutral face image using the thin plate spline warping technique. After this transformation, SIFT is used for feature extraction and the results are compared using the cosine similarity measure. Figure 11 depicts the neutral and expression faces, the cropped faces and their corresponding warped faces. Panels (a) to (g) show the reference face and the expression faces of the same subject from the JAFFE database, and panels (h) to (n) show the corresponding cropped faces. Panels (o) to (u) show that, after warping, each expression face has been morphed to match the reference image.

Fig.11. Examples of warping of a face: (a) reference face of subject 1; (b)-(g) expression faces of subject 1; (h)-(n) cropped images of the same individual; (o)-(u) images warped to the neutral face.

Table 4 shows the recognition accuracy for different expressions on the JAFFE and CK databases. For the JAFFE dataset, anger has the highest recognition accuracy, 100% with the SVM classifier. The accuracy for disgust is close to that for anger, while fear and surprise have the lowest recognition rates because of large variations in the eye and mouth regions of the face.

Similarly, the average recognition accuracy of the different expressions for the CK dataset is shown in Table 4. Disgust has the highest average recognition accuracy, 100%, followed by anger with 99.4%. Fear and surprise have the lowest recognition rates, 96.5% and 95.1% respectively, again because of large variations in the eye and mouth regions.

Table 4. Recognition accuracy for JAFFE and CK datasets

| Expression | JAFFE: SVM (% accuracy) | JAFFE: k-Means (% accuracy) | CK: SVM (% accuracy) | CK: k-Means (% accuracy) |
|---|---|---|---|---|
| Anger | 100 | 61.8 | 99.14 | 63.4 |
| Disgust | 99.4 | 54.1 | 99.8 | 62.1 |
| Fear | 95.3 | 55.6 | 95.4 | 59.8 |
| Happy | 98.9 | 58.7 | 98.5 | 61.1 |
| Sad | 96.8 | 52.4 | 96.3 | 58.5 |
| Surprise | 94.68 | 53.8 | 94.8 | 57.3 |

Similarly, as shown in Table 5, the Yale dataset has an average recognition accuracy of approximately 98% for the happy and sad expressions. Wink images have the lowest recognition rate, 92.9%.

Table 5. Recognition accuracy for Yale database

| Expression | Happy | Sad | Sleepy | Surprise | Wink |
|---|---|---|---|---|---|
| Recognition rate (%) | 98.7 | 97.9 | 95.10 | 94.5 | 92.9 |


B. Performance Comparison

In this section, the results obtained with the proposed work are compared with other state-of-the-art techniques in Table 6 and Table 7. After an extensive review of the literature on expression-invariant techniques, only works that report results on the JAFFE and Yale databases are included. Table 6 presents the recognition rates of the listed methods under varying expressions on the JAFFE database. The recognition rates of higher-order SVD, LLE-Eigen and PCA-FLD-ANN are shown in the first, second and third rows respectively, and the proposed warping method is shown in the fourth row. The proposed algorithm provides a significant improvement in recognition rate over these state-of-the-art algorithms.

Table 6. Comparison of recognition accuracy for JAFFE database

| Algorithm | Recognition rate |
|---|---|
| Higher-order SVD [36] | 92.96% |
| LLE-Eigen [37] | 93.93% |
| PCA-FLD-ANN [35] | 84.90% |
| Proposed approach | 97.80% |

Table 7 presents results of state-of-the-art techniques on the Yale dataset. The table shows that the proposed technique achieves a higher recognition rate than the other listed techniques.

Table 7. Performance comparison with other approaches on Yale database

| Algorithm | Recognition rate |
|---|---|
| Gabor-NNDA [38] | 90.00% |
| Wavelet-DCT [39] | 91.82% |
| FT-DCT-DWT [40] | 82.50% |
| Proposed approach | 95.70% |


VI. Conclusion

In this investigation, a face recognition system that is invariant to facial expressions is presented. The method converts an input face image with an arbitrary expression into its corresponding neutral facial image. A deep learning algorithm is used to train the classifier model for reference key-points, and the network is trained to locate the landmarks in the test image. An intermediate triangular mesh is then created from the test and reference images, both images are warped using an affine transform, and the average of the two is taken. Finally, the result is compared with the reference face image and the recognition accuracy is determined for different expressions. The average recognition rate is observed to be 96.8%, 95.4% and 97.8% for the JAFFE, Yale and CK databases, respectively.

The work presented here can contribute to military and public security, surveillance and other fields. Moreover, this approach is less computationally expensive than 3D approaches. Future work may apply the proposed technique to recognition under multiple variations, such as illumination and pose. In summary, the present work is an attempt to devise a face recognition system that is invariant to expressions.

References: Frame work for expression invariant face recognition system using warping technique

- H. Drira,Ben Amor, B.,A.Srivastava,M. Daoudi, R.Slama, 3D Face Recognition under Expressions, Occlusions, and Pose Variations, IEEE Transactions in PatternAnalysis and Machine Intelligence, vol. 35, no. 9, pp. 2270-2283, 2013.

- Chintalapati, S., Raghunadh,M. V., Illumination, Expression and Occlusion Invariant Pose-Adaptive Face Recognition System for Real-Time Application, International Journal of Engineering Trends and Technology(IJETT), 2014.

- Chellappa R., Wilson C.L. , and Sirohey S., Human and machine recognition of faces: A survey, Proceedings of the IEEE, vo. 83, no. 5, pp. 705-740, 1995.

- Jafri R., Arabnia H. R., A Survey of Face Recognition Techniques, Journal of Information Processing Systems, vol. 5, no. 2, pp. 41-68, 2009.

- Phillips P. J., Moon H., Rauss P. J., and Rizvi S. A., The FERET Evaluation Methodology for Face Recognition Algorithms, IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 22, no. 10, pp. 1090-1104, 1995.

- Samal A. and Iyengar P.A., Automatic recognition and analysis of human faces and facial expressions: A survey, Pattern Recognition, vol. 25, no. 1, pp. 65-77, 1992.

- Zhao W., Chellappa R., Rosenfeld A., Phillips P.J., Face Recognition: A Literature Survey, ACM Computing Surveys, vol. 35, no. 5, pp. 399-458, 2003.

- A. M. Martinez, M.-H. Yang, and D. J. Kriegman, Special issue on face recognition, Computer Vision and Image Understanding: CVIU, vol. 91, pp. 1-5, 2003.

- M. Ramachandran, S.K. Zhou, D. Jhalani, and R. Chellappa, A Method for Converting a Smiling Face to A Neutral Face with Application to Face Recognition, Proc. ICAASSP Conf., vol. 2, pp. 977- 980, 2005.

- Y. Liu, K. L. Schmidt, J. F. Cohn, and S. Mitra, Facial asymmetry quantification for expression invariant human identification, Computer Vision and Image Understanding: CVIU, vol. 91, pp. 138-159, 2003.

- H.-S. Lee, and D. Kim, Expression-Invariant Face Recognition by Facial Expression Transformations, Pattern Recognition Letters, vol. 29, no. 13, pp. 1797-1805, 2008.

- P. Tasai, T.P. Tran, and L. Cao, Expression-Invariant Facial Identification, Proceedings of the 2009 IEEE International Conference on Systems, Man, and Cybernetics, pp. 5151-5155, 2009.

- C.-K. Hsieh, S.-H. Lai, and Y.-C. Chen, Expression-Invariant Face Recognition with Constrained Optical Flow Warping, IEEE Trans. on Multimedia, vol. 11, no. 4, pp. 600-610, 2009.

- C. Petpairote and S. Madarasmi, Face Recognition Improvement by Converting Expression Faces to Neutral Faces, ISCIT Conf., pp. 439 – 444, 2013.

- Turk M. and Pentland A., Eigen faces for recognition, Journal of Cognitive Neuroscience, vol. 3, no. 1, pp. 71-86, 1991.

- Lu J., Plataniotis K., and Venetsanopoulos A., Face recognition using LDA based algorithms, IEEE Transactions on Neural Networks, vol. 14, no. 1, pp. 195-200, 2003.

- Kumar C. Magesh, Thiyagarajan R., Natarajan S.P., Arulselvi S., Sainarayanan G., Gabor features and LDA based Face Recognition with ANN classifier, Proceedings of ICETECT, 2011.

- Ahonen T., Hadid A., Pietikäinen M., Face Recognition with Local Binary Patterns, Proc. Eighth European Conf. Computer Vision, pp. 469-481, 2004.

- Varma Rahul, Sandesh Gupta, and Phalguni Gupta, Face recognition system invariant to expression, In International Conference on Intelligent Computing, Springer International Publishing, pp. 299-307, 2014.

- Lyons Michael J., Akamatsu Shigeru, Kamachi Miyuki and Gyoba Jiro, Coding Facial Expressions with Gabor Wavelets, IEEE International Conference on Automatic Face and Gesture Recognition, Nara Japan, IEEE Computer Society, pp. 200-205, 1998.

- Viola P. and Jones Michael J., Robust real-time face detection, International Journal of Computer Vision, vol. 57, no. 2, pp. 137-154, 2004.

- Yang, Ming-Hsuan, David J. Kriegman, and Narendra Ahuja, Detecting faces in images: A survey, IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 24, no. 1, pp. 34-58, 2002.

- Z. Zeng, M. Pantic, G. I. Roisman, T. S. Huang, A Survey of Affect Recognition Methods: Audio, Visual, and Spontaneous Expressions, IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 31, no. 1, 2009.

- Wiskott L., et al., Face Recognition by Elastic Bunch Graph Matching, IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 19, no. 7, pp. 775-779, 1997.

- F. L. Bookstein, Principal Warps: Thin-Plate Splines and the Decomposition of Deformations, IEEE Trans. on Pattern Analysis and Machine Intelligence, vol. 11, no. 6, 1989.

- Lowe D., Distinctive image features from scale-invariant keypoints, Int. Journal of Computer Vision, vol. 60, no. 2, pp. 91-110, 2004.

- Hyvärinen Aapo and Oja Erkki, Independent Component Analysis: Algorithms and Applications, Neural Networks, vol. 13, no. 4-5, pp. 411-430, 2000.

- Pirlo G., Impedovo D., Cosine similarity for analysis and verification of static signatures, IET Biometrics, vol. 2, no. 4, pp. 151-158, 2013.

- Biswas A., Ghose M.K., Expression Invariant Face Recognition using DWT SIFT Features, International Journal of Computer Applications, vol. 92, no. 2, pp. 30-32, 2014.

- Li, X., Mori, G., Zhang, H., Expression-invariant face recognition with expression classification, In Proc. Third Canadian Conf. on Computer and Robot Vision, p. 77, 2006.

- Patil, H.Y., Kothari, A.G. and Bhurchandi, K.M., Expression invariant face recognition using semidecimated DWT, Patch-LDSMT, feature and score level fusion, Applied Intelligence, vol. 44, no. 4, pp. 913-930, 2016.

- Patil, H.Y., Kothari, A.G. and Bhurchandi, K.M., Expression invariant face recognition using local binary patterns and contourlet transform, Optik-International Journal for Light and Electron Optics, vol. 127, no. 5, pp. 2670-2678, 2016.

- Hua-Chun T., Yu-Jin Z., Expression-independent face recognition based on higher-order singular value decomposition, In The International Conference on Machine Learning and Cybernetics, 12-15 July 2008.

- Z. Riaz, C. Mayer, M. Wimmer, M. Beetz, and B. Radig, A Model Based Approach for Expressions Invariant Face Recognition, Department of Informatics, Technische Universität München, pp. 289-298, 2009.

- Tsai P., Jan T., Expression-invariant face recognition system using subspace model analysis, In The IEEE International Conference on Systems, Man and Cybernetics, 10-12 Oct. 2005.

- Hua-Chun T., Yu-Jin Z., Expression-independent face recognition based on higher-order singular value decomposition, In The International Conference on Machine Learning and Cybernetics, Kunming, China, 12-15 July 2008.

- Abusham E.E., Ngo D., Teoh A., Fusion of locally linear embedding and principal component analysis for face recognition, In Pattern Recognition and Image Analysis, 1 Jan. 2005.

- Kirtac K., Dolu O., Gokmen M., Face recognition by combining Gabor wavelets and nearest neighbor discriminant analysis, In The 23rd International Symposium on Computer and Information Sciences, 2008.

- Abbas A., Khalil M.I., Abdel-Hay S., Fahmy H.M., Expression and illumination invariant preprocessing technique for face recognition, In International Conference on Computer Engineering & Systems, 2008.

- Lihong Z., Cheng Z., Xili Z., Ying S., Yushi Z., Face recognition based on image transformation, In The WRI Global Congress on Intelligent Systems, May 2009.

- http://vision.ucsd.edu/datasets/yale_face_dataset_original/yalefaces.zip

- http://www.consortium.ri.cmu.edu/ckagree/

- Murty G.S., SasiKiran J., Vijaya Kumar V., Facial expression recognition based on features derived from the distinct LBP and GLCM, International Journal of Image, Graphics and Signal Processing (IJIGSP), vol. 2, no. 1, pp. 68-77, 2014.

- Juneja K., Rana C., Multi Featured Fuzzy based Block Weight Assignment and Block Frequency Map Model for Transformation Invariant Facial Recognition, International Journal of Image, Graphics and Signal Processing (IJIGSP), vol. 10, no. 3, pp. 1-8, 2018.