Human Emotion Recognition System

Author: Dilbag Singh

Journal: International Journal of Image, Graphics and Signal Processing (IJIGSP) @ijigsp

Published in issue: No. 8, Vol. 4, 2012.

Open access

This paper discusses the application of facial-expression feature extraction combined with a neural network for recognizing different facial emotions (happy, sad, angry, fearful, surprised, neutral, etc.). Humans are capable of producing thousands of facial actions during communication that vary in complexity, intensity, and meaning. The paper analyses the limitations of an existing system, Emotion recognition using brain activity. Using an existing simulator, I achieved 97 percent accurate results with an approach that is simpler and easier than the Emotion recognition using brain activity system. The proposed system relies on the human face, since the face also reflects human brain activity and emotions. A neural network is used to obtain better results. At the end of the paper, the existing Human Emotion Recognition System is compared with the new one.

Emotions, Visible color difference, Mood, Brain activity

Short URL: https://sciup.org/15012363

IDR: 15012363

Full text of the article: Human Emotion Recognition System

Published Online August 2012 in MECS

We know that emotions play a major role in a Human life. At different kind of moments or time Human face reflects that how he/she feels or in which mood he/she is. Humans are capable of producing thousands of facial actions during communication that vary in complexity, intensity, and meaning. Emotion or intention is often communicated by subtle changes in one or several discrete features.

The addition or absence of one or more facial actions may alter its interpretation. In addition, some facial expressions may have a similar gross morphology but indicate varied meaning for different expression intensities. In order to capture the subtlety of facial expression in non-verbal communication,

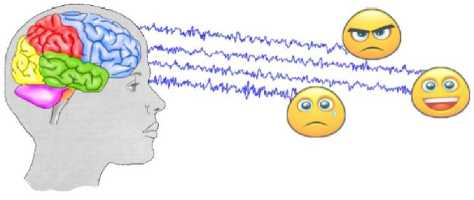

I will use an existing simulator which will be able to capture human emotions by reading or comparing facial expressions. This algorithm automatically extracts features and their motion information, discriminate subtly different facial expressions, and estimate expression intensity.Fig1 is showing how Emotion recognition using brain activity performs its task.

Fig. 1: Emotion recognition using brain activity

-

II. Problem definition

As described in the overview, the purpose of this paper is to analyze the limitations of the existing system Emotion recognition using brain activity [1]. In that system, the developer, Robert Horlings, used brain activity, which is a very difficult task: measuring the human brain with electroencephalography (EEG) is expensive, complex and time-consuming.

The system was evaluated on existing data, and the results of the analysis were 31 to 81 percent correct; even with fuzzy logic, 72 to 81 percent was reached only for two classes of emotions. Apparently the division between different emotions is not based (only) on EEG signals; it also depends on other factors.

In this paper I propose a system (using an existing simulator) that is capable of achieving up to 97 percent accuracy and is easier to use than the Emotion recognition using brain activity system. The proposed system relies on the human face, since the face also reflects human brain activity and emotions. I have also tried to use a neural network, via the existing simulator, to obtain better results.

III. Human Emotions

Emotion is an important aspect of the interaction and communication between people. Even though emotions are intuitively known to everybody, it is hard to define emotion. The Greek philosopher Aristotle thought of emotion as a stimulus that evaluates experiences based on the potential for gain or pleasure. Much later, in the seventeenth century, Descartes considered emotion to mediate between stimulus and response [2]. Today there is still little consensus about the definition of emotion. Kleinginna and Kleinginna gathered and analyzed 92 definitions of emotion from the literature available at the time [3]. They concluded that there is little consistency between the different definitions and suggested the following comprehensive definition:

Emotion is a complex set of interactions among subjective and objective factors, mediated by neural/hormonal systems, which can:

1. Give rise to affective experiences such as feelings of arousal, pleasure/displeasure;

2. Generate cognitive processes such as emotionally relevant perceptual effects, appraisals, labelling processes;

3. Activate widespread physiological adjustments to the arousing conditions; and

4. Lead to behaviour that is often, but not always, expressive, goal directed, and adaptive.

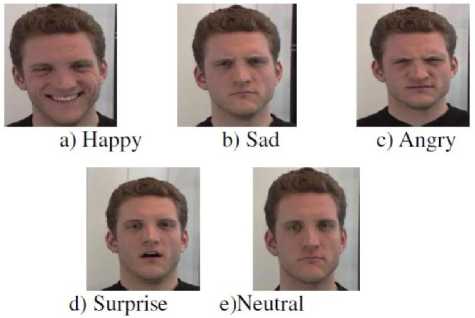

This definition shows the different sides of emotion. On the one hand emotion generates specific feelings and influences someone’s behavior. This part of emotion is well known and is in many cases visible to a person himself or to the outside world. On the other hand emotion also adjusts the state of the human brain, and directly or indirectly influences several processes [2]. Fig2 is showing different Human emotions which are use able in order to achieve our goal.

Fig. 2: Different Human emotions

In spite of the difficulty of defining it precisely, emotion is omnipresent and an important factor in human life. People’s moods heavily influence their way of communicating, but also their actions and productivity. Imagine two car drivers, one happy and the other very angry: they will drive very differently. Emotion also plays a crucial role in everyday communication. One can say a word like 'OK' in a happy way, but also with disappointment or sarcasm. In most communication this meaning is interpreted from the tone of voice or from non-verbal communication. Other emotions, like boredom, are in general expressed only by body language [1].

A large part of communication is done nowadays by computer or other electronic devices. But this interaction is a lot different from the way human beings interact. Most of the communication between human beings involves non-verbal signs, and the social aspect of this communication is important. Humans also tend to include this social aspect when communicating with computers [3].


IV. Related Work

Research efforts in human–computer interaction are focused on the means to empower computers (robots and other machines) to understand human intention, e.g. speech recognition and gesture recognition systems [1]. In spite of considerable achievements in this area during the past several decades, there are still a lot of problems, and many researchers are trying to solve them. Besides, there is another important but ignored mode of communication that may be important for more natural interaction: emotion plays an important role in contextual understanding of messages from others in speech or visual forms.

There are numerous areas in human–computer interaction that could effectively use the capability to understand emotion [2], [3]. For example, it is accepted that emotional ability is an essential factor for the next-generation personal robot, such as the Sony AIBO [4]. It can also play a significant role in the ‘intelligent room’ [5] and the ‘affective computer tutor’ [6]. Although limited in number compared with the efforts being made towards intention-translation means, some researchers are trying to realise man–machine interfaces with an emotion understanding capability.

Most of them are focused on facial expression recognition and speech signal analysis [7]. Another possible approach for emotion recognition is physiological signal analysis. We believe that this is a more natural means of emotion recognition, in that the influence of emotion on facial expression or speech can be suppressed relatively easily, and emotional status is inherently reflected in the activity of the nervous system. In the field of psychophysiology, traditional tools for the investigation of human emotional status are based on the recording and statistical analysis of physiological signals from both the central and autonomic nervous systems [8].

Researchers at IBM recently reported an emotion recognition device based on mouse-type hardware [9]. Picard and colleagues at the MIT Media Laboratory have been exerting their efforts to implement an ‘affective computer’ since the late 1990s [10]. Although they demonstrated the feasibility of a physiological signal-based emotion recognition system, several aspects of its performance need to be improved before it can be utilized as a practical system. First, their algorithm development and performance tests were carried out with data that reflect intentionally expressed emotion.

Moreover, their data were acquired from only one subject, and, hence, their emotion recognition algorithm is user-dependent and must be tuned to a specific person. It seems natural to start from the development of a user-dependent system, as the speech recognition system began with a speaker-dependent system. Nevertheless, a user-independent system is essential for practical application, so that the users do not have to be bothered with training of the system. To our knowledge, there is no previous study that has demonstrated a physiological signal-based emotion recognition system that is applicable to multiple users.

Another problem with current systems is the required length of signals. At present, at least 2–5 min of signal monitoring is required for a decision [11]. For practical purposes, the required monitoring time should be reduced further. In this paper, a novel emotion recognition system based on the processing of physiological signals is presented. This system shows a recognition ratio much higher than chance probability, when applied to physiological signal databases obtained from tens to hundreds of subjects.

The system consists of characteristic waveform detection, feature extraction and pattern classification stages. Although the waveform detection and feature extraction stages were designed carefully, there was a large amount of within-class variation of features and overlap among classes. This problem could not be solved by simple classifiers, such as linear and quadratic classifiers, that were adopted for previous studies with similar purposes.


V. The scope of this research work

i. This research work deals with measuring the facial expressions of human beings.

ii. It does not deal with the rest of the human body.

iii. Existing methods that do the same job are also considered in this research work.

iv. Since it is not feasible to run these algorithms in a real environment, a simulator is developed to simulate the proposed work.

v. Different types of tests will be implemented using the proposed strategy.

vi. The experimental results will be visualized and an appropriate performance analysis drawn.

vii. An appropriate conclusion will be made based on the performance analysis.

viii. Suitable directions for future work will be drawn, considering the limitations of existing work.

Throughout the research work, emphasis has been on the use of either open-source tools and technologies or licensed software.


VI. Research Methodology

The step-by-step methodology followed for the human emotion recognition system using neural networks is:

1. Study and analyze various older techniques for human emotion recognition.

2. Based on the above analysis, use a simulator for human emotion recognition built with MATLAB version 7.5. (I have only used the simulator; it was developed by the team of Luigi Rosa, Italy.)

3. Compare the results achieved after executing the program with the earlier outputs.

Requirements: Matlab, Matlab Image Processing Toolbox, Matlab Neural Network Toolbox.


VII. How Emotion recognition system works

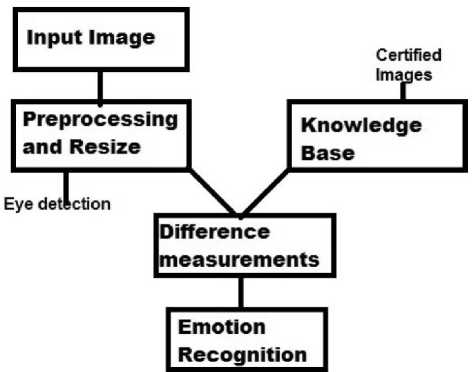

Consider the Fig8 in which required steps are given for emotion recognition.

Fig. 8: How Emotion recognition system works.

A. Knowledge Base

The knowledge base contains certified images that are used for comparison during emotion recognition. These images are of high quality and are stored in the given database. Whenever an input is given to the system, the system finds the relevant picture in the knowledge base by comparing the input with the certified images and gives an output (an emotion).

B. Preprocessing and Resize

The main goal of this step is to enhance the input image and to remove various types of noise. After applying these techniques, the image is resized so that only the human face is considered; this is done using the eye selection method.

C. Difference Measurements

This step finds the difference between the input image and the certified images (stored in the knowledge base) and passes the result to the emotion recognition step.

D. Emotion Recognition

This step takes the output of the difference measurement step, compares the results, and outputs the emotion whose certified image has the minimum difference from the input.
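The difference measurement and minimum-difference decision described in steps C and D can be sketched as follows. This is only an illustration, not the simulator's code: the mean absolute pixel difference, the function names and the tiny 2x2 "images" are all assumptions made for the example, and preprocessing and face cropping are assumed to have been done already.

```python
def mean_abs_difference(a, b):
    """Mean absolute pixel difference between two equally sized grayscale images.

    The choice of this particular difference measure is an assumption;
    the paper does not say which measure the simulator uses.
    """
    total = 0
    count = 0
    for row_a, row_b in zip(a, b):
        for pa, pb in zip(row_a, row_b):
            total += abs(pa - pb)
            count += 1
    return total / count


def recognize_emotion(image, knowledge_base):
    """Return (label, difference) for the closest certified image."""
    best_label, best_diff = None, float("inf")
    for label, references in knowledge_base.items():
        for ref in references:
            diff = mean_abs_difference(image, ref)
            if diff < best_diff:
                best_label, best_diff = label, diff
    return best_label, best_diff


# Hypothetical knowledge base: one 2x2 grayscale reference image per emotion.
knowledge_base = {
    "happy": [[[200, 200], [50, 50]]],
    "sad": [[[50, 50], [200, 200]]],
}
query = [[190, 210], [60, 40]]
label, diff = recognize_emotion(query, knowledge_base)
print(label, diff)
```

A real knowledge base would hold many full-size face images per emotion, and the comparison would normally be made on extracted features rather than raw pixels.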


VIII. EigenExpressions for Facial Expression Recognition

Luigi Rosa has proposed an algorithm for facial expression recognition that can classify a given image into one of the seven basic facial expression categories (happiness, sadness, fear, surprise, anger, disgust and neutral). Principal component analysis (PCA) is used to reduce the dimensionality of the input data while retaining the characteristics of the data set that contribute most to its variance, by keeping the lower-order principal components and ignoring the higher-order ones. Such low-order components contain the "most important" aspects of the data. The extracted feature vectors in the reduced space are used to train the supervised neural network classifier.

This approach is powerful because it does not require the detection of any reference point or node grid. The proposed method is fast and can be used for real-time applications. The code has been tested using the JAFFE database. Using 150 randomly selected images for training and 63 images for testing, without any overlap, an excellent recognition rate greater than 83 percent is obtained.
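To make the PCA step more concrete, here is a minimal sketch in plain Python that finds only the leading principal component of a small data set by power iteration and projects a sample onto it. It is not Luigi Rosa's implementation: the function names and the toy data are assumptions, real face images would be flattened into much longer vectors, several components would be kept, and the neural network classifier is omitted entirely.

```python
import math


def mean_vector(rows):
    """Column-wise mean of a list of equal-length feature vectors."""
    n = len(rows)
    return [sum(col) / n for col in zip(*rows)]


def covariance(rows, mean):
    """Sample covariance matrix of the rows after subtracting the mean."""
    centered = [[x - m for x, m in zip(row, mean)] for row in rows]
    n, d = len(centered), len(mean)
    return [[sum(r[i] * r[j] for r in centered) / (n - 1) for j in range(d)]
            for i in range(d)]


def top_component(cov, iterations=200):
    """Leading eigenvector of cov by power iteration (unit length).

    The start vector is arbitrary; a start that happens to be orthogonal
    to the leading eigenvector would not converge to it.
    """
    d = len(cov)
    v = [float(i + 1) for i in range(d)]
    for _ in range(iterations):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:
            break
        v = [x / norm for x in w]
    return v


def project(row, mean, component):
    """Coordinate of one sample along the principal component."""
    return sum((x - m) * c for x, m, c in zip(row, mean, component))


# Toy data: four 2-dimensional "images" lying on the line y = x.
samples = [[0.0, 0.0], [1.0, 1.0], [2.0, 2.0], [3.0, 3.0]]
mu = mean_vector(samples)
pc1 = top_component(covariance(samples, mu))
feature = project(samples[3], mu, pc1)
print(pc1, feature)
```

The projected values play the role of the reduced feature vector that would be passed on to a classifier.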

Major features:

1. Facial expression recognition based on Neural Networks.

2. PCA-based dimensionality reduction.

3. High recognition rate.

4. Intuitive GUI (Graphical User Interface).

5. Easy C/C++ implementations.

IX. Experimental Results

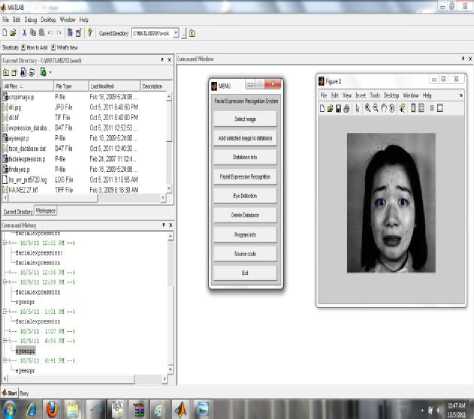

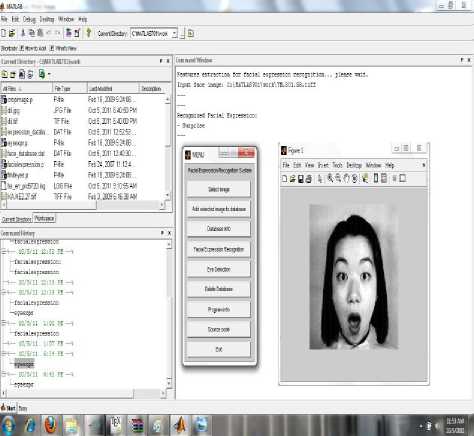

I have used Luigi Rosa's EigenExpressions for Facial Expression Recognition simulator in which by giving many inputs and get many outputs which are correct to great extent some of the results are shown in Fig. 9 and Fig. 10:-

Fig. 9:Eyedetection in EigenExpressions for Facial Expression Recognition simulator

Fig.10: Surprised recognition in EigenExpressions for Facial Expression Recognition simulator

-

X. Proposed Algorithm

Building on Fig. 8, this section explains how the proposed system achieves its task and what its design is. As mentioned so far, the system will be able to measure different types of emotion present at the same moment (for example, a man who is laughing but is actually sad). The algorithm I have developed is as follows:

Step 1: Input an image f(x,y).

Step 2: Apply the enhancement and restoration process to detect the face accurately and get g(x,y).

Step 3: % Compare the image with the emotion categories. Each category holds several different images (for example happy, very happy, or a sad person appearing happy), stored in an array emotion[i][j], where i is the emotion category and j is an image belonging to category i.

For (i = 1; i <= section size; i++)

For (j = 1; j <= total images in i; j++) if (g(x,y) like emotion[i][j]) { Result[i] = %age of results; Break; }

% Result[i] gives the relative measurement of how closely g(x,y) resembles emotion[i][j].

Step 4: For (i = 1; i <= section size; i++) Print section i "has" Result[i];

Step 5: Exit
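Steps 3 and 4 can be sketched in Python as below. The paper does not define the "like" comparison or how the percentage is computed, so this sketch assumes a pixel-based similarity percentage and keeps the best-matching image in each category; `similarity_percent`, `score_categories` and the sample data are hypothetical names and values used only for illustration.

```python
def similarity_percent(a, b, max_value=255):
    """Similarity of two equally sized grayscale images as a percentage.

    100 means identical, 0 means maximally different. This measure is an
    assumption standing in for the unspecified "like" test.
    """
    diffs = [abs(pa - pb)
             for row_a, row_b in zip(a, b)
             for pa, pb in zip(row_a, row_b)]
    return 100.0 * (1.0 - sum(diffs) / (len(diffs) * max_value))


def score_categories(g, emotion):
    """Return Result[i] for every category i: the best similarity found
    among the images emotion[i][j] of that category."""
    result = {}
    for category, images in emotion.items():
        result[category] = max(
            (similarity_percent(g, img) for img in images), default=0.0
        )
    return result


# Hypothetical categories with tiny 1x2 reference images.
emotion = {
    "happy": [[[255, 0]], [[0, 0]]],
    "sad": [[[0, 255]]],
}
g = [[255, 0]]  # enhanced input image g(x, y)
result = score_categories(g, emotion)
for category, percent in result.items():
    print(category, "has", percent)
```

Reporting one score per category, instead of a single winning label, is what lets the output express mixed emotions such as a laughing face that is partly sad.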


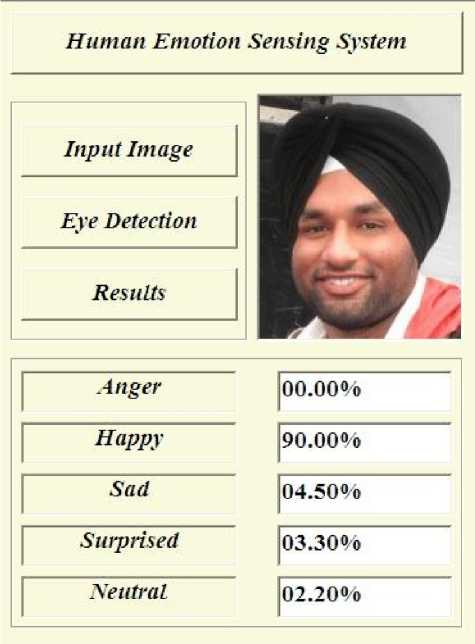

XI. Developed Simulator

In this section I describe the design of my system: how it looks and what happens when a given kind of input is supplied. The design was developed in Visual Basic and implemented using MATLAB. Fig. 11 shows the proposed system when it is run for the first time.

The system has three buttons:

a. Input Image: This button allows the user to browse for and select an image of interest.

b. Eye Detection: This button is needed to focus on the human face by comparing the eyes.

c. Results: Here the developed algorithm runs and gives results like those shown in Fig. 12, which shows the output of the proposed system when it is actually run.

Fig. 11: What the Human Emotion Sensing System looks like.

XII. Performance Results

After running Luigi Rosa's EigenExpressions for Facial Expression Recognition simulator, I compared its output with the results of other methods. An artificial neural network trained using the Levenberg-Marquardt algorithm was developed for emotion recognition through facial expression [2]. Fig. 13 illustrates the comparative performance with other approaches [3-5]. As is evident, the proposed approach has given very good performance for the datasets considered. We plan to extend this work to intelligent surveillance systems that detect anomalous behaviour (for example, predicting potential terrorist activities) in public places. We also aim to develop adaptive selection strategies to detect important features.

The results are shown below:

Table I. Comparison of different approaches for Human Sense Recognition

| Classifier | Performance (%) |
|---|---|
| Proposed Method | 98 |
| K-Nearest Neighbors | 80 |
| Rule Based System | 88 |
| Optical Flow Based System | 98 |
| Multilayer Perceptron | 93.3 |
| Template Matching | 95.5 |
| Hidden Markov Model | 85 |
| Support Vector Machines | 79.3 |
| Adaptive Neuro Fuzzy System | 76.4 |

Fig. 12: Results of Human Emotion Sensing System

Table III. Comparison of modelling facial expressions with modelling speech using HMMs.

| | Speech | Facial Expression |
|---|---|---|
| Human Action | Audio Action | Visual Action |
| Dimension (Including Time Series) | 2-dimensional Signals | 3- or 4-dimensional Signals |
| Action Unit | Phoneme | Expression Unit: Individual AUs or AU Combinations |
| HMM Unit | 1st-order 3-state HMM | 2nd-order 3-state HMM for Upper Facial Expression and 3rd-order 4-state HMM for Lower Facial Expression |
| HMM Unit Combinations | One Word | One Basic Facial Expression (e.g., joy) |
| Concatenated HMM Unit Combinations | Sentences | Continuously Varying Basic Facial Expressions |


A. Correspondence between facial expressions and elements of the Hidden Markov Model.

Correspondence between facial expressions and elements of the Hidden Markov Model is shown in Table II.

Table II. Correspondence between facial expressions and elements of the Hidden Markov Model.

| | Facial Expression | Hidden Markov Model |
|---|---|---|
| Hidden Process | Mental State | Model State |
| Observable | Expression (Facial Action) | Symbol Sequence |
| Temporal Domain | Dynamic Behavior | A Network of State Transition |
| Characteristics | Expression State Transition | Probability and Symbol Probability |
| Recognition | Expression Similarity | The Confidence of Output Probability |


B. Comparison of modeling facial expressions with modeling speech using HMMs.

A comparison of modeling facial expressions with modeling speech using HMMs is shown in Table III.

XIII. Conclusion

In this paper we have analyzed the limitations of the existing system Emotion recognition using brain activity. That system uses brain activity measured through EEG signals, which is a very difficult task: measuring the human brain with electroencephalography (EEG) is expensive, complex and time-consuming.

Even on existing data, the results of its analysis were 31 to 81 percent correct, and even with fuzzy logic 72 to 81 percent was achieved only for two classes of emotions. This paper also shows that human emotions can be sensed by reading faces and comparing them with images or data stored in a knowledge base. Using a system trained with neural networks, we have achieved up to 97 percent accurate results.

XIV. Future Work

Owing to my lack of experience in this research field, I spent most of my time understanding basic concepts and existing techniques rather than building my own technique. Nevertheless, good results were obtained using a simulator based on a neural network. The simulator still has limitations: for each input it gives a single yes/no answer. In future work I will therefore try to add fuzzy logic membership functions, since one input may belong to several categories rather than to a single one.
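As a rough illustration of what such a membership function might look like, the sketch below turns a per-emotion difference value into a degree of membership between 0 and 1, so that one face can belong partly to several emotions. The linear shape, the `scale` parameter and the sample differences are assumptions chosen for the example; the paper does not specify any of them.

```python
def membership(difference, scale):
    """Linear fuzzy membership: 1.0 at zero difference, 0.0 at `scale` or beyond."""
    if scale <= 0:
        raise ValueError("scale must be positive")
    return max(0.0, 1.0 - difference / scale)


def emotion_memberships(differences, scale=100.0):
    """Map each emotion's difference value to a membership degree."""
    return {label: membership(d, scale) for label, d in differences.items()}


# Hypothetical differences between an input face and each emotion's references.
differences = {"happy": 10.0, "surprised": 40.0, "sad": 150.0}
degrees = emotion_memberships(differences)
print(degrees)
```

Unlike a yes/no answer, the degrees need not sum to one, so a face can be reported as strongly happy and moderately surprised at the same time.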

In future work I will also try to add Gabor filter based feature extraction in combination with a neural network, in order to recognize different facial emotions with greater accuracy and better performance.

XV. References

[1] L. Franco, Alessandro Treves, “A Neural Network Facial Expression Recognition System using Unsupervised Local Processing”, Cognitive Neuroscience Sector – SISSA.

[2] C. L. Lisetti, D. E. Rumelhart, “Facial Expression Recognition Using a Neural Network”, in Proceedings of the 11th International FLAIRS Conference, pp. 328–332, 2004.

[3] C. Busso, Z. Deng, S. Yildirim, M. Bulut, C. M. Lee, A. Kazemzadeh, S. B. Lee, U. Neumann, S. Narayanan, “Analysis of Emotion Recognition using Facial Expression, Speech and Multimodal Information”, ICMI’04, PA, USA, 2004.

[4] Claude C. Chibelushi, Fabrice Bourel, “Facial Expression Recognition: A Brief Tutorial Overview”.

[5] C. D. Katsis, N. Katertsidis, G. Ganiatsas, and D. I. Fotiadis, “Toward Emotion Recognition in Car-Racing Drivers: A Biosignal Processing Approach”, IEEE Transactions on Systems, Man, and Cybernetics, Part A: Systems and Humans, Vol. 38, No. 3, 2008.

[6] ANDREASSI, J. L. (2000): “Psychophysiology: human behavior and physiological response” (Lawrence Erlbaum Associates, New Jersey, 2000)

[7] ARK, W., DRYER, D. C., and LU, D. J. (1999): “The emotion mouse”, 8th Int. Conf. Human-Computer Interaction, pp. 818–823.

[8] ARKIN, R. C., FUJITA, M., TAKAGI, T., and HASEGAWA, R. (2001): “Ethological modeling and architecture for an entertainment robot”, IEEE Int. Conf. Robotics & Automation, pp. 453–458.

[9] BERGER, R. D., AKSELROD, S., GORDON, D., and COHEN, R. J. (1986): “An efficient algorithm for spectral analysis of heart rate variability”, IEEE Trans. Biomed. Eng., 33, pp. 900–904.

[10] BING-HWANG, J., and FURUI, S. (2000): “Automatic recognition and understanding of spoken language - a first step toward natural human-machine communication”, Proc. IEEE, 88, pp. 1142–1165.

[11] BOUCSEIN, W. (1992): “Electrodermal activity” (Plenum Press, New York, 1992); BROERSEN, P. M. T. (2000a): “Facts and fiction in spectral analysis”, IEEE Trans. Instrum. Meas., 49, pp. 766–772.

Dilbag Singh is a student in the Department of Computer Science and Engineering at Guru Nanak Dev University, Amritsar, Punjab, India. He completed his master's degree in computer science in 2010 at Guru Nanak Dev University, Amritsar, Punjab, and is due to complete his M.Tech. in June 2012. His research interests include parallel computing, software structure, embedded systems, object detection and identification, and location sensing and tracking.
