Image retrieval based local motif patterns code

Authors: A. Obulesu, V. Vijay Kumar, L. Sumalatha

Journal: International Journal of Image, Graphics and Signal Processing (IJIGSP)

Issue: vol. 10, no. 6, 2018.


We present a new technique for content-based image retrieval that derives a local motif pattern (LMP) code co-occurrence matrix (LMP-CM). The image is divided into 2 x 2 grids. On each 2 x 2 grid, two different Peano scan motif (PSM) indexes are derived, one initiated from the top-left pixel and the other from the bottom-right pixel. Each PSM minimizes the local gradient while traversing the 2 x 2 grid. From these two PSM indexes, a unique LMP code ranging from 0 to 35 is derived for each 2 x 2 grid. A co-occurrence matrix is computed on the LMP codes, and grey level co-occurrence features are derived from it for efficient image retrieval. This paper extends our previous MMCM approach [46]. Experimental results on popular databases show an improved retrieval rate compared with existing methods.

Keywords: Peano scan, Optimal scan, Co-occurrence matrix, Image query

Short link: https://sciup.org/15015973

IDR: 15015973 | DOI: 10.5815/ijigsp.2018.06.07


Published Online June 2018 in MECS DOI: 10.5815/ijigsp.2018.06.07

I. Introduction

Digital images carry a large amount of vital information and give clarity and understanding about the objects they depict. Powerful handheld cameras with low prices, high resolution and large storage are now widely available, and with inexpensive high-bandwidth internet access, people increasingly communicate through images. This has made accurate, effective and efficient image retrieval both important and challenging.

In the early days of image retrieval (IR), images were retrieved using text indexes attached to each image. These methods require a lot of human interaction and therefore did not become popular. To overcome this, IR methods based on low-level features were proposed around the 1990s under the name "content-based image retrieval" (CBIR). The goal of a CBIR system is to retrieve, from a database, the set of images that most closely or exactly match the query image. CBIR feature descriptors can be divided into three categories: global, regional and local. Global features are derived from the entire image, whereas local features are derived from local neighborhood patches, and local features and descriptors exhibit higher discriminative power than global ones [1]. Chen et al. [2] represented global image features by color information using the image color distributions. The color difference histogram (CDH) was designed for color image analysis [3]. Wang et al. [4] represented global image information effectively using texture, color and shape features. The drawback of these global methods is that they do not encode the relationships among local neighboring structures and pixels. Region-based approaches have also been proposed for CBIR [5, 6]. These methods divide the image into blocks or regions of fixed or varying size. The region-based approach of [5] requires human interaction during the retrieval process, and region-based CBIR methods have also exploited the spatial and color arrangement of the image [6]. These region-based approaches [5, 6] show excellent retrieval performance, but at a high computational cost and with high-dimensional features.
Methods based on local features are used extensively in computer vision and image processing applications and have achieved excellent results [7-15]. Local image features include color, shape, texture and edges. Among these, color is the most prominent and has a profound impact on human perception, and color-based methods [16, 17] are very popular in CBIR because of their effectiveness and low computational complexity. Texture carries prominent local information such as randomness, coarseness and smoothness. Popular texture descriptors include gray level co-occurrence matrices, the Markov random field (MRF) model [18], Gabor filtering [19] and the local binary pattern (LBP) [20]. In the MPEG-7 standard, texture features are derived using the homogeneous texture descriptor, the texture browsing descriptor and the edge histogram descriptor [21].

Images are also represented by the types of structures or shapes present in them [22, 23]. Popular classical shape descriptors are Fourier transform coefficients [24], moment invariants [25] and MPEG-7 shape descriptors [26]. Further, CBIR models based on local structures are very popular because they describe significant image features efficiently and precisely [27-31, 33]. The texton co-occurrence matrix (TCM) [27] represents the spatial correlation of textons and derives statistical features from it. The multi-texton histogram (MTH) [28] integrates co-occurrence matrix features with histogram features. The micro-structure descriptor (MSD) [29] integrates the color, texture and shape information of a micro-structure with similar edge orientation. The structure elements' descriptor (SED) [30] extracts color and texture features on a local basis. The color difference histogram (CDH) [31] was proposed to compute the uniform color difference between two points under different backgrounds efficiently, and it combines color features with edge orientation. Hybrid information descriptors (HIDs) extract features across different image feature spaces using image structure and multi-scale analysis [32]. The local extrema co-occurrence pattern (LECoP) [33] extracts local directional information from the local extrema pattern. The saliency structure histogram (SSH) [3] integrates color volume and edge information to detect bar-shaped structures for image representation. These structure-based methods have shown promising results in image retrieval, but their performance degrades under rotation and scaling. In image retrieval and image classification, it is not possible to encode all the information contained in an image using only one type of feature such as color, texture, structure or shape.
Therefore, it is highly desirable to combine these features in such a way that the dimensionality does not increase too much. In this paper, a novel image feature descriptor, the local motif pattern code co-occurrence matrix (LMP-CM), is proposed for CBIR. The proposed method derives local structure information from two Peano scan motifs initiated at different corners of each 2 x 2 grid and pays particular attention to local spatial structure.

The rest of the paper is organized as follows: related work is presented in Section 2, the proposed method is explained in Section 3, experimental results and discussions are given in Section 4, and Section 5 concludes the paper.


II. Related Work

The basic unit of any image is the grey level intensity of a pixel, and an image is treated as a two-dimensional array of pixels. A pattern represents a connected component, i.e., a set of adjacent pixels with similar intensities, intensity ranges or attributes, and a pattern derived on a neighborhood represents a shape. Researchers have established many methods that derive significant features from local neighborhoods, especially 3 x 3 neighborhoods [27, 28, 29, 30]. Space-filling curves, or Peano scans, also derive a shape. A Peano scan is a sequence of connected points spanning a bounded region, which is why such scans are known as space-filling curves; the points may belong to a space of two or more dimensions. In the discrete setting, a space-filling curve is a connected path that passes through every point of the bounded subspace or grid exactly once. Peano scans are useful in pipelined computations, where scalability is the main performance objective [34, 35, 36], and they also provide a framework for handling higher-dimensional data, which is not easily possible with conventional methods [37]. Methods based on space-filling curves have been studied [38] for applications such as CBIR [39, 40, 41], data compression [42], texture analysis [43, 44] and computer graphics [45].
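As an illustration of the space-filling-curve idea only (it is not part of the proposed method), the minimal Python sketch below orders the cells of an n x n grid, with n a power of two, along a Hilbert curve using the widely used iterative index-to-coordinate conversion. The function names are hypothetical. Every cell is visited exactly once and consecutive cells are 4-adjacent, which is the connectedness property of a Peano scan described above.

```python
def hilbert_d2xy(n: int, d: int) -> tuple[int, int]:
    """Map position d along the Hilbert curve to (x, y) on an n x n grid.

    n must be a power of two and 0 <= d < n * n.
    """
    x = y = 0
    s = 1
    t = d
    while s < n:
        rx = 1 & (t // 2)
        ry = 1 & (t ^ rx)
        # Rotate/flip the current quadrant so that the sub-curves join up.
        if ry == 0:
            if rx == 1:
                x = s - 1 - x
                y = s - 1 - y
            x, y = y, x
        x += s * rx
        y += s * ry
        t //= 4
        s *= 2
    return x, y


def hilbert_scan(n: int) -> list[tuple[int, int]]:
    """Return every (x, y) cell of an n x n grid in Hilbert-curve order."""
    if n < 1 or n & (n - 1):
        raise ValueError("n must be a power of two")
    return [hilbert_d2xy(n, d) for d in range(n * n)]


if __name__ == "__main__":
    print(hilbert_scan(2))  # [(0, 0), (0, 1), (1, 1), (1, 0)]
```

On a 2 x 2 grid the scan traces a U shape; larger grids repeat that shape recursively, rotated so that each quadrant's exit meets the next quadrant's entry.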

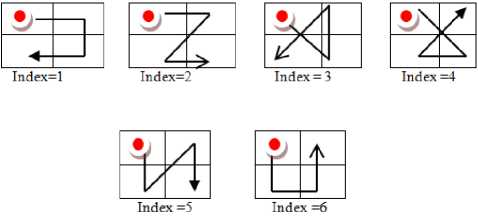

Recently, a set of six Peano scan motifs (PSMs) over a 2 x 2 grid was proposed in the literature [46]. The PSMs exploit the ability of space-filling curves to capture low-level information. The initial point of these PSMs [47] is fixed, usually at the top-left corner of the 2 x 2 grid, and the PSMs are given indexes from 0 to 5. Each of the six motifs represents a distinct sequence of the pixels of the 2 x 2 grid, as shown in Fig. 1. The construction of these motifs is similar to a breadth-first traversal of the Z-tree. A compound string is generated from the six motifs by traversing the 2 x 2 grid in order of increasing contrast. In the motif co-occurrence matrix (MCM) approach [47], the image is first transformed into a motif index image, in which each 2 x 2 grid is assigned a motif index ranging from 0 to 5. A co-occurrence matrix is then built on the motif index image, and the features derived from the MCM are used for efficient CBIR.

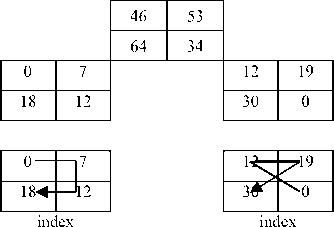

Fig.1(a). Primitive motifs initiated from top left most pixel on a 2 x 2grid.

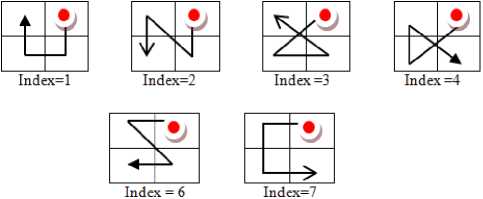

Fig.1(b). Primitive motifs initiated from top right most pixel on a 2 x 2grid.

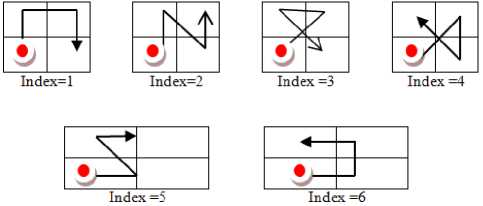

Fig.1(c). Primitive motifs initiated from bottom left most pixel on a 2 x 2grid.

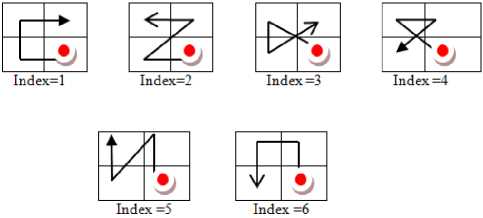

Fig.1(d). Primitive motifs initiated from bottom right most pixel on a 2 x 2grid.
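The motif selection used by MCM can be sketched in Python as follows. This is a minimal sketch under explicit assumptions, not the implementation of [47]: the pixels of a 2 x 2 grid are labelled 0 (top left), 1 (top right), 2 (bottom left) and 3 (bottom right); the six motifs from the top-left pixel are taken to be the six orders in which the remaining three pixels can be visited, numbered in the order produced by `itertools.permutations`; the local gradient of a motif is taken as the sum of absolute intensity differences along its path; and ties go to the lowest index. Because the paper's own 0 to 5 numbering is fixed by Fig. 1(a), the indexes produced here need not match those in the figures. All names are hypothetical.

```python
from itertools import permutations

# Assumed (hypothetical) labelling of a 2 x 2 grid in row-major order:
# 0 = top left, 1 = top right, 2 = bottom left, 3 = bottom right.
TOP_LEFT = 0

# Assumed motif numbering: the six orders in which the other three pixels
# can be visited after the top-left pixel, in itertools.permutations order.
MOTIFS_FROM_TOP_LEFT = [(TOP_LEFT,) + p for p in permutations((1, 2, 3))]


def path_gradient(block: list[int], path: tuple[int, ...]) -> int:
    """Sum of absolute intensity differences between consecutive pixels on the path."""
    return sum(abs(block[a] - block[b]) for a, b in zip(path, path[1:]))


def top_left_motif_index(block: list[int]) -> int:
    """Index (0-5) of the motif with the smallest path gradient; ties go to the lowest index."""
    costs = [path_gradient(block, motif) for motif in MOTIFS_FROM_TOP_LEFT]
    return costs.index(min(costs))


def motif_index_image(image: list[list[int]]) -> list[list[int]]:
    """Replace each non-overlapping 2 x 2 grid by its motif index (odd edges are dropped)."""
    rows, cols = len(image), len(image[0])
    return [
        [
            top_left_motif_index(
                [image[r][c], image[r][c + 1], image[r + 1][c], image[r + 1][c + 1]]
            )
            for c in range(0, cols - 1, 2)
        ]
        for r in range(0, rows - 1, 2)
    ]
```

For example, `motif_index_image([[2, 52, 36, 45], [25, 45, 16, 28]])` returns `[[3, 1]]` for the first two grids of the 8 x 8 image in Fig. 3(a); these are indexes under the assumed numbering, not the paper's.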


III. Methodology

To capture local features more efficiently than MCM, this paper derives a local motif pattern (LMP) code. The LMP code is derived by computing two different PSMs initiated from two different positions of the 2 x 2 grid: the first PSM starts from the top-left corner (Fig. 1(a)) and the second starts from the bottom-right corner of the 2 x 2 grid (Fig. 1(d)). Each PSM is chosen to minimize the variation of local intensities among the pixels of the neighborhood, and each generates a motif index ranging from 0 to 5. From these two PSM indexes, this paper computes the LMP code in base 6: a unique decimal code is obtained by multiplying each PSM index by a power of 6 and summing the results, as given in equation 1, and the 2 x 2 grid is replaced by this LMP code.

$$LMP = \sum_{j=1}^{2} m_j \times 6^{\,j-1} \qquad (1)$$

where m_j is the motif index (ranging from 0 to 5) of PSM type j, and j ranges from 1 to 2. Since each m_j lies between 0 and 5, the LMP code for the two PSMs ranges from 0 to 6^2 - 1 = 35. This process is repeated over the entire image in a non-overlapping manner, and each 2 x 2 grid is replaced by its LMP code. Fig. 2 shows the transformation of a 2 x 2 grid into an LMP code, and Fig. 3 shows the derivation of the LMP-coded image for an 8 x 8 gray level image: Fig. 3(a) displays the gray level image, Fig. 3(b) and 3(c) display the two transformed PSM indexed images, and Fig. 3(d) displays the final LMP-coded image derived from Fig. 3(b) and 3(c) using equation 1.
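Equation (1) and the grid-by-grid transformation can be sketched in Python as below. The arithmetic in `lmp_code` follows equation (1) directly. The motif selection makes assumptions that the text does not fix (row-major pixel labels 0 to 3, motifs numbered in `itertools.permutations` order from the starting pixel, summed absolute differences as the local gradient, ties to the lowest index), so `lmp_image` is a hypothetical illustration rather than the authors' implementation.

```python
from itertools import permutations

# Assumed row-major labelling of a 2 x 2 grid:
# 0 = top left, 1 = top right, 2 = bottom left, 3 = bottom right.
TOP_LEFT, BOTTOM_RIGHT = 0, 3


def motifs_from(start: int) -> list[tuple[int, ...]]:
    """The six scan motifs beginning at `start` (assumed numbering)."""
    others = [p for p in range(4) if p != start]
    return [(start,) + p for p in permutations(others)]


def psm_index(block: list[int], start: int) -> int:
    """Motif index (0-5) minimising the summed absolute gradient along the path."""
    costs = [
        sum(abs(block[a] - block[b]) for a, b in zip(m, m[1:]))
        for m in motifs_from(start)
    ]
    return costs.index(min(costs))  # ties resolve to the lowest index


def lmp_code(m1: int, m2: int) -> int:
    """Equation (1) with two PSM types: m1 * 6**0 + m2 * 6**1, in 0..35."""
    if not (0 <= m1 <= 5 and 0 <= m2 <= 5):
        raise ValueError("motif indexes must lie in 0..5")
    return m1 * 6**0 + m2 * 6**1


def lmp_image(image: list[list[int]]) -> list[list[int]]:
    """Replace each non-overlapping 2 x 2 grid by its LMP code."""
    out = []
    for r in range(0, len(image) - 1, 2):
        row = []
        for c in range(0, len(image[0]) - 1, 2):
            block = [image[r][c], image[r][c + 1], image[r + 1][c], image[r + 1][c + 1]]
            m1 = psm_index(block, TOP_LEFT)      # first PSM, cf. Fig. 1(a)
            m2 = psm_index(block, BOTTOM_RIGHT)  # second PSM, cf. Fig. 1(d)
            row.append(lmp_code(m1, m2))
        out.append(row)
    return out
```

`lmp_code(0, 3)` returns 18, as in Fig. 2, and combining the first rows of Fig. 3(b) and 3(c), (0, 5, 2, 4) and (1, 5, 2, 5), gives 6, 35, 14 and 34, the first row of Fig. 3(d). On raw pixels, `lmp_image` uses the assumed motif numbering, so its codes are not expected to reproduce Fig. 3(d).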

The present paper computes a co-occurrence matrix (CM) on the transformed LMP-coded image. The resulting LMP-CM captures rich information about the local features of the texture. The LMP-CM is constructed on the transformed image, and its (i, j, k) entry represents the probability of finding LMP code i at a distance k from LMP code j. The LMP-CM is also constructed for the query image Q. The intuition is to find common local structures, i.e., LMP codes of corresponding grids, between the query and database images. The spatial relationships between corresponding LMP codes, each derived from two different scans, make the proposed LMP-CM effective for image retrieval. The LMP-CM is well suited to the CBIR problem because its size is only 36 x 36 regardless of the image size and the grey level range of the original image.
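The LMP-CM and a few grey level co-occurrence statistics computed from it can be sketched in plain Python as follows. Several details are assumptions because the text does not fix them: the distance k is expressed as a row/column displacement (dr, dc), the matrix is normalized by the number of valid pixel pairs, and contrast, energy, homogeneity and entropy (base 2) stand in for the unspecified grey level co-occurrence features. The function names are hypothetical.

```python
import math

NUM_CODES = 36  # LMP codes range from 0 to 35


def lmp_cooccurrence(coded: list[list[int]], dr: int = 0, dc: int = 1) -> list[list[float]]:
    """Normalised 36 x 36 co-occurrence matrix of an LMP-coded image.

    Entry [i][j] is the fraction of pixel pairs in which code i is found at
    displacement (dr, dc) from a pixel holding code j.
    """
    counts = [[0] * NUM_CODES for _ in range(NUM_CODES)]
    rows, cols = len(coded), len(coded[0])
    total = 0
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dr, c + dc
            if 0 <= r2 < rows and 0 <= c2 < cols:
                counts[coded[r2][c2]][coded[r][c]] += 1
                total += 1
    if total == 0:
        raise ValueError("displacement leaves no pixel pairs")
    return [[v / total for v in row] for row in counts]


def glcm_features(p: list[list[float]]) -> dict[str, float]:
    """Common Haralick-style statistics of a normalised co-occurrence matrix."""
    contrast = energy = homogeneity = entropy = 0.0
    for i, row in enumerate(p):
        for j, v in enumerate(row):
            if v == 0.0:
                continue  # empty cells contribute nothing (and log2(0) is undefined)
            contrast += (i - j) ** 2 * v
            energy += v * v
            homogeneity += v / (1 + abs(i - j))
            entropy -= v * math.log2(v)
    return {"contrast": contrast, "energy": energy,
            "homogeneity": homogeneity, "entropy": entropy}
```

Calling `lmp_cooccurrence(coded, dr=0, dc=1)` on an LMP-coded image gives a 36 x 36 matrix whose entries sum to 1; `glcm_features` then reduces it to four scalars that can be compared between query and database images.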

The main contributions of this paper are given below:

1. The existing MCM derives motifs initiated from only one point, the top-left pixel of a 2 x 2 grid, whereas the proposed LMP derives motifs from two different initial points on the 2 x 2 grid, the top-left and bottom-right pixels.

2. The existing MCM is only 6 x 6 in size, which cannot provide powerful texture discrimination because of its small range of index values and its scanning in one direction, whereas the proposed method derives a unique LMP code ranging from 0 to 6^2 - 1 (= 35) from the PSM indexes of the two scans.

3. Our earlier approach, MMCM [46], derived a co-occurrence matrix based on two Peano scan motifs, but MMCM does not derive a unique code.

4. Deriving a unique LMP code on a 2 x 2 grid makes the proposed method analogous to the LBP method, which derives a unique code on a 3 x 3 neighborhood.

$$LMP = 0 \times 6^{0} + 3 \times 6^{1} = 18$$

Fig.2. Generation of the LMP code for j = 2 (LMP_{j=2}) on a 2 x 2 grid, with PSM indexes m_1 = 0 and m_2 = 3.

|  |  |  |  |  |  |  |  |
|---|---|---|---|---|---|---|---|
| 2 | 52 | 36 | 45 | 12 | 52 | 14 | 56 |
| 25 | 45 | 16 | 28 | 59 | 63 | 45 | 25 |
| 42 | 51 | 26 | 35 | 85 | 69 | 42 | 85 |
| 74 | 52 | 16 | 38 | 56 | 92 | 15 | 63 |
| 19 | 63 | 98 | 65 | 78 | 45 | 62 | 36 |
| 52 | 36 | 48 | 59 | 86 | 74 | 15 | 85 |
| 45 | 56 | 58 | 92 | 96 | 36 | 97 | 48 |
| 98 | 41 | 86 | 59 | 93 | 74 | 75 | 23 |

(a) An 8x8 image.

|  |  |  |  |
|---|---|---|---|
| 0 | 5 | 2 | 4 |
| 3 | 2 | 5 | 4 |
| 4 | 3 | 4 | 5 |

(b) PSM indexed image initiated from the top-left corner.

|  |  |  |  |
|---|---|---|---|
| 1 | 5 | 2 | 5 |
| 0 | 0 | 4 | 4 |
| 3 | 1 | 5 | 4 |
| 1 | 5 | 2 | 5 |

(c) PSM indexed image initiated from the bottom-right corner.

|  |  |  |  |
|---|---|---|---|
| 6 | 35 | 14 | 34 |
| 3 | 2 | 29 | 28 |
| 22 | 9 | 34 | 29 |
| 29 | 34 | 18 | 7 |

(d) Local motif pattern coded image of (a).

Fig.3. Formation of local motif pattern coded image.

Fig.4. Corel-1K database sample images.


IV. Results and Discussions

In this paper, the proposed LMP-CM is tested on popular benchmark CBIR databases, namely Corel-1k [48], Corel-10k [49], MIT-VisTex [50], Brodatz [51] and CMU-PIE [52]. These five databases are used in many image processing applications. Their images are captured under varying lighting conditions and with different backgrounds. The Brodatz textures are in grey scale, and the other databases are in color. The CMU-PIE database contains facial images captured under varying illumination, pose, lighting and expression. The Corel databases are natural image databases containing humans, scenery, birds, animals and so on. The five databases differ in the total number of images, image size, texture, image content and number of classes, where each class consists of a set of similar images. Table 1 summarizes the five databases, and sample images are shown in Fig. 4 to Fig. 8.

Fig.5. Corel-10K database sample images.

Fig.6. Sample textures from the Brodatz database.

Fig.7. Sample textures from the MIT-VisTex database.

The retrieval of images is carried out as follows. The query image is denoted Q. After feature extraction from the LMP-CM of the query image, its n features are represented as the vector V_Q = (V_Q1, V_Q2, ..., V_Qn). The n-dimensional LMP-CM feature vector of each image in the database is represented as V_DBi = (V_DBi1, V_DBi2, ..., V_DBin), i = 1, 2, ..., |DB|. The aim of any CBIR method is to select, from the database, the N images that look most similar to the query image. To accomplish this, the distance between the feature vector of the query and that of each database image is computed, and the top N images with the smallest distance are selected. This paper used the distance measure given in equation 2:

$$D(Q, I_i) = \sum_{j=1}^{n} \frac{\lvert f_{DB_{ij}} - f_{Q_j} \rvert}{1 + f_{DB_{ij}} + f_{Q_j}} \qquad (2)$$

where f_{DB_ij} is the j-th feature of the i-th image in the database |DB| and f_{Q_j} is the j-th feature of the query image.
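The distance of equation (2), D(Q, I_i) = sum over j of |f_DB_ij - f_Qj| / (1 + f_DB_ij + f_Qj), and the selection of the top-ranked images can be sketched in Python as below. This is a minimal sketch assuming flattened, non-negative feature vectors of equal length (for example, the entries of a normalized LMP-CM), so the denominator never falls below 1. The function names are hypothetical.

```python
def feature_distance(query: list[float], candidate: list[float]) -> float:
    """Sum over j of |f_DB_ij - f_Qj| / (1 + f_DB_ij + f_Qj)."""
    if len(query) != len(candidate):
        raise ValueError("feature vectors must have the same length")
    return sum(
        abs(f_db - f_q) / (1.0 + f_db + f_q)
        for f_db, f_q in zip(candidate, query)
    )


def retrieve(query: list[float], database: list[list[float]], top_n: int) -> list[int]:
    """Indices of the top_n database images with the smallest distance to the query."""
    ranked = sorted(range(len(database)), key=lambda i: feature_distance(query, database[i]))
    return ranked[:top_n]
```

Because each term is divided by the sum of the two feature values, large feature values contribute less per unit difference than in a plain L1 distance, which damps the influence of a few dominant co-occurrence entries.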

Table 1. Summary of the image databases.

| No. | Name of the database | Type of database | Image size | Number of categories/classes/subsections | Number of images per category | Total number of images |
|---|---|---|---|---|---|---|
| 1 | Corel-1k [48] | Natural database: humans, animals, scenery | 384x256 | 10 | 100 | 1000 |
| 2 | Corel-10k [49] | Natural database: humans, animals, scenery | 120x80 | 80 | Varies | 10800 |
| 3 | MIT-VisTex [50] | Texture database | 128x128 | 40 | 16 | 640 |
| 4 | Brodatz640 [51] | Texture database | 128x128 | 40 | 16 | 640 |
| 5 | CMU-PIE [52] | Facial images captured under varying pose, illumination, expression and lighting | 640x486 | 15 | Varies | 702 |

Fig.8. The sample facial images from CMU-PIE database.

To measure the retrieval performance of the proposed LMP-CM, this paper computes the two most widely used quality measures, the average precision or average retrieval precision (ARP) and the average recall or average retrieval rate (ARR), defined as follows. For a query image I_q, the precision is

$$P(I_q) = \frac{\text{Number of relevant images retrieved}}{\text{Total number of images retrieved}} \qquad (3)$$

$$ARP = \frac{1}{|DB|} \sum_{i=1}^{|DB|} P(I_i) \qquad (4)$$

and the recall is

$$R(I_q) = \frac{\text{Number of relevant images retrieved}}{\text{Total number of relevant images in the database}} \qquad (5)$$

$$ARR = \frac{1}{|DB|} \sum_{i=1}^{|DB|} R(I_i) \qquad (6)$$

where |DB| is the total number of images in the database.
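Precision and recall for a single query, and their averages ARP (equation (4)) and ARR over all queries, can be sketched in Python as follows. Assumptions: each query's result is given as a list of retrieved image ids together with the set of ids relevant to it, and ARP and ARR are plain means over all query images (|DB| of them when every database image is used as a query). Names are hypothetical.

```python
def precision_recall(retrieved: list[int], relevant: set[int]) -> tuple[float, float]:
    """Precision and recall of one query image."""
    hits = sum(1 for image_id in retrieved if image_id in relevant)
    precision = hits / len(retrieved) if retrieved else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall


def arp_arr(results: list[tuple[list[int], set[int]]]) -> tuple[float, float]:
    """ARP and ARR: mean precision and mean recall over all query images.

    `results` holds one (retrieved ids, relevant ids) pair per query image.
    """
    if not results:
        raise ValueError("need at least one query")
    pairs = [precision_recall(retrieved, relevant) for retrieved, relevant in results]
    n = len(pairs)
    return sum(p for p, _ in pairs) / n, sum(r for _, r in pairs) / n
```

With a fixed number of retrieved images per query, precision and recall differ only in their denominators, so ARP and ARR move together as that number is held constant and diverge as it is varied.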

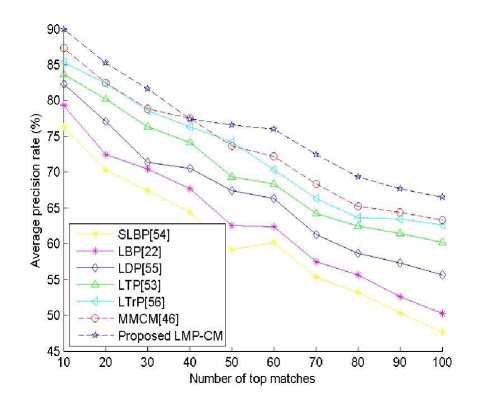

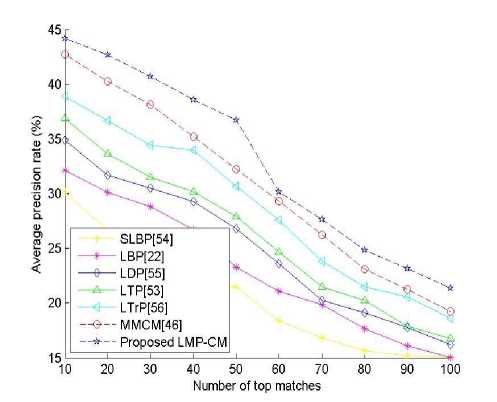

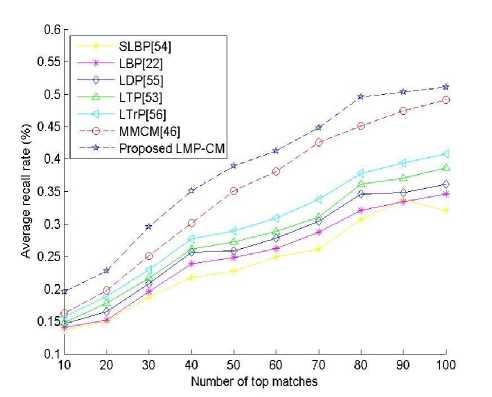

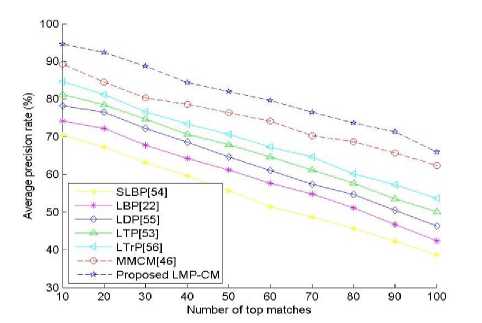

(a) ARP for Corel 1k database.

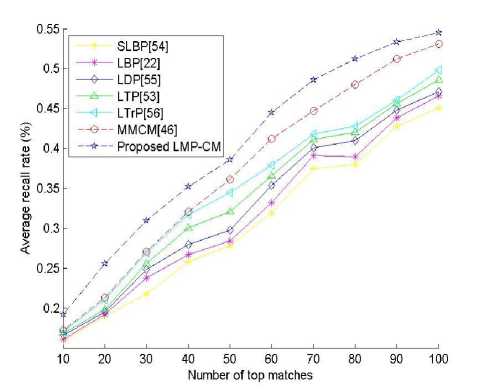

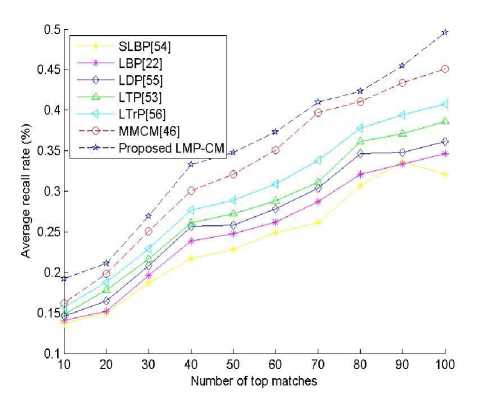

(b) ARR for Corel 10k database.

(b) ARR for Corel 1k database.

Fig.10. Comparison of proposed LMP-CM descriptor with SLBP, LBP, LDP, LTP, LTrP and MMCM over Corel-10k database using (a) ARP (b) ARR.

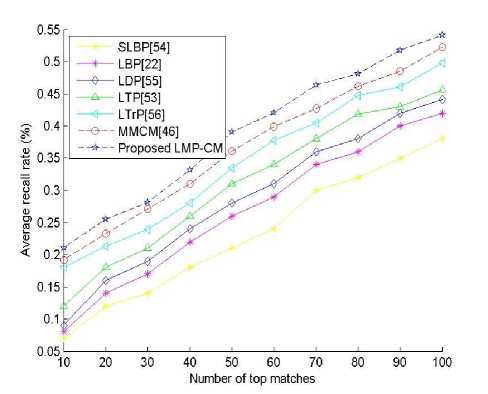

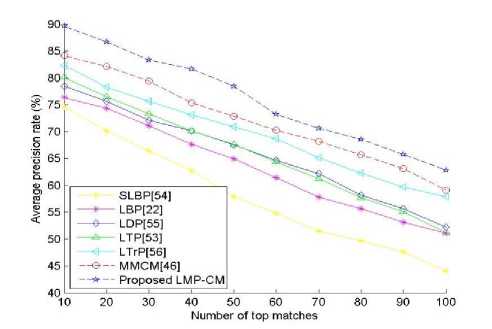

(a) ARP for MIT-VisTex texture database.

Fig.9. Comparison of proposed LMP-CM descriptor with SLBP, LBP, LDP, LTP, LTrP and MMCM over Corel-1k database using (a) ARP (b) ARR.

(a) ARP for Corel 10k.

(b) ARR for MIT-VisTex texture database.

Fig.11. Comparison of proposed LMP-CM descriptor with SLBP, LBP, LDP, LTP, LTrP and MMCM over MIT-VisTex texture database using (a) ARP (b) ARR.

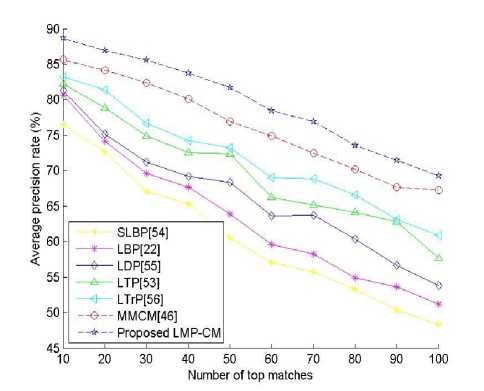

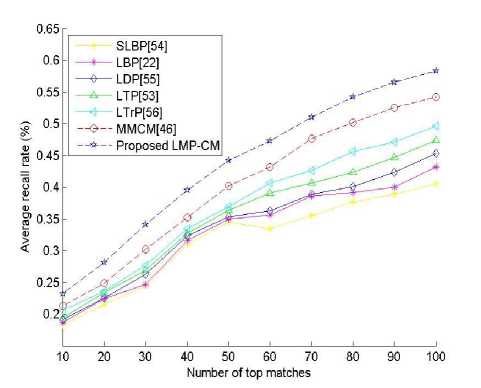

(a) ARP for Brodtaz texture database.

(b) ARR for CMU-PIE database.

Fig.13. Comparison of proposed LMP-CM descriptor with SLBP, LBP, LDP, LTP, LTrP and MMCM over CMU-PIE database using (a) ARP (b) ARR.

(b) ARR for Brodtaz texture database.

The proposed LMP-CM, descriptor is compared with the retrieval results of the popular descriptors such as our earlier approach MMCM [46] and other popular local based approaches like: local binary pattern (LBP) [22], local ternary pattern (LTP)[53], semi-structure local binary pattern (SLBP)[54], local derivative pattern (LDP) [55], local tetra pattern (LTrP) [56] and the results plotted from Fig.9 to 13 using ARP and ARR on each individual database. The following points are noted down.

Fig.12. Comparison of proposed LMP-CM descriptor with SLBP, LBP, LDP, LTP, LTrP and MMCM over Brodtaz texture database using (a) ARP (b) ARR.

(a) ARP for CMU-PIE database.

1. Of the five databases considered, Corel-1k, MIT-VisTex and Brodatz show high ARP and ARR, and these three databases exhibit broadly similar retrieval rates. The main reason is that they contain fewer categories of images than Corel-10k (Table 1).

2. The Corel-10k database yields low ARP and ARR for all methods. The reason is its complex set of images, its larger number of categories and its varying number of images per category.

3. The proposed LMP-CM achieves a higher retrieval rate than the other existing methods, and it shows a higher precision rate than the SLBP, LBP and LDP approaches on all databases.

4. The proposed method improves the precision rate by 4% and 8% compared with the LTrP and LTP methods, respectively.

5. The performance of our method is clearly better than that of the SLBP, LBP and LDP descriptors over all the databases considered (natural, texture and face images) using both the ARP and ARR quality metrics.

6. The proposed LMP-CM exhibits higher ARP and ARR than our earlier MMCM method; on average, its retrieval rate is approximately 2 to 6% higher than that of MMCM.

7. The main reason for the high performance of the proposed LMP-CM method is that the LMP code is derived from two different PSMs starting from two different positions of the 2 x 2 grid.

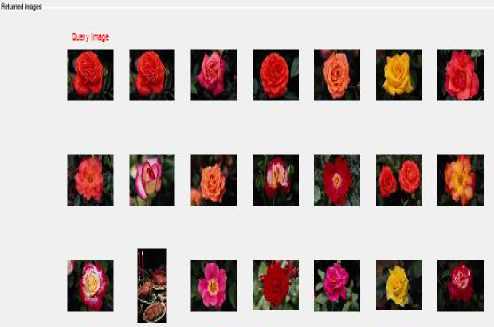

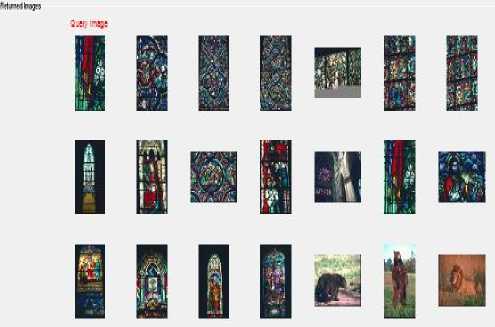

(a) Corel 1k.

(b) Corel 10k.

(c) MIT-VisTex texture database .

(d) Brodtaz texture database.

Зяг/ refit

аяяяяя

iiiSiili

UJjIdO

-

(e) CMU-PIE database.

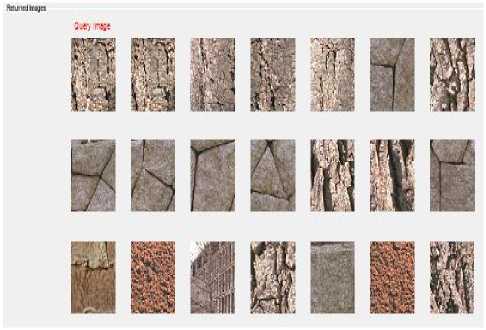

Fig.14. Top 20 retrieved images of considered databases.

Fig. 14 shows the top 20 images retrieved by the proposed LMP-CM descriptor for one query image from each database.

The precision obtained by the proposed LMP-CM for Fig. 14 is 89.96%, 44.20%, 89.63%, 88.66% and 94.62% over the Corel-1k, Corel-10k, MIT-VisTex, Brodatz and CMU-PIE databases, respectively. The proposed descriptor performs better than the other descriptors over the natural and texture databases.

Table 2. The dimensions of each descriptor.

| S.No | Descriptor | Dimensions |
|---|---|---|
| 1 | SLBP [54] | 256 |
| 2 | LBP [22] | 256 |
| 3 | LTP [53] | 2 x 256 |
| 4 | LDP [55] | 4 x 256 |
| 5 | LTrP [56] | 13 x 256 |
| 6 | MMCM [46] | 24 x 24 |
| 7 | Proposed LMP-CM | 36 x 36 |

Table 2 summarizes the dimension of each descriptor considered and of the proposed LMP-CM. The LMP-CM (36 x 36 = 1296) is smaller than LTrP (13 x 256 = 3328) but larger than SLBP, LBP, LTP, LDP and MMCM, while its retrieval rate is better than that of all of them.


V. Conclusions

We have presented an extension of MCM and of our earlier MMCM approach for image retrieval. The proposed LMP-CM captures more discriminative texture information by deriving a unique LMP code from two PSMs initiated at different corners of the 2 x 2 grid. The LMP code takes only 36 values (0 to 35), so GLCM features are easy to compute on it, and the LMP-CM has a fixed dimension of 36 x 36 regardless of image size. The LMP-CM is easy to understand and implement, and it is efficient in storage requirement and computation time; its dimension is smaller than that of LTrP (Table 2). Because each PSM is selected by comparing sums of absolute intensity differences, the LMP code is unchanged by a positive linear gain or offset of an individual color plane, such as gain adjustment or linear contrast stretching; unlike LBP, however, it is not guaranteed to be invariant to nonlinear monotonic mappings such as histogram equalization. The experimental results show the superiority of the proposed method over the existing methods.

References

- L. Junling, K. Degang, Z.H. Wei, C. Chongxu, Image retrieval based on weighted blocks and color feature, in: 2011 International Conference on Mechatronic Science, Electric Engineering and Computer (MEC), IEEE, 2011, pp. 921–924.

- Chen WT, Liu WC, Chen MS. Adaptive color feature extraction based on image color distributions. IEEE Trans Image Process 2010;19(8):2005

- Liu GH, Yang JY. Content-based image retrieval using color difference histogram. Pattern Recogn 2013;46(1):188–98.

- Wang XY, Yu YJ, Yang HY. An effective image retrieval scheme using color, texture & shape features. Comput Stand Interfaces 2011;33(1):59–68.

- Umesh D. Dixit, M. S. Shirdhonkar, Signature based Document Image Retrieval Using Multi-level DWT Features, I.J. Image, Graphics and Signal Processing (IJIGSP) , 2017, 8, 42-49.

- Dongfeng Han, Wenhui Li, Xiaomo Wang, Yanjie She, Interest Region-Based Image Retrieval System Based on Graph-Cut Segmentation and Feature Vectors, International Conference on Computational and Information Science CIS 2005: Computational Intelligence and Security pp 483-488

- Lakhdar Belhallouche, Kamel Belloulata , Kidiyo Kpalmag. A New Approach to Region Based Image Retrieval using Shape Adaptive Discrete Wavelet Transform, I.J. Image, Graphics and Signal Processing (IJIGSP), 2016, 1, 1-14.

- G S Murty ,J Sasi Kiran , V.Vijaya Kumar, “Facial expression recognition based on features derived from the distinct LBP and GLCM”, International Journal of Image, Graphics And Signal Processing (IJIGSP), Vol.2, Iss.1, pp. 68-77,2014, ISSN: 2074-9082.

- Pullela R Kumar, V. Vijaya Kumar, Rampay.Venkatarao, “Age classification based on integrated approach”. International Journal of Image, Graphics and Signal Processing (IJIGSP), Vol. 6, Iss.7, 2014, pp. 50-57, ISSN: 2074-9082.

- G S Murty ,J Sasi Kiran , V.Vijaya Kumar, “Facial expression recognition based on features derived from the distinct LBP and GLCM”, International Journal of Image, Graphics And Signal Processing (IJIGSP), Vol.2, Iss.1, pp. 68-77,2014, ISSN: 2074-9082.

- Amir Farhad Nilizadeh, Ahmad Reza Naghsh Nilchi, Block Texture Pattern Detection Based on Smoothness and Complexity of Neighborhood Pixels, I.J. Image, Graphics and Signal Processing (IJIGSP), 20 , , 1-9.

- Dolly Choudhary, Ajay Kumar Singh, Shamik Tiwari, Dr. V P Shukla, Performance Analysis of Texture Image Classification Using Wavelet Feature, I.J. Image, Graphics and Signal Processing (IJIGSP), 2013, 1, 58-63

- M. Srinivasa Rao, V.Vijaya Kumar, MHM KrishnaPrasad, Texture Classification based on Local Features Using Dual Neighborhood Approach, I.J. Image, Graphics and Signal Processing (IJIGSP), 2017, 9, 59-67

- K. Srinivasa Reddy, V. Vijaya Kumar, B. Eswara Reddy, Face Recognition Based on Texture Features using Local Ternary Patterns, I.J. Image, Graphics and Signal Processing (IJIGSP), 2015, 10, 37-46.

- P. S. Hiremath and Manjunatha Hiremath, Depth and Intensity Gabor Features Based 3D Face Recognition Using Symbolic LDA and AdaBoost, I.J. Image, Graphics and Signal Processing (IJIGSP), 2014, 1, 32-39.

- Lin CH, Huang DC, Chan YK, Chen KH, Chang YJ. Fast color-spatial feature based image retrieval methods. Expert Syst Appl 2011;38(9):11412–20.

- Jun Yue, Zhenbo Li, Lu Liu, Zetian Fu, Content-based image retrieval using color and texture fused features, Mathematical and Computer Modelling, Volume 54, Issues 3–4, August 2011, Pages 1121-1127.

- G. Cross, A. Jain, Markov random field texture models, IEEE Trans. Pattern Anal. Mach. Intell. 5(1), 25-39, 1983

- Qin H., Qin L., Xue L., Li Y. A kernel Gabor-based weighted region covariance matrix for face recognition. Sensors. 2012;12:992–993.

- Zhang B., Gao Y., Zhao S., Liu J. Local derivative pattern versus local binary pattern: Face recognition with high-order local pattern descriptor. IEEE. Trans. Image Process. 2010;19:533–544

- Basavaraj S. Anami, Suvarna S. Nandyal, A. Govardhan, Color and Edge Histograms Based Medicinal Plants' Image Retrieval, I.J. Image, Graphics and Signal Processing, 2012, 8, 24-35.

- T. Ojala, M. Pietikäinen, T. Mäenpää, Multiresolution gray-scale and rotation invariant texture classification with local binary patterns, IEEE Trans. Pattern Anal. Mach. Intell. 24 (7) (2002) 971–987.

- Nidhal El Abbadi and Lamis Al Saadi, Automatic Detection and Recognize Different Shapes in an Image, IJCSI International Journal of Computer Science Issues, Vol. 10, Issue 6, No 1, November 2013

- Gang Zhang, Zong-min Ma, Lian-qiang Niu, Chun-ming Zhang, Modified Fourier descriptor for shape feature extraction, Journal of Central South University, February 2012, Volume 19, Issue 2, pp 488–495

- J. Flusser, T. Suk, Pattern recognition by affine moment invariants, Pattern Recogn. 26 (1) (1993) 167–174.

- B.S. Manjunath, P. Salembier, T. Sikora, Introduction to MPEG-7: Multimedia Content Description Interface, vol. 1, John Wiley & Sons, 2002.

- G.H. Liu, J.Y. Yang, Image retrieval based on the texton co-occurrence matrix, Pattern Recogn. 41 (12) (2008) 3521–3527.

- G.H. Liu, L. Zhang, Y.K. Hou, Z.Y. Li, J.Y. Yang, Image retrieval based on multi-texton histogram, Pattern Recogn. 43 (7) (2010) 2380–2389.

- G.H. Liu, Z.Y. Li, L. Zhang, Y. Xu, Image retrieval based on micro-structure descriptor, Pattern Recogn. 44 (9) (2011) 2123–2133.

- X. Wang, Z. Wang, A novel method for image retrieval based on structure elements descriptor, J. Visual Commun. Image Represent. 24 (1) (2013) 63–74.

- G.H. Liu, J.Y. Yang, Content-based image retrieval using color difference histogram, Pattern Recogn. 46 (1) (2013) 188–198.

- Ketan Tang ; Oscar C. Au ; Lu Fang ; Zhiding Yu ; Yuanfang Guo, Multi-scale analysis of color and texture for salient object detection, Image Processing (ICIP), 2011 18th IEEE International Conference on, 1-14 Sept. 2011.

- M. Verma, B. Raman, S. Murala, Local extrema co-occurrence pattern for color and texture image retrieval, Neurocomputing 165 (2015) 255–269.

- G. Seetharaman, B. Zavidovique, Image processing in a tree of Peano coded images, in: Proceedings of the IEEE Workshop on Computer Architecture for Machine Perception, Cambridge, CA (1997).

- G. Seetharaman, B. Zavidovique, Z-trees: adaptive pyramid algorithms for image segmentation, in: Proceedings of the IEEE International Conference on Image Processing, ICIP98, Chicago, IL, October (1998).

- G. Shivaram, G. Seetharaman, Data compression of discrete sequence: a tree based approach using dynamic programming, IEEE Transactions on Image Processing (1997) (in review).

- A.R. Butz, Space filling curves and mathematical programming, Information and Control 12 (1968) 314–330.

- G. Peano, Sur une courbe qui remplit toute une aire plane, Mathematische Annalen 36 (1890) 157–160.

- D. Hilbert, Über die stetige Abbildung einer Linie auf ein Flächenstück, Mathematische Annalen 38 (1891) 459–461.

- N. Jhanwar, S. Chaudhuri, G. Seetharaman, B. Zavidovique, Content based image retrieval using optimal Peano scans, in: International Conference for Pattern Recognition, August (2002).

- G. Peano, Sur une courbe qui remplit toute une aire plane, Mathematische Annalen 36 (1890) 157–160.

- D. Hilbert, Über die stetige Abbildung einer Linie auf ein Flächenstück, Mathematische Annalen 38 (1891) 459–461.

- A. Lempel, J. Ziv, Compression of two-dimensional data, IEEE Transactions on Information Theory 32 (1) (1986) 2–8.

- J. Quinqueton, M. Berthod, A locally adaptive Peano scanning algorithm, IEEE Transactions on PAMI, PAMI-3 (4) (1981) 403–412.

- P.T. Nguyen, J. Quinqueton, Space filling curves and texture analysis, IEEE Transactions on PAMI, PAMI-4 (4) (1982)

- R. Dafner, D. Cohen-Or, Y. Matias, Context based space filling curves, EUROGRAPH-ICS Journal 19 (3) (2000).

- A. Obulesu, V. Vijay Kumar, L. Sumalatha, Content Based Image Retrieval Using Multi Motif Co-Occurrence Matrix, International Journal of Image, Graphics and Signal Processing (IJIGSP), (Accepted).

- Jhanwar N, Chaudhuri S, Seetharaman G, Zavidovique B. Content-based image retrieval using motif co-occurrence matrix. Image Vision Comput 2004; 22:1211–20.

- Corel Photo Collection Color Image Database, online available on http://wang.ist.psu.edu/docs/related.shtml

- Corel database: http://www.ci.gxnu.edu.cn/cbir/Dataset.aspx

- MIT Vision and Modeling Group, Cambridge, “Vision texture”, http://vismod.media.mit.edu/pub/.

- P. Brodatz, Textures: A Photographic Album for Artists and Designers. New York, NY, USA: Dover, 1999.

- T. Sim, S. Baker, and M. Bsat, “The CMU pose, illumination, and expression database,” IEEE Trans. Pattern Anal. Mach. Intell., vol. 25, no. 12, pp. 1615–1618, Dec. 2003.

- X. Tan and B. Triggs, “Enhanced local texture feature sets for face recognition under difficult lighting conditions,” IEEE. Trans. Image Process., vol. 19, no. 6, pp. 1635–1650, Jun. 2010.

- K. Jeong, J. Choi, and G. Jang, “Semi-Local Structure Patterns for Robust Face Detection,” IEEE Signal Processing Letters, vol. 22, no. 9, pp. 1400-1403, 2015.

- B. Zhang, Y. Gao, S. Zhao and J. Liu, “Local derivative pattern versus local binary pattern: face recognition with high-order local pattern descriptor,” IEEE Transactions on Image Processing, vol. 19, no. 2, pp. 533-544, 2010.

- S. Murala, R.P. Maheshwari and R. Balasubramanian, “Local tetra patterns: a new feature descriptor for content-based image retrieval,” IEEE Transactions on Image Processing, vol. 21, no. 5, pp. 2874-2886, 2012.