Implementation of Hand Sign Recognition for Non-Linear Dimensionality Reduction based on PCA

Authors: Shilpa Sharma, Rachna Manchanda

Journal: International Journal of Image, Graphics and Signal Processing (IJIGSP)

Issue: Vol. 9, No. 2, 2017.


Hand sign or gesture recognition provides a means of communication for hearing- and speech-impaired people. Gestures can originate from any bodily motion or state, but most commonly come from the hand and face. Speech and gestures are both forms of expression and serve as communication media between human beings. A hand gesture is a movement or motion of the human hand, and gesture recognition is the mathematical interpretation of hand motion by computing devices. Different sign languages are used all over the world, each with its own grammar structure. Even within India, where different languages are used in different states, sign languages differ only slightly from region to region. Hand sign recognition is used in robot control applications and in sign language interpretation.

Keywords: Gesture recognition, gesture description, human-machine interface, feature extraction, PCA

Short URL: https://sciup.org/15014164

IDR: 15014164

Full text of the article: Implementation of Hand Sign Recognition for Non-Linear Dimensionality Reduction based on PCA

Published online February 2017 in MECS (Modern Education and Computer Science Press)

I. Introduction

Gesture recognition is the mathematical interpretation of hand movement by a computer; it provides an interface between humans and machines that does not require mechanical input devices. Two types of gestures are used in such systems: online gestures and offline gestures. Online gestures involve direct manipulation, such as scaling or rotating a tangible object. Offline gestures are processed after the user has finished interacting with the object [12]; an example is a gesture that activates a menu. The interpreter glove is an assistive tool that lets hearing- and speech-impaired people communicate with the non-disabled community through sign language and hand gestures [3]. It combines hardware and software, using a glove-based technique for text and language processing [7], and acts as an interpreter that converts signs or gestures into text and then reads them aloud. The glove records hand poses, including bent, extended, crossed, closed and spread fingers, the position of the wrist and the absolute orientation of the hand. It is based on finger spelling, referred to as the Dactyl International sign language [5], and can recognize and read aloud any sign-language word. The glove prototype is made of elastic material and consists of a gesture recognition module, a speech conversion module, a data processing unit and a speaker. In this paper a vision-based approach is used instead, because glove-based systems are costly and cumbersome [14]. Morphological filters are used for noise reduction, and principal component analysis (PCA), an algorithm that reduces the dimensionality of data, is used for feature reduction. The system consists of data acquisition, feature extraction and reduction, and sign recognition.

A. Hand Gesture Recognition

Hand gesture recognition is used in robot control by interpreting hand gestures as commands. An algorithm identifies the hand pose and maps it to one of a set of five commands, or counts. The meaning of each sign depends on the function of the robot [2]. For example, a count of one (the digit one) means ‘move forward’ and a count of five means ‘stop’; similarly, a count of two means ‘reverse’, three means ‘turn right’ and four means ‘turn left’. These are the commands used in robot control with hand gestures [13].
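The count-to-command assignment above amounts to a lookup table. A minimal Python sketch, assuming the vision stage already outputs the number of extended fingers as an integer; the names `ROBOT_COMMANDS` and `command_for`, and the fail-safe default of ‘stop’ for unrecognized counts, are illustrative assumptions rather than part of the described system.

```python
# Hypothetical mapping from recognized finger count to robot command,
# following the example assignment given in the text.
ROBOT_COMMANDS = {
    1: "move forward",
    2: "reverse",
    3: "turn right",
    4: "turn left",
    5: "stop",
}


def command_for(finger_count: int) -> str:
    """Return the robot command for a recognized finger count.

    Assumption: any count outside 1-5 (e.g. a misdetection) falls back
    to "stop" as a fail-safe; the source does not specify this case.
    """
    return ROBOT_COMMANDS.get(finger_count, "stop")


print(command_for(3))  # turn right
```

Defaulting to ‘stop’ keeps a robot stationary when recognition fails, which is usually the safer behaviour.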

B. Man-Machine Interface

Man-machine interaction is also known as human-computer interaction (HCI). Conventionally it provides communication between a human and a computer via the mouse and keyboard. The flow of information between human and computer is called the loop of interaction. Graphical user interfaces (GUIs) are used in applications such as internet browsers and desktop applications, while voice user interfaces (VUIs) use speech recognition and synthesis. Verbal and non-verbal communication, including facial expressions, can also be used for recognition. Complex algorithms are used to convert gesture codes into machine language [4].

C. Gestures

Gestures are a medium of communication through movements of the hand or head. A gesture is a form of non-verbal, non-vocal communication. Gestures are of two types: static and dynamic.

Dynamic gestures change over a period of time; waving the hand to say goodbye is an example of a dynamic gesture [16]. Static gestures are observed at a single instant of time; the sign used to say ‘stop’ is an example of a static gesture.

D. Applications

Hand gesture recognition has applications in many domains [8], including 3D design, virtual reality, tele-presence, robot control and sign language.

3D Design: The system works with inputs such as 3D images provided by computer-aided design (CAD) tools for human-computer interaction. Such 3D inputs are conventionally manipulated with the mouse; with gesture input, the CAD model moves as the user's hand moves. The 3D images are used to manipulate or interpret the data in the system.

Virtual Reality: Some simulations provide additional sensory information, such as sound from speakers or headphones. These are used in communication media systems. Such systems are very accurate and are not affected by user characteristics or environmental changes.

Tele-Presence: Tele-presence addresses the need for manual operation in case of system failure or emergency conditions. It is used in remote areas, or to control a robotic arm in real time; one such robot-control system is called ROBOGUEST.

Robot Control: Robot control is an important application of gesture-based communication. The robot is controlled with hand-pose signs that count the number of extended fingers, from one to five. For example, number 1 means ‘move forward’ and number 5 means ‘stop’. The meaning of each count depends on the function of the robot.

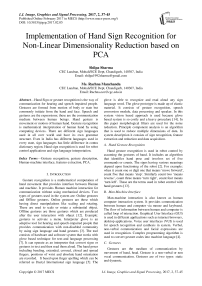

Sign Language: Sign language is a means of communication, especially for speech-impaired people. It depends on hand gestures, both static and dynamic, and has different signs for communication between speech-impaired persons and hearing persons.

Different sign languages are used all over the world; every country has its own sign language, each with its own grammar. Sign language is also used outside the deaf community: on the sports ground, coaches use signs to convey game rules and moves, and at airports, ground staff use signs to communicate with pilots during take-off and landing. Indian Sign Language has its own grammar structure. Although different spoken languages are used in different states of India, the gestures are independent of spoken English or Hindi. Many people think that sign language is similar to a spoken language such as Hindi or English, but it is different, and Indian Sign Language does not depend on other sign languages. These are the main applications of hand gesture recognition systems.

Three types of algorithms are used to detect hand gestures:

a.) 3D model-based algorithms.

b.) Skeletal-based algorithms.

c.) Appearance-based algorithms.

Gestures can be interpreted in different ways, depending on the input data. 3D model-based algorithms use volumetric or skeletal approaches. These models are used in computer vision and also in the computer animation industry.

Skeletal-based algorithms have several advantages:

a.) They are faster, because only a few key parameters are analysed.

b.) Pattern matching against a template database is possible.

c.) Detection programs can focus on the significant parts of the body.

These are the main parameters and algorithms used in such systems.

Fig.1. Sign Language.

II. System Description

A digital camera is used to record images or videos of gestures, which are used as input to the system. Twenty-six gestures are used, and the input images have a white background [1]. The input images are then pre-processed and segmented. The system includes four major steps:

A. Data Acquisition

The main goal of sign recognition is high accuracy. Input images are captured with a webcam. A total of 260 images are used, because the sign language alphabet has 26 signs [6] and 10 images are captured for each sign.

The images are captured at a resolution of 380×420 pixels against a white background to reduce noise.
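These acquisition figures fix the size of the raw data. A minimal NumPy sketch, assuming grayscale images and reading “380×420” as 380 columns by 420 rows (the text does not say which dimension is which); `build_labels` and `to_data_matrix` are hypothetical helpers, not part of the described MATLAB code.

```python
import string

import numpy as np

NUM_SIGNS = 26            # one static sign per letter A-Z
IMAGES_PER_SIGN = 10      # images captured per sign
IMG_H, IMG_W = 420, 380   # assumption: "380x420" read as width x height


def build_labels() -> np.ndarray:
    """Labels in capture order: 10 x "A", then 10 x "B", ..., 10 x "Z"."""
    letters = np.array(list(string.ascii_uppercase[:NUM_SIGNS]))
    return np.repeat(letters, IMAGES_PER_SIGN)


def to_data_matrix(images) -> np.ndarray:
    """Flatten each H x W grayscale image into one row of an N x (H*W) matrix."""
    return np.stack([np.asarray(img, dtype=np.float64).ravel() for img in images])


labels = build_labels()
print(labels.shape)    # (260,)
print(IMG_H * IMG_W)   # 159600 values per flattened image
```

Under these assumptions each image becomes a 159,600-element vector, so the training matrix has far more dimensions than samples, which is one reason a reduction step such as PCA is useful.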

B. System Requirements

The vision-based technique uses a single webcam to capture images or videos of gestures, which are used as input for recognition. Pre-processing consists of image acquisition, morphological filtering and segmentation [10]. Careful image acquisition helps achieve high accuracy, morphological filters reduce the noise in the images, and Otsu's thresholding algorithm is used for segmentation.
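Otsu's method picks the grey level that best separates two intensity classes. A minimal NumPy sketch of the standard formulation, assuming 8-bit grayscale input and a hand darker than the white background; it illustrates the algorithm named in the text, not the authors' MATLAB code (MATLAB's `graythresh` also implements Otsu's method). `otsu_threshold` and `segment_hand` are hypothetical names.

```python
import numpy as np


def otsu_threshold(gray: np.ndarray) -> int:
    """Return the Otsu threshold t (0-255) of an 8-bit grayscale image.

    Pixels <= t form class 0 and pixels > t form class 1; t is chosen to
    maximize the between-class variance
        sigma_b^2(t) = (mu_T * w0(t) - mu(t))^2 / (w0(t) * (1 - w0(t))).
    """
    hist = np.bincount(gray.ravel(), minlength=256).astype(np.float64)
    prob = hist / hist.sum()
    w0 = np.cumsum(prob)                   # probability of class 0 for each t
    mu = np.cumsum(prob * np.arange(256))  # first moment of class 0 for each t
    mu_t = mu[-1]                          # global mean intensity
    with np.errstate(divide="ignore", invalid="ignore"):
        sigma_b2 = (mu_t * w0 - mu) ** 2 / (w0 * (1.0 - w0))
    sigma_b2[~np.isfinite(sigma_b2)] = 0.0  # thresholds where one class is empty
    return int(np.argmax(sigma_b2))


def segment_hand(gray: np.ndarray) -> np.ndarray:
    """Boolean hand mask, assuming a dark hand on a bright (white) background."""
    return gray <= otsu_threshold(gray)
```

Because the threshold is computed from each image's own histogram, no manual threshold has to be tuned for different lighting, although a uniform white background is what makes the histogram cleanly bimodal.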

C. Feature Reduction

This step aims to convert correlated data into uncorrelated data, reducing high-dimensional data to fewer dimensions. Relevant information is encoded in a compressed form, and less discriminative data is discarded. Feature extraction encodes the data compactly while preserving accuracy. PCA is the main feature extraction method used by the system [15].

PCA maps the data onto a lower-dimensional subspace that maximizes the data variance; the axes of this subspace are called principal components. Principal component analysis (PCA) can perform better than linear discriminant analysis (LDA), especially when few training samples are available. Feature reduction transforms high-dimensional data into an efficient and meaningful lower-dimensional representation. Unlike Gabor wavelet filter outputs, the PCA representation has no redundancy. In PCA, feature extraction and dimensionality reduction are combined in a single step.
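Feature extraction and reduction with PCA can be sketched in a few lines of NumPy. This is a minimal sketch, assuming the images have already been flattened into the rows of an N × D matrix; it uses the thin SVD of the centred data instead of forming the D × D covariance matrix explicitly, which is an implementation choice assumed here (practical when D is much larger than N), not necessarily what the authors did. `pca_fit` and `pca_transform` are hypothetical names.

```python
import numpy as np


def pca_fit(X: np.ndarray, n_components: int):
    """Fit PCA to an N x D data matrix with one flattened image per row.

    Returns (mean, W, explained_var): the D-vector mean, a D x k matrix whose
    columns are the top-k principal directions, and the variance along each.
    """
    mean = X.mean(axis=0)
    Xc = X - mean  # centre the data so that variance is measured about the mean
    # Thin SVD of the centred data: the rows of Vt are eigenvectors of the
    # covariance matrix, sorted by decreasing singular value. This avoids
    # building the D x D covariance matrix, which matters when D >> N.
    _, s, Vt = np.linalg.svd(Xc, full_matrices=False)
    W = Vt[:n_components].T
    explained_var = s[:n_components] ** 2 / (X.shape[0] - 1)
    return mean, W, explained_var


def pca_transform(X: np.ndarray, mean: np.ndarray, W: np.ndarray) -> np.ndarray:
    """Project rows of X onto the principal axes: y = W^T (x - mean)."""
    return (X - mean) @ W


rng = np.random.default_rng(0)
X = rng.normal(size=(50, 6)) @ rng.normal(size=(6, 6))  # correlated toy data
mean, W, explained = pca_fit(X, 3)
Y = pca_transform(X, mean, W)
print(np.round(np.cov(Y, rowvar=False), 6))  # diagonal: components are uncorrelated
```

The printed covariance of the projected data is diagonal, which is the "correlated to uncorrelated" conversion the text describes; the diagonal entries equal `explained`, in decreasing order.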

D. Sign Recognition

Sign recognition aims to improve performance and accuracy. The principal component analysis (PCA) algorithm is used to reduce high-dimensional data [9], retaining the most informative features of the multi-dimensional data [11].

In the system above, a webcam is used for gesture recognition; the images and videos it captures replace the mouse and keyboard as input devices.

PCA is a dimensionality reduction method that maps multidimensional data to fewer dimensions. It analyses the covariance between variables, converts correlated data into uncorrelated data, and remaps the original data into a new coordinate system whose axes are ordered by the variance of the data along them. Mathematically, PCA is a procedure that transforms a number of correlated variables into a number of uncorrelated variables called principal components. The PCA-based system uses a vision-based setup.
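The covariance analysis described above can be written out explicitly. The following is the textbook formulation of PCA, with notation chosen here for illustration: $\mathbf{x}_n \in \mathbb{R}^D$ is the $n$-th flattened training image, $N$ the number of images, and $k < D$ the number of retained components.

```latex
\boldsymbol{\mu} = \frac{1}{N}\sum_{n=1}^{N}\mathbf{x}_n,
\qquad
\mathbf{C} = \frac{1}{N-1}\sum_{n=1}^{N}(\mathbf{x}_n-\boldsymbol{\mu})(\mathbf{x}_n-\boldsymbol{\mu})^{\top}

\mathbf{C}\,\mathbf{w}_i = \lambda_i\,\mathbf{w}_i,
\qquad \lambda_1 \ge \lambda_2 \ge \dots \ge \lambda_D \ge 0,
\qquad \mathbf{w}_i^{\top}\mathbf{w}_j = \delta_{ij}

\mathbf{y}_n = \mathbf{W}_k^{\top}(\mathbf{x}_n-\boldsymbol{\mu}),
\qquad \mathbf{W}_k = [\mathbf{w}_1,\dots,\mathbf{w}_k]

\operatorname{Cov}(\mathbf{y}) = \mathbf{W}_k^{\top}\mathbf{C}\,\mathbf{W}_k
  = \operatorname{diag}(\lambda_1,\dots,\lambda_k)
```

The last line is why the projected features are uncorrelated: their covariance matrix is diagonal, and the variance along the $i$-th principal component equals the eigenvalue $\lambda_i$.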

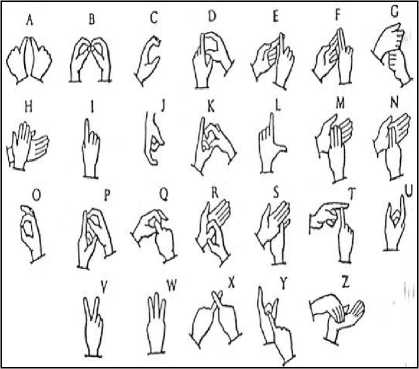

The diagram above shows the sequence of processing steps for a gesture. The data is processed, and then gesture segmentation separates the hand pixels from the background of the image. Noise in the images is reduced by morphological filters. A webcam captures images or videos of hand gestures, which are used as input to the recognition process.
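Morphological noise removal on a binary hand mask is commonly an opening (erosion then dilation), which deletes specks smaller than the structuring element, optionally followed by a closing to fill small holes. The source does not state which operations or structuring element were used, so the 3 × 3 square element and the choice of operations below are assumptions; the sketch uses plain NumPy for clarity (MATLAB's `imopen`/`imclose` and SciPy's `scipy.ndimage.binary_opening` provide the same operations).

```python
import numpy as np


def _shifted_stack(mask: np.ndarray, k: int) -> np.ndarray:
    """Stack the k*k shifted copies of a boolean mask (square structuring element).

    Pixels outside the image are treated as background (False).
    """
    r = k // 2
    padded = np.pad(mask, r, mode="constant", constant_values=False)
    h, w = mask.shape
    return np.stack([padded[i:i + h, j:j + w] for i in range(k) for j in range(k)])


def erode(mask: np.ndarray, k: int = 3) -> np.ndarray:
    """A pixel survives only if its whole k x k neighbourhood is foreground."""
    return _shifted_stack(mask, k).all(axis=0)


def dilate(mask: np.ndarray, k: int = 3) -> np.ndarray:
    """A pixel becomes foreground if any pixel in its k x k neighbourhood is."""
    return _shifted_stack(mask, k).any(axis=0)


def open_mask(mask: np.ndarray, k: int = 3) -> np.ndarray:
    """Opening: erosion then dilation; removes isolated specks smaller than k x k."""
    return dilate(erode(mask, k), k)


def close_mask(mask: np.ndarray, k: int = 3) -> np.ndarray:
    """Closing: dilation then erosion; fills small holes inside the hand region."""
    return erode(dilate(mask, k), k)


mask = np.zeros((10, 10), dtype=bool)
mask[2:7, 2:7] = True  # 5 x 5 "hand" blob
mask[8, 8] = True      # isolated noise pixel
clean = open_mask(mask)  # blob kept, noise pixel removed
```

A larger structuring element removes bigger noise blobs but also erodes thin features such as extended fingers, so `k` trades noise suppression against shape detail.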

These features of the hand gestures are used for recognition, after the high-dimensional data has been reduced to fewer dimensions. A sequence of gestures is processed, and after data processing and image segmentation each gesture is checked to see whether it has been recognized. If all gestures are recognized, the recognition process is complete; otherwise, image segmentation is repeated. These are the important parameters of hand gestures for the recognition process.

Table 1. Features of gesture recognition

| Feature | Principal component analysis (PCA) |
|---|---|
| Applications | PCA is beneficial in important fields such as criminal investigation. |
| Computations for large datasets | PCA does not require large computations. |
| Noise | Noise is reduced by keeping only the directions of maximum variation; small variations in the background are ignored automatically. |
| Focus | The extraction algorithm captures the widest variations, including those in the background. |
| Redundancy | The data has no redundancy. |
| Supervised learning technique | PCA is an unsupervised learning technique. |
| Discrimination | PCA makes no assumption about the structure of the data. |
| Direction of maximum discrimination | The directions of maximum discrimination are not necessarily the same as the directions of maximum variance. Background noise in the images is removed by filtering. |
| Well distribution | PCA is a dimensionality reduction technique and is well distributed in the system. |

NaN/nan: Not a number (e.g. the result of 0/0).

realmin: Smallest positive normalized floating-point number (approximately 2.2251e-308).

realmax: Largest finite floating-point number (approximately 1.7977e+308).

i/j: Imaginary unit, the square root of -1.

These are special values predefined in MATLAB R2013a, which was used for gesture recognition.
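For readers reproducing the pre-processing outside MATLAB, the same IEEE 754 double-precision values are available in Python's standard library. A minimal sketch; note one behavioural difference: in Python, `0.0 / 0.0` raises `ZeroDivisionError` instead of returning NaN, so NaN is taken from `math.nan` here. The variable names are illustrative.

```python
import math
import sys

# Python counterparts of the MATLAB special values listed above (IEEE 754 doubles).
nan = math.nan                # MATLAB NaN / nan
realmin = sys.float_info.min  # MATLAB realmin: smallest positive normalized double
realmax = sys.float_info.max  # MATLAB realmax: largest finite double
imag_unit = 1j                # MATLAB i / j: square root of -1

print(realmin)                # 2.2250738585072014e-308
print(realmax)                # 1.7976931348623157e+308
print(imag_unit * imag_unit)  # (-1+0j)
print(nan != nan)             # True: NaN is unequal to everything, itself included
```

As in MATLAB, smaller subnormal values below `realmin` still exist; `realmin` is only the smallest value with full precision.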

MATLAB is an interpreted language, which has the following characteristics:

a.) Code does not need to be compiled before it is run.

b.) It can be linked with C, C++, Java and SQL.

c.) Interpreted code is somewhat slower than compiled code.

MATLAB R2013a is used for the pre-processing stage of hand gesture recognition.

Fig.2. System Description.

III. Related Work

Shreyashi Narayan Sawant, M.S. Kumbhar: present ‘Real Time Sign Language Recognition using PCA’. This paper describes feature extraction and feature reduction, with principal component analysis (PCA) used as the main feature extraction and dimensionality reduction method [1]. Morphological filters are used to reduce noise in the images, and PCA is used for gesture recognition.

Sanjay Singh et al.: present ‘Real-Time FPGA based Implementation of Color Image Edge Detection’. Edge detection is a basic but important step in many applications such as object tracking, object identification and image segmentation. The main challenge in real-time color image edge detection is the high volume of data to be processed [10].

Hiroomi Hikawa, Keishi Kaida: ‘Novel FPGA Implementation of Hand Sign Recognition System with SOM-Hebb Classifier’ presents a hand sign recognition system based on a hybrid network consisting of a self-organizing map (SOM) and a Hebbian learning network [2]. Features extracted from the input gesture images are mapped onto a lower-dimensional map of neurons. The Hebbian network is a single-layer feed-forward neural network, and Hebbian learning identifies the sign categories.

Bhavina Patel et al.: This paper describes the design and working of a microcontroller-based gesture recognition system that helps a hearing person communicate with deaf, mute or blind people. The system converts sign language into voice that blind or hearing people can understand, and into text for deaf people [12].

Archana S. Ghotkar et al.: This paper discusses vision-based hand gesture recognition, since the hand is a vital mode of communication. Vision-based systems, which rely on computer vision and pattern recognition, face different challenges from traditional hardware-based systems.

Siddharth S. Rautaray et al.: This paper describes computer vision algorithms and gesture recognition techniques. Vision-based systems are a costly way to detect hand gestures. Such systems can be used in control applications such as virtual games and browsing images in a virtual environment with hand gestures [9].

Asanterabi Malima et al.: This paper describes a fast and simple hand gesture recognition algorithm for robot control. The algorithm segments the hand region and then makes inferences about the activity of the fingers involved in the gesture. The segmentation algorithm is very simple.

Rafiqul Zaman Khan et al.: This paper reviews methods based on neural networks, hidden Markov models (HMMs) and fuzzy c-means clustering, together with their feature representations [7]. HMM tools are well suited to dynamic gestures and are especially efficient for robot control. The choice of recognition algorithm depends on the application [8].

Nidhi Gupta et al.: This paper presents a system that detects hand gesture motion using the Doppler effect. A sensor module transmits ultrasonic waves, which are reflected by the moving hand; the received waves are frequency-shifted because of the Doppler effect. Noise in the human audible range does not affect detection.
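The frequency shift that such a system measures follows from the Doppler relation for a wave reflected by a moving target. Below is the standard textbook form for a stationary transmitter and receiver and a hand moving toward them at speed $v$, with $c$ the speed of sound and $f_0$ the transmitted frequency; the numbers in the worked example ($f_0 = 40$ kHz, $v = 0.5$ m/s, $c \approx 343$ m/s in air) are illustrative assumptions, not values from the cited work.

```latex
f_r = f_0\,\frac{c + v}{c - v} \approx f_0\left(1 + \frac{2v}{c}\right) \quad (v \ll c),
\qquad
\Delta f = f_r - f_0 \approx \frac{2 v f_0}{c}

\Delta f \approx \frac{2 \times 0.5\ \text{m/s} \times 40\,000\ \text{Hz}}{343\ \text{m/s}} \approx 117\ \text{Hz}
```

A hand moving away from the sensor gives a negative shift of the same size to first order, so the sign of $\Delta f$ indicates the direction of motion.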

Ruiduo Yang et al.: This paper uses dynamic programming to overcome low-level hand segmentation errors. Segmentation is the process that separates an object from the background of an image; in this paper, segmentation errors are handled by nested dynamic programming [13].

Krishnakant C. Mule & Anilkumar N. Holambe: describe ‘Hand Gesture Recognition using PCA and Histogram Projection’, in which PCA is used as a feature extraction and reduction method. The paper shows how to use histogram projection as a feature extraction method on the pixels of the input image, and then how to use principal component analysis (PCA) to reduce the size of the feature vector [6].

Monuri Hemantha, M.V. Srikanth: describe ‘Simulation of Real Time Hand Gesture Recognition for Physically Impaired’, in which sign language is used to communicate with speech-impaired people [5]. A vision-based approach is used for recognition, providing a human-machine interface for gesture recognition. The system does not require sensors or markers attached to the user and allows unrestricted symbol or character input from any position.

Jonathan Alon et al.: ‘Simultaneous Localization and Recognition of Dynamic Hand Gestures’. This paper uses the dynamic space-time warping (DSTW) algorithm [16], which aligns a pair of query and model gestures in both space and time. Dynamic programming is then used to compute the global matching cost, which is used to recognize the gesture.
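DSTW extends classic dynamic time warping (DTW) with spatial alignment, and its core is the DTW dynamic-programming recurrence. The sketch below shows only plain 1-D DTW, not DSTW itself: it assumes each gesture has already been reduced to one scalar feature per frame (for example, a hand x-coordinate, which is a hypothetical choice) and uses the absolute difference as the local matching cost. `dtw_cost` is a hypothetical name.

```python
import numpy as np


def dtw_cost(query, model) -> float:
    """Classic dynamic time warping cost between two 1-D feature sequences.

    D[i, j] = |q_i - m_j| + min(D[i-1, j], D[i, j-1], D[i-1, j-1]),
    with D[0, 0] = 0 and all other border cells set to infinity.
    """
    n, m = len(query), len(model)
    D = np.full((n + 1, m + 1), np.inf)
    D[0, 0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            local = abs(query[i - 1] - model[j - 1])  # cost of matching frame i to frame j
            D[i, j] = local + min(D[i - 1, j], D[i, j - 1], D[i - 1, j - 1])
    return float(D[n, m])


# The same gesture performed at different speeds aligns with zero cost.
print(dtw_cost([0, 1, 2], [0, 1, 1, 2]))  # 0.0
```

To recognize a query, its cost would be computed against every model gesture and the model with the smallest global cost chosen.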

Zijian Wang et al.: ‘Vision-based Hand Gesture Interaction using Particle Filter, Principal Component Analysis and Transition Network’. This system uses a vision-based technique with two main approaches: particle filters and PCA. PCA is used as a feature extraction and reduction method, and an algorithm based on a particle filter is used to track the hand gesture.

Fig.5. Applications performing sign C.

IV. Results

In the proposed system, a set of 26 images of a human hand is used for recognition. The images are of a single person and are captured with a webcam against a white background to remove illusion effects. The gestures are pre-processed and recognized in MATLAB R2013a. In the vision-based technique, the webcam captures gesture images or videos, which are used as input to the system.

Fig.6. Applications performing sign D.

Fig.3. Applications performing sign A.

These figures show the application recognizing gesture A from webcam input images.

Fig.4. Applications performing sign B.

Fig.7. Applications performing sign E.

The results above take webcam images as input for feature extraction and gesture recognition. To recognize a gesture, the minimum distance between the test image and the training images is calculated. The applications detect and recognize the different hand gestures or signs. MATLAB R2013a is used for co-simulation, and morphological filters are used to reduce noise.
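The minimum-distance rule is a nearest-neighbour classifier. A minimal sketch, assuming the test and training images have already been projected to PCA feature vectors and that the distance is Euclidean (the text does not name the metric); `nearest_neighbour` and the toy data are hypothetical.

```python
import numpy as np


def nearest_neighbour(train_feats: np.ndarray, train_labels, test_feat: np.ndarray):
    """Return (label, distance) of the training vector closest to test_feat.

    train_feats: M x k array, one PCA feature vector per training image.
    train_labels: sequence of M labels (e.g. letters "A"-"Z").
    test_feat: length-k feature vector of the test image.
    """
    dists = np.linalg.norm(train_feats - test_feat, axis=1)  # Euclidean distance to each training vector
    idx = int(np.argmin(dists))                              # index of the minimum distance
    return train_labels[idx], float(dists[idx])


train = np.array([[0.0, 0.0], [10.0, 10.0], [0.0, 10.0]])
labels = ["A", "B", "C"]
print(nearest_neighbour(train, labels, np.array([1.0, 2.0]))[0])  # A
```

Returning the distance as well as the label allows a rejection threshold to be added, so that a gesture far from every training image can be reported as unrecognized.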

MATLAB (MATrix LABoratory) is a fourth-generation programming language developed by MathWorks. It can interface with languages such as C, C++, Java and Fortran. MATLAB is used for numerical computation and was designed for solving linear algebra problems.

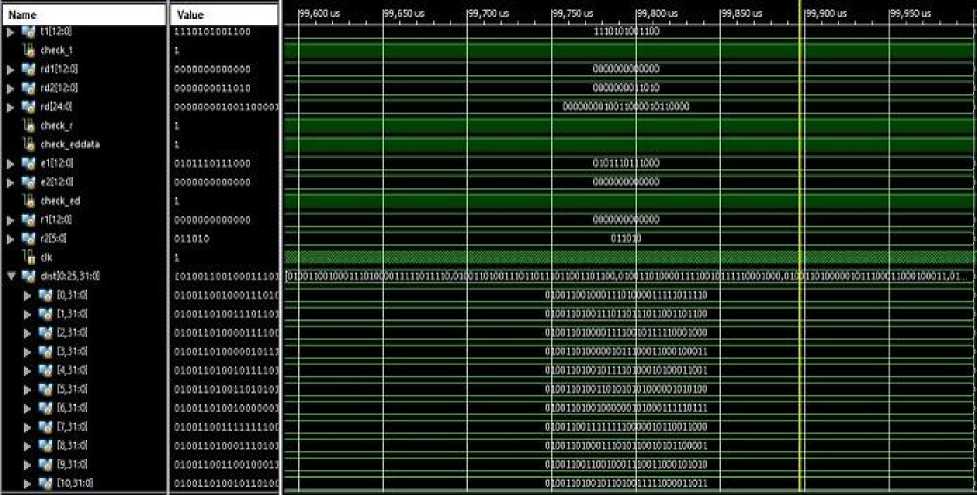

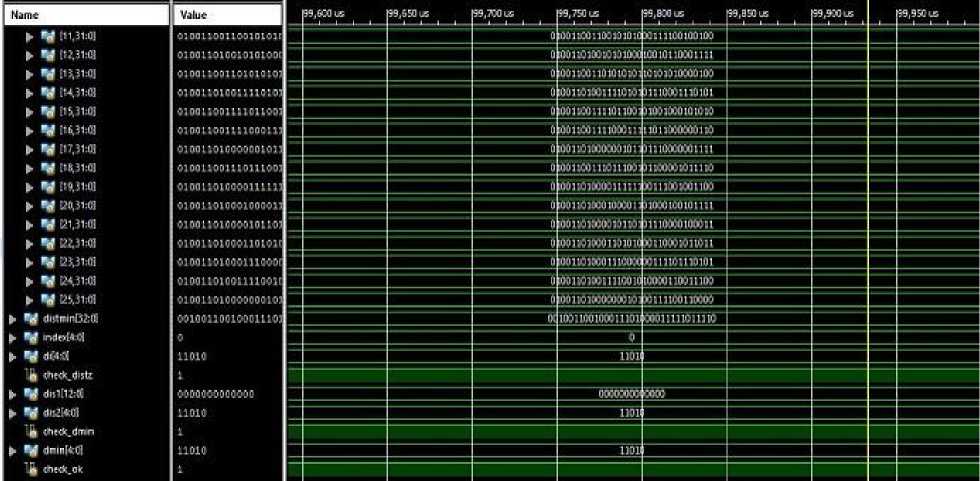

Fig.8. Simulation of gesture A with input values.

Fig.9. Simulation of gesture A with output values.

These are the results of hand gesture recognition obtained with Xilinx ISE 14.1, the software tool used for synthesis and simulation. Image segmentation separates the hand pixels from the image background.

Fig.10. Results of image segmentation for gesture recognition.

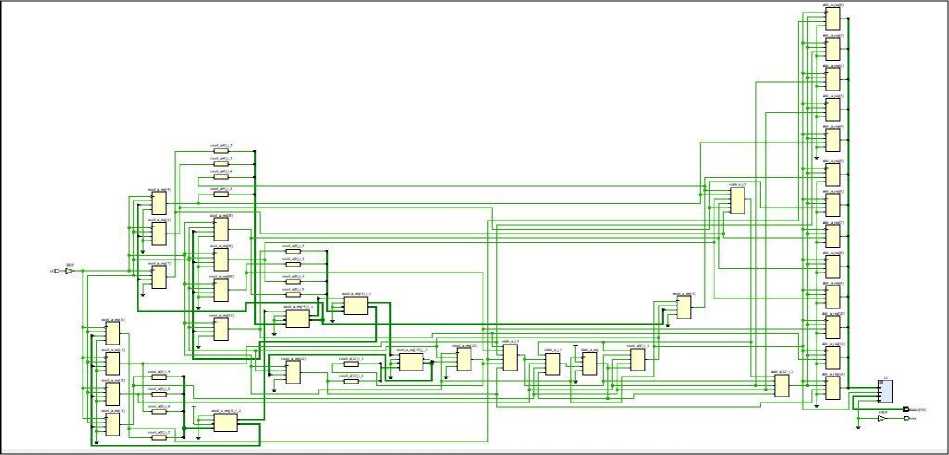

A. Schematic Diagram

Fig.11. Schematic for gesture recognition.

B. Synthesis

hand_gesture_m_test Project Status (06/30/2016 - 22:07:19)

| Property | Value |
|---|---|
| Project File | hand_gest_m_test_imp.xise |
| Parser Errors | No Errors |
| Module Name | hand_gesture_m_test |
| Implementation State | Translated |
| Target Device | xc6slx45t-3fgg484 |
| Errors | No Errors |
| Product Version | ISE 14.7 |
| Warnings | 230 Warnings (230 new) |
| Design Goal | Balanced |
| Routing Results | |
| Design Strategy | Xilinx Default (unlocked) |
| Timing Constraints | |
| Environment | System Settings |
| Final Timing Score | |

Device Utilization Summary (estimated values)

| Logic Utilization | Used | Available | Utilization |
|---|---|---|---|
| Number of Slice Registers | 996 | 54576 | 1% |
| Number of Slice LUTs | 12784 | 27288 | 46% |
| Number of fully used LUT-FF pairs | 978 | 12802 | 7% |
| Number of bonded IOBs | 6 | 296 | 2% |
| Number of Block RAM/FIFO | 10 | 116 | 8% |
| Number of BUFG/BUFGCTRLs | 1 | 16 | 6% |

Fig.12. Synthesis report.
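The Utilization column is simply Used / Available. A quick check in Python, with the values copied from the summary above, shows that truncating the percentage to an integer reproduces every reported figure (e.g. 12784 / 27288 ≈ 46.8%, reported as 46%); that ISE truncates instead of rounding is an inference from these numbers, not something stated in the report. `utilization_pct` is a hypothetical helper.

```python
# (Used, Available) pairs copied from the device utilization summary.
RESOURCES = {
    "Slice Registers": (996, 54576),
    "Slice LUTs": (12784, 27288),
    "Fully used LUT-FF pairs": (978, 12802),
    "Bonded IOBs": (6, 296),
    "Block RAM/FIFO": (10, 116),
    "BUFG/BUFGCTRLs": (1, 16),
}


def utilization_pct(used: int, available: int) -> int:
    """Integer percentage of a resource in use, truncated toward zero."""
    return used * 100 // available


for name, (used, available) in RESOURCES.items():
    print(f"{name}: {utilization_pct(used, available)}%")
```

Slice LUTs are the limiting resource at roughly half the device, while registers and I/O are almost unused.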

Xilinx ISE (Integrated Synthesis Environment) is a software tool produced by Xilinx for the synthesis and analysis of HDL designs. It can be used to examine RTL diagrams and to perform timing analysis.

Xilinx ISE includes the Embedded Development Kit (EDK), a Software Development Kit (SDK) and ChipScope Pro.

These are the results of hand gesture recognition. The pre-processing results are obtained with MATLAB R2013a, and the post-processing results with Xilinx ISE 14.1. Noise in the images is reduced and accuracy is improved to up to 90%.

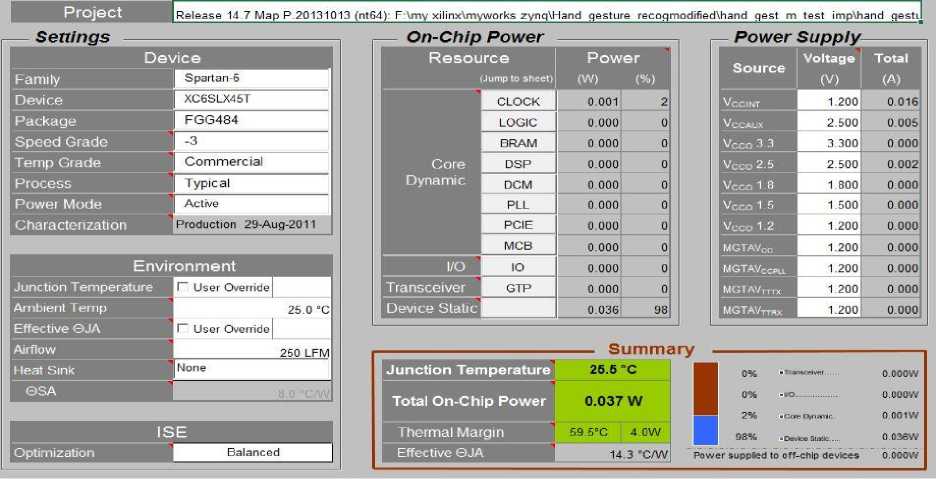

C. Power Utilization

Fig.13. FPGA implementation of gesture recognition.

V. Conclusion

This paper presents a human-computer interaction system in which hand motions and movements are used as input instead of a mouse and keyboard. This interaction is natural and requires no mechanical interaction devices. Vision-based human-computer interaction is becoming increasingly important. Such a system can also be used in games, where gestures replace the mouse and keyboard, and for security, by using hand-gesture passwords. Gesture recognition can also be built into electronic devices by adding a sensor that recognizes hand gestures. The system uses PCA-based features, and morphological filters to reduce noise in the images. It aims to improve the accuracy, reliability and recognition time of the interaction. Gestures are recognized from hand movements captured by a low-cost webcam, and the system achieves good recognition performance and accuracy.

Acknowledgment

References

- Shreyashi Narayan Sawant, M.S. Kumbhar, "Real Time Sign Language Recognition using PCA," 2014 IEEE International Conference on Advanced Communication Control and Computing Technologies (ICACCCT), 2014.

- Hiroomi Hikawa and Keishi Kaida, "Novel FPGA Implementation of Hand Sign Recognition System with SOM-Hebb Classifier," IEEE Transactions on Circuits and Systems for Video Technology, Vol. 25, No. 1, January 2015.

- Amarjot Singh et al., "Autonomous Multiple Gesture Recognition System for Disabled People," I.J. Image, Graphics and Signal Processing, No. 2, pp. 39-45, MECS, 2014.

- Swapnil V. Ghorpade, Sagar A. Patil, Anmol B. Gore, Govind A. Pawar, "Hand Gesture Recognition System for Daily Information Retrieval," International Journal of Innovative Research in Computer and Communication Engineering, Vol. 2, Issue 3, March 2014.

- Monuri Hemantha et al., "Simulation of Real Time Hand Gesture Recognition for Physically Impaired," International Journal of Advanced Research in Computer and Communication Engineering, Vol. 2, Issue 11, November 2013.

- Krishnakant C. Mule et al., "Hand Gesture Recognition using PCA and Histogram Projection," International Journal on Advanced Computer Theory and Engineering (IJACTE), Vol. 2, Issue 2, 2013.

- Rafiqul Zaman Khan and Noor Adnan Ibraheem, "Comparative Study of Hand Gesture Recognition System," Computer Science and Information Technology, pp. 203-213, 2012.

- Rafiqul Zaman Khan and Noor Adnan Ibraheem, "Survey on Gesture Recognition for Hand Image Postures," Computer and Information Science, Vol. 5, No. 3, May 2012.

- Siddharth S. Rautaray, Anupam Agarwal, "Real Time Hand Gesture Recognition for Dynamic Applications," International Journal of UbiComp (IJU), Vol. 3, No. 1, January 2012.

- Sanjay Singh et al., "Real-Time FPGA Based Implementation of Color Image Edge Detection," I.J. Image, Graphics and Signal Processing, No. 12, pp. 19-25, MECS, 2012.

- Cem Keskin, Ali Taylan Cemgil, and Lale Akarun, "DTW Based Clustering to Improve Hand Gesture Recognition," Springer-Verlag Berlin Heidelberg, pp. 72-81, 2011.

- Bhavina Patel, Vandana Shah, Ravindra Kshirsagar, "Microcontroller Based Gesture Recognition System for the Handicap People," Journal of Engineering Research and Studies, Vol. 2, Issue 4, 2011.

- Ruiduo Yang, Sudeep Sarkar et al., "Handling Movement Epenthesis and Hand Segmentation Ambiguities in Continuous Sign Language Recognition using Nested Dynamic Programming," IEEE Transactions on Pattern Analysis and Machine Intelligence, Vol. 32, No. 3, March 2010.

- Pragati Garg, Naveen Aggarwal and Sanjeev Sofat, "Vision Based Hand Gesture Recognition," International Journal of Computer, Electrical, Automation, Control and Information Engineering, Vol. 3, No. 1, 2009.

- S.S. Ge, Y. Yang and T.H. Lee, "Hand Gesture Recognition and Tracking Based on Distributed Locally Linear Embedding," Image and Vision Computing, Vol. 26, pp. 1607-1620, 2008.

- Jonathan Alon, Vassilis Athitsos, Quan Yuan, and Stan Sclaroff, "Simultaneous Localization and Recognition of Dynamic Hand Gestures," IEEE Workshop on Motion and Video Computing, January 2005.