Improving facial image recognition based neutrosophy and DWT using fully center symmetric dual cross pattern

Authors: Turker Tuncer, Sengul Dogan

Journal: International Journal of Image, Graphics and Signal Processing @ijigsp

Published in: Vol. 11, No. 6, 2019.

Free access

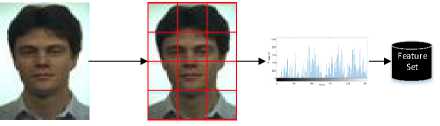

Face recognition is one of the most commonly used biometric features for identifying people. In this article, a novel facial image recognition architecture is proposed together with a novel image descriptor called the fully center symmetric dual cross pattern (FCSDCP). The proposed architecture consists of preprocessing, feature extraction and classification phases. In the preprocessing phase, discrete wavelet transform (DWT) and Neutrosophy are used together to calculate coefficients of the face images. The proposed FCSDCP extracts the features. LDA, QDA, SVM and KNN are utilized as classifiers. Four datasets were chosen for the experiments, and the results of the proposed method were compared to other state-of-the-art image descriptor based methods. The results clearly show that the proposed method is successful for face classification.

Fully Center Symmetric Dual Cross Pattern, Neutrosophy, DWT, Facial Image Recognition

Short URL: https://sciup.org/15016061

IDR: 15016061 | DOI: 10.5815/ijigsp.2019.06.05

Full text of the article: Improving facial image recognition based neutrosophy and DWT using fully center symmetric dual cross pattern

Published Online June 2019 in MECS DOI: 10.5815/ijigsp.2019.06.05

I. Introduction

Biometric systems aim to identify people using their physical or behavioral characteristics [1-3]. Today, many security-critical systems use a variety of biometric systems because no additional card or device is needed to identify people in these systems [4]. Biometric systems have many application areas such as digital forensics, medicine and access control [4-7]. Biometric systems are divided into physical (fingerprint, iris, face, retina, palm, palm-vein, ear) and behavioral (signature, gait recognition) types. Face recognition is one of the most widely used physical biometric systems because facial data is public and researchers can easily create facial image datasets. Face recognition systems are developed for identification within a company in accordance with its security procedures and for criminal/person identification applications. Every individual who will use an automatic face recognition system should go through the standard face matching procedure. The samples in this system are updated at certain intervals. In addition, the progress of technology has led to the rapid development and widespread use of these devices in hardware and software [3-6]. Several methods have been proposed in the literature to solve face recognition and classification problems [7,8]. Facial image recognition methods based on image descriptors have achieved satisfactory results, and they have therefore been widely used to solve these problems [9-12].

In this study, a novel descriptor is proposed and compared to the LBP, DCP and LQPAT descriptors, which were chosen because they are widely used in the literature. A Neutrosophy and DWT based method is proposed to improve the performance and robustness of the proposed descriptor in facial image recognition applications. Accuracy is used as the performance measure, and the obtained results are examined.

The major contributions of the proposed method are given below.

- DCP is a new-generation image descriptor for face recognition, but it does not use all of the pixels in a 5 x 5 block. Therefore, a novel image descriptor is presented to improve the feature extraction capability of DCP. This descriptor is called the fully center symmetric dual cross pattern (FCSDCP).

- Neutrosophy and DWT are well-known transformations that have been widely used for face classification. In this study, a hybrid transformation is proposed that uses Neutrosophy and DWT together to combine the advantages of both. The experiments demonstrate the success of the proposed hybrid transformation.

- Four classifiers are used to test the success of the proposed transformation and the proposed descriptor.

- The proposed methods are compared to previously presented methods, and the comparisons clearly demonstrate that the proposed methods have higher classification ability than the others.

A novel descriptor based on Neutrosophy and DWT is proposed for facial image recognition. The related works are given in Section 2. In Section 3, Neutrosophic Sets and DWT are presented. At the same time, the feature extraction process of the LBP, DCP, and LQPAT descriptors is elaborated. The proposed descriptor is described in Section 4. The proposed Neutrosophy and DWT based facial image recognition method is presented in Section 5. The experimental results are given in Section 6. In Section 7, conclusions and future works are mentioned.

II. Background

A. Neutrosophic Sets

Neutrosophy is one of the most widely used fuzzy-based transformations. The theory of neutrosophy analyzes a proposition A as A (True), Anti-A (False) and Non-A together. The mathematical description of Neutrosophy is given in Eq. (1) [13].

[T I F] = Neut(A) (1)

where T, I and F are the true, indeterminate and false neutrosophic sets, respectively, and Neut(.) denotes the neutrosophy function. These sets are obtained using Eqs. (2)-(7).

$$T(i,j) = 1 - \frac{\bar{a}_{max} - \bar{a}(i,j)}{\bar{a}_{max} - \bar{a}_{min}} \tag{2}$$

$$I(i,j) = 1 - \frac{diff_{max} - diff(i,j)}{diff_{max} - diff_{min}} \tag{3}$$

$$diff(i,j) = |a(i,j) - \bar{a}(i,j)| \tag{4}$$

$$diff_{max} = \max\{diff(i,j) \mid i \in P, j \in Q\} \tag{5}$$

$$diff_{min} = \min\{diff(i,j) \mid i \in P, j \in Q\} \tag{6}$$

$$F(i,j) = 1 - T(i,j) \tag{7}$$

where $a$ is the input image and $\bar{a}$ is the mean image, constructed from the mean values of each m x m overlapping block with m > 1; $\bar{a}(i,j)$ is the mean value of each block.

$\bar{a}_{max}$ is the maximum value of $\bar{a}(i,j)$, $\forall i \in P, \forall j \in Q$. $\bar{a}_{min}$ is the minimum value of $\bar{a}(i,j)$, $\forall i \in P, \forall j \in Q$. $diff(i,j)$ is the absolute value of the difference between the element $a(i,j)$ and its local mean value $\bar{a}(i,j)$.

Neutrosophy has been used in many different areas for instance noise reduction, thresholding, segmentation in image processing. Neutrosophy can extract salient features from the face image [14-17].
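The computation of the T, I and F sets can be sketched in plain Python as follows. This is a minimal illustration, not the authors' implementation: it assumes that Eqs. (2) and (3) amount to min-max normalization of the local mean image and of the absolute difference image, and the function names, the edge replication at image borders, the odd block size and the handling of constant images are all assumptions made for the example.

```python
def local_mean(img, m=3):
    """Mean of each m x m overlapping block (m odd); borders are replicated (assumption)."""
    if m % 2 == 0 or m < 3:
        raise ValueError("this sketch expects an odd block size m >= 3")
    h, w = len(img), len(img[0])
    r = m // 2
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            total = 0.0
            for di in range(-r, r + 1):
                for dj in range(-r, r + 1):
                    y = min(max(i + di, 0), h - 1)  # clamp row index to the image
                    x = min(max(j + dj, 0), w - 1)  # clamp column index to the image
                    total += img[y][x]
            out[i][j] = total / (m * m)
    return out


def min_max_normalize(mat):
    """Scale values to [0, 1]; a constant matrix maps to all zeros (assumption)."""
    lo = min(min(row) for row in mat)
    hi = max(max(row) for row in mat)
    span = hi - lo
    if span == 0:
        return [[0.0] * len(mat[0]) for _ in mat]
    return [[(v - lo) / span for v in row] for row in mat]


def neutrosophic_sets(img, m=3):
    """Return the T, I and F sets of an image given as a list of rows."""
    mean = local_mean(img, m)
    diff = [[abs(a - b) for a, b in zip(ra, rb)] for ra, rb in zip(img, mean)]
    T = min_max_normalize(mean)   # truth set from the local mean image
    I = min_max_normalize(diff)   # indeterminacy set from |a - mean|
    F = [[1.0 - t for t in row] for row in T]  # falsity set, F = 1 - T
    return T, I, F
```

Note that 1 - (max - x)/(max - min) and (x - min)/(max - min) are algebraically identical, so the normalization helper can be written in either form.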


B. Discrete Wavelet Transform (DWT)

DWT is a widely used method for obtaining transformation coefficients. DWT separates an image into two types of coefficients, called approximation and detail wavelet coefficients. Approximation wavelet coefficients represent the low frequency components (LL), while detail wavelet coefficients include the high frequency components (LH, HL, HH) [18, 19]. The DWT equation is given below.

$$[LL, LH, HL, HH] = \mathrm{DWT2}(\mathit{image}, \text{'Haar'}) \tag{8}$$

DWT is widely used in image processing because the human visual system is less sensitive to the noise generated by processing the bands obtained from DWT. The representation of the DWT is shown in Fig. 1 [20].

Fig.1. Representation of the DWT: a 2D-DWT decomposes the image I into the LL, LH, HL and HH sub-bands

The LL band is the approximation band and is robust against JPEG compression. The LH, HL and HH sub-bands include the detail and textural features of the image. The LH band expresses horizontal details, the HL band expresses vertical details, and the HH band represents diagonal details [18]. The LH, HL and HH sub-bands are generally used for textural feature extraction [19].
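A single-level 2D Haar decomposition can be sketched in plain Python to make the four sub-bands concrete. This is an illustrative sketch rather than the routine used in the paper: the function name `haar_dwt2`, the orthonormal scaling by 1/2, the sign of the detail coefficients and the assignment of the names LH (row differences, horizontal detail) and HL (column differences, vertical detail) are assumptions, and library implementations may order or sign the detail bands differently.

```python
def haar_dwt2(img):
    """Single-level 2D Haar transform of an image with even height and width.

    Each non-overlapping 2 x 2 block [[a, b], [c, d]] yields one coefficient
    per sub-band, so every sub-band is half the size of the input.
    """
    h, w = len(img), len(img[0])
    if h % 2 or w % 2:
        raise ValueError("height and width must be even")
    LL, LH, HL, HH = [], [], [], []
    for i in range(0, h, 2):
        ll, lh, hl, hh = [], [], [], []
        for j in range(0, w, 2):
            a, b = img[i][j], img[i][j + 1]
            c, d = img[i + 1][j], img[i + 1][j + 1]
            ll.append((a + b + c + d) / 2.0)  # approximation (low-low)
            lh.append((a + b - c - d) / 2.0)  # difference between rows: horizontal detail
            hl.append((a - b + c - d) / 2.0)  # difference between columns: vertical detail
            hh.append((a - b - c + d) / 2.0)  # diagonal detail
        LL.append(ll)
        LH.append(lh)
        HL.append(hl)
        HH.append(hh)
    return LL, LH, HL, HH
```

With this scaling the transform is orthonormal, so the sum of squared coefficients over the four sub-bands equals the sum of squared pixel values of the input.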


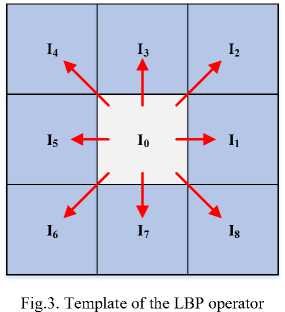

C. Local Binary Pattern (LBP)

Local binary pattern (LBP) was developed by Ojala in 1996 [28]. The main attributes of LBP are as follows. LBP is a fast feature extractor and can extract distinctive features in a short time. It is simple and has a basic mathematical structure [14,15,21]. The representation of LBP is shown in Fig.2.

Fig.2. Representation of LBP

The success of LBP has led to the development of different descriptors. The feature extraction process of the LBP method is given in Fig.3. In LBP, the image is divided into overlapping blocks of size 3 x 3, and the binary features are extracted using the signum function.

In the LBP method, the following equations are used to obtain the feature set [22].

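$$A^{1}_{i,j} = S(I_1, I_0) \times 2^7 + S(I_2, I_0) \times 2^6 \tag{9}$$

$$A^{2}_{i,j} = S(I_3, I_0) \times 2^5 + S(I_4, I_0) \times 2^4 \tag{10}$$

$$A^{3}_{i,j} = S(I_5, I_0) \times 2^3 + S(I_6, I_0) \times 2^2 \tag{11}$$

$$A^{4}_{i,j} = S(I_7, I_0) \times 2^1 + S(I_8, I_0) \times 2^0 \tag{12}$$

$$A = A^{1}_{i,j} + A^{2}_{i,j} + A^{3}_{i,j} + A^{4}_{i,j} \tag{13}$$

$$S(x,y) = \begin{cases} 0, & x < y \\ 1, & x \geq y \end{cases} \tag{14}$$

$$LBP = \mathrm{Histogram}(A) \tag{15}$$

where S(.) is the signum function, which is used as the binary feature extraction function.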
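The LBP equations above can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions: the neighbor order (I1 at the top-left, then clockwise around the center I0) is a guess at the template of Fig. 2, S(x, y) is taken to return 1 when x is greater than or equal to y, and the function names are hypothetical.

```python
# Assumed neighbor order I1..I8: clockwise starting at the top-left pixel.
LBP_OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def S(x, y):
    """Signum-style comparison used as the binary feature extraction function."""
    return 1 if x >= y else 0


def lbp_codes(img):
    """LBP code of every interior pixel, using overlapping 3 x 3 blocks."""
    h, w = len(img), len(img[0])
    codes = []
    for i in range(1, h - 1):
        row = []
        for j in range(1, w - 1):
            center = img[i][j]
            code = 0
            for k, (di, dj) in enumerate(LBP_OFFSETS):
                # I1 is weighted by 2^7, I2 by 2^6, ..., I8 by 2^0.
                code += S(img[i + di][j + dj], center) << (7 - k)
            row.append(code)
        codes.append(row)
    return codes


def histogram(codes, bins=256):
    """Histogram of the code image; this is the LBP feature set."""
    counts = [0] * bins
    for row in codes:
        for value in row:
            counts[value] += 1
    return counts
```

For an image of size h x w the code image has size (h - 2) x (w - 2), and the feature vector always has 256 entries regardless of the image size.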


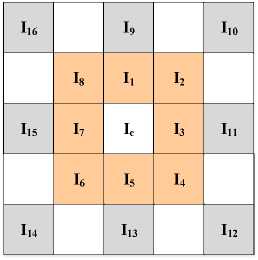

D. Dual Cross Pattern (DCP)

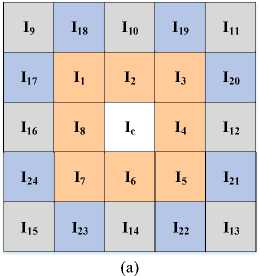

DCP divides the image into 5 x 5 blocks. As shown in Fig. 4, the DCP method obtains a common histogram by evaluating the [I1-I8] pixels and the [I9-I16] pixels separately [23,24].

Fig.4. Template of the DCP operator

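DCP obtains the feature set of an image using the following equations.

$$A^{1}_{i,j} = S(I_1, I_c) \times 2 + S(I_9, I_1) \tag{16}$$

$$A^{2}_{i,j} = S(I_2, I_c) \times 2 + S(I_{10}, I_2) \tag{17}$$

$$A^{3}_{i,j} = S(I_3, I_c) \times 2 + S(I_{11}, I_3) \tag{18}$$

$$A^{4}_{i,j} = S(I_4, I_c) \times 2 + S(I_{12}, I_4) \tag{19}$$

$$A^{5}_{i,j} = S(I_5, I_c) \times 2 + S(I_{13}, I_5) \tag{20}$$

$$A^{6}_{i,j} = S(I_6, I_c) \times 2 + S(I_{14}, I_6) \tag{21}$$

$$A^{7}_{i,j} = S(I_7, I_c) \times 2 + S(I_{15}, I_7) \tag{22}$$

$$A^{8}_{i,j} = S(I_8, I_c) \times 2 + S(I_{16}, I_8) \tag{23}$$

$$A_1 = A^{1}_{i,j} \times 4^3 + A^{3}_{i,j} \times 4^2 + A^{5}_{i,j} \times 4^1 + A^{7}_{i,j} \times 4^0 \tag{24}$$

$$A_2 = A^{2}_{i,j} \times 4^3 + A^{4}_{i,j} \times 4^2 + A^{6}_{i,j} \times 4^1 + A^{8}_{i,j} \times 4^0 \tag{25}$$

$$feature\_set = conc(\mathrm{Histogram}(A_1), \mathrm{Histogram}(A_2)) \tag{26}$$

where conc(.) is the feature concatenation function. Two feature images are constructed using DCP. In image descriptors, the histograms of the feature images are utilized as the feature set. Therefore, the conc(.) function is used to combine the histograms of the feature images.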
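The two DCP codes of a single 5 x 5 block can be sketched in plain Python as follows. This is an illustration under stated assumptions, not the reference implementation: the inner pixels I1 to I8 are assumed to lie clockwise around the center starting at the top-left neighbor, each outer pixel I9 to I16 is assumed to lie in the same direction at twice the distance, each inner pixel I_k is paired with the outer pixel I_(k+8), and S(x, y) is taken to return 1 when x is greater than or equal to y. All names are hypothetical.

```python
# Assumed offsets of I1..I8 relative to the center; I9..I16 use twice these offsets.
INNER = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def S(x, y):
    """Signum-style comparison: 1 if x >= y, else 0."""
    return 1 if x >= y else 0


def dcp_codes(block):
    """Return the two DCP codes (A1, A2) of a 5 x 5 block given as a list of rows."""
    center = block[2][2]
    directional = []
    for di, dj in INNER:
        inner = block[2 + di][2 + dj]
        outer = block[2 + 2 * di][2 + 2 * dj]
        # Two-bit code per direction: inner vs. center, then outer vs. inner.
        directional.append(S(inner, center) * 2 + S(outer, inner))
    # Odd-numbered directions (1, 3, 5, 7) form A1, even-numbered ones form A2.
    a1 = sum(directional[k] * 4 ** (3 - n) for n, k in enumerate((0, 2, 4, 6)))
    a2 = sum(directional[k] * 4 ** (3 - n) for n, k in enumerate((1, 3, 5, 7)))
    return a1, a2
```

Each of the two codes lies in the range 0 to 255, so the concatenated feature set of Eq. (26) has 512 bins when 256-bin histograms are used.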


E. Local Quadruple Pattern (LQPAT)

The Local Quadruple Pattern (LQPAT) is a descriptor used for face recognition. As shown in Fig. 5, in LQPAT the image is divided into 4 x 4 blocks, and each block is evaluated as four 2 x 2 sub-blocks for feature extraction [25,26].

| | | | |
|---|---|---|---|
| $I_0$ | $I_1$ | $I_4$ | $I_5$ |
| $I_2$ | $I_3$ | $I_6$ | $I_7$ |
| $I_8$ | $I_9$ | $I_{12}$ | $I_{13}$ |
| $I_{10}$ | $I_{11}$ | $I_{14}$ | $I_{15}$ |

Fig.5. Template of the LQPAT operator

The equations used by the LQPAT method for feature extraction are given below.

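$$A^{1}_{i,j} = S(I_0, I_4) \times 2^7 + S(I_1, I_5) \times 2^6 \tag{27}$$

$$A^{2}_{i,j} = S(I_2, I_6) \times 2^5 + S(I_3, I_7) \times 2^4 \tag{28}$$

$$A^{3}_{i,j} = S(I_4, I_{12}) \times 2^3 + S(I_5, I_{13}) \times 2^2 \tag{29}$$

$$A^{4}_{i,j} = S(I_6, I_{14}) \times 2^1 + S(I_7, I_{15}) \times 2^0 \tag{30}$$

$$A = A^{1}_{i,j} + A^{2}_{i,j} + A^{3}_{i,j} + A^{4}_{i,j} \tag{31}$$

$$B^{1}_{i,j} = S(I_{12}, I_8) \times 2^7 + S(I_{13}, I_9) \times 2^6 \tag{32}$$

$$B^{2}_{i,j} = S(I_{14}, I_{10}) \times 2^5 + S(I_{15}, I_{11}) \times 2^4 \tag{33}$$

$$B^{3}_{i,j} = S(I_8, I_0) \times 2^3 + S(I_9, I_1) \times 2^2 \tag{34}$$

$$B^{4}_{i,j} = S(I_{10}, I_2) \times 2^1 + S(I_{11}, I_3) \times 2^0 \tag{35}$$

$$B = B^{1}_{i,j} + B^{2}_{i,j} + B^{3}_{i,j} + B^{4}_{i,j} \tag{36}$$

$$LQPAT = \{\mathrm{Histogram}(A), \mathrm{Histogram}(B)\} \tag{37}$$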
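The two LQPAT codes of one 4 x 4 block can be sketched in plain Python as follows. The sketch assumes the pixel layout of the template in Fig. 5 (rows I0 I1 I4 I5, I2 I3 I6 I7, I8 I9 I12 I13 and I10 I11 I14 I15), reads the comparison pairs as each pixel compared with the pixel at the same position in the neighboring 2 x 2 sub-block, and takes S(x, y) to return 1 when x is greater than or equal to y. The names are hypothetical.

```python
# Pixel index at each position of the 4 x 4 block, following the LQPAT template.
LAYOUT = [
    [0, 1, 4, 5],
    [2, 3, 6, 7],
    [8, 9, 12, 13],
    [10, 11, 14, 15],
]

# Comparison pairs in bit order (first pair gets weight 2^7, last pair 2^0).
PAIRS_A = [(0, 4), (1, 5), (2, 6), (3, 7), (4, 12), (5, 13), (6, 14), (7, 15)]
PAIRS_B = [(12, 8), (13, 9), (14, 10), (15, 11), (8, 0), (9, 1), (10, 2), (11, 3)]


def S(x, y):
    """Signum-style comparison: 1 if x >= y, else 0."""
    return 1 if x >= y else 0


def lqpat_codes(block):
    """Return the codes (A, B) of a 4 x 4 block given as a list of rows."""
    pixel = {}
    for r in range(4):
        for c in range(4):
            pixel[LAYOUT[r][c]] = block[r][c]

    def encode(pairs):
        code = 0
        for k, (p, q) in enumerate(pairs):
            code += S(pixel[p], pixel[q]) << (7 - k)
        return code

    return encode(PAIRS_A), encode(PAIRS_B)
```

As with LBP, the feature set is obtained by taking the histograms of the A and B code images over all blocks and concatenating them.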

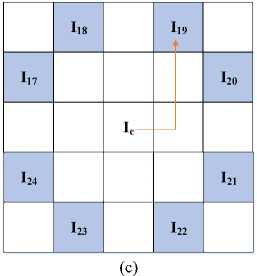

Fig.6. Template showing the proposed descriptor (a) 5 x 5 block of face image (b) Center symmetric DCP pixels (c) Knight pixels


III. The proposed descriptor: Fully Center Symmetric Dual Cross Pattern (FCSDCP)

DCP does not use all of the pixels in a block of size 5 x 5. Therefore, an improved version of DCP that uses all of the pixels is presented in this study; it is called the fully center symmetric dual cross pattern (FCSDCP). The main aim of this descriptor is to extract distinctive and deep features from images. In FCSDCP, the block pixels are divided into two groups, as shown in Fig. 6.

- DCP pixels

- Knight pixels: in DCP there are 8 unused pixels, which we call Knight pixels because the center pixel reaches these unused pixels with knight moves in chess.

| | | | | |
|---|---|---|---|---|
| $I_9$ | | $I_{10}$ | | $I_{11}$ |
| | $I_1$ | $I_2$ | $I_3$ | |
| $I_{16}$ | $I_8$ | $I_c$ | $I_4$ | $I_{12}$ |
| | $I_7$ | $I_6$ | $I_5$ | |
| $I_{15}$ | | $I_{14}$ | | $I_{13}$ |

(b)

In this method, as shown in Fig. 6 (c), the effect of all the pixels is transferred to the feature set by reaching the remaining pixels from the center with knight moves, as in chess.

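$$A^{1}_{i,j} = S(I_1, I_5) \times 2^7 + S(I_2, I_6) \times 2^6 \tag{38}$$

$$A^{2}_{i,j} = S(I_3, I_7) \times 2^5 + S(I_4, I_8) \times 2^4 \tag{39}$$

$$A^{3}_{i,j} = S(I_9, I_{13}) \times 2^3 + S(I_{10}, I_{14}) \times 2^2 \tag{40}$$

$$A^{4}_{i,j} = S(I_{11}, I_{15}) \times 2^1 + S(I_{12}, I_{16}) \times 2^0 \tag{41}$$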
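The center symmetric comparisons on the DCP pixels can be sketched in plain Python as follows. This covers only the DCP-pixel part of the descriptor given in Eqs. (38)-(41); the Knight-pixel part is not sketched because its equations are not available in the text. The pixel positions are assumed to follow the template in Fig. 6 (b), S(x, y) is taken to return 1 when x is greater than or equal to y, and all names are hypothetical.

```python
# Assumed offsets of I1..I8 relative to the center; I9..I16 use twice these offsets.
INNER = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]
POSITION = {k + 1: INNER[k] for k in range(8)}
POSITION.update({k + 9: (2 * INNER[k][0], 2 * INNER[k][1]) for k in range(8)})

# Each pixel is compared with the pixel on the opposite side of the center.
CS_PAIRS = [(1, 5), (2, 6), (3, 7), (4, 8), (9, 13), (10, 14), (11, 15), (12, 16)]


def S(x, y):
    """Signum-style comparison: 1 if x >= y, else 0."""
    return 1 if x >= y else 0


def cs_dcp_code(block):
    """8-bit center symmetric code of the DCP pixels of a 5 x 5 block."""
    def pixel(n):
        di, dj = POSITION[n]
        return block[2 + di][2 + dj]

    code = 0
    for k, (p, q) in enumerate(CS_PAIRS):
        # First pair is weighted by 2^7, last pair by 2^0.
        code += S(pixel(p), pixel(q)) << (7 - k)
    return code
```

Unlike DCP, the center pixel itself does not enter these comparisons; only pairs of pixels that are symmetric about the center are compared.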

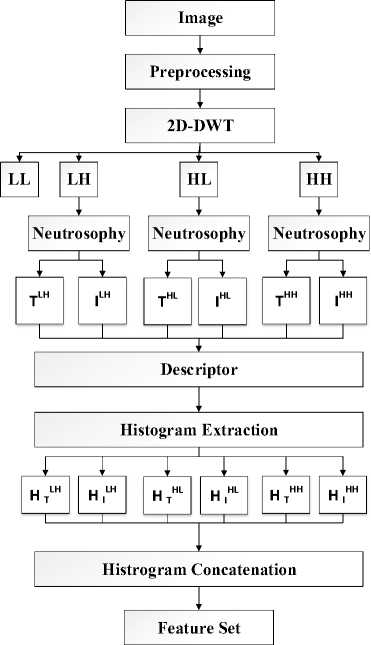

IV. The Proposed Method for Facial Image Recognition

This method consists of pre-processing, feature extraction and classification phases. The face image is segmented in the pre-processing phase. DWT and Neutrosophy are used to obtain transformation coefficients. The LL, LH, HL and HH sub-bands are calculated using DWT. The T, I and F sets of the LH, HL and HH sub-bands are calculated using Neutrosophy. The proposed local descriptor (FCSDCP) is applied to the T and I sets to obtain the feature set. KNN [27], SVM [28], LDA [29] and QDA [30] are used as classifiers in this method because they are widely used in the literature. The block diagram of the proposed method is given in Fig.7.

Fig.7. The general block diagram of the proposed method

The steps of the proposed DWT and Neutrosophy based method are given below.

Step 1: Load the image.

Step 2: Segment the face image. This step is the pre-processing phase.

Step 3: Calculate the LL, LH, HL and HH bands of the segmented facial image using 2D DWT.

$$[LL, LH, HL, HH] = \mathrm{DWT2}(\mathit{segim}, \text{'filter'}) \tag{49}$$

Step 4: Create the T and I sets by applying neutrosophy to the LH, HL and HH bands. The F set is not used because F = 1 - T.

$$[T^{LH}, I^{LH}] = \mathrm{Neutrosophy}(LH) \tag{50}$$

$$[T^{HL}, I^{HL}] = \mathrm{Neutrosophy}(HL) \tag{51}$$

$$[T^{HH}, I^{HH}] = \mathrm{Neutrosophy}(HH) \tag{52}$$

Step 5: Apply the proposed FCSDCP to the $T^{LH}$, $T^{HL}$, $T^{HH}$, $I^{LH}$, $I^{HL}$ and $I^{HH}$ images, respectively, to calculate the texture images using Eqs. (53)-(58).

$$T_1 = \mathrm{fcsdcp}(T^{LH}) \tag{53}$$

$$T_2 = \mathrm{fcsdcp}(T^{HL}) \tag{54}$$

$$T_3 = \mathrm{fcsdcp}(T^{HH}) \tag{55}$$

$$I_1 = \mathrm{fcsdcp}(I^{LH}) \tag{56}$$

$$I_2 = \mathrm{fcsdcp}(I^{HL}) \tag{57}$$

$$I_3 = \mathrm{fcsdcp}(I^{HH}) \tag{58}$$

Step 6: Extract the histograms of the texture images.

Step 7: Concatenate the extracted histograms to obtain the final feature set.

Step 8: Classify the extracted features using LDA, QDA, SVM and KNN.

The properties of the classifiers used in the proposed method are given in Table 1.

Table 1. The properties used for classification

| Method | Attribute | Value |
|---|---|---|
| QDA | Regularization | Diagonal Covariance |
| LDA | Regularization | Diagonal Covariance |
| SVM | Kernel function | Quadratic |
| SVM | Manual kernel scale | 1 |
| SVM | Box constraint level | 1 |
| SVM | Kernel scale mode | Auto |
| SVM | Standardize data | True |
| SVM | PCA | Disable |
| SVM | Multiclass method | One-vs-One |
| KNN | Number of neighbors | 1 |
| KNN | Distance weight | Equal |
| KNN | Distance metric | Euclidean |
| KNN | PCA | Disable |
| KNN | Standardize data | True |
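Steps 6 and 7 of the proposed method, histogram extraction and concatenation, can be sketched in plain Python as follows. The sketch assumes that each texture image holds integer codes in the range 0 to 255, so that each histogram has 256 bins; the actual code range of the FCSDCP texture images is not stated in the text, and the function names are hypothetical.

```python
def histogram(image, bins=256):
    """Count how often each code value occurs in a texture image (list of rows)."""
    counts = [0] * bins
    for row in image:
        for value in row:
            counts[value] += 1
    return counts


def concatenate_histograms(texture_images, bins=256):
    """Build the final feature vector from the histograms of all texture images."""
    features = []
    for image in texture_images:
        features.extend(histogram(image, bins))
    return features
```

Under the 256-bin assumption, the six texture images of Eqs. (53)-(58) would give a feature vector of 6 x 256 = 1536 values per face image.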

V. Dataset


The attributes of these databases used for obtaining experimental results are given in Table 2.

Table 2. The attributes of the facial databases for performance evaluation

| Database | Samples per Class | Classes | Sample Resolution | Total Samples | Image Format |
|---|---|---|---|---|---|
| AT&T | 10 | 30 | 512x512 | 300 | pgm→jpg |
| CIE | 10 | 30 | 512x512 | 300 | jpg |
| Face94 | 10 | 30 | 512x512 | 300 | jpg |
| AR | 10 | 30 | 512x512 | 300 | raw→jpg |

The properties of the PC used for the simulations are listed in Table 3.

VI. Performance Analysis and Discussions

The simulations and the application of the proposed method are programmed using MATLAB 2016a. The obtained results are given in this section, and the attributes of the PC used are listed in Table 3.

Table 3. The properties of the PC

| Property | Value |
|---|---|
| Operating System | Windows 10 |
| Programming Tool | MATLAB 2016a |
| CPU | Intel Core i7-7700 |
| CPU Frequency | 3.60 GHz and 4.20 GHz with Turbo boost |
| RAM | 16 GB |
| Buffer | 8M |
| HDD | 1 TB |

$$Acc(\%) = \frac{\#\text{True Positive} + \#\text{True Negative}}{\#\text{Total number of images}} \times 100$$

where Acc is the accuracy value.
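For a multi-class recognition task, the accuracy can be sketched in plain Python as the percentage of correctly classified images. This assumes that the numerator of the formula above denotes the number of images whose predicted class matches the true class; the function name and the label format are hypothetical.

```python
def accuracy_percent(true_labels, predicted_labels):
    """Percentage of images whose predicted class equals the true class."""
    if len(true_labels) != len(predicted_labels) or not true_labels:
        raise ValueError("label lists must be non-empty and of equal length")
    correct = sum(1 for t, p in zip(true_labels, predicted_labels) if t == p)
    return 100.0 * correct / len(true_labels)
```

For example, with 300 test images, each misclassified image lowers the accuracy by one third of a percentage point.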


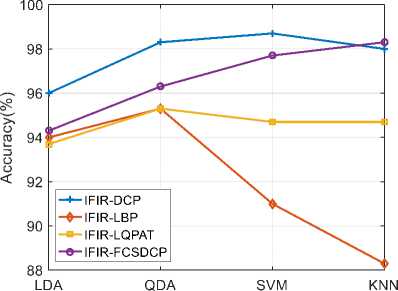

- Performance Analysis on CIE Database: The first experimental results in the study are obtained for the CIE database, which is widely used in the literature. The experimental results are given in Table 4.

Table 4. The experimental results for CIE database

| Classifier | IFIR-DCP | IFIR-LBP | IFIR-LQPAT | IFIR-FCSDCP |
|---|---|---|---|---|
| LDA | 96.0 | 94.0 | 93.7 | 94.3 |
| QDA | 98.3 | 95.3 | 95.3 | 96.3 |
| SVM | 98.7 | 91.0 | 94.7 | 97.7 |
| KNN | 98.0 | 88.3 | 94.7 | 98.3 |

When the experimental results are analyzed, the average accuracy values of the descriptors over the selected classifiers are calculated as DCP 97.8, LBP 92.1, LQPAT 94.6 and FCSDCP 96.7.
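The averages quoted for the CIE database can be reproduced from Table 4 with a few lines of Python. The dictionary below simply restates the table columns in the order LDA, QDA, SVM, KNN; the variable and function names are illustrative.

```python
# Accuracy values (%) from Table 4, in the order LDA, QDA, SVM, KNN.
table4 = {
    "IFIR-DCP": [96.0, 98.3, 98.7, 98.0],
    "IFIR-LBP": [94.0, 95.3, 91.0, 88.3],
    "IFIR-LQPAT": [93.7, 95.3, 94.7, 94.7],
    "IFIR-FCSDCP": [94.3, 96.3, 97.7, 98.3],
}


def column_means(table):
    """Average accuracy of each descriptor over the four classifiers."""
    return {name: sum(values) / len(values) for name, values in table.items()}


means = column_means(table4)
for name, value in means.items():
    print(f"{name}: {value:.2f}")
```

The unrounded means are 97.75, 92.15, 94.60 and 96.65, which agree with the reported one-decimal values.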


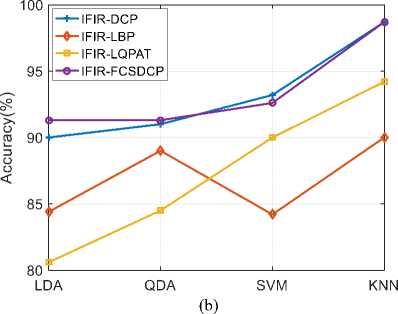

- Performance Analysis on AR Database: The images in the AR database are first converted from raw format to jpg format. The experimental results are presented in Table 5.

Table 5. The experimental results for AR database

| Classifier | IFIR-DCP | IFIR-LBP | IFIR-LQPAT | IFIR-FCSDCP |
|---|---|---|---|---|
| LDA | 90.0 | 84.4 | 80.6 | 91.3 |
| QDA | 91.0 | 89.0 | 84.5 | 91.3 |
| SVM | 93.2 | 84.2 | 90.0 | 92.6 |
| KNN | 98.7 | 90.0 | 94.2 | 98.7 |

The average accuracy values of the descriptors over the selected classifiers are 93.2, 86.9, 87.3 and 93.5 for DCP, LBP, LQPAT and FCSDCP, respectively.


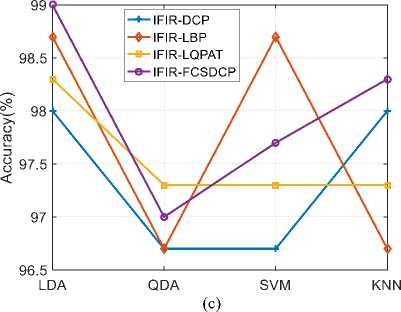

- Performance Analysis on Face94 Database: The experimental results obtained using the Face94 database are presented in Table 6.

Table 6. The experimental results for Face94 database

| Classifier | IFIR-DCP | IFIR-LBP | IFIR-LQPAT | IFIR-FCSDCP |
|---|---|---|---|---|
| LDA | 98.0 | 98.7 | 98.3 | 99.0 |
| QDA | 96.7 | 96.7 | 97.3 | 97.0 |
| SVM | 96.7 | 98.7 | 97.3 | 97.7 |
| KNN | 98.0 | 96.7 | 97.3 | 98.3 |

The average accuracy values obtained with the Face94 database are calculated as DCP 97.3, LBP 97.7, LQPAT 97.6 and FCSDCP 98.0.

|

AT&T Database |

||||

|

Classifications |

IFIR-DCP |

IFIR-LBP |

IFIR-LQPAT IFIR-FCSDCP |

|

|

LDA |

77.3 |

73.0 |

72.7 |

78.0 |

|

QDA |

79.7 |

75.7 |

75.7 |

83.3 |

|

SVM |

83.7 |

71.3 |

80.3 |

84.0 |

|

KNN |

90.3 |

79.0 |

84.7 |

87.7 |

-

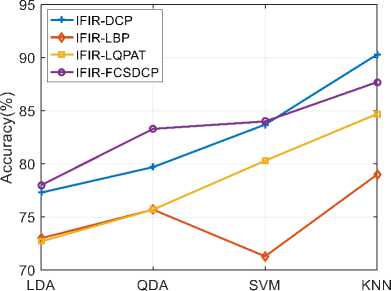

- Performance Analysis on AT&T Database : In order to use the images in AT&T database, images are converted

from pgm to jpg format. The experimental results are given in Table 7.

(a)

(d)

According to the results, a successful facial image recognition method is obtained using a novel transformation space and a novel image descriptor. In addition, four classifiers are used and their performances are compared, and four datasets are used to obtain the numerical results. The discussion of this work is given below.

- In this article, a novel transformation space is proposed. DWT and Neutrosophy are used together to construct this space, which is called IFIR.

- Like other commonly used descriptors in the literature, the performance of the proposed descriptor varies with the number of samples and the image structure of the database.

- The experimental results show that the proposed method can be used successfully in facial image recognition. At the same time, the IFIR-based FCSDCP descriptor is found to have a higher recognition rate than the other descriptors evaluated in the study.

- Four classifiers were used in this work. The obtained accuracies show that the KNN and SVM classifiers were generally more successful than LDA and QDA.

- The best accuracy values of the proposed IFIR-FCSDCP method are 98.3, 98.7, 99.0 and 87.7 for the CIE, AR, Face94 and AT&T datasets, respectively.

- The proposed method was also compared with Kagawade and Angadi's method. Their method uses the AR database, and its best score was 98.21%. The proposed IFIR-DCP and IFIR-FCSDCP achieved 98.7%. These results support the success of the proposed method [37].

- The proposed method is also compared to other descriptors using the AR database. The comparison results are listed in Table 8 [37]. The descriptors used for comparison are Adjacent Evaluation Local Ternary Pattern (AELTP), Adaptive Local Ternary Pattern (ALTP), Binary Gradient Contours (1) (BGC-1), Binary Gradient Contours (2) (BGC-2), Binary Gradient Contours (3) (BGC-3), Improved Local Ternary Pattern (ILTP), Local Concave-and-Convex Micro-Structure Patterns (LCCMSP), Linear Directional Binary Pattern (LDBP), Local Directional Number Pattern (LDN), Local Extreme Complete Trio Pattern (LECTP), eXtended Center-Symmetric Local Binary Pattern (XCS-LBP), Quad Binary Pattern (QBP) and Mixed Neighbourhood Topology Cross Decoded Patterns (MNTCDP).

Table 8. Comparison results using AR dataset.

| Descriptors | Classification Accuracy |
|---|---|
| AELTP [38] | 94.15% |
| ALTP [39] | 93.91% |
| BGC-1 [40] | 96.15% |
| BGC-2 [40] | 96.47% |
| BGC-3 [40] | 95.88% |
| ILTP [41] | 93.32% |
| LCCMSP [42] | 87.21% |
| LDBP [43] | 96.66% |
| LDN [44] | 97.0% |
| LECTP [45] | 86.84% |
| XCS-LBP [46] | 90.84% |
| QBP [47] | 90.32% |
| MNTCDP [37] | 97.37% |
| The proposed IFIR-LBP | 90.0% |
| The proposed IFIR-LQPAT | 94.2% |
| The proposed IFIR-DCP | 98.7% |
| The proposed IFIR-FCSDCP | 98.7% |

According to Table 8, the proposed IFIR-DCP and IFIR-FCSDCP have the best accuracy rates among all descriptors for the AR dataset.

VII. Conclusion

The performance of the proposed descriptor, like that of other descriptors, depends on the image structure, the database and the number of samples. In addition, the high accuracy rates obtained demonstrate the success of the proposed method.

References

- Chang, T., Kuo, C. C.: ‘Texture analysis and classification with tree-structured wavelet transform’, IEEE T. Image Process., 1993, 2, (4), pp. 429-441.

- Gomez-Barrero, M, Rathgeb, C, Li, G, et al.: ‘Multi-biometric template protection based on bloom filters’, Inform. Fusion, 2018, 42, pp. 37-50.

- Zhang, Y., Shang, K., Wang, J., et al.: ‘Patch strategy for deep face recognition’, IET Image Process., 2018, 12, (5), pp. 819 – 825.

- Tome, P., Vera-Rodriguez, R., Fierrez, J.: ‘Facial soft biometric features for forensic face recognition’, Forensic Sci. Int., 2015, 257, pp. 271-284.

- Kwak, K. C., Pedrycz, W.: ‘Face recognition using a fuzzy fisherface classifier’, Pattern Recogn., 2015, 38 (10), pp. 1717-1732.

- Wen, G., Chen, H., Cai, D., et al.: ‘Improving face recognition with domain adaptation’, Neurocomputing, 2018, 287, pp. 45-51.

- Vo, D. M. Lee, S. W.: ‘Robust face recognition via hierarchical collaborative representation’, Inform. Sciences, 2018, 432, pp.332-346.

- Edmunds, T., Caplier, A.: ‘Face spoofing detection based on colour distortions’, IET Biometrics, 2017, 7, (1), pp. 27-38.

- Pillai, A., Soundrapandiyan, R., Satapathy, S., et al.: ‘Local diagonal extrema number pattern: A new feature descriptor for face recognition’, Future Gener. Comp. Sy., 2018, 81, pp. 297-306.

- Yang, B., Chen, S.: ‘A comparative study on local binary pattern (LBP) based face recognition: LBP histogram versus LBP image’, Neurocomputing, 2013, 120, pp. 365-379.

- Chakraborty, S., Singh, S., Chakraborty, P.: ‘Local gradient hexa pattern: A descriptor for face recognition and retrieval’, IEEE T. Circ. Syst. Vid., 2016, 28, (1), pp. 171-180.

- Liu, L., Fieguth, P., Zhao, G., et al.: ‘Extended local binary patterns for face recognition’, Inform. Sciences, 2016, 358, pp. 56-72.

- Guo, Y., Cheng, D. H.: ‘New neutrosophic approach to image segmentation’, Pattern Recogn., 2009, 42, (5), pp. 587-595.

- Zhang, M., Zhang, L., Cheng, H. D.: ‘A neutrosophic approach to image segmentation based on watershed method’, Signal Process., 2010, 90, (5), pp. 1510-1517.

- Sengur, A., & Guo, Y.: Color texture image segmentation based on neutrosophic set and wavelet transformation. Computer Vision and Image Understanding, 2011, 115, (8), pp. 1134-1144.

- Tan, K. S., Lim, W. H., Isa, N. A. M., ‘Novel initialization scheme for Fuzzy C-Means algorithm on color image segmentation’, Appl. Soft Comput., 2013, 13, (4), pp.1832-1852.

- Koundal, D., Gupta, S., Singh, S.: ‘Computer aided thyroid nodule detection system using medical ultrasound images’, Biomed. Signal Proces., 2018, 40, pp. 117-130.

- Thanki, R. Borra, S.: ‘A color image steganography in hybrid FRT–DWT domain’, Journal of Information Security and Applications, 2018, 40, pp. 92-102.

- Sengur, A.: Wavelet transform and adaptive neuro-fuzzy inference system for color texture classification. Expert Systems with Applications, 2008, 34, (3), 2120-2128.

- Sangeetha, N., Anita, X., ‘Entropy based texture watermarking using discrete wavelet transform’, Optik, 2018, 160, pp. 380-388.

- Ojala, T., Pietikäinen, M., Mäenpää, T.: ‘Multiresolution gray-scale and rotation invariant texture classification with local binary patterns’, IEEE Trans. Pattern Anal. Mach. Intell., 2002, 24, (7), pp. 971–987.

- Pan, Z., Li, Z., Fan, H., et al.: ‘Feature based local binary pattern for rotation invariant texture classification’, Expert Syst. Appl., 2017, 88, pp. 238-248.

- Ding, C., Choi, J., Tao, D., et al.: ‘Multi-directional multi-level dual-cross patterns for robust face recognition’, IEEE Trans. Pattern Anal. Mach. Intell., 2016, 38, (3), pp. 518-531.

- Gao, Y., Qi, Y.: ‘Robust visual similarity retrieval in single model face databases’, Pattern Recogn., 2005, 38, (7), pp.1009-1020.

- Chakraborty, S., Singh, S. K., Chakraborty, P.: ‘Local quadruple pattern: A novel descriptor for facial image recognition and retrieval’, Comput. Electr. Eng.,2017, 62, pp. 92-104.

- Chakraborty, S., Singh, S. K., Chakraborty, P.: ‘Centre symmetric quadruple pattern: A novel descriptor for facial image recognition and retrieval’, Pattern Recogn. Lett., 2017, pp. 1-9.

- Abuzneid, M. A., Mahmood, A.: ‘Enhanced Human Face Recognition Using LBPH Descriptor, Multi-KNN, and Back-Propagation Neural Network’, IEEE Access, 2018, 6, pp. 20641-20651.

- Guo, G., Li, S. Z., Chan, K.: ‘Face recognition by support vector machines’, In Automatic Face and Gesture Recognition, 2000. Proceedings. Fourth IEEE International Conference on, 2000, pp. 196-201.

- Chen, L. F., Liao, H. Y. M., Ko, M. T., et al.: ‘A new LDA-based face recognition system which can solve the small sample size problem’, Pattern Recogn, 2000, 33, (10), pp. 1713-1726.

- Lu, J., Plataniotis, K. N., Venetsanopoulos, A. N.: ‘Regularized discriminant analysis for the small sample size problem in face recognition’, Pattern Recogn Lett, 2003, 24, (16), pp. 3079-3087.

- Kabacinski, R., Kowalski, M.: ‘Vein pattern database and benchmark results’, Electron Lett, 2011, 47, (20), pp. 1127 - 1128.

- Martinez A.M., Benavente, R.: ‘The AR Face Database’, CVC Technical Report,24, June 1998.

- Martínez , A.M., Kak , A.C., ‘PCA versus LDA’, IEEE Trans. Pattern Anal. Mach. Intell., 2001, 23, (2), pp. 228–233.

- Libor Spacek's Facial Image Database, “Face94Database”:http://cswww.essex.ac.uk/mv/allfaces/faces94.html (accessed Jan 1, 2018).

- Samaria F., Harter A.: ‘Parameterisation of a stochastic model for human face identification’, In Applications of Computer Vision, Proceedings of the Second IEEE Workshop on, 1994, pp. 138-142.

- Zhu, J., Wu, S., Wang, X., et al.: ‘Multi-image matching for object recognition’. IET Comput. Vis., 2018, 12, (3), pp. 350-356.

- Kas, M., Ruichek, Y., and Messoussi, R., 'Mixed Neighborhood Topology Cross Decoded Patterns for Image-Based Face Recognition', Expert Systems with Applications, 2018, 114, pp. 119-142.

- Song, K., Yan, Y., Zhao, Y., and Liu, C., 'Adjacent Evaluation of Local Binary Pattern for Texture Classification', Journal of Visual Communication and Image Representation, 2015, 33, pp. 323-339.

- Yang, W., Wang, Z., and Zhang, B., 'Face Recognition Using Adaptive Local Ternary Patterns Method', Neurocomputing, 2016, 213, pp. 183-190.

- Fernández, A., Álvarez, M.X., and Bianconi, F., 'Image Classification with Binary Gradient Contours', Optics and Lasers in Engineering, 2011, 49, (9-10), pp. 1177-1184.

- Nanni, L., Brahnam, S., and Lumini, A., 'A Local Approach Based on a Local Binary Patterns Variant Texture Descriptor for Classifying Pain States', Expert Systems with Applications, 2010, 37, (12), pp. 7888-7894.

- Ruichek, Y., 'Local Concave-and-Convex Micro-Structure Patterns for Texture Classification', Pattern Recognition, 2018, 76, pp. 303-322.

- Rajput, S. and Bharti, D.J., 'A Face Recognition Using Linear-Diagonal Binary Graph Pattern Feature Extraction Method', International Journal in Foundations of Computer Science and Technology (IJFCST), 2016, 6, (2), pp. 55-65.

- Rivera, A.R., Castillo, J.R., and Chae, O.O., 'Local Directional Number Pattern for Face Analysis: Face and Expression Recognition', IEEE transactions on image processing, 2013, 22, (5), pp. 1740-1752.

- Vipparthi, S.K. and Nagar, S.K., 'Local Extreme Complete Trio Pattern for Multimedia Image Retrieval System', International Journal of Automation and Computing, 2016, 13, (5), pp. 457-467.

- Silva, C., Bouwmans, T., and Frélicot, C., 'An Extended Center-Symmetric Local Binary Pattern for Background Modeling and Subtraction in Videos', in, International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications, VISAPP 2015, (2015).

- Zeng, H., Chen, J., Cui, X., Cai, C., and Ma, K.-K., 'Quad Binary Pattern and Its Application in Mean-Shift Tracking', Neurocomputing, 2016, 217, pp. 3-10.