Integration of deep learning and wireless sensor networks for accurate fire detection in indoor environment

Authors: Dheyab O.A., Chernikov D.Yu., Selivanov A.S.

Journal: Journal of Siberian Federal University. Engineering & Technologies

Section: Information and communication technologies

Issue: Vol. 17, No. 1, 2024.

Open access

Fire detection systems are essential for protecting people and property, yet they still suffer from limited accuracy and frequent false alarms. This study combines wireless sensor networks with deep learning to improve the accuracy of real-time fire detection and reduce false alarms. Video from Wi-Fi cameras is analyzed with the YOLOv5 deep learning model, which locates and classifies objects quickly and precisely. To support accurate detection, the model is trained on a large collection of fire-related data. When a fire occurs, users receive early warnings via WebRTC, and live footage of the burning location is streamed to them. Together, these technologies can make indoor fire detection more efficient, giving users immediate and accurate alarms, improving the safety of people and property, and reducing fire losses in indoor environments.

Keywords: fire detection, YOLOv5, deep learning, wireless sensor networks

Short URL: https://sciup.org/146282835

IDR: 146282835 | UDC: 654.946-187.4


Citation: Dheyab O. A., Chernikov D. Yu., Selivanov A. S. Integration of deep learning and wireless sensor networks for accurate fire detection in indoor environment. J. Sib. Fed. Univ. Eng. & Technol., 2024, 17(1), 124–135. EDN: WZHDHV

[...] capacity to handle image and video streams in real time is one of its main advantages, which makes it well suited to applications that need quick responses. Delays in detecting fires are a common problem with traditional fire detection systems and can result in serious loss and damage. By combining YOLOv5 with communication technology, the reaction time can be shortened considerably, allowing for quicker firefighting and evacuation. Reducing false alerts is another essential requirement for fire detection systems, because false alerts erode users' confidence in the system. YOLOv5 can also be trained on large datasets, allowing it to learn and adapt to different fire conditions. Using a wide range of training data, covering different fire types, sizes, and environmental circumstances, improves the algorithm's capacity to detect fires effectively across multiple contexts.

Many approaches to constructing a fire detection system have been proposed. To identify fires, [5] employed a variety of color spaces, including RGB, HSI, YUV, YCbCr, and CIE L*a*b*. The paper [6] provides an overview of four commonly used deep learning-based object detection methods, highlighting their merits and shortcomings. In that study's results, a lighter flame was detected as fire with a probability of 82 % to 84 %. The creation of an indoor system for early fire detection and real-time fire localization is discussed in [7]. Its test results show that a candle flame is detected as fire with an accuracy of 65.8 %. The paper [8] describes the development of vision-based early flame detection and notification. That method classifies the flames of wood stoves, candles, and lighters as fire. YOLOv3 was modified in [9] to strengthen feature extraction and reduce computational complexity for accurate fire detection. In [10], a YOLOv4 model was developed to identify fires in real-time scenarios. The study [11] proposes a fire detection method based on YOLOv5 that offers superior accuracy and real-time performance. In its results, a lighter flame is also classified as fire. The study [12] aims to develop a fire detection and early warning system that monitors a property and notifies the homeowner if a fire starts. The main objective of [13] is to address kitchen safety issues and send alerts to users' phones in the event of a gas leak or fire.

This paper proposes a wireless sensor network based on Wi-Fi cameras and deep learning. The YOLOv5 model is used to detect fires. Compared to previous versions, YOLOv5 is smaller, faster, and more accurate. It is easy to use and does not require any programming knowledge. The proposed system is designed to treat flames from heating stoves, candles, and lighters as false alarms rather than real fires. It uses a communications network to relay data from the cameras to the fire detection system. The system not only alerts the user to the presence of a fire but also displays live video of the affected area and shows the user the location of the fire within the building.

I. Wi-Fi Cameras and Data Transmission

II. Web Real-Time Communication

Web Real-Time Communication (WebRTC) is an open-source framework and set of standards that allows people to communicate in real time through web applications. With WebRTC, browsers can exchange audio, video, and arbitrary data directly, without requiring users to install additional software. WebRTC relies on several underlying technologies, including RTP (Real-time Transport Protocol), STUN (Session Traversal Utilities for NAT), and ICE (Interactive Connectivity Establishment). It makes it simple for developers to build online applications that provide audio and video chat, file sharing, and screen sharing. WebRTC offers an application programming interface (API) for controlling real-time communications, which lets developers establish secure and efficient direct communication channels between browsers. Many contemporary browsers, including Microsoft Edge, Mozilla Firefox, and Google Chrome, support WebRTC. It is used in numerous fields, including online gaming, telemedicine, remote learning, online communications, and real-time collaboration. By offering a direct and efficient method of online communication, WebRTC enhances the user experience [15].

III. Deep learning and machine learning

Machine learning is the study of methods and frameworks that let computers learn to make decisions or predictions without being explicitly programmed. It involves training a computer system to recognize patterns and draw conclusions from data [16].

Deep learning is a branch of machine learning that draws inspiration from the structure and operation of the human brain [17]. It uses artificial neural networks, composed of interconnected layers of nodes, to process and learn from massive volumes of data. Because deep learning algorithms learn hierarchical data representations automatically, they can extract complex features and generate precise predictions.

IV. YOLO (You Only Look Once)

YOLO is a well-known object detection method that has had a major impact on computer vision [18]. It was first proposed by Joseph Redmon et al. in 2016. YOLO's main benefit is its real-time processing speed, which makes it appropriate for applications that need quick and precise object detection. YOLO divides the input image into a grid and predicts bounding boxes and class probabilities for every grid cell. YOLO has seen numerous improvements and changes since its original release. Some of the most popular YOLO variants include the following:

1. YOLOv1: The first version introduced the idea of dividing an image into a grid and predicting bounding boxes and class probabilities for each cell. However, it suffered from localization errors and had difficulty detecting small objects.

2. YOLOv2 (YOLO9000): To address the shortcomings of the first version, anchor boxes and a multi-scale approach were introduced. It also offered improved object detection accuracy and a revised network architecture.

3. YOLOv3: This version improved accuracy by predicting at multiple detection scales in the manner of a feature pyramid network (FPN). It also introduced Darknet-53 as a more capable backbone network.

4. YOLOv4: Released in 2020, YOLOv4 improved accuracy and stability in recognizing diverse objects through changes such as the CSPDarknet53 backbone and additional data augmentation during training.

5. YOLOv5: Released in 2020 by Ultralytics as a PyTorch implementation, YOLOv5 emphasizes simplicity and speed. It offers a streamlined design in several model sizes, aiming for comparable precision with reduced inference time.

Every YOLO version has advanced real-time object detection and has found widespread use in a range of applications, such as autonomous vehicles, robots, and surveillance systems [19–26].
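The grid idea behind YOLOv1 can be illustrated with a minimal sketch. It only shows how an object's centre is assigned to a grid cell; it is not the paper's code and not a detector. The function name `grid_cell_for_center` is hypothetical, and the default 7×7 grid follows the original YOLOv1 paper rather than anything stated here about YOLOv5.

```python
def grid_cell_for_center(cx, cy, img_w, img_h, grid_size=7):
    """Return (row, col, x_offset, y_offset) for a box centre given in pixels.

    The cell that contains the centre is the one responsible for predicting
    the object; the offsets locate the centre inside that cell (0.0 to 1.0).
    grid_size=7 is an illustrative default (assumption).
    """
    if not (0 <= cx < img_w and 0 <= cy < img_h):
        raise ValueError("box centre must lie inside the image")
    # Position of the centre measured in grid-cell units.
    gx = cx / img_w * grid_size
    gy = cy / img_h * grid_size
    col, row = int(gx), int(gy)
    return row, col, gx - col, gy - row


if __name__ == "__main__":
    # Centre of a 640x480 frame falls in the middle cell of a 7x7 grid.
    print(grid_cell_for_center(320, 240, 640, 480))
```

Later YOLO versions predict at several grid resolutions at once, but the cell-assignment principle is the same.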

V. Algorithm

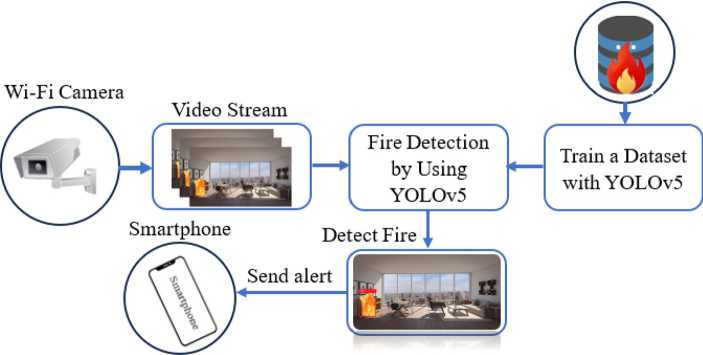

Fig. 1 depicts the proposed algorithm for accurately detecting fire.

The first stage in training a fire detection system is to assemble a dataset of images containing both genuine and false flames, as well as non-fire images [27]. The dataset should be broad, spanning a wide range of scenarios, such as various lighting conditions and camera angles. It can be obtained by gathering images from a variety of sources, including video surveillance cameras, public image repositories, and manual image capture. It is crucial that the dataset is balanced and representative of the real-world situations in which the system will be used. To improve fire detection accuracy and reduce false alarms, the flame of a lighter, candle, or wood stove was treated as a false alert rather than a fire. Examples of the positive and negative images used in training are displayed in Fig. 2.

Fig. 1. Fire detection algorithm

Fig. 2. Sample images used to train the YOLOv5 model; a – negative images; b – positive images

After the dataset is collected, the images must be labeled to identify the regions of interest (ROIs) that contain flames. This can be done with tools such as the labelImg application, which lets the user draw bounding boxes around ROIs and label them accordingly. The annotated images are then saved in a format compatible with the deep learning framework used to train the model. Fig. 3 shows the labeling of the fire's location in positive images.

Fig. 3. Labeling the fire location in positive images
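As an illustration of the annotation format, the sketch below converts a pixel bounding box into one line of a YOLO-style label file: a class index followed by the box centre, width, and height, all normalized by the image size. It assumes the annotations are exported in this YOLO text format, which the text does not state explicitly; the function name `to_yolo_label` and the class index 0 for fire are hypothetical.

```python
def to_yolo_label(class_id, x_min, y_min, x_max, y_max, img_w, img_h):
    """Convert a pixel bounding box to a YOLO-format label line.

    Output: "<class> <cx> <cy> <w> <h>", with the four box values
    normalized to the range 0..1 by the image width and height.
    """
    if not (0 <= x_min < x_max <= img_w and 0 <= y_min < y_max <= img_h):
        raise ValueError("bounding box must be non-empty and inside the image")
    cx = (x_min + x_max) / 2 / img_w   # normalized centre x
    cy = (y_min + y_max) / 2 / img_h   # normalized centre y
    w = (x_max - x_min) / img_w        # normalized width
    h = (y_max - y_min) / img_h        # normalized height
    return f"{class_id} {cx:.6f} {cy:.6f} {w:.6f} {h:.6f}"


if __name__ == "__main__":
    # A flame occupying pixels (100, 50)-(300, 250) in a 640x480 image.
    print(to_yolo_label(0, 100, 50, 300, 250, 640, 480))
```

Each image then gets a text file with one such line per labeled flame region.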

For training, a dataset is usually split into training, validation, and testing sets. The YOLOv5 model is trained on the training set, its hyperparameters are tuned on the validation set, and the final performance of the trained model is assessed on the testing set. During training, the YOLOv5 model learns to recognize and categorize objects by adjusting its parameters according to a loss function. Over many iterations, it modifies its weights to minimize the discrepancy between predicted and actual object locations. Training a YOLOv5 model is an iterative procedure: the model is trained, its performance is evaluated, and improvements are made. This repeated development helps fine-tune the model and achieve higher detection accuracy. Training data is an essential part of this process. A dependable and accurate YOLOv5 model requires a well-organized and varied dataset, correct annotations, and appropriate quality control. Data augmentation and iterative development further improve the model's performance and generalization.
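A minimal sketch of the three-way split follows. The 70/20/10 proportions, the fixed random seed, and the function name `split_dataset` are illustrative assumptions; the paper does not report the ratios it used.

```python
import random


def split_dataset(items, train_pct=70, val_pct=20, seed=0):
    """Shuffle items reproducibly and split into (train, val, test) lists.

    Percentages are integers; whatever remains after the training and
    validation shares becomes the test set. Defaults are assumptions.
    """
    if train_pct <= 0 or val_pct < 0 or train_pct + val_pct >= 100:
        raise ValueError("percentages must leave a non-empty test share")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # seeded for repeatable splits
    n_train = len(shuffled) * train_pct // 100
    n_val = len(shuffled) * val_pct // 100
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]
    return train, val, test


if __name__ == "__main__":
    images = [f"img_{i:03d}.jpg" for i in range(100)]
    train, val, test = split_dataset(images)
    print(len(train), len(val), len(test))
```

Shuffling before splitting keeps each subset a mix of fire, false-flame, and non-fire images, provided the full dataset is itself balanced.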

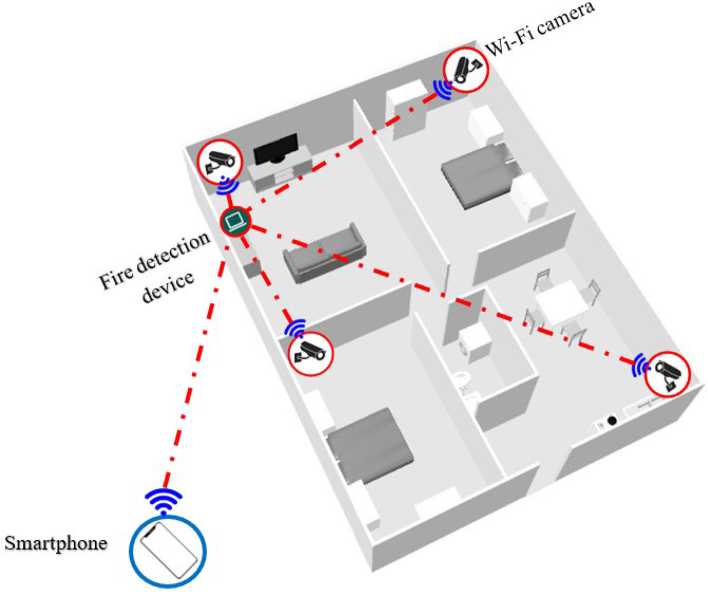

The Wi-Fi camera continuously streams video frames, which are fed into the trained YOLOv5 model. Using Wi-Fi cameras eliminates the need for physical connections, simplifying installation and setup. Wi-Fi cameras can be installed anywhere within the range of a Wi-Fi network, allowing for greater flexibility in camera placement. Proper camera placement is essential for ensuring complete coverage of the area. Fig. 4 depicts a communications network within a home environment, linking surveillance cameras and the homeowner's smartphone to a fire detector.

Fig. 4. Communications network within a home environment, linking surveillance cameras and the homeowner's smartphone to a fire detector

The Wi-Fi camera continuously provides video to the fire detection system, and the video stream is split into individual frames. Each frame is a single image that YOLOv5 processes for fire detection. To improve frame quality before detection, preprocessing methods may be applied, such as scaling the frames to a fixed input size, normalizing pixel values, and, if necessary, adjusting brightness or contrast. The preprocessed frames are then run through the trained YOLOv5 model, which detects and localizes fire-related objects with bounding boxes. For each recognized object, YOLOv5 returns a bounding box, a class label, and a confidence score. A confidence threshold can be used to filter out false positives: only detections with confidence scores greater than the threshold are considered valid fire incidents. These steps are carried out in real time on the incoming frames, which enables rapid identification of and reaction to fire occurrences.

When a fire occurs, the fire detection software is critical for providing early warning and triggering appropriate safety measures. One of its most useful features is the ability to contact the user and provide a video of the fire's location. To achieve real-time communication between the fire detection software and the user, the system uses WebRTC. Thanks to WebRTC, the alert application on the user's device can receive video directly from the surveillance cameras positioned at the affected site.
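The confidence-threshold step in this section can be sketched as follows. This is a simplified stand-in for the detector's output rather than the YOLOv5 API: the `Detection` class, the function name, and the threshold value of 0.5 are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    """One detector output: class label, confidence score, pixel box."""
    label: str
    confidence: float
    box: tuple  # (x_min, y_min, x_max, y_max)


def valid_fire_detections(detections, threshold=0.5):
    """Keep only detections whose confidence is strictly above the threshold.

    threshold=0.5 is an illustrative value; the paper does not state
    the threshold it used.
    """
    return [d for d in detections if d.confidence > threshold]


if __name__ == "__main__":
    frame_detections = [
        Detection("fire", 0.90, (120, 80, 260, 240)),
        Detection("fire", 0.30, (400, 300, 430, 330)),
    ]
    for d in valid_fire_detections(frame_detections):
        print(d.label, d.confidence, d.box)
```

Raising the threshold reduces false alarms at the cost of possibly missing weak detections, so the value is usually tuned on the validation set.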

VI. Experimental results

For this project, we used an image labeling program, the Google Colaboratory platform, Android Studio Flamingo 2022.2.1, and the YOLOv5 application on a PC with an Intel(R) Core(TM) i7-8550U CPU @ 1.80 GHz and the 64-bit Windows 11 Pro operating system. The image labeling program was used to mark the location of fires in images for training purposes. The Google Colaboratory platform was used to train and test the model on the fire images. The WebRTC-based fire alarm application was created in Android Studio. The YOLOv5 application was used to test the system's ability to identify flames in images, in videos, and in real time via a camera. The fire detection program was also extended to send alarms over the communications network in the case of a fire. To ensure a reliable evaluation of the system, we ran the proposed method three times. To validate the effectiveness of the trained model, we tested it with input data from fire images, fire videos, and a real-time camera. When the model detects a fire, it displays a bounding box highlighted in red along with a confidence score.

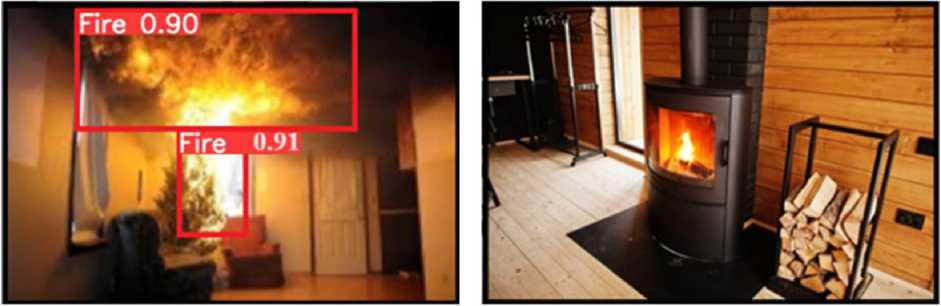

In the first test, we checked the program's ability to detect fire in images using two pictures. One picture contained real fire, while the other did not. Fig. 5 shows the prediction results for detecting real fire in the images. The program correctly detected the real fire in image (a) with confidence scores of 0.90 and 0.91, and did not classify the wood stove in image (b) as a fire.

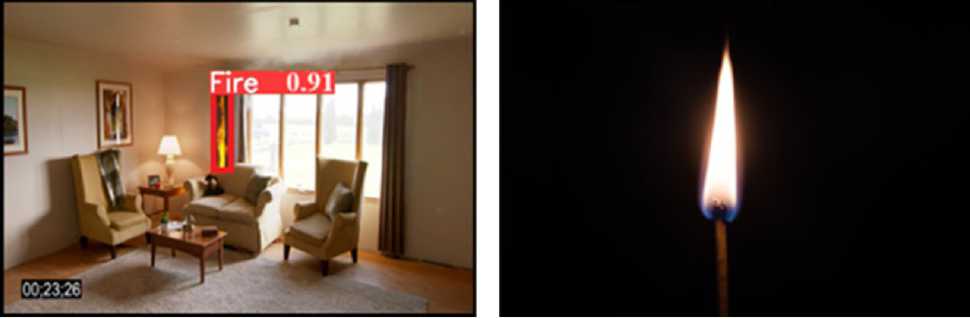

In the second test, we used the program to detect fire in videos that contained both real and fake fire. Fig. 6 shows the detection results for real fire in the videos. The program correctly predicted the presence of real fire in video (a) with a confidence level of 0.90 but did not consider the matchstick as a fire in video (b).

In the third test, we used the program to detect fire in real-time using a camera. Fig. 7 shows the prediction results for a live camera feed. We tested the program's efficiency using fake fire, and the result correctly showed that no fire was detected.

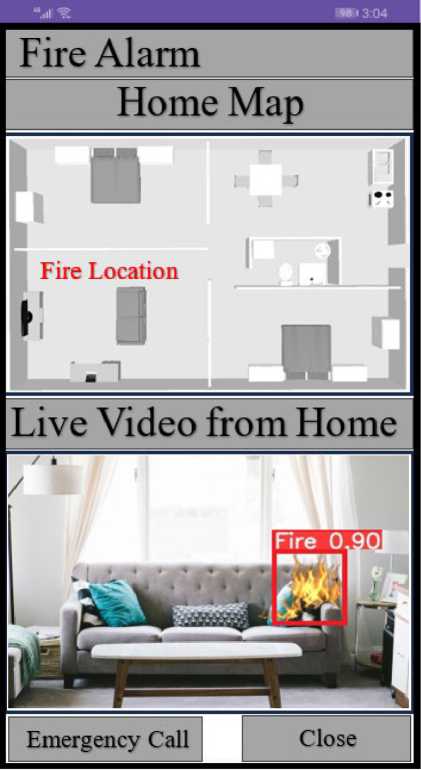

Fig. 5. The prediction results for detecting real fire in the images

Fig. 6. The prediction results for detecting real fire in the videos

Fig. 7. The prediction results for a live camera feed

An application was developed to enable communication between the fire detection software and the smartphone user over WebRTC. When a fire is detected, the user is notified through the user interface of the fire detection software, and the user's smartphone displays a live video of the fire incident and its location within the building. This early alarm lets the user quickly assess the situation and take appropriate action. WebRTC is well suited to this use case because it enables secure and efficient real-time communication between the user and the software, with high-quality, low-latency video transmission. Fire detection software that uses WebRTC can therefore be an effective tool for handling fire situations, since it gives users precise, up-to-date information that can help control the fire and limit damage. The fire alert program displays a video of the fire's location, as shown in Fig. 8.

Fig. 8. Fire alert program displaying a video of the fire area
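The paper does not describe the format of the alert sent to the user's device. As a purely hypothetical sketch, an alert carrying the camera, the location within the building, and the detection confidence could be serialized as JSON and sent alongside the video, for example over a WebRTC data channel. All field names and the function name `build_fire_alert` are assumptions.

```python
import json
from datetime import datetime, timezone


def build_fire_alert(camera_id, location, confidence, detected_at=None):
    """Serialize a fire alert as a JSON string (hypothetical message format)."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if detected_at is None:
        detected_at = datetime.now(timezone.utc)
    alert = {
        "type": "fire_alert",
        "camera_id": camera_id,          # which Wi-Fi camera saw the fire
        "location": location,            # where in the building it is installed
        "confidence": round(confidence, 2),
        "detected_at": detected_at.isoformat(),
    }
    return json.dumps(alert, sort_keys=True)


if __name__ == "__main__":
    print(build_fire_alert("cam-1", "kitchen", 0.91))
```

Keeping the alert small and separate from the video stream would let the application show the notification and location immediately, even before the live video has started playing.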

Conclusion

Deep learning and wireless sensor networks can help achieve accurate indoor fire detection. The proposed system is based on YOLOv5, and the experiments show that the detection model identifies real fires while ignoring benign flames such as those of wood stoves and matches, and that it works well for real-time fire detection. Edge cameras running the fire detection model can be used to detect fires in buildings, factories, and houses. This technology is also particularly useful in sensitive places such as petrol stations, where it can aid in the rapid identification and extinguishing of fires. The approach improves the capacity for early detection and swift response, helping to save lives and maintain a safe indoor environment.

References

- Barmpoutis P., Papaioannou P., Dimitropoulos K., Grammalidis N. A review on early forest fire detection systems using optical remote sensing, Sensors, 2020, 20, 1-26, doi:10.3390/s20226442.

- Redmon J., Divvala S., Girshick R. et al. You only look once: Unified, real-time object detection, IEEE Conference on Computer Vision and Pattern Recognition, 2016, 779-788.

- Yu N., Chen Y. Video flame detection method based on TwoStream convolutional neural network, Proceedings of the 2019 IEEE 8th Joint International Information Technology and Artificial Intelligence Conference (ITAIC), Chongqing, China, 24-26 May 2019, 482-486.

- Jain P., Coogan S. C., Subramanian S. G., Crowley M., Taylor S., Flannigan M. D. A review of machine learning applications in wildfire science and management, Environ. Rev., 2020, 28, 478-505, doi:10.1139/er-2020-0019.

- Abdusalomov A. B., Islam B. M. S., Nasimov R., Mukhiddinov M., & Whangbo T. K. An improved forest fire detection method based on the detectron2 model and a deep learning approach, Sensors, 2023, 23(3), 1512.

- Luo W. Research on fire detection based on Yolov5, 3rd International Conference on Big Data, Artificial Intelligence and Internet of Things Engineering (ICBAIE) [Preprint], 2022, doi: 10.1109/icbaie56435.2022.9985857.

- Li Y., Shang J., Yan M., Ding B., & Zhong J. Real-time early indoor fire detection and localization on embedded platforms with fully convolutional one-stage object detection, Sustainability, 2023, 15(3), 1794, doi: 10.3390/su15031794

- Abdusalomov A. B., Mukhiddinov M., Kutlimuratov A., & Whangbo T. K. Improved real-time fire warning system based on advanced technologies for visually impaired people, Sensors, 2022, 22(19), 7305, doi: 10.3390/s22197305

- Sun Y., & Feng J. Fire and smoke precise detection method based on the attention mechanism and anchor-free mechanism, Complex & Intelligent Systems, 2023, 1-14.

- Zhao J., Wei H., Zhao X., Ta N., & Xiao M. Application of improved YOLO v4 model for real time video fire detection, Basic & clinical pharmacology & toxicology, 2021, 128. 47.

- Bharathi V., Vishwaa M., Elangkavi K. and Krishnan V. H. A custom yolov5-based real-time fire detection system: a deep learning approach, Journal of Data Acquisition and Processing, 2023, 38(2), 441, doi: 10.5281/zenodo.7766359.

- Hery, Hery et al. The design of microcontroller based early warning fire detection system for home monitoring, IJNMT (International Journal of New Media Technology), 2022, 9(1), 6-12.

- Logeshwaran M. and J. Joselin Jeya Sheela ME. Designing an IoT based Kitchen Monitoring and Automation System for Gas and Fire Detection, 6th International Conference on Computing Methodologies and Communication (ICCMC). IEEE, 2022.

- Wu K. and Lagesse B. Do you see what I see? Detecting hidden streaming cameras through similarity of simultaneous observation, IEEE International Conference on Pervasive Computing and Communications (PerCom) [Preprint]. doi:10.1109/percom.2019.8767411.

- Bakary D., Ouamri A., and Keche M. A Hybrid Approach for WebRTC Video Streaming on Resource-Constrained Devices, Electronics, 2023, 12(18), 3775.

- Russell S. J. et al. Artificial Intelligence: A modern approach. Harlow, United Kingdom: Pearson, 2022.

- Janiesch C., Zschech P., and Heinrich K. Machine learning and deep learning, Electronic Markets, 2021, 31(3), 685-695.

- Sultana F., Sufian A., & Dutta P. A review of object detection models based on convolutional neural network, Intelligent Computing: Image Processing Based Applications, 2020, 1-16.

- Zhao Z. Q., Zheng P., Xu S. T., & Wu X. Object detection with deep learning: A review. IEEE transactions on neural networks and learning systems, 2019, 30(11), 3212-3232.

- Zou X. A Review of Object Detection Techniques, International Conference on Smart Grid and Electrical Automation (ICSGEA), 2019, August, 251-254.

- Laroca R., Severo E., Zanlorensi L. A., Oliveira L. S., Gonçalves G. R., Schwartz W. R., & Menotti D. A robust real-time automatic license plate recognition based on the YOLO detector, International Joint Conference on Neural Networks (IJCNN), 2018, July, 1-10.

- Tian Y., Yang G., Wang Z., Wang H., Li E., & Liang Z. Apple detection during different growth stages in orchards using the improved YOLO-V3 model, Computers and electronics in agriculture, 2019, 157, 417-426.

- Jamtsho Y., Riyamongkol P., & Waranusast R. Real-time license plate detection for non-helmeted motorcyclist using YOLO, ICT Express, 2021, 7(1), 104-109.

- Han J., Liao Y., Zhang J., Wang S., & Li S. Target fusion detection of LiDAR and camera based on the improved YOLO algorithm, Mathematics, 2018, 6(10), 213.

- Lin J. P., & Sun M. T. A YOLO-based traffic counting system, Conference on Technologies and Applications of Artificial Intelligence (TAAI), 2018, November, 82-85.

- Lu J., Ma C., Li L., Xing X., Zhang Y., Wang Z., & Xu J. A vehicle detection method for aerial image based on YOLO, Journal of Computer and Communications, 2018, 6(11), 98-107.

- Toulouse T., Rossi L., Campana A., Celik T., Akhloufi M. Computer vision for wildfire research: An evolving image dataset for processing and analysis, Fire Safety Journal, 2017, 92, 188-194.