Introducing TCD-D for Creativity Assessment: A Mobile App for Educational Contexts

Authors: Aurelia De Lorenzo, Alessandro Nasso, Viviana Bono, Emanuela Rabaglietti

Journal: International Journal of Modern Education and Computer Science @ijmecs

Article in issue: No. 1, Vol. 15, 2023.

Open access

This paper presents the Test of Creativity and Divergent Thinking-Digital (TCD-D) mobile application, a digital version of the Williams Test for assessing creativity through graphic production. The Test of Creativity and Divergent Thinking-Digital is a new, simple and intuitive mobile application developed by a team of psychologists and computer scientists to remain faithful to the paper version of the test but to provide a faster assessment of divergent thinking. In fact, creativity assessment tests are currently still administered in paper form, which requires a lot of time and human resources for scoring, especially when administered to large samples, as is the case in educational studies. Several digital prototypes of creativity assessment instruments have been developed over the past decade, some of which are derived from paper instruments and some of which are completely new. Of all these attempts, no one has yet worked on a digital version of the Williams Test of Creativity and Divergent Thinking, although this instrument is widely used in Europe and Asia. Moreover, of all the prototypes of digital tools in the literature, none has been developed as a mobile app for tablets, a tool very close to the younger generation. The app was developed to provide a quicker and more contemporary assessment that accommodates the technological interests of digital natives through the use of touch in drawing and adds some additional indices to those of the paper tool for assessing fluency, flexibility, originality and creative elaboration. The application for Android tablets speeds up the assessment of divergent thinking and supports the monitoring of creative potential in educational and learning contexts. The paper discusses how the application works, the preferences and opinions of the students who tested it, and the future developments planned for the implementation of the application.

Creativity, Divergent Thinking, Digital Natives, Mobile App, Education

Short URL: https://sciup.org/15019101

IDR: 15019101 | DOI: 10.5815/ijmecs.2023.01.02

Text of the scientific article: Introducing TCD-D for Creativity Assessment: A Mobile App for Educational Contexts

Creativity, defined as the ability to generate original, flexible, and effective ideas, insights, and solutions, has always been considered an essential human capacity to cope with change and demands of our (ever more) complex society [1]. Increasing the creative potential of individuals provides relevant implications for economic and social development [2]. In order to prepare young people for the new, increasingly involved and often technology-related problems they will encounter in the future, it is important that creativity is promoted as a transversal competence to be valued especially in the school context [3]. This is what has been happening since 1993 when the World Health Organization (WHO) included creativity within the Life Skills for the development and adaptation of the individual to the environment [4]. However, the promotion of creativity as a transversal competence is hindered by the difficulty of its assessment. Indeed, the currently existing tools for assessing creative potential apply with many difficulties to the educational context, either because they are too complex and difficult to assess, or simply because they are not freely available [2]. Moreover, the ability to use digital technologies is also seen as a fundamental skill in the 21st century, and this is also true with respect to creativity. Therefore, not surprisingly, the link between technology and creativity plays a key role in contemporary educational settings [5]. The continuing advancement of the digitization of our world and the growing demand for digital talent require changes in educational systems [6]. Digital tools and information and communication technologies (ICTs) in general, now within everyone's reach, offer new opportunities to manifest individual creativity as well as to assess and enhance it [7, 8]. 
Schools are required to support students in learning experiences that prepare them for the job market, since more than 60 percent of all jobs require a high level of critical thinking, creativity and interpersonal skills. In this context, there is a need for innovation for the future of education and digital approaches that play a significant role in supporting creative thinking [9]. The implementation of new technological tools in educational settings, particularly for mobile devices, allows for the creation of a new learning environment that better supports creativity in school practice and provides concrete means to facilitate its assessment in the classroom [10]. This consideration is all the more valid when one considers that the use of new technologies is quite natural for the students of the new millennium, the so-called digital natives [11].

Despite these premises, almost all instruments used today to assess creative potential in schools and other institutions are still paper-based [12]. While learning appears to be increasingly digitized, assessment of creative potential still remains rooted in tools developed in the middle of the last century: paper tests, especially in the areas of drawing and graphic production [13]. The failure to assess creative potential using digital tools, especially in education, is not due to the lack of new technologies or the lack of attempts in the literature. With the advent of digitization, various technologies, including mobile ones, have enabled the transfer of paper exams to digital media. Such transfers have become increasingly important over the past decade, which has expanded opportunities for large-scale testing by simplifying data collection and monitoring creativity on a large scale, such as may be the case at the school or at the corporate level [13]. However, even with the improvements offered by new technologies for creativity, the old paper-and-pencil tests remain the most widely used forms because they are universally applicable, easy to understand, and readily available [14,15]. Still, the prevalence of paper-based tests that require in-person assessment, time-consuming coding, and high cost brings us back to a common consideration in the literature: in the absence of digital assessment tools that are easier to use, inexpensive, and available for daily use in educational settings, it is difficult to think of systematically promoting creativity in educational systems [16]. Indeed, it was not until the late 1990s that the first digital versions of creativity assessment instruments were developed, and few studies have addressed the potential of technological assessment of divergent thinking [14;17,18]. 
Most studies have used computerized versions of existing paper instruments for creativity assessment, as in the case of Kwon's Torrance Test of Creative Thinking (TTCT) [19], the e-WKCT, the instrument developed by Lau [17] based on Wallach-Kogan's creativity tests [20] or the computerized version of Torrance's TTCT [19] developed by Zabramski [15;21]. However, other authors have focused on developing new digital tools to assess creativity without relying on existing paper versions, as in the case of Palaniappan [18], who developed the Creativity Assessment System (CAS), or Putra and colleagues [22], who proposed a tool to automatically assess four factors of divergent thinking. The use of new approaches and models, as in the case of fuzzy theory [23], Scratch [19] and the use of neural networks [24], makes this branch of digital development of tools for measuring divergent thinking very interesting. However, all of the previously mentioned tools were designed for personal computers and/or were accessible as dedicated web apps, without giving due consideration to the mobile app development that has happened in the last decade. Indeed, tools that are offered through mobile device apps represent one of the most useful and flexible digital technologies for application in instructional settings [25]. At present, most of the tools in digital version follow the model of the Torrance test [19], while there are no digitized versions of the test proposed by Williams [23], which is widely used, especially in educational settings, in countries such as Italy [26] and China [27]. Moreover, to the best of our knowledge, an assessment tool that can be used on an Android-based mobile device has not yet been created. In this respect, it is worth noting that Android is the most used mobile operating system worldwide, also due to the fact that it is open source.

For these reasons we implemented the TCD-D, or the Test of Creativity and Divergent Thinking, in digital form. The TCD-D comes from the paper version of the Williams Test, known as TCD [28] in Italy and as CAP [23] in the rest of the world. The overall aim of this study is to develop a prototype mobile app, called the TCD-D, which replicates and enhances the paper version of the Williams Test for measuring divergent thinking. In detail, we:

- Develop a prototype mobile app that automates the assessment of three indices of divergent thinking: Fluency, Originality and Elaboration.

- Assess whether the three indices of divergent thinking measured by the TCD-D Mobile App actually measure fluency, originality and elaboration (construct validity).

- Evaluate the sustainability of the TCD-D Mobile App prototype with a sample of university students.

2. Literature Review

2.1. Digital natives: The new generation of students between digitalization and creativity

2.2. Divergent Thinking: Theory and tools for education

2.3. Digital Assessment for creativity

Assessment based on new technologies is one of the most rapidly developing areas of research in educational practice [54]. This growing attention is due to the advantages of digital assessment, such as online test administration, automatic scoring, increased accuracy, objectivity, reliability, and the possibility of immediate feedback [16]. Computer-based techniques allow creativity data to be collected and analyzed using a single system. In addition, digital assessment can reduce the time and manpower required to calculate results and provide feedback by decreasing the margin of error. Finally, the flexibility of digitized exams allows examinees to participate in an exam anytime, anywhere, without the need for the examiner to be physically present [54]. It is important to remember that examinees in the school system are students who belong to the generation of digital natives and who use digital tools for leisure as well as for learning and working [33]. Despite all the advantages that new technologies bring to the assessment of creativity, there are still some critical issues, such as the need to ensure hardware stability and software compatibility, considering the rapid innovations that change the tools used [54]. In the specific case of measuring divergent thinking, test administration and paper scoring are among the main problems. Open-ended tasks generate ambiguities that are difficult to deal with in traditional paper-and-pencil test administration, where each protocol must be coded manually. Due to these and other problems already listed, the process of data analysis is time consuming and cannot be effectively implemented in daily school practice, especially with the intention of systemic evaluation [16]. In 1998, Kwon developed a computerized version of the Torrance Test of Creative Thinking [19]. 
While the instrument was attractive because it allowed for rapid computation of data, it did not work well because the use of the mouse was problematic and negatively affected drawing performance. Lau [17] developed a computer-based assessment instrument called e-WKCT in 2010 based on Wallach-Kogan's creativity tests [20]. In this case, the paper and digital versions were comparable, but the computerized version had the advantage of being easier to administer and score. The authors also used an automated scoring system and conducted a large-scale study of 2476 elementary and secondary students. The instrument allowed for immediate feedback upon completion of the test and online comparison of scores with Hong Kong norms. Palaniappan [18] developed a web-based intelligent creativity assessment system (CAS) in which verbal responses were automatically scored based on the manual database of the TTCT. In cases where responses could not be identified or did not fit into a category due to their novelty, the system sent them to a website where the researcher had to categorize them manually. Zabramski [15;21] also developed a computerized version of Torrance's TTCT [19]. The two versions, paper and digital, were found to be comparable. In addition, the graphical user interface (GUI) was examined and three different input methods (mouse, pen, and touch) were compared. However, in the last decade, new technologies related to the mobile world, gamification, and virtual reality techniques have emerged, further diversifying the development of new digitized forms of creativity assessment tools. In the study by Chuang and colleagues [54], a digitized and gamified version of Williams' CAP [23] was developed using fuzzy theory. However, this is a computerized version that does not replicate the paper test, which consists only of graphical productions, but creates a game interface that engages users with challenges, assessments, and entertainment. 
This digital version does not measure originality and title attribution, an additional index that is almost always considered in the case of graphic productions. Instead, Pásztor and colleagues [16] developed an online divergent thinking measurement tool comprising nine tasks based on Torrance's [19] and Wallach and Kogan's [20] open-ended divergent thinking item types. The tasks were computer-based, specifically three alternative-use tasks, three list-completion tasks, and three graphing tasks. Kovalkov and colleagues [55], inspired by the Torrance test [19], measure divergent thinking (specifically, originality, fluency, and flexibility) by developing an instrument that uses Scratch, a visual programming environment designed for open-ended and creative learning. The use of Scratch is particularly attractive for automatic assessment of results and also allows the instrument to be more applicable to educational settings and students. In their study, Putra and colleagues [22] propose an automated assessment tool that is not based on a paper test but is able to objectively measure the four factors of divergent thinking without lengthy programming. The software development process follows the waterfall model. This automated assessment tool, which encodes divergent thinking by assessing digital storytelling images, can be a practical solution to support students' creative thinking skills in computer programming. Another very recent study by Cropley and Marrone [24] has instead used neural networks to create a digital tool capable of automatically evaluating the creative figure of Torrance's TTCT. This approach has achieved excellent results in terms of accuracy and speed through the application of machine learning and has shown that it is possible to remove the barriers that currently make graphical production testing impractical. 
These are just a few of the studies that have investigated the development of digital tools to support creativity assessment in recent years. In addition to focusing on divergent thinking, some have also looked at digitizing tools for assessing problem solving [56], and still others have looked at the feasibility of studies using such digital tools to assess creativity [57]. Thus, this is a rapidly evolving literature that produces interesting technological innovations for the application of creativity.

3. Methodology

The term "digital natives" refers to the generation that grew up with personal computers, video games, digital music players, video cameras, smartphones, and other modern technological devices [29]. According to Prensky [11], digital natives are really children of a new culture created by the aggressive intrusion of digital technology into the lives of young people born since 1980. This is a generation that has grown up in a technology-intensive environment, whose members move quickly, need instant feedback, are able to multitask and work in parallel on different tasks, and find the use of digital technologies fun and intuitive [30]. Much has also been said about how the mindset of digital natives differs from that of previous generations. Indeed, digital natives think differently because they grew up in a different cultural context. Their thought processes have evolved differently, overstimulated by contexts rich in rapid interpersonal and communicative exchanges, most of which are mediated by new technologies [31]. Learning also takes place differently [32]. Already at the beginning of the new millennium, Prensky [11] expressed concerns about a school system that is not keeping up with the generation it is educating, and suggested thinking about forms of learning that emerge through games. Although criticized, game-based learning seems to be the preferred form of learning among young people, who especially appreciate the possibility of learning through gamification and the use of mobile devices available to everyone from childhood [33]. As a result of these reflections, a new educational approach has emerged in the literature that involves the intensive use of information and communication technologies (ICT) to improve students' skills and new methods of active learning, such as Serious Games and Blended Learning (face-to-face learning and computer-based learning) [34]. 
According to the NACCCE framework, creativity and new forms of digital learning have a reciprocal relationship: creative processes enhance learning with new technologies, and conversely, digital learning enhances creative thinking [34]. Therefore creativity, the foundation of learning and knowledge, is becoming increasingly digital and technological and its educational value is ever more present in the daily experiences of the new generations. In recent years, more research has been done to examine the intersection of these two trends that combine creativity and technology in education [7;35,36].

Creativity is one of the most talked about 21st century skills in education, and one of the most frequently stated goals of modern education systems is precisely the development and nurturing of creativity in students of all ages [16]. There are several approaches to the study of creativity in the literature that focus on different factors, such as studies that consider process, people, product, and environmental influences [1]. Particularly important in educational contexts is the study of the creative process, which is the sequence of thoughts and actions that lead to new adaptive productions [37] and that manifest in different ways, not all of which can be assessed. To capture this process, the literature refers to divergent thinking, i.e., the ability to develop multiple solutions in response to a given stimulus or problem [2], and this is thought to be one of the thought processes underlying creative performance [1]. Although divergent thinking is only one component of the creative process, it is considered a predictive indicator of creative potential from an educational perspective [38,39]. Divergent thinking is a way to train students to produce knowledge, seek ideas and solutions to a problem. In addition, the creative process has been shown to promote critical thinking, self-motivation, and student mastery of skills and concepts [7]. Divergent thinking was originally derived from Guilford's Structure of Intellect model [40] and stands as a process that produces numerous answers, in contrast to convergent thinking, where only one or a few correct solutions are possible, as in traditional intelligence tests [16].

Divergent thinking is the area of creativity for which the greatest number of assessment instruments have been developed [2]. Some of the earliest instruments developed for measurement in the middle of the last century are still among the most widely used, such as Guilford's Structure of the Intellect (SOI) Divergent-Production Tests [40], Torrance's Tests of Creative Thinking (TTCT) [19], and Wallach and Kogan's [20] and Getzels and Jackson's [41] DT tests. Some assessment tools for divergent thinking have also become popular because of their cross-cultural invariance, such as tests of graphic production. These can be more easily adapted and used anywhere in the world without the risk of cultural bias due to the almost complete absence of a verbal component (except for instructions) [42,43]. Among the tests for divergent thinking, the Torrance test is the one that is currently still most widely used, discussed, and adapted in the literature [44, 45, 46, 47]. The Torrance test is based on Guilford's theoretical formulation and includes four main factors for measuring divergent thinking: fluency, flexibility, originality, and elaboration. In general, these four factors are common to almost all tests that assess divergent thinking by measuring it in graphical or verbal form [38]. The same four indices are used, for example, in the design protocols of Williams' Creativity Assessment Packet [2]. Like Guilford [40] and Torrance [19], Williams [23] developed a test to assess divergent thinking and, in particular, cognitive factors related to creative behaviors. This instrument was developed specifically for the educational context with the goal of making teachers more aware of the development of their students' creative potential. Similar to the Torrance test [19], the CAP consists of a verbal section, a questionnaire, and a graphic section, i.e., drawings elicited by completing a graphic stimulus [23]. 
In contrast to the Torrance test [19], which is mainly used in the USA, the CAP enjoys great popularity in the East, especially in China [27; 48, 49] and Taiwan [48; 50], and in some European countries such as Italy [51, 52, 53, 26] where it is known as the Test of Creativity and Divergent Thinking (TCD) [28].

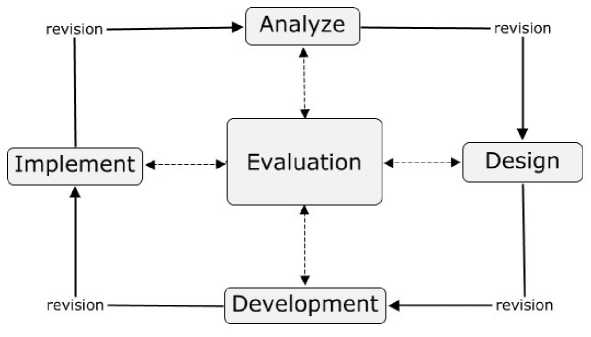

This study uses the ADDIE design model, which is part of Instructional Systems Design (ISD). ADDIE is a model consisting of the phases of Analysis, Design, Development, Implementation and Evaluation [58]. However, this sequence does not have to be followed according to a rigid linear sequence of steps, that is, the phases can be interleaved. Educators and those involved in design and training find this approach very useful as it facilitates the implementation of effective training tools by clearly defining the phases. As an ISD model, ADDIE has been widely accepted and used [58]. We tackled the five development phases in the following way (Figure 1):

• Analysis: in order to define the requirements for the digital implementation of the TCD, we started by observing a certain number of tests on paper, to outline which features to implement and also to understand which additional features could be added to the digital version. It was also important to find out what could be appealing for young children. In particular, this led to the choice of using (Android-based) tablets as digital supports.

• Design: keeping the target users in mind, we decided to limit the number of buttons. Each visual component is simple and colorful, with soft shapes. Each button has an image associated with it, evocative of its function (for instance, a pencil for writing). There are also easy-to-read tutorials for each TCD test, explaining the usage of the buttons. A further goal of the application is to calculate and present the results in a readable and easily analyzable format for the examiners, and this was also taken into account in the Design phase.

• Development: the goal was to make the digital version not only as close as possible to the paper one, but to make it even better. The trickiest part was to position and make the various components interact in the correct way. Another important issue was the storage of the results, in order to make them accessible and readable by the examiners.

• Implementation: the application was implemented as a desktop tablet application, in order to test it first on a limited group of users. We plan to make it a web app in the future.

• Evaluation: we started to design some usability tests, which consider usability from the point of view of both the students and the examiners.

4. Design and Implementation

Fig. 1. ADDIE Model

The ADDIE model is useful when working on a project with a multidisciplinary team, i.e., where the members have different expertise, therefore they are not always experts in all the development phases of the prototype, as it is in this case. In particular, the flexibility of this model allows team members to go back and improve a development phase at any time after comparing notes.

In a nutshell, this paper is about the development of a prototype TCD-D app for Android tablets that facilitates the assessment of divergent thinking according to Williams' model, starting from the paper version of the Test of Creativity and Divergent Thinking (also known as the Creativity Assessment Packet). The app allows divergent thinking to be assessed digitally, facilitating remote data collection and automating the assessment of three of the five indices. After development, the prototype of the app was evaluated with respect to usability and effectiveness. The study obtained ethics approval from the University Bioethics Committee, Prot. n. 157942.

The Test of Creativity and Divergent Thinking - Digital (TCD-D) is a mobile application that is easy to install, does not require any manual settings from the users, and allows them to save test results anonymously and in a place safe from unauthorized access. The development of the application followed the design model ADDIE [58], part of the Instructional Systems Design (ISD), which is a series of practices for the development of teaching material in paper or digital format.

Exactly as in its paper version, the TCD-D assesses divergent thinking through two protocols (A and B), each of which involves the completion of 12 frames. Each frame presents an incomplete graphic stimulus to be incorporated into the graphic production. The drawing produced in each frame is evaluated through five factors: four graphic factors (fluency, flexibility, originality, elaboration) and one of verbal production (attribution of a title).

1. Fluency: this is the amount of graphic production, obtained by counting the number of completed frames.

2. Flexibility: it depends on the number of times the user changes category from the first frame. There are five categories: Living Beings, Mechanical Devices, Symbols, Views, Useful Things.

3. Originality: it is calculated based on where the design is placed, i.e., inside, outside or both in relation to the graphic stimulus.

4. Elaboration: it is measured by the symmetry or asymmetry of the design within the frame.

5. Title: it is measured by the addition of descriptors or abstract references.
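The flexibility rule above (one point for each change of category between consecutive frames) can be illustrated with a minimal Python sketch. This is not the app's code: the name `flexibility_score`, the representation of categories as strings, and the choice to skip frames without a category (`None`) are assumptions made only for illustration, and the category of each drawing is assumed to have been assigned by a human examiner.

```python
from typing import Optional, Sequence

# The five categories named in the text.
CATEGORIES = {
    "Living Beings",
    "Mechanical Devices",
    "Symbols",
    "Views",
    "Useful Things",
}


def flexibility_score(frame_categories: Sequence[Optional[str]]) -> int:
    """Count category changes between consecutive labelled frames.

    `None` marks a frame with no category (for example, a blank frame).
    Skipping such frames is an assumption; the text does not specify it.
    """
    labelled = [c for c in frame_categories if c is not None]
    for category in labelled:
        if category not in CATEGORIES:
            raise ValueError(f"unknown category: {category!r}")
    # One point each time the category differs from the previous frame's.
    return sum(1 for prev, cur in zip(labelled, labelled[1:]) if prev != cur)


example = ["Living Beings", "Living Beings", "Views", None, "Symbols", "Symbols"]
print(flexibility_score(example))
```

In the example, the category changes twice (Living Beings to Views, then Views to Symbols), so the sketch reports two points.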

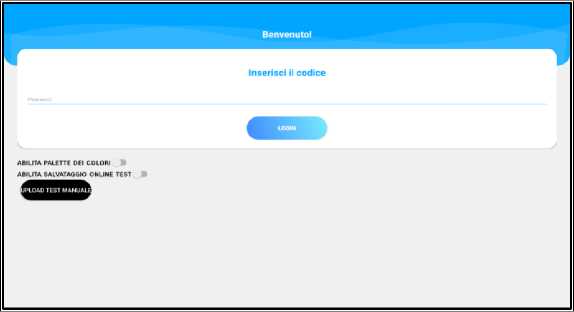

The TCD-D app presents a first simple screen (Fig. 2) where the user can log in and enable some features: in particular, the color palette and the automatic saving of the test outputs (through an Internet connection). The examiner, based on the sample, the purpose of the test and the availability of a Wi-Fi connection, decides whether or not to enable the buttons. The access credentials consist of alphanumeric codes that can be changed according to the purpose of use.

After logging in, the user will be able to choose, with the guidance of the examiner, the protocol to be performed: protocol A is the main one, while protocol B, equivalent to the first, is used in the case of longitudinal studies or repeated evaluations, to avoid proposing already known graphic stimuli.

Fig. 2. Login screen

Fig. 3. Drawing screen as for the paper test with tools

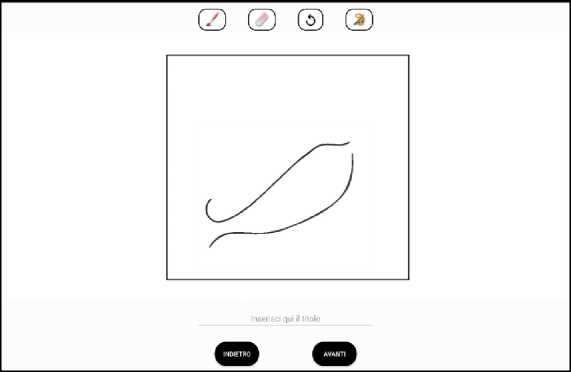

Once the protocol to be completed is selected, the user is prompted to enter their date of birth and gender. This information is optional, yet useful for the purpose of assessing divergent thinking. At this point, users receive instructions for completing the test. The text of the instructions has been reproduced exactly from the paper version of the Williams test [28]. By confirming that one has read the general instructions, the first frame of the protocol is accessible. Before starting the drawing session, the use of each button is illustrated with a brief explanation (Fig. 3). The general instructions and the detailed explanations of the buttons are the only verbalized parts of the test.

The buttons available to the user, from left to right, are:

- Pencil tool: this button enables the drawing by touch of graphic signs in the dedicated area inside the frame.

- Eraser tool: this button enables the erasing of the strokes drawn, again by touch.

- Undo tool: this button is an addition with respect to the paper version and is therefore exclusive to the digital version; it allows the user to automatically erase the last segment created, for faster and more precise results.

- Color palette: this is optional and can be enabled when opening the app. It gives the possibility to use 8 primary colors. In the paper version the subjects are free to use colors because color is not an assessment element. In the implementation of the app, the use of color is not counted as an additional index, but it is possible to choose to make it available or not to the users based on the type of assessment (for example, in the case of children, the use of color can represent a distraction from the task).

- The FORWARD and BACK buttons are used to move from one frame to another in the protocol, with the option to return to a frame that was left undone previously.

- The text area is used to give a title to each frame. Clicking on the text area automatically opens the keyboard of the tablet, giving each user the possibility of attributing the title in the language they prefer.

After the user completes the 12 frames that compose the protocol, the app asks for confirmation that the test has been completed and then invites the user to save its content. Once the test is saved, the app automatically returns to the home screen and the test is concluded.

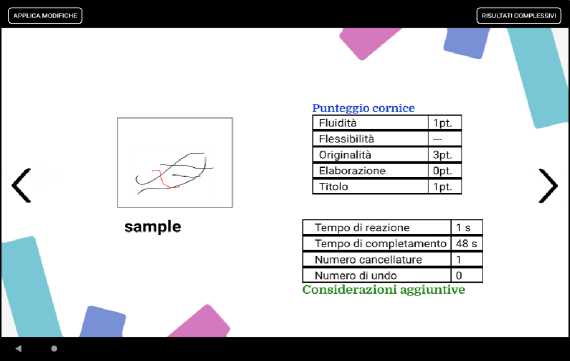

In the upper-left corner there is an area called private, whose access is reserved to the examiner. This area displays a summary screen with all the protocols completed and saved on that tablet. For each protocol, the screen shows the gender and date of birth of the person, the type of protocol (A or B), and the date on which the test was taken. It is also possible to add an alphanumeric identification code to any completed protocol at saving time. By clicking on a single saved protocol, the examiner can view each of the frames that compose it and the scores automatically attributed by the system for each factor: at the moment the system is able to evaluate all the indices with the exception of flexibility (the passage from one theme to another among the frames), which still needs manual evaluation. It is also possible to insert or edit each score manually, even the automatically evaluated ones. Each saved protocol can be downloaded to the tablet as a spreadsheet file. This file contains the title and scores of each frame, while the drawings are downloaded in Portable Network Graphics (PNG) format. One of the advantages of the TCD-D is that it allows assessment results to be stored in a simple folder on the web. For this reason, it was decided to adopt a completely anonymous storage system, which keeps track of the scores for all factors, including the ones that were not present in the paper version, for each frame completed by the user. The saving system works either in offline mode, in which the tests are saved locally on the device and then transferred manually to the online storage, or in online mode, in which the Google Cloud suite is used to upload the data to protected and dedicated areas. In more detail, the automated assessment (Fig. 4) made by the app takes into account the following factors:

- Fluency: this is the amount of graphic production. As in the paper version, the app assigns one point to each frame in which at least one graphic sign has been drawn and zero points to frames left without a drawing.

- Flexibility: in the paper version, a point is given every time the subject changes category between one frame and another (e.g. living being in frame 1, landscape in frame 2). At the moment this is the only factor that the app cannot evaluate at all.

- Originality: this factor evaluates where the user draws. Each frame contains a closed part, created by the stimulus line or shape displayed. Following the rules of the paper test, the tool assigns 1 point for drawings outside the closed shape, 2 points for drawings inside the closed shape and 3 points for drawings both inside and outside the closed shape.

- Elaboration: as in the paper version, the symmetry of the drawing is evaluated. The tool assigns 0 points for symmetrical drawings inside and outside the closed shape, 1 point for asymmetrical drawings outside the closed shape, 2 points for asymmetrical drawings inside the closed shape and 3 points for asymmetrical drawings both inside and outside the closed shape.

- Title: the title score depends on the length and complexity of the title, but it is also influenced by image recognition (which is also what the flexibility factor requires and which is not currently implemented). For this reason, the only scores the app can currently assign are 0 points if the title is absent and 1 point if the user has provided a title. The evaluator can then modify the score manually on the basis of the instructions provided in the paper version.
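The scoring rules listed above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the app's actual code: the `Frame` class, its field names and the helper `placement_points` are hypothetical, the symmetry flag is assumed to come from a separate check, and the sketch assumes that any symmetrical drawing scores 0 for elaboration and that an empty frame scores 0 on every index.

```python
from dataclasses import dataclass


@dataclass
class Frame:
    """Hypothetical summary of one completed frame (not the app's real data model)."""
    drawn_inside: bool    # strokes inside the closed stimulus shape
    drawn_outside: bool   # strokes outside the closed stimulus shape
    symmetric: bool       # result of a separate symmetry check
    title: str = ""


def placement_points(frame: Frame) -> int:
    """1 = outside only, 2 = inside only, 3 = both, 0 = nothing drawn."""
    if frame.drawn_inside and frame.drawn_outside:
        return 3
    if frame.drawn_inside:
        return 2
    if frame.drawn_outside:
        return 1
    return 0


def score_frame(frame: Frame) -> dict:
    """Apply the automatic rules for fluency, originality, elaboration and title."""
    drawn = frame.drawn_inside or frame.drawn_outside
    return {
        "fluency": 1 if drawn else 0,
        "originality": placement_points(frame),
        # Assumption: a symmetrical drawing scores 0 wherever it was drawn.
        "elaboration": 0 if frame.symmetric else placement_points(frame),
        # Only presence/absence of a title is scored automatically.
        "title": 1 if frame.title.strip() else 0,
    }


example = score_frame(Frame(drawn_inside=True, drawn_outside=True,
                            symmetric=False, title="Cat"))
```

Flexibility is deliberately absent from the returned scores, since the text states that it still has to be rated manually.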

In addition to these parameters, which are already provided in the paper version of the test, we decided to implement some new parameters that enhance the assessment through a digital tool, taking into account that the users of the test are digital natives. In particular, the additional indicators are:

- Reaction time: this is the time it takes the user to make the first input from the moment the frame is presented. Reaction times are commonly associated with intelligence, because faster responses reflect a greater ability to process a large amount of information in less time, but the study by Kwiatkowski and colleagues [59] confirms that there is also a relationship between reaction times and creativity. This relationship is still controversial: according to Glăveanu [60], reaction times are higher in more creative individuals, while according to the study by Dorfman and colleagues [61] reaction times depend on the type of task, with lower reaction times for simple tasks that do not involve interference and higher reaction times for creative tasks that involve information interference (negative priming). Adding this index to the digital assessment of creativity will allow examiners to assess the direction of the relationship with divergent thinking factors in a drawing task.

- Time to complete: this is the time it takes the user to complete the drawing, tracked from the moment the user starts drawing in the frame. The TCD manual [28] calls for completion of drawing protocols in 25-25 minutes, depending on the age group. However, in group administrations, as is the case in classrooms, it is often difficult to keep to these time limits and to keep track of them for each individual. This additional index makes it possible to calculate the completion time not only of the entire protocol but also of the individual figures for each student.

- The number of erasures: this is the number of times the student uses the eraser button.

- The number of undos: this is the number of times the user uses the undo button.
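As a rough illustration of how these four additional indices could be derived from interface events, the sketch below keeps a small per-frame log. The class `FrameLog` and its method names are hypothetical and are not taken from the app. Timestamps are passed in explicitly (in seconds) so that the example stays deterministic.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class FrameLog:
    """Hypothetical per-frame event log; all timestamps are in seconds."""
    shown_at: float                         # moment the frame is presented
    first_input_at: Optional[float] = None  # moment of the first stroke
    finished_at: Optional[float] = None     # moment the drawing is completed
    erasures: int = 0
    undos: int = 0

    def on_stroke(self, t: float) -> None:
        # Only the first stroke matters for the timing indices.
        if self.first_input_at is None:
            self.first_input_at = t

    def on_erase(self) -> None:
        self.erasures += 1

    def on_undo(self) -> None:
        self.undos += 1

    def on_finish(self, t: float) -> None:
        self.finished_at = t

    @property
    def reaction_time(self) -> Optional[float]:
        """Time from frame presentation to the first input."""
        if self.first_input_at is None:
            return None
        return self.first_input_at - self.shown_at

    @property
    def completion_time(self) -> Optional[float]:
        """Time from the first input to the end of the drawing."""
        if self.first_input_at is None or self.finished_at is None:
            return None
        return self.finished_at - self.first_input_at


log = FrameLog(shown_at=10.0)
log.on_stroke(12.5)
log.on_stroke(14.0)
log.on_erase()
log.on_undo()
log.on_finish(40.0)
# reaction_time is 2.5 s, completion_time is 27.5 s
```

Summing `completion_time` over the twelve frames would give the protocol-level completion time mentioned above. Whether pressing the eraser or undo button should itself count as a first input is left open here.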

4.1 Participants

During this initial phase of app development, an exploratory study was conducted to assess reliability, construct validity, acceptability and sustainability of the app by a sample of university students. After our study was approved by the University Bioethics Committee (Prot. No. 157942), we distributed a questionnaire to students to consent to participate. Specifically, 34 students from the University of Turin in Italy participated in the study. Of the students who participated in this study, 32 were female and only 2 were male with an average age of 22.47 years (min=21 years; max=30 years).

4.2 Instrument

Students were presented with the Williams Test of Creativity and Divergent Thinking (TCD in Italy and CAP in other Countries) in both paper and digital formats in both protocols A and B (A paper + B digital or A digital + B paper). In the TCD (paper version) and in the TCD-D (digital version), there are five indices for assessing divergent thinking. Students were also asked to answer a short questionnaire that included open-ended questions and the System Usability Scale (SUS) [68]. The SUS was developed by Brooke in 1996 and contains ten simple questions about the usability of a system. The SUS is a useful tool for understanding the problems users have in using the system. It is a ten-item Likert scale with five response options ranging from completely disagree (1) to completely agree (5). In addition to

5. Results

5.1 A significant challenge: The design of the Elaboration assessment

The number of erasures is an index commonly used in graphic tests such as drawing human figures [62] and drawing clocks [63,64]. According to leading developmental psychology authors of the Human Figure Test [65, 66], the number of erasures is an index that can be interpreted as a lack of confidence and uncertainty in one's identity and, in a developmental context, can help distinguish between adolescents with high and low self-esteem. Today, several digital assessment instruments include the erasure index [e.g.67], but there are still no studies in the literature that examine the relationship between the number of erasures and creativity. These are some of the reasons for including these additional indexes to assess digital creativity considering the dual mode of erasure: erasure and undo.

Fig. 4. Screen with traditional Rating Indexes and additional ones

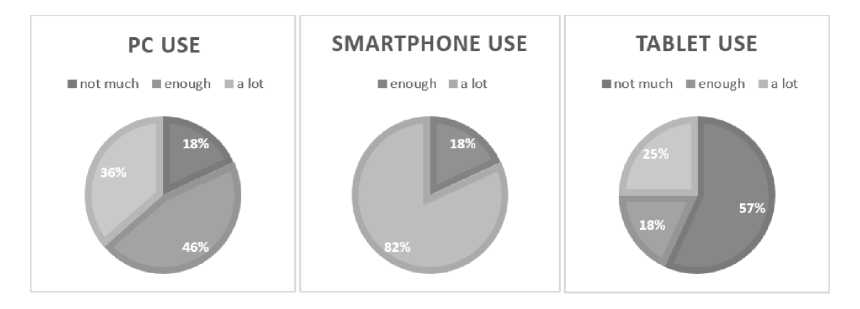

the socio demographic questions, participants were asked to indicate the frequency of use of PCs, smartphones, and tablets to better understand the use of new, traditional, and mobile technologies: the results are shown in Figure 5. The responses show that the tablet is the least used tool by the sample.

Fig. 5. Students' use of standard and mobile technologies

One of the key features of the Williams test [28] is Elaboration, which assigns a score based on the presence or absence of symmetries in the drawing. Symmetry can be defined mathematically as an orderly distribution of the parts of an object such that a geometric element, be it a point or a line, can be identified with respect to which each point of the object on one side corresponds, at equal distance, to a point on the other side. Detecting symmetry is a complex task because there are several variables to consider: the position of the lines, the consistency between the dimensions of the shapes that make up the symmetry, and the rotation of the figure. Freehand drawings made by children will almost never meet the mathematical definition of symmetry, so we looked for a suitable method. We chose to implement a method based on Generalized Orthogonal Procrustes Analysis. This method offers a certain degree of flexibility in deciding whether two figures are symmetrical, which is crucial given the target users mentioned above. The key elements of the algorithm are the landmarks, a finite set of points (independent of the number of actual points that compose the shape) that are placed on the figure and act as its "descriptors". These landmarks are essential for representing mathematical concepts closely related to symmetry, such as the curvature between two points. Once the algorithm has constructed a formal description of how the drawing is composed (by defining its landmarks), the elements of the drawing can be compared with one another. Our implementation reduces the drawing area and divides it into four quadrants, then compares the quadrants with each other and scores the matches found.

With respect to the original implementation, which involved a comparison based on individual small segments, this route was chosen because two elements, for example two eyes, can be drawn differently by hand and therefore vary greatly in their total number of coordinates. This would make them impossible to compare within the original implementation, because it would result in an unmanageable increase in the total number of landmarks.

Fig. 6. An example of symmetry

Figure 6 shows an example of a drawing for which the symmetry score is calculated, based on the number of correspondences between the descriptors in each pair of quadrants, within a chosen approximation (as described above). Our algorithm is meant to give good results on freehand drawings. For instance, in the drawing in Figure 6 the right eye is not positioned exactly opposite the left eye, and the eyelashes also differ in length. However, these differences are negligible for our algorithm (although if the right eye were positioned in the upper-right corner, no symmetry would be detected). Another detail worth noting is the importance of the landmarks mentioned above: the irises could not be compared unless each was treated as a landmark, i.e., a descriptor independent of the actual number of its points, because each iris contains so many points that a direct comparison would be practically impossible.
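To make the role of landmarks and tolerance more concrete, the following sketch compares two hand-drawn strokes for mirror symmetry. It is a deliberately simplified illustration and not the authors' implementation: it uses an ordinary two-shape Procrustes comparison instead of the generalized procedure, it works on a pair of strokes rather than on quadrants, and the function names, the number of landmarks `n` and the tolerances `tol` and `pos_tol` are all assumptions chosen for the example.

```python
import math

Point = tuple[float, float]


def resample(stroke: list[Point], n: int) -> list[Point]:
    """Place n landmarks at equal arc-length steps along a polyline."""
    seg = [math.dist(p, q) for p, q in zip(stroke, stroke[1:])]
    total = sum(seg)
    if total == 0:
        return [stroke[0]] * n
    out, i, walked = [], 0, 0.0
    for k in range(n):
        target = total * k / (n - 1)
        while i < len(seg) - 1 and walked + seg[i] < target:
            walked += seg[i]
            i += 1
        t = (target - walked) / seg[i] if seg[i] else 0.0
        (x0, y0), (x1, y1) = stroke[i], stroke[i + 1]
        out.append((x0 + t * (x1 - x0), y0 + t * (y1 - y0)))
    return out


def centroid(shape: list[Point]) -> Point:
    return (sum(x for x, _ in shape) / len(shape),
            sum(y for _, y in shape) / len(shape))


def normalise(shape: list[Point]) -> list[Point]:
    """Remove position and size: centre on the centroid, scale to unit size."""
    cx, cy = centroid(shape)
    centred = [(x - cx, y - cy) for x, y in shape]
    size = math.sqrt(sum(x * x + y * y for x, y in centred)) or 1.0
    return [(x / size, y / size) for x, y in centred]


def procrustes_distance(a: list[Point], b: list[Point]) -> float:
    """Residual after the best 2D rotation of b onto a (both normalised)."""
    dot = sum(ax * bx + ay * by for (ax, ay), (bx, by) in zip(a, b))
    cross = sum(ay * bx - ax * by for (ax, ay), (bx, by) in zip(a, b))
    return math.sqrt(max(0.0, 2.0 - 2.0 * math.hypot(dot, cross)))


def is_mirror_pair(left: list[Point], right: list[Point], axis_x: float,
                   n: int = 16, tol: float = 0.25, pos_tol: float = 10.0) -> bool:
    """True if `right` looks like `left` reflected across the line x = axis_x."""
    mirrored = resample([(2 * axis_x - x, y) for x, y in left], n)
    target = resample(right, n)
    # Procrustes ignores position, so the placement is checked separately.
    if math.dist(centroid(mirrored), centroid(target)) > pos_tol:
        return False
    a, b = normalise(target), normalise(mirrored)
    # Try both drawing directions, since a stroke can be drawn either way.
    return min(procrustes_distance(a, b), procrustes_distance(a, b[::-1])) <= tol
```

Because every stroke is reduced to the same number of landmarks, two elements drawn with very different numbers of raw points remain comparable, which is the property the text attributes to landmarks. The shape tolerance absorbs small freehand differences, while the separate position check rejects an element that is drawn far from where its mirror image should be.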

5.2 Reliability and construct validity

The instrument's reliability and internal consistency were measured using the correlation coefficients of the main constructs. Of the three indices of divergent thinking assessed with the TCD-D app (fluency, originality and elaboration), Cronbach's alpha could only be calculated for two. The fluency index is assessed by a dichotomous response (1, 0) based on the presence or absence of a drawing in each of the twelve frames. The low variability among the items, almost all of which are scored 1 for the presence of a drawing, makes it impossible to estimate alpha. For the other two indices, both positive and statistically significant in this study (p < .001), Cronbach's alpha is .608 for originality and .665 for elaboration. This level of reliability is considered moderately acceptable (0.50-0.70) [69], especially considering the small number of subjects on which it was evaluated.

Pearson correlations were used to obtain an initial assessment of the construct validity of the indices measured with the TCD-D mobile app [70]. Specifically, fluency, originality and elaboration measured with the TCD-D app were shown to be correlated with the same indices measured with the paper version of the TCD test. As shown in Table 1, each index measured with the TCD-D app correlated significantly with the corresponding index from the paper test. The remaining positive correlations between the indices (as in the case of fluency in the TCD-D with elaboration and originality in the paper TCD) are evidence that the three digital indices measure a single underlying construct, as in the paper version, namely divergent thinking.

Table 1. Pearson's correlation coefficients

| | Fluency TCD-D | Originality TCD-D | Elaboration TCD-D |
|---|---|---|---|
| Fluency TCD | .57** | .29 | .10 |
| Originality TCD | .48** | .63** | .23 |
| Elaboration TCD | .46** | .61** | .40* |

Note: ** p < .001; * p < .05

5.3 Study of acceptability and sustainability

A questionnaire consisting of open-ended questions and the SUS scale [68] was used to assess the acceptability and sustainability of the TCD-D mobile app. Of the 34 participants, 45% preferred to take the Williams test digitally (TCD-D) and 56% of the sample felt that the digital test better expressed their creative potential. Although 55% preferred the paper version of the test, participants' open-ended responses indicated that the TCD-D app is viewed as a faster tool that is less focused on perfectionism and errors. In addition, using the app is perceived as easy and intuitive, and more stimulating than the paper version because it offers more visual cues, more features, and a greater sense of freedom of expression. Additional data on the usability of the app came from the System Usability Scale. The SUS is a standardized questionnaire for assessing perceived ease of use [70, 71]. It is a quick and easy tool for obtaining a valid measure of user-perceived usability and is one of the most reliable and widely used questionnaires recommended in the literature [e.g. 72]. The standardized version of the SUS consists of 10 items rated on a 5-point scale from "strongly disagree" to "strongly agree." Scoring is based on a rating scale developed by Sauro and Lewis [73] that divides scores into percentiles: the best score (A) ranges from 79 to 100, the acceptable score (B-C) ranges from 62 to 78, and the poor score (D-F) is below 62. Participants in the exploratory study rated the TCD-D with a score of 84.60, which falls in the A range, above the 96th percentile. The students therefore rated the app's usability very highly, although many of them are not very familiar with using tablets as a mobile tool.

6. Limitations

This study is not without its limitations: since it concerns the development of a prototype of a mobile app, it is only a preliminary study. The initial data collected in this study refer to a small sample that can by no means be considered representative of the population from which it was drawn, i.e. the students of the University of Turin. Moreover, the not entirely satisfactory results for Cronbach's alpha and the correlations are also related to the sample size. For these reasons, the present acceptability and sustainability results for the TCD-D app cannot be considered a complete validation study. In addition, due to the constraints of the COVID-19 pandemic, it has not yet been possible to initiate a validation study with a larger school-age sample. However, data collection for a first validation of the application with university students is already underway, and a second validation with a sample of Italian secondary school students is planned.

7. Conclusions

The TCD-D application, designed for use with mobile devices, specifically Android tablets, provides a digital resource for assessing creative potential and, in particular, divergent thinking in educational settings. Unlike other attempts in the literature [such as 21], TCD-D was developed by a team of psychologists and computer scientists to simultaneously address relevant aspects of psychological assessment and app design.

The literature of recent decades supports the use of new technologies, particularly mobile technologies, for the assessment, monitoring and facilitation of creativity in educational settings [7;35,36]. Indeed, digitized measurement tools have several advantages over their paper-based counterparts: for example, new technologies allow for faster online test administration and automated scoring, which reduces the time and cost of the assessment process [16;54]. Given these advantages, many creativity assessment instruments, such as the Torrance test [19], have been converted to digital versions [16;21]. The application of these new instruments in education shows that digital assessment not only enables large-scale assessment of creativity, but also provides teachers with an easy-to-use tool to monitor the development of students' divergent thinking, thereby contributing to the development of creative potential [16]. However, to the best of our knowledge, there have so far been no attempts to develop a digital (mobile-based) version of Williams' CAP [23], a widely used classroom divergent thinking assessment tool known in China and Italy as the Test of Creativity and Divergent Thinking [28]. For this reason, we developed the TCD-D app (Test of Creativity and Divergent Thinking - Digital form) for Android-based tablets. The app was developed as a faithful reproduction of the Williams instrument [28] and can automatically code three of the five creativity indices: fluency, originality and elaboration. In addition to the standard indices found in the paper version of the test, the app can monitor additional indices to better assess specific cognitive skills mentioned in the literature on drawing tests and creativity: reaction time, time to completion of each graphic stimulus, and number of erasures and undo actions per drawing.

The TCD-D mobile app achieved moderately acceptable Cronbach's alphas, which leave room for improvement given the small number of participants in the preliminary analysis. The correlations between the indices of the paper test and those of the mobile version are also encouraging and invite new studies in this area for further improvement. The TCD-D app was positively evaluated by university students who had the opportunity to test both the digital and paper versions. In particular, they appreciated being able to express their creative potential freely without having to focus on artistic details, as is the case when drawing on paper. In addition, the app was positively evaluated in terms of usability, even though the students in this sample do not use tablets on a daily basis.

Furthermore, there is room for improvement in the TCD-D app. For example, the app is currently only available in Italian, although it is important to note that the only verbal parts concern the instructions for using the tool. For this reason, a version is already planned in which the language can be switched, allowing individuals to choose the language they find most appropriate for receiving the test instructions and the indications on the drawing tools, thus overcoming a possible cross-cultural gap regarding language. Another planned development concerns the flexibility factor, the only factor that currently cannot be scored automatically by the instrument and therefore requires manual coding by the rater. In the future, consideration could be given to incorporating an artificial intelligence-based image recognition algorithm into the app, so that a score can be assigned when the user moves from one figure category (e.g., object, animal, human) to another. To improve the scoring of the title, deep learning techniques could be used to measure the correspondence between a drawing and the title provided by the examinee, allowing for more accurate scoring.

The study has the merit of presenting the development of a user-friendly creativity assessment app that can be easily installed on Android devices, with the aim of promoting its dissemination in school and educational settings that often do not have particularly advanced technologies. In such contexts, however, the role of creativity and the ability to think outside the box is becoming increasingly important for the younger generation. Through the use of mobile technologies, it would then be possible to support the assessment of creativity and to monitor and promote the creative potential of students who are digital natives and connoisseurs of new technologies.

8. Disclosure of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest. The authors report there are no competing interests to declare.

9. Funding

All the funding regarding the realization of this study was received internally to the authors' organization. There was no additional external funding received for this study.

Acknowledgements

The authors would like to thank all the students who participated in the study.

References

- Runco, M. A., & Jaeger, G. J. (2012). The standard definition of creativity. Creativity research journal, 24(1), 92-96. https://doi.org/10.1080/10400419.2012.650092

- Kaufman, J. C., & Sternberg, R. J. (Eds.). (2010). The Cambridge handbook of creativity. Cambridge University Press

- Feldman, D. H., & Benjamin, A. C. (2006). Creativity and education: An American retrospective. Cambridge Journal of Education, 36(3), 319-336. https://doi.org/10.1080/03057640600865819

- Saravanakumar, A. R. (2020). Life skill education for creative and productive citizens. Journal of Critical Reviews, 7(9), 554-558. http://dx.doi.org/10.31838/jcr.07.09.110

- Van Laar, E., Van Deursen, A. J., Van Dijk, J. A., & De Haan, J. (2017). The relation between 21st-century skills and digital skills: A systematic literature review. Computers in human behavior, 72, 577-588. https://doi.org/10.1016/j.chb.2017.03.010

- Moya, S., & Camacho, M. (2021a). Developing a Framework for Mobile Learning Adoption and Sustainable Development. Technology, Knowledge and Learning, 1-18. https://doi.org/10.1007/s10758-021-09537-y

- Henriksen, D., Henderson, M., Creely, E., Ceretkova, S., Černochová, M., Sendova, E., ... & Tienken, C. H. (2018). Creativity and technology in education: An international perspective. Technology, Knowledge and Learning, 23(3), 409-424. https://doi.org/10.1007/s10758-018-9380-1

- Bikanga Ada, M. (2021). Evaluation of a Mobile Web Application for Assessment Feedback. Technology, Knowledge and Learning, 1-24. https://doi.org/10.1007/s10758-021-09575-6

- Esteve, F. (2015). La competencia digital docente: análisis de la autopercepción y evaluación del desempeño de los estudiantes universitarios de educación por medio de un entorno. Retrieved from https://dialnet.unirioja.es/servlet/tesis?codigo=97673

- Moya, S., & Camacho, M. (2021b). Identifying the key success factors for the adoption of mobile learning. Education and Information Technologies, 26(4), 3917-3945. https://doi.org/10.1007/s10639-021-10447-w

- Prensky, M. (2001). Digital natives, digital immigrants part 2: Do they really think differently?. On the horizon. https://doi.org/10.1108/10748120110424843

- Guo, J. (2019). Web-based creativity assessment system that collects both verbal and figural responses: Its problems and potentials. International Journal of Information and Education Technology, 9(1), 27-34. https://doi.org/10.18178/ijiet.2019.9.1.1168

- Guo, J. (2016). The development of an online divergent thinking test. https://opencommons.uconn.edu/dissertations/1304

- Kwon, M., Goetz, E. T., & Zellner, R. D. (1998). Developing a computer‐based TTCT: Promises and problems. The Journal of Creative Behavior, 32(2), 96-106. https://doi.org/10.1002/j.2162-6057.1998.tb00809.x

- Zabramski, S., Ivanova, V., Yang, G., Gadima, N., & Leepraphantkul, R. (2013, June). The effects of GUI on users' creative performance in computerized drawing. In Proceedings of the International Conference on Multimedia, Interaction, Design and Innovation (pp. 1-10) https://doi.org/10.1145/2500342.2500360

- Pásztor, A., Molnár, G., & Csapó, B. (2015). Technology-based assessment of creativity in educational context: the case of divergent thinking and its relation to mathematical achievement. Thinking Skills and Creativity, 18, 32-42. https://doi.org/10.1016/j.tsc.2015.05.004

- Lau, S., & Cheung, P. C. (2010). Creativity assessment: Comparability of the electronic and paper-and-pencil versions of the Wallach–Kogan Creativity Tests. Thinking Skills and Creativity, 5(3), 101-107. https://doi.org/10.1016/j.tsc.2010.09.004

- Palaniappan, A. K. (2012). Web-based creativity assessment system. International Journal of Information and Education Technology, 2(3), 255. http://www.ijiet.org/papers/123-K20001.pdf

- Torrance, E. P. (1974). The Torrance Tests of Creative Thinking-Norms-Technical Manual Research Edition-Verbal Tests, Forms A and B- Figural Tests, Forms A and B. Princeton, NJ: Personnel Press.

- Wallach, M. A., & Kogan, N. (1965). Modes of thinking in young children. https://psycnet.apa.org/record/1965-35011-000

- Zabramski, S., & Neelakannan, S. (2011, October). Paper equals screen: a comparison of a pen-based figural creativity test in

- Putra, R. E., Ekohariadi, E., Nuryana, I. K., & Anistyasari, Y. (2022, January). Development of Automated Assessment Tool to Measure Student Creativity in Computer Programming. In Eighth Southeast Asia Design Research (SEA-DR) & the Second Science, Technology, Education, Arts, Culture, and Humanity (STEACH) International Conference (SEADR-STEACH 2021) (pp. 92-97). Atlantis Press. https://doi.org/10.2991/assehr.k.211229.015

- Williams, F. E. (1993). Creativity Assessment Packet: CAP. Pro-Ed. https://www.proedinc.com/Products/Default.aspx?bookid=6565&bCategory=LDPOP!GT

- Cropley, D. H., & Marrone, R. L. (2022). Automated scoring of figural creativity using a convolutional neural network. Psychology of Aesthetics, Creativity, and the Arts. https://doi.org/10.1037/aca0000510

- Petko, D., Schmid, R., Müller, L., & Hielscher, M. (2019). Metapholio: A mobile app for supporting collaborative note taking and reflection in teacher education. Technology, Knowledge and Learning, 24(4), 699-710. https://doi.org/10.1007/s10758-019-09398-6

- Sica, L. S., Ragozini, G., Di Palma, T., & Aleni Sestito, L. (2019). Creativity as identity skill? Late adolescents' management of identity, complexity and risk‐taking. The Journal of Creative Behavior, 53(4), 457-471. https://doi.org/10.1002/jocb.221

- Qian, M., Plucker, J. A., & Shen, J. (2010). A model of Chinese adolescents' creative personality. Creativity Research Journal, 22(1), 62-67. https://doi.org/10.1080/10400410903579585

- Williams, F. (1994). TCD. Test della creatività e del pensiero divergente. Edizioni Erickson.

- Kivunja, C. (2014). Theoretical perspectives of how digital natives learn. International Journal of Higher Education, 3(1), 94-109. https://eric.ed.gov/?id=EJ1067467

- Barak, M. (2018). Are digital natives open to change? Examining flexible thinking and resistance to change. Computers & Education, 121, 115-123. https://doi.org/10.1016/j.compedu.2018.01.016

- Prensky, M., & Berry, B. D. (2001). Do they really think differently. On the horizon, 9(6), 1-9. https://doi.org/10.1108/10748120110424843

- Kivunja, C. (2014). Theoretical perspectives of how digital natives learn. International Journal of Higher Education, 3(1), 94-109. https://eric.ed.gov/?id=EJ1067467

- Mellow, P. (2005). The media generation: Maximise learning by getting mobile. In Ascilite (Vol. 1, pp. 469-476).

- Shabalina, O., Mozelius, P., Vorobkalov, P., Malliarakis, C., & Tomos, F. (2015, July). Creativity in digital pedagogy and game-based learning techniques; theoretical aspects, techniques and case studies. In 2015 6th International Conference on Information, Intelligence, Systems and Applications (IISA) (pp. 1-6). IEEE. https://doi.org/10.1109/IISA.2015.7387963

- Mishra, P. (2012). Rethinking technology & creativity in the 21st century: Crayons are the future. TechTrends, 56(5), 13. https://doi.org/10.1007/s11528-012-0594-0

- Mishra, P., Yadav, A., & Deep-Play Research Group. (2013). Rethinking technology & creativity in the 21st century. TechTrends, 57(3), 10-14. https://doi.org/10.1007/s11528-013-0655-z

- Lubart, T. I. (2001). Models of the creative process: Past, present and future. Creativity research journal, 13(3-4), 295-308. https://doi.org/10.1207/S15326934CRJ1334_07

- Reiter-Palmon, R., Forthmann, B., & Barbot, B. (2019). Scoring divergent thinking tests: A review and systematic framework. Psychology of Aesthetics, Creativity, and the Arts, 13(2), 144. https://doi.org/10.1037/aca0000227

- Runco, M. A., & Acar, S. (2012). Divergent thinking as an indicator of creative potential. Creativity research journal, 24(1), 66-75. https://doi.org/10.1080/10400419.2012.652929

- Guilford, J. P. (1962). Potentiality for creativity. Gifted Child Quarterly, 6(3), 87-90. https://doi.org/10.1177/001698626200600307

- Getzels, J. W., & Jackson, P. W. (1962). Creativity and intelligence: Explorations with gifted students. https://psycnet.apa.org/record/1962-07802-000

- Kim, K. H., & Zabelina, D. (2015). Cultural bias in assessment: Can creativity assessment help?. The International Journal of Critical Pedagogy, 6(2).

- Guo, Y., Lin, S., Guo, J., Lu, Z. L., & Shangguan, C. (2021). Cross-cultural measurement invariance of divergent thinking measures. Thinking Skills and Creativity, 41, 100852. https://doi.org/10.1016/j.tsc.2021.100852

- Baer, J. (2011). How divergent thinking tests mislead us: Are the Torrance Tests still relevant in the 21st century? The Division 10 debate. Psychology of Aesthetics, Creativity, and the Arts, 5(4), 309-313. https://doi.org/10.1037/a0025210

- Kim, K. H. (2011). The creativity crisis: The decrease in creative thinking scores on the Torrance Tests of Creative Thinking. Creativity research journal, 23(4), 285-295. https://doi.org/10.1080/10400419.2011.627805

- Yarbrough, N. D. (2016). Assessment of creative thinking across cultures using the Torrance Tests of Creative Thinking (TTCT): translation and validity Issues. Creativity Research Journal, 28(2), 154-164. https://doi.org/10.1080/10400419.2016.1162571

- Bart, W. M., Hokanson, B., & Can, I. (2017). An investigation of the factor structure of the Torrance Tests of Creative Thinking. Educational Sciences: Theory & Practice, 17(2). https://doi.org/10.12738/estp.2017.2.0051

- Wang, H. H., & Deng, X. (2022). The Bridging Role of Goals between Affective Traits and Positive Creativity. Education Sciences, 12(2), 144. https://doi.org/10.3390/educsci12020144

- Fan, H., Ge, Y., & Wilkinson, R. (2021). Humor Style and Creativity Tendency of Senior High School Students of Tujia Ethnic Group in China. Advances in Applied Sociology, 11(4), 141-157. https://doi.org/10.4236/aasoci.2021.114011

- Liu, H. Y., Chang, C. C., Wang, I. T., & Chao, S. Y. (2020). The association between creativity, creative components of personality, and innovation among Taiwanese nursing students. Thinking Skills and Creativity, 35, 100629. https://doi.org/10.1016/j.tsc.2020.100629

- Antonietti, A., & Cornoldi, C. (2006). Creativity in Italy. In J. C. Kaufman & R. J. Sternberg (Eds.), The International Handbook of Creativity (pp. 124–166). Cambridge University Press. https://doi.org/10.1017/CBO9780511818240.006

- De Caroli, M. E., & Sagone, E. (2009). Creative thinking and Big Five factors of personality measured in Italian schoolchildren. Psychological reports, 105(3), 791-803. https://doi.org/10.2466/PR0.105.3.791-803

- Lucchiari, C., Sala, P. M., & Vanutelli, M. E. (2019). The effects of a cognitive pathway to promote class creative thinking. An experimental study on Italian primary school students. Thinking Skills and Creativity, 31, 156-166. https://doi.org/10.1016/j.tsc.2018.12.002

- Chuang, T. Y., Zhi-Feng Liu, E., & Shiu, W. Y. (2015). Game-based creativity assessment system: the application of fuzzy theory. Multimedia Tools and Applications, 74(21), 9141-9155. https://doi.org/10.1007/s11042-014-2070-7

- Kovalkov, A., Paaßen, B., Segal, A., Pinkwart, N., & Gal, K. (2021). Automatic Creativity Measurement in Scratch Programs Across Modalities. IEEE Transactions on Learning Technologies, 14(6), 740-753.

- Wu, H., & Molnár, G. (2018). Computer-based assessment of Chinese students’ component skills of problem solving: a pilot study. International Journal of Information and Education Technology, 8(5), 381-386. https://doi.org/10.18178/ijiet.2018.8.5.1067

- Hass, R. W. (2015). Feasibility of online divergent thinking assessment. Computers in Human Behavior, 46, 85-93. https://doi.org/10.1016/j.chb.2014.12.056

- Davis, A. L. (2013). Using instructional design principles to develop effective information literacy instruction: The ADDIE model. College & Research Libraries News, 74(4), 205-207.

- Kwiatkowski, J., Vartanian, O., & Martindale, C. (1999). Creativity and speed of mental processing. Empirical Studies of the Arts, 17(2), 187-196. https://doi.org/10.2190/2Q5D-TY7X-37QE-2RY2

- Glăveanu, V. P. (2014). Revisiting the “art bias” in lay conceptions of creativity. Creativity Research Journal, 26(1), 11-20. https://doi.org/10.1080/10400419.2014.873656

- Dorfman, L., Martindale, C., Gassimova, V., & Vartanian, O. (2008). Creativity and speed of information processing: A double dissociation involving elementary versus inhibitory cognitive tasks. Personality and Individual Differences, 44(6), 1382-1390. https://doi.org/10.1016/j.paid.2007.12.006

- Fabry, J. J., & Bertinetti, J. F. (1990). A construct validation study of the human figure drawing test. Perceptual and Motor Skills, 70(2), 465-466. https://doi.org/10.2466/pms.1990.70.2.465

- Agrell, B., & Dehlin, O. (1998). The clock-drawing test. Age and Ageing, 27(3), 399-404. https://doi.org/10.1093/ageing/afs149

- Paganini‐Hill, A., Clark, L. J., Henderson, V. W., & Birge, S. J. (2001). Clock drawing: analysis in a retirement community. Journal of the American Geriatrics Society, 49(7), 941-947. https://doi.org/10.1046/j.1532-5415.2001.49185.x

- McElhaney, M. (1969). Clinical psychological assessment of the human figure drawing. CC Thomas.

- Bodwin, R. F., & Bruck, M. (1960). The adaptation and validation of the Draw-A-Person Test as a measure of self concept. Journal of Clinical Psychology, 16(4), 427–429. https://doi.org/10.1002/1097-4679(196010)16:4<427::AID-JCLP2270160428>3.0.CO;2-H

- Schmidgall, S. P., Eitel, A., & Scheiter, K. (2019). Why do learners who draw perform well? Investigating the role of visualization, generation and externalization in learner-generated drawing. Learning and Instruction, 60, 138-153. https://doi.org/10.1016/j.learninstruc.2018.01.006

- Brooke, J. (2013). SUS: A retrospective. Journal of Usability Studies, 8(2), 29–40. https://uxpajournal.org/sus-a-retrospective/

- Hinton, P. R., Brownlow, C., McMurray, I., & Cozens, B. (2004). SPSS explained. East Sussex, England: Routledge.

- Bolarinwa, O. A. (2015). Principles and methods of validity and reliability testing of questionnaires used in social and health science researches. Nigerian Postgraduate Medical Journal, 22(4), 195.

- Sauro, J., & Lewis, J. R. (2011). When designing usability questionnaires, does it hurt to be positive? In Proceedings of CHI 2011 (pp. 2215–2223). Vancouver, Canada: ACM. https://doi.org/10.1145/1978942.1979266

- Lewis, J. R., & Sauro, J. (2009, July). The factor structure of the system usability scale. In International conference on human centered design (pp. 94-103). Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-642-02806-9_12

- Sauro, J., & Lewis, J. R. (2016). Quantifying the user experience: Practical statistics for user research (2nd ed.). Cambridge, MA: Morgan Kaufmann.