Method for Constructing Neural Network Means for Recognizing Scenes of Political Extremism in Graphic Materials of Online Social Networks

Authors: Ihor Tereikovskyi, Rabah AlShboul, Shynar Mussiraliyeva, Liudmyla Tereikovska, Kalamkas Bagitova, Oleh Tereikovskyi, Zhengbing Hu

Journal: International Journal of Computer Network and Information Security @ijcnis

Issue: vol. 16, no. 3, 2024.


Countering the spread of calls for political extremism through graphic content on online social networks is an increasingly pressing problem that requires new technological solutions, since traditional countermeasures rely on recognizing destructive content in text messages only. Because neural network tools are currently considered the most effective means of analyzing graphic information, it is assumed that such tools should be used to analyze images and video materials in online social networks, provided they are adapted to the expected conditions of use: wide variability in the size of graphic content, the presence of typical interference, and limited computing resources of the recognition tools. Based on this thesis, a method is proposed for constructing neural network recognition tools adapted to these conditions. Recognition is performed by the authors' neural network model, which combines a low-resource convolutional neural network of the MobileNetV2 type with a recurrent neural network of the LSTM type; owing to a well-grounded choice of their architectural parameters, the model provides high accuracy in recognizing scenes of political extremism in both static images and video materials under limited computing resources. The input field of the neural network model is adapted to the variability of graphic resource sizes by scaling the input resource within acceptable limits and, if necessary, padding the input field with zeros. Typical noise is leveled out by advanced solutions for correcting brightness and contrast and eliminating blur of local areas in images of online social networks.
Neural network tools developed on the basis of the proposed method for recognizing scenes of political extremism in graphic materials of online social networks achieve recognition accuracy at the level of the best-known neural network models while reducing resource intensity by more than 10 times. This allows less powerful equipment to be used, increases the speed of content analysis, and opens up prospects for easily scalable recognition tools, which ultimately improves security and reduces the spread of extremist content on online social networks. Further research should be associated with introducing the Attention mechanism into the neural network model used in the method, which should increase the efficiency of neural network analysis of video materials.

Recognition, Political Extremism, Social Network, Neural Network, Graphic Resource

Short URL: https://sciup.org/15019284

IDR: 15019284 | DOI: 10.5815/ijcnis.2024.03.05


1. Introduction

Currently, online social networks (SN) are among the most popular means of communication. For example, the number of daily active Facebook users is 2.064 billion, the monthly audience of Twitter has exceeded 540 million users, and the average daily audience of Instagram is 38.4 million people. Despite the positive effect of fast and accessible exchange of useful information, the use of SN also entails a number of negative aspects. One of them is the spread of calls for violence and political extremism, the danger of which is aggravated by their mass distribution, their strong influence on a wide range of users, and the difficulty of imposing legal restrictions, which stems from conflicts between the legislative frameworks of different countries and from differing standards and approaches to defining and suppressing such content. Although SN users often ignore calls for political extremism, their reaction to such calls may vary depending on many factors: political position, education, age, ideological beliefs, the SN's reputation, and the current political situation. At the same time, practical experience shows that calls for political extremism can spread quite quickly in SN and negatively affect many users, which reinforces the need to develop appropriate methods of recognition and counteraction that take into account modern techniques for embedding calls for political terrorism into SN content. Note that traditional means of recognizing calls for political extremism in SN, which focus on analyzing text information, have largely lost their effectiveness, since over the past few years such calls have often been embedded in SN images and video materials. At the same time, the proven effectiveness of neural network solutions for graphics recognition gives reasonable grounds to assert that they are suitable for analyzing SN images and video materials.
At the same time, the features of SN require a rather complex adaptation of known neural network solutions to the expected operating conditions. Thus, the need to develop effective neural network tools for analyzing graphic content designed to recognize scenes of political extremism in SN explains the relevance of the presented scientific work.

2. Related Works

Currently, the overwhelming majority of known SN content monitoring tools are based on methods of semantic analysis of text messages [1-3]. For example, work [4] discusses the use of machine learning methods to classify the emotional tone of text comments in SN.

Article [5] describes a procedure for determining how the sentiments of SN users depend on the content of text messages and shows that sentiment recognition accuracy can be increased by using metadata, such as user profile characteristics.

Work [6] proposes a method for processing SN text content based on fuzzy logic and shows the possibility of using the interval intuitionistic fuzzy TOPSIS method. Works [7, 8], in turn, indicate that political extremism should be recognized by analyzing not only text messages but also SN graphic materials.

Practical experience and the results of [9-11] show that tools for analyzing SN graphic content can be developed using neural network technologies. Although quite a large number of works are devoted to the development of such tools [12, 13], the detection of political extremism in SN media content has not been sufficiently studied. In particular, the mechanisms for determining the architectural parameters of neural networks intended for effective analysis of SN media content under the expected shortage of computing resources require further improvement.

It is also worth noting work [8], which considers the preprocessing of SN images before they are fed to neural network tools for recognizing political extremism.

Based on the analysis of the most popular SNs, it was determined that even within one SN the sizes of images can differ significantly. For example, on YouTube image and video sizes range from 3840 × 2160 px to 426 × 240 px, and the acceptable aspect ratios of such graphic materials are 1:1, 4:3 and 9:16. The work notes that this wide variability causes significant difficulties in adapting the size of the image under study to the size of the input field of the neural network model (NNM): with proportional scaling, the scale factor is limited to the range from $k_{min} = 0.2$ to $k_{max} = 2$, and with disproportionate scaling it should be taken into account that NNMs can analyze images distorted by no more than 30% [14, 15]. The work also emphasizes the need to convert the image to a given color format and to level out typical noise.

The work proposes a model for processing SN graphic resources that checks whether proven scaling mechanisms can be applied (1-3), converts the analyzed image to a given color format (4-6), corrects the brightness of color channels (7-9), corrects the contrast of color channels (10-13), and levels out typical noise that manifests itself as blurring of local areas of the image (14-17).

Note that the filtering procedure for leveling the blur of local areas of the image consists of calculating wavelet coefficients (14, 15), pairwise comparing the wavelet coefficients of video frames and selecting the larger coefficient (16), and restoring the filtered image (17).

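$$k_x = \mathrm{Round}\left(\frac{L_{NN}}{L_{Im}}\right), \quad k_y = \mathrm{Round}\left(\frac{H_{NN}}{H_{Im}}\right) \tag{1}$$

$$\text{if } \left(k_x \notin [k_{min}, k_{max}]\right) \lor \left(k_y \notin [k_{min}, k_{max}]\right) \Rightarrow \mathrm{Stop} \tag{2}$$

$$\text{if } \left(k_x / k_y > d\right) \lor \left(k_y / k_x > d\right) \Rightarrow \mathrm{Stop} \tag{3}$$

where $k_x, k_y$ are the scale factors along the x and y axes; Round is the round-down function; $L_{NN}$ and $H_{NN}$ are the width and height of the NNM input field; $L_{Im}$ and $H_{Im}$ are the width and height of the analyzed image; $k_{min}, k_{max}$ are the minimum and maximum allowable scale factors; $d$ is the maximum allowable ratio between the scale factors along the two axes.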
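A minimal Python sketch of the feasibility check (1)-(3) follows. The 64×64 input field and the values $k_{min} = 0.2$, $k_{max} = 2$, $d = 1.3$ are taken from later in the article; the function names and the interpretation of Round as rounding down to two decimal places are illustrative assumptions (rounding to a whole number would rule out factors such as 0.2), not the authors' implementation.

```python
import math


def scale_factors(img_w, img_h, nn_w=64, nn_h=64):
    """Expression (1): scale factors that map the image onto the NNM input field.

    Assumption: Round is taken as rounding down to two decimal places.
    """
    kx = math.floor(100 * nn_w / img_w) / 100
    ky = math.floor(100 * nn_h / img_h) / 100
    return kx, ky


def can_scale(img_w, img_h, nn_w=64, nn_h=64, k_min=0.2, k_max=2.0, d=1.3):
    """Expressions (2) and (3): return (kx, ky) if scaling is acceptable, else None."""
    kx, ky = scale_factors(img_w, img_h, nn_w, nn_h)
    # (2): both factors must lie inside [k_min, k_max]
    if not (k_min <= kx <= k_max) or not (k_min <= ky <= k_max):
        return None  # Stop
    # (3): the factors along the two axes must not differ by more than d times
    if kx / ky > d or ky / kx > d:
        return None  # Stop
    return kx, ky
```

For example, a 128×128 image passes with factors (0.5, 0.5), whereas a 3840×2160 frame fails condition (2) because it would have to be reduced far below $k_{min}$.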

$$C = 0.2125R + 0.7154G + 0.0721B \tag{4}$$

$$A = \begin{cases} 1, & C > a,\\ 0, & \text{otherwise} \end{cases} \tag{5}$$

$$a = 0.5N \tag{6}$$

where $C$ is the pixel color in halftone format; $R, G, B$ are the values that define the pixel color in each RGB channel; $a$ is the threshold value; $N$ is the pixel color depth in halftone format; $A$ is the pixel color in binary format.
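Expressions (4)-(6) can be sketched in a few lines of Python. The assumption here is that $N$ is the number of halftone levels (256 for 8-bit images), so the threshold is $a = 128$; images are represented as plain lists of rows of (R, G, B) tuples, and the function names are hypothetical.

```python
def to_halftone(r, g, b):
    """Expression (4): halftone (grayscale) value of an RGB pixel."""
    return 0.2125 * r + 0.7154 * g + 0.0721 * b


def to_binary(c, depth=256):
    """Expressions (5)-(6): 1 if the halftone value exceeds a = 0.5 * N, else 0.

    Assumption: N is the number of halftone levels (256 for 8-bit images).
    """
    a = 0.5 * depth
    return 1 if c > a else 0


def image_to_binary(rgb_rows, depth=256):
    """Apply (4)-(6) to an image given as rows of (R, G, B) tuples."""
    return [[to_binary(to_halftone(*px), depth) for px in row] for row in rgb_rows]
```

Because the three weights in (4) sum to 1, a white pixel (255, 255, 255) keeps the value 255 in halftone format.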

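$$\tilde{C} = C - C_{av} \tag{7}$$

$$\Gamma(x, y) = \sum_{i=-1}^{1} \sum_{j=-1}^{1} I(x + i, y + j)\, V(i, j) \tag{8}$$

$$V = \begin{pmatrix} -2 & 0 & -2\\ 0 & 9 & 0\\ -2 & 0 & -2 \end{pmatrix} \tag{9}$$

where $\tilde{C}$ is the normalized pixel color; $C$ is the original pixel color; $C_{av}$ is the average color value in a given color channel; $\Gamma$ is the processed image; $I$ is the original image; $V$ is the filter, whose element $V(i, j)$ is indexed by the offsets $i, j$ from the central element.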
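A minimal sketch of expressions (7)-(9) on an image stored as a list of rows, assuming the 3×3 filter reads $V = [[-2, 0, -2], [0, 9, 0], [-2, 0, -2]]$ and that border pixels are handled by replicating the nearest edge pixel (the article does not specify border handling). Since the elements of $V$ sum to 1, the filter sharpens detail while leaving areas of constant brightness unchanged.

```python
# Filter V from expression (9); its elements sum to 1,
# so a region of constant brightness is left unchanged.
V = [[-2, 0, -2],
     [0, 9, 0],
     [-2, 0, -2]]


def normalize_channel(channel):
    """Expression (7): subtract the channel's average value from every pixel."""
    values = [v for row in channel for v in row]
    avg = sum(values) / len(values)
    return [[v - avg for v in row] for row in channel]


def apply_filter(image, kernel=V):
    """Expression (8): Gamma(x, y) = sum_i sum_j I(x+i, y+j) * V(i, j), i, j in {-1, 0, 1}.

    Assumption: border pixels are handled by replicating the nearest edge pixel.
    """
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for j in (-1, 0, 1):
                for i in (-1, 0, 1):
                    yy = min(max(y + j, 0), h - 1)  # clamp row index to the image
                    xx = min(max(x + i, 0), w - 1)  # clamp column index to the image
                    acc += image[yy][xx] * kernel[j + 1][i + 1]
            out[y][x] = acc
    return out
```

The filter is symmetric, so it makes no difference here whether (8) is read as correlation or as convolution.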

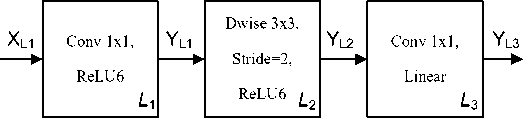

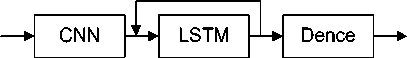

$$q = \begin{cases} r\,\dfrac{1-f}{1+f}, & g \le r,\\[6pt] r\,\dfrac{1+f}{1-f}, & g > r, \end{cases} \tag{10}$$

$$f = \left(\frac{|g - r|}{g + r}\right)^{k} \tag{11}$$

$$k = k_{min} + (k_{max} - k_{min})\,a \tag{12}$$

$$r = \frac{1}{d^{2}} \sum_{i = x - 0.5d}^{x + 0.5d} \ \sum_{j = y - 0.5d}^{y + 0.5d} g(i, j) \tag{13}$$

where $q$ is the adjusted pixel brightness value; $g$ is the initial pixel brightness value ($g(i, j)$ denotes it at pixel $(i, j)$); $r$ is the average brightness of the pixel's neighborhood; $f$ is the nonlinear local contrast enhancement function; $k$ is the contrast gain constant; $k_{max} = 0.9$ and $k_{min} = 0.2$ are the maximum and minimum practical gains; $a = 0.6$ is the adaptation coefficient that takes into account the characteristics of the pixel neighborhood; $d = 20$ is the pixel neighborhood diameter; $x, y$ are the coordinates of the pixel whose contrast is adjusted.

$$W_{m,k}(i) = \frac{1}{N} \sum_{n=0}^{N-1} c(x_n, i)\, \psi^{*}_{m,k}(x_n) \tag{14}$$

$$\psi(x) = \begin{cases} 1, & 0 \le x < 0.5,\\ -1, & 0.5 \le x < 1,\\ 0, & x \notin [0, 1), \end{cases} \tag{15}$$

$$\text{if } w^{(1)}_{m,k} \ge w^{(2)}_{m,k} \Rightarrow w^{(3)}_{m,k} = w^{(1)}_{m,k}, \ \text{else } w^{(3)}_{m,k} = w^{(2)}_{m,k} \tag{16}$$

$$c^{(3)}(x_n, i) = \frac{1}{N} \sum_{m=0}^{N-1} \sum_{k=0}^{N-1} \psi_{m,k}(x_n)\, w^{(3)}_{m,k}(i) \tag{17}$$

where $W$ is the matrix of wavelet coefficients for the i-th color channel of a color image; $N$ is the image width/height; $c(x_n, i)$ is the brightness of the i-th color channel at point $x_n$; $m, k$ are the shift and scale; $x_n$ is the coordinate of the n-th point of the image; $^{*}$ denotes complex conjugation; $\psi$ is the basic Haar wavelet and $\psi_{m,k}$ its shifted and scaled version; $w^{(1)}_{m,k}$, $w^{(2)}_{m,k}$ and $w^{(3)}_{m,k}$ are the m,k-th wavelet coefficients of the first image, the second image and the third (filtered) image, respectively; $c^{(3)}(x_n, i)$ is the restored brightness of the i-th color channel of the filtered image.

Note that work [8] indicates that the model should be improved so that it can determine whether the preprocessing procedure needs to be performed at all. Thus, the results of the analysis indicate a close connection between calls for political extremism in online social networks and the presence of scenes of violence in images and video materials of these networks. They also support the use of neural network tools for recognizing images and video materials, provided these tools are adapted to the conditions of the SN under study.
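The contrast correction (10)-(13) and the blur-leveling fusion (14)-(17) can be sketched as follows. This sketch assumes that (10)-(11) take the form $q = r(1-f)/(1+f)$ for $g \le r$ and $q = r(1+f)/(1-f)$ for $g > r$, with $f = (|g-r|/(g+r))^k$, and uses the parameter values $k_{max} = 0.9$, $k_{min} = 0.2$, $a = 0.6$, $d = 20$ given in the text. For brevity the wavelet part is a swapped-in simplification: a single-level orthonormal 1-D Haar transform applied to one image row, instead of the full decomposition with $1/N$ normalization. Rule (16) is applied literally to signed coefficients; many fusion schemes compare coefficient magnitudes instead. All function names are hypothetical.

```python
import math

K_MIN, K_MAX, A_COEF, D = 0.2, 0.9, 0.6, 20  # parameter values given for (10)-(13)


def contrast_gain(k_min=K_MIN, k_max=K_MAX, a=A_COEF):
    """Expression (12): k = k_min + (k_max - k_min) * a."""
    return k_min + (k_max - k_min) * a


def local_mean(img, x, y, d=D):
    """Expression (13): mean brightness of the d x d neighborhood of (x, y).

    Assumption: the window is clipped at the image border and the sum is
    divided by the number of pixels actually inside it.
    """
    h, w = len(img), len(img[0])
    half = d // 2
    rows = range(max(0, y - half), min(h, y + half + 1))
    cols = range(max(0, x - half), min(w, x + half + 1))
    vals = [img[j][i] for j in rows for i in cols]
    return sum(vals) / len(vals)


def enhance_pixel(g, r, k, max_val=255.0):
    """Expressions (10)-(11): push brightness g away from the local mean r."""
    if g + r == 0:
        return 0.0
    f = (abs(g - r) / (g + r)) ** k  # enhanced local contrast, (11)
    if g <= r:
        q = r * (1 - f) / (1 + f)
    elif f >= 1.0:  # only when r == 0: contrast is already maximal
        q = max_val
    else:
        q = r * (1 + f) / (1 - f)
    return min(max(q, 0.0), max_val)


def haar(signal):
    """One level of the orthonormal Haar transform (even-length signal)."""
    s = math.sqrt(2)
    approx = [(signal[n] + signal[n + 1]) / s for n in range(0, len(signal), 2)]
    detail = [(signal[n] - signal[n + 1]) / s for n in range(0, len(signal), 2)]
    return approx + detail


def inverse_haar(coeffs):
    """Inverse of haar(): restores the signal from its coefficients."""
    half = len(coeffs) // 2
    s = math.sqrt(2)
    out = []
    for a, d in zip(coeffs[:half], coeffs[half:]):
        out += [(a + d) / s, (a - d) / s]
    return out


def fuse_rows(row1, row2):
    """Simplified (14)-(17) for one row: keep the larger coefficient of two frames."""
    w1, w2 = haar(row1), haar(row2)
    w3 = [c1 if c1 >= c2 else c2 for c1, c2 in zip(w1, w2)]  # rule (16)
    return inverse_haar(w3)
```

With these parameters the gain is $k = 0.62$; a pixel equal to its local mean is left unchanged, while darker and brighter pixels are pushed further away from it.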
It should also be noted that at present there is no step-by-step, scientifically grounded description of how to construct neural network tools for sufficiently accurate detection of scenes of political extremism in SN graphic materials under limited computing resources.

Thus, the purpose of this article is to develop a method for constructing neural network tools that, under limited computing resources, provide sufficiently accurate recognition of scenes of political extremism in graphic materials of online social networks, taking into account the variability of the sizes of these materials and the need to level out typical interference. To achieve this goal, it is assumed that one should first develop a neural network model for analyzing graphic resources of online social networks, which will formalize the key aspects and structure the information needed to subsequently create an effective method for recognizing scenes of political extremism. In addition, experimental studies should be carried out to verify the proposed solutions.

3. Development of a Neural Network Model for Analyzing Graphic Resources of Online Social Networks

In general, NNM analysis of SN graphic resources can be described by the following expressions:

$$F_{NN}(GM) \to Y \tag{18}$$

$$GM = \langle \{Im\}, \{Vd\} \rangle \tag{19}$$

$$Y = \langle \{Y_{Im}\}, \{Y_{Vd}\} \rangle \tag{20}$$

where $F_{NN}$ is the neural network recognition function; $GM$ is the graphic material; $Y$ is the NNM output, which signals the presence of political extremism in SN graphic material; $\{Im\}$, $\{Vd\}$ are the sets of pictures and video footage in SN; $\{Y_{Im}\}$, $\{Y_{Vd}\}$ are the sets of NNM output signals indicating the presence of political extremism in SN images and video materials, respectively.

Based on modern advances in neural network analysis of graphic resources [16, 17], it has been determined that the main type of NNM widely used for the analysis of raster images is the convolutional neural network (CNN).
At the same time, the specific features of video materials call for recurrent neural networks (RNN) or for integrating the Attention mechanism into a feedforward neural network or into an RNN. Various modifications of CNN are currently in use, and their characteristics and capabilities differ significantly. At the same time, a methodology for developing an NNM architecture whose parameters allow the problem to be solved most effectively is widely known and well tested [18, 3]. Using this methodology, a mathematical apparatus for choosing the type of CNN has been developed from the standpoint of identifying calls for political extremism in SN images. It is based on the expressions:

$$T_{CNN} = \arg\max_{i = 1, \dots, N} E_i \tag{21}$$

$$E = \{E_1, E_2, \dots, E_N\} \tag{22}$$

$$E_i = a_1 A_1(i) + a_2 A_2(i) + a_3 A_3(i) \tag{23}$$

where $T_{CNN}$ is the most efficient type of CNN; $E$ is the set of integral efficiency indicators of the available CNN types; $E_i$ is the integral efficiency indicator of the i-th CNN type; $N$ is the number of CNN types considered; $A_1(i), A_2(i), A_3(i)$ are the values of the first, second and third performance criteria for the i-th CNN type; $a_1, a_2, a_3$ are the weighting coefficients of the first, second and third performance criteria, respectively. The criterion $A_1(i)$ correlates with recognition accuracy, $A_2(i)$ with the resource intensity of recognition, and $A_3(i)$ with the training time of the i-th CNN type. Based on the results of [8, 19], and since recognizing political extremism can be represented as a task of classifying graphic material, the accuracy criterion was evaluated using the Accuracy indicator:

$$Ac = \frac{N_r}{N} \tag{24}$$

where $N_r$ is the number of correctly recognized examples and $N$ is the total number of examples.
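The selection rule (21)-(23) and the Accuracy indicator (24) reduce to a weighted sum and an argmax. In the sketch below the CNN type names and criterion values used in the usage example are hypothetical placeholders, not results from the article; the equal default weights are also an assumption.

```python
def integral_efficiency(criteria, weights=(1.0, 1.0, 1.0)):
    """Expression (23): E_i = a1*A1(i) + a2*A2(i) + a3*A3(i)."""
    return sum(a * c for a, c in zip(weights, criteria))


def select_cnn_type(candidates, weights=(1.0, 1.0, 1.0)):
    """Expressions (21)-(22): the CNN type with the largest E_i.

    `candidates` maps a type name to its criteria (A1, A2, A3).
    """
    efficiency = {name: integral_efficiency(c, weights) for name, c in candidates.items()}
    return max(efficiency, key=efficiency.get), efficiency


def accuracy(n_correct, n_total):
    """Expression (24): Ac = N_r / N."""
    return n_correct / n_total


# Hypothetical criteria: type_b is slightly less accurate but far lighter.
best, scores = select_cnn_type({"type_a": (0.90, -1.0, -1.0),
                                "type_b": (0.88, -0.1, -0.1)})
```

Here `best` is `"type_b"`: with equal weights, its lower resource and training-time penalties outweigh a two-point accuracy gap, which illustrates how negative $A_2$ and $A_3$ values steer the choice toward lightweight networks.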
Since NNM accuracy is usually assessed on the training, test and validation samples, a slightly modified form of expression (24) is used:

$$Ac_k = \frac{N_{r,k}}{N_k}, \quad k = 1, 2, 3 \tag{25}$$

In expression (25), $k$ is the sample number: $k = 1$ for the training sample, $k = 2$ for the test sample, and $k = 3$ for the validation sample. In accordance with the recommendations [18, 3], the Loss indicator, defined by expression (26), was used as an additional parameter for assessing the criterion $A_1$, which correlates with CNN recognition accuracy; in (26), $N$ is the total number of examples, $Q$ is the number of classes to be recognized, and $y_{nq}$ are the NNM output signals indicating that the n-th example is classified into the q-th class. To adapt the Loss indicator to the type of examples used, expression (26) is modified into expression (27).

Based on theoretical results [20, 21], the efficiency criterion $A_2$, which correlates with the resource intensity of recognition, is evaluated using expressions of the form:

$$A_2 = p\,\bar{N}_w \tag{28}$$

$$\bar{N}_w = \frac{N_w}{N_w^{max}} \tag{29}$$

where $p$ is a given coefficient; $N_w$ is the number of weights in a CNN of the type being evaluated; $\bar{N}_w$ is the normalized number of weights in a CNN of the type being evaluated; $N_w^{max}$ is the maximum number of weights among the evaluated CNN types. When comparing the resource intensity of CNNs, the basic version assumes that $p$ is a constant that is the same for all network types being assessed. Thus, estimating the resource intensity of a particular CNN type reduces to determining its number of weights. Since increasing NNM efficiency is associated with reducing resource intensity, expression (28) uses a negative value of $p$. The performance criterion $A_3$, which correlates with CNN training time, depends directly on the number of NNM weights and on the features of the learning mechanism characteristic of a given NNM type.
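Expressions (25), (28) and (29) can be illustrated with publicly listed model sizes. The parameter counts below are the approximate values (in millions) that the Keras Applications documentation gives for the ImageNet classification variants; GoogleNet and SqueezeNet are not part of Keras Applications and are omitted, ResNet-50 stands in for the unspecified ResNet, and $p = -1$ is an assumed value. The per-sample counts in the usage line are hypothetical.

```python
# Approximate parameter counts (in millions) of the ImageNet classification
# variants listed in the Keras Applications documentation.
KERAS_PARAMS_M = {
    "VGG-16": 138.4,
    "VGG-19": 143.7,
    "Inception-v3": 23.9,
    "ResNet-50": 25.6,
    "MobileNetV2": 3.5,
}


def split_accuracy(correct, totals):
    """Expression (25): Ac_k = N_r,k / N_k for k = 1 (train), 2 (test), 3 (validation)."""
    return {k: correct[k] / totals[k] for k in (1, 2, 3)}


def resource_criterion(n_weights, p=-1.0):
    """Expressions (28)-(29): A2 = p * N_w / N_w_max, with a negative p."""
    n_max = max(n_weights.values())
    return {name: p * n / n_max for name, n in n_weights.items()}


a2 = resource_criterion(KERAS_PARAMS_M)
acc = split_accuracy({1: 90, 2: 40, 3: 45}, {1: 100, 2: 50, 3: 50})  # hypothetical counts
```

The largest network receives $A_2 = p = -1$, and MobileNetV2 receives the value closest to zero, which is why the resource criterion favors it in (23).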
If the same type of training mechanism is used for all networks, expression (28) can also be used to evaluate criterion $A_3$ when comparing NNM types. The set of admissible CNN types was formed from networks that have been tested in neural network image analysis tasks and for which accessible open-source tools exist, which is necessary for use in highly responsible systems that ensure information security. As a result, the set of admissible CNN types includes VGG-16, VGG-19, Inception-v3, GoogleNet, MobileNetV2, SqueezeNet and ResNet:

$$T = \{T_{VGG\text{-}16}, T_{VGG\text{-}19}, T_{Inception\text{-}v3}, T_{GoogleNet}, T_{MobileNetV2}, T_{SqueezeNet}, T_{ResNet}\} \tag{30}$$

Calculations carried out using expressions (21-30) indicate that among the admissible CNN types, MobileNetV2 is the most efficient in detecting political extremism in SN images: its integral efficiency indicator has the maximum value. MobileNetV2 is based on the depthwise separable convolution mechanism [20, 21], which splits a standard convolution into a depthwise convolution, in which each input channel is convolved separately with its own kernel, followed by a 1×1 convolution that combines the channels; the latter is called pointwise convolution. This mechanism reduces the number of computational operations required to calculate the CNN output signal. For a single convolutional layer built on depthwise separable convolution, the coefficient by which the number of computational operations decreases relative to a classical convolutional layer can be calculated as follows:

$$\delta = \frac{d^{2}\, k_{out}}{d^{2} + k_{out}} \tag{31}$$

where $\delta$ is the coefficient of change; $d$ is the convolution kernel size; $k_{out}$ is the number of channels at the output of the layer. A distinctive feature of the MobileNetV2 structure is the presence of modules conventionally called expanding convolutional blocks. The structure of such a block is shown in Fig. 1.

Fig.1. Structure of the expanding convolutional block

Fig. 1 uses the following designations:
• L1, L2, L3 – layers 1, 2 and 3, respectively;
• $X_{L1}$ – input tensor of L1;
• $Y_{L1}$, $Y_{L2}$, $Y_{L3}$ – output tensors of layers L1, L2 and L3, respectively;
• Conv 1x1 – convolution with a 1×1 kernel;
• ReLU6 – activation function given by expression (32);
• Stride=2 – convolution kernel stride of 2;
• Dwise 3x3 – depthwise convolution with a 3×3 kernel, in which each channel is convolved separately.

Information processing in the expanding convolutional block is described by expressions in which the parameter $t$ is the expansion factor, i.e., the ratio of the number of channels after the first 1×1 convolution to the number of input channels of the block. The recommended values of $t$ range from 5 to 10; as a first approximation, $t = 6$ is customarily used. The output tensor of each layer is the input tensor of the next layer; for example, $Y_{L1}$ is the input tensor of layer L2, and the output tensor of layer L3 is the output tensor of the whole module (expanding convolutional block). Note that in the terminology of classical neural networks, the input tensor is associated with the input information of the network or of a separate layer, and the output tensor with its output information. In CNN terminology, the input tensor is associated with the image fed to the input of the network, a convolutional layer, or a scaling layer, and the output tensor with the output of a convolutional layer, a scaling layer, or the entire network.

The next stage of research was devoted to determining the most effective type of RNN for recognizing calls for political extremism in SN video materials. Based on the results of [22, 19, 3] and on practical experience, it has been determined that RNNs based on LSTM cells and GRU cells are currently considered the most effective.
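Returning to the MobileNetV2 discussion above, a short sketch can make expression (31) and the expanding convolutional block concrete. Expression (31) follows from comparing $d^2 k_{in} k_{out}$ multiplications per output position for a standard convolution with $d^2 k_{in} + k_{in} k_{out}$ for its depthwise separable replacement, so $k_{in}$ cancels out. The shape helper assumes "same" padding and, following the original MobileNetV2 design, a linear (activation-free) final 1×1 projection; the function names and example channel counts are illustrative.

```python
def separable_reduction(d, k_out):
    """Expression (31): how many times fewer multiplications a depthwise separable
    layer needs than a standard d x d convolution with k_out output channels."""
    return (d * d * k_out) / (d * d + k_out)


def relu6(x):
    """ReLU6 activation used in MobileNetV2: min(max(x, 0), 6)."""
    return min(max(x, 0.0), 6.0)


def expanding_block_shapes(h, w, k_in, k_out, t=6, stride=1):
    """Tensor shapes (height, width, channels) through an expanding block:
    L1: 1x1 convolution that expands k_in channels t times (ReLU6);
    L2: 3x3 depthwise convolution with the given stride (ReLU6);
    L3: 1x1 convolution that projects to k_out channels (no activation).
    Assumption: 'same' padding, so only the stride changes the spatial size.
    """
    y1 = (h, w, t * k_in)
    h2, w2 = -(-h // stride), -(-w // stride)  # ceiling division
    y2 = (h2, w2, t * k_in)
    y3 = (h2, w2, k_out)
    return y1, y2, y3
```

For a 3×3 kernel and 1280 output channels, (31) gives a reduction of about 8.9 times, so for large $k_{out}$ the gain approaches $d^2 = 9$.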
Classical RNNs such as bidirectional associative memory and Hopfield, Hamming, Jordan and Elman networks are rightly considered obsolete because they do not adequately capture the long-term dependencies characteristic of neural network analysis of video materials. At the same time, theoretical studies of LSTM and GRU networks offer no analytical tool for assessing their comparative effectiveness; they only indicate that an LSTM network can remember longer data sequences than a GRU network but is more resource-intensive. Nor does the available scientific and practical literature provide an analytical tool for determining the optimal number of LSTM/GRU cells and LSTM/GRU layers in the network. Therefore, experimental studies are required to determine the network type, the number of LSTM/GRU layers and the number of LSTM/GRU cells in each layer, i.e., the type and parameters of the RNN that most effectively recognizes political extremism in video materials of common SNs. Also, based on the results of [3], the premise is accepted that it is feasible to use an integral NNM capable of analyzing both static images and video materials. From the standpoint of developing universal tools for recognizing political extremism, such an NNM should be adapted to the specifics of how images and video materials are presented in at least the most common SNs. First of all, the NNM must be adapted to the variability of image and video sizes. Taking into account possible limitations on computing resources and the possibility of effectively scaling the input image or video, the basic version uses an NNM input field of 64×64. The CNN is used without its output layer, so the signals of its last convolutional layer are fed to the input of the RNN.
In accordance with the recommendations [16, 18], the NNM is supplemented with fully connected layers of neurons, which increase recognition accuracy. The structure of the integral recognition NNM with an added block of one or more fully connected layers is shown in Fig. 2, where this block is labeled Dense.

Fig.2. Structure of the basic integrated neural network model for recognizing images and video materials, supplemented with a block of fully connected layers

The number of fully connected layers and the number of neurons in each of them should be determined experimentally, since no reliable analytical tool for determining these quantities is publicly available today. Replacing the generic convolutional network in Fig. 2 with an adapted MobileNetV2-type NNM yields the general structure of an NNM for recognizing political extremism in SN graphic objects, shown in Fig. 3. Note that this structure of the basic integral recognition NNM based on MobileNetV2 was rendered using the built-in tools of the TensorFlow library. Therefore, Fig. 3 shows elements that are usually omitted from the traditional presentation of an NNM structure. For example, Fig. 3 shows the dropout_5 module, which indicates that during NNM training the dropout mechanism is applied to the output of the fully connected layer dense_3. Unlike a classic CNN, the developed model receives 16 frames of a video stream at its input. The main parameters of the developed neural network model are presented in Table 1.
The constructed neural network model has a total of 3,637,090 parameters (Table 1), of which 576,448 are fixed when the model architecture is constructed and 3,060,642 are weighting coefficients determined during network training. The basic version of the model does not include the Attention mechanism: theoretical studies show that it can be used both in the module corresponding to the convolutional neural network and in the module corresponding to the recurrent network, but the available literature contains no tested formalized solutions on whether it is advisable to incorporate it into the structure of an integrated neural network model. Therefore, the Attention mechanism lies beyond the basic integrated model and can be justified as a modification of it. Modified versions of the proposed integral model should also make it possible to adapt the 64×64 input field of the convolutional module to the variability of the sizes of the analyzed graphic materials, using a proven uniform scaling mechanism in the range from 0.2 to 2 and a proven non-uniform scaling mechanism that allows the proportions of graphic materials to be changed by approximately 20-30%. The development of the neural network model made it possible to move on to determining the stages of a method for constructing neural network tools designed to recognize scenes of political extremism in SN graphic materials.
Fig.3. Basic integrated neural network recognition model based on MobileNetV2 (layer diagram rendered with TensorFlow: input of shape (None, 16, 64, 64, 3), i.e. 16 frames of 64×64×3; TimeDistributed MobileNetV2 (mobilenetv2_1.00_224); TimeDistributed Flatten; Bidirectional LSTM; fully connected layers with dropout; see Table 1)

Table 1. The main design parameters of the recurrent and fully connected modules of the neural network model for detecting political extremism in graphic materials of online social networks

| Layer (type) | Output Shape | Param # |
|---|---|---|
| time_distributed (TimeDistributed) | (None, 16, 2, 2, 1280) | 2257984 |
| dropout (Dropout) | (None, 16, 2, 2, 1280) | 0 |
| time_distributed_1 (TimeDistributed) | (None, 16, 5120) | 0 |
| bidirectional (Bidirectional) | (None, 64) | 1319168 |
| dropout_1 (Dropout) | (None, 64) | 0 |
| dense (Dense) | (None, 256) | 16640 |
| dropout_2 (Dropout) | (None, 256) | 0 |
| dense_1 (Dense) | (None, 128) | 32896 |
| dropout_3 (Dropout) | (None, 128) | 0 |
| dense_2 (Dense) | (None, 64) | 8256 |
| dropout_4 (Dropout) | (None, 64) | 0 |
| dense_3 (Dense) | (None, 32) | 2080 |
| dropout_5 (Dropout) | (None, 32) | 0 |
| dense_4 (Dense) | (None, 2) | 66 |
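The Param # column of Table 1 can be checked with the standard parameter formulas for fully connected and LSTM layers. The sketch below takes the MobileNetV2 count directly from the table and infers, as an assumption not stated in the article, that the bidirectional layer has 32 LSTM units per direction (its output has 64 = 2 × 32 values); the helper names are hypothetical.

```python
def dense_params(n_in, n_out):
    """Weights plus biases of a fully connected layer."""
    return n_in * n_out + n_out


def lstm_params(n_in, units):
    """Four gates, each with input weights, recurrent weights and a bias."""
    return 4 * (units * (n_in + units) + units)


MOBILENETV2_PARAMS = 2257984  # taken from Table 1 (time_distributed row)
FEATURES = 2 * 2 * 1280       # flattened per-frame output of MobileNetV2
LSTM_UNITS = 32               # inferred: Bidirectional output has 64 = 2 * 32 values

layers = {
    "bidirectional": 2 * lstm_params(FEATURES, LSTM_UNITS),  # forward + backward LSTM
    "dense": dense_params(64, 256),
    "dense_1": dense_params(256, 128),
    "dense_2": dense_params(128, 64),
    "dense_3": dense_params(64, 32),
    "dense_4": dense_params(32, 2),
}
total = MOBILENETV2_PARAMS + sum(layers.values())
```

The computed rows match the table, and `total` equals 3,637,090, which shows that this figure includes the MobileNetV2 module as well as the recurrent and fully connected ones. The bidirectional LSTM alone accounts for about 36% of all parameters, because it receives 5120 features per frame.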

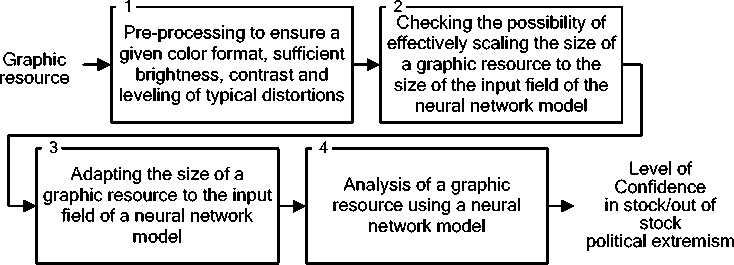

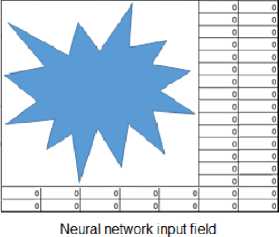

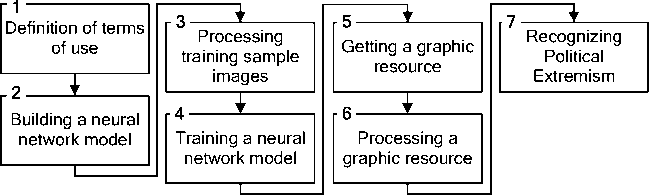

4. Development of a Method for Constructing Neural Network Tools for Recognizing Scenes of Political Extremism in Graphic Materials of Online Social Networks

Taking into account the recommendations [22, 3], it is assumed that, in addition to the developed neural network model, the method uses the SN image preprocessing model proposed in [8]. In addition, the method should take into account the need to adapt the NNM input field to the variability in the size of SN graphic resources. Under these prerequisites, information processing by the proposed method can be represented analytically by the expressions:

$$\langle GM, M, D \rangle \to Y_{GM} \tag{37}$$

$$\langle G, N, Q, DS, Z, A \rangle \to D \tag{38}$$

where $GM$ is a tuple of parameter values of the analyzed SN graphic resource; $M$ is a tuple of parameter values of the graphic resource preprocessing model; $D$ is a tuple of parameter values of the NNM used; $Y_{GM}$ is the set of results of neural network analysis of graphic resources; $G$ is a tuple of parameter values of graphic resources of the analyzed SN; $N$ is a tuple of parameter values of available NNM types; $Q$ is a tuple of conditions characterizing the recognition process; $DS$ is the set of NNM training examples; $Z$ is the set of expert data used to build the NNM; $A$ is the set of NNM performance criteria. Expression (37) describes the recognition of graphic resources of a particular SN, and expression (38) describes the construction of recognition tools, which includes a procedure for preprocessing graphic resources and a procedure for constructing the NNM. The components of $GM$ are determined by expression (19), and the components of $M$ by expressions (1-17). In the base case, $Y_{GM}$ can be identified with the output signal of the NNM.
As a first approximation, the set of NNM effectiveness criteria $A$ consists of the criteria $A_1, A_2, A_3$ defined in Section 3. The components of (37, 38) are detailed by expressions (39-44):

$$G = \langle C, S \rangle \tag{39}$$

$$S = \langle s_1, s_2, s_3 \rangle \tag{40}$$

where $C$ is the graphic format of GM; $S$ is the size of GM; $s_1$ is the width of GM; $s_2$ is the height of GM; $s_3$ is the number of frames of GM. For $GM = Im$, $s_3 = 1$.

$$N = \langle T, R_T \rangle \tag{41}$$

where $T$ is the set of admissible CNN types defined by expression (30); $R_T$ is the set of parameters of the components of $T$.

$$Q = \langle Q_1, Q_2 \rangle \tag{42}$$

$$Q_1 = \langle N_R, N_A \rangle \tag{43}$$

$$Q_2 = \langle s_{1,min}, s_{2,min}, s_{1,max}, s_{2,max}, C_{GM} \rangle \tag{44}$$

where $Q_1$ is the set of requirements for recognition tools; $Q_2$ is the set of parameters of the analyzed materials; $N_R$ is the acceptable resource consumption of recognition tools; $N_A$ is the acceptable recognition accuracy; $s_{1,min}, s_{2,min}$ are the minimum possible width and height of graphic resources of the analyzed SN; $s_{1,max}, s_{2,max}$ are the maximum possible width and height of graphic resources; $C_{GM}$ is the set of possible graphic resource formats within the analyzed SN.

The diagram of the proposed procedure for detecting political extremism in SN graphic resources is shown in Fig. 4.

Fig.4. Scheme of the procedure for detecting political extremism

When implementing the stages of this detection procedure, solutions obtained during the development of the preprocessing model of SN graphic resources [8, 23] are used. Thus, when preprocessing a graphic resource, the following are used:
• to obtain a given color format with normalized values in each color channel – expressions (4-6);
• to correct the brightness of color channels – expressions (7-9);
• to correct contrast – expressions (10-13), with $k_{max} = 0.9$, $k_{min} = 0.2$, $a = 0.6$, $d = 20$;
• to correct typical distortions – expressions (14-17).
In addition, to implement the typical noise-leveling procedure provided for in the graphic resource processing model, it is proposed to use the image quality assessment mechanism of [8, 23], which relies on a no-reference quality measure. A no-reference measure is needed because the reference image is usually unavailable, both when creating a database of training examples and during the neural network analysis of graphic resources of online social networks. To determine image quality, the results of [12, 14] were used, in which the so-called Tenengrad method was proposed. It offers sufficient accuracy with a small amount of computation, which allows it to be used online in systems for detecting political extremism in online social networks. The Tenengrad method is based on the squared brightness gradient of the pixels of a grayscale image, so the analyzed image must first be converted to grayscale. The method is described by the following expressions:

$$S_{S1}(x,y) = \begin{bmatrix} 1 & 0 & -1 \\ 2 & 0 & -2 \\ 1 & 0 & -1 \end{bmatrix} * A(x,y) \qquad (45)$$

$$S_{S2}(x,y) = \begin{bmatrix} 1 & 2 & 1 \\ 0 & 0 & 0 \\ -1 & -2 & -1 \end{bmatrix} * A(x,y) \qquad (46)$$

$$S = \sum_{x=1}^{s_1} \sum_{y=1}^{s_2} \left( S_{S1}(x,y)^2 + S_{S2}(x,y)^2 \right) \qquad (47)$$

where A(x,y) is the pixel intensity at the point with coordinates x, y; $s_1$, $s_2$ are the image width and height; * is the convolution operator. Brightness and contrast correction and the leveling of typical distortions are performed when the value of S is below a certain threshold. In accordance with [12, 22, 13], in the basic version this threshold can be taken equal to 150. Note that traditional preprocessing of graphic materials before they are fed to a neural network usually does not automatically compare the preprocessing parameters with the acceptable values that determine image quality.
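The Tenengrad check of (45)-(47) can be sketched in NumPy as follows. This is an illustrative sketch, not the authors' implementation. It assumes the image is already a 2-D grayscale array and skips the one-pixel border, since the source does not specify border handling. It also offers an optional normalization by the number of evaluated pixels, because the source does not state whether the threshold of 150 refers to the sum in (47) or to a mean; `needs_correction` defaults to the normalized score, which is likewise an assumption.

```python
import numpy as np

# Sobel kernels of expressions (45) and (46).
SOBEL_1 = np.array([[1, 0, -1], [2, 0, -2], [1, 0, -1]], dtype=float)
SOBEL_2 = np.array([[1, 2, 1], [0, 0, 0], [-1, -2, -1]], dtype=float)


def filter3x3(img, kernel):
    """Apply a 3x3 kernel over interior pixels only (no padding).

    This computes cross-correlation; true convolution flips the kernel,
    which only changes the sign of the Sobel response and therefore does
    not affect the squared terms in (47).
    """
    h, w = img.shape
    out = np.zeros((h - 2, w - 2))
    for i in range(3):
        for j in range(3):
            out += kernel[i, j] * img[i:i + h - 2, j:j + w - 2]
    return out


def tenengrad(gray, normalize=False):
    """Tenengrad score S of (47) for a 2-D grayscale array.

    normalize=False returns the sum in (47); normalize=True returns the
    mean over the evaluated (interior) pixels.
    """
    gray = np.asarray(gray, dtype=float)
    energy = filter3x3(gray, SOBEL_1) ** 2 + filter3x3(gray, SOBEL_2) ** 2
    return float(energy.mean() if normalize else energy.sum())


def needs_correction(gray, threshold=150.0, normalize=True):
    """True if brightness/contrast/blur correction should run (S below threshold)."""
    return tenengrad(gray, normalize) < threshold
```

A uniform image has zero gradient everywhere, so its score is 0 and it is always flagged for correction, while an image with a sharp edge produces a large score.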
Thus, with traditional preprocessing, the preprocessing parameters may be set regardless of the actual quality of the input image, which can subsequently lead to incorrect neural network recognition results. According to the diagram shown in Fig. 4, the next stage of the procedure for detecting political extremism is adapting the size of the graphic resource to the input field of the NNM. The proposed adaptation mechanism first checks whether effective size adaptation is possible, using expressions (1-3) with $k_{max} = 2$, $k_{min} = 0.2$, $d = 1.3$. If the check is positive and the size of the NNM input field differs from the size of the graphic resource, the well-proven bicubic interpolation method is used for scaling [15]. If, after scaling with $k_{max}$, the graphic resource is still smaller than the input field, the scaled resource is placed in the upper left corner of the input field and the rest of the input field is padded with zeros. If, after scaling with $k_{min}$, the graphic resource is still larger than the input field, the possibility of an effective neural network analysis of individual parts of the resource should be considered. If an effective neural network analysis of individual parts of the reduced graphic resource is impossible, the size of the NNM input field must be changed. Fig. 5-a illustrates size adaptation when effective scaling is possible, and Fig. 5-b illustrates it when effective scaling is impossible. The left side of Fig. 5 shows a graphic resource that has to be adapted to the dimensions of the NNM input field.
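A minimal sketch of the size-adaptation rule is given below. It assumes a single-channel or multi-channel NumPy array and an aspect-ratio-preserving scale factor. Expressions (1-3) and the parameter d are not reproduced in this section, so the sketch only caps the scale factor at $k_{max}$ and rejects resources that would need a factor below $k_{min}$. The bicubic resampler uses the Keys cubic convolution kernel with a = -0.5, a common choice that is an assumption here, and all function names are hypothetical.

```python
import numpy as np


def cubic_kernel(t, a=-0.5):
    """Keys cubic convolution kernel; a = -0.5 is an assumed, commonly used value."""
    t = np.abs(t)
    near = (a + 2) * t**3 - (a + 3) * t**2 + 1
    far = a * t**3 - 5 * a * t**2 + 8 * a * t - 4 * a
    return np.where(t <= 1, near, np.where(t < 2, far, 0.0))


def resize_axis(img, new_len, axis):
    """Bicubic resampling of img along one axis, clamping indices at the edges."""
    old_len = img.shape[axis]
    # Input coordinate of each output pixel centre.
    x = (np.arange(new_len) + 0.5) * old_len / new_len - 0.5
    base = np.floor(x).astype(int)
    shape = [1] * img.ndim
    shape[axis] = new_len
    out = np.zeros(img.shape[:axis] + (new_len,) + img.shape[axis + 1:])
    for offset in range(-1, 3):  # the four neighbouring samples
        idx = np.clip(base + offset, 0, old_len - 1)
        w = cubic_kernel(x - (base + offset)).reshape(shape)
        out += np.take(img, idx, axis=axis) * w
    return out


def bicubic_resize(img, new_h, new_w):
    """Separable bicubic resize: rows first, then columns."""
    return resize_axis(resize_axis(np.asarray(img, dtype=float), new_h, 0), new_w, 1)


def adapt_to_input_field(img, field_h, field_w, k_min=0.2, k_max=2.0):
    """Scale img into a zero-filled field_h x field_w input field (hypothetical helper).

    Returns (field, k). Raises ValueError when even k_min would not make the
    resource fit, i.e. when part-wise analysis or a larger input field is needed.
    """
    h, w = img.shape[:2]
    k = min(field_h / h, field_w / w)  # largest aspect-preserving factor that fits
    if k < k_min:
        raise ValueError("resource does not fit the input field even at k_min")
    k = min(k, k_max)                  # do not enlarge beyond k_max
    new_h, new_w = max(1, round(h * k)), max(1, round(w * k))
    scaled = bicubic_resize(img, new_h, new_w)
    field = np.zeros((field_h, field_w) + img.shape[2:])
    field[:new_h, :new_w] = scaled     # upper-left corner; the rest stays zero
    return field, k
```

For example, a 10x10 resource and a 128x128 input field would need a factor of 12.8, so the sketch enlarges it only to 20x20 and leaves the rest of the field zero, which corresponds to the case of Fig. 5-b.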
In the case illustrated in Fig. 5-a, the dimensions must be doubled, and in the case illustrated in Fig. 5-b, they must be increased more than twofold. Consequently, in the first case the scaled graphic resource is fed to the NNM input, and in the second case the dimensions are increased by the maximum admissible factor and the rest of the NNM input field is filled with zeros.

Fig. 5. Illustration of the process of adapting the dimensions of a graphic resource to the neural network input field: (a) if effective scaling is possible; (b) if effective scaling is not possible

The diagram of the NNM construction procedure, formed in accordance with expression (38) and taking into account the results of [5, 9], is shown in Fig. 6. In accordance with the results of [16, 18], the following NNM parameters should be determined at the stage of computer experiments:
• the number of convolutional layers, $K_C$;
• the number of feature maps in each convolutional layer, $K_S(k_1)$, $k_1 \in [1; K_C]$;
• the recurrent cell type, $T_{RNN} \in \{LSTM, GRU\}$;
• the number of recurrent layers, $K_{RNN}$;
• the number of recurrent cells in each recurrent layer, $K_T(k_2)$, $k_2 \in [1; K_{RNN}]$;
• the feasibility of using the Attention mechanism in the NNM CNN module, $A_{CNN}$: if the mechanism is advisable, $A_{CNN} = 1$, otherwise $A_{CNN} = 0$;
• the feasibility of using the Attention mechanism in the NNM RNN module, $A_{RNN}$: if the mechanism is advisable, $A_{RNN} = 1$, otherwise $A_{RNN} = 0$;
• the type of Attention mechanism in the NNM CNN module, $T_{ACNN}$;
• the type of Attention mechanism in the NNM RNN module, $T_{ARNN}$;
• the number of fully connected layers, $K_D$;
• the number of neurons in each fully connected layer, $K_N(k_3)$, $k_3 \in [1; K_D]$.

The optimal values of the NNM design parameters are determined by the expression:

$$\begin{cases} A_1\left(K_C, K_S(k_1), T_{RNN}, K_{RNN}, K_T(k_2), A_{CNN}, A_{RNN}, T_{ACNN}, T_{ARNN}, K_D, K_N(k_3)\right) \rightarrow \max, \\ A_2 \le A_{max}, \end{cases} \qquad (48)$$

where $A_1$ is the recognition accuracy; $A_2$ is the resource intensity of the neural network model; $A_{max}$ is the maximum permissible resource intensity.
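Expression (48) is a constrained maximization. Once candidate configurations have been trained and measured, the selection step reduces to the filter-then-argmax sketched below. The `Candidate` container and its field names are hypothetical, resource intensity is assumed to be measured as a number of weights (as in Table 2), and the way candidate configurations are generated (the procedure of [10, 16, 18]) is outside the sketch.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass
class Candidate:
    """One trained and evaluated NNM configuration (hypothetical container)."""
    config: dict      # design parameters of (48), e.g. {"T_RNN": "LSTM", "K_T": 128}
    accuracy: float   # A1, e.g. accuracy on the validation set
    resource: float   # A2, e.g. number of weighting coefficients


def select_optimal(candidates: Iterable[Candidate], a_max: float) -> Optional[Candidate]:
    """Expression (48): maximize A1 subject to A2 <= A_max.

    Returns None when no candidate satisfies the resource constraint.
    """
    feasible = [c for c in candidates if c.resource <= a_max]
    return max(feasible, key=lambda c: c.accuracy, default=None)
```

Filtering before maximizing keeps the constraint hard: a more accurate configuration that exceeds $A_{max}$ is never chosen, however small the excess.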
Fig. 6. Scheme of the procedure for constructing a neural network model (input: conditions for using the neural network model; stages: 1) determining the possibility of effectively scaling the training sample images to the size of the graphic materials of the online social network; 2) setting the dimensions of the input field of the neural network model equal to the scaled dimensions of the graphic materials of the online social network; 3) adapting the size of the training sample images to the size of the input field of the neural network model; 4) adapting the parameters of the MobileNetV2 convolutional neural network to the specified input field sizes; 5) conducting computer experiments to determine the optimal parameters of the neural network model; 6) training the neural network model on the training sample examples with changed image sizes; output: neural network model parameters)

The type of Attention mechanism determines how the importance weight matrix is generated [9]. In the base case, two types of Attention mechanism can be used: self-attention and multi-head attention (MultiHead) [22, 19].

The implementation diagram of the developed method for detecting political extremism in images and video materials of online social networks is shown in Fig. 7. The scheme was developed taking into account:
• expressions (37-44), which analytically describe the information processing in this method;
• expressions (45-47), which are used to determine the need for image preprocessing;
• expression (48), which is used to determine the optimal values of the NNM design parameters;
• the developed procedures for detecting political extremism, adapting the size of the graphic resource and constructing the NNM, illustrated in Fig. 4, Fig. 5 and Fig. 6.

Fig. 7. Scheme of implementation of the developed method for detecting political extremism in images and video materials of online social networks

As shown in Fig. 7, implementation of the method involves 7 stages.

Stage 1.
Determination of the conditions of use. This stage consists of an expert and instrumental assessment of the online social network under study and of the resources allocated for creating the recognition system, in order to form: the tuple of parameter values of the graphic resources of the analyzed online social network (G); the tuple of parameter values of the available types of neural network models (N); the tuple of conditions characterizing the recognition process (Q); the set of training examples of the neural network model (DS); the set of expert data used to build neural network models (Z); the set of performance criteria of neural network models (A); the tuple of parameter values of the graphic resource preprocessing model (M).

Stage 2. Building the neural network model. The input of stage 2 is G, N, Q, DS, Z, A, determined at stage 1. The stage is implemented on the basis of the developed neural network model for analyzing graphic resources of online social networks, in accordance with stages 1-5 of the procedure for constructing such a model (Fig. 6). The output of stage 2 is the architectural parameters of the neural network model adapted to the task of detecting political extremism in the specified online social network (D).

Stage 3. Processing the training sample images. The input of stage 3 is the following, determined at stage 1: , M; as well as the size ($s_1 \times s_2$) and color format (typecolor) of the input field of the neural network model. Processing adapts the color format and size of the training sample images to the input field of the neural network model. Color format conversion is to be implemented with publicly available codecs, and size adaptation on the basis of the proposed model for preprocessing graphic resources, following the proposed procedure for adapting the size of a graphic resource illustrated in Fig. 5.
The output of stage 3 is the set of processed training examples (DS).

Stage 4. Training the neural network model. The input of stage 4 is DS, determined at stage 3; D, determined at stage 2; as well as the allocated resources ($r_{ir} \in Q$) and the acceptable training time of the neural network model ($t_{lr} \in Q$), determined at stage 1. Based on the results of [18, 11], and in accordance with the 6th stage of the developed procedure for constructing a neural network model (Fig. 6), training uses the error backpropagation algorithm with mini-batches. The output of stage 4 is the tuple of parameter values of the neural network model used (D).

Stage 5. Obtaining a graphic resource for analysis. The input of stage 5 is G, determined at stage 1, and $O_{GM}$, the object whose parameters are subject to neural network analysis. The source of this object is assumed to be the analyzed online social network. Well-known parsers can be used to retrieve the object from the social network and to determine, based on the parameter values G, the parameters to be analyzed. The output of stage 5 is GM, the tuple of parameter values of the analyzed graphic resource of the online social network.

Stage 6. Processing the graphic resource. The input of stage 6 is M, D and GM, obtained at stages 1, 4 and 5. Processing is based on the developed model for processing graphic resources of online social networks, in accordance with stages 1-3 of the developed procedure for detecting political extremism, whose diagram is shown in Fig. 4. The output of stage 6 is GM, the tuple of parameters of the processed graphic resource subject to neural network analysis.

Stage 7. Recognizing political extremism. The input of stage 7 is D and GM, determined at stages 4 and 6.
In accordance with the developed procedure for detecting political extremism, this stage consists of determining the output signal of the developed neural network model for analyzing graphic resources of online social networks. The output of stage 7, which is also the output of the method, is the set $Y_{GM}$ of output signals of the neural network model indicating the presence of political extremism in the analyzed graphic resource of the online social network.

In general, the results of detecting political extremism in graphic materials of online social networks obtained with the proposed method can be included in an integral assessment of the presence of political extremism in online social network resources using expressions of the form:

$$Y_{\Sigma} = k_T Y_T + k_{GM} Y_{GM} \qquad (49)$$

$$k_T(i) + k_{GM}(i) = 1, \quad i = 1, \dots, N \qquad (50)$$

where $Y_{\Sigma}$ is the set of integral assessments of the presence/absence of political extremism; $Y_T$, $Y_{GM}$ are the sets of assessments of the presence/absence of political extremism in text and graphic resources, respectively; $k_T$, $k_{GM}$ are the sets of weighting coefficients; N is the number of elements of the set of weighting coefficients. Since the research task involves only the detection of political extremism, in the base case the assessment of its presence can be related to the assessment of its absence by the expression:

$$Y_1 = 1 - Y_2 \qquad (51)$$

where $Y_1$, $Y_2$ are the assessments of the presence and absence of political extremism. This assumption allows scalar estimates to be used in expressions (49, 50):

$$Y_{\Sigma} = k_T Y_T + k_{GM} Y_{GM} \qquad (52)$$

$$k_T + k_{GM} = 1 \qquad (53)$$

where $Y_{\Sigma}$, $Y_T$, $Y_{GM}$ are the integral assessment of the presence of political extremism and its assessments in text and in graphic materials, respectively.
Using the results of [16, 11], it was determined that, for the constructed neural network model trained on insufficiently complete data sets, the weighting coefficients can be taken equal to $k_T = 0.7$, $k_{GM} = 0.3$. Substituting these values into (52) gives:

$$Y_{\Sigma} = 0.7\,Y_T + 0.3\,Y_{GM} \qquad (54)$$

In the initial case, this expression can be used to calculate the integral assessment of the detection of political extremism in online social network resources.
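The integral assessment (49)-(50), and its scalar form (52)-(54), is a convex combination of the text and graphic scores. Below is a small NumPy sketch with a hypothetical function name. It works both for scalar scores and element-wise for sets of scores with per-element weights $k_T(i)$, and derives $k_{GM} = 1 - k_T$ so that (50) and (53) hold by construction.

```python
import numpy as np


def integral_assessment(y_text, y_graphic, k_text=0.7):
    """Y_sigma = k_T * Y_T + k_GM * Y_GM with k_GM = 1 - k_T, per (49)-(54).

    Inputs may be scalars or arrays; k_text may itself be an array of
    per-element weights k_T(i). The default 0.7 is the value used in (54).
    """
    y_text = np.asarray(y_text, dtype=float)
    y_graphic = np.asarray(y_graphic, dtype=float)
    k_text = np.asarray(k_text, dtype=float)
    if np.any((k_text < 0) | (k_text > 1)):
        raise ValueError("weights must lie in [0, 1]")
    return k_text * y_text + (1.0 - k_text) * y_graphic


if __name__ == "__main__":
    print(float(integral_assessment(0.9, 0.4)))  # approximately 0.75
```

With the weights of (54), a text score $Y_T = 0.9$ and a graphic score $Y_{GM} = 0.4$ give $Y_{\Sigma} = 0.63 + 0.12 = 0.75$.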

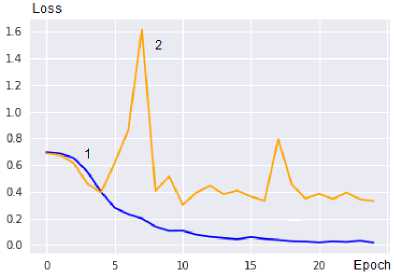

5. Experimental Verification of a Method for Constructing Neural Network Tools for Detecting Scenes of Political Extremism in Graphic Materials of Online Social Networks

In accordance with the results of [24, 16, 18], the main goal of the experimental research was to confirm the theoretical results on adapting the basic neural network model to the conditions of analyzing graphic resources of the online social network under study. The effectiveness of the model for preprocessing images of online social networks, used in the third and sixth stages of the method, was theoretically justified and experimentally confirmed in [8]. For this purpose, an experimental software and hardware complex was developed that implements neural network analysis of graphic resources and allows the design parameters of the basic neural network model, whose structure is shown in Fig. 3, to be changed. The software is written in Python using the TensorFlow library and is designed to run in the Google Colab environment, which provides free access to cloud computing. Based on the thesis [25, 8, 26] of a close relationship between political extremism and scenes of violence in graphic content, the network was trained on the public Real Life Violence Situations Dataset [27], formed from YouTube videos and containing 1000 examples with scenes of violence and 1000 examples without them. The training sample was supplemented with 100 videos labeled by experts as scenes of political extremism and 100 videos of team sports. This yielded a balanced training sample of 1100 examples for each of the two recognized classes, which makes it possible to evaluate the accuracy of the neural network model with two indicators, Accuracy and Loss.
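For a balanced two-class sample such as this one, Accuracy and Loss can be computed as sketched below. The source does not name the loss function; binary cross-entropy, the usual choice for a two-class model with a sigmoid output, is assumed here, together with a 0.5 decision threshold. Both function names are illustrative.

```python
import numpy as np


def accuracy(y_true, p_pred, threshold=0.5):
    """Share of examples whose thresholded prediction matches the 0/1 label."""
    y_true = np.asarray(y_true)
    predicted = (np.asarray(p_pred) >= threshold).astype(int)
    return float(np.mean(predicted == y_true))


def binary_cross_entropy(y_true, p_pred, eps=1e-7):
    """Mean binary cross-entropy; predictions are clipped to avoid log(0)."""
    y = np.asarray(y_true, dtype=float)
    p = np.clip(np.asarray(p_pred, dtype=float), eps, 1 - eps)
    return float(-np.mean(y * np.log(p) + (1 - y) * np.log(1 - p)))
```

Because the sample has 1100 examples per class, a model that always predicts the same class would score an Accuracy of exactly 0.5. This gives a natural lower reference point for the reported values.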
Computer experiments were carried out in which the Accuracy and Loss indicators were determined for:
• the optimized neural network model built using the proposed method for constructing neural network tools for detecting scenes of political extremism in graphic materials of online social networks;
• the basic neural network model based on MobileNetV2;
• neural network models built on the basis of convolutional neural networks selected from the set (30).

The neural network model is constructed in accordance with the second stage of the proposed method for constructing neural network recognition tools, which involves computer experiments to determine the optimal values of the design parameters of the basic NNM whose structure is shown in Fig. 3. The experiments are governed by the determination of the optimal values of the design parameters of the basic NNM specified in expression (48), using the method for determining these values proposed in [10, 16, 18]. The experiments showed that the main differences between the optimized neural network model with a 1024x1024 input field and the basic version are an increase in the number of LSTM cells in each LSTM layer from 32 to 128 and a doubling of the number of neurons in each fully connected layer. The parameters of the convolutional module of the neural network model are determined by the need to process a 1024x1024 input image, which, with a 2x2 convolution kernel, entails a corresponding change in the size of the feature maps; in the last convolutional layer the feature map size is 32x32. The main results of these experiments are shown in Fig. 8 and Table 2. As Fig. 8 shows, after 25 training epochs the recognition accuracy of the optimized MobileNetV2-based model is about 0.99 on the training data and about 0.94 on the validation data.
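As a worked check of the feature-map size, assume that the convolutional module halves the spatial resolution five times. This corresponds to the overall stride of 32 of the standard MobileNetV2 backbone, whose 224x224 input yields a 7x7 final feature map:

$$\frac{1024}{2^5} = \frac{1024}{32} = 32 \quad\Rightarrow\quad 1024 \times 1024 \;\rightarrow\; 32 \times 32$$

Under this assumption, the 32x32 feature map reported for the last convolutional layer follows directly from the 1024x1024 input field.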
Fig. 8. Graphs of the dependence of the Accuracy and Loss indicators on training epochs for training (1) and validation (2) data for the optimized neural network model: (a) dependence of Accuracy on training epochs; (b) dependence of Loss on training epochs

Analysis of these graphs shows a synchronous stabilization of the Accuracy and Loss indicators on the validation set at 22-25 training epochs. Together with a validation accuracy of 0.94 and a training accuracy of about 0.99, this indicates that the neural network model has reached the optimal level of generalization. In addition, synchronous spikes and drops in the Accuracy and Loss graphs on the validation data at epochs 7 and 17 may indicate a discrepancy between the validation and training data sets. This suggests the presence of anomalous examples in the training sample and the need to improve its quality by clarifying the labeling of the recognized classes and supplementing it with new training examples to increase its representativeness.

To confirm the effectiveness of the proposed solutions, a comparative analysis was carried out of the results of computer experiments on recognizing scenes of political extremism obtained with the author's neural network model and with other modern neural network models. The comparison used indicators of accuracy and resource intensity, the latter estimated by the number of weighting coefficients of the neural network model. The results of the experiments are given in Table 2.

Table 2.
Indicators of resource intensity and accuracy for different neural network models

| Basic neural network model | Resource intensity (approximate number of weighting coefficients, ×10⁶) | Accuracy on validation set |
|---|---|---|
| VGG-16 | 138 | 0.91 |
| VGG-19 | 144 | 0.91 |
| GoogleNet | 5 | 0.93 |
| Inception-v3 | 23.8 | 0.94 |
| SqueezeNet | 1.2 | 0.89 |
| ResNet-50 | 25.6 | 0.94 |
| Basic integrated neural network model based on MobileNetV2 | 2.2 | 0.93 |
| Optimized model based on MobileNetV2 | 2.4 | 0.94 |

As Table 2 shows, the recognition accuracy of the developed neural network model with optimized parameters is 0.94. This is higher than the accuracy achieved with VGG-16, VGG-19 and SqueezeNet and equal to that of the models based on Inception-v3 and ResNet-50. At the same time, the proposed model has roughly 10 times fewer weighting coefficients than Inception-v3 and ResNet-50, which achieve approximately the same recognition accuracy. Thus, the experimental results indicate the effectiveness of the proposed method for constructing neural network tools for detecting scenes of political extremism in graphic materials of online social networks.

Further research on improving the method for detecting political extremism in graphic resources of online social networks should address adding the Attention mechanism to the constructed neural network model and forming representative training samples for the neural network model for detecting political extremism in online social networks. In addition, given the relatively low resource intensity of the developed neural network model, it is of interest to scale it for use on a wide range of computing devices with limited resources, as well as for the operational analysis of text and graphic materials from large segments of online social networks.
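The "roughly 10 times" comparison can be checked directly against the parameter counts in Table 2. The short script below encodes the table and computes the ratios; the dictionary and function names are illustrative.

```python
# Table 2: (approximate weights in millions, accuracy on the validation set)
TABLE_2 = {
    "VGG-16": (138, 0.91),
    "VGG-19": (144, 0.91),
    "GoogleNet": (5, 0.93),
    "Inception-v3": (23.8, 0.94),
    "SqueezeNet": (1.2, 0.89),
    "ResNet-50": (25.6, 0.94),
    "Basic integrated model based on MobileNetV2": (2.2, 0.93),
    "Optimized model based on MobileNetV2": (2.4, 0.94),
}

REFERENCE = "Optimized model based on MobileNetV2"


def resource_ratio(model, reference=REFERENCE):
    """How many times more weighting coefficients `model` has than `reference`."""
    return TABLE_2[model][0] / TABLE_2[reference][0]


if __name__ == "__main__":
    for name in ("Inception-v3", "ResNet-50"):
        print(f"{name}: {resource_ratio(name):.1f}x")
    # Inception-v3: 9.9x
    # ResNet-50: 10.7x
```

The two models with the same validation accuracy (0.94) are about 9.9 and 10.7 times larger, which supports "roughly 10 times" rather than a strict "more than 10 times".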

6. Conclusions

As a result of the research, a method has been developed for constructing neural network tools that, under limited computing resources, provide fairly accurate recognition of scenes of political extremism in graphic materials of online social networks, taking into account the variability of the sizes of these materials and the need to level out typical interference. For recognition, the method uses the developed neural network model, which achieves sufficiently high recognition accuracy under limited computing resources owing to a reasoned choice of the architectural parameters of its constituent low-resource MobileNetV2-type convolutional neural network and LSTM-type recurrent neural network. The variability of the sizes of graphic resources is handled by a mechanism for adapting the input field of the neural network model, which scales the input graphic resource within acceptable limits and, if necessary, fills the rest of the input field with zeros. Typical noise is leveled out by advanced solutions for correcting brightness and contrast and eliminating blur in local areas of online social network images. Experimental studies have shown that the tools developed on the basis of the proposed theoretical solutions provide a recognition accuracy of about 0.99 on training data and about 0.94 on validation data. This accuracy is comparable to that of other well-known neural network models whose resource intensity is about 10 times higher. Thus, the experimental results confirm the effectiveness of the developed method for recognizing political extremism in graphic resources of online social networks.
With certain modifications, the results obtained can be used not only for monitoring online social networks but also for monitoring multimedia messages in the media in order to recognize destructive content of various kinds. Further research should address adding the Attention mechanism to the constructed neural network model and forming representative training samples for training the neural network model for detecting political extremism in online social networks.


References

- Archana N., Saleena B. A Comparative Review of Sentimental Analysis Using Machine Learning and Deep Learning Approaches. Journal of Information & Knowledge Management. 2023. Vol. 22. No. 03:2350003.

- Muhammad A., Atiab I., Haseeb A., Hanan A., Jalal S. Sentiment analysis of extremism in social media from textual information. Telematics and Informatics. May 2020. Vol. 48(3):101345. DOI: 10.1016/j.tele.2020.101345.

- Yenter A., Verma A. Deep CNN-LSTM with combined kernels from multiple branches for IMDB review sentiment analysis. IEEE 8th annual ubiquitous computing, electronics and mobile communication conference. 2017. P. 540–546.

- Rezaul H., Naimul I., Mayisha T., Amit K. Multi-class sentiment classification on Bengali social media comments using machine learning. International Journal of Cognitive Computing in Engineering. June 2023. Vol. 4. P. 21-35. DOI: 10.1016/j.ijcce.2023.01.001.

- Xiaodong F., Jie X., Zhiwei T. To be rational or sensitive? The gender difference in how textual environment cue and personal characteristics influence the sentiment expression on social media. Telematics and Informatics. May 2023. Vol. 80: 101971. DOI: 10.1016/j.tele.2023.101971.

- Li K., Chen C., Zhang Z. Mining online reviews for ranking products: A novel method based on multiple classifiers and interval-valued intuitionistic fuzzy TOPSIS. Applied Soft Computing. May 2023. Vol. 139: 110237.

- Agarwal S., Sureka A. A focused crawler for mining hate and extremism promoting videos on youtube. 25th ACM Conference on Hypertext and Social Media. 2014. P. 294–296.

- Bagitova K., Tereikovskyi I., Babayev I., Tereikovska L., Tereikovskyi O. Model for processing images of online social networks used to recognize political extremism. Journal of Mathematics, Mechanics and Computer Science. 2023. Vol. 119, No. 3. P. 91-103. DOI: 10.26577/JMMCS2023v119i3a8.

- Ganesh B., Bright J. Countering Extremists on Social Media: Challenges for Strategic Communication and Content Moderation. Policy & Internet. 2020. Vol. 12(1). P. 6-19. DOI: 10.1002/poi3.236.

- Tereykovska L., Tereykovskiy I., Aytkhozhaeva E., Tynymbayev S., Imanbayev A. Encoding of neural network model exit signal, that is devoted for distinction of graphical images in biometric authenticate systems. News of the national academy of sciences of the republic of Kazakhstan series of geology and technical sciences. 2017. Vol. 6, No 426. P. 217-224.

- Toliupa S., Tereikovskiy I., Dychka I., Tereikovska L., Trush A. The Method of Using Production Rules in Neural Network Recognition of Emotions by Facial Geometry. 3rd International Conference on Advanced Information and Communications Technologies. 2019. P. 323-327. DOI: 10.1109/AIACT.2019.8847847.

- Kurilin I. Fast algorithm for visibility enhancement of the images with low local contrast. International Society for Optics and Photonics. 2015. P. 93950B-93950B-9.

- Uijlings J. Selective search for object recognition. International journal of computer vision. 2013. Vol. 104. No. 2. P. 154-171.

- Pal K., Sudeep K. Preprocessing for image classification by convolutional neural networks. IEEE International Conference on Recent Trends in Electronics, Information Communication Technology. 2016. P. 1778–1781.

- Yubin Z., Yonghang D., Kaining H., Kaining H. An efficient bicubic interpolation implementation for real-time image processing using hybrid computing. Journal of Real-Time Image Processing. 2022. Vol. 19 (6). P. 1-13.

- Tereikovskyi I., Chernyshev D., Tereikovska L., Mussiraliyeva S., Akhmed G. The procedure for the determination of structural parameters of a convolutional neural network to fingerprint recognition. Journal of Theoretical and Applied Information Technology. 2019. Vol. 97, No 8. P. 2381-2392.

- Vo Hoai Viet, Huynh Nhat Duy. Object Tracking: An Experimental and Comprehensive Study on Vehicle Object in Video. International Journal of Image, Graphics and Signal Processing. 2022. Vol. 14, No. 1. P. 64-81.

- Tereikovskyi I., Korchenko O., Bushuyev S., Tereikovskyi O., Ziubina R., Veselska O. A Neural Network Model for Object Mask Detection in Medical Images. International Journal of Electronics and Telecommunications. 2023, Vol. 69, No 1. P. 41-46. DOI: 10.24425/ijet.2023.144329.

- Indhumathi J., Balasubramanian M., Balasaigayathri B. Real-Time Video based Human Suspicious Activity Recognition with Transfer Learning for Deep Learning. International Journal of Image, Graphics and Signal Processing. 2023. Vol. 15, No. 1. P. 47-62.

- Dong K., Zhou C., Ruan Y., Li Y. MobileNetV2 Model for Image Classification. 2nd International Conference on Information Technology and Computer Application (ITCA). Guangzhou, China. 2020. P. 476-480. DOI: 10.1109/ITCA52113.2020.00106.

- Howard A., Menglong Z., Chen B., Kalenichenko D., Wang W., Weyand T., Andreetto M., Hartwig A. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv preprint arXiv: 1704.04861. 2017.

- Hassan E. Learning Video Actions in Two Stream Recurrent Neural Network. Pattern Recognition Letters. November 2021. Vol. 151. P. 200-208.

- Mohammed A., Ming F., Zhengwei F. Color balance for panoramic images. Modern Applied Science. 2015. Vol. 9, Is. 13. P. 140-147. DOI: 10.5539/mas.v9n13p140.

- Diwakar, Deepa Raj. Recent Object Detection Techniques: A Survey. International Journal of Image, Graphics and Signal Processing. 2022. Vol. 14, No. 2. P. 47-60.

- Ahmad S., Asghar M., Alotaibi F. Detection and classification of social media-based extremist affiliations using sentiment analysis techniques. Hum. Cent. Comput. Inf. Sci. 2019. Vol. 9, 24. DOI: 10.1186/s13673-019-0185-6.

- Sureka A., Agarwal S. Learning to classify hate and extremism promoting tweets. Intelligence and Security Informatics Conference (JISIC). 2014. P. 320–320.

- Soliman M., Kamal M., Nashed M., Mostafa Y., Chawky B., Khattab D. Violence Recognition from Videos using Deep Learning Techniques. 9th International Conference on Intelligent Computing and Information Systems. 2019. Cairo. P. 79–84.