Mobile-Based Skin Disease Diagnosis System Using Convolutional Neural Networks (CNN)

Authors: MWP Maduranga, Dilshan Nandasena

Journal: International Journal of Image, Graphics and Signal Processing (IJIGSP)

Issue: Vol. 14, No. 3, 2022.


This paper presents the design and development of an Artificial Intelligence (AI)-based mobile application that detects the type of skin disease. Skin diseases are a serious hazard to people throughout the world, yet accurate diagnosis is difficult. In this work, a deep learning algorithm, the Convolutional Neural Network (CNN), is proposed to classify skin diseases on the HAM10000 dataset. An extensive review of research articles on object identification methods, comparing their relative merits, was carried out to find a method well suited to detecting skin diseases, and the CNN-based technique was identified as the best. A mobile application was then built to enable quick and accurate action: from an image of the affected area taken at the onset of a skin condition, it helps patients and dermatologists determine the type of disease present. The approach detects the affected region considerably faster, with nearly 2x fewer computations than the standard MobileNet model, which keeps the computational effort low. This study found that MobileNet with transfer learning, yielding an accuracy of about 85%, is the most suitable model for automatic skin disease identification. These findings suggest that the proposed approach can help general practitioners diagnose skin diseases quickly and accurately using a smartphone.

Keywords: AI, convolutional neural networks, skin diseases, automatic identification, MobileNet, transfer learning

Short URL: https://sciup.org/15018425

IDR: 15018425 | DOI: 10.5815/ijigsp.2022.03.05

1. Introduction

According to the World Health Organization (WHO), skin diseases are widespread in society today, affecting around 30% to 70% of the population. In Sri Lanka, the prevalence of skin disease is 47.6% in semi-urban areas and 32.9% in urban areas. Advances in Artificial Intelligence and Machine Learning have created a significant opportunity for the automated identification of skin diseases [1]. Most people are not aware of skin diseases. Although some of these diseases cause little harm, others can lead to severe health issues, so it is important to understand their effects. Diagnosing a skin disease involves a series of laboratory pathology tests to identify the condition correctly. These diseases have been a topic of concern over the past ten years, as their rapid onset and complexity have increased the risk to life. Such skin conditions can be highly contagious and need to be treated early to prevent their spread. Overall well-being, including physical and mental health, is also negatively affected. Many of these skin abnormalities can be deadly, particularly if they are not treated at an early stage.

Skin diseases are quite diverse in their symptoms and severity. They may be short-term or long-term, painless or painful. Some are situational, while others are hereditary. Skin problems range from non-life-threatening to life-threatening: while the majority are fairly trivial, others may be signs of something more serious. If you suspect you have one of these common skin issues, make an appointment with your doctor right away.

Just as every human search begins with terms chosen by the user, bias affects medical practitioners' analyses. Doctors frequently make presumptive diagnoses: they start by looking for evidence that supports their assumptions and may overlook other possible diagnoses. Even when a doctor searches for the right symptoms, the order of, or weight given to, each symptom is likely to bias the result toward a particular diagnosis, especially if another sign is overlooked and therefore not included in the search or considered promptly.

To prevent a disease from spreading, it is critical to control it early. Skin disorders may affect more than just the appearance of a person's skin; they can also harm mental well-being and self-esteem. As a result, skin disorders have become a significant public concern. To avoid permanent damage to the skin, it is critical to detect certain skin disorders early and treat them appropriately. If implemented, the proposed system could contribute significantly to addressing this issue. Because the system can identify skin diseases from photos of affected skin and information obtained from patients, its users can provide remedies or advice to patients.

2. Related Works

Images of scenery, wild animals, farm animals, birds, aquatic creatures, fruits, cars, artifacts, and humans (for age and gender) have all been studied to distinguish between categories. These studies used a variety of approaches, including image processing techniques, Radio-Frequency Identification (RFID), Support Vector Machines (SVM), Neural Networks (NN), the k-nearest neighbors (KNN) algorithm, and the method proposed in this work, Convolutional Neural Networks (CNN). Such categorization systems have been developed to reduce the cost and time associated with manual classification.

For the first time, Haenssle et al. [1] compared CNN-based skin cancer classification with that of an international panel of 58 dermatologists. The CNN outperformed most of the dermatologists, and the authors concluded that physicians could benefit from CNN assistance regardless of their level of experience. Google's Inception v4 CNN model was trained and validated using dermoscopic images and their associated diagnoses. In a cross-sectional study, Marchetti et al. [2] used 100 randomly chosen dermoscopic images (50 melanomas, 44 nevi, and 6 lentigines). The study used a dataset of 379 participants from the international computer vision melanoma challenge, and the authors combined five different techniques for classification. Tschandl et al. [3] used 7895 dermoscopic and 5829 close-up images of lesions, taken at a primary skin cancer clinic between January 1, 2008, and July 13, 2017, to train a CNN-based classifier. The model was tested on 2072 previously unseen cases, and its results were compared with those of 95 medical professionals who acted as human raters [3-5].

Several researchers have adopted NN methods for classification and identification. ANN-based solutions, in which image pre-processing is performed separately from the network, have proven efficient. One such method, presented by A. Jaswante et al. [5], used an ANN to classify gender from identified facial characteristics, with features selected using the Viola-Jones method [4]; an accuracy of approximately 98% was observed. The research team highlighted the system's efficiency, claiming that its performance makes it suitable for real-time use. Going a step further, T. Kalansuriya et al. used images of people's faces in a system that recognizes both gender and age [6]. This method used image processing techniques for pre-processing and feature extraction, and ANNs for classification. Four age categories were established to train and evaluate the algorithm on faces from Asian and non-Asian countries, and the system's results were quantitatively compared with humans' ability to estimate age and gender. Gender identification was 100% accurate, whereas age detection was only about 67% accurate. Another ANN-based classification method determined children's gender from the kinematic data of eight walking (gait) characteristics, using algorithmically generated data in addition to the originally acquired data. When the two datasets were compared after ANN training, including the algorithmically generated data increased the accuracy by 86% [7].

An Artificial Neural Network (ANN) system that detects skin diseases, namely acne, corporis, eczema, scabies, vitiligo, leprosy, foot ulcer, psoriasis, tinea, and pityriasis rosea, has also been presented. Image features such as area and edge, together with user-provided information such as liquid color, liquid type, duration, feeling, elevation, gender, and age, were used for classification. Histograms, the YCbCr color space, and the Sobel operator were used to extract the image features.

Artificial Neural Networks (ANN) [8] and Convolutional Neural Networks (CNN) [9] are the most widely used approaches for recognizing and diagnosing abnormalities in radiological imaging [10]. The CNN method has yielded encouraging results for skin disease diagnosis. However, it is challenging to use CNN models with pictures taken with smartphones or cameras, since CNNs are not inherently scale- or rotation-invariant. Early diagnosis of breast cancer with an ANN-based model requires image processing, and both neural network strategies demand a large amount of training data and computing effort [11]. Neural-network models are also harder to interpret and cannot easily be modified.

Additionally, as image resolution increases, the number of trainable ANN parameters grows dramatically, requiring tremendous training effort. ANN models are also hampered by vanishing and exploding gradients. A CNN, for its part, observes an object but does not assess the object's size and scale [12].

According to this review, CNN-based approaches produce the most accurate results of all the automatic identification methods considered. Although ANN techniques can detect skin diseases, their accuracies are lower than those achieved by CNN methods. Unlike with ANNs, image pre-processing is done inside the CNN classifier itself. Existing work on skin disease classification focuses on identifying the most important disease characteristics rather than on classifying the diseases. Visual inspection is the most straightforward way to distinguish skin diseases, which differ from one another by minor characteristics; where this is not feasible, an image must be used to identify the disease. Because a CNN learns to detect suitable features during training, it can classify images more accurately than other machine learning techniques.

Table 1. Objectives and challenges of the identified approaches

| Strategy | Objective | Challenges |
|---|---|---|
| Morphological Operations | Apply dilation and erosion with a structuring element to detect image characteristics that help recognize abnormalities. | Identifying the appropriate threshold is essential, and morphological operations are not suited to disease-region growth. Using structuring elements does not give accurate results for categorizing skin diseases. |
| Bayesian classification [5] | Efficiently handles discrete and continuous data by disregarding unsuitable characteristics, for both binary and multiclass classification. | Commonly regarded as an inadequate probabilistic model, since it is unsuitable for unsupervised data classification and assumes independent predictors. |
| Support Vector Machine (SVM) [4] | Efficient on high-dimensional data, with minimal memory use. | Not suited to noisy image data, and the feature-based parameters are difficult to determine. |
| K-Nearest Neighbours algorithm [4] | Categorizes data without a separate training phase, using selected features and their similarity; the association between the selected characteristics is determined through distance measurement. | Accuracy depends directly on the quality of the underlying data, prediction time can be very high for large samples, and the model is sensitive to irrelevant data characteristics. |
| Convolutional Neural Networks [4] | Automatically select the important features. Like multi-layer perceptrons, the CNN model stores the training information in its network nodes rather than in extra memory. | Does not account for the scale and size of an object; besides the issue of spatial invariance between pixel data, the model requires extensive training for good results. |
| Fine-tuned Neural Network [5] | Efficiently solves a new problem by initializing from pre-trained weights and updating them. | When new weights are assigned to the components, prior weights that might affect the result are forgotten. |
| Artificial Neural Networks [5] | Recognize nonlinear relationships between dependent and independent variables, with information stored across network nodes. | Effective when a problem is poorly understood, but may lose the spatial characteristics of the image; vanishing and exploding gradients are a significant concern. |

3. Skin Diseases Diagnosing

Dermatologists base their diagnoses on several variables, including the patient's medical history and the appearance (morphology, color, and texture) of the affected skin region. Entire textbooks describe strategies for diagnosing skin lesions in detail, with procedures tailored to specific types of skin disorders. For example, flowcharts that combine visual features, patient records, and the lesion's location on the body can aid the diagnosis of skin conditions that appear as spots on the skin. To diagnose or categorize a skin disease, a clinician or a machine predicts the type of condition by examining the patient's history, the visual features of the skin lesions, or both. Although the disease class can be predicted directly from the image, the procedure has usually been divided into the following sub-tasks, particularly for machine classification [7-8].

Classifying Dermoscopic Criteria: The presence of specific visual features within a lesion may indicate a disease. For example, particular dermoscopic criteria in a lesion (such as an atypical pigment network or irregular streaks) indicate melanoma. One way to classify melanoma is therefore to categorize the dermoscopic characteristics linked with it, and a melanoma diagnosis can be made if a lesion meets several such criteria.

Segmentation of Lesion: Lesion segmentation, which delineates the boundaries of a lesion in an image, allows for thorough measurement of lesion attributes and is frequently used to extract image features that rely on knowing the border of the lesion.
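To make the idea of a lesion mask concrete, here is a minimal sketch of one classical segmentation baseline, Otsu's global threshold, applied to a grayscale image stored as a list of rows of 0-255 integers. It assumes the lesion is darker than the surrounding skin (true of many pigmented lesions, not all), and the function names are illustrative. It is not the method used in this work, which does not describe an explicit segmentation step.

```python
from typing import List

Image = List[List[int]]  # grayscale rows, pixel values 0-255


def otsu_threshold(image: Image) -> int:
    """Return the threshold t that maximizes the between-class variance (Otsu)."""
    hist = [0] * 256
    for row in image:
        for p in row:
            hist[p] += 1
    total = sum(hist)
    sum_all = sum(i * h for i, h in enumerate(hist))

    best_t, best_var = 0, -1.0
    weight_bg, sum_bg = 0, 0
    for t in range(256):
        weight_bg += hist[t]            # pixels with value <= t
        if weight_bg == 0:
            continue
        weight_fg = total - weight_bg   # pixels with value > t
        if weight_fg == 0:
            break
        sum_bg += t * hist[t]
        mean_bg = sum_bg / weight_bg
        mean_fg = (sum_all - sum_bg) / weight_fg
        between_var = weight_bg * weight_fg * (mean_bg - mean_fg) ** 2
        if between_var > best_var:
            best_t, best_var = t, between_var
    return best_t


def segment_dark_lesion(image: Image) -> List[List[bool]]:
    """Mask of pixels at or below the Otsu threshold (assumed to be lesion)."""
    t = otsu_threshold(image)
    return [[p <= t for p in row] for row in image]
```

On real dermoscopic images a global threshold is easily confused by hair, shadows, and uneven lighting, which is one reason the artifact-removal step described below is usually applied first.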

Identifying Dermoscopic Criteria: A certain dermoscopic criterion (for example, streaks linked with melanoma) can be targeted and categorized. While detecting dermoscopic criteria is comparable to categorizing dermoscopic criteria, it needs localization. This exercise may enable clinicians to identify locations that include disease-specific criteria.

Removal of Artefacts: Artifact removal entails removing potentially confusing attributes from pictures and is frequently performed as a pre-processing step before the operations mentioned above. Color constancy, for example, may enhance lesion segmentation or classification.
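One simple color-constancy method is the gray-world assumption: the average color of a scene should be neutral gray, so each RGB channel is rescaled until the three channel means are equal. The sketch below is illustrative only (the paper does not say which color-constancy method, if any, was applied) and works on a flat list of (R, G, B) tuples.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]


def gray_world(pixels: List[Pixel]) -> List[Pixel]:
    """Gray-world color constancy: scale R, G and B so their means become equal."""
    n = len(pixels)
    means = [sum(p[c] for p in pixels) / n for c in range(3)]
    gray = sum(means) / 3  # common target mean for all three channels
    out = []
    for p in pixels:
        out.append(tuple(
            # Rescale the channel toward the gray mean; clip to the 8-bit range.
            min(255, round(p[c] * gray / means[c])) if means[c] > 0 else 0
            for c in range(3)
        ))
    return out
```

For example, two pixels (120, 60, 30) and (80, 40, 90) have channel means of 100, 50 and 60; after correction every channel has mean 70, removing the overall color cast while keeping the relative differences between pixels.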

4. Dataset

The small size and lack of diversity of existing datasets of dermatoscopic images make it difficult to train neural networks for the automated identification of pigmented skin lesions. The HAM10000 ("Human Against Machine with 10000 training images") dataset addresses this issue [8]. Its creators gathered dermatoscopic images from different populations, acquired and stored using different modalities. The final dataset consists of 10,015 dermatoscopic images and can be used as a training set for academic machine learning purposes.

The cases provide a cross-section of all key diagnostic categories in the field of pigmented lesions: actinic keratoses and intraepithelial carcinoma / Bowen's disease (akiec), basal cell carcinoma (bcc), benign keratosis-like lesions (solar lentigines / seborrheic keratoses and lichen-planus like keratoses, bkl), dermatofibroma (df), melanoma (mel), melanocytic nevi (nv), and vascular lesions (angiomas, angiokeratomas, pyogenic granulomas and haemorrhage, vasc).

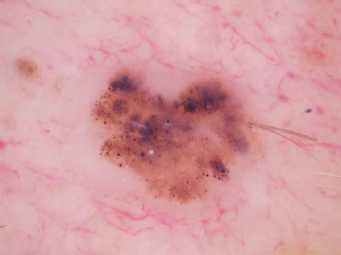

More than half of the lesions are confirmed through histopathology; the remaining cases are confirmed through follow-up examination, expert consensus, or in-vivo confocal microscopy (confocal). Lesions with several images in the dataset can be tracked through the lesion_id column in the HAM10000 metadata file. Sample images are shown in Fig.1 to Fig.7, and Table 2 shows the number of images per class in the dataset.

Table 2. Distribution of the dataset

| Class | Number of images |
|---|---|
| Dermatofibroma | 115 |
| Vascular lesions | 142 |
| Actinic keratoses | 327 |
| Basal cell carcinoma | 514 |
| Benign keratosis-like lesions | 1099 |
| Melanoma | 1113 |
| Melanocytic nevi | 6705 |
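The class counts in Table 2 sum to 10,015 images and are strongly imbalanced. A short sketch that tallies the table and prints each class's share makes this explicit. Melanocytic nevi alone account for about 67% of the images, so a classifier that always answered "nv" would already reach roughly that accuracy. For this reason the per-class results in Table 3 are more informative than overall accuracy.

```python
# Image counts per class, copied from Table 2 (HAM10000).
CLASS_COUNTS = {
    "Dermatofibroma": 115,
    "Vascular lesions": 142,
    "Actinic keratoses": 327,
    "Basal cell carcinoma": 514,
    "Benign keratosis-like lesions": 1099,
    "Melanoma": 1113,
    "Melanocytic nevi": 6705,
}


def class_shares(counts):
    """Return the total number of images and each class's fraction of it."""
    total = sum(counts.values())
    return total, {name: n / total for name, n in counts.items()}


total, shares = class_shares(CLASS_COUNTS)
print(f"total images: {total}")  # total images: 10015
for name, share in sorted(shares.items(), key=lambda kv: -kv[1]):
    print(f"{name:32s} {share:6.1%}")
```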

Fig.1. Actinic Keratoses

Fig.3. Melanoma

Fig.4. Melanocytic Nevi

Fig.5. Benign Keratosis

Fig.6. Dermatofibroma

Fig.7. Vascular Lesions

5. Data Pre-processing and Model Training

Image data generation (augmentation) applies different transformations to the original images, producing several modified copies of each image. Every copy differs from the others through shifting, rotation, flipping, and similar operations. These modest changes do not alter the image's target class but present the object from a new perspective, which is why augmentation is widely used when training deep learning models. Of the 10,015 images in total, 6009 were used to train the CNN models, 2003 were used to validate them, and the remaining 2003 were held out for testing, i.e. a 60%/20%/20% split for training, validation, and testing.
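The augmentation and the 60/20/20 split can be sketched without any libraries. The paper does not say how images were assigned to the three subsets or exactly which transformations were used, so the seeded shuffle, the horizontal flip and the 90-degree rotations below are assumptions. In Keras the same kinds of transformations are available through `ImageDataGenerator` or preprocessing layers such as `RandomFlip` and `RandomRotation`.

```python
import random
from typing import List, Sequence, Tuple

Image = List[List[int]]


def hflip(img: Image) -> Image:
    """Mirror the image left-right."""
    return [list(reversed(row)) for row in img]


def rot90(img: Image) -> Image:
    """Rotate the image 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def augment(img: Image, rng: random.Random) -> Image:
    """Return a randomly flipped and/or rotated copy; the class label is unchanged."""
    out = [row[:] for row in img]
    if rng.random() < 0.5:
        out = hflip(out)
    for _ in range(rng.randrange(4)):  # rotate by 0, 90, 180 or 270 degrees
        out = rot90(out)
    return out


def split_60_20_20(items: Sequence, seed: int = 42) -> Tuple[list, list, list]:
    """Shuffle once with a fixed seed, then slice into train/validation/test."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_train = round(0.6 * len(shuffled))
    n_val = round(0.2 * len(shuffled))
    return (shuffled[:n_train],
            shuffled[n_train:n_train + n_val],
            shuffled[n_train + n_val:])
```

With 10,015 items the slices come out as 6009, 2003 and 2003, matching the counts above. Because HAM10000 contains several images of some lesions (tracked by the lesion_id column), splitting by lesion_id rather than by image would keep images of the same lesion from appearing in both the training and test sets.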

5.1. Convolutional Neural Networks

5.2. TensorFlow Library

5.3. Keras Deep Learning Library

6. Mobile Application Development

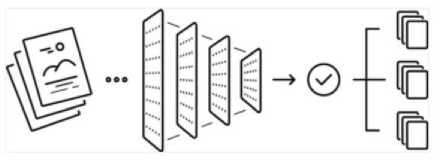

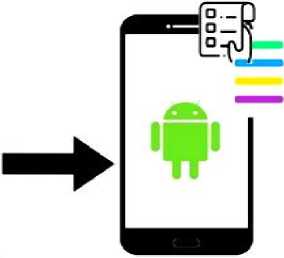

Convolutional Neural Networks have enabled tremendous advances in computer vision with deep learning. Fig.8 depicts the most basic processing steps inside a CNN model.

Fig.8. Basic process inside a CNN model

The CNN architecture was inspired by the design of the visual system and mirrors the connectivity pattern of neurons in the human brain. The basic architecture of the model is shown in Fig.8. A CNN processes images with filters that reduce them to a form that is easier to handle without losing vital details. A convolution layer convolves the input image with a filter to extract low-level features such as colors and sharp edges. To lower the processing power required, a pooling layer (for example, max pooling) then reduces the size of the resulting feature map. Together, these two layers form one CNN layer, and a number of such layers, chosen according to the complexity of the images, can be stacked in the model to extract features. The output is then flattened into a column vector and fed into a fully connected, feed-forward layer, which learns high-level features as nonlinear combinations of those extracted by the convolution layers and performs the image classification. Backpropagation is applied in every iteration of training, and over a sequence of epochs the model learns to distinguish between the dominant and less important features of the images in order to classify them [13-16].
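The convolution, activation, pooling, flattening and fully connected steps described above can be traced by hand on a tiny single-channel image. This dependency-free sketch uses a hand-picked 2x2 edge filter and hypothetical dense-layer weights; a real CNN learns many multi-channel filters and runs on optimized tensor code. Like most deep learning libraries, `conv2d` here computes cross-correlation (the filter is not flipped).

```python
from typing import List

Matrix = List[List[float]]


def conv2d(image: Matrix, kernel: Matrix) -> Matrix:
    """'Valid' 2D convolution with stride 1 (cross-correlation, as in CNN libraries)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[sum(image[i + a][j + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]


def relu(fmap: Matrix) -> Matrix:
    """Nonlinearity applied element-wise to the feature map."""
    return [[max(0.0, v) for v in row] for row in fmap]


def max_pool(fmap: Matrix, size: int = 2) -> Matrix:
    """Non-overlapping max pooling; rows/columns that don't fill a window are dropped."""
    return [[max(fmap[i + a][j + b] for a in range(size) for b in range(size))
             for j in range(0, len(fmap[0]) - size + 1, size)]
            for i in range(0, len(fmap) - size + 1, size)]


def flatten(fmap: Matrix) -> List[float]:
    """Turn the pooled feature map into a single vector."""
    return [v for row in fmap for v in row]


def dense(x: List[float], weights: Matrix, bias: List[float]) -> List[float]:
    """Fully connected layer: one output per row of weights."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]


# A 5x5 image with a vertical dark-to-bright edge, and a filter that responds to it.
image = [[0.0, 0.0, 1.0, 1.0, 1.0] for _ in range(5)]
edge_kernel = [[-1.0, 1.0], [-1.0, 1.0]]
features = flatten(max_pool(relu(conv2d(image, edge_kernel))))
print(features)  # [2.0, 0.0, 2.0, 0.0]
```

The 5x5 input shrinks to a 4x4 feature map after convolution and to 2x2 after pooling, which illustrates how pooling reduces the amount of data the later layers must process.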

TensorFlow is not as simple as Keras, but it offers more features and capabilities for developing machine learning models. It is an open-source software library with a Python interface, used to create data flow graphs for machine learning models. TensorFlow models can run on many platforms, including locally on a personal computer, in the cloud, and on Android and iOS. It is simpler to use than CNTK because it lets developers focus on the model's logic, and it also allows them to visualize the neural network that has been created [17-18].

Keras is a user-friendly, high-level API that is exceedingly easy to use. It is a free, open-source, Python-based deep learning library that can act as the frontend for other deep learning packages, such as TensorFlow. The CNN model is built by combining TensorFlow and Keras: TensorFlow serves as the backend, providing the more advanced deep learning capabilities, while Keras serves as the frontend because of its high-level API and user-friendliness [19-22].
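As a hedged illustration of how Keras on a TensorFlow backend can express MobileNet transfer learning, the hypothetical builder below freezes an ImageNet-pretrained MobileNet, adds a new seven-class softmax head, and optionally unfreezes the upper layers for fine-tuning. The paper does not report its architecture or hyperparameters, so the 224x224 input, the dropout rate, the learning rates and the `fine_tune_from` parameter are all assumptions. TensorFlow is imported inside the function only so that the module loads without it.

```python
CLASS_NAMES = ["akiec", "bcc", "bkl", "df", "mel", "nv", "vasc"]  # HAM10000 labels
IMG_SIZE = (224, 224)  # assumed input resolution (MobileNet's default)


def build_mobilenet_classifier(weights="imagenet", fine_tune_from=None):
    """Sketch: pretrained MobileNet base + new softmax head for the 7 classes.

    fine_tune_from (hypothetical): if set, base layers from this index onward
    are trainable for a fine-tuning stage; otherwise the whole base is frozen.
    """
    import tensorflow as tf  # lazy import so this module loads without TensorFlow

    base = tf.keras.applications.MobileNet(
        input_shape=IMG_SIZE + (3,), include_top=False, weights=weights)
    base.trainable = fine_tune_from is not None
    if fine_tune_from is not None:
        for layer in base.layers[:fine_tune_from]:
            layer.trainable = False  # keep the lower, generic layers frozen

    inputs = tf.keras.Input(shape=IMG_SIZE + (3,))
    x = tf.keras.layers.Rescaling(1.0 / 127.5, offset=-1.0)(inputs)  # [0,255] -> [-1,1]
    x = base(x, training=False)  # run BatchNorm layers in inference mode
    x = tf.keras.layers.GlobalAveragePooling2D()(x)
    x = tf.keras.layers.Dropout(0.2)(x)
    outputs = tf.keras.layers.Dense(len(CLASS_NAMES), activation="softmax")(x)

    model = tf.keras.Model(inputs, outputs)
    model.compile(
        # A much smaller learning rate is typical when pretrained layers are unfrozen.
        optimizer=tf.keras.optimizers.Adam(1e-3 if fine_tune_from is None else 1e-5),
        loss="categorical_crossentropy",
        metrics=["accuracy"],
    )
    return model
```

The labels must be one-hot encoded to match `categorical_crossentropy`, for example by using `class_mode="categorical"` with `ImageDataGenerator.flow_from_directory`.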

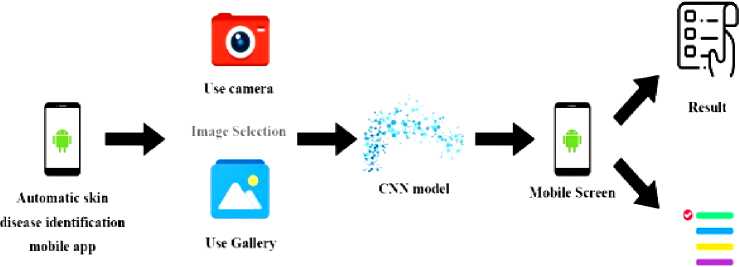

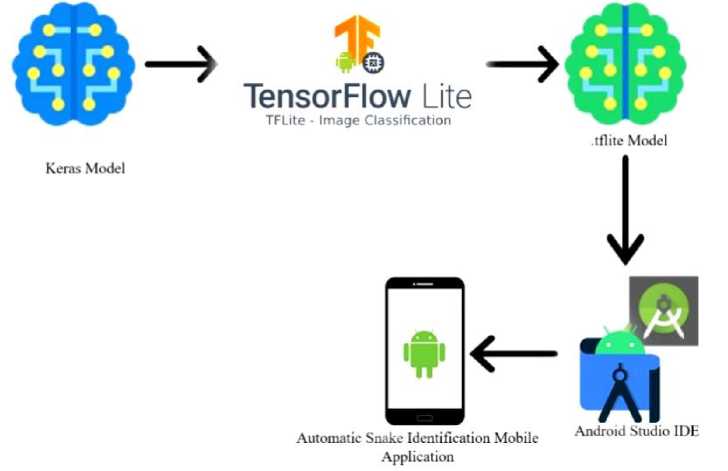

Android Studio is a well-known mobile development platform that offers various tools to help developers create user-friendly, dependable apps. It simplifies the deployment of machine learning models through TensorFlow Lite, which turns a trained model into a lightweight model that can be used in a mobile app. The system's overall design combines the CNN model with Android smartphone app development; Fig.10 depicts a high-level view of the system's design. The mobile application lets users take live images with their phone's camera or upload photos from the gallery as inputs to the app. The layout also lets users view the identified disease type on their Android phone's screen, as shown in Fig.9 and Fig.10.
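On the phone, the model returns one score per class for each photo, and the label shown on screen is simply the highest-scoring class. The app itself would do this in Java or Kotlin; to keep all examples in one language, the post-processing is sketched in Python below. The label order (alphabetical HAM10000 codes, as in Table 3) and the example scores are assumptions, and `softmax` is only needed if a model outputs raw logits instead of probabilities.

```python
import math
from typing import List, Sequence, Tuple

CLASS_NAMES = ["akiec", "bcc", "bkl", "df", "mel", "nv", "vasc"]  # assumed training order


def softmax(logits: Sequence[float]) -> List[float]:
    """Convert raw scores to probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_prediction(probs: Sequence[float],
                   names: Sequence[str] = CLASS_NAMES) -> Tuple[str, float]:
    """Return (label, probability) of the most likely class."""
    if len(probs) != len(names):
        raise ValueError("expected one score per class")
    best = max(range(len(probs)), key=lambda i: probs[i])
    return names[best], probs[best]


# Hypothetical model output for one photo (probabilities summing to 1).
label, p = top_prediction([0.02, 0.03, 0.05, 0.01, 0.70, 0.17, 0.02])
print(f"{label}: {p:.0%}")  # mel: 70%
```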

Fig.10. Overall Design of the Automatic Skin Disease Identification Mobile Application (diagram blocks: Additional Information; Skin Disease Identification CNN Model; Automatic Skin Disease Identification Mobile App; Mobile App Showing Result)

The Android implementation requires converting the Keras model into a TensorFlow Lite (.tflite) model, the format accepted for development in Android Studio. Training produced Keras models saved with the .h5 extension; these cannot be used directly to build the Android app, which accepts only .tflite models. TensorFlow Lite is a lightweight package that gives access to the Android Neural Networks API. The conversion was carried out in Python, and the converted model, now adapted to Android, was used in Android Studio to build the mobile application. Fig.11 gives a simple visual representation of this process: the Keras model is converted into a TensorFlow Lite model before being used to create the Android mobile app.
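The conversion step can be sketched with TensorFlow's `tf.lite.TFLiteConverter`. The file names and the helper `tflite_path_for` are hypothetical, and the paper does not state which converter options it used. With no optimizations set, as here, the weights stay in float32.

```python
from pathlib import Path


def tflite_path_for(h5_path: str) -> str:
    """Hypothetical naming helper: skin_model.h5 -> skin_model.tflite."""
    return str(Path(h5_path).with_suffix(".tflite"))


def convert_h5_to_tflite(h5_path: str) -> str:
    """Convert a trained Keras .h5 model to a .tflite file and return its path."""
    import tensorflow as tf  # lazy import so this module loads without TensorFlow

    model = tf.keras.models.load_model(h5_path)
    converter = tf.lite.TFLiteConverter.from_keras_model(model)
    # Setting converter.optimizations = [tf.lite.Optimize.DEFAULT] would quantize
    # the weights, giving a smaller model at the cost of some precision.
    tflite_model = converter.convert()  # serialized FlatBuffer (bytes)

    out_path = tflite_path_for(h5_path)
    Path(out_path).write_bytes(tflite_model)
    return out_path
```

The resulting .tflite file is what gets bundled with the Android project and loaded by the TensorFlow Lite interpreter on the device.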

Fig.11. Design of the Mobile Application Deployment

The mobile app also has extra features, such as contact numbers for hospitals, safety guidelines, and details about some common skin diseases with their images.

7. Model Training and Evaluation

All images in the validation dataset are fed into the model to obtain the validation accuracy. During each epoch, the training data pass through each layer of the model and the model adjusts its weights; at the end of the epoch, the validation data pass through the model in the same way. This process is repeated a predetermined number of times, known as epochs. After every epoch, the model is evaluated on the validation images to determine how reliable its weights are at that point.
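The epoch loop described above can be illustrated with a toy model small enough to train in plain Python: a one-feature logistic regression with full-batch gradient descent. It is a stand-in, not the MobileNet model. Each epoch updates the weights on the training data and then evaluates on the validation data, recording the same four series (`loss`, `accuracy`, `val_loss`, `val_accuracy`) that Keras's `model.fit(..., validation_data=..., epochs=...)` returns in its `History` object. The datasets, learning rate and epoch count are made up for illustration.

```python
import math
from typing import Dict, List, Sequence, Tuple

Example = Tuple[float, int]  # (feature value, label 0 or 1)


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def evaluate(w: float, b: float, data: Sequence[Example]) -> Tuple[float, float]:
    """Mean log loss and accuracy of the model p = sigmoid(w*x + b) on data."""
    loss, correct = 0.0, 0
    for x, y in data:
        p = min(max(sigmoid(w * x + b), 1e-12), 1 - 1e-12)  # avoid log(0)
        loss -= y * math.log(p) + (1 - y) * math.log(1 - p)
        correct += int((p >= 0.5) == (y == 1))
    return loss / len(data), correct / len(data)


def train(train_data: Sequence[Example], val_data: Sequence[Example],
          epochs: int = 20, lr: float = 0.5) -> Dict[str, List[float]]:
    w, b = 0.0, 0.0
    history: Dict[str, List[float]] = {
        "loss": [], "accuracy": [], "val_loss": [], "val_accuracy": []}
    for _ in range(epochs):
        # Training pass: full-batch gradient of the mean log loss, then one update.
        gw = gb = 0.0
        for x, y in train_data:
            err = sigmoid(w * x + b) - y
            gw += err * x
            gb += err
        w -= lr * gw / len(train_data)
        b -= lr * gb / len(train_data)
        # End of epoch: measure both sets with the new weights. (Keras instead
        # averages the training metrics over the batches seen during the epoch.)
        for prefix, data in (("", train_data), ("val_", val_data)):
            loss, acc = evaluate(w, b, data)
            history[prefix + "loss"].append(loss)
            history[prefix + "accuracy"].append(acc)
    return history


train_set = [(-2.0, 0), (-1.0, 0), (-0.5, 0), (0.5, 1), (1.0, 1), (2.0, 1)]
val_set = [(-1.5, 0), (1.5, 1)]
hist = train(train_set, val_set)
# Pick the epoch (1-based) with the lowest validation loss.
best_epoch = 1 + min(range(len(hist["val_loss"])), key=hist["val_loss"].__getitem__)
```

Plotting `loss` against `val_loss` and `accuracy` against `val_accuracy` gives curves of the kind shown in Fig.12 and Fig.13. Choosing the epoch with the lowest validation loss, as in the last line, is one simple way to use such curves when deciding which model to keep.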

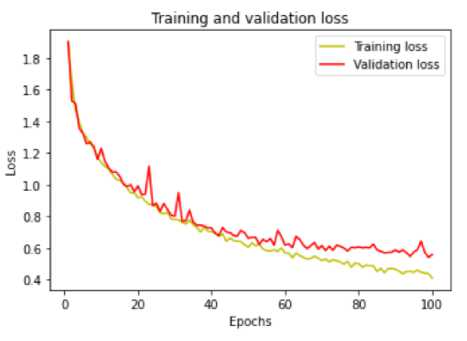

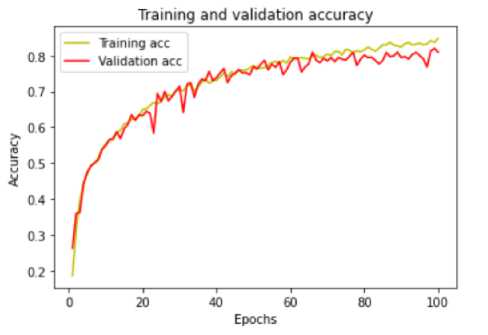

For each model, two graphs were obtained from the results of the training procedure: the accuracy graph, which plots training accuracy against validation accuracy, and the loss graph, which plots the model's training loss against its validation loss.

The loss and accuracy graphs of the CNN model created using transfer learning and fine-tuning with the MobileNet pre-trained network are shown in Fig.12 and Fig.13, respectively. These graphs showed the expected behavior of the models produced, making it possible to evaluate whether each model should be rejected or accepted, and they were crucial in determining which model was the most accurate.

Fig.12. Training and validation loss

Fig.13. Training and validation accuracy

Table 3. Evaluation on the test set (classification report)

| Class | Precision | Recall | F1-score | Support |
|---|---|---|---|---|
| akiec | 0.73 | 0.46 | 0.57 | 69 |
| bcc | 0.83 | 0.67 | 0.74 | 93 |
| bkl | 0.65 | 0.77 | 0.71 | 228 |
| df | 0.81 | 0.61 | 0.69 | 28 |
| mel | 0.73 | 0.69 | 0.71 | 226 |
| nv | 0.94 | 0.95 | 0.94 | 1338 |
| vasc | 0.95 | 0.86 | 0.90 | 21 |
| Micro avg | 0.87 | 0.87 | 0.87 | 2003 |
| Macro avg | 0.80 | 0.72 | 0.75 | 2003 |
| Weighted avg | 0.87 | 0.87 | 0.86 | 2003 |
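The summary rows of Table 3 can be recomputed from the per-class rows. The macro average is the plain mean over the seven classes, while the weighted average weights each class by its support. Because the inputs below are the two-decimal values printed in the table, the recomputed averages can differ from the printed ones by up to about 0.01. In single-label multiclass classification the micro average equals overall accuracy, so the 0.87 micro-average row corresponds to a test accuracy of about 87%. The gap between macro recall (0.72) and weighted recall (0.87) shows how strongly the dominant nv class drives the weighted figures.

```python
# Per-class rows of Table 3: class -> (precision, recall, f1-score, support).
REPORT = {
    "akiec": (0.73, 0.46, 0.57, 69),
    "bcc": (0.83, 0.67, 0.74, 93),
    "bkl": (0.65, 0.77, 0.71, 228),
    "df": (0.81, 0.61, 0.69, 28),
    "mel": (0.73, 0.69, 0.71, 226),
    "nv": (0.94, 0.95, 0.94, 1338),
    "vasc": (0.95, 0.86, 0.90, 21),
}


def averages(report):
    """Return (total support, macro averages, weighted averages) of P, R and F1."""
    rows = list(report.values())
    total = sum(row[3] for row in rows)
    macro = [sum(row[k] for row in rows) / len(rows) for k in range(3)]
    weighted = [sum(row[k] * row[3] for row in rows) / total for k in range(3)]
    return total, macro, weighted


def f1(precision, recall):
    """F1-score: the harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


total, macro, weighted = averages(REPORT)
print("support:", total)  # support: 2003
print("macro    P/R/F1:", [round(v, 2) for v in macro])
print("weighted P/R/F1:", [round(v, 2) for v in weighted])
```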

8. Conclusion

During the requirements analysis, it was found that detecting all skin disorders is impossible and that gathering a dataset (images) for every class is difficult. Certain common ailments were therefore excluded from the problem scope, since most people can recognize them themselves; these illnesses are very frequent in today's society. For example, more than 95% of individuals can recognize conditions such as blisters, warts, and acne. It is therefore more important to focus on diseases that people are not aware of. It was also clear that an Android mobile application implementing a CNN model would be the ideal option. This study found that MobileNet with transfer learning, yielding an accuracy of more than 85%, is the most suitable model for automatic skin disease identification. During the testing phase, however, the CNN model was found to be more accurate when tested on its own than when tested through the mobile application. The cause was determined to be that part of the information needed for correct results is lost when the trained model is converted to a .tflite model; this is likely to be addressed with further training and a larger amount of data. For the identification probability to stabilize, the camera had to be held on the affected area for around 4 to 5 seconds. The highest accuracy in detecting the seven skin disorders was obtained when the lesion filled as much of the screen as possible, and accuracy was also affected by lighting conditions. To make the Android application even more helpful, the model should be developed further to recognize skin diseases on any region of the body under any lighting conditions.

Linking Google Maps with the locations of hospitals and healthcare centers in the Android application would be immensely beneficial to people who suffer from skin disorders or who find themselves in an emergency because of a skin injury. The proposed system has the following limitations. The mobile application was developed only for Android devices. Identification accuracy depends on the quality of the phone's camera: a high-quality camera can increase accuracy, whereas with a lower-quality camera it is hard to get the best performance from the model. Finally, the research is based on an existing dataset and can identify only seven types of skin diseases. The most important direction for future research is therefore to enlarge the dataset and extend the model to diagnose more skin diseases with higher accuracy.

References

- Haenssle, Holger A, "Man against machine: diagnostic performance of a deep learning convolutional neural network for dermoscopic melanoma recognition in comparison to 58 dermatologists," Annals of Oncology, 2018.

- Marchetti, Michael A, "Results of the 2016 International Skin Imaging Collaboration International Symposium on Biomedical Imaging challenge: Comparison of the accuracy of computer algorithms to dermatologists for the diagnosis of melanoma," Journal of the American Academy of Dermatology, pp. 270-277, 2018.

- Tschandl, Philipp, "Expert-level diagnosis of nonpigmented skin cancer by combined convolutional neural networks," JAMA Dermatology, 2019.

- ViDIR Group, Tschandl, Rosendahl, Kittler, 2018. The HAM10000 dataset, a large collection of multi-source dermatoscopic images of common pigmented skin lesions. Scientific Data, Volume 5

- M W P Maduranga and Ruvan Abeysekara. Supervised Machine Learning for RSSI based Indoor Localization in IoT Applications. International Journal of Computer Applications 183(3):26-32, May 2021

- M.W.P Maduranga, Ruvan Abeysekera, "TreeLoc: An Ensemble Learning-based Approach for Range Based Indoor Localization", International Journal of Wireless and Microwave Technologies (IJWMT), Vol.11, No.5, pp. 18-25, 2021. DOI: 10.5815/ijwmt.2021.05.03

- R. El Saleh, S. Bakhshi and A. Nait-Ali, "Deep convolutional neural network for face skin diseases identification," 2019 Fifth International Conference on Advances in Biomedical Engineering (ICABME), 2019, pp. 1-4, doi: 10.1109/ICABME47164.2019.8940336.

- The HAM10000 dataset, a large collection of multi-source dermatoscopic images of common pigmented skin lesions, Harvard Dataverse, 2018, doi:10.7910/DVN/DBW86T

- Chatterjee, C.C, 2019. Basics of the Classic CNN. [Online] Available at: https://towardsdatascience.com/basics-of-the-classic-cnn-a3dce1225add [Accessed 02 06 2021].

- Zakaria, N.K., Jailani, R., Tahir, N.M., "Application of ANN in Gait Features of Children for Gender Classification," Procedia Computer Science, vol. 76, pp. 235–242, 2015. https://doi.org/10.1016/j.procs.2015.12.348.

- M. Mehdy, P. Ng, E. Shair, N. Saleh and C. Gomes, "Artificial Neural Networks in Image Processing for Early Detection of Breast Cancer," Comput. Math. Methods Med. 2017, 2017, 2610628.

- Rathod, J.; Waghmode, V.; Sodha, A.; Bhavathankar, P., "Diagnosis of skin diseases using Convolutional Neural Networks," in Proceedings of the 2018 Second International Conference on Electronics, Communication and Aerospace Technology (ICECA), Coimbatore, India, 29–31 March 2018.

- Rathod, J.; Waghmode, V.; Sodha, A.; Bhavathankar, P, 2018. Diagnosis of skin diseases using Convolutional Neural Networks. Coimbatore, India, Second International Conference on Electronics, Communication and Aerospace Technology (ICECA).

- Saha, S, 2018. A Comprehensive Guide to Convolutional Neural Networks — the ELI5 way. Towards Data Science. [Online] Available at: https://towardsdatascience.com/a-comprehensive-guide-to-convolutional-neural-networks-the-eli5-way-3bd2b1164a53

- N. S. A. ALEnezi, "A Method Of Skin Disease Detection Using Image Processing And," in 16th International Learning & Technology Conference, Makkah , Saudi Arabia, 2019.

- M. Mehdy, P. Ng, E. Shair, N. Saleh and C. Gomes, 2017. Artificial Neural Networks in Image Processing for Early Detection of Breast Cancer. Comput. Math. Methods Med.

- Fredes, C.; Valenzuela, A.; Naranjo-Torres, J.; Mora, M.; Hernández-García, R.; Barrientos, R.J, "A Review of Convolutional Neural Network Applied to Fruit Image Processing," 2020.

- M.W.P Maduranga, Ruvan Abeysekera, " Bluetooth Low Energy (BLE) and Feed Forward Neural Network (FFNN) Based Indoor Positioning for Location-based IoT Applications", International Journal of Wireless and Microwave Technologies(IJWMT), Vol.12, No.2, pp. 33-39, 2022

- Rupak Bhakta, A. B. M. Aowlad Hossain, " Lung Tumor Segmentation and Staging from CT Images Using Fast and Robust Fuzzy C-Means Clustering", International Journal of Image, Graphics and Signal Processing (IJIGSP), Vol.12, No.1, pp. 38-45,2020.DOI: 10.5815/ijigsp.2020.01.05.

- Wei, L., Gan, Q., Ji, T, 2018. Skin Disease Recognition Method Based on Image Colour and Texture Features. Computational and Mathematical Methods in Medicine.

- M. M. I. Rahi, F. T. Khan, M. T. Mahtab, A. K. M. Amanat Ullah, M. G. R. Alam and M. A. Alam, "Detection Of Skin Cancer Using Deep Neural Networks," 2019 IEEE Asia-Pacific Conference on Computer Science and Data Engineering (CSDE), 2019, pp. 1-7, doi: 10.1109/CSDE48274.2019.9162400.

- A. D. Andronescu, D. I. Nastac and G. S. Tiplica, "Skin Anomaly Detection Using Classification Algorithms," 2019 IEEE 25th International Symposium for Design and Technology in Electronic Packaging (SIITME), 2019, pp. 299-303, doi: 10.1109/SIITME47687.2019.8990764.