Motion Segmentation from Surveillance Video using modified Hotelling's T-Square Statistics

Authors: Chandrajit M, Girisha R, Vasudev T

Journal: International Journal of Image, Graphics and Signal Processing (IJIGSP) @ijigsp

Issue: no. 7, vol. 8, 2016.


Motion segmentation is an important task in video surveillance and in many high-level vision applications. This paper proposes two generic methods for motion segmentation from surveillance video sequences captured by different kinds of sensors, such as aerial, Pan Tilt and Zoom (PTZ), thermal and night vision sensors. Motion segmentation is achieved by applying Hotelling's T-Square test to the spatial neighborhood RGB color intensity values of each pixel in two successive temporal frames. A modified version of Hotelling's T-Square test is also proposed for motion segmentation. Compared with Hotelling's T-Square test, the modified formula gives better results in terms of both computation time and output quality. Experiments, including qualitative and quantitative comparison with an existing method, have been carried out on the standard IEEE PETS (2006, 2009 and 2013) and IEEE Change Detection (2014) datasets to demonstrate the efficacy of the proposed method in dynamic environments, and the results obtained are encouraging.

Keywords: Motion segmentation, Video surveillance, Spatio-temporal, Hotelling's T-Square test

Short URL: https://sciup.org/15013995

IDR: 15013995


Published Online July 2016 in MECS DOI: 10.5815/ijigsp.2016.07.05

I. Introduction

Video surveillance has become one of the most active areas of research in computer vision. A video surveillance system generally involves motion segmentation, object classification, object recognition, object tracking and motion analysis. Moving object segmentation extracts the non-stationary regions of a video frame. Object classification assigns objects to categories such as person, vehicle or animal. Object recognition identifies the object of interest. Object tracking establishes frame-by-frame correspondence of the moving object across the video sequence. Finally, motion analysis analyzes and interprets the motion of the objects.

Motion segmentation is a vital task in video surveillance because the subsequent tasks of a video surveillance system depend on its accurate output. Surveillance video sequences are generally captured by different sensors, such as aerial, PTZ, thermal and night vision sensors. The captured sequences contain noise and illumination variations, which make motion segmentation from surveillance videos a challenging task [35, 36, 38]. This research therefore focuses on developing an efficient and reliable motion segmentation algorithm that overcomes these limitations and extracts foreground information from the image data for further analysis.

Several techniques have been proposed in the literature for motion segmentation. They can be categorized as conventional background subtraction [14], statistical background subtraction [2, 10, 21, 28, 30, 32, 34, 37], temporal differencing [1, 5, 6], optical flow [9] and hybrid approaches [3, 4, 7, 8, 15, 16, 17, 31, 33, 40].

The conventional background subtraction technique first builds a background model and then subtracts each new frame from it to segment motion. The statistical background subtraction technique builds the background model dynamically from individual pixels or groups of pixels. It then classifies each pixel of the current frame as foreground or background by comparing it with the statistics of the current background model. In the temporal differencing method, the absolute difference of successive frames is computed to segment motion pixels. The optical flow technique computes the flow vector of every pixel and then segments the moving object. Hybrid techniques combine the above techniques to segment moving objects in video sequences [11]. A brief review of existing works is given in the next section.
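The temporal differencing idea described above can be made concrete with a minimal sketch. It works on grayscale frames stored as nested lists. The function name `frame_difference_mask` and the fixed threshold are illustrative assumptions and are not taken from any of the cited methods.

```python
def frame_difference_mask(prev, curr, threshold):
    """Label a pixel as moving (1) when |curr - prev| exceeds the threshold.

    prev, curr: 2D lists of grayscale intensities with identical shape.
    threshold:  hypothetical fixed difference threshold.
    """
    return [
        [1 if abs(c - p) > threshold else 0 for p, c in zip(prev_row, curr_row)]
        for prev_row, curr_row in zip(prev, curr)
    ]


prev = [[10, 10, 10],
        [10, 10, 10]]
curr = [[10, 80, 12],
        [10, 10, 90]]
print(frame_difference_mask(prev, curr, threshold=25))  # [[0, 1, 0], [0, 0, 1]]
```

Only pixels whose intensity changes by more than the threshold are marked as motion. A uniformly colored object interior changes little between frames, which is why plain differencing tends to leave holes in the segmented objects.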

This paper proposes two generic methods for segmenting moving objects from surveillance video in dynamic environments by fusing spatial neighborhood information from color video frames within a temporal statistical framework. The rest of the article is organized as follows. Section 2 reviews related work on segmentation methodologies. Section 3 gives an overview of the proposed work, and Section 4 elaborates on it. The experimental results and conclusions are reported in Sections 5 and 6, respectively.


II. Related Works

Research in the area of motion segmentation has been attempted using conventional background subtraction [14], statistical background subtraction [2, 10, 21, 28, 30, 32, 34, 37], temporal differencing [1, 5, 6], optical flow [9] and hybrid [3, 4, 7, 8, 15, 16, 17, 31, 33, 40] approaches.

Heikkila and Silven [14] modeled the background by observing the pixel values of previous frames. A pixel in the current frame is then marked as foreground or background by subtracting the background frame and comparing the result with a predefined threshold. Stauffer and Grimson [30] modeled the background as a mixture of Gaussians for motion segmentation. Munoz [21] proposed human detection using background subtraction, with the background modeled as a mixture of Gaussians. Wang and Suter [34] proposed a variant of background subtraction based on sample consensus. Armanfard and Komeili [2] proposed block-based background subtraction that uses texture and edge information to detect pedestrians. Johnsen and Tews [32] proposed background modeling based on an approximated median filter. Elgammal et al. [10] proposed a non-parametric kernel density estimation technique for background modeling. Median-based background subtraction in the temporal domain is proposed in [28].

Lee et al. [5] performed moving object segmentation using the difference between the current and previous frames. Jung et al. [1] proposed an algorithm that segments moving objects by frame differencing followed by flood fill. Cheng and Chen [6] applied the Discrete Wavelet Transform followed by frame differencing, and extracted color and spatial information to segment moving objects.

Denman et al. [9] detected vehicles and persons using optical flow discontinuities and color information. Girisha and Murali [33] proposed a method that combines temporal differencing with statistical correlation for motion segmentation. Chandrajit et al. [3, 4] proposed two methods based on spatio-temporal statistics to segment moving objects. Subudhi et al. [31] segmented motion pixels using robust background construction under a Wronskian framework. The hybrid methods proposed in [7, 8, 15, 16, 17] use a combination of motion-based and spatio-temporal segmentation [39] to segment the objects of interest.

Almost all of the statistical background subtraction approaches [2, 21, 28, 30, 32, 34] assume that the background is normally distributed. However, this assumption does not always hold in real-world environments [10]. Optical flow based approaches can segment moving objects accurately based on the direction of the intensity gradient, but they are sensitive to illumination variations. Temporal differencing based methods produce holes in the segmented objects, so they are unsuitable when the entire region of a moving object has to be segmented. Further, most of the methods proposed in the literature tackle the segmentation problem for a specific application under a set of assumptions. In [33], motion segmentation was achieved with no prior assumptions, but the method requires a post-processing step to fill the holes in moving objects.

The majority of the works reported in the literature have been tested only on videos captured by normal or PTZ sensors. Moreover, the literature shows that although many approaches to motion segmentation have been attempted, developing a robust algorithm that can segment semantically meaningful objects from video remains a challenge [18, 25]. This leaves room for further research on a generic and efficient motion segmentation algorithm that works on videos of complex and dynamic environments captured by different kinds of sensors.


III. Overview of Proposed Method

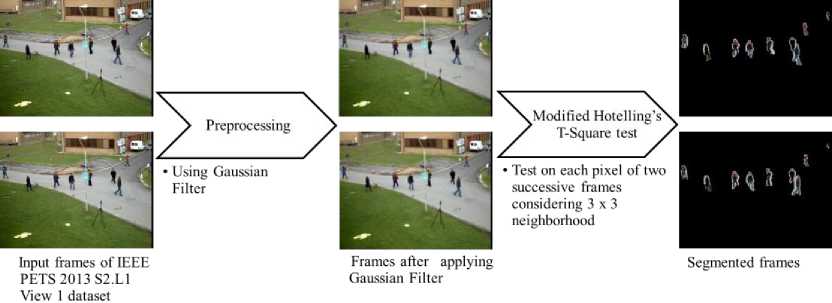

Motion segmentation is the process of extracting non-stationary objects from video frames. First, the input frames are preprocessed by convolving them with a Gaussian filter [29] to reduce noise. Next, the RGB color intensity values of the 3 x 3 spatial neighborhood of each pixel in two successive temporal frames are fed into either the statistical Hotelling's T-Square test or the modified Hotelling's T-Square test [13] to detect variations in the intensity values. Finally, moving objects are segmented based on the value of the test statistic. The overview of the proposed method is shown in Fig.1.


IV. The Proposed Method

Scenes captured by surveillance video sensors generally contain noise and suffer from illumination variations. Gaussian blur is widely used in many vision applications to reduce image noise [29]. In this work, smoothing is performed by applying a 2D 3 x 3 Gaussian filter to the input frames of the video sequence. Extensive experiments have also been carried out with other popular filters. Because those filters showed various disadvantages compared with the Gaussian filter, the 3 x 3 Gaussian filter was found to give convincing performance.
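The text does not state the standard deviation or the exact weights of the 3 x 3 Gaussian filter. The following minimal sketch therefore assumes the common binomial approximation [[1, 2, 1], [2, 4, 2], [1, 2, 1]] / 16 and assumes edge replication at the frame border. It operates on one grayscale channel stored as nested lists, and the function name `gaussian_blur_3x3` is hypothetical.

```python
# Assumed binomial approximation of a 3 x 3 Gaussian kernel; the weights sum to 16.
KERNEL = [[1, 2, 1],
          [2, 4, 2],
          [1, 2, 1]]
KERNEL_SUM = 16


def gaussian_blur_3x3(img):
    """Smooth a 2D grayscale image (list of lists) with the 3 x 3 kernel above.

    Border pixels are handled by clamping coordinates (edge replication),
    which is an assumption rather than something stated in the text.
    """
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    yy = min(max(y + dy, 0), h - 1)
                    xx = min(max(x + dx, 0), w - 1)
                    acc += KERNEL[dy + 1][dx + 1] * img[yy][xx]
            out[y][x] = acc / KERNEL_SUM
    return out


impulse = [[0, 0, 0],
           [0, 16, 0],
           [0, 0, 0]]
print(gaussian_blur_3x3(impulse)[1][1])  # 4.0, i.e. the center weight 4/16 times 16
```

Because the weights are normalized, a constant image passes through unchanged while isolated noise spikes are spread over their neighbors.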

In color video frames, each pixel is composed of red, green and blue intensities. In this work, the mean of the RGB color intensity values of each pixel $P(w,h)$, computed using (1), is used as the pixel value for segmenting moving objects.

$$P(w,h) = \frac{P(w,h)_R + P(w,h)_G + P(w,h)_B}{3} \qquad (1)$$

where $w$ and $h$ are the pixel coordinates. $w$ ranges from 0 to the maximum width of the frame in the horizontal direction, and $h$ ranges from 0 to the maximum height of the frame in the vertical direction.
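Eq. (1) can be illustrated with a short sketch that converts an RGB frame, stored as rows of (R, G, B) tuples, into the mean-intensity values used by the rest of the method. The frame layout and the function name `mean_rgb_frame` are assumptions made for illustration.

```python
def mean_rgb_frame(rgb_frame):
    """Replace each (R, G, B) pixel by the mean of its three channels, as in eq. (1)."""
    return [[(r + g + b) / 3 for (r, g, b) in row] for row in rgb_frame]


frame = [[(30, 60, 90), (255, 255, 255)]]
print(mean_rgb_frame(frame))  # [[60.0, 255.0]]
```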

Fig.1. The Proposed Motion Segmentation Algorithm using Modified Hotelling's T-Square Test

A. Motion Segmentation using Hotelling’s T-Square test

Statistics is a tool for describing and understanding variability in data. The Hotelling's T-Square test (2) is a popular statistical test for determining whether the mean vectors of two populations differ significantly [13, 26].

$$T^2 = \frac{n_1 n_2}{n_1 + n_2} \left(\bar{x}_1 - \bar{x}_2\right)^{T} S^{-1} \left(\bar{x}_1 - \bar{x}_2\right) \qquad (2)$$

where $\bar{x}_1$ and $\bar{x}_2$ are the mean vectors of the two populations, $S^{-1}$ is the inverse of the covariance matrix, and $n_1$ and $n_2$ are the numbers of observations.
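The standard relation between the two-sample $T^2$ statistic and the F distribution (see, e.g., Johnson and Wichern [13]) is recalled below for reference. It assumes multivariate normal populations with a common covariance matrix, which the text does not state explicitly. Here $p$ denotes the dimension of the mean vectors:

$$\frac{n_1 + n_2 - p - 1}{p\,(n_1 + n_2 - 2)}\,T^2 \sim F_{p,\; n_1 + n_2 - p - 1}$$

For $p = 1$ the scaling factor equals 1, and $T^2$ reduces to the square of the pooled two-sample t statistic, distributed as $F_{1,\; n_1 + n_2 - 2}$. This is one way to read the $(n_1 + n_2 - 2)$ degrees of freedom used for the critical value in this section.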

To segment motion pixels in a video frame, we need to identify the pixels whose values vary drastically from previous observations. A single pixel value from the current and previous frames is not sufficient to detect variability, so this work considers the spatial 3 x 3 neighborhood of each pixel in two successive frames, $P(w,h)_t$ and $P(w,h)_{t+1}$. These neighborhood values from successive temporal frames are fed into the Hotelling's T-Square test. Hence, two vectors, each consisting of 9 mean RGB values, are built for the test.
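The paragraph above does not fully specify how the 3 x 3 neighborhood is arranged into observations for the test. One reasonable reading is assumed here: each of the 9 neighbors is treated as a $p = 3$ dimensional (R, G, B) observation, so both samples have $n_1 = n_2 = 9$. The sketch computes the two-sample statistic of (2) with a pooled covariance matrix. The `ridge` term added to the diagonal is a hypothetical safeguard for flat patches, whose covariance matrix is singular, and is not part of the original method. All function names are illustrative.

```python
def mean_vector(obs):
    """Component-wise mean of a list of p-dimensional observations (tuples)."""
    n, p = len(obs), len(obs[0])
    return [sum(o[k] for o in obs) / n for k in range(p)]


def pooled_covariance(a, b):
    """Pooled sample covariance matrix of two samples of p-dimensional observations."""
    p = len(a[0])
    s = [[0.0] * p for _ in range(p)]
    for sample in (a, b):
        m = mean_vector(sample)
        for o in sample:
            d = [o[k] - m[k] for k in range(p)]
            for i in range(p):
                for j in range(p):
                    s[i][j] += d[i] * d[j]
    dof = len(a) + len(b) - 2
    return [[v / dof for v in row] for row in s]


def solve(matrix, rhs):
    """Solve matrix @ x = rhs by Gauss-Jordan elimination with partial pivoting."""
    n = len(rhs)
    aug = [list(row) + [rhs[i]] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        pv = aug[col][col]
        if abs(pv) < 1e-12:
            raise ValueError("covariance matrix is singular")
        aug[col] = [v / pv for v in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [vr - f * vc for vr, vc in zip(aug[r], aug[col])]
    return [aug[i][n] for i in range(n)]


def hotelling_t2(a, b, ridge=1e-6):
    """Two-sample Hotelling's T-Square of eq. (2) with a pooled covariance matrix."""
    n1, n2 = len(a), len(b)
    diff = [x - y for x, y in zip(mean_vector(a), mean_vector(b))]
    s = pooled_covariance(a, b)
    for i in range(len(s)):
        s[i][i] += ridge  # hypothetical safeguard: keeps S invertible on flat patches
    x = solve(s, diff)  # x = S^{-1} (mean_a - mean_b)
    return n1 * n2 / (n1 + n2) * sum(d * v for d, v in zip(diff, x))


# Usage: two 3 x 3 neighborhoods, each pixel treated as one (R, G, B) observation.
patch_t = [(100 + k % 3, 110 + k // 3, 90 + (5 * k) % 7) for k in range(9)]
patch_t1 = [(r + 40, g + 35, b + 30) for (r, g, b) in patch_t]
print(hotelling_t2(patch_t, patch_t))   # identical neighborhoods give 0.0
print(hotelling_t2(patch_t, patch_t1))  # a large value suggests motion
```

The matrix solve in `hotelling_t2` runs once per pixel, and it is exactly this cost that the modified test in the next subsection avoids.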

The test statistic $T^2$ computed for each pixel is compared with the critical value $T_c$ to decide whether the difference between the pixel values is significant. The critical value is chosen from the F-table [13, 26] using $(n_1 + n_2 - 2)$ degrees of freedom at a predetermined level of significance ($\alpha = 0.5$). In this way, motion is detected for each pixel by taking its neighbors into account. The output frames $D_t$ and $D_{t+1}$, containing the extracted foreground objects, are generated from the frames $I_t$ and $I_{t+1}$ as follows:

$$D_m^{P(w,h)} = \begin{cases} \text{RGB of } I_m^{P(w,h)}, & \text{if } T^2_{P(w,h)} \geq T_c \\ 0, & \text{otherwise} \end{cases} \qquad (3)$$

where $T_c$ is the critical value, $m \in \{t, t+1\}$ and $I$ is the input frame.

B. Motion segmentation using modified Hotelling's T-Square test

Computing the Hotelling's T-Square test statistic requires multiplying the transposed mean difference vector by the inverse of the covariance matrix and then by the mean difference vector. These computations are expensive in real-time scenarios. In this work, the mean vectors of the two populations are therefore replaced by weighted means. The arithmetic mean assumes that all data in the population are of equal importance, but in real-world scenes this assumption does not always hold. When some values need to be given more importance, a weighted mean is more appropriate. The weighted mean of the 3 x 3 neighborhood values of each pixel in successive temporal frames is computed using (4). This reduces the two vectors of (2) to two single weighted mean values.

$$\mu_m^{N_9} = \frac{\sum_{j=1}^{9} \beta_j \, P(w,h)_m^{N_j}}{\sum_{j=1}^{9} \beta_j} \qquad (4)$$

where $\beta_j$ is the weight of the $j$-th neighbor $N_j$ and $m \in \{t, t+1\}$. The values of $\beta_j$ are chosen such that the weight of the center pixel is higher than the weights of its neighbors.
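The text does not list the values of $\beta_j$, only that the center weight must be the largest. The minimal sketch of eq. (4) below therefore uses hypothetical Gaussian-like weights (1, 2, 1 / 2, 4, 2 / 1, 2, 1) in row-major order.

```python
# Hypothetical weights beta_j for the 3 x 3 neighborhood in row-major order;
# the only property taken from the text is that the center weight is the largest.
BETA = [1, 2, 1,
        2, 4, 2,
        1, 2, 1]


def weighted_mean(neigh, beta=BETA):
    """Weighted mean mu^{N9} of nine mean-RGB values, as in eq. (4)."""
    if len(neigh) != 9 or len(beta) != 9:
        raise ValueError("expected a 3 x 3 neighborhood (9 values)")
    return sum(b * v for b, v in zip(beta, neigh)) / sum(beta)


neigh_t = [100, 100, 100,
           100, 180, 100,
           100, 100, 100]
print(weighted_mean(neigh_t))  # 120.0, versus about 108.9 for the plain arithmetic mean
```

With these weights a change at the center pixel moves the statistic more than the same change at a corner, which matches the stated intent of emphasizing the center.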

Since we are interested in measuring the degree of change between the two means, the covariance $\mathrm{cov}_{t,t+1}$ (5) of the 3 x 3 neighborhood mean RGB values of each pixel in successive frames is used instead of the covariance matrix of the Hotelling's T-Square test.

$$\mathrm{cov}_{t,t+1} = \frac{\sum_{j=1}^{9} \left(P(w,h)_t^{N_j} - \mu_t^{N_9}\right)\left(P(w,h)_{t+1}^{N_j} - \mu_{t+1}^{N_9}\right)}{9 - 1} \qquad (5)$$

Hence, the modified Hotelling's T-Square test statistic, which uses the covariance of the two populations and the square of the mean difference, is given in (6). Motion segmentation for each pixel in successive frames is performed by applying the modified Hotelling's T-Square test to the $\mu_t^{N_9}$ and $\mu_{t+1}^{N_9}$ values:

$$t^2_{P(w,h)} = \frac{n_1 n_2}{n_1 + n_2} \cdot \frac{\left(\mu_t^{N_9} - \mu_{t+1}^{N_9}\right)^2}{\mathrm{cov}_{t,t+1}} \qquad (6)$$
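Eqs. (4) to (6) for a single pixel are combined in the minimal sketch below. Several details are assumptions not fixed by the text:

- the weights in `BETA`;
- the $(9 - 1)$ normalization of the covariance;
- the `eps` guard that replaces a near-zero covariance to avoid division by zero.

The formula as written can also yield a negative statistic when the two neighborhoods are negatively correlated. The sketch leaves such values unchanged, so they fall below any positive threshold.

```python
# Hypothetical weights beta_j (row-major 3 x 3); the text only requires that
# the center weight be larger than the weights of its neighbors.
BETA = [1, 2, 1,
        2, 4, 2,
        1, 2, 1]


def weighted_mean(neigh, beta=BETA):
    """Weighted mean of nine mean-RGB values, eq. (4)."""
    return sum(b * v for b, v in zip(beta, neigh)) / sum(beta)


def neighborhood_cov(neigh_t, neigh_t1, mu_t, mu_t1):
    """Covariance of eq. (5); the (9 - 1) denominator is an assumed sample normalization."""
    s = sum((a - mu_t) * (b - mu_t1) for a, b in zip(neigh_t, neigh_t1))
    return s / (len(neigh_t) - 1)


def modified_t2(neigh_t, neigh_t1, eps=1e-6):
    """Modified Hotelling's T-Square statistic of eq. (6) for one pixel."""
    n1 = n2 = len(neigh_t)  # nine observations per frame
    mu_t, mu_t1 = weighted_mean(neigh_t), weighted_mean(neigh_t1)
    cov = neighborhood_cov(neigh_t, neigh_t1, mu_t, mu_t1)
    if abs(cov) < eps:
        cov = eps  # hypothetical guard against division by zero on flat patches
    return n1 * n2 / (n1 + n2) * (mu_t - mu_t1) ** 2 / cov


neigh_t = [10, 20, 30, 40, 50, 60, 70, 80, 90]
neigh_t1 = [v + 10 for v in neigh_t]  # same texture, brightness shifted by 10
print(round(modified_t2(neigh_t, neigh_t1), 6))  # 0.6
```

Unlike the matrix form, this needs only two weighted sums and one covariance per pixel, with no matrix inversion.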

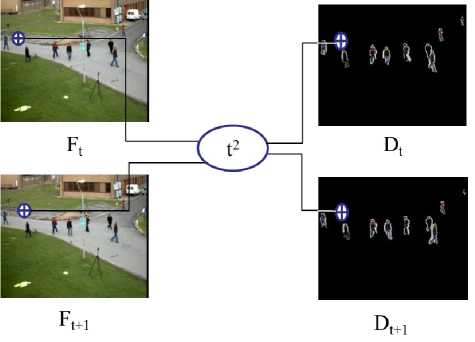

The test statistic computed for each pixel using (6), as illustrated in Fig.2, is compared with a threshold $\tau$ to measure the significance of the difference between the pixel values. The threshold value is chosen empirically. The output frames $D_t$ and $D_{t+1}$, which contain the foreground objects extracted from the input frames $I_t$ and $I_{t+1}$, are generated using (7), as shown in Fig.2.

$$D_m^{P(w,h)} = \begin{cases} \text{RGB of } I_m^{P(w,h)}, & \text{if } t^2_{P(w,h)} \geq \tau \\ 0, & \text{otherwise} \end{cases} \qquad (7)$$

where $m$ and $I$ are as defined in the previous subsection.
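The output step of eq. (7) can be sketched as follows. The sketch assumes that the per-pixel statistics have already been computed into a map with the same shape as the frame. It also encodes the "0, otherwise" branch as a black (0, 0, 0) pixel, which is an assumption about representation. The name `segment_frame` is hypothetical.

```python
BLACK = (0, 0, 0)  # assumed encoding of the "0, otherwise" branch of eq. (7)


def segment_frame(rgb_frame, t2_map, tau):
    """Build the output frame D_m of eq. (7).

    rgb_frame: rows of (R, G, B) tuples of the input frame I_m (m = t or t + 1).
    t2_map:    rows of per-pixel statistics computed with eq. (6).
    tau:       empirically chosen threshold.
    """
    return [
        [px if t2 >= tau else BLACK for px, t2 in zip(rgb_row, t2_row)]
        for rgb_row, t2_row in zip(rgb_frame, t2_map)
    ]


frame = [[(10, 20, 30), (200, 180, 160)]]
t2 = [[0.4, 25.0]]
print(segment_frame(frame, t2, tau=5.0))  # [[(0, 0, 0), (200, 180, 160)]]
```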

Fig.2. Test Statistic Computation

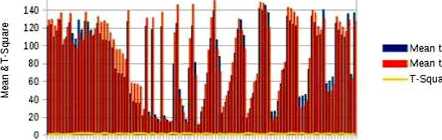

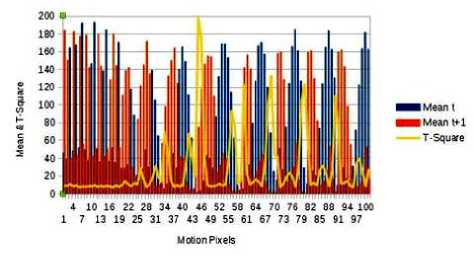

Fig.3 and Fig.4 show the test statistic and weighted mean values for 100 non-motion and 100 motion pixels of frames frame0001 and frame0002 of the IEEE PETS 2013 S2.L1 View1 dataset. Fig.3 clearly shows that the test statistic is very low when the 3 x 3 RGB weighted mean values of successive frames are almost the same, which indicates non-motion pixels. Fig.4 shows that, for motion pixels, the test statistic is high (greater than the threshold $\tau$) when the 3 x 3 RGB weighted mean values of successive frames vary drastically. Thus, the proposed modified Hotelling's T-Square test statistic provides a clear criterion for classifying a pixel as a motion or non-motion pixel.

Fig.3. Sample 3 x 3 Weighted Mean RGB and T-Square Values for the First 100 Non-motion Pixels.

Fig.4. Sample 3 x 3 Weighted Mean RGB, T-Square Values for First 100 Motion Pixels


V. Experimental Results and Discussions

The methods have been implemented in C++ on an Intel Dual Core 1.8 GHz machine running the Ubuntu operating system. The challenging IEEE PETS (2006, 2009 and 2013) [22, 23, 24] and IEEE Change Detection (CD) 2014 [12] datasets are used for the experiments. The datasets contain both indoor and outdoor sequences with varying illumination, captured by various kinds of sensors such as thermal, PTZ, aerial and night vision sensors. The proposed methods have been tested on more than 50000 frames of the IEEE CD dataset and more than 10000 frames of the IEEE PETS datasets to demonstrate their effectiveness. The results of the proposed method and the working code will be posted on a community blog for verification.

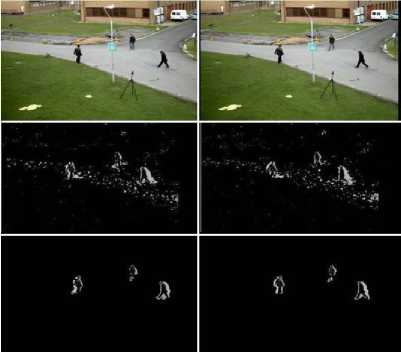

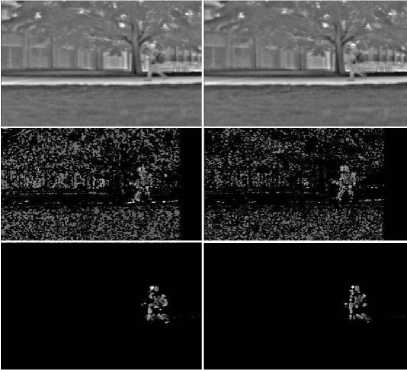

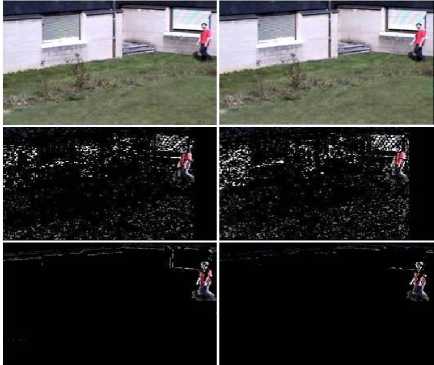

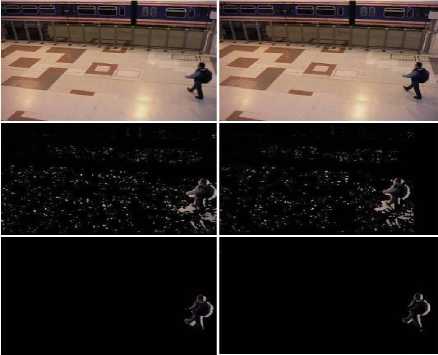

Representative results of the Hotelling's T-Square test method are shown in the second row of Fig.5 (IEEE PETS 2013 S2.L1 View1 dataset), Fig.6 (IEEE CD thermal sensor dataset), Fig.7 (IEEE CD night vision sensor dataset), Fig.8 (IEEE CD PTZ camera dataset) and Fig.9 (IEEE PETS 2006 dataset).

Representative results of the modified Hotelling's T-Square test are shown in the third row of the same figures. Results for other sequences of the IEEE CD dataset are shown in Fig.10 (IEEE CD night vision Bridge Entry dataset), Fig.11 (IEEE CD night vision Street Corner dataset), Fig.12 (IEEE CD night vision Trauma dataset) and Fig.13 (IEEE CD dynamic background Fall dataset).

The modified Hotelling's T-Square test method can segment moving objects against dynamic backgrounds as well as in surveillance video sequences with illumination variations. Compared with the results of the Hotelling's T-Square test method (Fig.5 through Fig.9), the modified method segments motion more accurately and with less computation time.

Fig.5. Example results for IEEE PETS 2013 People Tracking S2.L1 View 1.

Fig.6. Example results for IEEE CD Thermal Frames.

Fig.7. Example results for IEEE CD Night Vision Fluid Highway Frames.

Fig.8. Example results for IEEE CD PTZ ZoomInZoomOut Frames.

Fig.9. Example results for IEEE PETS 2006 Frames.

Fig.10. Example results for IEEE CD Night Vision Bridge Frames.

Fig.11. Example results for IEEE CD Night Vision Street Corner Frames.

Fig.12. Example results for IEEE CD Night Vision Trauma Frames.

Fig.13. Example results for IEEE CD Dynamic Fall Frames.

Fig.15. (a) IEEE CD Night Vision Fluid Highway Frame no. 968; (b) Result of SOBS algorithm; (c) Result of proposed method.

Fig.16. (a) IEEE PETS 2006 Frame no. 977; (b) Result of SOBS algorithm; (c) Result of proposed method.

To further validate the efficacy of the proposed method, we qualitatively compared our results with SOBS [19], one of the top-ranked methods of the CVPR 2012 Change Detection Workshop. Fig. 14 through Fig. 16 compare the results of the SOBS algorithm and the proposed technique.

In Fig. 16, the proposed method does not segment the complete body of the person at the center of the frame, whereas SOBS does. This is because the person remains still for a long time and only the lower portion of the body is in motion.

The results of the proposed modified Hotelling's T-Square test statistic method have been evaluated using the performance metrics precision, recall and F-measure [12]. The evaluations use the sample ground truth frames of the IEEE CD dataset [12].

The three performance metrics are computed as follows:

$$\text{Precision} = \frac{TP}{TP + FP} \qquad (8)$$

$$\text{Recall} = \frac{TP}{TP + FN} \qquad (9)$$

$$F\text{-measure} = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}$$

Fig.14. (a) IEEE CD PTZ ZoomInZoomOut Frame no. 961; (b) Result of SOBS algorithm; (c) Result of proposed method.

where TP (true positives) is the number of pixels correctly labeled as motion pixels, FP (false positives) is the number of pixels incorrectly labeled as motion pixels, and FN (false negatives) is the number of motion pixels incorrectly labeled as non-motion pixels. Precision is the fraction of retrieved instances that are relevant, recall is the fraction of relevant instances that are retrieved, and the F-measure is the harmonic mean of precision and recall.
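The metrics (8), (9) and the F-measure can be computed from a predicted mask and a ground-truth mask as shown in the sketch below. It assumes both masks are binary (1 = motion pixel). The benchmark's ground-truth frames may contain additional labels, which are assumed here to have been mapped to 0/1 or excluded beforehand. Returning 0.0 when a denominator is zero is a convention chosen for this sketch, and the name `evaluate` is hypothetical.

```python
def evaluate(pred, truth):
    """Pixel-level precision, recall and F-measure for binary motion masks.

    pred, truth: 2D lists of 0/1 labels (1 = motion pixel) with identical shape.
    """
    tp = fp = fn = 0
    for prow, trow in zip(pred, truth):
        for p, t in zip(prow, trow):
            if p and t:
                tp += 1          # motion pixel correctly detected
            elif p and not t:
                fp += 1          # background pixel labeled as motion
            elif t and not p:
                fn += 1          # motion pixel missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f


pred = [[1, 1, 0],
        [0, 1, 0]]
truth = [[1, 0, 0],
         [0, 1, 1]]
print(evaluate(pred, truth))  # TP = 2, FP = 1, FN = 1, so all three metrics are 2/3
```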

The mean values of the performance metrics obtained for the proposed method and for SOBS are shown in Table 1. Note that the results of the algorithm are raw; no post-processing has been applied.

Table 1. Performance Metrics

| Dataset | Precision (SOBS) | Precision (Proposed) | Recall (SOBS) | Recall (Proposed) | F-measure (SOBS) | F-measure (Proposed) |
|---|---|---|---|---|---|---|
| IEEE PETS 2013 S2.L1 View1 | NA | 0.73 | NA | 0.68 | NA | 0.70 |
| IEEE PETS 2006 | 0.71 | 0.77 | 0.74 | 0.66 | 0.72 | 0.71 |
| IEEE CD night vision sensor Fluid Highway | 0.48 | 0.47 | 0.75 | 0.53 | 0.58 | 0.50 |
| IEEE CD PTZ Zoom in Zoom out | 0.02 | 0.33 | 0.70 | 0.50 | 0.05 | 0.40 |
| IEEE CD thermal sensor Park | 0.96 | 0.77 | 0.43 | 0.61 | 0.60 | 0.67 |

Table 1 shows that the performance metrics obtained for the IEEE CD night vision sensor and PTZ sensor datasets are lower than those for the other datasets.

The night vision dataset was captured under extreme conditions. In the PTZ dataset, the frames captured during the zoom-in/zoom-out operation cause the algorithm to treat the background as foreground as well.

Overall, the proposed modified Hotelling's T-Square test statistic method performs comparably to SOBS, and on the IEEE CD PTZ Zoom in Zoom out dataset it performs better than SOBS. Furthermore, SOBS is based on background construction and subtraction, which is related to Stauffer and Grimson's work [30]. The proposed method, by contrast, does not construct any background image. It segments motion within a temporal statistical framework, which is more sensitive than background-construction-based methods.

The average computation time required to process a frame of size 320 x 240 using the Hotelling's T-Square test and the modified Hotelling's T-Square test statistic is shown in Table 2.

Table 2. Computing Time Required for Motion Segmentation

| Method | Average time (ms) |
|---|---|
| Hotelling's T-Square | 650 |
| Modified Hotelling's T-Square | 50 |


VI. Conclusion

In this paper, we proposed two generic statistical spatio-temporal methods for motion segmentation in surveillance videos. In the first method, the Hotelling's T-Square test statistic is used to segment motion pixels by considering the spatial neighborhood RGB color intensity values of successive frames. In the second method, a modified version of the Hotelling's T-Square test statistic is proposed for motion segmentation. Comparative results show that the modified Hotelling's T-Square test method outperforms the original Hotelling's T-Square test formula in both computation time and accuracy. Experimental evaluations and comparisons using performance metrics have also been conducted to demonstrate the efficacy of the proposed method. The results show that the method satisfactorily segments moving objects in complex and dynamic environments.

References

- J. Ahn, C. Choi, S. Kwak, K. Kim, and H. Byun. Human tracking and silhouette extraction for human robot interaction systems. Pattern Analysis and Applications, 12(2):167–177, 2009.

- N. Armanfard, M. Komeili, and E. Kabir. TED: A texture-edge descriptor for pedestrian detection in video sequences. Pattern Recognition, 45(3):983–992, 2012.

- M. Chandrajit, R. Girisha, and T. Vasudev. Motion segmentation from surveillance videos using t-test statistics. In ACM India Computing Conference (COMPUTE '14). ACM, New York, 2014.

- M. Chandrajit, R. Girisha, and T. Vasudev. Motion segmentation from surveillance video sequences using chi-square statistics. In Emerging Research in Computing, Information, Communication and Applications, volume 2, pages 365–372. Elsevier, 2014.

- C. Lee, S. Lin, C. Lee, and C. Yang. An efficient continuous tracking system in real-time surveillance application. Journal of Network and Computer Applications, 35(3):1067–1073, 2012. Special Issue on Trusted Computing and Communications.

- F. Cheng and Y. Chen. Real time multiple objects tracking and identification based on discrete wavelet transform. Pattern Recognition, 39(6):1126 – 1139, 2006.

- Y. Chen, C. Chen, C. Huang, and Y. Hung. Efficient hierarchical method for background subtraction. Pattern Recognition, 40(10):2706 – 2715, 2007.

- E. Dallalazadeh and D. S. Guru. Moving vehicles extraction in traffic videos. IJMI, 3(4):236–240, 2011.

- S. Denman, C. Fookes, and S. Sridharan. Group segmentation during object tracking using optical flow discontinuities. In Image and Video Technology (PSIVT), 2010 Fourth Pacific-Rim Symposium on, pages 270–275, Nov 2010.

- A. Elgammal, R. Duraiswami, D. Harwood, and L. Davis. Background and foreground modeling using nonparametric kernel density estimation for visual surveillance. Proceedings of the IEEE, 90(7):1151–1163, Jul 2002.

- R. Girisha. Some New Methodologies to Track Humans in a Single Environment using Single and Multiple Cameras-Doctoral Thesis. University of Mysore, Mysore, 2010.

- N. Goyette, P. Jodoin, F. Porikli, J. Konrad, and P. Ishwar. Changedetection.net: A new change detection benchmark dataset. In Computer Vision and Pattern Recognition Workshops (CVPRW), 2012 IEEE Computer Society Conference on, pages 1–8, June 2012.

- R. A. Johnson and D. W. Wichern. Applied Multivariate Statistical Analysis. Pearson Prentice Hall, 2007.

- J. Heikkilä and O. Silvén. A real-time system for monitoring of cyclists and pedestrians. In Visual Surveillance, 1999. Second IEEE Workshop on (VS 99), pages 74–81, Jul 1999.

- T. Lim, B. Han, and J. H. Han. Modeling and segmentation of floating foreground and background in videos. Pattern Recognition, 45(4):1696 – 1706, 2012.

- C. Liu, P. C. Yuen, and G. Qiu. Object motion detection using information theoretic spatio temporal saliency. Pattern Recognition, 42(11):2897 – 2906, 2009.

- M. T. Lopez, A. F. Caballero, M. A. Fernandez, J. Mira, and A. E. Delgado. Visual surveillance by dynamic visual attention method. Pattern Recognition, 39(11):2194 – 2211, 2006.

- L. Zappella, X. Lladó, and J. Salvi. New Trends in Motion Segmentation. In Pattern Analysis and Applications, InTech, 2009.

- L. Maddalena and A. Petrosino. The SOBS algorithm: What are the limits? In Computer Vision and Pattern Recognition Workshops (CVPRW), 2012 IEEE Computer Society Conference on, pages 21–26, June 2012.

- M. Sedky, M. Moniri, and C. C. Chibelushi. Spectral-360: A physics-based technique for change detection. In Proc. of IEEE Workshop on Change Detection, 2014.

- R. S. Munoz. A bayesian plan-view map based approach for multiple-person detection and tracking. Pattern Recognition, 41(12):3665 – 3676, 2008.

- www.cvg.rdg.ac.uk/PETS2006/data.html [17 March 2016].

- www.cvg.rdg.ac.uk/PETS2009/data.html [17 March 2016].

- www.cvg.rdg.ac.uk/PETS2013/a.html [17 March 2016].

- A. Prati, R. Cucchiara, I. Mikic, and M. Trivedi. Analysis and detection of shadows in video streams: a comparative evaluation. In Computer Vision and Pattern Recognition, 2001. CVPR 2001. Proceedings of the 2001 IEEE Computer Society Conference on, volume 2, pages II–571–II–576 vol.2, 2001.

- A. C. Rencher. Methods of multivariate analysis. Wiley-Interscience publication, 2001.

- R. Wang, F. Bunyak, G. Seetharaman, and K. Palaniappan. Static and moving object detection using flux tensor with split Gaussian models. In Proc. of IEEE Workshop on Change Detection, 2014.

- A. Sadaf, J. Ali, and M. Irfan. Human identification on the basis of gaits using time efficient feature extraction and temporal median background subtraction. IJIGSP, 6(3):35–42, 2014. DOI: 10.5815/ijigsp.2014.03.05

- L. G. Shapiro and G. C. Stockman. Computer Vision. Prentice Hall, 2001.

- C. Stauffer and W. E. L. Grimson. Adaptive background mixture models for real-time tracking. In Computer Vision and Pattern Recognition, 1999. IEEE Computer Society Conference on, volume 2, 1999.

- B. Subudhi, S. Ghosh, and A. Ghosh. Change detection for moving object segmentation with robust background construction under Wronskian framework. Machine Vision and Applications, 24(4):795–809, 2013.

- S. Johnsen and A. Tews. Real-time object tracking and classification using a static camera. In IEEE ICRA, 2009.

- S. Murali and R. Girisha. Segmentation of motion objects from surveillance video sequences using temporal differencing combined with multiple correlation. In Advanced Video and Signal Based Surveillance, 2009. AVSS '09. Sixth IEEE International Conference on, pages 472–477, Sept 2009.

- H. Wang and D. Suter. A consensus-based method for tracking: Modelling background scenario and foreground appearance. Pattern Recognition, 40(3):1091–1105, 2007.

- X. Wang. Intelligent multi-camera video surveillance: A review. Pattern Recogn. Lett., 34(1):3–19, Jan 2013.

- W. Hu, T. Tan, L. Wang, and S. Maybank. A survey on visual surveillance of object motion and behaviors. Systems, Man, and Cybernetics, Part C: Applications and Reviews, IEEE Transactions on, 34(3):334–352, Aug 2004.

- J. Yang, B. Price, X. Shen, Z. Lin, and J. Yuan. Fast appearance modeling for automatic primary video object segmentation. IEEE Transactions on Image Processing, 25(2):503–515, Feb 2016.

- A. Yilmaz, O. Javed, and M. Shah. Object tracking: A survey. ACM Comput. Surv., 38(4), Dec 2006.

- D. Zhang and G. Lu. Segmentation of moving objects in image sequence: A review. Circuits, Systems and Signal Processing, 20(2):143–183, 2001.

- S. Zhang, D. A. Klein, C. Bauckhage, and A. B. Cremers. Fast moving pedestrian detection based on motion segmentation and new motion features. Multimedia Tools and Applications, pages 1–20. Springer, 2015.