Multi featured fuzzy based block weight assignment and block frequency map model for transformation invariant facial recognition

Authors: Kapil Juneja, Chhavi Rana

Journal: International Journal of Image, Graphics and Signal Processing (IJIGSP)

Issue: vol. 10, no. 3, 2018.


Camera misalignment or a jerk during capture is common, and it results in a tilted or geometrically transformed photo. Accurate recognition of such misaligned facial images is one of the biggest challenges in real-time systems. In this paper, a fuzzy-enabled multi-parameter model is presented that assigns weights to individual image blocks. First, the model divides the image into square segments of fixed size. Each segment is analyzed in terms of directional, structural and texture features. Fuzzy rules are applied to the quantized feature values of each segment to assign it a weight. During recognition, each weighted block is compared with all weighted feature blocks of the training set. Weight-ratio exact mapping and one-to-all mapping methods are used to determine the overall matching accuracy. The work is applied to the FERET and LFW datasets under rotational, translational and skew transformations, and it is compared against the KPCA and ICA methods. The transformation-specific observations show that the model improves accuracy by up to 30% for rotational and skewed transformations and by up to 11% for translation.

Keywords: Transformation Invariant, Misaligned, Rotational, Structural, Block Featured

Short URL: https://sciup.org/15015942

IDR: 15015942 | DOI: 10.5815/ijigsp.2018.03.01


Published Online March 2018 in MECS DOI: 10.5815/ijigsp.2018.03.01

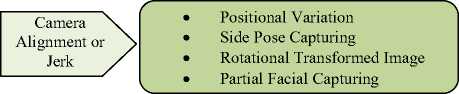

Facial photo capture cannot be confined within standardized constraints. Variations between capture instances arise from changes in the environment, lighting, camera device and the photographer. Today, many facial feature processing methods exist, so these variations are treated as common analytic vectors rather than critical challenges. Even so, available recognition systems are not very effective at handling them. As the intensity of these variations increases, or when more than one variation occurs at once, recognition systems fail to provide exact answers. In this paper, a feature-weighted method is provided to address these familiar problems under strong and multiple critical variations. Some of the common problems and their relatively high impact on recognition rate are shown in figure 1.

Fig.1. Common Problems and Critical Challenges to Face Recognition

To provide effective recognition against the complexities defined in figure 1, the foremost approach adopted by researchers is to normalize the image during the preprocessing stage. Earlier contributions show that multiple solutions are applied before the feature extraction phase to achieve accurate recognition. The reliability of these methods depends on the prior estimation applied to the available image set. The variations are separated into static and dynamic evaluation methods, based on which relative adjustments can be applied to the facial image. Static aspects such as firmness, size and format can be normalized without any algorithmic evaluation. Dynamic aspects involve multiple perspectives and are evaluated so that normalization in terms of camera focus, position, resolution and scale can be achieved. Once the evaluation phase is complete, multi-mode operations are applied to transform the images with respect to the multi-aspect variations. However, given these complexities, an individual method or operation is not sufficient to achieve a substantial result. In this paper, a feature-based aspect modelling method is provided to improve facial recognition against multi-mode variations.

The scope of face recognition also extends to many real-time applications [20] beyond identity recognition. Facial expression classification [24], identification of individuals in group photos [29][25], gender identification and age estimation are the most common applications explored by researchers in recent years. These applications face challenges such as occlusion [25][26], illumination variance [19][21][26], contrast imbalance [27] and head or pose variations [28]. Researchers have proposed numerous algorithms either to rectify images against these challenges or to extract variation-robust features, so that face recognition accuracy improves despite them. Facial region extraction [29], noise rectification, and color, contrast and alignment adjustments have been integrated into the preprocessing stage to transform the facial image into a normalized form [26][27][28][29]. Various statistical [24], textural [20][21][25] and other variation-robust feature descriptors have been applied to represent the face with reduced, effective dimensions. At the final stage, classification algorithms are applied to the extracted features to recognize the face accurately in real environments.

In this paper, a fuzzy rule-based multi-aspect block feature mapping framework is presented to achieve high recognition rates. This section has discussed the common facial recognition problems and the related complexities of the recognition process. Section II explains earlier work by other researchers. Section III describes the proposed framework along with the algorithms of each process stage. Section IV presents the experiments on different datasets and the comparative evaluations. Section V concludes the work.


II. Related Work

Researchers have already reported results on various problems in facial identification. These problems arise from deviations between the database images used for learning and the pictures captured in real time. Differences in lighting [1], phase, position and head movement are some of the common problems that readily appear when facial images are captured. Some researchers addressed the illumination problem in the preprocessing stage, and others in the feature generation stage. A selective feature extraction method [1] was proposed to compensate for illumination while discarding its impact on the picture. An intensity-difference-based feature selection method [3] was also proposed. Age difference [2][7][11] is another common problem when facial images are captured at two instances separated by a large time gap; relative feature patterns are selected to capture the stronger features from the facial pictures. To provide the most accurate recognition, the deviation between the captured image and the reconstructed image must be minimized. Different measures, including MSE, are used to analyze these differences, and transformations [4][6] are applied to reduce the conflicts. An expression- and illumination-invariant [9] face recognition method was proposed in [5] using Fisher mapping, Linear Discriminant Analysis and eigenspace mapping; the authors applied it to multiple databases and achieved accuracy close to 100%. The work in [8] employs the same eigen feature space to achieve recognition under pose differences. Other feature vectors used by researchers to improve recognition accuracy under multiple varying parameters include DCT, Nearest Neighbor Discriminant Analysis [10], LBP [12], Gabor filters [13] and textural features [18]. These features are used individually or collectively to improve recognition accuracy under head movement [14], pose variation [15], illumination variation [16][17], age variation, etc. In this paper, an aspect-variant analysis is presented that uses static and dynamic equalization along with multi-feature analysis. The model and the algorithmic approach are described in the following sections along with the experiments.

In recent years, researchers have proposed face normalization, feature extraction and recognition methods to improve face recognition accuracy against real-time appearance-level challenges [20]. Javed et al. [19] applied mathematical filters to normalize the projection variance of facial images; correlation filters and weight-matrix-processed PCA (Principal Component Analysis) were used to improve the performance of the face recognition system. Different forms of Local Binary Pattern (LBP) [21] were used as face texture descriptors to improve performance on noise- and illumination-sensitive facial images. SURF (Speeded Up Robust Features) [22], a local feature descriptor, was combined with LDA (Linear Discriminant Analysis) to improve face recognition under illumination and pose variations. Nonlinear mapping with a kernel extreme learning machine (KELM) was proposed by Goel et al. [23] to handle pose variations.

In [26], the image is transformed into occlusion- and illumination-insensitive signal forms to improve the robustness of facial recognition. The ring segmentation method [27] was applied to make dynamic, feature-adaptive decisions that handle the contrast issue in facial recognition. Deng et al. [30] proposed lighting-aware face recovery and description using fiducial landmarks; their frontalization technique, processed with symmetric features, was used to outperform deep learning methods under varying pose and lighting. Drastic changes in the query image can occur in real time because of expression [31] or emotion variations. The authors of [31] used the contourlet transform to exploit its directionality and anisotropy properties, and applied feature fusion to achieve expression-invariant face recognition. Muqeet et al. [32] used the interpolation-based directional wavelet transform (DIWT) and local binary pattern (LBP) together to extract pose- and expression-robust features. Their directional assessment method with quadtree partitioning identified effective local regions, which improved the efficiency of face recognition.


III. Proposed Model

-

3.1 Squared Block Segmentation

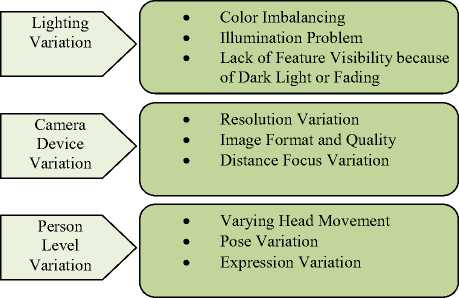

Real time face image capturing in complex environments can involve more than one variation in geometric and feature perspectives. In this segment, a fuzzy based multi-featured block adaptive weight assignment model is shown to improve the identification under these common problems with critical impact. The proposed model is based on feature analytical evaluation under multiple parameters to identify the invariant adaptive structural analysis. This fuzzy based aggregator featured model is depicted in figure 2.

Here figure 2 is showing that the mannequin will be processed on facial database captured in a normalized environment, whereas the input image can be a real time misaligned image. The complete study is separated into four major stages applied in parallel to both real time images as well as on database images. The beginning stage is here defined to apply equalized squared block segmentation over the pictures. Once the blocks are divided, each block is under content and structural aspects and represented by three different features. In the following stage, the featured values are qualified under fuzzy operators and represented as the block score. These characteristics are also combined using fuzzy operators to complete block cost. Apiece of the interior and outer blocks are transformed to featured numerals. At the last level, the score of the input image is mapped to each of database images to discern the most mapped images. Each of the integrated work stage is explained in this division.

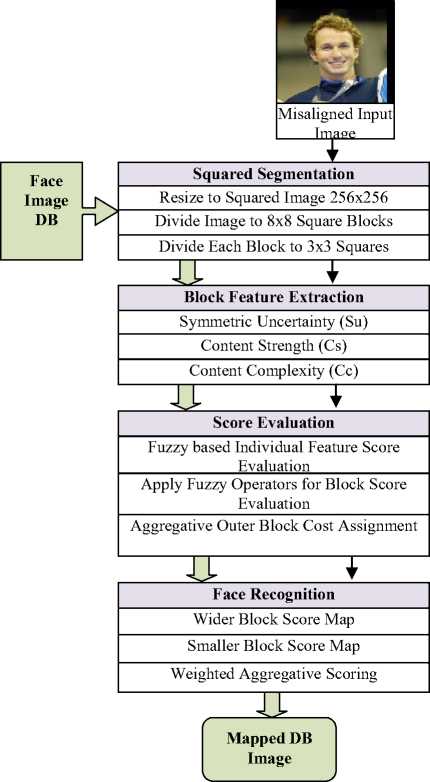

To give the fuzzy adaptive featured mapping to cover the invariance in capturing images, it is required to first split the picture into smaller sections. A static segmentation process method is recorded here to split the image in outer and interior blocks. For static process, the image is transformed to a normalized size of 256x256. This normalized image is split into smaller blocks of 8x8

which are considered as outer blocks. Each of the outer block is later on divided in 3x3 inner blocks as described in figure 3. Today the feature extraction procedure is applied and quantified using fuzzy operators which are explicated in the following subsections.

Fig.2. Fuzzy Aggregative Multi-Featured Model

Fig.3. Squared Block Segmentation
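The static segmentation step can be sketched in Python with NumPy. This is a minimal sketch, not the authors' implementation: the nearest-neighbour resize, the function names and the reading of "blocks of 8x8" as 8x8-pixel outer blocks are assumptions. Because 8 is not a multiple of 3, `np.array_split` is used so that the 3x3 inner grid receives rows and columns of 3, 3 and 2 pixels.

```python
import numpy as np

def resize_nearest(img: np.ndarray, size: int = 256) -> np.ndarray:
    """Nearest-neighbour resize of a 2-D grayscale image to size x size (assumed method)."""
    rows = np.arange(size) * img.shape[0] // size
    cols = np.arange(size) * img.shape[1] // size
    return img[rows[:, None], cols[None, :]]

def squared_segmentation(img: np.ndarray, size: int = 256, outer: int = 8, inner_grid: int = 3):
    """Return (outer_block, inner_blocks) pairs; inner_blocks is an inner_grid x inner_grid split."""
    norm = resize_nearest(img, size)
    blocks = []
    for r in range(0, size, outer):
        for c in range(0, size, outer):
            ob = norm[r:r + outer, c:c + outer]
            # array_split tolerates sides that are not multiples of inner_grid (8 -> 3, 3, 2)
            inner = [ib
                     for band in np.array_split(ob, inner_grid, axis=0)
                     for ib in np.array_split(band, inner_grid, axis=1)]
            blocks.append((ob, inner))
    return blocks
```

If "8x8" is instead read as an 8x8 grid of outer blocks, calling `squared_segmentation(img, outer=32)` yields 64 outer blocks of 32x32 pixels, each still split into a 3x3 inner grid.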

-

3.2 Block Feature Extraction

After the block segments are generated, the next task is to transform these blocks into a quantified feature form. In this study, three features are used to evaluate the blocks; together they characterize both the structure and the content. This section presents the derivations used to generate these features. Let each segment block (SB) be assessed under three features, where N is the size of the block. The extracted features are Symmetric Uncertainty (Su), Content Strength (Cs) and Content Complexity (Cc).

3.2.1 Symmetric Uncertainty (Su)

Symmetric uncertainty is analyzed through discrete structural observations based on intensity differences. To generate it, the mutual intensity (Mi) of the block is first computed, as given in equation (1):

$$ Mi(SB) = -\sum_{i=1}^{256} SB(i)\,\log_2\big(SB(i)\big) \qquad (1) $$

Once the block information is derived, the relational discrete characterization must be observed so that the mutual information and the content gain can be obtained. Each block is observed relative to its surrounding blocks. Let SBX be the current block, observed relative to a surrounding block SBY, where SBY represents each of the neighbouring segments. The relational symmetric information is given by equation (2):

$$ Mi(SBX \mid SBY) = Mi(SBX) + Mi(SBY) - Mi\big(\lvert SBX - SBY \rvert\big) \qquad (2) $$

Before analyzing the symmetric correlation, a frequency map is also required so that the weight contribution of each individual block can be determined. The frequency map (Fm) of a block is given in equation (3):

$$ Fm(SB) = \frac{\sum_{i=1}^{N} \big(Mean(SB) - SB(i)\big)}{Max(SB)} \qquad (3) $$

Here, the content intensity gain is derived relative to the mean value and the maximum intensity of the block. Finally, the symmetric uncertainty of the block is given by equation (4):

$$ Su(SB, SBY) = 2 \times \left( \frac{Mi(SB) + Mi(SBY) - Mi(SB, SBY)}{Mi(SB) \times Mi(SBY)} \right) \times \frac{Fm(SB)}{Fm(SBY)} \qquad (4) $$

Here, SB is the currently evaluated block and SBY is the neighbouring block with respect to which the symmetric observations are made. This function generates the symmetric structure of each segmented block and is used as the first block feature.
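The symmetric uncertainty feature of equations (1) to (4) can be sketched in Python with NumPy. This is a minimal illustration under several assumptions the source does not pin down: Mi in equation (1) is read as the Shannon entropy (in bits) of the block's 256-bin gray-level histogram, so SB(i) is treated as the relative frequency of gray level i; the joint term Mi(SB, SBY) in equation (4) is taken to be the Mi(|SBX − SBY|) term of equation (2); and because signed deviations from the mean always sum to zero, the sketch of equation (3) uses absolute deviations. Blocks are assumed to hold 8-bit integer intensities, and the function names are illustrative only.

```python
import numpy as np

def mi(block: np.ndarray) -> float:
    """Eq. (1), read as Shannon entropy (bits) of the block's 256-bin gray-level histogram."""
    hist = np.bincount(block.astype(np.int64).ravel(), minlength=256)
    p = hist[hist > 0] / block.size  # skip empty bins (0 * log 0 is taken as 0)
    return float(-(p * np.log2(p)).sum())

def relational_mi(sbx: np.ndarray, sby: np.ndarray) -> float:
    """Eq. (2): Mi(SBX) + Mi(SBY) - Mi(|SBX - SBY|)."""
    diff = np.abs(sbx.astype(np.int64) - sby.astype(np.int64))
    return mi(sbx) + mi(sby) - mi(diff)

def fm(block: np.ndarray) -> float:
    """Eq. (3) with absolute deviations (assumption: the signed sum is always 0)."""
    b = block.astype(np.float64)
    peak = b.max()
    return float(np.abs(b.mean() - b).sum() / peak) if peak > 0 else 0.0

def su(sb: np.ndarray, sby: np.ndarray, eps: float = 1e-12) -> float:
    """Eq. (4): 2 * relational Mi / (Mi(SB) * Mi(SBY)) * Fm(SB) / Fm(SBY)."""
    denom = mi(sb) * mi(sby) * fm(sby)
    if denom < eps:
        return 0.0  # hypothetical convention for flat (zero-entropy) blocks
    return 2.0 * relational_mi(sb, sby) * fm(sb) / denom
```

The guard for flat blocks is needed because a uniform block has zero entropy, which would otherwise make the denominator of equation (4) vanish.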

3.2.2 Content Strength (Cs)

To evaluate the content of each block, intensity and energy level analysis is applied. The intensity observation is based on local extrema together with a directional scan. Intensity count analysis over the subsequent pixel map is used to select the feature points, and the textural information is decomposed through detailed pixel observation. The content strength (Cs) is given in equation (5):

$$ Cs(SB) = \frac{\sum_{i=1}^{N}\sum_{j=1}^{N} \big(SB(i) - SB(j)\big)(i - j)^{2}}{\cdots} \qquad (5) $$

Regression observation with frequency domain analysis and a scaling vector is applied. Each pixel value is analyzed relative to its surrounding pixels so that the corresponding intensity vector can be computed; the higher the pixel strength, the more adaptive the resulting difference value. This intensity observation is expressed in ratio form so that an intensity-difference-based aspect observation is obtained.

3.2.3 Content Complexity (Cc)

Another content parameter, combined with a distance-level weight function, is considered as the content complexity vector. Here, unified manifold estimation is applied to directional aspects, and radial analysis is performed within the block under angular variation. This content descriptor provides robustness against pose, head movement and positional differences. Connections and projections are taken from each arbitrary point with cooperative specification. The feature characterization is given in equation (6):

$$ Cc(SB) = \frac{Min_{r}(SB)}{Max_{r}(SB)} \times \sum d\big(SB_{\theta_1} - SB_{\theta_2}\big) \qquad (6) $$

Here, r is the radial coverage within the block, and θ1 and θ2 are the two directional instances on which the actual feature estimation is based.

This characterization is based on the minimum and maximum intensity difference observations, which yields more accurate feature generation and extraction. Including the directional aspect gives a more realistic observation, so the intensity derivation can be treated as the transformed feature value.

After the feature values are obtained for each inner block, the next step is to transform these values into block scores, as described in the next subsection.


3.3 Score Evaluation

After the aggregative feature estimation under all three parameters described in the previous section, the next step is to represent the parameter values through significant measures. A fuzzy inference system (FIS) is used for this block score evaluation. The FIS accepts the generated feature payload as input and transforms it into a normalized quantitative value based on the presence or absence of each feature. The fuzzifier is applied to all three features and represents each of them as an individual bit. Together, the three bits form a three-bit code that corresponds to a specific number between 0 and 7, which represents the score of the inner block, as shown in table 1.

Table 1. FIS-Based Block Score Evaluation

| Symmetric Uncertainty (Su) | Content Strength (Cs) | Content Complexity (Cc) | Score (SuCsCc) |
|---|---|---|---|
| HIGH | LOW | HIGH | 0 |
| HIGH | LOW | LOW | 1 |
| HIGH | HIGH | HIGH | 2 |
| HIGH | HIGH | LOW | 3 |
| LOW | LOW | HIGH | 4 |
| LOW | LOW | LOW | 5 |
| LOW | HIGH | HIGH | 6 |
| LOW | HIGH | LOW | 7 |
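Table 1 can be expressed as a lookup from the three fuzzified labels to the inner block score. The sketch below is an illustration only: the source does not say where each feature is cut into HIGH or LOW, so the crisp thresholds in the hypothetical helpers `fuzzify_bit` and `inner_block_score` are placeholders.

```python
# Table 1 as a lookup: (Su, Cs, Cc) fuzzified labels -> inner block score 0..7
TABLE_1 = {
    ("HIGH", "LOW", "HIGH"): 0,
    ("HIGH", "LOW", "LOW"): 1,
    ("HIGH", "HIGH", "HIGH"): 2,
    ("HIGH", "HIGH", "LOW"): 3,
    ("LOW", "LOW", "HIGH"): 4,
    ("LOW", "LOW", "LOW"): 5,
    ("LOW", "HIGH", "HIGH"): 6,
    ("LOW", "HIGH", "LOW"): 7,
}

def fuzzify_bit(value: float, threshold: float) -> str:
    """Hypothetical crisp cut: the source does not state the HIGH/LOW boundary."""
    return "HIGH" if value >= threshold else "LOW"

def inner_block_score(su: float, cs: float, cc: float,
                      thresholds: tuple = (0.5, 0.5, 0.5)) -> int:
    """Fuzzify Su, Cs and Cc to labels, then read the score from Table 1."""
    labels = tuple(fuzzify_bit(v, t) for v, t in zip((su, cs, cc), thresholds))
    return TABLE_1[labels]
```

Reading the table row by row, the score also equals 4·[Su = LOW] + 2·[Cs = HIGH] + [Cc = LOW], which is what makes it a three-bit code; the dictionary keeps the mapping exactly as tabulated.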

The score of an outer block is obtained by aggregating the scores of its inner blocks, as given in equation (7):

$$ OuterBVal = \frac{1}{49} \sum_{i=1}^{N} BlockScore(i) \qquad (7) $$

Here, N is the number of inner blocks and 49 is the maximum scored value of all inner blocks. Equation (7) converts the aggregated score to a value between 0 and 1, which is then taken as input by the fuzzification process. The fuzzy rules applied at this stage are shown below.

$$ High(OuterBVal) = \begin{cases} 1, & OuterBVal \ge a \\ \dfrac{OuterBVal - b}{a - b}, & b < OuterBVal < a \\ 0, & OuterBVal \le b \end{cases} \qquad a = 0.8,\ b = 0.3 $$

$$ Medium(OuterBVal) = \begin{cases} 0, & OuterBVal \le d \ \text{or}\ OuterBVal \ge a \\ \dfrac{OuterBVal - d}{b - d}, & d < OuterBVal < b \\ 1, & b \le OuterBVal \le c \\ \dfrac{a - OuterBVal}{a - c}, & c < OuterBVal < a \end{cases} \qquad a = 0.8,\ b = 0.4,\ c = 0.7,\ d = 0.2 $$

$$ Low(OuterBVal) = \begin{cases} 1, & OuterBVal \le b \\ \dfrac{a - OuterBVal}{a - b}, & b < OuterBVal < a \\ 0, & OuterBVal \ge a \end{cases} \qquad a = 0.8,\ b = 0.3 $$

Once the fuzzified values of the outer blocks are generated, the final step is to use these values during the recognition process, which is described in the next subsection.

3.4 Face Recognition

After the fuzzy-assisted scores are evaluated for both inner and outer blocks, the next step is to apply this scoring map to the input image and, one by one, to each database image. A block similarity map and a similarity count are computed for the inner blocks, the outer blocks and the combined weighted blocks. Rule-based limits are applied to generate the mapping scores, and the database image with the maximum mapping is taken as the result. The recognition algorithm is shown in table 2.
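Equation (7) and the High, Medium and Low membership functions can be sketched in Python to show how an outer block receives the label that Table 2 compares. Assumptions: each sloped segment is the straight line joining the breakpoints where the function equals 0 and 1, and the final label is the one with the largest membership, since the source does not state a defuzzification rule. With nine inner blocks scored up to 7, the sum in equation (7) can reach 63, so values above 1 simply fall on the High plateau here. All function names are illustrative.

```python
def outer_bval(inner_scores) -> float:
    """Eq. (7): sum of inner block scores divided by 49."""
    return sum(inner_scores) / 49.0

def high(x: float, a: float = 0.8, b: float = 0.3) -> float:
    if x >= a:
        return 1.0
    if x <= b:
        return 0.0
    return (x - b) / (a - b)  # rising ramp from b to a

def medium(x: float, a: float = 0.8, b: float = 0.4, c: float = 0.7, d: float = 0.2) -> float:
    if x <= d or x >= a:
        return 0.0
    if b <= x <= c:
        return 1.0
    if x < b:
        return (x - d) / (b - d)  # rising ramp from d to b
    return (a - x) / (a - c)      # falling ramp from c to a

def low(x: float, a: float = 0.8, b: float = 0.3) -> float:
    if x <= b:
        return 1.0
    if x >= a:
        return 0.0
    return (a - x) / (a - b)  # falling ramp from b to a

def outer_label(inner_scores) -> str:
    """Hypothetical defuzzification: keep the label with the largest membership."""
    x = outer_bval(inner_scores)
    memberships = {"High": high(x), "Medium": medium(x), "Low": low(x)}
    return max(memberships, key=memberships.get)
```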

Table 2. Recognition Algorithm

| Step | Operation |
|---|---|
| | Algorithm(RealTimeInputFace, FaceDB) /* RealTimeInputFace is the actual captured face with multiple differences in terms of position, size, contrast, etc.; FaceDB is the constraint-captured facial database */ { |
| 1 | SBlocks = SquaredSegmentation(RealTimeInputFace) |
| 2 | FBlocks = GenerateFeatures(SBlocks) |
| 3 | [InnerBScore OuterBScores] = ScoreEvaluation(FBlocks) |
| 4 | For i = 1 to FaceDB.Length /* Process each DB image */ { |
| 5 | DbImg = FaceDB(i); InnerCount = 0; OuterCount = 0 |
| 6 | SBlocks = SquaredSegmentation(DbImg) |
| 7 | FBlocks = GenerateFeatures(SBlocks) |
| 8 | [DInnerBScore DOuterBScores] = ScoreEvaluation(FBlocks) /* Generate scores for the DB image */ |
| 9 | For j = 1 to InnerBScore.Length /* Analyze inner block scores */ { |
| 10 | For k = 1 to DInnerBScore.Length { |
| 11 | If (DInnerBScore(k).Status = NotMapped) { |
| 12 | If (DInnerBScore(k) = InnerBScore(j)) { |
| 13 | InnerCount++; DInnerBScore(k).Status = Mapped } } } } |
| 14 | For j = 1 to OuterBScores.Length /* Process outer blocks */ { |
| 15 | For k = 1 to DOuterBScores.Length { |
| 16 | If (DOuterBScores(k).Status = NotMapped) { |
| 17 | If (DOuterBScores(k) = High And OuterBScores(j) = High) { |
| 18 | OuterCount = OuterCount + 3; DOuterBScores(k).Status = Mapped } |
| 19 | Else If (DOuterBScores(k) = Medium And OuterBScores(j) = Medium) { |
| 20 | OuterCount = OuterCount + 2; DOuterBScores(k).Status = Mapped } |
| 21 | Else If (DOuterBScores(k) = Low And OuterBScores(j) = Low) { |
| 22 | OuterCount = OuterCount + 1; DOuterBScores(k).Status = Mapped } } } } |
| 23 | MapCount.InnerCount(i) = InnerCount; MapCount.OuterCount(i) = OuterCount } |
| 24 | [index1 scorecount1] = MaxMatchIndex(MapCount.OuterCount); [index2 scorecount2] = MaxMatchIndex(MapCount.InnerCount) /* Map the image based on the individual scoring */ |
| 25 | If (index1 = index2) { |
| 26 | matchedIndex = index1 } |
| 27 | Else If (scorecount1 > Thresh1 And scorecount2 > Thresh2) { |
| 28 | matchedIndex = index1 if scorecount1 ≥ scorecount2, else index2 } |
| 29 | Else If (scorecount1 > Thresh1) { |
| 30 | matchedIndex = index1 } |
| 31 | Else If (scorecount2 > Thresh2) { |
| 32 | matchedIndex = index2 } |
| 33 | Else { |
| 34 | matchedIndex = AssignWeights(scorecount1, weight1, scorecount2, weight2) } |
| 35 | Return matchedIndex } |

The algorithm in table 2 shows the complete model implementation applied to the raw captured image and the normalized database images during recognition. In the first stage, both the database images and the input image are divided into blocks, and features are extracted under the three parameters discussed earlier. Two levels of fuzzy evaluation are applied to generate the scores for the inner and outer blocks. Once the block scores are available, each block of the input image is compared with every block of the database image; this mapping provides robustness against multiple feature and structural variations. The mapping is recorded as block counts for both inner and outer blocks, and these counts are stored for each database image. Once the map counts of all images are obtained, the maximum map count and its index are taken, and the mapping rules are applied to perform the recognition. This block frequency map based recognition model is applied to the datasets discussed in the next section.
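The block frequency map matching of Table 2 can be sketched in Python. This is one reading, not the authors' code: the Mapped/NotMapped flags are interpreted as a one-to-one assignment (each query block consumes at most one database block), which turns each count into a multiset intersection of score frequencies; the 3/2/1 outer weights follow steps 18 to 22; ties go to the lower database index; and `AssignWeights`, which the source leaves undefined, is replaced by a hypothetical weighted sum of the two counts per image.

```python
from collections import Counter

OUTER_WEIGHT = {"High": 3, "Medium": 2, "Low": 1}  # weights from Table 2, steps 18-22

def inner_count(query_scores, db_scores) -> int:
    """Inner matches under a one-to-one reading of the Mapped flags."""
    common = Counter(query_scores) & Counter(db_scores)  # multiset intersection
    return sum(common.values())

def outer_count(query_labels, db_labels) -> int:
    """Weighted outer matches: 3 per High-High, 2 per Medium-Medium, 1 per Low-Low."""
    common = Counter(query_labels) & Counter(db_labels)
    return sum(OUTER_WEIGHT[label] * n for label, n in common.items())

def recognize(query, database, thresh1, thresh2, weight1=0.5, weight2=0.5) -> int:
    """query and database entries are (inner_scores, outer_labels) pairs; returns a DB index."""
    outer = [outer_count(query[1], db[1]) for db in database]
    inner = [inner_count(query[0], db[0]) for db in database]
    index1 = max(range(len(database)), key=outer.__getitem__)
    index2 = max(range(len(database)), key=inner.__getitem__)
    s1, s2 = outer[index1], inner[index2]
    if index1 == index2:
        return index1
    if s1 > thresh1 and s2 > thresh2:
        return index1 if s1 >= s2 else index2
    if s1 > thresh1:
        return index1
    if s2 > thresh2:
        return index2
    # Hypothetical stand-in for AssignWeights: weighted combination of both counts per image
    combined = [weight1 * o + weight2 * i for o, i in zip(outer, inner)]
    return max(range(len(database)), key=combined.__getitem__)
```

Counting with `Counter` intersections avoids the explicit nested loops of Table 2 while producing the same one-to-one counts under this reading.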


IV. Experimentation

To prove the effectiveness of work, two of the most appropriate data set that are having descriptive variations in facial images are taken called FERET(The Facial Recognition Technology) and LFW(Labeled Faces in the Wild). FERET database is having 3737 images of both male and females with expression variation, pose variation, head moment etc. LFW is a wider database with 13233 instances of different people with poses, expression, phase and camera position variations. To

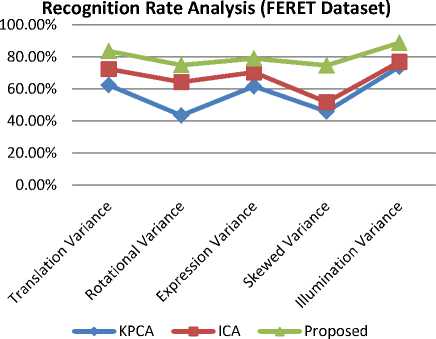

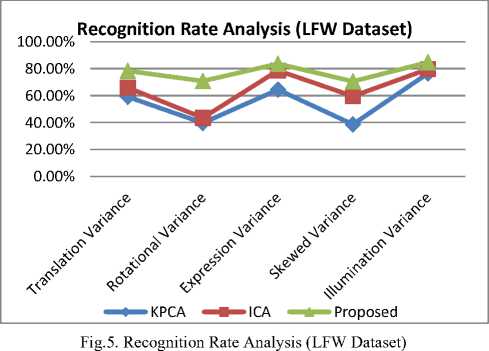

perform the analysis three different training and testing sets are formed with 30 test images and 200 training images. The test images are taken with five different variation aspects including illumination, position, rotation, expression and skewness. The recognition rate analysis is here in comparison of KPCA(Kernel Principal Component Analysis) and ICA(Independent Component Analysis). The proposed model is also applied on the same sample and taken an average recognition rate of multiple sampleset processing. The comparative recognition rate observations are shown in figure (4) and figure (5)

Fig.4. Recognition Rate Analysis (FERET Dataset)

Figure 4 shows the recognition rate analysis under the different variations, with the variations on the x-axis and the recognition rate on the y-axis. The results show that the proposed model is effective for all forms of variation. On the FERET database, the maximum improvement is achieved for the skewed variation.

Figure 5 shows the recognition rate observations on the LFW database. The highest improvement is achieved for the translation variation, and the smallest improvement for the expression variation.

The comparative results show that the proposed method provides a significant improvement in all the problem cases.


V. Conclusion

In this paper, a multi-featured fuzzy-based block feature method is presented. The model uses three features to represent the structural and content information. A two-level fuzzy method is applied to evaluate the scores of the facial block segments, and a robust block mapping and frequency count method is applied to perform the recognition. The analysis is carried out on the FERET and LFW datasets for five different variation aspects. The comparative observations against the KPCA and ICA methods show that the proposed model provides a significant improvement in recognition rate.

References

- Xiaohua Xie, Jianhuang Lai, and Wei-Shi Zheng, "Extraction of illumination invariant facial features from a single image using nonsubsampled contourlet transform", Pattern Recognition, Vol 43, pp 4177-4189, 2010

- Saeid. Fazli, and Leila and Ali Heidarloo, "Wavelet Based Age Invariant Face Recognition using Gradient Orientation", International Conference on Advances in Computer and Electrical Engineering, pp 13-16, 2012

- Amany Farag and Randa Atta, "Illumination Invariant Face Recognition Using the Statistical Features of BDIP and Wavelet Transform", International Journal of Machine Learning and Computing, Vol 2, Issue 1, 2012

- Weihong Deng, Jiani Hu, Jiwen Lu and Jun Guo, "Transform-Invariant PCA: A Unified Approach to Fully Automatic Face Alignment, Representation, and Recognition", IEEE Transactions on Pattern Analysis and Machine Intelligence, Vol 36, Issue 6, pp 1275-1284, 2014

- Shubhankar De and Ranjan Parekh, "Illumination and Expression Invariant Automatic Human Face Recognition using Wavelet, Eigen and Fisher Analysis", International Journal of Computer Applications, Vol 117, Issue 21, 2015

- R.Pavithra, A. Usha Ruby, J. George and Chellin Chandran, "Scale Invariant Feature Transform Based Face Recognition from a Single Sample per Person", International Journal of Computational Engineering Research, Vol 4, Issue 10, pp 41-47, 2014

- Aman Kumar, Aditya Vardhan Srivastava and Pavan Singh Yadav, "Survey on Age Invariant Facial Recognition", Advances in Computer Science and Information Technology, Vol 2, Issue 5, pp 450-453, 2015

- Jeet Kumar, Aditya Nigam, Surya Prakash and Phalguni Gupta, "An Efficient Pose Invariant Face Recognition System", Proceedings of the International Conference on Soft Computing for Problem Solving, Vol 2, pp 145-152, 2012

- Haresh D. Chande and Zankhana H. Shah, "Illumination Invariant Face Recognition System", International Journal of Computer Science and Information Technologies, Vol 3, Issue 3, pp 4010-4014, 2012

- Surya Kant Tyagi and Pritee Khanna, "Face Recognition Using Discrete Cosine Transform and Nearest Neighbor Discriminant Analysis", International Journal of Engineering and Technology, Vol 4, Issue 3, pp 311-314, 2012

- Felix Juefei-Xu, Khoa Luu, Marios Savvides, Tien D. Bui, and Ching Y. Suen, "Investigating Age Invariant Face Recognition Based on Periocular Biometrics", International Joint Conference on Biometrics, pp 1-7, 2011

- Hardeep Kaur and Amandeep Kaur, "Illumination Invariant Face Recognition", International Journal of Computer Applications. Vol 64, Issue 21, pp 23-27, 2013

- Anissa Bouzalmat, Arsalane Zarghili and Jamal Kharroubi, "Facial Face Recognition Method using Fourier Transform Filters Gabor and R_LDA", International Conference on Intelligent Systems and Data Processing, pp 18-24, 2011

- Hadis Mohseni Takallou and Shohreh Kasaei, "Head pose estimation and face recognition using a nonlinear tensor-based model", IET Computer Vision, Vol 8, Issue 1, pp 54-65, 2014

- Hadis Mohseni Takallou and Shohreh Kasaei, "Multiview face recognition based on multilinear decomposition and pose manifold", IET Image Processing, Vol 8, Issue 5, pp 300-309, 2014

- Wonjun Kim, Sungjoo Suh, Wonjun Hwang and Jae-Joon Han, "SVD Face: Illumination-Invariant Face Representation", IEEE Signal Processing Letters, Vol 21, Issue 11, pp 1336-1340, 2014

- Mohammad Reza Faraji and Xiaojun Qi, "Face Recognition under Varying Illumination with Logarithmic Fractal Analysis", IEEE Signal Processing Letters, Vol 21, Issue 12, pp 1457-1461, 2014

- Gaurav Goswami, Mayank Vatsa and Richa Singh, "RGB-D Face Recognition With Texture and Attribute Features", IEEE Transactions on Information Forensics and Security, Vol 9, Issue 10, pp 1629-1640, 2014

- Ali Javed, "Face recognition based on principal component analysis." International Journal of Image, Graphics and Signal Processing, Vol 5, Issue 2 (2013): pp 38-44.

- Subrat Kumar Rath, Siddharth Swarup Rautaray, "A Survey on Face Detection and Recognition Techniques in Different Application Domain." International Journal of Modern Education and Computer Science, Vol 6, Issue 8 (2014): pp 34-44.

- K. Srinivasa Reddy, V. Vijaya Kumar, B. Eswara Reddy. "Face recognition based on texture features using local ternary patterns." International Journal of Image, Graphics and Signal Processing Vol 7, Issue 10, (2015): pp 37-46.

- Narpat A. Singh, Manoj B. Kumar, Manju C. Bala. "Face Recognition System based on SURF and LDA Technique." International Journal of Intelligent Systems and Applications Vol 8, Issue 2, (2016): pp 13-19.

- Tripti Goel, Vijay Nehra, Virendra P. Vishwakarma. "Pose Normalization based on Kernel ELM Regression for Face Recognition." International Journal of Image, Graphics & Signal Processing Vol 9, Issue 5. (2017), pp 68-75

- Kapil Juneja, "MPMFFT based DCA-DBT integrated probabilistic model for face expression classification." Journal of King Saud University-Computer and Information Sciences (2017).

- Kapil Juneja, "Multiple feature descriptors based model for individual identification in group photos." Journal of King Saud University-Computer and Information Sciences (2017).

- Kapil Juneja, "MFAST Processing Model for Occlusion and Illumination Invariant Facial Recognition." Advanced Computing and Communication Technologies. Springer Singapore, 2016. 161-170.

- Kapil Juneja, "Ring Segmented and Block Analysis Based Multi-feature Evaluation Model for Contrast Balancing." International Conference on Information, Communication and Computing Technology. Springer, Singapore, 2017

- Kapil Juneja, and Nasib Singh Gill. "Tied multi-rubber band model for camera distance, shape and head movement robust facial recognition." Applied and Theoretical Computing and Communication Technology (iCATccT), 2015 International Conference on. IEEE, 2015.

- Kapil Juneja, and Nasib Singh Gill. "A hybrid mathematical model for face localization over multi-person images and videos." Reliability, Infocom Technologies and Optimization (ICRITO)(Trends and Future Directions), 2015 4th International Conference on. IEEE, 2015.

- Weihong Deng, Jiani Hu, Zhongjun Wu, Jun Guo, Lighting-aware face frontalization for unconstrained face recognition, Pattern Recognition, Volume 68, 2017, Pages 260-271

- Hemprasad Y. Patil, Ashwin G. Kothari, Kishor M. Bhurchandi, Expression invariant face recognition using local binary patterns and contourlet transform, Optik - International Journal for Light and Electron Optics, Volume 127, Issue 5,2016, Pages 2670-2678

- Mohd. Abdul Muqeet, Raghunath S. Holambe, Local binary patterns based on directional wavelet transform for expression and pose-invariant face recognition, Applied Computing and Informatics, 2017