MultiBiometric Fusion: Left and Right Irises based Authentication Technique

Authors: Leila Zoubida, Réda Adjoudj

Journal: International Journal of Image, Graphics and Signal Processing (IJIGSP) @ijigsp

Article in issue: 4 vol. 9, 2017.

Free access

Biometrics is one of the important applications of the pattern recognition field. Among the several modalities used in biometric applications, we choose the iris. This paper therefore proposes a multi-biometric technique that combines both units of the iris modality: the left and the right irises. The fusion combines the advantages of the two instances. For both units, the segmentation is performed by a modified method and the feature extraction is done by a global approach (the Daubechies wavelets). A Support Vector Machine (SVM) is used to obtain the scores for fusion. The scores are then normalized by the Min-Max method, and the fusion is performed at the score level by coupling two methods: a combination method and a classification method. The fusion is tested on four databases: CASIA V4, SDUMLA-HMT, MMU1, and MMU2. The results confirm that the multi-biometric systems perform better than the mono-modal systems.

Pattern Recognition, Multi-biometric System, Iris Authentication, Left Iris, Right Iris, Score-level Fusion, Support Vector Machines

Short URL: https://sciup.org/15014177

IDR: 15014177

Text of the scientific article: MultiBiometric Fusion: Left and Right Irises based Authentication Technique

Published Online April 2017 in MECS DOI: 10.5815/ijigsp.2017.04.02

Biometrics is an application of pattern recognition that uses different characteristics of persons to recognize them. Today, it is considered one of the best solutions to many security problems. Biometric systems that use a single biometric modality are called mono-modal systems. However, these systems present a number of disadvantages compared with multi-biometric systems [1].

A single biometric trait may therefore not be sufficient for the recognition task, and multiple biometric traits are needed to authenticate or identify a person. In this context, the integration of the information presented by the various modalities can reduce some limitations of the mono-modal systems [2]. The combination of multiple biometric modalities improves the reliability of biometric systems [3], and it can address the problem of universality [4]. The use of multi-biometrics can also improve the accuracy of the comparison [5].

In this work, we propose a multi-unit biometric approach based on the score-level fusion of both irises (the right and the left).

The paper is organized as follows. The different classes of multimodal biometric systems are discussed in section 2. The operation modes, the fusion levels, and the related work are presented in sections 3 and 4. Section 5 describes the proposed methodology. The fusion and decision modules are presented in sections 6 and 7 respectively. Finally, the experimental results and the conclusions are presented in the last two sections.

II. Classification of Multi-Biometrics Systems

A multi-biometric system integrates more than one biometric system for identification or authentication. Its goal is to reduce the limitations of using a single biometric system, so it identifies or authenticates persons by using multiple biometric traits [6] [7]. However, the use of multiple elements within the same system can generate many scenarios. According to Ross and Jain [6], multi-biometric systems can be classified into six categories:

(1) Multi-sensor system: Several sensors are used to acquire the same biometric trait. For example, a 2D camera, a range scanner, and an IR camera are used to acquire the face modality in a facial recognition system.

(2) Multi-algorithm system: Several algorithms are used to verify the same biometric trait. For example, global and local feature extraction methods are both used in the face feature extraction module.

(3) Multi-unit system: Multiple instances of the same biometric trait are acquired, such as both units of the iris modality (the left and the right irises) to authenticate a person.

(4) Multi-sample system: The same sensor is used to acquire several variants of the same biometric trait. For example, the front, the left, and the right profiles of the face can be captured in a facial recognition system.

(5) Multi-modal system: Several biometric traits are acquired by different sensors. For example, the face and the iris modalities are used to authenticate or identify persons.

(6) Hybrid system: A multi-biometric system that includes a subset of the five scenarios described above.

More than one of these scenarios can be employed in the same system. This work is based on a system that combines scenario 2 (multi-algorithm system) with scenario 3 (multi-unit system), which makes it a hybrid system (scenario 6). The system recognizes persons from both of their irises (the multi-unit principle, scenario 3). The SVM is used to obtain the scores, while the fusion couples two methods, a combination method and a classification method (the multi-algorithm principle, scenario 2).

III. Fusion Level

A multimodal biometric system uses more than one biometric system, and it requires the acquisition and the processing of the biometric data. These can be done in three operation modes [1]:

• Serial mode: The acquisition and the processing of the data are done sequentially. It is used when the different data require different sensors; for example, it is difficult to acquire the iris and the fingerprints at the same time in good conditions.

• Parallel mode: The acquisition and the processing take place simultaneously. It is used when the different data use the same sensor. We use this architecture in this work because both units of the iris modality can be acquired at the same time.

• Hierarchical mode: A multi-biometric system that includes both of the modes described above.

A biometric system has four modules [2]:

• The sensor module: It acquires the biometric data.

• The feature extraction module: It processes the acquired biometric data and extracts the pertinent features.

• The matching module: It compares the extracted features against the stored templates.

• The decision module: It makes the decision to identify or authenticate a user.

Sanderson and Paliwal [8] have classified the integration of information in biometric systems into two main classes:

• Pre-classification fusion: The information is integrated before any classifier is applied.

• Post-classification fusion: The information is combined after the decisions of the classifiers have been obtained.

Therefore, the combination of multiple biometric systems can be done at the following levels:

(1) Pre-classification fusion: There are two levels in this category:

• Sensor level: The integration of the raw data obtained by the sensors.

• Feature extraction level: The combination of the different feature vectors.

(2) Post-classification fusion: There are four levels in this category:

• Dynamic classifier selection: The choice of the classifier that is expected to give the highest number of correct decisions.

• Rank level: This level is used when the output of each classifier is a subset of possible matches sorted in descending order of confidence.

• Score level: The integration of the scores generated by the different matchers.

• Decision level: The combination of the decisions obtained by the different matchers.

In this work, we chose to integrate the biometric data at the score level, because it is the most common approach and it is used in several multimodal biometric systems. In addition, it is characterized by rich information and ease of implementation.

IV. Related Work

The iris is one of the most accurate biometric modalities. Iris recognition has therefore attracted the attention of several researchers, for example:

Daugman’s system [9] is based on a method of iris detection. He also proposed the pseudo-polar method for the normalization of the iris [10]. The Gabor filter is used for the feature extraction [11] and the Hamming distance is used for the matching step.

The Wildes method [12] is based on the Hough transform for the segmentation. The Gaussian filter and discriminant analysis are used for the feature extraction and the matching.

The Libor Masek method [13] is an open-source system for recognizing persons by their irises. This system is based on the Hough transform for the segmentation. The Gabor filter is used for the feature extraction and the Hamming distance is used for the decision.

There are also several other works on iris recognition, for example: the Miyazawa method [14] (Phase-based image matching); the IriTech approach [15]; the CASIA approach [16]; the OSIRIS system [17]; Boles and Boashash [18] (Edge detection and Wavelet Transform Zero crossing); Sanchez-Reillo et al. [19]; Lim et al. [20] (Haar wavelet and Neural Networks); Daouk et al. [21] (Canny Operator, Circular Hough Transform, Haar wavelet, and Hamming distance); Ma et al. [22] (Hough transform, Gabor filter, and Weighted Euclidean distance), [23] (Gray-level information with Canny edge detection, Multichannel spatial filter, and Fisher Linear Discriminant (FLD) classification), and [24] (Gray-level information, Canny edge detection, 1D Dyadic Wavelet, and similarity function); Noh et al. [25] (2D Gabor Wavelet and Independent Component Analysis method); Avila and Reillo [26] (Gabor filter, Discrete Dyadic Wavelet Transform, Multiscale Zero Crossing, Euclidean, and Hamming distance); Chen and Chu [27] (Wavelet Probabilistic Neural Network (WPNN) and Particle Swarm Optimization (PSO)); Yuan and He [28] (Eyelash Detection Method); Schonberg and Kirovski [29] (EyeCerts); Daugman [30] (Active contours and generalized coordinates Iris Code); Monro et al. [31] (1D Discrete Cosine Transform and Hamming distance); Poursaberi and Araabi [32] (Morphological Operators with thresholds, Daubechies 2 Wavelet, Hamming distance, and Harmonic mean); Vatsa et al. [33] (1D Log-Polar Gabor, Euler number, Hamming distance, and Directional Difference Matching) and [34] (Modified Mumford–Shah functional, 1D Log-Polar Gabor Transform, Euler numbers, and the Support Vector Machine (SVM)); Azizi and Pourreza [35] (Contourlet transform and Support Vector Machine (SVM)); Araghi et al. [36] (Discrete Wavelet and competitive Neural Network LVQ (Learning Vector Quantization)); Gawande et al. [37] (Zero-Crossing 1D Wavelet, Euler number, and Genetic algorithm); Kong et al. [38] (Iris Code); Strzelczyk [39] (modified Hough Transform and boundary energy function); Kekre et al. [40] (Vector Quantization (VQ) and Discrete Cosine Transform (DCT), Linde-Buzo-Gray (LBG) algorithm, Kekre’s Proportionate Error (KPE) algorithm, and Kekre’s Fast Codebook Generation algorithm (KFCGA)); Shams et al. [41] (Canny edge detection, Hough Circular Transform, Local Binary Pattern (LBP), and Learning Vector Quantization Classifier (LVQ)); Gupta and Saini [42] (Daugman’s function, Rubber Sheet model, Libor Masek method, Gabor filter, and Hamming distance); Chirchi et al. [43] (Gazing-away eyes, Daugman’s system, 2D Discrete Wavelet Transform with Haar, and Hamming distance); Verma et al. [44] (Hough Transform, Daugman’s Rubber Sheet model, 1D Log-Gabor, and Hamming distance); Tsai et al. [45] (Gabor filters, Possibilistic Fuzzy Matching); Suganthy et al. [46] (Local Binary Pattern (LBP), Histogram approaches, and Linear Vector Quantization (LVQ) classifier); Si et al. [47] (New eyelash detection algorithm); Costa and Gonzaga [48] (Dynamic Features (DFs) and Euclidean distance); Li and Savvides [49] (Figueiredo and Jain’s Gaussian Mixture Models (FJ-GMMs), Gabor Filter Bank (GFB), and Simulated Annealing (SA)); Acar and Ozerdem [50] (Texture Energy Measure (TEM) and k-Nearest Neighbor (k-NN)); Mesecan et al. [51] (Scale Invariant Feature Transform (SIFT) and sub-segments); Sanchez et al. [52] (Multi-Objective Hierarchical Genetic Algorithm (MOHGA) and Neural Network); Saravanan and Sindhuja [53] (Gabor filters, Binary Hamming distance, and Euclidean distance); Kumar and Srinivasan [54] (Multi-channel Gabor filter, Wavelet Transform, and Hamming distance); Chirchi and Waghmare [55] (Haar Wavelet and Hamming distance); Homayon [56] (Hough Transform, Daugman’s Rubber Sheet Model, and Lamstar Neural Network); Li [57] (Structure Preserving Projection (SPP), Maximum Margin Criterion (MMC), and Nearest Neighbor classification); Patil et al. [58] (Gray Level Co-occurrence Matrix (GLCM), Hausdorff Dimension (HD), Biometric Graph Matching (BGM), and Support Vector Machine (SVM)).

V. Proposed Methodology

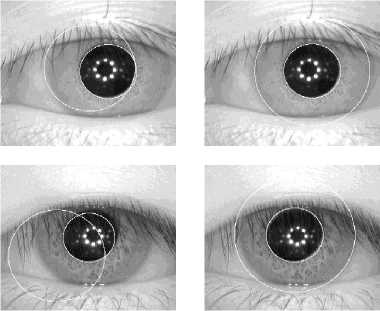

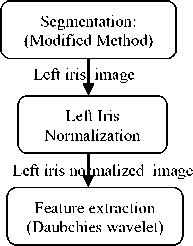

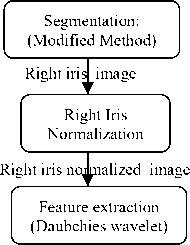

The proposed approach is based on two sub-systems: the first verifies the right iris, while the second verifies the left iris.

A. Unimodal Sub-systems

Recognizing persons by their irises is not easy, because many sources of noise (reflections, eyelids, and eyelashes) can interfere with the iris image. Iris recognition methods differ, but they share the following steps: the capture, the segmentation, the normalization, the feature extraction, and the comparison against the reference images in the database.

(1) Capture: The images used in this work come from four databases: CASIA V4 [59], SDUMLA-HMT [60], MMU1, and MMU2 [61]. These databases are detailed in section 8.

(2) Segmentation of the iris: The images of the databases used do not contain only the iris, so it is necessary to segment each image in order to isolate the iris from the rest of the image. Several studies have been conducted in this area: Daugman [9] (integro-differential operator); Daugman [10] (active edges and generalized coordinates, with exclusion of the eyelashes by statistical inference); Wildes [12] (image intensity gradient and Hough transform); Boles and Boashash [18] (edge detection); Masek [13] (edge detection, the Canny filter, and the Hough transform); Ma et al. [62] (Hough transform); Ma et al. [24] (gray-level information and Canny edge detection); Proença and Alexandre [63] (integro-differential operator); Vatsa et al. [34] (modified Mumford–Shah functional); Miyazawa et al. [14] (iris deformable model with 10 parameters); He et al. [64] (approach to drawing and elastic boost); Liu et al. [65] (modified Hough transform); Liu et al. [66] (edge detection, the Canny filter, and the Hough transform); Schuckers et al. [67] (integro-differential operator and model of angular deformation); Ross and Shah [68] (geodesic active edges); Sudha et al. [69] (edge detection, the Canny filter, and the Hough transform); Puhan et al. [70] (Fourier spectral density).

In our work, the iris segmentation step uses a modified version of the method of the Libor Masek system [13]. The Masek system [13] includes a segmentation module based on the Hough transform, which finds the parameters of simple geometric objects such as lines and circles. It is therefore used to localize the pupil, the iris, the eyelids, and the eyelashes, and it determines the radius and the center coordinates of the pupil and of the iris. The Hough transform is a common method that is used in several studies, such as [12] [13] [62] [69] [71] [72] [73] [74] [75].

We follow the same steps as the Masek system [13] for the iris segmentation, but we assume that the pupil and the iris share the same center, which is where our modified method differs from the Masek system [13].

The Masek system segments the iris in the following manner [13]:

• Generation of the edge map using the Canny detection algorithm. The vertical gradients are used to detect the border between the white of the eye and the iris, and both the vertical and the horizontal gradients are used to detect the pupil border [13].

• Detection of the outer border (the iris) before that of the inner border (the pupil) [13].

• In our work, we added a step after this last step of the Masek system [13]. When applying the Masek system to the images of the databases [59] [60] [61], we found several examples of incorrect iris segmentation, although the points of the pupil border were well detected. For this reason, we assume that the circles of the pupil and the iris are concentric, in order to reduce the number of poorly segmented irises. We take the radius of the iris circle detected by the Masek system [13] and the coordinates of the pupil center generated by the same system, and we generate a new iris circle from them. This new circle has the same radius as the previously detected iris and the same center as the detected pupil. With this method, the number of poorly detected irises is reduced compared with the Masek system.
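The added concentric-circle step can be sketched in a few lines. This is only an illustration under stated assumptions: the `Circle` type, the function name `concentric_iris`, and the example coordinates are hypothetical and do not come from the Masek code or from the paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Circle:
    """A circle given by its centre (x, y) and radius r, in pixels (hypothetical type)."""
    x: float
    y: float
    r: float


def concentric_iris(pupil: Circle, iris: Circle) -> Circle:
    """Re-centre the detected iris circle on the pupil centre.

    The radius of the detected iris is kept; only the centre is replaced
    by the centre of the detected pupil.
    """
    return Circle(pupil.x, pupil.y, iris.r)


# Made-up detections: the iris circle is off-centre, the pupil is well located.
pupil = Circle(x=160.0, y=140.0, r=40.0)
iris_detected = Circle(x=171.0, y=133.0, r=105.0)
iris_fixed = concentric_iris(pupil, iris_detected)
```

The design choice is deliberately small: the pupil border is trusted for the centre, and the iris detection is trusted only for the radius.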

The other steps are the same as in the Masek system [13]:

• The Hough transform is used to detect the eyelids by searching for lines at the top and the bottom of the detected iris [13].

• The eyelashes and the reflections are detected by thresholding the grayscale image [13].

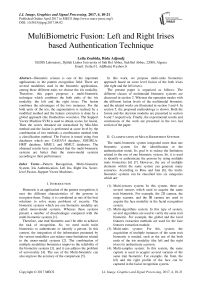

Fig.1 shows the segmentation of two example iris images extracted from the CASIA database (CASIA V4 Interval). Column (a) shows the examples segmented by the Masek system, and column (b) shows the same examples segmented by our modified method.

Fig.1. Examples of the iris segmentation. (a) Examples of irises poorly detected by the Masek system; (b) Examples of iris segmentation by our modified method.

(3) Normalization: The step that follows the segmentation module is the normalization. Its goal is to transform the irregular disc of the detected iris into a rectangular image of constant size. In this work, we used the same method as the Masek system [13], whose normalization module is based on the “pseudo-polar” method [76]. This method assigns to each pixel of the iris in the Cartesian domain a corresponding point in the pseudo-polar domain, depending on the distance of the pixel from the center of the circles and on the angle it makes with that center.
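The pseudo-polar mapping can be illustrated with a minimal sketch. It assumes concentric pupil and iris circles, nearest-neighbour sampling, and a small output grid; the function name `unwrap_iris`, its parameters, and the synthetic test image are hypothetical and are not taken from the Masek implementation.

```python
import math


def unwrap_iris(image, cx, cy, r_pupil, r_iris, n_radial=8, n_angular=16):
    """Sample the ring between the pupil and iris borders into a
    rectangular n_radial x n_angular grid (nearest-neighbour sampling)."""
    height, width = len(image), len(image[0])
    strip = []
    for i in range(n_radial):
        # Radial position: 0 at the pupil border, 1 at the iris border.
        t = i / (n_radial - 1)
        r = r_pupil + t * (r_iris - r_pupil)
        row = []
        for j in range(n_angular):
            theta = 2.0 * math.pi * j / n_angular
            x = int(round(cx + r * math.cos(theta)))
            y = int(round(cy + r * math.sin(theta)))
            # Clamp to the image bounds so border samples stay valid.
            x = min(max(x, 0), width - 1)
            y = min(max(y, 0), height - 1)
            row.append(image[y][x])
        strip.append(row)
    return strip


# Synthetic 32x32 image whose pixel value is the distance to the centre,
# so every row of the unwrapped strip should be nearly constant.
img = [[int(math.hypot(x - 16, y - 16)) for x in range(32)] for y in range(32)]
strip = unwrap_iris(img, cx=16, cy=16, r_pupil=5, r_iris=12)
```

Each row of `strip` corresponds to one radius and each column to one angle, which is what gives the normalized image its constant size.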

(4) Feature extraction and matching: The Masek system [13] uses the Gabor filter for the feature extraction and the Hamming distance for the matching step. In our system, we used:

• Feature extraction: The Daubechies wavelets.

• Matching: The SVM.
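As an illustration of wavelet-based feature extraction, the sketch below applies one level of a Daubechies transform to a 1D signal. The paper does not state the wavelet order, the number of decomposition levels, or the boundary handling, so the four-tap Daubechies-2 filter, the single level, and the periodic extension used here are assumptions; `dwt_db2` is a hypothetical name.

```python
import math

_SQRT3 = math.sqrt(3.0)
_NORM = 4.0 * math.sqrt(2.0)
# Four-tap Daubechies low-pass (scaling) filter.
H = [(1 + _SQRT3) / _NORM, (3 + _SQRT3) / _NORM,
     (3 - _SQRT3) / _NORM, (1 - _SQRT3) / _NORM]
# Matching high-pass (wavelet) filter.
G = [H[3], -H[2], H[1], -H[0]]


def dwt_db2(signal):
    """One level of the Daubechies-2 transform with periodic extension.

    Returns (approximation, detail), each half as long as the input.
    """
    n = len(signal)
    if n < 4 or n % 2:
        raise ValueError("signal length must be even and at least 4")
    approx, detail = [], []
    for k in range(0, n, 2):
        window = [signal[(k + m) % n] for m in range(4)]
        approx.append(sum(h * s for h, s in zip(H, window)))
        detail.append(sum(g * s for g, s in zip(G, window)))
    return approx, detail


# One row of a normalized iris image could be transformed like this
# (the values below are made up).
row = [1.0, 3.0, -2.0, 4.0, 0.5, 2.5, 7.0, -1.0]
cA, cD = dwt_db2(row)
```

In a 2D setting the same filters would be applied along rows and then columns, and a subset of the coefficients would form the feature vector; which coefficients the authors keep is not specified in the text.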

B. Fusion System

The proposed methodology is composed of two sub-systems: the first is based on the authentication of the left iris, while the second is based on the authentication of the right iris. The fusion of these two instances is achieved at the score level by coupling two methods, a combination method and the classification method of Chikhaoui and Mokhtari [77], in order to make the final decision.

The process used in this work to combine the two irises is illustrated in Fig.2:

[Flowchart: for each eye (left and right), the eye image yields the iris feature vectors, which are matched against the database by an SVM to give the iris scores; the scores are normalized (Min-Max); the left and right normalized scores are fused by the combination approach followed by the classification approach, giving a yes/no decision.]

Fig.2. Fusion Architecture

VI. Fusion Module

In this section, we describe the proposed approach for the automatic recognition of persons based on the fusion of two biometric characteristics: the right and the left irises. The steps of our approach are:

A. Obtain Scores

In our work, the Support Vector Machines (SVM) are used not to give a decision but to obtain the scores for fusion. SVMs are statistical learning methods [78] [79] used for classification and regression. They seek the optimal separating hyper-plane, that is, the one with the maximal margin.

As our system is a verification system, a binary SVM is used. In SVM classification, the choice of the kernel function is a very important step. Among the different kernel functions that exist, we chose the linear function. According to Ben-Yacoub [80], this function gives good results for data integration.

At this level, we have two scores: one for the left iris and one for the right iris.

B. Normalized Scores

Normalization is an essential step in multimodal fusion. It transforms the mono-modal scores into a comparable range. There are several normalization techniques, such as Min-Max, Z-score, and Tanh [5]. In this work, the scores are normalized by the Min-Max method.
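A minimal sketch of Min-Max normalization follows. It maps a set of raw scores to the range [0, 1] using the minimum and maximum of that set; the function name, the handling of the degenerate case where all scores are equal, and the example values are assumptions made for illustration.

```python
def min_max_normalize(scores):
    """Map raw scores to [0, 1] with s' = (s - min) / (max - min)."""
    lo, hi = min(scores), max(scores)
    if hi == lo:
        # Degenerate case (assumed handling): all scores are identical.
        return [0.0 for _ in scores]
    return [(s - lo) / (hi - lo) for s in scores]


# Made-up raw SVM scores for the left iris matcher.
left_raw = [-1.8, -0.2, 0.4, 2.2]
left_norm = min_max_normalize(left_raw)
```

In practice the minimum and maximum would typically be estimated on training scores and then reused for test scores, so that both irises are mapped to the same comparable range.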

C. Scores Fusion

A score fusion system consists of two modules: the fusion module and the decision module. There are two approaches to fusing the scores obtained by the different systems. The first treats the task as a combination problem, in which the scores obtained by each mono-modal system must be normalized before the fusion module [5], while the second treats it as a classification problem [81]. Jain et al. [5] have shown that the combination approaches are more efficient than the classification methods [5] [2].

In this work, a fusion technique that couples the two approaches, combination and classification, is proposed. The two approaches are detailed hereinafter.

(1) Combine scores: Kittler et al. [82] combined the scores obtained by different systems using the sum rule, the product rule, the maximum rule, the minimum rule, and the median rule. In our work, four rules (sum, product, min, and max) are coupled with the classification method [77] to fuse the scores of both units of the iris modality; the results are detailed in section 8. In the combination approach, the mono-modal scores are combined into a single score, which is usually compared with a threshold to take the final decision. In our approach, however, we treat the decision as a classification problem: the combined scores are used as inputs of the classification method of Chikhaoui and Mokhtari [77], which is detailed in the next step.
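The four combination rules can be sketched as follows, applied to one pair of normalized scores. The function name `combine_scores`, the `RULES` table, and the example scores are hypothetical; the paper only names the rules.

```python
# The four rules used in this work, applied to a (left, right) score pair.
RULES = {
    "sum": lambda left, right: left + right,
    "product": lambda left, right: left * right,
    "min": min,
    "max": max,
}


def combine_scores(left, right, rule):
    """Fuse two normalized scores into one with the named rule."""
    if rule not in RULES:
        raise ValueError(f"unknown rule: {rule}")
    return RULES[rule](left, right)


# Made-up normalized scores for the left and right irises.
left_score, right_score = 0.8, 0.5
fused = {name: combine_scores(left_score, right_score, name) for name in RULES}
```

Each fused value is a single number per authentication attempt, which is what is then passed to the classification method instead of being compared with a fixed threshold.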

(2) Classify the combined scores: The combined scores obtained in the previous step are classified using a separable classification method [77]. This method is based on the support vector machines (SVM). It is a simple method for determining the hyper-plane that separates the two classes of examples so that the distance between the two classes is maximal. It constructs the optimal hyper-plane and the maximum margin differently from the standard SVM [77]. The SVM modeling leads to the maximization of a concave quadratic program, which is solved by the projection method [83]. The algorithm of the classification method used in this work is, briefly [77]:

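1) Suppose that the separating hyper-plane with maximum margin is written as:

$$ ax + b = 0 \tag{1} $$

Let $X^{+} = \{x : ax + b > 1\}$ and $X^{-} = \{x : ax + b < -1\}$. Denote by $x^{+}$ an element of $X^{+}$ and by $x^{-}$ an element of $X^{-}$. The problem is:

$$
\max\Bigl\{-\tfrac{1}{2}\lVert a\rVert^{2}\Bigr\}
\quad\text{subject to}\quad
\begin{cases}
a(-x^{+} + \bar{x}^{+}) < 0,\quad a(x^{-} - x^{+}) < -1, & \text{if } \operatorname{card}(X^{+}) > \operatorname{card}(X^{-}),\\[4pt]
a(x^{-} - \bar{x}^{-}) < 0,\quad a(x^{-} - x^{+}) < -1, & \text{if } \operatorname{card}(X^{+}) < \operatorname{card}(X^{-}),
\end{cases}
\qquad x^{+}\in X^{+},\; x^{-}\in X^{-}
$$

$$ \inf_{x^{+}\in X^{+},\; x^{-}\in X^{-}} \lVert x^{+} - x^{-}\rVert = \lVert \bar{x}^{+} - \bar{x}^{-}\rVert \tag{2} $$

$\bar{x}^{+}$ and $\bar{x}^{-}$ are support vectors.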

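2) Separation of $\bar{x}^{+}$ and $\bar{x}^{-}$ by the separating hyper-plane of wide margin: $\bar{x}^{+}$ and $\bar{x}^{-}$ are used to find the parameters $a$ and $b$ of the separating hyper-plane of wide margin:

$$ a = \bar{x}^{+} - \bar{x}^{-} \tag{3} $$

$$
\begin{cases}
a\bar{x}^{+} + b = 1\\
a\bar{x}^{-} + b = -1
\end{cases}
\;\Rightarrow\;
b = -\tfrac{1}{2}\,a(\bar{x}^{+} + \bar{x}^{-})
\;\Rightarrow\;
b = -\tfrac{1}{2}(\bar{x}^{+} - \bar{x}^{-})(\bar{x}^{+} + \bar{x}^{-}) = \frac{\lVert\bar{x}^{-}\rVert^{2} - \lVert\bar{x}^{+}\rVert^{2}}{2}
$$

3) Let:

$$
\tilde{X}^{+} = \Bigl\{ x^{+}\in X^{+} : (\bar{x}^{+} - \bar{x}^{-})\,x^{+} + \frac{\lVert\bar{x}^{-}\rVert^{2} - \lVert\bar{x}^{+}\rVert^{2}}{2} < 1 \Bigr\},
\qquad
\tilde{X}^{-} = \Bigl\{ x^{-}\in X^{-} : (\bar{x}^{+} - \bar{x}^{-})\,x^{-} + \frac{\lVert\bar{x}^{-}\rVert^{2} - \lVert\bar{x}^{+}\rVert^{2}}{2} > -1 \Bigr\}
$$

4) Note that $\bar{x}^{+}\in\tilde{X}^{+}$ and $\bar{x}^{-}\in\tilde{X}^{-}$.

$$ \text{If } \tilde{X}^{+} = \{\bar{x}^{+}\} \text{ and } \tilde{X}^{-} = \{\bar{x}^{-}\} \;\Rightarrow\; \text{stop} \tag{5} $$

(H) is then the separating hyper-plane of the wide margin.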

5) Else, the SVM modeling leads to the maximization of a concave quadratic program, which is solved by the projection method [83]. The projection of the point $0 \in \mathbb{R}^{n}$ on the hyper-plane $a(x^{-} - x^{+}) = -1$ is given by:

$$ P_{a(x^{-} - x^{+}) = -1}(0) = 0 - \frac{1}{\lVert x^{-} - x^{+}\rVert^{2}}\,(x^{-} - x^{+}) \tag{8} $$

For more details about the projection method, see [83].
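Steps 1) and 2) of the algorithm can be illustrated with a simplified sketch: find the closest pair of points between the two classes, then build the hyper-plane parameters from that pair, with a equal to the difference of the two points and b equal to half the difference of their squared norms. This covers only the case where the algorithm stops at step 4); the iterative projection step is not reproduced, and the function names and sample points are hypothetical.

```python
def dot(u, v):
    """Inner product of two equally sized vectors."""
    return sum(ui * vi for ui, vi in zip(u, v))


def closest_pair(positives, negatives):
    """Return the pair (x_pos, x_neg) with the smallest Euclidean distance."""
    def sq_dist(p, n):
        d = [pi - ni for pi, ni in zip(p, n)]
        return dot(d, d)
    return min(((p, n) for p in positives for n in negatives),
               key=lambda pair: sq_dist(*pair))


def hyperplane(x_pos, x_neg):
    """Parameters (a, b): a = x_pos - x_neg, b = (|x_neg|^2 - |x_pos|^2) / 2."""
    a = [p - n for p, n in zip(x_pos, x_neg)]
    b = (dot(x_neg, x_neg) - dot(x_pos, x_pos)) / 2.0
    return a, b


# Made-up, linearly separable training points.
positives = [(3.0, 3.0), (4.0, 5.0)]
negatives = [(1.0, 1.0), (0.0, 2.0)]
x_pos, x_neg = closest_pair(positives, negatives)
a, b = hyperplane(x_pos, x_neg)
```

With these values the hyper-plane passes through the midpoint of the closest pair, which can be checked by evaluating `dot(a, midpoint) + b`.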

VII. Decision Module

The last module in the multi-biometric recognition system (identification or authentication) is the decision module. It follows the fusion module. In this module, the system decides whether to accept or reject the users (customers or impostors). The authentication decision is based on the degree of similarity or dissimilarity between the features.

In this module, the results of the fusion module are used to verify the user’s identity in an authentication system and to identify the user in an identification system. The decision is taken according to the output of the classifier [77], which classifies the combined scores in the previous module. From the previous module, we obtain the two parameters “a” and “b” of the separating hyper-plane, and both are used to construct the decision function. The decision function that assigns a point to one of the two classes (authenticated or non-authenticated) is given by:

F(u) = sign(au + b) (9)

where u is an unknown point to classify.

If the output of this function is positive, the claimed identity is accepted. Otherwise, if the output is negative, the claimed identity is rejected.
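The decision rule of equation (9) can be sketched as below. The hyper-plane parameters and the test points are made-up values, the function name `accept` is hypothetical, and treating an output of exactly zero as a rejection is an assumption, since the text only covers the positive and negative cases.

```python
def accept(a, b, u):
    """Evaluate sign(a.u + b): True (accept) if positive, False (reject) otherwise."""
    value = sum(ai * ui for ai, ui in zip(a, u)) + b
    # Assumption: a value of exactly zero is treated as a rejection.
    return value > 0


# Made-up hyper-plane parameters and unknown points.
a_demo, b_demo = [2.0, 2.0], -8.0
genuine_claim = accept(a_demo, b_demo, (3.5, 3.0))   # 13 - 8 = 5
impostor_claim = accept(a_demo, b_demo, (1.0, 2.0))  # 6 - 8 = -2
```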

VIII. Experimental Results

A. Description of databases

To evaluate our approach, we need a database of N individuals with two different instances of the iris modality, that is, databases that contain both irises (the left and the right).

In our work, we used CASIA V4 (Interval) [59], SDUMLA-HMT [60], MMU1, and MMU2 [61].

The databases used for the tests in this work are briefly described below:

1) CASIA V4 database:

• It was collected by the Institute of Automation, Chinese Academy of Sciences.

• It is an extension of CASIA-Iris V3.

• It contains six subsets: three subsets from CASIA-Iris V3 (CASIA-Iris-Interval, CASIA-Iris-Lamp, and CASIA-Iris-Twins) and three new ones (CASIA-Iris-Distance, CASIA-Iris-Syn, and CASIA-Iris-Thousand).

• CASIA V4 contains 54601 iris images.

• In our work, we used “CASIA-Iris-Interval”. It contains 249 subjects with 7 images for each eye. Every iris image is saved in “JPG” format [59].

2) SDUMLA-HMT database:

• It was built at Shandong University.

• It comprises 106 subjects with 5 images for each eye.

• Every iris image is saved in 256 gray-level “BMP” format [60].

3) MMU database:

• It was developed by Multimedia University.

• It contains two sub-databases:

o The MMU1 data set of 450 iris images (45 subjects with 5 images for each eye).

o The MMU2 data set of 995 iris images (100 subjects with 5 images for each eye).

• Every iris image is saved in “BMP” format [61].

Table 1 describes the four databases used in this work:

Table 1. Description of the databases used.

| Database | Wavelength | Acquisition device | Resolution |
|---|---|---|---|
| CASIA-Interval | Near infrared | CASIA close-up iris camera | 320×280 |
| SDUMLA-HMT | Near infrared | Capture device developed by the University of Science and Technology of China | 768×576 |
| MMU1 | Near infrared | LG EOU 2200 | 320×240 |
| MMU2 | Near infrared | Panasonic BM-ET100US | 320×238 |

[Other columns of the original table: Example (image); Varying distance (“No”, “No”); Nb. (Sub, Train, Test, Total).]

B. Results and discussion

In practice, no biometric system is completely reliable. It is therefore necessary to use various indicators or metrics to evaluate biometric systems. The performance indicators differ according to the mode of the system: identification or authentication. In this work, the authentication mode is chosen, so the indicators used to evaluate our system are:

• FAR (False Acceptance Rate): The rate at which impostors are accepted by error.

• FRR (False Rejection Rate): The rate at which customers are wrongly rejected.

• EER (Equal Error Rate): The error rate at the point where FAR and FRR are equal.

• ROC (Receiver Operating Characteristic) curve: Here, it is the graph of FRR (on the X-axis) versus FAR (on the Y-axis).

• Accuracy: It describes how accurately a biometric system performs.
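The indicators above can be computed from two lists of scores, as in the following sketch. It assumes that higher scores mean a better match, that a score at or above the threshold is accepted, and that the EER is approximated at the candidate threshold where FAR and FRR are closest; the function names and the scores are made up for illustration.

```python
def far_frr(genuine, impostor, threshold):
    """FAR and FRR at one threshold (scores >= threshold are accepted)."""
    far = sum(1 for s in impostor if s >= threshold) / len(impostor)
    frr = sum(1 for s in genuine if s < threshold) / len(genuine)
    return far, frr


def equal_error_rate(genuine, impostor):
    """Approximate the EER by scanning every observed score as a threshold.

    Returns (eer, threshold), where eer is the mean of FAR and FRR at the
    threshold that minimizes |FAR - FRR|.
    """
    best = None
    for t in sorted(set(genuine) | set(impostor)):
        far, frr = far_frr(genuine, impostor, t)
        gap = abs(far - frr)
        if best is None or gap < best[0]:
            best = (gap, (far + frr) / 2.0, t)
    return best[1], best[2]


# Made-up fused scores for customers (genuine) and impostors.
genuine_scores = [0.9, 0.8, 0.7, 0.4]
impostor_scores = [0.1, 0.2, 0.3, 0.6]
eer, eer_threshold = equal_error_rate(genuine_scores, impostor_scores)
```

Collecting the `(FRR, FAR)` pairs over all thresholds gives the points of the curve plotted in the figures.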

The performance of our system is evaluated using: the equal error rate (EER), the ROC curve, and the accuracy.

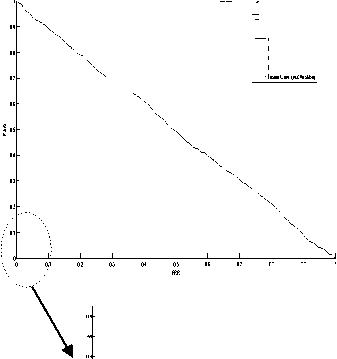

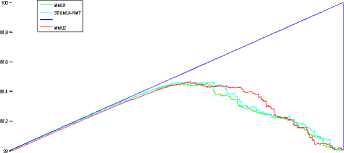

Fig. 3 shows the ROC curves for the four databases (CASIA V4 (Interval), SDUMLA-HMT, MMU1, and MMU2) respectively. Each curve plots the false acceptance rate FAR (on the Y-axis) versus the false rejection rate FRR (on the X-axis). Each plot presents the performance of the two unimodal systems (the left iris and the right iris authentication systems), tested separately with the SVM classifier, and of the multi-biometric systems. It also illustrates the influence of each fusion method on the multi-biometric system. The first fusion method is the SVM, which we use as a baseline to compare against the proposed approaches. Our contribution is the use of the combination methods coupled with the classification method [77] for the fusion module. We therefore coupled the four combination rules (product, sum, min, max) with the classification method [77] described earlier, which gives four proposed approaches:

• CP is the product rule coupled with the classification method [77].

• CS is the sum rule coupled with the classification method [77].

• CMI is the min rule coupled with the classification method [77].

• CMA is the max rule coupled with the classification method [77].

According to the ROC curves in Fig. 3, we confirm the hypothesis of the superiority of the multi-biometric systems over the mono-modal ones. These curves also show that the four fusion approaches (CP, CS, CMI, and CMA) give better results than the SVM fusion for all the databases.

Fig. 3. ROC plots showing the performance of the systems: left iris, right iris, and fusion by SVM, CP, CS, CMI, and CMA, on (a) the CASIA database; (b) the SDUMLA-HMT database; (c) the MMU1 database; (d) the MMU2 database.

AUC values from the panel legends:

(a) Left iris 0.0023; Right iris 0.0051; Fusion SVM 0.5028; CP 1; CS 1; CMI 1; CMA 0.9988.

(b) Left iris 0.4342; Right iris 0.4427; Fusion SVM 0.4998; CP 0.7254; CS 0.7332; CMI 0.6918; CMA 0.7182.

(c) Left iris 0.2386; Right iris 0.4294; Fusion SVM 0.4969; CP 0.8683; CS 0.8563; CMI 0.8650; CMA 0.6032.

(d) Left iris 0.4125; Right iris 0.4171; Fusion SVM 0.4993; CP 0.7500; CS 0.7595; CMI 0.7072; CMA 0.7608.

There are different criteria for evaluating classification performance. One of them is the AUC (area under the ROC curve): an area of 1 signifies a perfect test, and an area of 0.5 corresponds to a worthless test.

From the ROC curve we can also obtain the equal error rate (EER), the rate at which FAR is equal to FRR.
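To make the AUC criterion concrete, here is a minimal Python sketch that builds ROC points from match scores and integrates them with the trapezoidal rule. It assumes the conventional ROC layout (genuine acceptance rate, i.e. 1 - FRR, against FAR), which is the layout under which an area of 1 is perfect and 0.5 is chance; the scores and function names are hypothetical.

```python
def roc_points(genuine, impostor):
    """Return ROC points (FAR, 1 - FRR), ordered from strictest to loosest
    threshold, assuming scores >= threshold are accepted."""
    thresholds = sorted(set(genuine) | set(impostor), reverse=True)
    points = [(0.0, 0.0)]  # Threshold above every score: nothing accepted.
    for t in thresholds:
        far = sum(s >= t for s in impostor) / len(impostor)
        gar = sum(s >= t for s in genuine) / len(genuine)
        points.append((far, gar))
    points.append((1.0, 1.0))  # Threshold below every score: all accepted.
    return points


def auc(points):
    """Area under the curve by the trapezoidal rule."""
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2
    return area


# Hypothetical scores, for illustration only.
separable = auc(roc_points([0.9, 0.8], [0.2, 0.1]))
chance = auc(roc_points([0.5, 0.5], [0.5, 0.5]))
mixed = auc(roc_points([0.9, 0.8, 0.75, 0.6, 0.4], [0.7, 0.5, 0.3, 0.2, 0.1]))
print(separable, chance, mixed)
```

The first call uses perfectly separated scores and the second uses indistinguishable ones, which correspond to the two reference areas mentioned above.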

The performance evaluation results are shown in Table 2. According to the experimental results, all the fusion methods give a better EER and AUC than both mono-modal systems (the left iris authentication system and the right iris authentication system). In addition, the four fusion approaches (CP, CS, CMI, and CMA) give better results than the SVM on all the databases.

According to the EER and AUC values obtained, the CS approach is the best for the CASIA and SDUMLA-HMT databases, the CP approach is preferable for the MMU1 database, and the CMA approach gives the best result for the MMU2 database.

Table 2. The performance evaluation using the EER and the AUC criteria.

| System | CASIA EER | CASIA AUC | SDUMLA EER | SDUMLA AUC | MMU1 EER | MMU1 AUC | MMU2 EER | MMU2 AUC |
|---|---|---|---|---|---|---|---|---|
| Left iris | 0.9786 | 0.0023 | 0.5975 | 0.4342 | 0.7111 | 0.2386 | 0.5885 | 0.4125 |
| Right iris | 0.967 | 0.0051 | 0.5597 | 0.4427 | 0.5471 | 0.4294 | 0.6065 | 0.4171 |
| SVM | 0.496 | 0.5028 | 0.4992 | 0.4998 | 0.5 | 0.4969 | 0.4983 | 0.4993 |
| CP Approach | 0.00117 | 1 | 0.3489 | 0.7254 | 0.1926 | 0.8683 | 0.3281 | 0.7500 |
| CS Approach | 0.00113 | 1 | 0.3333 | 0.7332 | 0.2 | 0.8563 | 0.3163 | 0.7595 |
| CMI Approach | 0.002467 | 1 | 0.363 | 0.6918 | 0.1926 | 0.8650 | 0.3657 | 0.7072 |
| CMA Approach | 0.009371 | 0.9988 | 0.377 | 0.7182 | 0.4222 | 0.6032 | 0.2989 | 0.7608 |
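The choice of the best approach per database can be checked mechanically from the values of Table 2. The Python sketch below does this for the four proposed approaches; the selection rule used (lowest EER, with the higher AUC breaking ties) is an assumption made for illustration, since the text does not state an explicit rule.

```python
# (EER, AUC) pairs of the four proposed approaches, copied from Table 2.
TABLE2 = {
    "CASIA": {
        "CP": (0.00117, 1.0), "CS": (0.00113, 1.0),
        "CMI": (0.002467, 1.0), "CMA": (0.009371, 0.9988),
    },
    "SDUMLA-HMT": {
        "CP": (0.3489, 0.7254), "CS": (0.3333, 0.7332),
        "CMI": (0.363, 0.6918), "CMA": (0.377, 0.7182),
    },
    "MMU1": {
        "CP": (0.1926, 0.8683), "CS": (0.2, 0.8563),
        "CMI": (0.1926, 0.8650), "CMA": (0.4222, 0.6032),
    },
    "MMU2": {
        "CP": (0.3281, 0.7500), "CS": (0.3163, 0.7595),
        "CMI": (0.3657, 0.7072), "CMA": (0.2989, 0.7608),
    },
}


def best_approach(rows):
    """Pick the approach with the lowest EER; a higher AUC breaks ties
    (assumed rule, not stated in the paper)."""
    return min(rows, key=lambda name: (rows[name][0], -rows[name][1]))


best = {database: best_approach(rows) for database, rows in TABLE2.items()}
for database, name in best.items():
    print(database, "->", name)
```

Under this rule the selection agrees with the text: CS for CASIA and SDUMLA-HMT, CP for MMU1 (where CP and CMI share the same EER and the AUC decides), and CMA for MMU2.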

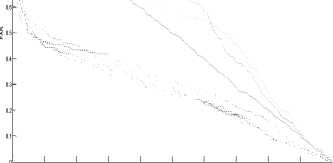

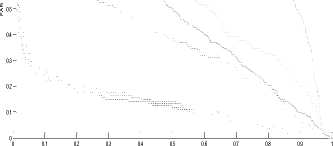

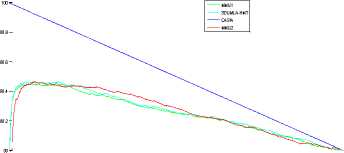

For each database, Fig. 4 presents the variation of the accuracy of the best approach identified in Table 2, as a function of FAR and of FRR respectively.

Fig. 4. Evaluation using the accuracy. (a): FAR vs. Accuracy; (b): FRR vs. Accuracy. The horizontal axes (FAR in (a), FRR in (b)) range from 0 to 1.

IX. Conclusion

In this paper, we have proposed a modified method for the iris segmentation step. We have also proposed an integration approach that couples two methods: a combination method and a classification method. We integrated both units of the iris modality (the left and the right irises), and the results confirm the contribution of this multi-unit approach: the multi-biometric systems perform better than the mono-modal ones.

The results obtained are satisfactory and validate the hypothesis of the superiority of multi-biometrics over mono-modal biometrics. This improvement confirms the value of combining the biometric units of the iris modality.

As future work, further improvements may be considered. The fusion of the two units can be performed at other levels, not only at the score level. Another perspective is to add other modalities and to combine different techniques in order to improve the performance of identity verification.

References

- A. Ross and A.K. Jain, “Multimodal Biometrics: An Overview”, Proceedings of 12th European Signal Processing Conference (EUSIPCO), Vienna, Austria, pp. 1221–1224, Sep.2004.

- A. Ross and A. Jain, “Information Fusion in Biometrics”, Pattern Recognition Letters, vol. 24, no. 13, pp. 2115–2125, 2003. Doi: 10.1016/S0167-8655(03)00079-5.

- L. Hong, A. K. Jain, and S. Pankanti, “Can Multibiometrics Improve Performance?”, Proc. AutoID’99, USA, pp. 59-64, Oct.1999. Doi: 10.1.1.460.7831.

- A. Jain, A. Ross, and S. Prabhakar, “An Introduction to Biometric Recognition”, IEEE Transactions on Circuits and Systems for Video Technology, vol. 14, no. 1, pp. 4-20, Jan.2004. Doi: 10.1109/TCSVT.2003.818349.

- A. Jain, K. Nandakumar, and A. Ross, “Score Normalization in Multimodal Biometric Systems”, Pattern Recognition, vol. 38, no. 12, pp. 2270-2285, Dec.2005. Doi: 10.1016/j.patcog.2005.01.012.

- A. Ross, K. Nandakumar, and A.K. Jain, “Handbook of Multibiometrics”, Springer, New York, USA, 1st edition, 2006. Doi: 10.1007/0-387-33123-9.

- A. Ross and N. Poh, “Multibiometric Systems: Overview, Case Studies, and Open Issues”, Springer 2009, pp. 273-292, 2009. Doi: 10.1007/978-1-84882-385-3_11

- C. Sanderson and K.K. Paliwal, “Information Fusion and Person Verification Using Speech and Face Information”, Research Paper IDIAP-RR, pp. 02-33, 2004. Doi: 10.1.1.7.9818.

- J.G. Daugman, “High Confidence Visual Recognition of Persons by a Test of Statistical Independence”, IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 15, no. 11, pp. 1148-1161, Nov.1993. Doi: 10.1109/34.244676.

- J.G. Daugman, “How Iris Recognition Works”, IEEE Transactions on Circuits and Systems for Video Technology, vol. 14, no. 1, Jan.2004. Doi: 10.1109/TCSVT.2003.818350.

- D. Gabor, “Theory of Communication”, Journal of the Institution of Electrical Engineers, vol. 93, no. 26, pp. 429-441, 1946.

- R.P. Wildes, “Iris Recognition: An Emerging Biometric Technology”, Proceedings of the IEEE, vol. 85, no. 9, pp. 1348-1363, Sep.1997. Doi: 10.1109/5.628669.

- L. Masek, “Recognition of Human Iris Patterns for Biometric Identification”, University of Western Australia, 2003. Doi: 10.1.1.90.5112.

- K. Miyazawa, K. Ito, T. Aoki, K. Kobayashi, and H. Nakajima, “A Phase-Based Iris Recognition Algorithm”, International Conference on Biometrics, pp. 356–365, 2006. Doi: 10.1007/11608288_48.

- D.H. Kim and J.S. Ryoo, “Iris Identification System and Method of Identifying a Person through Iris Recognition”, US Patent 6247813, Jun.2001. Doi: 10.1109/KES.1997.619433.

- D.N. Bhat and S.K. Nayar, “Ordinal Measures for Image Correspondence”, IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 20, no. 4, pp. 415-423, Apr.1998. Doi: 10.1109/34.677275.

- E. Krichen, Z. Sun, and A. Mellakh, “Technical Report of Iris Working Group on the 1st Biosecure Residential Workshop”.

- W.W. Boles and B. Boashash, “A Human Identification Technique Using Images of the Iris and Wavelet Transform”, IEEE Transactions on Signal Processing, vol. 46, no. 4, pp. 1185-1188, Apr.1998. Doi: 10.1109/78.668573.

- R. Sanchez-Reillo, C. Sanchez-Avila, and A. Gonzalez-Marcos, “Improving Access Control Security Using Iris Identification”, Proceedings IEEE 34th Annual 2000 International Carnahan Conference on Security Technology, pp. 56-59, 2000. Doi: 10.1109/CCST.2000.891167.

- S. Lim, K. Lee, O. Byeon and T. Kim, “Efficient Iris Recognition through Improvement of Feature Vector and Classifier”, ETRI Journal, vol. 23, no. 2, pp. 61-70, Jun.2001. Doi: 10.1.1.116.7539.

- C.H. Daouk, L.A. El-Esber, F.D. Kammoun, and M.A. Al Alaoui, “Iris Recognition”, Proceedings of the IEEE ISSPIT, Marrakesh, pp. 558-562, 2002.

- L. Ma, Y. Wang, and T. Tan, “Iris Recognition Based on Multichannel Gabor Filtering”, The 5th Asian Conference on Computer Vision ACCV’02, vol. 1, pp. 279-283, Melbourne, Australia, Jan.2002. Doi: 10.1.1.132.3967.

- L. Ma, T. Tan, Y. Wang, and D. Zhang, “Personal Identification Based on Iris Texture Analysis”, IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 25, no. 12, pp. 1519–1533, Dec.2003. Doi: 10.1109/TPAMI.2003.1251145.

- L. Ma, T. Tan, Y. Wang, and D. Zhang, “Efficient Iris Recognition by Characterizing Key Local Variations”, IEEE Transactions on Image Processing, vol. 13, no. 6, pp. 739–750, Jun.2004. Doi: 10.1109/TIP.2004.827237.

- S. Noh, K. Bae, K. Park, and J. Kim, “A New Iris Recognition Method Using Independent Component Analysis”, IEICE - Transactions on Information and Systems, vol. E88-D, no. 11, pp. 2573–2581, Nov.2005. Doi: 10.1093/ietisy/e88-d.11.2573.

- C. Sanchez-Avila and R. Sanchez-Reillo, “Two Different Approaches for Iris Recognition Using Gabor Filters and Multiscale Zero-Crossing Representation”, Pattern Recognition, vol. 38, no. 2, pp. 231–240, Feb.2005. Doi: 10.1016/S0031-3203(04)00297-3.

- C.H. Chen and C.T. Chu, “Low Complexity Iris Recognition Based on Wavelet Probabilistic Neural Networks”, Proceedings of International Joint Conference on Neural Networks IJCNN’05, vol. 3, pp. 1930-1935, 2005. Doi: 10.1109/IJCNN.2005.1556175.

- W. Yuan and W. He, “A Novel Eyelash Detection Method for Iris Recognition”, Proceedings of the IEEE Engineering in Medicine and Biology 27th Annual Conference, Shanghai, China, pp. 6536-6539, Sep.2005. Doi: 10.1109/IEMBS.2005.1615997.

- D. Schonberg and D. Kirovski, “EyeCerts”, IEEE Transactions on Information Forensics and Security, vol. 1, no. 2, pp. 144-153, Jun.2006. Doi: 10.1109/TIFS.2006.873604.

- J. Daugman, “New Methods in Iris Recognition”, IEEE Transactions on Systems, Man, and Cybernetics, Part B (Cybernetics), vol. 37, no. 5, pp. 1167–1175, Oct.2007. Doi: 10.1109/TSMCB.2007.903540.

- D.M. Monro, S. Rakshit, and D. Zhang, “DCT-Based Iris Recognition”, IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 29, no. 4, pp. 586–595, Apr.2007. Doi: 10.1109/TPAMI.2007.1002.

- A. Poursaberi and B.N. Araabi, “Iris Recognition for Partially Occluded Images: Methodology and Sensitivity Analysis”, EURASIP Journal on Advances in Signal Processing, vol. 2007, no. 1, Jan.2007. Doi: 10.1155/2007/36751.

- M. Vatsa, R. Singh, and A. Noore, “Reducing the False Rejection Rate of Iris Recognition Using Textural and Topological Features”, International Journal of Signal Processing, vol. 2, no. 2, pp. 66–72, 2005. Doi: 10.1.1.307.3788.

- M. Vatsa, R. Singh, and A. Noore, “Improving Iris Recognition Performance Using Segmentation, Quality Enhancement, Match Score Fusion, and Indexing”, IEEE Transactions on Systems, Man, and Cybernetics, Part B (Cybernetics), vol. 38, no. 4, pp. 1021-1035, Aug.2008. Doi: 10.1109/TSMCB.2008.922059.

- A. Azizi and H.R. Pourreza, “Efficient Iris Recognition Through Improvement of Feature Extraction and Subset Selection”, International Journal of Computer Science and Information Security (IJCSIS), vol. 2, no. 1, Jun.2009.

- L.F. Araghi, H. Shahhosseini, and F. Setoudeh, “Iris Recognition Using Neural Network”, Proceedings of the International Multi Conference of Engineers and Computer Scientists (IMECS2010), Hong Kong, vol. 1, pp. 338-340, Mar.2010.

- U. Gawande, M. Zaveri, and A. Kapur, “Improving Iris Recognition Accuracy by Score Based Fusion Method”, International Journal of Advancements in Technology, vol. 1, no. 1, Jul.2010. Doi: 978-1-4244-2328-6/08.

- A.W.K. Kong, D. Zhang, and M.S. Kamel, “An Analysis of Iris Code”, IEEE Transactions on Image Processing, vol. 19, no. 2, pp. 522-532, 2010. Doi: 10.1109/TIP.2009.2033427.

- P. Strzelczyk, “Robust and Accurate Iris Segmentation Algorithm for Color and Noisy Eye Images”, Journal of Telecommunication and Information Technology, pp. 5-9, 2010.

- H.B. Kekre, T.K. Sarode, V.A. Bharadi, A.A. Agrawal, R.J. Arora, et al., “Performance Comparison of DCT and VQ Based Techniques for Iris Recognition”, Journal of Electronic Science and Technology, vol. 8, no. 3, pp. 223-229, Sep.2010. Doi: 10.3969/j.issn.1674-862X.2010.03.005.

- M.Y. Shams, M.Z. Rashad, O. Nomir, and R.M. El-Awady, “Iris Recognition Based on LBP and Combined LVQ Classifier”, International Journal of Computer Science and Information Technology (IJCSIT), vol. 3, no. 5, Oct.2011. Doi: 10.5121/ijcsit.2011.3506.

- R. Gupta and H. Saini, “Generation of Iris Template for Recognition of Iris in Efficient Manner”, International Journal of Computer Science and Information Technologies (IJCSIT), vol. 2, no. 4, pp. 1753-1755, 2011. Doi: 10.1.1.228.835.

- V.R.E. Chirchi, L.M. Waghmare, and E.R. Chirchi, “Iris Biometric Recognition for Person Identification in Security Systems”, International Journal of Computer Applications, vol. 24, no. 9, pp. 1-6, Jun.2011. Doi: 10.5120/2986-4002.

- P. Verma, M. Dubey, S. Basu, and P. Verma, “Hough Transform Method for Iris Recognition- A Biometric Approach”, International Journal of Engineering and Innovative Technology (IJEIT), vol. 1, no. 6, pp. 43-48, Jun.2012.

- C.C. Tsai, H.Y. Lin, J. Taur, and C.W. Tao, “Iris Recognition Using Possibilistic Fuzzy Matching on Local Features”, IEEE Transactions on Systems, Man, and Cybernetics, Part B (Cybernetics), vol. 42, no. 1, pp. 150-162, Feb.2012. Doi: 10.1109/TSMCB.2011.2163817.

- M. Suganthy, P. Ramamoorthy, and R. Krishnamoorthy, “Effective Iris Recognition For Security Enhancement”, International Journal of Engineering Research and Applications (IJERA), vol. 2, no. 2, pp. 1016-1019, Mar.2012. Doi: 10.1.1.416.8936.

- Y. Si, J. Mei, and H. Gao, “Novel Approaches to Improve Robustness, Accuracy and Rapidity of Iris Recognition Systems”, IEEE Transactions on Industrial Informatics, vol. 8, no. 1, pp. 110-117, Feb.2012. Doi: 10.1109/TII.2011.2166791.

- R.M. Costa and A. Gonzaga, “Dynamic Features for Iris Recognition”, IEEE Transactions on Systems, Man, and Cybernetics, Part B (Cybernetics), vol. 42, no. 4, pp. 1072-1082, Aug.2012. Doi: 10.1109/TSMCB.2012.2186125.

- Y.H. Li and M. Savvides, “An Automatic Iris Occlusion Estimation Method Based on High-Dimensional Density Estimation”, IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 35, no. 4, pp. 784-796, Apr.2013. Doi: 10.1109/TPAMI.2012.169.

- E. Acar and M. S. Ozerdem, “An Iris Recognition System by Laws Texture Energy Measure Based k-NN Classifier”, 21st Signal Processing and Communications Applications Conference (SIU), pp. 1-4, 2013. Doi: 10.1109/SIU.2013.6531397.

- I. Mesecan, A. Eleyan, and B. Karlik, “Sift-based Iris Recognition Using Sub-Segments”, International Conference on Technological Advances in Electrical Electronics and Computer Engineering (TAEECE), pp. 350-353, 2013. Doi: 10.1109/TAEECE.2013.6557299.

- D. Sanchez, P. Melin, O. Castillo, and F. Valdez, “Modular Granular Neural Networks Optimization with Multi-Objective Hierarchical Genetic Algorithm for Human Recognition Based on Iris Biometric”, IEEE Congress on Evolutionary Computation (CEC), pp. 772-778, 2013. Doi: 10.1109/CEC.2013.6557646.

- V. Saravanan and R. Sindhuja, “Iris Authentication through Gabor Filter Using DSP Processor”, Proceedings of IEEE Conference on Information and Communication Technologies (ICT), pp. 568-571, 2013. Doi: 10.1109/CICT.2013.6558159.

- V.K.N. Kumar and B. Srinivasan, “Performance of Personal Identification System Technique Using Iris Biometrics Technology”, International Journal of Image, Graphics and Signal Processing, vol. 5, no. 5, pp. 63-71, Apr.2013. Doi: 10.5815/ijigsp.2013.05.08.

- V.R.E. Chirchi and L.M. Waghmare, “Iris Biometric Authentication used for Security Systems”, International Journal of Image, Graphics and Signal Processing, vol. 6, no. 9, pp. 54-60, Aug.2014. Doi: 10.5815/ijigsp.2014.09.07.

- S. Homayon, “Iris Recognition for Personal Identification Using Lamstar Neural Network”, International Journal of Computer Science and Information Technology (IJCSIT), vol.7, no.1, pp.1-8, Feb.2015. Doi: 10.5121/ijcsit.2015.7101.

- Y. Li, “Iris Recognition Algorithm based on MMC-SPP”, International Journal of Signal Processing, Image Processing and Pattern Recognition, vol. 8, no. 2, pp. 1-10, Feb.2015. Doi: 10.14257/ijsip.2015.8.2.01.

- A.M. Patil, D.S. Patil, and P.S. Patil, “Iris Recognition using Gray Level Co-occurrence Matrix and Hausdorff Dimension”, International Journal of Computer Applications, vol. 133, no. 8, pp. 29-34, Jan.2016. Doi: 10.5120/ijca2016907953.

- Casia iris database: http://www.idealtest.org/findDownloadDbByMode.do?mode=Iris

- SDUMLA-HMT database: http://mla.sdu.edu.cn/sdumla-hmt.htm

- MMU database: http://www.mmu.edu.my/~ccteo/

- L. Ma, T. Tan, Y. Wang, and D. Zhang, “Personal Identification Based on Iris Texture Analysis”, IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 25, no. 12, pp. 1519-1533, Dec.2003. Doi: 10.1109/TPAMI.2003.1251145.

- H. Proenca and L.A. Alexandre, “Toward non Cooperative Iris Recognition: A Classification Approach Using Multiple Signatures”, IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 29, no. 4, pp. 607-612, Apr.2007. Doi: 10.1109/TPAMI.2007.1016.

- Z. He, T. Tan, Z. Sun, and X. Qiu, “Toward Accurate and Fast Iris Segmentation for Iris Biometrics”, IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 31, no. 9, pp. 1670-1684, Sep.2009. Doi: 10.1109/TPAMI.2008.183.

- X. Liu, K.W. Bowyer, and P.J. Flynn, “Experiments with An Improved Iris Segmentation Algorithm”, 4th IEEE Workshop on Automatic Identification Advanced Technologies (AutoID), pp. 118-123, New York, Oct.2005. Doi: 10.1109/AUTOID.2005.21.

- X. Liu, K.W. Bowyer, and P.J. Flynn, “Experimental Evaluation of Iris Recognition”, IEEE Computer Society Conference on Computer Vision and Pattern Recognition, pp.158-165, Jun.2005. Doi: 10.1109/CVPR.2005.576.

- S.A.C. Schuckers, N.A. Schmid, A. Abhyankar, V. Dorairaj, C.K. Boyce, et al., “On Techniques for Angle Compensation in Nonideal Iris Recognition”, IEEE Transactions on Systems, Man, and Cybernetics, Part B (Cybernetics), vol. 37, no. 5, pp. 1176-1190, Oct.2007. Doi: 10.1109/TSMCB.2007.904831.

- A. Ross and S. Shah, “Segmenting Non-Ideal Irises Using Geodesic Active Contours”, Proceedings of biometrics symposium (BSYM): Special Session on Research at the Biometric Consortium Conference, Baltimore, USA, pp. 1-6, 2006. Doi: 10.1109/BCC.2006.4341625.

- N. Sudha, N.B. Puhan, H. Xia, and X. Jiang, “Iris Recognition on Edge Maps”, IET Computer Vision, vol. 3, no. 1, pp. 1-7, Mar.2009. Doi: 10.1049/iet-cvi:20080015.

- N.B. Puhan, N. Sudha, and S.A. Kaushalram, “Efficient Segmentation Technique for Noisy Frontal View Iris Images Using Fourier Spectral Density”, Signal Image and Video Processing, vol. 5, no. 1, pp. 105-119, Nov.2009. Doi: 10.1007/s11760-009-0146-z.

- L. Ma, Y. Wang, and T. Tan, “Iris Recognition Using Circular Symmetric Filters”, 16th International Conference on Pattern Recognition (ICPR 2002), vol. 2, pp. 414-417, Aug.2002. Doi: 10.1109/ICPR.2002.1048327.

- N. Khiari, H. Mahersia, and K. Hamrouni, “Iris Recognition Using Steerable Pyramids”, First Workshops on Image Processing Theory Tools and Applications IPTA 2008, pp. 1-7, Sousse, Tunisia, Dec.2008. Doi: 10.1109/IPTA.2008.4743737.

- R.P. Wildes, J.C. Asmuth, G.L. Green, S.C. Hsu, R.J. Kolczynski, et al., “A System for Automated Iris Recognition”, Proceedings of the Second IEEE Workshop on Applications of Computer Vision, Sarasota, pp. 121-128, Dec.1994. Doi: 10.1109/ACV.1994.341298.

- W.K. Kong and D. Zhang, “Accurate Iris Segmentation Based on Novel Reflection and Eyelash Detection Model”, Proceedings of 2001 International Symposium on Intelligent Multimedia, Video and Speech Processing, Hong Kong, pp. 263-266, 2001. Doi: 10.1109/ISIMP.2001.925384.

- Y. Zhu, T. Tan, and Y. Wang, “Biometric Personal Identification Based on Iris Patterns”, Proceedings of 15th International Conference on Pattern Recognition ICPR, Barcelona, Spain, vol. 2, pp. 805-808, 2000. Doi: 10.1.1.85.5512.

- J.G. Daugman, “High Confidence Recognition of Persons by Rapid Video Analysis of Iris Texture”, European Convention on Security and Detection, pp. 244–251, May.1995. Doi: 10.1049/cp:19950506.

- A. Chikhaoui and A. Mokhtari, “Classification with Support Vector Machine: New Quadratic Programming Algorithm”, COSI’2013, Algeria, Jun.2013.

- V. Vapnik, “Statistical Learning Theory”, John Wiley and Sons, New York, 1998.

- V. Vapnik, “The Nature of Statistical Learning Theory”, Springer-Verlag, New York, 1995. Doi: 10.1007/978-1-4757-2440-0.

- S. Ben Yacoub, “Multi-Modal Data Fusion for Person Authentication Using SVM”, IDIAP Research Report, 1998. Doi: 10.1.1.49.8458.

- R.M. Bolle, J. Connell, S. Pankanti, N.K. Ratha, and A.W. Senior, “Guide to Biometrics”, Springer-Verlag, New York, 2004. Doi: 10.1007/978-1-4757-4036-3.

- J. Kittler, M. Hatef, R. Duin, and J. Matas, “On Combining Classifiers”, IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 20, no. 3, pp. 226-239, 1998. Doi: 10.1109/34.667881.

- A. Chikhaoui, B. Djebbar, and R. Mekki, “New Method for Finding an Optimal Solution to Quadratic Programming Problems”, Journal of Applied Sciences, vol. 10, no. 15, pp. 1627-1631, 2010. Doi: 10.3923/jas.2010.1627.1631.