Multimodal biometric system based face-iris feature level fusion

Authors: Muthana H. Hamd, Marwa Y. Mohammed

Journal: International Journal of Modern Education and Computer Science @ijmecs

Issue: vol. 11, no. 5, 2019.

This paper proposes a feature-level fusion technique for building a robust multimodal human identification system. Human face and iris traits are fused to improve the accuracy of recognizing 40 persons taken from the ORL and CASIA-V1 databases. Low-quality iris images from the MMU-1 database are also used to further test recognition accuracy. The face and iris features are extracted using four comparative methods. With the ORL and CASIA-V1 databases, the texture analysis methods Gray Level Co-occurrence Matrix (GLCM) and Local Binary Pattern (LBP) both achieve a 100% accuracy rate, while the Principal Component Analysis (PCA) and Fourier Descriptors (FDs) methods achieve only 97.5%.

Keywords: Face-iris biometric, Fourier descriptors, Fusion, GLCM, LBP, PCA

Short URL: https://sciup.org/15016847

IDR: 15016847 | DOI: 10.5815/ijmecs.2019.05.01

Published Online May 2019 in MECS DOI: 10.5815/ijmecs.2019.05.01

I. Introduction

A biometric system identifies people from their behavioral or physical traits, providing strong protection against fraud, hacking, and security breaches [1]. Signature, keystroke dynamics, voice, and gait are behavioral traits, whose measurement depends on human actions. Physical traits, such as the face, iris, hand geometry, and DNA, are measured from the human body itself [2].

A unimodal biometric system uses only one trait, such as the face, fingerprint, or iris, to perform recognition [3]. Factors such as illumination, pose, and image quality limit the accuracy of such systems, so multimodal biometric systems have been proposed to overcome these limitations and improve identification performance [4].

A multimodal biometric system combines two or more traits in one system. It is more secure and harder to spoof, because an attacker would have to forge two or more traits at the same time. Furthermore, a multibiometric system contains a large number of features that are fused into a single feature vector to reduce the failure rate and improve the overall matching process [4].

Depending on the application requirements, fusion can be implemented at four levels:

• Sensor level: several images of the same biometric trait are captured and fused to obtain a single higher-quality image [5].

• Feature level: the features of each trait are extracted and fused into a new feature set; fusion at this level is performed before the matching algorithm is applied [5].

• Score level: each biometric subsystem applies its matching algorithm to its own extracted features, and the resulting match scores are then fused [5].

• Decision level: each unimodal biometric system makes its decision independently, and these individual decisions are combined to produce the final decision [5].
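To make the difference between the feature, score, and decision levels concrete, here is a minimal Python sketch on toy values. The function names, the default equal weight in the score-level sum, and the AND/OR/majority rules are illustrative assumptions rather than the schemes of the cited works; sensor-level fusion is left out because it operates on raw images, not on feature vectors or scores.

```python
from typing import List, Sequence


def feature_level(face: Sequence[float], iris: Sequence[float]) -> List[float]:
    """Feature level: fuse before matching by building one combined feature vector."""
    return list(face) + list(iris)


def score_level(face_score: float, iris_score: float, w_face: float = 0.5) -> float:
    """Score level: each matcher produces its own (already normalized) score,
    and the scores are combined with a weighted sum."""
    return w_face * face_score + (1.0 - w_face) * iris_score


def decision_level(decisions: Sequence[bool], rule: str = "majority") -> bool:
    """Decision level: combine the accept/reject outputs of independent matchers."""
    if rule == "and":
        return all(decisions)
    if rule == "or":
        return any(decisions)
    # Majority vote: accept when more than half of the matchers accept
    return sum(decisions) > len(decisions) / 2
```

The only structural difference between the levels is where the combination happens relative to matching: before it (one vector, one matcher), after it (several scores), or after each matcher has already decided.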

In this work, four comparative multimodal biometric systems are developed using four feature extraction methods and face-iris feature-level fusion. The four feature extraction methods are Principal Component Analysis (PCA), Fourier Descriptors (FDs), Gray Level Co-occurrence Matrix (GLCM), and Local Binary Pattern (LBP). The Euclidean distance is used as the classifier in the matching step. The paper is organized as follows: Section I is the introduction, Section II reviews the most closely related work, and Section III presents the design methodology. The results of the four multimodal systems are presented in Section IV, and the conclusion is given in Section V.


II. Related Works

In 2013, S. Nair et al. proposed a PCA-based technique for fusing face and iris features. The Discrete Cosine Transform (DCT) and Discrete Wavelet Transform (DWT) were applied separately to extract the features of the face and iris images independently. System performance was measured with the Peak Signal-to-Noise Ratio (PSNR): DWT achieved 48.13 dB for both face and iris features, while DCT achieved 40.59 dB for face and 43.70 dB for iris features [6].

In 2014, Sim et al. used a weighted score rule to fuse the face and iris traits at the score level. PCA and the NeuWave method were used for iris feature extraction, where NeuWave combines Haar wavelet decomposition with a neural network. Euclidean distance and Hamming distance were used to match the face and iris features separately. This method achieved accuracy rates of 99.4% and 99.6% on the ORL-UBIRIS and UTMIFM databases, respectively [7].

In 2015, Wang et al. proposed an approach that fuses face and iris features based on a serial rule. Fisher Discriminant Analysis (FDA) was used to extract features from both the face and iris traits. The proposed approach outperformed the face and iris biometric systems used individually [8].

In 2016, Sharifi et al. fused the face and iris traits at three levels independently: feature level, score level, and decision level. The Log-Gabor transform was used to extract the features of both traits, and Linear Discriminant Analysis (LDA) was applied for dimensionality reduction. The Backtracking Search Algorithm (BSA) was used to select a subset of features from the fused feature set. After the Manhattan distance was applied to the face and iris features separately, score-level fusion was performed with the weighted sum rule. Finally, a threshold-optimized approach was used for decision-level fusion, which improved the final decision. Based on the computed FAR values, the reported performance was 94.91%, 95.00%, and 96.87% for fusion at the feature, score, and decision levels, respectively [9].

The proposed system differs from other multimodal systems in that it compares four feature extraction methods. It also uses the low-quality iris images of the MMU-1 database to make recognition of its subjects more challenging.


III. Methodology

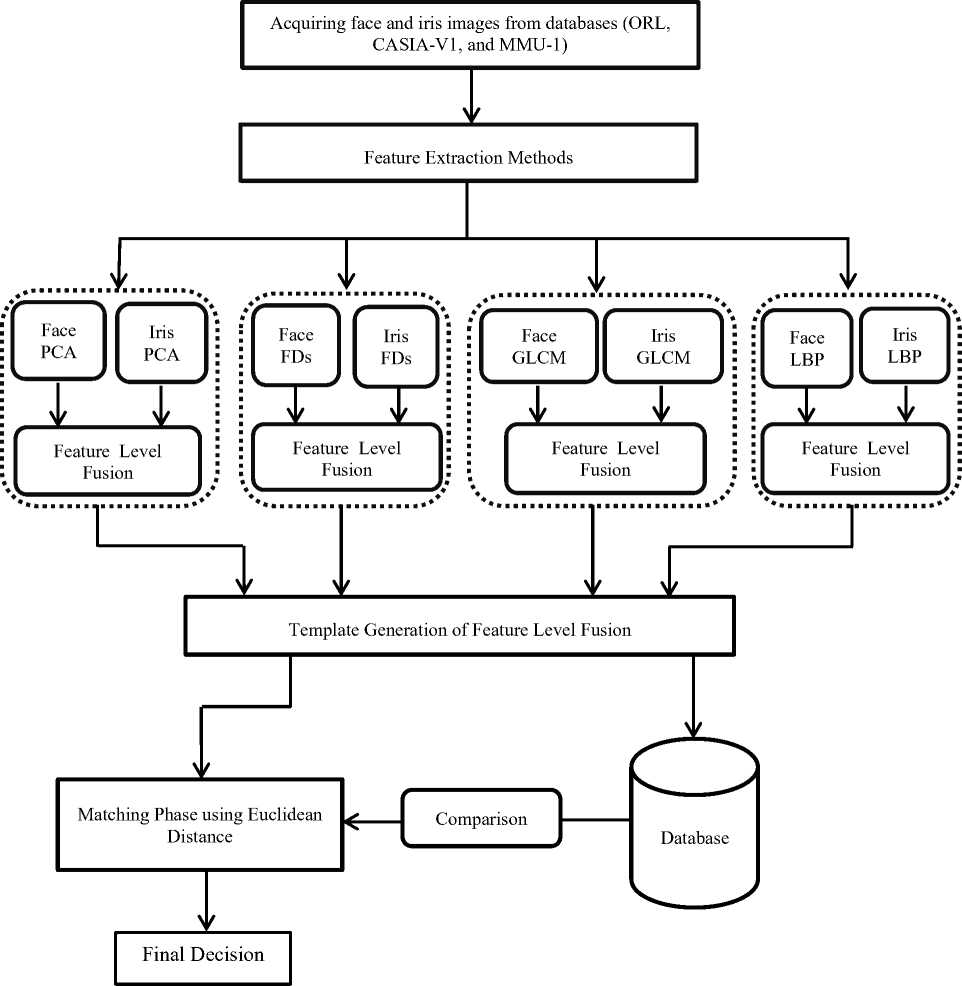

The proposed multimodal biometric system is implemented in four steps: image acquisition, feature extraction, fusion, and matching to make the decision.
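The matching step, which uses the Euclidean distance classifier mentioned in the introduction, can be sketched as a nearest-neighbour search over the enrolled templates. This is a minimal Python sketch under stated assumptions: the gallery layout (a subject ID mapped to its list of training templates), the function names, and the rejection threshold are all hypothetical, and the paper does not report its threshold values.

```python
import math
from typing import Dict, List, Optional, Sequence, Tuple


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance between two equally long feature vectors."""
    if len(a) != len(b):
        raise ValueError("feature vectors must have the same length")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def identify(probe: Sequence[float],
             gallery: Dict[str, List[Sequence[float]]],
             threshold: float) -> Tuple[Optional[str], float]:
    """Nearest-neighbour identification: returns the subject whose closest
    training template is nearest to the probe, or None when even the best
    distance exceeds the (hypothetical) rejection threshold."""
    best_id: Optional[str] = None
    best_d = math.inf
    for subject, templates in gallery.items():
        for template in templates:
            d = euclidean(probe, template)
            if d < best_d:
                best_id, best_d = subject, d
    return (best_id, best_d) if best_d <= threshold else (None, best_d)
```

With 9 training samples per subject, each gallery entry would hold 9 fused templates, and the single test sample is the probe.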


i. Feature extraction methods

The four feature extraction methods, PCA, FDs, GLCM, and LBP, are applied to extract features from the face and iris images. The output of this stage, called the feature vector or pattern, is stored in the template database.


A. PCA

PCA has been used in many linear applications and serves several purposes, including feature extraction, data compression, dimensionality reduction, and redundancy removal. It transforms the image space into the principal component space [10]. The mean, the covariance, and the eigenvalues (from which the eigenvectors are obtained) are computed using (1), (2), and (3):

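$$\bar{A} = \frac{1}{n}\sum_{i=1}^{n} a_i \tag{1}$$

$$\operatorname{cov}(a,b) = \frac{1}{n-1}\sum_{i=1}^{n} \left(a_i - \bar{A}\right)\left(b_i - \bar{B}\right) \tag{2}$$

$$\det\left(\operatorname{cov}(a,b) - \lambda I\right) = 0 \tag{3}$$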

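Where $\bar{A}$ and $\bar{B}$ are the mean values of the vectors a and b, $a_i$ and $b_i$ are the current (i-th) values of a and b, and n is the number of rows (elements) in each vector. In (3), cov(a, b) denotes the covariance matrix whose entries are given by (2), λ is an eigenvalue, and I is the identity matrix.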
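Equations (1) to (3) can be sketched in plain Python as follows. To stay dependency-free, the sketch finds only the dominant eigenpair by power iteration instead of solving (3) directly; extracting many components (360 for faces and 120 for irises in this work) would in practice use a library eigensolver such as NumPy's `numpy.linalg.eigh`. The function names and toy data are assumptions for illustration, not the authors' implementation.

```python
import math
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def mean(values: Vector) -> float:
    """Equation (1): mean of one vector."""
    return sum(values) / len(values)


def cov(a: Vector, b: Vector) -> float:
    """Equation (2): sample covariance of two equally long vectors."""
    a_bar, b_bar = mean(a), mean(b)
    return sum((ai - a_bar) * (bi - b_bar) for ai, bi in zip(a, b)) / (len(a) - 1)


def covariance_matrix(samples: Matrix) -> Matrix:
    """Covariance matrix of the feature columns; each row of `samples` is one observation."""
    columns = [list(col) for col in zip(*samples)]
    return [[cov(ci, cj) for cj in columns] for ci in columns]


def mat_vec(m: Matrix, v: Vector) -> Vector:
    return [sum(mij * vj for mij, vj in zip(row, v)) for row in m]


def dominant_eigenpair(c: Matrix, iterations: int = 200) -> Tuple[float, Vector]:
    """Power iteration: approximates the largest root of (3) and its eigenvector.
    Assumes C is not the zero matrix and the start vector is not orthogonal
    to the dominant eigenvector."""
    v = [1.0] * len(c)
    for _ in range(iterations):
        w = mat_vec(c, v)
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    # Rayleigh quotient of the unit vector v gives the eigenvalue
    lam = sum(vi * wi for vi, wi in zip(v, mat_vec(c, v)))
    return lam, v


def project(samples: Matrix, component: Vector) -> Vector:
    """Projects mean-centred samples onto one principal component (one PCA feature per sample)."""
    centres = [mean(list(col)) for col in zip(*samples)]
    return [sum((x - m) * p for x, m, p in zip(row, centres, component)) for row in samples]
```

For points lying on the line y = 2x, the dominant eigenvector points along (1, 2)/√5, so a single component captures all of the variance.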


B. FDs

Fourier descriptors are a fundamental method for describing shapes by the spatial-frequency components, called Fourier coefficients, that fit the boundary points. The Fourier coefficients are complex numbers, and a small set of them is enough to describe the shape signature [11].

The first step of the FD procedure is to compute the boundary points and record their complex coordinates. The centroid distance, computed with (4) and (5), is then used as the shape signature; it gives the distance of each boundary point from the centroid of the shape. Finally, the Fourier transform of the signature is computed with (6). This is the main step of the procedure, and it produces the frequency coefficients together with the direct-current (DC) value [11,12,13].

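$$r(t) = \sqrt{\left(x(t) - x_d\right)^2 + \left(y(t) - y_d\right)^2} \tag{4}$$

Where:

$$x_d = \frac{1}{N}\sum_{t=0}^{N-1} x(t), \qquad y_d = \frac{1}{N}\sum_{t=0}^{N-1} y(t) \tag{5}$$

$$FD_n = \frac{1}{N}\sum_{t=0}^{N-1} r(t)\,\exp\!\left(\frac{-j2\pi n t}{N}\right) \tag{6}$$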

Where N is the number of boundary samples, x(t) and y(t) are the coordinates of the boundary points (the real and imaginary parts of their complex coordinates), $(x_d, y_d)$ is the centroid of the shape, and $FD_n$ are the FD coefficients.
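A minimal Python sketch of (4) to (6), assuming the boundary has already been traced and sampled into N (x, y) points; the paper does not describe how boundaries are extracted from the face and iris images. Keeping only the magnitudes and dividing them by |FD_0| (for starting-point and scale invariance) is a common normalization in the shape-retrieval literature and an assumption here, not a step stated in the paper. The `keep` parameter is hypothetical; the paper reports 250 coefficients for faces and 150 for irises.

```python
import cmath
import math
from typing import List, Tuple

Point = Tuple[float, float]


def centroid(boundary: List[Point]) -> Point:
    """Equation (5): centroid (x_d, y_d) of the N boundary samples."""
    n = len(boundary)
    return sum(x for x, _ in boundary) / n, sum(y for _, y in boundary) / n


def centroid_distance(boundary: List[Point]) -> List[float]:
    """Equation (4): signature r(t), the distance of each boundary point from the centroid."""
    xd, yd = centroid(boundary)
    return [math.hypot(x - xd, y - yd) for x, y in boundary]


def fourier_descriptors(boundary: List[Point], keep: int) -> List[float]:
    """Equation (6) for n = 0..keep, returned as |FD_n| / |FD_0| for n = 1..keep.
    The magnitude-and-DC normalization is an assumption, not a step from the paper."""
    r = centroid_distance(boundary)
    n_samples = len(r)
    if keep >= n_samples:
        raise ValueError("keep must be smaller than the number of boundary samples")
    fd = [
        sum(r[t] * cmath.exp(-2j * math.pi * n * t / n_samples) for t in range(n_samples)) / n_samples
        for n in range(keep + 1)
    ]
    dc = abs(fd[0])  # FD_0 is the mean radius, i.e. the DC value
    return [abs(c) / dc for c in fd[1:]]
```

A circle has a constant signature, so all its normalized descriptors beyond the DC term are zero, while an ellipse yields a clear second harmonic; translating, scaling, or changing the starting point of the boundary leaves the normalized values unchanged.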


C. GLCM

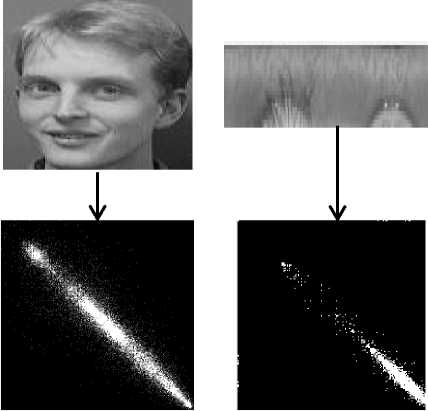

GLCM is a statistical method used to extract second-order texture features from an object of interest. It counts how many times pairs of pixels with particular gray values occur at a given offset (distance and direction) in the image, as shown in Fig. 1. The GLCM is a square two-dimensional matrix whose size equals the number of gray levels. Four texture features can be derived from it: contrast, correlation, homogeneity, and energy [14].


Fig.1. GLCM texture features.
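The counting step and the four statistics can be sketched in plain Python. The paper does not specify the offset, the number of gray levels, or how its 100 GLCM values per image are assembled, so the single horizontal offset (distance 1, angle 0°) and the function names below are assumptions; the feature formulas follow the common definitions, such as those used by MATLAB's `graycoprops`.

```python
import math
from typing import Dict, List


def glcm(image: List[List[int]], levels: int, dx: int = 1, dy: int = 0) -> List[List[float]]:
    """Counts how often gray level i is followed by gray level j at offset (dy, dx),
    then normalizes the counts to joint probabilities."""
    counts = [[0] * levels for _ in range(levels)]
    rows, cols = len(image), len(image[0])
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < rows and 0 <= c2 < cols:
                counts[image[r][c]][image[r2][c2]] += 1
    total = sum(sum(row) for row in counts)
    return [[v / total for v in row] for row in counts]


def glcm_features(p: List[List[float]]) -> Dict[str, float]:
    """Contrast, correlation, homogeneity, and energy of a normalized GLCM."""
    n = len(p)
    cells = [(i, j, p[i][j]) for i in range(n) for j in range(n)]
    mu_i = sum(i * v for i, _, v in cells)
    mu_j = sum(j * v for _, j, v in cells)
    sd_i = math.sqrt(sum((i - mu_i) ** 2 * v for i, _, v in cells))
    sd_j = math.sqrt(sum((j - mu_j) ** 2 * v for _, j, v in cells))
    cov_ij = sum((i - mu_i) * (j - mu_j) * v for i, j, v in cells)
    return {
        "contrast": sum((i - j) ** 2 * v for i, j, v in cells),
        # Undefined (NaN) for a constant image, where both standard deviations are zero
        "correlation": cov_ij / (sd_i * sd_j) if sd_i * sd_j > 0 else math.nan,
        "homogeneity": sum(v / (1 + abs(i - j)) for i, j, v in cells),
        "energy": sum(v * v for _, _, v in cells),
    }
```

Contrast grows with large gray-level jumps between neighbours, homogeneity grows when pairs sit near the diagonal, and energy is largest for images dominated by a few repeated pairs.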


D. LBP

The LBP operator works on the eight neighbours of the current pixel: the gray value of the centre pixel is used as a threshold for its neighbours, using (7) and (8). The final code of the centre pixel is obtained by combining the binary codes of its eight neighbours using (9). The operator can be extended to a different number of neighbours by sampling points on a circle of radius R around the centre pixel; the gray values of these points are computed by bilinear interpolation and then compared with the centre value [15].
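Equations (7) to (9), the uniform-pattern mapping, and the block-wise histogram concatenation described for Fig. 3 can be sketched in plain Python. This illustration rests on assumptions: it uses the basic 8-neighbour grid with R = 1 (no circular sampling or bilinear interpolation), the neighbour order that defines p is an arbitrary fixed choice, and the default 2 × 2 block layout in the hypothetical `block_features` helper is not stated in the paper.

```python
from typing import List

# (row, col) offsets of the 8 neighbours at radius R = 1; this order fixes p = 0..7
NEIGHBOURS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]
P = len(NEIGHBOURS)


def lbp_code(image: List[List[int]], r: int, c: int) -> int:
    """LBP code of the interior pixel (r, c) following equations (7)-(9)."""
    g_c = image[r][c]
    code = 0
    for p, (dr, dc) in enumerate(NEIGHBOURS):
        x_l = image[r + dr][c + dc] - g_c  # (7)
        s = 1 if x_l >= 0 else 0           # (8)
        code += s * 2 ** p                 # (9)
    return code


def is_uniform(code: int) -> bool:
    """Uniform if the circular bit pattern has at most two 0/1 transitions."""
    changes = sum(((code >> i) & 1) != ((code >> ((i + 1) % P)) & 1) for i in range(P))
    return changes <= 2


# One label per uniform pattern; all non-uniform codes share the last label
UNIFORM_CODES = [code for code in range(2 ** P) if is_uniform(code)]
LABEL = {code: k for k, code in enumerate(UNIFORM_CODES)}
N_BINS = len(UNIFORM_CODES) + 1  # P(P-1) + 3, i.e. 59 for P = 8


def uniform_histogram(image: List[List[int]]) -> List[int]:
    """Histogram of uniform-LBP labels over all interior pixels of the image."""
    hist = [0] * N_BINS
    for r in range(1, len(image) - 1):
        for c in range(1, len(image[0]) - 1):
            hist[LABEL.get(lbp_code(image, r, c), N_BINS - 1)] += 1
    return hist


def block_features(image: List[List[int]], blocks: int = 2) -> List[int]:
    """Splits the image into blocks x blocks tiles and concatenates their histograms."""
    bh, bw = len(image) // blocks, len(image[0]) // blocks
    features: List[int] = []
    for br in range(blocks):
        for bc in range(blocks):
            tile = [row[bc * bw:(bc + 1) * bw] for row in image[br * bh:(br + 1) * bh]]
            features.extend(uniform_histogram(tile))
    return features
```

With P = 8 each block yields 59 bins, so a 2 × 2 layout gives 4 × 59 = 236 values. That equals the LBP feature count reported in Section IV, which suggests, but does not confirm, that four blocks were used.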


ii. Fusion of face and iris features

The features of each trait are extracted separately, and then a serial rule is used to implement the fusion: the face and iris features are concatenated sequentially to produce a new feature template for the classification step. Based on the four feature extraction methods, four multimodal biometric systems are produced, as shown in Fig. 2. The serial rule is defined as [8]:

$$\mathrm{fusion}_{FI} = \{XF_1, XF_2, \ldots, XF_q, YI_1, YI_2, \ldots, YI_m\} \tag{10}$$

Where:

$XF_1, \ldots, XF_q$ are the facial features (vector size q).

$YI_1, \ldots, YI_m$ are the iris features (vector size m); m and q need not be equal.
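The serial rule in (10) amounts to concatenating the two trait vectors. In the minimal sketch below, the optional per-trait z-score normalization is an assumption added for illustration (the paper does not describe a normalization step); it keeps a trait with a large numeric range from dominating the Euclidean distance used later for matching. The function names are hypothetical.

```python
from typing import List, Sequence


def z_score(values: Sequence[float]) -> List[float]:
    """Rescales one trait's features to zero mean and unit variance
    (illustrative assumption; the paper does not state a normalization)."""
    m = sum(values) / len(values)
    sd = (sum((x - m) ** 2 for x in values) / len(values)) ** 0.5
    return [(x - m) / sd if sd > 0 else 0.0 for x in values]


def serial_fusion(face: Sequence[float], iris: Sequence[float], normalize: bool = True) -> List[float]:
    """Equation (10): face features XF_1..XF_q followed by iris features YI_1..YI_m."""
    if normalize:
        face, iris = z_score(face), z_score(iris)
    return list(face) + list(iris)
```

The fused length is always q + m; for example, with the PCA feature counts reported in Section IV (q = 360 face values, m = 120 iris values), the fused template would hold 480 values.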

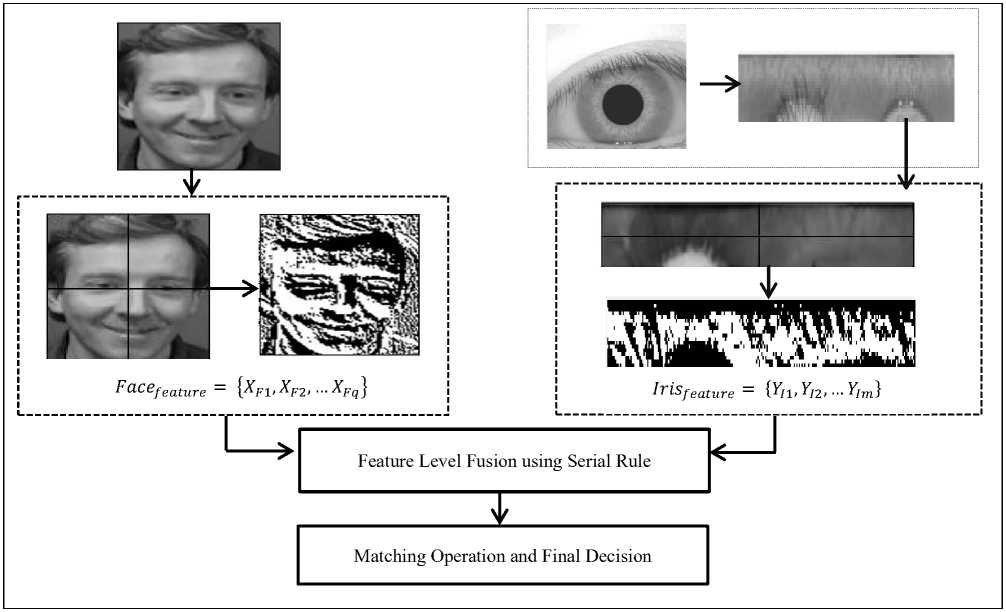

Fusing the face and iris traits has several advantages: the iris remains stable over a person's lifetime, both face and iris systems are accurate, and the combination is reliable. A multimodal biometric system combines all these benefits in one system. Fig. 3 shows an example of fusing face and iris features with the LBP method. In this example, each trait image is divided into blocks, and the uniform LBP procedure is applied to each block. The per-block pattern results are then concatenated into one vector to form the final features of the image. At this point the face and iris features are ready to be fused with the serial rule, and the resulting fusion template is passed to the matching stage to make the final decision.

$$x_L = g_p - g_c \tag{7}$$

$$s(x_L) = \begin{cases} 1, & x_L \ge 0 \\ 0, & x_L < 0 \end{cases} \tag{8}$$

$$LBP_{P,R}(x_c, y_c) = \sum_{p=0}^{P-1} s(x_L)\, 2^{p} \tag{9}$$

Where $g_p$ and $g_c$ are the gray values of the neighbour and the centre pixel, respectively, and $x_L$ is computed for each neighbour p. $2^p$ is the binomial weight of neighbour p, $(x_c, y_c)$ are the coordinates of the centre pixel, P is the total number of neighbours, and p is the index of the current neighbour.

There are two types of LBP code: uniform and non-uniform patterns. Uniform patterns capture the most important features, such as edges, corners, and line ends, and they are used in this work. An LBP code is uniform when its circular bit pattern contains at most two transitions from 0 to 1 or vice versa; a code with no transitions is also uniform. Using uniform patterns saves memory: the uniform code has P(P - 1) + 3 possible labels (one per uniform pattern plus a single label shared by all non-uniform patterns), whereas the plain code has 2^P labels [15]. For P = 8 this is 59 labels instead of 256.

IV. Experimental Results

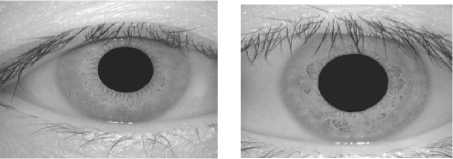

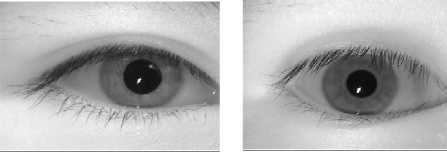

In this work, the Olivetti Research Laboratory (ORL) database is used for face images, the Chinese Academy of Sciences Institute of Automation (CASIA-V1) database for high-quality iris images, and the MultiMedia University (MMU-1) database for low-quality iris images; right-eye iris images are used from both CASIA-V1 and MMU-1. Forty subjects are classified, each with 10 samples (9 for training and 1 for testing). Figs. 4 and 5 show examples of the iris and face images from these databases.

The performance of the proposed multimodal system is measured with three statistics: False Acceptance Rate (FAR), False Rejection Rate (FRR), and accuracy rate, defined as follows:

$$\mathrm{FAR} = \frac{\text{number of accepted impostors}}{\text{total number of impostors assessed}} \times 100 \tag{11}$$

$$\mathrm{FRR} = \frac{\text{number of rejected genuine users}}{\text{total number of genuine users assessed}} \times 100 \tag{12}$$

$$\mathrm{Accuracy}\,(\%) = \frac{N_G}{N_T} \times 100 \tag{13}$$

Where $N_G$ is the number of genuine (correctly recognized) samples and $N_T$ is the total number of samples.
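Equations (11) to (13) can be sketched as follows, assuming distance-based scores (smaller means more similar, as with a Euclidean matcher) and a rule that accepts a comparison when its distance is at or below the threshold. The threshold, the toy scores, and the function names are hypothetical.

```python
from typing import Sequence, Tuple


def far_frr(genuine: Sequence[float], impostor: Sequence[float], threshold: float) -> Tuple[float, float]:
    """Equations (11) and (12) for distance scores: a comparison is accepted
    when its distance is at or below the threshold."""
    accepted_impostors = sum(1 for d in impostor if d <= threshold)
    rejected_genuine = sum(1 for d in genuine if d > threshold)
    far = 100.0 * accepted_impostors / len(impostor)
    frr = 100.0 * rejected_genuine / len(genuine)
    return far, frr


def accuracy(n_correct: int, n_total: int) -> float:
    """Equation (13): percentage of test samples recognized correctly."""
    return 100.0 * n_correct / n_total
```

With one test sample for each of the 40 subjects, (13) gives 39/40 × 100 = 97.5% when a single probe is misidentified and 100% when none are, which corresponds to the two accuracy values that appear in Table 1.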

Fig.2. Implementation of the proposed multimodal biometric system.

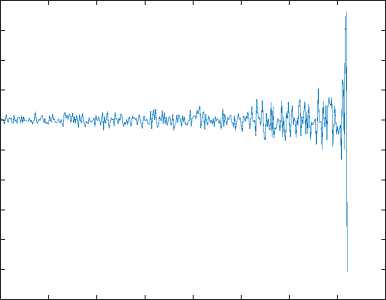

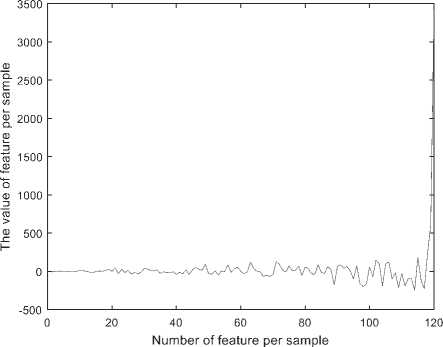

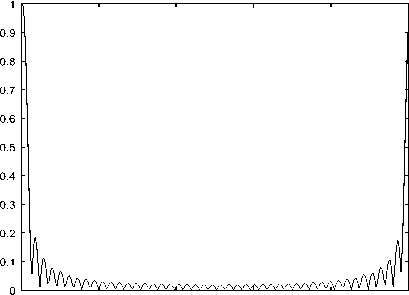

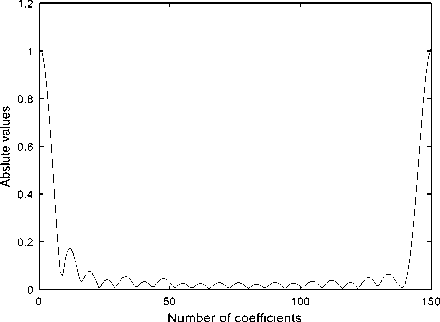

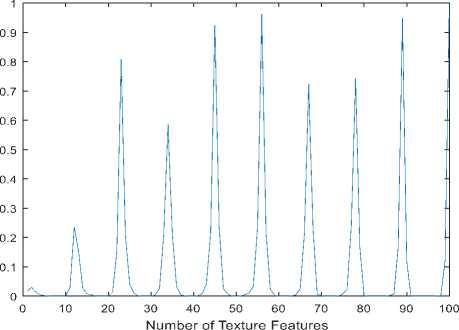

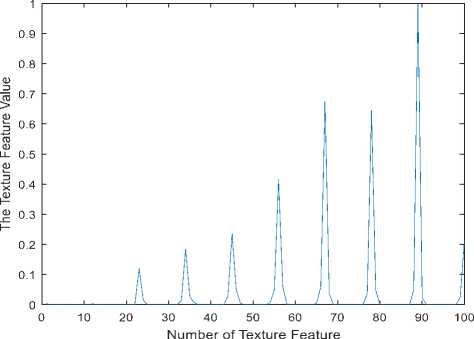

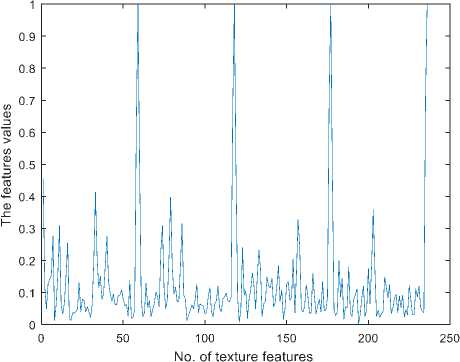

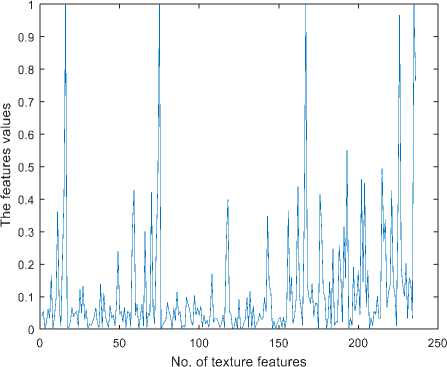

The face and iris features extracted with the PCA, FDs, GLCM, and LBP methods are plotted in Figs. 6(a), 6(b), 7(a), 7(b), 8(a), 8(b), 9(a), and 9(b), respectively. The number of eigenvalues is 360 for the face and 120 for the iris image, while the number of FD coefficients is 250 for the face and 150 for the iris image.

GLCM has the smallest feature vector of all the methods, with 100 feature values per image for both face and iris. LBP has 236 feature values per image for both face and iris.

Table 1 summarizes the accuracy of the four multimodal biometric systems, with fusion performed at the feature level, for the two iris databases. The texture analysis method GLCM performs best, reaching 100% accuracy with both iris databases.

Fig.3. Fusion of face and iris LBP features.

Fig.4. Samples of iris trait: (a) CASIA-V1 database; (b) MMU-1 database.

Fig.5. Samples of ORL database.

Fig.6. Feature representation using PCA method: (a) face features; (b) iris features (x-axis: number of features per sample).

Fig.7. Feature representation using FDs method: (a) face signature; (b) iris signature (x-axis: number of coefficients).

Fig.8. Feature representation using GLCM method: (a) face features; (b) iris features.

Fig.9. Feature representation using LBP method: (a) face texture features; (b) iris texture features.

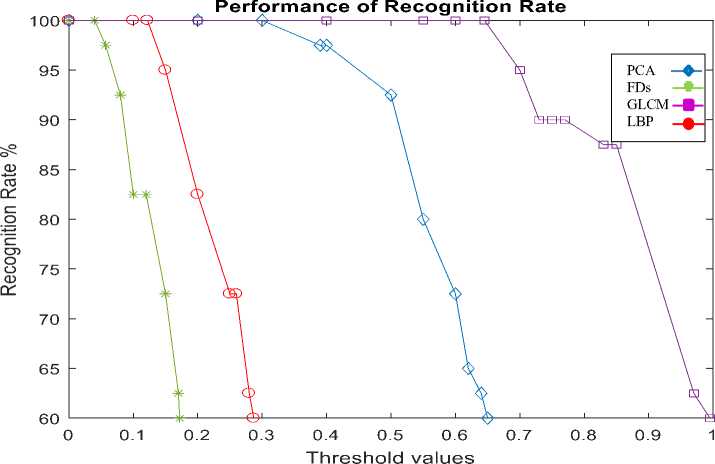

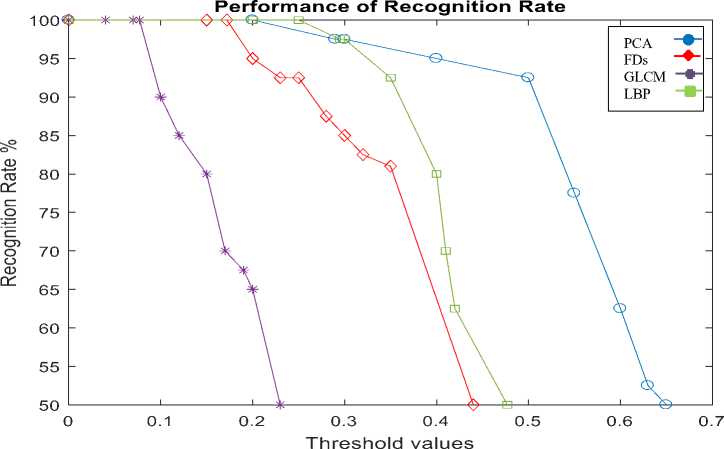

Table 2 compares the proposed methods with related work. GLCM, LBP, and FDs outperform the other approaches, reaching the maximum accuracy rate. The accuracy rates of the four multimodal systems are shown in Fig. 10 for the CASIA-V1 and ORL databases and in Fig. 11 for the MMU-1 and ORL databases. These figures show that a texture analysis method such as GLCM is very sensitive to, and more affected by, image quality, so its threshold values vary over a much wider range than those of more stable methods such as PCA.

Table 1. The classification accuracy rate of multimodal biometric systems for 40 subjects.

| Database | Method | FRR (%) | FAR (%) | Accuracy rate (%) |
|---|---|---|---|---|
| ORL and CASIA-V1 | PCA | 0.0309 | 0.0309 | 97.5 |
| ORL and CASIA-V1 | FDs | 0.0309 | 0.0309 | 97.5 |
| ORL and CASIA-V1 | GLCM | 0 | 0.0317 | 100 |
| ORL and CASIA-V1 | LBP | 0 | 0.0317 | 100 |
| ORL and MMU-1 | PCA | 0.0309 | 0.0309 | 97.5 |
| ORL and MMU-1 | FDs | 0 | 0.0317 | 100 |
| ORL and MMU-1 | GLCM | 0 | 0.0317 | 100 |
| ORL and MMU-1 | LBP | 0.0309 | 0.0309 | 97.5 |

Table 2. Comparison between proposed methods and other related methods.

| Method (reference) | Type of fusion | Database | Accuracy rate (%) |
|---|---|---|---|
| D. Sharma and A. Kumar, 2011 [16] | Feature level | CASIA and AT&T | 97.498 |
| Sharifi et al., 2016 [9] | Feature level | CASIA-Iris-Distance | 94.91 |
| Proposed methods (GLCM and LBP) | Feature level | CASIA-V1 and ORL | 100 |
| Proposed methods (GLCM and FDs) | Feature level | MMU-1 and ORL | 100 |
| Proposed methods (PCA and FDs) | Feature level | CASIA-V1 and ORL | 97.5 |
| Proposed methods (PCA and LBP) | Feature level | MMU-1 and ORL | 97.5 |

Fig.10. Recognition rate of the four multimodal systems using the CASIA-V1 and ORL databases.

Fig.11. Recognition rate of the four multimodal systems using the MMU-1 and ORL databases.


V. Conclusion

References


[1] Pushpa Dhamala, "Multibiometric Systems", M.Sc. thesis, Norwegian University of Science and Technology, Department of Telematics, June 2012.

[2] Y. Faridah, Haidawati Nasir, A. K. Kushsairy, Sairul I. Safie, "Multimodal Biometric Algorithm: A Survey", Biotechnology, vol. 15, no. 5, pp. 119-124, July 2016.

[3] S. Mohana Prakash, P. Betty, K. Sivanarulselvan, "Fusion of Multimodal Biometrics using Feature and Score Level Fusion", International Journal on Applications in Information and Communication Engineering, vol. 2, issue 4, pp. 52-56, April 2016.

[4] Ching-Han Chen, Chia Te Chu, "Fusion of Face and Iris Features for Multimodal Biometrics", Springer-Verlag Berlin Heidelberg, I-Shou University, Institute of Electrical Engineering, pp. 571-580, 2005.

[5] Nidhi Srivastava, "Fusion Levels in Multimodal Biometric Systems - A Review", International Journal of Innovative Research in Science, Engineering and Technology, vol. 6, issue 5, May 2017.

[6] S. Anu H Nair, P. Aruna, M. Vadivukarassi, "PCA Based Image Fusion of Face and Iris Biometric Features", International Journal on Advanced Computer Theory and Engineering (IJACTE), Annamalai University, CSE Department, vol. 1, issue 2, 2013.

[7] Hiew Moi Sim, Hishammuddin Asmuni, Rohayanti Hassan, Razib M. Othman, "Multimodal biometrics: Weighted score level fusion based on non-ideal iris and face images", Expert Systems with Applications, Universiti Teknologi Malaysia, pp. 5390-5404, 2014.

[8] Qihui Wang, Bo Zhu, Yu Liu, Lijun Xie, Yao Zheng, "Iris-Face Fusion and Security Analysis Based on Fisher Discriminant", International Journal on Smart Sensing and Intelligent Systems, vol. 8, no. 1, March 2015.

[9] Omid Sharifi, Maryam Eskandari, "Optimal Face-Iris Multimodal Fusion Scheme", Symmetry, Toros University, Department of Computer and Software Engineering, 2016.

[10] J. Meghana, Kavyashree K, Naveen Kumar G, Supriya H S, C Gururaj, "Iris Detection Based on Principal Component Analysis with GSM Interface", International Journal of Advances in Electronics and Computer Science, Faculty of Engineering, Department of Telecommunication Engineering, vol. 2, issue 7, July 2015.

[11] M. Nixon and A. Aguado, "Feature Extraction & Image Processing for Computer Vision", third edition, Academic Press, Elsevier, 2012.

[12] D. Zhang, G. Lu, "A Comparative Study on Shape Retrieval Using Fourier Descriptors with Different Shape Signatures", The 5th Asian Conference on Computer Vision, 23-25 January 2002.

[13] A. Amanatiadis, V. Kaburlasos, A. Gasteratos and S. Papadakis, "Evaluation of shape descriptors for shape-based image retrieval", The Institution of Engineering and Technology, vol. 5, issue 5, pp. 493-499, 2011.

[14] Redouan Korchiyne, Sidi Mohamed Farssi, Abderrahmane Sbihi, Rajaa Touahni, Mustapha Tahiri Alaoui, "A Combined Method of Fractal and GLCM Features for MRI and CT Scan Images Classification", Signal & Image Processing: An International Journal (SIPIJ), vol. 5, no. 4, August 2014.

[15] Md. Abdur Rahim, Md. Najmul Hossain, Tanzillah Wahid, Md. Shafiul Azam, "Face Recognition using Local Binary Patterns (LBP)", Global Journal of Computer Science and Technology Graphics & Vision, vol. 13, issue 4, 2013.

[16] Deepak Sharma, Ashok Kumar, "Multi-Modal Biometric Recognition System: Fusion of Face and Iris Features using Local Gabor Patterns", International Journal of Advanced Research in Computer Science, vol. 2, no. 6, 2011.