Optimizing VGG16 for Accurate Pest Identification in Oil Palm: A Comparative Study of Fine-Tuning Techniques

Authors: Muhathir, Andre Hasudungan Lubis, Dwika Karima Wardani, Mahardika Gama Pradana, Ilham Sahputra, Mutammimul Ula

Journal: International Journal of Information Engineering and Electronic Business @ijieeb

Article in issue: vol. 16, no. 5, 2024.


Recent advances in pest classification using deep learning models have shown promising results in various agricultural contexts. The VGG16 model, known for its robust performance in image classification, is applied here to classifying pests on oil palm plants. This study evaluates the effectiveness of VGG16 in identifying oil palm pests, comparing the default configuration with models fine-tuned using grid search and random search. We employed a quantitative approach, training VGG16 in three configurations: default, fine-tuned with grid search, and fine-tuned with random search. Precision, recall, F1-score, and overall accuracy were used to assess performance across three pest categories: Metisa plana, Setora nitens, and Setothosea asigna. The default VGG16 model achieved precision, recall, and F1-score values of about 90% to 92% for all three species, with an overall accuracy of 91.00%. Fine-tuning with grid search improved these metrics: for Metisa plana, precision, recall, and F1-score reached approximately 93.88%, 92%, and 92.93%, respectively, with an overall accuracy of 93%. Random search fine-tuning performed best, with a precision of about 95.92%, recall of 94%, and F1-score of 94.95% for Metisa plana, and an overall accuracy of 94.67%. VGG16 thus performed strongly in oil palm pest classification, and both fine-tuning techniques improved it measurably. Future research should expand the dataset to include more diverse pest species, incorporate attention mechanisms, and leverage automated control technologies such as drones and the Internet of Things (IoT) to further improve pest management practices.

Classification, Grid Search, Oil Palm Pests, Random Search, VGG16

Short URL: https://sciup.org/15019432

IDR: 15019432 | DOI: 10.5815/ijieeb.2024.05.03


Published Online on October 8, 2024 by MECS Press

1. Introduction

Oil palm, a vital agricultural crop, thrives in tropical countries such as Thailand, Papua New Guinea, Malaysia, and Indonesia, and it is particularly prominent in Indonesia's plantation sector [1]. With the rapid expansion of oil palm plantations, Indonesia has become the world's leading producer of palm oil, contributing over 44% of global production. Oil palm yields more oil per hectare than other oil-producing crops, which makes Indonesia a crucial player in the global economy as a major supplier of crude palm oil (CPO) [2].

However, the cultivation of oil palm is beset by numerous challenges, with pest and disease infestations posing significant threats to plant health. Despite their natural resilience, oil palm plants are vulnerable to the detrimental effects of pests and diseases, which can substantially reduce overall yield [3]. Insect attacks on oil palms are particularly harmful, potentially stunting growth and diminishing yield. Broadly speaking, productivity losses due to pest infestations alone could reach 70%, with total damage potentially rising to 100% when combined with disease outbreaks [4].

Pests remain one of the most formidable challenges in agricultural production, accounting for about 20% of global crop losses annually [5], [6]. In 2021, nearly 400 million hectares of land in China alone were affected by plant diseases and pests. The timely and accurate identification of pests and diseases is therefore essential for maintaining agricultural productivity; effective identification not only protects crop yields but also advances the agricultural sector and boosts farmers' incomes [7]. Artificial intelligence models based on agricultural image processing have proven to be a highly effective strategy: they can detect pests and categorize them into distinct groups [8], enabling faster and more targeted interventions. As a result, productivity losses are reduced and pest detection becomes more effective.

To overcome these obstacles, researchers have developed more capable pest detection systems using both conventional machine learning (ML) methods and deep learning-based models [9,10]. Traditional insect identification based on morphological traits often depends on skilled taxonomists, which limits its scalability [11]. Numerous automated methods using traditional machine learning for pest detection have therefore been proposed [12]. For instance, Faithpraise and colleagues presented a K-means clustering technique for pest detection [13]; however, its manual feature extraction and filtering steps become laborious, especially with large datasets. Rumpf and colleagues proposed support vector machines based on vegetation spectra for disease recognition in sugar beet crops [14]. While traditional ML models are useful for detecting pests, manual feature extraction and classification are labor-intensive, time-consuming, error-prone, and require significant computing expertise, which restricts their overall efficiency. Deep learning-based techniques, which learn features directly from images, are therefore increasingly important for more effective pest detection [15].

Technology holds great promise for helping farmers prevent diseases early and identify harmful insects effectively [16]. Computer vision and imaging technologies in particular have become effective tools with broad applications in modern agriculture, and several detection techniques combining automation and image processing have begun to meet the basic needs of pest infestation control. For example, Kasinathan and colleagues used machine learning techniques to categorize insect pests by their physical characteristics [17]. Similarly, Chiwamba and Nkunika pioneered an automated system to identify moths in the field using supervised machine learning [18]. In a greenhouse setting, Tageldin and colleagues used machine learning algorithms to forecast leafworm infestations [19]. Such machine learning models are typically built to function independently and must be rebuilt when attributes and data change. In contrast, transfer learning aims to reduce the time and effort needed to develop new models by reusing knowledge captured in existing models, and it can improve performance compared to a model trained in isolation.

A concept within transfer learning known as fine-tuning has proven effective because it is quicker and more accurate than building models from scratch [20]. Fine-tuning a convolutional neural network (CNN) involves initially training it for a related task and then modifying the final layer of the model to accommodate new data [21]. CNN-based transfer learning models have been extensively applied to various agricultural challenges, including plant disease recognition [22], fruit classification [23], weed identification [24], and crop pest classification [25,26], as highlighted by Kamilaris and Prenafeta-Boldú [27]. These models have become reliable tools for image classification in agricultural settings, aiding farmers in identifying practical and efficient pest management techniques, thus reducing substantial financial losses.
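As a rough illustration of what "modifying the final layer" means for VGG16, the network used later in this study, the sketch below counts the parameters of the standard VGG16 configuration (13 convolutional layers with 3×3 filters and 3 fully connected layers, 224×224×3 input) and compares the 1000-class ImageNet classifier with a 3-class output layer. It assumes that the two 4096-unit fully connected layers are kept and only the last layer is swapped; the 3-class head is a hypothetical example, not the head reported by the authors.

```python
from typing import Dict, List, Union

# Standard VGG16 convolutional configuration: numbers are output channels of
# 3x3 convolutions; "M" marks a 2x2 max-pooling layer with stride 2.
VGG16_CONV: List[Union[int, str]] = [
    64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
    512, 512, 512, "M", 512, 512, 512, "M",
]


def conv_params(in_ch: int, out_ch: int, k: int = 3) -> int:
    """Weights plus biases of one k x k convolution layer."""
    return k * k * in_ch * out_ch + out_ch


def dense_params(n_in: int, n_out: int) -> int:
    """Weights plus biases of one fully connected layer."""
    return n_in * n_out + n_out


def vgg16_param_count(num_classes: int, input_size: int = 224) -> Dict[str, int]:
    """Count layers and parameters, keeping the two 4096-unit FC layers."""
    in_ch, size, conv_total, conv_layers = 3, input_size, 0, 0
    for item in VGG16_CONV:
        if item == "M":
            size //= 2  # each pooling layer halves the spatial resolution
        else:
            conv_total += conv_params(in_ch, item)
            in_ch = item
            conv_layers += 1
    flat = size * size * in_ch  # 7 * 7 * 512 = 25088 for a 224 x 224 input
    fc_sizes = [flat, 4096, 4096, num_classes]
    fc_total = sum(dense_params(a, b) for a, b in zip(fc_sizes, fc_sizes[1:]))
    return {
        "conv_layers": conv_layers,
        "fc_layers": len(fc_sizes) - 1,
        "conv_params": conv_total,
        "final_layer_params": dense_params(4096, num_classes),
        "total_params": conv_total + fc_total,
    }


imagenet = vgg16_param_count(num_classes=1000)  # original ImageNet classifier
pests = vgg16_param_count(num_classes=3)        # hypothetical 3-class pest head
print(imagenet["conv_layers"] + imagenet["fc_layers"])  # 16
print(imagenet["total_params"])                         # 138357544
print(pests["final_layer_params"])                      # 12291
```

Under these assumptions, about 14.7 million parameters sit in the convolutional base that transfer learning reuses, and only the 12,291 parameters of the new output layer start untrained.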

Knowing how oil palm pests are classified greatly improves understanding of the different pest types, their attack patterns, and the extent of the damage they cause [28]–[30]. This knowledge helps farmers select the most practical and successful pest management strategies. Research on pest recognition technology for oil palm cultivation has increasingly focused on deep learning [31]–[34], which offers a way to classify oil palm pests automatically. Deep learning techniques train computer algorithms to identify the patterns and features [35,36] found in images of insect pests that damage oil palm plants [37,38].

Within the framework of this study, the following specific goals are established:

1- Classify pest cases on oil palm plants, such as Metisa plana, Setora nitens, and Setothosea asigna, using the Convolutional Neural Network (CNN) architecture, specifically VGG16.

2- Evaluate the performance of the VGG16-based classification method in identifying pests on oil palms by introducing model fine-tuning through grid search and random search, aiming to improve the model's accuracy and efficacy.

3- Examine relevant visual cues to assist in the ongoing efforts to identify and manage pests on oil palm plants, contributing to more intelligent and effective pest management decisions for farmers and agricultural professionals.

2. Research Methodology

2.1. Research Framework2.2. Data collection and Pre-Processing data

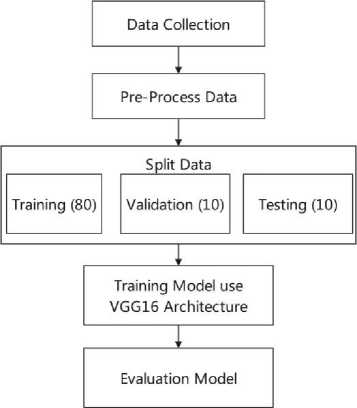

Figure 1 depicts the research framework created for this study.

Fig. 1. Research framework model utilized in this study for oil palm pest classification

The research architectural model employed in this study to categorize pests in oil palm plantations is illustrated in Figure 1. The initial step in the research process involves data collection, followed by pre-processing to create a dataset comprising suitable and unsuitable images representing pest samples. Subsequently, the dataset is divided into three subsets: training, validation, and testing. The training dataset is utilized to train the deep learning model, which employs the VGG16 architecture. After training, the validation dataset is used to assess the model's accuracy and performance. This assessment includes calculating relevant metrics such as accuracy, precision, recall, and F1-score (Equations 1-4). Finally, testing the model on the independent testing set determines its effectiveness. This comprehensive approach ensures that the developed pest classification model for oil palm plantations is reliable and robust.

For this study, data on pests in the oil palm plantation of the Siantar Oil Palm Research Center was meticulously gathered using a rigorous technical methodology. The primary pests under investigation, Metisa plana, Setora nitens, and Setothosea asigna, were captured as photographs. Each pest was carefully photographed to record its distinctive attributes, maintaining a shot distance of 15 to 20 cm to ensure precise and relevant information.

Metisa plana is characterized by its uniquely patterned wings and body color, which often alternates between brown and green. Setora nitens, in contrast, has a distinct morphology with longer wings and more vibrant body colors.

Setothosea asigna is primarily identified by its transparent wings with distinct pattern marks. This systematic photography process yields data closely matched to the objectives of the study.

During data preparation, several steps are taken to preserve data quality. Data cleaning comes first: noise and anomalies in the dataset are identified and corrected, ensuring the integrity of the data before analysis begins. The images are then resized to a uniform size, so that all inputs to the image recognition model have consistent dimensions.

Finally, data augmentation is applied to increase the diversity of the image dataset. The augmentation operations include rotation, shearing, zooming, width shift, height shift, and vertical flip. These operations introduce variability into the training data, helping the model cope with image variations it may encounter in real-world scenarios. Data augmentation can improve model performance and reduce overfitting. Once cleaning, resizing, and augmentation are complete, the data is ready for deep learning model training.
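The resizing step and the two simplest augmentation operations can be sketched in plain Python on a tiny grayscale image stored as nested lists. This is an illustrative sketch only: nearest-neighbour resizing, the 50% flip probability, and the one-pixel shift range are assumptions, and rotation, shearing, and zooming are omitted because they need interpolation. In practice such operations are usually delegated to an image library, for example the `rotation_range`, `shear_range`, `zoom_range`, `width_shift_range`, `height_shift_range`, and `vertical_flip` arguments of Keras's `ImageDataGenerator`.

```python
import random
from typing import List

Image = List[List[int]]  # grayscale image as rows of pixel values


def resize_nearest(img: Image, out_h: int, out_w: int) -> Image:
    """Nearest-neighbour resize to a fixed output size."""
    in_h, in_w = len(img), len(img[0])
    return [[img[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
            for r in range(out_h)]


def vertical_flip(img: Image) -> Image:
    """Mirror the image top-to-bottom (reverse the row order)."""
    return [row[:] for row in reversed(img)]


def shift(img: Image, dy: int, dx: int, fill: int = 0) -> Image:
    """Translate by dy rows (height shift) and dx columns (width shift)."""
    h, w = len(img), len(img[0])
    out = [[fill] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            sr, sc = r - dy, c - dx  # source pixel that lands at (r, c)
            if 0 <= sr < h and 0 <= sc < w:
                out[r][c] = img[sr][sc]
    return out


def augment(img: Image, rng: random.Random, max_shift: int = 1) -> Image:
    """Randomly apply a vertical flip and a small width/height shift."""
    if rng.random() < 0.5:  # assumed flip probability
        img = vertical_flip(img)
    dy = rng.randint(-max_shift, max_shift)
    dx = rng.randint(-max_shift, max_shift)
    return shift(img, dy, dx)


img = [[1, 2], [3, 4]]
print(resize_nearest(img, 4, 4)[0])  # [1, 1, 2, 2]
print(vertical_flip(img))            # [[3, 4], [1, 2]]
print(shift(img, 0, 1))              # [[0, 1], [0, 3]]
print(augment(img, random.Random(0)))
```

Because each training epoch can draw fresh random flips and shifts, the model rarely sees exactly the same image twice, which is the source of the regularizing effect described above.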


2.3. Split Data

The dataset comprises 3000 photos divided into three pest categories, Setora nitens, Setothosea asigna, and Metisa plana, with 1000 images each, prepared for training and evaluating the VGG16 network. The dataset was divided into three sets in an 80:10:10 ratio, that is, 2400 training, 300 validation, and 300 test images. The training set is used to fit the model so that it learns the patterns in the data. The validation set is used to evaluate the model during training, select the optimal parameters, and detect overfitting. The test set is used to evaluate the trained model on previously unseen data, giving a more realistic estimate of its performance on real-world data. This split is intended to provide enough data for training while keeping separate data for impartial tuning and final testing.
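A per-class (stratified) 80:10:10 split of 1000 images per class can be sketched as follows. The text does not state whether the split was stratified; a per-class split is assumed here because it yields exactly 100 test images per class, matching the class support in Tables 3-5. File names and the random seed are hypothetical.

```python
import random
from collections import Counter
from typing import Dict, List, Tuple

CLASSES = ["Metisa plana", "Setora nitens", "Setothosea asigna"]


def stratified_split(
    labels_to_files: Dict[str, List[str]],
    ratios: Tuple[float, float, float] = (0.8, 0.1, 0.1),
    seed: int = 42,
) -> Dict[str, List[Tuple[str, str]]]:
    """Shuffle each class separately and cut it into train/val/test parts."""
    rng = random.Random(seed)
    splits: Dict[str, List[Tuple[str, str]]] = {"train": [], "val": [], "test": []}
    for label, files in labels_to_files.items():
        files = files[:]  # do not mutate the caller's list
        rng.shuffle(files)
        n_train = int(len(files) * ratios[0])
        n_val = int(len(files) * ratios[1])
        splits["train"] += [(f, label) for f in files[:n_train]]
        splits["val"] += [(f, label) for f in files[n_train:n_train + n_val]]
        splits["test"] += [(f, label) for f in files[n_train + n_val:]]  # remainder
    return splits


# Hypothetical file names standing in for the 1000 photos per class.
dataset = {c: [f"{c.replace(' ', '_')}_{i:04d}.jpg" for i in range(1000)]
           for c in CLASSES}
splits = stratified_split(dataset)
for name, items in splits.items():
    print(name, len(items), dict(Counter(label for _, label in items)))
```

Splitting within each class keeps the three species equally represented in every subset, so per-class metrics on the test set are computed over the same number of images.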


2.4. Performance Measures

The confusion matrix is an effective tool for evaluating a classification model. By contrasting the predicted labels with the actual class labels, it gives a thorough picture of the model's performance. Accuracy indicates the proportion of all predictions that match the actual labels. Precision measures the proportion of predicted positives that are actually positive, while recall measures the proportion of actual positives that the model correctly identifies. The F1-score, the harmonic mean of precision and recall, offers a balanced summary of both. These metrics are computed with the following formulas, where TP, TN, FP, and FN stand for true positive, true negative, false positive, and false negative, respectively [39].

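$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \qquad (1)$$

$$\text{Precision} = \frac{TP}{TP + FP} \qquad (2)$$

$$\text{Recall} = \frac{TP}{TP + FN} \qquad (3)$$

$$F1 = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}} \qquad (4)$$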
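For the three-class problem, Equations 2-4 are applied one class at a time: TP, FP, and FN for a class are read from the confusion matrix by treating that class as positive and the other two as negative, and the macro average is the unweighted mean over classes. The overall accuracy in the result tables corresponds to the sum of the matrix diagonal divided by the number of test images, which is what the sketch computes. The confusion matrix in the usage lines is a made-up toy example, not data from this study.

```python
from typing import Dict, List

Matrix = List[List[int]]  # rows = actual class, columns = predicted class


def classification_metrics(cm: Matrix) -> Dict[str, object]:
    """Per-class precision/recall/F1 (one-vs-rest), accuracy, macro averages."""
    k = len(cm)
    total = sum(sum(row) for row in cm)
    per_class = []
    for i in range(k):
        tp = cm[i][i]
        fn = sum(cm[i]) - tp                       # actually i, predicted other
        fp = sum(cm[r][i] for r in range(k)) - tp  # predicted i, actually other
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        per_class.append({"precision": precision, "recall": recall,
                          "f1": f1, "support": tp + fn})
    accuracy = sum(cm[i][i] for i in range(k)) / total  # diagonal / all images
    macro = {m: sum(c[m] for c in per_class) / k
             for m in ("precision", "recall", "f1")}
    return {"per_class": per_class, "accuracy": accuracy, "macro": macro}


cm = [
    [8, 1, 1],  # actual class 0 (toy numbers)
    [0, 9, 1],  # actual class 1
    [2, 0, 8],  # actual class 2
]
res = classification_metrics(cm)
print(round(res["accuracy"], 4))                          # 0.8333
print([round(c["recall"], 2) for c in res["per_class"]])  # [0.8, 0.9, 0.8]
```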

2.5. VGG16 Framework

VGG16 is a deep learning architecture developed by the Visual Geometry Group at the University of Oxford, known for its simplicity and depth in image classification tasks. It has 16 weight layers, 13 convolutional layers followed by 3 fully connected layers, arranged sequentially. Small 3×3 convolution filters are used throughout to capture increasingly complex features from the input image efficiently. The main advantage of VGG16 lies in its ability to learn and represent complex patterns in visual data, making it effective for a wide range of image recognition tasks [40].

In modern agriculture, crop pest detection is challenging because of complex variations in color, size, shape, and background. To address this issue, a deformable VGG-16 (DVGG-16) model was developed by integrating deformable convolution layers and global average pooling. DVGG-16 reached a detection accuracy of 91.14%, outperforming other models and proving effective at handling geometric deformations in field images of plant pests [41]. In another study, a VGG-16 CNN was used to detect and monitor insect pests on eggplant plants; on 204 classified insect images, the model achieved accuracy between 95% and 98% and an F1-score of 0.89, indicating consistent detection performance [42].

For pest classification in oil palm plantations, VGG16 can be optimized to recognize the specific features of pests such as Metisa plana, Setora nitens, and Setothosea asigna. Trained on carefully prepared datasets with techniques such as data augmentation and transfer learning, VGG16 can achieve high accuracy in pest identification and classification. VGG16 was selected for its strong track record in image classification, its ability to handle visual complexity, and its adaptability through fine-tuning for specific applications.

2.6. Fine-tune VGG16 Framework

The selected VGG16 model was fine-tuned for oil palm pest classification through systematic hyperparameter adjustment. Using grid search and random search, we varied the batch size, the learning rate, and the choice of optimizer (SGD, RMSprop, Adagrad, and others) to determine the most effective combination. Grid search evaluated every combination in the predefined parameter grid (param_grid), while random search evaluated a random selection of parameter combinations. Together, these two search methods identified the parameters that gave the highest accuracy in identifying pests in oil palm.

3. Result and Discussion

3.1. Oil Palm Pest Samples

The sample data in this study covers three major pest species on oil palm plants: Metisa plana, Setora nitens, and Setothosea asigna. The dataset includes photos that depict a range of field conditions.


Fig. 2. Oil Palm Pest (a) Metisa plana (b) Setora nitens (c) Setothosea asigna

-

3.2. Training VGG16

-

3.3. Fine-Tuning VGG16 with Grid Search

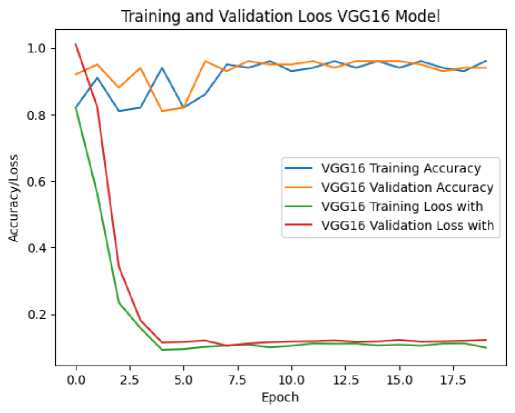

We ran the VGG16 model on our preprocessed dataset in order to train it for pest classification on oil palms. Three different pest types on oil palms were identified using VGG16, which is well-known for its efficiency in image classification tasks: Metisa plana, Setora nitens, and Setothosea asigna. Using the Adam optimizer, the model was trained for 20 epochs with a batch size of 16. Over the course of the 20 epochs, the VGG16 model's training results showed a noticeable improvement. On the training data, the model's accuracy was roughly 94.67% at first, and by the end of the training, it had increased to 97.76%.

Fig. 3. Training VGG16 Model

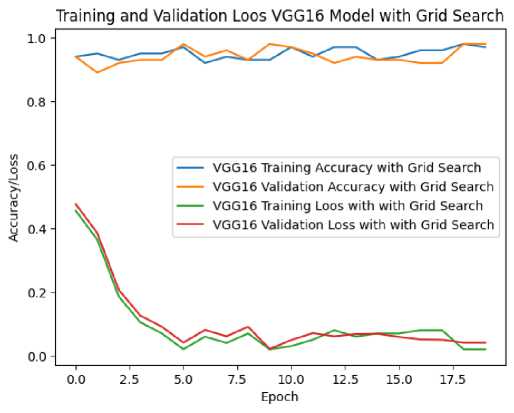

To achieve optimal results, several experiments were conducted during the VGG16 model's training phase for classifying pests on oil palms, with the number of epochs limited to 20. The significant increase in model accuracy observed in these experiments can be attributed to the fine-tuning procedure. Grid search was employed to explore various parameter combinations to identify the one that produced the highest accuracy. The experimental findings highlight the importance of precise parameter tuning in enhancing the VGG16 model's ability to recognize pests on oil palm plants. The results from the grid search for finding the optimal hyperparameters are presented in Table 1.

Table 1. Best Hyperparameters Using Grid Search

| Hyperparameter | Best value (grid search) |
|---|---|
| Batch size | 64 |
| Learning rate | 0.001 |
| Optimizer | SGD |
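The difference between the two search strategies used in this study can be sketched in plain Python. Grid search scores every combination in `param_grid`; random search scores only `n_iter` combinations sampled from the same space. The candidate values, `n_iter`, and the `toy_evaluate` function are hypothetical: in the actual experiments each candidate would require training VGG16 and measuring validation accuracy, and the text does not list the full set of values searched. scikit-learn offers the same two strategies as `GridSearchCV` (with `param_grid`) and `RandomizedSearchCV` (with `param_distributions` and `n_iter`).

```python
import itertools
import random
from typing import Callable, Dict, List, Tuple

Params = Dict[str, object]

# Hypothetical search space; the values actually searched are not listed in the text.
param_grid = {
    "batch_size": [16, 32, 64],
    "learning_rate": [0.01, 0.001, 0.0001],
    "optimizer": ["SGD", "RMSprop", "Adagrad", "Adam"],
}


def grid_candidates(grid: Dict[str, list]) -> List[Params]:
    """Every combination of the listed values (exhaustive grid search)."""
    keys = list(grid)
    return [dict(zip(keys, values)) for values in itertools.product(*grid.values())]


def random_candidates(grid: Dict[str, list], n_iter: int, seed: int = 0) -> List[Params]:
    """n_iter distinct combinations sampled uniformly without replacement."""
    rng = random.Random(seed)
    return rng.sample(grid_candidates(grid), n_iter)


def search(candidates: List[Params],
           evaluate: Callable[[Params], float]) -> Tuple[Params, float]:
    """Return the candidate with the highest score (first one wins ties)."""
    scored = [(evaluate(p), p) for p in candidates]
    best_score, best_params = max(scored, key=lambda t: t[0])
    return best_params, best_score


def toy_evaluate(p: Params) -> float:
    # Placeholder score so the sketch runs; NOT a model and NOT the study's results.
    return -abs(p["learning_rate"] - 0.001) - p["batch_size"] / 10000


best_grid, score_grid = search(grid_candidates(param_grid), toy_evaluate)  # 36 runs
best_rand, score_rand = search(random_candidates(param_grid, n_iter=10), toy_evaluate)  # 10 runs
print(best_grid, score_grid)
print(best_rand, score_rand)
```

Here random search trains 10 models instead of 36. Because it samples from the same discrete grid with a deterministic score, it cannot beat the exhaustive grid in this sketch; random search is more often run over continuous ranges, where it can try values a fixed grid would skip, and real training runs are noisy, so the two searches can report different winners.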

During the training phase, the grid-search fine-tuned VGG16 model was evaluated over 20 epochs, revealing clear trends in both training and validation metrics. Training accuracy started at 94% and, despite slight fluctuations, rose to 98% by the final epoch. Validation accuracy also started at 94% and, after some initial variability, increased steadily to 98% in the later epochs. Training loss fell from 0.456 to 0.02, indicating an improved fit to the training data, and validation loss fell from 0.477 to 0.041, indicating improved performance on data not used for training.

Fig. 4. Training VGG16 with Grid Search


3.4. Fine-Tuning VGG16 with Random Search


3.5. Evaluation

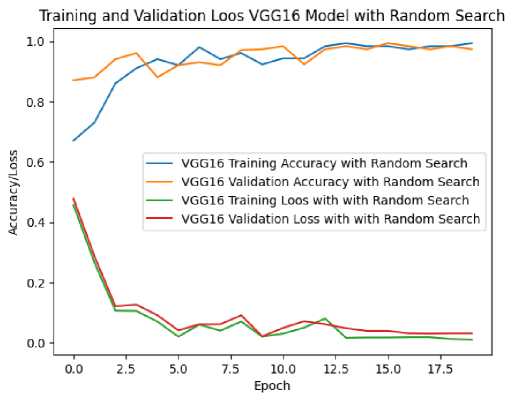

To achieve optimal results, a series of experiments were conducted during the training phase of the VGG16 model using random search for classifying pests in oil palms. The significant improvement in model accuracy observed in these experiments can be attributed to the fine-tuning process. By employing random search, various parameter combinations were explored to identify those yielding the highest accuracy. The experimental outcomes underscore the importance of precise parameter tuning in enhancing the VGG16 model's ability to accurately identify pests in oil palm plants. Table 2 presents the results of using random search to determine the optimal hyperparameters.

Table 2. Best Hyperparamter Using Random Search

|

Hyperparameter |

Fine Tune Random Search |

|

Batch Size |

Best: {'batch_size': 32} |

|

Learning Rate |

Best: {'optimizer_learning_rate': 0.001} |

|

Optimizer |

Best: {'optimizer: Adam} |

The VGG16 model underwent extensive training with fine-tuning through random search, focusing on optimizing its performance in classifying pests on oil palm plants. Over 20 epochs, the model showed significant improvements, with training accuracy rising from 67% to 99.29% and training loss decreasing from 0.456 to 0.01. Validation accuracy followed a similar trend, starting at 87% and peaking at 99.29%, while validation loss dropped from 0.477 to 0.031. The model's performance was marked by steady improvements in both accuracy and loss metrics, demonstrating its ability to learn and generalize effectively. The detailed epoch analysis highlighted rapid initial gains in accuracy and loss reduction, with stability achieved in the later stages.

Fig. 5. Training VGG16 with Random Search

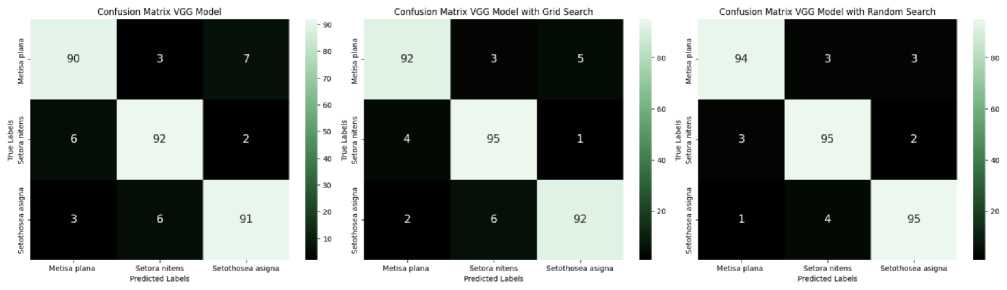

The evaluation phase examines the developed VGG16-based models in detail to assess their performance in classifying pests on oil palm plants. Accuracy, precision, recall, and F1-score are used to give a detailed picture of each model's performance. The default VGG16 model, the model fine-tuned with grid search, and the model fine-tuned with random search are compared directly, to determine whether fine-tuning yields significant gains and which model is best suited for classifying oil palm pests. Figure 6 shows the confusion matrices of the three VGG16 models for the three pest categories (Metisa plana, Setora nitens, and Setothosea asigna).

Tables 3-5 report the performance metrics of the three models: Table 3 for the default VGG16, Table 4 for VGG16 fine-tuned with grid search, and Table 5 for VGG16 fine-tuned with random search. Each table lists precision, recall, F1-score, and support for every class, together with the overall accuracy and the averaged metrics. Together with the confusion matrices, these metrics show the strengths and weaknesses of each configuration and indicate which strategy is best for oil palm pest classification.


Fig. 6. Confusion matrix (a) VGG16 (b) VGG16 with Grid Search (c) VGG16 with Random Search

Table 3. Performance of VGG16 for Palm Oil Pest Classification

| Class | Precision | Recall | F1-Score | Support |
|---|---|---|---|---|
| Metisa plana | 0.9091 | 0.9000 | 0.9045 | 100 |
| Setora nitens | 0.9109 | 0.9200 | 0.9154 | 100 |
| Setothosea asigna | 0.9100 | 0.9100 | 0.9100 | 100 |
| Accuracy | | | 0.9100 | 300 |
| Macro Average | 0.9100 | 0.9100 | 0.9100 | 300 |

Table 4. Performance of VGG16 with Fine Tune Grid Search for Oil Palm Pest Classification

| Class | Precision | Recall | F1-Score | Support |
|---|---|---|---|---|
| Metisa plana | 0.9388 | 0.9200 | 0.9293 | 100 |
| Setora nitens | 0.9135 | 0.9500 | 0.9314 | 100 |
| Setothosea asigna | 0.9388 | 0.9200 | 0.9293 | 100 |
| Accuracy | | | 0.9300 | 300 |
| Macro Average | 0.9303 | 0.9300 | 0.9300 | 300 |

Table 5. Performance of VGG16 with Fine Tune Random Search for Oil Palm Pest Classification

| Class | Precision | Recall | F1-Score | Support |
|---|---|---|---|---|
| Metisa plana | 0.9592 | 0.9400 | 0.9495 | 100 |
| Setora nitens | 0.9314 | 0.9500 | 0.9406 | 100 |
| Setothosea asigna | 0.9500 | 0.9500 | 0.9500 | 100 |
| Accuracy | | | 0.9467 | 300 |
| Macro Average | 0.9469 | 0.9467 | 0.9467 | 300 |

The default VGG16 model performed well in classifying oil palm pests, as reflected in its precision, recall, and F1-score. For Metisa plana, precision is approximately 90.91%, recall 90%, and F1-score 90.45%. For Setora nitens, precision is approximately 91.09%, recall 92%, and F1-score 91.54%. For Setothosea asigna, precision, recall, and F1-score are all approximately 91.00%. The model's overall accuracy is approximately 91.00%, indicating that the default VGG16 model classifies oil palm pests well, with every metric at or above 90%.

Fine-tuning VGG16 with grid search improved performance further. For Metisa plana, the model achieved a precision of approximately 93.88%, recall of 92%, and F1-score of 92.93%. For Setora nitens, precision is approximately 91.35%, recall 95%, and F1-score 93.14%. For Setothosea asigna, precision is approximately 93.88%, recall 92%, and F1-score 92.93%. The overall accuracy of 93% is two percentage points higher than that of the default model, showing that grid search fine-tuning produced a more accurate and effective classifier.
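The per-class figures in Table 4 can be cross-checked with simple arithmetic. With 100 test images per class, the reported recall and precision imply the true-positive and predicted counts used below; these counts are derived here and are not reported directly in the study. For Setothosea asigna, the harmonic mean of precision 0.9388 and recall 0.9200 is 0.9293, which is also consistent with the reported macro-average F1-score of 0.9300.

```python
# Counts back-derived from Table 4 (not reported directly): with 100 test
# images per class, recall = TP / 100 and precision = TP / (number predicted).
CLASSES = ["Metisa plana", "Setora nitens", "Setothosea asigna"]
TP = [92, 95, 92]
PREDICTED = [98, 104, 98]
SUPPORT = [100, 100, 100]


def f1(p: float, r: float) -> float:
    """Harmonic mean of precision and recall (Equation 4)."""
    return 2 * p * r / (p + r)


rows = []
for name, tp, pred, sup in zip(CLASSES, TP, PREDICTED, SUPPORT):
    p, r = tp / pred, tp / sup
    rows.append((name, round(p, 4), round(r, 4), round(f1(p, r), 4)))
    print(rows[-1])

accuracy = sum(TP) / sum(SUPPORT)  # diagonal of the confusion matrix / 300
macro_f1 = sum(f1(tp / pred, tp / sup)
               for tp, pred, sup in zip(TP, PREDICTED, SUPPORT)) / len(CLASSES)
print(round(accuracy, 4), round(macro_f1, 4))  # 0.93 0.93
```

A convenient identity for such checks is F1 = 2·TP / (predicted + actual), for example 2·92 / (98 + 100) ≈ 0.9293.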

Fine-tuning with random search gave the best results. For Metisa plana, the model achieved a precision of approximately 95.92%, recall of 94%, and F1-score of 94.95%. For Setora nitens, precision is approximately 93.14%, recall 95%, and F1-score 94.06%. For Setothosea asigna, precision, recall, and F1-score are all approximately 95%. The overall accuracy of 94.67% is the highest of the three configurations, showing that random search fine-tuning produced the most accurate and effective model in this study.

3.6. Discussion

The three models assessed in this study, the default VGG16, VGG16 fine-tuned with grid search, and VGG16 fine-tuned with random search, all performed well in categorizing pests on oil palm. The default VGG16 model already showed good accuracy, recall, and precision, and fine-tuning with grid search and random search increased performance further, from 91.00% to 93% and 94.67% accuracy, respectively. These two tuning strategies produced an accurate VGG16 model for oil palm pest identification, suggesting significant opportunities for improving pest monitoring and treatment practices.

Among comparable studies, Liu et al. [43] reported an accuracy of approximately 95.1% in identifying insect pests in rice fields, supporting crop protection programs and increased agricultural production. Wang et al. classified crop pests with approximately 91% accuracy, which can help farmers raise agricultural productivity. Barbedo and Castro [44] reported a 70% accuracy in recognizing psyllids, which suggests that convolutional neural networks are viable for pest identification, although further accuracy improvements are needed. Meanwhile, Alves et al. [45] classified pests in the field with 97.8% accuracy, providing strong support for pest monitoring and management in cotton cultivation.

The results of this study contribute to agricultural pest classification, particularly for oil palm farming. The study shows that a fine-tuned VGG16 model can classify oil palm pests accurately, offering useful knowledge for effective pest management and the protection of oil palm trees for both farmers and agricultural scientists. Although the classification of oil palm pests in this study is considered successful, it has certain limitations. The dataset is relatively small and could be expanded to improve sample diversity, and the model could be optimized further to reach higher accuracy.

Future work should add more varied oil palm pest species to expand the dataset. Adding attention mechanisms and tuning the model with methods such as Bayesian optimization may further improve accuracy. Combining drone-based automated control technologies with the Internet of Things (IoT) would also be advantageous: drones equipped with cameras and sensors can gather real-time data on the presence of pests in oil palm plantations, and automatically transferring these data across an IoT network enables a prompt response to pest infestations.

4. Conclusion

The results of this study demonstrate that the three evaluated models, the default VGG16, VGG16 with grid search fine-tuning, and VGG16 with random search fine-tuning, perform well in classifying pests on oil palm. The default VGG16 model achieved strong precision and recall and an overall accuracy of 91.00%. Fine-tuning brought substantial improvements, raising accuracy to 93% with grid search and 94.67% with random search. The fine-tuned VGG16 models achieve accuracy comparable to related pest classification studies. Future research should consider expanding the dataset, further optimizing the model, and integrating drone-based automatic control technology with the Internet of Things (IoT) to enhance accuracy and effectiveness in pest monitoring and management.

References

- J. A. Widians, M. Taruk, Y. Fauziah, and H. J. Setyadi, “Decision Support System on Potential Land Palm Oil Cultivation using Promethee with Geographical Visualization,” in Journal of Physics: Conference Series, Institute of Physics Publishing, Nov. 2019. doi: 10.1088/1742-6596/1341/4/042011.

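- H. Hayata, Y. Nengsih, and H. A. Harahap, “KERAGAMAN JENIS SERANGGA HAMA KELAPA SAWIT SISTEM PENANAMAN SISIPAN DAN TUMBANG TOTAL DI DESA PANCA MULIA KECAMATAN SUNGAI BAHAR TENGAH KABUPATEN MUARO JAMBI [Diversity of oil palm insect pest species under underplanting and total-felling replanting systems in Panca Mulia Village, Sungai Bahar Tengah District, Muaro Jambi Regency],” Jurnal Media Pertanian, vol. 3, no. 1, pp. 39–46, 2018.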

- H. J. Saragih and S. Afrianti, “TINGKAT SERANGAN HAMA ULAT KANTUNG (Mahasena corbetti) PADA AREAL TANAMAN MENGHASILKAN (TM) KELAPA SAWIT PT. INDO SEPADAN JAYA [Attack rate of the bagworm pest (Mahasena corbetti) in the mature oil palm area of PT. Indo Sepadan Jaya],” Perbal: Jurnal Pertanian Berkelanjutan, vol. 9, no. 2, pp. 88–93, 2021.

- L. A. Harahap, R. I. Fajri, M. F. Syahputra, R. F. Rahmat, and E. B. Nababan, “Identifikasi Penyakit Daun Tanaman Kelapa Sawit dengan Teknologi Image Processing Menggunakan Aplikasi Support Vector Machine [Identification of oil palm leaf diseases with image processing technology using a Support Vector Machine application],” Talenta Conference Series: Agricultural and Natural Resources (ANR), vol. 1, no. 1, pp. 53–59, Oct. 2018, doi: 10.32734/anr.v1i1.96.

- A. N. Amiri and A. Bakhsh, “An effective pest management approach in potato to combat insect pests and herbicide,” 3 Biotech, vol. 9, no. 1, p. 16, 2019, doi: 10.1007/s13205-018-1536-0.

- R. Mateos Fernández et al., “Insect pest management in the age of synthetic biology,” Plant Biotechnol J, vol. 20, no. 1, pp. 25–36, 2022, doi: 10.1111/pbi.13685.

- S. Habib, I. Khan, S. Aladhadh, M. Islam, and S. Khan, “External Features-Based Approach to Date Grading and Analysis with Image Processing,” Emerging Science Journal, vol. 6, no. 4, pp. 694–704, Aug. 2022, doi: 10.28991/ESJ-2022-06-04-03.

- J. Zhou, J. Li, C. Wang, H. Wu, C. Zhao, and G. Teng, “Crop disease identification and interpretation method based on multimodal deep learning,” Comput Electron Agric, vol. 189, p. 106408, 2021, doi: 10.1016/j.compag.2021.106408.

- Z. A. Khan, W. Ullah, A. Ullah, S. Rho, M. Y. Lee, and S. W. Baik, “An Adaptive Filtering Technique for Segmentation of Tuberculosis in Microscopic Images,” in Proceedings of the 4th International Conference on Natural Language Processing and Information Retrieval, in NLPIR ’20. New York, NY, USA: Association for Computing Machinery, 2021, pp. 184–187. doi: 10.1145/3443279.3443283.

- R. Ullah et al., “A Real-Time Framework for Human Face Detection and Recognition in CCTV Images,” Math Probl Eng, vol. 2022, p. 3276704, 2022, doi: 10.1155/2022/3276704.

- H. Al-Hiary, S. Bani-Ahmad, M. Reyalat, M. Braik, and Z. Alrahamneh, “Fast and Accurate Detection and Classification of Plant Diseases,” 2011.

- T. N. Nguyen, S. Lee, H. Nguyen-Xuan, and J. Lee, “A novel analysis-prediction approach for geometrically nonlinear problems using group method of data handling,” Comput Methods Appl Mech Eng, vol. 354, pp. 506–526, 2019, doi: 10.1016/j.cma.2019.05.052.

- F. Faithpraise and C. Chatwin, “Automatic plant pest detection and recognition using k-means clustering algorithm and correspondence filters,” Int J Adv Biotechnol Res, vol. 4, no. 2, pp. 189–199, 2013, [Online]. Available: http://sro.sussex.ac.uk

- T. Rumpf, A.-K. Mahlein, U. Steiner, E.-C. Oerke, H.-W. Dehne, and L. Plümer, “Early detection and classification of plant diseases with Support Vector Machines based on hyperspectral reflectance,” Comput Electron Agric, vol. 74, no. 1, pp. 91–99, 2010, doi: 10.1016/j.compag.2010.06.009.

- S. Aladhadh, S. Habib, M. Islam, M. Aloraini, M. Aladhadh, and H. S. Al-Rawashdeh, “An Efficient Pest Detection Framework with a Medium-Scale Benchmark to Increase the Agricultural Productivity,” Sensors, vol. 22, no. 24, 2022, doi: 10.3390/s22249749.

- S. Azfar, A. Nadeem, A. Hassan, and A. B. Shaikh, “Pest Detection and Control Techniques Using Wireless Sensor Network: A Review,” J Entomol Zool Stud, vol. 3, no. 2, 2015, [Online]. Available: https://www.researchgate.net/publication/275155897

- T. Kasinathan, D. Singaraju, and S. R. Uyyala, “Insect classification and detection in field crops using modern machine learning techniques,” Information Processing in Agriculture, vol. 8, no. 3, pp. 446–457, 2021, doi: 10.1016/j.inpa.2020.09.006.

- S. H. Chiwamba et al., “An Application of Machine Learning Algorithms in Automated Identification and Capturing of Fall Armyworm (FAW) Moths in the Field,” in PROCEEDINGS OF THE ICTSZ INTERNATIONAL CONFERENCE IN ICTs, 2018. [Online]. Available: https://www.researchgate.net/publication/331935302

- A. Tageldin, D. Adly, H. Mostafa, and H. S. Mohammed, “Applying Machine Learning Technology in the Prediction of Crop Infestation with Cotton Leafworm in Greenhouse,” bioRxiv, 2020, doi: 10.1101/2020.09.17.301168.

- S. P. Mohanty, D. P. Hughes, and M. Salathé, “Using deep learning for image-based plant disease detection,” Front Plant Sci, vol. 7, Sep. 2016, doi: 10.3389/fpls.2016.01419.

- A. Kaya, A. S. Keceli, C. Catal, H. Y. Yalic, H. Temucin, and B. Tekinerdogan, “Analysis of transfer learning for deep neural network based plant classification models,” Comput Electron Agric, vol. 158, pp. 20–29, 2019, doi: 10.1016/j.compag.2019.01.041.

- M. I. Dinata, S. Mardi Susiki Nugroho, and R. F. Rachmadi, “Classification of Strawberry Plant Diseases with Leaf Image Using CNN,” in 2021 International Conference on Artificial Intelligence and Computer Science Technology (ICAICST), 2021, pp. 68–72. doi: 10.1109/ICAICST53116.2021.9497830.

- M. Muhathir, M. H. Santoso, and R. Muliono, “Analysis Naïve Bayes In Classifying Fruit by Utilizing Hog Feature Extraction,” JOURNAL OF INFORMATICS AND TELECOMMUNICATION ENGINEERING, vol. 4, no. 1, pp. 151–160, Jul. 2020, doi: 10.31289/jite.v4i1.3860.

- C. T. Selvi, R. S. Sankara Subramanian, and R. Ramachandran, “Weed Detection in Agricultural fields using Deep Learning Process,” in 2021 7th International Conference on Advanced Computing and Communication Systems (ICACCS), 2021, pp. 1470–1473. doi: 10.1109/ICACCS51430.2021.9441683.

- S. N. A. M. Johari, S. Khairunniza-Bejo, A. R. M. Shariff, N. A. Husin, M. M. M. Masri, and N. Kamarudin, “Automatic Classification of Bagworm, Metisa plana (Walker) Instar Stages Using a Transfer Learning-Based Framework,” Agriculture, vol. 13, no. 2, 2023, doi: 10.3390/agriculture13020442.

- M. A. Malek, S. S. Reya, M. Z. Hasan, and S. Hossain, “A Crop Pest Classification Model Using Deep Learning Techniques,” in 2021 2nd International Conference on Robotics, Electrical and Signal Processing Techniques (ICREST), 2021, pp. 367–371. doi: 10.1109/ICREST51555.2021.9331154.

- A. Kamilaris and F. X. Prenafeta-Boldú, “Deep learning in agriculture: A survey,” Comput Electron Agric, vol. 147, pp. 70–90, 2018, doi: 10.1016/j.compag.2018.02.016.

- M. Tudi et al., “Agriculture development, pesticide application and its impact on the environment,” International Journal of Environmental Research and Public Health, vol. 18, no. 3. MDPI AG, pp. 1–24, Feb. 01, 2021. doi: 10.3390/ijerph18031112.

- N. Denan et al., “Predation of potential insect pests in oil palm plantations, rubber tree plantations, and fruit orchards,” Ecol Evol, vol. 10, no. 2, pp. 654–661, Jan. 2020, doi: 10.1002/ece3.5856.

- G. Chung, “Effect of Pests and Diseases on Oil Palm Yield,” 2012, pp. 163–210. doi: 10.1016/B978-0-9818936-9-3.50009-5.

- E. C. Tetila et al., “Detection and classification of soybean pests using deep learning with UAV images,” Comput Electron Agric, vol. 179, p. 105836, 2020, doi: 10.1016/j.compag.2020.105836.

- Y. Ai, C. Sun, J. Tie, and X. Cai, “Research on recognition model of crop diseases and insect pests based on deep learning in harsh environments,” IEEE Access, vol. 8, pp. 171686–171693, 2020, doi: 10.1109/ACCESS.2020.3025325.

- D. C. Amarathunga, J. Grundy, H. Parry, and A. Dorin, “Methods of insect image capture and classification: A Systematic literature review,” Smart Agricultural Technology, vol. 1. Elsevier B.V., Dec. 01, 2021. doi: 10.1016/j.atech.2021.100023.

- J. G. A. Barbedo, “Detecting and Classifying Pests in Crops Using Proximal Images and Machine Learning: A Review,” AI, vol. 1, no. 2, pp. 312–328, Jun. 2020, doi: 10.3390/ai1020021.

- M. Ula, M. Muhathir, and I. Sahputra, “Optimization of Multilayer Perceptron Hyperparameter in Classifying Pneumonia Disease Through X-Ray Images with Speeded-Up Robust Features Extraction Method,” International Journal of Advanced Computer Science and Applications (IJACSA), vol. 13, no. 10, 2022, [Online]. Available: www.ijacsa.thesai.org

- M. Muhathir, M. F. D. Ryandra, R. B. Y. Syah, N. Khairina, and R. Muliono, “Convolutional Neural Network (CNN) of Resnet-50 with Inceptionv3 Architecture in Classification on X-Ray Image,” in Artificial Intelligence Application in Networks and Systems, R. Silhavy and P. Silhavy, Eds., Cham: Springer International Publishing, 2023, pp. 208–221.

- M. N. Ahmad, A. R. M. Shariff, I. Aris, and I. Abdul Halin, “A Four Stage Image Processing Algorithm for Detecting and Counting of Bagworm, Metisa plana Walker (Lepidoptera: Psychidae),” Agriculture, vol. 11, no. 12, 2021, doi: 10.3390/agriculture11121265.

- S. N. A. M. Johari, S. Khairunniza-Bejo, A. R. M. Shariff, N. A. Husin, M. M. M. Masri, and N. Kamarudin, “Detection of Bagworm Infestation Area in Oil Palm Plantation Based on UAV Remote Sensing Using Machine Learning Approach,” Agriculture, vol. 13, no. 10, 2023, doi: 10.3390/agriculture13101886.

- M. Muhathir, N. Khairina, R. Karenina Isabella Barus, M. Ula, and I. Sahputra, “Preserving Cultural Heritage Through AI: Developing LeNet Architecture for Wayang Image Classification,” International Journal of Advanced Computer Science and Applications (IJACSA), vol. 14, no. 9, 2023, [Online]. Available: www.ijacsa.thesai.org

- R. Syuhada, Muhathir, N. Khairina, R. Muliono, Susilawati, and Z. Sembiring, “Analyzing the Effectiveness of VGG Deep Learning Architecture for Mushroom Type Classification,” in 2023 International Conference of Computer Science and Information Technology (ICOSNIKOM), Binjai, Indonesia, 2023, pp. 1–6, doi: 10.1109/ICoSNIKOM60230.2023.10364551.

- S. N, N. Emmanuel, K. Sri Phani Krishna, C. Chinnabbai, and K. Uma Krishna, “Artificial Intelligence for Classification and Detection of Major Insect Pests of Brinjal,” Indian Journal of Entomology, vol. 85, no. 3, pp. 563–566, Sep. 2023, doi: 10.55446/IJE.2023.1388.

- Z. Shanwen, X. Xinhua, Q. Guohong, and S. Yu, “Detecting the pest disease of field crops using deformable VGG-16 model,” Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), vol. 37, no. 18, pp. 188–194, 2021, doi: 10.11975/j.issn.1002-6819.2021.18.022.

- Z. Liu, J. Gao, G. Yang, H. Zhang, and Y. He, “Localization and Classification of Paddy Field Pests using a Saliency Map and Deep Convolutional Neural Network,” Sci Rep, vol. 6, Feb. 2016, doi: 10.1038/srep20410.

- J. G. A. Barbedo and G. B. Castro, “Influence of image quality on the identification of psyllids using convolutional neural networks,” Biosyst Eng, vol. 182, pp. 151–158, 2019, doi: 10.1016/j.biosystemseng.2019.04.007.

- A. N. Alves, W. S. R. Souza, and D. L. Borges, “Cotton pests classification in field-based images using deep residual networks,” Comput Electron Agric, vol. 174, p. 105488, 2020, doi: 10.1016/j.compag.2020.105488.