Performance of Personal Identification System Technique Using Iris Biometrics Technology

Authors: V.K. Narendira Kumar, B. Srinivasan

Journal: International Journal of Image, Graphics and Signal Processing (IJIGSP) @ijigsp

Article in issue: 5 vol.5, 2013.

Free access

Iris identification, one of the significant techniques of biometric identification systems, and an iris recognition algorithm are described. As biometric technology advances, intellectual property is sought by many unauthorized personnel. As a result, many researchers have been searching for more secure authentication methods for user access. Iris recognition uses iris patterns for personal identification. The system steps are: capturing iris patterns; determining the location of the iris boundaries; converting the iris region to the stretched polar coordinate system; and extracting an iris code based on texture analysis. The system has been implemented and tested using a dataset of iris samples with different contrast quality. The developed algorithm performs satisfactorily on the images and provides 93% accuracy. Experimental results show that the proposed method has an encouraging performance.

Biometrics, Iris, Feature Processing, Iris Acquisition, Template

Short URL: https://sciup.org/15012710

IDR: 15012710

Text of the scientific article: Performance of Personal Identification System Technique Using Iris Biometrics Technology

Recent advances in information technology and an increasing emphasis on security have drawn more attention to automatic personal identification systems based on biometrics. Biometric technology is an automated method for recognizing an individual based on physiological or behavioral characteristics. Among the present biometric traits, the iris is found to be the most reliable and accurate because of the rich texture of iris patterns, the persistence of its features throughout the lifetime of an individual, and because it is neither duplicable nor imitable. These characteristics make the iris suitable as a biometric feature for identifying individuals. Biometric systems use acquisition devices such as cameras and scanning devices to capture images, recordings, or measurements of an individual’s characteristics, and computer hardware and software to extract, encode, store, and compare these characteristics. Because the process is automated, biometric decision-making is generally very fast, in most cases taking only a few seconds in real time.

Iris recognition is one of the most reliable and accurate biometric systems available because of the uniqueness of the iris. The system analyzes the complex pattern of the iris, which can be a combination of specific characteristics known as corona, crypts, filaments, freckles, pits, radial furrows and striations. Compared with other biometric features such as face and fingerprint, iris patterns are more unique, reliable and stable with age. The use of iris patterns as a biometric method has several advantages, such as high fidelity and non-invasiveness. The work starts with image acquisition, followed by iris segmentation, which involves locating the iris and separating it from the rest of the eye image. Both the inner boundary and the outer boundary of a typical iris can be taken as circles, and the iris is localized between them. The localized iris is then unwrapped for use in feature extraction.

Iris recognition technology is based on the distinctly colored ring surrounding the pupil of the eye. Made from elastic connective tissue, the iris is a very rich source of biometric data, having approximately 266 distinctive characteristics. These include the trabecular meshwork, a tissue that gives the appearance of dividing the iris radially, with striations, rings, furrows, a corona, and freckles. Iris recognition technology uses about 173 of these distinctive characteristics. Formed during the 8th month of gestation, these characteristics reportedly remain stable throughout a person’s lifetime, except in cases of injury. Iris recognition can be used in both verification and identification systems. Iris recognition systems use a small, high-quality camera to capture a black and white, high-resolution image of the iris. The systems then define the boundaries of the iris, establish a coordinate system over the iris, and define the zones for analysis within the coordinate system [3].

The remaining sections are organized as follows. A brief outline of the iris structure and the iris camera is presented in Sections 2 and 3. The processing steps of the system methodology are described in Section 4. The other phases of the implementation of the iris authentication system are briefly explained in Section 5. Experimental results are given in Section 6. Finally, Section 7 presents the concluding remarks.

II. Literature Survey

Iris feature extraction methods are roughly divided into three major categories: phase-based methods, zero-crossing representation, and texture-analysis-based methods. The well-known phase-based methods for feature processing are the Gabor wavelet and the Log-Gabor wavelet. The 1-D wavelet is known for the zero-crossing representation. The Laplacian of Gaussian filter and Gaussian-Hermite moments are used in texture-analysis-based methods. There are several techniques for matching a captured iris template against an enrolled template. Among these, the Hamming distance, the weighted Euclidean distance and normalized correlation are popular. The Hamming distance measures how many bits differ between two bit patterns; computed via the XOR operator, the Hamming distance (HD) is used as the similarity measure between two iris templates. The weighted Euclidean distance (WED) measures how similar two collections of values are, and can be used to compare two templates, especially if the template is composed of integer values.

Normalized correlation was used for matching iris templates by Wildes et al. and Kim et al.
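The two non-binary measures mentioned above can be sketched as follows. This is a minimal illustration using one common formulation of each measure, not the exact formulas of the cited authors; the function names and the per-feature standard deviation argument `std` are assumptions introduced here.

```python
import numpy as np

def weighted_euclidean_distance(features, template, std):
    """Sum of squared feature differences, each scaled by that feature's variance.

    `std` is the per-feature standard deviation of the enrolled template
    (an assumed input; the article does not specify the weights).
    """
    features = np.asarray(features, dtype=float)
    template = np.asarray(template, dtype=float)
    std = np.asarray(std, dtype=float)
    return float(np.sum((features - template) ** 2 / std ** 2))

def normalized_correlation(a, b):
    """Correlation of two equally sized, non-constant intensity blocks, in [-1, 1]."""
    a = np.asarray(a, dtype=float).ravel()
    b = np.asarray(b, dtype=float).ravel()
    a = a - a.mean()
    b = b - b.mean()
    return float(np.sum(a * b) / (a.size * a.std() * b.std()))
```

A smaller weighted Euclidean distance indicates a closer match, whereas a normalized correlation closer to 1 indicates a closer match.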

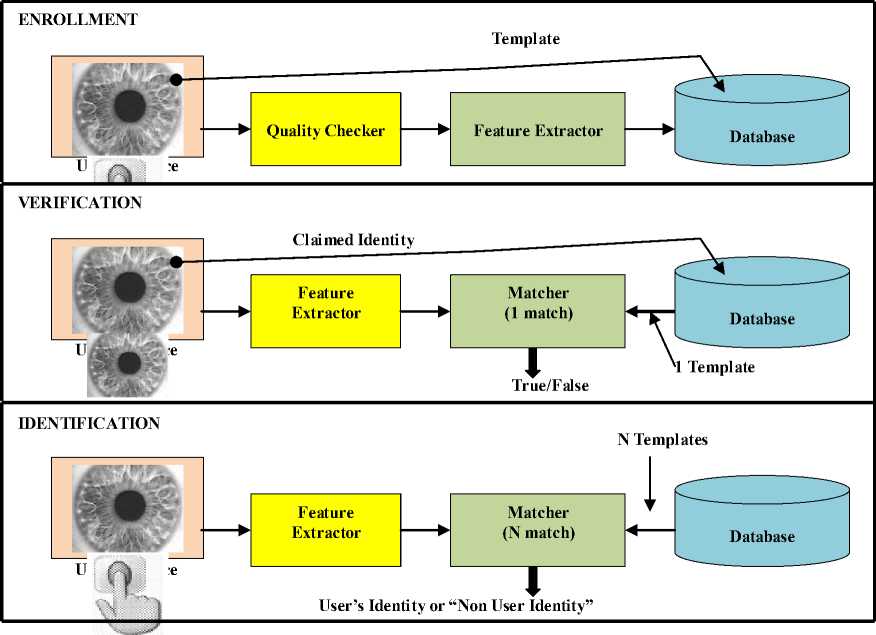

A. Biometric System Consists of Four Basic Modules

In a verification task, a person presents an identity claim to the system and the system only needs to verify the claim. In an identification task, an unknown individual presents himself or herself to the system, and it must identify them.

Enrollment Unit: The enrollment module registers individuals into the biometric system database. During this phase, a biometric reader scans the individual’s biometric characteristic to produce its digital representation (see Figure 1).

Feature Extraction Unit: This module processes the input sample to generate a compact representation called the template, which is then stored in a central database or a smartcard issued to the individual.

Matching Unit: This module compares the current input with the template. If the system performs identity verification, it compares the new characteristics to the user’s master template and produces a score or match value (one-to-one matching). A system performing identification matches the new characteristics against the master templates of many users, resulting in multiple match values (one-to-many matching).

Decision Maker: This module accepts or rejects the user based on a security threshold and matching score.

Fig. 1: Block diagrams of enrollment, verification, and identification tasks are shown using the four main modules of a biometric system.
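The difference between the verification and identification decisions can be sketched as below. This is an illustrative sketch only: it assumes the match score is a distance (lower means more similar), and the function names, user names and threshold value are hypothetical.

```python
def verify(score, threshold):
    """One-to-one matching: accept the identity claim if the distance is small enough."""
    return score <= threshold

def identify(scores_by_user, threshold):
    """One-to-many matching: return the closest enrolled user, or None if nobody is close enough."""
    if not scores_by_user:
        return None
    best_user = min(scores_by_user, key=scores_by_user.get)
    return best_user if scores_by_user[best_user] <= threshold else None

# Hypothetical distances between a new sample and two enrolled templates.
print(verify(0.25, threshold=0.32))
print(identify({"alice": 0.41, "bob": 0.22}, threshold=0.32))
```

Raising the threshold makes the decision maker more permissive, which trades false rejections for false acceptances.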

B. Attacks on a Biometric System

A biometric system is vulnerable to different types of attacks that can compromise the security afforded by the system, thereby resulting in system failure. These attacks fall into two basic types.

1) Zero-effort Attacks: The biometric traits of an opportunistic intruder may be sufficiently similar to a legitimately enrolled individual, resulting in a False Match and a breach of system security. This event is related to the probability of observing a degree of similarity between templates originating from different sources by chance.

2) Adversary Attacks: This refers to the possibility that a determined impostor would be able to masquerade as an enrolled user by using a physical or a digital artifact of a legitimately enrolled user. An individual may also deliberately manipulate his or her biometric trait in order to avoid detection by an automated biometric system.
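The chance similarity behind a zero-effort attack can be illustrated with a simple binomial model. This is a rough sketch under stated assumptions, not a result from the article: it assumes two unrelated templates disagree on each independent bit with probability 0.5, and it borrows the figure of 266 degrees of freedom that the article quotes for the iris; the function name is hypothetical.

```python
from math import comb

def chance_match_probability(threshold, degrees_of_freedom=266):
    """Probability that two unrelated codes disagree in at most `threshold` of their bits.

    Assumes independent bits, each disagreeing with probability 0.5 (binomial model).
    """
    n = degrees_of_freedom
    max_disagreeing = int(threshold * n)
    # Exact integer sum of binomial coefficients, divided once at the end.
    return sum(comb(n, k) for k in range(max_disagreeing + 1)) / 2 ** n

for t in (0.32, 0.40):
    print(t, chance_match_probability(t))
```

Under this model, lowering the decision threshold sharply reduces the probability of a false match by chance, at the cost of rejecting more genuine users.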

Besides this, there are other attacks that can be launched against an application whose resources are protected using biometrics.

Circumvention: An intruder may fraudulently gain access to the system by circumventing the biometric matcher and peruse sensitive data such as medical records pertaining to a legitimately enrolled user. Besides violating the privacy of the enrolled user, the impostor can modify sensitive data including biometric information.

Repudiation: A legitimate user may access the facilities offered by an application and then claim that an intruder had circumvented the system. A bank clerk, for example, may modify the financial records of a customer and then deny responsibility by claiming that an intruder must have spoofed her (i.e., the clerk’s) biometric trait and accessed the records.

Collusion: An individual with super-user privileges (such as an administrator) may deliberately modify biometric system parameters to permit incursions by a collaborating intruder.

Coercion: An impostor may force a legitimate user (e.g., at gunpoint) to grant him access to the system.

Denial of Service (DoS): An attacker may overwhelm the system resources to the point where legitimate users desiring access will be refused service. For example, a server that processes access requests can be bombarded with a large number of bogus requests, thereby overloading its computational resources and preventing valid requests from being processed.

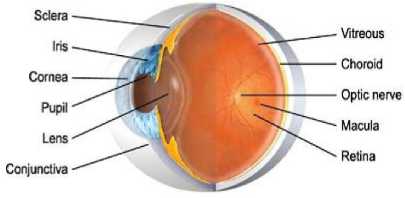

III. Iris Structure

The iris is the colored part of the eye behind the eyelids and in front of the lens. It is the only internal organ of the body that is normally externally visible. Its visible patterns are unique to each individual, and it has been found that the probability of finding two individuals with identical iris patterns is almost zero. Although the human eye is slightly asymmetrical and the pupil is slightly off center [2], for most practical cases the human eye is treated as symmetrical with respect to the line of sight. The iris controls the amount of light that reaches the retina. Because the iris is heavily pigmented, light passes through it only via the pupil, which contracts and dilates according to the amount of available light. Iris dimensions vary slightly between individuals. Its shape is conical, with the pupillary margin located more anteriorly than the root. A thickened region called the collarette divides the anterior surface into the ciliary and pupillary zones [1].

The iris is made up of four different layers and five basic sections (see Figure 2): the anterior border layer, the iris stroma, the sphincter, the dilator, and the posterior pigment epithelium. The anterior border layer lies, as its name implies, on the anterior surface of the iris. It is similar to the stroma in structure, but more densely packed. The individual pigment cells that make up both the anterior border layer and the iris stroma are called chromatophores. The stroma consists of the pigment cells and a collagenous connective tissue that is arranged in arch-like processes. Throughout the iris stroma are radially arranged, corkscrew-like blood vessels. Both of the iris muscles lie in the iris stroma. The first is the sphincter. The sphincter pupillae is a typical smooth muscle, lying directly in front of the neuroectodermal pigment epithelium. The dilator muscles are part of the pigmented myoepithelium, which lies directly in front of the posterior iris epithelium. The myoid elongations of the dilator cells extend in front of the pigmented cell bodies. The stroma, sphincter, and dilator are intimately connected, both physiologically and in movement. The final layer of the iris is the posterior iris epithelium, a heavily pigmented layer containing the pigment melanin. The main purpose of this heavy layer is to make the iris “light tight”, in other words impenetrable to light (much like the sclera).

Fig. 2: Structure of the Eye

The visual appearance of the iris is directly related to its multi-layered construction. The anterior layer is divided into two basic regions, the central pupillary zone and the surrounding ciliary zone. The border between these areas is known as the collarette. The collarette appears as a zigzag circumferential ridge where the anterior border layer begins to drop into the pupil. The ciliary zone is characterized by interlacing ridges resulting from stromal support. The ridges tend to vary with the state of the pupil (contracted or dilated). Other striations can be seen as an effect of the blood vessels beneath the surface. Crypts, nevi, and freckles make up the other main source of variation on the iris. A crypt is an irregular atrophy of the border layer. Nevi are small elevations in the border layer. Freckles are local collections of chromatophores. The pupillary zone, on the other hand, tends to be relatively flat. It occasionally features radiating spoke-like processes and a pigment frill where the posterior layer’s heavily pigmented tissue shows at the pupil boundary.

A. Iris Camera

The iris camera is the monochrome Cooke PixelFly QE, which is equipped with a 2/3” format Sony ICX 285AL CCD sensor and has boosted sensitivity in the NIR spectrum. High NIR sensitivity is desirable because it reduces the required level of illumination. The sensor resolution is 1394 by 1024 pixels, with a frame rate of 12 Hz. The sensor elements of this camera are relatively large at 6.45 by 6.45 µm, limiting the adverse effects of diffraction and thus allowing for high image contrast. The iris camera lens is the potentiometer-equipped Fujinon D16x73A-Y41. This lens provides programmable motorized focus and zoom (focal length 10–160 mm). A study of the trade-off between diffraction effects and depth of field resulted in an aperture setting of f/9.5, where the system is (just) diffraction limited. The depth of field is about 30 mm, which makes clear the need for accurate focal distance control. The lens is equipped with a 715 nm long-pass filter that blocks most of the ambient light and passes most of the LED NIR illumination. The iris camera and lens are configured to capture iris images at a resolution of approximately 200 pixels across the iris, with a field of view of 76 mm at a subject distance of 1.5 m.
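The quoted sampling density can be checked with simple arithmetic. The sketch below assumes that the 76 mm field of view spans the 1394-pixel sensor width and uses the 11 mm iris diameter quoted in the Iris Code subsection; the constant and function names are introduced here for illustration.

```python
SENSOR_WIDTH_PX = 1394      # horizontal sensor resolution quoted in the text
FIELD_OF_VIEW_MM = 76.0     # field of view at 1.5 m, assumed to span the sensor width
IRIS_DIAMETER_MM = 11.0     # iris diameter quoted in the Iris Code subsection

def pixels_across(object_mm, sensor_px=SENSOR_WIDTH_PX, fov_mm=FIELD_OF_VIEW_MM):
    """Number of pixels covering an object of the given width in the object plane."""
    return object_mm * sensor_px / fov_mm

print(f"{pixels_across(IRIS_DIAMETER_MM):.1f} pixels across the iris")
```

Under these assumptions the result is close to the figure of approximately 200 pixels across the iris stated above.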

IV. System Methodology

Iris recognition leverages the unique features of the human iris to perform identification and, in certain cases, verification. Iris recognition is based on qualities of the iris that are visible under regular and/or infrared light. A primary visible characteristic is the trabecular meshwork (permanently formed by the 8th month of gestation), a tissue which gives the appearance of dividing the iris in a radial fashion. Other visible characteristics include rings, furrows, freckles, and the corona, to cite only the more familiar.

A. Iris Scan

The user positions himself or herself so that the reflection of his or her own eye is visible in the device. Depending on the device, the user may be able to do this from up to 2 feet away or may need to be as close as a couple of inches. Verification time is generally less than 5 seconds, though the user only needs to look into the device for a couple of moments. To prevent a fake eye from being used to fool the system, these devices may vary the light shone into the eye and watch for pupil dilation.

Iris scans analyze the features that exist in the colored tissue surrounding the pupil, which has more than 200 points that can be used for comparison, including rings, furrows and freckles. The scans use a regular video camera and can be done from further away than a retinal scan. They work through glasses and are accurate enough to be used for identification purposes, not just verification. The uniqueness of eyes, even between the left and right eye of the same person, makes iris scanning very powerful for identification purposes. The likelihood of a false positive is extremely low, and the relative speed and ease of use make it a biometric with great potential [4].

B. Iris Code

Expressed simply, iris recognition technology converts these visible characteristics, as a phase sequence, into a 512-byte IrisCode(TM), a template stored for future identification attempts. From the iris's 11 mm diameter, Dr. Daugman's algorithms provide 3.4 bits of data per square mm. This density of information is such that each iris can be said to have 266 'degrees of freedom', as opposed to 13-60 for traditional biometric technologies. A key differentiator of iris-scan technology is the fact that a 512-byte template is generated for every iris, which facilitates match speed (over 500,000 templates can be matched per second).

C. Iris Image Acquisition

An important and difficult step of an iris recognition system is image acquisition. Since the iris is small in size and dark in color (especially for Asian people), it is difficult to acquire good images for analysis using a standard CCD camera and ordinary lighting. We have designed our own device for iris image acquisition [5], which can deliver iris images of sufficiently high quality.

D. Preprocessing

The acquired image always contains not only the ‘useful’ parts (iris) but also some ‘irrelevant’ parts (e.g. eyelid, pupil etc.). Under some conditions, the brightness is not uniformly distributed. In addition, different eye-to-camera distance may result in different image sizes of the same eye. For the purpose of analysis, the original image needs to be preprocessed. The preprocessing is composed of three steps [8].

Step 1: Iris Localization. Both the inner boundary and the outer boundary of a typical iris can be taken as circles, but the two circles are usually not concentric. The inner boundary between the pupil and the iris is detected by means of thresholding (see Figure 3, in which white outlines indicate the localization of the iris and eyelid boundaries). The outer boundary of the iris is more difficult to detect because of the low contrast between the two sides of the boundary. We detect the outer boundary by maximizing changes of the perimeter-normalized sum of gray level values along the circle. The technique is found to be efficient and effective.

Fig. 3: Human Iris Localization.

Step 2: Iris Normalization. The size of the pupil may change due to variation of the illumination and to hippus, and the associated elastic deformations in the iris texture may interfere with the results of pattern matching. For the purpose of accurate texture analysis, it is necessary to compensate for this deformation. Since both the inner and outer boundaries of the iris have been detected, it is easy to map the iris ring to a rectangular block of texture of a fixed size [6].

Step 3: Image Enhancement. The original iris image has low contrast and may have non-uniform illumination caused by the position of the light source. These may impair the result of the texture analysis. We enhance the iris image and reduce the effect of non-uniform illumination by means of local histogram equalization.
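The enhancement step can be sketched as a block-wise histogram equalization. This is a minimal sketch assuming an 8-bit grayscale image and non-overlapping square blocks; the article does not state the block size or the exact variant used, so `block_size` and both function names are assumptions.

```python
import numpy as np

def equalize_block(block):
    """Histogram-equalize one 8-bit block using its own cumulative histogram."""
    hist = np.bincount(block.ravel(), minlength=256)
    cdf = hist.cumsum()
    cdf_min = cdf[cdf > 0][0]              # cumulative count at the darkest gray level present
    if cdf[-1] == cdf_min:                 # constant block: nothing to stretch
        return block.copy()
    lut = np.round((cdf - cdf_min) / (cdf[-1] - cdf_min) * 255.0)
    lut = np.clip(lut, 0, 255).astype(np.uint8)
    return lut[block]                      # look up the new gray level of every pixel

def local_histogram_equalization(image, block_size=32):
    """Equalize each non-overlapping block independently to even out illumination."""
    image = np.asarray(image, dtype=np.uint8)
    out = np.empty_like(image)
    for r in range(0, image.shape[0], block_size):
        for c in range(0, image.shape[1], block_size):
            tile = image[r:r + block_size, c:c + block_size]
            out[r:r + block_size, c:c + block_size] = equalize_block(tile)
    return out
```

Because every block is stretched to the full gray range on its own, a slow brightness gradient across the normalized iris strip is largely removed, although block borders can become visible; overlapping or interpolated variants avoid that at a higher cost.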

V. Implementation of Iris Biometric System

To implement the iris system efficiently, the J2EE platform is used. It speeds up development of the system because it provides facilities to draw forms and to add libraries easily. Earlier researchers presented a system based on phase codes using Gabor filters for iris recognition and reported that it has excellent performance on a diverse database of many images. Another system, for personal verification based on automatic iris recognition, relies on image registration and image matching, which is computationally very demanding. A further algorithm extracts iris features using the zero-crossing representation of 1-D wavelet transforms. All these algorithms are based on grey images, and color information is not used; a grey iris image can provide enough information to identify different individuals. Iris identification is basically divided into four steps.

A. Capturing the Image

A good, clear image eliminates the need for noise removal and also helps to avoid errors in calculation. In practical applications of a workable system, an image of the eye to be analyzed must first be acquired in a digital form suitable for analysis.

B. Defining the Location of the Iris

The next stage of iris recognition is to isolate the actual iris region in a digital eye image. The only part of the eye carrying information is the iris. The iris region can be approximated by two circles, one for the iris-sclera boundary and another, interior to the first, for the iris-pupil boundary. The segmentation consists of binary segmentation, pupil center localization, circular edge detection and remapping.

1) Binary Segmentation: Finding the circular edges of the pupil and the limbus requires the location of the pupil center. The image is therefore segmented so that only the pupil is extracted.

2) Pupil Center Location: In the binary-segmented image, the row gradient is taken in one direction and the pixel location of the maximum gradient is found. The row gradient is then taken in the reverse direction and the pixel location of the maximum gradient is found again. The distance between these two locations is computed for every row, and the maximum of these distances corresponds to the diameter of the pupil circle. The row in which this diameter occurs gives the x co-ordinate of the pupil center. The same procedure is repeated for column gradients to obtain the y co-ordinate of the pupil center.

3) Circular Edge Detection: Iris analysis begins with a reliable means of establishing whether an iris is visible in the video image, and then precisely locating its inner and outer boundaries (pupil and limbus). Because of the felicitous circular geometry of the iris, these tasks can be accomplished on a raw input image.

The complete operator behaves in effect as a circular edge detector that searches iteratively for the maximum contour integral derivative with increasing radius, at successively finer scales of analysis, through the three-parameter space of center and radius (x, y, r) defining the path of contour integration. Fitting contours to images via this type of optimization formulation is a standard machine vision technique, often referred to as active contour modeling [7].
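The three segmentation steps above can be sketched on a thresholded image as follows. This is a simplified illustration, not the authors' operator: it counts pupil pixels per row and column instead of locating the two gradient maxima (equivalent for a single solid pupil blob), it keeps the center fixed at the pupil center instead of searching the full (x, y, r) space, and all function names are assumptions.

```python
import numpy as np

def pupil_center(binary):
    """Locate the pupil in a binary mask (True = pupil) from its widest row and column."""
    row_widths = binary.sum(axis=1)        # pupil pixels in every row
    col_heights = binary.sum(axis=0)       # pupil pixels in every column
    cy = int(np.argmax(row_widths))        # widest row passes through the center
    cx = int(np.argmax(col_heights))       # tallest column passes through the center
    radius = max(row_widths[cy], col_heights[cx]) / 2.0
    return cx, cy, radius

def circle_mean(image, cx, cy, r, samples=360):
    """Mean gray level along a circle, i.e. the perimeter-normalized contour sum."""
    theta = np.linspace(0.0, 2.0 * np.pi, samples, endpoint=False)
    xs = np.clip(np.round(cx + r * np.cos(theta)).astype(int), 0, image.shape[1] - 1)
    ys = np.clip(np.round(cy + r * np.sin(theta)).astype(int), 0, image.shape[0] - 1)
    return float(image[ys, xs].mean())

def find_boundary_radius(image, cx, cy, r_min, r_max):
    """Radius at which the circle mean changes most between consecutive radii."""
    radii = np.arange(r_min, r_max + 1)
    means = np.array([circle_mean(image, cx, cy, r) for r in radii])
    jumps = np.abs(np.diff(means))         # discrete derivative with respect to radius
    return int(radii[np.argmax(jumps) + 1])
```

A typical call sequence would threshold the eye image to obtain the pupil mask, call `pupil_center` on it, and then call `find_boundary_radius` with a search range that starts a few pixels outside the pupil radius so that the pupil edge itself is not selected.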

C. Remapping of the Iris

To make a detailed comparison between two images, it is advantageous to establish a precise correspondence between characteristic structures across the pair. The system compensates for image shift, scaling, and rotation. Given the system’s ability to aid operators in accurate self-positioning, these have proven to be the key degrees of freedom that require compensation. Iris localization is charged with isolating an iris in a larger acquired image and thereby essentially accomplishes alignment for image shift. The system uses radial scaling to compensate for overall size, as well as a simple model of pupil variation based on linear stretching. This scaling serves to map Cartesian image coordinates to dimensionless polar image coordinates [9].
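The remapping described above can be sketched as a linear radial stretch between the two boundaries. This is a minimal sketch that assumes concentric circular boundaries and nearest-neighbour sampling; the function name and the output resolution are assumptions, and the article notes that real pupil and iris circles are usually not concentric.

```python
import numpy as np

def unwrap_iris(image, cx, cy, r_pupil, r_iris, radial_res=32, angular_res=256):
    """Map the iris ring to a fixed-size rectangle in dimensionless polar coordinates.

    Rows index the normalized radius rho in [0, 1] (pupil edge to iris edge);
    columns index the angle theta in [0, 2*pi).
    """
    theta = np.linspace(0.0, 2.0 * np.pi, angular_res, endpoint=False)
    rho = np.linspace(0.0, 1.0, radial_res)
    # Linear stretching: rho = 0 lands on the pupil boundary, rho = 1 on the limbus.
    radius = r_pupil + rho[:, None] * (r_iris - r_pupil)
    xs = np.round(cx + radius * np.cos(theta)[None, :]).astype(int)
    ys = np.round(cy + radius * np.sin(theta)[None, :]).astype(int)
    xs = np.clip(xs, 0, image.shape[1] - 1)
    ys = np.clip(ys, 0, image.shape[0] - 1)
    return image[ys, xs]
```

Because the output size is fixed, a dilated and a contracted pupil of the same eye produce rectangles of equal size, and a rotation of the eye becomes a circular shift along the column axis.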

D. Feature Extraction

We implement two well-established texture analysis methods to extract features from the normalized block of texture image, namely the multi-channel Gabor filter and the wavelet transform. The process of feature extraction starts by locating the outer and inner boundaries of the iris. The second step finds the contour of the inner boundary, i.e., the iris-pupil boundary [7].
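Gabor-based phase coding of the normalized texture block can be sketched as below. This is an illustrative single-channel sketch, not the authors' multi-channel filter bank: it applies one 1-D complex Gabor kernel along the angular direction of each row and keeps two sign bits per sample. The kernel parameters and function names are assumptions.

```python
import numpy as np

def gabor_kernel(wavelength=16.0, sigma=8.0, half_width=24):
    """1-D complex Gabor kernel: a Gaussian envelope times a complex sinusoid."""
    x = np.arange(-half_width, half_width + 1)
    envelope = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    carrier = np.exp(2j * np.pi * x / wavelength)
    return envelope * carrier

def encode_rows(strip, kernel):
    """Filter every row circularly and quantize the phase of the response to 2 bits."""
    strip = np.asarray(strip, dtype=float)
    strip = strip - strip.mean(axis=1, keepdims=True)   # remove each row's mean level
    n = strip.shape[1]                                   # must be at least len(kernel)
    padded = np.zeros(n, dtype=complex)
    half = len(kernel) // 2
    padded[(np.arange(len(kernel)) - half) % n] = kernel  # center the kernel on index 0
    # Circular convolution via the FFT, since the angular axis wraps around.
    spectrum = np.fft.fft(strip, axis=1) * np.fft.fft(padded)[None, :]
    response = np.fft.ifft(spectrum, axis=1)
    bits = np.stack([response.real >= 0, response.imag >= 0], axis=-1)
    return bits.reshape(-1).astype(np.uint8)
```

Each sample contributes one bit for the sign of the real part and one for the sign of the imaginary part, so the code records only the phase quadrant and is insensitive to overall contrast.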

E. Matching

The generated bit patterns are compared to check whether two irises belong to the same person. The Hamming distance (HD) is calculated for this comparison; it is a fractional measure of the number of bits that disagree between two binary patterns. Two patterns from the same iris fail the test of statistical independence, since the distance between them is small. The test of matching is implemented by the simple Boolean Exclusive-OR operator (XOR) applied to the 2048-bit phase vectors that encode any two iris patterns. Letting A and B be two iris representations to be compared, this quantity can be calculated as

$$HD = \frac{1}{2048} \sum_{j=1}^{2048} A_j \oplus B_j$$

with subscript ‘j’ indexing bit position and $\oplus$ denoting the exclusive-OR operator.
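The XOR-based comparison described above can be sketched in a few lines. The sketch assumes that each iris code is stored as an array of 0/1 values of equal length; the function name is introduced here for illustration.

```python
import numpy as np

def hamming_distance(code_a, code_b):
    """Fraction of bit positions in which two equally long binary codes disagree."""
    a = np.asarray(code_a, dtype=np.uint8)
    b = np.asarray(code_b, dtype=np.uint8)
    if a.shape != b.shape:
        raise ValueError("iris codes must have the same length")
    disagreeing = np.count_nonzero(np.bitwise_xor(a, b))   # XOR marks differing bits
    return disagreeing / a.size

rng = np.random.default_rng(1)
code = rng.integers(0, 2, size=2048)
other = rng.integers(0, 2, size=2048)
print(hamming_distance(code, code), hamming_distance(code, other))
```

Identical codes give a distance of 0, while two unrelated random codes give a distance near 0.5, which is why a decision threshold well below 0.5 can separate the two cases.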

VI. Experimental Results

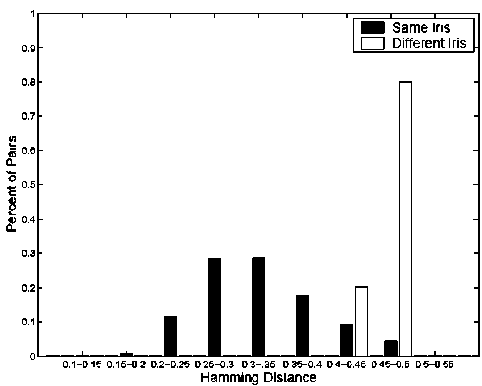

In the Iris Challenge Evaluation (ICE) baseline implementation, the iris segmentation step localizes the iris region in the image. Figure 4 shows an example of an iris image and the segmentation result from our implementation, called ND IRIS. The encoding stage uses filters to encode iris image texture patterns into a bit vector code. The corresponding matching stage calculates the distance between iris codes and decides whether it is a match (in the verification context), or recognizes the submitted probe iris from among the subjects in the gallery set (in the identification context).

(a) Gallery image

(b) Segmentation result

Fig. 4. The image of (a) gallery image and (b) segmentation result.

The pupil and iris boundaries shown here were found automatically by the software. For all the images in the probe set, the rank-one recognition rate for the left eye images is 89.64%. As noted earlier, localization results reported by the Iris LG 2200 system are available for only approximately 1/3 of the probe images. We calculated the HD between each probe image and each gallery image.
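Given the matrix of Hamming distances between probe and gallery images, the rank-one recognition rate can be computed as sketched below. The function name, the subject labels and the toy distances are hypothetical and serve only to show the calculation.

```python
import numpy as np

def rank_one_rate(distances, probe_ids, gallery_ids):
    """Fraction of probes whose closest gallery image belongs to the same subject.

    `distances[i, j]` is the distance between probe i and gallery image j.
    """
    distances = np.asarray(distances, dtype=float)
    best = np.argmin(distances, axis=1)            # index of the closest gallery image
    predicted = np.asarray(gallery_ids)[best]
    return float(np.mean(predicted == np.asarray(probe_ids)))

# Toy example: three probes against a gallery of two subjects.
toy = [[0.20, 0.45],
       [0.47, 0.30],
       [0.31, 0.44]]
print(rank_one_rate(toy, ["a", "b", "b"], ["a", "b"]))
```

In the toy example the third probe is closest to the wrong subject, so two of the three probes are recognized correctly at rank one.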

Fig. 5. The distribution of the Hamming distance for the basic experiments.

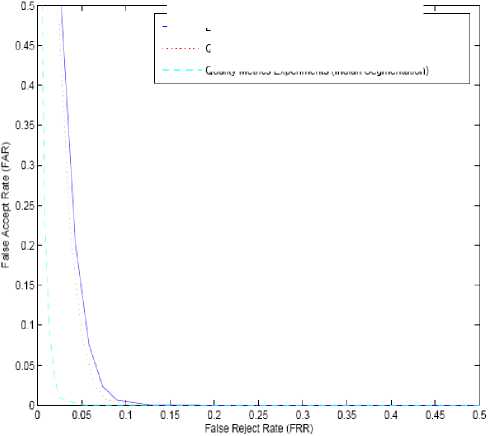

Figure 5 shows the distribution of the HDs. With an HD criterion of 0.32 for a verification decision, the false reject rate (FRR) is 44.73% and the false accept rate (FAR) is 0.00%. With an HD criterion of 0.4, the FRR is 12.80% and the FAR is 0.03%. The images for which the LG 2200 system automatically computed quality metrics are images of relatively higher quality. For the 4,249 probe images with quality metrics, the recognition accuracy for the left eye images is 90.92% using the ICE baseline’s segmentation (denoted ICE_IRIS) and 96.61% using the localization results reported by the LG 2200 (denoted Iris). For the images with LG 2200 quality metrics, the rank-one recognition rate obtained with the LG 2200 localization is thus around 5.7 percentage points higher than the rate obtained with the ICE localization. We therefore concluded that the Iris system localizes the iris more accurately than the ICE baseline approach.
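The trade-off between FRR and FAR at a given HD criterion can be sketched as follows. The distance values below are made up for illustration and are not the experimental data; the function name is an assumption.

```python
import numpy as np

def error_rates(genuine, impostor, threshold):
    """Return (FAR, FRR) when comparisons with HD <= threshold are accepted.

    `genuine` holds distances between images of the same eye,
    `impostor` holds distances between images of different eyes.
    """
    genuine = np.asarray(genuine, dtype=float)
    impostor = np.asarray(impostor, dtype=float)
    frr = float(np.mean(genuine > threshold))      # genuine pairs wrongly rejected
    far = float(np.mean(impostor <= threshold))    # impostor pairs wrongly accepted
    return far, frr

genuine = [0.20, 0.30, 0.35, 0.45]      # hypothetical same-eye distances
impostor = [0.38, 0.46, 0.50, 0.52]     # hypothetical different-eye distances
for t in (0.32, 0.40):
    print(t, error_rates(genuine, impostor, t))
```

As in the reported results, a stricter criterion lowers the FAR at the cost of a higher FRR, and a looser criterion does the opposite.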

Figure 6 shows the ROC curves for the iris verification experiments using the above three settings. To study the impact of quality metrics on the probe sets, we considered the effect of the three LG 2200 image quality metrics, “Percent Iris”, “Focus Value”, and “Motion Blur”, on the rank-one recognition rate, one by one. The Hamming distance is superior to other distance measures with respect to the speed of calculating the matching score in applications with a large database. In practice, iris recognition systems have been used with extensive databases because of the unique nature and extreme richness of the human iris. The compact size of the iris code allows matching iris features to be searched at very high speed.

Legend: Basic Experiments (ICE Segmentation); Quality Metrics Experiments (ICE Segmentation); Quality Metrics Experiments (Iridian Segmentation)

Fig. 6. The ROC for iris verification experiments.

Table I, Table II and Table III show the breakdown of the subset of images selected by the Iris system for computing performance metrics. The images are broken down by level of percent iris (Table I), focus value (Table II), or motion blur (Table III), as reported in the quality metrics. For each level of percent iris, focus value, or motion blur, the rank-one recognition rate for the ICE and Iris segmentation is reported. The gallery sets are the same as in the basic experiment; we only observe the impact of the three metrics on the probe sets. It is clear that a higher “Percent Iris” leads to a higher rank-one recognition rate. A higher “Focus Value” seems first to improve the rank-one recognition rate and then to decrease it a little. For the quality metric “Motion Blur”, there is no obvious trend. It is necessary to preprocess a captured eye image before extracting iris features. Preprocessing consists of interior boundary (between pupil and iris) detection and exterior boundary (between iris and sclera) detection using an edge detection algorithm.

TABLE I. The impact of the percent iris measurement on rank-one iris recognition rate

| Percent Iris | <50 | 50-59 | 60-69 | 70-79 | 80-89 | 90-99 | 100 |
| --- | --- | --- | --- | --- | --- | --- | --- |
| # of Examples | 12 | 61 | 189 | 680 | 1355 | 1858 | 94 |
| ICE | 33.3% | 62.3% | 74.6% | 83.8% | 90.6% | 96.6% | 93.6% |
| Iris | 50.0% | 70.5% | 86.2% | 94.1% | 97.3% | 99.0% | 100.0% |
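As a consistency check on Table I, the per-bin rates can be pooled with the bin counts as weights. The sketch below copies the values from the table; the function and variable names are introduced here, and small deviations from the reported overall rates are expected because the table entries are rounded to one decimal place.

```python
counts = [12, 61, 189, 680, 1355, 1858, 94]                 # "# of Examples" row
ice_rates = [33.3, 62.3, 74.6, 83.8, 90.6, 96.6, 93.6]      # ICE row, in percent
iris_rates = [50.0, 70.5, 86.2, 94.1, 97.3, 99.0, 100.0]    # Iris row, in percent

def pooled_rate(counts, rates):
    """Count-weighted average of per-bin recognition rates, in percent."""
    return sum(n * r for n, r in zip(counts, rates)) / sum(counts)

print(sum(counts))
print(round(pooled_rate(counts, ice_rates), 2))
print(round(pooled_rate(counts, iris_rates), 2))
```

The bin counts add up to the 4,249 probe images with quality metrics, and the pooled rates land close to the overall 90.92% and 96.61% reported for the two segmentation methods.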

TABLE II. The impact of the focus value measurement on rank-one iris recognition rate

| Focus Value | <40 | 40-49 | 50-59 | 60-69 | 70-79 | 80-89 | 90-99 | 100 |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| # of Examples | 8 | 0 | 539 | 484 | 530 | 680 | 1862 | 146 |
| ICE | 62.5% | N/A | 92.0% | 91.9% | 90.2% | 91.5% | 90.8% | 86.3% |
| Iris | 87.5% | N/A | 97.2% | 97.5% | 97.5% | 96.6% | 96.2% | 93.8% |

TABLE III. The impact of the motion blur measurement on rank-one iris recognition rate

| Motion Blur | 0-4 | 5-9 | 10-14 | 15-19 | 20-24 | 25 and above |
| --- | --- | --- | --- | --- | --- | --- |
| # of Examples | 3145 | 629 | 291 | 156 | 26 | 2 |
| ICE | 90.8% | 91.9% | 90.0% | 94.9% | 73.1% | 50.0% |
| Iris | 96.6% | 96.8% | 96.2% | 98.1% | 92.3% | 100.0% |

The impact of “percent iris” on the rank-one iris recognition rate confirms that a higher “percent iris” helps to improve the rank-one recognition rate. With ICE baseline segmentation, the experiments using iris images with percent iris greater than 90 produce 63 errors in rank-one recognition. Of these 63 cases, 52 are caused by serious iris segmentation errors in the related gallery image, in the related probe image, or in both. This confirms again that inaccurate segmentation is a key problem in iris recognition. It also shows that even the commercial system is not 100% accurate in iris segmentation. Figure 5.3 shows examples of wrong segmentation using ICE segmentation and the Iris system’s reported segmentation.

Then we further examined the impact of focus value on these iris images with percent iris greater than 90.

The images are divided into two groups according to whether the focus value is greater than 80 or not. For each group, there are some subjects for whom we could not find suitable gallery images, so the number of gallery images in each of the two groups is less than the number of gallery images when focus values are not distinguished. We observed that using images with a higher focus value gives a better result than using images with lower focus values. For both groups, however, the results are not higher than the result obtained without splitting the iris images into groups according to focus value.

The eye images used here are from a database. The eye image database contains 756 grayscale eye images with 108 unique eyes or classes and 7 different images of each unique eye. Images from each class are taken in two sessions, with a one-month interval between sessions. The images were captured especially for iris recognition research using specialized digital. The eye images are mainly from persons of Asian descent, whose eyes are characterized by densely pigmented irises and dark eyelashes. Owing to the specialized imaging conditions using near infra-red light, features in the iris region are highly visible and there is good contrast between the pupil, iris and sclera regions.

Experimentation was carried out on a total of 45 images in 15 different classes, where each class contains 3 images (left, right and centre). Based on the inter-class and intra-class Hamming distances, performance measures such as the false match rate (FMR) and the false non-match rate (FNMR) are calculated for both methods, i.e. using the Log-Gabor filter with a single orientation and with four orientations. Another important aspect is the area of the iris used for processing: only a half circle of the iris is used in both methods. Based on our results, we recommend that a measure of pupil dilation be created as meta-data associated with each generated iris code. This would allow systems to characterize the reliability of an iris code match as a function of the pupil dilations in the underlying images. Our results also concern the feature processing that turns the iris image into an iris code. When the degree of pupil dilation is large, so that the width of the iris is small in pixels, it may be worthwhile to include a super-resolution step in the pre-processing. Iris recognition, as a biometric technology, has great advantages, such as variability, stability and security, and thus it will have a variety of applications.
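The Hamming-distance comparison and the FMR and FNMR measures mentioned above can be sketched as follows. This is a minimal illustration under stated assumptions, not the implementation used in the experiments: the function names, the optional validity mask, the decision threshold of 0.40, and all sample values are hypothetical.

```python
import numpy as np

def hamming_distance(code_a, code_b, valid=None):
    """Fraction of differing bits between two binary iris codes.

    `valid` is an optional boolean mask (an assumption of this sketch)
    marking the bits that should be compared, e.g. bits not hidden by eyelids.
    """
    code_a = np.asarray(code_a, dtype=bool)
    code_b = np.asarray(code_b, dtype=bool)
    if valid is None:
        valid = np.ones(code_a.shape, dtype=bool)
    valid = np.asarray(valid, dtype=bool)
    n_valid = int(valid.sum())
    if n_valid == 0:
        raise ValueError("no valid bits to compare")
    return float(((code_a ^ code_b) & valid).sum() / n_valid)

def fmr_fnmr(intra_class, inter_class, threshold):
    """FMR and FNMR at a given Hamming-distance threshold.

    A pair is declared a match when its distance is <= threshold.
    """
    intra_class = np.asarray(intra_class, dtype=float)
    inter_class = np.asarray(inter_class, dtype=float)
    fmr = float((inter_class <= threshold).mean())   # different eyes accepted
    fnmr = float((intra_class > threshold).mean())   # same eye rejected
    return fmr, fnmr

# Hypothetical 8-bit codes; real iris codes are far longer.
hd = hamming_distance([1, 0, 1, 1, 0, 0, 1, 0],
                      [1, 1, 1, 0, 0, 0, 1, 1])

# Hypothetical distance samples and threshold.
intra = [0.20, 0.28, 0.35, 0.41]
inter = [0.38, 0.44, 0.47, 0.49, 0.52]
fmr, fnmr = fmr_fnmr(intra, inter, threshold=0.40)
print(f"HD = {hd:.3f}, FMR = {fmr:.2f}, FNMR = {fnmr:.2f}")
```

Raising the threshold lowers the FNMR at the cost of a higher FMR, so the two measures are normally reported together for the chosen operating point.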

VII. Conclusion

This work is an attempt to examine iris biometrics with the aim of improving personal identification. We proposed a new feature processing method for iris recognition, which is regarded as the most reliable biometric technology. Major challenges and their corresponding solutions are discussed. Further, we have focused only on the core technology of iris recognition: capturing the image, locating the iris, feature extraction, and matching. Iris recognition has proven to be a very useful and versatile security measure. It is a quick and accurate way of identifying an individual with no room for human error. Our approach is comparable to all existing approaches with respect to the number of bits used to represent feature vectors and the processing time. As far as the accuracy rate is concerned, our approach also leads the existing approaches except the approach proposed. In the meantime, we, as a society, have time to decide how we want to use this new technology. By implementing reasonable safeguards, we can harness the power of the technology to maximize its public safety benefits while minimizing the intrusion on individual privacy.
