Quality of Experience Improvement and Service Time Optimization through Dynamic Computation Offloading Algorithms in Multi-access Edge Computing Networks

Authors: Marouane Myyara, Oussama Lagnfdi, Anouar Darif, Abderrazak Farchane

Journal: International Journal of Computer Network and Information Security @ijcnis

Article in issue: 4 vol.16, 2024.

Open access

Multi-access Edge Computing optimizes computation in proximity to smart mobile devices, addressing the limitations of devices with insufficient capabilities. In scenarios featuring multiple compute-intensive and delay-sensitive applications, computation offloading becomes essential. The objective of this research is to enhance user experience, minimize service time, and balance workloads while optimizing computation offloading and resource utilization. In this study, we introduce dynamic computation offloading algorithms that concurrently minimize service time and maximize the quality of experience. These algorithms take into account task and resource characteristics to determine the optimal execution location based on evaluated metrics. To assess the impact of the proposed algorithms, we employed the EdgeCloudSim simulator, which offers a realistic assessment of a Multi-access Edge Computing system. Simulation results showcase the superiority of our dynamic computation offloading algorithm compared to alternatives, achieving enhanced quality of experience and minimal service time. The findings underscore the effectiveness of the proposed algorithm and its potential to enhance mobile application performance. The comprehensive evaluation provides insights into the robustness and practical applicability of the proposed approach, positioning it as a valuable solution in the context of MEC networks. This research contributes to the ongoing efforts in advancing computation offloading strategies for improved performance in edge computing environments.

Computation Offloading, Quality of Experience, Service Time, Workload Orchestration, Multi-access Edge Computing Network

Short URL: https://sciup.org/15019292

IDR: 15019292 | DOI: 10.5815/ijcnis.2024.04.01

1. Introduction

Nowadays, the proliferation of smart mobile devices with diverse functionalities has created a demand for faster and more personalized services. These applications, which are often resource-intensive, require significant computation and real-time communication capabilities. However, due to the limited resources of mobile devices, including storage and computing capabilities, end users often find the services offered by these devices unsatisfactory compared to traditional desktop computers [1]. Furthermore, with the exponential growth of the Internet of Things (IoT), involving billions of mobile devices, along with the increasing utilization of artificial intelligence (AI) and virtual and augmented reality (VR/AR) technologies, there is a pressing need for communication networks to connect this vast number of mobile devices and computing resources to efficiently process the generated data [2]. However, the amount of data generated by various mobile devices is increasing rapidly, necessitating quick storage and processing capabilities near the end users. Centralized cloud computing environments, which concentrate computing, processing, and storage resources within data centers, present challenges in terms of real-time, large-scale data processing. Additionally, data centers located far from end users make cloud computing less advantageous for low-latency applications, potentially causing network congestion when transmitting data generated by interconnected devices within the same network. To address these challenges associated with cloud computing, a new technology has emerged in recent years, known as Multi-access Edge Computing (MEC).

MEC is a distributed computing architecture designed to bring computing, processing, and storage capabilities in proximity to end-user devices. By deploying resources at the network edge, MEC enhances the efficiency and performance of mobile applications and services. It enables real-time data processing for time-sensitive applications and reduces reliance on centralized cloud computing. MEC offers low latency, high reliability, and high bandwidth, making it well-suited for accommodating numerous mobile devices and diverse applications that demand uninterrupted service and real-time processing capabilities [3]. It facilitates the deployment of new services and applications while enhancing the user experience. MEC plays a pivotal role in the deployment of emerging technologies such as IoT and 5G, which require more computing and processing resources, as well as high-reliability and low-latency services [4]. A typical MEC framework consists of a central cloud data center, multiple MEC servers, and numerous mobile devices. End users utilize mobile applications containing resource-intensive and latency-sensitive computational tasks. When an application task exceeds the processing power available in the mobile device, it can be offloaded to an MEC server or the cloud through an appropriate offloading process, enabling mobile device users to benefit from improved performance. This mechanism allows users to access and process data in real-time, with high bandwidth and low latency. MEC therefore can deliver computing services with a shorter runtime, thus improving quality of service (QoS) levels [5].

While computation offloading techniques in MEC and research contributions aimed at improving MEC technology performance are relatively recent and limited, the implementation of new offloading strategies and processes to enhance the performance of mobile devices and provide better service and experience qualities is becoming increasingly essential. This paper aims to contribute to the development of MEC functionalities by addressing the following research questions: How can the quality of experience (QoE) for mobile users be enhanced in MEC environments? How can the service time of computational tasks be minimized through computation offloading? What novel offloading and workload management strategies can be proposed to improve the overall performance of mobile devices in MEC? How can dynamic offloading algorithms optimize resource utilization and enhance the efficiency of MEC systems? To achieve these goals, a novel offloading and workload management strategy is proposed in this paper, aiming to reduce service time and maximize the quality of experience while respecting task delays and deadlines. The proposed offloading algorithms improve the overall performance of mobile devices by dynamically deploying and exploiting computing resources based on task characteristics, different application constraints, and available resources in the MEC infrastructure. The primary contributions of this research can be summarized as follows:

• We have proposed and modeled a novel three-tiered multi-access edge computing system designed to provide the necessary computational resources for handling computational tasks from various mobile devices. This system architecture is designed to efficiently distribute computational load and improve overall system performance.

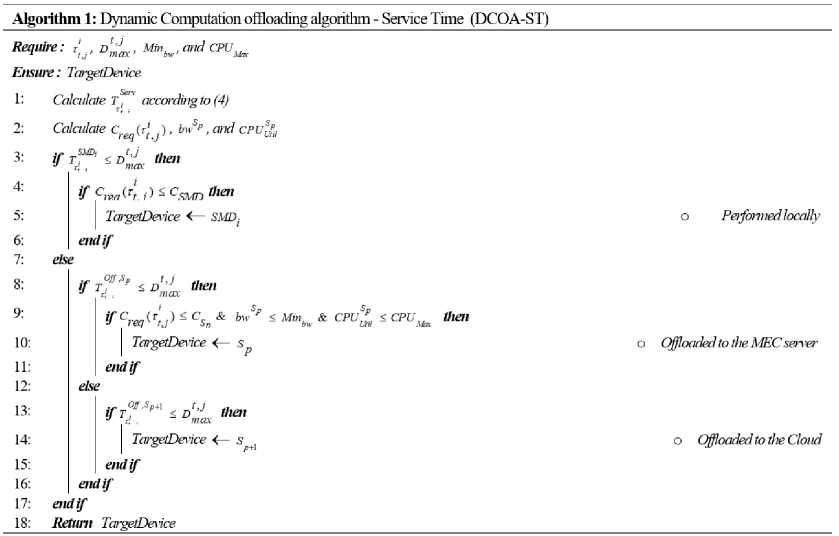

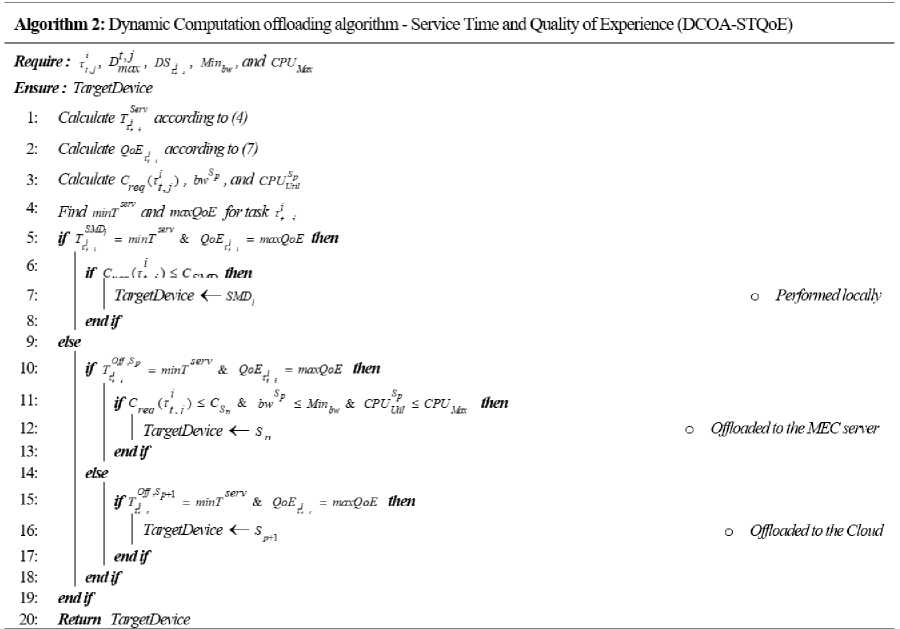

• To further enhance the system's performance, we have proposed two algorithms: the Dynamic Computation Offloading Algorithm - Service Time (DCOA-ST) and the Dynamic Computation Offloading Algorithm - Service Time and Quality of Experience (DCOA-STQoE). These algorithms are specifically designed to dynamically offload computational tasks based on the characteristics of each application and the availability of computational resources. By intelligently allocating tasks, these algorithms optimize resource utilization and enhance overall system efficiency.

• We conducted a thorough performance evaluation of the proposed approaches using the EdgeCloudSim simulator. This simulation framework allowed us to accurately simulate and analyze the problem of offloading computations in various studied scenarios. Through extensive evaluation, we were able to assess the effectiveness and efficiency of the proposed algorithms, providing valuable insights into their performance characteristics and potential benefits.

The remainder of this paper is structured as follows. Section 2 provides a summary of the related work, discussing relevant studies and approaches in the MEC field. Section 3 describes the system model and architecture for multi-access edge computing. Section 4 presents the dynamic computation offloading algorithms. Performance evaluation of the proposed algorithms is presented in Section 5. Finally, Section 6 concludes the entire paper and presents possible directions for future work.

2. Related Works

The rapid growth of mobile devices and applications places significant demands on real-time computing. Despite increasing computational power, meeting the rigorous requirements of such applications remains challenging for mobile devices [6]. To address mobile limitations in computing capacity, storage, and batteries, offloading resource-intensive tasks to servers with greater resources, like Cloud or Edge servers, is a viable solution.

Computation offloading in Multi-access Edge Computing allows mobile users to transfer tasks to connected servers for execution, offering a decentralized approach. Task offloading comes in two types: full and partial. This paper primarily focuses on full offloading, where tasks are entirely transferred to an edge server, with no local execution on the mobile device. The process involves transferring input data to a remote machine, triggering the execution of a corresponding module, and sending back the output data. This sequence is termed an offloaded computation. Local execution on the mobile machine is considered a local computation [7]. Numerous studies prioritize computational offloading in Multi-access Edge Computing networks, emphasizing complete task offloading to an edge server for each user request [8,9]. Further work has concentrated on offloading computations in MEC architecture with multiple servers, presenting a greater challenge as the offloading decision not only involves determining if a request must be offloaded but also selecting the optimal destination [10,11]. The computational offloading problem of minimizing cost or maximizing gain was addressed in [12] and [13], respectively. Zhang et al. [14] studied maximizing system utility while ensuring tasks with high computational requirements meet deadline constraints.

Efficient task offloading strategy design in an MEC environment with devices requiring significant computational resources is a current research focus [15]. Edge computation offloading for delay-sensitive applications has garnered attention, with Naouri et al. [16] implementing a three-layer offloading architecture, considering computation and communication costs to minimize processing delay. Chen et al. [17] addressed the task offloading problem by formulating it as a stochastic optimization problem, applying techniques to ensure queue latency guarantees and minimize energy consumption during transmission. Ning et al. [18] studied latency and efficiency in offloading within MEC networks, addressing scenarios with both single and multiple users using suitable algorithms such as branch and bound and iterative heuristic resource allocation. In Edge computing, the Mobile Edge Orchestrator (MEO) is crucial, as per the ETSI MEC reference architecture [19]. The MEO manages computing, network, and storage resources, coordinating and optimizing resource allocation for efficient workload execution [10]. It plays a critical role in information management for offloading decisions, leveraging application task requirements to determine suitable servers. This aligns resource information with application needs, allowing the MEO to select the optimal host based on suitability [20]. The MEO serves two essential functions: monitoring systems and identifying optimal virtual machines (VMs) for offloading tasks. Strategies consider factors like data transfer size, network latency, and server capabilities, adapting to edge computing infrastructure characteristics [21].

To optimize MEC system performance, using online computation offloading algorithms with the Mobile Edge Orchestrator is crucial for efficient workload distribution to appropriate servers. This approach considers the unique features of mobile, cloud, and edge computing, addressing real-time and online challenges effectively [22]. Simultaneously, the workload orchestrator plays a vital role in identifying target servers based on the characteristics of incoming application tasks and the specific attributes of MEC architecture [23]. Integrating workload orchestration and computation offloading algorithms proves highly effective, supporting the workload orchestrator's operations and improving overall system performance. From the literature review, previous studies have suggested diverse approaches to tackle the computational offloading problem in mobile edge computing, often leveraging the assistance of the Mobile Edge Orchestrator. However, notable metrics such as QoS and QoE evaluation, virtual machine utilization, and the percentage of failed tasks have been neglected in existing research. These metrics are pivotal for assessing the effectiveness of computational offloading strategies but have not received sufficient attention in prior studies. Our proposal distinguishes itself from existing works by focusing on the characteristics of application tasks and their constraints, resource computing capabilities, and network constraints, which play a crucial role in improving QoS in MEC. By developing a framework for the orchestration of computing workloads based on MEO, we provide a practical and efficient solution for task offloading and optimal resource management to improve the overall quality of service and experience.

3. System Model and Problem Formulation

3.1. MEC Multi-layer Architecture Overview

This section outlines the structure of the workload orchestration mechanism in a multi-layer MEC system. We detail the employed computation offloading strategy and provide insights into the overall system architecture addressing the offloading problem through workload orchestration in MEC systems.

Workload orchestration efficiently selects the optimal server or compute unit for processing tasks by analyzing user requests and monitoring factors like task characteristics and server utilization. This reduces task service time, ensuring prompt completion and an enhanced quality of experience. By leveraging this approach, the need for task transmission to the cloud server is circumvented, eliminating additional delays and conserving bandwidth. Selecting a suitable MEC server capable of efficiently handling time-sensitive tasks optimizes the offloading process, minimizing latency for delay-sensitive tasks and improving overall system performance.

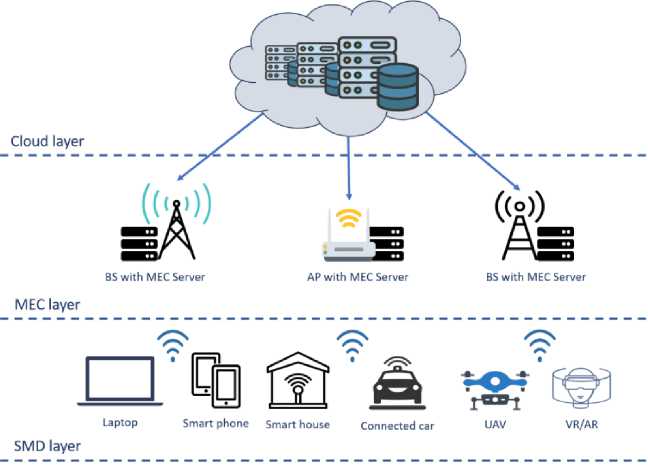

Fig.1 depicts our multi-access, multi-layer edge computing architecture, featuring Smart Mobile Devices (SMDs) connected to MEC servers. The architecture has three layers for efficient computation offloading. The first layer includes SMDs (e.g., smartphones, laptops, connected cars, unmanned aerial vehicles, and smart homes) interacting with WLAN access points through wireless signals, handling various computational tasks, such as virtual and augmented reality applications. SMDs can execute tasks locally or offload them based on a defined policy. The second layer comprises strategically positioned MEC servers connected via a MAN. The third layer involves global cloud servers from providers like Microsoft Azure and Amazon AWS, accessible through a WAN connection. This layered architecture facilitates efficient task offloading and workload orchestration, ensuring optimized processing of computational tasks.

Fig.1. Multi-layer multi-access edge computing architecture

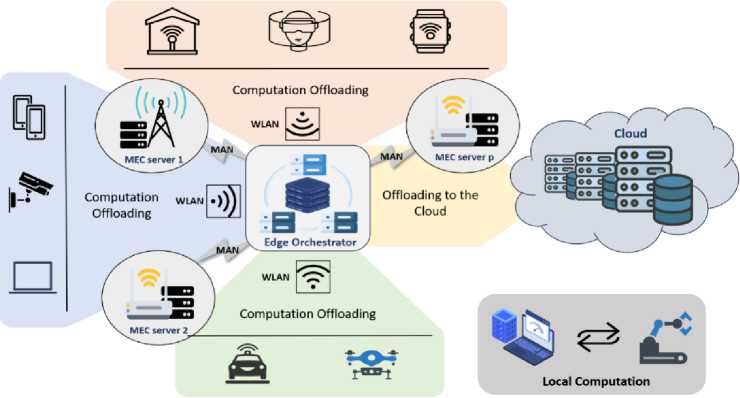

Fig.2. Computation offloading in MEC architecture

In our implementation, smart mobile devices continuously run compute-intensive applications, generating task traffic eligible for efficient processing. Multiple strategically located MEC servers near users ensure comprehensive coverage for all SMDs in the architecture (Fig.2). The decision-making process for offloading primarily resides with the SMD. Tasks generated at the SMD layer prompt an evaluation of the device's computational capacity, determining the need for offloading. Tasks not executable locally on the SMDs are then forwarded to the Edge Orchestrator (EO) for further processing. The EO possesses knowledge of infrastructure resources, server statuses, and available computing capacity for each virtual machine in the network. Upon receiving offloaded tasks, the EO decides where to execute them and which target server to allocate them to. The decision considers options like offloading to an MEC server via a metropolitan area network or sending them to the cloud through a wide area network connection. Workload distribution, resource availability, and network conditions influence this decision. By distributing the offloading decision-making process between SMDs and the EO, we optimize task execution, leveraging device computational capacity and effectively utilizing MEC servers and cloud resources.


3.2. System Model

In the multi-layer MEC setup, SMDs can locally execute tasks or offload them to edge or cloud servers, requiring sufficient processing capabilities. MEC server operations involve a host and a data center, utilizing virtualization for resource allocation. A time-sharing policy ensures timely task completion by allowing concurrent execution and avoiding timeouts. Additionally, a space-sharing policy follows the first-in, first-out rule [24], scheduling tasks concurrently to optimize resource utilization and minimize waiting time.

Existing computation offloading strategies often neglect diverse MEC resources, resulting in sub-optimal performance. Our focus is on performance enhancement using online offloading algorithms and the mobile edge orchestrator. The primary objective is to optimize task service time, impacting overall system efficiency by minimizing the duration from task reception to processing completion. In the MEC system framework (Fig.2), let $E_S = \{S_1, S_2, \ldots, S_p, S_{p+1}\}$ be the set of MEC and Cloud servers, with $p$ as the total number of MEC servers. Additionally, let $VM_{S_k} = \{vm^{k}_{1}, vm^{k}_{2}, \ldots, vm^{k}_{n}\}$ be the set of virtual machines on each MEC server $S_k$. To differentiate between VMs in MEC and the Cloud server, $VM_C = \{vmc_1, vmc_2, \ldots, vmc_m\}$ represents the set of VMs in the Cloud server $S_{p+1}$, where $m$ is the total number of Cloud VMs.

A. Task Model

In contrast to other works [25,26], our study assumes that each SMD is not limited to a single task but possesses a set of tasks. Given a set of applications $E_A$, the tasks for each SMD are denoted by $A_{SMD_i} = \{T^{i}_{t,1}, T^{i}_{t,2}, \ldots, T^{i}_{t,j}\}$. Here, $SMD_i$ represents smart mobile device $i$, $t$ denotes the application type, and $j$ signifies the total number of tasks in application $t$. Each task, treated as an atomic unit of input data without fragmentation, has three parameters: task length $L_{T^{i}_{t,j}}$, indicating the task's data amount transferred to the MEC server or the Cloud; delay sensitivity $DS_{T^{i}_{t,j}}$, specifying task sensitivity to delay (with a value close to 1 indicating high sensitivity); and maximum delay demand $D^{max}_{T^{i}_{t,j}}$, indicating the maximum acceptable delay for local or selected server processing.

B. Decision Variables

The decision variables represent the offloading decisions for each task. For each task $T^{i}_{t,j}$, a binary decision variable $x_{T^{i}_{t,j}}$ is defined as follows:

$$
x_{T^{i}_{t,j}} =
\begin{cases}
1, & \text{if task } T^{i}_{t,j} \text{ is offloaded to MEC or Cloud,} \\
0, & \text{if task } T^{i}_{t,j} \text{ is executed locally on the SMD.}
\end{cases}
$$

C. Computation Model

The task computation model incorporates both local and remote execution times. The total service time of a task depends on the selected offloading decision. If task $T^{i}_{t,j}$ is executed locally, the service time is given by:

$$
T^{Serv}_{T^{i}_{t,j}} = T^{SMD_i}_{T^{i}_{t,j}}
$$

If task $T^{i}_{t,j}$ is offloaded, the service time is the sum of transmission time and processing time on the selected server:

$$
T^{Serv}_{T^{i}_{t,j}} = T^{Off,S_p}_{T^{i}_{t,j}}
$$

The service time constraints are formulated as follows:

$$
T^{Serv}_{T^{i}_{t,j}} =
\begin{cases}
T^{SMD_i}_{T^{i}_{t,j}} = \dfrac{L_{T^{i}_{t,j}}}{F_{SMD_i}}, & \text{if } x_{T^{i}_{t,j}} = 0 \\[2ex]
T^{Off,S_p}_{T^{i}_{t,j}} = T^{Trans,S_p}_{T^{i}_{t,j}} + T^{Process,S_p}_{T^{i}_{t,j}}, & \text{if } x_{T^{i}_{t,j}} = 1
\end{cases}
$$

Here, $L_{T^{i}_{t,j}}$ denotes the amount of data for task $T^{i}_{t,j}$, and $F_{SMD_i}$ is the processing speed of device $SMD_i$ in MIPS (Million Instructions Per Second). $T^{Trans,S_p}_{T^{i}_{t,j}}$ represents the transmission time of task $T^{i}_{t,j}$ to server $S_p$, including the time to transfer task data from the mobile device to the MEC server or the Cloud.

$$
T^{Trans,S_p}_{T^{i}_{t,j}} = T^{Up,S_p}_{T^{i}_{t,j}} + T^{Dw,S_p}_{T^{i}_{t,j}}
$$

The upload and download data transfer times, denoted by $T^{Up,S_p}_{T^{i}_{t,j}}$ and $T^{Dw,S_p}_{T^{i}_{t,j}}$ respectively, account for the transfers of task input data from the mobile device to the target server $S_p$ and output data from the computing server to the source mobile device. These delays are computed using a network model integrated into the EdgeCloudSim simulator [27], which dynamically evaluates data transmission delays considering the locations and number of mobile devices, traffic, and network types. The processing time, denoted $T^{Process,S_p}_{T^{i}_{t,j}}$, represents the time required to execute the task on the MEC or Cloud server, and $F_{S_p,n}$ represents the processing speed of the $n$-th virtual machine of server $S_p$ in MIPS.

$$
T^{Process,S_p}_{T^{i}_{t,j}} = \frac{L_{T^{i}_{t,j}}}{F_{S_p,n}}
$$
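As an illustration of the computation model, the following minimal Python sketch evaluates the local and offloaded service times for one task. It assumes the task length is expressed in million instructions so that dividing by a speed in MIPS yields seconds, and that the upload and download delays are already known (in the paper they come from the simulator's network model). The class name `Task`, the function names, and all numeric values are hypothetical and chosen only for the example.

```python
from dataclasses import dataclass


@dataclass
class Task:
    length_mi: float   # task length L, assumed to be in million instructions
    upload_s: float    # upload delay to the chosen server in seconds (assumed given)
    download_s: float  # download delay back to the device in seconds (assumed given)


def local_service_time(task: Task, smd_mips: float) -> float:
    """Local execution: task length divided by the device speed."""
    return task.length_mi / smd_mips


def offload_service_time(task: Task, vm_mips: float) -> float:
    """Offloaded execution: transmission (upload + download) plus processing."""
    transmission = task.upload_s + task.download_s
    processing = task.length_mi / vm_mips
    return transmission + processing


def service_time(task: Task, offload: bool, smd_mips: float, vm_mips: float) -> float:
    """Piecewise service time selected by the binary offloading decision."""
    if offload:
        return offload_service_time(task, vm_mips)
    return local_service_time(task, smd_mips)


# Hypothetical task and speeds (4000 MIPS device, 10000 MIPS edge VM).
task = Task(length_mi=8000.0, upload_s=0.25, download_s=0.25)
print("local    :", service_time(task, False, smd_mips=4000.0, vm_mips=10000.0))
print("offloaded:", service_time(task, True, smd_mips=4000.0, vm_mips=10000.0))
```

With these illustrative numbers, offloading is faster despite the transfer delay because the faster VM more than compensates for the half second spent on the network; a slower link or a shorter task would reverse the outcome.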

D. Quality of Experience Model

In QoE-based computation offloading, the crucial task is to determine the optimal location for executing computational tasks. The implementation of computation offloading must maximize the user's QoE while meeting the system's quality of service, including service time, task deadline satisfaction, and task failure rate. To address this, we propose a QoE model that deduces QoE from task execution times and their delay sensitivities. The QoE model is defined by the formula in Equation (7), which incorporates both service time and task sensitivity.

$$
QoE_{T^{i}_{t,j}} =
\begin{cases}
1, & \text{if } T^{Serv}_{T^{i}_{t,j}} \le D^{max}_{T^{i}_{t,j}} \\[2ex]
\left(1 - \dfrac{T^{Serv}_{T^{i}_{t,j}} - D^{max}_{T^{i}_{t,j}}}{D^{max}_{T^{i}_{t,j}}}\right) \times \left(1 - DS_{T^{i}_{t,j}}\right), & \text{if } D^{max}_{T^{i}_{t,j}} < T^{Serv}_{T^{i}_{t,j}} < 2\,D^{max}_{T^{i}_{t,j}} \\[2ex]
0, & \text{if } T^{Serv}_{T^{i}_{t,j}} \ge 2\,D^{max}_{T^{i}_{t,j}}
\end{cases}
\tag{7}
$$

The delay sensitivity of task $T^{i}_{t,j}$ is denoted by $DS_{T^{i}_{t,j}}$, and $D^{max}_{T^{i}_{t,j}}$ represents the delay requirement, setting the maximum permissible execution time. The delay requirement determines the allowed service time for the task. The delay sensitivity, ranging from 0 to 1, indicates the level of tolerance towards delays. For applications intolerant to delays, the delay sensitivity value approaches 1. These parameters are application-specific and determined by the applications generating the tasks. The QoE value equals 1 when the execution time of the task is shorter than the deadline requirement $D^{max}_{T^{i}_{t,j}}$, indicating that the task is accomplished within the application-defined deadline. As the task execution time exceeds the deadline, the QoE value decreases based on the delay sensitivity. If the service time exceeds twice the maximum delay value, the quality of experience for the task is reset to zero, indicating that the task has significantly surpassed the acceptable delay limit. This formulation captures the relationship between the service time, delay requirement, and delay sensitivity, allowing for the evaluation of the quality of experience for each task based on its execution time.
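The QoE rule described above can be sketched as a small Python function. This is an illustrative reading of the model, not the authors' implementation: it assumes the QoE is 1 up to the deadline, 0 from twice the deadline onward, and in between falls linearly with the relative overshoot, scaled by one minus the delay sensitivity. The function name `qoe` and the sample values are hypothetical.

```python
def qoe(service_time: float, max_delay: float, delay_sensitivity: float) -> float:
    """QoE of one task from its service time, deadline and delay sensitivity."""
    if max_delay <= 0:
        raise ValueError("max_delay must be positive")
    if not 0.0 <= delay_sensitivity <= 1.0:
        raise ValueError("delay_sensitivity must lie in [0, 1]")
    if service_time <= max_delay:
        return 1.0  # finished within the application-defined deadline
    if service_time >= 2.0 * max_delay:
        return 0.0  # more than twice the deadline: no perceived quality left
    # Relative overshoot beyond the deadline, in (0, 1).
    overshoot = (service_time - max_delay) / max_delay
    return (1.0 - overshoot) * (1.0 - delay_sensitivity)


# Hypothetical tasks sharing a 1.0 s deadline.
print(qoe(0.8, 1.0, 0.9))  # on time
print(qoe(1.5, 1.0, 0.5))  # 50 % late, moderately delay-sensitive
print(qoe(2.5, 1.0, 0.5))  # far beyond the deadline
```

Under this reading, a task that is 50 % late with a delay sensitivity of 0.5 keeps a quarter of its QoE, while the same lateness on a task with sensitivity close to 1 drives the QoE close to zero.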

3.3. Problem Formulation

In the Multi-access Edge Computing environment, the goal is to optimize the execution of computational tasks from various mobile devices, addressing challenges related to service time, quality of experience, and resource constraints. This involves determining the most suitable execution location for each task to achieve a balance between service time and user satisfaction.

A. Service Time Optimization

The service time optimization problem involves efficiently allocating computational resources to handle tasks originating from different mobile devices. The objective is to minimize the overall service time for executing these tasks while considering the capabilities of the available computing resources.

$$
\begin{aligned}
\text{Minimize} \quad & T_{overall} = \sum_{\forall T^{i}_{t,j}} \left( T^{SMD_i}_{T^{i}_{t,j}} \cdot \left(1 - x_{T^{i}_{t,j}}\right) + T^{Off,S_p}_{T^{i}_{t,j}} \cdot x_{T^{i}_{t,j}} \right) \\
\text{Subject to:} \quad & \\
(C1) \quad & x_{T^{i}_{t,j}} \in \{0, 1\}, \quad \forall T^{i}_{t,j} \\
(C2) \quad & T^{Serv}_{T^{i}_{t,j}} \cdot x_{T^{i}_{t,j}} \le D^{max}_{T^{i}_{t,j}}, \quad \forall T^{i}_{t,j} \\
(C3) \quad & L_{T^{i}_{t,j}} \le C_{S_p}, \quad \forall T^{i}_{t,j}, S_p \\
(C4) \quad & C^{req}_{T^{i}_{t,j}} \cdot x_{T^{i}_{t,j}} \le C_{S_p}, \quad \forall T^{i}_{t,j}, S_p \\
(C5) \quad & bw_{S_p} \cdot x_{T^{i}_{t,j}} \le Min_{bw}, \quad \forall T^{i}_{t,j}, S_p \\
(C6) \quad & CPU^{U}_{S_p} \le CPU_{Max}, \quad \forall S_p
\end{aligned}
$$

The optimization problem is subject to various constraints (C1) to (C6) that ensure a feasible and reliable allocation for computational tasks in a MEC environment. Here, $\forall T^{i}_{t,j}$ indicates that the constraints apply to all tasks, applications, and SMDs. Constraint (C1) enforces that each task is either offloaded or executed locally, but not both. (C2) ensures that the service time of a task does not exceed its maximum acceptable delay. (C3) and (C4) restrict the allocated resources on the selected server to meet the task's computational requirements. (C5) and (C6) set constraints on the network bandwidth and CPU utilization, respectively, ensuring that the selected server satisfies specific requirements for efficient task offloading.

These constraints collectively define a well-posed optimization problem that seeks an optimal solution for the allocation and execution of computational tasks in a dynamic MEC environment, considering factors such as delay, resource availability, and overall system performance. The objective and constraints together provide the foundation for implementing an efficient and reliable computational offloading algorithm in MEC scenarios.
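To make the structure of the service time problem concrete, the sketch below enumerates every binary offloading vector for a handful of tasks and keeps the feasible one with the smallest overall service time. It is a toy brute-force illustration, not the online method proposed in the paper: it assumes the local and offloaded service times are known in advance, applies the deadline to whichever service time the decision selects (the way the prose describes (C2)), and omits the resource, bandwidth and CPU constraints (C3) to (C6), which depend on simulator state. All names and numbers are hypothetical.

```python
from itertools import product
from typing import Optional, Sequence, Tuple


def overall_service_time(local: Sequence[float], offload: Sequence[float],
                         x: Sequence[int]) -> float:
    """Objective: sum of the local or offloaded time selected by each x."""
    return sum(l * (1 - xi) + o * xi for l, o, xi in zip(local, offload, x))


def feasible(local: Sequence[float], offload: Sequence[float],
             deadlines: Sequence[float], x: Sequence[int]) -> bool:
    """C1 (binary decisions) and the deadline constraint on the chosen time."""
    for l, o, d, xi in zip(local, offload, deadlines, x):
        if xi not in (0, 1):
            return False
        chosen = o if xi == 1 else l
        if chosen > d:
            return False
    return True


def best_assignment(local: Sequence[float], offload: Sequence[float],
                    deadlines: Sequence[float]) -> Optional[Tuple[float, Tuple[int, ...]]]:
    """Exhaustive search over all 2^n decision vectors (small n only)."""
    best = None
    for x in product((0, 1), repeat=len(local)):
        if not feasible(local, offload, deadlines, x):
            continue
        total = overall_service_time(local, offload, x)
        if best is None or total < best[0]:
            best = (total, x)
    return best


# Three hypothetical tasks: service times and deadlines in seconds.
local_times = [2.0, 0.4, 3.0]
offload_times = [1.3, 0.9, 1.0]
deadlines = [1.5, 1.0, 1.2]
print(best_assignment(local_times, offload_times, deadlines))
```

In this example the first and third tasks can only meet their deadlines when offloaded, while the second is cheaper to run locally. The search space doubles with every task, which is one reason an online heuristic is more practical than exhaustive optimization at the scale of thousands of devices.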

B. Quality of Experience Optimization

The quality of experience optimization problem involves maximizing the overall satisfaction or quality of user experience while executing tasks on mobile devices. The objective is to optimize the QoE by considering factors such as service time, network latency, and resource constraints.

$$
\begin{aligned}
\text{Maximize} \quad & Q_{overall} = \sum_{\forall T^{i}_{t,j}} QoE_{T^{i}_{t,j}} \\
\text{Subject to:} \quad & (C1), (C2), (C3), (C4), (C5), (C6)
\end{aligned}
$$

The constraints (C1) through (C6) address fundamental aspects, including task allocation, service time, network bandwidth, and CPU utilization. By incorporating these constraints, the QoE optimization problem aims to discover the optimal allocation strategy that enhances user satisfaction by considering service time, delay sensitivity, network bandwidth, and CPU utilization.

4. Proposed Approach

Efficient task offloading involves considering factors like server utilization, application characteristics, network conditions, and SMD computing capabilities. However, the dynamic nature of these parameters in the MEC environment makes determining the optimal offloading strategy challenging. Resource constraints in scenarios with limited MEC server capacity and numerous SMDs can lead to task failures. Offline optimization methods are less effective due to the dynamic nature of parameters and incomplete infrastructure information. Solving the multi-constraint optimization problem associated with workload orchestration using a mathematical model is complex. Hence, an online technique leveraging computational offloading algorithms with the mobile edge orchestrator is preferred. To address dynamic environment challenges, we propose a dynamic computational offloading algorithm optimizing service time and meeting specified delay requirements. The algorithm prioritizes minimizing service time within given delay constraints, enhancing overall service quality. It also considers the quality of experience, determining the task execution location maximizing QoE while adhering to the quality of service constraints. Combining these considerations, our algorithm aims for an optimal balance between service time, delay objectives, and user experience. The algorithm consists of the following steps:

• Step 1: Estimate the service time for the three layers of the architecture (local execution, MEC, or Cloud server);

• Step 2: Evaluate the quality of experience provided by each layer;

• Step 3: Identify the execution site that minimizes the service time and maximizes the quality of experience;

• Step 4: Select the least loaded virtual machine within the chosen server, guided by the orchestrator's decision;

• Step 5: Allocate tasks to the selected sites with the fastest expected service time and the best quality of experience.

By dynamically adapting to the changing conditions of the infrastructure, mobile users, and their applications, our algorithm aims to optimize task offloading and improve the overall QoE on mobile devices.

4.1. Dynamic Computation Offloading Algorithm for Service Time Optimization

To address the challenges of a dynamic environment, we introduce the DCOA-ST (Dynamic Computation Offloading Algorithm - Service Time). This algorithm minimizes service time while considering task sensitivity requirements. Upon task arrival, DCOA-ST selects the optimal execution location and a suitable server for task execution, factoring in task characteristics and resource computing capacity. The service time is influenced by processing time within the unit and transfer time to the server, considering task length, virtual machine capacity, and upload/download delays affected by network latency. Task transfer times to servers are determined using the network model in the simulator. Algorithm 1 predicts task service time by considering task characteristics, server capacities, and SMD capacities.

The DCOA-ST algorithm comprises three steps. Firstly, it predicts the execution time for each task across three layers: local execution, MEC, and Cloud server. In the second step, the algorithm selects the execution location that meets the maximum delay condition, ensuring task completion within the specified deadline. The third step identifies the best virtual machine on the chosen server using a resource optimization and load-balancing algorithm, aiming to select the VM with the least load to optimize resource utilization and evenly distribute tasks within the server. This algorithm effectively predicts execution times, selects the appropriate execution location based on delay requirements, and optimizes resource allocation for improved performance and load balancing. The application sets the maximum delay for each task, added to the task's start date to determine the task's deadline. Specific thresholds are defined for maximum CPU utilization (CPUMax) and minimum WAN bandwidth (Minbw). As per [27], CPUMax is set to 80% to ensure CPU utilization stays below this threshold, while Minbw is set to 5 Mbps to guarantee a minimum bandwidth level for efficient task offloading and execution.

4.2. Dynamic Computation Offloading Algorithm for Service Time and Quality of Experience Optimization

To enhance task execution time, address delay requirements, and improve task sensitivity, we propose Algorithm 2, an enhanced version that combines service time minimization with improved Quality of Experience. The first step predicts service time using the service time prediction algorithm. Predicted service times are compared, considering WAN bandwidth, server utilization, and QoE. The offloading policy focuses on determining the optimal execution location and selecting servers offering maximum QoE and shorter service times. The DCOA-STQoE (Dynamic Computation Offloading Algorithm - Service Time and Quality of Experience) significantly improves system performance and computation offloading efficiency in the MEO by considering all constraints and requirements of the multi-layer MEC architecture. By minimizing task failures, providing fast services, and meeting these requirements, the proposed algorithm enhances QoE and improves QoS for mobile users in the MEC environment.
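The five steps and the selection policy described in this section can be sketched in a few lines of Python. This is a simplified illustration and does not reproduce Algorithm 1 or Algorithm 2 from the paper. It assumes the per-site service times have already been predicted, treats the 5 Mbps bandwidth threshold as a lower bound on WAN bandwidth for the cloud, requires at least one VM at or below the 80% CPU threshold, and ranks sites by QoE with service time as the tie-breaker. The names `Site`, `eligible`, and `choose_site`, and all sample values, are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

CPU_MAX = 80.0      # percent; threshold value quoted in the text
MIN_BW_MBPS = 5.0   # Mbps; threshold value quoted in the text


@dataclass
class Site:
    name: str                            # "local", "edge" or "cloud"
    service_time: float                  # Step 1: predicted service time (s)
    vm_loads: List[float]                # CPU utilization (%) of each VM
    wan_bw_mbps: Optional[float] = None  # WAN bandwidth, set for the cloud only


def qoe(service_time: float, max_delay: float, sensitivity: float) -> float:
    """Step 2: QoE from service time, deadline and delay sensitivity."""
    if service_time <= max_delay:
        return 1.0
    if service_time >= 2.0 * max_delay:
        return 0.0
    return (1.0 - (service_time - max_delay) / max_delay) * (1.0 - sensitivity)


def eligible(site: Site) -> bool:
    """Assumed filter: enough WAN bandwidth and one VM not above CPU_MAX."""
    if site.wan_bw_mbps is not None and site.wan_bw_mbps < MIN_BW_MBPS:
        return False
    return min(site.vm_loads) <= CPU_MAX


def choose_site(sites: List[Site], max_delay: float,
                sensitivity: float) -> Optional[Tuple[str, int]]:
    candidates = [s for s in sites if eligible(s)]
    if not candidates:
        return None  # in this sketch the task would count as failed
    # Step 3: highest QoE first, shortest service time as tie-breaker.
    best = min(
        candidates,
        key=lambda s: (-qoe(s.service_time, max_delay, sensitivity), s.service_time),
    )
    # Step 4: least loaded VM at the chosen site.
    vm_index = min(range(len(best.vm_loads)), key=lambda k: best.vm_loads[k])
    # Step 5: the caller allocates the task to the returned (site, VM) pair.
    return best.name, vm_index


sites = [
    Site("local", 2.0, [35.0]),
    Site("edge", 0.9, [70.0, 40.0, 85.0]),
    Site("cloud", 0.7, [20.0, 10.0], wan_bw_mbps=3.0),
]
print(choose_site(sites, max_delay=1.0, sensitivity=0.8))
```

With the sample values, the cloud is skipped because its WAN bandwidth is below the threshold, so the task goes to the edge server's least loaded VM even though the cloud's predicted service time is shorter. Raising the cloud bandwidth above 5 Mbps makes the cloud the preferred site.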

After describing our MEC architecture and computation offloading strategies, we set up an experimental environment to evaluate its performance. This environment was designed to faithfully reflect our architecture and include a variety of scenarios representative of application computation tasks. We assessed performance in terms of service time, quality of experience, virtual machine utilization, and task failure rate, thereby providing a comprehensive evaluation of our approach. This experimentation is crucial for validating our MEC architecture and providing valuable insights for its future deployment in real MEC environments.

5. Performance Evaluation

In this section, we present an overview of the measurement and evaluation methodologies used to assess the performance of our MEC architecture. We start by outlining the baseline approaches employed for comparison with our proposed algorithms, all within the context of our designed architecture.

5.1. Simulation Parameters

The simulation parameters used in our evaluations are summarized in Table 1, which includes the configuration settings for the EdgeCloudSim simulator [27]. These values are carefully chosen to reflect realistic conditions and to enable a comprehensive analysis of the performance of our offloading algorithms in various scenarios.

Table 1. Values of parameters of each device in the architecture

| Parameter | MEC | Cloud | SMD |
|---|---|---|---|
| Total number of devices | 14 | 1 | 200 - 3000 |
| Number of hosts | 1 | 1 | 1 |
| Number of VMs per host | 8 | 4 | 1 |
| Number of Cores per VM | 2 | 4 | 1 |
| VM CPU Speed (GIPS) | 10 | 100 | 4 |

In our evaluation, we consider task types from four different applications: augmented reality, computationally intensive, infotainment, and healthcare applications. The tasks generated by SMDs are categorized into these predefined application types. For example, consider a user wearing smart glasses who offloads captured images to a computational device that provides facial recognition services. Here, the Augmented/Virtual Reality application is computationally intensive, as it involves complex image processing tasks. EdgeCloudSim allows us to model and analyze the behavior of these application types and their tasks. By simulating a realistic mixture of task types and application characteristics, we can effectively evaluate the performance of our offloading algorithms in various scenarios.

As depicted in Table 2, the applications we consider exhibit distinct characteristics in terms of task size, delay sensitivity, and deadline. Utilization percentage represents the proportion of SMDs running a specific application. Delay sensitivity reflects the tolerance of tasks toward high delays. Upload/download data denotes the input/output data values of tasks transmitted to/received from the server. VM utilization percentage indicates the portion of VM resources allocated to application tasks running on each device.

Table 2. Characteristics of applications used in the simulation

| Characteristics | Augmented Reality | Health Application | Infotainment Application | Heavy Computation Application |
|---|---|---|---|---|
| Avg. Upload Data (KB) | 1500 | 200 | 250 | 2500 |
| Avg. Download Data (KB) | 250 | 1250 | 1000 | 200 |
| Avg. Task Length (GI) | 12 | 6 | 15 | 30 |
| VM Util. on Cloud (%) | 0.8 | 0.4 | 1 | 2 |
| VM Util. on Edge (%) | 8 | 4 | 10 | 20 |
| VM Util. on SMD (%) | 20 | 10 | 25 | 50 |
| Delay Sensitivity (%) | 0.9 | 0.7 | 0.3 | 0.1 |

The careful selection of simulation parameters in our experimental methodology enhances the reliability of our evaluations by faithfully reproducing real-world conditions in MEC environments. Indeed, the inclusion of a high number of devices takes into account the scalable and densely populated nature of current mobile networks. Furthermore, specifying the number of hosts and virtual machines allows us to model the available computing capacity on MEC and Cloud servers, thus reflecting the actual structure of computing infrastructures. Similarly, varying the number of cores per virtual machine enables us to consider the diversity of hardware configurations in MEC servers. Specifying the CPU speed of virtual machines captures variations in computational performance in a real MEC environment. Lastly, considering different types of applications and assigning specific characteristics to tasks allows us to simulate the variety of workloads encountered in MEC environments.

In conclusion, these choices of simulation parameters ensure that our evaluations are based on realistic scenarios, thereby strengthening the credibility of our approach and leading to more reliable conclusions regarding the effectiveness of our offloading algorithms.
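As a rough illustration of how the values in Tables 1 and 2 interact, the sketch below divides the average task length (GI) by the VM CPU speed (GIPS) to obtain an idealized processing time per layer. It assumes an idle, dedicated VM and ignores the number of cores, queueing, and transmission delays, so the figures are lower bounds for intuition only and not simulation results.

```python
# VM CPU speed in GIPS per layer (Table 1) and average task length in GI (Table 2).
VM_SPEED_GIPS = {"MEC": 10, "Cloud": 100, "SMD": 4}
TASK_LENGTH_GI = {
    "Augmented Reality": 12,
    "Health Application": 6,
    "Infotainment Application": 15,
    "Heavy Computation Application": 30,
}


def processing_time(app: str, layer: str) -> float:
    """Idealized processing time in seconds on an idle VM: length / speed."""
    return TASK_LENGTH_GI[app] / VM_SPEED_GIPS[layer]


if __name__ == "__main__":
    for app in TASK_LENGTH_GI:
        row = ", ".join(f"{layer}: {processing_time(app, layer):.2f} s"
                        for layer in VM_SPEED_GIPS)
        print(f"{app}: {row}")
```

Under these assumptions, an average augmented reality task needs about 1.2 s on a MEC VM, 0.12 s on a Cloud VM, and 3 s on the device itself, which shows why network delay, not raw compute, tends to decide whether the Cloud is the better target.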

5.2. Comparison Benchmark

To comprehensively evaluate the performance of DCOA-STQoE, we considered a diverse set of approaches encompassing both specific and generic algorithms. Among the specific approaches, DCOA-ST, introduced in this paper, shares with DCOA-STQoE the goal of minimizing service time. However, it does not incorporate task sensitivity and delay requirements into its decision-making process, so a direct comparison highlights the performance gains achievable by including these factors. LCDA (Latency Classification-based Deadline-Aware), proposed in [28], shares DCOA-STQoE's emphasis on task latencies but employs a distinct strategy based on latency classification. Among the generic approaches, the Hybrid algorithm, proposed in [23], takes various system parameters into account but lacks explicit consideration of task sensitivity and delay requirements; this comparison again underscores the potential advantages of integrating these factors.

The Random approach, a fundamental baseline, assigns tasks randomly without any optimization criterion and serves as a benchmark for assessing the effectiveness of more sophisticated algorithms such as DCOA-STQoE. Together, these comparisons provide insight into the strengths and weaknesses of DCOA-STQoE. By contrasting its performance with specific and generic algorithms, we gain a nuanced understanding of its adaptability and efficiency across various computation offloading scenarios.

Having detailed our methodology and the simulation parameters employed, we proceed to the analysis of results in the following section. These findings play a crucial role in addressing our research questions and in understanding how the different variables affect the performance of MEC systems.

5.3. Simulation Results

The simulation results are analyzed to evaluate the performance of the offloading algorithms, focusing on key metrics such as service time, task failure rate, QoE, and virtual machine utilization. The experiments aim to provide insights into the algorithms' effectiveness under different workloads and system conditions.

A. Average Service Time Comparison

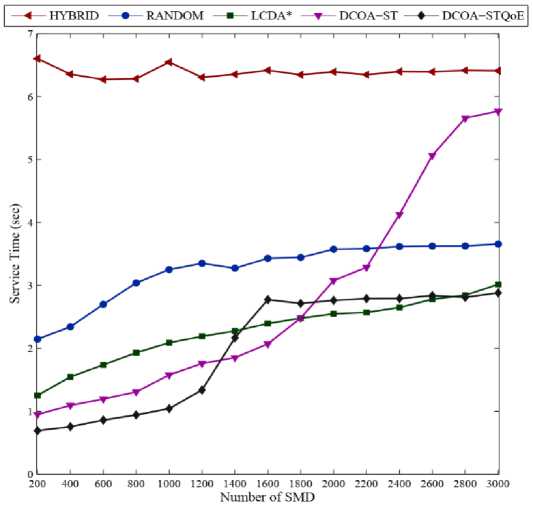

The evaluation concentrates on the average service time metric, a crucial factor influencing the quality of service. Minimizing service time is essential for enhancing user experience. The assessment measures the average service time for each algorithm, considering both the transmission time and the processing time components. The analysis is conducted under varying workloads, examining the algorithms' behavior as the system load increases. Simulations cover SMD counts from 200 to 3000 devices in increments of 200, providing a comprehensive view of service time performance under different scenarios.
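The metric and the load sweep described above can be expressed compactly. The sketch below is illustrative only: representing each task as a `(transmission, processing)` pair and averaging over completed tasks are assumptions, and the simulator's own bookkeeping may differ.

```python
from statistics import mean
from typing import Iterable, Tuple

# Load points used in the evaluation: 200 to 3000 SMDs in steps of 200.
SMD_COUNTS = list(range(200, 3001, 200))


def average_service_time(tasks: Iterable[Tuple[float, float]]) -> float:
    """Mean of (transmission + processing) over completed tasks, in seconds."""
    return mean(tx + proc for tx, proc in tasks)
```

The sweep contains 15 load points, so each curve in the service time comparison is built from 15 such averages per algorithm.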

Fig. 3 depicts the service time trends of the algorithms as the number of SMDs increases. The Hybrid algorithm maintained a constant service time of around 6.5 seconds, while DCOA-ST and DCOA-STQoE showed lower service times under light system loads, with DCOA-ST outperforming most algorithms from 2300 SMDs onward. DCOA-STQoE kept a consistent service time even as the number of SMDs increased. The service times of LCDA and RANDOM increased slightly with growing SMD counts, although RANDOM stabilized at 3000 SMDs. Overall, DCOA-STQoE achieved the lowest and most stable service times, making it a promising choice for computation offloading in scenarios with varying system loads and numerous SMDs.

Fig.3. Average service time of all approaches, as a function of number of SMD

B. Average Quality of Experience Comparison

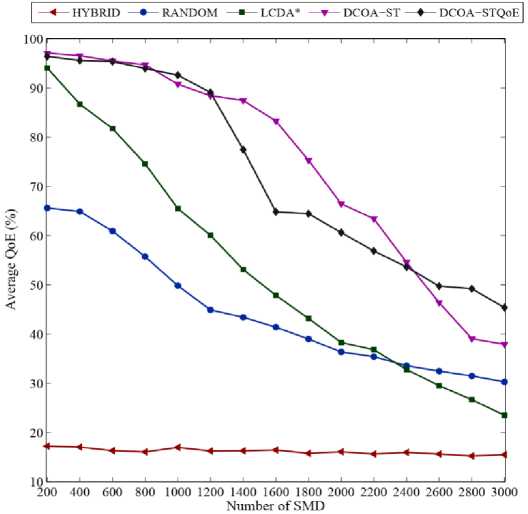

In this experiment, we assessed the algorithms using the Quality of Experience metric derived from Equation (7), which incorporates service time, delay sensitivity, and the maximum delay requirement. QoE diminishes when a task exceeds its maximum delay, decreasing gradually and reaching zero at twice the specified delay. Fig. 4 illustrates how QoE changes with the SMD count. DCOA-ST and DCOA-STQoE achieved high QoE (97.09% and 96.80%, respectively) for small SMD counts (below 400), reflecting their focus on minimizing service time and, for DCOA-STQoE, on maximizing QoE at the same time. LCDA initially reached around 94% QoE for 200 SMDs but degraded rapidly with increasing SMDs. RANDOM and HYBRID, which prioritize service time over QoE, had lower QoE levels: about 65% for RANDOM and around 17% for HYBRID, even with a lightly loaded system.
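The behaviour of the QoE metric can be illustrated with a small function. Equation (7) is not reproduced in this section, so the piecewise-linear form below, and in particular the scaling by `(1 - sensitivity)` once the delay is exceeded, is an assumption that merely matches the description above: full QoE within the maximum delay, a gradual decrease afterwards, and zero at twice the maximum delay.

```python
def qoe(service_time: float, max_delay: float, sensitivity: float) -> float:
    """QoE in [0, 1] for one task (assumed piecewise-linear form)."""
    if service_time <= max_delay:
        return 1.0  # delay requirement met: full QoE
    if service_time >= 2 * max_delay:
        return 0.0  # twice the allowed delay or more: no QoE
    overshoot = (service_time - max_delay) / max_delay  # strictly in (0, 1)
    # Assumed: more delay-sensitive tasks lose QoE faster once they are late.
    return (1.0 - overshoot) * (1.0 - sensitivity)
```

With a maximum delay of 2 s and a sensitivity of 0.5, a task served in 3 s would score 0.25 under this assumed form, while the same task served in 4 s or more would score zero.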

In summary, DCOA-ST and DCOA-STQoE outperform other methods in QoE. LCDA experiences rapid QoE degradation, while HYBRID demonstrates the poorest QoE performance, emphasizing task execution success over minimizing service time.

-

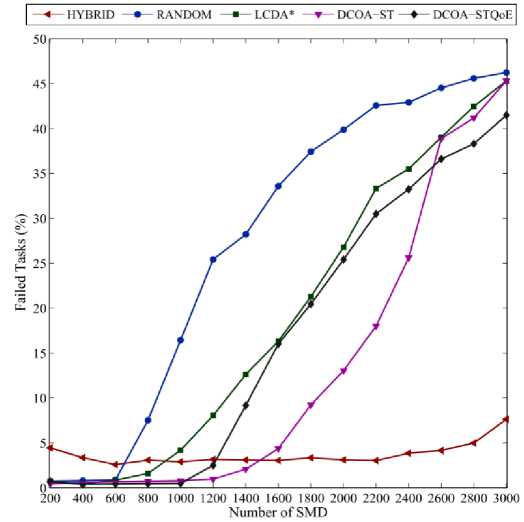

C. Task Failure Rate Comparison

The task failure rate is a crucial metric indicating the percentage of unsuccessful task executions, often resulting from high virtual machine utilization or inadequate network bandwidth. System overload, triggered by an increasing number of SMDs, can lead to processing challenges and task failures. Failed tasks can be categorized as either resource-related or network-dropped. Fig. 5 depicts the task failure rate's correlation with the number of SMDs for the evaluated algorithms. With a less loaded system (SMDs below 400), all algorithms perform comparably in terms of failed tasks. However, beyond this threshold, RANDOM and LCDA algorithms experience a rapid increase in failure rates, while DCOA-ST and DCOA-STQoE show significant increases only from 1200 SMDs. The HYBRID algorithm consistently maintains a low failed task rate.

Fig.4. Average quality of experience of all approaches, as a function of the number of SMD

Fig.5. Average failed task rate of all approaches, as a function of the number of SMD
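The task failure rate and its two categories (resource-related and network-dropped) can be computed from per-task outcomes as sketched below. The outcome labels and the returned dictionary layout are hypothetical and chosen only for this example.

```python
from collections import Counter
from typing import Dict, Iterable

# Hypothetical outcome labels for one simulated task.
OK, VM_CAPACITY, NETWORK = "ok", "vm_capacity", "network"


def failure_rates(outcomes: Iterable[str]) -> Dict[str, float]:
    """Return overall and per-cause failure rates as percentages."""
    counts = Counter(outcomes)
    total = sum(counts.values())
    if total == 0:
        return {"total": 0.0, VM_CAPACITY: 0.0, NETWORK: 0.0}
    failed = counts[VM_CAPACITY] + counts[NETWORK]
    return {
        "total": 100.0 * failed / total,
        VM_CAPACITY: 100.0 * counts[VM_CAPACITY] / total,
        NETWORK: 100.0 * counts[NETWORK] / total,
    }
```

Separating the two causes makes it possible to tell whether a rising failure rate comes from saturated VMs or from insufficient bandwidth.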

-

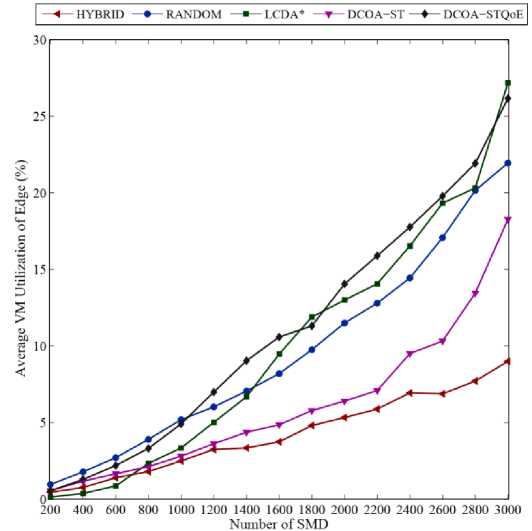

D. VM Utilization Rate Comparison

The figures below offer insights into VM utilization across different layers of the architecture, showcasing how the examined algorithms allocate and utilize VM resources under varying system loads. In terms of VM utilization in MEC servers (Fig. 6), LCDA starts with the lowest rate at 0.1% for 200 SMDs, while Hybrid, DCOA-STQoE, and RANDOM begin with higher rates ranging from 0.3% to 0.9%. As the system load surpasses 1400 SMDs, Hybrid maintains the lowest VM utilization at 9% for 3000 SMDs. DCOA-ST, RANDOM, DCOA-STQoE, and LCDA exhibit higher VM utilization rates in MEC servers, reaching 18.27%, 21.94%, 26.16%, and 27.18%, respectively, for 3000 SMDs.

Fig.6. Average VM utilization in MEC server of all approaches, as a function of the number of SMD

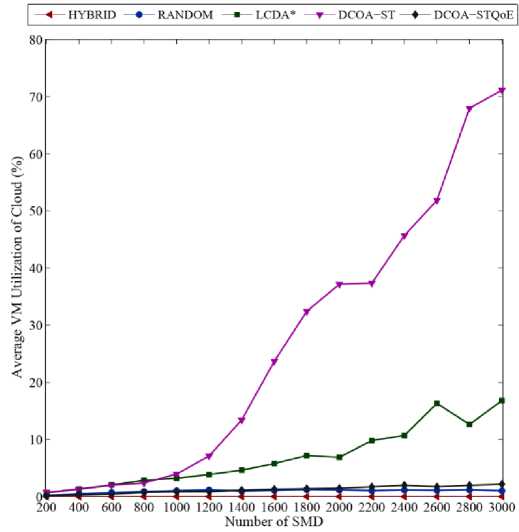

Fig.7. Average VM utilization in Cloud of all approaches, as a function of the number of SMD

Regarding VM utilization in cloud servers (Fig. 7), DCOA-ST achieves the highest rate, peaking at 71% for 3000 SMDs. LCDA demonstrates an average cloud VM utilization of 16%, while Hybrid exhibits minimal usage. DCOA-STQoE and RANDOM have VM utilization rates of approximately 2.17% and 1.09%, respectively, for 3000 SMDs. The utilization of VMs in cloud servers exhibits a slight increase compared to MEC servers.

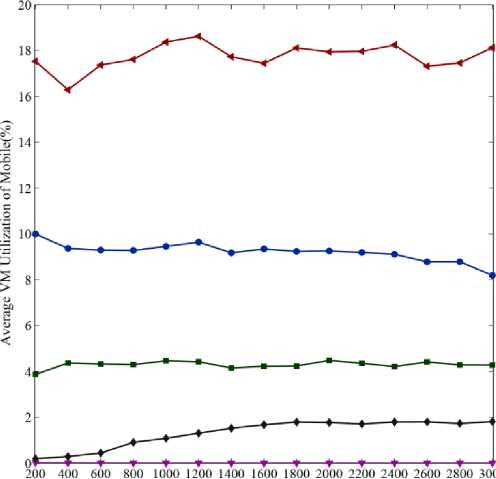

Concerning the utilization of VMs at the mobile device level (Fig. 8), the HYBRID, RANDOM, LCDA, and DCOA-STQoE algorithms consider local task execution within the SMDs. Among these algorithms, HYBRID and RANDOM exhibit higher VM utilization rates in SMDs, reaching 18.12% and 8.18%, respectively, for 3000 SMDs. LCDA maintains a utilization rate of 4.2%. Despite using fewer VMs in SMDs, LCDA does not prioritize the maximization of QoE. Conversely, DCOA-STQoE enables local execution of computational tasks within the SMDs as the number of SMDs increases and when edge and cloud resources cannot meet the constraints of maximizing QoE and minimizing service time. DCOA-STQoE efficiently utilizes the resources of SMDs to perform brief computations of application tasks.

Fig.8. Average VM utilization in mobile of all approaches, as a function of the number of SMD

5.4. Discussion

By examining the results, we note significant trends that highlight the crucial role of intelligent offloading algorithms in improving MEC system performance. These algorithms, which take into account workload characteristics, application tasks, server computing capabilities, and network constraints, are essential for optimizing operations in these environments. For example, we observe that approaches favoring task transfer to MEC resources tend to offer shorter service times. This results in increased utilization of VMs in the MEC context compared to other methods, such as LCDA and DCOA-STQoE. Additionally, we find that MEC resources play a critical role in reducing latency and improving QoS and QoE. These conclusions underscore the importance of offloading decision-making and load orchestration that consider the constraints and specificities of each MEC environment.

6. Conclusion and Future Work

In this study, we evaluated several computation offloading algorithms in MEC environments, primarily focusing on average service time and QoE as key metrics. Our findings highlight DCOA-STQoE as maintaining the most consistent and lowest service times, particularly in scenarios with fluctuating system loads and numerous mobile devices. Furthermore, our assessment of quality of experience and task failure rates demonstrated that both DCOA-ST and DCOA-STQoE deliver high QoE levels and minimize task failures even amidst varying system loads. The examination of virtual machine utilization across different system load scenarios further underscored the advantages of DCOA-STQoE. Overall, our study underscores the promising potential of DCOA-STQoE for computation offloading in MEC environments, given its exceptional performance and adaptability. Notably, DCOA-STQoE consistently minimizes average service time, crucial for ensuring stability even under system overload. Its simultaneous optimization of service time and user experience quality is paramount for meeting the demands of mobile applications, guaranteeing a superior user experience. The algorithm's effective management of temporal constraints, despite increasing task failure rates, speaks to its robustness. Additionally, its adaptive approach in optimizing resource utilization from edge to cloud highlights its relevance in dynamic MEC settings.

Considering the broader context of MEC, scalability challenges and the impact of network dynamics on algorithm performance must be carefully addressed during algorithm development. Ensuring scalability is vital for managing the growing number of mobile devices and computational demands effectively, while algorithms must also rapidly adapt to network fluctuations to maintain optimal performance in dynamic and large-scale MEC environments. Looking ahead, the integration of computation offloading algorithms into industrial applications necessitates collaboration with various sectors such as automotive, healthcare, logistics, and telecommunications. These partnerships would provide invaluable real-world testing environments, refining algorithms to meet diverse industry needs and fostering the development of tailored, efficient solutions aligned with market demands.

Multi-access Edge Computing improves service quality and user experience for smart mobile devices by leveraging nearby data centers to handle device computations, particularly benefiting compute-intensive and delay-sensitive tasks. Our research delves into computation offloading within the MEC framework, examining its impact on device performance and exploring various strategies. Emphasizing the pivotal role of the Edge Orchestrator in resource management to enhance overall system performance, our study demonstrates that smart mobile devices empowered with offloading capabilities contribute to improved user experience and reduced task failures. Dynamic computation offloading algorithms, designed to adapt to the dynamic nature of MEC, consider task characteristics and resource availability. The proposed algorithms offer flexibility, allowing seamless integration of additional conditions and criteria to meet specific needs. Simulation results underscore the effectiveness of utilizing the Edge Orchestrator, showcasing improvements in user experience, reduced service time, and lower task failure rates. Looking ahead, our future research aims to develop an autonomous system for optimal task execution location using reinforcement learning and machine learning in complex MEC environments. The primary goal is to enhance real-world system performance and facilitate the adoption of these advancements by other mobile edge computing systems.

References

- M. Patel, B. Naughton, C. Chan, N. Sprecher, S. Abeta, A. Neal et al., “Mobile-edge computing introductory technical white paper,” White paper, mobile-edge computing (MEC) industry initiative, vol. 29, pp. 854–864, 2014.

- L. Chettri and R. Bera, “A Comprehensive Survey on Internet of Things (IoT) Toward 5G Wireless Systems,” IEEE Internet of Things Journal, vol. 7, no. 1, pp. 16–32, Jan. 2020.

- N. Abbas, Y. Zhang, A. Taherkordi, and T. Skeie, “Mobile Edge Computing: A Survey,” IEEE Internet of Things Journal, vol. 5, no. 1, pp. 450–465, Feb. 2018.

- M. Liyanage, P. Porambage, A. Y. Ding, and A. Kalla, “Driving forces for Multi-Access Edge Computing (MEC) IoT integration in 5G,” ICT Express, vol. 7, no. 2, pp. 127–137, Jun. 2021.

- Z. Chen, H. Zheng, J. Zhang, X. Zheng, and C. Rong, “Joint Computation Offloading and Deployment Optimization in Multi-UAV-enabled MEC Systems,” Peer-to-Peer Networking and Applications, vol. 15, no. 1, pp. 194–205, Jan. 2022.

- S. Hu and G. Li, “Dynamic Request Scheduling Optimization in Mobile Edge Computing for IoT Applications,” IEEE Internet of Things Journal, vol. 7, no. 2, pp. 1426–1437, Feb. 2020.

- K. Lee and I. Shin, “User Mobility Model Based Computation Offloading Decision for Mobile Cloud,” Journal of Computing Science and Engineering, vol. 9, no. 3, pp. 155–162, Sep. 2015.

- L. Long, Z. Liu, Y. Zhou, L. Liu, J. Shi, and Q. Sun, “Delay Optimized Computation Offloading and Resource Allocation for Mobile Edge Computing,” in 2019 IEEE 90th Vehicular Technology Conference (VTC2019-Fall), Sep. 2019, pp. 1–5.

- U. Saleem, Y. Liu, S. Jangsher, Y. Li, and T. Jiang, “Mobility-Aware Joint Task Scheduling and Resource Allocation for Cooperative Mobile Edge Computing,” IEEE Transactions on Wireless Communications, vol. 20, no. 1, pp. 360–374, Jan. 2021.

- P. Mach and Z. Becvar, “Mobile Edge Computing: A Survey on Architecture and Computation Offloading,” IEEE Communications Surveys & Tutorials, vol. 19, no. 3, pp. 1628–1656, 2017.

- Y. Mao, C. You, J. Zhang, K. Huang, and K. B. Letaief, “A Survey on Mobile Edge Computing: The Communication Perspective,” IEEE Communications Surveys & Tutorials, vol. 19, no. 4, pp. 2322–2358, 2017.

- S. Jošilo and G. Dán, “Computation Offloading Scheduling for Periodic Tasks in Mobile Edge Computing,” IEEE/ACM Transactions on Networking, vol. 28, no. 2, pp. 667–680, Apr. 2020.

- T. X. Tran and D. Pompili, “Joint Task Offloading and Resource Allocation for Multi-Server Mobile-Edge Computing Networks,” IEEE Transactions on Vehicular Technology, vol. 68, no. 1, pp. 856–868, Jan. 2019.

- K. Zhang, Y. Mao, S. Leng, S. Maharjan, and Y. Zhang, “Optimal Delay Constrained Offloading for Vehicular Edge Computing Networks,” in 2017 IEEE International Conference on Communications (ICC), May 2017, pp. 1–6.

- Q. You and B. Tang, “Efficient Task Offloading using Particle Swarm Optimization Algorithm in Edge Computing for Industrial Internet of Things,” Journal of Cloud Computing, vol. 10, no. 1, p. 41, Dec. 2021.

- A. Naouri, H. Wu, N. A. Nouri, S. Dhelim, and H. Ning, “A Novel Framework for Mobile-Edge Computing by Optimizing Task Offloading,” IEEE Internet of Things Journal, vol. 8, no. 16, pp. 13065–13076, Aug. 2021.

- Y. Chen, N. Zhang, Y. Zhang, X. Chen, W. Wu, and X. Shen, “Energy Efficient Dynamic Offloading in Mobile Edge Computing for Internet of Things,” IEEE Transactions on Cloud Computing, vol. 9, no. 3, pp. 1050–1060, Jul. 2021.

- Z. Ning, P. Dong, X. Kong, and F. Xia, “A Cooperative Partial Computation Offloading Scheme for Mobile Edge Computing Enabled Internet of Things,” IEEE Internet of Things Journal, vol. 6, no. 3, pp. 4804–4814, Jun. 2019.

- European Telecommunications Standards Institute (ETSI), “Mobile-edge computing (mec); framework and reference architecture,” European Telecommunications Standards Institute, ETSI GS MEC 003, 2019.

- A. Hegyi, H. Flinck, I. Ketyko, P. Kuure, C. Nemes, and L. Pinter, “Application Orchestration in Mobile Edge Cloud: Placing of IoT Applications to the Edge,” in 2016 IEEE 1st International Workshops on Foundations and Applications of Self* Systems (FAS*W), Sep. 2016, pp. 230–235.

- L. F. Bittencourt, J. Diaz-Montes, R. Buyya, O. F. Rana, and M. Parashar, “Mobility-Aware Application Scheduling in Fog Computing,” IEEE Cloud Computing, vol. 4, no. 2, pp. 26–35, Mar. 2017.

- J. Wang, J. Pan, F. Esposito, P. Calyam, Z. Yang, and P. Mohapatra, “Edge Cloud Offloading Algorithms: Issues, Methods, and Perspectives,” ACM Computing Surveys, vol. 52, no. 1, pp. 2:1–2:23, Feb. 2019.

- V. Nguyen, T. T. Khanh, T. D. T. Nguyen, C. S. Hong, and E.-N. Huh, “Flexible computation offloading in a fuzzy-based mobile edge orchestrator for IoT applications,” Journal of Cloud Computing, vol. 9, no. 1, p. 66, Nov. 2020.

- Y. Zhang, B. Tang, J. Luo, and J. Zhang, “Deadline-Aware Dynamic Task Scheduling in Edge–Cloud Collaborative Computing,” Electronics, vol. 11, no. 15, p. 2464, Jan. 2022.

- H. Gu, M. Zhang, W. Li, and Y. Pan, “Task Offloading and Resource Allocation based on dl-ga in Mobile Edge Computing,” Turkish Journal of Electrical Engineering and Computer Sciences, vol. 31, no. 3, pp. 498–515, 2023.

- M. Zhao and K. Zhou, “Selective Offloading by Exploiting arima-bp for Energy Optimization in Mobile Edge Computing Networks,” Algorithms, vol. 12, no. 2, p. 48, 2019.

- C. Sonmez, A. Ozgovde, and C. Ersoy, “EdgeCloudSim: An Environment for Performance Evaluation of Edge Computing Systems,” Transactions on Emerging Telecommunications Technologies, vol. 29, no. 11, p. e3493, 2018.

- H. Choi, H. Yu, and E. Lee, “Latency-Classification-Based Deadline-Aware Task Offloading Algorithm in Mobile Edge Computing Environments,” Applied Sciences, vol. 9, no. 21, p. 4696, Jan. 2019.