Quantum software engineering and industry 4.0 as the platform for intelligent control of robotic sociotechnical systems in industry 5.0 / 6.0

Authors: Kapkov R.Yu., Tyatyushkina O.Yu., Ulyanov S.V.

Journal: online scientific publication «System Analysis in Science and Education» @journal-sanse

Section: Modern Problems of Informatics and Control

Issue: 3, 2024

Free access

The fundamentals of the construction and development of the fifth and sixth industrial revolutions (I5.0 / I6.0) are considered as a development of the results of the Industry 4.0 (I4.0) project, applying models of intelligent cognitive robotics, quantum software engineering, quantum intelligent control, and user-friendly interfaces such as "brain-computer" and "human-robot". The paper discusses how to construct physical laws of intelligent control of robotic sociotechnical systems based on the informational and thermodynamic laws that govern the distribution of stability, controllability and robustness criteria. The extracted quantum information makes it possible to form an additional "social" thermodynamic control force hidden in the information exchange between the agents of a multicomponent sociotechnical system.

Quantum software engineering, industry 5.0 / 6.0, robotic sociotechnical systems, quantum intelligent regulators

Short address: https://sciup.org/14131646

IDR: 14131646 | UDC: 512.6

This article is open access and is distributed under the Creative Commons Attribution 4.0 International license (CC BY 4.0).

Technological innovations are transforming traditional products as well as business procedures, and the digital revolution is converting technology into digital format. The "Industry 4.0" project unites physical assets with advanced technologies such as artificial intelligence (AI), the Internet of Things (IoT), 3D printing, robotics, quantum cloud computing, etc. Organizations that have adopted Industry 4.0 are flexible and prepared for data-driven decisions [1-3]. The term Industry 4.0 collectively refers to a wide range of current concepts whose clear classification by discipline, and precise distinction from one another, is not possible in some cases. The fundamental concepts are: Smart Factory, Cyber-physical Systems (CPS), Self-organization, New systems in distribution and procurement, New systems in the development of products and services, Adaptation to human needs, and Corporate Social Responsibility.

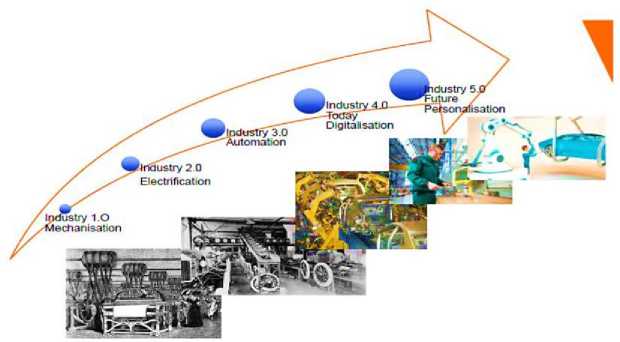

The heart of the Industry 4.0 framework is the CPS, which integrates hardware and software in a mechanical or electrical system designed for a specific purpose. Industry 5.0 is the next stage, building on the previous generation of technologies designed for efficient and intelligent machines (Fig. 1).

Fig. 1. The evolution of industrial digital revolutions [4-6]

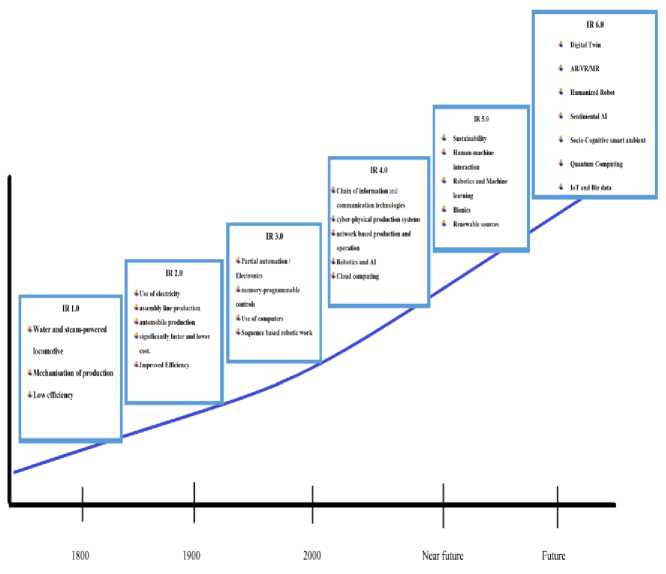

The “Industry 5.0” concept is an evolution of the Industry 4.0 concept, which uses emerging technologies and applications to harmonize the virtual and physical world, placing the human value at the center of the problem. In this way, we will have a society (Society 5.0) with technologies and infrastructures focused on the human being and on solving social and environmental problems, that is, a society based on sustainability, human value, and resilience (see Fig. 2).

Fig. 2. The paradigm of the new era Society 5.0 [4]

However, although technology continues to bring changes to industry, society, and education, this alone is not enough to promote the expected improvements for humanity. In this scenario of rapid evolution, transformation, and technological change, education needs new learning approaches and skills. Thus, Education 5.0 emerges, which must develop further skills and competences in students and trainees in an integral and human way, focusing on collaboration with peers and the community and on improving people's lives and social well-being.

Immersive and interactive educational experiences are the future of teaching and learning, combining the best of Education 5.0 and Industry 4.0 learning technologies to engage today's generation of learners, whose learning style is unique to the digital era. Education 5.0 highlights skills such as communication, leadership and resilience, curiosity, understanding, and critical and creative thinking. Industry 5.0 presents significant challenges and a demand for new contemporary approaches to learning and education, as well as a need to transform schools and educational institutions. In the university model required by Industry 4.0 or Society 5.0, concepts such as joint education, cooperative education, integrated learning, sandwich learning, internships, experience-based learning, and work-integrated learning are at the forefront.

In the era of Education 5.0, the associated teaching and learning theories are aligned with post-constructivism. The 5.0 era represents a quantum leap forward from the 4.0 era, and there is a need to strengthen cooperation and to conduct skills training at national and international levels in order to increase the number of graduates whose profiles fit these needs (see Fig. 2).

It should be noted that the previous industrial revolutions depended on large-scale manufacturing, mechanization, customized client requests, and large production runs, in which machines work alongside people and are empowered with AI algorithms to make products and provide services that meet clients' needs. 3D printing technology, however, is opening a new level of opportunity in the new revolution by adding a wider range of materials, biomaterials, and synthetic compounds, for example for lithography-based controlled-release medication. These 3D-printed and AI-enabled innovations used in medicine could reduce the chance of infection through human contact during service delivery. In a pandemic, when new infections threaten humanity every day, such robotics-based clinical systems could protect people's well-being with less risk.

Robotized Applications in Industry 4.0 and Industry 5.0

The Industry 4.0 vision targets a holistic and transformative incorporation of information and communication to improve responsiveness and production efficiency, in addition to self-controlled mass personalization of products and services [1]. Decentralized and reconfigurable robotized automation [2], along with intelligent production and servicing [3], are key pillars of this smart automation. At the heart of this level of automation are often interconnected and interoperating robots. They are endowed with on-board Artificial Intelligence/Machine Learning (AI/ML) capabilities to synchronize and orchestrate themselves, predict next actions, and anticipate events while making skillful, robust, and accelerated decisions. Insights gained from measured data help enterprises tailor and scale their production and servicing capabilities to varying demands and elevate customer and partner experiences. Toward this end, the Industry 5.0 vision focuses on the individual, sustainable, and resilient empowerment of the workforce to streamline automation efficiency. Workers are augmented with natural and uplifting capabilities that harness personal skills in human-automation collaboration [3]. They are offered decent and engaging work conditions, including AI/ML-driven inference and generation of competitive facts [4], that build not only upon critical perception but also upon reasoning on top of efficient and dynamic machine-to-machine [3,4] and human-to-machine communication. A comfortable, responsive, differentiated, and fulfilling accommodation of customer preferences, along with an inclusive proximity [5] that captures their subtle needs and offers them an enhanced quality of value, is expected [6].

The integration of the cognitive skills of humans and robots, which enhances not only situational awareness but also adaptation through multi-perception and reasoning, improves the accommodation of uncertainties and thereby the effectiveness of robotized applications that follow the Industry 4.0 vision [6]. Take, for instance, a carpenter using cobots, i.e., collaborative robots, to industrially construct personalized furniture. The carpenter can even interpret outputs of overlooked modalities beyond audio and video signals: forces and torques, for example, can act as a modal means of communication between the carpenter and the cobot. The carpenter then correlates these modalities with a balanced understanding of recommendations provided by big data analysis (e.g., in-house orders, customer relationship management, states of processes and assets, partner demands and offers, resource trading, trends, political news, etc.) to make non-nominal anticipatory decisions. Unlike in standard automation, the carpenter in the loop can develop an ergonomics-related empathy for customers involved in the individualized robotics-driven design of a chair, whereas the executive department of the factory can have ethical and moral considerations for customer preferences [7]. This human-centered and inclusive servicing occurs in parallel with the carpenter's kinesthetic guidance of the cobot, which moves large and heavy payloads naturally and flexibly to desired poses. The goal is to responsively reconfigure the work cell and adapt to high-mix, low-volume demands with negligible metabolic costs and zero programming effort [8]. In this case, the compliant behavior of the cobot contributes to an engaging work experience for the carpenter, as pursued by the Industry 5.0 vision. The resulting physical human-robot collaboration (pHRC) fosters a resilient industry, propelled by the cobot's capability to fill in for absent co-workers of the carpenter in collaborative tasks [9].
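Kinesthetic guidance with a compliant cobot is commonly realized with admittance control, in which the force measured at the robot is mapped to a commanded motion through a virtual mass-damper model. The text does not specify the cobot's controller, so the following is only a minimal one-axis sketch under that assumption; the class name `Admittance1D` and all parameter values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Admittance1D:
    """Virtual mass-damper M * dv/dt + D * v = F_ext along one axis (hypothetical model)."""

    mass: float            # virtual mass M [kg]
    damping: float         # virtual damping D [N*s/m]
    velocity: float = 0.0  # commanded velocity [m/s]

    def step(self, force: float, dt: float) -> float:
        """Advance the model by one explicit-Euler step and return the velocity command."""
        accel = (force - self.damping * self.velocity) / self.mass
        self.velocity += accel * dt
        return self.velocity


# Usage: the operator pushes with a constant 10 N for 2 s in a 1 kHz control loop.
ctrl = Admittance1D(mass=5.0, damping=20.0)
for _ in range(2000):
    v_cmd = ctrl.step(force=10.0, dt=0.001)
print(f"{v_cmd:.3f} m/s")  # settles near F / D = 0.5 m/s
```

Lower virtual mass and damping make a heavy payload feel lighter to the operator; the ratio M / D sets how quickly the commanded motion settles.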

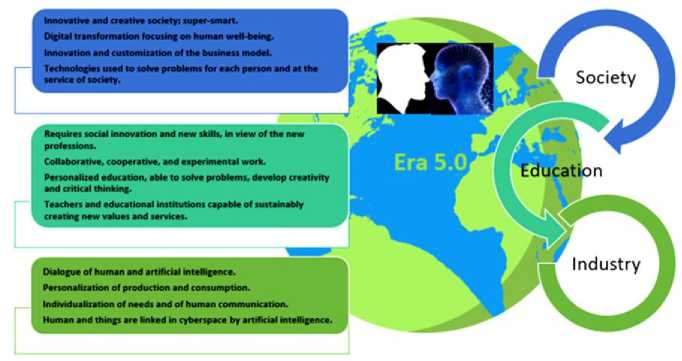

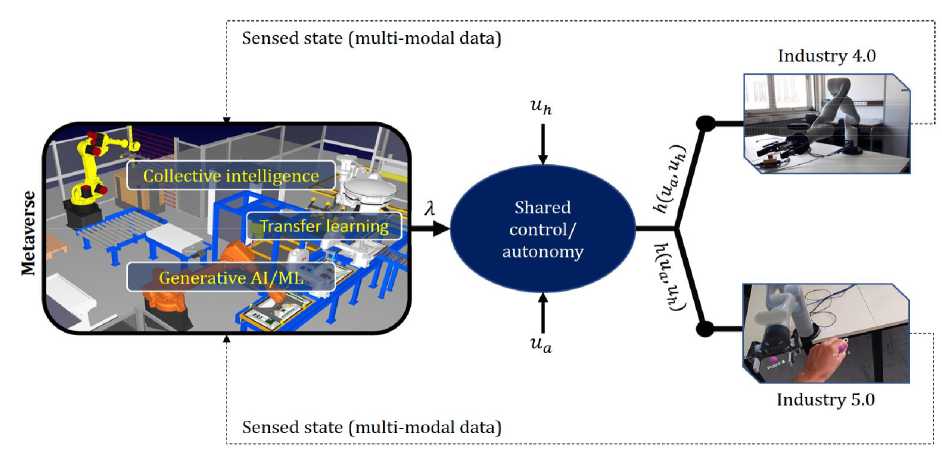

However, connecting robotized applications in Industry 4.0 and Industry 5.0 has received little attention thus far. The Metaverse can be viewed as an ecosystem of interconnected virtual collaboration spaces (vCS) in which this connection can be developed. vCS are fed with data and knowledge provided by two functionalities: generative AI/ML and collective intelligence (see Fig. 3).

Fig. 3. A three-layer abstraction of the Metaverse [10] (figure labels: enhanced communication; qualitative and accelerated decision-making processes; space-air-underwater-terrestrial integrated networks; pervasive and itinerant access to the Metaverse; AI/ML-driven network slicing; global participation of customers; elevated quality of experience (QoE))

Furthermore, vCS are populated with digital twins (DTs) of mirrored physical assets and of the applications in which these assets evolve, along with embodied avatars that project humans onto vCS. One advantage of vCS is the flexibility, velocity, and density with which synthetic data are globally generated to simulate and experiment with DTs and gain insights. Emerging properties are transformed into structured information. The representation of structured facts as knowledge graphs can be learned to infer new knowledge and inform decision making, as shown in Fig. 3. Outcomes of decisions are shared in the Metaverse or used to meet goals in physical robotized applications (see Fig. 4). For that purpose, control policies learned in the Metaverse from multiple, globally aggregated data modalities are transferred to address challenges in reality. Transfer learning is the third functionality that helps apply solutions developed in the Metaverse to challenges in practice. The three functionalities (i.e., generative AI/ML, collective intelligence, and transfer learning) help consolidate a bidirectional transition between robotized applications that follow Industry 4.0 and Industry 5.0. One way to accomplish this consolidation is to arbitrate the level of shared control or autonomy of the robot (see Fig. 4) [10].
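Inferring new knowledge from structured facts can be illustrated, in its simplest form, by forward chaining over subject-predicate-object triples. This sketch is not the learned knowledge-graph method referred to above: it applies one hand-written transitivity rule, and the entity and relation names (`gripper_dt`, `part_of`, and so on) are hypothetical.

```python
from typing import Set, Tuple

Triple = Tuple[str, str, str]  # (subject, predicate, object)


def infer_transitive(facts: Set[Triple], relation: str) -> Set[Triple]:
    """Apply the rule (a r b) and (b r c) => (a r c) until no new facts appear."""
    closure = set(facts)
    while True:
        pairs = [(s, o) for (s, p, o) in closure if p == relation]
        new = {(a, relation, c) for (a, b) in pairs for (b2, c) in pairs if b == b2}
        if new <= closure:  # fixed point reached: nothing new can be derived
            return closure
        closure |= new


# Hypothetical facts describing nested digital twins in a vCS.
facts = {
    ("gripper_dt", "part_of", "workcell_dt"),
    ("workcell_dt", "part_of", "factory_dt"),
}
derived = infer_transitive(facts, "part_of")
print(("gripper_dt", "part_of", "factory_dt") in derived)  # True
```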

Fig. 4. The Metaverse harnesses shared control/autonomy to mediate between robot autonomy

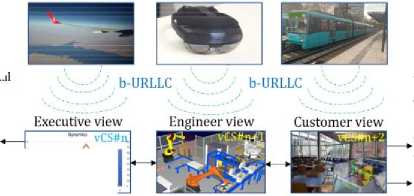

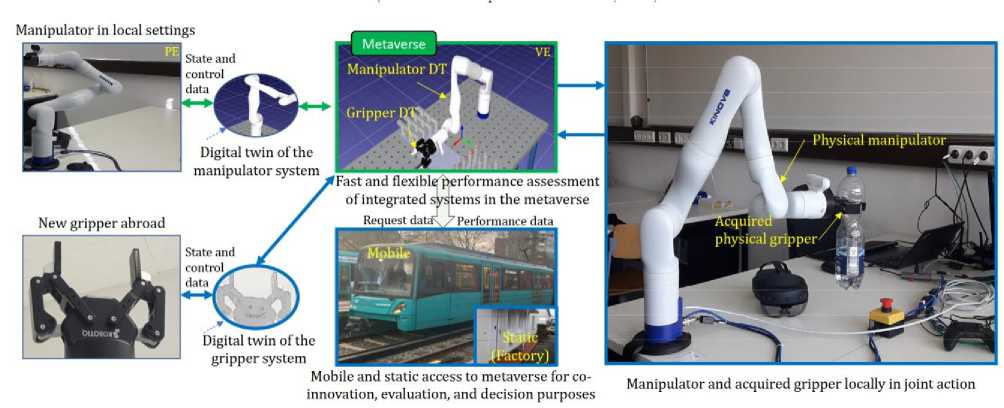

The arbitration signal is adapted to customized goals, ranging from production efficiency (Industry 4.0) driven by autonomous robots to satisfying and resilient work conditions and experiences enabled by an intuitive and accessible pHRC (Industry 5.0). Whereas complete virtualization and partial augmentation of local real scenes are common nowadays, a pervasive and itinerant use of such VR/AR solutions even under high mobility (e.g., in a high-speed train, as shown in Fig. 3) remains an open challenge with diverse industrial and societal implications. When available, DTs of a manipulator and a prospective gripper can be combined in a vCS of the Metaverse to assess the performance of the compound system, as shown in the upper middle part of Fig. 5.
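A common way to realize such an arbitration signal in shared control is linear blending of the human and robot commands. The source does not give the arbitration law, so the sketch below assumes linear blending; the function `arbitration_signal` and its product-of-factors policy are hypothetical illustrations of goal-dependent adaptation.

```python
from typing import List, Sequence


def arbitrate(u_human: Sequence[float], u_robot: Sequence[float], alpha: float) -> List[float]:
    """Blend two command vectors: alpha = 1 is full robot autonomy, alpha = 0 full human guidance."""
    alpha = min(max(alpha, 0.0), 1.0)  # keep the arbitration signal in [0, 1]
    return [alpha * r + (1.0 - alpha) * h for h, r in zip(u_human, u_robot)]


def arbitration_signal(efficiency_priority: float, robot_confidence: float) -> float:
    """Hypothetical policy: more autonomy when efficiency matters (Industry 4.0-like goals)
    and the robot is confident; otherwise more authority for the human (Industry 5.0-like goals)."""
    clamp = lambda x: min(max(x, 0.0), 1.0)
    return clamp(efficiency_priority) * clamp(robot_confidence)


# Usage: a production-efficiency goal with a confident robot yields mostly autonomous motion.
alpha = arbitration_signal(efficiency_priority=0.9, robot_confidence=0.8)
print(arbitrate(u_human=[0.0, 0.2], u_robot=[0.5, 0.0], alpha=alpha))
```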

Potential implications of the gripper for the velocity of the feeding system on the shop floor (e.g., impacts of the gripper's geometry and pneumatic force range on bin-picking processes with overlapping items) can be detected, and competitive solutions can be devised in the vCS long before the gripper is bought. This is done using VR glasses and DTs while keeping the physical robot in productive use. Technical and operational questions about how to deal with future co-workers, as well as with remote customers willing to co-design products (e.g., in robotized 3D printing), are still open. These aspects are, however, crucial for executives in many companies. One reason is that future workers and customers of Generations Alpha and Z advocate interconnected, inclusive workplaces, in conjunction with marketplaces offering personalized experiences and sustainable practices.

Fig. 5. The Metaverse as a virtual testbed for scalable and cost-effective evaluations of robotics technologies, regardless of the current mobility

Despite the potential and opportunities of the Metaverse emphasized thus far, a bidirectional robotics-related transition between Industry 4.0 and Industry 5.0 is still in its infancy. Such a transition is expected to help identify and consolidate the symbiotic interdependence between both visions and to re-orient research, development, and transfer activities toward their combination, in order to revamp an efficient, value-driven industry. Whereas autonomous robot behavior that builds upon communication for process efficiency is a prominent operating mode in Industry 4.0, manual robot guidance based on, e.g., the gravity and payload compensation mode is an approach to coping with musculoskeletal stress in Industry 5.0.
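The gravity and payload compensation mode can be sketched for a single revolute joint moving in a vertical plane: the motor feeds forward the gravity torque of the link and payload, so the operator only has to overcome inertia and friction. The one-link geometry, the parameter names, and the values below are hypothetical; a real multi-joint arm would use its full dynamic model.

```python
import math

G = 9.81  # gravitational acceleration [m/s^2]


def gravity_torque(q: float, m_link: float, l_com: float,
                   m_payload: float, length: float) -> float:
    """Gravity torque on a 1-DoF link; q is the joint angle from the horizontal [rad].

    The link's centre of mass lies at l_com and the payload at the tip (distance `length`).
    """
    return (m_link * l_com + m_payload * length) * G * math.cos(q)


def net_joint_torque(tau_operator: float, q: float, **arm: float) -> float:
    """Net torque on the link when the motor feeds forward the gravity torque."""
    tau_motor = gravity_torque(q, **arm)  # compensation command
    return tau_operator + (tau_motor - gravity_torque(q, **arm))  # gravity terms cancel


arm = dict(m_link=2.0, l_com=0.25, m_payload=3.0, length=0.5)  # hypothetical arm
print(round(gravity_torque(0.0, **arm), 2))  # 19.62 N*m held by the motor at q = 0
print(net_joint_torque(1.5, 0.3, **arm))     # 1.5: only the operator's torque remains
```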

The quantum computational intelligence platform of quantum software engineering as Industry 5.0 / 6.0 toolkit background

The evolution of industrial digital revolutions (see Fig. 1) is based on the corresponding evolution of computational intelligence models and of intelligent cognitive control structures that apply new "brain-computer interface" (BCI) information processing methods to different models of human-robot interaction. The background of information processing in human-robot interface (HRI) design using BCI is the computational intelligence toolkit.

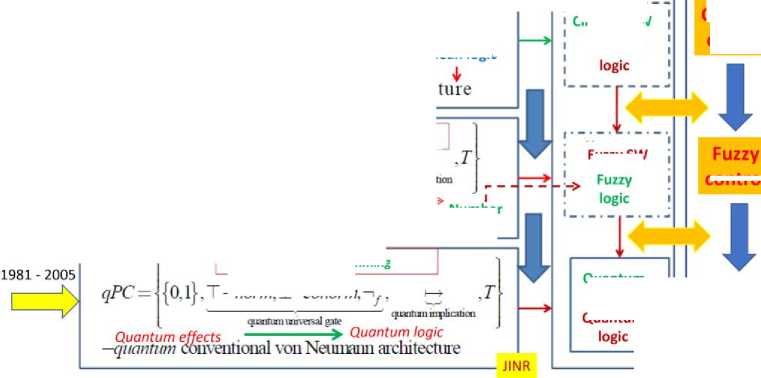

Figure 6 shows the evolution of computational intelligence IT [11-13] according to the requirements of the industrial revolutions I2.0-I5.0. The conventional (deterministic and probabilistic) and unconventional (e.g., fractional) models of calculus in Fig. 2 correspond to the requirements of the I1.0 and I2.0 revolutions and have long been applied as the platform of classical robotic control systems. Fuzzy robotic control based on the soft computational intelligence toolkit was introduced in [8]; it includes "human-machine" interfacing [11] and is at present the background of the I4.0 platform [3].
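Fuzzy control of the kind mentioned above rests on T-norm and T-conorm operations over truth values in [0, 1] instead of Boolean {0, 1}. The following is a generic textbook Mamdani-type sketch, not the controller of [8]: it uses min as the T-norm and max as the T-conorm, and the rule base, membership functions, and names are hypothetical.

```python
from typing import Callable, Dict, Iterable, List, Tuple

Membership = Callable[[float], float]


def tri(a: float, b: float, c: float) -> Membership:
    """Triangular membership function with feet a, c and peak b."""
    def mu(x: float) -> float:
        if x <= a or x >= c:
            return 0.0
        return (x - a) / (b - a) if x <= b else (c - x) / (c - b)
    return mu


def t_norm(a: float, b: float) -> float:
    """Fuzzy AND: minimum T-norm."""
    return min(a, b)


def t_conorm(values: Iterable[float]) -> float:
    """Fuzzy OR: maximum T-conorm, applied across all rule outputs."""
    return max(values)


# Hypothetical rule base: position error -> corrective command, both normalized to [-1, 1].
SETS: Dict[str, Membership] = {"neg": tri(-2, -1, 0), "zero": tri(-1, 0, 1), "pos": tri(0, 1, 2)}
RULES: List[Tuple[str, str]] = [("neg", "pos"), ("zero", "zero"), ("pos", "neg")]


def fuzzy_control(error: float, n: int = 201) -> float:
    """Mamdani inference (min implication, max aggregation) with centroid defuzzification."""
    xs = [-1.0 + 2.0 * i / (n - 1) for i in range(n)]
    agg = [t_conorm(t_norm(SETS[e](error), SETS[u](x)) for e, u in RULES) for x in xs]
    total = sum(agg)
    return sum(x * m for x, m in zip(xs, agg)) / total if total > 0 else 0.0


print(round(fuzzy_control(0.5), 3))  # negative: a positive error yields a corrective command
```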

Fig. 6. The temporal evolution of computational intelligence IT (figure labels: evolution of HW computer science development and SW implementation; control algorithms; SW/logic; classical SW with cPC = {0, 1}, Boolean logic and the Boolean universal gate (implication), classical physics, deterministic and sequential computing, conventional von Neumann architecture, classical control, 1966-1972; fuzzy SW with fPC = [0, 1], T-norm and T-conorm, fuzzy universal gate, fuzzy conventional von Neumann architecture, 1967-1999; quantum programming, quantum SW, quantum control)

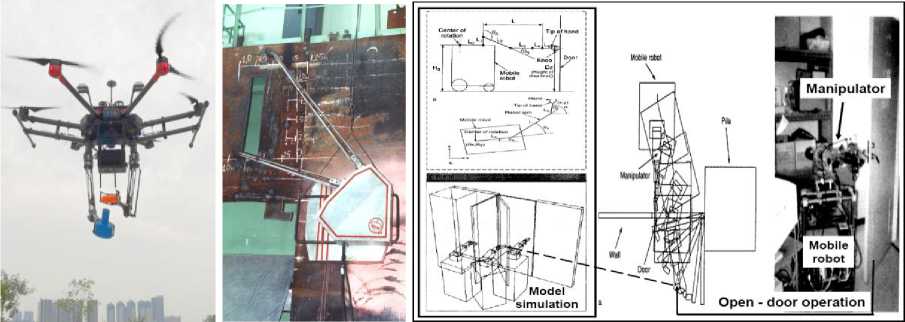

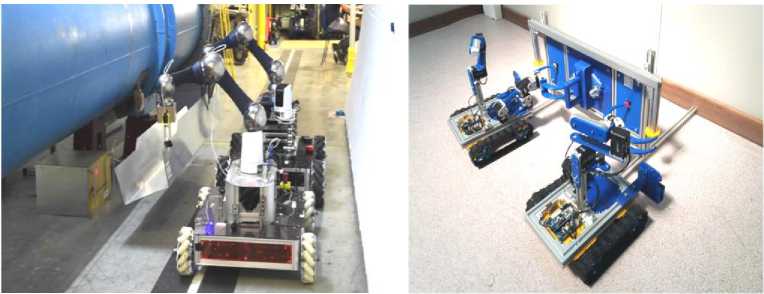

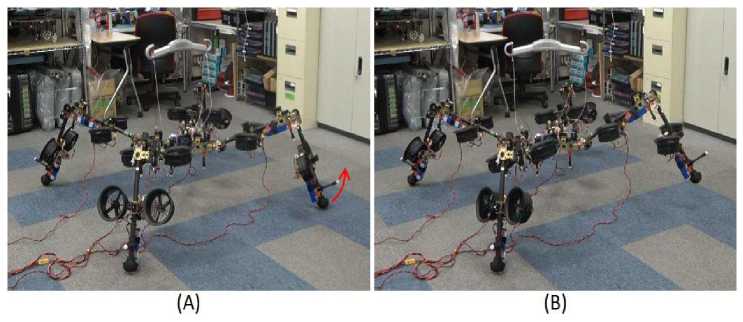

Remark: related works. Historically, I4.0 has been considered a sociotechnical cyber-physical system (CPS) that includes human factors in its control loops, and different models of autonomous robotic systems were created to carry out various technological operations under information risk and in unpredicted control situations [14]. Figure 7 shows different types of autonomous robotic manipulators (in experiments with the Stanford robotic platforms, vacuuming, opening a door, and ironing are examples of the demonstrated tasks) and autonomous robotic systems for typical technological operations: aerial two-arm manipulators, a wall-climbing robot with a manipulator, and a service robot.

Fig. 7. Examples of intelligent robotic systems for I4.0 [15-25]

Human-Robot Interfaces play a key role in the design of secure and efficient robotic systems. Great effort has been put into the design of advanced interfaces for domestic and industrial robots during the past decades. However, robots for intervention in unplanned and hazardous scenarios still need further research to reach an acceptable level of usability and safety, especially when the mission requires the use of multiple robotic systems. Many researchers describe the design and the software engineering process behind the development of a modular and multimodal Human-Robot Interface for intervention with a cooperative team of robots, as well as its validation and commissioning, since it is being used in real operations, for example, at CERN's accelerator complex. The proposed Human-Robot Interface allows a heterogeneous set of robots to be controlled homogeneously, providing the operator, among other features, with live scripting functionalities that can be programmed and adapted at run time, for example, to increase the operator's multi-tasking in a multi-agent scenario. The operator is given the capability to enter the control loop between the HRI and the robot and to customize the control commands according to the operation. To provide such functionalities, well-defined software development approaches have been adopted to guarantee the modularity and safety of the system during its continuous development. The modules offered by the HRI, such as multimodality, multi-robot control, safety, operator training, and the communications architecture, among others, are described. The HRI and the CERN Robotic Framework to which it belongs are designed in a modular manner, so that both the software and hardware architecture can be adapted in a short time to the next planned mission. The results present the experience gained with the system, demonstrating a high level of usability, learnability, and safety when operated by both non-experts and qualified robotic operators.
The multimodal user interface has proven to be very accurate and secure, providing a unique system to control, in a teleoperated or supervised manner, both single and multiple heterogeneous mobile manipulators. At the time of writing, the user interface had been successfully used in 100 real interventions in radioactive industrial environments. The presented HRI is a novel research contribution in terms of multimodality, adaptability, and modularity for mobile manipulator robotic teams in radioactive environments, especially its software architecture as part of the CERN Robotic Framework.
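The idea of an operator entering the loop between the HRI and the robot with run-time scripts can be sketched as a chain of command filters. The CERN framework's actual interfaces are not described here, so `CommandPipeline`, `speed_limit`, and the command field `vx` are hypothetical names chosen for illustration.

```python
from typing import Callable, Dict, List

Command = Dict[str, float]            # e.g. {"vx": 0.4} (hypothetical command fields)
Script = Callable[[Command], Command]


class CommandPipeline:
    """Operator-editable chain of scripts applied to every command before it reaches a robot."""

    def __init__(self) -> None:
        self._scripts: List[Script] = []

    def register(self, script: Script) -> None:
        """Add a script at run time; it takes effect on the next command."""
        self._scripts.append(script)

    def send(self, cmd: Command) -> Command:
        for script in self._scripts:
            cmd = script(dict(cmd))  # copy so a script cannot mutate the caller's dict
        return cmd


def speed_limit(max_v: float) -> Script:
    """Example safety script: clamp the linear velocity command to [-max_v, max_v]."""
    def apply(cmd: Command) -> Command:
        cmd["vx"] = max(-max_v, min(max_v, cmd.get("vx", 0.0)))
        return cmd
    return apply


pipe = CommandPipeline()
print(pipe.send({"vx": 0.8}))    # {'vx': 0.8}
pipe.register(speed_limit(0.3))  # the operator enters the loop at run time
print(pipe.send({"vx": 0.8}))    # {'vx': 0.3}
```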

Standard HRI design techniques, or software engineering development methods, have rarely been applied to the development of HRIs for teleoperated robots, since developing such robots involves people from varying engineering disciplines, many of whom do not have a software engineering background; hence some important software engineering principles are often missed. Neither standard Human-Computer Interaction methods nor common Human-Robot Interaction guidelines are universally accepted, but several approaches have been proposed in the last 30 years in terms of interaction, feedback display, and evaluation. Several studies have also been made on robot teams, in which, however, the control of a single robot is shared by multiple humans. Multi-robot collaboration and control by a single operator do not appear to have received much attention in the research field. Various tests were performed to prove the usability and learnability of the presented HRI. As previously mentioned, beyond the communication and safety features, the main goal of this work is to create a usable Human-Robot Interface that allows inexperienced operators to carry out telemanipulation tasks. For this test, a set of inexperienced operators was selected to perform a single task several times (Fig. 8).

Fig. 8. Different pictures from the usability tests: (a) the experimental setup table; (b) Telerob Telemax used during the experiment; (c) CERN's CERNbot in the configuration used for the experiment.

The task had to be accomplished using either Telerob Telemax (Fig. 8(b)), one of the commercial service robots owned by the team, with its closed-box HRI, or CERNbot (Fig. 8(c)), CERN's in-house robotic platform, with the proposed HRI. Each operator was asked to perform the task with only one of the two robots. An expert operator explained the basic functionality of both robots to each inexperienced operator before the first attempt and provided minimal support during the entire test.

The operators were asked to accomplish the task several times in order to compare the learning process with the two systems. They were asked to pick a LEMO push-pull self-latching connector from a plastic box and insert it into its compatible plug (Fig. 8(a)). Task performance was measured by execution time. Moreover, to provide a baseline, the task was executed with both robots by expert operators; their completion time can be considered a physical lower bound on the execution time of the task. To validate the use of scripting for multi-agent control, a transport task was designed [24]. The operators were requested to use two mobile platforms, each equipped with a single robotic arm (Fig. 9(a)).
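Because expert completion time serves as a lower bound, a natural way to compare the learning processes of the two systems is to normalize each novice trial by the expert baseline and to measure the relative improvement across trials. The source does not state which metrics were computed, so these two functions are a hypothetical sketch; no measured data are implied.

```python
from typing import List, Sequence


def normalized_times(trial_times_s: Sequence[float], expert_time_s: float) -> List[float]:
    """Each trial's execution time divided by the expert baseline (1.0 = expert level)."""
    if expert_time_s <= 0:
        raise ValueError("expert baseline time must be positive")
    return [t / expert_time_s for t in trial_times_s]


def learning_gain(trial_times_s: Sequence[float]) -> float:
    """Relative reduction in execution time from the first to the last trial."""
    if len(trial_times_s) < 2:
        raise ValueError("need at least two trials")
    first, last = trial_times_s[0], trial_times_s[-1]
    return (first - last) / first
```

Plotting the normalized times per trial for each robot shows which interface brings novices closer to the expert bound, and how quickly.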

Fig. 9. The two robots used for the multi-agent manipulation test while transporting the object (panels (a) and (b))

The operators were asked to drive the two mobile platforms to the proximity of the object to be transported, grasp it, and transport it back. The task was performed multiple times by each operator, both with and without the scripting behaviors. In hazard-related education, students are required to organise themselves in multidisciplinary groups and develop a software and mechatronic solution, based on the Minicernbot platform (Fig. 9(b)), to solve the following missions:

- Mission 1: Press Red Button. The robot has to approach the panel from the base station and press the red button, which stops the machine, in order to allow the robot to disassemble it.

- Mission 2: Unscrew the Radioactive Source Cover. The robot has to unscrew the two nuts that hold the cover of the radioactive source. If the nuts are brought to the base, the mission gets extra points.

- Mission 3: Uncover the Pretended-Radioactive Source. Once the nuts are released, the robot has to uncover the radioactive source by grasping the handle of the cover. If the cover is brought to the base, extra points are assigned to the team.

- Mission 4: Release the Pretended-Radioactive Source. Once the cover is removed, the team is asked to grasp the pretended-radioactive source and release it from the holder. If the pretended-radioactive source is brought to the base, extra points are assigned to the team.

- Mission 5: Press Yellow Button. The robot has to press the yellow button to set up the machine.

The proposed missions have proved to be accessible for the students and also challenging. For further educational experiments or longer projects, they can be extended by letting the students replace the pretended-radioactive source with a new one, cover it, and screw the nuts back on, which requires much more effort to design the solution. The panel also includes a long aluminium pipe frame with two handles, provided so that the students can bring it back to the base cooperatively using two Minicernbot platforms. This mission has been reserved as an extra exercise.

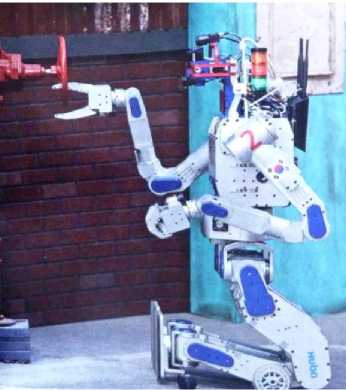

Having in mind a humanoid telerobot that could perform a variety of functions for a disaster situation too dangerous for direct human participation, such as the Fukushima nuclear meltdown, DARPA had each telerobot compete on six tasks (see “DARPA Robotics Challenge” in Wikipedia for numerical results for each task). These tasks included climbing stairs, operating a rotary valve, opening a door and walking through, stepping across rubble, sawing a piece out of plasterboard with a hand tool, and getting in and out of a vehicle. Nineteen contestants were scored on task success and performance time. The DARPA sponsors purposely constrained the bandwidth of communication between telerobot and human controller so that continuous teleoperation was impossible; computer task execution under supervisory control was therefore essential. Not all entrants could do all tasks, and some telerobots fell and could not get themselves up (see .

Figure 10 shows the winner, Team KAIST from the Korea Advanced Institute of Science and Technology, whose robot cleverly had legs with supplementary wheels attached.

Fig. 10. KAIST robot opening a valve. From “Team KAIST. DARPA Robotics Challenge,” by DARPA, 2015 kaist). In the public domain [25]

The second step is an introduction to the robotic facilities at CERN, where students can see real demonstrations of the modular robots, such as TIM, CERNBot, CERNBot2, Cranebot, Charmbot, etc. (see Fig. 11).

Fig. 11 . Set of modular robots developed at CERN-EN-SMM-MRO section to perform safe operations in radioactive and hazardous scientific facilities (i.e., Cranebot, Charmbot, CERNBot, CERNBot2, TIM, and Unified GUI [26])

In addition, they can better understand how the robots are remotely operated via the Unified Graphical User Interface (GUI), which guarantees human safety by avoiding operators' exposure to radioactive environments. Moreover, the researchers are able to better understand the meaning of «Science for Peace», focusing exclusively on research and applications that have an outstanding social benefit (e.g., medical applications).

The main contribution of this pool of robots is their modularity, which permits adapting the robot configuration to the specific operation to be performed. New tools have to be designed and assembled for each specific intervention, which requires continuous development and improvement of the robot platforms. In addition, the software used by the operators to control the robots is also explained, so that they can better understand the different ways to interact with the robots, from manual to supervisory control, according to the specific need and the expertise of the operator.

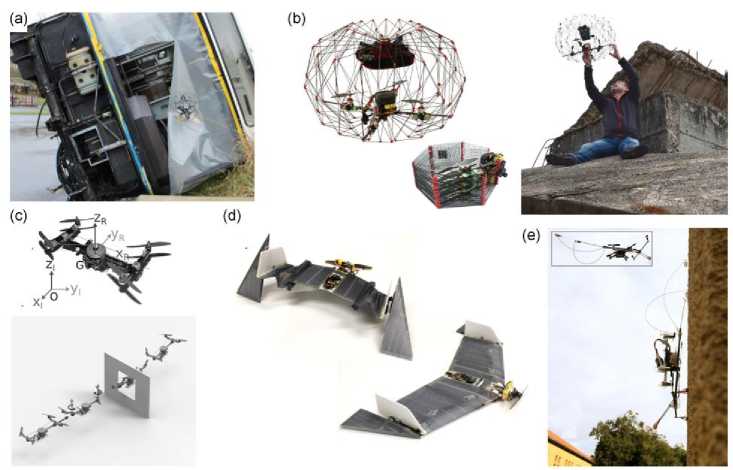

Unlike other ground-based systems such as autonomous cars, which can leverage some knowledge about the structure of the environment and generally do not need to overcome significant obstacles to reach their goal, robots in disaster zones have neither of these conveniences. The environment is generally unstructured as well as unknown in advance, and it often contains obstacles that must be negotiated for a ground robot to reach goal locations. The popular locomotion types for ground robots offer different advantages in overcoming these challenges. Legged robots can step over challenging terrain but require more sophisticated control approaches. Tracked and wheeled robots, on the other hand, offer stability and straightforward navigation and planning, but at the expense of requiring a continuous path (see Fig. 12).
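The trade-off between legged and tracked or wheeled locomotion can be made concrete with a deliberately simplified model in which each platform is reduced to the largest height step it can negotiate on a grid height map, and reachability is checked with breadth-first search. The step heights and the map are hypothetical; real legged planners reason about footholds rather than a single threshold.

```python
from collections import deque
from typing import List, Tuple

Cell = Tuple[int, int]


def reachable(grid: List[List[float]], start: Cell, goal: Cell, max_step: float) -> bool:
    """BFS on a height map [m]; a move is allowed if the height change is at most max_step."""
    rows, cols = len(grid), len(grid[0])
    seen = {start}
    queue = deque([start])
    while queue:
        r, c = queue.popleft()
        if (r, c) == goal:
            return True
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if (0 <= nr < rows and 0 <= nc < cols and (nr, nc) not in seen
                    and abs(grid[nr][nc] - grid[r][c]) <= max_step):
                seen.add((nr, nc))
                queue.append((nr, nc))
    return False


# Hypothetical 3 x 5 height map with a 0.25 m rubble ridge in column 2.
ridge = [[0.0, 0.0, 0.25, 0.0, 0.0] for _ in range(3)]
print(reachable(ridge, (1, 0), (1, 4), max_step=0.05))  # wheeled/tracked: False
print(reachable(ridge, (1, 0), (1, 4), max_step=0.30))  # legged: True
```

In this model the wheeled platform needs a continuous path of small height changes, while the legged platform's larger step tolerance lets it cross the ridge.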

Fig. 12. Examples of different robot morphologies used by teams in the DARPA Robotics Challenge. Many teams, such as (a) MIT (Kuindersma et al., 2016) used bipedal/humanoid designs, (b) Team NimbRo Rescue (Schwarz et al., 2017) used articulated, wheeled legs, while (c) NASA ‐ JPL’s RoboSimian (Karumanchi et al., 2017) and (d) Team KAIST (Jung et al., 2018) utilized platforms that could transform between rolling and walking postures. DARPA, Defense Advanced Research Projects Agency

We consider the state of the art in design and operation across both locomotion types.

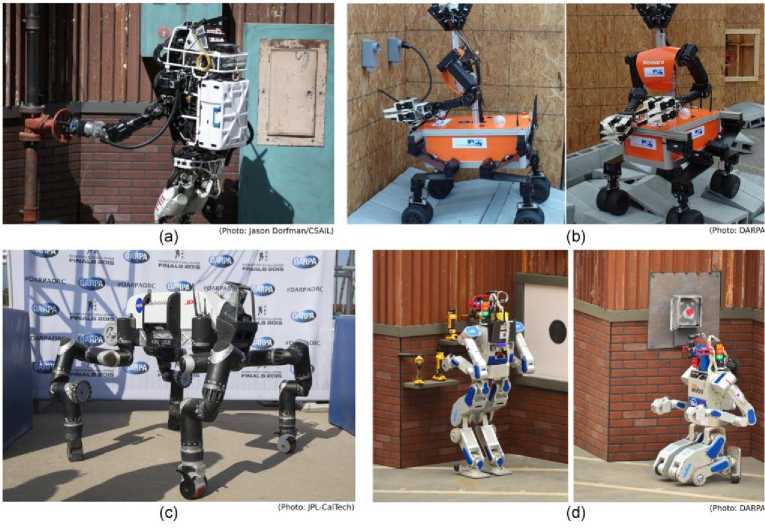

Aerial robots are becoming ubiquitous in search-and-rescue (SAR) scenarios thanks to their capability to gather information from hard-to-reach or even inaccessible places. The use of drones in SAR missions has been fostered not only by advances in control and perception, but also by new mechanical designs and materials. For instance, advances in drone design and manufacturing have contributed to the development of important features for SAR, such as collision resilience, transportability, and multimodal operation. Collision-tolerant drones that can withstand collisions thanks to protective cages (see Fig. 13(a)) or resilient frames can fly in cluttered environments without the caution and low speed often required by sense-and-avoid approaches [27-32].
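The speed penalty of sense-and-avoid follows from a stopping-distance argument: a drone must be able to stop within its sensing range after a reaction latency. Assuming constant braking deceleration and a fixed latency (hypothetical values here, not taken from [27-32]), the maximum safe speed is the positive root of v^2 + 2·a·t·v - 2·a·d = 0.

```python
import math


def max_safe_speed(sensing_range_m: float, decel_mps2: float, latency_s: float) -> float:
    """Largest v with v * latency + v**2 / (2 * decel) <= sensing range (positive quadratic root)."""
    a, t, d = decel_mps2, latency_s, sensing_range_m
    return -a * t + math.sqrt((a * t) ** 2 + 2.0 * a * d)


def stopping_distance(v: float, decel_mps2: float, latency_s: float) -> float:
    """Distance travelled during the reaction latency plus the braking distance."""
    return v * latency_s + v ** 2 / (2.0 * decel_mps2)


v = max_safe_speed(sensing_range_m=10.0, decel_mps2=5.0, latency_s=0.2)
print(f"{v:.2f} m/s")  # 9.05 m/s
```

Shorter sensing ranges in clutter push this bound down quickly, which is why tolerating contact, rather than avoiding it, allows faster flight.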

The quest for transportable drones that can be easily deployed on the field is the main motivation for the development of foldable frames (see Fig. 13(b)). By incorporating foldable structures, a relatively large drone with sufficient payload and flight time can be stored and transported in a small volume, while providing safety for handling by operators, as well as collision tolerance in cluttered environments. Foldable frames are also investigated to reduce the size during flight and traverse narrow gaps and access remote locations (see Fig. 13(c)).

Fig. 13. A selection of flying robots with novel morphologies, which offer beneficial properties in disaster environments: (a) Gimball being tested in a realistic disaster scenario; (b) PackDrone, a foldable drone with a protective cage for in-hand delivery of parcels; (c) a drone able to negotiate narrow gaps by folding; (d) a multimodal flying and walking wing; (e) a multimodal flying and climbing quadcopter

Most current drones are designed to exploit a single locomotion mode, which results in limited versatility and adaptability to the multidomain environments encountered in SAR missions. Multimodal drones overcome this problem by recruiting different modes of locomotion, each suited to a specific environment or task. Among the different locomotion modes, flight and ground locomotion (see Fig. 13(d)) or climbing (see Fig. 13(e)) are complementary, and their combination offers unique opportunities to greatly extend the versatility and mobility of robots. Having both aerial and terrestrial locomotion modes allows robots to optimize for either speed and ease of obstacle negotiation or low power consumption and locomotion safety. For example, in SAR missions, aerial locomotion can be used to rapidly fly above debris to reach a location of interest.
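The choice between speed (flight) and low power consumption (ground locomotion) can be sketched as a per-segment cost minimization. The weighted sum below mixes seconds and kilojoules through a single trade-off weight, which is a simplifying assumption; the `Mode` class and all speed and power figures are hypothetical.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Mode:
    name: str
    speed_mps: float  # average travel speed in this mode [m/s]
    power_w: float    # average electrical power draw [W]


def segment_cost(mode: Mode, distance_m: float, time_weight: float) -> float:
    """Weighted cost: time_weight * time [s] + (1 - time_weight) * energy [kJ]."""
    time_s = distance_m / mode.speed_mps
    energy_kj = mode.power_w * time_s / 1000.0
    return time_weight * time_s + (1.0 - time_weight) * energy_kj


def choose_mode(modes: Sequence[Mode], distance_m: float, time_weight: float) -> Mode:
    """Pick the locomotion mode with the lowest weighted cost for one segment."""
    return min(modes, key=lambda m: segment_cost(m, distance_m, time_weight))


FLY = Mode("aerial", speed_mps=8.0, power_w=400.0)      # hypothetical figures
ROLL = Mode("terrestrial", speed_mps=1.0, power_w=20.0)  # hypothetical figures

print(choose_mode([FLY, ROLL], distance_m=100.0, time_weight=1.0).name)  # aerial
print(choose_mode([FLY, ROLL], distance_m=100.0, time_weight=0.0).name)  # terrestrial
```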

Proximity interaction techniques can use pointing gestures to express locations or objects intuitively with minimal cognitive overhead. This modality has often been used in HRI research, e.g. for pick-and-place tasks, labeling and/or querying information about objects or locations, selecting a robot within a group, and providing navigational goals. Such gestures can enable rescue workers to easily direct multiple robots, and robot types, using the same interface (see Fig. 14).

Fig. 14. Human–robot interface in which the operator uses pointing gestures, estimated from sensors worn in armbands, to provide navigation commands to both flying and legged robots

Terrestrial locomotion can subsequently be used to explore the environment thoroughly and efficiently or to collect samples on the ground (see Fig. 15).

Fig. 15. Modular quadrupedal robot ANYmal being deployed in challenging disaster environments highlighting its ability to navigate over rough terrain and in degraded sensing conditions, and demonstrating its resistance to fire and water

Scansorial (climbing) capabilities allow a robot to perch on surfaces and remain stationary to collect information with minimal power consumption. Furthermore, multimodal aerial and terrestrial locomotion enables hybrid control strategies in which, during terrestrial locomotion, steering or adhesion can be achieved or assisted by aerodynamic forces. Multimodal locomotion has also been exploited to develop FlyCroTugs, a class of robots that add the capability of forceful manipulation to the mobility of miniature drones. FlyCroTugs can perch on a surface and hold on firmly with directional adhesion (e.g., microspines or gecko adhesive) while applying forces of up to 40 times their mass using a winch [33]. Combining flight with adhesion for tugging yields a class of 100 g drones that can rapidly traverse cluttered three-dimensional terrain and exert forces large enough to affect human-scale environments, for example to open a door or to lift a heavy sensor payload for inspection.
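As a rough worked example of the FlyCroTugs figure quoted above, assuming that "40 times their mass" means a pulling force equal to 40 times the robot's weight, and taking the 100 g mass stated for this class of drones:

$$
F_{\max} \approx 40\, m g = 40 \times 0.1\,\mathrm{kg} \times 9.81\,\mathrm{m/s^2} \approx 39\,\mathrm{N},
$$

which is roughly the weight of a 4 kg load.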

“Brain-computer” interface models in Human-robot cognitive interaction background: Quantum computing technologies in Industry 5.0 / 6.0

Brain-computer interface (BCI) is a direct communication channel between the central nervous system and a computer that does not rely on the peripheral nervous system. In this sense, any system with a direct interaction between the brain and external devices can be considered a BCI system. While early BCI technologies provided tools that let people with movement disorders communicate with their environment, BCI use has expanded to many medical and non-medical applications, including brain-state monitoring, neurological rehabilitation and human cognitive enhancement. With the rapid development of neurotechnology and artificial intelligence (AI), the brain signals used for brain-computer communication have progressed from the level of sensation and perception (evoked and event-related potentials) to higher-level cognition (such as goal-directed intention), bringing BCI into a new era of hybrid intelligence.


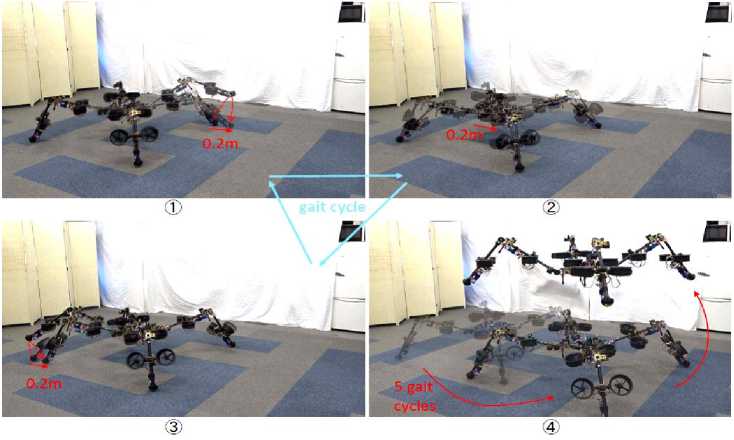

A. Brain-Computer Interface models. Fig. 16 illustrates soft human-machine interfaces (HMIs) through which humans interact with machines/robots or monitor their health condition.

Fig. 16. Schematic diagram of humans interacting with machines/robots, and various flexible/stretchable devices for soft HMIs. Bottom images: “Pressure sensor”, “E-skin” and “nervous sensor” (reproduced with permission), “EMG electrode”, “Epidermal electronics”

HMIs can be divided into several categories: i) soft tactile sensors in E-skin that measure the pressure and temperature of humans and robots; ii) motion sensors that measure the joint angles and velocities of natural/artificial limbs; iii) electrophysiology sensors, such as EEG and EMG, for trajectory control or health monitoring (green and blue arrows in Fig. 16); and iv) feedback stimulators that apply electrical stimulation to the human body (red arrows).

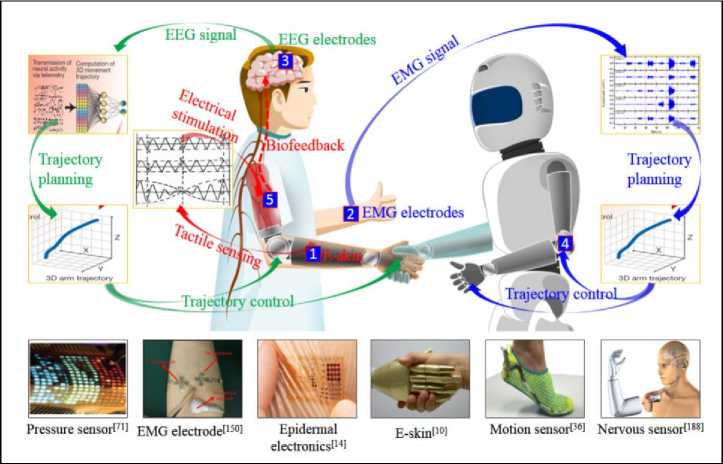

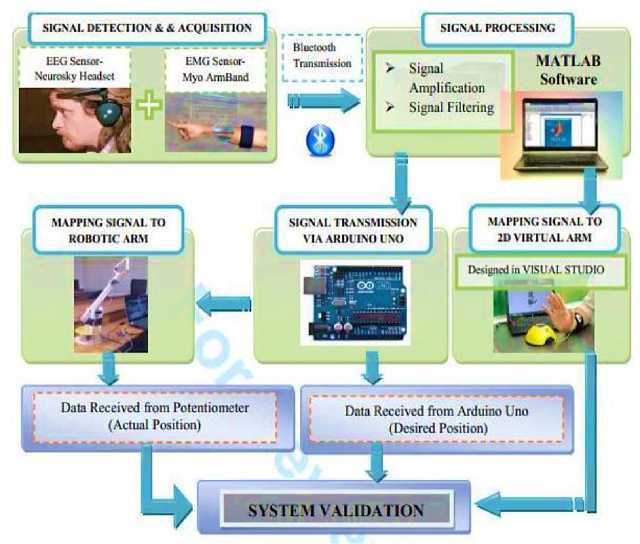

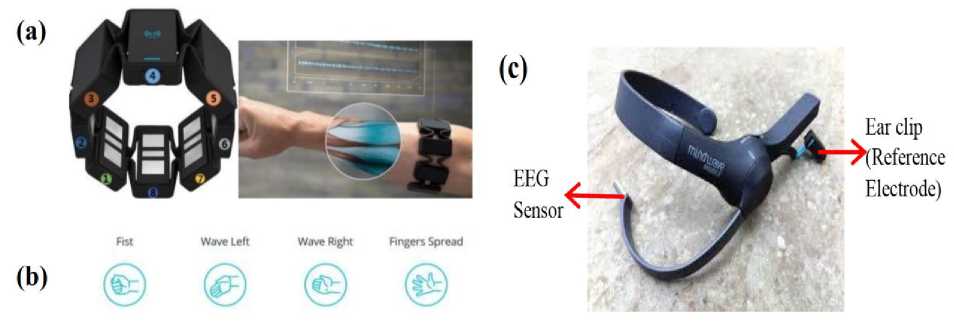

Example: Hybrid EEG-EMG based brain-computer interface (BCI) system for online robotic arm control. Biosignal-based BCI systems are widely used in healthcare and have proven to be an effective tool in rehabilitation engineering, helping disabled people improve their quality of life. The system targets people with above-hand amputations and uses non-invasive EEG and EMG biosensors in a wireless hybrid BCI. It controls the real-time movement of a robotic arm through the combined effect of brain waves (attention and meditation mental states) and wrist-muscle movements of the healthy arm, which serve as command signals. The robotic arm has three degrees of freedom (DOF), corresponding to the shoulder (internal and external rotation), the elbow (flexion and extension) and the wrist (gripper open and close). The system was tested experimentally on four subjects with an upper-limb amputation (each with one healthy arm) after a one-day training period. Using the EEG and EMG inputs, the subjects controlled the movements of the robotic arm with an accuracy of 70% to 90%. To validate these results, a potentiometer was fixed on the robotic arm, and the angular motion of the shoulder and elbow joints (actual motion) was recorded and compared with the output of the BCI system (required motion). The comparison shows close agreement between actual and required motion, which indicates that the system is reliable. In addition to the robotic prototype, a 2D model of the arm was designed in Visual Studio. These preliminary experimental results show that the movement of the motorized prosthetic prototype under mind and muscle control agrees with the 2D virtual arm, which supports its real-time adoption for rehabilitation.
The BCI system aims to move the targeted body part in the same way a normal body moves in response to biosignals from the muscles and brain. The implemented system uses the patient's biopotentials (EEG and EMG signals) simultaneously to control the movement of the robotic arm.

The schematic representation of overall methodology adopted in designing hybrid EEG-EMG based BCI system has been shown in Fig. 17.

The system comprises five main modules: signal detection and acquisition, signal processing, signal transmission, mapping of the signals to the robotic arm and the 2D virtual arm, and system validation. In the first module, EMG and EEG signals are acquired from the patient's arm and scalp, respectively. EMG is a technique for recording and evaluating the electrical activity generated by muscles. Muscle activity can generally be detected in two ways: (i) invasively, with a needle electrode inserted directly into the muscle, and (ii) non-invasively, with a surface electrode positioned over the target muscle.

Fig. 17. Methodology of Hybrid EEG-EMG based BCI system

The non-invasive technique was employed: raw EMG signals are detected with a Myo armband (Fig. 18(a)), which has eight integrated sensors that pick up the EMG signals generated by the myoelectric activity of the arm. The armband also integrates an accelerometer, a gyroscope and a magnetometer for hand-gesture recognition (Fig. 18(b)), along with a built-in Bluetooth module for wireless data transmission to an external unit.

Fig. 18. (a) Myo armband (EMG sensor); (b) Myo armband hand gestures; (c) NeuroSky MindWave Mobile headset (EEG sensor)

Along with EMG, EEG is also used in the proposed hybrid BCI system. EEG records the electrical activity produced at the scalp by the activation of brain cells. Here, the NeuroSky single-channel MindWave Mobile headset (Fig. 18(c)) is used to detect the raw EEG signal. The NeuroSky headset is a wireless, portable, lightweight and non-invasive device consisting of a headband with three mounted dry electrodes and a Bluetooth unit for data transmission. Two electrodes (reference and ground) are placed on the earlobe, and the third, EEG-recording electrode is located on the forehead. The headset reads electrical signals generated by neural activity in the brain and reports the user's levels of “attention” and “meditation” on a scale of 0 to 100. The movement of the robotic arm is therefore controlled by the combined effect of the hand gesture (from the EMG signal) and the attention and meditation values (from the EEG signal).

Between the signal sources and the electrodes lie several obstacles, such as the skull (for EEG), the skin and other tissue layers, which weaken and distort the electrical signal. Hence, in the second module, the acquired raw EEG and EMG signals are filtered and amplified in MATLAB. The processed signals then serve as input to the control system of the robotic arm prototype and of the 2D virtual robotic manipulator programmed in Visual Studio.
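The original processing chain was implemented in MATLAB, and its filter settings are not reported. As a hedged illustration only, the sketch below shows one common way to condition a raw EMG channel before thresholding: remove the DC offset and compute a moving root-mean-square (RMS) envelope. The helper name `emg_envelope`, the 0.1 s window and the 200 Hz sampling rate used in the demo are assumptions, not values from the study.

```python
import math
import random


def emg_envelope(raw, fs, window_s=0.1):
    """Moving-RMS envelope of one EMG channel (hypothetical helper).

    raw      : list of samples from one channel
    fs       : sampling rate in Hz
    window_s : length of the centred RMS window in seconds (assumed value)
    """
    mean = sum(raw) / len(raw)
    x = [v - mean for v in raw]                      # remove the DC offset
    half = max(1, int(round(window_s * fs))) // 2    # half-window in samples
    env = []
    for i in range(len(x)):
        lo, hi = max(0, i - half), min(len(x), i + half + 1)
        seg = x[lo:hi]                               # window is truncated at the edges
        env.append(math.sqrt(sum(v * v for v in seg) / len(seg)))
    return env


if __name__ == "__main__":
    fs = 200.0                                       # assumed sampling rate (Hz)
    rng = random.Random(0)
    # Synthetic trace: low noise plus a burst of "muscle activity" in samples 100-299.
    trace = [rng.gauss(0, 0.05) + (rng.gauss(0, 1.0) if 100 <= i < 300 else 0.0)
             for i in range(400)]
    env = emg_envelope(trace, fs)
    print("rest level: ", sum(env[:80]) / 80)
    print("burst level:", sum(env[150:250]) / 100)
```

The envelope rises sharply during the burst, so a simple threshold on it can separate muscle activity from rest.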

The processed data are then transmitted to an Arduino Uno microcontroller, which controls the robotic arm with the real-time brain and myoelectric signals. Thus, in the third module, serial communication is established between the computer and the Arduino Uno to transmit the signals. On the Arduino, the attention and meditation values are classified into ranges, and separate variables are assigned to the hand gestures and to the attention and meditation ranges. Because the robotic arm performs six different actions, six combinations of EMG and EEG signals are programmed on the Arduino. When the user performs a specific hand gesture with a certain level of attention or meditation, the robotic arm performs the corresponding predefined action.
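The rule table described above can be sketched as follows. On the Arduino it would be written in C++; Python is used here only for readability. The gesture labels (loosely modelled on the armband's fist, wave-in, wave-out and fingers-spread poses), the 60-point threshold and the specific gesture/state pairings are all assumptions; the text only states that six combinations map to six actions.

```python
HIGH = 60  # assumed threshold on the headset's 0-100 attention/meditation scale


def mental_state(attention: int, meditation: int) -> str:
    """Classify the two 0-100 headset values into a coarse range."""
    if not (0 <= attention <= 100 and 0 <= meditation <= 100):
        raise ValueError("attention and meditation must lie in 0..100")
    if attention >= HIGH and attention >= meditation:
        return "focused"
    if meditation >= HIGH:
        return "relaxed"
    return "neutral"


# Six (gesture, mental state) combinations -> six arm actions (pairings assumed).
RULES = {
    ("fist", "focused"): "elbow_flexion",
    ("fist", "relaxed"): "elbow_extension",
    ("wave_in", "focused"): "shoulder_internal_rotation",
    ("wave_out", "focused"): "shoulder_external_rotation",
    ("fingers_spread", "relaxed"): "gripper_open",
    ("fingers_spread", "focused"): "gripper_close",
}


def select_action(gesture: str, attention: int, meditation: int):
    """Return the predefined arm action, or None when no rule matches (arm stays still)."""
    return RULES.get((gesture, mental_state(attention, meditation)))


if __name__ == "__main__":
    print(select_action("fist", attention=75, meditation=30))   # -> elbow_flexion
    print(select_action("fist", attention=20, meditation=20))   # -> None
```

Returning None for unmatched combinations keeps the arm still unless both the gesture and the mental state agree, which is one way to reduce unintended motion.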

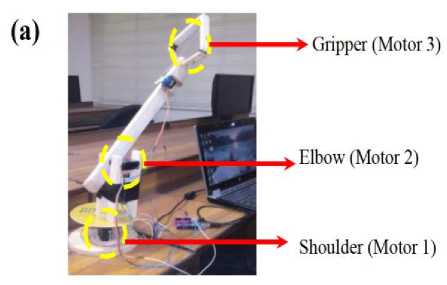

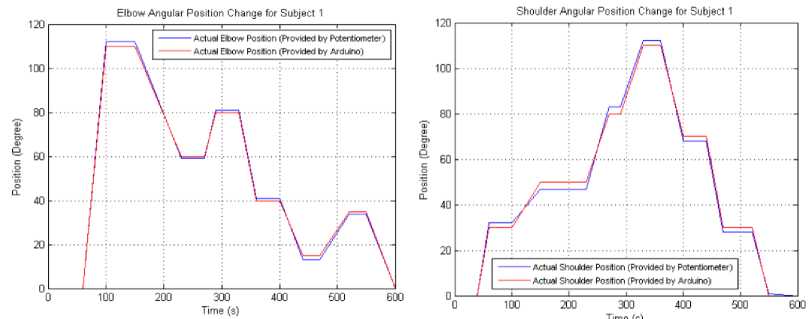

In the next module, the signal generated by the Arduino controls the movement of the robotic arm. The robotic arm prototype has three degrees of freedom (DoF), and its joints represent the human wrist, elbow and shoulder joints (Fig. 19(a)).

Fig. 19 . (a) Robotic Arm Prototype (b) 2D Modelled Robotic Arm

The robotic elbow joint performs extension and flexion of the arm (between 0 and 120 degrees), the robotic shoulder joint performs medial and lateral rotation of the shoulder (between 0 and 150 degrees), and the robotic wrist joint opens and closes the gripper. Each joint is driven by a servo motor: an SG90 9 g micro servo moves the gripper, while MG996R servos move the shoulder and elbow joints. The servo motors receive their input commands from the Arduino, which allows them to drive the robotic arm to the desired position.
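A minimal sketch of how requested joint angles could be kept inside the ranges quoted above before being sent to the servos. The joint ranges come from the text; the gripper's open/closed servo angles and the helper names are assumptions. On the Arduino, the resulting angle would typically be passed to the standard Servo library's `write()` method, which takes an angle in degrees.

```python
# Joint ranges quoted in the text, in degrees.
JOINT_LIMITS = {"elbow": (0, 120), "shoulder": (0, 150)}

# Assumed servo angles for the binary gripper (not given in the text).
GRIPPER_OPEN_DEG, GRIPPER_CLOSED_DEG = 90, 0


def clamp_command(joint: str, angle_deg: float) -> float:
    """Limit a requested joint angle to the range the prototype supports."""
    lo, hi = JOINT_LIMITS[joint]
    return min(max(angle_deg, lo), hi)


def gripper_angle(open_gripper: bool) -> int:
    """Map the open/close command of the wrist joint to a servo angle."""
    return GRIPPER_OPEN_DEG if open_gripper else GRIPPER_CLOSED_DEG


if __name__ == "__main__":
    print(clamp_command("elbow", 135))     # request beyond the limit -> 120
    print(clamp_command("shoulder", 75))   # within range -> 75
    print(gripper_angle(True))             # -> 90
```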

Finally, to validate the system response, a linear-taper potentiometer “480-5885-ND” (angular position sensor) is connected to the Arduino and positioned on the robotic arm to measure its actual angular position. In addition, a virtual 2D robotic manipulator was designed in Microsoft Visual Studio; it consists of a shoulder joint, an elbow joint and a claw (Fig. 19(b)).

The processed signals from MATLAB are also mapped onto the virtual robotic arm, which moves according to the provided input. The motion of the robotic arm manipulator is then compared with the motion of the virtual arm to check that the movements agree. Lastly, the potentiometer data (actual position) and the controller output (desired position) are compared to verify the accuracy and reliability of the system.
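The desired-versus-actual comparison can be sketched as below, assuming the potentiometer is wired as a voltage divider on an Arduino Uno analog pin (whose `analogRead()` returns 0 to 1023). The potentiometer's electrical travel (`full_scale_deg`), the example readings and the use of RMS and maximum error as summary metrics are assumptions; the study reports the comparison graphically.

```python
import math

ADC_MAX = 1023  # Arduino Uno analogRead() returns 0..1023 (10-bit ADC)


def adc_to_angle(counts: int, full_scale_deg: float) -> float:
    """Convert a potentiometer ADC reading to an angle.

    For a linear-taper potentiometer wired as a voltage divider, the reading is
    proportional to the shaft angle. full_scale_deg (the electrical travel of
    the potentiometer) is an assumed parameter; the text does not give it.
    """
    return counts / ADC_MAX * full_scale_deg


def tracking_error(desired, actual):
    """Return (RMS error, maximum absolute error) between two angle series."""
    if not desired or len(desired) != len(actual):
        raise ValueError("series must be non-empty and of equal length")
    errs = [d - a for d, a in zip(desired, actual)]
    rms = math.sqrt(sum(e * e for e in errs) / len(errs))
    return rms, max(abs(e) for e in errs)


if __name__ == "__main__":
    desired = [0, 30, 60, 90, 120]         # controller output (deg), illustrative
    readings = [0, 116, 230, 344, 457]     # hypothetical ADC counts
    actual = [adc_to_angle(c, 270.0) for c in readings]
    rms, worst = tracking_error(desired, actual)
    print(f"RMS error {rms:.1f} deg, worst {worst:.1f} deg")
```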

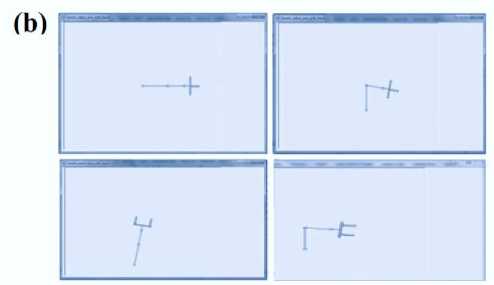

The results show that accuracy varies between 70% and 90% depending on the subject's mental state and training level. The angular motions performed by the elbow and shoulder during task execution are presented in Fig. 20.


Fig. 20 . Validation Test Results of: (a) Elbow Angular Position for Subject; (b) Shoulder Angular Position for Subject

Furthermore, the processed signals from MATLAB are also sent to the virtual arm, which performs the actions corresponding to the EMG and EEG inputs (Fig. 20(b)). The motorized robotic prototype and the virtual arm performed the same task simultaneously when given the same input (processed signal), which shows that all actions are executed correctly by the hybrid BCI system [38]. Additionally, to validate the results, the potentiometer mounted on the robotic arm is used as an “angular position sensor” to determine the actual angular movement of the elbow and shoulder joints. The measured angular position is compared with the desired angular position provided by the Arduino microcontroller. In the comparison graphs (Fig. 21), the actual and desired positions overlap, which shows that the robotic arm accurately performed the intended actions.


Fig. 21. (a) Movement, or principal axes, of a drone. The drone faces the x-axis direction and has a high degree of movement freedom; (b) Picture of the Parrot AR.Drone 2.0 [35]

However, the subjects cannot move the robotic arm to precise angles: they can only move it right, left, up and down and open or close the gripper, irrespective of specific angles. This shows that one factor on which system accuracy depends is the patient's training, i.e., how well the subject has learned to control and synchronize mental state with hand motion for a specific action.

Example: BCI system for drone control. One of the main challenges in designing a BCI system with a drone as the target application is the large number of degrees of freedom inherent in drone movement. To better understand this challenge, and to provide some background on how this type of aircraft moves, this section reviews the basic concepts of drone navigation.

Fig. 21(a) depicts the rotational movement of the drone. Rotations around the principal axes of an aircraft (z: vertical, y: lateral, x: longitudinal) are known as yaw, pitch and roll, respectively.

These terms are often used to describe the orientation, in degrees, of the drone in three-dimensional space. In modern drone models, the pitch and roll velocities are stabilized by robust control techniques that use onboard sensors. The commands passed to the drone control the yaw velocity and, hence, the longitudinal (forward) direction of the aircraft. In addition, the drone can move laterally (Y-axis direction) and vertically (Z-axis direction).
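To make the yaw/pitch/roll description concrete, a common way to write the drone's attitude is the Z-Y-X (yaw-pitch-roll) rotation sequence below. This convention is an assumption, since the text does not state which one the AR.Drone uses. With yaw $\psi$ about z, pitch $\theta$ about y and roll $\phi$ about x:

$$
R(\psi,\theta,\phi) = R_z(\psi)\,R_y(\theta)\,R_x(\phi),\qquad
R_z(\psi)=\begin{pmatrix}\cos\psi & -\sin\psi & 0\\ \sin\psi & \cos\psi & 0\\ 0 & 0 & 1\end{pmatrix},\;
R_y(\theta)=\begin{pmatrix}\cos\theta & 0 & \sin\theta\\ 0 & 1 & 0\\ -\sin\theta & 0 & \cos\theta\end{pmatrix},\;
R_x(\phi)=\begin{pmatrix}1 & 0 & 0\\ 0 & \cos\phi & -\sin\phi\\ 0 & \sin\phi & \cos\phi\end{pmatrix}.
$$

When the onboard stabilization holds pitch and roll near zero ($\theta \approx \phi \approx 0$), a forward (body x-axis) speed $v_{long}$ maps to the world-frame velocity

$$
\mathbf{v} \approx R_z(\psi)\begin{pmatrix} v_{long}\\ 0\\ 0\end{pmatrix} = v_{long}\begin{pmatrix}\cos\psi\\ \sin\psi\\ 0\end{pmatrix},
$$

so commanding the yaw angle alone is enough to steer the horizontal direction of travel.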

Three key points were taken into account when choosing the drone: 1) flight stability, 2) available documentation for interfacing with the device, and 3) cost. The first point concerns how steady the drone is during flight. Cheaper and simpler drones tend to have poor onboard sensors and control systems, which lead to significant oscillations in the aircraft's position even when no command is sent. The second point concerns the documentation released by the manufacturer for sending commands to and receiving data from the drone: without a proper API (application programming interface), it would be impossible to link the designed BCI system to the drone. The last point affects the overall cost of the system. Since the purpose of this work is to develop a simple, low-cost yet efficient BCI system, a moderately priced drone had to be chosen. Taking all these points into account, the Parrot AR.Drone 2.0 was selected.

The AR.Drone 2.0 shown in Fig. 21(b) is a low-cost quadcopter (approximately $300) with high flight stability and control precision [1]. The device is well documented, and a large community works with it to build customized applications. The drone's flight system consists of four propulsion motors that control its speed and direction of movement. To control these motors, the manufacturer ships the drone with a stability control system called AutoPilot. To compute the right commands for the motors, the central processing unit relies on information from three onboard sensors: a 3-axis gyroscope, an altitude sensor, and a camera constantly pointed at the ground. The gyroscope provides the attitude of the aircraft (orientation and inclination), the altitude sensor reads the current altitude, and the camera is used to monitor the drone's position, allowing it to hold still over a given area. Combining this information, the AutoPilot system uses inertial sensing and computer-vision techniques to maintain stable and reliable flight. All processing is performed by an embedded microprocessor running Linux. The total flight time can reach up to 12 minutes.

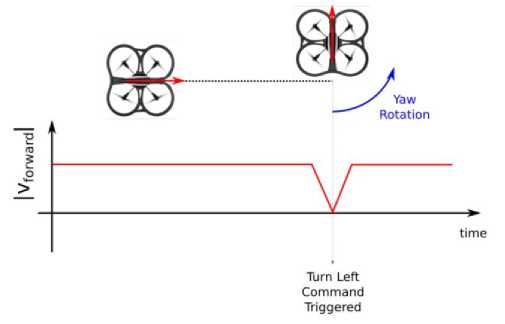

As seen above, the motion dynamics of a drone are very diverse and can involve a series of different control routines and techniques. The information throughput of a BCI system, however, is significantly limited; controlling all these movement axes is therefore not feasible, or would result in a system too complex for the user to manage in real time. For this reason, the goal of the BCI system in this work is to control only the yaw rotation of the drone. The longitudinal forward velocity is controlled automatically based on the commands triggered by the BCI system. The proposed navigation control method is illustrated in Fig. 22.

Fig. 22: Drone Navigation Strategy

[The drone is automatically accelerated to a constant longitudinal velocity. When a turn command is triggered by the BCI system, the rotation around the yaw is initiated to alter the direction of the aircraft. After that, the longitudinal velocity is restored to the constant initial value .]

At the start of the simulation, the drone has a constant longitudinal velocity v_long. When the BCI system triggers a new turn command, the longitudinal velocity is reduced and the yaw rotation takes place, changing the direction of the drone (red arrow). After the rotation is completed, the velocity is restored to its initial constant value v_long. Deriving the other control signals from a single, more critical task in this way avoids the need for multiple simultaneous inputs from the user. Limiting the number of parameters the user must control lets the user focus on the primary, mandatory task, which leads to higher accuracy rates and better adaptation to the BCI system.
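The navigation strategy just described (cruise at v_long, slow down while the yaw rotation is in progress, then restore v_long) can be sketched as a small kinematic simulation. All numeric values, the 90-degree heading change per command and the sign convention (positive yaw = left) are assumptions for illustration; the text does not give them.

```python
import math
from dataclasses import dataclass

# All numeric values below are illustrative assumptions, not taken from the text.
V_LONG = 1.0                    # cruise speed v_long (m/s)
V_TURN = 0.2                    # reduced speed while the yaw rotation is in progress (m/s)
YAW_RATE = math.radians(45.0)   # maximum yaw rate (rad/s)
TURN_STEP = math.radians(90.0)  # heading change requested by one BCI turn command


@dataclass
class DroneState:
    x: float = 0.0
    y: float = 0.0
    yaw: float = 0.0            # current heading (rad), positive = counter-clockwise
    target_yaw: float = 0.0     # heading requested by the BCI


def on_bci_command(s: DroneState, cmd: str) -> None:
    """A turn command only changes the requested heading."""
    if cmd == "left":
        s.target_yaw += TURN_STEP
    elif cmd == "right":
        s.target_yaw -= TURN_STEP


def step(s: DroneState, dt: float) -> float:
    """Advance the simulation by dt seconds and return the speed used."""
    err = s.target_yaw - s.yaw
    turning = abs(err) > 1e-9
    if turning:
        # rotate towards the target heading, limited by the maximum yaw rate
        s.yaw += max(-YAW_RATE * dt, min(YAW_RATE * dt, err))
    speed = V_TURN if turning else V_LONG   # slow down only while turning
    s.x += speed * math.cos(s.yaw) * dt
    s.y += speed * math.sin(s.yaw) * dt
    return speed


if __name__ == "__main__":
    drone = DroneState()
    for t in range(100):                     # 10 s at dt = 0.1 s
        if t == 30:
            on_bci_command(drone, "left")    # single BCI-triggered turn at t = 3 s
        step(drone, 0.1)
    print(f"heading {math.degrees(drone.yaw):.1f} deg, "
          f"position ({drone.x:.2f}, {drone.y:.2f})")
```

The user's only input is the discrete turn command; speed is derived automatically from whether a rotation is still in progress.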

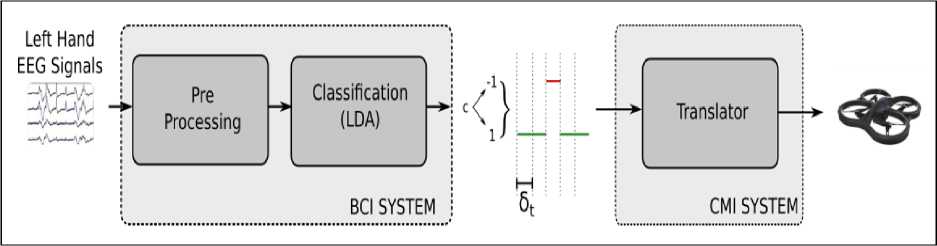

In general, µ-wave-based BCI systems implement routines that directly map the power in the alpha band into commands that are sent to the application. Here, the generated command is a basic signal that changes the flight direction of the drone, enabling the user to control the aircraft's flight path. Fig. 23 gives an overview of the BCI system operating in real time for the case in which only EEG data from the left-hand class are being acquired by the EEG amplifier.
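The text does not spell out how alpha-band power is turned into a command. The sketch below is one hedged possibility: estimate the mean DFT power between 8 and 13 Hz for channels C3 and C4, then compare them. The laterality rule (strong C3 alpha relative to C4 read as left-hand imagery, following the desynchronization argument used later in this section), the 1.5 ratio and the 250 Hz demo rate are assumptions.

```python
import math


def band_power(samples, fs, f_lo=8.0, f_hi=13.0):
    """Average DFT power of the bins between f_lo and f_hi (Hz).

    Uses a direct DFT restricted to the bins of interest, which is adequate
    for short single-channel windows.
    """
    n = len(samples)
    mean = sum(samples) / n
    x = [v - mean for v in samples]              # remove the DC offset
    powers = []
    for k in range(n // 2 + 1):
        if f_lo <= k * fs / n <= f_hi:
            re = sum(v * math.cos(2 * math.pi * k * t / n) for t, v in enumerate(x))
            im = sum(v * math.sin(2 * math.pi * k * t / n) for t, v in enumerate(x))
            powers.append((re * re + im * im) / n)
    return sum(powers) / len(powers) if powers else 0.0


def power_to_command(p_c3, p_c4, ratio=1.5):
    """Hypothetical rule: left-hand imagery desynchronizes the right hemisphere
    (C4), so alpha power at C3 clearly above C4 is read as 'left', and vice versa."""
    if p_c3 > ratio * p_c4:
        return "left"
    if p_c4 > ratio * p_c3:
        return "right"
    return None                                  # no clear decision: keep flying straight


if __name__ == "__main__":
    fs, n = 250.0, 250                           # 1 s window at an assumed sampling rate
    c3 = [math.sin(2 * math.pi * 10 * t / fs) for t in range(n)]        # strong 10 Hz rhythm
    c4 = [0.2 * math.sin(2 * math.pi * 10 * t / fs) for t in range(n)]  # attenuated rhythm
    print(power_to_command(band_power(c3, fs), band_power(c4, fs)))     # -> left
```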

Fig. 23. A simplified diagram of a BCI system connected to a Computer Machine Interface System (CMI) [The CMI translates the classifier outputs into commands which are sent to the application (drone)]

This scenario helps explain the mechanisms that underlie the online classification problem in a BCI system. The first aspect to consider is how the classifier labels the incoming signal. In Fig. 23, this behavior is illustrated by the continuous output c generated by the classifier sub-block. In the scenario depicted in Fig. 23, where only EEG data from left-hand imagery movement are being acquired, the expected label at every time instant is c = -1. Ideally, since only data from one class are supplied to the classifier, only output labels c = -1 would be generated. However, since the classifier is not an ideal model, misclassifications can occur. As will be seen shortly, the rate at which the classifier misclassifies a given EEG segment depends directly on the validation accuracy of the model. Taking this effect into account, the generated labels c must then be converted into a command that the application (drone) can understand and execute. This translation is performed by the Computer Machine Interface (CMI) system shown in Fig. 23, which maps the classifier outputs into flight commands for the drone.
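One hedged way to build such a translator, tolerant of occasional misclassifications, is a sliding-window vote: a turn command is issued only when a clear majority of recent classifier labels agree. The window length, the 70% threshold, the label convention (c = -1 for left-hand, c = +1 for right-hand imagery) and the class name are assumptions; the source does not describe the CMI's internal logic.

```python
from collections import deque


class CommandTranslator:
    """Hypothetical CMI translator: emits a turn command only when a clear
    majority of the last `window` classifier labels (c in {-1, +1}) agree."""

    def __init__(self, window: int = 10, threshold: float = 0.7):
        self.labels = deque(maxlen=window)
        self.threshold = threshold

    def push(self, c: int):
        if c not in (-1, 1):
            raise ValueError("classifier labels must be -1 or +1")
        self.labels.append(c)
        if len(self.labels) < self.labels.maxlen:
            return None                          # not enough evidence yet
        frac_left = self.labels.count(-1) / len(self.labels)
        if frac_left >= self.threshold:
            self.labels.clear()                  # avoid re-triggering on the same evidence
            return "turn_left"
        if 1 - frac_left >= self.threshold:
            self.labels.clear()
            return "turn_right"
        return None


if __name__ == "__main__":
    cmi = CommandTranslator(window=10, threshold=0.7)
    labels = [-1, -1, 1, -1, -1, -1, 1, -1, -1, -1]   # mostly left-hand imagery, two errors
    print([cmi.push(c) for c in labels][-1])            # -> turn_left
```

A longer window or higher threshold trades responsiveness for fewer false commands, which is the same trade-off the text links to the classifier's validation accuracy.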

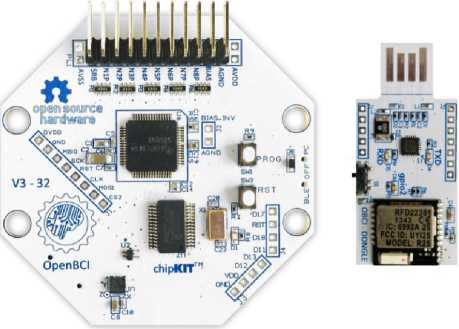

The test assessed the reliability of the communication between the developed platform and the OpenBCI amplifier (see Fig. 24).

Fig. 24. Top view of the OpenBCI board

[The EEG electrodes are connected to the pin headers, and the signal is transmitted via Bluetooth to a computer using the USB dongle shown on the right-hand side of the picture.]

To get EEG data from the board, the manufacturer provides a Python library with high-level functions. At each sampling interval, the dongle outputs an array with the EEG samples of each enabled channel. This array is then fed to the next stage, which stores and processes the acquired data.
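The storage stage can be sketched as a rolling buffer that receives one per-channel array per sampling interval and exposes the most recent seconds of data for processing. The class name, the 2 s buffer length and the 125 Hz demo rate are assumptions, and the manufacturer's read call is deliberately not shown, since its exact API is not given in the text.

```python
from collections import deque


class EEGBuffer:
    """Rolling buffer holding the most recent `seconds` of multichannel EEG.

    push() takes one array per sampling interval (one value per enabled channel),
    which is how the text describes the dongle output. The library call that
    produces these arrays is not shown here.
    """

    def __init__(self, n_channels: int, fs: float, seconds: float = 2.0):
        self.n_channels = n_channels
        self.samples = deque(maxlen=int(round(fs * seconds)))  # oldest samples drop out

    def push(self, sample) -> None:
        if len(sample) != self.n_channels:
            raise ValueError(f"expected {self.n_channels} channels, got {len(sample)}")
        self.samples.append(tuple(sample))

    def window(self):
        """Return the buffered data as channels x samples (list of lists)."""
        return [list(ch) for ch in zip(*self.samples)]


if __name__ == "__main__":
    buf = EEGBuffer(n_channels=16, fs=125.0, seconds=2.0)
    for i in range(300):                     # stand-in for samples read from the board
        buf.push([float(i)] * 16)
    data = buf.window()
    print(len(data), len(data[0]))           # -> 16 250
```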

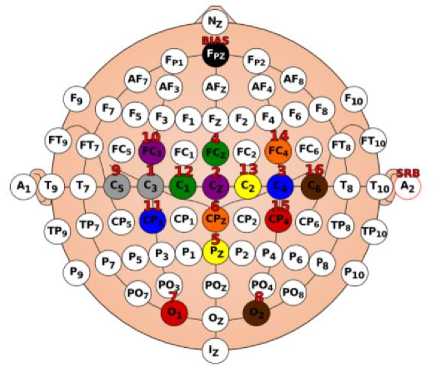

For this experiment, a volunteer sat comfortably in a chair in front of a monitor displaying the graphical interface of the designed platform. The EEG electrodes were positioned according to the 10-20 system. Since the OpenBCI amplifier allows at most 16 electrodes to be acquired simultaneously, the 10-20 locations shown in Fig. 25 were selected based on the brain characteristics and µ-wave properties described above.

Fig. 25. Electrode positions according to the 10-20 system for signal acquisition with OpenBCI [The locations were chosen based on the physical characteristics of the brain and the generation properties of µ-waves]

These locations in general provide better results for the detection of µ -waves. After obtaining the data following the procedure discussed above, the segments of EEG data were extracted around the marked events to form the training epochs.
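Cutting training epochs around marked events can be sketched as follows. The data layout (one list per channel), the event format and the default 0 to 4 s window are assumptions; the text does not state the epoch length it used.

```python
def extract_epochs(data, events, fs, tmin=0.0, tmax=4.0):
    """Cut fixed-length epochs out of a continuous multichannel recording.

    data       : channels x samples (list of per-channel sample lists)
    events     : (sample_index, label) marks, e.g. (1200, "left")
    fs         : sampling rate in Hz
    tmin, tmax : epoch limits in seconds relative to each mark (assumed defaults)
    Returns a list of (label, epoch) pairs, each epoch channels x samples.
    Epochs that would run past either end of the recording are skipped.
    """
    start_off, stop_off = int(round(tmin * fs)), int(round(tmax * fs))
    n_samples = len(data[0])
    epochs = []
    for idx, label in events:
        a, b = idx + start_off, idx + stop_off
        if a < 0 or b > n_samples:
            continue
        epochs.append((label, [ch[a:b] for ch in data]))
    return epochs


if __name__ == "__main__":
    fs = 125.0
    recording = [[0.0] * 2000 for _ in range(2)]   # 2 channels, 16 s of placeholder data
    marks = [(250, "left"), (875, "right"), (1900, "left")]
    epochs = extract_epochs(recording, marks, fs)
    print([(label, len(ep[0])) for label, ep in epochs])   # -> [('left', 500), ('right', 500)]
```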

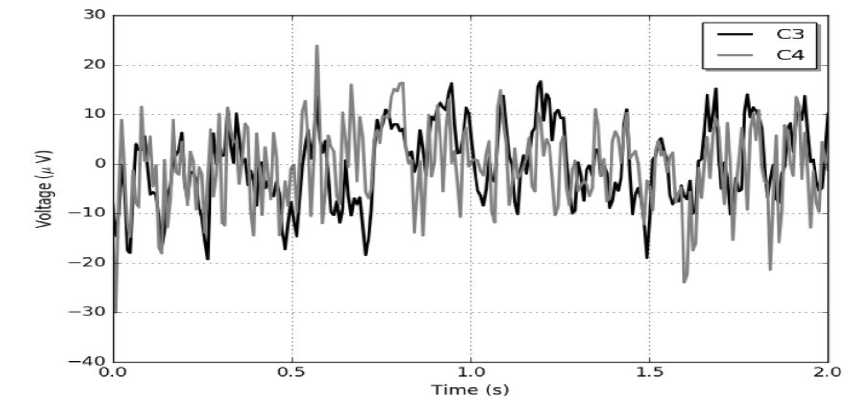

In Fig. 26, the EEG signals of a left-hand movement epoch, acquired from channels C3 and C4, are depicted as a function of time.

Fig. 26. EEG signals acquired using the platform and the OpenBCI amplifier

[The curves show the voltage signal as a function of time acquired at electrode C3(black) and C4(gray).]

When the user performs a left-hand movement, the neurons in the right hemisphere of the motor cortex (for instance, under channel C4) are desynchronized; therefore, the alpha-band energy, i.e. the µ-waves, is expected to have a lower amplitude there than in the left hemisphere (channel C3). However, the displayed time-domain curves do not show these discriminative characteristics. To highlight them, it is common to analyze the average spectrum of the measured signals for each class [39].
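The class-averaged spectrum can be sketched as below: compute the magnitude spectrum of each single-channel epoch, average the magnitudes across epochs, and locate the largest value in the 8 to 13 Hz band. The direct DFT and the function names are illustrative choices, not the authors' implementation. Magnitudes are averaged rather than complex values, because µ-rhythm phase varies from epoch to epoch and complex averaging would cancel it out.

```python
import math


def magnitude_spectrum(x):
    """Single-sided DFT magnitudes |X[k]|, k = 0..N/2 (direct DFT)."""
    n = len(x)
    mags = []
    for k in range(n // 2 + 1):
        re = sum(v * math.cos(2 * math.pi * k * t / n) for t, v in enumerate(x))
        im = sum(v * math.sin(2 * math.pi * k * t / n) for t, v in enumerate(x))
        mags.append(math.hypot(re, im))
    return mags


def average_spectrum(epochs, fs):
    """Average the magnitude spectra of equal-length single-channel epochs."""
    n = len(epochs[0])
    spectra = [magnitude_spectrum(e) for e in epochs]
    freqs = [k * fs / n for k in range(n // 2 + 1)]
    avg = [sum(col) / len(spectra) for col in zip(*spectra)]
    return freqs, avg


def alpha_peak(freqs, avg, f_lo=8.0, f_hi=13.0):
    """Frequency of the largest averaged magnitude inside the alpha/mu band."""
    return max((a, f) for f, a in zip(freqs, avg) if f_lo <= f <= f_hi)[1]


if __name__ == "__main__":
    fs = 100.0
    # Three synthetic C3 epochs with a 10 Hz rhythm at different phases.
    epochs = [[math.sin(2 * math.pi * 10 * t / fs + ph) for t in range(100)]
              for ph in (0.0, 1.0, 2.0)]
    freqs, avg = average_spectrum(epochs, fs)
    print(alpha_peak(freqs, avg))   # -> 10.0
```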

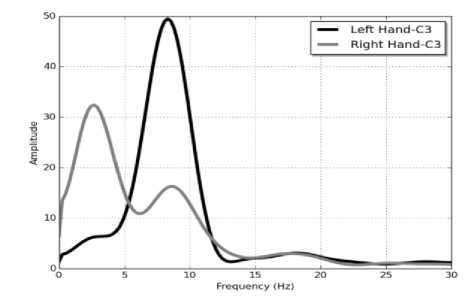

Fig. 27 displays the average fast Fourier transforms (FFT) of the epochs from the left- and right-movement tasks.

Fig. 27. Average Fast Fourier Transform of channel C3 of Left movement (black) and Right movement (gray) epochs

[The curves were obtained following the procedure described in the text. Since the analyzed channel is in the left hemisphere, its energy in the α band is expected to be higher when the right-side limbs are in a relaxed state. This can be seen in the amplitude peak at around 10 Hz in the black curve.]

Remark. The average FFT in Fig. 27 shows the differences between the brain signals when the user performs a left- or right-hand motor-imagery task. The amplitude peak at around 10 Hz when the user is engaged in left-hand motor imagery is consistent with the description of µ-waves. When the user performs a right-hand imagery task, the neurons of the left hemisphere desynchronize, and as a result the power concentrated in this band in channel C3 is significantly attenuated. This effect can be seen in the curves of Fig. 27.

BCI and quantum computing are currently among the world's leading frontier research topics, and their combined application is attracting growing attention. Therefore, this textbook reviews the development of quantum computing and BCI in detail.

In recent years, researchers have paid more attention to hybrid applications of quantum computing and brain-computer interfaces. With the development of neurotechnology and artificial intelligence, research on brain-computer interfaces has intensified, and applying brain-computer interface technology to new fields has gradually become a focus of research. While the field of BCI has evolved rapidly over the past decades, the core technologies and innovative ideas behind seemingly unrelated BCI systems are rarely summarized from the perspective of integration with quantum computing. This textbook provides a detailed report on the hybrid applications of quantum computing and BCI, identifies current problems, and suggests directions for research on hybrid applications.

The hybrid application of quantum computing and brain-computer interface

We describe the hybrid application of quantum computing and brain-computer interfaces, starting from the main classes of quantum computing algorithms and brain-computer interfaces, continuing with the intelligent applications of each, and ending with their mixed application. After nearly 40 years of development, quantum information, quantum computing and quantum simulation have made great progress, but in recent years the development of the field as a whole has taken on different characteristics.

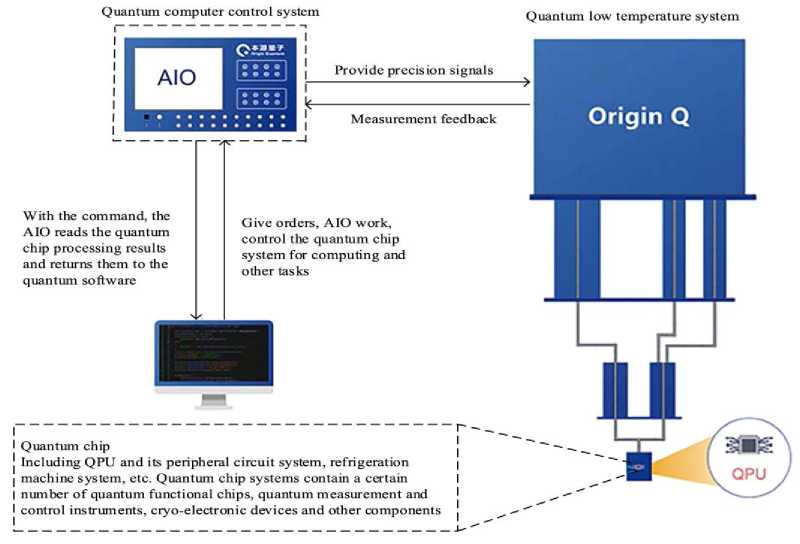

Figure 28 shows the structure of quantum computation. Research in quantum computing and quantum simulation increasingly focuses on increasing the number of qubits while maintaining the fidelity of quantum operations. Some recent experiments involve dozens of qubits, approaching the limit of what classical computers can simulate, and may realize the so-called quantum advantage (quantum supremacy).
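A back-of-the-envelope calculation shows why "dozens" of qubits approach the limit of classical simulation: a brute-force state-vector simulator must store all $2^n$ complex amplitudes, at 16 bytes each in double precision. This only bounds the naive method; specialized techniques such as tensor networks can simulate some circuits with far less memory.

```python
def statevector_bytes(n_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Memory needed to hold a full n-qubit state vector: 2**n complex amplitudes,
    16 bytes each in double-precision complex arithmetic."""
    return (2 ** n_qubits) * bytes_per_amplitude


if __name__ == "__main__":
    for n in (30, 40, 50):
        print(f"{n} qubits: {statevector_bytes(n) / 2**30:,.0f} GiB")
```

This prints 16 GiB for 30 qubits, 16,384 GiB for 40 and 16,777,216 GiB (16 PiB) for 50: each added qubit doubles the memory.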

Fig. 28. Quantum computing structure diagram

On the other hand, there is still a considerable gap between each experimental platform and the thousands of qubits required for practical quantum computing, and there is still room for significant improvement in the fidelity of quantum logic gates relative to the fault-tolerance threshold of quantum computing. In 2000, DiVincenzo summarized five requirements that a physical system must meet to implement quantum computing; one of the most important is that the system should have scalable qubits. Quantum computing and quantum information are widely regarded as a frontier of contemporary science with broad development prospects. The following part mainly describes two aspects: quantum algorithms and applications of quantum computing. Since 1995, quantum computing has gradually become one of the most active research frontiers in the world, and a variety of schemes for realizing quantum computers have been proposed.

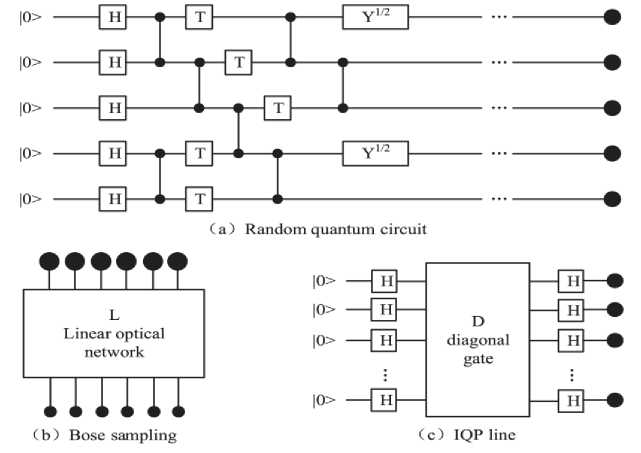

Figure 29 shows three different quantum circuits used in the study of quantum computing.

Fig. 29 . Three quantum circuits of the two

[This summarizes the problems and shortcomings of the mixed application of quantum computing and brain-computer interfaces at the current stage of social development. This paper also draws conclusions and outlines prospects for the future combination of quantum computing and brain-computer interface technology.]

Example: Quantum algorithms. As an emerging computing paradigm, quantum computing is expected to solve problems that are difficult for classical computers in fields such as combinatorial optimization, quantum chemistry, information security and AI [11-13]. Both the hardware and software of quantum computing continue to develop rapidly, but universal quantum computing is not expected to be achieved within the next few years [13]. How to use quantum algorithms to solve practical problems in the short term has therefore become a research hot spot in the field. Quantum algorithms are algorithms that run on quantum computers. For some problems that are hard for classical algorithms, quantum algorithms can find solutions in a reasonable time; others accelerate the solution under certain conditions, which demonstrates the advantages of quantum algorithms. In quantum computing, a quantum algorithm is an algorithm that runs on a realistic model of quantum computation, the most commonly used being the quantum circuit model. A classical (non-quantum) algorithm is a finite sequence of instructions, or step-by-step problem-solving procedure, in which each step or instruction can be executed on a classical computer.
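To illustrate the circuit model and a speedup "under certain conditions", the sketch below simulates Deutsch's algorithm, the smallest textbook example (swapped in here; it is not discussed in the source). It decides whether a one-bit function f is constant or balanced with a single oracle query, whereas a classical algorithm needs two. The plain-list state-vector simulation and the function names are illustrative choices.

```python
import math

S = 1 / math.sqrt(2)
H = [[S, S], [S, -S]]


def apply_1q(state, gate, qubit):
    """Apply a 2x2 gate to one qubit of a 2-qubit state vector.

    Basis index i = 2*x + y, where x is qubit 0 (query) and y is qubit 1 (ancilla).
    """
    shift = 1 - qubit                       # bit position of the target qubit in i
    new = [0.0] * 4
    for i, amp in enumerate(state):
        b = (i >> shift) & 1
        for out in (0, 1):
            j = i if out == b else i ^ (1 << shift)
            new[j] += gate[out][b] * amp
    return new


def oracle(state, f):
    """U_f |x, y> = |x, y XOR f(x)>."""
    new = [0.0] * 4
    for i, amp in enumerate(state):
        x, y = i >> 1, i & 1
        new[(x << 1) | (y ^ f(x))] += amp
    return new


def deutsch(f):
    """Decide with a single oracle call whether f: {0,1} -> {0,1} is constant or balanced."""
    state = [0.0, 1.0, 0.0, 0.0]            # |x=0, y=1>
    state = apply_1q(apply_1q(state, H, 0), H, 1)
    state = oracle(state, f)
    state = apply_1q(state, H, 0)
    p_x1 = state[2] ** 2 + state[3] ** 2    # probability of measuring x = 1
    return "balanced" if p_x1 > 0.5 else "constant"


if __name__ == "__main__":
    for name, f in [("f(x)=0", lambda x: 0), ("f(x)=1", lambda x: 1),
                    ("f(x)=x", lambda x: x), ("f(x)=1-x", lambda x: 1 - x)]:
        print(name, deutsch(f))
```

The ancilla prepared in $(|0\rangle - |1\rangle)/\sqrt{2}$ turns the oracle into a phase $(-1)^{f(x)}$, and the final Hadamard converts the relative phase into a definite measurement outcome.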

The innovation pathways correspond to potential future applications of quantum technologies (Q1-Q5) within the different domains (Fig. 30) of manufacturing systems (D1-D7).

[Fig. 30 content. Manufacturing domains: D1 Value Networks; D2 Business Models; D3 Product Life Cycle; D4 Fabrication and Assembly; D6 Organization; D7 Human. Fields of quantum technologies: Q1 Computing and AI; Q2 Communication; Q3 Simulation; Q4 Imaging. Applications:
1) Optimization of supply chains and logistics, including vehicle routing and fleet management
2) Securing communication between stakeholders and between manufacturing assets, and tracking of assets
3) Enablement of data-driven business models, including match-making, auctioning and exchange of services
4) Optimization of production schedules, operations, robot movements and machining parameters
5) Identification of end-of-life and industrial symbiosis opportunities
6) Prediction, design and testing of material, product and production behaviors and characteristics
7) Support of health and competency building]

Fig. 30. Links between domains, application, and technologies [37]

The applications identified based on the literature review were subsequently clustered according to the utilized quantum technology(ies) (Q1-Q5) and to their broader field of application in manufacturing systems. The relevant domain of manufacturing systems (D1-D7) was then determined based on the specific applications. Subsequently, novel approaches to potential future applications of quantum technologies in manufacturing systems were ideated. This means that the experts worked out a consensus on the allocation of manufacturing domain applications and description of novel ideated approaches (IDAP) in the course of three moderated iteration cycles.

Example: Quantum technologies. Quantum technologies exploit the principles of quantum mechanics: superposition, entanglement and reversibility. In quantum mechanics, a system can be attributed a definite state only once it has been measured. Before measurement, the system remains in an indeterminate state characterized, among other aspects, by a superposition of states. Entanglement means that the states of two systems are not separable: for a maximally entangled pair measured in the same basis, the measured state of one system is perfectly correlated with the state of the other. All quantum logic operations must be reversible (unitary); an irreversible operation would entail a loss of information, since it would amount to performing a measurement of the state. Quantum technologies (Q1-Q5) are categorized here with two modifications: quantum computing and AI are summarized jointly in Q1 because of their strong interdependence, and quantum simulation (Q3) is added as a separate technology because of its relevance for manufacturing systems.
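The three principles can be checked on a two-qubit toy model. The sketch below prepares the Bell state $(|00\rangle + |11\rangle)/\sqrt{2}$ with a Hadamard and a CNOT (superposition, then entanglement), samples measurements to show that the two outcomes always agree, and undoes the circuit by applying the gates in reverse order (reversibility). The plain-list simulation and helper names are illustrative assumptions, not part of the source.

```python
import math
import random

S = 1 / math.sqrt(2)


def hadamard_q0(state):
    """Hadamard on qubit 0 of a 2-qubit state (basis |00>, |01>, |10>, |11>)."""
    a00, a01, a10, a11 = state
    return [S * (a00 + a10), S * (a01 + a11), S * (a00 - a10), S * (a01 - a11)]


def cnot_01(state):
    """CNOT with qubit 0 as control: swaps the |10> and |11> amplitudes."""
    a00, a01, a10, a11 = state
    return [a00, a01, a11, a10]


def bell_state():
    """(|00> + |11>)/sqrt(2), prepared by applying H and then CNOT to |00>."""
    return cnot_01(hadamard_q0([1.0, 0.0, 0.0, 0.0]))


def uncompute(state):
    """Both gates are their own inverses, so applying them in reverse order undoes the circuit."""
    return hadamard_q0(cnot_01(state))


def measure(state, rng):
    """Sample a computational-basis outcome (q0, q1) with probability |amplitude|^2."""
    r, acc = rng.random(), 0.0
    for i, amp in enumerate(state):
        acc += abs(amp) ** 2
        if r < acc:
            return i >> 1, i & 1
    return 1, 1  # last basis state |11>, reached only through floating-point rounding


if __name__ == "__main__":
    rng = random.Random(7)
    counts = {}
    for _ in range(1000):
        outcome = measure(bell_state(), rng)
        counts[outcome] = counts.get(outcome, 0) + 1
    print(counts)                      # only (0, 0) and (1, 1) appear
    print(uncompute(bell_state()))     # back to |00>, up to rounding
```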

Quantum computers and AI (Q1) use qubits, the quantum mechanical counterpart of the bit, to perform calculations. The number of qubits is commonly used as a measure of computational power; IBM, for example, has announced a 127-qubit quantum processor. Quantum computation is based on the principle of reversible computation, with the following building blocks: quantum gates, quantum memories, quantum CPUs, quantum control and measurement, and quantum error-correction tools. Manufacturers of quantum computers usually follow one of two hardware concepts: universal quantum computers or less complex, more task-focused quantum annealers. Quantum computing will likely provide highly efficient means for solving complex but rather specific mathematical problems, e.g., certain partial differential equations, that are very hard or practically infeasible for conventional computers. A quantum algorithm is a finite sequence of operations that solves such a problem when executed on a quantum computer.
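To illustrate the statement that a quantum algorithm is a finite sequence of operations, the following minimal sketch simulates Deutsch's algorithm, a standard textbook example, with a plain state vector in Python. It decides with a single oracle call whether a one-bit function f is constant or balanced, whereas a classical procedure needs two evaluations. The simulation approach and all function names (`kron`, `apply`, `oracle`, `deutsch`) are illustrative assumptions, not an interface of any quantum hardware vendor.

```python
import math

S = 1 / math.sqrt(2)
H = [[S, S], [S, -S]]   # Hadamard gate
I2 = [[1, 0], [0, 1]]   # identity on one qubit

def kron(a, b):
    """Kronecker (tensor) product of two matrices given as nested lists."""
    return [[a[i][j] * b[k][l] for j in range(len(a[0])) for l in range(len(b[0]))]
            for i in range(len(a)) for k in range(len(b))]

def apply(matrix, state):
    """Multiply a state vector by a gate matrix."""
    return [sum(m * s for m, s in zip(row, state)) for row in matrix]

def oracle(f):
    """Permutation matrix U_f|x, y> = |x, y XOR f(x)>, basis index 2*x + y."""
    u = [[0] * 4 for _ in range(4)]
    for x in (0, 1):
        for y in (0, 1):
            u[2 * x + (y ^ f(x))][2 * x + y] = 1
    return u

def deutsch(f):
    """Return 'constant' or 'balanced' using a single call to the oracle U_f."""
    state = [0, 1, 0, 0]                  # |x=0, y=1>
    state = apply(kron(H, H), state)      # superpose both inputs
    state = apply(oracle(f), state)       # one oracle query (phase kickback)
    state = apply(kron(H, I2), state)     # interfere the two branches
    p1 = abs(state[2]) ** 2 + abs(state[3]) ** 2   # P(first qubit = 1)
    return "balanced" if p1 > 0.5 else "constant"

for name, f in [("f(x)=0", lambda x: 0), ("f(x)=1", lambda x: 1),
                ("f(x)=x", lambda x: x), ("f(x)=1-x", lambda x: 1 - x)]:
    print(name, deutsch(f))   # constant, constant, balanced, balanced
```

Every step is a reversible gate (a unitary matrix); only the final read-out of the first qubit is a measurement.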

Quantum AI often refers to the fusion of quantum computing and machine learning algorithms, also called Quantum Machine Learning (QML). Quantum AI is expected to identify patterns in large data sets more efficiently, cope more easily with corrupt data, and speed up the training of large neural networks. Fraunhofer is currently developing quantum algorithms for solving combinatorial optimization problems that are fundamental to machine learning and AI.

Entanglement enables secure quantum communication (Q2). Secure quantum keys are created with entangled photons, and any attempt to intercept the photons during key distribution disturbs the entanglement and therefore becomes detectable. Entanglement thus provides a physically grounded security feature against eavesdroppers, which can be employed jointly with Industry 4.0 technologies. Quantum communication is currently the subject of intense research, with studies ranging from physical foundations through protocols to test-bed setups. Advances in this area are now mature enough for researchers to address the actual challenges of future large-scale use. The H2020 project UNIQORN, for example, targets quantum communication with the goal of decreasing costs by 90% and miniaturizing meter-sized devices into millimeter-sized chips.

Analyzing complex quantum systems, such as chemical reactions or spintronic devices, requires sufficient understanding to model the quantum interactions between system components effectively. This can be achieved by simulating quantum systems with other systems based on quantum mechanics, for example quantum computers.
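The detection principle described for Q2 can be illustrated with a toy Monte Carlo model of an entanglement-based protocol in the style of BBM92 (our choice of protocol; the text does not name one). Alice and Bob measure their halves of |Φ+⟩ pairs in randomly chosen Z or X bases and keep only the rounds with matching bases. Without interception their sifted bits agree; an intercept-resend eavesdropper who measures Bob's photon in a random basis raises the quantum bit error rate (QBER) to about 25%. The model is classical bookkeeping of the known measurement statistics, not a physical simulation, and `bbm92_qber` is a hypothetical helper.

```python
import random

def bbm92_qber(n_pairs, eavesdrop=False, seed=0):
    """Toy BBM92-style run on |Phi+> pairs; returns (sifted_length, QBER)."""
    rng = random.Random(seed)
    sifted = errors = 0
    for _ in range(n_pairs):
        a_basis, b_basis = rng.choice("ZX"), rng.choice("ZX")
        if eavesdrop:
            # Intercept-resend: Eve measures Bob's photon in a random basis, which
            # collapses both photons onto the eigenstate she observed.
            e_basis, e_bit = rng.choice("ZX"), rng.randint(0, 1)
            a_bit = e_bit if a_basis == e_basis else rng.randint(0, 1)
            b_bit = e_bit if b_basis == e_basis else rng.randint(0, 1)
        else:
            # |Phi+> yields identical outcomes whenever both bases agree (Z or X).
            a_bit = rng.randint(0, 1)
            b_bit = a_bit if a_basis == b_basis else rng.randint(0, 1)
        if a_basis == b_basis:        # sifting: keep matching-basis rounds only
            sifted += 1
            errors += a_bit != b_bit
    return sifted, errors / sifted

print(bbm92_qber(20000))                  # QBER is 0.0 without an eavesdropper
print(bbm92_qber(20000, eavesdrop=True))  # QBER close to 0.25
```

In practice the parties compare a random subset of sifted bits; a QBER well above the expected channel noise reveals the interception.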

Quantum simulators (Q3) allow problems in physics, chemistry, medicine, electrical engineering, and materials science to be approached, and promising industrial applications also arise from solving routing and scheduling problems.
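Routing and scheduling problems are commonly handed to quantum annealers and analog quantum simulators by encoding them as an Ising (or QUBO) energy whose ground state is the optimal solution. As an assumed, simplified example not drawn from the source, balancing hypothetical job durations a_i across two machines becomes the number-partitioning Hamiltonian H = (Σ a_i s_i)², where s_i = +1 or -1 marks the machine a job is assigned to. At this toy size, exhaustive search stands in for the quantum device.

```python
import itertools

def partition_energy(durations, spins):
    """Ising energy H = (sum_i a_i * s_i)^2; zero when both machines carry equal load."""
    return sum(a * s for a, s in zip(durations, spins)) ** 2

def ground_state(durations):
    """Exhaustive search over all 2^n spin configurations (stand-in for an annealer)."""
    return min(
        (partition_energy(durations, spins), spins)
        for spins in itertools.product((-1, 1), repeat=len(durations))
    )

durations = [4, 7, 2, 5, 8, 6]        # hypothetical job durations (hours)
energy, spins = ground_state(durations)
machine_a = [a for a, s in zip(durations, spins) if s == 1]
machine_b = [a for a, s in zip(durations, spins) if s == -1]
print(energy, machine_a, machine_b)    # energy 0: each machine gets 16 hours
```

Exhaustive search grows as 2^n, which is why such encodings are passed to annealers or other heuristics for realistic problem sizes.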

Quantum imaging (Q4) uses entangled photon pairs to cover the full optical spectrum: an image can be created even if the photon reaching the camera is not the one sent to the object but its twin. The photon probing the object can therefore be at an invisible wavelength, while its twin is still detected by a camera in the visible spectrum.

Quantum sensors (Q5) manipulate the quantum state of atoms and photons so that they can serve as measurement probes. Such sensors are highly sensitive and are therefore suited to ultra-precise devices that measure the smallest features of physical systems. Sensors for industrial applications can, for example, use quantum diamond magnetometers, which are sensitive enough to create 3D images of individual molecules. TRUMPF and SICK have recently developed their first quantum optical sensors intended for mass production.
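The measurement principle of a diamond magnetometer based on nitrogen-vacancy (NV) centers can be summarized with a simplified first-order model (an assumption; the text does not specify the sensor physics). The NV spin shows two optically detected magnetic resonance (ODMR) lines at f± = D ± γB, where D ≈ 2.87 GHz is the zero-field splitting, γ ≈ 28 GHz/T is the electron gyromagnetic ratio, and B is the field component along the NV axis, so measuring the line splitting yields B. Strain, hyperfine structure, and off-axis fields are ignored, and the function names are hypothetical.

```python
D_GHZ = 2.87            # NV zero-field splitting, approx. 2.87 GHz
GAMMA_GHZ_PER_T = 28.0  # electron-spin gyromagnetic ratio, approx. 28 GHz/T

def odmr_resonances(b_parallel_t):
    """Resonance frequencies (f-, f+) in GHz for a field B (tesla) along the NV axis."""
    shift = GAMMA_GHZ_PER_T * b_parallel_t
    return D_GHZ - shift, D_GHZ + shift

def field_from_resonances(f_minus_ghz, f_plus_ghz):
    """Invert the first-order Zeeman model: B = (f+ - f-) / (2 * gamma)."""
    return (f_plus_ghz - f_minus_ghz) / (2 * GAMMA_GHZ_PER_T)

f_minus, f_plus = odmr_resonances(1e-3)        # 1 mT field along the NV axis
print(f"{f_minus:.3f} GHz, {f_plus:.3f} GHz")  # 2.842 GHz, 2.898 GHz
print(field_from_resonances(f_minus, f_plus))  # recovers approx. 1e-3 T
```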

Example: Manufacturing Systems. Manufacturing covers the entirety of interrelated value creation measures directly connected with the processing of resources. Manufacturing systems can be interpreted from a technical as well as a management perspective. As a technical system, manufacturing focuses on the transformation of shape and properties through manufacturing processes. This means that raw materials are transformed into parts, components, assemblies, or products in various fabrication and assembly processes, i.e., primary shaping, forming, cutting, changing material properties, coating, joining, handling, testing and inspection, adjusting, and other auxiliary operations, while utilizing energy and information. Manufacturing as a management system is characterized by connected value networks throughout the different phases of product life cycles, i.e., the beginning, middle, and end of product life.

Material flow is facilitated by the individual business models of contributing stakeholders, which cooperate, collaborate, or compete along these value networks. Moreover, the value networks consist of interlinked value creation modules at different levels of aggregation (e.g., cell, line, or factory level), which in turn generate value by combining the respective value creation factors: organization, processes, equipment, product, and human resources. In the following analysis of potential innovation pathways for quantum technologies in manufacturing, the manufacturing system and its relevant domains are considered from the management perspective, which also includes the technical aspects of manufacturing processes. Hence, the relevant manufacturing domains (D1-D7) are structured in accordance with [1].

Figure 30 shows a conceptual framework summarizing the identified focal links between manufacturing domains, fields of application, and quantum technologies. In summary, the following major findings can be listed [41]:

• Quantum computation and AI will potentially be used for optimizing value networks, business models, and services, as well as for organizing manufacturing systems.

• Quantum simulation, imaging, and sensors may contribute to the design of new products and to enhancing their life cycles, and will also prove useful in the manufacturing process, human, and equipment domains.

• A particularly important area of potential applications pertains to the optimization of design, planning, and scheduling tasks in manufacturing systems, the improvement of quality control processes, the development of new materials, and the simulation of material and product properties and behavior.

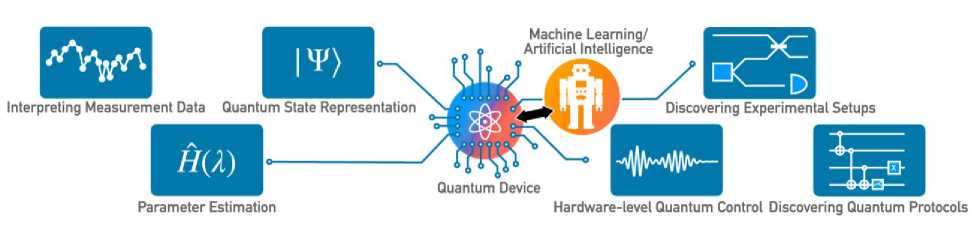

Fig. 31. Overview of tasks in the area of quantum technologies that machine learning and artificial intelligence can help solve better, as discussed in the perspective article [39]

The main limitation of the presented study stems from the limited number of sources analyzed; hence, it might not represent a holistic perspective on potential application fields. Furthermore, the list of potential applications and the allocation of particular technologies to the respective domains reflect the opinions of the contributing experts. Some application fields could fit into multiple domains or cover several domains at once; in such cases, the experts selected the most suitable domain, avoiding duplications wherever possible. The study nonetheless indicates the future relevance of quantum technologies in manufacturing domains and provides a preliminary qualitative overview of the potential application fields.

The fields of machine learning and quantum technologies have much in common: both started out with an ambitious vision of applications (in the 1950s and 1980s, respectively), went through a series of challenges, and are currently extremely active research topics. Of the two, machine learning has firmly taken hold beyond academia and beyond prototypes, triggering a revolution in technological applications during the past decade. The perspective article [39] focuses on how techniques of classical machine learning (ML) and artificial intelligence (AI) hold great promise for improving quantum technologies in the future. A wide range of ideas has been developed at this interface between the two fields during the past five years (see Fig. 31).

Example: Quantum IoT. IoT has evolved steadily over the last decade. The advancement of IoT technology has laid the groundwork for innovative applications in a wide variety of industrial domains such as agriculture, logistics, and healthcare, and IoT applications continue to grow rapidly. An IoT system rests on four fundamental components:

i. Sensors. ii. Connectivity. iii. Data processing. iv. User interface.

An IoT system works by combining these four fundamentals. Making IoT a ubiquitous communication technology requires many new components and technologies to be added to the system, and taking it to the next level requires ensuring its security, stable connectivity, and network robustness. Quantum technology is expected to take the IoT platform to this new level.

Quantum computing can improve the computational and data-processing capability of IoT sensors and devices. Minimizing the IoT sensor space is a dynamic problem derived from the classical sensor placement problem. Various optimization approaches have been applied to it; however, recent advances in quantum computing-inspired optimization (QCiO) have opened new paths for obtaining optimal behavior of the network system.
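The text does not describe the internals of QCiO. As one assumed representative of quantum-inspired optimization, the sketch below applies a simplified quantum-inspired evolutionary algorithm (QIEA) to a hypothetical sensor-placement instance: each candidate site is a "Q-bit" angle θ whose observation yields 1 with probability sin²θ, and the angles are rotated toward the best placement found so far. The cost is the number of sensors plus a penalty for every uncovered target point. The instance, parameters, and rotation rule are illustrative choices, and the search is heuristic, so the result is not guaranteed to be optimal.

```python
import math
import random

# Hypothetical instance: candidate sensor site -> set of target points it covers.
CANDIDATES = [{0, 1, 2}, {3, 4, 5}, {0, 3}, {1, 4}, {2, 5}, {0, 1}]
TARGETS = set(range(6))
PENALTY = 10  # cost per uncovered target; larger than any possible sensor count

def cost(bits):
    """Number of sensors placed plus a penalty for every uncovered target."""
    chosen = [CANDIDATES[i] for i, b in enumerate(bits) if b]
    covered = set().union(*chosen)
    return len(chosen) + PENALTY * len(TARGETS - covered)

def qiea(n_bits, pop_size=10, generations=60, seed=1):
    """Simplified quantum-inspired evolutionary algorithm (Q-bit angles + rotation)."""
    rng = random.Random(seed)
    delta = 0.05 * math.pi                      # rotation step per update
    lo, hi = 0.05 * math.pi, 0.45 * math.pi     # keep P(1) away from 0 and 1
    # Each Q-bit is an angle theta with P(bit = 1) = sin(theta)^2, starting at 1/2.
    pop = [[math.pi / 4] * n_bits for _ in range(pop_size)]
    best_bits, best_cost = None, math.inf
    for _ in range(generations):
        for thetas in pop:
            # "Observe" the Q-bit individual to obtain a classical bit string.
            bits = [1 if rng.random() < math.sin(t) ** 2 else 0 for t in thetas]
            c = cost(bits)
            if c < best_cost:
                best_bits, best_cost = bits, c
            # Rotate each disagreeing Q-bit toward the best solution found so far.
            for j in range(n_bits):
                if bits[j] != best_bits[j]:
                    step = delta if best_bits[j] == 1 else -delta
                    thetas[j] = min(max(thetas[j] + step, lo), hi)
    return best_bits, best_cost

best_bits, best_cost = qiea(len(CANDIDATES))
chosen_sites = [i for i, b in enumerate(best_bits) if b]
print(chosen_sites, best_cost)
```

Keeping θ inside [0.05π, 0.45π] preserves a small probability of flipping every bit, which plays the role of mutation in a classical genetic algorithm.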

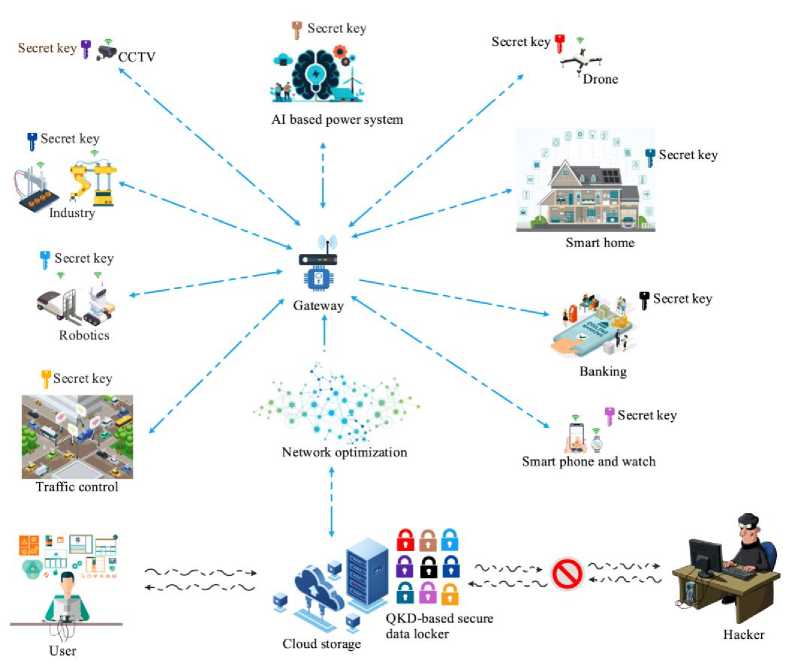

Figure 32 shows a QKD-based QCiO IoT network system.

Fig. 32. IoT application for a QKD-based QCiO network system

In this network system, a user first issues an input command to the device, and the command is passed to the server. The server then processes the data under a QKD protocol and optimizes it with the help of QCiO. Next, the data reach the gateway, which optimizes the network to select the best routing path and secures communication in applications such as industry, robotics, smart homes, banking, IoT-based traffic signals, and power generation systems. The QCiO method can also minimize the sensor space.

The reported accuracy of the QCiO IoT network system is 93.25%, its precision 92.55%, and its sensor sensitivity 91.68% [40]. Applying quantum technology to IoT platforms is therefore expected to improve their computational power and data-processing capability.
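The three figures quoted above are presumably the standard classification metrics computed from a confusion matrix (an assumption; the exact definitions used in [40] are not reproduced here). The sketch computes them from true/false positive and negative counts; the counts in the example are hypothetical and are not the data of [40].

```python
def classification_metrics(tp, fp, tn, fn):
    """Accuracy, precision, and sensitivity (recall) from confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    precision = tp / (tp + fp)
    sensitivity = tp / (tp + fn)  # true-positive rate, also called recall
    return accuracy, precision, sensitivity

# Hypothetical counts, not the data from [40]:
acc, prec, sens = classification_metrics(tp=90, fp=10, tn=80, fn=20)
print(f"accuracy={acc:.2%}, precision={prec:.2%}, sensitivity={sens:.2%}")
# accuracy=85.00%, precision=90.00%, sensitivity=81.82%
```

Reporting all three together matters because a high accuracy alone can hide a poor detection rate when one class dominates the data.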

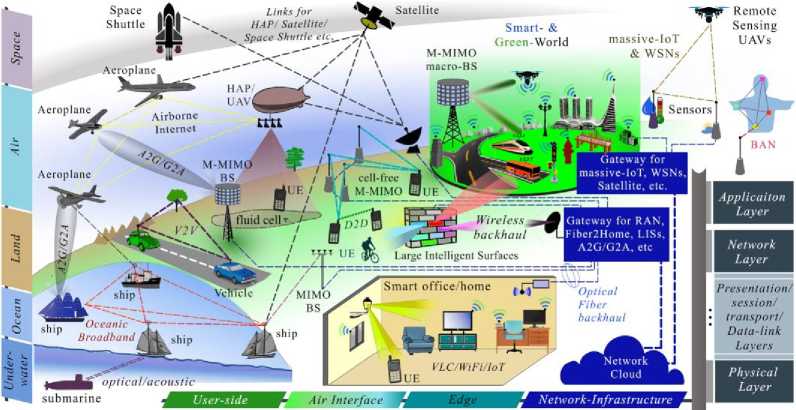

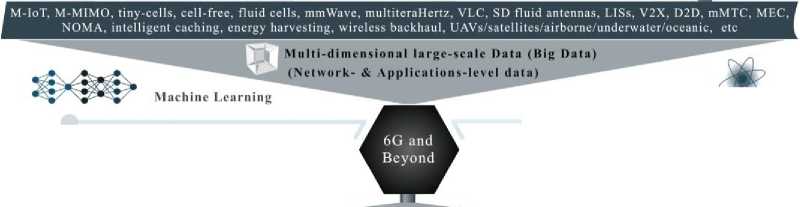

Example: Framework for 6G quantum networks. 5G networks have now entered the commercialization phase, which makes it reasonable to launch a strong effort to envision the next generation of wireless networks. The increasing size, complexity, services, and performance demands of communication networks call for new technologies that enable and harmonize future heterogeneous networks. Interest in AI methods has grown strongly in recent years, motivating the provision of essential intelligence to 5G networks. However, this intelligence is limited to isolated optimization, control, and management tasks. The recent success of quantum-assisted and data-driven learning methods in communication networks provides a clear motivation to consider these methods as enablers of future heterogeneous networks. The framework for 6G networks proposed in [41] treats quantum-assisted ML and QML as core enablers, along with some promising communication technology innovations. An illustration of the framework is presented in Fig. 33, which shows various emerging technologies, a complex and heterogeneous network structure, multi-space massive connectivity, and a wide range of big data available across different layers, sides, and applications.

Quantum Computing

Supervised learning (ANNs); unsupervised learning; semi-supervised learning (generative); reinforcement learning (Q-learning); heuristic programming (GA); deep learning (DNNs); deep reinforcement learning; multilayer perceptron; convolutional neural network; recurrent neural network; restricted Boltzmann machine; generative adversarial network; distributed machine learning, etc.; PyTorch, TensorFlow, Theano, Caffe, DeepSense, MXNet, etc.; RMSprop, Adagrad, Adam, Nadam, etc.

Quantum Machine Learning

Quantum supervised learning, quantum unsupervised learning, quantum reinforcement learning, quantum neural networks, quantum deep learning, quantum deep neural networks, training parallelization, etc.

Physical platforms (ultracold atoms, superconducting circuits, spins in semiconductors, trapped ions, etc.); qubits, superposition, entanglement; qubit measurement and error correction; quantum state evolution; quantum circuits (nodes, wires, etc.); capacity of QC hardware; qubit registers and microprocessors; algorithms, languages, and simulators; central processing unit; graphics processing unit; tensor processing unit; cloud and fog computing; quantum computing; quantum communications.

Fig. 33. Illustration of different types, layers, sides, and levels of B5G communication networks indicating the applications and scope of QML [41]

The discussion of key thrust areas for future research in the context of the proposed framework is divided into “Network-Infrastructure and Edge” and “Air Interface and User-End”.