Quantum software engineering. Pt II: quantum computing supremacy on quantum gate-based algorithm models

Authors: Ulyanov Sergey V., Tyatyushkina Olga Yu., Korenkov Vladimir V.

Journal: Online scientific publication «System Analysis in Science and Education» @journal-sanse

Article in issue: 1, 2021.

Free access

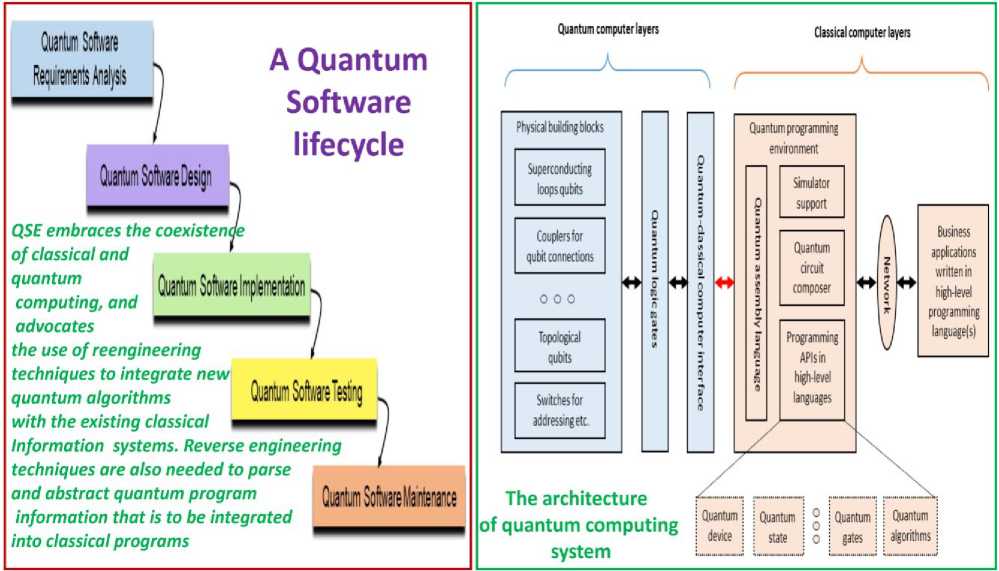

This article discusses the issues related to the description of an open software product for quantum computing, the stages of forming hardware and software along with algorithmic support for quantum tools from hardware interfaces through the methods of quantum compilers of quantum algorithms, including quantum annealing, and calculations on quantum gate-based algorithm models.

Quantum computing, quantum algorithm, quantum software engineering, quantum computing devices

Short URL: https://sciup.org/14123332

IDR: 14123332 | UDC: 512.6


Ulyanov S., Tyatyushkina O., Korenkov V. Quantum software engineering Pt II: Quantum computing supremacy on quantum gate-based algorithm models. System Analysis in Science and Education, 2021;(1):81–129. Available from:

There are several known quantum algorithms that offer various advantages or speedups over classical computing approaches, some of them even reducing the complexity class of the problem. These algorithms generally proceed by controlling the quantum interference between the components of the underlying entangled superpositions in such a way that only one or relatively few quantum states have a significant amplitude in the end. A subsequent measurement can therefore provide global information on a massive superposition state with significant probability. The coherent manipulation of quantum states that defines a quantum algorithm can be expressed through different quantum computational modes with varying degrees of tunability and control. The most powerful quantum computing mode presently known is the universal gate model, similar to universal gate models of classical computation. Here, a quantum algorithm is broken down into a sequence of modular quantum operations, or gates, between individual qubits. There are many universal quantum gate families operating on single qubits and pairs of qubits, akin to the NAND gate family in classical computing. One popular universal quantum gate family combines two-qubit CNOT gates on every pair of qubits with rotation gates on every single qubit. With universal gates, an arbitrary entangled state and thus any quantum algorithm can be expressed. Alternative modes such as measurement-based or cluster-state quantum computing can be shown to be formally equivalent to the universal gate model. As with the NAND gate in classical CMOS technology, the particular choice of a universal gate set, or even of a mode of quantum computing, is best determined by the quantum hardware itself, its native interactions, and its available controls. The structure of the algorithm itself may also affect the optimal choice of gate set or quantum computing mode.
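As a small illustration of the CNOT-plus-rotations gate family described above, the following sketch prepares an entangled two-qubit state from |00> using one single-qubit rotation and one CNOT. It is a NumPy state-vector toy, not code from the article; the function names `ry` and `bell_state` are hypothetical.

```python
import numpy as np

def ry(theta):
    """Single-qubit rotation about the y axis by angle theta."""
    c, s = np.cos(theta / 2), np.sin(theta / 2)
    return np.array([[c, -s], [s, c]])

# Two-qubit CNOT; the control is the first (most significant) qubit.
CNOT = np.array([[1, 0, 0, 0],
                 [0, 1, 0, 0],
                 [0, 0, 0, 1],
                 [0, 0, 1, 0]], dtype=float)

def bell_state():
    """Apply Ry(pi/2) to the first qubit of |00>, then CNOT."""
    zero_zero = np.array([1.0, 0.0, 0.0, 0.0])
    rotate_first = np.kron(ry(np.pi / 2), np.eye(2))
    return CNOT @ rotate_first @ zero_zero

state = bell_state()  # amplitudes ordered as |00>, |01>, |10>, |11>
```

The result has equal amplitudes on |00> and |11> and none on |01> or |10>, which is the kind of entangled state that single-qubit gates alone cannot produce.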

The difference between classical and quantum algorithms (QAs) is the following: the problem solved by a QA is encoded in the structure of its quantum operators. The input to the QA in this case is always the same, and the output of the QA indicates which problem was encoded. In a sense, one gives the QA a function to analyze, and the QA returns a property of that function as the answer without quantitative computation: a QA studies the qualitative properties of functions directly. The core of any QA is a set of unitary quantum operators in matrix form or the corresponding structure of quantum gates. In practical representation, a quantum gate is a unitary matrix with a particular structure. The size of this matrix grows exponentially with the number of inputs, making it impractical to simulate QAs with more than 30-35 inputs on a classical computer with von Neumann architecture. The mathematical background of quantum computing is matrix calculus.

New quantum gate-based algorithms and new algorithmic paradigms (such as adiabatic computing, which is the quantum counterpart of simulated annealing) have been discovered. We can explore several aspects of the adiabatic quantum computational model and use a construction that directly maps an arbitrary circuit in the standard quantum computing model to an adiabatic algorithm of the same depth. Many quantum algorithms have been developed for the so-called oracle model, in which the input is given as an oracle, so that the only knowledge we can gain about the input comes from asking queries to the oracle. As our measure of complexity, we use the query complexity. The query complexity of an algorithm A computing a function F is the number of queries used by A. The query complexity of F is the minimum query complexity of any algorithm computing F.
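The oracle model and the idea of a fixed input with the problem encoded in the operators can be made concrete with Deutsch's algorithm, chosen here only as a standard illustration (the article does not single it out). The sketch decides whether a one-bit function is constant or balanced with a single oracle query, where a classical algorithm needs two. The helper names `oracle` and `deutsch` are hypothetical.

```python
import numpy as np

H = np.array([[1, 1], [1, -1]]) / np.sqrt(2)  # Hadamard gate

def oracle(f):
    """Unitary U_f |x, y> = |x, y XOR f(x)> as a 4x4 permutation matrix."""
    U = np.zeros((4, 4))
    for x in (0, 1):
        for y in (0, 1):
            U[2 * x + (y ^ f(x)), 2 * x + y] = 1.0
    return U

def deutsch(f):
    """Classify f as 'constant' or 'balanced' using one oracle query."""
    state = np.kron([1.0, 0.0], [0.0, 1.0])   # fixed input |0>|1>
    state = np.kron(H, H) @ state
    state = oracle(f) @ state                 # the only query to f
    state = np.kron(H, np.eye(2)) @ state
    prob_first_is_one = state[2] ** 2 + state[3] ** 2
    return "balanced" if prob_first_is_one > 0.5 else "constant"
```

The input state is the same for every `f`; only the oracle matrix changes, and the measurement outcome reports a global property of `f` rather than any of its values.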

We are interested in proving lower bounds on the query complexity of specific functions and consider methods of computing such lower bounds. The two most successful methods for proving lower bounds on quantum computations are the adversary method and the polynomial method. An alternative measure of complexity is the time (temporal) complexity, which counts the number of basic operations used by an algorithm. The temporal complexity is always at least as large as the query complexity, since each query takes one unit step, and thus a lower bound on the query complexity is also a lower bound on the temporal complexity. For most existing quantum algorithms, the temporal complexity is within poly-logarithmic factors of the query complexity.

One barrier to a better understanding of the quantum query model is the lack of simple mathematical representations of quantum computing models. While classical query complexity (both deterministic and randomized) has a natural intuitive description in terms of decision trees, there is no such easy description of quantum query complexity. The main difference between the classical and quantum cases is that classical computations branch into non-interacting sub-computations (as represented by the tree), while in quantum computations, because of the possibility of destructive interference between sub-computations, there is no obvious analog of branching. The bounded-error model is both relevant to understanding powerful explicit non-query quantum algorithms (such as Shor’s factoring algorithm) and theoretically important as the quantum analogue of the classical decision tree model.

There are other modes of quantum computation that are not universal, involving subsets of universal gate operations, or certain global gate operations with less control over the entire space of quantum states. These can be useful for specific routines or quantum simulations that may not demand full universality. Although global adiabatic Hamiltonian quantum computing can be made universal in certain cases, it is often better implemented as non-universal subroutines for specific state preparation. Quantum annealing models do not appear to be universal, and there is current debate over the advantage such models can have over classical computation. Gates that explicitly include error or decoherence processes are used to model quantum computer systems interacting with an environment via quantum simulation.
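One simple way to model a gate that includes a decoherence process is a phase-flip (dephasing) channel acting on a density matrix. The sketch below is an illustrative assumption rather than the article's model: with probability `p` a Z error is applied, which shrinks the off-diagonal coherences by a factor of 1 - 2p while leaving the populations unchanged.

```python
import numpy as np

Z = np.array([[1, 0], [0, -1]], dtype=complex)

def dephase(rho, p):
    """Phase-flip channel: apply Z with probability p, identity otherwise."""
    return (1 - p) * rho + p * (Z @ rho @ Z)

# Density matrix of the superposition state |+> = (|0> + |1>) / sqrt(2).
plus = np.array([1, 1], dtype=complex) / np.sqrt(2)
rho = np.outer(plus, plus.conj())

noisy = dephase(rho, 0.1)  # coherences drop from 0.5 to 0.5 * (1 - 2 * 0.1)
```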

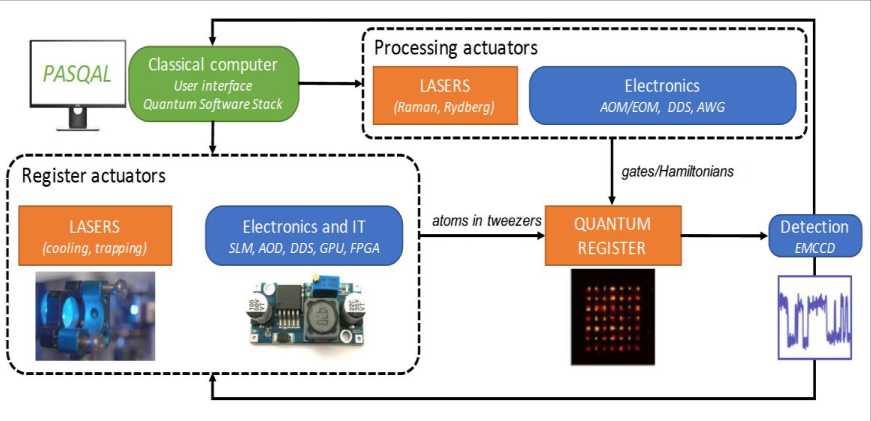

Main Quantum Computing Circuits and Gate-based Devices Platform

The fundamental concepts required for designing and operating quantum computing devices are presented in [1-23], which review state-of-the-art efforts to fabricate and demonstrate quantum gates and qubits.

The development of quantum computing technologies builds on the unique features of quantum physics while borrowing familiar principles from the design of conventional devices. The near-term challenges for devices based on semiconducting, superconducting, and trapped ion technologies are summarized, with an emphasis on design tools as well as methods of verification and validation. The generation and synthesis of quantum circuits for higher-order logic that can be carried out using quantum computing devices is also discussed. While the tutorial captures many of the introductory topics needed to understand the design and testing of quantum devices, several more advanced topics have been omitted due to space constraints. Foremost is the broader theory of quantum computation, which has developed rapidly from early models of quantum Turing machines to a number of different but equally powerful computational models. In addition, we have largely omitted the sophisticated techniques employed to mitigate the occurrence of errors in quantum devices. Quantum error correction is an important aspect of long-term and large-scale quantum computing, which uses redundancy to overcome the loss of information in noisy environments. Finally, the review of quantum computing technologies is intentionally narrowed to three of the leading candidates for large-scale quantum computing. However, there is a great diversity of experimental quantum physical systems that can be used for encoding and processing quantum information. Quantum computing promises new capabilities for processing information and performing computationally hard tasks. This includes significant algorithmic advances for solving hard problems in computing, sensing, and communication. The breakthrough examples of Shor’s algorithm for factoring numbers and Grover’s algorithm for unstructured search have fueled a series of more recent advances in computational chemistry, nuclear physics, and optimization research, among many others. 
However, realizing the algorithmic advantages of quantum computing requires hardware devices capable of encoding quantum information, performing quantum logic, and carrying out sequences of complex calculations based on quantum mechanics.

For more than 35 years, there has been a broad array of experimental efforts to build quantum computing devices to demonstrate these new ideas. Multiple state-of-the-art engineering efforts have now fabricated functioning quantum processing units (QPUs) capable of carrying out small-scale demonstrations of quantum computing. The QPUs developed by the commercial vendors such as IBM, Google, D-Wave, Rigetti, and IonQ are among a growing list of devices that have demonstrated the fundamental elements required for quantum computing. This progress in prototype QPUs has opened up new discussions about how to best utilize these nascent devices.

Quantum computing poses several new challenges to the concepts of design and testing that are unfamiliar to conventional CMOS-based computing devices.

For example, a striking fundamental challenge is the inability to interrogate the instantaneous quantum state of these new devices. Such interrogations may be impractically complex within the context of conventional computing, but they are physically impossible within the context of quantum computing due to the no-cloning principle. This physical distinction fundamentally changes how QPUs are designed and how their operation is tested relative to past practice. This tutorial provides an overview of the principles of operation behind quantum computing devices as well as a summary of the state of the art in QPUs.

The continuing development of quantum computing will require expertise from the conventional design and testing community to ensure the integration of these non-traditional devices into existing design workflows and testing infrastructure. There is a wide variety of technologies under consideration for device development, and this tutorial focuses on the current workflows surrounding quantum devices fabricated in semiconducting, superconducting, and trapped ion technologies. We also discuss the design of logical circuits that quantum devices must execute to perform computational work.

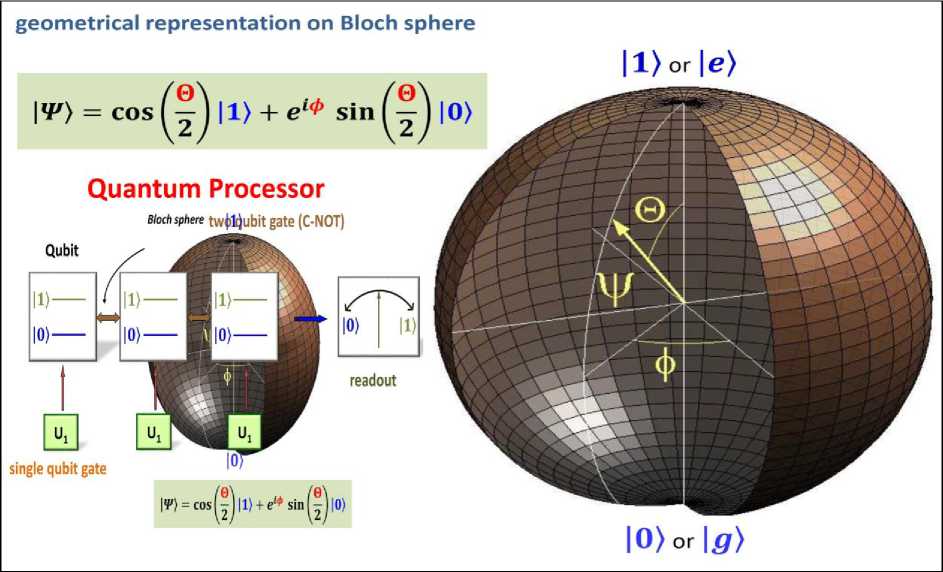

Principles of Quantum Computing

The principles of quantum computing derive from quantum mechanics, a theoretical framework that has accurately modeled the microscopic world for more than 100 years. Quantum computing draws its breakthroughs in computational capabilities from the many unconventional features inherent to quantum mechanics, and we provide a brief overview of these features while others offer more exhaustive explanations. In quantum mechanics, all knowable information about a physical system is represented by a quantum state. The quantum state is defined as a vector within a Hilbert space, which is a complex-valued vector space supporting an inner product. By convention, the quantum state with label $\psi$ is expressed as a column vector using the “ket” notation as $|\psi\rangle$, while the dual vector is expressed as the row vector “bra” $\langle\psi|$. The inner product between these two vectors is $\langle\psi|\psi\rangle$ and is normalized to one. An orthonormal basis $\{|j\rangle\}$ for an $N$-dimensional Hilbert space satisfies $\langle i|j\rangle = \delta_{ij}$, and an arbitrary quantum state may be represented within a complete basis as $|\psi\rangle = \sum_{j=0}^{N-1} c_j |j\rangle$.

Given the continuous amplitudes that define their quantum states $|\psi\rangle$, quantum computers have characteristics akin to classical analog computers, where errors can accumulate over time and lead to computational instability. It is thus critical that quantum computers exploit the technique of quantum error correction (QEC), or at least have sufficiently small native errors that allow the system to complete the algorithm. QEC is an extension of classical error correction, where ancilla qubits are added to the system and encoded in certain ways to stabilize a computation through the measurement of a subset of qubits that is fed back to the remaining computational qubits. There are many forms of QEC, but the most remarkable result is the existence of fault-tolerant QEC, allowing arbitrarily long quantum computations with sub-exponential overhead in the number of required additional qubits and gate operations. Qubit systems typically have native noise properties that are neither symmetric nor static, so matching QEC methods to specific qubit hardware noise profiles will play a crucial role in the successful deployment of quantum computers. The general requirements for quantum computer hardware are that the physical qubits (or equivalent quantum information carriers) must support (i) coherent Hamiltonian control with sufficient gate expression and fidelity for the application at hand, and (ii) highly efficient initialization and measurement.
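The idea of adding redundancy and feeding a measured syndrome back into the computation can be sketched with the three-qubit bit-flip code, the simplest textbook QEC example (it is used here for illustration and is not discussed in the article). The sketch works on an 8-component state vector. As a simplifying assumption, the syndrome is read directly from the simulated amplitudes, whereas a real device would extract it through ancilla qubits and measurement.

```python
import numpy as np

def encode(a, b):
    """Logical state a|000> + b|111> as an 8-component state vector."""
    state = np.zeros(8, dtype=complex)
    state[0b000], state[0b111] = a, b
    return state

def flip(state, qubit):
    """Apply X to one qubit (qubit 0 is the leftmost bit of the index)."""
    mask = 1 << (2 - qubit)
    return state[np.arange(8) ^ mask]

def syndrome(state):
    """Parities (q0 XOR q1, q1 XOR q2); equal on every populated basis state."""
    index = int(np.flatnonzero(np.abs(state) > 1e-12)[0])
    q0, q1, q2 = (index >> 2) & 1, (index >> 1) & 1, index & 1
    return q0 ^ q1, q1 ^ q2

def correct(state):
    """Undo at most one bit flip using only the syndrome."""
    target = {(1, 0): 0, (1, 1): 1, (0, 1): 2}.get(syndrome(state))
    return state if target is None else flip(state, target)
```

The syndrome identifies which qubit was flipped without revealing the amplitudes `a` and `b`, so the encoded superposition survives the correction. This code handles only single bit-flip errors; phase errors need a different or larger code.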

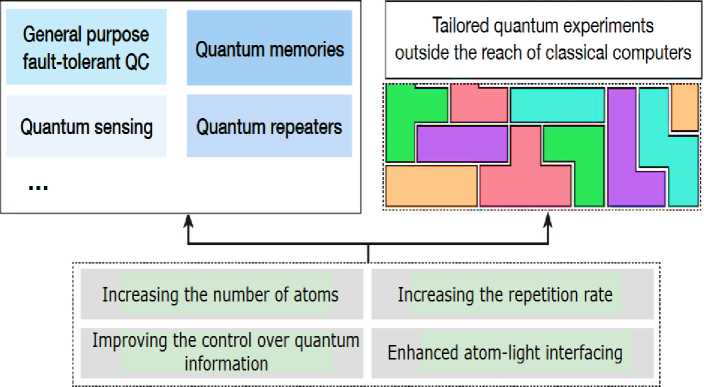

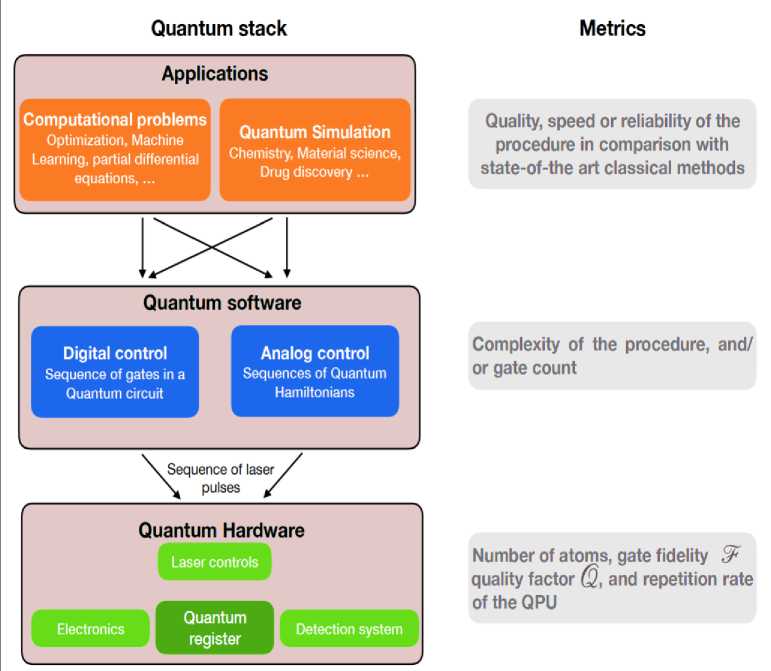

These seemingly conflicting requirements limit the available physical hardware candidates to just a few at this time. Below we describe those platforms that are currently being built into multi-qubit quantum computer systems and are expected to have the largest impact in the next decade. As we will see below in a definition of levels of the quantum computer stack and a sampling of vertical implementations and applications, the near-term advances in quantum computer science and technology will rely on algorithm designers understanding the intricacies of the quantum hardware, and quantum computer builders understanding the natural structure of algorithms and applications.

Quantum Algorithms. Practical interest in quantum computing arose from the discovery that there are certain computational problems that can be solved more efficiently on a quantum computer than on a classical computer, notably number factoring (Shor’s algorithm) and searching unstructured data (Grover’s algorithm). Quantum algorithms typically start at a very high level of description, often as pseudocode. These algorithms are usually distinguished at this level of abstraction by very coarse scaling of resources such as time and number of qubits, as well as success metrics, such as success probabilities or the distance between a solution and the optimal value. Quantum algorithms can be broadly divided into those with provable success guarantees and those that have no such proofs and must be run with heuristic characterizations of success. Once a quantum algorithm has been conceptualized with the promise of outperforming a classical algorithm, it is common to consider whether the algorithm can be run on near-term devices or only on future architectures that rely on quantum error correction. A central challenge for the entire field is to determine whether algorithms on current, relatively small quantum processors can outperform classical computers, a goal called quantum advantage. For fault-tolerant quantum algorithms, on the other hand, there is a larger focus on improving the asymptotic performance of the algorithm in the limit of large numbers of qubits and gates. Shor's factoring and Grover's unstructured search algorithms are examples of “textbook” quantum algorithms with provable performance guarantees. A handy guide to known quantum algorithms is the Quantum Algorithm Zoo. Another important example is the HHL algorithm, which is a primitive for solving systems of linear equations. Factoring, unstructured search, and HHL are generally thought to be relevant only for larger fault-tolerant quantum computers.
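To make the unstructured-search speedup concrete, the following sketch simulates Grover's algorithm on a classical state vector for a single marked item. It is a toy simulation with a hypothetical function name, not an implementation for quantum hardware. The oracle flips the sign of the marked amplitude and the diffusion step inverts all amplitudes about their mean.

```python
import numpy as np

def grover_success_probability(n_qubits, marked):
    """Simulate Grover search for one marked item; return P(measure marked)."""
    size = 2 ** n_qubits
    state = np.full(size, 1 / np.sqrt(size))           # uniform superposition
    iterations = int(np.floor(np.pi / 4 * np.sqrt(size)))
    for _ in range(iterations):
        state[marked] *= -1                             # oracle: phase flip
        state = 2 * state.mean() - state                # inversion about the mean
    return float(state[marked] ** 2)

probability = grover_success_probability(4, marked=11)
```

For 4 qubits (16 items) the loop runs 3 times and the marked item is measured with probability above 0.95, compared with 1/16 for a single random classical guess.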

Another class of quantum algorithms is quantum simulation, which uses a quantum computer to simulate models of a candidate physical system of interest, such as molecules, materials, or quantum field theories whose models are intractable using classical computers. Quantum simulators often determine physical properties of a system such as energy levels, phase diagrams, or thermalization times, and can explore both static and dynamic behavior. There is a continuum of quantum simulator types, sorted generally by their degree of system control. Fully universal simulators have arbitrary tunability of the interaction graph and may even be fault-tolerant, allowing the scaling to various versions of the same class of problems. Some quantum simulations do not require the full universal programmability of a quantum computer, and are thus easier to realize. Such quantum simulators will likely have the most significant impact on society in the short run. Example simulator algorithms range from molecular structure calculations applied to drug design and delivery or energy-efficient production of fertilizers, to new types of models of materials for improving batteries or solar cells well beyond what is accessible with classical computers.

Variational quantum algorithms such as the variational quantum eigensolver (VQE) and the quantum approximate optimization algorithm (QAOA) are recent developments. Here, the quantum computer produces a complex entangled quantum state representing the answer to some problem, for example, the ground state of a Hamiltonian model of a material. The procedure for generating the quantum state is characterized by a set of classical control parameters that are varied in order to optimize an objective function such as minimizing the energy of the state. One particular area of active exploration is the use of VQE or QAOA for tasks in machine learning or combinatorial optimization, as discussed below. Variational quantum solvers are a workhorse in near-term quantum hardware, partly because they can be relatively insensitive to systematic gate errors. However, these algorithms are usually heuristic: one cannot generally prove that they converge. Instead, they must be tested on real quantum hardware to study and validate their performance and compare to the best classical approaches.
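The variational loop described above can be sketched on a single qubit. Everything in this example is an illustrative assumption: the toy Hamiltonian Z + 0.5 X, the one-parameter ansatz Ry(theta)|0>, and plain gradient descent as the classical optimizer. The gradient is obtained with the parameter-shift rule, which needs only energy evaluations of the kind a quantum device could supply.

```python
import numpy as np

X = np.array([[0, 1], [1, 0]], dtype=float)
Z = np.array([[1, 0], [0, -1]], dtype=float)
HAMILTONIAN = Z + 0.5 * X  # hypothetical toy Hamiltonian

def energy(theta):
    """Expectation value <psi|H|psi> for the ansatz psi = Ry(theta)|0>."""
    psi = np.array([np.cos(theta / 2), np.sin(theta / 2)])
    return float(psi @ HAMILTONIAN @ psi)

def minimize_energy(theta=0.1, rate=0.4, steps=200):
    """Classical outer loop: gradient descent with parameter-shift gradients."""
    for _ in range(steps):
        gradient = 0.5 * (energy(theta + np.pi / 2) - energy(theta - np.pi / 2))
        theta -= rate * gradient
    return theta, energy(theta)

best_theta, best_energy = minimize_energy()
```

For this Hamiltonian the exact ground-state energy is -sqrt(1.25), so the result can be checked directly. On realistic problems no such reference exists, which is why the text stresses empirical validation.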

Quantum algorithms are typically expressed at a high level, which creates the need to estimate the actual resources required for implementation. This often starts with a resource estimate for fault-tolerant error-corrected quantum computers, where the quantum information is encoded into highly entangled states with additional qubits in order to protect the system from noise. Fault-tolerant quantum computing is a method for reliably processing this encoded information even when physical gates and qubit measurements are imperfect. The catch is that quantum error correction has a high overhead cost in the number of required additional qubits and gates. How high the cost is depends on both the quality of the hardware and the algorithm under study. A recent estimate is that running Shor’s algorithm to factor a 2048-bit number using gates with a $10^{-3}$ error rate could be achieved with currently known methods using 20 million physical qubits. As the hardware improves, the overhead cost of fault-tolerant processing will drop significantly; nevertheless, fully fault-tolerant scalable quantum computing is presently a distant goal. When estimating resources for fault-tolerant implementations of quantum algorithms, a discrete set of available quantum gates is assumed, which derives from the details of the particular error-correcting code used. There are many different techniques for trading off space (qubit number) for time (circuit depth), resembling conventional computer architecture challenges. It is expected that optimal error correction encoding will depend critically upon specific attributes of the underlying quantum computing architecture, such as qubit coherence, quantum gate fidelity, and qubit connectivity and parallelism.
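How hardware quality drives the error-correction overhead can be illustrated with a rough surface-code rule of thumb. The scaling law, the threshold of 1e-2, the prefactor of 0.1, and the count of 2d^2 physical qubits per logical qubit are all illustrative assumptions, not values from the article, and the sketch does not attempt to reproduce the 20-million-qubit estimate quoted above.

```python
def logical_error_rate(p_physical, distance, p_threshold=1e-2, prefactor=0.1):
    """Heuristic surface-code scaling; the constants are illustrative assumptions."""
    return prefactor * (p_physical / p_threshold) ** ((distance + 1) // 2)

def required_distance(p_physical, target):
    """Smallest odd code distance whose heuristic logical error rate meets the target."""
    distance = 3
    while logical_error_rate(p_physical, distance) > target:
        distance += 2
    return distance

def physical_qubits_per_logical(distance):
    """Rough count of data plus measurement qubits in one surface-code patch."""
    return 2 * distance ** 2

d = required_distance(1e-3, target=2e-15)
overhead = physical_qubits_per_logical(d)
```

Under these assumptions a physical error rate of 1e-3 needs distance 27, or about 1,458 physical qubits per logical qubit. Improving the physical error rate lowers the required distance, which is the sense in which better hardware reduces the overhead.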

Estimating resources for quantum algorithms using realistic quantum computing architectures is an important near-term challenge. Here, the focus is generally on reducing the gate count and quantum circuit depth to avoid errors from qubit decoherence or slow drifts in the qubit control system. Different types of quantum hardware support different gate sets and connectivity, and native operations are often more flexible than fault-tolerant gate sets for certain algorithms. This optimization of specific algorithms for specific hardware is the highest and most important level of quantum computer co-design.
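One of the simplest gate-count reductions is a peephole pass that removes back-to-back pairs of identical self-inverse gates. The sketch below uses a hypothetical circuit representation, a list of `(name, qubits)` tuples, and only cancels gates that are adjacent in that list. A production compiler would also commute gates past operations on other qubits and use hardware-specific rewrite rules.

```python
SELF_INVERSE = {"H", "X", "Z", "CNOT"}

def cancel_adjacent_inverses(circuit):
    """Remove back-to-back identical self-inverse gates on the same qubits.

    A stack is used so that cancelling one pair can expose another,
    e.g. H X X H reduces to the empty circuit.
    """
    optimized = []
    for gate in circuit:
        name, _ = gate
        if optimized and optimized[-1] == gate and name in SELF_INVERSE:
            optimized.pop()          # the pair multiplies to the identity
        else:
            optimized.append(gate)
    return optimized

circuit = [("H", (0,)), ("CNOT", (0, 1)), ("CNOT", (0, 1)), ("H", (0,)), ("X", (1,))]
reduced = cancel_adjacent_inverses(circuit)
```

Here the two CNOTs cancel first, which brings the two Hadamards together so that they cancel as well, leaving a single X gate.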

Conventional Quantum Algorithms

Historically, Shor's and Grover's algorithms, which are sometimes referred to as “textbook algorithms,” were the first algorithms of practical relevance where a quantum computer offered a dramatic speedup over the best-known classical approaches. Here we consider co-design problems and full-stack development opportunities that arise from mapping these textbook algorithms to quantum computing platforms. At the top of the stack, the challenges that both algorithms face include implementing classical oracles with quantum gates. In Grover's algorithm, the oracle can be implemented using Bennett’s pebbling game. However, it is a non-trivial task to find an efficient reversible circuit, since the most efficient implementation on a quantum computer may not follow the structure suggested by a given classical algorithmic description, even when the latter is efficient. There are also intriguing questions about how classical resources can be used to aid quantum algorithms. For example, in Shor's algorithm we can leverage classical optimizations such as windowed arithmetic, or trade off quantum circuit complexity against classical post-processing complexity. One of the key challenges in implementing textbook algorithms in physical systems is to optimize these algorithms for a given qubit connectivity and native gate set. While these technology-dependent factors will not affect asymptotic scaling, they could greatly influence whether these algorithms can be implemented on near-term devices. For instance, high connectivity between qubits can provide significant advantages in algorithm implementation. In this vein, work has been done to implement Shor's algorithm with a constrained geometry (1D nearest neighbor), but there are many open questions that involve collaborations across the stack.
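The basic move behind turning a classical oracle into a reversible circuit is to compute an intermediate value into an ancilla, use it, and then uncompute it so the ancilla returns to zero. Pebbling strategies generalize this pattern to trade ancilla count against gate count. The sketch below simulates that compute, use, and uncompute sequence on classical bits for a three-input AND. The register layout and function names are hypothetical.

```python
def toffoli(bits, c1, c2, target):
    """Reversible AND: flip the target bit if both control bits are 1."""
    bits[target] ^= bits[c1] & bits[c2]

def and3_oracle(a, b, c):
    """Compute a AND b AND c into an output bit, leaving the ancilla clean.

    Register layout: [a, b, c, ancilla, output].
    """
    bits = [a, b, c, 0, 0]
    toffoli(bits, 0, 1, 3)   # compute: ancilla = a AND b
    toffoli(bits, 3, 2, 4)   # use: output = ancilla AND c
    toffoli(bits, 0, 1, 3)   # uncompute: ancilla returns to 0
    return bits
```

Returning the ancilla to zero matters on a quantum computer because a leftover intermediate value would remain entangled with the inputs and spoil the interference the algorithm relies on.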

Developing implementations of Shor's algorithm and Grover's algorithm will provide exciting avenues for improved error correction, detection, and mitigation. While error correction codes are often designed to correct specific types of errors for particular physical systems, considering error correction for textbook algorithms provides a basis for designing error correction for both application and hardware. Because the outputs of these textbook algorithms are easily verifiable, they are good testbeds for error mitigation and characterization. For example, simple error correction/mitigation circuits, including randomized compiling, could be implemented in the context of small implementations of Grover’s algorithm and Shor’s algorithm to better understand the challenges in integrating these protocols into more complex ones. While this approach has been used in the quantum annealing community, it could be fruitful to explore it in more detail for textbook gate-based algorithms.

Grover’s algorithm seems to break down in the presence of a particular type of error that appears to be unrealistic in actual physical systems. For other algorithms, realistic errors do not appear to be as detrimental. Testing textbook algorithms on different architectures with and without error mitigation would provide us with a way to explore the space of errors relative to a specific algorithm, and give insight into which realistic errors are critical. This would inform error mitigation (not necessarily correction) techniques at both the code and hardware level, tailored to specific algorithms. This way of viewing error correction calls for a full integration of experts at the hardware, software, and algorithm design levels. The work on these textbook algorithms will lead to improved modularity in the quantum computing stack. In software design, modularity refers to the idea of decomposing a large program into smaller functional units that are independent and whose implementations can be interchanged. Modular design is a scalable technique that allows the development of complex algorithms while focusing on small modules, each containing a specific and well isolated functionality. These modules can exist both at the software level as well as at the hardware level. As an example, in classical computing, the increased use of machine learning algorithms and cryptocurrency mining has led to a repurposing of GPUs. In the design of full implementations of quantum algorithms such as Shor’s and Grover’s, modular design can be applied by using library functions that encapsulate circuits for which an optimized implementation was derived earlier. The examples include quantum Fourier transforms, multiple-control gates, libraries for integer arithmetic and finite fields, and many domain-specific applications such as chemistry, optimization, and finance. 
All major quantum computing programming languages are open source, including Google's Cirq, IBM's Qiskit, Microsoft's Quantum Development Kit, and Rigetti's Quil, which facilitates the development and contribution of such libraries. Highly optimized libraries that are adapted to specific target architectures, as well as compilers that can leverage such libraries and further optimize code, are great opportunities for large-scale collaborative efforts between academia, industry, and national labs. Like libraries, programming patterns provide opportunities for modularity. A programming pattern captures a recurring quantum circuit design solution that is applicable in a broad range of situations. Typically, a pattern consists of a skeleton circuit with subroutines that can be instantiated independently. Examples of patterns are various forms of Quantum Phase Estimation (QPE), amplitude amplification, period finding, hidden subgroup problems, hidden shift problems, and quantum walks.
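The quantum Fourier transform is a typical candidate for a reusable library module. As a reference for what such a module must compute, the sketch below builds the QFT as a dense matrix with NumPy. This is a specification-level toy with a hypothetical function name, not an optimized gate decomposition and not the API of any of the frameworks named above.

```python
import numpy as np

def qft_matrix(n_qubits):
    """Dense matrix of the quantum Fourier transform on n_qubits qubits."""
    size = 2 ** n_qubits
    indices = np.arange(size)
    omega = np.exp(2j * np.pi / size)            # primitive root of unity
    return omega ** np.outer(indices, indices) / np.sqrt(size)

F = qft_matrix(3)
```

With the positive-exponent convention used here, applying `F` to a vector matches NumPy's inverse FFT scaled by the square root of the dimension, which gives a convenient correctness check for any circuit-level implementation.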

Implementations of textbook algorithms will become important benchmarks for the quantum computing stack as a whole and also at the level of individual components. The need for such benchmarks is evident in the recent proliferation of benchmarks that test various components of quantum systems as well as entire systems, such as quantum volume, two-qubit fidelity, cross-entropy, probability of success, reversible computing, and the active IEEE working group project. It is not likely that any single benchmark will characterize all relevant aspects of a quantum computer system. However, implementing textbook algorithms provides an easy-to-verify test of the full quantum system from hardware to software, as all aspects of the system must work in concert to produce the desired output.

Quantum Software. A quantum computer will consist of hardware and software. The key components of the software stack include compilers, simulators, verifiers, and benchmarking protocols, as well as the operating system. Compilers - interpreted to include synthesizers, optimizers, transpilers, and the placement and scheduling of operations - play an important role in mapping abstract quantum algorithms onto efficient pulse sequences via a series of progressive decompositions from higher to lower levels of abstraction. The problem of optimal compilation is presently intractable, suggesting an urgent need for improvement via sustained research and development. Since optimal synthesis cannot be guaranteed, heuristic approaches to optimizing quantum resources (such as gate count and the depth of the quantum circuit) frequently become the only feasible option. Classical compilers cannot be easily applied in the quantum computing domain, so quantum compilers must generally be developed from scratch. Classical simulators are a very important component of the quantum computer stack. There is a range of approaches, from simulating partial or entire state vector evolution during the computation to full unitary simulation (including by the subgroups), with or without noise. Simulators are needed to verify quantum computations, model noise, develop and test quantum algorithms, prototype experiments, and establish computational evidence of a possible advantage of a given quantum computation. Classical simulators generally require exponential resources (otherwise the need for a quantum computer is obviated), and thus are only useful for simulating small quantum processors with fewer than 100 qubits, even using high-performance supercomputers. Simulators used to verify the equivalence of quantum circuits or to test output samples of a given implementation of a quantum algorithm can be thought of as verifiers. 
Benchmarking protocols are needed to test components as well as entire quantum computer systems. Quantum algorithm design, resource trade-offs (space vs. gate count vs. depth vs. connectivity vs. fidelity, etc.), hardware/software co-design, efficient architectures, and circuit complexity are examples of important areas of study that directly advance the power of software. The quantum operating system (QOS) is the core software that manages the critical resources for the quantum computer system. Similar to the OS for classical computers, the QOS will consist of a kernel that manages all hardware resources to ensure that the quantum computer hardware runs without critical errors, a task manager that prioritizes and executes user-provided programs using the hardware resources, and a peripheral manager that handles peripheral resources such as user/cloud and network interfaces. Given the nature of qubit control in near-term devices, which requires careful calibration of the qubit properties and controller outputs, the kernel will include automated calibration procedures to ensure that high-fidelity logic gate operation is possible in the qubit system of choice.
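The exponential cost of the classical simulators discussed above is easy to quantify for full state-vector simulation. The sketch assumes one double-precision complex amplitude (16 bytes) per basis state and counts only the state itself, ignoring workspace and any compression or tensor-network techniques. The function names are hypothetical.

```python
def state_vector_bytes(n_qubits, bytes_per_amplitude=16):
    """Memory for a full state vector: 2**n complex128 amplitudes."""
    return (2 ** n_qubits) * bytes_per_amplitude

def human_readable(n_bytes):
    """Format a byte count with binary prefixes."""
    for unit in ("B", "KiB", "MiB", "GiB", "TiB", "PiB", "EiB"):
        if n_bytes < 1024:
            return f"{n_bytes:.0f} {unit}"
        n_bytes /= 1024
    return f"{n_bytes:.0f} ZiB"

for n in (30, 40, 50):
    print(n, "qubits:", human_readable(state_vector_bytes(n)))
```

Under this assumption 30 qubits need 16 GiB and 50 qubits need 16 PiB. Each additional qubit doubles the requirement, which is why full state-vector simulation stops well short of 100 qubits.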

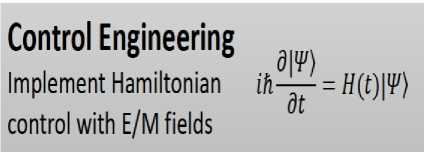

Control Engineering. Advancing the control functions of most quantum computing implementations is largely considered an engineering and economic problem. Current implementations comprise racks of test equipment to drive the qubit gate operations, calibrate the qubit transitions and related control, and calibrate the measurement equipment. While appropriate for the early stages of R&D laboratory development, this configuration will limit scalability, systems integration and applicability for fielded applications and mobile platforms, and affordability and attractiveness for future applications. The quantum gate operations for most qubit technologies require precise synthesis of analog control pulses that implement the gates. These take the form of modulated electromagnetic waves at relevant carrier frequencies, which are typically in the microwave or optical domain. Depending on the architecture of the quantum computer, a very large number of such control channels might be necessary in a given system. While the advances in communication technologies can be leveraged, these have to be adapted for quantum applications. A significant level of flexibility and programmability to generate the required pulses with adequate fidelity must be designed into the control system for quantum computers. The advancement of controls in quantum computing will ultimately require high-speed and application-specific optimized controls and processing. This situation mirrors the explosive advancement of the telecommunications industry in its implementation of 100 to 400+ Gb/s coherent digital optics formats and integrated RF and microwave signal processing for mobile applications. In the short run, however, the challenge we face is defining the control functions and features relevant for the target qubit applications and deriving the required performance specifications. 
This work is necessary before a dedicated, integrated, and scalable implementation such as ASIC development becomes viable. Near-term applications in quantum computing and full system design activities are critical in identifying and defining these needs. There are strong opportunities to engage engineering communities - in academia, national laboratories, and industry - with expertise ranging from computer architecture to chip design to make substantial advances on this front. To foster such efforts, it will be necessary to encourage co-design approaches and to identify common engineering needs and standards. Generating the types of signals needed can benefit significantly from digital RF techniques that have seen dramatic advances in the last decade. We envision commercially available chipsets based on field-programmable gate arrays (FPGAs) and ASICs that incorporate “System on a Chip” (SoC) technology, where processing power is integrated with programmable digital circuits and analog input and output functions. Besides the signal generation necessary for implementing the gates, other control needs include passive and active (servo) stability, maintenance of system operation environment (vacuum, temperature, etc.), and managing the start-up, calibration, and operational sequences. Calibration and drift control are important to both atomic and superconducting systems, though in somewhat different ways. In fixed-frequency superconducting systems, maintaining fidelities above 99% requires periodic calibration of RF pulse amplitudes; for tunable transmons, low frequency tuning of magnetic flux is required to maintain operation at qubit sweet spots. For atomic qubits, calibrating local trapping potentials and slow drifts in laser intensities delivered to the qubits is necessary. 
These calibration procedures, which can be optimized, automated, and built into the operating mode of the quantum computer system with the help of software controls, are candidates for implementation in SoC technologies. The development of optimal operating procedures for drift control and calibration processes will require innovation at a higher level of the stack. For example, dynamically determining how control parameter drifts can be tracked and compensated, and when recalibration is needed (if not done on a fixed schedule), will require high-level integration.

Specific performance requirements will help drive progress through co-design of engineering capabilities and quantum control needs. For example, there is a need for electronic control systems encompassing: (i) analog outputs faster than 1 Gsample/s; (ii) synchronized and coherent output with over 100 channels, extensible to above 1000 channels; (iii) outputs switchable among multiple pre-determined states; and (iv) proportional-integral-derivative (PID) feedback control on each channel with at least kHz bandwidth. Common needs for optical control systems include: (i) phase and/or amplitude modulation with a bandwidth of ≈ 100 MHz; (ii) over 100 channels, extensible to above 1000 channels; (iii) precision better than 12 bits (phase or amplitude); and (iv) operating wavelengths that match the qubit splitting.

An essential consideration of the control engineering for high-performance quantum computers is noise. The noise in a quantum system has two distinct sources: one is the intrinsic noise in the qubits arising from their coupling to the environment, known as decoherence, and the other is the control errors. Control errors can be either systematic in nature, such as drift or cross-talk, or stochastic, such as thermal and shot noise on the control sources. The key is to design the controller in a way such that the impact of stochastic noise on the qubits is less than the intrinsic noise of the qubits, and the systematic noise is fully characterized and mitigated. The possible mitigation approaches include better hardware design, control loops, and quantum control techniques.

Qubit Technology Platforms. We now consider the various quantum computer technology platforms in terms of their ability to be integrated in a multi-qubit system architecture. To date, only a few qubit technologies have been assembled and engineered in this way, including superconducting circuits, trapped atomic ions, and neutral atoms. While there are many other promising qubit technologies, such as spins in silicon, quantum dots, or topological quantum systems, none of these technologies has been developed beyond the component level. The research and development of new qubit technologies should continue aggressively in materials/fabrication laboratories and facilities. However, their maturity as good qubits may not be hastened by integrating them with the modular full-stack quantum systems development proposed here, so we do not focus this roadmap on new qubit development. In any case, once alternative qubit technologies reach maturity, we expect their integration will benefit from the full-stack quantum computer approach considered here. It is generally believed that fully fault-tolerant qubits are unlikely to be available soon. Therefore, specific qubit technologies and their native decoherence and noise mechanisms will play a crucial role in the development of near-term quantum computer systems. Several systems-level attributes arise when considering multi-qubit systems as opposed to single- or dual-qubit systems. Each of these critical attributes should be optimized and improved in future system generations:

- Native quantum gate expression. Not only must the available physical interactions allow universal control, but high levels of gate expression will be critical to the efficient compilation and compression of algorithms so that the algorithm can be completed before noise and decoherence take hold. This includes developing overcomplete gate libraries, as well as enabling single instruction, multiple data (SIMD) instructions such as those given by global entangling and multi-qubit control gates.

- Quantum gate speed. Faster gates are always desired and may even be necessary for algorithms that require extreme repetition, such as variational optimizers or sampling circuits. However, faster gates may also degrade fidelity and worsen crosstalk, and in these cases the speed to complete the higher-level algorithmic solution should take precedence.

- Specific qubit noise and crosstalk properties. Qubit noise properties should be detailed and constantly monitored, for there are many error mitigation techniques for specific or biased error processes that can improve algorithmic success in the software layer. Quantum gate crosstalk is usually unavoidable in a large collection of qubits, and apart from passive isolation of gate operations based on better engineering and control, there are software solutions that exploit the coherent nature of such crosstalk and allow for its cancellation by design.

- Qubit connectivity. The ability to implement quantum gate operations across the qubit collection is critical to algorithmic success. While full connectivity is ideal in principle, it may lead to higher levels of crosstalk, and resolving the many available connection channels may significantly decrease the gate and algorithmic speed. Such a trade-off will depend on details of the algorithm, and a good software layer will optimize this trade-off. A connection graph that is software-reconfigurable will also be useful.

- High-level qubit modularity. For very large-scale qubit systems, a modular architecture may be necessary. Just as for multi-core classical CPU systems, the ability to operate separated groups of qubits with quantum communication channels between the modules will allow the continual scaling of quantum computers. Modularity necessarily limits the connectivity between qubits, but importantly enables a hierarchy of quantum gate technologies that permits indefinite scaling, in principle.

Increasing the number of qubits from hundreds to thousands will be challenging, because current systems cannot easily be increased in size via brute force. Instead, a new way of thinking on how to reduce the number of external controls of the system will be needed to achieve a large number of qubits. This could be approached by further integrating control into the core parts of the system or by multiplexing a smaller number of external control signals to a larger number of qubits. In addition, modularizing subsystems to be produced at scale and integrating these into a networked quantum computer may well turn out to be the optimal way to achieve the necessary system size. Many challenges and possible solutions will only become visible once we start to design and engineer systems of such a size, which will in turn be motivated by scientific applications.

In this series of textbooks, we concentrate on quantum software and hardware engineering approaches to solving classically intractable tasks from computer science, intelligent information technologies, artificial intelligence, quantum software engineering, and classical and quantum control. Many of the solutions are obtained as new decision-making results of the developed quantum engineering IT and have important scientific and industrial applications.

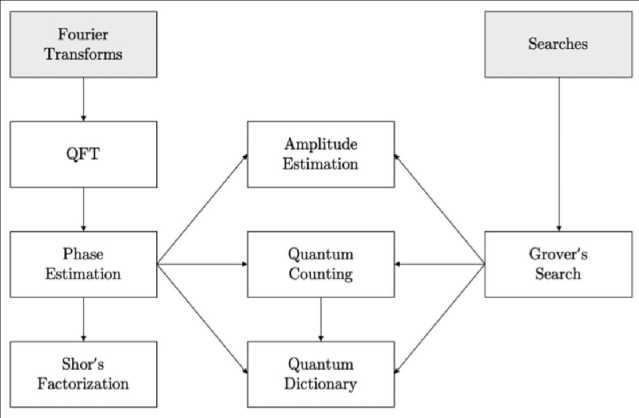

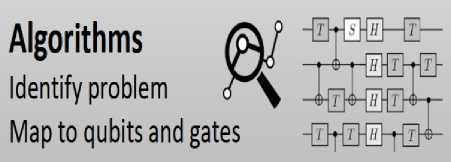

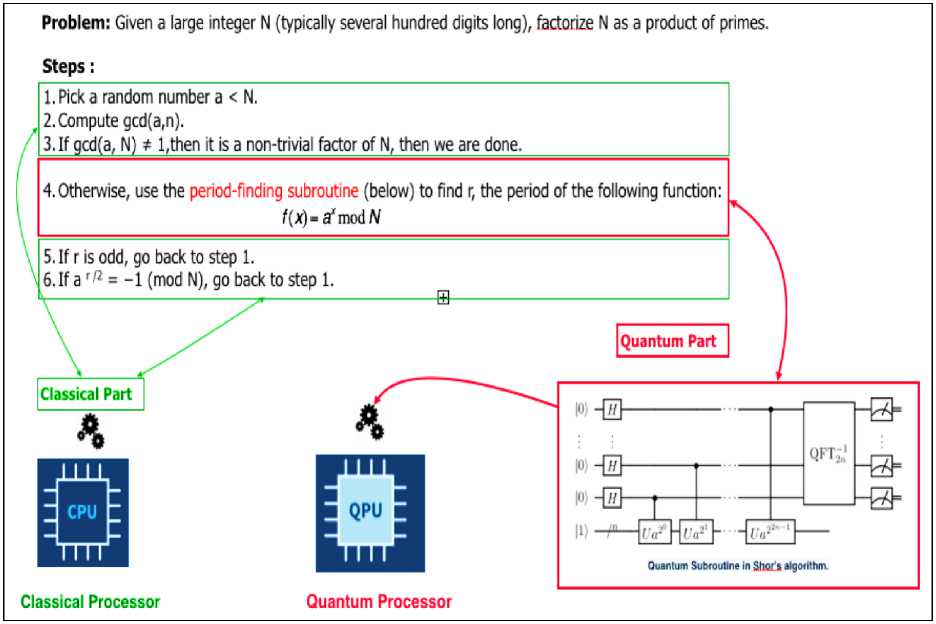

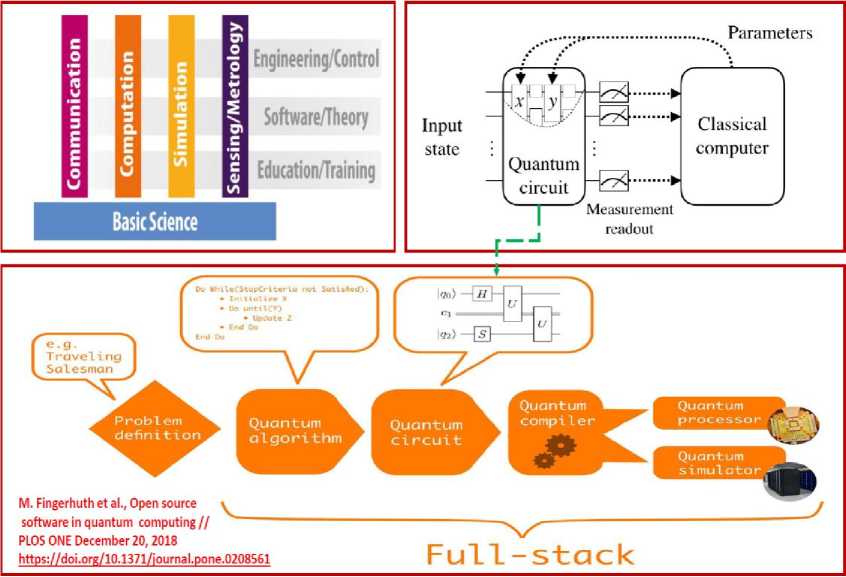

There are two main classes of algorithms where there is a potential quantum advantage - those that rely on Fourier transforms and those that perform searches (Fig. 1).

Fig. 1. The two main classes of quantum algorithms [8]

We can think of an oracle as a black box with a contract - the oracle applies the contract when it recognizes an element of a specified subset of the basis states. We call this subset of basis states the good states, and the rest of the states the bad states. The most common contract is to conditionally multiply the amplitudes of the desired basis states by (– 1). Many quantum algorithms rely on oracles to be efficient, and use a placeholder oracle in their definition. Selecting an oracle depends on the nature of the problem that needs to be solved.
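The most common contract described above can be sketched on a plain list of amplitudes. This is a minimal illustration rather than an oracle implementation for any particular algorithm: the function name `apply_phase_oracle` and the choice of good state are assumptions made only for this example.

```python
def apply_phase_oracle(amplitudes, good_states):
    """Multiply the amplitude of every 'good' basis state by -1.

    amplitudes  -- list of amplitudes, indexed by basis state
    good_states -- set of basis-state indices that the oracle recognizes
    """
    return [-a if index in good_states else a
            for index, a in enumerate(amplitudes)]


# Uniform superposition over the four basis states of two qubits.
state = [0.5, 0.5, 0.5, 0.5]

# Hypothetical oracle that recognizes basis state 2 (binary 10) as good.
marked = apply_phase_oracle(state, good_states={2})

# The measurement probabilities are unchanged; only the sign differs.
probabilities = [abs(a) ** 2 for a in marked]
```

The sign flip alone is not observable in a measurement, since the probabilities stay the same. It becomes useful only when later gates make the marked and unmarked amplitudes interfere.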

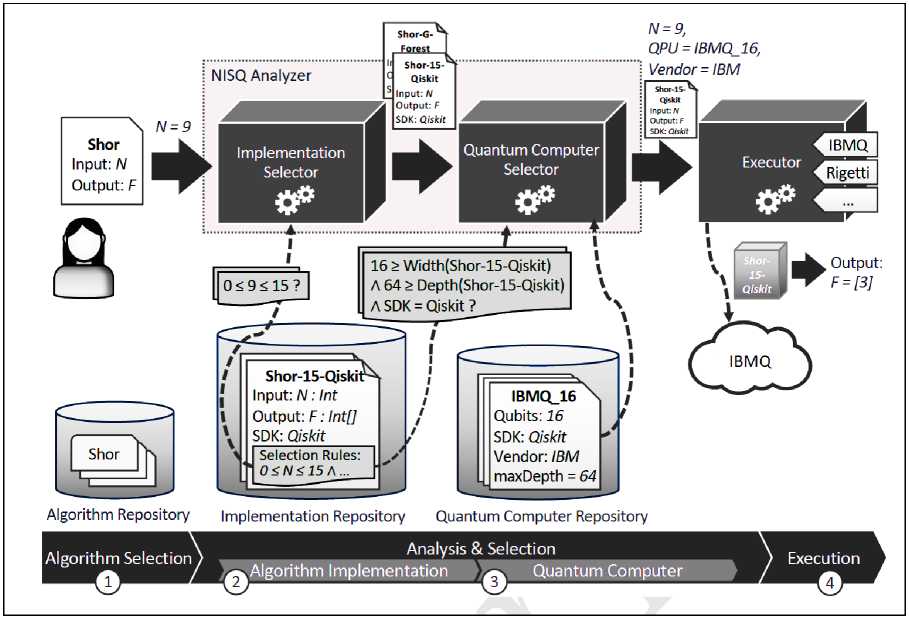

In principle, a quantum computer can solve certain computational tasks that would require an unreasonable amount of time on any classical supercomputer. Quantum computing is currently moving from an academic idea to a practical reality. Quantum computing in the cloud is already available and allows users from all over the world to develop and execute real quantum algorithms. However, the companies that are investing heavily in this new technology, such as Google, IBM, Rigetti, Intel, IonQ, NTU MISiS (Moscow), D-Wave and Xanadu, follow diverse technological approaches. As a result, substantially different quantum computing devices are available today. They differ mostly in the number and kind of qubits and in the connectivity between them. Because of that, various methods for realizing the intended quantum functionality on a given quantum computing device are available. This review provides an introduction to and overview of this domain and describes the corresponding methods, also referred to as compilers, mappers, synthesizers, transpilers, or routers.

Realizing Quantum Algorithms on Real Quantum Computing Devices

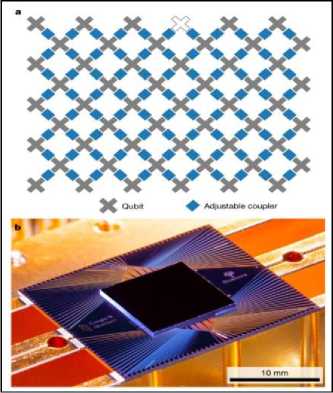

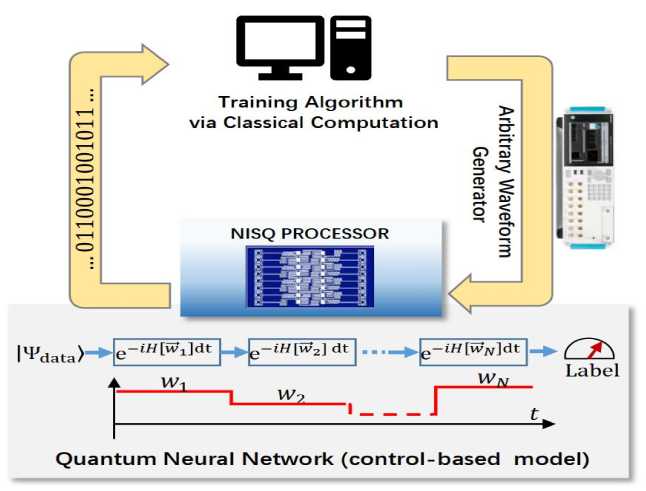

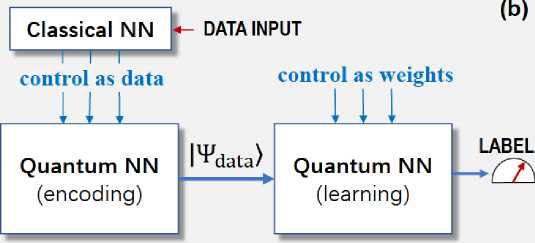

By exploiting the quantum phenomena such as superposition and entanglement, quantum computers promise to solve hard problems that are intractable for even the most powerful conventional supercomputers. In addition, remarkable progress has been made in quantum hardware based on different technologies such as superconducting circuits, trapped ions, silicon quantum dots, and topological qubits. A recent breakthrough in quantum computing has been the experimental demonstration of quantum supremacy using a superconducting quantum processor consisting of 53 qubits. Current and near-term quantum computing devices are often referred to as Noisy Intermediate-Scale Quantum (NISQ) devices [9], to highlight their limited size and imperfect behaviour due to noise. However, while quantum technologies need to improve coherence times and gate fidelities to achieve overall lower error rates, quantum computing in the cloud is already a reality offering small quantum computing devices that are capable of handling basic quantum algorithms. Companies such as Google, IBM, Rigetti, and Intel, have already announced 72-qubit, 50-qubit, 128-qubit, and 49-qubit superconducting devices, respectively.

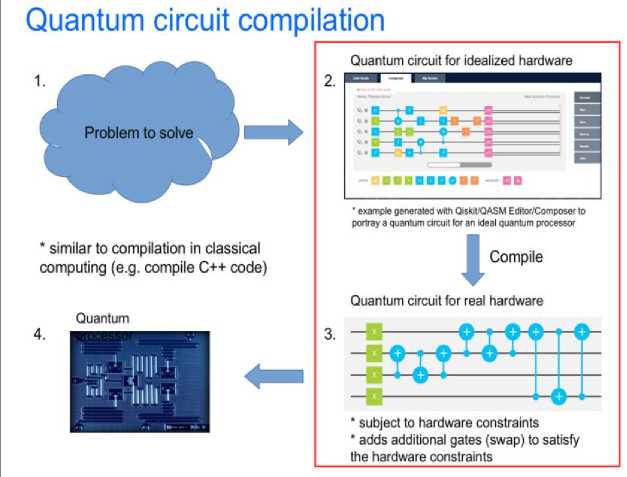

In these quantum processors, qubits are arranged in a 2D topology with limited connectivity between them, in which only nearest-neighbor (NN) interactions are allowed. This is one of the main constraints of today’s quantum devices and frequently requires the quantum information stored in the qubits to be moved to other adjacent qubits, typically by means of SWAP operations. Quantum algorithms, which are described in terms of quantum circuits, neglect the specific qubit connectivity and therefore cannot be directly executed on the quantum computing device; they need to be realized with respect to this and other constraints. The procedure of adapting a circuit to satisfy the quantum processor restrictions is known as the compiling, mapping, synthesis, transpiling, or routing problem. The mapping process often increases the number of quantum operations as well as the depth (number of time-steps) of the quantum circuit. The success rate of the algorithm is consequently reduced, since quantum operations are error prone and the qubit states degrade over time due to interaction with the environment. To limit the negative impact of the mapping, efficient methods that minimize the resulting overhead are required, especially for NISQ devices, in which the lack of active protection against errors will make long computations unreliable.

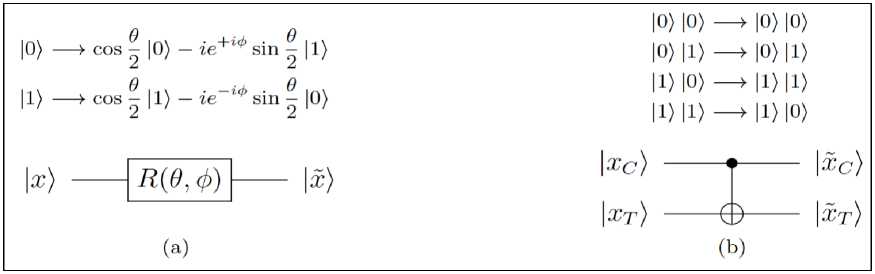

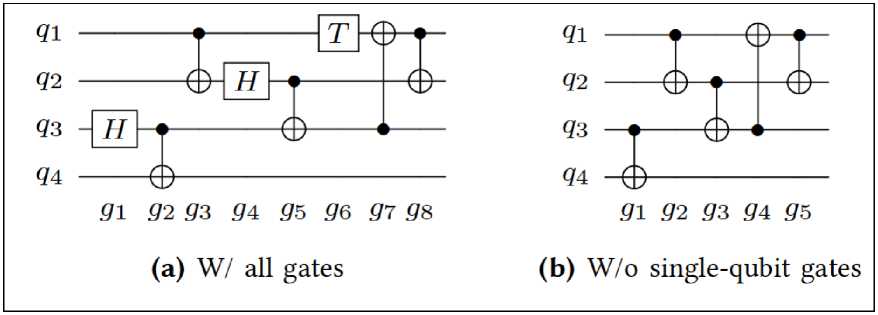

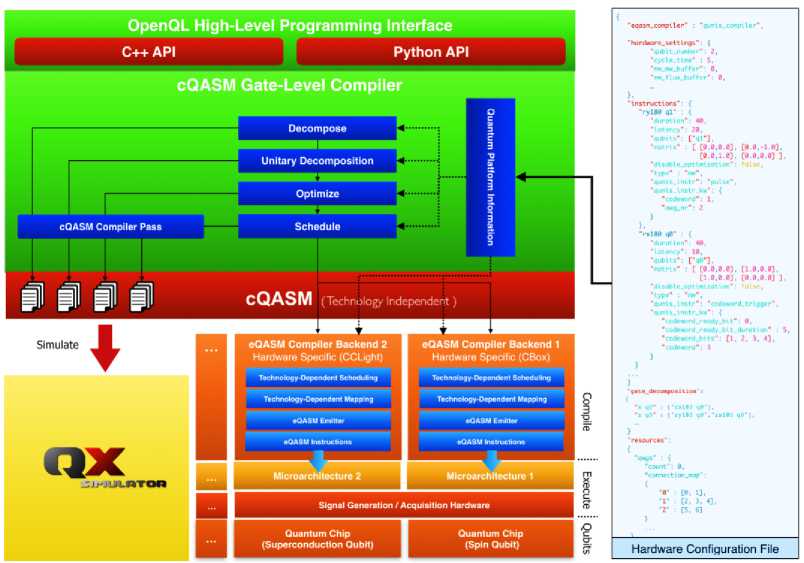

After recalling the basics of quantum computing, two mapping approaches are presented for realizing quantum algorithms on two different superconducting transmon devices. The first targets an IBM processor, the IBM QX4, which consists of five qubits. The second is meant to execute quantum circuits on the Surface-17 chip, composed of seventeen qubits (larger quantum architectures are available from both vendors, but these small devices keep the following descriptions and examples simple). To evaluate the effect of a quantum gate on a quantum state, the vector describing the quantum state simply has to be multiplied by the matrix describing the gate. A suitable finite set of gates forms a universal gate set, i.e., all quantum functions can be realized by them. Sequences of quantum operations finally define quantum algorithms, which are usually described by high-level quantum languages (e.g. Scaffold or Quipper), quantum assembly languages (e.g. OpenQASM 2.0 developed by IBM, or cQASM), or circuit diagrams.
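The statement that a gate acts on a state by matrix-vector multiplication can be checked with a few lines of standard-library Python. The sketch below is only an illustration; the helper name `apply_gate` is an assumption of this example, and the Hadamard gate is used because it appears elsewhere in the text.

```python
import math


def apply_gate(matrix, state):
    """Return matrix * state, i.e. the state after the gate is applied."""
    return [sum(matrix[row][col] * state[col] for col in range(len(state)))
            for row in range(len(matrix))]


S = 1 / math.sqrt(2)

# Hadamard gate as a 2x2 matrix.
H = [[S, S],
     [S, -S]]

# State vector of a single qubit in the basis state |0>.
ket0 = [1.0, 0.0]

# One application creates an equal superposition of |0> and |1>.
plus = apply_gate(H, ket0)

# A second application returns to |0>, because H is its own inverse.
back = apply_gate(H, plus)
```

For n qubits the state vector has 2^n entries and the gate matrix has 2^n × 2^n entries, which is why this direct simulation is practical only for small circuits.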

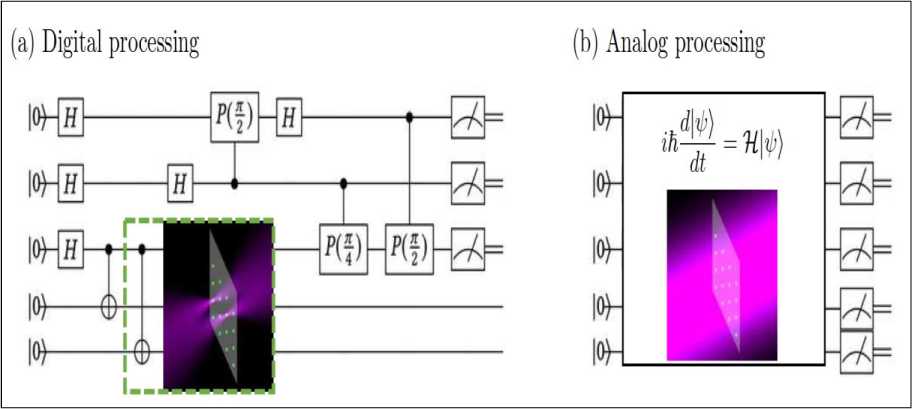

In the following, circuit diagrams such as the one in Fig. 2(a) are used to represent quantum algorithms.

Fig. 2. An example of a quantum circuit

Here, qubits are visualized as circuit lines that pass through quantum operations. Single-qubit operations are denoted by boxes containing their respective label, while a CNOT operation is denoted by a black dot on the control qubit and a ⊕ symbol on the target qubit. Note that the qubit lines do not refer to an actual hardware connection as in classical logic, but rather define the order (from left to right) in which the respective operations are applied. Physical implementations of quantum computers may rely on different technologies. Here, the focus is on quantum computers based on superconducting transmon qubits on silicon chips. In these devices, the operations are conducted through microwave pulses transferred into and out of dilution refrigerators, in which the quantum chips are kept at an operating temperature of around 15 mK. Communication into, out of, and among the qubits is done through on-chip resonators.

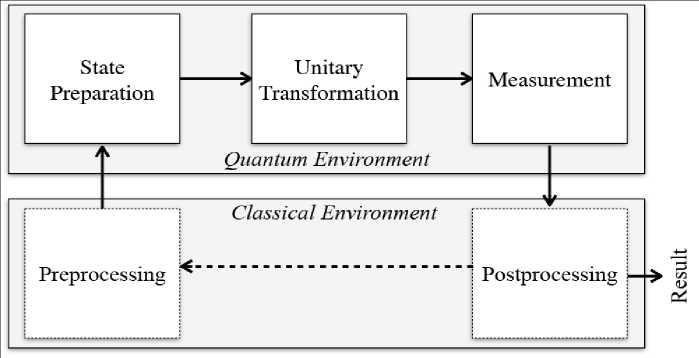

As in classical computers, quantum algorithms described as programs using a high-level language have to be compiled into a series of low-level instructions like assembly code and, ultimately, machine code.

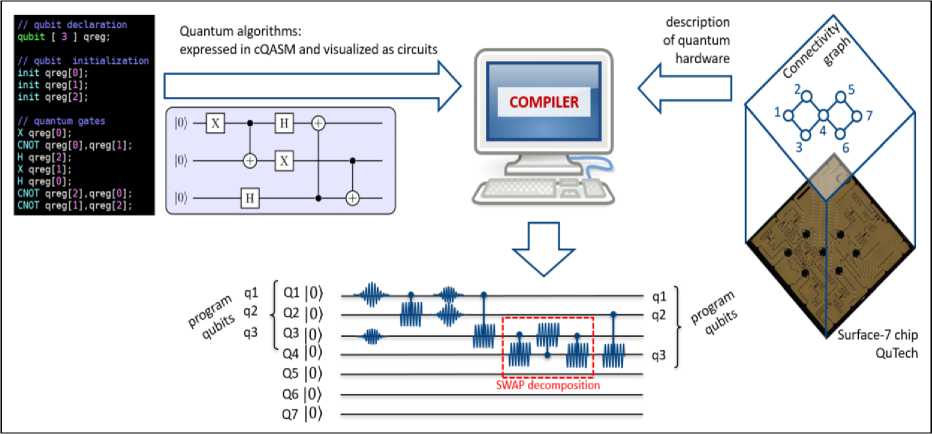

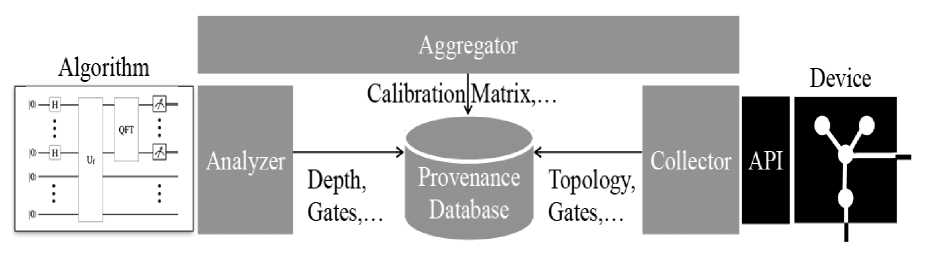

As sketched in Fig. 3, in a quantum computer these instructions need to be ultimately translated into the pulses that operate on the qubits and perform the desired operation.

Fig. 3. Sketch of the mapping process for quantum algorithms

[The compiler depicted in the center receives two kinds of inputs: from the left it receives the quantum algorithm in terms of a sequential list of quantum gates to be executed (expressed in cQASM) and from the right the description of the machine, possibly including the control electronics in addition to the quantum hardware. Its output is a series of scheduled operations that can be executed by the machine and is depicted at the bottom in terms of the control signals that implement it. The initial placement of the program qubits q1, q2, q3 may differ from the final placement. For simplicity we have assumed that the CNOT and H gates are available in the machine’s gate set instead of the native gates of Surface-7].

Thus, quantum algorithms can be described as a list of sequential gates, each acting on a few qubits only, and visualized in terms of quantum circuits. Quantum circuits cannot be directly realized on real quantum processors, but need to be adapted to the specificity of each quantum device. In addition to preserving all dependencies between the quantum operations, compilers of quantum circuits must perform three important tasks: 1) express the operations in terms of the gates native to the quantum processor, a task called gate decomposition, 2) initialize and maintain the map specifying which physical qubit (qubit in the quantum device) is associated to each program qubit (qubit in the circuit description, sometimes called logical qubit in the literature), a task called placement of the qubits, and 3) schedule the two-qubit gates compatibly with the physical connectivity, often by introducing additional routing operations.

Remark. We do not elaborate on the gate decomposition here, apart from observing that most current quantum devices provide a native gate set that is equivalent to, and often larger than, the universal gate set. The two remaining tasks are performed by the circuit mapper within the compiler. Notice that the task of initializing the qubit placement is expected to play an important role in near-term devices, but will probably have a relatively limited impact when algorithms grow in depth. For this reason, the main focus is on the problem of minimizing the routing overhead, arguably the most impactful mapping task, especially when quantum error correction is excluded.

The problem is simply stated: one needs to schedule a two-qubit gate but the corresponding program qubits are currently placed on non-connected physical qubits. The placement must therefore be modified with the goal of moving the involved qubits to adjacent connected ones. Quantum information cannot be copied and there is essentially one way of transferring it, namely by applying SWAP gates that effectively exchange the state of two connected qubits.
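The routing step just described can be sketched as follows. This is a naive illustration, not one of the mappers discussed in this review: it moves one program qubit along a shortest path of the coupling graph until it is adjacent to its partner. The function names, the linear four-qubit coupling graph, and the placement convention (entry k holds the program qubit on physical qubit k) are assumptions of this example.

```python
from collections import deque


def shortest_path(coupling, start, goal):
    """Breadth-first search on the coupling graph (assumed connected)."""
    previous = {start: None}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            break
        for neighbor in coupling[node]:
            if neighbor not in previous:
                previous[neighbor] = node
                queue.append(neighbor)
    path = []
    node = goal
    while node is not None:
        path.append(node)
        node = previous[node]
    return path[::-1]


def route_with_swaps(coupling, placement, prog_a, prog_b):
    """Return the SWAPs that bring prog_a next to prog_b, and the new placement."""
    placement = list(placement)
    path = shortest_path(coupling,
                         placement.index(prog_a),
                         placement.index(prog_b))
    swaps = []
    # Swap prog_a along the path, stopping one step before prog_b.
    for src, dst in zip(path[:-2], path[1:-1]):
        placement[src], placement[dst] = placement[dst], placement[src]
        swaps.append((src, dst))
    return swaps, placement


# Hypothetical device: four physical qubits in a line, 0 - 1 - 2 - 3.
coupling = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2]}
placement = [0, 1, 2, 3]

# A two-qubit gate between program qubits 0 and 3 needs two SWAPs first.
swaps, new_placement = route_with_swaps(coupling, placement, 0, 3)
```

A practical mapper would also consider moving both qubits towards each other, and would weigh the SWAPs against the gates still to be scheduled. This sketch always moves only the first qubit.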

Another approach is based on teleportation, corresponding to long-distance transfer of the qubit state. It requires the creation of multi-qubit entangled states that are preliminarily distributed across the qubit register and that can be consumed to transfer a qubit state. Since the distribution of the entangled state requires SWAP gates, the teleportation approach can be seen as a SWAP-based routing with relaxed time constraints.

The functionality of the circuit mapper, which is usually embedded in the compiler, is sketched in Fig. 3. It requires two separate inputs, one related to the abstract algorithm to implement and the other associated with the quantum processor chosen for its execution. The former is usually provided in terms of high-level code or Quantum Assembly Language (QASM) instructions, an explicit list of low-level operations corresponding to single- and two-qubit gates, and can be visualized in the form of quantum circuits. The latter corresponds to a description of the hardware, from the qubit topology and connectivity to the electronics that generate and distribute the control signals. The compiler is in charge of decomposing the operations in terms of the gates native to the processor and then of the mapping process. The mapping process comprises the initial placement of qubits, qubit routing, and operation scheduling.

Several solutions have already been proposed for solving the mapping problem; that is, to make quantum circuits executable on the targeted quantum device by transforming and adapting them to the constraints of the quantum processor. Most of the works focus on NISQ devices such as the IBM or Rigetti chips as they are accessible through the cloud. The proposed mapping solutions differ and therefore can be classified according to the following characteristics:

-

- Quantum hardware constraints: one of the main restrictions of current quantum devices is the limited connectivity between the qubits. Different quantum processors, even within the same family, can have different topologies such as a linear array (1D), a 2D array with only nearest-neighbor interactions, or more arbitrary shapes. Although most of the works on mapping focus on the qubit connectivity constraint, there are other restrictions that originate from the classical control part and that reduce the parallelizability of quantum gates. This kind of constraint become more and more relevant when scaling-up quantum systems as resources need to be shared among the qubits.

-

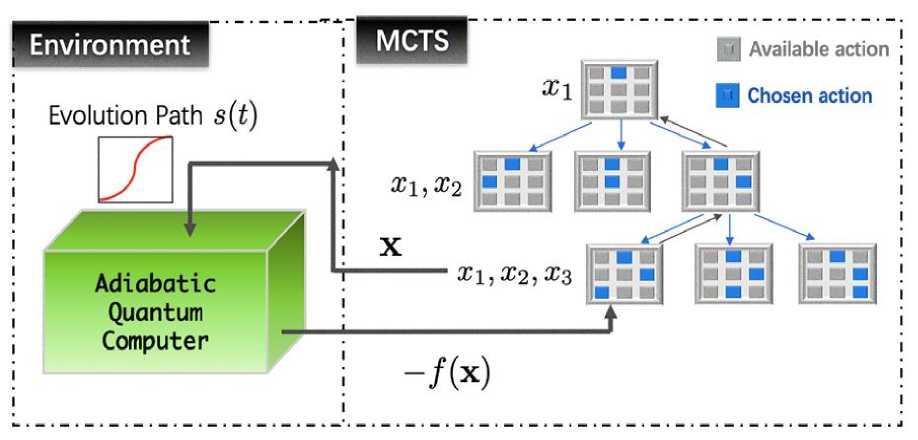

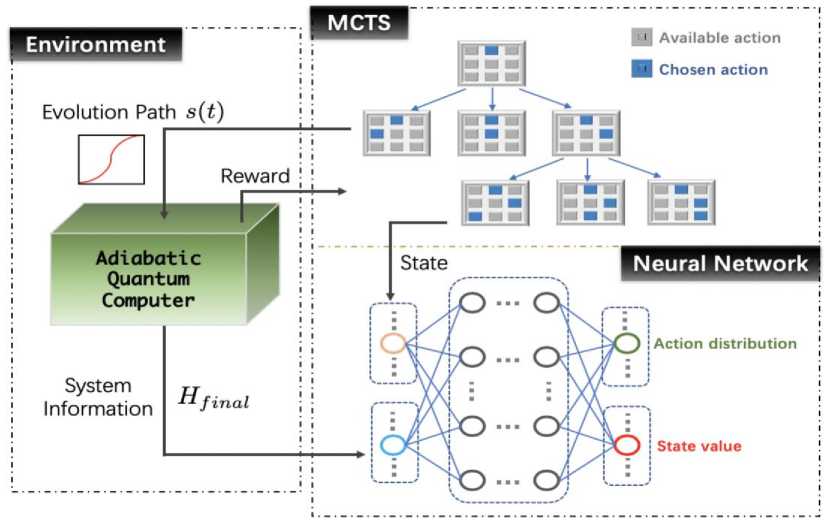

- Solution approach and methodology: exact approaches are feasible when considering relatively small number of qubits and gates, giving minimal or close-to-minimal solutions. However, they are not scalable. Approximate solutions using heuristics can be used for large quantum circuits. Some used methods are (Mixed) Integer Linear Programming ((M)ILP) solvers, Satisfiability Modulo Theory (SMT) solvers, heuristic (search) algorithms, decision diagrams, or even temporal planners and reinforcement learning.

Cost function: there are different metrics that can be optimized in the mapping process. The most common cost functions are the number of gates (i.e. minimize the number of added SWAPs) and the circuit depth or latency (i.e. minimize the number of time-steps of the circuit). Recent works started optimizing directly for circuit reliability (i.e. minimize the error rate by choosing the most reliable paths).

Solution features: In addition to the just mentioned characteristics, there are other important features that can lead to better solutions. Some examples are the look-back strategy in the routing that refers to taking into account the previous already scheduled operations when selecting the routing path or the look-ahead feature that considers not only the current two-qubit gates that need to be routed and scheduled but also some of the future ones with some weights. Besides that, also pre-processing steps dedicated to particular quantum functionality have shown to be extremely beneficial.
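The two most common cost functions named above, gate count and circuit depth, can be computed as in the sketch below. It rests on simplifying assumptions that are not part of the source: every gate takes exactly one time-step, and a circuit is a list of `(name, qubits)` tuples. The sample circuit is hypothetical.

```python
def gate_count(circuit):
    """Total number of gates in the circuit."""
    return len(circuit)


def added_swaps(circuit):
    """Number of SWAP gates, i.e. the routing overhead in gates."""
    return sum(1 for name, _qubits in circuit if name == "swap")


def circuit_depth(circuit):
    """Number of time-steps when every gate starts as early as possible."""
    busy_until = {}  # qubit -> last time-step in which it is used
    depth = 0
    for _name, qubits in circuit:
        step = 1 + max(busy_until.get(q, 0) for q in qubits)
        for q in qubits:
            busy_until[q] = step
        depth = max(depth, step)
    return depth


# Hypothetical mapped circuit on three qubits, with one routing SWAP.
circuit = [
    ("h", (0,)),
    ("h", (1,)),
    ("cnot", (0, 1)),
    ("swap", (1, 2)),
    ("cnot", (0, 1)),
]

count = gate_count(circuit)
swaps = added_swaps(circuit)
depth = circuit_depth(circuit)
```

The two Hadamard gates act on different qubits and share the first time-step, so five gates give a depth of four. A reliability-oriented cost function would additionally need per-gate error rates for the device, which are not given here.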

Certain machines allow for extensive pre-compilation of the algorithms that solely excludes the routing operations and parallelization information. In this case the mapper receives QASM code that uses only the one- and two-qubit gates available to the device.

The output only adds routing operations. These machines require:

- symmetric two-qubit gates;

- homogeneous single-qubit gate set;

- the possibility of measuring any qubit in the same basis.

Here, SWAP gates are needed only to overcome the connectivity limitations. The mapper needs to know how to decompose SWAP gates into the available gate set. Often there are multiple ways to do so; for example, each decomposition gives rise to a second one, obtained by exchanging the roles of the two qubits involved in the first one. While the transmon architecture of the Surface-17 chip exhibits the three properties, they are not required for functioning quantum devices. When the properties are not satisfied, the mapper cannot fully separate the gate decomposition and routing tasks. When the two-qubit gates are asymmetric, decisions concerning the addition of extra gates must be made at the time of routing and scheduling. For example, when CNOTs are used, as in the IBM architecture, extra Hadamard gates may be required to invert the roles of the control and target qubits. This can be known only at the time of routing, i.e. when the qubit placement in the CNOT is known. When the available native one-qubit gates differ from (physical) qubit to qubit, the scheduling involves two steps.
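Two standard identities lie behind the remarks above and can be verified numerically: a SWAP equals three alternating CNOTs (in either of two orders), and Hadamard gates on both qubits before and after a CNOT exchange the roles of control and target. The sketch below uses plain Python lists and assumes the basis ordering |ab⟩ with index 2a + b. The matrix names are chosen for this example only.

```python
def matmul(a, b):
    """Product of two square matrices given as nested lists."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def close(a, b, tol=1e-12):
    """Entry-wise comparison of two matrices."""
    return all(abs(x - y) < tol for ra, rb in zip(a, b) for x, y in zip(ra, rb))


# Two-qubit gates in the basis |00>, |01>, |10>, |11> (qubit a first).
CNOT_AB = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 0, 1], [0, 0, 1, 0]]  # control a
CNOT_BA = [[1, 0, 0, 0], [0, 0, 0, 1], [0, 0, 1, 0], [0, 1, 0, 0]]  # control b
SWAP = [[1, 0, 0, 0], [0, 0, 1, 0], [0, 1, 0, 0], [0, 0, 0, 1]]

# Hadamard on both qubits, H (x) H, written out explicitly.
HH = [[0.5, 0.5, 0.5, 0.5],
      [0.5, -0.5, 0.5, -0.5],
      [0.5, 0.5, -0.5, -0.5],
      [0.5, -0.5, -0.5, 0.5]]

# First decomposition of SWAP, and the second one with the roles exchanged.
swap_first = matmul(CNOT_AB, matmul(CNOT_BA, CNOT_AB))
swap_second = matmul(CNOT_BA, matmul(CNOT_AB, CNOT_BA))

# Inverting the direction of a CNOT costs four extra Hadamard gates.
cnot_inverted = matmul(HH, matmul(CNOT_AB, HH))
```

On a device whose CNOTs are available in only one direction per qubit pair, some CNOTs in a SWAP decomposition need this inversion. This is why the extra gate cost is known only once the placement is fixed.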

Suppose that one needs to schedule a gate U acting on the k-th program qubit. In this case it is required to 1) compute the sequence of available gates that implements, or approximates, U for the different physical qubits (or at least for those at a short distance from the physical qubit currently associated with program qubit k), and 2) add the cost of the routing. Selecting the better option therefore requires performing multiple gate decompositions and can be done only at scheduling time, when the placement is known. To date, all architectures provide the same set of one-qubit gates for each physical qubit, but this may change due to the pressure to reduce control resources or when the gate fidelity is used as the metric to guide mapping decisions. Finally, when not all qubits can be directly measured, or when the available measurements differ from qubit to qubit, additional gates are required: in the first case to move the quantum state towards measurable qubits, and in the second case to adapt the measurement basis.

Despite their differences, all mappers need an internal representation of key quantities and these can be combined in the concept of the execution snapshot. As the name suggests, the execution snapshot is a complete description of the algorithm and its current, usually partial, schedule.

It contains:

- the dependency graph of the algorithm, with the indication of which gates have already been scheduled;

- the initial placement that associates each program qubit with a physical qubit;

- the current placement of the qubits;

- the partial schedule with the timing information and explicit parallelism;

- the settings of the control electronics for the execution.

The data structure specifying the execution snapshot varies from mapper to mapper. Here we describe an intuitive one. The dependency graph is a directed acyclic graph with nodes representing the quantum gates and edges indicating dependencies (the target node corresponds to the gate that depends on the source node). Nodes can have one of two colors, differentiating the gates already scheduled from those that still need to be scheduled. An additional color may mark the gates that can be scheduled next according to the algorithmic dependencies. Qubit placement is represented by an array of integers of size equal to the number of physical qubits: the k-th entry is the index of the program qubit associated with the k-th physical qubit, and a special integer indicates that the qubit is “free” in situations where the program requires fewer qubits than are present in the quantum hardware. Finally, the schedule with timing information can be provided as a table by discretizing the time into clock cycles, where the clock cycle is the greatest common divisor of the gate durations. This table also includes any additional gates from gate decomposition and routing. The mapper has to take into account the constraints from the control electronics. To this end one needs a way to track, for every clock cycle in which a certain gate can be performed according to the logical dependencies, whether that gate can be executed compatibly with all the gates already scheduled. Therefore, the mapper needs to be aware of how the set of compatible gates (i.e. those that are part of the physically available gate set and that do not conflict with gates already scheduled) changes at each clock cycle, and to update it dynamically. The conceptually simplest method is to keep an explicit list of the compatible gates for each physical qubit, but this may not be the most efficient implementation. In fact, more compact representations can be derived for specific architectures.
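One possible rendering of the intuitive data structure described above is sketched below. It is not the representation used by any particular mapper. The class and field names, the use of `-1` as the special “free” integer, and the gate durations are assumptions of this example, and the control-electronics settings are left out for brevity.

```python
from dataclasses import dataclass, field
from functools import reduce
from math import gcd

FREE = -1  # assumed special integer: physical qubit holds no program qubit


@dataclass
class ExecutionSnapshot:
    dependencies: dict        # gate id -> set of gate ids it depends on
    initial_placement: list   # entry k: program qubit on physical qubit k
    current_placement: list   # same convention, updated during routing
    scheduled: set = field(default_factory=set)    # "colored" as done
    schedule: dict = field(default_factory=dict)   # clock cycle -> gate ids

    def ready_gates(self):
        """Gates not yet scheduled whose dependencies are all scheduled."""
        return sorted(gate for gate, needed in self.dependencies.items()
                      if gate not in self.scheduled
                      and needed <= self.scheduled)

    def schedule_gate(self, gate, cycle):
        """Record a gate in the timing table and mark it as scheduled."""
        self.schedule.setdefault(cycle, []).append(gate)
        self.scheduled.add(gate)


def clock_cycle(durations):
    """Clock cycle as the greatest common divisor of the gate durations."""
    return reduce(gcd, durations)


# Gates 0 and 1 are independent, gate 2 needs both, gate 3 needs gate 2.
snapshot = ExecutionSnapshot(
    dependencies={0: set(), 1: set(), 2: {0, 1}, 3: {2}},
    initial_placement=[0, 1, FREE],
    current_placement=[0, 1, FREE],
)
ready_first = snapshot.ready_gates()
snapshot.schedule_gate(0, cycle=0)
snapshot.schedule_gate(1, cycle=0)
ready_next = snapshot.ready_gates()

# Hypothetical gate durations in nanoseconds.
cycle_ns = clock_cycle([20, 40, 60])
```

The `ready_gates` method plays the role of the additional node color mentioned above: it lists the gates that can be scheduled next according to the algorithmic dependencies.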

Devices based on superconducting circuits are by no means the only approach to scalable quantum devices. Multiple physical implementations of quantum processors are currently being developed, including but not limited to trapped ions, silicon quantum dots, photonics, neutral atoms, and topological systems. Each technology is at a different level of maturity, and the challenges to scalability also differ. We provide a few examples in which particular physical implementations offer unique features; only three are presented, for illustration purposes. Most architectures are limited to planar connectivity between qubits, but trapped ions provide all-to-all connectivity, at least inside groups of tens of ions. This originates from their long-distance Coulomb interaction and is mediated by their collective vibrational modes. However, this desirable property comes at the price of reduced two-qubit gate parallelism. Multi-qubit gates are also available for trapped ions, and this may require an enlarged instruction set. Photonic architectures are uniquely positioned for tasks that combine computation and communication, such as those at the nodes of quantum repeater networks. However, they are limited to demolition measurements, in which the qubit is “destroyed” when measured since the photon is absorbed by the detector. One can generate a new photon to re-initialize the qubit state. In silicon quantum dots the role of qubits is played by the spin of electrons confined in electromagnetic potential wells called dots. The simplest scheme is one electron per dot, but alternative configurations are also considered. Two-qubit gates are implemented via the exchange interaction between two electrons in nearby dots. However, certain dots can be momentarily empty, and electrons can be moved to empty dots in a way that maintains the qubit coherence, the so-called shuttling operation. 
The electron movement can be interpreted either as a change in the device connectivity or as an alternative qubit routing not based on SWAP gates. Specialized mappers are required to take full advantage of these capabilities.

During the compilation process, quantum circuits need to be modified to comply with the constraints of the quantum device. This usually results in an increase in the number of gates and the circuit depth, which negatively affects the reliability of the computation. Therefore, minimizing this mapping overhead is crucial, especially for NISQ devices, in which no or hardly any error protection mechanisms will be used. Mappers have been developed for two specific superconducting transmon processors, the IBM QX4 and the Surface-17, using different solution approaches. In addition, other device types, the internal data representations used by the mappers, and the peculiarities of other possible physical implementations of quantum processors have been described above. There are still several open questions requiring the attention of the community working on mappers of quantum algorithms.

First, what is the best metric to optimize? Most of the works use as the optimization metric either the number of gates or the circuit depth. Recent works started considering the expected reliability of the overall quantum computation. New metrics, or possibly a combination of the existing ones, need to be investigated.

Second, should we aim for machine-specific solutions or for more general-purpose and flexible ones, capable of targeting different quantum devices and technologies and different optimization problems? So far, the proposed mappers can be considered ad hoc solutions that are mostly meant for a particular chip or for a similar kind of processor in which qubits are moved by SWAPs.

While general-purpose mappers would avoid repeating the development effort for each device, the risk is that general optimization strategies will not take full advantage of the hardware capabilities. In addition, the change of the quantum technology may require very different mapping strategies.

Third, what is a good balance between the quality of the obtained solution and the time required to compile the circuit? It is necessary to analyze the trade-off between mapping optimizations and runtime, especially for large-scale quantum algorithms.

Finally, it is important to mention that these optimizations should consider the characteristics of both the quantum device and the quantum application. In this direction, an approach has been proposed that takes the planned quantum functionality into account when determining an architecture.

An application of an algorithm is only as efficient as the oracle it supplies. As an example of the gate-based computing approach, let us consider a qualitative description of the quantum amplitude amplification algorithm. There are multiple ways to implement the contract of multiplying the amplitudes of the recognized states by (– 1):

-

1. Most texts in quantum computing use a nice trick that efficiently performs the multiplication with a

-

2. Recall the ZXZX gate sequence, which multiplies the amplitudes of a state by (– 1) (Fig. 5). This works on any state, unlike the trick above, but at the cost of an increased number of gates.

single qubit. Prepare the state of an ancillary qubit with amplitudes a0 = —and ax = —, using an 0 2 1 2

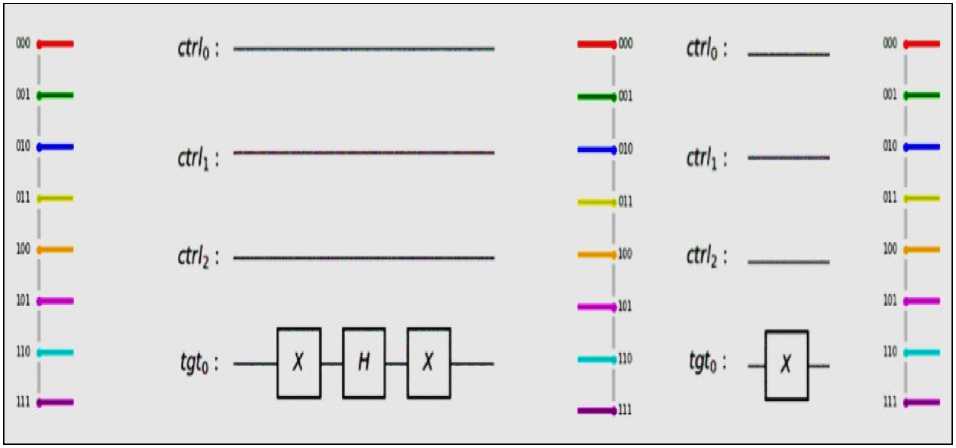

HX gate sequence on the default state. We then apply an X gate to that ancillary qubit, which flips the phase of control qubits (Fig. 4). Note that the trick only works on this specific state.

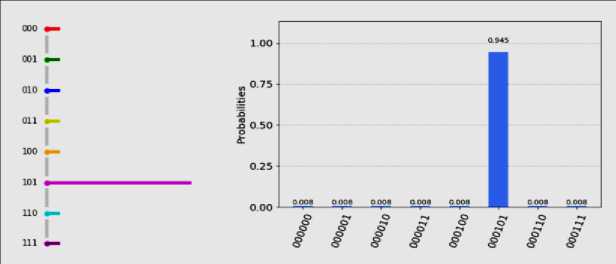

Fig. 4. A circuit that multiplies the state by (– 1) using a specially-prepared state, applied twice for illustration. [The target qubit is not shown in the histograms]

Fig. 5. A circuit using the ZXZX gate sequence, which multiplies any state by (– 1), applied twice for illustration. [The target qubit is not shown in the histograms]

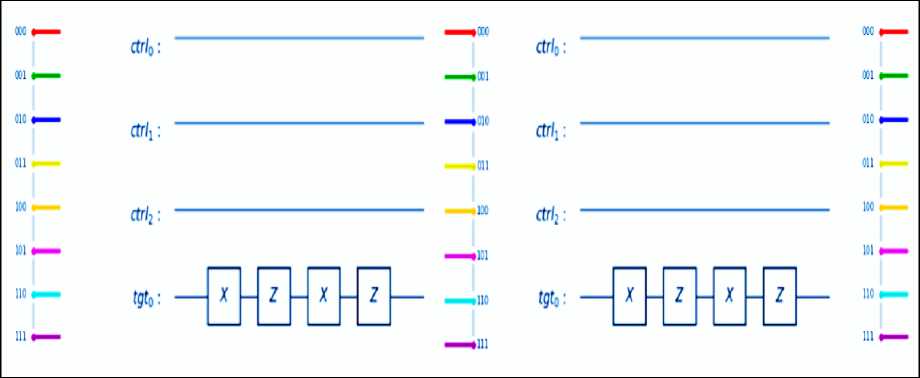

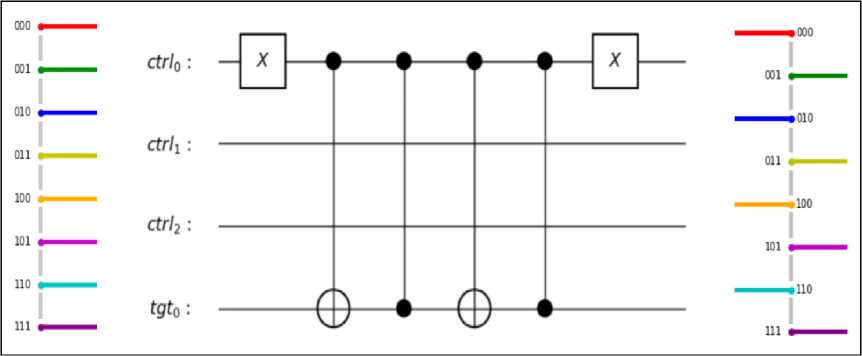
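Both sign-flip options can be checked numerically with 2×2 matrices. The sketch below is only an illustration in NumPy, not the circuit code used for the figures; it assumes that "HX on the default state" means applying X first and then H to the state with amplitudes (1, 0).

```python
import numpy as np

X = np.array([[0, 1], [1, 0]], dtype=float)
Z = np.array([[1, 0], [0, -1]], dtype=float)
H = np.array([[1, 1], [1, -1]], dtype=float) / np.sqrt(2)
I2 = np.eye(2)

# Option 1: prepare the ancilla from the default state (X first, then H).
default_state = np.array([1.0, 0.0])
ancilla = H @ X @ default_state      # amplitudes (1/sqrt(2), -1/sqrt(2))
after_x = X @ ancilla                # the same state multiplied by -1

# Option 2: the ZXZX sequence equals -I, so it negates any state.
zxzx = Z @ X @ Z @ X

print("ancilla:", ancilla)
print("X on ancilla:", after_x)
print("ZXZX:")
print(zxzx)
```

The first option relies on the ancilla being in exactly this state, whereas the matrix product in the second option is the negated identity and therefore acts the same way on every input.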

Let’s take a look at a simple oracle, recognizing the basis states (binary strings) representing even integers. For a quantum system with three qubits, the oracle should negate the amplitudes of the basis states ending in 0, e.g. 000, 010, 100, and 110 (Figs 6 and 7).

Fig. 6. An oracle that recognizes the even states and multiplies their amplitudes by (– 1) using the single-qubit trick

Fig. 7. An oracle that recognizes the even states and multiplies their amplitudes by (– 1) using the ZXZX gate

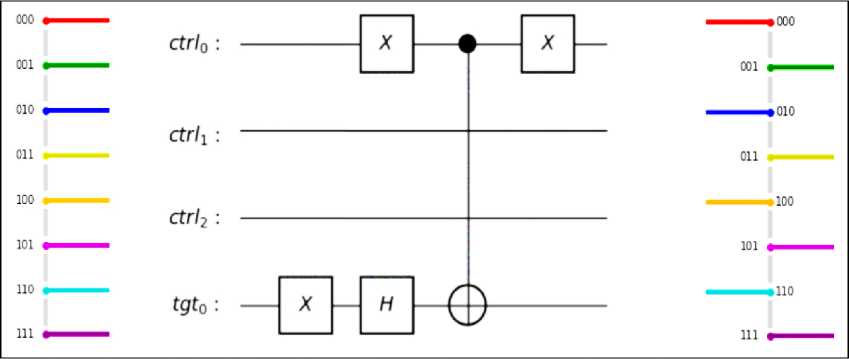

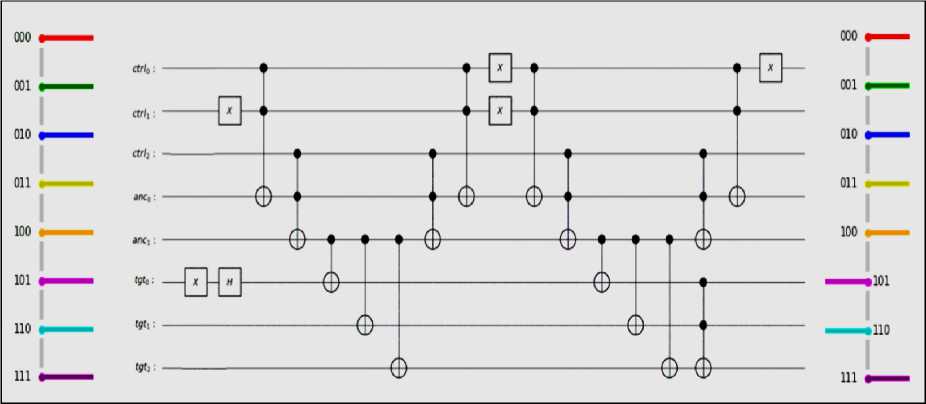

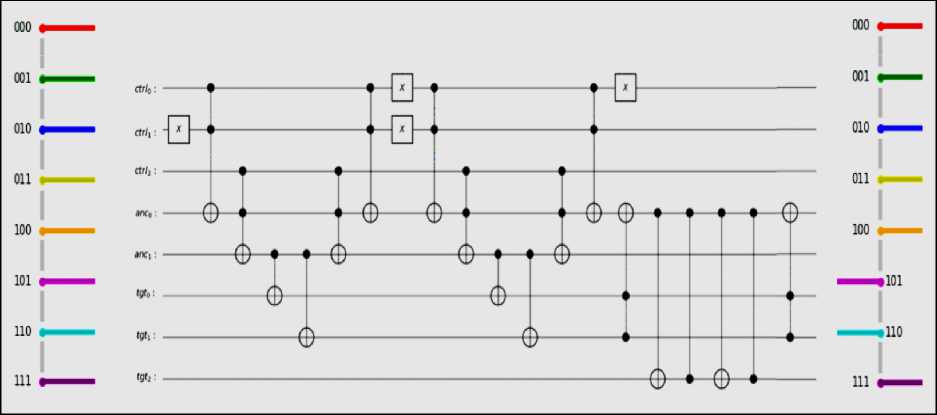

Note that we have to apply a control gate to the qubit at index j = 0. Recall the control gates are applied when the control qubit is 1. In order to match 0, we apply an X to that qubit. In the resulting state, the amplitudes of the even outputs have been multiplied by (– 1), and the amplitudes of the odd outputs remain unchanged.
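The net effect of the even-number oracle can be sketched as a matrix acting on the three-qubit state vector. The sketch assumes that qubit 0 is the rightmost bit of the binary string, and it uses the fact that a sign flip controlled by a qubit acts on the register like a Z gate on that qubit; the ancillary target qubit is left out. The helper `kron_all` is a hypothetical name.

```python
import numpy as np

X = np.array([[0, 1], [1, 0]], dtype=float)
Z = np.array([[1, 0], [0, -1]], dtype=float)
I2 = np.eye(2)


def kron_all(*ops):
    """Tensor product of single-qubit operators, leftmost bit first."""
    out = np.array([[1.0]])
    for op in ops:
        out = np.kron(out, op)
    return out


# Z puts -1 on the 1 branch of qubit 0; the X gates around it move the
# sign to the 0 branch, so that states ending in 0 are matched instead.
gate_q0 = X @ Z @ X                       # diag(-1, +1)
even_oracle = kron_all(I2, I2, gate_q0)   # qubit 0 is the last factor

state = np.full(8, 1 / np.sqrt(8))        # uniform superposition
after = even_oracle @ state
for index, amplitude in enumerate(after):
    print(format(index, "03b"), round(float(amplitude), 4))
```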

Next, let’s look at an oracle that recognizes a particular set of basis states specified element by element, e.g. {101, 110}. In this example the oracle must examine all of the basis states, and multiply their amplitudes by (– 1) only if all qubits match an element in the given subset. We will need to introduce n – 1 ancillary qubits, used as the controls of the multiplication gate(s) on the target qubit (Figs 8 and 9).

Fig. 8. An oracle that recognizes the states in a set, and multiplies their amplitudes by (– 1) using the single-qubit trick

Fig. 9. An oracle that recognizes the states in a given set, and multiplies their amplitudes by (– 1) using the ZXZX gate
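For checking small cases, the contract of the set-based oracle can be written as a diagonal matrix. This sketch models only the net effect on the amplitudes; it does not reproduce the controlled gates or the ancillary qubits of Figs 8 and 9. The function name `set_oracle` is hypothetical.

```python
import numpy as np


def set_oracle(marked, n_qubits):
    """Diagonal matrix with -1 on the marked basis states and +1 elsewhere."""
    diagonal = np.ones(2 ** n_qubits)
    for bits in marked:
        diagonal[int(bits, 2)] = -1.0
    return np.diag(diagonal)


oracle = set_oracle({"101", "110"}, n_qubits=3)
state = np.full(8, 1 / np.sqrt(8))
for index, amplitude in enumerate(oracle @ state):
    print(format(index, "03b"), round(float(amplitude), 4))
```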

While this is a useful oracle, it is not very efficient, as it requires a large number of controlled gates to match all bits in the recognized basis states. Oracles can also be used for satisfiability problems like 3-SAT, in which we create a black box that recognizes the basis states that satisfy a Boolean expression. While we won’t provide an in-depth discussion, there is one in IBM’s introduction. Another interesting oracle is one that recognizes prime numbers, which could potentially be built using Shor’s algorithm. Here we concentrate our attention on the quantum search problem in an unstructured database.

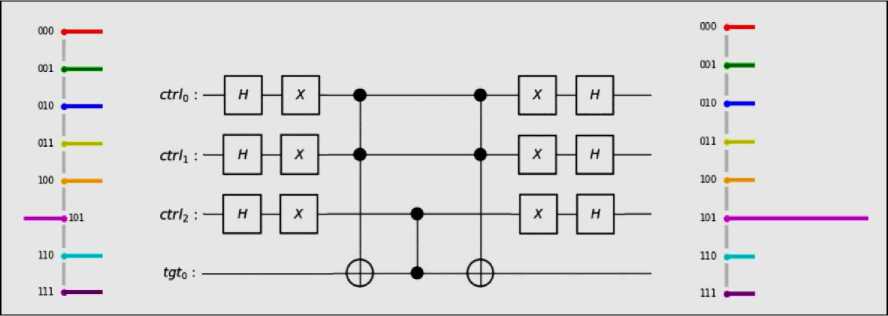

In efficient quantum computing we want to increase the probability of some desired outputs, and therefore decrease the number of times we need to repeat the computation. We have already seen how Phase Estimation can help increase the amplitudes of the outputs that best approximate a parameter. Grover’s algorithm performs amplitude amplification by using an oracle that recognizes a single basis state. The algorithm uses what is called the Grover iterate, a sequence of two steps (an oracle O followed by the diffusion operator D), which is applied a specific number of times. We discussed oracles in the previous subsection. The diffusion operator D has the net effect of inverting all amplitudes in the quantum state about their mean. This causes the amplitudes of the good states to increase, by an amount that depends on the number of basis states N, while the amplitudes of all other outcomes (the bad states) decrease. If one thinks of the computation as a die, the probability of one of the faces is dramatically increased. Note that the amplitudes remain real throughout the application of the oracle and the diffusion operator. A complete implementation of Grover’s algorithm is provided in the Appendix.
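The inversion about the mean can be verified directly. The sketch below assumes the common matrix form D = 2|s⟩⟨s| – I, where |s⟩ is the uniform superposition; this form is not spelled out in the text, and it may differ from the circuit in the Appendix by a global factor of (– 1).

```python
import numpy as np

dim = 8
s = np.full(dim, 1 / np.sqrt(dim))            # uniform superposition
D = 2 * np.outer(s, s) - np.eye(dim)          # assumed form of the diffusion operator

# State after an oracle that marked the basis state 101 (index 5).
amplitudes = s.copy()
amplitudes[5] *= -1

via_matrix = D @ amplitudes
via_mean = 2 * amplitudes.mean() - amplitudes  # inversion about the mean

print(np.round(via_matrix, 4))
print(np.allclose(via_matrix, via_mean))
```

For this input the marked amplitude grows from 1/√8 to 2.5/√8, while every other amplitude shrinks to 0.5/√8.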

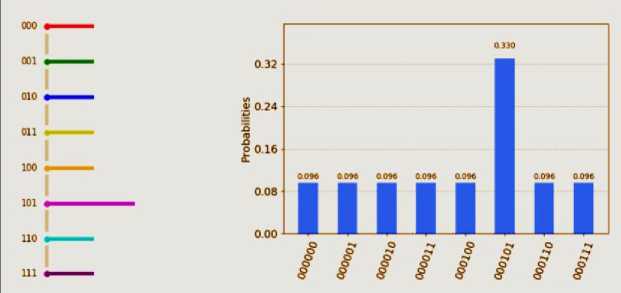

As an example, let’s examine a three-qubit quantum state with the desired outcome being 101, using the set-based oracle described in the previous subsection (Fig. 10).

Fig. 10. A three-qubit state after a single pass of the oracle, which is then passed to the diffusion operator (multiplication of amplitudes by (– 1) not shown in the circuit)

We repeat the application of the oracle and the diffusion operator for $\lfloor \sqrt{N} \rfloor$ iterations (Fig. 11).

Fig. 11. The result of Grover’s algorithm after $\lfloor \sqrt{N} \rfloor = 2$ iterations ($N = 8$) of the oracle and diffusion operator

Note that it is possible to over-iterate, which would decrease the magnitude of the desired output during the application of the diffusion operator (Fig. 12).

Fig. 12. The result of Grover’s algorithm after $\lfloor \sqrt{N} \rfloor + 1 = 3$ iterations ($N = 8$) of the oracle and diffusion operator
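The effect of the iteration count can be reproduced with a few lines of state-vector arithmetic. This is a minimal sketch, not the implementation from the Appendix: the oracle is modeled as a sign flip of one amplitude and the diffusion operator as an inversion about the mean. The function name `grover_probability` is hypothetical.

```python
import math

import numpy as np


def grover_probability(n_qubits, target, iterations):
    """Probability of measuring `target` after the given number of Grover iterates."""
    size = 2 ** n_qubits
    amplitudes = np.full(size, 1 / np.sqrt(size))
    for _ in range(iterations):
        amplitudes[target] *= -1                        # oracle
        amplitudes = 2 * amplitudes.mean() - amplitudes  # diffusion
    return float(amplitudes[target] ** 2)


target = int("101", 2)
k = math.isqrt(8)                     # floor(sqrt(N)) = 2 for N = 8
for iterations in (1, k, k + 1):
    print(iterations, grover_probability(3, target, iterations))
```

Under these assumptions the probability of measuring 101 is about 0.78 after one iteration, about 0.95 after two, and drops to about 0.33 after three, which is the over-iteration effect shown in Fig. 12.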

Therefore, any quantum computation can be simulated classically; the key question is about the efficiency. Much effort has been made trying to pin down the power of quantum computers based on the machinery of the computational complexity theory. In particular, the class of computational (decision) problems that can be solved efficiently by a quantum computer is called BQP (bounded-error quantum polynomial time); the counterpart for classical computers is called P (polynomial time). Of course, BQP cannot be smaller than P; in principle quantum computers can simulate classical computers efficiently. The point is that the foundation of quantum computation cannot be established without a proof showing that BQP is strictly larger than P (i.e. BQP ≠ P). In this sense, this question is as interesting as the famous challenge of proving P ≠ NP (non-deterministic polynomial time). A well-known instance of an NP problem is the factoring problem; Shor’s quantum algorithm is capable of solving the factoring problem in polynomial time, which is not achievable with the best classical algorithm discovered so far.

However, we still cannot rigorously exclude the possibility of the existence of an efficient classical algorithm for factoring. Although again without a proof, it is commonly believed that quantum computers are unable to solve some of the NP problems. In fact, the proof of this statement (if true) provides a possible path to prove P ≠ NP.

Quantum supremacy. A potentially less demanding question would be: when do we expect a quantum computer to be able to perform some well-defined tasks (not necessarily related to any practical problem) that cannot be simulated with any currently available classical device within a reasonable time? Once achieved, the status is called “quantum supremacy”. One may ask, what about Grover’s search algorithm, which provides a quadratic speed-up over the classical search? Can we say that we can already achieve quantum supremacy with Grover’s algorithm? The problem is that, instead of taking the large N limit, quantum supremacy requires us to determine the actual number of qubits and gates that can no longer be simulated by classical computers within a reasonable time.

To elaborate further, we remark that there can be two notions of classical simulation, namely “strong simulation” versus “weak simulation”. Strong simulation refers to the cases of calculating the transition probabilities, or expectation values of observables, to a high accuracy in polynomial time. Weak simulation requires the probability distributions to be accurately reproduced, which involves “sampling” the different outcomes of the quantum devices. Some quantum circuits that cannot be strongly simulated by efficient classical means may have efficient classical methods for weak simulation. However, we should be careful about the accuracy requirement in the simulation tasks. For example, for many decision problems, it may be sufficient to determine the transition amplitudes to within an additive error, instead of a multiplicative error. In other words, the classical simulability of quantum computational problems depends on the accuracy requirement. Currently, there are three popular approaches for achieving quantum supremacy (see Fig. 13), namely (i) boson sampling, (ii) sampling IQP (instantaneous quantum polynomial) circuits, and (iii) sampling chaotic quantum circuits.

In all of these approaches, the distributions of different bit strings or photon numbers are sampled from the quantum devices. Furthermore, they all involve the assumption that classical computers are unable to efficiently determine the transition amplitudes, and/or reproduce (or approximate) the distributions of quantum devices performing these tasks, in the sense of both strong and weak simulations.
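The two notions of simulation can be contrasted on a toy example. In the sketch below a random normalized vector stands in for the output state of a device (an assumption made only for illustration). The strong variant computes the outcome probabilities themselves, while the weak variant only draws bit strings from that distribution. Both are done here by brute force at a cost exponential in the number of qubits, so the sketch shows the definitions and not an efficient method.

```python
import numpy as np

rng = np.random.default_rng(seed=0)
n_qubits = 3
dim = 2 ** n_qubits

# Hypothetical output state: a random normalized complex vector.
state = rng.normal(size=dim) + 1j * rng.normal(size=dim)
state /= np.linalg.norm(state)

# Strong simulation: compute the probability of every outcome.
probabilities = np.abs(state) ** 2
probabilities /= probabilities.sum()      # guard against rounding drift

# Weak simulation: only produce samples distributed like the device output.
shots = 1000
samples = rng.choice(dim, size=shots, p=probabilities)
counts = np.bincount(samples, minlength=dim)

for index in range(dim):
    print(format(index, "03b"), round(float(probabilities[index]), 3), counts[index] / shots)
```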

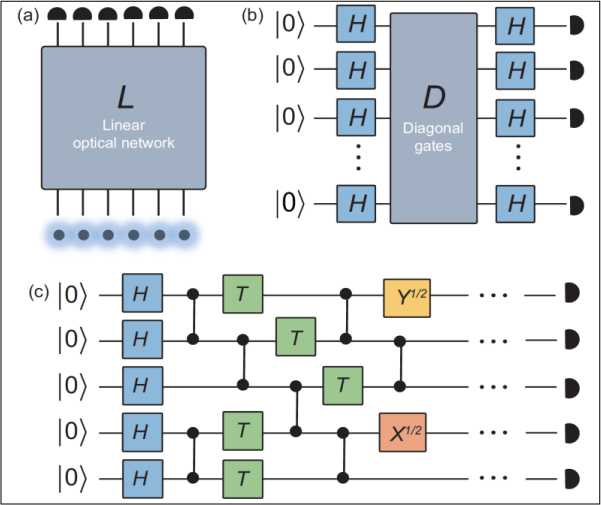

In boson sampling, single photons are injected into different modes of a linear optical network. The task is to determine the photon distributions at the output ports. The key feature of boson sampling is that the transition amplitudes are related to the permanents of complex matrices, which are in general very difficult to calculate exactly, or to approximate to within a multiplicative error; these problems belong to the #P-hard complexity class. Moreover, efficient weak simulation of boson sampling is believed to be impossible, unless the polynomial hierarchy collapses to its third level. However, a recent numerical study suggests that, in order to achieve quantum supremacy with boson sampling, one needs to simultaneously generate at least 50 single photons, which is still highly technologically challenging. An interesting question is whether linear optics, in the setting of boson sampling, can be applied to solve decision problems. In this case, the transition amplitudes may need to be determined to an additive error. However, a large class of decision problems associated with boson sampling can be simulated by a classical computer, settling an open problem by Aaronson and Hance.
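The role of the permanent can be illustrated on a small network. The sketch below evaluates the permanent by brute force over all permutations, which is feasible only for a handful of photons, and uses it for the probability of one collision-free outcome, taken as the squared modulus of the permanent of the corresponding submatrix of the network unitary. The random unitary, the mode choices, and the name `permanent` are assumptions made for illustration.

```python
from itertools import permutations

import numpy as np


def permanent(matrix):
    """Permanent by direct summation over permutations (factorial cost)."""
    n = matrix.shape[0]
    total = 0
    for perm in permutations(range(n)):
        product = 1
        for row, col in enumerate(perm):
            product *= matrix[row, col]
        total += product
    return total


rng = np.random.default_rng(seed=1)
modes = 5
# A random unitary from the QR decomposition of a complex Gaussian matrix.
gaussian = rng.normal(size=(modes, modes)) + 1j * rng.normal(size=(modes, modes))
unitary, _ = np.linalg.qr(gaussian)

input_modes = [0, 1, 2]       # one photon in each of these input modes
output_modes = [1, 3, 4]      # one photon detected in each of these output modes
sub = unitary[np.ix_(output_modes, input_modes)]
probability = abs(permanent(sub)) ** 2
print(probability)
```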

IQP circuits represent a simplified quantum circuit-based model. The computation starts from the all-zero state, $|00\cdots0\rangle$, followed by applying Hadamard gates to each qubit. Then, diagonal gates are applied to the qubits, followed by applying Hadamard gates to each qubit again. The argument showing the complexity of simulating IQP circuits is similar to that for boson sampling. In fact, the complexity of boson sampling was inspired by the complexity results of IQP circuits. However, when subject to noise, IQP circuits may become classically simulable.
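The three layers of an IQP circuit translate directly into a small state-vector sketch. The diagonal layer is represented here by a list of phases, one per basis state, drawn at random purely for illustration; the function names are hypothetical, and the dense matrices restrict the sketch to a few qubits.

```python
import numpy as np


def hadamard_layer(n_qubits):
    """Hadamard gate applied to every qubit, as a dense matrix."""
    h = np.array([[1, 1], [1, -1]]) / np.sqrt(2)
    out = np.array([[1.0]])
    for _ in range(n_qubits):
        out = np.kron(out, h)
    return out


def iqp_distribution(diagonal_phases):
    """Output probabilities of H-layer, diagonal layer, H-layer on the all-zero state."""
    dim = len(diagonal_phases)
    n_qubits = dim.bit_length() - 1           # dim is assumed to be a power of two
    layer = hadamard_layer(n_qubits)
    state = np.zeros(dim, dtype=complex)
    state[0] = 1.0                            # all-zero state
    state = layer @ state
    state = np.exp(1j * np.asarray(diagonal_phases)) * state
    state = layer @ state
    return np.abs(state) ** 2


rng = np.random.default_rng(seed=2)
phases = rng.uniform(0, 2 * np.pi, size=8)    # assumed diagonal gate, 3 qubits
distribution = iqp_distribution(phases)
print(np.round(distribution, 4))
```

With all phases set to zero the two Hadamard layers cancel and the circuit returns the all-zero string with certainty, which is a convenient sanity check.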

Lastly, chaotic quantum circuits refer to the class of quantum circuits where two-qubit gates are applied regularly, while single-qubit gates are applied randomly from a gate set. The output distributions of these circuits approach the so-called Porter–Thomas distribution, which is a characteristic feature of quantum chaos. Recently, there have been several numerical investigations aiming to explore the ultimate limit of classical computing in simulating low-depth quantum circuits. For the ideal cases, one needs to consider both the qubit number and circuit depth for benchmarking quantum supremacy. However, in the presence of noise, these chaotic circuits may also become classically simulable for high-depth circuits.

Finally, other than these three approaches, one should expect that quantum supremacy can be achieved for many practical applications, e.g. simulation of quantum chemistry or quantum machine learning.

What’s a quantum circuit? A quantum circuit can be a tricky thing. Quantum computing promises to be faster than classical computing for some, but not all, computational problems. Whether quantum computers can be faster than classical computers depends on the nature of the problem being solved. When speedups are possible, the magnitude of the speedup also depends on the nature of the problem. For example, in certain types of problems, the solving time with quantum computing could be reduced to about the square root of the solving time with classical computing. That is, a problem that would require one million operations on a classical computer might require 1,000 operations on a quantum computer.