Recognizing Fakes, Propaganda and Disinformation in Ukrainian Content based on NLP and Machine-learning Technology

Автор: Victoria Vysotska, Krzysztof Przystupa, Yurii Kulikov, Sofiia Chyrun, Yuriy Ushenko, Zhengbing Hu, Dmytro Uhryn

Журнал: International Journal of Computer Network and Information Security @ijcnis

Статья в выпуске: 1 vol.17, 2025 года.

Бесплатный доступ

The project envisages the creation of a complex system that integrates advanced technologies of machine learning and natural language processing for media content analysis. The main goal is to provide means for quick and accurate verification of information, reduce the impact of disinformation campaigns and increase media literacy of the population. Research tasks included the development of algorithms for the analysis of textual information, the creation of a database of fakes, and the development of an interface for convenient access to analytical tools. The object of the study was the process of spreading information in the media space, and the subject was methods and means for identifying disinformation. The scientific novelty of the project consists of the development of algorithms adapted to the peculiarities of the Ukrainian language, which allows for more effective work with local content and ensures higher accuracy in identifying fake news. Also, the significance of the project is enhanced by its practical value, as the developed tools can be used by government structures, media organizations, educational institutions and the public to increase the level of information security. Thus, the development of this project is of great importance for increasing Ukraine's resilience to information threats and forming an open, transparent information society.

Information Security, Cybersecurity, Content, NLP, Propaganda, Disinformation, Fake News, Message, Text, Linguistic Analysis, Artificial Intelligence, Cyber Warfare, Machine Learning, Information Technology

Короткий адрес: https://sciup.org/15019629

IDR: 15019629 | DOI: 10.5815/ijcnis.2025.01.08

Текст научной статьи Recognizing Fakes, Propaganda and Disinformation in Ukrainian Content based on NLP and Machine-learning Technology

In the digital age, information security is one of the critical challenges for many societies, especially for countries in a state of political change or conflict. As a country heavily influenced by information operations, Ukraine faces the need to combat disinformation, fake news and propaganda [1]. Accordingly, the development of tools for identifying and analysing such information threats is an urgent task that is important for ensuring the country's information security [2].

The relevance of this project cannot be overestimated in the conditions of the modern information space, where the struggle for the truth becomes almost synonymous with the preservation of national security. Information wars, in which the truth becomes the first victim, mercilessly bombard the public consciousness of millions of people, distorting reality and forming an artificial reality that serves the interests of external and internal antagonists [1].

In Ukraine, on the front lines of the confrontation with hybrid threats, the lack of reliable tools for recognizing disinformation can lead to systemic failures in public trust, erosion of fundamental democratic values and stability of state institutions. It is not simply a matter of media literacy but a matter of strategic national security defence [1-3].

The flywheel of disinformation can have a destructive effect on domestic political stability and the international reputation of Ukraine, affecting the investment climate and bilateral relations with other states. A qualitatively new level of aggression in the information field requires an adequate response in the form of developing and implementing advanced technological solutions.

The project, which aims to create comprehensive tools for identifying fakes and disinformation, not only meets the critical need of Ukrainian society for reliable means of information verification but also increases the general culture of information consumption, strengthening the information resilience of the nation. This project will become a buffer that will protect Ukrainian society from false narratives and hostile information interventions, ensuring the stable development of democratic institutions and values in the country.

This research aim s to create an innovative software solution – a digital shield capable of identifying and analysing flows of disinformation, propaganda, and fake news that are spread in the Ukrainian language. This tool will serve as a strategic resource for rapid verification of information, allowing users to distinguish fact from fiction at a critical moment. For this, we must follow the solutions of the tasks:

• Creation of algorithms for automated recognition of disinformation. Using advanced technologies of machine learning and natural language processing, develop intelligent systems that can analyse large volumes of text and detect false information with high accuracy and speed.

• Creation of an extensive database of disinformation campaigns. Collect and systematize a large database that includes historical and current examples of fakes identified and verified by experts. This database will be the basis for training algorithms and ensuring their relevance.

• Development of an intuitive user interface. Create a convenient and understandable interface accessible to a wide range of users – from scientists to ordinary citizens. This interface will allow users to easily navigate and analyse information and receive reliable reports on the reliability of content.

2. Related Works

This project is designed not only to increase the level of information literacy among the population but also to create a powerful tool in the fight against information chaos, ensuring the stability and security of the information space of Ukraine. The basis of this study is the process of dissemination and consumption of information in global media spaces -this is a vast and multifaceted canvas on which dynamic and often contradictory information flows unfold. In the age of digital technologies, this process has become especially significant due to its influence on the formation of public opinion, political attitudes, and socio-cultural trends. The speed and volume of information dissemination create unique challenges for information verification and analysis. Our research focuses on the methods and tools used to identify, analyse and neutralize disinformation, fake news and propaganda messages in the media space. We examine modern technological approaches, such as machine learning and natural language processing algorithms, to determine their effectiveness in detecting distorted content. We also analyse how these techniques can be integrated into everyday media consumption, providing users with a powerful means to assess the veracity of the information they consume independently. This twodimensional approach allows you to dive deeply into the mechanisms of information influence and determine strategies for developing practical tools that could resist manipulation and distortion in the media, thereby ensuring a higher level of information transparency and trust in society. This project opens new horizons in machine learning by adapting advanced algorithms to the peculiarities of the Ukrainian language. The uniqueness lies in the creation of the latest methods of deep semantic analysis, which allow us to understand the structural and contextual features of Ukrainian vocabulary and syntax. These developments provide more accurate and efficient detection of information distortions, offering algorithmic innovations that can become the foundation for future research in natural language processing. The project has a significant practical impact, providing Ukrainian society with reliable and affordable tools for identifying and analysing disinformation. These tools allow users to identify false content and understand its sources and potential targets, thereby strengthening society's information immunity. As a result, the project strengthens Ukraine's information independence and sovereignty, increasing information transparency and trust, which is critical to the stability of democratic institutions and national security. The development of digital technologies and the growing amount of information generated and distributed on the Internet daily creates significant challenges for information security, particularly in the context of disinformation and manipulation of public consciousness. In this project, we focused on the importance and necessity of developing practical tools for identifying and analysing disinformation in the Ukrainian language, which is critically important for protecting Ukraine's national security.

The problem of the ineffectiveness of existing tools for identification, analysis and response to disinformation, fake news and propaganda in the Ukrainian information space consists of several components, each requiring special attention and an individual approach [4-6].

Multilingualism and localization. Many modern tools and solutions for detecting disinformation are optimized for English, leaving a significant gap for other languages, including Ukrainian. It creates a barrier, as algorithms that work effectively with the English language may not consider linguistic features and context, which are critical for correctly analysing Ukrainian textual content. Lack of localization and adaptation of technologies can lead to misinterpretation of information or underestimation of threats.

The difficulty of detecting misinformation. Disinformation often uses sophisticated masking techniques, including mixing true and false information, making it difficult to detect with traditional fact-checking methods. Misinformation can be embedded in logically plausible narratives, which requires deeper contextual analysis and the involvement of additional sources for fact-checking.

Dynamics of information campaigns. Information campaigns, especially those involving disinformation, are rapidly changing their forms and means of distribution, which requires disinformation detection tools to be flexible and adapt to new challenges. The spread of misinformation can escalate quickly, making it urgent to implement operational responses.

Limited resources for monitoring. The large volume of information constantly generated on the Internet makes it impossible to monitor all potential sources of disinformation manually. Existing tools are often unable or ineffective at detecting misinformation in real time due to limitations in computing and human resources [7-9].

To solve the problems outlined above, our approach to creating a system includes several key areas of development: Developing deep learning algorithms for the Ukrainian language is necessary to create specialized algorithms that use natural language processing technologies to recognize Ukrainian content. These algorithms must be able to analyze verbal content and understand the context, idioms, slang and stylistic features characteristic of the Ukrainian language. An important aspect will be training data that includes enough examples of fakes to train the models to recognize fake information.

-

• Creating a modular media monitoring platform that integrates various media monitoring tools and modules. This platform should be able to quickly track the spread of potentially dangerous narratives, using algorithms to determine the virality of the content and its impact on the audience. A critical function will be automatic analysis and reporting, which will help quickly respond to critical threats.

-

• Integration with media platforms and social networks . APIs or plugins must be developed to integrate our system with popular media platforms and social networks. It will ensure the possibility of early detection and blocking of disinformation at the initial stages of its spread. The integration should allow the analysis of large data streams in real-time, revealing patterns in spreading misinformation.

-

• Development of educational tools for users that help people critically evaluate information to strengthen society's information literacy. It may include developing training courses, interactive workshops, and other resources that teach users to recognize the signs of fakes and manipulative techniques.

With the help of these measures, it is possible to create an effective system capable of adequately responding to modern challenges in the information space, providing reliable protection against information threats in the Ukrainian context. Implementation is cooperation with scientific institutes, technology firms, government organizations and global partners [1]:

-

• Cooperation with scientific institutes is vital to establish partnerships with universities and research centres that have expertise in natural language processing and machine learning. It will provide access to the latest research and development and attract students and teachers to participate in the project. Cooperation may include joint research projects and developing courses to train personnel who will work with the system.

-

• Technology companies can provide the necessary software, hardware and technical expertise. They can also help integrate the system into existing media platforms and social networks. It will facilitate the implementation of solutions based on artificial intelligence for analysing large volumes of data in real-time.

-

• Government support is critical to providing the legal framework necessary for the implementation and operation of the system. Government agencies can also help fund the project and provide the necessary regulations so that the system can effectively interact with other national initiatives in the fight against disinformation.

-

• Establishing relations with international organizations and foreign partners will help share knowledge, experience, and best practices. It can also provide access to international funding and expand the potential of the system to be applied internationally by ensuring the implementation of globally recognized standards and methodologies.

These measures will help launch the project effectively and ensure its sustainable development and scaling in the future, responding to changes in the information space and technological environment. Several existing platforms and projects can be considered analogues or competitors of your product to create comprehensive software for identifying and analysing fakes, propaganda, and disinformation. Here are some of them:

NewsGuard is an innovative project focused on increasing the transparency and reliability of information on the Internet, particularly on news sites. The service works by assigning trustworthiness ratings to various media resources, allowing users to understand how credible and reliable the sources of their news content are. NewsGuard uses a team of experienced journalists who analyze and evaluate news sites according to journalistic criteria. The team reviews sites and determines whether they meet some standards, such as honesty in reporting, accountability, transparency about funding and ownership, and clarity of advertising and editorial standards. The site is assigned a trustworthiness rating based on these criteria, which becomes available to users through browser plugins or other partner platforms.

The advantages of NewsGuard are the direct involvement of experts and broad coverage. Using professional journalists to evaluate news sources adds weight and credibility to their ratings. Media experts have the necessary knowledge and experience to assess the quality of content adequately. NewsGuard analyzes thousands of sites covering a wide range of the media landscape, providing users with information about the reliability of many sources.

The disadvantages of NewsGuard are the high cost and potential subjectivity of ratings. The analysis process involving qualified journalists is resource-intensive and may require significant financial costs. It may limit the ability to rapidly expand coverage of new sites or the frequency of rating updates. Despite the standardized approach, personal preferences and judgments of journalists may influence the rating. Although NewsGuard tries to minimize subjectivity, it is impossible to eliminate it, which may raise questions about the objectivity and independence of ratings.

Overall, NewsGuard represents a significant step forward in media transparency by providing users with tools to better understand the reliability of online news sources.

Hoaxy is a platform developed by Indiana University's Center for Network Research that specializes in visualizing the dynamics of information dissemination and fact-checking in social networks. This platform will allow experienced researchers, journalists and the general public to see how to amplify specific assertions and news stories online and track the fact-checking results. Hoaxy analyzes and visualizes the distribution chains of articles and applications in social networks. Users can enter specific queries or keywords to track the spread of related information. Your platform uses algorithms to image and visualize information's paths between users and media nodes. It allows you to understand how information and current misinformation is spread through the network.

The advantages of Hoaxy are real-time analysis and visualization of the spread of disinformation. Hoaxy allows users to see how information spreads in real time, which is critical for understanding the dynamics of information campaigns. Clear visual graphics will enable you to quickly identify the primary sources and vectors of the spread of disinformation and the interaction between different actors in the network.

The disadvantages of Hoaxy are the limited ability to detect disinformation and the fact that it does not consider the linguistic features of individual languages. While Hoaxy effectively visualises and analyses how information is spread, the platform cannot always accurately identify whether the content is disinformation. It requires additional verification and critical analysis by users. Hoaxy is mainly focused on English-language content and may not consider the features of other languages, which limits its use in non-English-speaking regions or for language communities that require a specific approach to information analysis.

Overall, Hoaxy is a powerful tool for analysing the spread of information in social networks that can serve as an essential resource for researchers, journalists, and policy analysts interested in studying and combating disinformation.

Factmata is an innovative platform that uses artificial intelligence to detect and analyze malicious content and misinformation online. This system can identify fake news, propaganda, biased statements, and other manipulative content. Factmata uses sophisticated machine learning and natural language processing algorithms to analyze texts. The platform analyzes large volumes of information from various sources, including news, social networks, and blogs. It allows the detection of potentially harmful content in its early distribution stages. Factmata also provides feedback from users and experts, which helps to constantly improve and adjust the platform's algorithms.

The advantages of Factmata are an automated approach and continuous learning. Artificial intelligence allows you to automate detecting malicious content, reducing the need for manual verification and analysis of large volumes of data. Factmata is constantly learning from new data, which improves its ability to detect various forms of misinformation and adapt to changing methods of information manipulation.

The disadvantages of Factmata are high learning resources and accuracy and Errors. Developing and maintaining the algorithms used by Factmata requires significant computing and financial resources. Training artificial intelligence on large data sets can be costly and complex. Like any AI-based system, Factmata can have accuracy issues, especially regarding content that contains subjective judgments, irony, or jargon that could mislead the system.

Overall, Factmata represents a significant step forward in developing anti-disinformation tools, offering a robust platform that can help various organizations, from news agencies to corporate clients, control the quality and reliability of information.

Snopes is one of the first and most recognizable fact-checking platforms on the Internet. Founded in 1994, Snopes specializes in debunking urban legends, popular myths, famous stories, and misinformation spread across the Internet and social media. The Snopes platform uses a research approach involving a team of experienced fact-checkers and journalists who analyse and verify the reliability of information. They investigate each request in-depth based on primary data sources, scientific studies, official statements and other verifiable facts. Snopes is also known for its detailed explanations and conclusions that help users understand the context and nature of the information.

The advantages of Snopes are strong user trust and authority. With its long history and consistency in fact-checking, Snopes has earned a reputation as a reliable source for distinguishing true from false information. Their work has earned them trust and recognition among users around the world.

The disadvantage of Snopes is its dependence on manual fact-checking. The main drawback of Snopes is that the platform is highly dependent on the manual labour of their fact-checkers. It can slow down the verification process, especially in today's news cycle, where information changes quickly. This approach may also prove insufficiently effective in situations where it is necessary to respond quickly to a large amount of fake news on social media.

Snopes continues to be a valuable resource in the fight against disinformation, providing users with a tool to verify the authenticity of the content they consume. However, the growing need for faster and more automated fact-checking may require Snopes and similar platforms to adapt to new technological solutions.

Bellingcat is an independent international initiative specializing in open-source intelligence (OSINT) investigations. Founded in 2014, Bellingcat uses data from open sources, such as social media, satellite images, videos, photos, and even advertising databases, to investigate and verify information often related to international conflicts, geopolitical crises, and rights violations of a person. Bellingcat uses modern data analysis techniques, including geolocation analysis, image and video analysis, and social media research, to uncover and document facts often overlooked by traditional media. It allows Bellingcat to conduct investigations with high independence and objectivity.

The advantages of Bellingcat are its high accuracy and depth of analysis as well as its advanced data collection and analysis techniques. Thanks to an interdisciplinary approach and the use of various sources of information, Bellingcat can conduct in-depth analytical investigations. They are known for their ability to reveal complex networks of relationships and events that take place behind closed doors. Leveraging advanced technology and analysis techniques, Bellingcat leverages large volumes of open data to uncover insights often unavailable through traditional sources.

The disadvantages of Bellingcat are that it focuses primarily on international events and needs specialized knowledge to interpret data. Because Bellingcat often focuses on global events and international conflicts, its activities may be less relevant to local or regional issues that require specific knowledge and resources. Bellingcat's work requires high expertise in data analysis, satellite mapping, and video and image verification. It creates barriers to attracting new team members and requires constant professional development.

Bellingcat remains one of the leading open data investigative organizations, making significant contributions to developing investigative journalism and data analysis.

3. Material and Methods

For an analytical review of the literature and related works on the identification of disinformation, it is essential to research the various methods and tools used in this area. Scientific works often focus on machine learning technologies, particularly deep learning methods for analysing textual data [10-12]. For example, algorithms based on natural language processing (NLP) models can detect patterns and inconsistencies in text that may indicate misinformation [12-15]. These techniques include using transformers such as BERT or GPT, which can generate and understand text at a very high level. However, beyond the technical aspects, many studies emphasize the importance of contextual analysis. Detecting disinformation often requires not only text analysis but also the inclusion of contextual information, such as determining the source of the information and its reliability [16-18]. The work shows that integrating data from different sources and their cross-analysis can significantly improve the accuracy of detecting fake news.

Another aspect often discussed in scientific works is the development of algorithms for automated detection of changes in the behaviour of social network users, which can be an indicator of manipulative information campaigns. Studying information dissemination patterns allows for identifying potentially harmful content before it reaches a large audience. Given the dynamics of modern media, there is a growing need to develop more effective tools for real-time data monitoring and analysis [18-21]. Many works propose using complex systems that integrate artificial intelligence, machine learning and automated monitoring tools to strengthen the ability to quickly respond to information threats [2124]. Finally, it is essential to note that no tool or method can be completely efficacious without considering the ethical aspects and possible consequences of implementing such technologies. A critical review of current research shows that continued development in algorithmic transparency, privacy and data protection is vital to maintaining trust and acceptance among users. The development of our software for the identification and analysis of disinformation offers significant advantages, in particular, the provision of a high level of customization, which allows you to precisely adjust the system to the specific needs of the Ukrainian media space [1]. Given the linguistic and cultural differences often ignored by one-size-fits-all solutions, it is essential. Automation of analysis processes allows the processing of large volumes of data, reducing dependence on the human factor and increasing the speed of response to information threats.

On the other hand, developing your system requires significant initial investment in research and development and ongoing costs for software maintenance and updates. Because such a system depends on skilled professionals for its growth and maintenance, there is a risk if access to skilled labour or technological resources is limited [24-28]. In addition, the constant challenge of ensuring security and data protection can be a significant burden for a start-up [29-33].

Goal tree for the fake, propaganda and disinformation identification project:

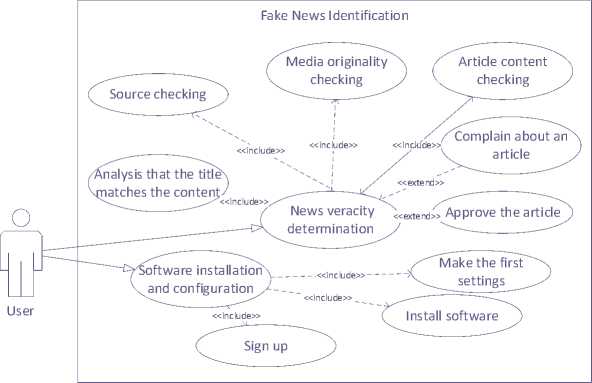

Data collection and preparation (Fig. 1-2) are as data loading (automating the process of collecting texts through the API of social media and news platforms) and data cleaning (removal of non-informative parts of the text (advertising, spam), standardization of data formats).

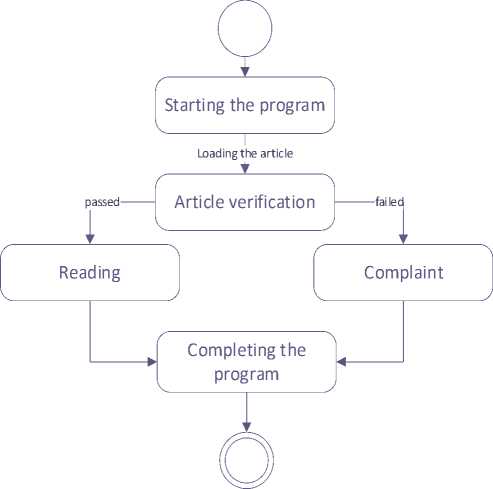

Fig.1. The use case diagram for the system of recognising propaganda, fake news and disinformation

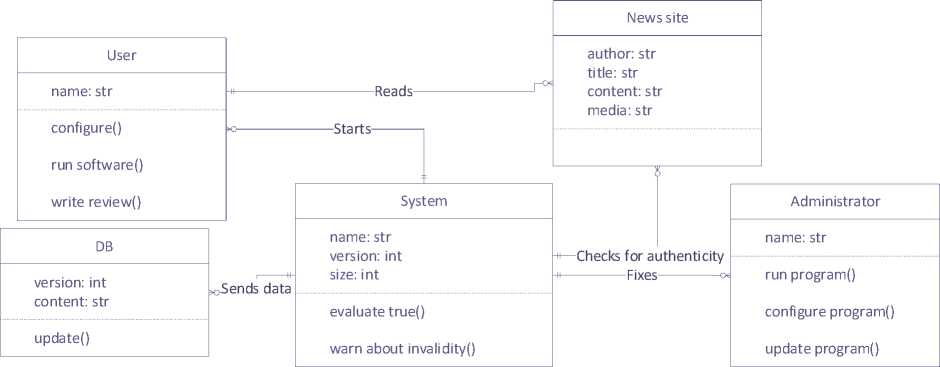

Fig.2. The class diagram for the system of recognising propaganda, fake news and disinformation

User

System

DataBase

Web site

Admin

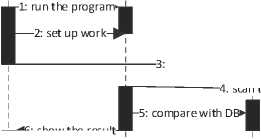

: open the site

7: analyse the news

4: scan the site

: show the result

8: provide feedback

8: update the program

Fig.3. The flow diagram for the system of recognising propaganda, fake news and disinformation

Continue viewing the article

Starting the program

Evaluate reliability of article

[ is reliable]

Leave the site

[not reliable]

File a complaint

Fig.4. State and activity diagrams for the system of recognising propaganda, fake news and disinformation

The development of models to detect misinformation (Fig. 3-4) is as machine learning for text classification (developing models that can classify text as "true" or "false," based on text characteristics and context) and deep sentiment analysis (development of specialized NLP models to analyse the emotional colouring of text and identify language markers often associated with manipulation and propaganda).

The development of fact-checking algorithms is as knowledge Base Building (creating and maintaining a fact-checked knowledge base that can be accessed to automatically compare statements from text) and development of verification algorithms (using combinations of NLP and artificial intelligence algorithms to analyse mentions of facts and automatically verify them in real-time).

Model optimization is tuning hyperparameters (such as Grid Search and Random Search to determine optimal model parameters) and fine-tuning models (micro-tuning models to specific types of misinformation that change over time).

System testing and validation are manual testing with experts (using media and information security experts to validate model results) and error analysis (detailed examination of model errors to improve their accuracy, including analysis of false positives and false negatives).

Identifying fakes, propaganda, and disinformation involves input data, output data, mechanisms, and management. Input data is text data sets that potentially contain fakes, propaganda disinformation and a list of known reliable and questionable sources of information. Output data is information reliability assessment reports and confirmed or denied disinformation cases.

Mechanisms:

-

• choosing a model type is deciding whether to use certain types of analytical models (e.g., classification models, sentiment analysis models);

-

• tuning hyperparameters is fine-tuning model parameters to increase their efficiency and adapt to the specific requirements of a given task;

-

• Fact-checking algorithms use specialized tools and methods for information verification.

Management:

-

• quality assessment methods are validation of information accuracy through comparison with a database of verified data and application of machine learning methods;

-

• accuracy and reliability requirements set high accuracy standards for the identification system to ensure its effectiveness in real-world conditions.

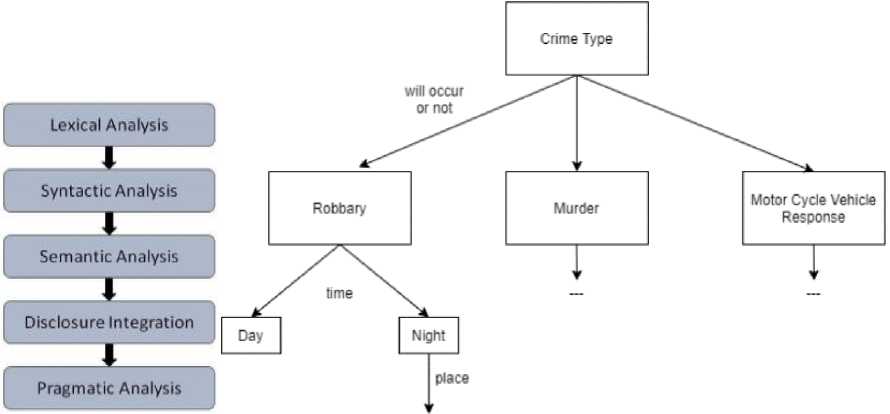

Fig.5. NLP techniques application sequence and using the solution tree to classify crime types

Table 1. Fulfilment of requirements by the linguistic system for identification of fakes/propaganda or analysis of criminal reports

|

Class |

Non-functional |

Functional |

Business |

User |

|

Fulfilment of requirements in the developed information technology |

|

|

|

|

|

Requirements content example |

divided into roles to which part of the functionality is attached.

attributes: efficiency, reliability, convenience.

without specialized knowledge must be able to use them. |

|

|

|

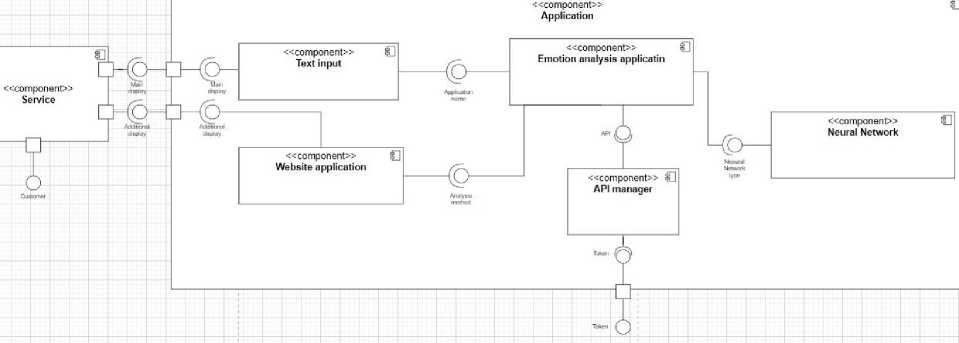

The following features of software solutions that work in the field of NLP (Fig. 5a) are highlighted:

-

• Ability to use as an API;

-

• Possibility of use for users who do not know the NLP area (for example, to classify crime types as in Fig. 5b);

-

• Working with text involves additional functionality (for example, to criminal reports analysis as in Table 1);

-

• Several methods of working with the text are provided;

-

• Running the service in the cloud (Fig. 6);

-

• Use of private datasets (for example, to classify crime types as in Fig. 7).

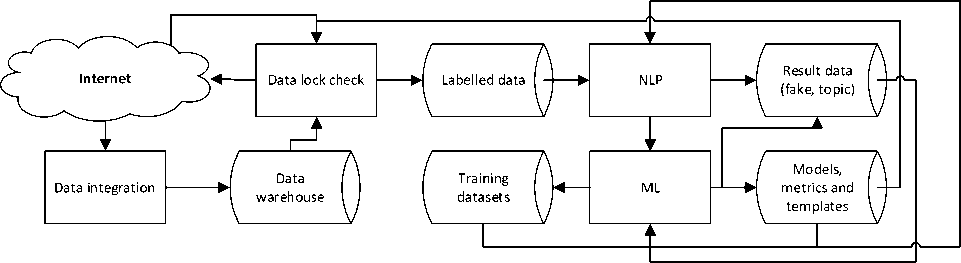

Fig.6. Running the service of the system in the cloud

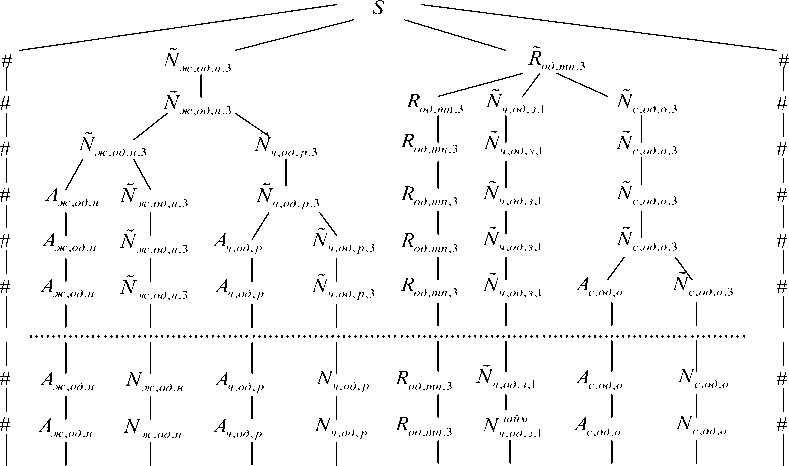

2.(I)

3.(III.1)

4.(II.1)

5.(II.2)

6.(II.2)

7.(II.2)

10.(II.4)

11.(II.3)

8-9

12-18

II II II II

IV.6 IV.2 IV.6 IV.1 IV.7 IV.4 IV.6 IV.3

військова спецоперація бункерного президента наповнює мене бездонним горем

Fig.7. Work results on private test dataset in the system of recognising propaganda, fake news and disinformation

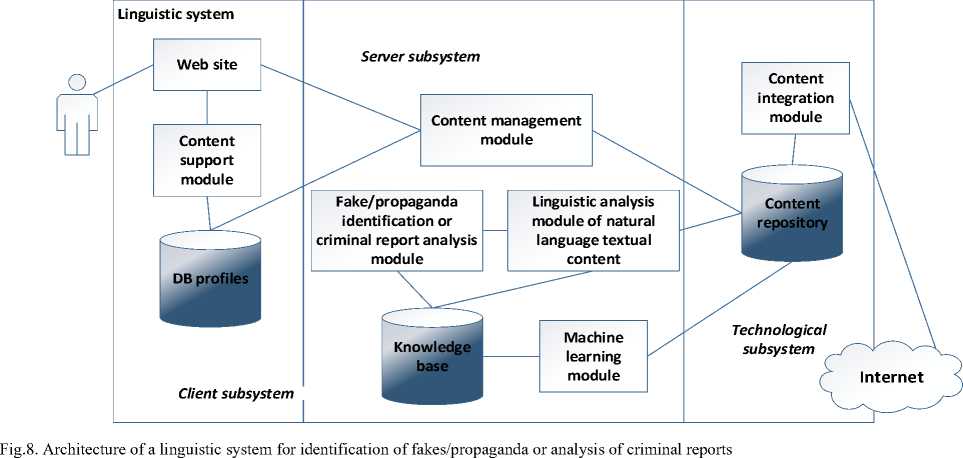

The beginning is the start of the Workflow process (Fig. 8-9).

-

• Download datasets:

-

– From a dataset of news articles and social media for analysis.

-

– With test sets from proven sources to evaluate the model.

-

• Text cleaning and normalization - preparation of texts for further processing:

– Remove non-informative elements (advertising, spam).

– Normalization of language expressions and format standardization.

-

• Choosing an algorithm for disinformation analysis - defining methods for detection and analysis:

-

– Text classification based on machine learning.

-

– Sentiment analysis to identify emotional colouring.

-

• Tuning model hyperparameters - tuning the model for optimal performance:

– Selection of parameters that provide the best recognition of disinformation.

– Adapt the model to specific types of disinformation.

-

• Model testing and validation - checking the quality of the trained model:

-

– Using validation techniques such as cross-validation.

-

– Evaluation of the effectiveness of the model in detecting misinformation.

-

• Integration of the model into information systems - use of the model in systems for monitoring the media space and information portals.

Fig.9. Components diagram for the system of recognising propaganda, fake news and disinformation

Intellectual component for the process of recognising propaganda, fake news and disinformation. Development of algorithms for analysing texts to detect disinformation and unreliable information based on stylistic and contextual features [24-28].

-

• Input data is news texts from various media sources, social networks and information sites.

-

• Output data is scores of credibility of the news, determined based on analytical models, which classify the text

as fake, accurate, or questionable.

-

• Data submission form clean, normalized, annotated or unannotated texts used for training and testing models.

-

• Business processes [24-28]:

-

– Data collection and processing are automated collections of texts from news resources and social media and further cleaning and normalization of texts in preparation for analysis.

-

– Model training and testing is developing and optimising machine learning models for classifying texts according to their reliability.

-

– Implementing the model into practical applications is the integration of models into media monitoring systems and content analysis platforms, where models can automatically recognize fake news and disinformation.

-

• Architecture for disinformation analysis [24-28]:

– Machine Learning based on SVM, Naive Bayes classifier, and deep neural networks.

– Natural language processing is based on NLP algorithms to analyse text data, such as BERT, and detect semantic anomalies and stylistic deviations often associated with fakes.

– Sentiment Analysis to detect emotional colouring that may indicate propagandistic or manipulative text materials.

-

• Evaluation of the quality of models:

-

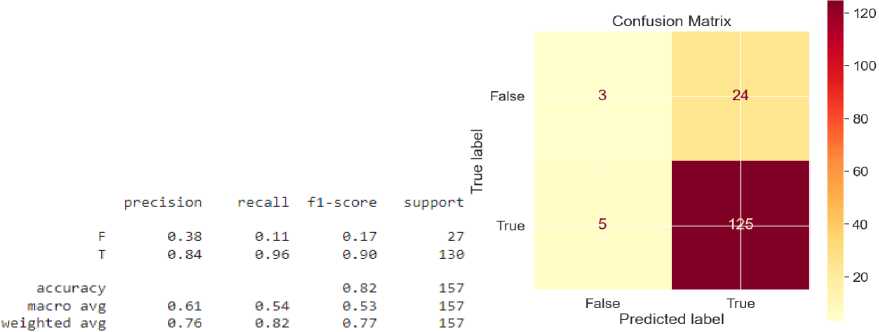

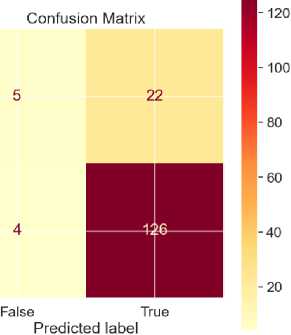

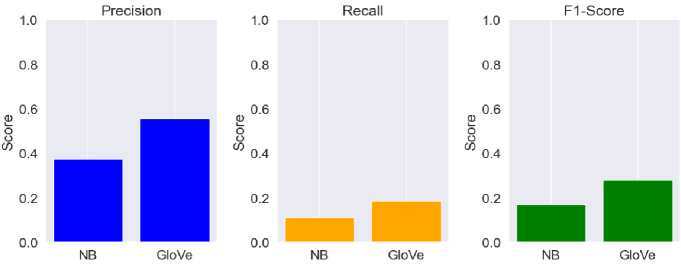

– The confusion matrix uses the error matrix to evaluate the model's accuracy, recovery, and F1 score.

-

– Conducting A/B testing with real users to evaluate the practical effectiveness of algorithms in actual conditions.

-

• Place of application:

– Automate news and social media monitoring systems are used to identify potentially falsified information.

– Use educational programs to teach students to recognize fakes and think critically.

-

• Expected effects:

-

– Awareness raising promotes education and public awareness of the risks of misinformation and fakes.

-

– Protection of the information space: Improving the protection by timely detection and neutralising disinformation.

-

– Enriching scientific research is developing scientific research in information security and machine learning.

Training the embedding model. The following Python libraries can be used to analyse and identify disinformation:

-

• Scikit-learn is a well-known machine learning library that supports a variety of classification algorithms such as logistic regression, naive Bayes, and SVM. These algorithms can be used to develop classifiers that distinguish authentic news from fake news.

-

• NLTK (Natural Language Toolkit) is a feature-rich library for text processing, including sentiment analysis and tokenization tools that are useful in analysing text data for manipulative language patterns. NLTK has essential support for the Ukrainian language with means for tokenization and partial language tagging but may require additional resources for more complex tasks such as parsing.

-

• SpaCy is a high-performance natural language processing library containing pre-trained sentiment analysis and entity detection models. SpaCy can be used for advanced study of phrase structure and context, which is critical when developing algorithms to detect disinformation. SpaCy supports the Ukrainian language with the help of external packages. For example, community-developed models for the Ukrainian language can be integrated with SpaCy for NLP tasks.

-

• Transformers (Hugging Face) - A library that provides access to pre-trained models based on transformers such as BERT, GPT, and RoBERTa. These models can be used for deep contextual analysis of texts, which allows the detection of complex cases of misinformation and manipulation. This library supports the Ukrainian language thanks to multilingual models such as BERT and GPT, which are already trained on data from different languages, including Ukrainian. For specific tasks, these models can be adjusted on the corpora of the Ukrainian language.

-

• TensorFlow and PyTorch are advanced deep learning frameworks that allow you to design and configure complex neural networks for disinformation identification tasks. They are handy for creating customized solutions that consider data specificity and identification accuracy requirements.

In sequence-to-sequence problems, such as neural machine translation, initial proposals for solutions were based on the use of RNN in the encoder-decoder architecture. These architectures had significant limitations when dealing with long sequences. Their ability to retain information from the first elements was lost when new elements were incorporated into the sequence. In an encoder, the hidden state at each step is associated with a specific word in the input sentence, usually one of the last. Therefore, if the decoder only accesses the previous hidden state of the decoder, it will lose the relevant information about the first elements of the sequence. For example, in the sentence "There are dolphins in the …", the next word is obviously "sea" since there is a connection with the word "dolphins". If the distance between the dolphins and the intended word is short, RNN can easily predict it. Consider another example: "I grew up in Ukraine with my parents, spent many years there, and know their culture well. That's why I speak fluently … ." The expected word is "German", which is directly related to "Ukraine". However, in this case, the distance between "Ukraine" and the intended word is more significant, making it more difficult for RNN to predict the correct word.

Consequently, as distance increases, RNNs cannot find connections due to low memory power. So, to eliminate RNN's problems, a new concept was introduced – the attention mechanism. Instead of paying attention to the last state of the encoder, as is usually done with RNN, at each step of the decoder, we view all the states of the encoder, being able to access information about all the elements of the input sequence. It is what "attention" does. It extracts information from the entire sequence, the weighted sum of all the past states of the encoder. It allows the decoder to assign more weight or importance to each output element's occurrence element. However, this approach has a significant limitation: a component must process each sequence. The encoder and the decoder must wait until the t-1 steps are complete to process the step. Therefore, large volumes of occurrences take a long time.

Transformers are a neural network, the architecture aimed at solving sequential tasks while efficiently processing long-term dependencies. The Transformers model extracts characteristics for each word using a self-attention mechanism to determine how important all the other words in the sentence are about the previous words. No duplicate units are used to get these features; they're just weighted sums and activations to be parallelized and efficient.

When choosing the BERT model, we took into account several key factors.

Firstly, BERT can effectively model the context and dependencies between words in the text thanks to the selfattention mechanism used in Transformers. It allows BERT to identify semantic connections and understand the meaning of the text at a deeper level.

Secondly, BERT is a pre-trained model, meaning it has been pre-trained on a large amount of textual data. It allows the model to learn common dependencies and language properties, resulting in better performance when refining for specific tasks.

One of the critical factors that led to the choice of transformers to work with BERT was their ability to effectively model context in text. BERT uses transformer architecture to account for the broad context and interactions between words in a text. It allows BERT to identify semantic dependencies and understand the meaning of the text at a deeper level. Therefore, the choice of transformers for BERT was due to their power in context modelling and the ability to work with large amounts of text data.

Torch (and its PyTorch shell) is one of the most popular packages for developing neural networks and implementing optimization algorithms. Torch is a high-performance library optimized for working with neural networks. It uses accelerators such as graphics processing units (GPUs) to quickly compute and train models. It provides flexible tools for developing neural networks. It makes it easy to create and configure complex architectures, such as transformers, and it has many built-in features for working with text data. Torch can easily integrate with other popular libraries, such as NumPy and Pandas, allowing for convenient data processing and analysis before using it in neural networks.

Hugging Face, Inc. is an American company that develops tools for creating applications using machine learning. She is best known for creating the Transformers library for natural language processing and a platform that allows users to share machine learning models and datasets. Hugging Face provides a wide variety of pre-made models, including BERTs, that can be used directly. It significantly reduces the time needed to develop and train the model. It provides a simple interface for downloading, configuring, and using models. Hugging Face offers additional tools and libraries for working with neural networks, including various modules for processing text data, evaluating models, and conveniently visualizing results. Hugging Face has a large and active community of developers and researchers, which contributes to the constant updating and improvement of the platform. Many resources are also available to help with questions and problems that arise when working with BERT models.

The Jupyter project is an interactive computing platform that works with all programming languages through free software and open standards. This platform, which supports over 40 programming languages, is viral for developing Python, R, Julia, and Scala code. The main element of Jupyter is "notebooks" - a file format that contains code and its executed results to support machine learning and data analysis projects.

Critical Benefits of Jupyter Notebook:

-

• code editing in the browser, with automatic syntax highlighting;

-

• the ability to execute code from the browser with the results of the calculations generated by the code;

-

• displaying the result of the calculation using multimedia formats such as HTML, LaTeX, PNG, SVG, etc.;

-

• editing rich text in the browser using the Markdown markup language, which allows you to add comments to the code without being limited to plain text;

-

• the ability to easily include mathematical notation in commenting cells using LaTeX and be rendered by MathJax.

Statistical evaluation of embedding models. A library specially developed for these purposes can be used to evaluate models of misinformation identification. Like genism evaluations, it provides methods for assessing models but focuses on analysing texts and determining their reliability. Currently, there is no standard, generally accepted library in Python that specializes exclusively in model evaluation for misinformation identification with the same functionality as genism-evaluations for word embeddings. However, for the development and testing of disinformation detection systems, libraries such as:

-

• Scikit-learn for machine learning,

-

• NLTK and SpaCy for natural language processing,

-

• Hugging Face's Transformers for working with advanced transformer-based models that can be adapted for specific disinformation detection tasks.

-

• A library for evaluating disinformation detection algorithms implements methods designed to assess the performance of models in detecting fake news and propaganda, particularly in data-limited language resources. It allows users to automatically generate specialized test sets for any supported languages, using resources such as Wikidata to create sets of articles or texts often associated with disinformation.

-

• Special test sets. Each test set may contain articles or text messages classified (e.g., politics and health) that have already been identified as targets of disinformation. Users can select items from Wikidata or other databases to form these categories.

Similar to Topk and OddOneOut, evaluation functions in the library may include:

-

• Precision@K estimates the accuracy of disinformation identification by counting the number of times fake news was correctly identified among the first K results.

-

• Recall Rate is a score that measures the ability of a system to detect all possible instances of misinformation in a dataset.

The evaluation results can be represented as a tuple of five values that include:

-

• general accuracy of the model;

-

• accuracy by category;

-

• a list of categories where the model showed the worst results;

-

• total score for all tests (number of correctly identified cases);

-

• category score (number of correctly identified cases in each category).

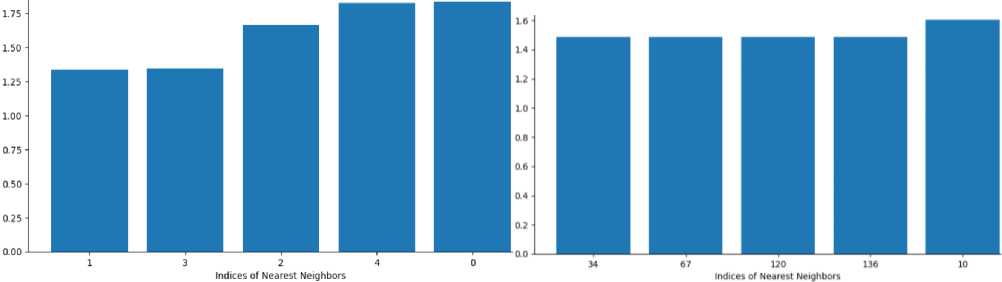

Vector database. To create a system that identifies disinformation, you can consider using a vector database to store articles, news and other textual records for further analysis of fakes. A vector database for such a system could include the following components [24-28]:

-

• Embedded vector represents text derived from advanced NLP models such as BERT or GPT to preserve deep contextual knowledge about the text.

-

• The full text of an article or news story analysed for misinformation.

-

• Additional metadata is information about the source, author, date of publication, and any other metadata that can help verify the authenticity of the information.

When a user receives a query, the query text is transformed into a vector using the embedding model, after which a database search is performed to find the most similar vectors and corresponding texts that can answer the query or refute misinformation.

Web interface. Streamlit was chosen to develop the web interface for several reasons:

• Speed of development. Streamlit lets you quickly create interactive web interfaces with minimal code, which is ideal for displaying research results and real-time models.

• Ease of use and adoption among data analysts. Streamlit's interface is easy to use and configure, allowing nonweb developers to integrate their models quickly.

• Integration with Python. Since most embedding models are developed in Python, Streamlit provides seamless integration with the Python ecosystem, simplifying interaction with the developed models.

• Community and ecosystem. Streamlit has a growing community and ecosystem of extensions and custom components that can enhance its functionality. You can find various resources and support from the Streamlit community.

4. Experiments, Results and Discussion

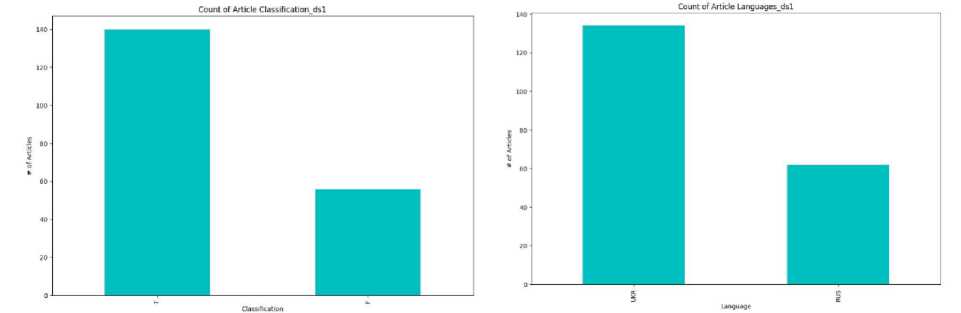

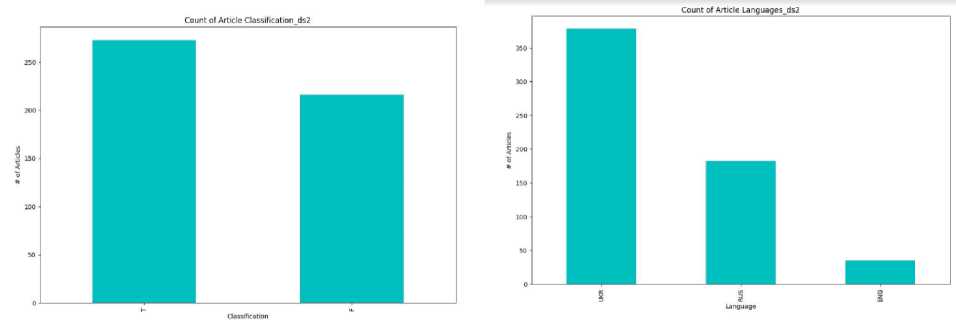

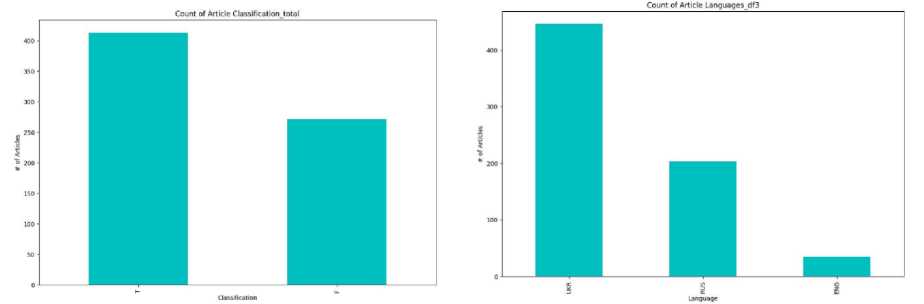

Data collection for the project. You need to choose the correct data for the neural network to work efficiently and train it. Since my topic is related to the search for fake news about the Russian-Ukrainian war, this is exactly what was needed, along with true news. However, we faced the problem of no ready-made datasets on this topic. Accordingly, it was necessary to collect data independently from the beginning. As a data source, we chose various Ukrainian and Russian news sites. It allowed us to get a variety of data on the russian-Ukrainian war. An additional analysis of each news item was carried out during the data collection. We checked the source, the date of publication, and the author of the news and looked for whether this news was mentioned anywhere else. Since we manually entered everything from the beginning, we immediately marked this news in the Label column, where it marked 0 for true news and 1 for fake news. Later, based on these labels, the neural network is learned and trained. The development and content of a dataset for training and testing a machine-learning model is based on the following steps:

Step 1. Research the information base on web resources for the period after the full-scale invasion of Ukraine on February 24, 2022.

Step 2. Form a database of social media chats to identify disinformation, namely, fakes, propaganda and manipulation.

Step 3. Form a database of fact-checking resources to trace the refutation of fake information.

Step 4. A selection of the central narratives of hostile language is needed for the reliability of information sampling and better coverage.

Step 5. Search for at least two posts for each fake: true-false and fake-true pairs.

Step 6. Search for a proportional number of posts in both Ukrainian and Russian, true and false, for more excellent sampling reliability.

Step 7. Formation of criteria for adding fakes to the dataset table.

Step 8. Filling the dataset with the necessary information.

The paper has developed and filled a dataset of disinformation, taking into account the above steps.

Following these steps allows you to solve the following research tasks:

-

• identify the characteristics of primary sources and distribution routes, as well as the characteristics of inauthentic behaviour of chat users;

-

• analyze the statistical characteristics of the indicators of the intensity of the development of information threats;

-

• describe the existing sources of detecting and refuting fakes, propaganda and disinformation;

-

• analyze the existing methods for determining the characteristics of primary sources and distribution routes, as well as the attributes of inauthentic behaviour of chat users;

-

• determine the method of developing and filling disinformation datasets;

-

• to carry out experimental studies based on dataset data to find criteria and parameters for the distribution and change of the dynamics of participants' behaviour.

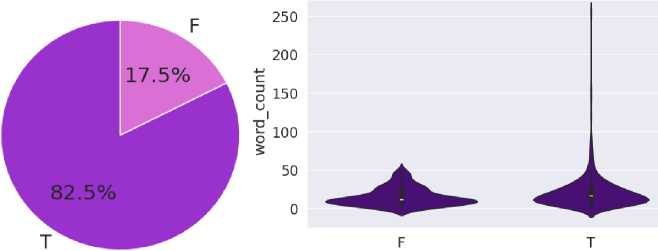

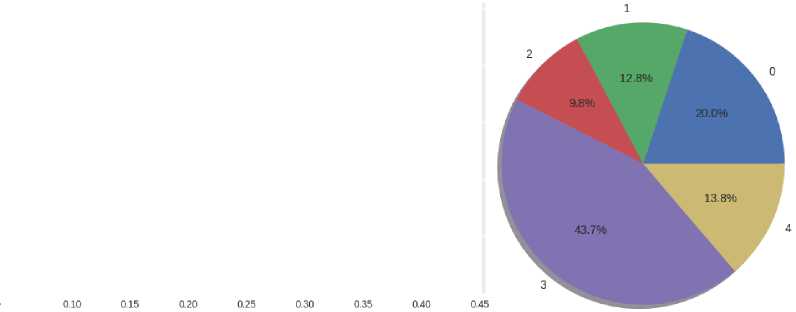

For the study, a dataset (Fig. 10) was collected, which consists of various news and posts on social networks, such as Facebook, Telegram, etc. (true-false). Narratives based on analysing open web resources in the context of a full-scale invasion are studied. The data with fakes and sources of their refutation were investigated to further fill the dataset and identify the primary sources of disinformation. The developed dataset demonstrates:

-

• variability in time of posts, i.e. that posts can be both edited and deleted; For example, this post in the picture no longer exists;

-

• the possibility of self-propagation for different purposes;

-

• the presence of reposts and the possibility of writing both fake and truthful information in the same language, in this case in Ukrainian (also paragraph 1 and paragraph 2 in Fig. 10 in Russian, the language of the aggressor);

-

• Not being able to view some posts due to privacy settings.

Fig.10. 'Class' pie chart for distribution and 'Text' length

Neural network architecture. To accomplish this task, we use the BERT neural network model. As described in the previous paragraphs, it is suitable for natural language processing tasks and does not require large data.

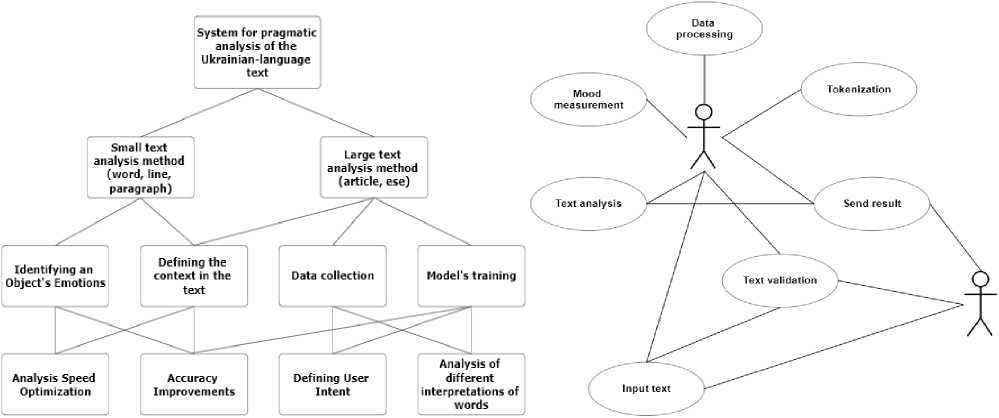

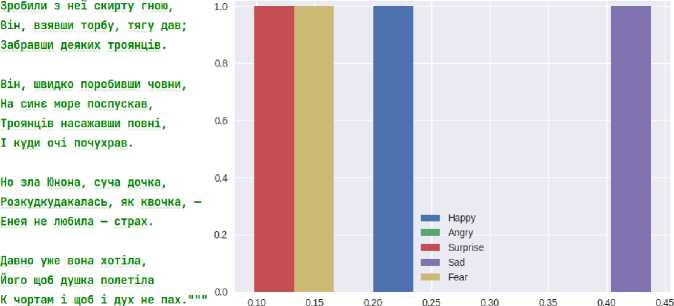

Sentiment analysis. The General Goal is to create a system for pragmatic analysis of the Ukrainian-language text, which allows you to analyse what the given text means. The goal is to conclude the given text. Decisions at the next level involve developing an analysis method. The diagram shows the types of methods. The initial step is to analyse a small text and use a method of complete analysis. The following goals are to determine the context in texts that confuse concepts and add uncertainty to the text. An important step is determining the text's emotions and the user's intent (Fig. 11). First, you must choose an artist whose lyrics must be analysed (on these texts, it is better to check the methods of studying the emotionality of the text, because usually the poems are overloaded with them). Next, you need to enter the data for analysis and perform validation (for example, if you entered only text or symbols). You can enter the text yourself or find it on the Internet. After the input data, that is, the text has been entered, it is necessary to preprocess the text and tokenise it. After that, we send the text for analysis and collect statistics, after which we can determine the mood of the object described in the text after the user receives the study result. NLTK has been called "a great tool for teaching and working with Computational Linguistics with Python" and "a great library for playing with natural language". Libraries such as Pandas and NumPy will also be used for data manipulation.

Fig.11. Tree of goals for sentiment analysis of Ukrainian text and activity diagram

As a result, a script was created that analyses emotions in the text and highlights positive and negative sentences. First, you must select and write the text in the TEXT variable in the text.py file. For a test version, let's take an excerpt from Ivan Kotlyarevsky's work "Aeneid" (Fig.12). Before the analysis, the text was tokenised using the NLTK library and the sent_tokenize method. Tokenisation took place by sentences. The next step is to analyse each sentence and identify the emotions that prevail in the text (Fig 12c). You can understand that in the first sentence, only joy is manifested; for example, in the 3rd sentence, it is sadness and anger.

Еней був парубок моторний I хлопець хоть куди козак, Удавсь на всее зле проворний, ЗавзятТший од ecix бурлак.

Но греки, як спаливши Трою, Зробили з нет скирту гною, BiH, взявши торбу, тягу дав; Забравши деяких троянц!в.

BiH, швидко поробивши човни, На сине море поспускав, Троянц1в насажавши повн!, I куди он! почухрав.

Давно уже вона xorina, Його щоб душка полетала К чартам i щоб i дух не пах.

■» Токен1зований текст;

'\п'

Еней був парубок моторний\п

I хлопець хоть куди козак,\п' 'Удавсь на всее зле проворний,\п

Завзяттший од вс!х бурлак.', 'Но греки, як спаливши Трою,\п' 'Зробили з не! скирту гною,\п'

В!н, взявши торбу, тягу дав;\п’ 'Забравши деяких троянцав.',

В!н, швидко поробивши човни,\п 'На сине море поспускав,\п'

Троянцев насажавши повн!,\п'

Fig.12. Test data for analysis, text tokenisation results, and five-sentence analysis results from the text

In the following Fig. 13a, you can see the average values of the analysis. A method was added for statistics to determine whether sentences are positive or negative. As you can see in the picture, this text has two positive sentences (Fig. 13b) and three negative (Fig. 13c).

»> Negative Sentences Count : 3 Aeneas was a young man

And the guy at least somewhere Cossack, Succeeded in all evil agile, Zealous of all burlaks.

»> Positive Sentences Count : 2

He quickly made boats.

He descended on the blue sea,

|

Emotion |

Value |

Trojans planted full. |

|

|

О |

Happy |

0.200 |

And where the eyes scratched. |

|

1 |

Angry |

0.128 |

|

|

2 |

Surprise |

0.098 |

She's wanted for a long time, |

|

3 |

Sad |

0.438 |

Let his soul fly |

|

4 |

Fear |

0.138 |

To hell with the spirit and not smell |

But the Greeks, as burning Troy, They made a pile of manure out of it, He took the bag and gave it a try; Taking away some Trojans.

But evil Juno, sucking daughter, Razkudkudakalas like a hen -Aeneas did not like - fear.

Fig.13. Average values of the analysis, sentences with a positive character and negative sentences

For statistics, three favourite texts were found on the Internet:

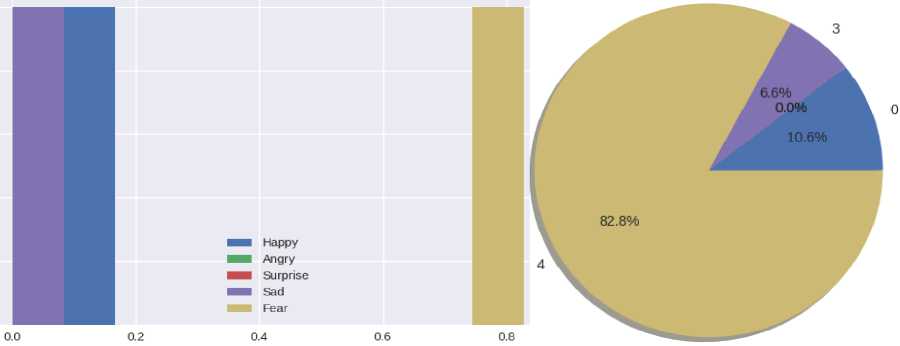

-

• Ivan Kotlyarevsky's poem "Aeneid" (Fig. 14). You can immediately understand that the Aeneid is a literary text in which many emotions are manifested. Fear is the most common emotion in the text. This text is difficult to analyse because many literary transitions are unclear immediately.

ТЕХТ1 = ......

Еней був парубок моторний I хлопець хоть куди козак, Удавсь на всее зле проворний, ЗавзятХший од Bcix бурлак.

Но греки, як спаливши Трою,

Fig.14. The text of the poem and histograms

-

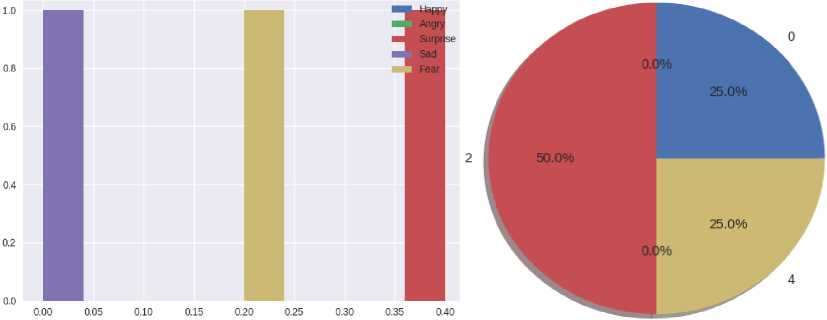

• Text from Ukrainian news about the football match of Ukraine against Wales (Fig. 15-16). Based on the results of the analysis of the football article, you can understand that the text is about something that a person does not care about because the text contains emotions of joy and surprise. The emotion of fear is probably the understanding that the national team can lose the match.

ТЕХТ2 = ......

Зб!рн!й УкраХни з футболу залишилося зробити один крок для виходу на ЧС-2022.

У ф!нал! плей-оф украХнцям потр!бно здолати Уельс на пол! суперника.

Матч УкраХна - Уельс визначить останнього учасника ЧС-2022 з футболу серед европейських зб!рних.

Обидв! краХни потрапили у плей-оф за п!дсумками групового етапу, де ф!н!шували другими у своХх трупах.

А в першому раунд! плей-оф здобули перемоги: УкраХна об!грала Шотланд!ю, Уельс - Австр!ю.

Футбол УкраХна - Уельс в!дбудеться в КардХфф! на пол! валл!йц!в.

Дата матчу з футболу: УкраХна - Уельс 5 червня.

Це буде четверта гра в icTopii м!ж цими збХрними: УкраХна ще жодного разу не програвала валл!йцям.

Fig.15. The text of the article about football

Fig.16. Histograms

-

• Text with news about the total losses from the russian federation in Ukraine (Fig. 17-18). After analysing the news about the war, it becomes possible to immediately see that the emotion of fear here prevails over others at times, which is entirely true.

TEXTS = """Загальн! збитки в!д росгйського военного нападу в с!льському господарств! Украгни сягнули 4,3 млрд долар!в, найблльш! втрати - внасл!док знищення чи пошкодження уг±дь.

Про це йдеться в Огляд! збитк!в в±д в!йни в с!льському господарств! Укратни в±д Центру досл!джень продовольства та землекористування KSE Institute сп!льно з Мхнхстерством аграрно! пол!тики.

Так, у структур! пошкоджень найбзльш! втрати ф!ксуються внасл±док знищення або часткового пошкодження с!льськогосподарських yriflb та незбору врожаю - 2,1 млрд долар!в.

KpiM прямого пошкодження земель, окупац!я, втйськов! flii та м!нне забруднення обмежують доступ фермер!в до пол!в i можливостД збору врожаю.

DpieHTOBHO, 2,4 млн га озимих культур вартхстю у 1,4 млрд дол залишаться нез!браними внасл!док arpecii РФ.

Fig.17. Text from the news about the losses from the russian federation

1,0

0,8

0,4

0.2

Fig.18. Histograms

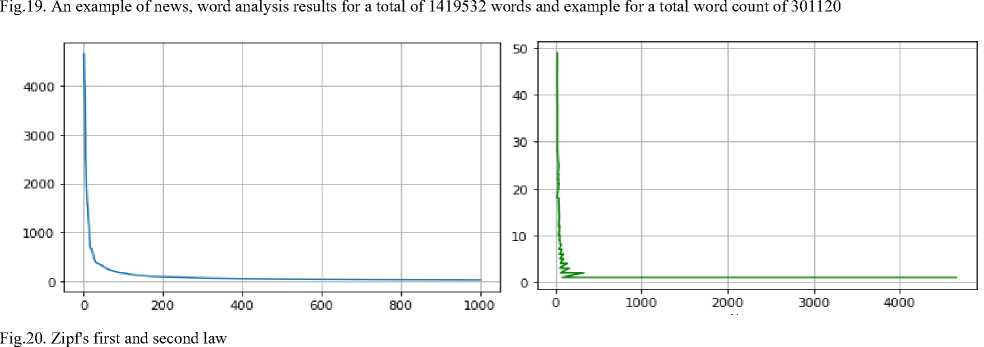

Based on sentiment analysis, we used data from Telegram channels (Fig. 19a) for fake news research (such as mass media or political news). Telegram has a function that allows you to export all text (and not only) data for the entire period from any channel. It is the "Export chat history" function. Here, you can download data in video, photo, files, text and other formats. We care only about the text. We can also choose the format of the downloaded text. We will select json, as it is more convenient for manipulating the data frame. To begin with, open the file with data about the channel's telegram messages. Next, we filter the data frame from {'type': 'service'} since we are only interested in messages. We also open the sheet to extract the text from it. And we run the code for Zipf's law analysis (Fig. 19b-20). The program results show that the most popular words are {in, on, in, and, with, and, that, for, to, not} (Fig. 19b). In the program, data from the Ukrainian telegrams of the channel "TSN Novyy" were used (Fig. 21-22). Since the structure of telegram messages in all groups is the same, the user can successfully use any other news telegram channel. Now, the program has been launched on a channel with fewer publications. In the previous news, the top 10 words {in, on, in, and, with, and, what, for, to, not}. In this: {on, in, with, in, and, for, to, from, for}. 70% match.

|

Rank | |

Word | |

requency |

|||

|

1| |

У 1 |

4653| |

|||

|

2 |

— |

4133| |

|||

|

3 |

на |

3959 | |

|||

|

4 |

в |

3566| |

|||

|

5 |

та |

2532| |

|||

|

6 |

в |

2338 |

Rank | |

||

|

7 |

1971| |

Word |

Frequency |

||

|

8 |

до 1 |

1765| |

|||

|

5 |

ва |

1617 | |

1 1 |

на |

706 | |

|

10 | |

ДО |

1554 | |

2 I |

в |

6011 |

|

11| |

не |

1485 | |

з| |

3 |

469 | |

|

12 I |

про | |

1322 | |

4| |

у |

425 | |

|

13 | |

укратни |

1212 | |

5| |

та |

418 | |

|

14 |

укра!н! |

1149 | |

6 I |

за |

250 | |

|

15 | |

в!д | |

889 | |

7 | |

до |

237 | |

|

16 | |

як! |

753 | |

8| |

- |

213 | |

|

17 | |

694 | |

9 |

Е1Д |

196 | |

|

|

18 | |

ЦО |

668 | |

101 |

Для |

192 | |

|

11 |

не |

186 | |

|||

|

19 | |

а |

665 | |

12 | |

183 | |

|

|

20 |

як |

655 |

|||

|

211 |

для |

620 | |

13 I |

укратн! |

158 | |

|

141 |

про |

150 | |

|||

|

22 1 |

п!д |

600 | |

|||

|

15 | |

киев! |

146 | |

|||

|

2 3 |

13 |

597 | |

укратни що |

||

|

24 |

О' 1 0 1 |

559 | |

16 | 17 | |

138 | 1211 |

|

|

23 |

536 | |

18 | |

120 | |

||

|

26 |

сша |

477 | |

п!д |

||

|

27 | |

черев |

457 | |

19 | |

89 | |

|

|

201 |

облает! |

89 | |

|||

|

28 | |

час |

444 | |

211 |

як! |

88 | |

|

29 |

який |

4111 |

|||

|

30 |

добу |

397 | |

23 | |

як |

79 | |

|

311 |

президент | |

393 | |

241 |

рос!! |

76 | |

|

32 | |

облает! |

387 | |

25 | |

через |

74 |

|

33 1 |

П1СЛЯ |

379 | |

26 | |

РФ |

74 |

|

34 | |

вже |

372 | |

27 | |

рос!йських |

73 | |

|

35 | |

□ ocii |

3711 |

28 | |

людей |

72 |

|

36 | |

р* |

362 | |

29 | |

ще |

71 |

|

37 | |

киев! |

357 | |

301 |

окупанти |

67 | |

|

38 1 |

але |

353 1 |

-. Л 1 |

||

Named Entity Recognition (NER) is part of Natural Language Processing. The main goal of NER is to process structured and unstructured data and classify these named entities into predefined categories. Some common categories include name, location, company, time, monetary value, events, etc. (Fig. 22). In a nutshell, NER deals with:

-

• Named Object Recognition/Detection – Identify a word or series of words in a document.

-

• Named Object Classification – Classifies each detected object into predefined categories.

Named entity recognition makes your machine-learning models more efficient and reliable. However, it would help if you had high-quality training datasets to ensure your models perform optimally and achieve their goals. All you need is an experienced service partner who can provide you with quality datasets ready to use. If that's the case, Shaip is your best bet. Contact us for comprehensive NER datasets to help you develop practical and advanced machine-learning solutions for your AI models.

"id”: 35703,

"type": "message",

"date": "2022-06-15T09:28:42",

"edited": "2022-06-15T09:34:48",

"from": "ТСН новини / TCH.ua", "fromid": "channel!305722586", "file": "(File not included. Change data exporting settings to download.)", "thumbnail": "(File not included. Change data exporting settings to download. "media_type": "video_file", "mime_type": "video/mp4", "durationseconds": 25, "width": 1280, "height": 720, "text": [

"ft", {

"type": "italic",

"text": "Гарна робота наших захисник1в.\п\п"

"В1йськов! з 79-i окремо! десантно-штурмово! бригади МиколаТв записали на св: ] Ь {

"id": 35704,

"type": "message",

"date": "2022-06-15T09:39:20",

"edited": ”2022-06-15109:41:01",

"from_id": "channell305722586",

"text": [

"0",

{

"type": "bold",

"text": "Основы! зусилля pociHH "

{

"type": "text_link",

"text": "зосереджен!",

"type": "bold",

"text": " на п!вденн!й частин! Харк!всько! облает!, Донецьк1й, Луганськ!й oi }/

". Таким чином окупанти мають нам!р оточити укратнськ! сили на сход! Укратни

В(йськов) з 79-1 окремо! десантно-штурмово! бригади МиколаТв записали на СВ1Й рахунок чертову знищену ворожу цшь, порвавши шляхопрокладая БАТ-2 роайських окупайте.

4 2316 4 407 V 149 4 72 4 40 в 9 0 8 ® 5

Ф194.6К edited 9:28

Основы зусилля роаян зосереджен! на ывденнй частин! Харювсько! облает!, Донецыий, Лугансьюй областях. Таким чином окупанти мають нам!р оточити украТнсью сили на сход! УкраТни та захопити всю Донецьку та Луганську облает! — повдомляють анал!тики 1нституту вивчення в!йни (ISW).

ф 1843 4134 ®116 0 43 ф 30 Я 20 V 13

4 12 0 11 А 9 0 7 0 ,96.6k edited 9:39

Fig.21. Code for user interface and examples of news

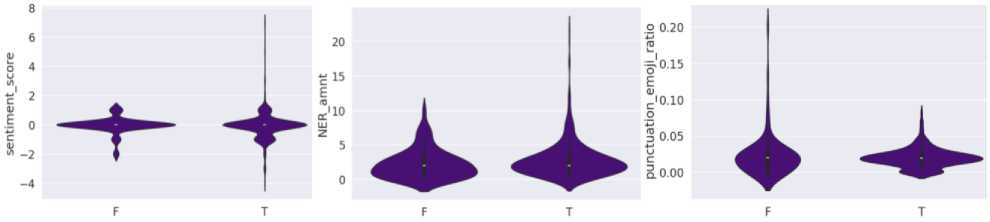

Fig.22. Sentiment analysis for news (fate and true) from our dataset and NER

Most popular NERs in class T: [('України', 55), ('Україні', 52), ('РФ', 27), ('Росія', 26), ('Україна', 24), ('США', 23), ('ЗСУ', 23), ('Росії', 12), ('Україну', 11), ('Києві', 11), ('Володимир Зеленський', 8), ('АЕС', 8), ('Зеленський', 7), ('Донеччині', 7), ('Україною', 7), ('НАТО', 7), ('росії', 6), ('ООН', 6), ('рф', 6), ('ГУР', 5), ('Охматдит', 5), ('Львові', 5), ('Харкові', 5), ('Китаю', 5), ('Курщині', 4), ('Києва', 4), ('Київ', 4), ('Голодомор', 4), ('Польщі', 4), ('Нью-Йорку', 4), ('Зеленського', 4), ('Вугледар', 4), ('Володимира Зеленського', 4), ('Чехії', 4), ('Трамп', 4), ('Африки', 3), ('ОВА', 3), ('Харкова', 3), ('Швейцарії', 3), ('СБУ', 3), ('Японії', 3), ('Тернопільщині', 3), ('Румунії', 3), ('StopFake', 3), ('Вовчанський агрегатний завод', 3), ('Львова', 3), ('МЗС', 3), ('ВОЛЯ', 3), ('Китай', 3), ('Байден', 3), ('Дмитрука', 3), ('Лондоні', 3), ('Захід', 3), ('Bloomberg', 3), ('Монтре', 3), ('Запоріжжя', 3), ('Іван Федоров', 3), ('Вугледара', 3), ('Збройні сили', 2), ('Донецькій області', 2), ('Донбас', 2), ('Африка', 2), ('росія', 2), ('Дніпра', 2), ('Харкову', 2), ('Таджикистану', 2), ('Херсонщині', 2), ('Крокус Сіті Хол', 2), ('УПЦ МП.', 2), ('Закарпаття', 2), ('Росію', 2), ('Олег Синєгубов', 2), ('кремль', 2), ('Краматорська', 2), ('Курській області', 2), ('Франції', 2), ('Сил оборони', 2), ('Єврокомісії', 2), ('Путін', 2), ('DeepState', 2), ('Туреччина', 2), ('Естонії', 2), ('Верховна Рада України', 2), ('Гітлера', 2), ('Курської області', 2), ('ТУ САМУ СІЛЬ', 2), ('Умєров', 2), ('Гнезділова', 2), ('Суспільному', 2), ('Ради Безпеки ООН', 2), ('Генасамблеї ООН', 2), ('Харківщині', 2), ('Грузії', 2), ('Великої Британії', 2), ('ЄС', 2), ('Анатолій Тимощук', 2), ('Байдена', 2), ('Будапешті', 2), ('Олександра Усика', 2), ('Кракова', 2), ('ВР', 2), ('Politico', 2), ('МЗС України', 2), ('Павела', 2), ('Путіна', 2), ('Ердоган', 2), ('Запорізької ОВА', 2), ('Байдену', 2), ('Конгресу', 2), ('Ван Ї.', 2), ('Дональд Трамп', 2), ('Reuters', 2), ('Джо Байдена', 2), ('Запоріжжі', 2), ('Нацбанк', 2), ('Львів', 2), ('Покровська', 2), ('Кремлі', 2), ('The New York Times', 2), ('Anonymous', 2), ('Тихому океані', 2), ('Міністерство оборони Китаю', 2), ('Словаччини', 2), ('Фіцо', 2), ('Заходу', 2), ('USKO MFU', 2), ('Криму', 2)]

Most popular NERs in class F: [('ЗСУ', 8), ('США', 7), ('рф', 5), ('Україні', 5), ('Донецькій області', 4), ('Донбасі', 4), ('України', 4), ('Курщину', 3), ('Курщині', 3), ('Україну', 3), ('Дональд Трамп', 3), ('Курській області', 2), ('Донеччині', 2), ('Курському напрямку', 2), ('Донеччини', 2), ('The Financial Times', 2), ('Дніпра', 2), ('Кремль', 2), ('Львові', 2), ('Харкові', 2), ('Росії', 2), ('Зеленського', 2), ('Польщі', 2), ('Чарльз III', 2), ('Республіканської партії', 2)]

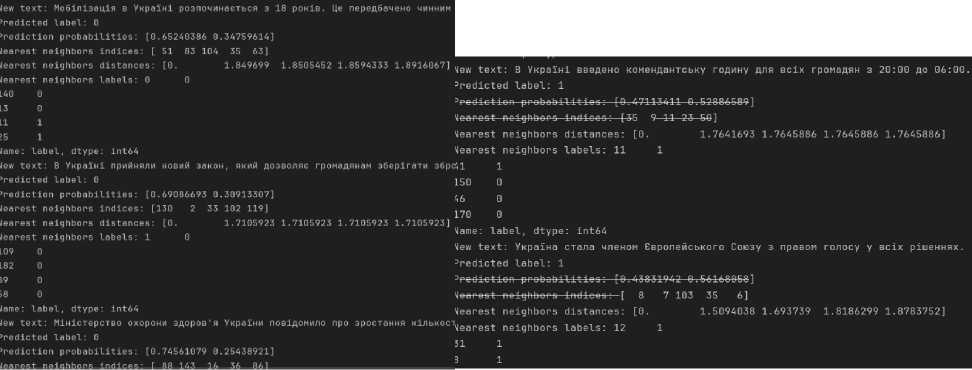

Our program aims to detect disinformation in text documents. Misinformation can come in many forms, such as fake news, manipulative articles, or distorted information that misleads readers. The program's name, "Disinformation Detector", clearly reflects the primary purpose and function of the software. The program offers a tool for detecting disinformation in text documents [29-33], which can be helpful for a variety of purposes, including analysing texts for truthfulness, detecting manipulation or fake news, and for research in media literacy and information security.

Purpose of the program:

-

• Detection of misinformation in the text . The program's primary function is to analyse the text to identify fragments containing misinformation. It may include texts that spread false or distorted information, manipulate facts or create a misleading impression.

-

• Choice of model and parameters. The program was developed considering the possibility of analysing different formats of text documents. It supports formats such as CSV, which allows you to conveniently download and analyse text data. The CSV format is one of the most common formats for storing tabular data, which makes it versatile for software processing.

-

• The model Training module of the app allows it to create a machine learning model that can "learn" from previously collected data. The process of training the model is for the program to automatically analyse the data set, identify patterns in it, and establish connections between various text characteristics that may indicate the presence of misinformation. For this purpose, a proprietary dataset focused on the Ukrainian language, including multiple examples of disinformation and truthful texts. This dataset contains texts from various sources, including news, social media, blogs, and other publications. Data was collected to create the most accurate and representative set for training the model.

-

• Display Results. This program feature provides a user-friendly interface for displaying analysis results to the user via the command line. The program outputs the results, including the predicted label (true or false) and probabilities for each class, simplifying interpreting and using the information. In addition, the program can generate reports in CSV format, which allows users to save and analyze the results later.

The functional purpose of the program is to analyze text documents to identify fragments that may contain misinformation. The main aspects of this functional purpose include:

-

• Analysis of text documents. The program can accept text documents in CSV format and analyze them for the presence of misinformation.

-

• Detection of fragments with disinformation. During the analysis, the program identifies parts of the text that indicate the possible use of disinformation. These can be fragments that look like fake news, manipulative articles or other signs that indicate distortion of information.

-

• Model training. The program uses its dataset to train the model, which allows it to consider the specifics of the Ukrainian language and context. The model learns to recognize different types of misinformation based on input data.

-

• Notification of results. After the analysis, the program notifies the user of the results, indicating the fragments of text that contain misinformation and providing a rating or other information about the detected misinformation. The program can also generate reports for further analysis and storage of results.

Structure of the program. This program is divided into modules and components to organize and structure the code better. They perform various functions and tasks within the program. Components and modules:

-

• The text analysis module contains a set of methods responsible for processing the text, identifying fragments that may contain misinformation, and calculating their percentage relative to the total text volume. These methods provide analysis of textual data to detect and assess the presence of misinformation, helping the user to understand how susceptible the text is to false information or manipulation.

-

• The document Upload Module allows the user to upload text documents to the program for further analysis. It provides an interface through which the user can select a file or files from their device and pass them to the application for processing. Once downloaded, the program may analyse the content of these documents to detect misinformation or other specific characteristics.

-

• Model Training Module includes a set of techniques for training a machine learning model based on a dataset. It enables the program to use machine learning algorithms to analyse and learn the structure and features of input data. These methods may include data preparation, selection and tuning of learning algorithms, execution of the learning process, and evaluation of the resulting models. The output of this component is a trained model that can be used for further analysis and prediction based on new input data.

Main functions of the program.

-

• Training a machine learning model based on a dataset .

def load_training_data(file_path):

df = pd.read_csv(file_path)

return df def train_model(training_data):

vectorizer = TfidfVectorizer()

X_text = vectorizer.fit_transform(training_data["text"]).toarray()

X_train, X_test, y_train, y_test = train_test_split(X_text, y, test_size=0.2, random_state=0)

trained_model = MultinomialNB()

y_prediction = trained_model.predict(X_test)

acc = accuracy_score(y_test, y_prediction)

f1 = f1_score(y_test, y_prediction)

confusion = confusion_matrix(y_test, y_prediction)

print(f"Accuracy: {acc}")

print(f"F1: {f1}")

print(f"Confusion Matrix: ")

print(confusion)

return trained_model, vectorizer

-

• Downloading text documents for analysis.

def upload_document():

file_path = filedialog.askopenfilename(filetypes=[("CSV files", "*.csv")])

if file_path:

df = pd.read_csv(file_path)

content = df["text"].tolist()

return content else:

messagebox.showwarning("Invalid File", "Upload a valid CSV document...") return None

-

• Text analysis with detection of fragments containing misinformation.

def analyse_text(input_text, model, vectorizer):

input_vector = vectorizer.transform([input_text]).toarray()

prediction = model.predict(input_vector)

prediction_proba = model.predict_proba(input_vector)

if prediction[0] == 1:

result = " Misinformation "

probability = prediction_proba[0][1] * 100

else:

result = " True information "

probability = prediction_proba[0][0] * 100

print(f"Результат: {result}")

print(f" Probability: {probability:.2f}%")

return result, probability

-

• Display of analysis results in the command interface.

def display_results(result, probability):

print("\nРезультати аналізу:")

print(f" Result: {result}")

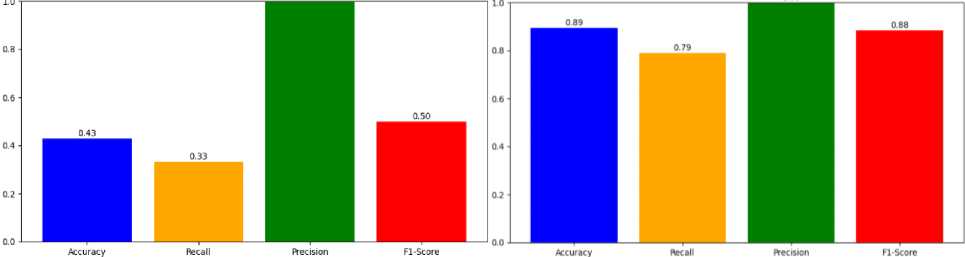

print(f" Probability: {probability:.2f}%")