Robust Algorithm for Face Detection in Color Images

Author: Hlaing Htake Khaung Tin

Journal: International Journal of Modern Education and Computer Science (IJMECS)

Issue: vol. 4, no. 2, 2012.


A robust algorithm is presented for frontal face detection in color images. Face detection is an important task in facial analysis systems, which need faces to be localized in a given image beforehand. Applications such as face tracking, facial expression recognition, and gesture recognition, for example, have the prerequisite that a face is already located in the given image or image sequence. Facial features such as the eyes, nose, and mouth are automatically detected based on properties of the associated image regions. On detecting a mouth, a nose, and two eyes, a face verification step based on eigenface theory is applied to a normalized search space in the image relative to the distance between the eye feature points. The experiments were carried out on test images taken from the internet and various other randomly selected sources. The algorithm has also been tested in practice with a webcam, giving (near) real-time performance and good extraction results.

Keywords: facial extraction, face detection, skin detection, robust algorithm, face location, face recognition

Short URL: https://sciup.org/15010399


Full text of the article: Robust Algorithm for Face Detection in Color Images

Published Online March 2012 in MECS DOI: 10.5815/ijmecs.2012.02.05

I. Introduction

Applications such as face tracking, facial expression recognition, face recognition, and gesture recognition have the prerequisite that a face is already located in the given image or image sequence. Numerous face detection techniques have been proposed in the literature to address the challenging issues associated with this problem. These techniques generally fall into four main categories: knowledge-based, feature invariant, template matching, and appearance-based [1].

The detection of faces and facial features has received researchers’ attention over the past few decades. The ultimate goal has been to develop algorithms whose performance equals that of the human visual system. In addition, automatic analysis of human face images is required in many fields, including surveillance and security, human-computer interaction (HCI), object-based video coding, virtual and augmented reality, and automatic 3-D face modeling.

Hlaing Htake Khaung Tin presented a method for fast and accurate extraction of feature points such as the eyes, nose, mouth, and eyebrows from dynamic images for the purpose of face recognition. This method achieves high position accuracy at a low computing cost by combining shape extraction with geometric features of facial images such as the eyes, nose, and mouth [3].

In facial feature extraction, local features of the face, such as the nose and then the eyes, are extracted and used as input data. This has been the central step for several applications. Various approaches have been proposed to extract these facial points from images or video sequences of faces. The main approaches are geometry-based, template-based, color segmentation, and appearance-based techniques [8].

The ability of a computer system to sense the user’s emotions opens a wide range of applications in different research areas, including security, law enforcement, medicine, education, and telecommunications [9]. Combining skin color with facial features detected inside the skin color blobs helps extend this type of approach towards more robust face detection algorithms [4][5].

Facial features derived from grayscale images, along with some classification models, have also been used to address this problem [6]. Menser and Muller presented a method for face detection that applies PCA to skin-tone regions [7]. Existing face detection algorithms in the literature, however, have achieved different levels of success, with varying algorithmic complexity and detection performance.

Face recognition is a major area of research within biometric signal processing. Since most techniques (e.g., Eigenfaces) assume that face images are normalized in terms of scale and rotation, their performance depends heavily on the accuracy of the detected face position within the image. This makes face detection a crucial step in the process of face recognition [8].

Several face detection techniques have been proposed so far, including motion detection (e.g. eye blinks), skin color segmentation [9] and neural network based methods [10]. Motion based approaches are not applicable in systems that provide still images only. Skin tone detection does not perform equally well on different skin colors and is sensitive to changes in illumination.

Current face detection systems can be classified according to whether they are based on the whole face or on characteristic features [1] [13]. In the first approach, a representative database is generated from which a classifier learns what a face is (Neural Networks, Support Vector Machines, Principal Component Analysis-Eigenfaces, ...). These systems are sometimes remarkably robust [14] [15] but too complex to run in real time. In the second approach, three levels of analysis can be distinguished. At the lowest level, gray pixel values, movement, or color are used to detect blobs that look like a frontal face. These approaches are not robust but can run in real time.

At the medium level of analysis, characteristics that are independent of lighting conditions and face orientation are sought. At the highest level, face features such as the eyes, nose, mouth, and face outlines are associated. Deformable models, snakes, or Point Distribution Models can then be used. These last models require good image resolution and are not easily achievable in real time. However, once the face is detected, it is then possible to track it [17].

In this paper, we present a robust approach for frontal face detection in color images based on facial feature extraction and the use of appearance-based properties of face images. It follows the face detection algorithm proposed by Menser and Muller, which localized the computation of PCA to skin-tone regions. The approach begins with a facial feature extraction algorithm that shows how image regions segmented using chrominance properties can be used to detect facial features, such as the eyes, nose, and mouth, based on their statistical, structural, and geometrical relationships in frontal face images.

Several teams have already implemented skin color adaptation [17]; nevertheless, they assume that the camera is correctly calibrated and therefore use a skin color signature that is known a priori [14] and progressively refined during the sequence.

The paper is organized as follows. Section 2 describes the facial feature extraction technique. Section 3 describes the proposed system. Section 4 presents eigenfaces. Section 5 describes appearance-based face detection using facial features and PCA (Principal Component Analysis). Section 6 presents experimental results, and Section 7 gives some conclusions.

-

II. Facial Feature Extraction

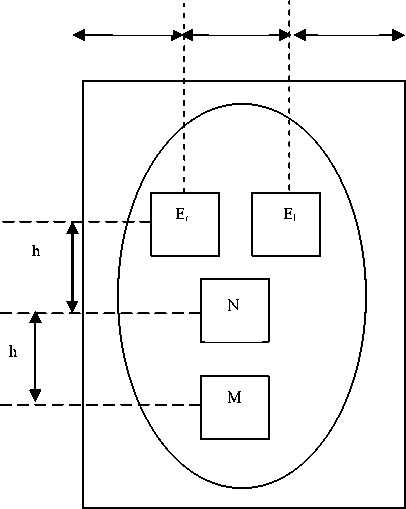

Figure 1 shows most of a frontal face image containing a mouth, a nose, and two eyes. $E_l$ and $E_r$ represent the left and right eyes respectively, while $N$ and $M$ represent the nose and mouth features. The distance between the two eyes is $w$, the distance from the mouth to the nose is $h$, and the distance from the nose to the two eyes is also $h$.

In frontal face images, structural relationships such as the Euclidean distances between the mouth, the nose, and the left and right eyes, and the angles between the eyes, the nose, and the mouth, provide useful information about the appearance of a face. These structural relationships of the facial features are generally useful for constraining the facial feature detection process.
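The structural relationships above can be turned into a simple plausibility test for a candidate feature set. The sketch below is hypothetical: it assumes the Figure 1 layout (nose at distance $h$ below the eye line, mouth a further $h$ below the nose), image coordinates with $y$ pointing down, `eye_l` being the eye with the smaller $x$, and an arbitrary tolerance `tol`. The paper does not state its exact constraints.

```python
import math


def check_face_geometry(eye_l, eye_r, nose, mouth, tol=0.35):
    """Return True if four (x, y) points roughly match the Figure 1 layout.

    Hypothetical rule set: nose below the eye line by h, mouth a further h
    below the nose, both near the face's vertical axis; tol is a relative
    tolerance chosen only for illustration.
    """
    (x1, y1), (x2, y2) = eye_l, eye_r
    w = math.hypot(x2 - x1, y2 - y1)  # inter-eye distance
    if w == 0:
        return False
    # Unit vector along the eye line and the perpendicular pointing down the face.
    ux, uy = (x2 - x1) / w, (y2 - y1) / w
    px, py = -uy, ux
    mx, my = (x1 + x2) / 2, (y1 + y2) / 2  # midpoint between the eyes

    def eye_frame(p):
        # (offset along the eye line, offset down the face) relative to the midpoint.
        dx, dy = p[0] - mx, p[1] - my
        return dx * ux + dy * uy, dx * px + dy * py

    nose_a, nose_d = eye_frame(nose)
    mouth_a, mouth_d = eye_frame(mouth)
    h = nose_d  # eye line to nose
    if h <= 0 or mouth_d <= h:
        return False  # nose must lie below the eyes, mouth below the nose
    if abs(nose_a) > tol * w or abs(mouth_a) > tol * w:
        return False  # features too far from the vertical axis
    # The mouth-to-nose distance should be close to h.
    return abs((mouth_d - nose_d) - h) <= tol * h


print(check_face_geometry((40, 50), (80, 50), (60, 70), (60, 90)))
```

Rejecting implausible triples early in this way keeps the more expensive PCA verification for feature sets that already look like a face.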

If the input image from the camera is a color image, color information is used in a preprocessing step. Candidates for the facial region are obtained using the chromatic properties of facial color. This step effectively reduces the search range in the image, thereby reducing the computing time required for facial feature detection.

K. W. Wong et al. [18] proposed an efficient algorithm for human face detection and facial feature extraction. First, the location of the face regions is detected using a genetic algorithm and the eigenface technique. The genetic algorithm is applied to search for possible face regions in an image, while the eigenface technique is used to determine the “fitness” of the regions. As the genetic algorithm is computationally intensive, the search space is reduced and limited to the eye regions, so that the required time is greatly reduced. Possible face candidates are then further verified by measuring their symmetry and checking for the presence of the different facial features.

Furthermore, to improve detection reliability, their approach takes lighting effects and face orientation into account.

Figure 1. A frontal face view (width annotations: 3w overall, divided into three segments of w)

-

III. Proposed System

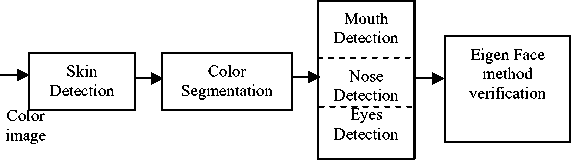

The block diagram of the proposed system is shown in Figure 2. The first task of face detection in this system is skin detection which is carried out using a statistical skin detection model built by acquiring a large training set of skin and non-skin pixels.
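The paper does not give the form of its statistical skin model. One common choice, assumed here, is a pair of RGB color histograms (skin and non-skin) learned from labeled pixels and combined in a likelihood-ratio test. The function names, bin count, and `threshold` are illustrative, not the author's values.

```python
import numpy as np


def train_skin_histograms(skin_px, nonskin_px, bins=32):
    """Build normalized RGB histograms from N x 3 uint8 arrays of skin and non-skin pixels."""
    edges = [np.linspace(0, 256, bins + 1)] * 3
    skin_h, _ = np.histogramdd(skin_px, bins=edges)
    non_h, _ = np.histogramdd(nonskin_px, bins=edges)
    # Add-one smoothing avoids zero probabilities for colors never seen in training.
    skin_p = (skin_h + 1) / (skin_h.sum() + skin_h.size)
    non_p = (non_h + 1) / (non_h.sum() + non_h.size)
    return skin_p, non_p


def skin_mask(image, skin_p, non_p, threshold=1.0):
    """Label a pixel as skin when P(rgb | skin) / P(rgb | non-skin) exceeds threshold."""
    bins = skin_p.shape[0]
    idx = (image.astype(np.int64) * bins) // 256  # per-channel bin index, H x W x 3
    r, g, b = idx[..., 0], idx[..., 1], idx[..., 2]
    ratio = skin_p[r, g, b] / non_p[r, g, b]
    return ratio > threshold


rng = np.random.default_rng(0)
skin = np.clip(rng.normal([200, 150, 120], 10, size=(1000, 3)), 0, 255).astype(np.uint8)
non = np.clip(rng.normal([30, 60, 200], 10, size=(1000, 3)), 0, 255).astype(np.uint8)
sp, npp = train_skin_histograms(skin, non)
img = np.array([[[200, 150, 120], [30, 60, 200]]], dtype=np.uint8)
print(skin_mask(img, sp, npp))
```

Raising `threshold` trades missed skin pixels for fewer false positives. The resulting mask is the input from which the face bounding box is derived.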

A face bounding box is then obtained from the skin region, and color segmentation is applied within it to partition it into homogeneous regions. Possible mouth features are first identified based on image pixels and the corresponding color segmentation regions. Nose and eye features are then identified relative to the position of the mouth, by searching for regions that satisfy statistical, geometrical, and structural properties of the nose and eyes in frontal face images.

On detecting a feature set containing a mouth, a nose, and two eyes, PCA is performed over a normalized search space relative to the distance between the two eyes. The image location corresponding to the minimum error is then taken as the position of the detected face.
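The minimum-error criterion can be sketched with the reconstruction error ("distance from face space") used in the eigenface literature. Each candidate window inside the normalized search space is projected onto the eigenfaces, and the window with the smallest reconstruction error is kept. The function names and the way candidate positions are enumerated are assumptions for illustration.

```python
import numpy as np


def reconstruction_error(patch, mean_face, eigenfaces):
    """Distance from face space: ||(x - mean) - U U^T (x - mean)||.

    patch and mean_face are flattened vectors of length D; eigenfaces is a
    D x K matrix with orthonormal columns.
    """
    phi = patch.astype(float) - mean_face
    weights = eigenfaces.T @ phi      # projection onto the K eigenfaces
    phi_hat = eigenfaces @ weights    # reconstruction inside face space
    return float(np.linalg.norm(phi - phi_hat))


def locate_face(image, mean_face, eigenfaces, win, candidates):
    """Return ((row, col), error) for the candidate top-left corner with minimum error.

    win = (h, w) is the normalized window size; candidates is an iterable of
    top-left positions inside the normalized search space.
    """
    h, w = win
    best, best_err = None, np.inf
    for r, c in candidates:
        patch = image[r:r + h, c:c + w]
        if patch.shape != (h, w):
            continue  # window falls partly outside the image
        err = reconstruction_error(patch.ravel(), mean_face, eigenfaces)
        if err < best_err:
            best, best_err = (r, c), err
    return best, best_err


rng = np.random.default_rng(1)
pattern = rng.normal(size=(4, 4))
U = (pattern.ravel() / np.linalg.norm(pattern))[:, None]  # one-eigenface toy basis
img = rng.normal(size=(12, 12))
img[2:6, 3:7] = pattern
cands = [(r, c) for r in range(9) for c in range(9)]
pos, err = locate_face(img, np.zeros(16), U, (4, 4), cands)
print(pos)
```

Restricting `candidates` to a small, eye-normalized region is what limits the failure case discussed in Section V, where a wrong image block happens to give the smallest error.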

Principal Component Analysis is a suitable strategy for face recognition because it identifies variability between human faces, which may not be immediately obvious. PCA does not attempt to categorize faces using familiar geometrical differences, such as nose length or eyebrow width. Instead, a set of human faces is analysed using PCA to determine which 'variables' account for the variance of faces. In face recognition, these variables are called eigenfaces because when plotted they display a ghostly resemblance to human faces.

Although PCA is used extensively in statistical analysis, the pattern recognition community started to use PCA for classification only relatively recently. Principal component analysis is concerned with explaining the variance-covariance structure of the data through a few linear combinations of the original variables. PCA's greatest strengths are arguably its capacity for data reduction and interpretation.

Furthermore, all interpretation (i.e., recognition) operations can now be done using just 40 weights (one per eigenface) to represent a face, instead of manipulating the 10,000 values contained in a 100×100 image. Not only is this computationally less demanding, but the fact that the recognition information of several thousand.

Figure 2. Face detection system

-

IV. Eigenface

In mathematical terms, the eigenface approach is equivalent to finding the principal components of the distribution of faces, that is, the eigenvectors of the covariance matrix of the set of face images, treating each image as a point (or vector) in a very high-dimensional space. The eigenvectors are ordered, each one accounting for a different amount of the variation among the face images.

These eigenvectors can be thought of as a set of features that together characterize the variation among face images. Each image contributes some amount to each eigenvector, so each eigenvector formed from an ensemble of face images appears as a sort of ghostly face image, referred to as an eigenface. Each eigenface deviates from uniform gray where some facial feature differs among the set of training faces; collectively, they form a map of the variations between faces.
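The paper does not say how its eigenfaces are computed. One standard way, assumed here, is a singular value decomposition of the mean-centered training matrix. Its right singular vectors are the eigenvectors of the covariance matrix, so the very large D × D covariance never has to be formed explicitly.

```python
import numpy as np


def compute_eigenfaces(faces, k):
    """Compute the mean face and the top-k eigenfaces.

    faces: M x D array, one flattened, equally sized face image per row.
    Returns (mean_face, eigenfaces, variances), where eigenfaces is a D x k
    matrix with orthonormal columns ordered by decreasing variance.
    """
    X = faces.astype(float)
    mean_face = X.mean(axis=0)
    A = X - mean_face  # centered data, M x D
    # Right singular vectors of A = eigenvectors of the covariance A^T A / M.
    _, s, vt = np.linalg.svd(A, full_matrices=False)
    eigenfaces = vt[:k].T                   # D x k
    variances = (s[:k] ** 2) / X.shape[0]   # corresponding covariance eigenvalues
    return mean_face, eigenfaces, variances


def project(face, mean_face, eigenfaces):
    """Weights of a flattened face in face space (one weight per eigenface)."""
    return eigenfaces.T @ (face.astype(float) - mean_face)


rng = np.random.default_rng(0)
faces = rng.normal(size=(20, 100))  # 20 toy "images" of 100 pixels each
mean, U, var = compute_eigenfaces(faces, 5)
weights = project(faces[0], mean, U)
print(U.shape, weights.shape)
```

With 100×100 images (D = 10,000) and k = 40, each face is summarized by 40 weights. Displaying a column of `U`, reshaped to 100×100, gives the ghostly eigenface image described above.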

Because the eigenfaces form an orthonormal vector set, projecting a face image into “face space” is analogous to the Fourier transform. In the Fourier transform, an image or signal is projected onto an orthonormal basis set of sinusoids at varying frequencies and phases. Each location of the transformed signal represents the projection onto a particular sinusoid. The original signal or image can be reconstructed exactly by a linear combination of the basis signals, weighted by the corresponding components of the transformed signal.

If the components of the transform are modified, the reconstruction becomes approximate and corresponds to linearly filtering the original signal. The eigenface transform, by contrast, is non-invertible, in the sense that its basis set is small and can reconstruct only a limited range of images. The transformation will be adequate for recognition to the degree that the “face space” spanned by the eigenfaces can account for a sufficient range of faces.
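In the notation commonly used for eigenfaces (the symbols below are not defined in the paper and are introduced only for illustration), let $\Gamma$ be a flattened input image, $\Psi$ the mean training face, and $\mathbf{u}_1, \dots, \mathbf{u}_K$ the orthonormal eigenfaces. Projection, approximate reconstruction, and the reconstruction error then read:

$$
\Phi = \Gamma - \Psi, \qquad \omega_k = \mathbf{u}_k^{\top}\Phi, \quad k = 1, \dots, K
$$

$$
\hat{\Phi} = \sum_{k=1}^{K} \omega_k \mathbf{u}_k, \qquad \varepsilon = \lVert \Phi - \hat{\Phi} \rVert
$$

Because $\hat{\Phi}$ is the orthogonal projection of $\Phi$ onto face space and the $\mathbf{u}_k$ are orthonormal, $\lVert \Phi \rVert^2 = \lVert \hat{\Phi} \rVert^2 + \varepsilon^2$ with $\lVert \hat{\Phi} \rVert^2 = \sum_{k} \omega_k^2$, so

$$
\varepsilon^2 = \lVert \Phi \rVert^2 - \sum_{k=1}^{K} \omega_k^2 .
$$

A small $\varepsilon$ means the image is well represented by the eigenfaces, which is the sense in which the transform is adequate only for the range of images spanned by face space.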

The idea of using eigenfaces was partly motivated by the goal of efficiently representing pictures of faces using principal component analysis. Any collection of face images can be approximately reconstructed by storing a small collection of weights for each face and a small set of standard pictures (the eigenpictures). The weights describing each face are found by projecting the face image onto each eigenpicture. Face recognition, on the other hand, should not require a precise, low mean-squared-error reconstruction.

If a multitude of face images can be reconstructed by weighted sums of a small collection of characteristic features or eigenpictures, perhaps an efficient way to learn and recognize faces would be this: build up the characteristic features (eigenfaces) by experience over time and recognize particular faces by comparing the feature weights needed to (approximately) reconstruct them with the weights associated with known individuals.
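The recognition idea described above amounts to a nearest-neighbor search in weight space. The sketch below assumes Euclidean distance and a single stored weight vector per known individual. `gallery` and `threshold` are hypothetical names, and this paper itself applies eigenfaces to detection rather than to identification.

```python
import numpy as np


def recognize(weights, gallery, threshold):
    """Match a probe's face-space weights against known individuals.

    weights: length-K weight vector of the probe face.
    gallery: dict mapping a person's name to that person's length-K weights.
    threshold: maximum accepted Euclidean distance (hypothetical tuning value).
    Returns the closest name, or None if nobody is close enough.
    """
    best_name, best_dist = None, np.inf
    for name, ref in gallery.items():
        dist = float(np.linalg.norm(np.asarray(weights) - np.asarray(ref)))
        if dist < best_dist:
            best_name, best_dist = name, dist
    return best_name if best_dist <= threshold else None


gallery = {"alice": [1.0, 0.0], "bob": [0.0, 1.0]}
print(recognize([0.9, 0.1], gallery, 0.5))
```

Returning `None` above the threshold lets the system reject unknown faces instead of forcing a match.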

-

V. Appearance-Based Face Detection

Appearance-based approaches project face images into a low-dimensional linear subspace. The first version of such a subspace is the eigenface space, constructed by principal component analysis from a set of training images. Later, the concept of eigenfaces was extended to eigenfeatures for the detection of facial features. Although storage can become a problem, this approach performs better than feature-based approaches: it is faster, more robust, and relatively simpler. Even for the same person, images taken in different surroundings may differ considerably, which makes face recognition by computer a complicated problem.


The detection process can be tuned to the particular task at hand. Four parameters can be modified to obtain better results: the scale factor, the minimum number of neighboring detections, the minimum detectable feature size, and whether or not to apply Canny pruning. These parameters are stated here for face detection; parameters for detecting other features follow the same principles.

A difficulty in using PCA as a face classification step is the inability to properly define an error criterion on face images. As a result, inaccurate face images can yield a smaller error, so that the image block with the minimum error converges to a wrong face image. However, when the search space is reduced to a smaller size, the intended results can be achieved in most cases. The objective of using facial features in this system is to localize the image area: the detected feature points are used to define a normalized search space.
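A minimal sketch of how the eye points can define a normalized search space: the inter-eye distance $w$ sets the scale, the eye line gives the in-plane rotation, and the box size is expressed in multiples of $w$. The box proportions (3w wide, echoing Figure 1) and the `margin` parameter are assumptions, not values stated in the paper.

```python
import math


def normalized_search_space(eye_l, eye_r, margin=1.0):
    """Derive scale, in-plane rotation, and a search box from two eye points.

    eye_l, eye_r: (x, y) eye centers in image coordinates (eye_l has the smaller x).
    margin: hypothetical box extent in units of the eye distance w.
    Returns (w, angle_deg, box), where box = (x0, y0, x1, y1) is an
    axis-aligned region around the eyes sized relative to w.
    """
    (x1, y1), (x2, y2) = eye_l, eye_r
    w = math.hypot(x2 - x1, y2 - y1)                    # inter-eye distance
    angle = math.degrees(math.atan2(y2 - y1, x2 - x1))  # head tilt in the image plane
    cx, cy = (x1 + x2) / 2, (y1 + y2) / 2               # midpoint between the eyes
    half = (0.5 + margin) * w                           # box is 3w wide when margin = 1
    box = (cx - half, cy - margin * w, cx + half, cy + 2 * margin * w)
    return w, angle, box


print(normalized_search_space((40, 50), (80, 50)))
```

Rotating the image by `-angle` and rescaling it so that `w` matches the eye distance of the training faces would make the search windows directly comparable with the eigenfaces.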

-

A. Face region detection

Many face detection algorithms have been proposed, exploiting different heuristic and appearance-based strategies (a comprehensive review is presented in [19]). Among those, color-based face region detection has gained strong popularity, since it enables fast localization of potential facial regions and is highly robust to geometric variation of face patterns and to illumination conditions (except colored lighting).

Skin color alone is usually not enough to detect potential face regions reliably due to possible inaccuracy of camera color reproduction and presence of non-face skin-colored objects in the background. Popular methods for skin-colored face region localization are based on the connected components analysis [20] and integral projection [21].
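Connected-components analysis on a binary skin mask can be sketched with a breadth-first flood fill, where each component's bounding box is a candidate face region. This pure-Python version favors clarity over speed, and the `min_area` filter is an assumption added to suppress small skin-colored noise.

```python
from collections import deque

import numpy as np


def skin_regions(mask, min_area=50):
    """Bounding boxes of 4-connected regions in a boolean skin mask.

    Returns a list of (row0, col0, row1, col1, area) with exclusive end
    coordinates, largest region first; regions smaller than min_area pixels
    (a hypothetical noise threshold) are discarded.
    """
    h, w = mask.shape
    seen = np.zeros_like(mask, dtype=bool)
    regions = []
    for r in range(h):
        for c in range(w):
            if not mask[r, c] or seen[r, c]:
                continue
            # Breadth-first flood fill of one component.
            queue = deque([(r, c)])
            seen[r, c] = True
            r0, c0, r1, c1, area = r, c, r, c, 0
            while queue:
                y, x = queue.popleft()
                area += 1
                r0, c0, r1, c1 = min(r0, y), min(c0, x), max(r1, y), max(c1, x)
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if 0 <= ny < h and 0 <= nx < w and mask[ny, nx] and not seen[ny, nx]:
                        seen[ny, nx] = True
                        queue.append((ny, nx))
            if area >= min_area:
                regions.append((r0, c0, r1 + 1, c1 + 1, area))
    return sorted(regions, key=lambda reg: reg[4], reverse=True)


m = np.zeros((20, 20), dtype=bool)
m[2:12, 3:11] = True    # a face-sized skin blob (80 pixels)
m[15:17, 15:17] = True  # a tiny skin-colored speck (4 pixels)
print(skin_regions(m))
```

As the text notes, a skin-colored region is only a candidate; the facial feature checks decide whether it actually contains a face.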

-

B. Eye position detection

Accurate eye detection is very important in the subsequent feature extraction, since the eyes provide the baseline information about the expected location of other facial features in the proposed system. Most eye detection methods exploit the observation that eye regions usually exhibit sharp changes in both luminance and chrominance, in contrast with the surrounding skin. Researchers have employed integral projection [22], morphological filters [23], edge map analysis [24], and non-skin color area detection to find potential eye locations in the facial image.
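Integral projection, one of the cues cited above, sums intensities along rows so that the dark band formed by the eyes appears as a minimum. The sketch below is a simplified illustration on a grayscale face crop; the `upper_fraction` parameter is hypothetical, real images usually need smoothing first, and eyebrows can produce a competing valley.

```python
import numpy as np


def eye_row_by_projection(gray_face, upper_fraction=0.6):
    """Estimate the eye row in a face crop via horizontal integral projection.

    Sums each row's intensity; dark eye regions produce a valley. Only the
    upper part of the crop (upper_fraction of its height) is searched, to
    avoid the mouth and nostrils.
    """
    face = gray_face.astype(float)
    limit = max(1, int(face.shape[0] * upper_fraction))
    row_profile = face[:limit].sum(axis=1)  # one value per row
    return int(np.argmin(row_profile))


img = np.full((50, 40), 200.0)  # bright synthetic "skin"
img[18, 10:30] = 20.0           # a dark horizontal band standing in for the eyes
print(eye_row_by_projection(img))
```

A vertical projection restricted to the detected eye band can then separate the left and right eye positions.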

One eye contour model consists of an upper-lid curve modeled by a cubic polynomial, a lower-lid curve modeled by a quadratic polynomial, and the iris circle. The iris center and radius are estimated by the algorithm developed by Ahlberg [25], which assumes that the iris is approximately circular and dark against its background, i.e., the white of the eye. Conventional approaches to eyelid contour detection use deformable contour models attracted by high values of the luminance edge gradient [26].
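Given candidate lid points (for example, taken from an edge map, which is an assumption here), the curve part of this eye model can be fitted by least squares. This sketch covers only the fitting step. It does not reproduce the deformable-contour search of [26] or Ahlberg's iris estimator [25].

```python
import numpy as np


def fit_eyelids(upper_pts, lower_pts):
    """Fit the eyelid curves of the eye contour model by least squares.

    upper_pts, lower_pts: N x 2 arrays of (x, y) points on the upper and lower lid.
    Returns polynomial coefficients (highest power first): a cubic for the
    upper lid and a quadratic for the lower lid.
    """
    upper = np.polyfit(upper_pts[:, 0], upper_pts[:, 1], deg=3)
    lower = np.polyfit(lower_pts[:, 0], lower_pts[:, 1], deg=2)
    return upper, lower


x = np.linspace(-10, 10, 21)
up = np.column_stack([x, 0.001 * x**3 - 0.05 * x**2 + 5])
lo = np.column_stack([x, 0.03 * x**2 - 3])
u, l = fit_eyelids(up, lo)
print(np.round(u, 4), np.round(l, 4))
```

`np.polyval(u, xs)` evaluates the fitted upper lid at any set of x positions, which is convenient for drawing the contour or measuring eye opening.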

-

C. Lip contour detection

In most cases, lip color differs significantly from skin color. Iteratively refined skin and lip color models can therefore be used to discriminate lip pixels from the surrounding skin. The pixels classified as skin at the face detection stage and located inside the face ellipse are used to build a person-specific skin color histogram.

The pixels with low values in the person-specific skin color histogram that are located in the lower part of the face are used to estimate the mouth rectangle. Skin and lip color classes are then modeled by two-dimensional Gaussian probability density functions in the (R/G, B/G) color space.
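The two-class color model can be sketched as follows. Pixels are mapped to (R/G, B/G), a 2-D Gaussian is fitted to each class, and a pixel is labeled as lip when its lip log-likelihood exceeds its skin log-likelihood. Equal class priors and the omission of the iterative refinement are simplifications assumed here.

```python
import numpy as np


def to_rg_bg(rgb):
    """Map N x 3 RGB values to the 2-D (R/G, B/G) chromaticity space."""
    rgb = np.asarray(rgb, dtype=float)
    g = np.maximum(rgb[:, 1], 1.0)  # avoid division by zero on black pixels
    return np.column_stack([rgb[:, 0] / g, rgb[:, 2] / g])


def fit_gaussian(samples):
    """Mean and covariance of a 2-D Gaussian from N x 2 samples."""
    return samples.mean(axis=0), np.cov(samples, rowvar=False)


def log_density(x, mean, cov):
    """Log of the 2-D Gaussian density for each row of x."""
    d = x - mean
    maha = np.einsum("ni,ij,nj->n", d, np.linalg.inv(cov), d)
    return -0.5 * (maha + np.log(np.linalg.det(cov)) + 2 * np.log(2 * np.pi))


def classify_lip(rgb_pixels, skin_model, lip_model):
    """True where a pixel is more likely under the lip model than the skin model."""
    x = to_rg_bg(rgb_pixels)
    return log_density(x, *lip_model) > log_density(x, *skin_model)


rng = np.random.default_rng(0)
skin = np.clip(rng.normal([190, 140, 120], 8, size=(500, 3)), 1, 255)
lips = np.clip(rng.normal([170, 90, 100], 8, size=(500, 3)), 1, 255)
skin_model = fit_gaussian(to_rg_bg(skin))
lip_model = fit_gaussian(to_rg_bg(lips))
pred = classify_lip(np.array([[190, 140, 120], [170, 90, 100]]), skin_model, lip_model)
print(pred)
```

Dividing by G removes much of the overall brightness, which is why ratio spaces such as (R/G, B/G) are attractive for separating reddish lips from skin.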

Based on the pixel values of the lip function image, the initial mouth contour is roughly approximated by an ellipse. The contour points then move radially outwards or inwards, depending on the lip function values of the pixels they encounter.

-

D. Nose contour detection

The representative shape of the nose side has already been exploited to increase robustness, and matching it to edge and dark pixels has proved successful in [27] and [28]. However, in blurry pictures or under ambient face illumination, the weak edge and brightness information becomes more difficult to exploit.

-

E. Chin and cheek contour detection

Deformable models [29] have proved to be an efficient tool for chin and cheek contour detection. However, the edge map, which is the main information source for face boundary estimation, yields very noisy and incomplete face contour information in several cases. A subtle model deformation rule derived from general knowledge of human face structure must therefore be applied for accurate detection [29].

-

VI. Experimental Results and Analysis

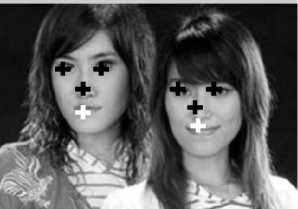

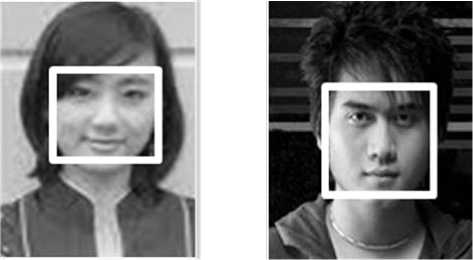

Figure 3 shows some examples of facial features detected on the original images. Eye and nose feature points are shown by black crosses, while mouth feature points are shown by white crosses.

Figure 3(a) is a bright frontal face image whereas Figure 3(b) is a frontal face image with glasses causing bright reflections. A half frontal face image is shown in Figure 3(c). A randomly selected image of two faces is shown in Figure 3(d).

Accurate results were obtained for the first, third, and fourth images, while slightly inaccurate eye feature points were detected in the second. Bright reflections caused by the glasses in the second image led to errors in the eye detection process.

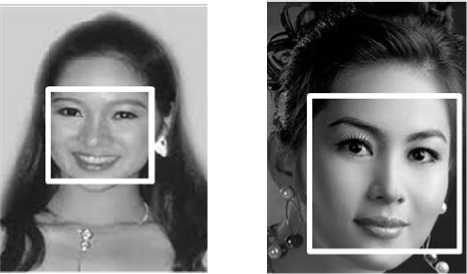

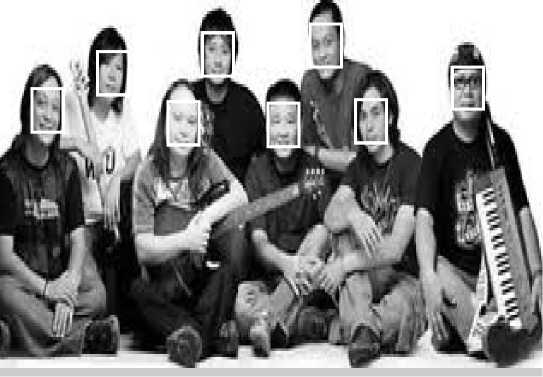

Face detection results are shown in Figure 4. The experiments were carried out on test images taken from the internet and various other randomly selected sources. We noted that the slight inaccuracies that occurred in the facial feature extraction process did not affect face detection performance.

The processing time could be reduced considerably by optimizing the algorithms and their implementation, which has not yet been done. The detection results are used in the system to obtain satisfactory face models, and they can also play a crucial role in a feature-based face recognition system.

Figure 3. Facial feature extraction results

Figure 4. Face detection results

VII. Conclusion

Face detection using facial features and eigenface theory has been presented. Performing a facial feature extraction step prior to PCA helps to address two requirements of this system. The performance of the system could be improved by extending the face classification step to a two-class classification problem, using a carefully chosen set of non-faces as the second class. In this system, a total of 400 face images, taken from the internet and various other randomly selected sources, were used as the set of training images. The 200-image training set is a collection of 100 frontal upright images, 50 frontal images with glasses, and 50 slightly rotated face images selectively chosen from the internet. The scope of this paper is confined to a limited range of illumination variations. To enhance the robustness of face detection, an efficient method of illumination compensation needs to be employed. In future research, it will be of interest to analyze the active intensity patterns under various illumination conditions.

Acknowledgment

I would like to thank Dr. Mint Myint Sein, University of Computer Studies, Yangon, for supervising my research, guiding me throughout it, and for her very informative lectures on image processing.

References

- Ming-Hsuan Yang, David J. Kriegman and Narendra Ahuja, “Detecting Faces in Images: A Survey”, IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 24, no. 1, pp. 34-58, January 2002.

- A. Eleftheriadis and A. Jacquin, “Automatic Face Location, Detection and Tracking for Model Assisted Coding of Video Teleconferencing Sequences at Low Bit Rates”, Signal Processing: Image Communication, vol. 7, no. 3, pp. 231-248, July 1995.

- Hlaing Htake Khaung Tin, “Facial Extraction and Lip Tracking Using Facial Points”, International Journal of Computer Science, Engineering and Information Technology (IJCSEIT), Vol. 1, No. 1, March 2011.

- Karin Sobottka and Ioannis Pitas, “A Novel Method for Automatic Face Segmentation, Facial Feature Extraction and Tracking”, Signal Processing: Image Communication, vol. 12, no. 3, pp. 263-281, 1998.

- Rein-Lien Hsu, Mohamed Abdel-Mottaleb and Anil K. Jain, “Face Detection in Color Images”, IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 24, no. 5, pp. 696-706, May 2002.

- Henry A. Rowley, Shumeet Baluja and Takeo Kanade, “Neural Network-based Face Detection”, IEEE Transactions on Pattern Analysis and Machine Intelligence, vol.20, no.1, pp.23-38, January 1998.

- B. Menser and F. Muller, “Face Detection in Color Images using Principal Component Analysis”, IEE Conference Publication, vol. 2, no. 465, pp. 620-624, 1999.

- Oliver Jesorsky, Klaus J. Kirchberg, and Robert W. Frischholz, “Robust Face Detection Using the Hausdorff Distance”, in Proc. Third International Conference on Audio and Video-based Biometric Person Authentication, Springer, Lecture Notes in Computer Science, LNCS-2091, pp. 90-95, Halmstad, Sweden, 6-8 June 2001.

- J. Terrillon, M. David, and S. Akamatsu, “Automatic Detection of Human Faces in Natural Scene Images by Use of a Skin Color Model and of Invariant Moments”, in Proc. Third International Conference on Automatic Face and Gesture Recognition, pp. 112-117, Nara, Japan, 1998.

- H. Rowley, S. Baluja, and T. Kanade, “Neural Network-Based Face Detection”, in Proc. IEEE Conference on Computer Vision and Pattern Recognition, pp. 203-207, San Francisco, CA, 1996.

- Elham Bagherian, Rahmita Wirza Rahmat and Nur Izura Udzir, “Extract of Facial Feature Point”, International Journal of Computer Science and Network Security, vol. 9, January 2009.

- N. Sebe, Y. Sun, E. Bakker, M. Lew, I. Cohen, and T. Huang, “Towards Authentic Emotion Recognition”, International Conference on Systems, Man and Cybernetics, 2004.

- E. Hjelmas and B. K. Low, “Face Detection: A Survey”, Computer Vision and Image Understanding, 2001.

- Raphael Feraud, Oliver J. Bernier, Jean-Emmanuel Viallet, and Michel Collobert, “A Fast and Accurate Face Detector Based on Neural Networks”, IEEE Transactions on Pattern Analysis and Machine Intelligence, 2001.

- C. Garcia and M. Delakis, “A Neural Architecture for Fast and Robust Face Detection”, in Proc. IEEE-IAPR International Conference on Pattern Recognition (ICPR’02), 2002.

- K. Toennies, F. Behrens, and M. Aurnhammer, “Feasibility of Hough-Transform-Based Iris Localization for Real-Time Application”, in Proc. International Conference on Pattern Recognition, 2002.

- V. Girondel, L. Bonnaud, and A. Caplier, “Hands Detection and Tracking for Interactive Multimedia Applications”, in Proc. International Conference on Computer Vision and Graphics, 2002.

- K. W. Wong, K. M. Lam and W. C. Siu, “An Efficient Algorithm for Human Face Detection and Facial Feature Extraction under Different Conditions”, Pattern Recognition (Pattern Recognition Society), published by Elsevier Science, 2001.

- M. Yang, D. Kriegman, and N. Ahuja, “Detecting faces in images: A survey,” IEEE Trans. On Pattern Analysis and Machine Intelligence, vol. 24, no.1, pp. 34-58, January 2002.

- A. Yilmaz and M. Shah, “Automatic feature detection and pose recovery of faces,” in Proc. Fifth Asian Conference on Computer Vision, January 2002, pp. 284-289.

- L. Jordao, M. Perrone, J. Costeira, and J. Santos-Victor, “Active face and feature tracking,” in Proc. International Conference on Image Analysis and Processing, September 1999, pp. 572-576.

- S. Baskan, M. Mete Bulut, and Volkan Atalay, “Projection based method for segmentation of human face and its evaluation,” Pattern Recognition Letters, vol. 23, no. 14, pp. 1623-1629, 2002.

- K. Toyama, “‘Look, Ma, No Hands!’ Hands-Free Cursor Control with Real-Time 3D Face Tracking,” in Proc. Workshop on Perceptual User Interfaces, November 1998, pp. 49-54.

- X. Zhu, J. Fan, and A. Elmagarmid, “Towards facial feature extraction and verification for omi-face,” in Proc. IEEE International Conference on Image Processing, September 2002, vol. 2, pp. 113-116.

- Jorgen Ahlberg, “A system for face localization and facial feature extraction,” Tech. Rep. LiTH-ISY-R-2172, Linkoping University, 1999.

- M. Kampmann and L.Zhang, “Estimation of eye, eyebrows and nose features in videophone sequences,” in Proc. International Workshop on Very Low Bitrate Video Coding, October 1998, pp.101-104.

- Lijun Yin and Anup Basu, “Nose shape estimation and tracking for model-based coding,” in Proc. IEEE International Conference on Acoustics, Speech, Signal Processing, May 2001, pp. 1477-1480.

- V. Vezhnevets, V. Sazonov, and A. Andreeva, “A survey on pixel-based skin color detection techniques,” in Proc. Graphicon-2003, September 2003.

- M. Kampmann, “Estimation of the chin and cheek contours for precise face model adaptation,” in Proc. International Conference on Image Processing, October 1997, vol. 3, pp.300-303.

- G. C. Feng and P. C. Yuen, “Multi-cues eye detection on gray intensity image,” Pattern Recognition, vol. 34, no. 5, pp. 1033-1046, May 2001.