Role of information-thermodynamic trade-off models of control qualitative in quantum control algorithm of self-organization design

Author: Barchatova Irina

Journal: Online scientific edition «Системный анализ в науке и образовании» (System Analysis in Science and Education) @journal-sanse

Article in issue 3, 2014.


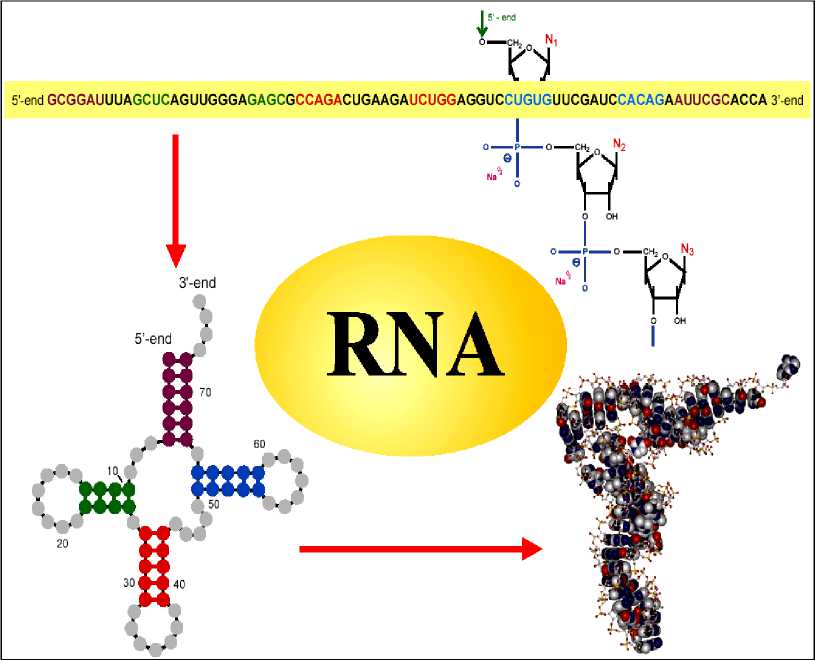

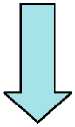

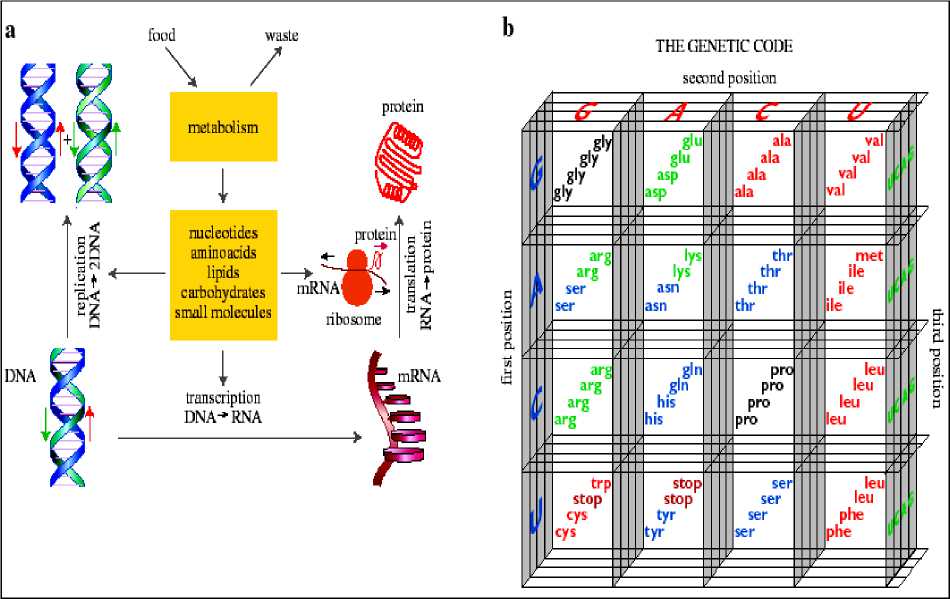

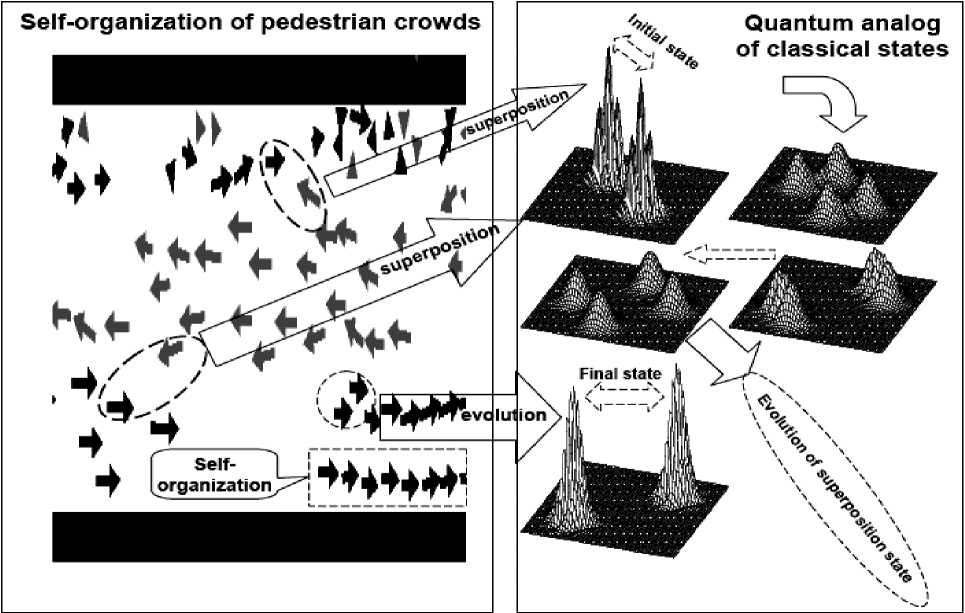

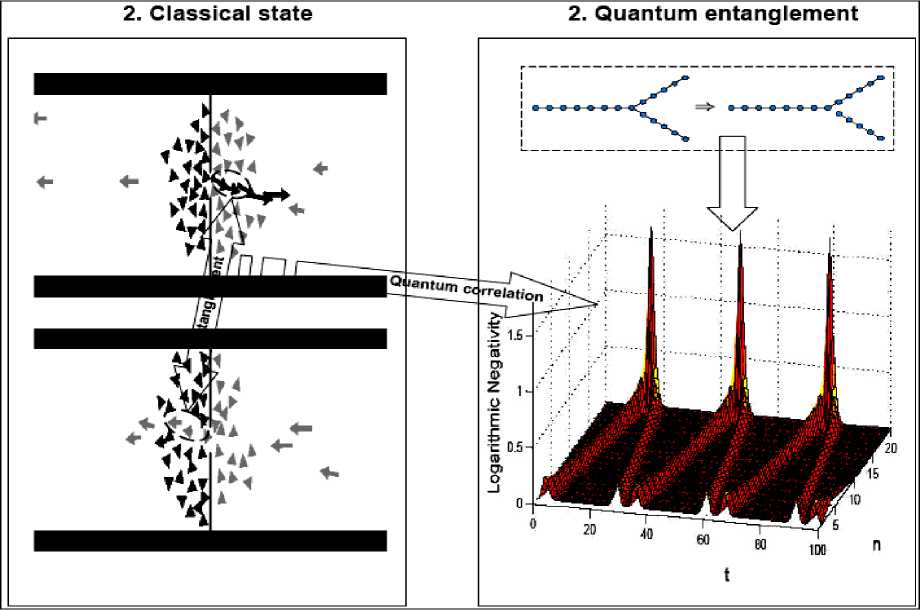

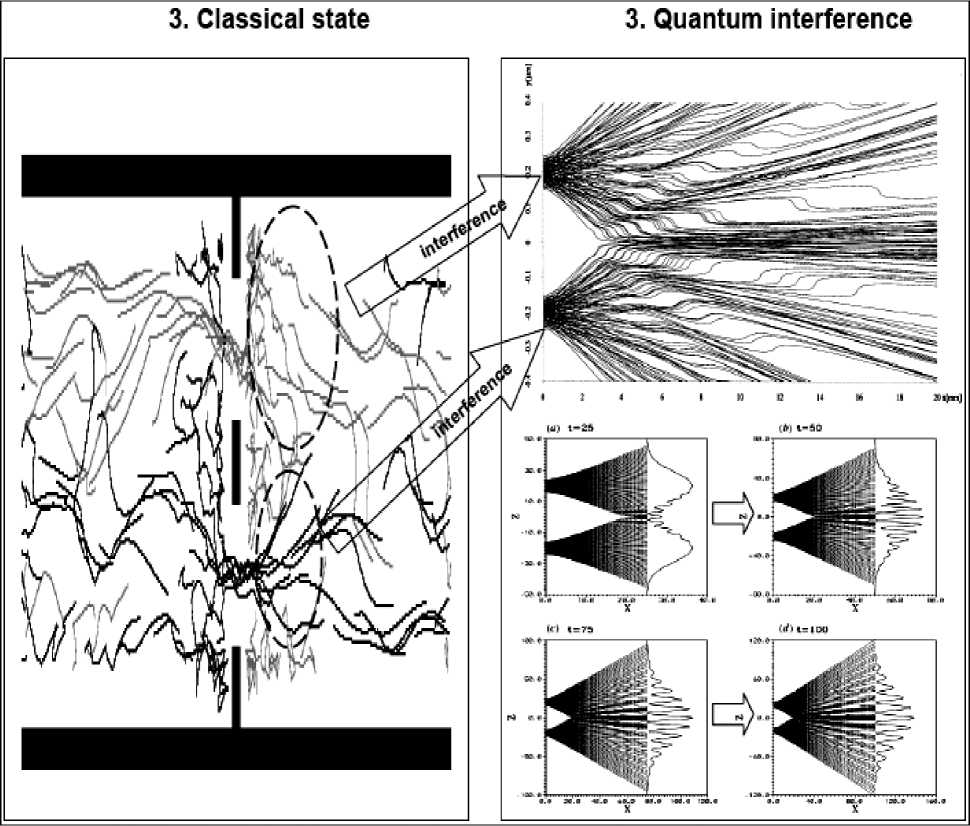

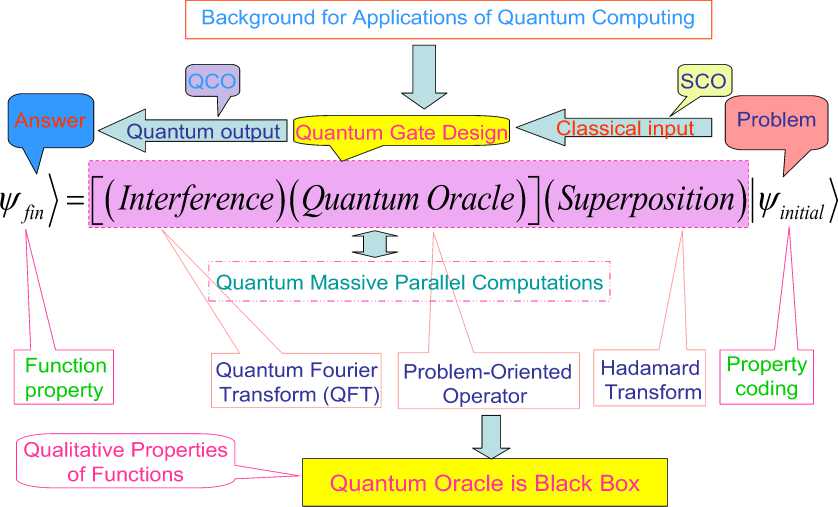

Different models of self-organization processes are described from the physical, informational, and algorithmic (quantum computing) points of view. The role of quantum correlation types and information transport in the design of self-organized structure types is discussed. A generalized quantum algorithm (QA) for the design of self-organization processes is developed. A particular case of this approach (based on a previously developed quantum swarm model) is described. Quantum operators such as superposition, entanglement, and interference are introduced, from the quantum computing viewpoint, for different models of the evolution of self-organization processes. A physical interpretation of the self-organization control process at the quantum level is introduced, based on an information model of the exchange and extraction of quantum (hidden) value information from and between the classical trajectories of particles in a particle swarm. New types of quantum correlations (acting as a behavior-control coordinator) and of information transport (value information) between particle swarm trajectories are introduced.

Self-organization, quantum control algorithm, stability, robustness, controllability

Short URL: https://sciup.org/14123244

IDR: 14123244


Introduction: Self-organization phenomena

In recent years, the concept of self-organization has been used to understand the collective behavior of human societies, animals, ant colonies, bird flocks, bacterial colonies, quantum dots, etc. The central tenet of self-organization is that simple repeated interactions between individuals can produce complex adaptive patterns at the level of the group. Inspiration comes from patterns seen in physical systems, such as spiraling chemical waves, which arise without complexity at the level of the individual units of which the system is composed.

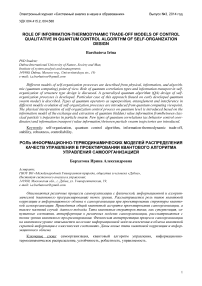

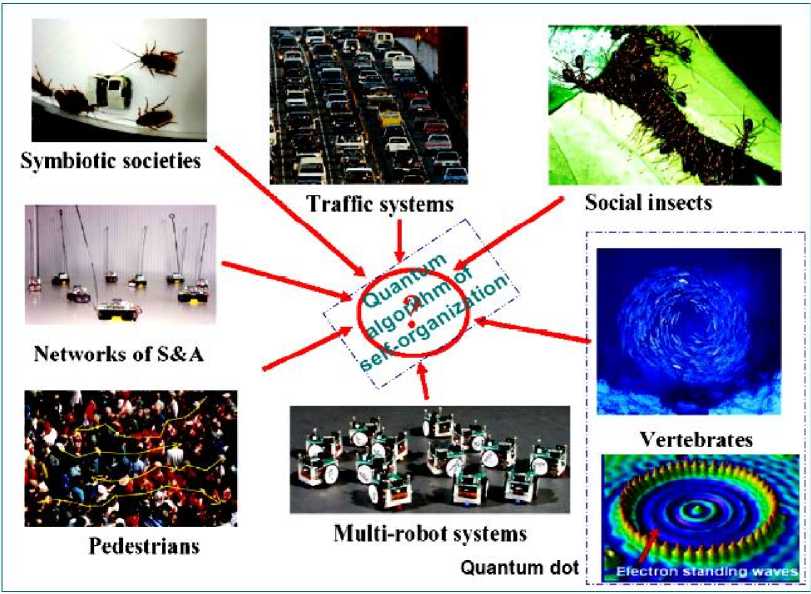

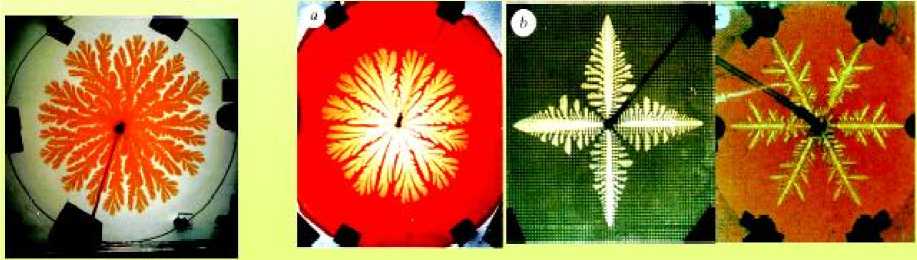

Figure 1 demonstrates self-organization in different systems. In all these examples, the individual is submerged as the group takes on a life of its own. The individual units do not have a complete picture of their position in the overall structure, and the structure they create has a form that extends well beyond that of the individual units. The suggestion is that biological structures such as termite mounds, ant trail networks and even human crowds can be explained in terms of repeated interactions between the animals and their environment, without invoking individual complexity. Examples include a flock of birds twisting in the evening light; a fish school wincing at the thought of a predator; the crush to leave an underground station; ants marching in an endless line; stop-and-start traffic jams; the quiet hum of a honey bee hive; the pulsating roar of a football crowd; a swarm of locusts flying across the desert; or even the bureaucracy of the European Union, USA, Japan, China, and Russia.

Q: Beyond the fact that individuals produce collective patterns, is there anything more specific we can say about these phenomena we have labeled self-organized?

A general characteristic of self-organizing systems is that they are robust or resilient. This means that they are relatively insensitive to perturbations or errors and have a strong capacity to restore themselves, unlike most human-designed systems. One reason for this fault tolerance is the redundant, distributed organization: the non-damaged regions can usually make up for the damaged ones. Another reason for this intrinsic robustness is that self-organization thrives on randomness, fluctuations or «noise». A certain amount of random perturbation will facilitate rather than hinder self-organization. A third reason for resilience is the stabilizing effect of feedback loops.

The present section reviews and analyzes the most important engineering concepts and principles of self-organization that can be used in the design of robust intelligent control systems [1].

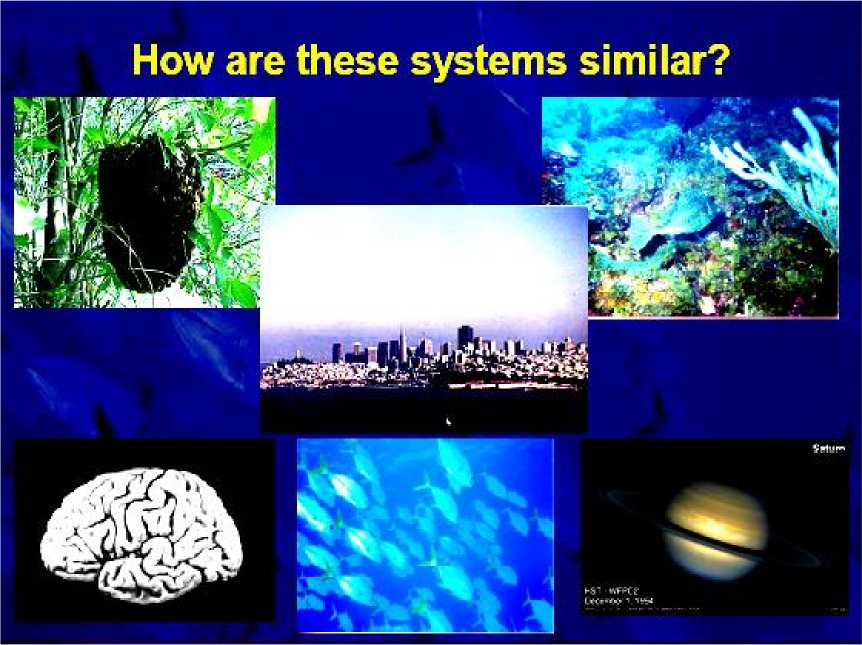

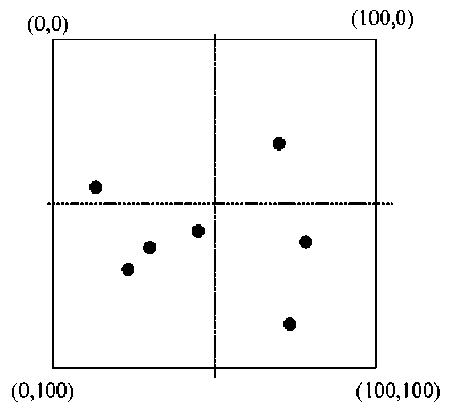

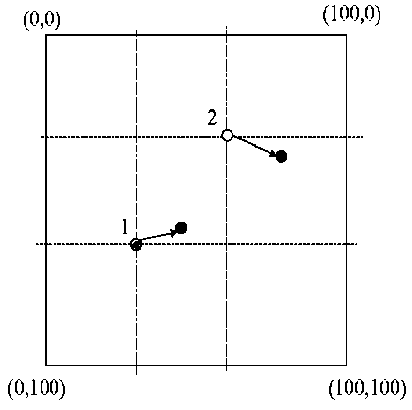

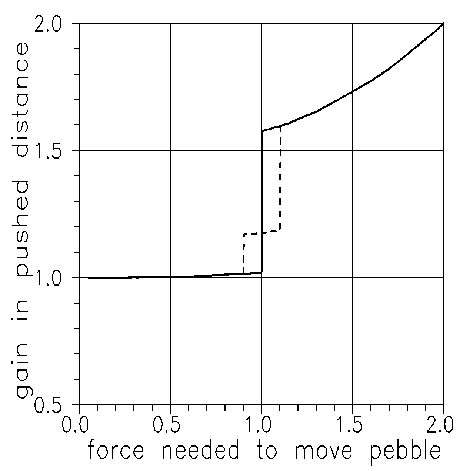

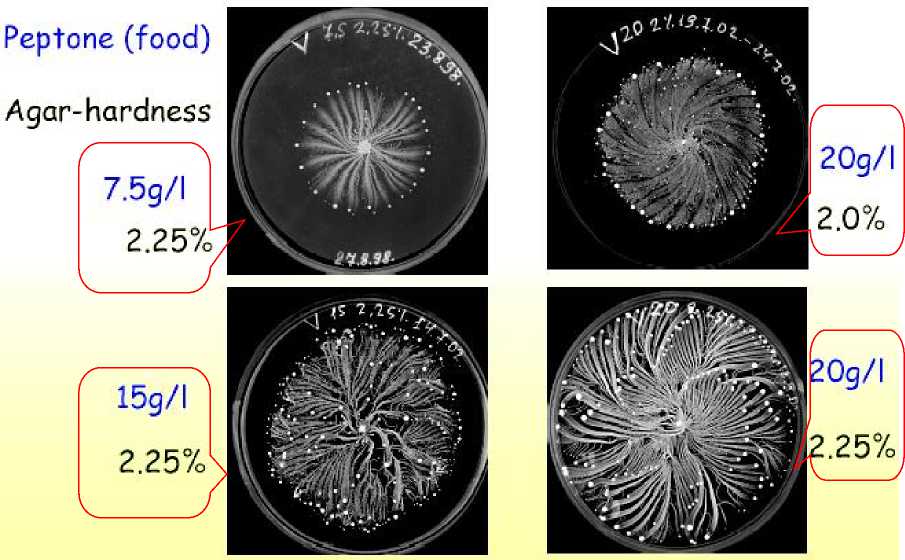

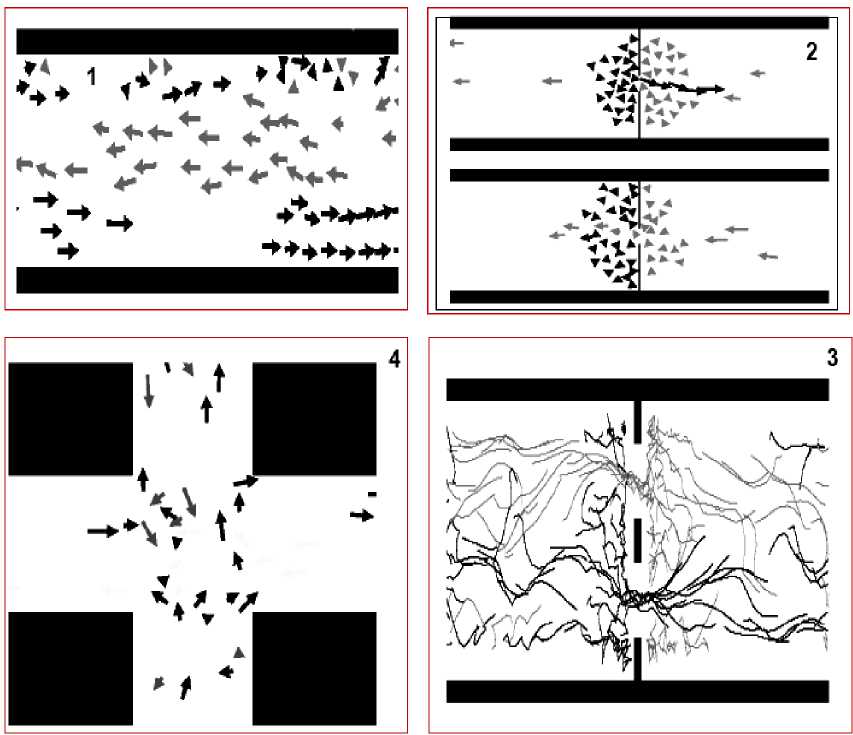

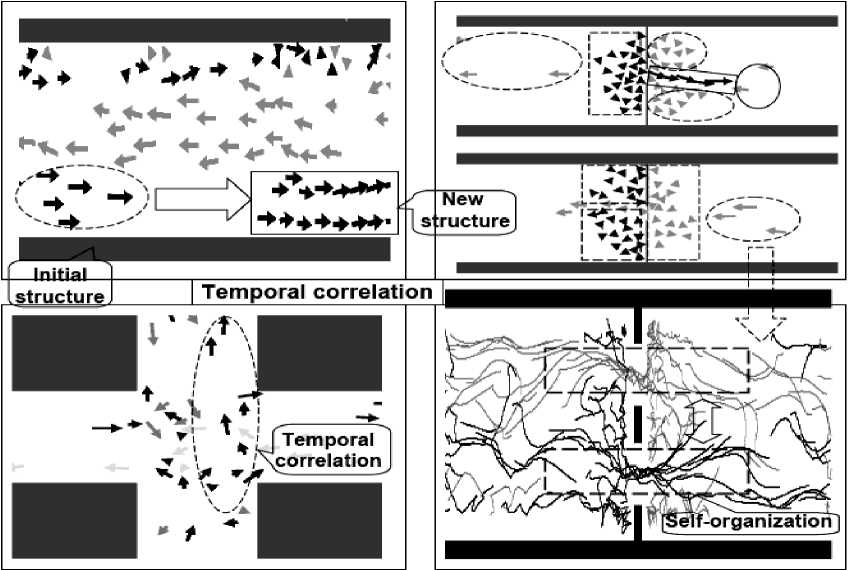

Fig. 3 illustrates the main problem of self-organization control.

To analyze common features, we chose the following examples as benchmarks [2–19]:

-

(1) Pedestrian behavior and self-organization;

-

(2) «Phantom panics» and self-organization;

-

(3) Self-organization of traffic flow models;

-

(4) Swarm self-organization and swarm intelligence (SI):

-

4.1. Applications of SI and ant colony self-organization;

-

4.2. Agent-Based Models (ABM);

-

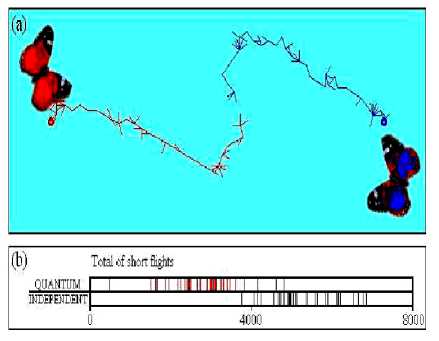

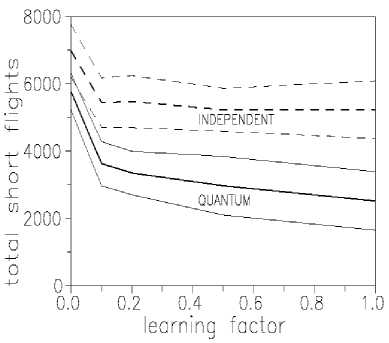

4.3. Quantum cooperation of two insects;

-

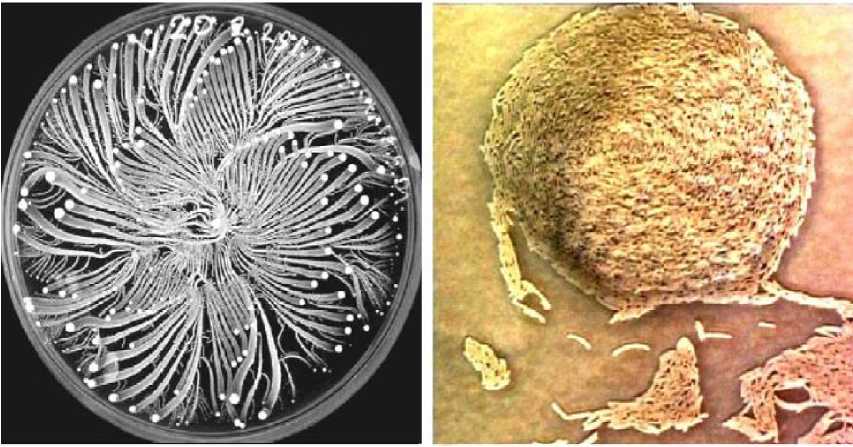

4.4. Engineered self-organization of a bacteria colony;

-

-

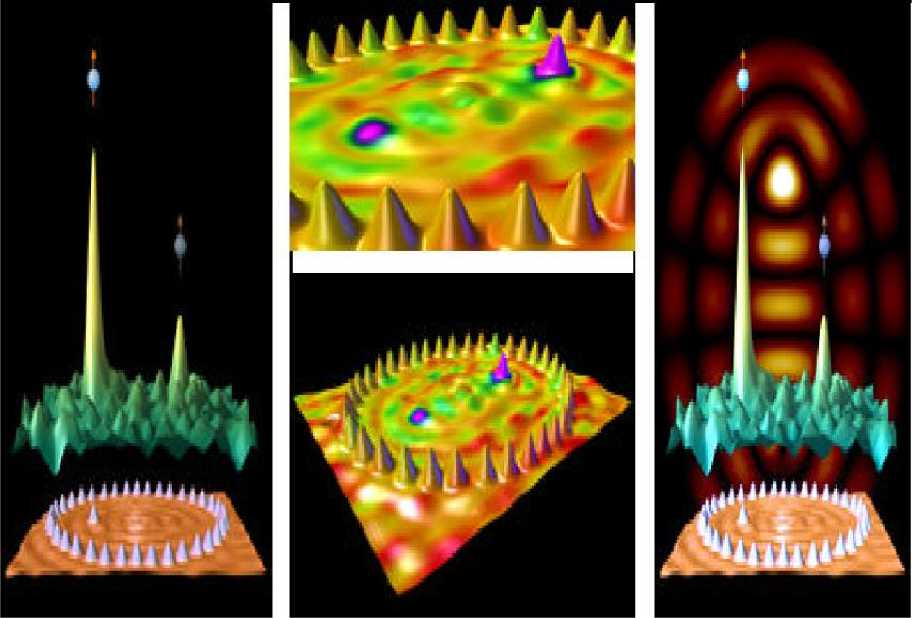

(5) Self-organization in nano-scale structures: Quantum corrals .

The physical principles of these self-organization models are briefly described in this paper.

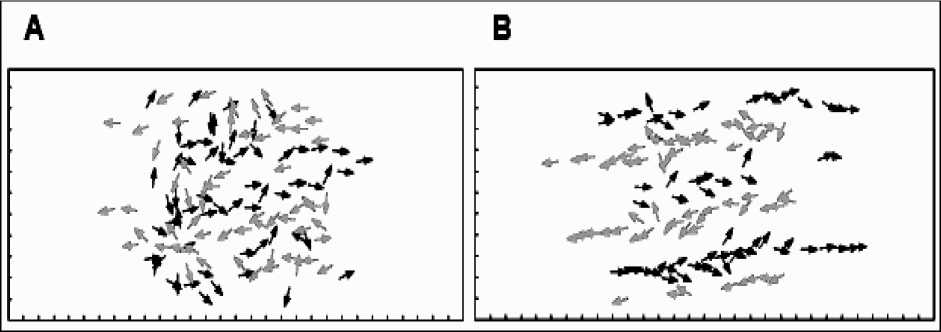

Figure 1. Examples of collective animal behavior: (a) Fish milling (reproduced with permission from Philip Colla, oceanlight.com). (b) The entrance crater to a nest of the ant Messor barbarus (from Theraulaz et al. 2003). (c) Traffic flow in Paris (reproduced with permission from Anthony Atkielski). (d) A bifurcation in a Pharaoh's ant trail (reproduced with permission from Duncan Jackson). (e) A Mexican wave at an American football game (taken from Farkas 2002). (f) A band of marching locusts (reproduced with permission from Iain Couzin).

Figure 2. How are these systems similar? (panels (a) and (b))

Figure 3. Main problem of self-organization control

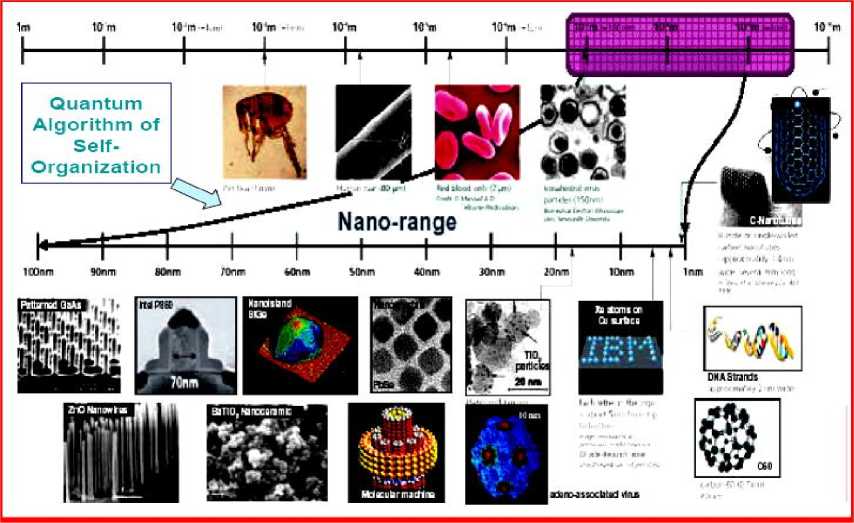

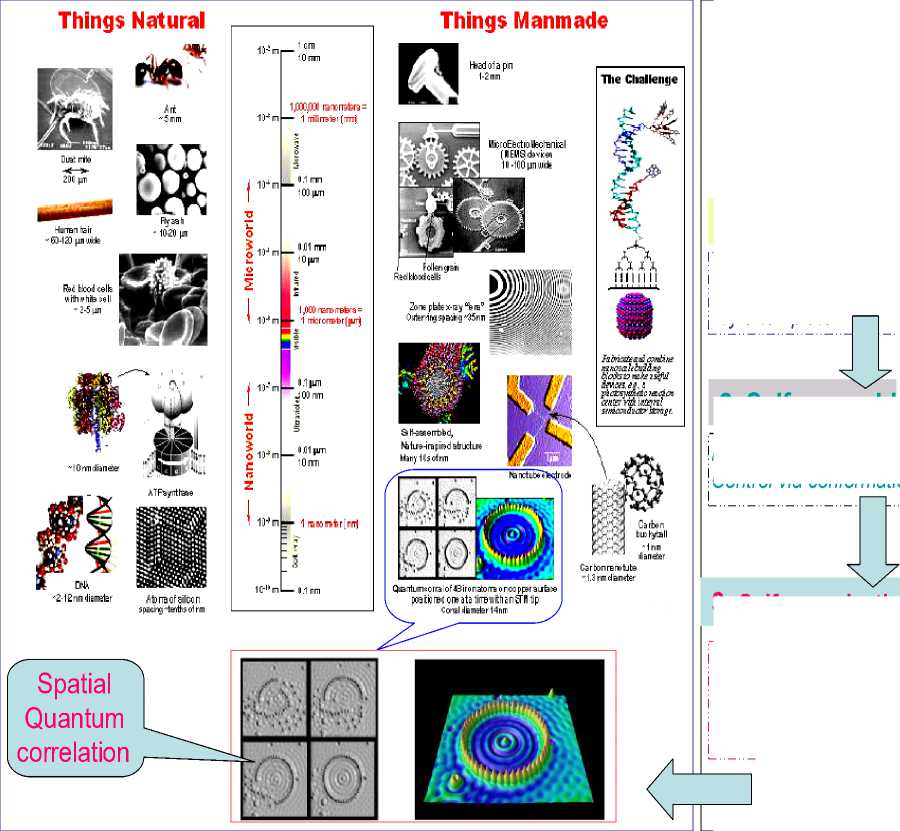

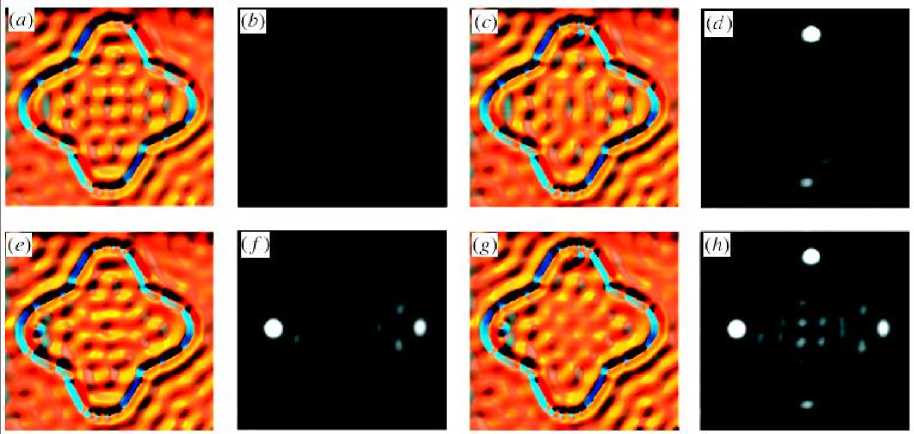

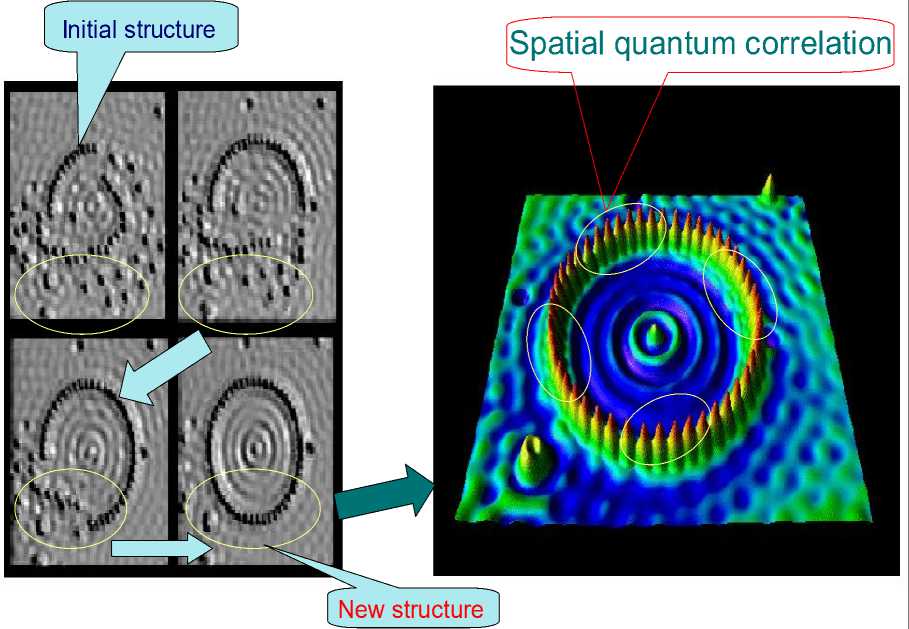

In these examples, self-organizational processes begin with the amplification (through positive feedback) of initial random fluctuations. This breaks the symmetry of the initial state, but often in unpredictable (but operationally equivalent) ways. That is, the job gets done, but hostile forces will have difficulty predicting precisely how it gets done. For example, a key issue in nanotechnology is the development of conceptually simple construction techniques for the mass fabrication of nano-scale structures reaching down to the atomic scale. At this level, the conventional top-down fabrication paradigm becomes excessively energy-intensive, wasteful, expensive and complicated. The natural alternative is self-organized growth, where nano-scale arrangements are built from their atomic and molecular constituents by processes that intrinsically provide structural organization. Fig. 4 illustrates self-organization processes at the nano-scale level.

Figure 4. Role of the quantum control algorithm in self-organization at the nano-scale

This approach is based on a detailed understanding of the microscopic pathways of diffusion, nucleation and aggregation. The hierarchy in the migration barriers, as well as the non-uniform strain fields induced by mismatched lattice parameters, can be translated into geometric order and well-defined shapes and length scales of the resulting aggregates. In self-organizing systems, the relation between cause and effect is much less straightforward than in linear systems: small causes can have large effects, and large causes can have small effects.

This non-linearity can be understood from the feedback relation that holds between the system's components. Each component (e.g. a spin) affects the other components, but these components in turn affect the first component. Thus the cause-and-effect relation is circular: any change in the first component is fed back, via its effects on the other components, to the first component itself.

Remark. The feedback mentioned above is an important component of control theory and has two basic forms: positive or negative. Feedback is said to be positive if the recurrent influence reinforces or amplifies the initial change. In other words, if a change takes place in a particular direction, the reaction being fed back takes place in that same direction. Feedback is negative if the reaction is opposite to the initial action, that is, if the change is suppressed or counteracted rather than reinforced. Negative feedback stabilizes the system by bringing deviations back to their original state. Positive feedback, on the other hand, makes deviations grow in a runaway, explosive manner. It leads to accelerated development, resulting in a radically different configuration.
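To make the sign convention concrete, the sketch below iterates a minimal linear feedback loop, assuming a scalar deviation x that is fed back to itself with a constant gain. The function name `iterate_feedback` and the gain values are illustrative assumptions, not taken from the paper. A positive gain reinforces the change at every step, while a gain between -2 and 0 counteracts it.

```python
def iterate_feedback(gain, x0=1.0, steps=10):
    """Iterate the hypothetical loop x_{t+1} = x_t + gain * x_t.

    gain > 0       : positive feedback, the deviation is amplified every step.
    -2 < gain < 0  : negative feedback, the deviation shrinks toward x = 0.
    """
    x = x0
    trajectory = [x]
    for _ in range(steps):
        x = x + gain * x  # the change is fed back to the component that caused it
        trajectory.append(x)
    return trajectory


positive = iterate_feedback(gain=0.5)   # deviation multiplied by 1.5 per step
negative = iterate_feedback(gain=-0.5)  # deviation multiplied by 0.5 per step
print(f"positive feedback after 10 steps: {positive[-1]:.2f}")
print(f"negative feedback after 10 steps: {negative[-1]:.4f}")
```

With these illustrative gains, a unit deviation grows to about 57.7 (1.5^10) under positive feedback and decays to about 0.001 (0.5^10) under negative feedback.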

Thus, physically, a process of self-organization typically starts with a positive feedback phase, where an initial fluctuation is amplified, spreading ever more quickly, until it affects the complete system. Once all components have «aligned» themselves with the configuration created by the initial fluctuation, the configuration stops growing: it has «exhausted» the available resources. Now the system has reached equilibrium (or at least a stationary state). Since further growth is no longer possible, the only possible changes are those that reduce the dominant configuration. However, as soon as some components deviate from this configuration, the same forces that reinforced that configuration will suppress the deviation, bringing the system back to its stable configuration. This is the phase of negative feedback.
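The two phases can be illustrated with a discrete logistic growth model. This is a standard textbook example rather than the paper's own model, and the growth rate r, the capacity (standing in for the «available resources»), and the perturbation below are assumptions chosen for illustration. While x is small, the update behaves like x(1 + r), i.e. positive feedback; near the capacity, the factor (1 - x/capacity) turns any deviation into a correction, i.e. negative feedback.

```python
def logistic_run(r=0.5, capacity=1.0, x0=0.01, steps=60, kick_at=None, kick=0.0):
    """Discrete logistic growth x_{t+1} = x_t + r * x_t * (1 - x_t / capacity).

    If kick_at is given, `kick` is subtracted from x just before update
    number kick_at, imitating components that deviate from the configuration.
    """
    x = x0
    history = [x]
    for t in range(steps):
        if kick_at is not None and t == kick_at:
            x -= kick  # perturbation away from the stationary configuration
        x = x + r * x * (1.0 - x / capacity)
        history.append(x)
    return history


run = logistic_run(kick_at=40, kick=0.3)
print(f"early growth factor: {run[1] / run[0]:.3f}")        # positive-feedback phase
print(f"state before perturbation: {run[40]:.6f}")          # stationary state reached
print(f"state 20 steps after perturbation: {run[-1]:.6f}")  # restored by negative feedback
```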

In more complex self-organizing systems, there will be several interlocking positive and negative feedback loops, so that changes in some directions are amplified while changes in other directions are suppressed. This can lead to very complicated behavior that is difficult to predict. These self-organized systems differ in physical nature but can be described in a general form using the quantum control algorithm of self-organization developed in [11].

Analysis of self-organization models gives us the following results.

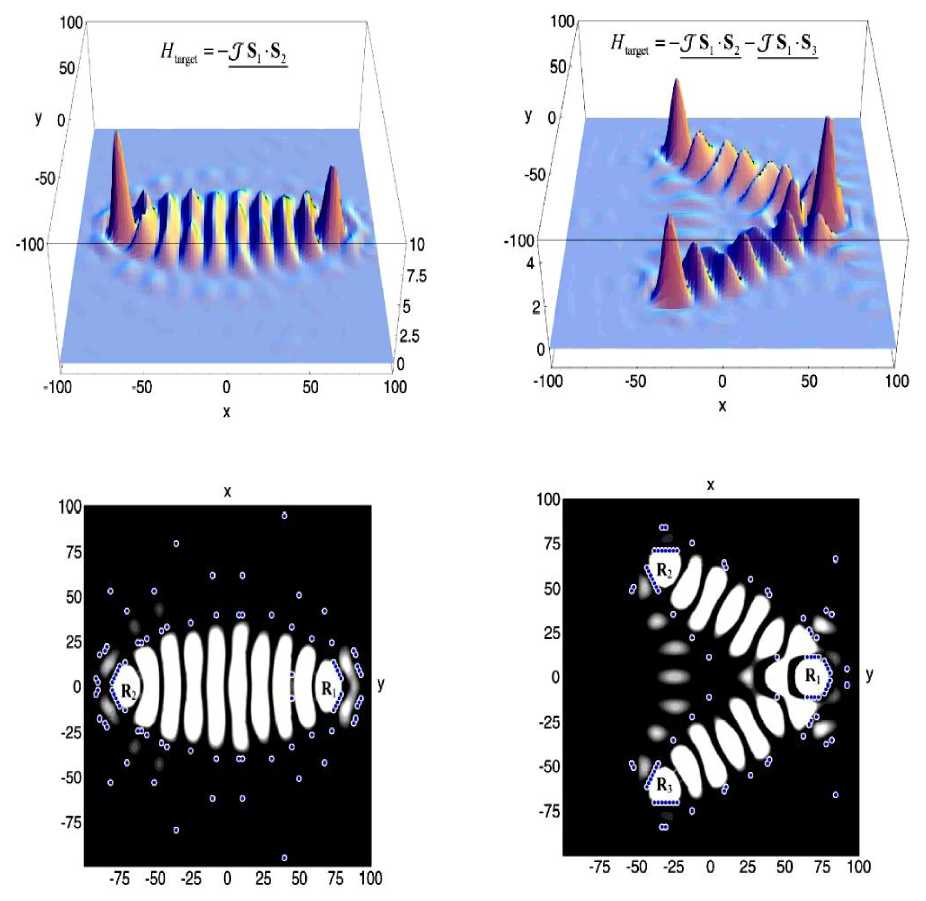

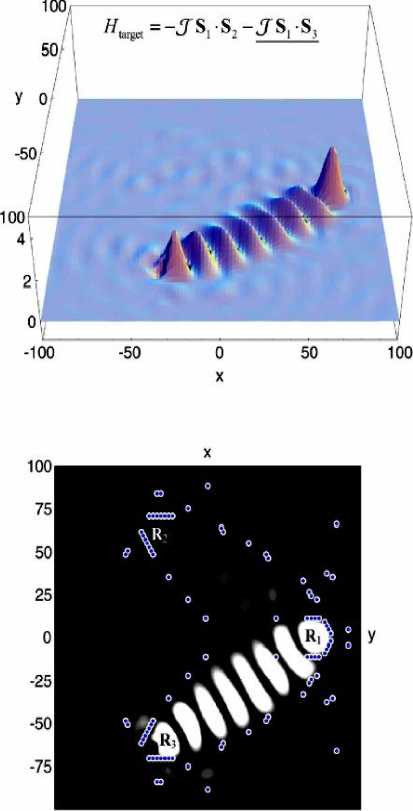

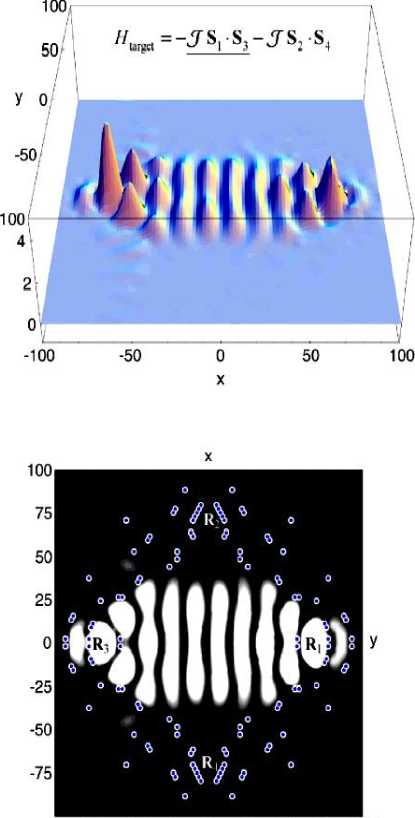

Models of self-organization include natural quantum effects and are based on the following information-thermodynamic concepts: (i) macro- and micro-level interactions with information exchange (in ABM, the micro-level is the communication space where inter-agent messages are exchanged, and it is characterized by increased entropy at the micro-level); (ii) communication and information transport at the micro-level (the “quantum mirage” in quantum corrals); (iii) different types of quantum spin correlation that produce different structures in self-organization (quantum dots); (iv) coordination control (swarm-bot and snake-bot).

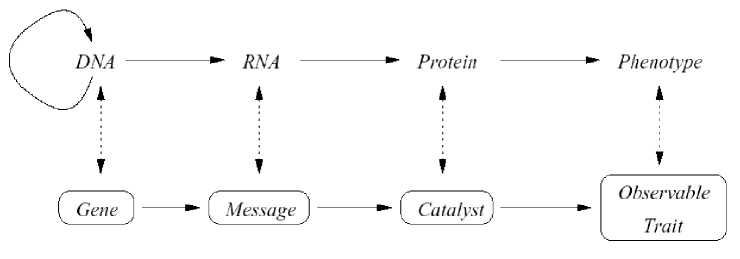

Natural evolution processes are based on the following steps [2–7]: (i) templating; (ii) self-assembling; and (iii) self-organization.

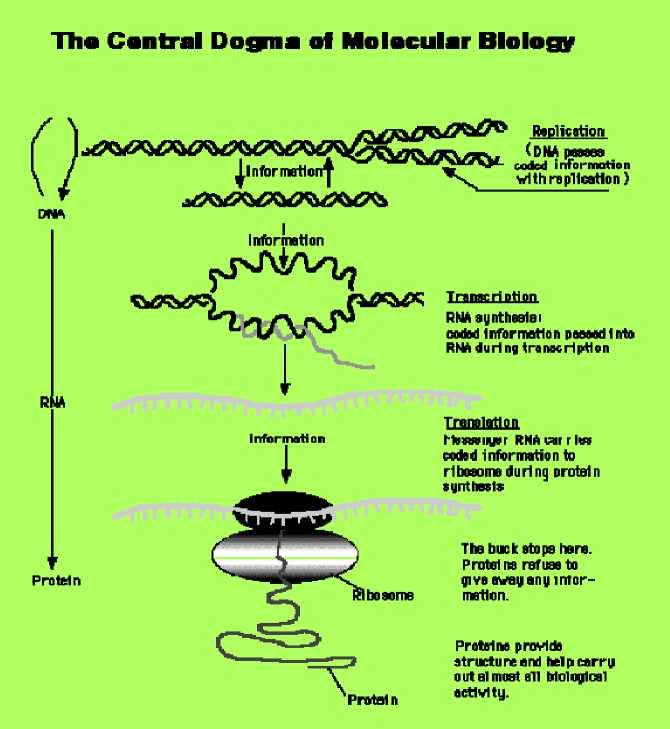

According to quantum computing theory, every quantum algorithm (QA) in general form includes the following unitary quantum operators: (i) superposition; (ii) entanglement (quantum oracle); and (iii) interference. Measurement is the fourth, classical operator; it is irreversible and is used to read out the results of the computation.
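The four-stage structure (superposition, oracle, interference, measurement) can be traced on a small state-vector simulation. As a stand-in we use the Deutsch-Jozsa algorithm, a textbook QA and not the self-organization algorithm of [11]. It is written with a phase oracle, in which the ancilla qubit is folded into a sign flip, so the oracle stage appears as a phase change rather than explicit entanglement. All function names are hypothetical; only the Python standard library is used, and the Hadamard layer is applied with an in-place Walsh-Hadamard butterfly.

```python
import math


def hadamard_all(state):
    """Apply a Hadamard gate to every qubit (fast Walsh-Hadamard transform)."""
    amps = list(state)
    size = len(amps)
    half = 1
    while half < size:
        for start in range(0, size, 2 * half):
            for j in range(start, start + half):
                a, b = amps[j], amps[j + half]
                amps[j] = (a + b) / math.sqrt(2)
                amps[j + half] = (a - b) / math.sqrt(2)
        half *= 2
    return amps


def phase_oracle(state, f):
    """Oracle stage: flip the sign of every basis state x with f(x) = 1."""
    return [amp * (-1) ** f(x) for x, amp in enumerate(state)]


def run_quantum_algorithm(n_qubits, f):
    """Superposition, oracle, interference, then measurement probabilities."""
    state = [0.0] * (2 ** n_qubits)
    state[0] = 1.0                        # start in the basis state |00...0>
    state = hadamard_all(state)           # (i) superposition of all 2**n inputs
    state = phase_oracle(state, f)        # (ii) oracle marks inputs via phase
    state = hadamard_all(state)           # (iii) interference of the amplitudes
    return [abs(a) ** 2 for a in state]   # (iv) classical measurement statistics


constant = run_quantum_algorithm(3, lambda x: 0)
balanced = run_quantum_algorithm(3, lambda x: x & 1)
print(f"P(|000>) for a constant f: {constant[0]:.3f}")
print(f"P(|000>) for a balanced f: {balanced[0]:.3f}")
```

Interference concentrates all probability on |000> when f is constant and cancels it completely when f is balanced, so a single measurement separates the two cases.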

The quantum control algorithm of self-organization developed below [11] is based on quantum fuzzy inference (QFI) models. QFI incorporates these concepts of self-organization and realizes them with the corresponding quantum operators.

QFI is one possible realization of the quantum control algorithm of self-organization and includes all of the following features: (i) superposition; (ii) selection of the quantum correlation type; (iii) information transport and the quantum oracle; and (iv) interference. Superposition realizes the templating operation and is based on macro- and micro-level interactions with information exchange between active agents. Selection of the quantum correlation type organizes self-assembling, using communication and information transport at the micro-level as its power source. The type of correlation then defines the level of robustness of the designed knowledge base (KB) of the fuzzy controller (FC). The quantum oracle computes an intelligent quantum state that carries the most important (value) information for coordination control. Interference is used to extract the results of coordination control and to design the robust KB online.

The developed QA of self-organization is applied to the design of a robust KB of an FC in unpredicted control situations. The main operations of the developed QA and concrete examples of QFI applications are described.

Principles and Physical Model Examples of Self-Organization

The theory of self-organization, learning and adaptation has grown out of a variety of disciplines, including quantum mechanics, thermodynamics, cybernetics, control theory and computer modeling. The present section reviews its most important definitions, principles, model descriptions and engineering concepts that can be used in design of robust intelligent control systems.

Definitions and main properties of self-organization

In general form, self-organization is defined as follows [2, 3, 5, 6]: the spontaneous emergence of large-scale spatial, temporal, or spatiotemporal order in a system of locally interacting, relatively simple components.

Self-organization is a bottom-up process where complex organization emerges at multiple levels from the interaction of lower-level entities. The final product is the result of nonlinear interactions rather than planning and design, and is not known a priori. Contrast this with the standard, top-down engineering design paradigm where planning precedes implementation, and the desired final system is known by design.

Self-organization can be defined as the spontaneous creation of a globally coherent pattern out of local interactions. Because of its distributed character, this organization tends to be robust, resisting perturbations. The dynamics of a self-organizing system is typically nonlinear, because of circular or feedback relations between the components. Positive feedback leads to an explosive growth, which ends when all components have been absorbed into the new configuration, leaving the system in a stable, negative feedback state.

Nonlinear systems have in general several stable states, and this number tends to increase (bifurcate) as an increasing input of energy pushes the system farther from its thermodynamic equilibrium.

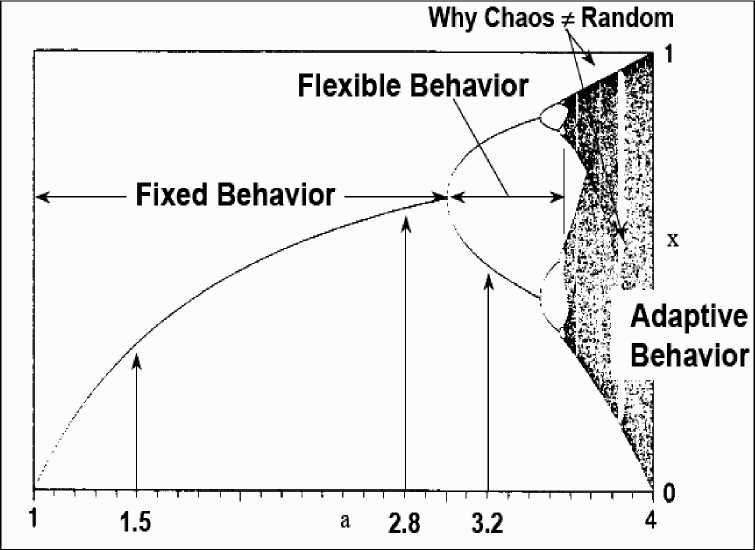

Fig. 5 demonstrates this bifurcation situation.

Figure 5. Bifurcation process of non-linear system
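A minimal sketch of this situation is the supercritical pitchfork normal form dx/dt = r x - x^3, a standard textbook model chosen here for illustration rather than one used in the paper; the control parameter r stands in for the energy input that drives the system away from equilibrium. For r ≤ 0 there is one stable state, x = 0; for r > 0 that state becomes unstable and two stable states ±√r appear. The helper `settle` (a hypothetical name) integrates the equation with forward Euler, showing that a tiny initial fluctuation decides which branch is reached.

```python
import math


def stable_states(r):
    """Stable equilibria of the pitchfork normal form dx/dt = r*x - x**3."""
    if r <= 0:
        return [0.0]             # a single stable state before the bifurcation
    root = math.sqrt(r)
    return [-root, root]         # x = 0 is now unstable; two stable branches appear


def settle(r, x0, dt=0.01, steps=5000):
    """Forward-Euler integration of dx/dt = r*x - x**3 starting from x0."""
    x = x0
    for _ in range(steps):
        x += dt * (r * x - x ** 3)
    return x


for r in (-1.0, 0.25, 1.0):
    print(f"r = {r:+.2f}: stable states {stable_states(r)}")

# Beyond the bifurcation, the sign of a small fluctuation selects the branch.
print(f"x0 = +0.01 settles at {settle(1.0, 0.01):+.4f}")
print(f"x0 = -0.01 settles at {settle(1.0, -0.01):+.4f}")
```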

To adapt to a changing environment, the system needs a variety of stable states that is large enough to react to all perturbations but not so large as to make its evolution uncontrollably chaotic. The most adequate states are selected according to their fitness, either directly by the environment, or by subsystems that have adapted to the environment at an earlier stage.

Formally, the basic mechanism underlying self-organization is (often noise-driven) variation, which explores different regions of the system's state space until it enters an attractor. This precludes further variation outside the attractor and thus restricts the freedom of the system's components to behave independently. This is equivalent to the increase of coherence, or decrease of statistical entropy, that defines self-organization.
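As one simple proxy for the «statistical entropy» mentioned here, the sketch computes the Shannon entropy of the empirical distribution of component states; the spin configurations and the function name are invented for illustration. Once all components have been captured by an attractor in which they share one state, the entropy drops from its disordered value to zero.

```python
import math
from collections import Counter


def statistical_entropy(states):
    """Shannon entropy, in bits, of the empirical distribution of component states."""
    n = len(states)
    return sum((c / n) * math.log2(n / c) for c in Counter(states).values())


disordered = [+1, -1] * 8   # spins pointing up and down in equal numbers
ordered = [+1] * 16         # all spins aligned inside the attractor
print(statistical_entropy(disordered))  # -> 1.0
print(statistical_entropy(ordered))     # -> 0.0
```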

The most obvious change that takes place in such systems is the emergence of global organization. Initially, the elements of the system (spins or molecules) interact only locally. This locality of interactions follows from the basic continuity of all physical processes: for any influence to pass from one region to another, it must first pass through all intermediate regions. In the self-organized state, on the other hand, all segments of the system are strongly correlated. This is clearest in the example of the magnet: in the magnetized state, all spins, however far apart, point in the same direction. Correlation is a useful measure for studying the transition from the disordered to the ordered state. Locality implies that neighboring configurations are strongly correlated, but that this correlation diminishes as the distance between configurations increases. The correlation length can be defined as the maximum distance over which there is a significant correlation.
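The correlation measure and the correlation length described above can be computed for a one-dimensional ring of ±1 spins. The sketch below rests on simple assumptions that are not from the paper: a periodic boundary, an arbitrary significance threshold of 0.1, and the magnitude |C(d)| used as the significance test; the function names are hypothetical.

```python
def spin_correlation(spins, d):
    """C(d): average of s_i * s_{i+d} over a ring of +1/-1 spins."""
    n = len(spins)
    return sum(spins[i] * spins[(i + d) % n] for i in range(n)) / n


def correlation_length(spins, threshold=0.1):
    """Largest d such that |C(1)|, ..., |C(d)| all exceed the threshold."""
    length = 0
    for d in range(1, len(spins) // 2 + 1):
        if abs(spin_correlation(spins, d)) <= threshold:
            break
        length = d
    return length


magnetized = [+1] * 16                  # all spins point the same way
domains = ([+1] * 4 + [-1] * 4) * 2     # short-range, domain-like order
print(correlation_length(magnetized))   # correlation spans half the ring
print(correlation_length(domains))      # significant correlation only at distance 1
```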

When we consider a highly organized system, we usually imagine some external or internal agent (controller) that is responsible for guiding, directing or controlling that organization. The controller is a physically distinct subsystem that exerts its influence over the rest of the system. In this case, we may say that control is centralized. In self-organizing systems, on the other hand, «control» of the organization is typically distributed over the whole system. All parts contribute evenly to the resulting arrangement.

Remark. Although centralized control does have some advantages over distributed control (e.g. it allows more autonomy and stronger specialization for the controller), at some level it must itself be based on distributed control. For example, the behavior of the human body can best be explained by studying what happens in the brain, since the brain, through the nervous system, controls the movement of muscles. However, to explain the functioning of the brain, we can no longer rely on some «mind within the mind» that tells the different brain neurons what to do. This is the traditional philosophical problem of the homunculus, the hypothetical «little man» that had to be postulated as the one that makes all the decisions within the mental system. Any explanation for organization that relies on some separate control, plan or blueprint must also explain where that control comes from; otherwise it is not really an explanation. The only way to avoid the trap of an infinite regress (the mind within the mind within the mind within...) is to uncover a mechanism of self-organization at some level. The brain illustrates this principle nicely. Its organization is distributed over a network of interacting neurons. Although different brain regions are specialized for different tasks, no neuron or group of neurons has overall control.

This is shown by the fact that minor brain lesions due to accidents, surgery or tumors normally do not disturb overall functioning, whatever region is destroyed.

As mentioned in the Introduction, a general characteristic of self-organizing systems is that they are robust or resilient. This means that they are relatively insensitive to perturbations or errors and have a strong capacity to restore themselves, unlike most human-designed systems. One reason for this fault tolerance is the redundant, distributed organization: the non-damaged regions can usually make up for the damaged ones. Another reason for this intrinsic robustness is that self-organization thrives on randomness, fluctuations or «noise». A certain amount of random perturbation will facilitate rather than hinder self-organization. A third reason for resilience is the stabilizing effect of feedback loops. Many self-organizational processes begin with the amplification (through positive feedback) of initial random fluctuations. This breaks the symmetry of the initial state, but often in unpredictable but operationally equivalent ways. That is, the job gets done, but hostile forces will have difficulty predicting precisely how it gets done.

Stigmergy is an important principle of self-organization, seen for example in wasp nest building and spider web construction. It refers to a way of coordinating a collective construction process so that the project itself contains the information necessary to guide the actions of the workers. Scientific research has shown the pervasiveness of self-organization in the natural world, from nonliving systems, through microorganisms, to species of all degrees of complexity, including human beings. This research has demonstrated how comparatively simple interactions, often among organisms with limited cognitive capacities, can solve complex command, control, and coordination problems in order to promote their survival and to accomplish their ends.

The behavior of these species is more robust, flexible, and adaptive than it would be if it were not based on self-organization. Self-organization is especially attractive as an approach to the robust, flexible, and adaptive implementation of command, control, and communication systems of enormous potential value to military operations, homeland security, and public safety. This increased knowledge of natural self-organization has brought an improved understanding of various general principles that can be applied to artificial intelligent systems to achieve the same benefits. In past research, a variety of simulation studies have shown that these principles of self-organization can be applied in artificial systems, which may be quite different from the natural systems in which the principles were originally observed.

Principles and Models of Self-Organization

Self-organization has long been a matter of immense interest and research in sociology, anthropology, physics and many other fields. The principles of self-organization can be inferred from almost all walks of human, plant, and animal life [2–7] and can also be used in computer science [8]. They are most evident in ant and termite colonies [9] and in the self-engineering capabilities of bacteria [10]. Self-organization has been used to explain the formation of cities, software, brain cells and many natural phenomena in human societies. Although the principle is used to explain many phenomena, the concept itself is continuously evolving. Self-organization phenomena are correlated with information transport and thermodynamic processes [5–7, 15–17].

The term self-organizing systems refers to a class of systems that are able to change their internal structure and their function in response to external circumstances. By self-organization it is understood that elements of a system are able to manipulate or organize other elements of the same system in a way that stabilizes either the structure or the function of the whole against external fluctuations. Traditionally, three qualitative forms of self-organization are analyzed: (i) stigmergy; (ii) reinforcement mechanisms; and (iii) cooperation.

The amplification phenomena found in stigmergic or reinforcement processes are different forms of positive feedback that play a major role in building group activity or social organization. Cooperation is a functional form of self-organization because of its ability to guide local behaviors in order to obtain a relevant collective one.

Self-organization mechanisms guide the behavior of the local entities of a collective. Consequently, these approaches allow a drastic reduction of the solution search space compared to global search algorithms. Working on self-organization implies creating disorder inside a collective in order to obtain, later, a more relevant response of the system to unexpected events. From an engineering point of view, it would be useful to propose global system gauges able to link disorder and the relevance of behavior at the system macro-level.
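As an illustration of local rules guiding a collective search, the sketch below implements a minimal global-best particle swarm optimization (PSO). PSO is a well-known swarm-intelligence heuristic swapped in here for illustration; it is not the paper's quantum algorithm. Each particle combines its own best position with the best position shared by the swarm, so the search concentrates around promising regions instead of scanning the whole space. The inertia weight w = 0.7, the coefficients c1 = c2 = 1.5, the swarm size, the seed, and the sphere test function are all assumptions.

```python
import random


def sphere(position):
    """Test objective: sum of squares, with its minimum 0 at the origin."""
    return sum(x * x for x in position)


def pso(objective, dim=2, n_particles=20, iterations=200,
        w=0.7, c1=1.5, c2=1.5, bound=5.0, seed=1):
    """Minimal global-best particle swarm optimization (illustrative parameters)."""
    rng = random.Random(seed)
    pos = [[rng.uniform(-bound, bound) for _ in range(dim)] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    best_pos = [p[:] for p in pos]              # each particle's own memory
    best_val = [objective(p) for p in pos]
    g = min(range(n_particles), key=lambda i: best_val[i])
    swarm_pos, swarm_val = best_pos[g][:], best_val[g]  # memory shared by the swarm

    for _ in range(iterations):
        for i in range(n_particles):
            for k in range(dim):
                r1, r2 = rng.random(), rng.random()
                vel[i][k] = (w * vel[i][k]
                             + c1 * r1 * (best_pos[i][k] - pos[i][k])   # own experience
                             + c2 * r2 * (swarm_pos[k] - pos[i][k]))    # social signal
                pos[i][k] += vel[i][k]
            value = objective(pos[i])
            if value < best_val[i]:
                best_pos[i], best_val[i] = pos[i][:], value
                if value < swarm_val:
                    swarm_pos, swarm_val = pos[i][:], value
    return swarm_pos, swarm_val


best_position, best_value = pso(sphere)
print(f"best value found: {best_value:.2e} at {best_position}")
```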

Self-organization essentially refers to a spontaneous, dynamically produced (re-)organization. Several definitions correspond to the different self-organization behaviors: (1) swarm intelligence (SI); (2) decrease of entropy; (3) autopoiesis; (4) artificial systems; (5) emergence.

Remark. Many natural systems exhibit self-organization (e.g. galaxies, planets, chemical compounds, cells, organisms and societies). Traditional scientific fields attempt to explain these features by referring to the micro-level properties or laws applicable to their component parts, for example gravitation or chemical bonds. Furthermore, self-organization implies organization, which in turn implies some ordered structure and component behavior. In this respect, the process of self-organization changes the respective structure and behavior, and a new distinct organization is self-produced. Emergence is the fact that a structure, not explicitly represented at a lower level, appears at a higher level. In dynamic self-organizing systems with decentralized control and local interactions, the notion of emergent properties is intimately linked with self-organization. For example, ants collectively establish the shortest path between the nest and a food source, although no single ant represents that path. However, in general, self-organization can occur without emergence, and vice versa.

A. Principles of self-organization. Over the last half century, much research in different areas has employed self-organizing systems to solve complex control problems. However, there is as yet no general framework for constructing self-organizing systems. Different vocabularies are used in different areas, and with different goals. (A detailed description of self-organization principles and of different natural and man-made models of self-organizing structures is presented in [3, 5–7, 11].) The essence of self-organization is that system structure often appears without explicit pressure or involvement from outside the system. In other words, the constraints on form (i.e. organization) of interest to us are internal to the system, resulting from the interactions among the components and usually independent of the physical nature of those components. The organization can evolve in either time or space, maintain a stable form or show transient phenomena. General resource flows within self-organized systems are expected (dissipation), although they are not critical to the concept itself.

Remark: Related works. The term self-organization has been used with different meanings in different areas, such as cybernetics, socio-economic systems, thermodynamics, biology, mathematics, computing, information theory, synergetics, and others (for a general overview, see [11] and the references therein). However, the use of the term is subtle, since any dynamical system can be said to be self-organizing or not, depending partly on the observer: if we decide to call a «preferred» state or set of states (i.e. an attractor) of a system «organized», then the dynamics will lead to a self-organization of the system. A practical notion will suffice: a system described as self-organizing is one in which elements interact in order to achieve dynamically a global function or behavior [20]. This function or behavior is not imposed by one single element or a few elements, nor determined hierarchically. It is achieved autonomously as the elements interact with one another. These interactions produce feedbacks that regulate the system. Many non-living physical and chemical systems have the capacity to generate order from chaos. This capacity is known as self-organization. Self-organized systems can evolve by small parameter shifts that produce large changes in outcome. A common misconception about self-organization in biological systems is that it represents an alternative to natural selection.

Self-organization usually relies on four basic ingredients: (i) Positive feedback; (ii) Negative feedback; (iii) Balance of exploitation and exploration; and (iv) Multiple interactions. All the previously mentioned examples of complex systems fulfill the definition of self-organization.

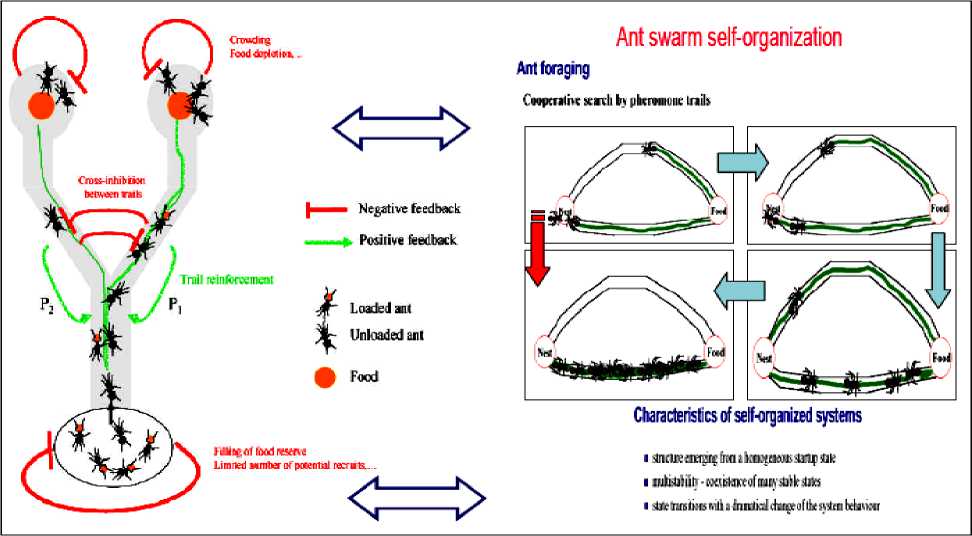

Fig. 6 shows these principles of self-organization in an ant colony. When two food sources of equal quality are offered to an ant society, only one is selected through the concurrent influence of positive (green) and negative (red) feedback loops. Examples of such feedbacks are given in Fig. 1. Depending on these feedbacks, the trail amount, and hence the probability that newly arriving ants choose one path (either P1 or P2) at the bifurcation point, changes over time and ultimately leads to the collective choice of one food source. More precisely, the question can be formulated as follows.

Q: When is it useful to describe a system as self-organizing?

This will be when the system or environment is very dynamic and/or unpredictable.

If we want the system to solve a problem, it is useful to describe a complex system as self-organizing when the «solution» is not known beforehand and/or is changing constantly. Then the solution is dynamically strived for by the elements of the system. In this way, systems can adapt quickly to unforeseen changes as elements interact locally. In theory, a centralized approach could also solve the problem, but in practice such an approach would require too much time to compute the solution and would not be able to keep pace with the changes in the system and its environment. In engineering, a self-organizing system would be one in which elements are designed to dynamically solve a problem or perform a function at the system level.

Thus, the elements need to divide, but also integrate, the problem. For example, a swarm of robots will be conveniently described as self-organizing, since each element of the swarm can change its behavior depending on the current situation. It should be noted that all engineered self-organizing systems are to a certain degree autonomous, since part of their actual behavior will not be determined by a designer. In order to understand self-organizing systems, two or more levels of abstraction should be considered: elements (lower level) organize in a system (higher level), which can in turn organize with other systems to form a larger system (even higher level).

The understanding of the system’s behavior will come from the relations observed between the descriptions at different levels. Note that the levels, and therefore also the terminology, can change according to the interests of the observer. For example, in some circumstances, it might be useful to refer to cells as elements (e.g. bacterial colonies); in others, as systems (e.g. genetic regulation); and in others still, as systems coordinating with other systems (e.g. morphogenesis).

Figure 6. Feedback loops and collective choice of one food source (bifurcation) [9]
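The collective choice in Fig. 6 can be sketched with a simple two-branch model. The choice function has the well-known Deneubourg-style form (k + A1)^n / ((k + A1)^n + (k + A2)^n), where A1 and A2 are the trail amounts on P1 and P2; the values k = 20 and n = 2, the evaporation rate, the number of ants, the seed, and the sequential update scheme are assumptions made for this sketch. Trail deposition implements the positive feedback loop and evaporation the negative one.

```python
import random


def choice_probability(a1, a2, k=20.0, n=2.0):
    """Probability that an ant takes branch P1, given trail amounts a1 and a2."""
    w1, w2 = (k + a1) ** n, (k + a2) ** n
    return w1 / (w1 + w2)


def simulate_colony(n_ants=5000, evaporation=0.01, seed=7):
    """Ants choose one after another between two equal food sources P1 and P2."""
    rng = random.Random(seed)
    trail = [0.0, 0.0]                       # pheromone on P1 and P2
    for _ in range(n_ants):
        branch = 0 if rng.random() < choice_probability(*trail) else 1
        trail[branch] += 1.0                 # positive feedback: deposit on the chosen path
        trail = [(1.0 - evaporation) * t for t in trail]  # negative feedback: evaporation
    total = trail[0] + trail[1]
    return trail[0] / total, trail[1] / total


share = simulate_colony()
print(f"trail share on P1: {share[0]:.2f}, on P2: {share[1]:.2f}")
```

Which branch wins depends on early random fluctuations, but in this parameter regime one branch ends up carrying most of the trail, mirroring the symmetry breaking described in the text.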

A system can cope with an unpredictable environment autonomously using different but closely related approaches:

- Adaptation (learning, evolution). The system changes its behavior to cope with the change.
- Anticipation (cognition). The system predicts a change to cope with, and adjusts its behavior accordingly. This is a special case of adaptation, where the system does not need to experience a situation before responding to it.
- Robustness. A system is robust if it continues to function in the face of perturbations. This can be achieved with modularity, degeneracy, distributed robustness, or redundancy.

Successful self-organizing systems will use combinations of these approaches to maintain their integrity in a changing and unexpected environment. Adaptation will enable the system to modify itself to “fit” better within the environment. Robustness will allow the system to withstand changes without losing its function or purpose, and thus allow it to adapt. Anticipation will prepare the system for changes before they occur, adapting the system without it being perturbed.
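One way to quantify the redundancy route to robustness is a majority-voting model, assumed here for illustration and not taken from the paper: n independent, identical components each work with probability p, and the system keeps its function while a majority of them work. The reliability is then the binomial tail R(n, p) = Σ_{k = ⌊n/2⌋+1}^{n} C(n, k) p^k (1 - p)^(n - k). The function name is hypothetical.

```python
from math import comb


def majority_reliability(n, p):
    """Probability that more than half of n independent components still work."""
    need = n // 2 + 1
    return sum(comb(n, k) * p ** k * (1 - p) ** (n - k) for k in range(need, n + 1))


for n in (1, 3, 9, 21):
    print(f"n = {n:2d}: R = {majority_reliability(n, 0.9):.6f}")
```

For p = 0.9, adding components raises R (0.972 for n = 3, versus 0.9 for a single component), whereas for p < 0.5 the same construction lowers it, so redundancy helps only when individual components are more likely to work than to fail.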

B. Elements of self-organization. All of the following elements should be taken into account when engineering self-organizing intelligent systems:

1. Interacting components. The components provide the substrate for the organization of higher-level structures. Interaction/communication is necessary for creating linkages to assemble larger structures. Example components are molecules, cells, agents, etc. Example interactions are excitation, inhibition, sensing, attraction, repulsion, etc.

2. Constructive processes. Needed to build larger structures from the components, e.g., reproduction, aggregation, crystallization, copying, growth, recombination, ramification, etc.

3. Destructive processes. Needed to tear down existing (possibly suboptimal or unwanted) structures to make room for new ones, e.g., death, fragmentation, dissolution, division, mixing, turbulence, noise, etc.

4. Autocatalysis/positive feedback. Needed to reinforce and drive the construction of useful structures, e.g., splits encouraging more splitting to create a complex branching structure.

5. Homeostasis/negative feedback. Needed to prevent runaway structure formation (e.g., structures beyond a certain size becoming non-receptive to further addition or even unstable).

6. Nonlinearity. Needed to magnify some effects and squelch others in order to produce complex structure. Examples include thresholds, unimodal and multimodal dependencies, saturation, and amplification underlying the constructive, destructive and feedback processes.
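Items 4 and 6, together with the collective choice of one food source in Figure 6, can be illustrated by a small simulation. The sketch below assumes the choice function used by Deneubourg and co-workers for ant binary-bridge experiments, P_A = (k + A)^n / ((k + A)^n + (k + B)^n), where A and B count earlier choices of each branch. This model, the parameter values k = 20 and n = 2, and all function names are illustrative assumptions; the paper does not state which model underlies Figure 6. Each choice reinforces the chosen branch (positive feedback), and the exponent n supplies the nonlinearity that can amplify small early fluctuations into a collective decision.

```python
import random


def choice_probability(a, b, k=20.0, n=2.0):
    """Probability that the next ant picks branch A (assumed Deneubourg-type rule)."""
    wa, wb = (k + a) ** n, (k + b) ** n
    return wa / (wa + wb)


def collective_choice(n_ants=1000, k=20.0, n=2.0, seed=0):
    """Ants choose one after another; every choice reinforces the chosen branch."""
    rng = random.Random(seed)
    a = b = 0
    for _ in range(n_ants):
        if rng.random() < choice_probability(a, b, k, n):
            a += 1  # positive feedback: branch A becomes more attractive
        else:
            b += 1  # ... or branch B does
    return a, b


def mean_majority_share(n, runs=30, **kwargs):
    """Average share of ants on the finally preferred branch over several runs."""
    total = 0.0
    for seed in range(runs):
        a, b = collective_choice(n=n, seed=seed, **kwargs)
        total += max(a, b) / (a + b)
    return total / runs
```

Comparing `mean_majority_share(1.0)` with `mean_majority_share(2.0)` shows the role of the nonlinearity: with n = 1 the two branches tend to end up used in comparable proportions, while with n = 2 most runs end with a clear majority on one branch.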

What is Emergence?

Emergence is the appearance of large-scale collective order that cannot be described completely in terms of the individual system components, e.g., meaning from a collection of words, a society from a collection of individuals, a wave from a collection of particles, a picture from a collection of pixels. The concept of emergence seeks to move beyond pure reductionism without resorting to metaphysical explanations, e.g., in explaining phenomena such as intelligence and life. Complex adaptive systems exhibit spontaneous emergence at many levels of description.

C. Elements of engineering self-organization design and their role in the design of robust intelligent control. Let us consider the main approaches in the engineering philosophy of control design.

Traditional top-down approach:

(1) Consider all possibilities; (2) Develop a very careful design; (3) Thoroughly test the design to verify performance; (4) Implement and test a prototype; (5) Carefully replicate the verified design to ensure reliability. This approach relies on anticipation of all eventualities, meticulous design, thorough testing, and exact replication to obtain the desired level of performance. It works best in well-understood, predictable and relatively simple environments.

Self-organized bottom-up approach:

(1) Provide the basic elements/components needed; (2) Let the components interact among themselves and with the environment to organize through an iterative process of creative exploration and selective destruction. This approach produces good designs by a multi-scale, parallel, intelligent random search through the space of possibilities. It is appropriate (even necessary) for large-scale complex systems operating in complex, dynamic, unpredictable environments, e.g., the real world.
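The iterative loop of creative exploration and selective destruction can be made concrete with a toy example. The sketch below is our own illustration, not the paper's method: a population-based random search in which the constructive process copies random parents with random variation and the destructive process removes the worst candidates to keep the population size fixed. The objective `sphere`, the function name `bottom_up_search` and all parameter values are hypothetical.

```python
import random


def bottom_up_search(fitness, dim=2, pop_size=20, n_children=20,
                     steps=200, sigma=0.3, seed=0):
    """Toy bottom-up search: random construction plus selective destruction.

    Lower fitness is better. Returns (best fitness of the initial
    population, best fitness at the end).
    """
    rng = random.Random(seed)
    # basic components: random candidate structures
    population = [[rng.uniform(-5.0, 5.0) for _ in range(dim)]
                  for _ in range(pop_size)]
    initial_best = min(fitness(p) for p in population)
    for _ in range(steps):
        # constructive process: copy randomly chosen parents with random variation
        children = [[x + rng.gauss(0.0, sigma) for x in rng.choice(population)]
                    for _ in range(n_children)]
        # destructive process: discard the worst structures to make room
        population = sorted(population + children, key=fitness)[:pop_size]
    return initial_best, fitness(population[0])


def sphere(x):
    """Example objective with its optimum at the origin."""
    return sum(v * v for v in x)
```

Because the best candidate is never destroyed, `bottom_up_search(sphere)` can only improve on its starting point; no single candidate is designed, the good design emerges from many cheap trials.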

Key Difference

Top-Down: Every aspect of the system at all levels is carefully designed and evaluated: (a) Non-scalable in cost, time, effort, reliability; (b) Critically dependent on component reliability; (c) Inflexible in response to novel conditions.

Bottom-Up: Only the basic «simple and cheap» components are designed; the rest of the system organizes itself: (a) Inherently scalable; (b) Flexible, robust, versatile, expandable, evolvable.

What do complex adaptive systems buy us?

1. Scalability: The system can grow much larger because no one needs to keep track of everything;

2. Flexibility: The system can change as needed simply by individual agents changing their behavior;

3. Versatility: The system can be used in many different situations without redesign;

4. Expandability: More agents can be added to the system without redesign;

5. Robustness: The system can withstand changes and even the loss of individual agents.

This is a new kind of engineering:

(1) We’re no longer designing the system;

(2) We’re engineering the possibility for the system to arise;

(3) This will work for some applications and not for others.

Why do we need to build complex adaptive systems?

- To obtain systems with attributes such as intelligence, adaptivity, robustness, scalability, and flexibility for operation in complex, dynamic and uncertain environments, e.g., battlefields, disaster areas, hazardous regions, ocean floors, outer space, etc.

- To create very large-scale or fine-grained systems where standard design, control, and analysis methods break down for capacity reasons, e.g., sensor networks with millions of nodes, swarms of microsatellites, etc.

- To control other complex adaptive systems, e.g., traffic networks, communication networks, biological systems, etc.

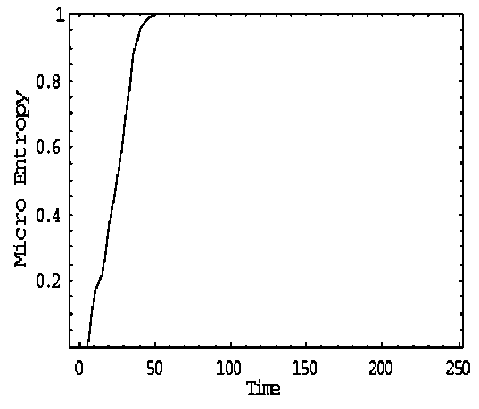

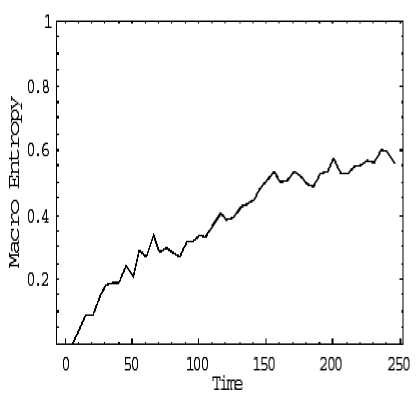

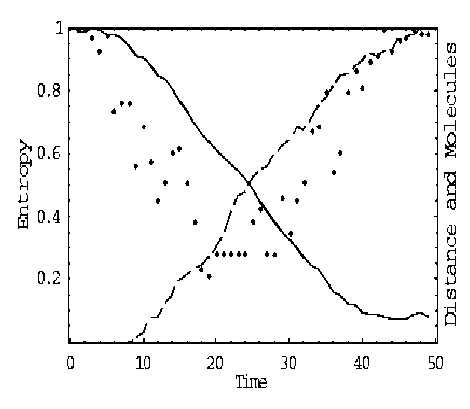

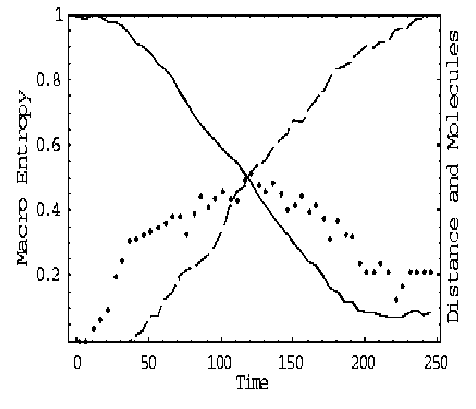

Self-organization may seem to contradict the second law of thermodynamics, which captures the tendency of systems towards disorder. The «paradox» has been explained in terms of multiple coupled levels of dynamic activity (the Kugler-Turvey model; Kugler & Turvey, 1987): self-organization and the loss of entropy occur at the macro-level, while the system dynamics on the micro-level generate increasing disorder [11, 12, 13, 16, 17].

We have considered five examples of self-organization processes in dynamic systems of different scales. These examples help to clarify the main ideas of self-organization and to identify the common components of a quantum control algorithm for self-organization design.

In the next sections, the structure of this quantum design algorithm and its application to the design of robust knowledge bases in intelligent fuzzy controllers (FCs) are considered.

Simulation results are presented for examples of essentially nonlinear dynamic control objects.

Analysis of self-organization process models

The analysis of self-organization models in this section gives the following results.

1. Natural evolution processes are based on the following steps [2–7]:

(i) templating; (ii) self-assembly; and (iii) self-organization.

2. Models of self-organization include natural quantum effects and are based on the following information-thermodynamic concepts:

(i) macro- and micro-level interactions with information exchange (in agent-based models, or ABM, the micro-level is the communication space where inter-agent messages are exchanged, and self-organization is explained by decreased entropy on the macro-level and increased entropy on the micro-level);

(ii) communication and information transport on macro- and micro-levels (“quantum mirage” in quantum corrals);

(iii) different types of quantum spin correlation that produce different structures in self-organization (for example, quantum dots);

(iv) coordination control (swarm-bot and snake-bot).

3. The quantum control algorithm of self-organization developed below [11] is based on quantum fuzzy inference (QFI) models. QFI incorporates these concepts of self-organization and realizes them with the corresponding quantum operators.

Let us consider the parts common to the models of self-organization in natural evolution processes, following Items 1 and 2 of our results above.

Main common parts of Nature’s evolutionary self-organization processes. The main steps of generalized (bio-inspired) self-organization processes are as follows:

- The first step is templating, i.e., the organization of components by a template;

- The second step is self-assembly with control via conformation;

- The third step is self-organization as the collective behavior of interacting (possibly self-assembled) components.

From the description of the qualitative properties of these models we can extract their common parts. The main common parts of self-organization processes (described in detail in [11]) are as follows: (1) the presence of different types of correlations (spatial, temporal, or spatiotemporal); (2) random search in the design of a new structure, in accordance with the initial state and a fixed correlation type; (3) robustness of the final new structure; (4) flexibility of the self-organized structure.

These common parts are used in bio-inspired and man-made self-organization processes (see Fig. 7).

Fig. 7 also shows the structure and the main steps (right column) of bio-inspired self-organization processes in things natural and things man-made.

Let us consider a simple example of natural self-organization with natural operators.

Figure 7. Structure of self-organization processes in things natural and things man-made, and self-organization evolution

Steps of bio-inspired self-organization evolution (right column of Fig. 7): 1. Templating: organization of molecular components by a template; 2. Self-assembly: molecular self-assembly, control via conformation; 3. Self-organization: collective behavior of interactive (possibly self-assembled) components.

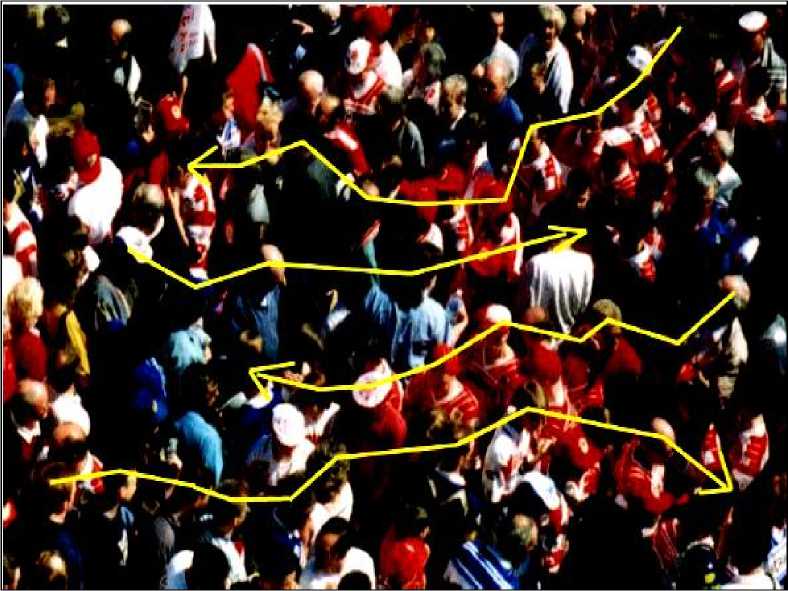

Example: A crowd behaves as an excitable medium during a Mexican wave. The Mexican wave, or La Ola, first widely broadcast during the 1986 World Cup held in Mexico, is a human wave moving along the stands of stadiums as one section of spectators stands up, arms lifting, then sits down as the next section does the same (see Figs 8 and 9a).

Remark. Here we use variants of models originally developed by I. Farkas, D. Helbing and T. Vicsek (1994) for the description of excitable media to demonstrate that this collective human behavior can be quantitatively interpreted by methods of statistical physics. Adequate modelling of reactions to triggering attempts provides a deeper insight into the mechanisms by which a crowd can be stimulated to execute a particular pattern of behavior, and represents a possible tool of control during events involving excited groups of people. Using video recordings, 14 waves in stadiums holding more than 50,000 people were analyzed: the wave has a typical velocity of about 12 m/s (20 seats/s) and a width of about 6-12 m (~15 seats), and it more frequently rolls in the clockwise direction. It is generated by no more than a few dozen people standing up simultaneously and subsequently expands over the entire tribune, acquiring its stable, close-to-linear shape (see the website dedicated to the present work, offering further data and interactive simulations).

The relative simplicity of the Mexican wave allows us to develop a quantitative treatment of this kind of collective behavior by building and simulating models accurately reproducing and predicting the details of the associated human wave. We show here that the well-established approaches to the theoretical interpretation of excitable media – originally created for describing such processes as forest fires or wave propagation in heart tissue – can readily be generalized to include human social behavior.

Figure 8. A human wave moving along the stands of stadiums

Two mathematical simulation models were developed, a minimal one and a more detailed one, to demonstrate the robustness of the self-organization approach.

Model: If the weighted concentration of active people within a radius R around a person exceeds the person’s threshold c_i (randomly chosen from [c - Δc, c + Δc]), the person is activated. Weights decrease exponentially with distance and change linearly with the cosine of the direction, so that people on the left of a person have an influence w0 times as strong as those on the right. This anisotropy, which results from spontaneous symmetry breaking at the early stages (due to anticipation) and from an anisotropy in perception (the majority of people are right-handed), determines the direction of the wave’s motion. In Fig. 9c, parameters are as above, and each point represents the average of 128 simulations.

In analogy with models of excitable media, in both versions people are regarded as excitable units: they can be activated by an external stimulus (a distance- and direction-weighted concentration of nearby active people exceeding a threshold value c). Once activated, each unit follows the same set of internal rules to pass through the active (standing and waving) and refractory (passive) phases before returning to its original, resting (excitable) state. While the simpler version distinguishes only three states (excitable/active/passive) and accounts for variations in individual behavior by means of transition probabilities between the states, the more elaborate version takes into account an actual, deterministic activity pattern in more detail.

The two versions of the model differ in the way stochasticity, i.e., differences and fluctuations in the above behavioral patterns, is represented (for details see …).

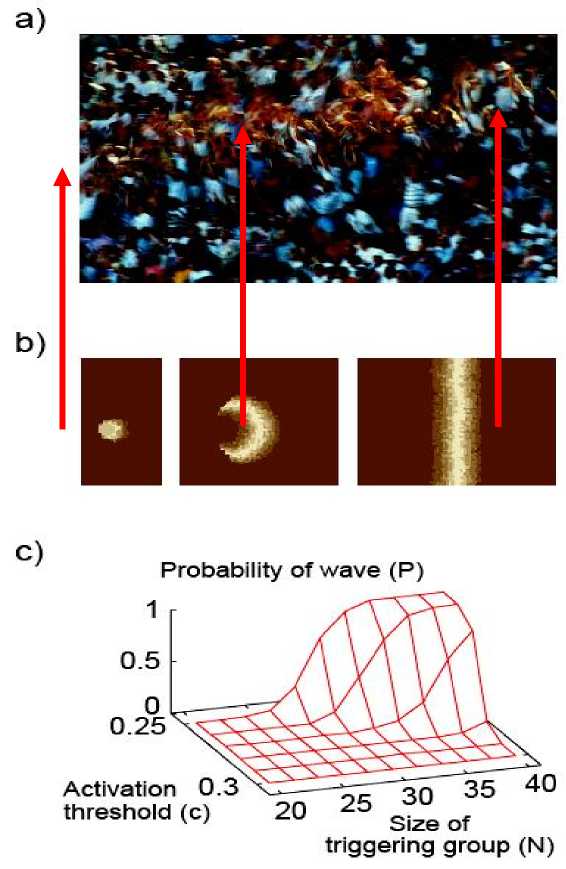

Next, we employed these models to get an insight into the conditions for triggering a wave. Figure 9b shows the evolution of a wave provoked by the simultaneous excitation (standing up) of a small group of units (people). Using parameters deduced from video recordings for the sizes and characteristic times of the phenomenon (interaction radius, reaction/activation times and probabilities) we have been able to reproduce the above described observations concerning the size/form/velocity and stability of the wave.

Figure 9. Photo and simulations of the Mexican wave

(a) Photo of a Mexican wave; (b) snapshots of the n-state model, where, after activation, a person deterministically goes through na active states (stages of standing up) and nr refractory states. The wave is shown 0.5 s, 2 s, and 15 s after the triggering event on a tribune with 80 rows of seats. Brighter shades correspond to higher levels of activity. Parameters are na = nr = 5, c = 0.25, Δc = 0.05, R = 3 and w0 = 0.5; (c) P(N, c), the ratio of successful triggering events, as a function of the number of people N in the group trying to induce a wave and the average threshold c.

Fig. 9c displays the probability of generating a wave when a small group of varying size tries to trigger it under different excitation threshold values.

The results clearly demonstrate that the eventual occurrence of a wave depends on the number of initiators through a rather sharply changing function, i.e., triggering a Mexican wave requires a critical mass. The present approach is expected to have implications for situations where influencing the behavior of a crowd is desirable. In particular, in the context of violent street incidents associated with demonstrations or sport events, it is essential to know under what conditions groups can gain control over the crowd, and how fast and in which form this perturbation/transition can spread.
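The triggering experiment of Fig. 9c can be explored with a small cellular simulation of the n-state model. The sketch below is our own minimal reading of the model description above, not the authors’ code. The following are all assumptions: the exact weight (exp(-d/R) times a factor linear in the cosine of the direction, equal to 1 for a neighbour straight to the right and w0 straight to the left), the simultaneous update, a tribune that wraps around in the seat direction, a default size of 10 rows × 100 seats (smaller than the 80 rows of Fig. 9, for speed), a compact trigger group filled column by column, and the success criterion (at least 90% of spectators activated at some point). The remaining defaults follow the parameters listed for Fig. 9; the function names are hypothetical.

```python
import numpy as np


def neighbour_weights(R=3, w0=0.5):
    """Offsets (dr, dc) with 0 < distance <= R and their (assumed) weights."""
    offsets, weights = [], []
    for dr in range(-R, R + 1):
        for dc in range(-R, R + 1):
            d = np.hypot(dr, dc)
            if 0 < d <= R:
                # 1 for a neighbour straight to the right, w0 straight to the left
                factor = (1 + w0) / 2 + (1 - w0) / 2 * (dc / d)
                offsets.append((dr, dc))
                weights.append(np.exp(-d / R) * factor)
    return offsets, np.array(weights)


def run_wave(n_trigger, c=0.25, delta_c=0.05, R=3, w0=0.5, na=5, nr=5,
             rows=10, cols=100, steps=120, seed=0):
    """Return the fraction of spectators that were active at least once."""
    rng = np.random.default_rng(seed)
    thresholds = rng.uniform(c - delta_c, c + delta_c, size=(rows, cols))
    offsets, weights = neighbour_weights(R, w0)
    total = weights.sum()
    # 0 = resting, 1..na = active (standing), na+1..na+nr = refractory
    state = np.zeros((rows, cols), dtype=int)
    for n in range(n_trigger):               # compact trigger group
        state[n % rows, n // rows] = 1
    ever = state > 0
    for _ in range(steps):
        active = ((state >= 1) & (state <= na)).astype(float)
        conc = np.zeros_like(active)
        for (dr, dc), w in zip(offsets, weights):
            # shifted[r, k] = active[r + dr, k + dc]; seat columns wrap around
            shifted = np.roll(active, (-dr, -dc), axis=(0, 1))
            if dr > 0:
                shifted[-dr:, :] = 0.0       # no seats beyond the last row
            elif dr < 0:
                shifted[:-dr, :] = 0.0       # no seats before the first row
            conc += w * shifted
        conc /= total
        new_state = np.where(state > 0, state + 1, 0)
        new_state[new_state > na + nr] = 0   # refractory people return to rest
        new_state[(state == 0) & (conc > thresholds)] = 1
        state = new_state
        ever |= state > 0
    return float(ever.mean())


def trigger_probability(n_trigger, c, trials=20, success=0.9, **kw):
    """Monte Carlo estimate of P(N, c): share of runs in which the wave spreads."""
    hits = sum(run_wave(n_trigger, c=c, seed=s, **kw) >= success
               for s in range(trials))
    return hits / trials
```

Evaluating `trigger_probability(N, 0.25)` for a range of group sizes N gives a rough analogue of one curve of Fig. 9c. Because the weights and the success criterion are assumed, only the qualitative shape (a sharp, critical-mass dependence on N) should be compared with the published figure.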

Example: The collective behavior of humans within crowds. In our earlier discussion of humans we considered the case where people interact through environmental modification (trail formation), and largely ignored the influence of direct interactions among pedestrians. However, within urban settings individuals can seldom influence their surroundings in this way. Furthermore, when walking down a busy street or corridor, one balances global goal-oriented behavior (the desire to reach a certain point) with local conditions created by the motion and positions of other nearby pedestrians. Each member of such a crowd is likely to have a limited perceptive radius within which the information needed to determine future movement must be gathered. Consequently, larger-scale patterns in crowds are seldom evident from an individual pedestrian’s viewpoint.

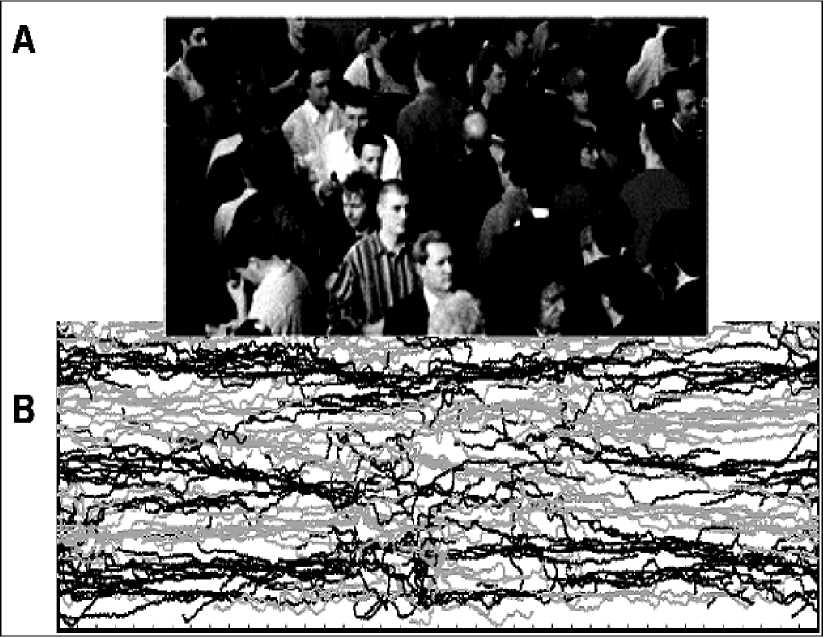

However, viewed from above, crowds often display obvious and consistent patterns. One of the most common can be seen when there is bi-directional traffic, for example when people are trying to move both ways along a walkway, or crossing the road at a crosswalk. Under such circumstances ‘bands’ of pedestrians form, each band composed of a number of pedestrians with a common directional preference (Milgram and Toch, 1969).

Fig. 10a demonstrates this situation.

Remark. The flow of pedestrians under conditions of crowding was likened by Henderson (1971) to the motion of fluids or gases. He used a well-known technique for the mathematical analysis of such materials, the Navier-Stokes equations of fluid dynamics, to simulate a crowd. Although this provides insight into how individual-level (microscopic) properties lead to large-scale (macroscopic) properties, such an approach is difficult to implement, since the conservation of energy and momentum assumed for a physical system does not apply to a biological system in which the individual components are «self-driven». Despite this, Helbing (1992) was able to modify such equations with respect to some of these properties, but analytic solutions proved hard to find. The most promising approach to studying crowd behaviors comes from individual-based modeling.

Figure 10. Simulation of pedestrian dynamics showing lane formation (from Couzin, 1999). The successive positions (trajectories) of individuals with a desire to move to the left are shown in gray; the positions of those individuals intending to move to the right are shown in black.

Influence of repulsion (collision avoidance). Helbing and Molnár (1995) developed a simple individual-based model of pedestrian motion in which they consider people moving in opposite directions along a corridor. This simple geometric representation of space allows the assumption that all individuals want to move only in one direction or the other along the walkway. However, pedestrians will also tend to avoid collisions by decelerating and turning away if they come into close contact with one another. When no other individuals are within a specified local range, individuals tend to accelerate to a desired speed and orient towards their destination. This simple behavioral response alone can account for the formation of bands when there is bi-directional traffic. Individuals meeting others head-on will have «strong» interactions in which they are likely to slow down and move aside to avoid collisions. Initially this occurs frequently. However, individuals that find themselves behind others moving in the same direction are less likely to have to perform such extreme avoidance maneuvers, and in turn they «protect» others behind them from head-on avoidance moves. Given a sufficiently long corridor (and a sufficiently high traffic flow for interactions among pedestrians to be an important factor), the system will self-organize into lanes. Individuals entering the corridor (at random positions) move in the direction perpendicular to their desired direction of travel when they interact with oncoming pedestrians. However, if by chance they fall in behind another individual moving in the same direction, this is a more «stable» state.

Thus the system naturally self-organizes into a situation where pedestrians are in the «slipstream» of others moving in the same direction as themselves, thus creating bands, and reducing movement in the direction perpendicular to desired motion (see, Fig. 10b). Helbing and Molnár (1995) also demonstrated in their model that the number of bands that tend to form scales linearly with the width of the walkway.

This demonstrates that there is a characteristic length scale to the pattern-forming process: from any point in the system, statistically similar motion occurs one wavelength away.
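The repulsion mechanism described above can be explored with a compact simulation. The sketch below is a simplified social-force model under our own assumptions: unit mass, a circular exponential repulsion A·exp(-d/B) between pedestrians and from the two corridor walls (the original 1995 formulation used an elliptical repulsive potential), a corridor that is periodic along x, and illustrative parameter values. Each pedestrian relaxes towards the desired velocity v0·e within the time tau, which reproduces the "accelerate to a desired speed" rule, while the repulsion term produces the deceleration and sidestepping. The function name `social_force_step` is hypothetical.

```python
import numpy as np


def social_force_step(pos, vel, heading, dt=0.05, v0=1.3, tau=0.5,
                      A=2.0, B=0.3, width=5.0, length=50.0):
    """One explicit Euler step of a simplified social-force model (unit mass).

    pos, vel: (n, 2) arrays in metres and m/s; heading: (n, 2) unit vectors,
    here (1, 0) or (-1, 0). The corridor is periodic in x with the given
    length and bounded by walls at y = 0 and y = width.
    """
    # Driving term: relax towards the desired velocity v0 * heading within tau.
    force = (v0 * heading - vel) / tau
    # Pairwise repulsion A * exp(-d / B) along the line joining two pedestrians.
    diff = pos[:, None, :] - pos[None, :, :]
    diff[..., 0] -= length * np.round(diff[..., 0] / length)  # nearest image in x
    dist = np.linalg.norm(diff, axis=-1)
    np.fill_diagonal(dist, np.inf)  # no self-interaction
    unit = diff / dist[..., None]
    force += np.sum((A * np.exp(-dist / B))[..., None] * unit, axis=1)
    # Repulsion from the two walls.
    force[:, 1] += A * np.exp(-pos[:, 1] / B) - A * np.exp(-(width - pos[:, 1]) / B)
    vel = vel + dt * force
    pos = pos + dt * vel
    pos[:, 0] %= length
    return pos, vel
```

Placing a few hundred pedestrians at random, non-overlapping positions, half with heading (1, 0) and half with (-1, 0), and iterating the step a few thousand times is one way to look for lanes like those of Fig. 10; whether and how quickly they appear depends on the density and on the assumed parameters.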

Influence of attraction to other pedestrians. Clearly one does not need to invoke complex individual behavior to explain the banding patterns found in human crowds. The above model shows how individuals would «naturally» occupy space (in the dimension perpendicular to the desired direction of travel) in which others ahead and behind them tend to have a similar direction of motion. It is possible in real crowds, however, that individuals actively (as well as passively) seek such positions. That is, instead of finding such positions by chance, as in the previous model, they will tend to deliberately walk behind individuals moving in the same direction as themselves. For example, Couzin (1999) simulated the motion of pedestrians crossing a road at a crosswalk. Given the type of rules described above, the system requires some time to «find» the collision-minimization state. Consequently, in the crosswalk situation, although some banding does occur, congestion is still relatively high (Fig. 11a). However, if one adds a supplementary rule such that an individual exhibits a propensity to follow other individuals moving in its desired direction, then bands form much more readily, thus reducing head-on collisions and increasing the rate of flow (Fig. 11b). On a crosswalk, such bands begin to form even before the pedestrians moving in different directions meet. Thus the groups act as «wedges» when they come into contact with one another, allowing the bands to interlace more readily when they reach the central area of the walkway. Thus, although attraction is not a necessary condition for bands to form in crowds, it decreases the time taken for bands to develop and increases the flow rate more rapidly than does avoidance alone.

Figure 11. Simulation of pedestrians attempting to move across a crosswalk

(a) When individuals exhibit only repulsion from others, flow is less smooth than (b) when they exhibit both repulsion and attraction towards others with a similar desired direction [gray: individuals intending to move left; black: individuals attempting to move right].

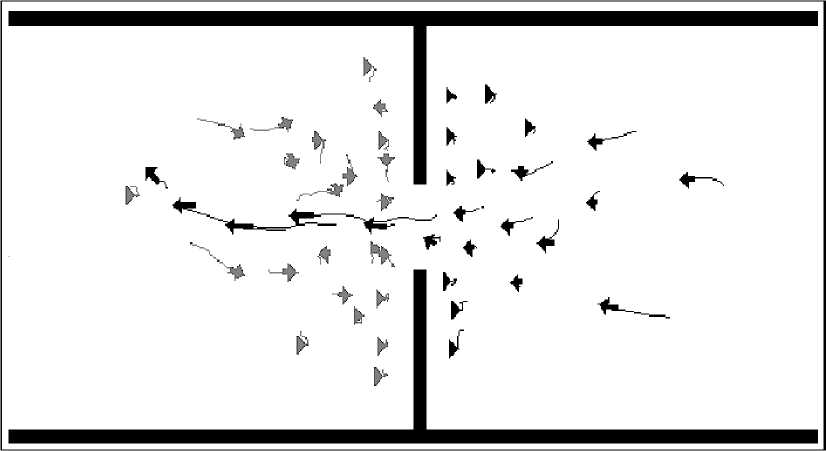

The influence of the geometry of the environment. In these pedestrian models, the geometry of the environment is very simple. However, what happens when one introduces an obstacle into the environment? Helbing and Molnár (1995) investigated how their model behaved when they placed a doorway in the corridor. They found that, given a sufficient density of pedestrians, the passing direction at the doorway oscillates. This occurs because the “pressure” of pedestrians on one side of the door eventually results in an individual being able to make it through the door. This makes it easier for individuals with the same desired direction to follow, resulting in a unidirectional flow of individuals through the doorway, as shown in Fig. 12.

Figure 12. Simulation of pedestrians at a doorway exhibiting oscillations of flow

Remark. In Fig. 12, individuals moving to the left have temporarily monopolized the doorway. The decrease in «pressure» to the right of the door, caused by this exodus, will shortly allow those standing to the left of the doorway to block, and then to temporarily monopolize, the doorway, and so on. Image modified from the simulation results available at …

This reduces the «pressure» of pushing pedestrians at that side of the door, which will then result in a situation where the flow is stopped, and then individuals moving in the other direction are able to pass through (since the «pressure» on their side is now greater), and so on. If the doorway is widened, changes in direction of flow become more rapid. It was also found that, given the same total width of doorway, two half-sized doors near the walls of the corridor increase the rate of flow of pedestrians relative to a single door. This is because, due to the mechanism of band-formation described above, each door becomes used by pedestrians flowing in a common direction for relatively long periods of time. Individuals leaving their respective doorway in one direction «clear» the space ahead of the door for their successors.

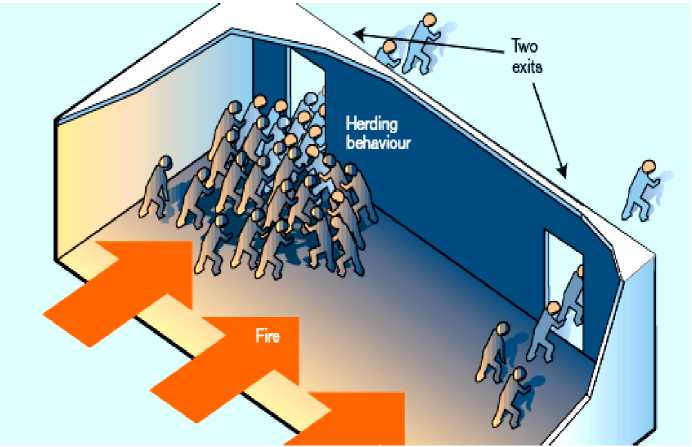

Crowd behavior and emergency situations. Under certain extreme conditions, such as when people are evacuating from a crowded building, panic can result in pedestrians being injured or killed through crushing or trampling. In some cases crushing can occur in the absence of any external factor (e.g. fire), resulting instead from the impatience of queuing individuals who, having predominantly only local information, push forward. The physical interactions among members of a crowd can add up to dangerous pressures of up to approximately 4,450 N m⁻¹, which can cause brick walls to collapse, bend steel barriers, and result in a large number of fatalities (Elliott and Smith, 1993). In an attempt to better understand such collective situations, Helbing et al. [4] extended their models of pedestrian behavior to include a «body force», which counteracts the compression of bodies, and a «sliding friction force», which impedes relative tangential motion within crowds. Furthermore, they assume that, within such crowd situations, people exhibit a greater degree of stochasticity (fluctuations) in their movement, and a higher desired velocity, due to the psychological effects of panic (Kelly et al., 1965). The model showed that increasing either or both of these parameters increased the evacuation time from a building by increasing the degree of interpersonal friction. This resulted in blockages, which occurred especially in the vicinity of bottlenecks. Thus, people fleeing from a building can decrease their chances of survival by attempting to move as fast as possible, or by performing uncoordinated movement through nervousness or panic.
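The body force and sliding friction can be written down explicitly. As we understand the escape-panic model of Helbing et al. (treat the exact form and the parameter values below as assumptions to be checked against [4]), the force of pedestrian j on pedestrian i is f_ij = [A exp((r_ij - d_ij)/B) + k g(r_ij - d_ij)] n_ij + κ g(r_ij - d_ij) Δv^t_ji t_ij. Here r_ij = r_i + r_j is the sum of the body radii, d_ij the distance between the centers, n_ij the unit vector pointing from j to i, t_ij the tangential direction, Δv^t_ji = (v_j - v_i)·t_ij, and g(x) = max(x, 0), so that the body force (k) and the sliding friction (κ) act only when bodies touch. The function below evaluates this force for one pair; its name and the default radii are hypothetical.

```python
import numpy as np

# Assumed parameter values, of the order used in escape-panic simulations.
A = 2.0e3        # N, strength of the psychological repulsion
B = 0.08         # m, range of the repulsion
K_BODY = 1.2e5   # kg s^-2, body force coefficient k
KAPPA = 2.4e5    # kg m^-1 s^-1, sliding friction coefficient kappa


def contact_force(x_i, x_j, v_i, v_j, r_i=0.3, r_j=0.3):
    """Force exerted by pedestrian j on pedestrian i (2-D vectors, SI units)."""
    x_i, x_j, v_i, v_j = (np.asarray(a, dtype=float) for a in (x_i, x_j, v_i, v_j))
    d = np.linalg.norm(x_i - x_j)
    n = (x_i - x_j) / d                 # unit vector pointing from j to i
    t = np.array([-n[1], n[0]])         # tangential unit vector
    overlap = max(r_i + r_j - d, 0.0)   # g(r_ij - d_ij): non-zero only on contact
    dv_t = np.dot(v_j - v_i, t)         # tangential velocity difference
    normal = A * np.exp((r_i + r_j - d) / B) + K_BODY * overlap
    return normal * n + KAPPA * overlap * dv_t * t
```

At a distance of 1 m only the short-range exponential repulsion remains, whereas at 0.5 m the body force and, if the two pedestrians slide past each other, the friction term dominate. This steep growth on contact is what produces the clogging at bottlenecks described above.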

Within conditions where individuals have very restricted information about their local surroundings, such as in a smoke-filled room, Helbing et al. [4] investigated the possibility that people may respond not only individualistically, but also to the motion of individuals near them, which they term a «herding effect». Under such conditions neither pure individualism nor herding behavior performs well. Following just the individualistic rule, the discovery of an exit becomes a largely random process for each individual.

Although herding can result in groups of individuals escaping if an exit is found, it is more likely that the crowd will move in the same, blocked, direction.

However, if people are assumed to use an intermediate strategy combining both individualism and herding, then the rate of escape is maximized, given the assumptions of the model. These models of human crowds are based on a simplified set of plausible interactions, and as such provide useful insights into the general behavior of such groups under a variety of conditions. There is, however, a need for further empirical studies, which are lacking despite the economic and/or social benefits of such research (e.g., in designing facilities so as to reduce risk during evacuation). We encourage initial studies of crowds within relatively simple environments, such as walkways, where an individual’s desired direction of travel can be judged better than, for example, in a crowded street, where motivations may change dynamically and be influenced by many more factors.
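The intermediate strategy can be expressed as a simple mixing rule. A minimal sketch, assuming the form used in Helbing et al.’s escape-panic model as we understand it: each pedestrian’s desired direction is Norm[(1 - p) e_i + p ⟨e_j⟩], where e_i is the individual direction, ⟨e_j⟩ the average direction of nearby pedestrians and p a «panic» (herding) parameter. Then p = 0 is pure individualism and p = 1 pure herding. The function and argument names are hypothetical.

```python
import numpy as np


def desired_direction(own_direction, neighbour_directions, p):
    """Mix individual and herding directions: Norm[(1 - p) e_i + p <e_j>].

    p = 0 gives purely individualistic behaviour, p = 1 pure herding.
    """
    own = np.asarray(own_direction, dtype=float)
    if len(neighbour_directions) == 0:
        mix = own  # nobody visible: keep the individual direction
    else:
        mean_nb = np.mean(np.asarray(neighbour_directions, dtype=float), axis=0)
        mix = (1.0 - p) * own + p * mean_nb
    norm = np.linalg.norm(mix)
    return mix / norm if norm > 0 else own / np.linalg.norm(own)
```

In an escape simulation such a rule would be applied to every pedestrian at each step, with the neighbours taken from a limited perception radius; the maximal escape rate mentioned above corresponds to an intermediate p rather than to either extreme.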

Modelling a crowd composed of discrete individuals rather than a continuous fluid clearly brings added complications. Helbing et al. model «non-fluid» crowd properties, such as the «faster-is-slower» phenomenon, in which people in a rush end up going slower. They also investigate the best evacuation strategy for people in a smoke-filled room (see Fig. 13).

Figure 13. How crowd behavior affects escape from a smoke-filled room

Previous simulations of pedestrian behavior in crowds have used a model based on fluid flow through pipes, but these ignored the actions of individuals. According to the individual-centered model of Helbing et al. [4], the evacuation of pedestrians from a smoke-filled room with two exits can lead to herding behavior and clogging at one of the exits. By contrast, a traditional fluid-flow model would predict the efficient use of both exits. A more individual-centered approach is required to reproduce the behavior of real crowds. Such information can then be used to work out low-risk designs for the width of corridors, the number and position of doors, and the size of areas where people may gather. But these types of study can also provide us with a wider range of possible solutions to crowd problems. The crowd composed of individual people can respond to information directed towards them, to help them choose the most appropriate direction to take or the most appropriate exit to use.

Gathering data during genuine evacuation procedures will always be problematic (practically, and in some cases ethically), but data gathered from practice evacuations may be very useful in testing, and further improving, current models. The importance of such safety issues has been further emphasized by the events of September 11, 2001, when large, highly populated buildings («The World Trade Center» in New York and «The Pentagon» in Washington D.C.), and the streets around these buildings, had to be evacuated.

Remark. The model presented by Helbing et al. is just one of many possible models. To decide whether a particular model is an accurate description of real life, or to determine which model is the «best» for the situation under consideration, requires real data to compare with each model’s predictions. But such data are scarce or non-existent and may be extremely difficult to collect. With any type of mathematical modeling we always have to be careful to distinguish between «real life» and our attempt to model it. Failing to recognize this difference can have serious consequences. But provided we are aware of when it is appropriate to use a particular model, it can provide valuable information to guide the planning process, for construction and for dealing with emergencies. Perhaps perfect safety is unattainable, but improved models of crowd dynamics can help to increase our safety in crowded situations.

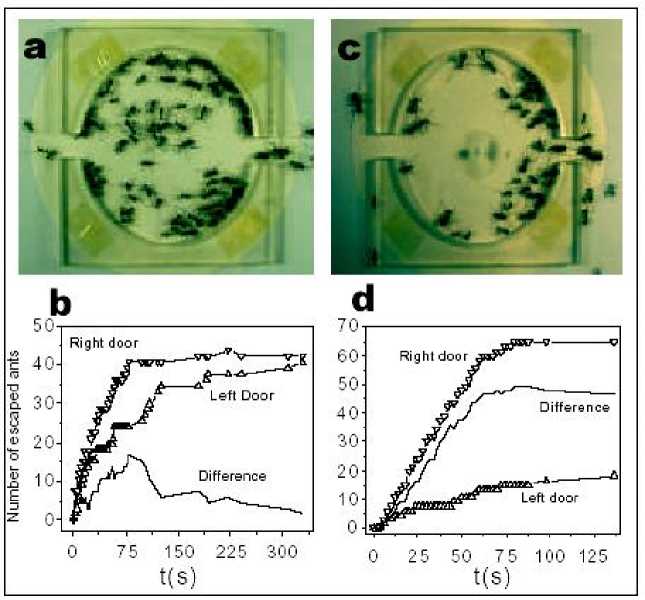

Example. One of the most unexpected phenomena predicted by Helbing et al. is the symmetry breaking in the escape from a room with two identical exits. Here we describe experiments on ants that corroborate this behavior and show that the escape dynamics of ants under controlled panic can serve as a model to study humans in analogous situations. In the first type of experiment (Experiment I), a group of approximately 80 ants of the species Atta insularis, collected from natural nests, was introduced into a circular cell covered by a glass plate, with two exits symmetrically situated at left and right (see Fig. 14a), which were initially blocked.

Figure 14. Escape of ants from a cell with two symmetrically located exits

Experiment details (E. Altshuler, O. Ramos, Y. Nuñez & J. Fernández, Panic-induced symmetry breaking in escaping ants, 2005). The cell consists of an acrylic drum of 80 mm diameter and 5 mm height, with two exits 10 mm wide symmetrically situated at left and right positions. The drum rests on a circular piece of filter paper lying on a horizontal surface, and is covered by a flat glass plate of 3 mm thickness with a hole of 2 mm diameter situated at the center of the drum. Approximately 80 ants of the species Atta insularis are added with both exits blocked by acrylic bars. (a) Ant distribution several seconds after opening the exits at t = 0. (b) Number of ants abandoning the cell as time goes by. (c) Ant distribution several seconds after adding 50 ml of an insect repellent fluid (Citronela, Labiofam, Cuba) through the central hole and then opening the exits at t = 0 (note a circular spot of repellent fluid of approximately 20 mm diameter at the center of the cell); clogging of ants at the right exit is clearly visible. (d) Number of ants abandoning the cell as time goes by, quantitatively demonstrating the symmetry breaking when repellent is added. Small deviations from the ideal circular shape of the repellent spot or from its location at the center of the setup did not produce important effects in the experimental output. Then, we opened the exits synchronously and counted the number of ants abandoning the cell through each exit until it was empty.

Fig. 14b shows the result of a sample run of Experiment I. Although the difference between the numbers of ants using the two exits reaches 15 ants at one stage of the run (roughly 20% of all escaping ants), both exits have been used almost symmetrically by the end.

A very different outcome, however, results from a second kind of experiment (Experiment II). Everything takes place as in Experiment I, with the important difference that, a few seconds before the exits are opened, a dose of 25 or 50 µl of an insect-repellent liquid is rapidly injected into the cell through the hole in the covering glass, producing a disk-shaped spot of the substance at the center of the filter paper on which the whole setup rests (Fig. 14c). A sample output of Experiment II is shown in Fig. 14d. Unlike in Experiment I, one exit is always used much more than the other, which can be described as symmetry breaking induced by the «panic» caused by the repellent. In the run depicted in Figs 14c,d, the difference in the use of the two exits reached nearly 50 ants at the end of the experiment (around 60% of all escaping ants). Besides symmetry breaking, a second difference between Experiments I and II, clearly seen in Figs 14b,d, is that the total escape time is much shorter in the latter, probably because the «desired velocity» of the ants increases under the effect of the repellent. Similar results were observed in several runs of Experiments I and II: on average, the difference in use between the two exits at the end of the experiment was 12% for Experiment I and 51% for Experiment II. The «preferred exit» in Experiment II was either the left or the right one, with no connection to any source of asymmetry in the experimental setup or to the spatial distribution of ants inside the cell before the exits were opened.
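The asymmetry statistic quoted above (the difference in exit use as a percentage of all escaping ants, averaged over runs) can be written as a small helper. This is a minimal sketch; the function names are hypothetical, and the 65/15 split in the usage line is an illustrative split of 80 ants with a 50-ant difference, not counts reported by the experiment.

```python
from statistics import mean


def exit_asymmetry(left: int, right: int) -> float:
    """Difference in exit use as a percentage of all escaping ants."""
    total = left + right
    if total == 0:
        raise ValueError("no ants have escaped")
    return 100.0 * abs(left - right) / total


def mean_asymmetry(runs) -> float:
    """Average asymmetry over several runs given as (left, right) pairs."""
    return mean(exit_asymmetry(left, right) for left, right in runs)


if __name__ == "__main__":
    # Hypothetical 80-ant run with a 50-ant difference (cf. "nearly 50 ants").
    print(exit_asymmetry(65, 15))  # 62.5
```

With 80 ants, a 50-ant difference corresponds to 62.5%, consistent with the "around 60%" quoted for the run in Figs 14c,d.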

The experimental results were also quite similar when using ants collected from the same nest, or from different nests no more than 20 meters apart. They were also similar when the experiments were repeated with the same group of ants.

These results are consistent with the theoretical predictions reported by Helbing et al., who defined a «panic parameter» that induces individualistic behavior (each pedestrian tends to find an exit on his or her own) when low, and herding behavior (pedestrians tend to follow the crowd) when high. In their simulations of a room with two exits, a high value of the panic parameter produces jamming at one of the exits, resulting in inefficient escape.
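A deliberately minimal toy model can illustrate how a single «panic» parameter that trades individual choice against herding produces symmetry breaking. This is not the social-force model of Helbing et al.: the sequential escape order, the "follow the current majority" rule and all function names are hypothetical assumptions made for illustration.

```python
import random
from statistics import mean


def simulate_escape(n_ants: int, panic: float, rng: random.Random) -> tuple[int, int]:
    """Toy sequential escape through two exits; returns (left, right) counts.

    Hypothetical rule: with probability `panic` an ant herds, i.e. it takes the
    exit already used by more escapees; otherwise (or when the counts are tied)
    it picks an exit uniformly at random.
    """
    counts = [0, 0]  # [left, right]
    for _ in range(n_ants):
        if rng.random() < panic and counts[0] != counts[1]:
            choice = 0 if counts[0] > counts[1] else 1  # follow the crowd
        else:
            choice = rng.randrange(2)  # individualistic, unbiased choice
        counts[choice] += 1
    return counts[0], counts[1]


def mean_asymmetry_percent(n_ants: int, panic: float, runs: int, seed: int = 0) -> float:
    """Average |left - right| as a percentage of n_ants over independent runs."""
    rng = random.Random(seed)
    results = []
    for _ in range(runs):
        left, right = simulate_escape(n_ants, panic, rng)
        results.append(100.0 * abs(left - right) / n_ants)
    return mean(results)


if __name__ == "__main__":
    for p in (0.0, 0.5, 0.9):
        print(f"panic={p:.1f}: mean asymmetry {mean_asymmetry_percent(80, p, 500):.1f}%")
```

Low `panic` leaves only binomial fluctuations between the exits, while high `panic` drives almost all ants through one randomly selected exit. This mirrors the qualitative contrast between Experiments I and II, but the numbers are properties of the toy rule, not fits to the ant data.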

This suggests that their panic parameter is related to the effect of the repellent used in the experiments. In spite of the huge behavioral differences between humans and ants under normal conditions [10], the experiments suggest that some features of the collective behavior of both species can be strikingly similar when escaping under panic.

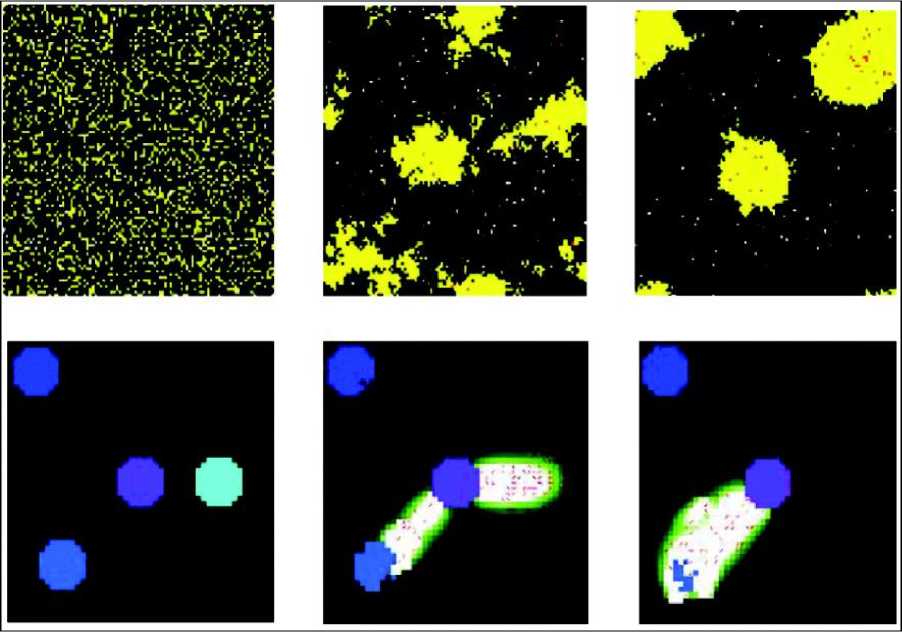

Applications of swarm intelligence (SI) and ant colony self-organization. Wasps, bees, ants and termites all make effective use of their environment and resources by displaying collective SI. Termite colonies, for instance, build nests with a complexity far beyond the comprehension of the individual termite, while ant colonies dynamically allocate labor to vital tasks such as foraging or defense without any central decision-making. Recent research suggests that microbial life can be even richer: bacteria are highly social, intricately networked and teeming with interactions. What is striking about these observations is that both ant colonies and bacteria rely on similar natural mechanisms, based on stigmergy and self-organization, to produce coherent and sophisticated patterns of global foraging behavior. With these characteristics in mind, a Self-Regulated Swarm (SRS) algorithm was proposed. It hybridizes the advantageous characteristics of SI, such as the emergence of a societal environmental memory or cognitive map via collective pheromone laying in the landscape (which balances exploration and exploitation in the dynamic search strategy), with a simple evolutionary mechanism that, through a direct reproduction procedure linked to local environmental features, self-regulates the exploratory swarm population and speeds it up globally. To test its adaptive response and robustness, SRS was applied to several dynamic multimodal complex functions as well as to dynamic optimization control problems, measuring reaction speed and performance. Final comparisons were made with standard genetic algorithms, bacterial foraging strategies and recent co-evolutionary approaches. SRS demonstrated quick adaptive responses while outperforming the other approaches.
Additionally, several notable behaviors were found. SRS was able to maintain a number of different solutions while adapting to unforeseen situations, even when, within the same cooperative foraging period, the community was required to deal with two different and contradictory purposes. It could spontaneously create and maintain different subpopulations on different peaks, giving rise to distinct exploratory corridors with intelligent path-planning capabilities. It could also request new agents (division of labor) during periods of dramatic change while economizing foraging resources during periods of intermediate stabilization. Finally, the results show that the SRS collective swarm of bio-inspired ant-like agents is able to track about 65% of moving peaks traveling up to ten times faster than the velocity of a single individual in the swarm. This emergent behavior is probably one of the most interesting results achieved.
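The two ingredients of SRS described above, stigmergic movement on an evaporating pheromone field and a reproduction rule that regulates the population, can be illustrated with a minimal sketch. The 1-D ring landscape, the fitness function, the movement rule, the birth/death rule and all parameter values are hypothetical choices for illustration; this is not the published SRS algorithm.

```python
import random


def fitness(x: int, size: int, peak: int) -> float:
    """Hypothetical single-peak landscape on a ring of `size` cells."""
    d = abs(x - peak)
    d = min(d, size - d)  # distance measured around the ring
    return 1.0 / (1.0 + d)


def srs_sketch(size=100, peak=70, n_start=30, n_min=10, n_max=120,
               steps=200, evaporation=0.1, birth_p=0.2, death_p=0.2, seed=0):
    """Return final ant positions and pheromone field of a toy self-regulated swarm."""
    rng = random.Random(seed)
    pheromone = [0.0] * size
    ants = [rng.randrange(size) for _ in range(n_start)]
    for _ in range(steps):
        # 1) Stigmergic move: step left, stay or step right, weighted by pheromone
        #    (+1 so that unmarked cells remain reachable).
        moved = []
        for x in ants:
            options = [(x - 1) % size, x, (x + 1) % size]
            weights = [1.0 + pheromone[o] for o in options]
            moved.append(rng.choices(options, weights=weights)[0])
        ants = moved
        # 2) Deposit pheromone proportional to local fitness, then evaporate.
        for x in ants:
            pheromone[x] += fitness(x, size, peak)
        pheromone = [(1.0 - evaporation) * p for p in pheromone]
        # 3) Self-regulation: above-average ants may reproduce, below-average may die.
        f_vals = [fitness(x, size, peak) for x in ants]
        mean_f = sum(f_vals) / len(f_vals)
        next_ants = []
        for x, f in zip(ants, f_vals):
            if f >= mean_f:
                next_ants.append(x)
                if rng.random() < birth_p:
                    next_ants.append(x)  # offspring starts on the parent's cell
            elif rng.random() >= death_p:
                next_ants.append(x)  # below-average ant survives this step
        if not next_ants:  # guard against round-off marking every ant below average
            next_ants = [ants[f_vals.index(max(f_vals))]]
        # Keep the population within [n_min, n_max].
        if len(next_ants) < n_min:
            next_ants += rng.choices(next_ants, k=n_min - len(next_ants))
        if len(next_ants) > n_max:
            next_ants = rng.sample(next_ants, n_max)
        ants = next_ants
    return ants, pheromone


if __name__ == "__main__":
    ants, _ = srs_sketch()
    near = sum(1 for x in ants if min(abs(x - 70), 100 - abs(x - 70)) <= 5)
    print(f"{len(ants)} ants, {near} within 5 cells of the peak")
```

The landscape here is static. Moving `peak` between steps would be the natural next experiment for probing the peak-tracking behavior described above, but that is not implemented in this sketch.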

Example. Networks that control the flow of resources and information are ubiquitous in nature. Moreover, the efficiency of such networks may determine the fundamental scaling properties of certain organisms. The foraging networks of terrestrial animal societies, and especially those of certain ant species, provide unrivalled opportunities to quantify both the behavior of individual items of traffic and the larger-scale patterns of traffic flow. For these reasons, they are ideal subjects with which to test mathematical models that link the behavior of small components (in this instance, individual ants) to the overall efficiency of the dynamic structures they generate.

Many ant species create chemical (pheromone) trail networks, not only to transport resources and/or information swiftly and efficiently during foraging, but also for exploration, emigration and coordinating colony defense (Hölldobler & Wilson 1990). Just as the functioning and success of modern cities depend on an efficient transportation system, the effective management of traffic is also essential to insect societies. The flow of traffic along trails is likely to be particularly important in the New World army ant Eciton burchelli. Colonies of this species may have half a million or more workers, and the ants are strict carnivores. They stage huge swarm raids in pursuit of arthropod prey, with up to 200 000 virtually blind foragers forming trail systems that are up to 20 m wide and 100 m or more long (Schneirla 1971; Franks et al. 1991; Gotwald 1995; Solé et al. 2000). In a single such raid a colony may retrieve more than 30 000 prey items (Franks 1985). Moreover, these massive raids are severely time constrained. At most, they begin at dawn and end at dusk, when the colony emigrates, under the cover of darkness, to a new nest site and foraging arena.

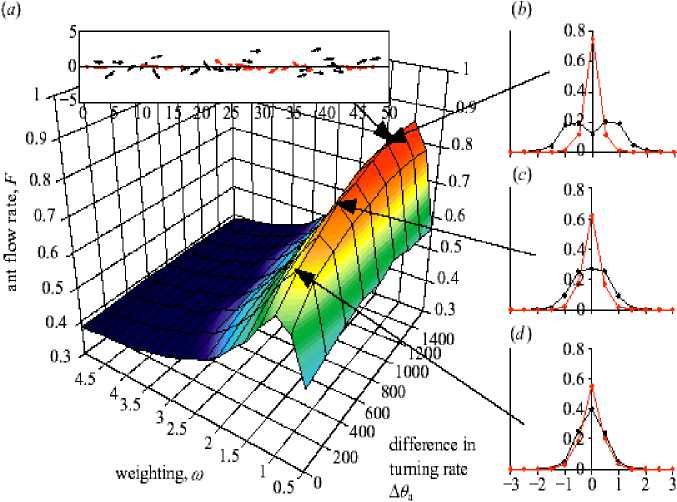

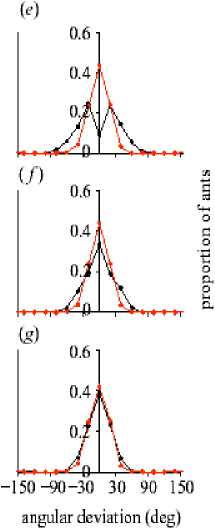

For this reason, E. burchelli colonies need to operate at a very high tempo (see Franks et al. 1999; Boswell et al. 2001). These colonies form traffic lanes in their main foraging columns (Franks 1985). How and why these traffic lanes form was investigated, and it was shown how the movement rules of individual ants on trails can lead to a collective choice of direction and to the formation of distinct traffic lanes that minimize congestion. A new model was developed and evaluated against a quantitative study of the behavior of the army ant Eciton burchelli. Colonies of this species have up to 200 000 foragers and transport more than 3000 prey items per hour along raiding columns that exceed 100 m. It is an ideal species in which to test the model's predictions because it forms pheromone trails densely populated with very swift ants. The model explores the influence of turning rates and local perception on traffic flow. Real army ants behave in such a way that they occupy the specific region of parameter space in which lanes form and traffic flow is maximized.
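The two transport figures quoted in this example can be checked against each other. Assuming a raid lasts roughly 10 to 12 hours between dawn and dusk (an assumption; the text states only that raids are bounded by dawn and dusk), a rate of 3000 prey items per hour gives

```latex
3000\,\frac{\text{items}}{\text{h}} \times 10\,\text{h} = 3.0\times 10^{4}\ \text{items},
\qquad
3000\,\frac{\text{items}}{\text{h}} \times 12\,\text{h} = 3.6\times 10^{4}\ \text{items},
```

which is of the same order as the more than 30 000 prey items retrieved in a single raid.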

Remark. Lane formation is also known to emerge spontaneously in human crowds under certain conditions. Where there is bidirectional traffic (such as on a walkway or crosswalk), «bands» of pedestrians can form, each composed of pedestrians with a common directional preference (Milgram & Toch 1969). These large-scale patterns are seldom evident to an individual pedestrian, who typically has only a limited perceptive radius within which to gather the information that determines future movement. Like army ants, pedestrians in crowds balance goal-oriented behavior (the desire to reach a destination) against local conditions created by the motion and positions of nearby pedestrians (avoidance of collisions). It is the balance of these «social forces» that results in lane formation. Individuals meeting others head-on have «strong» interactions in which they are likely to slow down and turn away to avoid collisions. Individuals who find themselves behind others moving in the same direction are less likely to perform such extreme avoidance maneuvers and, in turn, «protect» those behind them from head-on avoidance moves, increasing the flow rate. Thus, given a sufficient density, pedestrians spontaneously form lanes (Helbing & Molnár 1995). The number of lanes that form in human crowds scales linearly with the width of the walkway (Helbing & Molnár 1995). There is therefore a characteristic length scale to this pattern-forming process: from any point in the system, statistically similar motion occurs one wavelength (one lane width) away in the direction perpendicular to the desired motion. This contrasts with the fixed three-lane system of army ants, which results from the asymmetry in interactions (absent in human crowds) combined with a tendency of all ants to move towards the highest pheromone concentration. A further difference between ants and humans is that pedestrians can typically be expected to behave selfishly.
That is, they tend to minimize their own travel time, possibly at the cost of others. An army ant colony, however, is composed of cooperative individuals, so natural selection can build an adaptive pattern at the global level by selecting and modifying the individual rules that encode collective patterns. The asymmetry in interactions revealed by the model is therefore likely to have been selected for. There is, however, another potential explanation that is not mutually exclusive: many ants returning to the nest are burdened with prey, which may make them less maneuverable than unladen ants leaving the nest.

Why do army ants have a three-lane structure rather than one with just two lanes?

Let us consider two models of the movement behavior of ants.


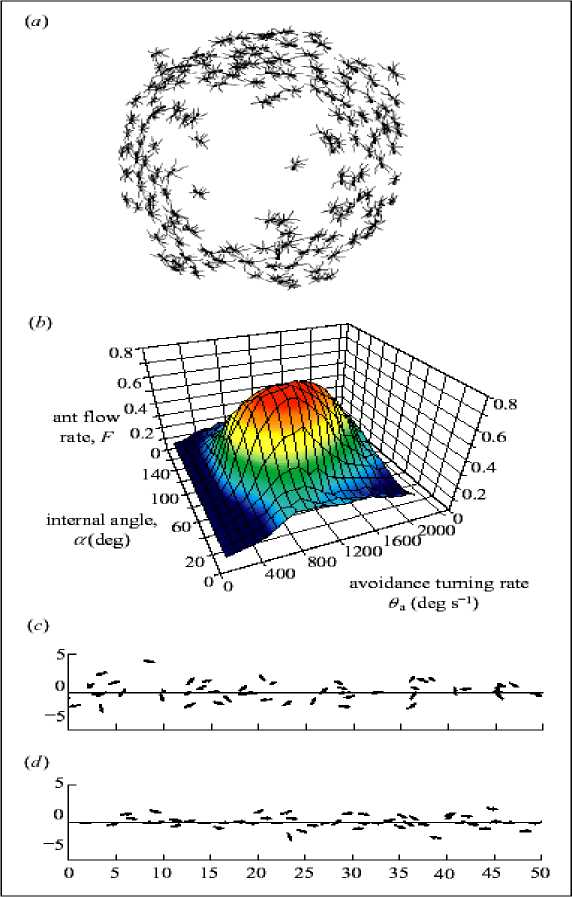

(i) Collective selection of a direction. The collective properties of the model during the generation of spatial patterns over a short but representative section of trail (equivalent to 50 cm) are investigated. Army ants are not only good at following trails but also tend to form circular mills when moderate numbers are separated from a colony and restricted to a confined area, either in the laboratory (see Figure 15a) or naturally in the field during exceptionally severe rainstorms (Schneirla 1971; Franks et al. 1991; Gotwald 1995).

Figure 15. Circular milling

(a) Drawing of ants forming a circular mill in the laboratory (adapted from Schneirla 1971 by I.D.C.);

(b) The flow of ants depends on their ability to detect others and on the rate at which they turn during avoidance maneuvers (N = 50, θp = 500° s⁻¹, σ = 0.01, Q = 1.2 × 10⁻⁶ g cm⁻³, C^ = 1.2 × 10⁻⁶ g cm⁻³, τ = 300 s; F was calculated at t = 5000, and the results shown are the means of 100 runs per parameter combination); (c) ants begin the simulation at random positions and with orientations along the trail; snapshot near the start of a simulation (t = 50) with α = 90° and θa = 1000° s⁻¹; ants are depicted as arrows representing their instantaneous velocity (units: cm); (d) the simulated ants have selected a direction collectively (t = 3000).

After a period of disorder, the ants all begin moving in the same direction. This behavior is likely to reflect the ability of army ant colonies to select a raid direction collectively. Periodic boundary conditions, which make the simulation very similar to a circular mill, are used to investigate how the model parameters influence the collective behavior of ants on the trail section.

The flow of simulated ants, F (measured as the normalized magnitude of the sum of the velocities of all individuals; values close to 0 represent little flow in any given direction, and values close to 1 represent high flow in one direction), is strongly influenced by the avoidance turning rate, θ, and by the internal angle of the perception zone ahead of an ant, α (see Fig. 15b). High flow rates occur when the ants select a direction collectively (see Figs 15c,d). When θ is low, ants require repeated collisions with others before their trajectory is influenced strongly enough for them to turn around. This causes congestion, because ants turn slowly when they detect others and consequently must slow down to avoid collisions. Conversely, when θ is very high, ants change direction very quickly whenever others are detected, and their orientations do not become correlated. Similarly, when α is low, ants cannot adequately detect others ahead of them and are thus relatively insensitive to the positions of others. If α is high, however, they spend much of their time avoiding individuals with which they would be unlikely to collide. At intermediate values of these parameters, ants are sufficiently, but not overly, sensitive to the positions of others and can consequently select a direction collectively (hereafter α = 90°). In this region of parameter space, stochastic fluctuations in the number of ants traveling in each direction lead to one direction becoming more common than the other. This imbalance triggers an autocatalytic behavioral response, as ants opposing the main flow are forced to turn around through repeated head-on interactions. As more ants move in one direction, it becomes increasingly hard for individuals to move the opposite way, and the ants collectively select a (randomly determined) direction.
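The order parameter F and the autocatalytic mechanism described above can be illustrated with a deliberately simplified sketch. `flow` assumes that the sum of velocities is normalized by the sum of individual speeds. `direction_selection` is a hypothetical non-spatial toy: random encounters replace the perception zone of angle α, and a fixed turning probability replaces the turning rate θ. It is not the spatial model behind Fig. 15.

```python
import math
import random


def flow(velocities):
    """Normalized magnitude of the summed velocity vectors (vx, vy).

    Assumption: normalized by the sum of individual speeds, so F = 1 when all
    agents move the same way and F = 0 when opposing motions cancel out.
    """
    sx = sum(vx for vx, _ in velocities)
    sy = sum(vy for _, vy in velocities)
    total_speed = sum(math.hypot(vx, vy) for vx, vy in velocities)
    return math.hypot(sx, sy) / total_speed if total_speed else 0.0


def direction_selection(n_ants=50, encounters=3, turn_p=0.5, steps=300, seed=0):
    """Toy model of autocatalytic direction choice on a trail; returns F per step.

    Each ant moves at unit speed, heading +1 or -1 along the trail. At every
    step it meets `encounters` randomly chosen nestmates; if most of them are
    heading the other way (head-on interactions), it turns round with
    probability `turn_p`. All ants are updated synchronously.
    """
    rng = random.Random(seed)
    heading = [rng.choice((-1, 1)) for _ in range(n_ants)]
    history = []
    for _ in range(steps):
        new_heading = heading[:]
        for i in range(n_ants):
            partners = rng.sample([j for j in range(n_ants) if j != i], encounters)
            head_on = sum(1 for j in partners if heading[j] != heading[i])
            if head_on > encounters // 2 and rng.random() < turn_p:
                new_heading[i] = -heading[i]  # forced to turn by the oncoming flow
        heading = new_heading
        history.append(flow([(h, 0.0) for h in heading]))
    return history


if __name__ == "__main__":
    f = direction_selection()
    print(f"F after 1 step: {f[0]:.2f}, after {len(f)} steps: {f[-1]:.2f}")
```

Because an ant turns only when head-on encounters outnumber the others, the minority direction loses ants faster than the majority does, so a small random imbalance grows until F reaches 1. This is the autocatalytic response described above, in a form stripped of all spatial detail.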

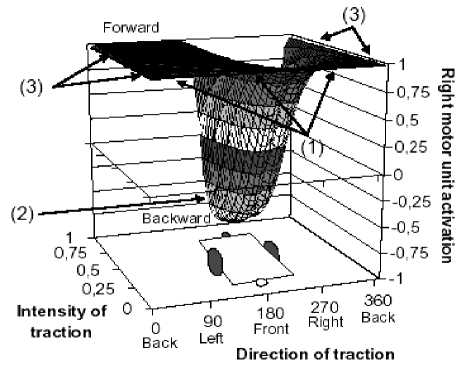

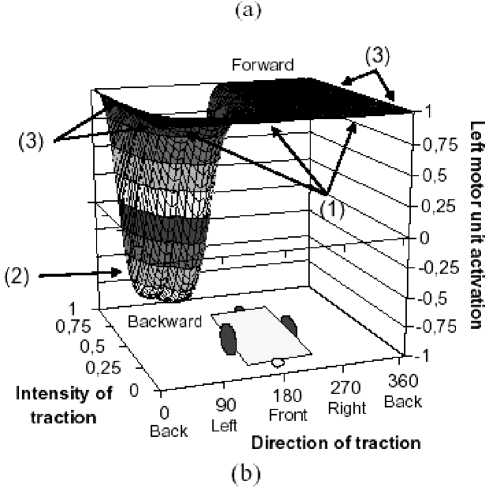

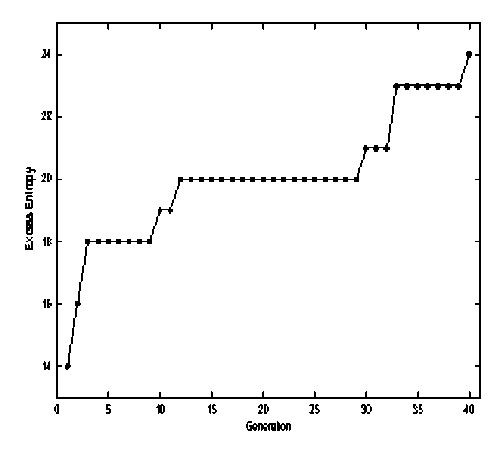

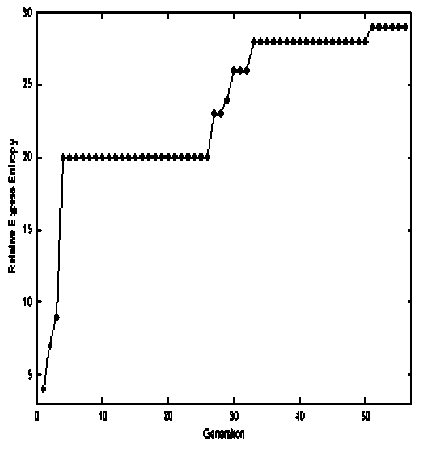

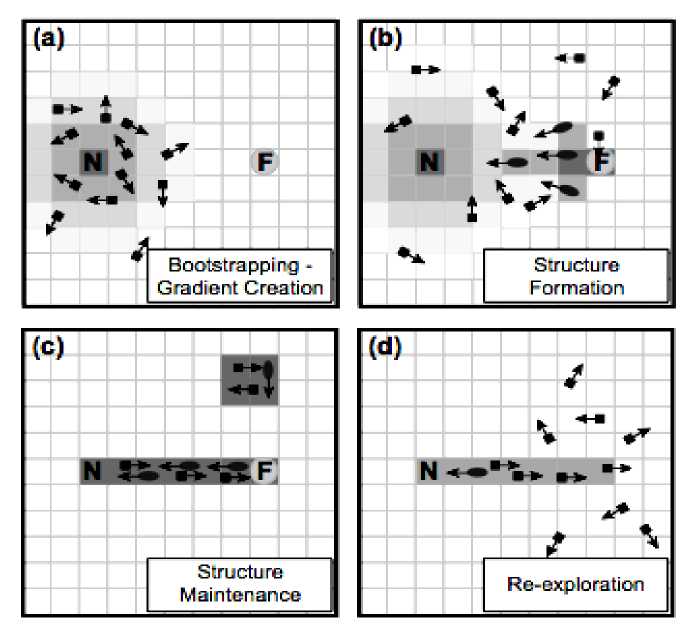

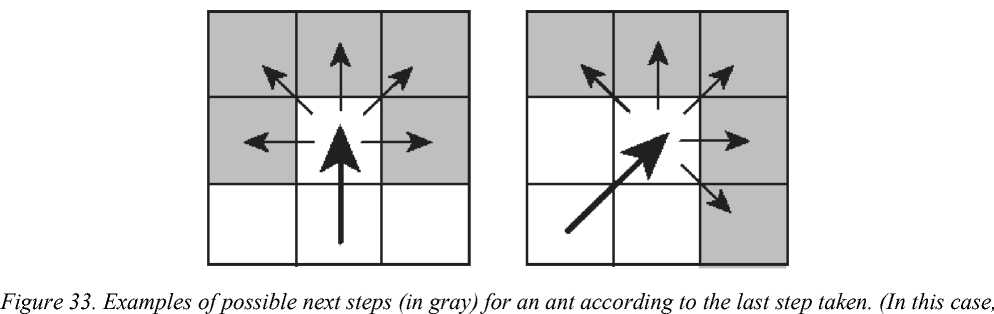

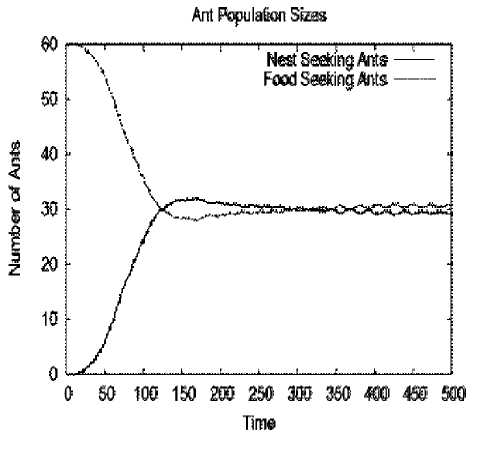

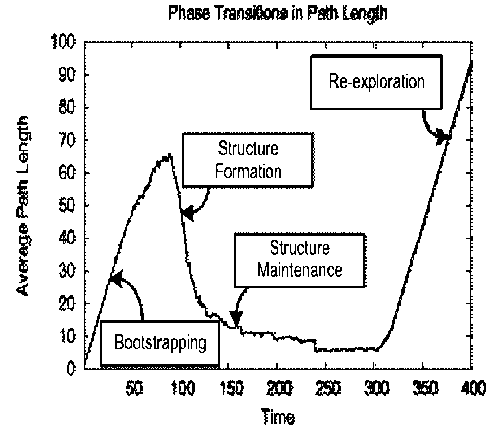

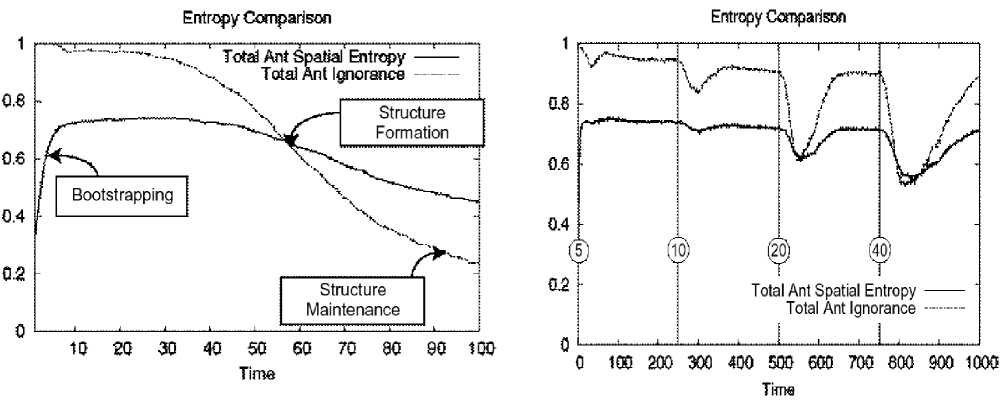

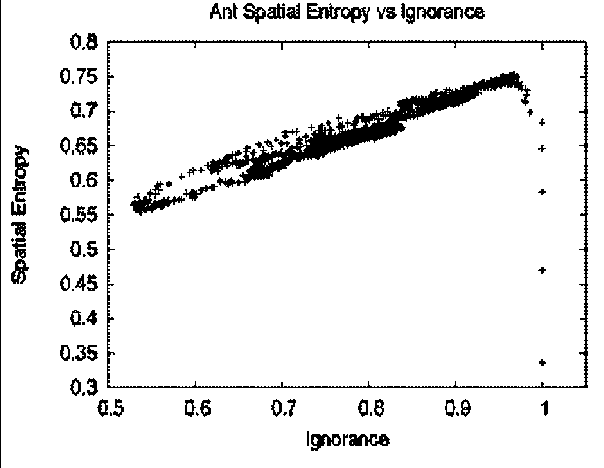

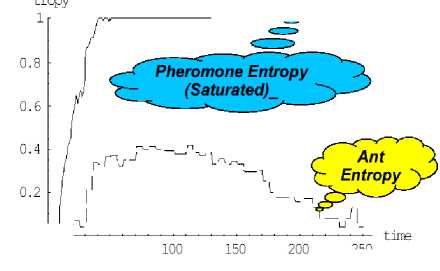

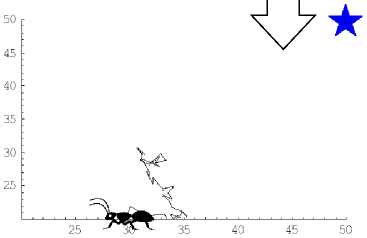

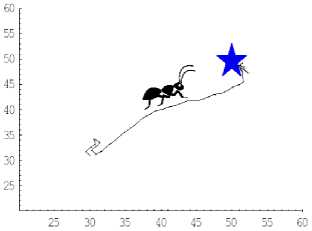

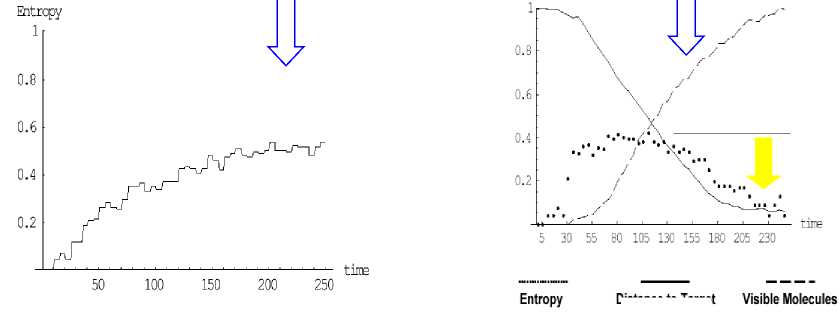

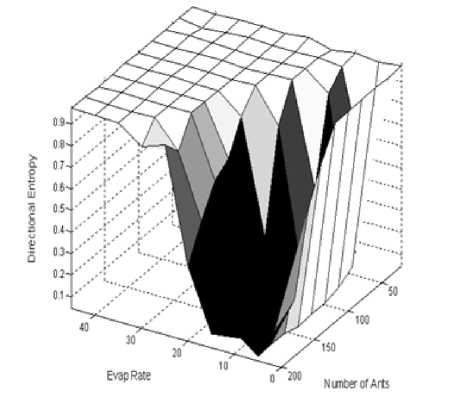

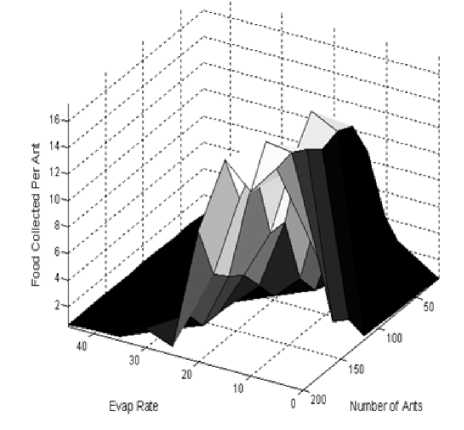

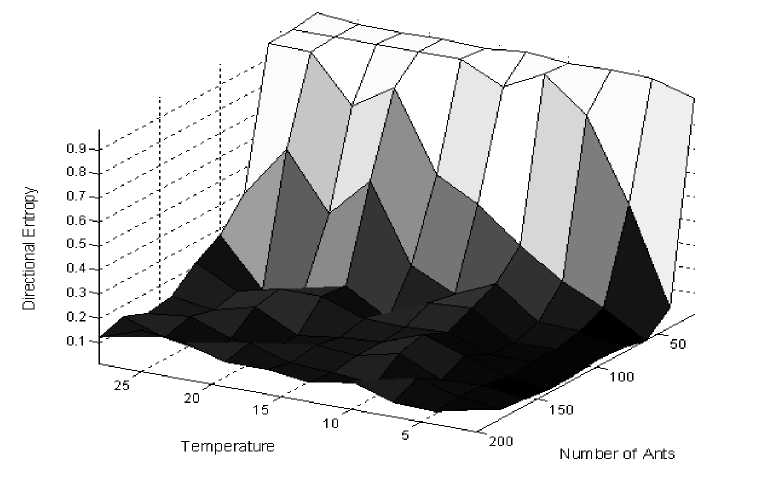

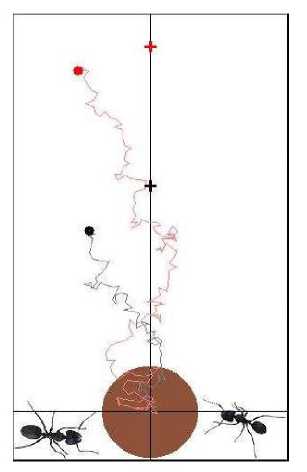
