Satellite Image Classification and Segmentation by Using JSEG Segmentation Algorithm

Authors: Khamael Abbas, Mustafa Rydh

Journal: International Journal of Image, Graphics and Signal Processing (IJIGSP)

Published in: Issue 10, Vol. 4, 2012.

Free access

In this paper, an adopted approach to fully automatic satellite image segmentation, called JSEG, is presented. First, the colors in the image are quantized to represent the different regions in the image. Then the image pixel colors are replaced by their corresponding color class labels, thus forming a class-map of the image. A criterion for “good” segmentation using this class-map is proposed. Applying the criterion to local windows in the class-map results in the “J-image”, in which high and low values correspond to possible region boundaries and region centers, respectively. A region growing method is then used to segment the image based on the multi-scale J-images. Experiments show that JSEG provides good segmentation and classification results on a variety of images.

Image segmentation, Image classification, JSEG Algorithm

Short URL: https://sciup.org/15012449

IDR: 15012449

I. Introduction

Image classification is an important part of remote sensing, image analysis, and pattern recognition. In some instances, the classification itself may be the object of the analysis. For example, classification of land use from remotely sensed data produces a map-like image as the final product of the analysis. Image classification therefore forms an important tool for the examination of digital images. The term classifier refers loosely to a computer program that implements a specific procedure for image classification. The analyst must select the classification method that will best accomplish a specific task. At present, it is not possible to state which classifier is best for all situations, because the characteristics of each image and the circumstances of each study vary so greatly. It is therefore essential that each analyst understand the alternative strategies for image classification, so that he or she is prepared to select the most appropriate classifier for the task at hand. Different image classification procedures are used for different purposes by various researchers. These techniques fall into two main groups: supervised and unsupervised classification. Supervised classification in turn includes several methods, such as the parallelepiped, maximum likelihood, minimum distance, and Fisher classifier methods [1].

Color image segmentation is useful in many applications. From the segmentation results, it is possible to identify regions of interest and objects in the scene, which is very beneficial to subsequent image analysis or annotation. The problem of segmentation is difficult because of image texture. Some of the practical applications of image segmentation are [2]: medical imaging (locating tumors and other pathologies), locating objects in satellite images (roads, forests, etc.), recognition, traffic detection, and machine vision. If an image contains only homogeneous color regions, clustering methods in color space are sufficient to handle the problem. In reality, natural scenes are rich in color and texture, and it is difficult to identify image regions containing color-texture patterns. The approach taken in this work assumes the following:

1. Each region in the image contains a uniformly distributed color-texture pattern.

2. The color information in each image region can be represented by a few quantized colors, which is true for most color images of natural scenes.

3. The colors between two neighboring regions are distinguishable, a basic assumption of any color image segmentation algorithm.

II. Image Classification

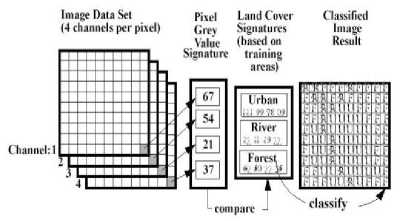

The intent of the classification process is to categorize all pixels in a digital image into one of several land cover classes, or "themes". This categorized data may then be used to produce thematic maps of the land cover present in an image. Normally, multispectral data are used to perform the classification, and the spectral pattern present within the data for each pixel is used as the numerical basis for categorization. The objective of image classification is to identify and portray the features occurring in an image in terms of the object or type of land cover these features actually represent on the ground, as shown in Figure 1. Image classification is perhaps the most important part of digital image analysis. An image showing a multitude of colors that illustrate various features of the underlying terrain may look attractive, but it is of little use unless we know what the colors mean. There are two main classification methods: supervised classification and unsupervised classification [3].

Figure 1. Classification image

A. Supervised Classification [3]

With supervised classification, we identify examples of the information classes (i.e., land cover types) of interest in the image. These are called "training sites", as shown in Figure 2. The image processing software system is then used to develop a statistical characterization of the reflectance for each information class. This stage is often called "signature analysis" and may involve developing a characterization as simple as the mean or the range of reflectance in each band, or as complex as detailed analyses of the means, variances, and covariances over all bands. Once a statistical characterization has been achieved for each information class, the image is classified by examining the reflectance of each pixel and deciding which of the signatures it resembles most.
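The signature-analysis and decision steps described above can be illustrated with a minimal sketch. It assumes the simplest signature mentioned in the text (the per-band mean of each class) and a minimum-distance decision rule; the function names, the `training_mask` encoding, and the toy image are illustrative assumptions, not part of the original work.

```python
import numpy as np

def class_signatures(image, training_mask, n_classes):
    """Mean reflectance per band for each information class.

    image: (H, W, B) float array. training_mask: (H, W) int array holding a
    class index in [0, n_classes) at training sites and -1 elsewhere.
    """
    return np.stack([image[training_mask == k].mean(axis=0)
                     for k in range(n_classes)])

def classify_min_distance(image, signatures):
    """Assign every pixel to the class whose mean signature is nearest."""
    # (H, W, 1, B) minus (1, 1, K, B) broadcasts to (H, W, K, B).
    diff = image[:, :, None, :] - signatures[None, None, :, :]
    dist = np.linalg.norm(diff, axis=-1)
    return dist.argmin(axis=-1)

# Toy 2-band image: left half dark, right half bright (hypothetical data).
image = np.zeros((4, 6, 2))
image[:, 3:] = 1.0
training_mask = -np.ones((4, 6), dtype=int)
training_mask[0, 0] = 0   # one training site for class 0
training_mask[0, 5] = 1   # one training site for class 1

signatures = class_signatures(image, training_mask, n_classes=2)
labels = classify_min_distance(image, signatures)
```

A maximum likelihood classifier would replace the distance computation with a per-class Gaussian likelihood built from the means and covariances, at the cost of needing more training pixels per class.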

Figure 2. Supervised Classification


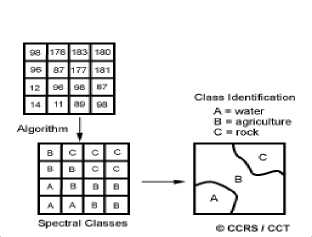

B. Unsupervised Classification

Unsupervised classification is a method that examines a large number of unknown pixels and divides them into a number of classes based on natural groupings present in the image values, as shown in Figure 3. Unlike supervised classification, unsupervised classification does not require analyst-specified training data. The basic premise is that values within a given cover type should be close together in the measurement space (i.e., have similar gray levels), whereas data in different classes should be comparatively well separated (i.e., have very different gray levels).

The classes that result from unsupervised classification are spectral classes, which are based on natural groupings of the image values. The identity of a spectral class is not initially known; the analyst must compare the classified data to some form of reference data (such as larger-scale imagery, maps, or site visits) to determine the identity and informational value of the spectral classes.
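The idea of grouping pixels by natural clusters of gray levels can be sketched with plain k-means. The text does not name a specific clustering method, so k-means, the function name `kmeans_1d`, and the toy image are assumptions chosen only for illustration.

```python
import numpy as np

def kmeans_1d(values, k, n_iter=20):
    """Cluster scalar gray levels into k spectral classes (plain k-means)."""
    values = np.asarray(values, dtype=float).ravel()
    # Spread the initial centers evenly over the observed range.
    centers = np.linspace(values.min(), values.max(), k)
    for _ in range(n_iter):
        labels = np.abs(values[:, None] - centers[None, :]).argmin(axis=1)
        for j in range(k):
            if np.any(labels == j):      # leave empty clusters where they are
                centers[j] = values[labels == j].mean()
    labels = np.abs(values[:, None] - centers[None, :]).argmin(axis=1)
    return labels, centers

# Hypothetical gray-level image with three visibly separate groups.
gray = np.array([[10, 12, 11, 200],
                 [9, 13, 198, 202],
                 [100, 102, 99, 201]])
labels, centers = kmeans_1d(gray, k=3)
class_map = labels.reshape(gray.shape)
```

The resulting labels are spectral classes only: deciding that, say, class 2 is water still requires the comparison with reference data described above.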

Figure 3. Unsupervised Classification


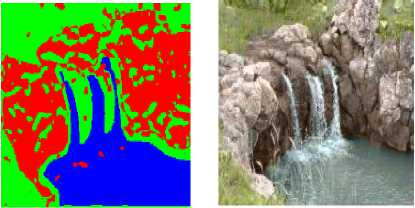

III. Segmentation [17]

In computer vision, segmentation refers to the process of partitioning a digital image into multiple segments (sets of pixels, also known as superpixels), as shown in Figure 4.

Figure 4. Segmentation image using the JSEG algorithm

The goal of segmentation is to simplify and/or change the representation of an image into something that is more meaningful and easier to analyze. Image segmentation is typically used to locate objects and boundaries (lines, curves, etc.) in images. More precisely, image segmentation is the process of assigning a label to every pixel in an image such that pixels with the same label share certain visual characteristics. The result of image segmentation is a set of segments that collectively cover the entire image, or a set of contours extracted from the image. All pixels in a region are similar with respect to some characteristic or computed property, such as color, intensity, or texture. Adjacent regions are significantly different with respect to the same characteristics [2].


IV. Methodology


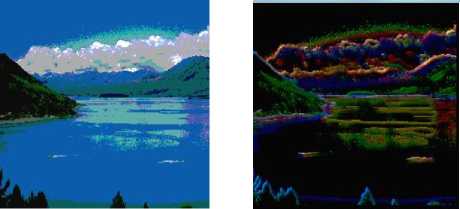

A. Color Quantization

The first step of the segmentation algorithm quantizes the colors in the image in order to obtain edges that can be used to differentiate regions at multiple scales in the image. The quantization is controlled by a threshold value, which may be chosen dynamically. In Figure 7 the threshold value is 50.
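As a rough illustration of how quantization turns pixel colors into a class-map, the sketch below uses uniform per-channel binning. This is a deliberately simple stand-in, not the perceptual quantization of [8]; reading the threshold value as a bin width (`step`) is an assumption, as are the function name and the sample pixels.

```python
import numpy as np

def quantize_colors(image, step=50):
    """Uniformly quantize an RGB image and build a class-map.

    image: (H, W, 3) uint8 array. `step` is the bin width per channel; it is
    an assumed stand-in for the threshold value mentioned in the text.
    Returns (class_map, palette): class_map[y, x] indexes the palette rows.
    """
    bins = image.astype(np.int32) // step            # per-channel bin index
    flat = bins.reshape(-1, 3)
    palette_bins, inverse = np.unique(flat, axis=0, return_inverse=True)
    class_map = inverse.reshape(image.shape[:2])     # one label per pixel
    palette = np.clip(palette_bins * step + step // 2, 0, 255)  # bin centers
    return class_map, palette

# Hypothetical 2x2 image: two grayish pixels and two reddish pixels.
image = np.array([[[10, 10, 10], [20, 30, 40]],
                  [[200, 10, 10], [210, 5, 0]]], dtype=np.uint8)
class_map, palette = quantize_colors(image, step=50)
```

Here the four pixels collapse to two color classes, so the class-map contains only the labels 0 and 1. A larger `step` gives fewer classes and a coarser class-map.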

Figure 7. Color quantization

B. Spatial Segmentation Algorithm

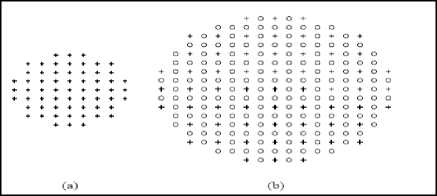

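The measure J is defined as

$$J = \frac{S_B}{S_W} = \frac{S_T - S_W}{S_W} \qquad (5)$$

where $S_T$ is the total variance of the points in the class-map and $S_W$ is the total variance of the points belonging to the same class.

The characteristics of the J-images allow us to use only the ‘+’ points for calculating local J values, which forms the same basic window as in (a) [8], as shown in Figure 5.

Figure 5. Spatial Segmentation

Figure 8. J-Image Calculation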
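The measure J can be computed directly from a class-map by treating each pixel position $z = (x, y)$ as a data point. The sketch below is a minimal illustration under that reading; the function names, the square window (the original algorithm uses roughly circular windows), and the two test patterns are assumptions made for the example.

```python
import numpy as np

def j_value(class_map):
    """J = (S_T - S_W) / S_W for one class-map (or one window of it)."""
    ys, xs = np.indices(class_map.shape)
    z = np.stack([ys.ravel(), xs.ravel()], axis=1).astype(float)  # positions
    labels = class_map.ravel()
    s_t = ((z - z.mean(axis=0)) ** 2).sum()          # total variance
    s_w = 0.0
    for c in np.unique(labels):                      # within-class variance
        zc = z[labels == c]
        s_w += ((zc - zc.mean(axis=0)) ** 2).sum()
    # S_W is zero only if every class occupies a single point.
    return (s_t - s_w) / s_w if s_w > 0 else 0.0

def j_image(class_map, half=2):
    """Local J at every pixel, using a square window clipped at the border."""
    h, w = class_map.shape
    out = np.zeros((h, w))
    for y in range(h):
        for x in range(w):
            win = class_map[max(0, y - half):y + half + 1,
                            max(0, x - half):x + half + 1]
            out[y, x] = j_value(win)
    return out

# Two classes separated into halves versus interleaved like a checkerboard.
halves = np.array([[0, 0, 1, 1]] * 4)
checker = np.indices((4, 4)).sum(axis=0) % 2
j_halves = j_value(halves)
j_checker = j_value(checker)
local_j = j_image(halves, half=1)
```

For the `halves` pattern $S_T = 40$ and $S_W = 24$, giving $J = 2/3$, while the checkerboard gives $S_W = S_T$ and $J = 0$. This matches the interpretation in the abstract: J is high where classes are spatially separated (a likely boundary) and low where they are uniformly mixed.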


C. J-image calculations

In this step the image is partitioned into windows so that the calculation can be performed on each class independently. The new value of a pixel is derived from the difference between the original value of the pixel and the mean of its class, following Eqs. (1)-(5). The size of the local window determines the size of the image regions that can be detected. Windows of small size are useful for localizing intensity/color edges, while large windows are useful for detecting texture boundaries. Often, multiple scales are needed to segment an image, as shown in Figures 6 and 8.

Let $Z$ be the set of all $N$ data points in the class-map, where each point $z = (x, y)$, $z \in Z$, is a pixel position, and let $m$ be their mean,

$$m = \frac{1}{N} \sum_{z \in Z} z \qquad (1)$$

Suppose $Z$ is classified into $C$ classes $Z_i$, $i = 1, \ldots, C$. Let $m_i$ be the mean of the $N_i$ data points of class $Z_i$,

$$m_i = \frac{1}{N_i} \sum_{z \in Z_i} z \qquad (2)$$

Let

$$S_T = \sum_{z \in Z} \lVert z - m \rVert^2 \qquad (3)$$

and

$$S_W = \sum_{i=1}^{C} S_i = \sum_{i=1}^{C} \sum_{z \in Z_i} \lVert z - m_i \rVert^2 \qquad (4)$$

D. Region growing

Region growing operates on the J-image to obtain its multi-scale representation. First, the J-image is converted to gray scale. Then, for each class, the maximum and minimum pixel values are found, all pixels in that class are set to white, and the path between the maximum and minimum pixels is set to black, which yields the multi-scale representation of the image, as shown in Figure 9.

Figure 9. Region growing

E. Valley Growing

The new regions are then grown from the valleys. It is slow to grow the valleys pixel by pixel. A faster approach is used in the implementation:

1. Remove “holes” in the valleys.

2. Average the local J values in the remaining unsegmented part of the region and connect pixels below the average to form growing areas. If a growing area is adjacent to one and only one valley, it is assigned to that valley.

3. Calculate local J values for the remaining pixels at the next smaller scale to more accurately locate the boundaries.

4. Grow the remaining pixels one by one at the smallest scale. Unclassified pixels at the valley boundaries are stored in a buffer. Each time, the pixel with the minimum local J value is assigned to its adjacent “valley” and the buffer is updated until all the pixels are classified [8].
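Step 4 of the list above can be sketched with a priority queue acting as the buffer. This covers only the final pixel-by-pixel growth; the function name, the 4-neighborhood, the use of label 0 for unclassified pixels, and the one-row example are assumptions made for illustration.

```python
import heapq
import numpy as np

def grow_remaining(j_img, labels):
    """Assign unclassified pixels (label 0) to neighboring valleys.

    j_img: (H, W) local J values. labels: (H, W) int array with valley ids
    >= 1 and 0 for unclassified pixels. Pixels are taken in order of
    increasing J, so boundaries settle where J is high.
    """
    labels = labels.copy()
    h, w = labels.shape
    heap = []  # the buffer: (J value, y, x, valley id to inherit)

    def push_neighbors(y, x):
        for dy, dx in ((-1, 0), (1, 0), (0, -1), (0, 1)):
            ny, nx = y + dy, x + dx
            if 0 <= ny < h and 0 <= nx < w and labels[ny, nx] == 0:
                item = (float(j_img[ny, nx]), int(ny), int(nx),
                        int(labels[y, x]))
                heapq.heappush(heap, item)

    for y, x in zip(*np.nonzero(labels)):   # seed the buffer from the valleys
        push_neighbors(y, x)
    while heap:
        _, y, x, valley = heapq.heappop(heap)
        if labels[y, x] == 0:               # may already have been claimed
            labels[y, x] = valley
            push_neighbors(y, x)
    return labels

# Hypothetical one-row J-image with a valley at each end.
j_img = np.array([[0.0, 0.1, 0.9, 0.2, 0.0]])
seeds = np.array([[1, 0, 0, 0, 2]])
grown = grow_remaining(j_img, seeds)
```

In this example the two low-J pixels are claimed first by their nearest valleys, and the high-J pixel in the middle is assigned last, which is where the region boundary ends up.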

Figure 10. Valley Growing


F. Region Merge

After region growing, an initial segmentation of the image is obtained. It often contains over-segmented regions. These regions are merged based on their color similarity, as shown in Figure 11. The quantized colors naturally serve as color histogram bins. The color histogram features of each region are extracted, and the distances between these features are calculated. Since the colors are very coarsely quantized, the algorithm assumes that there are no correlations between the quantized colors [8].
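The merging step can be sketched as a greedy loop over region histograms. The text does not state the distance measure or the stopping rule, so the Euclidean distance (a natural choice when bins are assumed uncorrelated), the `max_dist` threshold, and all names below are assumptions.

```python
import numpy as np

def region_histograms(class_map, regions, n_colors):
    """Normalized histogram of quantized colors for every region id."""
    hists = {}
    for r in np.unique(regions):
        counts = np.bincount(class_map[regions == r], minlength=n_colors)
        hists[int(r)] = counts / counts.sum()
    return hists

def adjacent(regions, a, b):
    """True if regions a and b share a horizontal or vertical border."""
    pairs = [(regions[:, :-1], regions[:, 1:]),
             (regions[:-1, :], regions[1:, :])]
    return any(bool((((p == a) & (q == b)) | ((p == b) & (q == a))).any())
               for p, q in pairs)

def merge_regions(class_map, regions, n_colors, max_dist):
    """Greedily merge the most similar adjacent pair until none is close."""
    regions = regions.copy()
    while True:
        hists = region_histograms(class_map, regions, n_colors)
        ids = sorted(hists)
        best = None
        for i, a in enumerate(ids):
            for b in ids[i + 1:]:
                if not adjacent(regions, a, b):
                    continue
                d = np.linalg.norm(hists[a] - hists[b])  # assumed Euclidean
                if best is None or d < best[0]:
                    best = (d, a, b)
        if best is None or best[0] > max_dist:
            return regions
        regions[regions == best[2]] = best[1]            # absorb b into a

# Hypothetical class-map with three initial regions (column pairs).
class_map = np.array([[0, 0, 0, 1, 2, 2],
                      [0, 0, 1, 0, 2, 2]])
regions = np.array([[1, 1, 2, 2, 3, 3],
                    [1, 1, 2, 2, 3, 3]])
merged = merge_regions(class_map, regions, n_colors=3, max_dist=0.8)
```

Regions 1 and 2 share the dominant color class and are merged, while region 3 has a disjoint histogram and stays separate.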

Figure 11. Region Merge

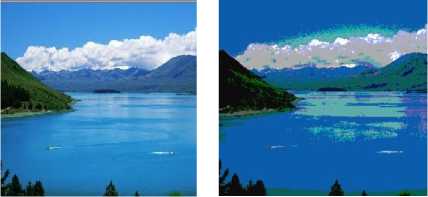

G. Classification (Unsupervised Classification)

The last step is to classify the image. The mean of each window is calculated, and the maximum and minimum means are found. The difference between the maximum and minimum means is divided into partitions, each of which represents one class of the image. Each class is assigned a color to distinguish it from the others, and each pixel of the image is checked to determine which class it most resembles and is given the color of that class, as shown in Figure 12.
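One possible reading of this classification step is sketched below: window means define a value range, the range is cut into equal-width partitions, and every pixel is labeled by the partition its gray value falls into. The window size, the number of classes, the equal-width split, and the palette are all assumptions; the text does not fix them.

```python
import numpy as np

def classify_by_mean_range(gray, window=2, n_classes=3):
    """Label pixels by equal-width partitions of the window-mean range.

    gray: (H, W) array whose sides are multiples of `window` (assumed).
    """
    h, w = gray.shape
    blocks = gray.reshape(h // window, window, w // window, window)
    means = blocks.mean(axis=(1, 3))                 # one mean per window
    edges = np.linspace(means.min(), means.max(), n_classes + 1)
    # Only interior edges are used, so values outside the mean range
    # fall into the first or last class.
    labels = np.digitize(gray, edges[1:-1])
    return labels, edges

# Arbitrary display colors, one per class (hypothetical).
palette = np.array([[0, 0, 255], [0, 255, 0], [255, 0, 0]])
gray = np.array([[10, 12, 90, 95],
                 [11, 13, 92, 91],
                 [50, 52, 200, 198],
                 [51, 49, 202, 200]], dtype=float)
labels, edges = classify_by_mean_range(gray)
colored = palette[labels]                            # (H, W, 3) class colors
```

With these values the window means are 11.5, 92, 50.5, and 200, so the partition boundaries fall near 74 and 137, and the two darkest windows end up in the same class.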

Figure 12. Unsupervised Classification

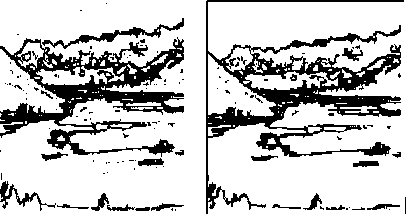

V. Conclusion

The JSEG algorithm was tested on a variety of images; the segmented images are dimmed to show the boundaries. The results can be seen to be reasonable. The result always depends on the nature of the image: an image that contains homogeneous colors yields a different result from an image that contains inhomogeneous colors.

References

[1] Sharon E., Brandt A., Basri R. Fast Multiscale Image Segmentation. Proc. of IEEE Conference on Computer Vision, 2000(1): 70-77.

[2] Alan Bovik. Handbook of Image and Video Processing (Second Edition). Beijing: Publishing House of Electronics Industry, 2006.

[3] Yining Deng, B. S. Manjunath, Hyundoo Shin. Color Image Segmentation. Department of Electrical and Computer Engineering, University of California, Santa Barbara.

[4] Otsu N. Discriminant and Least Square Threshold Selection. In: Proc. 4IJCPR, 1978: 592-596.

[5] Kittler J., Illingworth J. On Threshold Selection Using Clustering Criteria. IEEE Trans., 1985, SMC-15: 652-655.

[6] L. Shafarenko, M. Petrou, J. Kittler. Automatic Watershed Segmentation of Randomly Textured Color Images. 2007.

[7] Rafael C. Gonzalez, Richard E. Woods. Digital Image Processing (Second Edition). Beijing: Publishing House of Electronics Industry, 2007.

[8] Y. Deng, C. Kenney, M. S. Moore, B. S. Manjunath. Peer Group Filtering and Perceptual Color Image Quantization. To appear in Proc. of ISCAS, 1999.

[9] Liu Ping. A Survey on Threshold Selection of Image Segmentation. Journal of Image and Graphics, 2004.

[10] Bouman C. A., Shapiro M. A Multiscale Random Field Model for Bayesian Image Segmentation. IEEE Transactions on Image Processing, 1994(2): 162-177.

[11] Castleman Kenneth R. Digital Image Processing. Prentice Hall, 1998.

[12] Claudio Rosito Jung. Multiscale Image Segmentation Using Wavelets and Watersheds. IEEE, 2003, Computer Graphics and Image Processing, SIBGRAPI XVI Brazilian Symposium: 278-284.

[13] Kittler J., Illingworth J. Minimum Error Thresholding. Pattern Recognition, 1986, 19(1): 41-47.

[14] Zhou Liping, Gao Xinbo. Image Segmentation via Fast Fuzzy C-Means Clustering [J]. Computer Engineering and Application, 2004, 40(8): 68-70.

[15] Pal B., Pal S. K. A Review on Image Segmentation Techniques [J]. Pattern Recognition, 1993, 26(9): 1277-1294.

[16] Carvalho B. M., Gau C. J., Herman G. T., et al. Algorithms for Fuzzy Segmentation [J]. Pattern Analysis & Applications, 1999, 2(1): 73-81.

[17] Selvathi D., Arulmuragn A., Selvi T., et al. MRI Image Segmentation Using Unsupervised Clustering Techniques [C]. Proc. of the 6th International Conference on Computational Intelligence and Multimedia Applications (ICCIMA’05), 2005: 105-110.

[18] Galun M., Sharon E., Basri R., Brandt A. Texture Segmentation by Multiscale Aggregation of Filter Responses and Shape Elements. Computer Vision, Proceedings of Ninth IEEE International Conference, 2003(1): 469-476.