Student Learning Ability Assessment using Rough Set and Data Mining Approaches

Authors: A. Kangaiammal, R. Silambannan, C. Senthamarai, M.V. Srinath

Journal: International Journal of Modern Education and Computer Science (IJMECS) @ijmecs

Article in issue: no. 5, vol. 5, 2013.


Not all learners are able to learn everything completely. Although the learning mode and medium differ between e-learning and classroom learning, similar activities are required in both modes for teachers to observe and assess the learner(s). Student performance varies considerably depending upon whether a task is presented as a multiple-choice question, an open-ended question, or a concrete performance task [3]. Given the dominance of e-learning, there is a strong need for an assessment that reports the learning ability of a learner based on learning skills at various stages. This paper focuses on assessment through multiple-choice questions at the beginning and at the end of learning. The learning activities of the learner are tracked during the learning phase through a Continuous Assessment test to gauge the learner's level of understanding. The scores recorded in the database are analyzed using a Rough Set Approach based Decision System. The effectiveness of the teaching-learning process, which indicates the learning ability of the learner, is presented in graphical form. It is evident from the results that the entry behavior and the behavior while learning determine the actual learning. Students form internal opinions as they monitor their engagement with learning activities and tasks and assess their progress towards goals. Those who are effective at self-regulation, however, produce better feedback or are able to use the self-opinion they generate to achieve their desired goals. The developed tool makes the teacher aware of the learning ability of learners before the content and its presentation structure are prepared, with a view to complete learning. It also helps learners to self-assess their learning ability and thereby identify, and focus on gaining, the skills they lack.

Keywords: Learning Ability, Pre-test, Post-test, Continuous Assessment, Rough Set Approach, Decision System

Short link: https://sciup.org/15014544

IDR: 15014544


Published Online May 2013 in MECS DOI: 10.5815/ijmecs.2013.05.01

I. INTRODUCTION

People are nowadays expected to be highly educated and to continually improve and acquire the latest skills through life-long learning. E-learning is a form of learning that enhances existing learning. Although many research works have compared traditional learning and e-learning in terms of differences in learning performance, another set of researchers describe the benefits, limitations and suitability of each learning mode.

The focus of learning has been changing in the recent past: more and more people read, yet fewer and fewer of them learn. Web-based delivery of course content has to match learner requirements as if the learners were in a face-to-face classroom, expressing their learning needs through human interaction. A variety of assessment methods, such as recognition of prior learning, assessment during learning and assessment on completion of learning, can give educators feedback that clarifies how learning materials in the e-learning mode should be enhanced in future.

Online instructional intervention relies heavily on learner-regulated learning. More effort should be put into understanding learner perceptions and the assessment of learning in online instruction within the “integrated mode” environment. This understanding is more likely to enable us to create learning environments that correspond to learner needs and characteristics [9].


II. RELATED WORK

Questions create an urge to learn. Learning commences in order to answer questions and ends once sufficient knowledge or skill has been gained on both the question and the answer. A range of learning modes is practiced, each with its own structure, suitability and methodology, and today’s education is increasingly a mix of them. E-learning systems help learners to study electronic courses over the Internet instead of face to face. They offer learners various advantages by making educational material accessible quickly and just in time, at any time or place. Owing to the wide use of e-learning, learners need to self-assess their learning ability before learning anything, either to gain confidence or to improve the skills they lack. The research work in [4] brings out the fact that, while students have been given more responsibility for learning in recent years, there has been far greater reluctance to give them increased responsibility for assessment processes.

Systematic collection and analysis of information can significantly improve educational practice. This rests on the belief that the more you know about what your students know and how they learn, the better you can plan your learning activities and structure your teaching [2].

Diagnostic teaching is the “process of diagnosing student abilities, needs and objectives and prescribing requisite learning activities”. Through diagnostic teaching, the teacher monitors the understanding and performance of students before, while and after teaching the lesson. Diagnostic teaching can inform teachers of the effectiveness of their lessons with individuals, small groups of students or whole classes, depending on the instruments used. Within a diagnostic teaching perspective, assessment and instruction are interacting and continuous processes: assessment provides feedback to the teacher on the efficacy of prior instruction, and new instruction builds on the learning that students demonstrate [16].

The authors of [1] hold that online education gives learners the ability to learn anywhere and anytime and attends to each individual’s pace of learning, i.e., it offers learning with high flexibility. They also note that in this type of education the learner is a decision-maker who can debate or speak with teachers and other students using ICT.

The work in [7] elaborates on the use of the six question words “what, which, why, where, when and who” in the initial implementation of e-learning, and holds that providing education in electronic form gives students opportunities to use technology to improve teaching and learning. Therefore, all of these aspects must be studied before e-learning is established.

The authors of [5] show that the main factors for the successful continuation of e-learning are free access to the tools, high interest and motivation of teachers, a learning-based approach, structural development, visual decision-making processes and a culture of using that form of education.

Learning activities are defined as “any activities of an individual organized with the intention to improve his/her knowledge, skills and competence”. Two fundamental criteria distinguish learning activities from non-learning activities [6]:

1. The activity must be intentional (as opposed to random learning), so the act has a predetermined purpose.
2. The activity is organized in some way, including being organized by the learner himself/herself.

In addition, a learning activity typically involves the transfer of information in a broader sense (messages, ideas, knowledge).

Assessment of student learning and of students’ learning ability is highly critical, for various reasons, at both the teacher’s and the student’s end. For students, these assessments:

1. Serve as an ongoing communication process between the teacher and the students.
2. Help to clarify the teaching goals and the students’ learning expectations as they progress through the course content.
3. Provide credible evidence of whether or not learning objectives have been achieved.
4. Provide specific feedback on what is working and what is not.

For teachers, they provide increased understanding of student learning and allow the course content to be adapted and improved.

III. CLASSIFICATION AND PREDICTION

A Decision Tree (DT) is a classification scheme that generates a tree and a set of rules, representing the model of the different classes, from a given dataset. As per [8], a DT is a flowchart-like tree structure in which each internal node denotes a test on an attribute, each branch represents an outcome of the test, and the leaf nodes represent classes or class distributions. The topmost node in a tree is the root node.

The rules corresponding to the tree can easily be derived by tracing each path from the root node to a leaf. Many different leaves of the tree may refer to the same class label, but each leaf corresponds to a different rule. DTs are attractive in data mining (DM) because they represent rules that can readily be expressed in natural language.

The major strengths of DT methods are the following:

1. DTs are able to generate understandable rules.
2. They are able to handle both numerical and categorical attributes.
3. They provide a clear indication of which fields are most salient for prediction or classification.

A decision tree is a set of conditions organized in a hierarchical structure [15]. It is a predictive model in which an instance is classified by following the path of satisfied conditions from the root of the tree until reaching a leaf, which will correspond to a class label. A decision tree can easily be converted to a set of classification rules. Some of the most well-known decision tree algorithms are C4.5 [15] and CART [8].

Hence, decision-tree classification using ID3 is adopted for the experiments in the web-based tool developed.
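As a minimal sketch of how ID3 chooses its splits, the Python below builds a tree from the nine pre-assessment rules of Table I (Section V), treating each rule as one training example. The paper does not state which data its ID3 tree was trained on, so using Table I here is an illustrative assumption, and the function names are hypothetical.

```python
import math
from collections import Counter

# Rows of Table I (pre-assessment decision table): conditions -> Level.
TABLE_I = [
    ({"score": "High", "time": "Low"}, "Advanced"),
    ({"score": "High", "time": "High"}, "Intermediate"),
    ({"score": "Medium", "time": "Low"}, "Advanced"),
    ({"score": "Medium", "time": "Medium"}, "Intermediate"),
    ({"score": "Low", "time": "Medium"}, "Intermediate"),
    ({"score": "Low", "time": "High"}, "Beginner"),
    ({"score": "High", "time": "Medium"}, "Advanced"),
    ({"score": "Medium", "time": "High"}, "Beginner"),
    ({"score": "Low", "time": "Low"}, "Intermediate"),
]


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def information_gain(rows, attr):
    """Entropy reduction obtained by splitting `rows` on attribute `attr`."""
    labels = [label for _, label in rows]
    remainder = 0.0
    for value in {cond[attr] for cond, _ in rows}:
        subset = [label for cond, label in rows if cond[attr] == value]
        remainder += len(subset) / len(rows) * entropy(subset)
    return entropy(labels) - remainder


def id3(rows, attrs):
    """Return a leaf label or an (attribute, {value: subtree}) node."""
    labels = [label for _, label in rows]
    if len(set(labels)) == 1 or not attrs:
        # Pure node or no attributes left: the majority label becomes the leaf.
        return Counter(labels).most_common(1)[0][0]
    best = max(attrs, key=lambda a: information_gain(rows, a))
    rest = [a for a in attrs if a != best]
    return (best, {
        value: id3([r for r in rows if r[0][best] == value], rest)
        for value in sorted({cond[best] for cond, _ in rows})
    })


def classify(tree, record):
    """Follow the satisfied conditions from the root down to a leaf."""
    while isinstance(tree, tuple):
        attr, branches = tree
        tree = branches[record[attr]]
    return tree


tree = id3(TABLE_I, ["score", "time"])
print(tree[0])  # time
```

On these nine rows Time yields the larger information gain (about 0.61 bits versus 0.39 bits for Score), so it becomes the root. `classify` raises `KeyError` for an attribute value never seen during training; a production classifier would need a fallback.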


IV. DAVID MERRILL’S FIRST PRINCIPLES OF INSTRUCTION (FPI)

The problem-centric approach has proved to be an effective instructional model, as it treats students’ motivation as of prime importance and follows a cyclic approach based on the principle of ‘Constructivism’. The model places a real-world problem as the central theme of the instructional episode. Merrill’s taxonomy [10, 12] divides the instructional event into four phases, which he calls ‘Activation’, ‘Demonstration’, ‘Application’ and ‘Integration’. Central to this instructional model is a real-world problem-solving theme, called ‘Problem’. Merrill suggests that instructional design should rely on fundamental principles that apply regardless of the instructional design model used, and that violating them produces a decrement in learning and performance. His model is shown in Figure 1, where the problem-solving theme (Problem) is surrounded by the four phases ‘Activation’, ‘Demonstration’, ‘Application’ and ‘Integration’.

Of the five components of Merrill’s model, the four phases can be used in any study of instructional methods or subject-content analysis, and useful action verbs can be derived for each of these components. It can be inferred that the model is ‘Problem Centric’. Merrill goes on to show that the most effective learning environment is problem-based and involves the students in the four distinct phases of learning modeled in Figure 1. Learning is facilitated through these four phases; each of them, together with the central theme, is explained below:

Figure 1. David Merrill’s First Principles of Instruction (Problem at the centre, surrounded by Activation, Demonstration, Application and Integration)

[1] Problem:

Learners are engaged in solving real-world problems.

Learners are shown the task they will be able to do, or the problem they will be able to solve, as a result of completing a module or a course. Problem-based learning (PBL) is perceived by some as one of the most exciting approaches to education and learning developed in the last thirty years. The term PBL describes a variety of projects, from research and case-study solving to guided design and engineering design projects. Merrill explores several elements in the process of PBL.

[2] Activation:

New knowledge builds on the learner’s existing knowledge. Learners recall or apply knowledge from relevant experience as a foundation for new knowledge; this may come from previous courses or from the learner’s job experience. For instance, learners recall relevant information learnt earlier, such as dates, events and places. The importance of activating existing knowledge has been addressed by a number of educational psychologists. During Merrill’s Activation phase, prior knowledge (or experience) is recalled and emotions are triggered. Not only prior knowledge but also mental models should be activated during this phase; if these mental models contain misconceptions, the instructional process can modify them.

[3] Demonstration:

New knowledge is demonstrated to the learner. Learners learn when the instruction demonstrates what is to be learnt rather than merely telling them about it. The media used in the process are expected to play the relevant instructional role. Explaining with examples, understanding information with its meaning, predicting consequences, grouping, ordering and inferring are some examples of demonstration. During this phase, the instructor presents new material and demonstrates new skills. Demonstration focuses the learner’s attention on relevant information and promotes the development of appropriate mental models. It shows actions in a certain sequence, which can simplify complex tasks and facilitate learning.

[4] Application:

The learner applies the new knowledge to the problem. This is the practice phase, in which learners are required to use their knowledge and skills to solve the problem; examples are using information and solving problems with the required skills or knowledge. The purpose of a practice phase in the instructional events is to give learners an opportunity to develop proficiency and become experts. During this phase cognitive processes come into play: the learner searches for meaningful patterns, and mental programmes form in the learner’s mind.

[5] Integration:

New knowledge is integrated into the learner’s terminal behavior. This is the transfer phase, in which learners apply or transfer their newly found knowledge or skills to their workday practices. This is evident when learners can a) demonstrate their new knowledge or skills, b) reflect on and discuss their new knowledge and skills, and c) create, invent and explore new ways to use their new knowledge and skills. Examples include seeing patterns and organizing knowledge by recognizing hidden meanings, using old ideas to create new ones (relating knowledge from several areas) and assessing the value of ideas (making choices based on supported arguments). Most instructional events end with an assessment phase, during which learners have to prove that they have acquired the new knowledge and skills. Merrill calls this the Integration phase, during which learners get the opportunity to prove new capabilities and show newly acquired skills. The integration phase uses the higher-order thinking skills of Bloom’s taxonomy, viz., Analysis, Synthesis and Evaluation.

Using this FPI approach, learning materials have been developed covering each of the portrayals. For various on the action verbs, a manual


V. DECISION SYSTEMS

A decision system is a pair $\mathcal{D} = (\theta, D)$, where $\theta = (T, H, r)$ and $D$ is a special attribute of $H$ called the decision attribute. The attributes of $H$ that are distinct from $D$ are referred to as conditional attributes, and the set of conditional attributes is denoted by $H_c = H \setminus \{D\}$, i.e., $H_c$ is obtained by removing $D$ from $H$ [14].

The decision system describes the table containing attributes such as time, score, references, content level used and the learning ability of the learner. Here ‘learning ability’ is the decision attribute $D$; all other attributes are conditional attributes.
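To make the decision-system vocabulary concrete, the following Python sketch computes the rough-set lower and upper approximations of a decision class and the positive region of the decision system. The five objects are post-assessment rows copied from Table IV; the choice of rows and all function names are illustrative assumptions, not the authors' code. Learners 20120057 and 20120059 share the same conditional values (Low, High, Yes) yet received different levels, so they are indiscernible and fall into the boundary region.

```python
from collections import defaultdict

CONDITIONAL = ("mark", "time", "reference")   # H_c
DECISION = "level"                            # D

# Illustrative objects: five rows copied from Table IV (post-assessment).
OBJECTS = {
    "20120052": {"mark": "High", "time": "Low", "reference": "No", "level": "Quick Learner"},
    "20120054": {"mark": "High", "time": "Low", "reference": "No", "level": "Quick Learner"},
    "20120058": {"mark": "High", "time": "High", "reference": "No", "level": "Slow Learner"},
    "20120057": {"mark": "Low", "time": "High", "reference": "Yes", "level": "No Self Learning"},
    "20120059": {"mark": "Low", "time": "High", "reference": "Yes", "level": "Not Eligible"},
}


def indiscernibility_classes(objects, attrs):
    """Partition objects into blocks that agree on every attribute in attrs."""
    blocks = defaultdict(set)
    for oid, row in objects.items():
        blocks[tuple(row[a] for a in attrs)].add(oid)
    return list(blocks.values())


def approximations(objects, attrs, decision_value):
    """Lower and upper approximation of the objects whose decision is decision_value."""
    target = {oid for oid, row in objects.items() if row[DECISION] == decision_value}
    lower, upper = set(), set()
    for block in indiscernibility_classes(objects, attrs):
        if block <= target:   # block lies entirely inside the class
            lower |= block
        if block & target:    # block overlaps the class
            upper |= block
    return lower, upper


def positive_region(objects, attrs):
    """Objects whose decision is fully determined by the conditional attributes."""
    region = set()
    for value in {row[DECISION] for row in objects.values()}:
        region |= approximations(objects, attrs, value)[0]
    return region


print(sorted(positive_region(OBJECTS, CONDITIONAL)))  # ['20120052', '20120054', '20120058']
```

An empty lower approximation with a non-empty upper approximation, as for "Not Eligible" here, is the rough-set signal that the conditional attributes alone cannot decide that class for these learners.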

The decision table used by the classifier to decide the level of learners from the pre-assessment test, and hence the learning content to be delivered to each learner, is presented in Table I.

TABLE I. DECISION TABLE CLASSIFIER USED ON PRE-ASSESSMENT TEST DATA

| Assessment | Score | Time | Level |
|---|---|---|---|
| Pre | High | Low | Advanced |
| Pre | High | High | Intermediate |
| Pre | Medium | Low | Advanced |
| Pre | Medium | Medium | Intermediate |
| Pre | Low | Medium | Intermediate |
| Pre | Low | High | Beginner |
| Pre | High | Medium | Advanced |
| Pre | Medium | High | Beginner |
| Pre | Low | Low | Intermediate |
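Table I presupposes that raw scores and times have already been discretized into Low, Medium and High, but the paper does not publish its cut points. A minimal Python sketch of the lookup, assuming hypothetical thresholds (a score below 40% is Low and below 70% Medium; a time below 50% of the allotted time is Low and below 80% Medium), might look like this:

```python
# Table I: (Score band, Time band) -> content level for the pre-assessment test.
PRE_TEST_RULES = {
    ("High", "Low"): "Advanced",
    ("High", "Medium"): "Advanced",
    ("High", "High"): "Intermediate",
    ("Medium", "Low"): "Advanced",
    ("Medium", "Medium"): "Intermediate",
    ("Medium", "High"): "Beginner",
    ("Low", "Low"): "Intermediate",
    ("Low", "Medium"): "Intermediate",
    ("Low", "High"): "Beginner",
}


def band(value, low_cut, high_cut):
    """Discretize a number into Low/Medium/High using two cut points."""
    if value < low_cut:
        return "Low"
    if value < high_cut:
        return "Medium"
    return "High"


def pre_test_level(score_pct, minutes_taken, minutes_allotted):
    # HYPOTHETICAL cut points; the paper does not publish its thresholds.
    score_band = band(score_pct, 40, 70)
    time_band = band(minutes_taken / minutes_allotted, 0.5, 0.8)
    return PRE_TEST_RULES[(score_band, time_band)]


print(pre_test_level(85, 10, 30))  # Advanced
```

Because Table I covers all nine Score/Time combinations, the lookup never fails once the values are banded; only the thresholds would need tuning to the actual tests.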

The Continuous Assessment Test is carried out from the time the learners start learning the material until the material is exhausted. The results, namely the score and the references made while answering, are stored in the database. The decision table used as the classifier is shown in Table II.

TABLE II. DECISION TABLE CLASSIFIER USED ON CONTINUOUS-ASSESSMENT TEST DATA

| Assessment | Score | Reference | Level |
|---|---|---|---|
| Continuous | High | No | Advanced |
| Continuous | High | Yes | Intermediate |
| Continuous | Medium | No | Beginner |
| Continuous | Medium | Yes | Still Improve |
| Continuous | Low | No | Still Improve |
| Continuous | Low | Yes | Not Eligible |
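During continuous assessment, the Table II level feeds the choice of the next content. The sketch below encodes Table II as a lookup and adds a routing policy that is entirely hypothetical (the paper only says that the next topic is chosen from the latest answer and the pre-test level): learners classified Advanced, Intermediate or Beginner move on to the next topic at that content level, "Still Improve" repeats the current topic, and "Not Eligible" is referred to the teacher.

```python
# Table II: (Score band, referred to material?) -> continuous-assessment level.
CA_RULES = {
    ("High", False): "Advanced",
    ("High", True): "Intermediate",
    ("Medium", False): "Beginner",
    ("Medium", True): "Still Improve",
    ("Low", False): "Still Improve",
    ("Low", True): "Not Eligible",
}


def next_step(score_band, referred, topic):
    """Return (next topic number or None, level) under a HYPOTHETICAL routing policy."""
    level = CA_RULES[(score_band, referred)]
    if level in ("Advanced", "Intermediate", "Beginner"):
        return topic + 1, level   # move on, delivering content at this level
    if level == "Still Improve":
        return topic, level       # repeat the current topic
    return None, level            # Not Eligible: refer the learner to the teacher


print(next_step("High", False, 3))  # (4, 'Advanced')
```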

The Post Assessment Test is administered after the learners have thoroughly learnt the material. Data such as score, time and referencing are recorded in the database and classified with the decision-system classifier in Table III.

TABLE III. DECISION TABLE CLASSIFIER USED ON POST-ASSESSMENT TEST DATA

| Assessment | Mark | Time | Referencing | Learner |
|---|---|---|---|---|
| Post | High | Low | No | Quick Learner |
| Post | High | High | Yes | Average Learner |
| Post | Low | High | Yes | Not Eligible |
| Post | Medium | Medium | No | Average Learner |
| Post | Medium | Low | No | Good Learner |
| Post | Low | Medium | Yes | Slow Learner |
| Post | High | Medium | No | Good Learner |
| Post | Medium | Medium | Yes | Slow Learner |
| Post | High | High | No | Slow Learner |
| Post | Medium | High | Yes | Not Eligible |
| Post | High | Medium | Yes | Average Learner |
| Post | Low | Medium | No | Slow Learner |
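Table III lists 12 of the 3 × 3 × 2 = 18 possible (Mark, Time, Referencing) combinations, so a classifier built directly from it needs a fallback for the other six. The Python sketch below checks this coverage; the "Unclassified" default is an assumption of the sketch, not something the paper specifies. (Table IV below, for instance, contains a learner with Medium/High/No, which is one of the uncovered combinations.)

```python
from itertools import product

# Table III: (Mark, Time, Referencing) -> learner category.
POST_TEST_RULES = {
    ("High", "Low", "No"): "Quick Learner",
    ("High", "High", "Yes"): "Average Learner",
    ("Low", "High", "Yes"): "Not Eligible",
    ("Medium", "Medium", "No"): "Average Learner",
    ("Medium", "Low", "No"): "Good Learner",
    ("Low", "Medium", "Yes"): "Slow Learner",
    ("High", "Medium", "No"): "Good Learner",
    ("Medium", "Medium", "Yes"): "Slow Learner",
    ("High", "High", "No"): "Slow Learner",
    ("Medium", "High", "Yes"): "Not Eligible",
    ("High", "Medium", "Yes"): "Average Learner",
    ("Low", "Medium", "No"): "Slow Learner",
}

BANDS = ("Low", "Medium", "High")


def uncovered_conditions(rules):
    """All (Mark, Time, Referencing) combinations that no rule in `rules` covers."""
    return [c for c in product(BANDS, BANDS, ("No", "Yes")) if c not in rules]


def classify_post(mark, time_band, referencing, default="Unclassified"):
    # The default for uncovered combinations is an assumption of this sketch.
    return POST_TEST_RULES.get((mark, time_band, referencing), default)


print(len(uncovered_conditions(POST_TEST_RULES)))  # 6
```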

VI. PROPOSED WORK

The proposed work assumes that the learning content has already been prepared and is ready for use in the e-learning environment. The question bank for the topic of learning is likewise prepared on the basis of the portrayals of David Merrill’s First Principles of Instruction [11], and its questions are associated with these portrayals.

The main objective of this research is to identify the level of learning of a learner through an online assessment tool and then to analyze the learner’s performance. The research work focuses on assessment for teachers (pre-test), to plan the learning materials; assessment of learning goals (continuous assessment test), for both teachers and learners; and assessment of the achievement of learning goals (post-test), for both teachers and learners [13].

The web-based assessment tool that has been developed relies primarily on a pre-assessment test, which helps to identify the target audience of the content. A Rough Set Approach, namely Decision Systems, is applied to the pre-assessment scores to determine the learning level of the learner and hence the target content to be provided for learning. The learning content is delivered to the learners through asynchronous communication. While learning takes place, the learner is observed through a Continuous-Assessment test to gauge his or her level of understanding. Based on the score data, the topic to be learnt next is decided and the appropriate content is selectively provided to the learner. At the end of the learning session, a Post-assessment test is carried out to assess the level of learning actually achieved by the learner.

When learner data are stored in the database, learner activities such as locating, verifying and referencing the learning content to answer questions, and the time taken to complete the assessment test, are recorded in addition to the scores.
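One way to capture the activity data described above is a per-test log that counts correct answers, times the attempt and records which content items were consulted. The Python sketch below is hypothetical: the class and method names are invented, the output keys mirror the column names of Figure 5, and referencing is refused during the pre-test because, as described later in this section, only the continuous and post-assessment tests allow it.

```python
import time
from dataclasses import dataclass, field


@dataclass
class AttemptLog:
    """HYPOTHETICAL activity log for one learner taking one test."""
    user_id: str
    test_type: str                                   # "Pre", "Continuous" or "Post"
    started: float = field(default_factory=time.monotonic)
    correct: int = 0
    answered: int = 0
    references: list = field(default_factory=list)   # content items consulted

    def answer(self, is_correct):
        self.answered += 1
        self.correct += int(is_correct)

    def refer(self, content_id):
        # Locating, verifying or referencing content while answering.
        if self.test_type == "Pre":
            raise ValueError("referencing is not allowed during the pre-test")
        self.references.append(content_id)

    def summary(self):
        """Row shaped like the results table of Figure 5 (bands not yet applied)."""
        return {
            "Userid": self.user_id,
            "Testtype": self.test_type,
            "Mark": self.correct,
            "Time": round(time.monotonic() - self.started, 1),
            "Referrence": "Yes" if self.references else "No",
        }


log = AttemptLog("20120052", "Post")
log.answer(True)
log.answer(False)
log.refer("topic-3/slide-2")
print(log.summary()["Referrence"])  # Yes
```

The raw mark and elapsed seconds would still have to be banded into Low/Medium/High before the decision tables can be applied.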

The data mining techniques of classification and prediction are used to predict the learning skill of the learner. The learning-activity data in the database are analyzed with the classifier built for this purpose, which works on the type of questions answered and their respective portrayals. From the scores and learner-activity data, the classifier determines each learner’s level of learning/understanding and the portrayals missing from his or her learning. The learning skills are thus categorized as ‘Beginner’, ‘Intermediate’ and ‘Advanced’, and these prediction results meet the objectives of the study. The effectiveness of the teaching-learning process, which indicates the learning ability of the learner, is presented in graphical form.

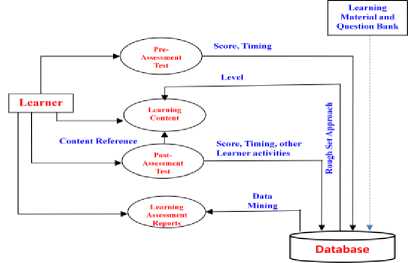

Figure 2. Architecture of the Proposed System

The architecture of the proposed system is shown in Figure 2. Although the portrayal-based learning content and the portrayal-based question bank on this content are not components of the architecture, they are shown to indicate that they are already available.

During the pre-assessment test, the learner answers from his or her current state of knowledge on the topic, which indicates the entry-level behavior. By contrast, the continuous assessment and post-assessment tests give the learner the option to go through the learning material to choose the correct answer. Use of this additional flexibility to refer to the materials is also recorded and taken into account in the assessment.

VII. EXPERIMENTS AND RESULTS

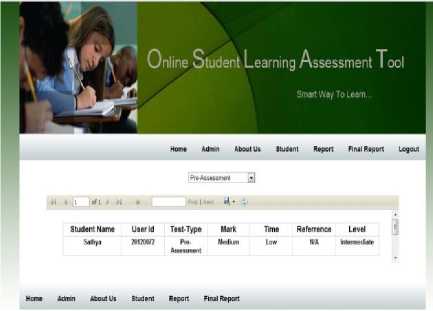

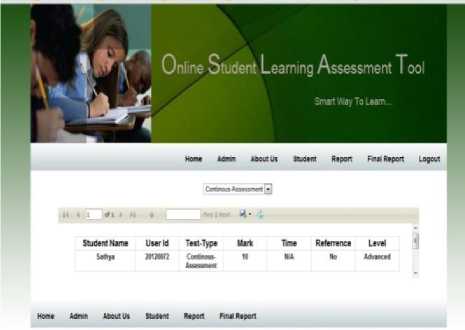

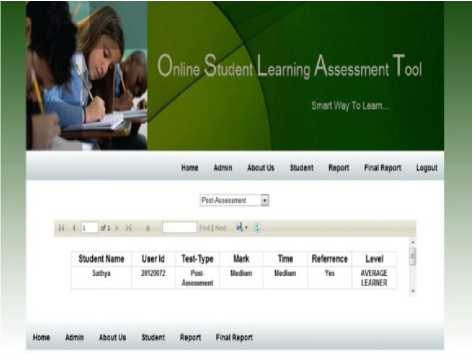

Course materials for the various levels of learners have been prepared for 10 topics of the course “Programming in C”. A question bank of multiple-choice questions has been prepared and stored as a database in SQL Server. The attributes of the question banks for the pre-test, the continuous-assessment test and the post-test are shown in Figures 3, 4 and 5 respectively.

| Column Name | Data Type |
|---|---|
| id | int |
| course | nvarchar(200) |
| qno | nvarchar(50) |
| Question | nvarchar(MAX) |
| Option1 | nvarchar(250) |
| Option2 | nvarchar(250) |
| Option3 | nvarchar(250) |
| Correct | nvarchar(250) |

Figure 3. Abstract of question bank content for pre-assessment

The question banks were designed for different levels of learners, namely beginner, intermediate and advanced. When a test is administered, questions appropriate to the learner level (‘slevel’ in Figure 4) are chosen from the different portrayals by random selection.
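The random, portrayal-spread selection described above could be sketched as follows. The question records are assumed to carry a `portrayal` field (it is not among the Figure 4 columns) alongside `slevel`; the round-robin strategy and the function name are likewise assumptions.

```python
import random


def pick_questions(bank, level, k, seed=None):
    """Draw up to k random questions of one slevel, cycling through portrayals."""
    rng = random.Random(seed)
    pools = {}
    for q in bank:
        if q["slevel"] == level:
            pools.setdefault(q["portrayal"], []).append(q)
    for pool in pools.values():
        rng.shuffle(pool)
    picked = []
    # Round-robin so that every portrayal is represented before any repeats.
    while len(picked) < k and any(pools.values()):
        for name in sorted(pools):
            if pools[name] and len(picked) < k:
                picked.append(pools[name].pop())
    return picked


# Illustrative bank: two questions per portrayal and level.
bank = [
    {"qno": f"{p[:3]}-{lvl[0]}{i}", "slevel": lvl, "portrayal": p}
    for p in ("Activation", "Demonstration", "Application", "Integration")
    for lvl in ("Beginner", "Intermediate", "Advanced")
    for i in range(2)
]
print(len(pick_questions(bank, "Beginner", 4, seed=1)))  # 4
```

Passing a `seed` makes a test paper reproducible; omitting it gives a fresh random draw for each learner.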

The final test records the score for correct answers, the time taken to answer, and the references made to locate, identify and consult the content while answering the questions.

| Column Name | Data Type |
|---|---|
| id | int |
| slevel | nvarchar(50) |
| course | nvarchar(50) |
| title | nvarchar(MAX) |
| scontent | nvarchar(MAX) |
| Question | nvarchar(MAX) |
| Option1 | nvarchar(100) |
| Option2 | nvarchar(100) |
| Option3 | nvarchar(100) |
| Correct | nvarchar(100) |

Figure 4. Abstract of Question bank content for Continuous-assessment

| Column Name | Data Type |
|---|---|
| id | int |
| Userid | nvarchar(50) |
| Testtype | nvarchar(50) |
| Mark | nvarchar(50) |
| Time | nvarchar(50) |
| Referrence | nvarchar(50) |
| Level | nvarchar(50) |

Figure 5. Abstract of question bank content for post-assessment
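The results table of Figure 5 lends itself to simple aggregate reports, such as the learner-level counts summarized later in Table VI. The sketch below uses Python's built-in `sqlite3` module as a stand-in for the SQL Server database used in the paper; the table name `results` and the three sample rows (copied from Table IV) are illustrative, and the column types are simplified to TEXT.

```python
import sqlite3

# Columns follow Figure 5; the table name `results` is an assumption.
SCHEMA = """
CREATE TABLE results (
    id         INTEGER PRIMARY KEY,
    Userid     TEXT,
    Testtype   TEXT,
    Mark       TEXT,
    Time       TEXT,
    Referrence TEXT,
    Level      TEXT
)
"""


def level_counts(conn, test_type="Post"):
    """Number of learners per Level for one test type, largest group first."""
    cur = conn.execute(
        "SELECT Level, COUNT(*) FROM results WHERE Testtype = ? "
        "GROUP BY Level ORDER BY COUNT(*) DESC, Level",
        (test_type,),
    )
    return cur.fetchall()


conn = sqlite3.connect(":memory:")
conn.execute(SCHEMA)
conn.executemany(
    "INSERT INTO results (Userid, Testtype, Mark, Time, Referrence, Level) "
    "VALUES (?, ?, ?, ?, ?, ?)",
    [
        ("20120052", "Post", "High", "Low", "No", "Quick Learner"),
        ("20120054", "Post", "High", "Low", "No", "Quick Learner"),
        ("20120055", "Post", "High", "Medium", "No", "Good Learner"),
    ],
)
print(level_counts(conn))  # [('Quick Learner', 2), ('Good Learner', 1)]
```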

The pre-test was conducted with 45 undergraduate students, after which each learner studied the learning materials provided on the basis of his or her pre-test result. Continuous assessment tests were also conducted to assess the learning level. The next slide/frame of content provided for learning is decided from the most recent answer and/or the level identified in the pre-test; these data are also recorded. The final test was then administered to the same set of students and the results, including timing and details of the references made, were stored.

The recorded data were then analyzed using the data mining techniques of classification and prediction. Based on learner activities such as score, time and references made, the classifier grouped the 45 learners into four groups, according to the FPI portrayal on which each learner needs to concentrate. The decision system used to generate the decision rules for the post-assessment test is the classifier that works in this phase. The reports generated at the end of each assessment test are shown in Figures 6, 7 and 8 respectively.

Figure 6. Pre-Assessment Test Result

In the test result reports, fields that do not apply to a particular assessment test are shown as ‘N/A’ (‘Not Applicable’).

The performance of each learner is presented at the end of the learning session. The chart shows that performance in the pre-assessment and post-assessment tests was only average compared with the test taken while learning, i.e., the continuous-assessment test. Thus the learning phase was satisfactory, while the overall result does not show a good sign of learning.

Figure 7. Continuous-Assessment Test Result

Figure 8. Post-Assessment Test Result

Figure 9. Comparison on Performance assessments

The level of learning skill identified by the assessment differs from learner to learner. The tool was tested with 45 undergraduate students from the computer science and computer applications programmes. Three students did not complete all three phases and were therefore eliminated from the database. The results for the remaining 42 learners are summarized in Table IV.

TABLE IV. TABLE OF SUMMARIZED POST-ASSESSMENT RESULTS

| S. No. | Userid | Mark | Time | Reference | Level |
|---|---|---|---|---|---|
| 1 | 20120051 | Medium | High | Yes | Not Eligible |
| 2 | 20120052 | High | Low | No | Quick Learner |
| 3 | 20120053 | Low | Medium | Yes | Slow Learner |
| 4 | 20120054 | High | Low | No | Quick Learner |
| 5 | 20120055 | High | Medium | No | Good Learner |
| 6 | 20120056 | Medium | Medium | No | Average Learner |
| 7 | 20120057 | Low | High | Yes | No Self Learning |
| 8 | 20120058 | High | High | No | Slow Learner |
| 9 | 20120059 | Low | High | Yes | Not Eligible |
| 10 | 20120060 | Medium | High | Yes | Not Eligible |
| 11 | 20120061 | Low | High | Yes | No Self Learning |
| 12 | 20120062 | Medium | High | Yes | Not Eligible |
| 13 | 20120063 | High | High | No | Slow Learner |
| 14 | 20120064 | Medium | High | Yes | Not Eligible |
| 15 | 20120065 | High | Low | No | Quick Learner |
| 16 | 20120066 | High | Medium | No | Good Learner |
| 17 | 20120067 | High | Low | No | Quick Learner |
| 18 | 20120068 | High | Medium | No | Good Learner |
| 19 | 20120069 | Medium | High | Yes | Average Learner |
| 20 | 20120070 | High | Medium | Yes | Average Learner |
| 21 | 20120071 | High | Medium | Yes | Average Learner |
| 22 | 20120072 | High | Low | No | Quick Learner |
| 23 | 20120073 | High | Low | No | Quick Learner |
| 24 | 20120074 | High | Low | No | Quick Learner |
| 25 | 20120075 | High | Medium | Yes | Average Learner |
| 26 | 20120076 | High | Medium | Yes | Average Learner |
| 27 | 20120077 | High | Low | No | Quick Learner |
| 28 | 20120078 | High | Medium | Yes | Average Learner |
| 29 | 20120079 | Low | High | Yes | No Self Learning |
| 30 | 20120082 | High | High | No | Slow Learner |
| 31 | 20120085 | High | High | Yes | Average Learner |
| 32 | 20120086 | High | Low | No | Quick Learner |
| 33 | 20120087 | Medium | Low | No | Good Learner |
| 34 | 20120088 | Medium | Medium | Yes | Slow Learner |
| 35 | 20120090 | Low | High | Yes | Not Eligible |
| 36 | 20120091 | Medium | Low | No | Good Learner |
| 37 | 20120092 | High | Low | No | Quick Learner |
| 38 | 20120093 | High | High | Yes | Average Learner |
| 39 | 20120095 | Medium | Low | No | Good Learner |
| 40 | 20120099 | High | Low | No | Quick Learner |
| 41 | 20120101 | Medium | High | No | Not Eligible |
| 42 | 20120105 | Low | High | Yes | No Self Learning |

The pre-test thus serves to identify the learning level and hence the type/level of learning content to be delivered to the learner. The continuous assessment makes a similar contribution, with the slight difference that it decides the content for the next topic from the current observation of the learner.

TABLE V. CLASSIFIED SUMMARIZED POST-ASSESSMENT RESULTS

| S. No. | Userid | Mark | Time | Reference | Level |
|---|---|---|---|---|---|
| 1 | 20120085 | High | High | Yes | Average Learner |
| 2 | 20120093 | High | High | Yes | Average Learner |
| 3 | 20120070 | High | Medium | Yes | Average Learner |
| 4 | 20120071 | High | Medium | Yes | Average Learner |
| 5 | 20120075 | High | Medium | Yes | Average Learner |
| 6 | 20120076 | High | Medium | Yes | Average Learner |
| 7 | 20120078 | High | Medium | Yes | Average Learner |
| 8 | 20120069 | Medium | High | Yes | Average Learner |
| 9 | 20120056 | Medium | Medium | No | Average Learner |
| 10 | 20120055 | High | Medium | No | Good Learner |
| 11 | 20120066 | High | Medium | No | Good Learner |
| 12 | 20120068 | High | Medium | No | Good Learner |
| 13 | 20120091 | Medium | Low | No | Good Learner |
| 14 | 20120095 | Medium | Low | No | Good Learner |
| 15 | 20120087 | Medium | Low | No | Good Learner |
| 16 | 20120061 | Low | High | Yes | No Self Learning |
| 17 | 20120079 | Low | High | Yes | No Self Learning |
| 18 | 20120105 | Low | High | Yes | No Self Learning |
| 19 | 20120057 | Low | High | Yes | No Self Learning |
| 20 | 20120059 | Low | High | Yes | Not Eligible |
| 21 | 20120090 | Low | High | Yes | Not Eligible |
| 22 | 20120060 | Medium | High | Yes | Not Eligible |
| 23 | 20120062 | Medium | High | Yes | Not Eligible |
| 24 | 20120064 | Medium | High | Yes | Not Eligible |
| 25 | 20120101 | Medium | High | No | Not Eligible |
| 26 | 20120051 | Medium | High | Yes | Not Eligible |
| 27 | 20120052 | High | Low | No | Quick Learner |
| 28 | 20120054 | High | Low | No | Quick Learner |
| 29 | 20120065 | High | Low | No | Quick Learner |
| 30 | 20120067 | High | Low | No | Quick Learner |
| 31 | 20120072 | High | Low | No | Quick Learner |
| 32 | 20120073 | High | Low | No | Quick Learner |
| 33 | 20120074 | High | Low | No | Quick Learner |
| 34 | 20120077 | High | Low | No | Quick Learner |
| 35 | 20120086 | High | Low | No | Quick Learner |
| 36 | 20120092 | High | Low | No | Quick Learner |
| 37 | 20120099 | High | Low | No | Quick Learner |
| 38 | 20120058 | High | High | No | Slow Learner |
| 39 | 20120063 | High | High | No | Slow Learner |
| 40 | 20120082 | High | High | No | Slow Learner |
| 41 | 20120053 | Low | Medium | Yes | Slow Learner |
| 42 | 20120088 | Medium | Medium | Yes | Slow Learner |

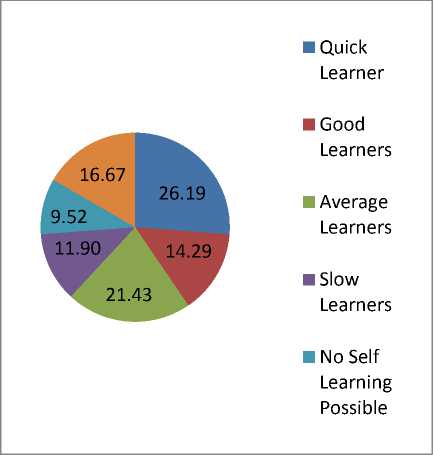

Table V shows how the post-assessment results are classified into the various learner levels. Table VI summarizes the observations from the experimental results.
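The classification in Table V can be read as a rough-set decision table in Pawlak's sense. Learners with identical condition values are indiscernible, and each learner level is approximated from below (learners certainly in the level) and from above (learners possibly in the level). The sketch below illustrates that idea on eight rows of Table V. It is not the authors' implementation. It assumes that the three attribute columns are the score level, the time-taken level and whether back references were made, in the order the conclusion lists them. The function names are hypothetical.

```python
from collections import defaultdict

# Eight rows taken from Table V. The attribute meanings are assumed:
# (student_id, score_level, time_level, back_reference, learner_level)
ROWS = [
    ("20120055", "High", "Medium", "No", "Good Learner"),
    ("20120066", "High", "Medium", "No", "Good Learner"),
    ("20120052", "High", "Low", "No", "Quick Learner"),
    ("20120054", "High", "Low", "No", "Quick Learner"),
    ("20120061", "Low", "High", "Yes", "No Self Learning"),
    ("20120079", "Low", "High", "Yes", "No Self Learning"),
    ("20120059", "Low", "High", "Yes", "Not Eligible"),
    ("20120090", "Low", "High", "Yes", "Not Eligible"),
]


def indiscernibility_classes(rows):
    """Group student IDs that share identical condition-attribute values."""
    classes = defaultdict(set)
    for sid, *conditions, _decision in rows:
        classes[tuple(conditions)].add(sid)
    return list(classes.values())


def approximations(rows, target_level):
    """Return the (lower, upper) rough-set approximations of one learner level."""
    target = {sid for sid, *_, decision in rows if decision == target_level}
    lower, upper = set(), set()
    for block in indiscernibility_classes(rows):
        if block <= target:   # every member of the block has this level
            lower |= block
        if block & target:    # at least one member of the block has this level
            upper |= block
    return lower, upper


def dependency_degree(rows):
    """Fraction of objects whose condition values fully determine their level."""
    decision_of = {sid: decision for sid, *_, decision in rows}
    consistent = sum(
        len(block)
        for block in indiscernibility_classes(rows)
        if len({decision_of[s] for s in block}) == 1
    )
    return consistent / len(rows)


for level in ("Quick Learner", "Not Eligible"):
    lower, upper = approximations(ROWS, level)
    print(level, sorted(lower), sorted(upper))
print("dependency degree:", dependency_degree(ROWS))  # 0.5 for these eight rows
```

In these eight rows, the learners in rows 16 to 21 share the values (Low, High, Yes) but fall into two different levels. They therefore form a boundary region: the "Not Eligible" level has an empty lower approximation and a four-learner upper approximation.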

TABLE VI. SUMMARY OF EXPERIMENTAL RESULT

| Description of Learner Level | Count | Percentage (%) |
|---|---|---|
| Quick Learners | 11 | 26.19 |
| Good Learners | 6 | 14.29 |
| Average Learners | 9 | 21.43 |
| Slow Learners | 5 | 11.90 |
| No Self Learning Possible | 4 | 9.52 |
| Not Eligible | 7 | 16.67 |
| Total | 42 | 100.00 |
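Each percentage in Table VI is the count for that level divided by the class size of 42. The short arithmetic check below recomputes the percentages from the counts. The level names are copied from the table, and the function name is only illustrative.

```python
# Counts per learner level, copied from Table VI.
COUNTS = {
    "Quick Learners": 11,
    "Good Learners": 6,
    "Average Learners": 9,
    "Slow Learners": 5,
    "No Self Learning Possible": 4,
    "Not Eligible": 7,
}


def percentages(counts):
    """Share of each level in percent, rounded to two decimals as in Table VI."""
    total = sum(counts.values())
    return {level: round(100 * n / total, 2) for level, n in counts.items()}


shares = percentages(COUNTS)
for level, pct in shares.items():
    print(f"{level:<26} {COUNTS[level]:>3} {pct:6.2f}")
print(f"{'Total':<26} {sum(COUNTS.values()):>3} {sum(shares.values()):6.2f}")
```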

Table VI shows that learners of all levels are present within a single class of students from one discipline. About 62% of the learners (quick, good and average learners together) are able to learn through self-learning. About 12% (the slow learners) find self-learning very hard but still manage to learn. The remaining 26% (learners for whom no self-learning is possible and those who are not eligible) could not adapt to the self-learning or e-learning mode.
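The grouped shares discussed here can be recomputed directly from the Table VI counts. The grouping below follows this paragraph's reading: quick, good and average learners in one group, slow learners in a second, and the two lowest categories in a third. The group labels and the function name are illustrative assumptions.

```python
# Counts per learner level, from Table VI (a class of 42 learners).
COUNTS = {
    "Quick Learners": 11,
    "Good Learners": 6,
    "Average Learners": 9,
    "Slow Learners": 5,
    "No Self Learning Possible": 4,
    "Not Eligible": 7,
}

# Assumed grouping of the six levels into three broad outcomes.
GROUPS = {
    "able to self-learn": ["Quick Learners", "Good Learners", "Average Learners"],
    "self-learn with great difficulty": ["Slow Learners"],
    "unable to adapt to self-learning": ["No Self Learning Possible", "Not Eligible"],
}


def group_shares(counts, groups):
    """Percentage of the class in each group, rounded to two decimals."""
    total = sum(counts.values())
    return {
        name: round(100 * sum(counts[level] for level in levels) / total, 2)
        for name, levels in groups.items()
    }


for name, pct in group_shares(COUNTS, GROUPS).items():
    print(f"{name:<34} {pct:6.2f}%")
```

Because each group is rounded separately, the three shares (61.90, 11.90 and 26.19) sum to 99.99 rather than exactly 100.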

Figure 10. Chart showing the Summarized Observation

The chart in Figure 10 shows that quick learners form the largest group (26%), followed by average learners (21%) and good learners (14%). By contrast, about 17% of the learners turn out to be ineligible for self-learning, and a further 9.5% cannot learn on their own at all. Hence, learners in the three highest levels (quick, good and average learners) are suited to proceed with e-learning. The learners in the two lowest categories (about 26%) cannot proceed with any mode of learning other than teacher-assisted instruction and/or the traditional chalk-and-talk method.


VII CONCLUSION AND FUTURE WORK

This work identifies a learner's learning level through a sample online (e-learning) course. The process begins with a pre-test that determines the level of content to provide on a topic: advanced, intermediate or beginner. While learning proceeds at the level indicated by the pre-test, the continuous assessment test helps decide the next content to deliver on the same topic. At the end of the learning process, a post-assessment test reveals the learner's learning level, based on the test score, the time taken and the back references made to the content. The summarized experimental results indicate that most of the learners (about 62%) are able to adapt to e-learning or online learning, while about 26% are unable to learn through these modes.
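The adaptive flow described above (a pre-test chooses the starting content level, and each continuous assessment test informs the next content) can be sketched as follows. This is a hypothetical illustration, not the authors' tool. The cut-off scores of 40% and 70% and the rule of moving at most one level up or down are assumptions, because the paper does not report the thresholds it used.

```python
# Content levels named in the text, ordered from easiest to hardest.
LEVELS = ("beginner", "intermediate", "advanced")

# Hypothetical cut-offs in percent; the paper does not state its thresholds.
LOW_CUT = 40.0
HIGH_CUT = 70.0


def initial_level(pretest_pct: float) -> str:
    """Choose the starting content level from the pre-test score."""
    if pretest_pct < LOW_CUT:
        return "beginner"
    if pretest_pct < HIGH_CUT:
        return "intermediate"
    return "advanced"


def next_level(current: str, ca_pct: float) -> str:
    """After a continuous assessment test, move up or down one level, within bounds."""
    idx = LEVELS.index(current)
    if ca_pct >= HIGH_CUT:
        idx = min(idx + 1, len(LEVELS) - 1)
    elif ca_pct < LOW_CUT:
        idx = max(idx - 1, 0)
    return LEVELS[idx]


# Example: 55% on the pre-test, then 75% and 35% on two continuous assessment tests.
level = initial_level(55)
path = [level]
for ca_score in (75, 35):
    level = next_level(level, ca_score)
    path.append(level)
print(path)  # ['intermediate', 'advanced', 'intermediate']
```

Limiting each change to one level keeps a single unusually good or bad test from jumping a learner straight from beginner to advanced content. Other policies are equally plausible.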

The tool therefore identifies a learner's learning level before the learner chooses to take a course through modern media-assisted self-learning. In future work, the data can be analyzed further in terms of David Merrill’s portrayals, to discover learning difficulties within a particular portrayal. To do so, the tool will have to be extended to observe learning levels with respect to each portrayal, namely Activation, Demonstration, Application and Integration.

REFERENCES

- Adibi, M., “The effect of information and communication technology on the educational improvement of secondary students”, unpublished master’s thesis, Islamic Azad University, Garmsar Branch, 2010.

- Angelo, T. A. and Cross, K. P., Classroom Assessment Techniques, 2nd ed., San Francisco: Jossey-Bass, 1993.

- Baxter, G. P. and Shavelson, R. J., “Science performance assessments: Benchmarks and surrogates”, International Journal of Educational Research, 21(3), 279–298, 1994.

- Nicol, D. J. and Macfarlane-Dick, D., “Formative assessment and self-regulated learning: A model and seven principles of good feedback practice”, Studies in Higher Education, 31(2), 199–218, 2006.

- Etienne, P. and Van Den Stock, A., “E-learning-assistant: Situation-based learning in education”, Computer and Education Journal, 39(3), 224–226, 2010.

- European Communities, “Classification of Learning Activities - Manual: Methods and Nomenclature”, Luxembourg: Office for Official Publications of the European Communities, 2006, ISSN 1725-0056, ISBN 92-79-01806-X.

- George, J., “Social grid platform for collaborative online learning on blogosphere: A case study of eLearning”, Expert Systems with Applications, 36, 2177–2186, 2009.

- Han, J. and Kamber, M., Data Mining: Concepts and Techniques, Morgan Kaufmann Publishers, 2001.

- Lee, I.-S., “Learners' Perceptions and Learning Styles in the Integrated Mode of Web-based Environment”, AECT International Convention, Long Beach, USA, Feb. 16–20, 2000.

- Kangaiammal, A., Malliga, P., Jayalakshmi, P. and Sambanthan, T. G., “Problem Centric Instrumental Approach for different Delivery Modes of Computer Science Subjects”, The Indian Journal of Technical Education, 33(3), 31–39, July–Sep. 2010, ISSN 0971-3034.

- Kangaiammal, A., “A Study on the Competencies through Institutionalized and Distance Modes of MCA Programme”, Ph.D. thesis, University of Madras, Oct. 2008 (research materials of the thesis published with the University's permission).

- Malliga, P. and Sambanthan, T. G., “Effectiveness of Problem Centric Approach in e-content of Computer Science and Engineering”, Proceedings of the International Conference on e-Resources in Higher Education: Issues, Development, Opportunities and Challenges, BU, Tiruchirappalli, Feb. 2010, pp. 149–153, ISBN 978-81-908078-9-0.

- Office of Assessment, Teaching and Learning, “Developing appropriate assessment tasks”, in Teaching and Learning at Curtin 2010, pp. 22–44, Perth: Curtin University, 2010.

- Pawlak, Z., “Rough sets”, International Journal of Computer and Information Sciences, 11(5), 341–356, 1982.

- Quinlan, J. R., C4.5: Programs for Machine Learning, Morgan Kaufmann, 1993.

- Guskey, T. R., “How classroom assessments can improve learning”, Educational Leadership, 60(5), 7–11, 2003.