Traffic extreme situations detection in video sequences based on integral optical flow

Authors: Chen Huafeng, Ye Shiping, Nedzvedz Alexander, Nedzvedz Olga, Lv Hexin, Ablameyko Sergey

Journal: Computer Optics (Компьютерная оптика)

Section: Image processing, pattern recognition

Published in: vol. 43, no. 4, 2019.


Road traffic analysis is an important task in many applications, and it can be used in video surveillance systems to prevent many undesirable events. In this paper, we propose a new method based on integral optical flow to analyze car movement and detect extreme traffic flow situations in real-world videos. First, integral optical flow is calculated for video sequences based on optical flow, so that random background motion is eliminated; second, pixel-level motion maps that describe car movement from different perspectives are created based on integral optical flow; third, region-level indicators are defined and calculated; finally, threshold segmentation is used to identify different types of car movement. We also define and calculate several parameters of the moving car flow, including direction, speed, density, and intensity, without detecting and counting cars. Experimental results show that our method can effectively identify cars directional movement, cars divergence and cars accumulation.

Keywords: integral optical flow, image processing, road traffic control, video surveillance

Short URL: https://sciup.org/140246497

IDR: 140246497 | DOI: 10.18287/2412-6179-2019-43-4-647-652


Traffic flow monitoring and analysis based on computer vision techniques, especially in real-time mode, place valuable and complicated demands on computer algorithms and technological solutions. Most practical applications are in vehicle tracking, where the critical issue is initiating a track automatically. Traffic analysis then leads to reports of speed violations, traffic congestion, accidents, or illegal actions by road users. A variety of approaches to these tasks have been suggested (Al-Sakran [1], Ao et al. [2], Cao [3], Rodríguez and García [4]). A good survey of video processing techniques for traffic applications was published by Kastrinaki et al. [5].

In monocular vision-based monitoring systems, the camera is typically assumed to be stationary and mounted at a fixed position to capture passing cars. Traffic analysis in the urban domain appears to be more challenging because high-density traffic flow and low camera angles lead to a high degree of occlusion (Rodríguez and García [4]).

Most existing papers on road traffic control focus on monitoring single vehicles in video images (Huang et al. [6]; Zhang et al. [7]). Our goal is to analyze the car flow situation without detecting or counting cars. This would help to automatically analyze car flows and predict traffic jams, car accumulation, accidents and many other events, especially when a traffic monitoring center is understaffed.

A road monitoring system based on image analysis must detect and react to a changing scene. This adaptability can be achieved by a generalized approach that incorporates little or no a priori knowledge of the analyzed scene. Such a system should be able to detect ‘changing circumstances’, which may include non-standard situations such as traffic jams, rapid car accumulation and car divergence at road intersections (Joshi and Mishra [8], Nagaraj et al. [9], Shafie et al. [10], Khanke and Kulkarni [11]). By detecting these situations, the system can immediately inform humans about problems occurring on the road.

Different approaches are used to analyze video sequences in general. Optical flow has already proved to be a powerful tool for video sequence analysis due to its ability to treat a group of objects as a single entity and thus avoid individual tracking. Many authors use basic optical flow for motion analysis of dynamic objects (Kamath et al. [12], Cheng et al. [13]), especially for crowd behavior analysis. Zhang et al. [14] proposed a method using optical flow of corner points and a CNN based on the LeNet model to recognize crowd events in video. Ravanbakhsh et al. [15] employed a Fully Convolutional Network and optical flow to obtain complementary information on both appearance and motion patterns. Andrade et al. [16] presented a method combining optical flow and unsupervised feature extraction based on spectral clustering and Multiple Observation Hidden Markov Model training for abnormal event detection in crowds, which correctly distinguished a blocked exit situation from normal crowd flow.

Wang et al. [17] presented a feature descriptor called hybrid optical flow histogram and performed training on normal behavior samples through sparse representation; they detected changes of speed in different directions of movement as abnormal behavior for every frame. Mehran et al. [18] proposed an abnormal crowd behavior detection method based on the social force model. This method performed particle advection along with space-time average optical flow to emulate crowd dynamics and computed interaction forces between particles based on their velocities. Chen et al. [19, 20] proposed to use integral optical flow for dynamic object monitoring and, in particular, for crowd behavior identification. As we showed there, integral optical flow allows one to identify complex dynamic object behaviors based on the interactions between pixels without the need to track objects individually.

In this paper, we present a further extension of our method based on integral optical flow to monitor car traffic, such as direct car flow, and extreme situations, such as car accumulation and car divergence, in videos. Together with detecting extreme situations, the method allows one to compute traffic parameters such as direction, speed, density, and intensity without detecting and counting cars.

Integral optical flow is used to create motion maps, and these maps are used to analyze and describe motion at the pixel level and the region level. Our method does not require training and can be used effectively for situation monitoring and analysis. We applied our method to real-world videos and obtained good results.


1. Technology of car traffic monitoring by using motion maps

-

2. Integral optical flow and image motion maps

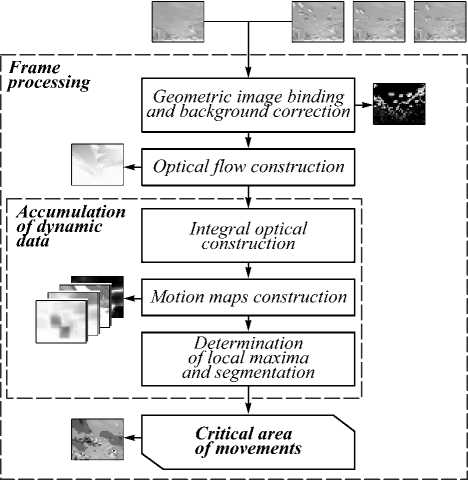

The technology of car traffic monitoring by using motion maps is as the following.

Background Image Sequence offrames

Fig. 1. General scheme of car traffic monitoring process

At the first stage basic optical flow is calculated, the instantaneous movement of moving pixels, which represent moving cars in the video, is determined. Results of the optical flow calculation along the video sequences are accumulated for calculation of the integral optical flow. Based on integral optical flow, we define motion maps to describe pixel motions at each position, i.e. statistical analysis of quantity and motion direction of pixels moving toward or away from each position.

At the second stage, we can identify cars movement types in any regions of interest based on threshold segmentation of local maxima in the motion maps. The general monitoring scheme is shown in Fig. 1.

Integral optical flow is an intuitive idea: optical flow is accumulated over several consecutive frames. As it accumulates, the displacement vectors of the background remain relatively small, while those of the foreground keep growing.

For convenience of description, throughout the remainder of this paper we use $I_t$ to denote the $t$-th frame of video $I$ and $I_t(p)$ to denote the pixel with coordinate $p = (x, y)$ in that frame.

Let $OF_t$ denote the basic optical flow of $I_t$. It is a vector field in which each vector $OF_t(p)$ represents the displacement vector of pixel $I_t(p)$. If $OF_t(p) = d$, the coordinate in $I_{t+1}$ to which pixel $I_t(p)$ moves is simply $p + d$.

Once optical flows for several consecutive frames have been computed, we can obtain the integral optical flow for the first of those frames. Let $IOF_t^{itv}$ denote the integral optical flow of $I_t$, where $itv$ is the frame interval parameter used to compute it. $IOF_t^{itv}$ is also a vector field, which records the accumulated displacement information over a period of $itv$ frames for all pixels in $I_t$.

For any pixel $I_t(p)$, its integral optical flow $IOF_t^{itv}(p)$ is determined as follows:

$$IOF_t^{itv}(p) = \sum_{i=0}^{itv-1} OF_{t+i}(p_{t+i}), \qquad (1)$$

where $p_{t+i}$ is the coordinate in $I_{t+i}$ of pixel $I_t(p)$, with $p_t = p$ and $p_{t+i+1} = p_{t+i} + OF_{t+i}(p_{t+i})$. In other words, if $I_t(p)$ stays in the video scene, then

$$I_t(p_t),\; I_{t+1}(p_{t+1}),\; \dots,\; I_{t+itv-1}(p_{t+itv-1})$$

are the same pixel in different frames, i.e., $I_t(p)$.
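Equation (1) can be sketched in Python with NumPy as follows. This is a minimal sketch under our own assumptions, not the authors' implementation: the basic optical flow of each frame is assumed to be given as an (H, W, 2) array of (dx, dy) displacements, the flow is sampled at the nearest integer position along each path, and paths that leave the frame are clamped to the border. The function name `integral_optical_flow` is hypothetical.

```python
import numpy as np


def integral_optical_flow(flows, t, itv):
    """Sketch of Eq. (1): IOF_t^itv(p) = sum_{i=0}^{itv-1} OF_{t+i}(p_{t+i}).

    flows[k] is the basic optical flow OF_k of frame k, an (H, W, 2) array whose
    entry [y, x] holds the displacement (dx, dy) of pixel (x, y) into frame k + 1.
    Returns an (H, W, 2) array with the integral optical flow of every pixel of I_t.
    """
    h, w, _ = flows[t].shape
    ys, xs = np.mgrid[0:h, 0:w]
    # p_t = p: every path starts at the pixel's own coordinate (float for sub-pixel drift).
    px = xs.astype(np.float64)
    py = ys.astype(np.float64)
    iof = np.zeros((h, w, 2), dtype=np.float64)
    for i in range(itv):
        flow = flows[t + i]
        # Assumption: nearest-neighbour sampling of OF_{t+i} at p_{t+i},
        # clamped to the frame border for pixels that drift out of view.
        cx = np.clip(np.rint(px).astype(int), 0, w - 1)
        cy = np.clip(np.rint(py).astype(int), 0, h - 1)
        d = flow[cy, cx]  # OF_{t+i}(p_{t+i}) for all pixels, shape (H, W, 2)
        iof += d
        # p_{t+i+1} = p_{t+i} + OF_{t+i}(p_{t+i})
        px += d[..., 0]
        py += d[..., 1]
    return iof
```

The flow fields could come from any dense optical flow method, for example OpenCV's `cv2.calcOpticalFlowFarneback`, which returns an array of this shape; which basic flow method to use is an assumption here. Because back-and-forth background motion tends to cancel along a path while consistent car motion adds up, the magnitude of the result separates moving cars from the background.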

Integral optical flow makes it possible to analyse only foreground objects that actually move, at least for some time period. In the proposed method, the geometric structure formed by pixel motion is considered. For any position or region in the scene, the pixel motion paths that relate to it (i.e., start from, end at, or pass through this position or region) are analysed. Based on them, motion maps are created, which allow one to determine whether a certain event is happening at the position or in the region.

A motion map is an image in which a feature Q at each position gives information about the movement of pixels whose motion paths relate to this position or to a region centered at this position. We defined several motion maps [20] that describe comprehensive information about pixel motion, including direction, intensity, symmetry/directionality, etc. This information reflects the behaviour of objects, in this case moving cars on the road, so certain events can be detected. The following motion maps are used for traffic monitoring:

• IQ (in-pixel quantity) map – A map with a scalar value at each position indicating the number of pixels moving toward the corresponding position.

• OQ (out-pixel quantity) map – A map with a scalar value at each position indicating the number of pixels moving away from the corresponding position.

• ICM (in-pixel comprehensive motion) map – A map with a vector at each position indicating the comprehensive motion of pixels moving toward the corresponding position.

• OCM (out-pixel comprehensive motion) map – A map with a vector at each position indicating the comprehensive motion of pixels moving away from the corresponding position.
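The exact rule that decides whether a pixel moves toward or away from a position is given in [20] and is not repeated here, so the following NumPy sketch makes an explicit assumption: a pixel moves toward position q if the end point of its integral displacement falls inside the (2k+1)×(2k+1) window centred at q while its start point does not, and away from q in the opposite case. ICM and OCM are taken as sums of unit direction vectors, also our assumption, chosen because it makes the symmetry indicators RIS and ROS equal to 1 when all pixels move in one direction. The names `motion_maps`, `k` and `min_disp` are hypothetical.

```python
import numpy as np


def _centres_covering(h, w, cx, cy, k):
    """Mask of window centres q whose (2k+1)x(2k+1) window contains point (cx, cy)."""
    mask = np.zeros((h, w), dtype=bool)
    # Clamp both ends so that points far outside the frame give an empty mask.
    y0, y1 = max(cy - k, 0), min(max(cy + k + 1, 0), h)
    x0, x1 = max(cx - k, 0), min(max(cx + k + 1, 0), w)
    mask[y0:y1, x0:x1] = True
    return mask


def motion_maps(iof, k=1, min_disp=1.0):
    """Return IQ, OQ (H, W) and ICM, OCM (H, W, 2) maps from an integral flow field."""
    h, w, _ = iof.shape
    iq = np.zeros((h, w))
    oq = np.zeros((h, w))
    icm = np.zeros((h, w, 2))
    ocm = np.zeros((h, w, 2))
    mag = np.linalg.norm(iof, axis=2)
    # Assumption: only pixels with a noticeable integral displacement count as moving.
    for y, x in zip(*np.nonzero(mag >= min_disp)):
        dx, dy = iof[y, x]
        ex, ey = int(round(x + dx)), int(round(y + dy))  # end point of the motion path
        unit = np.array([dx, dy]) / mag[y, x]            # unit motion direction
        at_start = _centres_covering(h, w, int(x), int(y), k)
        at_end = _centres_covering(h, w, ex, ey, k)
        toward = at_end & ~at_start  # windows the pixel moves into
        away = at_start & ~at_end    # windows the pixel moves out of
        iq[toward] += 1
        icm[toward] += unit
        oq[away] += 1
        ocm[away] += unit
    return iq, oq, icm, ocm
```

The explicit loop over moving pixels keeps the sketch readable; for full-resolution video, a vectorized or compiled implementation would be needed.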

Based on these motion maps, several indicators can be defined to describe motion in regions; they are useful for identifying certain types of car movement.

• RMI (regional motion intensity) – A scalar value indicating the average magnitude of the integral optical flow displacement vectors of pixels in a certain region.

• RIRQ (regional in-pixel relative quantity) – A scalar value indicating the average of the values of the IQ map at positions in a certain region.

• RORQ (regional out-pixel relative quantity) – A scalar value indicating the average of the values of the OQ map at positions in a certain region.

• RICM (regional in-pixel comprehensive motion) – A vector indicating the average of the values of the ICM map at positions in a certain region.

• ROCM (regional out-pixel comprehensive motion) – A vector indicating the average of the values of the OCM map at positions in a certain region.

• RIOI (regional in/out indicator) – A scalar value indicating whether more pixels move toward a certain region than away from it: $RIOI = RIRQ / RORQ$.

• RIS (regional in-pixel symmetry) – A scalar value indicating how symmetrically pixels move toward a certain region: $RIS = RIRQ / \|RICM\|$.

• ROS (regional out-pixel symmetry) – A scalar value indicating how symmetrically pixels move away from a certain region: $ROS = RORQ / \|ROCM\|$.
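The region-level indicators for one rectangular region can be sketched as follows, assuming the pixel-level maps are NumPy arrays (IQ and OQ of shape (H, W), ICM and OCM of shape (H, W, 2)) and the integral optical flow is an (H, W, 2) array. The small constant `eps`, which guards the ratios against division by zero, is our addition and not part of the definitions; `regional_indicators` is a hypothetical name.

```python
import numpy as np


def regional_indicators(iof, iq, oq, icm, ocm, region, eps=1e-9):
    """Region-level indicators for region = (row_slice, col_slice).

    eps (our addition) avoids division by zero when a region has no out-pixels
    or when the comprehensive-motion vector vanishes.
    """
    rmi = float(np.linalg.norm(iof[region], axis=-1).mean())  # mean |IOF| in region
    rirq = float(iq[region].mean())                            # mean of IQ
    rorq = float(oq[region].mean())                            # mean of OQ
    ricm = icm[region].reshape(-1, 2).mean(axis=0)             # mean ICM vector
    rocm = ocm[region].reshape(-1, 2).mean(axis=0)             # mean OCM vector
    return {
        "RMI": rmi,
        "RIRQ": rirq,
        "RORQ": rorq,
        "RICM": ricm,
        "ROCM": rocm,
        "RIOI": rirq / max(rorq, eps),
        "RIS": rirq / max(float(np.linalg.norm(ricm)), eps),
        "ROS": rorq / max(float(np.linalg.norm(rocm)), eps),
    }
```

Sliding a square window over the frame and storing each result at the window centre yields region-level motion maps.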

From the above definitions, we have $RIS \ge 1$ and $ROS \ge 1$, with equality when the corresponding pixels all move in the same direction. The bigger RIS or ROS is, the more symmetrically the corresponding pixels move.

If the region of interest has a self-symmetric shape, for example a square or rectangular sliding window used to identify car movement over the whole scene, the values of these indicators can be assigned to the region center, so that the corresponding region-level motion maps are created.

3. Definition and identification of cars movement types

Cars in streets can move in one direction, which is called directional car flow. However, at road intersections, especially unregulated ones without traffic lights, car accumulation and divergence can happen. In fact, these three types of movement are the main components of usual traffic events, such as car flow stopping, traffic congestion, traffic accidents, etc. So, we define the following types of car movement:

- cars directional movement;
- cars accumulation;
- cars divergence.

Definition 1 (Cars directional movement). Cars directional movement means that all cars move in the same direction. Three rules are proposed to identify cars directional movement: 1) many cars move from one region to another; 2) they move above a certain speed; 3) they move in one direction.

Cars directional movement is identified in region $r$ at time $t$ if $RMI_t(r)$, $RORQ_t(r)$, and $ROS_t(r)$ meet the thresholds:

(1) $RMI_t(r) > t_{11}$;

(2) $RORQ_t(r) > t_{12}$;

(3) $ROS_t(r) < t_{13}$.

Here $t_{13}$ should be slightly greater than 1.

Definition 2 (Cars divergence and accumulation). Divergence means that cars move away from a center in different directions, and accumulation means that cars move toward a center.

Cars accumulation is identified in region $r$ at time $t$ if $RMI_t(r)$, $RIRQ_t(r)$, $RIOI_t(r)$, and $RIS_t(r)$ meet the thresholds:

(1) $RMI_t(r) > t_{21}$;

(2) $RIRQ_t(r) > t_{22}$ and $RIOI_t(r) > t_{23}$;

(3) $RIS_t(r) > t_{24}$.

Here $t_{23} > 1$ and $t_{24} > 1$.

Cars divergence is identified in region $r$ at time $t$ if $RMI_t(r)$, $RORQ_t(r)$, $RIOI_t(r)$, and $ROS_t(r)$ meet the thresholds:

(1) $RMI_t(r) > t_{31}$;

(2) $RORQ_t(r) > t_{32}$ and $RIOI_t(r) < t_{33}$;

(3) $ROS_t(r) > t_{34}$.

Here $0 < t_{33} < 1$ and $t_{34} > 1$.

Examples of real car flow situations are shown in Fig. 2.

4. Cars movement parameters calculation

Integral optical flow allows one not only to define types of car movement but also to calculate its characteristics. The main characteristics of car movement are:

• direction;
• speed;
• density;
• intensity.

Direction indicates where cars are moving to. To determine the direction of car movement, we can simply divide $[0, 2\pi)$ into $n$ intervals of equal length and count, for each interval, the number of pixels whose motion direction falls in that interval. The interval with the most pixels gives the main motion direction. If this interval is $[2i\pi/n, 2(i+1)\pi/n)$, then $2(i+1)\pi/n$ can be chosen as the main motion direction $\theta_m$. $\theta_m$ is more meaningful when ROS is small or close to 1, e.g., $ROS < 1.5$. If ROS is too big, e.g., $ROS > 4$, the pixels are moving symmetrically to some extent, and in this case there is no main motion direction.
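The direction histogram can be sketched with NumPy as follows, assuming the (dx, dy) integral displacements of the moving pixels in a region are already collected in an array. Angles are measured in image coordinates (x to the right, y downward), which is our convention. The sketch returns the most populated interval $[2i\pi/n, 2(i+1)\pi/n)$ and leaves the choice of the representative angle $\theta_m$ to the caller; the ROS cut-off of 4 mirrors the example value in the text and is only a default. The name `main_direction` is hypothetical.

```python
import numpy as np


def main_direction(vectors, n=8, ros=None, ros_max=4.0):
    """Most populated direction interval [2*i*pi/n, 2*(i+1)*pi/n) of (dx, dy) vectors.

    Returns (low, high) in radians, or None if there are no vectors or if ROS is
    above ros_max (pixels move too symmetrically to have a main direction).
    Angles use image coordinates: x to the right, y downward.
    """
    vectors = np.asarray(vectors, dtype=np.float64).reshape(-1, 2)
    if len(vectors) == 0 or (ros is not None and ros > ros_max):
        return None
    angles = np.mod(np.arctan2(vectors[:, 1], vectors[:, 0]), 2 * np.pi)
    width = 2 * np.pi / n
    # Interval index of each angle; "% n" maps an angle rounded up to 2*pi back to 0.
    idx = np.floor(angles / width).astype(int) % n
    counts = np.bincount(idx, minlength=n)  # number of pixels per interval
    i = int(np.argmax(counts))
    return 2 * i * np.pi / n, 2 * (i + 1) * np.pi / n
```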

The speed of pixel $I_t(p)$ over the time period from $I_t$ to $I_{t+itv}$ is defined as follows:

$$S_t^{itv}(p) = \frac{\|IOF_t^{itv}(p)\|}{itv}.$$

To determine the speed of cars inside a certain region $r$, only pixels that move from other positions should be considered. Let $MP$ denote the set of positions inside region $r$ at which the corresponding pixels have moved from other positions according to the integral optical flow. Then the speed of cars inside $r$ over the time period from $I_t$ to $I_{t+itv}$ is defined as follows:

$$S_t^{itv}(r) = \frac{1}{N}\sum_{i=1}^{N} S_t^{itv}(p_i),$$

where $p_i \in MP$, $N = \operatorname{card} MP$, and $\operatorname{card} MP$ is the number of elements in the set $MP$. Accordingly, density indicates the proportion of cars inside a certain region $r$ over the time period from $I_t$ to $I_{t+itv}$ and is defined as follows:

$$D_t^{itv}(r) = \frac{N}{A} \times 100\%,$$

where $A$ is the area of $r$.
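A sketch of the region speed and density under a stated interpretation: $MP$ is taken to be the set of positions inside $r$ reached, after $itv$ frames, by pixels whose integral displacement is at least `min_disp` pixels (i.e., pixels that moved there from other positions), and each such position contributes the speed of the pixel(s) that landed on it. Both this interpretation and the name `region_speed_density` are our assumptions.

```python
import numpy as np


def region_speed_density(iof, itv, region_mask, min_disp=1.0):
    """Speed S_t^itv(r) and density D_t^itv(r) (in %) of region r.

    iof: (H, W, 2) integral optical flow IOF_t^itv; region_mask: (H, W) bool mask of r.
    Assumption: MP = positions inside r reached after itv frames by pixels whose
    integral displacement is at least min_disp (pixels that moved from elsewhere).
    If several pixels land on the same position, their speeds are averaged.
    """
    h, w, _ = iof.shape
    disp = np.linalg.norm(iof, axis=2)
    speed = disp / itv  # S_t^itv(p) = ||IOF_t^itv(p)|| / itv
    landed = {}  # end position -> speeds of pixels that arrived there
    for y, x in zip(*np.nonzero(disp >= min_disp)):
        ex = int(round(x + iof[y, x, 0]))
        ey = int(round(y + iof[y, x, 1]))
        if 0 <= ex < w and 0 <= ey < h and region_mask[ey, ex]:
            landed.setdefault((ey, ex), []).append(speed[y, x])
    n = len(landed)                             # N = card MP
    area = int(np.count_nonzero(region_mask))   # A
    s = float(np.mean([np.mean(v) for v in landed.values()])) if n else 0.0
    d = 100.0 * n / area if area else 0.0
    return s, d
```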

Intensity is a measure of the average occupancy of a certain region $r$ by cars over the time period from $I_t$ to $I_{t+itv}$. Let $MP_i$ denote the set of positions inside $r$ at which the corresponding pixels have moved from other positions at time $I_{t+i}$. Then the intensity of $r$ over the time period from $I_t$ to $I_{t+itv}$ is defined as follows:

$$TI_t^{itv}(r) = \frac{1}{itv}\sum_{i=0}^{itv-1}\frac{\operatorname{card} MP_i}{A},$$

where $\operatorname{card} MP_i$ is the number of elements in the set $MP_i$ and $A$ is the area of $r$.
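Intensity averages the occupied fraction of the region over the interval. The sketch below assumes the sets $MP_i$ are already available as boolean masks, one per frame, since how they are extracted is not specified in this section; `region_intensity` is a hypothetical name. The result is a fraction in [0, 1].

```python
import numpy as np


def region_intensity(mp_masks, region_mask):
    """TI_t^itv(r) = (1/itv) * sum_{i=0}^{itv-1} card(MP_i) / A.

    mp_masks: sequence of itv boolean (H, W) masks; mp_masks[i] marks positions at
    which, at frame I_{t+i}, pixels have arrived from other positions (assumed given).
    Only positions inside region_mask are counted; A = number of positions in r.
    """
    area = int(np.count_nonzero(region_mask))
    if area == 0 or len(mp_masks) == 0:
        return 0.0
    counts = [np.count_nonzero(m & region_mask) for m in mp_masks]  # card(MP_i)
    return float(np.mean(counts)) / area
```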

5. Car flow monitoring results

The proposed method has been tested on several real-world videos. Let us show how the main types of car movement and their parameters are identified in real images. For the experiments, we chose a video of moving cars at an unregulated road intersection. Fig. 3 shows, respectively, the results for (a) cars directional movement, (b) cars accumulation and (c) cars divergence.

Fig. 2. Examples of (a) cars direct flow, (b) cars accumulation, (c) cars divergence


Fig. 3. The results of identified types of cars movement: (a) cars directional movement, (b) cars accumulation and (c) cars divergence
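The identification rules of Definitions 1 and 2 reduce to a few comparisons per region. A minimal Python sketch follows; the function `classify_region` and the dictionary layout are hypothetical, and the paper does not list numerical thresholds, so any values passed to it are placeholders that must be tuned for a given camera and scene, subject to the constraints stated with the definitions ($t_{13}$ slightly above 1, $t_{23} > 1$, $t_{24} > 1$, $0 < t_{33} < 1$, $t_{34} > 1$).

```python
def classify_region(ind, th):
    """Apply the threshold rules of Definitions 1 and 2 to one region's indicators.

    ind: dict with keys RMI, RIRQ, RORQ, RIOI, RIS, ROS for region r at time t.
    th: dict with thresholds t11..t34 (placeholder values; they must be tuned).
    Returns the list of movement types whose rules are all satisfied.
    """
    found = []
    if ind["RMI"] > th["t11"] and ind["RORQ"] > th["t12"] and ind["ROS"] < th["t13"]:
        found.append("directional movement")
    if (ind["RMI"] > th["t21"] and ind["RIRQ"] > th["t22"]
            and ind["RIOI"] > th["t23"] and ind["RIS"] > th["t24"]):
        found.append("accumulation")
    if (ind["RMI"] > th["t31"] and ind["RORQ"] > th["t32"]
            and ind["RIOI"] < th["t33"] and ind["ROS"] > th["t34"]):
        found.append("divergence")
    return found
```

Applying it to the indicators of every sliding-window position and marking the window centres where a type is found gives label maps of the kind shown in Fig. 3.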

Traffic congestion can be detected by considering parameters including speed and density. The main characteristic of traffic congestion is that cars move very slowly, or cannot move at all, for a relatively long time, so the driveway is almost full. Table 1 shows how traffic congestion is identified, where S is the maximum speed for traffic congestion, R is the minimum density for traffic congestion, and T is the minimum duration of traffic congestion. These parameters should be set by operators of the traffic monitoring center.

Table 1. Traffic congestion identification

| Situation to avoid | Speed | Density | Time of duration |
|---|---|---|---|
| Traffic congestion | < S | > R | > T |
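The rule of Table 1 needs a notion of continuous duration. A minimal sketch, assuming the speed and density of the monitored region are measured at a fixed sampling interval `dt` (in the same time units as T); the function name `detect_congestion` and the reset-on-violation policy are our assumptions.

```python
def detect_congestion(speeds, densities, dt, s_max, r_min, t_min):
    """Return True if speed < S and density > R hold continuously for longer than T.

    speeds, densities: per-interval measurements of one region, sampled every dt.
    s_max, r_min, t_min: the operator-chosen S, R, T of Table 1.
    """
    duration = 0.0
    for speed, density in zip(speeds, densities):
        if speed < s_max and density > r_min:
            duration += dt
            if duration > t_min:
                return True
        else:
            duration = 0.0  # condition broken: restart the duration count
    return False
```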

Conclusion

Our paper is devoted to the important problem of traffic analysis with a stationary camera and the detection of complex situations that can appear on roads. We have defined three types of car movement, namely directional movement, divergence and accumulation, and presented a method to identify these movements at an early stage. Our method mainly consists of the following steps: integral optical flow computation, pixel-level motion analysis, region-level motion analysis and threshold segmentation. The accumulative effect of integral optical flow is exploited to separate background from foreground and to obtain regions of intensive motion, which are usually of interest. Based on integral optical flow, pixels can be tracked, so for traffic monitoring tasks, parameters including direction, speed, density, and intensity can be calculated for any region. Using these parameters, traffic congestion can be detected automatically.

The effectiveness of our method has been demonstrated by experimental results. The experiments showed that integral optical flow and motion maps can be used efficiently to identify car traffic movement in video.

References

- Al-Sakran HO. Intelligent traffic information system based on integration of internet of things and agent technology. International Journal of Advanced Computer Science and Applications 2015; 6: 37-43.

- Ao GC, Chen HW, Zhang HL. Discrete analysis on the real traffic flow of urban expressways and traffic flow classification. Advances in Transportation Studies 2017; 1(Spec Iss): 23-30.

- Cao J. Research on urban intelligent traffic monitoring system based on video image processing. International Journal of Signal Processing, Image Processing and Pattern Recognition 2016; 9: 393-406.

- Rodríguez T, García N. An adaptive, real-time, traffic monitoring system. Mach Vis Appl 2010; 21: 555-576.

- Kastrinaki V, Zervakis M, Kalaitzakis K. A survey of video processing techniques for traffic applications. Image and Vision Computing 2003; 21: 359-381.

- Huang DY, Chen CH, Hu WC, et al. Reliable moving vehicle detection based on the filtering of swinging tree leaves and raindrops. J Vis Commun Image Represent 2012; 23: 648-664.

- Zhang W, Wu QMJ, Yin HB. Moving vehicles detection based on adaptive motion histogram. Digit Signal Process 2010; 20: 793-805.

- Joshi A, Mishra D. Review of traffic density analysis techniques. International Journal of Advanced Research in Computer and Communication Engineering 2015; 4(7): 209-213.

- Nagaraj U, Rathod J, Patil P, Thakur S, Sharma U. Traffic jam detection using image processing. International Journal of Engineering Research and Applications 2013; 3(2): 1087-1091.

- Shafie AA, Ali MH, Fadhlan H, Ali RM. Smart video surveillance system for vehicle detection and traffic flow control. Journal of Engineering Science and Technology 2011; 6(4): 469-480.

- Khanke P, Kulkarni PS. A technique on road traffic analysis using image processing. International Journal of Engineering Research and Technology 2014; 3: 2769-2772.

- Kamath VS, Darbari M, Shettar R. Content based indexing and retrieval from vehicle surveillance videos using optical flow method. Int J Sci Research 2013; II(IV): 4-6.

- Cheng J, Tsai YH, Wang S, Yang MH. SegFlow: Joint learning for video object segmentation and optical flow. Proceedings of International Conference on Computer Vision 2017: 686-695.

- Zhang W, Hou Y, Wang S. Event recognition of crowd video using corner optical flow and convolutional neural network. Proceeding of Eighth International Conference on Digital Image Processing 2016: 332-335.

- Ravanbakhsh M, Nabi M, Mousavi H, Sangineto E, Sebe N. Plug-and-play CNN for crowd motion analysis: An application in abnormal event detection. Source: https://arxiv.org/abs/1610.00307.

- Andrade EL, Blunsden S, Fisher RB. Modelling crowd scenes for event detection. Proceedings of 18th International Conference on Pattern Recognition 2006; 1: 175-178.

- Wang Q, Ma Q, Luo CH, Liu HY, Zhang CL. Hybrid histogram of oriented optical flow for abnormal behavior detection in crowd scenes. International Journal of Pattern Recognition and Artificial Intelligence 2016; 30(2): 210-224.

- Mehran R, Oyama A, Shah M. Abnormal crowd behavior detection using social force model. Proceedings of IEEE Conference on Computer Vision and Pattern Recognition 2009: 935-942.

- Chen C, Ye S, Chen H, Nedzvedz O, Ablameyko S. Integral optical flow and its applications for dynamic object monitoring in video. J Appl Spectrosc 2017; 84: 120-128.

- Chen H, Ye S, Nedzvedz O, Ablameyko S. Application of integral optical flow for determining crowd movement from video images obtained using video surveillance systems. J Appl Spectrosc 2018; 85: 126-133.