Using the city’s surveillance cameras to create a visual sensor network to detect fires

Authors: Dheyab O.A., Chernikov D.Yu., Selivanov A.S.

Journal: Journal of Siberian Federal University. Engineering & Technologies @technologies-sfu

Section: Information and communication technologies

Issue: No. 2, Vol. 17, 2024.

Free access

Fire is one of the most destructive natural disasters, harming both the environment and human life. Fires jeopardize public safety and cause enormous material damage. The aim of this project is to develop an effective system for identifying fires in cities. City cameras are turned into a visual sensor network based on the YOLOv5 deep learning model for precise and efficient fire detection. The system scans camera footage to determine the presence and specific location of fires. WebRTC technology is used to send fire alarms, enabling direct and efficient communication between the system and observers. Combining YOLOv5 and WebRTC with a visual sensor network can increase the effectiveness of early fire detection and response operations. This study presents a system for early identification of fire incidents in cities at low cost by taking advantage of the existing surveillance camera infrastructure.

Visual sensor network, WebRTC, YOLOv5, surveillance camera, fire detection

Short URL: https://sciup.org/146282850

IDR: 146282850 | UDC: 621.397.7:614.842-047.25

Full text of the article: Using the city’s surveillance cameras to create a visual sensor network to detect fires

Citation: Dheyab O. A., Chernikov D. Yu., Selivanov A. S. Using the city’s surveillance cameras to create a visual sensor network to detect fires. J. Sib. Fed. Univ. Eng. & Technol., 2024, 17(2), 266–275. EDN: UBMXVW

With advances in technology, one of the most effective methods for spotting fires is the object detection algorithm YOLOv5. YOLOv5 (You Only Look Once, version 5) is a deep learning-based model capable of precisely identifying and locating a variety of objects in images and live video [5]. Training the YOLOv5 model for fire detection requires an extensive dataset of fire images. The dataset should cover a range of fire scenarios, including wildfires, indoor and outdoor fires, and varying fire intensities [6].
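To make the dataset requirement concrete, the sketch below converts a pixel-space bounding box into the plain-text label line that YOLO-style training tools commonly expect: a class index followed by the box center, width, and height, each normalized by the image size. The class index `0` for fire, the function name, and the sample numbers are assumptions made for illustration; they are not taken from the article's dataset.

```python
def to_yolo_label(class_id, box, image_size):
    """Convert a pixel box (x_min, y_min, x_max, y_max) to a YOLO label line."""
    x_min, y_min, x_max, y_max = box
    img_w, img_h = image_size
    # Normalized center coordinates and size, all in the range [0, 1].
    x_center = (x_min + x_max) / 2 / img_w
    y_center = (y_min + y_max) / 2 / img_h
    width = (x_max - x_min) / img_w
    height = (y_max - y_min) / img_h
    return f"{class_id} {x_center:.6f} {y_center:.6f} {width:.6f} {height:.6f}"


# Hypothetical example: a fire region in a 640x480 image, class 0 = fire.
label = to_yolo_label(0, (160, 120, 480, 360), (640, 480))
print(label)
```

Each annotated image would get one such line per fire or smoke region, so a varied dataset translates directly into many label files of this shape.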

The utilization of communication and networking technology to establish connections between visual sensor networks and deliver notifications in fire detection systems has fundamentally transformed our approach to fire protection [7]. Through the establishment of a network of interconnected optical sensors, data can be effectively gathered from several locations in real-time. These sensors, comprising cameras, thermal imaging devices, and other visual sensors, acquire visual data pertaining to fire events. The collected data is transferred to a central monitoring system or a designated control center using modern networking techniques. This enables the consolidation of monitoring and analysis of the visual data. If a fire occurs, the network has the ability to promptly identify the existence of smoke, flames, or any other indications related to fire.

A number of methods have been proposed for creating a fire detection system. The study in [8] investigates the use of unmanned aerial vehicles (UAVs) and wireless sensor networks for early fire detection. The work in [9] develops a fire detection system that uses UAVs and sensor network technologies to prevent fire occurrences. The suggested system uses sensors to monitor environmental parameters, and Internet of Things applications are used to process the data. It integrates cloud computing, UAVs, and wireless sensor technology. To increase the accuracy of fire incident recognition, the system also incorporates image processing algorithms. Sendra et al. present a LoRaWAN-based fire risk assessment system [10]. The system includes a LoRa node with several sensors that measure temperature, CO2, wind speed, humidity, and other parameters. The work in [11] presents FFireNet, a deep learning approach that leverages the pre-trained convolutional base of the MobileNetV2 model, with additional fully connected layers added to solve the problem of recognizing forest fires.

This research proposes to transform the cameras deployed in the city into a visual sensor network based on the YOLOv5 model for fire detection. The proposal increases the utility of city cameras, which are then no longer limited to surveillance and security. When a fire is detected, an immediate alert is sent to the fire control center. WebRTC technology is used as the communication channel between the monitoring point and the fire control center. It can send an image and the coordinates of the fire site or display a live broadcast of the fire site. This effort paves the way for DVR and NVR devices that can detect fires and send alerts themselves. City safety is improved at the lowest cost, because the city's existing infrastructure, such as cameras and networks, is used without the additional cost of installing traditional fire detection systems. With this method, fires can be responded to faster and the time needed to deal with them can be reduced. The efficiency of fire brigades can also be improved, as they can be better directed to fire sites.

II. DVR and NVR

The DVR (Digital Video Recorder) and NVR (Network Video Recorder) are both devices used for video surveillance and recording. A DVR is a device that records video footage from analog cameras [12]. It typically has multiple video inputs to connect analog cameras, and it compresses and stores the video data on a hard drive. DVRs are commonly used in older surveillance systems. An NVR, on the other hand, is a device that records video footage from IP (Internet Protocol) cameras [13]. IP cameras capture digital video and send it over a network to the NVR, which then stores the video data on a hard drive. NVRs are commonly used in modern surveillance systems. Both DVRs and NVRs allow users to view live video feeds and play back recorded footage. The main difference lies in the type of cameras they support and the way they handle video data.

III. Visual sensor network (VSN)

A visual sensor network (VSN) is a system consisting of numerous strategically placed visual sensors that capture and analyze visual data from the surrounding environment. These sensors encompass a variety of devices, such as cameras, thermal imaging devices, depth sensors, and other vision-based devices. Visual sensor networks have garnered considerable interest and are extensively employed in diverse applications owing to their capacity to offer abundant visual data for analysis and decision-making purposes. Visual sensor networks have a wide range of applications that extend across multiple areas. VSNs are employed in the domain of surveillance and security to oversee public spaces, structures, and vital infrastructure [14]. They provide immediate identification of irregularities, monitoring of objects or persons, and maintenance of public safety. Visual sensor networks can be utilized in the field of traffic management to efficiently capture and analyze traffic patterns, monitor congestion levels, and optimize transportation systems [15]. Visual sensor networks provide a potent method for capturing and interpreting visual data from their surroundings. Due to their capacity to incorporate several sensors and offer a holistic perspective of scenes or occurrences, VSNs have become invaluable instruments in diverse applications, spanning from surveillance and traffic control to environmental monitoring and healthcare. The future is predicted to bring more improvements to visual sensor networks through achievements in sensor technologies and data processing techniques, resulting in enhanced capabilities and expanded applications [16].

IV. Image processing using Python

Image processing is one significant field where Python is frequently used. Python's powerful libraries and packages, such as PIL (Python Imaging Library) and OpenCV (Open Source Computer Vision Library), allow developers to tackle a wide range of image-related tasks efficiently. Image processing includes object detection, image segmentation, image enhancement, image filtering, and other critical operations, and Python offers functions and methods for all of these tasks. For instance, the OpenCV library provides edge detection, resizing, color space conversion, and thresholding, which developers can use to adapt images to their needs. PIL is another popular choice for Python image processing tasks. Its capabilities include cropping, rotating, resizing, and applying filters to images, and it supports a range of image file types, such as JPEG, PNG, and BMP. Furthermore, because of its versatility and ease of use, Python is a good choice for beginners who want to learn more about image processing. Its extensive documentation and sizable developer community facilitate the understanding and use of image processing techniques. Python's strong libraries and intuitive syntax let developers tackle complex image processing tasks with ease [17].
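Two of the operations named above can be illustrated without any external library. The following minimal sketch applies a grayscale conversion and a binary threshold to a tiny image stored as nested lists; in practice, OpenCV's `cv2.cvtColor` and `cv2.threshold` would perform the same steps on real frames. The 2×2 sample image and the threshold of 128 are assumptions chosen for illustration.

```python
def to_grayscale(image):
    """Convert rows of (R, G, B) pixels to luminance values (ITU-R BT.601 weights)."""
    return [
        [round(0.299 * r + 0.587 * g + 0.114 * b) for (r, g, b) in row]
        for row in image
    ]


def threshold(gray, level):
    """Binary threshold: 255 where the pixel is brighter than level, else 0."""
    return [[255 if value > level else 0 for value in row] for row in gray]


# Hypothetical 2x2 RGB image: white, black, pure red, pure green.
image = [
    [(255, 255, 255), (0, 0, 0)],
    [(255, 0, 0), (0, 255, 0)],
]
gray = to_grayscale(image)
mask = threshold(gray, 128)
print(gray)
print(mask)
```

Writing the loops out by hand is far slower than a library call, but it shows what color space conversion and thresholding actually compute for each pixel.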

V. Algorithm

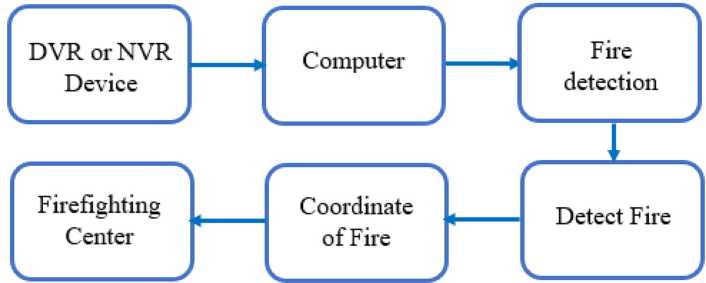

Fig. 1 shows the proposed algorithm for converting surveillance cameras into a visual sensor network for fire detection.

Fig. 1. Proposed algorithm for converting surveillance cameras into a visual sensor network for fire detection

Most surveillance screens typically display a set of video clips coming from cameras installed in monitored areas. Each video clip is labeled to indicate the camera transmitting it. Fig. 2 illustrates an example of a surveillance screen in a building.

Fig. 2. Example of a surveillance screen in a building

Initially, the coordinates of the areas monitored by each camera, as well as the viewing angle and distance, must be determined and stored on the computer running the fire detection software. A DVR or NVR device is connected to the computer to transmit the surveillance video. The computer hosting the fire detection software should have high specifications for video analysis and real-time fire detection. YOLOv5 was used for fire detection due to its speed, accuracy, and ease of use. The video can be analyzed in two ways. The first method feeds the video directly into the fire detection software. The second method captures images of the surveillance screen at specified time intervals, such as every 10 seconds, and analyzes them. The second method is useful when the computer specifications are not high.

Fig. 3. Communication network connecting the monitoring stations to the fire control center
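The second input method described above can be sketched as a simple sampling schedule. Assuming the surveillance feed has a known frame rate, the helper below lists which frame indices would be analyzed when one image is taken every 10 seconds. The function name, the 25 fps frame rate, and the 60-second duration are assumptions for illustration, not values from the experiments.

```python
def sampled_frame_indices(duration_s, fps, interval_s):
    """Return indices of frames taken every interval_s seconds, starting at t = 0."""
    # Frames between two samples; at least 1 so the range step is never zero.
    step = max(1, int(round(fps * interval_s)))
    total_frames = int(duration_s * fps)
    return list(range(0, total_frames, step))


# Hypothetical feed: 60 seconds at 25 fps, sampled every 10 seconds.
indices = sampled_frame_indices(duration_s=60, fps=25, interval_s=10)
print(indices)       # frame numbers handed to the detector
print(len(indices))  # images analyzed instead of all 1500 frames
```

Under these assumptions the detector sees 6 images per minute rather than 1500 frames, which is the trade-off that makes the approach usable on a modest computer.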

A communication network is created between the monitoring points and the fire control center using WebRTC technology. This network exchanges data and notifications between monitoring points and the fire control center, allowing rapid and effective intervention in fighting fires. Fig. 3 depicts a communication network between monitoring stations and the fire control center.

Alarms from fire monitoring stations will be transmitted to the fire control center via WebRTC technology. The image of the fire taken from the monitoring screen, together with the location coordinates, are sent right away to the fire control center so they can take the appropriate action when a fire breaks out inside the monitoring center's field of view. Using WebRTC technology, the firefighting center may also view a live broadcast of the fire location.
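A fire alert of the kind described above could be serialized as a small JSON message before it is handed to the WebRTC channel. The sketch below is one possible layout; the field names, the camera identifier, the coordinates, and the `build_alert` helper are all hypothetical, and the actual transport over WebRTC is outside its scope.

```python
import base64
import json
from dataclasses import dataclass, asdict


@dataclass
class FireAlert:
    camera_id: str      # label of the camera that saw the fire (assumed field)
    latitude: float     # stored coordinates of the monitored area
    longitude: float
    label: str          # detected class, e.g. "fire" or "smoke"
    confidence: float   # detector confidence in [0, 1]
    image_b64: str      # cropped detection image, base64-encoded


def build_alert(camera_id, latitude, longitude, label, confidence, image_bytes):
    """Pack a detection into a JSON string for the fire control center."""
    alert = FireAlert(
        camera_id=camera_id,
        latitude=latitude,
        longitude=longitude,
        label=label,
        confidence=confidence,
        image_b64=base64.b64encode(image_bytes).decode("ascii"),
    )
    return json.dumps(asdict(alert))


# Hypothetical detection; the bytes stand in for a cropped JPEG.
message = build_alert("cam-07", 56.0, 92.9, "fire", 0.91, b"\xff\xd8fake-jpeg")
decoded = json.loads(message)
print(decoded["camera_id"], decoded["label"])
```

Encoding the image as base64 keeps the whole alert in one text message, at the cost of roughly a third more bytes than the raw image.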

VI. Experimental results

To achieve the goals of this project, we used a range of applications and technologies, including YOLOv5 and Google Colaboratory. A web page written in JavaScript was used to simulate the monitoring platform. We repeated the suggested procedure three times to ensure the accuracy of our system evaluation. Additionally, we tested the trained model on input data that included a collection of photos and video clips with fire and smoke to confirm its efficacy.

Fig. 4. Image of the input video to the fire detection system

The first experiment simulated a live video input to the fire detection program. The video contains a group of nine video clips, one of which shows a fire and another smoke. Fig. 4 shows a frame of the input video.

The results of the experiment show that the fire and smoke areas in the video were identified. The image regions containing fire and smoke are cropped and sent, along with the coordinates of the location, to the fire control center. Figs. 5 and 6 illustrate the output of the fire detection program.
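When the analyzed frame is a surveillance screen made up of several camera views, each detection has to be traced back to the camera that produced it before the crop and coordinates are sent. The sketch below assumes the nine clips are arranged in a uniform 3×3 grid, numbered row by row, which the article does not state explicitly; the function names and sample numbers are likewise hypothetical.

```python
def tile_index(box, frame_size, rows=3, cols=3):
    """Return the 0-based grid cell (row-major) containing the center of the box."""
    x_min, y_min, x_max, y_max = box
    frame_w, frame_h = frame_size
    center_x = (x_min + x_max) / 2
    center_y = (y_min + y_max) / 2
    # Clamp so a box touching the right or bottom edge stays in the last cell.
    col = min(int(center_x * cols / frame_w), cols - 1)
    row = min(int(center_y * rows / frame_h), rows - 1)
    return row * cols + col


def crop(image, box):
    """Cut the box (x_min, y_min, x_max, y_max) out of an image stored as rows of pixels."""
    x_min, y_min, x_max, y_max = box
    return [row[x_min:x_max] for row in image[y_min:y_max]]


# Hypothetical 1920x1080 screen with a detection in the middle-right view.
cell = tile_index((1400, 500, 1600, 650), (1920, 1080))
print(cell)

# Cropping a tiny 4x4 test image keeps only the selected region.
small = [[(y, x) for x in range(4)] for y in range(4)]
patch = crop(small, (1, 1, 3, 3))
print(patch)
```

The cell index can then be used to look up the stored coordinates of the corresponding camera.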

Analyzing 10 seconds of video took 90 seconds, which is far too long for live monitoring. To reduce processing time, an image of the monitoring screen was captured every 10 seconds instead. The image was processed, and fire and smoke were successfully detected in it. Analyzing one image took 3 seconds, which is acceptable compared to the video processing time. The experiment was then repeated using Google Colab with a GPU, where fire and smoke were detected in videos and images in real time. Table 1 shows the processing times on the PC and on Google Colaboratory.
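The PC timings above can be expressed as a real-time factor, the ratio of processing time to the duration of footage covered. The short calculation below uses only the figures reported in this section; the function name is an assumption.

```python
def real_time_factor(processing_s, covered_s):
    """Processing time divided by covered footage time; above 1 falls behind live video."""
    return processing_s / covered_s


video_factor = real_time_factor(90, 10)  # full video analysis on the PC
image_factor = real_time_factor(3, 10)   # one screen image per 10-second interval
print(video_factor)
print(image_factor)
```

A factor of 9 means the PC falls further behind with every second of video, whereas a factor of 0.3 means each sampled image is finished well before the next one is captured.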

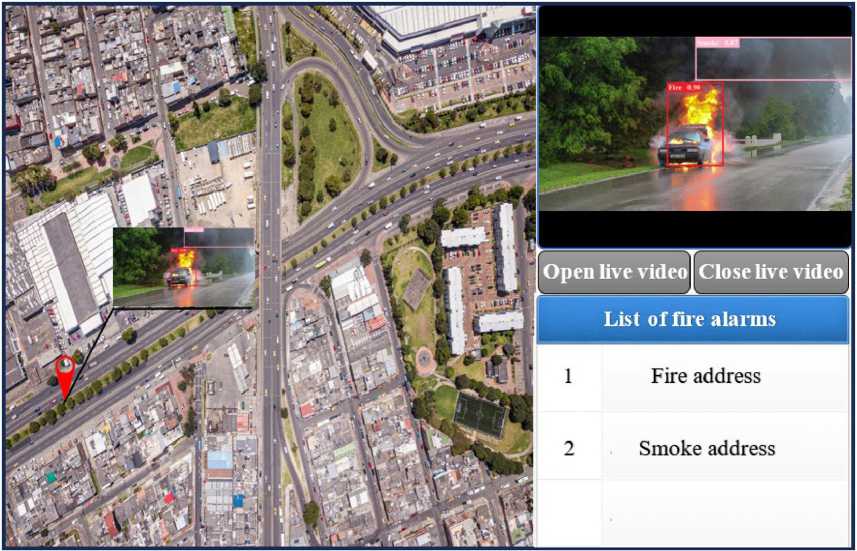

The YOLOv5 program was modified to send an alarm to the firefighting center when a fire occurs. The fire monitoring system was simulated with a web page based on WebRTC technology. On the monitoring page, observers can see the location of the fire on a map and watch a live video of the fire location. An example of the fire monitoring system is shown in Fig. 7.

VII. Conclusion

Using deep learning technology to transform the city's surveillance cameras into a visual sensor network is a practical and unique approach. This method allows videos to be automatically and correctly analyzed and significant information extracted. Furthermore, significant cost savings are realized because existing CCTV infrastructure is used rather than establishing a new sensor network.

Fig. 5. The output of the fire detection program

Fig. 6. Pictures that will be sent to the firefighting center

Table 1. The processing time using the PC and Google Colab

| Input Type | Processing Time Using the PC | Processing Time Using Google Colaboratory |
|---|---|---|
| Video | 90 seconds | Real time |
| Image | 3 seconds | Real time |

This method improves the city's ability to make use of monitoring data and increases citizen safety and convenience. The use of YOLOv5 in this context marks a significant step toward efficiently and effectively transforming security cameras into visual sensor networks.

Fig. 7. Fire monitoring system

References

- Tanase M. A., Aponte C., Mermoz S., Bouvet A., Le Toan T., Heurich M. Detection of windthrows and insect outbreaks by L‑band SAR: A case study in the Bavarian Forest National Park. Remote Sens. Environ. 2018, 209, 700–711

- Zhao Z.-Q., Zheng P., Xu S.-T. et al. Object detection with deep learning: A review, IEEE Transactions on Neural Networks and Learning Systems, 2019, 30(11), 3212–3232

- Liu L., Ouyang W., Wang X. et al. Deep learning for generic object detection: A survey, International Journal of Computer Vision, 2020, 128 (2), 261–318

- Pradhan B., Suliman M. D.H.B., Awang M. A.B. Forest fire susceptibility and risk mapping using remote sensing and geographical information systems (GIS). Disaster Prev. Manag. Int. J. 2007, 16.

- Luo W. Research on fire detection based on Yolov5, 2022 3rd International Conference on Big Data, Artificial Intelligence and Internet of Things Engineering (ICBAIE) [Preprint]. 2022 doi:10.1109/icbaie56435.2022.9985857.

- Toulouse T., Rossi L., Campana A., Celik T., Akhloufi M. Computer vision for wildfire research: An evolving image dataset for processing and analysis, Fire Safety Journal, 2017, 92, 188–194

- Dheyab O. A. and AL-Obaidi S. S. BER comparison of OFDM for different order types of PSK and QAM with and without using LDPC. Современные проблемы радиоэлектроники [Modern Problems of Radio Electronics]. 2022.

- Sungheetha A. and Sharma R. R. Real time monitoring and fire detection using Internet of Things and cloud based drones, Journal of Soft Computing Paradigm, 2020, 2(3), 168–174. doi:10.36548/jscp.2020.3.004.

- Sharma A., Singh P. K. & Kumar Y. An integrated fire detection system using IOT and image processing technique for Smart Cities. Sustainable Cities and Society, 2020, 61, 102332. https://doi.org/10.1016/j.scs.2020.102332

- Sendra S., García L., Lloret J., Bosch I., Vega-Rodríguez R. LoRaWAN network for fire monitoring in rural environments, Electronics, 2020, 9(3), 1–29

- Khan S. and Khan A. FFireNet: Deep Learning based forest fire classification and detection in smart cities, Symmetry, 2022, 14(10), 2155. doi:10.3390/sym14102155

- Chundi, Venkat et al. Intelligent Video Surveillance Systems. 2021 International Carnahan Conference on Security Technology (ICCST). IEEE, 2021

- Lee Jeonghun and Kwang-il Hwang. RAVIP: Real-Time AI Vision Platform for Heterogeneous Multi-Channel Video Stream. Journal of Information Processing Systems 2021, 17.2

- Wang Z., Wang F. Wireless Visual Sensor Networks: Applications, Challenges, and Recent Advances. In Proceedings of the 2019 SoutheastCon, Huntsville, AL, USA, 11–14 April 2019. 1–8

- Shah V. R., Maru S. V., Jhaveri R. H. An obstacle detection scheme for vehicles in an intelligent transportation system. Int. J. Comput. Netw. Inf. Secur. 2016, 8, 23–28

- Jesus Thiago C. et al. A survey on monitoring quality assessment for wireless visual sensor networks. Future Internet 14.7 (2022): 213

- Chityala, Ravishankar and Sridevi Pudipeddi. Image processing and acquisition using Python. CRC Press, 2020