Validation lamina for maintaining confidentiality within the Hadoop

Authors: Raghvendra Kumar, Dac-Nhuong Le, Jyotir Moy Chatterjee

Journal: International Journal of Information Engineering and Electronic Business (IJIEEB)

Issue: vol. 10, no. 2, 2018.


In this paper we examine the vulnerabilities of Hadoop, one of the most popular big data technology tools. The "elephant" technology is not a single bundled product but rather a by-product of the last five decades of technological evolution. Today's astronomical data looks like a potential gold mine, but, as in a gold mine, there is only a little gold and much more of everything else. Big Data is a trending technology but not a fancy one: it is needed for systems to exist and persist, so critical analysis of historic data becomes crucial for competing in the market. In a global landscape where data is becoming ever more important, illegal access attempts are inevitable and must be checked. Hadoop provides a data-local processing style in which computation moves towards the data rather than the data being moved towards the computation. Thus, the confidentiality of data should be monitored by authorities while it is shared within the organization or with third parties, so that it is not leaked by mistake by naïve employees who have access to it. We propose a technique that introduces a Validation Lamina into the Hadoop system, which reviews electronic signatures against an access control list of concerned authorities while confidential data is sent and received within the organization. If validation fails, the system urgently notifies the concerned authorities, and the request is automatically put on hold until they take the required privacy-governance action.

Keywords: Digital Signature, Electronic Signature, Data Local Processing, Hadoop, Big Data, Privacy Governance

Short URL: https://sciup.org/15016128

IDR: 15016128 | DOI: 10.5815/ijieeb.2018.02.06


Published Online March 2018 in MECS


I. Introduction

The term Big Data was originally coined by John R. Mashey in the late 1990s. As chief scientist at Silicon Graphics (SGI) in 1998, Mashey [1, 17, 21] presented the issues of growing stress on storage and networking infrastructure in organizations that were growing quickly with data (images, graphics, models) and with more difficult data (audio, video). Gartner later formally phrased the term Big Data in its research on the enterprise market. Gartner analyzed the databases of commercial organizations and found a large amount of enterprise-level data that is unstructured and has never been used for large-scale analysis; it termed this kind of data "Dark Data". The significance of extracting intelligence from this dark data was recognized at this stage, and making decisions from this intelligence is the heart of the Big Data application area.

For instance, while collecting data from unstructured data sources such as social media sites, we have to ensure that viruses or spam links are not buried in the content. If we introduce such data into our analytics system, we could be putting our company at risk. Maintaining a large number of keys can be impractical, and managing the storage, archiving, and access of those keys is difficult. The Hadoop platform runs on commodity hardware, which means security concerns arise at the component level. Frameworks such as MapReduce, YARN, and Spark harness distributed computing across clusters of machines and execute user-defined jobs across the nodes in the cluster. Thus, external components such as a SAN are not required: Hadoop treats its commodity hardware as a parallel array of disks without taking data out of the local system. The vulnerable attack surfaces are cluster nodes that may not have been reviewed and servers that are inadequately firewalled. These days companies have to put a lot of trust in the people running the infrastructure and monitoring access, and this limitation puts companies at risk of any undesired or mistaken activity.

For example, in the English county of Devon, a situation arose recently in which a primary school inadvertently sent an email to parents that leaked private data about 200 children, including their dates of birth, educational needs, and behavioral issues. Despite the sensitivity of the data, it was still shared [6, 16, 24]. This is where our idea of a Validation Lamina applies: if the confidential student data had been digitally signed by the concerned authority, the machine-generated emails carrying it would have been reviewed and automatically halted before being sent to all parents.

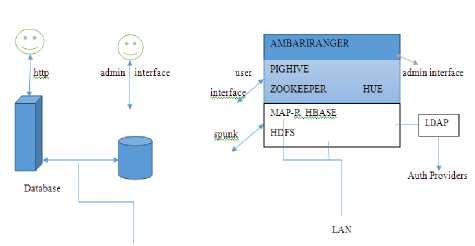

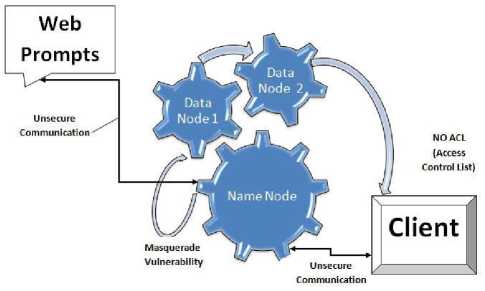

Fig.1. (Attack Points) Normal User System v/s Hadoop User System

A digital signature is a protocol that works like a handwritten signature: it provides a unique mark for a sender, enables others to identify the sender from that mark, and thereby confirms an agreement. We propose the concept of a Validation Lamina, in which such an agreement is enforced while data is shared in a Hadoop system. If the agreement is not met, sharing of the data is halted, since the data cannot be opened without the proper key.

Digital signatures have the following properties:

1. Non-repudiation: the sender of a signed message can be identified and cannot later deny having sent it.

2. Uniquely traceable source authenticity (the message comes from the expected source only).

3. Inseparability from the message.

4. Immutability after transmission.

5. Freshness: a signature is for recent, one-time use and should not allow duplicate usage.

Although digital signatures are traditionally used for signing digital documents, they can also be used in other cryptographic applications. Some use the terms cryptography and cryptology interchangeably in English, while others (including US military practice generally) use cryptography to refer specifically to the use and practice of cryptographic techniques and cryptology to refer to the combined study of cryptography and cryptanalysis [15, 16, 20, 24].

1. Authentication: analogous to signing a contract, but in the digital world.

2. Authorization: analogous to signing and identifying one's own signature in the digital world.

Proof of the authenticity of the data's issuer must be maintained within the Hadoop environment.

Data Access: Dark data or computed data accessed by users has almost the same level of technical configuration in a Hadoop environment as in non-Hadoop implementations. However, such access must be available only when authentic business users require it for inspection or communication. Traditional data storage frameworks offer a variety of security provisions, and if they are augmented with a federated identity capability, we can design a security system that provides genuine data access across the several layers of the Hadoop framework.

Application Access: Application Programming Interfaces (APIs) are generally designed to control data exchange between communicating bodies in a secure fashion, which offers an adequate level of security for a Hadoop implementation. In Big Data analytics on the cloud, the Software-as-a-Service model is employed to provide common big-data-related services, such as access to service-generated Big Data and data analytics results, directly to users in order to increase efficiency and reduce cost [8, 23].

Data Encryption: In traditional systems, exchanging Big Data exhausts the system's resources, and securing the data by encryption is very challenging because it consumes costly computing cycles. Encrypting only the data that needs it is a wiser approach, and modularizing the encrypting elements at various hierarchical levels in Hadoop further simplifies the problem. By a common estimate, storing data for a year costs around $37,000 in a traditional system, $5,000 on a database appliance, and only $2,000 on a Hadoop cluster.

This neither relied on cryptography nor possessed computer-readable characters.

There are several schemes of cryptographic algorithms. Based on the number of keys employed for encryption and decryption, and on their applications, we list the following schemes:

• Secret Key Cryptography (SKC): uses a single key for both encryption and decryption.

• Public Key Cryptography (PKC): uses one key for encryption and another for decryption.

• Hash Functions: use a mathematical transformation to irreversibly "encrypt" information.

Secret Key Cryptography (SKC): With a secret or symmetric key algorithm, the key is a shared secret between two communicating parties, and encryption and decryption both use the same key. The Data Encryption Standard (DES) and the Advanced Encryption Standard (AES) are examples of symmetric key algorithms. Symmetric-key cryptography refers to encryption methods in which both the sender and receiver share the same key (or, less commonly, in which their keys are different but related in an easily computable way). This was the only kind of encryption publicly known until June 1976 [10]. There are two types of secret key algorithms:

• Block ciphers: In a block cipher, the encryption code works on a fixed-size block of data, encrypting one block of plaintext at a time; decryption likewise takes one block of ciphertext at a time. If the length of the data is not on a block-size boundary, it must be padded.

• Stream ciphers: Stream ciphers do not work on a block basis but convert 1 bit (or 1 byte) of data at a time; both encryption and decryption proceed one byte at a time.
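To make the padding requirement of block ciphers and the byte-at-a-time behavior of stream ciphers concrete, the following Python sketch shows PKCS#7-style padding (a common padding scheme, assumed here since the text does not name one) and a toy stream cipher that XORs data with a keystream. The keystream construction (`toy_keystream`, SHA-256 over key, nonce, and counter) is a hypothetical illustration, not DES, AES, or any vetted stream cipher, and should not be used to protect real data.

```python
import hashlib

BLOCK_SIZE = 16  # bytes; the AES block size, used here only as an example


def pkcs7_pad(data: bytes, block_size: int = BLOCK_SIZE) -> bytes:
    """Pad data up to a multiple of block_size; always adds 1..block_size bytes."""
    pad_len = block_size - (len(data) % block_size)
    return data + bytes([pad_len]) * pad_len


def pkcs7_unpad(padded: bytes) -> bytes:
    """Remove PKCS#7 padding, rejecting malformed input."""
    if not padded:
        raise ValueError("empty input")
    pad_len = padded[-1]
    if pad_len < 1 or pad_len > len(padded) or padded[-pad_len:] != bytes([pad_len]) * pad_len:
        raise ValueError("invalid padding")
    return padded[:-pad_len]


def toy_keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    """Hypothetical toy keystream: SHA-256(key || nonce || counter) blocks (illustration only)."""
    out = bytearray()
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + nonce + counter.to_bytes(8, "big")).digest()
        counter += 1
    return bytes(out[:length])


def toy_stream_xor(key: bytes, nonce: bytes, data: bytes) -> bytes:
    """Encrypt or decrypt byte by byte: XOR with the keystream (the same call inverts itself)."""
    ks = toy_keystream(key, nonce, len(data))
    return bytes(b ^ k for b, k in zip(data, ks))


record = b"student-record-42"                 # 17 bytes, not on a 16-byte boundary
padded = pkcs7_pad(record)
print(len(padded))                            # 32
ciphertext = toy_stream_xor(b"k" * 32, b"nonce-01", record)
print(toy_stream_xor(b"k" * 32, b"nonce-01", ciphertext) == record)  # True
```

Because XOR is its own inverse, the same function serves for encryption and decryption, which is why a stream cipher needs no padding while a block cipher does.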

Public (Asymmetric) Key Cryptography: Public key cryptography provides the same services as symmetric key cryptography in general, but it uses different keys for encryption and decryption. A key pair in a public key cryptography scheme consists of a private key and a public key. These key pairs are generated by a process that ensures the keys are uniquely paired with one another and that the private key cannot feasibly be determined from the public key (Hale and Friedrichs, 2000). Public key cryptography was conceived in 1976 by Diffie and Hellman [12], and in 1977 Rivest, Shamir, and Adleman designed the RSA cryptosystem [13].

Public-key cryptography is a cryptographic technique that enables users to communicate securely over an insecure public network and to reliably verify the identity of a user via digital signatures [14]. It is an asymmetric scheme that uses a pair of keys: a public key, which encrypts data, and a corresponding private (secret) key for decryption. Each user is given a key pair; the public key is published to the world while the private key is kept secret. Anyone with a copy of the public key can encrypt information, but only the person holding the corresponding private key can decrypt and read it, and it is computationally infeasible to deduce the private key from the public key.
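As an illustration of one key encrypting and the other decrypting, here is textbook RSA [13] with the tiny primes commonly used in teaching examples. This is a sketch only: real deployments use moduli of 2048 bits or more and a padding scheme such as OAEP, both omitted here.

```python
from math import gcd

# Textbook RSA with the classic tiny primes p = 61, q = 53 (illustration only).
p, q = 61, 53
n = p * q                  # modulus shared by both keys: 3233
phi = (p - 1) * (q - 1)    # Euler's totient: 3120
e = 17                     # public exponent, must be coprime with phi
assert gcd(e, phi) == 1
d = pow(e, -1, phi)        # private exponent via modular inverse (Python 3.8+): 2753


def encrypt(m: int, n: int, e: int) -> int:
    """Anyone holding the public key (n, e) can encrypt."""
    return pow(m, e, n)


def decrypt(c: int, n: int, d: int) -> int:
    """Only the holder of the private exponent d can decrypt."""
    return pow(c, d, n)


c = encrypt(65, n, e)
print(c)                   # 2790
print(decrypt(c, n, d))    # 65
```

Publishing `(n, e)` reveals nothing useful about `d` only because factoring `n` is hard; with primes this small, factoring is trivial, which is exactly why the example is insecure.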

Hash Function: A one-way hash function takes a variable-length input (say, a message of any length) and produces a fixed-length output (say, 160 bits). If the information is changed in any way, even by just one bit, an entirely different output value is produced. This fixed-length output string is called a digest. For all practical purposes, the following statements are true of a good hash function:

• Collision resistant: If any portion of the data is modified, a different hash will be generated.

• One-way: The function is irreversible. That is, given a digest, it is computationally infeasible to find data that produces it.

These properties make hash operations useful for authentication purposes. For example, you can keep a copy of a digest for the purpose of comparing it with a newly generated digest at a later date. If the digests are identical, the data has not been altered.
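The digest-comparison idea can be sketched in a few lines of Python. The sketch assumes SHA-256 from the standard `hashlib` module (the text does not fix an algorithm), and the record contents are hypothetical.

```python
import hashlib
import hmac


def digest(data: bytes) -> str:
    """Fixed-length SHA-256 digest (64 hex characters = 256 bits) of any input."""
    return hashlib.sha256(data).hexdigest()


def unchanged(data: bytes, stored_digest: str) -> bool:
    """Recompute the digest and compare it with the copy kept earlier (constant-time compare)."""
    return hmac.compare_digest(digest(data), stored_digest)


record = b"class 5B: 28 pupils, 3 with special educational needs"
stored = digest(record)                 # keep this copy for later comparison

print(unchanged(record, stored))                       # True: data not altered
print(unchanged(record.replace(b"3", b"4"), stored))   # False: one character changed
print(len(digest(b"x" * 10_000)) * 4)                  # 256: length independent of input size
```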

MD5: MD5 is a message digest algorithm developed by Ron Rivest. It has its roots in a series of earlier message digest algorithms, all developed by Rivest; the original one was called MD. MD5 is quite fast and produces 128-bit message digests. Over the years, researchers have found weaknesses in MD5, and practical collision attacks were demonstrated in 2004, so MD5 is no longer considered collision resistant. After some initial processing, the input text is processed in 512-bit blocks (each further divided into sixteen 32-bit sub-blocks). The output of the algorithm is a set of four 32-bit blocks, which make up the 128-bit message digest.

SHA-1: SHA stands for Secure Hash Algorithm. SHA-1 is a cryptographic hashing algorithm stronger than MD5. It is used to provide data integrity (a guarantee that data has not been altered in transit) and authentication (a guarantee that data came from the source it was supposed to come from), and it was produced for use with the Digital Signature Standard. SHA-1 is unkeyed: its 160-bit value is the output digest, not an encryption key. Although SHA-1 was long regarded as strong, a practical collision was publicly demonstrated in 2017, and it is now deprecated for digital signatures. SHA-1 accepts any input message shorter than 2^64 bits. Input text is processed in 512-bit blocks, and the output is a 160-bit message digest (32 bits longer than the digest produced by MD5).
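The digest and block sizes quoted for MD5 and SHA-1 can be checked with Python's `hashlib` on a standard CPython build. The helper `digest_params` is a hypothetical name introduced for this sketch; given the collision results above, neither algorithm should be chosen for new signature designs.

```python
import hashlib


def digest_params(name):
    """Return (digest size in bits, internal block size in bits) for a hashlib algorithm."""
    h = hashlib.new(name)
    return h.digest_size * 8, h.block_size * 8


for algo in ("md5", "sha1"):
    bits, block = digest_params(algo)
    print(f"{algo}: {bits}-bit digest, {block}-bit input blocks")
# md5: 128-bit digest, 512-bit input blocks
# sha1: 160-bit digest, 512-bit input blocks

print(digest_params("sha1")[0] - digest_params("md5")[0])  # 32
```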

Table 1. Description of different classes

| Class of DSC | Description |
|---|---|
| Class 1 | These certificates shall be issued to individuals/private subscribers. |
| Class 2 | These certificates will be issued for use by both business personnel and private individuals. |
| Class 3 | These certificates will be issued to individuals as well as organizations. |

A standard called X.509 defines the structure of a digital certificate. The latest version of the standard is Version 3, called X.509v3, which combines the fields of versions 1 and 2 and adds many extensions to the certificate structure (such as key usage, certificate policies, policy mappings, authority key identifier, and subject key identifier). We require an electronic signature scheme that can protect the variety of Big Data and establish a shared key over an insecure channel, so that only an authority holding the signature key can send the data successfully, while anyone who merely has access to the data on a local machine cannot. The validation will be provided by a Validation Lamina that maintains an Access Control List (ACL). Before signing, the data is reduced by a hashing algorithm to a fixed-length number called a message digest [16, 17].
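Establishing a shared key over an insecure channel is classically done with Diffie-Hellman key agreement [12]. The following is a minimal sketch assuming toy parameters (the Mersenne prime 2^127 - 1 and base 3, chosen only for readability, not a vetted group). It is unauthenticated, so in a scheme like the one described here each exchanged public value would additionally need to be signed and bound to an X.509 certificate.

```python
import secrets

# Toy public parameters (assumptions, NOT a vetted group): a Mersenne prime and a small base.
P = 2**127 - 1
G = 3


def keypair():
    """Private exponent in [2, P-2] and the matching public value G^x mod P."""
    private = secrets.randbelow(P - 3) + 2
    return private, pow(G, private, P)


def shared_secret(my_private, their_public):
    """Both sides compute G^(ab) mod P from their own private value and the peer's public value."""
    return pow(their_public, my_private, P)


owner_priv, owner_pub = keypair()          # e.g. the data owner
receiver_priv, receiver_pub = keypair()    # e.g. an authorized receiver
# Only owner_pub and receiver_pub travel over the insecure channel.
k1 = shared_secret(owner_priv, receiver_pub)
k2 = shared_secret(receiver_priv, owner_pub)
print(k1 == k2)   # True: both sides derive the same secret
```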

Fig.2. Hadoop’s Vulnerabilities


III. Proposed Scheme of Validation Lamina

Although most companies may run an extensive background check on all of their employees, they still have to trust that no malicious insiders work in business units outside IT. They also have to assume that their cloud provider has diligently checked its employees. This concern is real because close to 50 percent of security breaches are caused by insiders (or by people getting help from insiders). Validation of documents will therefore enable the tracking of any undesired activity related to the data being shared. Most core data storage platforms have rigorous security schemes that can be augmented with a federated identity capability through electronic signatures, providing appropriate access across the many layers of the architecture. In traditional environments, cryptography can exhaust the system's resources completely, and with the volume, velocity, and variety associated with big data, this problem is magnified. Mathematically, the Digital Signature Algorithm (DSA) is based on the discrete logarithm problem.
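To illustrate how DSA rests on modular exponentiation in a prime-order subgroup (whose security depends on the discrete logarithm problem), here is a toy version of the DSA sign and verify equations in Python. The domain parameters (p = 1019, q = 509, g = 4) are assumptions chosen so the arithmetic is easy to check; they offer no security, and with q this small a forged message would pass verification with probability about 1/509. The digest is also reduced modulo q for simplicity, whereas the DSA standard (FIPS 186) uses the leftmost bits of the hash.

```python
import hashlib
import secrets

# Toy DSA domain parameters: Q divides P - 1 and G has order Q modulo P.
P = 1019                        # prime, P - 1 = 2 * 509
Q = 509                         # prime divisor of P - 1
G = pow(2, (P - 1) // Q, P)     # = 4, an element of order Q


def h(message: bytes) -> int:
    """SHA-256 digest reduced modulo Q (a simplification of the standard)."""
    return int.from_bytes(hashlib.sha256(message).digest(), "big") % Q


def keygen():
    x = secrets.randbelow(Q - 1) + 1      # private key in [1, Q-1]
    return x, pow(G, x, P)                # (private x, public y = g^x mod p)


def sign(message: bytes, x: int):
    while True:
        k = secrets.randbelow(Q - 1) + 1  # fresh secret per signature; never reuse k
        r = pow(G, k, P) % Q
        if r == 0:
            continue
        s = (pow(k, -1, Q) * (h(message) + x * r)) % Q
        if s != 0:
            return r, s


def verify(message: bytes, sig, y: int) -> bool:
    r, s = sig
    if not (0 < r < Q and 0 < s < Q):
        return False
    w = pow(s, -1, Q)
    u1 = (h(message) * w) % Q
    u2 = (r * w) % Q
    # g^u1 * y^u2 = g^(w(H + x r)) = g^k (mod p), so v equals r for a valid signature
    v = (pow(G, u1, P) * pow(y, u2, P)) % P % Q
    return v == r


x, y = keygen()
signature = sign(b"quarterly report", x)
print(verify(b"quarterly report", signature, y))   # True
```

Signing needs one modular exponentiation plus cheap arithmetic modulo q, while verification needs two exponentiations, which is the asymmetry behind the DSA-versus-RSA trade-off discussed below.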

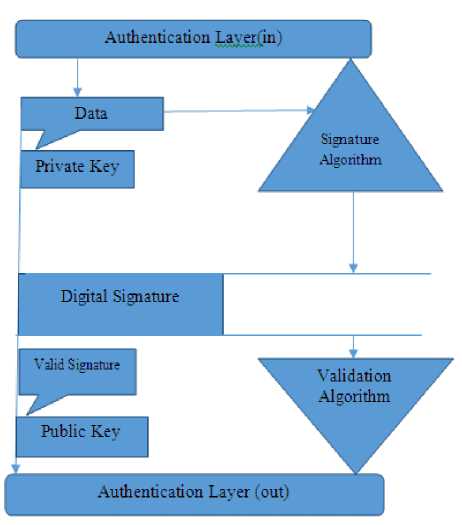

Fig.3. Architecture

The validation of a digital certificate consists of the following steps:

i. The user passes all fields of the received digital certificate, except the last one, to a message digest algorithm. This algorithm must be the same as the one used by the CA while signing the certificate.

ii. The message digest algorithm calculates a message digest (hash) of all fields of the certificate except the last one; call it MD1.

iii. The user now extracts the CA's digital signature from the certificate (it is the last field in the certificate).

iv. The user de-signs the CA's signature (i.e., the user decrypts the signature with the CA's public key).

v. This produces another message digest, which we call MD2. MD2 is the same message digest that was calculated by the CA while signing the certificate.

vi. The user compares MD1 with MD2, the result of de-signing the CA's signature. If the two match (MD1 = MD2), the user is convinced that the digital certificate was indeed signed by the CA with its private key. If the comparison fails, the user will not trust the certificate and rejects it.
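The MD1/MD2 comparison can be sketched in Python with a toy RSA key standing in for the CA. The certificate is modeled as a hypothetical list of string fields whose last entry is the signature; the key is built from two known Mersenne primes purely for illustration, and the digest is SHA-256 reduced modulo the key's modulus. Real certificates are DER-encoded X.509 structures validated by a library, not by code like this.

```python
import hashlib

# Hypothetical CA key: toy RSA from two known Mersenne primes (illustration only).
P, Q = 2**89 - 1, 2**107 - 1
N, E = P * Q, 65537
D = pow(E, -1, (P - 1) * (Q - 1))   # CA private exponent, kept secret by the CA


def md(fields, n):
    """Message digest over every certificate field except the signature."""
    data = "|".join(fields).encode()
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % n


def ca_sign(fields):
    """The CA signs the digest with its private key and appends it as the last field."""
    return fields + [str(pow(md(fields, N), D, N))]


def user_validate(certificate, ca_public=(N, E)):
    n, e = ca_public
    fields, signature = certificate[:-1], int(certificate[-1])
    md1 = md(fields, n)            # user's own digest of all fields but the last
    md2 = pow(signature, e, n)     # "de-sign" the CA signature with the CA public key
    return md1 == md2              # trust the certificate only if MD1 == MD2


cert = ca_sign(["CN=datanode-01", "O=Example Org", "valid-until=2019-03-01"])
print(user_validate(cert))                            # True
print(user_validate(["CN=rogue-node"] + cert[1:]))    # False: a field was altered
```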

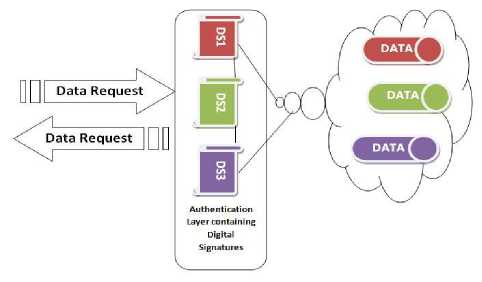

Although RSA is faster than DSA at signature verification, the Hadoop environment gives us the advantage of data-local processing [7]. What we mainly require is fast key and signature generation, as data arrives at enormous velocity from a variety of sources in a Big Data system. This situation favors DSA over RSA, since signature generation is faster in DSA. Compared with the data coming into a Big Data system, outgoing data will always be small, because astronomical volumes of data are analyzed down to report-level scale. Thus, even if signature validation is slower in DSA than in RSA, the overall system will perform faster. As a priority, we need to make sure that our provider has the right controls in place to ensure that the integrity of the data is maintained, so any modification must be accompanied by a signature from a legitimate authority. When Hadoop services communicate with each other, they do not verify that the other services are what they claim to be. A rogue service can therefore easily be started, and a rogue TaskTracker can gain access to data blocks. Hadoop also uses web protocols to provide interoperability among its nodes. Thus, we need to represent digital-signature-related information in a standard format called XML Signature.

The data elements that XML Signature represents in an interoperable manner are:

1. The data to be digitally signed

2. The hash algorithm (MD5/SHA-1) that will create the digest value

3. The signature algorithm

4. The semantics of the certificate or key
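For orientation, the following Python sketch assembles the skeleton of an XML Signature element with the standard library, mapping each data element above to the W3C XML-DSig elements (`Reference`, `DigestMethod`/`DigestValue`, `SignatureMethod`, `KeyInfo`). It is a structural sketch only: it does not canonicalize `SignedInfo` or compute `SignatureValue` (the caller passes a placeholder in), and the URI, key name, and choice of SHA-1 with DSA-SHA1 are assumptions. A real deployment would use an XML-DSig library.

```python
import base64
import hashlib
import xml.etree.ElementTree as ET

DS = "http://www.w3.org/2000/09/xmldsig#"   # XML-DSig namespace
ET.register_namespace("ds", DS)


def el(parent, tag, **attrs):
    """Add a namespaced ds:<tag> child element with the given attributes."""
    return ET.SubElement(parent, f"{{{DS}}}{tag}", attrs)


def xml_signature_skeleton(data, uri, signature_value_b64, key_name):
    sig = ET.Element(f"{{{DS}}}Signature")
    signed_info = el(sig, "SignedInfo")
    el(signed_info, "CanonicalizationMethod",
       Algorithm="http://www.w3.org/TR/2001/REC-xml-c14n-20010315")
    # Signature algorithm
    el(signed_info, "SignatureMethod", Algorithm=DS + "dsa-sha1")
    # Data to be signed, referenced by URI
    ref = el(signed_info, "Reference", URI=uri)
    # Hash algorithm and the digest value it produces over the referenced data
    el(ref, "DigestMethod", Algorithm=DS + "sha1")
    el(ref, "DigestValue").text = base64.b64encode(hashlib.sha1(data).digest()).decode()
    # Placeholder: a real SignatureValue is computed over the canonicalized SignedInfo
    el(sig, "SignatureValue").text = signature_value_b64
    # Semantics of the certificate or key
    key_info = el(sig, "KeyInfo")
    el(key_info, "KeyName").text = key_name
    return ET.tostring(sig, encoding="unicode")


print(xml_signature_skeleton(b"quarterly report", "#report-q1", "AAAA", "finance-head"))
```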

The logs containing the list of access sessions (through sharing or forwarding) of confidential data with digital signatures can be "sealed" so that any change is easily detected. This seal can be provided by computing a cryptographic function called a hash, checksum, or message digest. The hash depends on all bits of the file being sealed, so altering one bit alters the checksum. Each time the data is accessed or used, the hash function recomputes the checksum, and as long as the computed checksum matches the stored value, the Validation Lamina knows that the data log has not been changed. Because the hash and checksum confirm the immutability of the data, a signature will be required each time the data is accessed by the concerned authority. A KeyStore is to be applied at the Validation Lamina: a highly secured repository of keys and trusted certificates used for validation, encryption, and similar tasks. A key entry will contain the owner's identity and private key, while a trusted certificate entry will contain a public key along with the entity's identity. For better management and security, we can use two KeyStores, one containing the key entries and the other containing trusted certificate entries (including Certificate Authorities' certificates). Access to the KeyStore holding private keys can then be restricted separately.
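A minimal sketch of such a sealed access log, assuming SHA-256 and a hash chain (each seal hashes the previous seal together with the next entry, so both edits and reordering are detected). The log format and the `seal`/`log_unchanged` names are hypothetical. The stored seal must itself be protected, for example signed or kept behind the restricted KeyStore, since anyone able to rewrite both the log and the seal could recompute a matching value.

```python
import hashlib
import hmac

GENESIS = "0" * 64   # starting value for the chain (an assumption of this sketch)


def seal(entries):
    """Chained SHA-256 seal: each step hashes the previous seal with the next entry."""
    value = GENESIS
    for entry in entries:
        value = hashlib.sha256((value + "\n" + entry).encode()).hexdigest()
    return value


def log_unchanged(entries, stored_seal):
    """Recompute the seal on every access and compare it with the stored value."""
    return hmac.compare_digest(seal(entries), stored_seal)


access_log = [
    "2018-03-01T09:00 alice shared payroll.csv with bob (signed)",
    "2018-03-01T09:05 bob forwarded payroll.csv to carol (signed)",
]
stored = seal(access_log)
print(log_unchanged(access_log, stored))    # True

tampered = list(access_log)
tampered[1] = "2018-03-01T09:05 bob forwarded payroll.csv to mallory (signed)"
print(log_unchanged(tampered, stored))      # False: one entry was altered
```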

The TrustStore will contain certificates from a given list of expected authorities to communicate with, or from Certificate Authorities that it trusts, so that other parties can be identified with the proper protocol. JKS (Java KeyStore) is the most commonly used format in the Java world and is therefore well suited as a TrustStore in Hadoop. JKS does not require each entry to be a private key entry, so it can hold certificates from trusted parties for which no private keys are needed.
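Putting the pieces together, the following Python sketch shows one possible decision path for the Validation Lamina: check the sender against the ACL and TrustStore, verify the signature over the data, and otherwise halt the request and notify the authorities. Everything here is an assumption for illustration: the stores are plain dictionaries rather than JKS files, the signature is toy RSA over a SHA-256 digest, the dataset and authority names are hypothetical, and `notify` merely prints where a real system would alert the concerned authorities.

```python
import hashlib

# Hypothetical toy RSA key for one authority (two Mersenne primes; illustration only).
P, Q = 2**89 - 1, 2**107 - 1
N, E = P * Q, 65537
D = pow(E, -1, (P - 1) * (Q - 1))

KEY_STORE = {"hr-head": (N, D)}          # private key entries (access-restricted)
TRUST_STORE = {"hr-head": (N, E)}        # trusted public keys of expected authorities
ACL = {"students.csv": {"hr-head"}}      # who may release which confidential dataset


def digest(data: bytes, n: int) -> int:
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % n


def sign(signer: str, data: bytes) -> int:
    n, d = KEY_STORE[signer]
    return pow(digest(data, n), d, n)


def notify(authorities, reason):
    """Placeholder for urgently alerting the concerned authorities."""
    print("ALERT to", sorted(authorities), ":", reason)


def validation_lamina(dataset, data, signer, signature):
    """Return 'release', or 'halt' (request held until the authorities act)."""
    allowed = ACL.get(dataset, set())
    if signer not in allowed or signer not in TRUST_STORE:
        notify(allowed, f"{signer!r} is not authorized for {dataset}")
        return "halt"
    n, e = TRUST_STORE[signer]
    if signature is None or pow(signature, e, n) != digest(data, n):
        notify(allowed, f"signature check failed for {dataset}")
        return "halt"
    return "release"


payload = b"id,name,date_of_birth\n17,A. Pupil,2010-05-04\n"   # hypothetical record
print(validation_lamina("students.csv", payload, "hr-head", sign("hr-head", payload)))  # release
print(validation_lamina("students.csv", payload, "teacher-7", None))                   # halt
```

Keeping the private-key dictionary separate from the trust dictionary mirrors the two-store split described above: the lamina only ever reads the TrustStore, so its code path never needs access to private keys.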

Fig.4. Validation Lamina


IV. Conclusion

References

[1] J. R. Mashey, "Big Data and the Next Wave of Infra Stress," SGI, 1998.

[2] T. H. Davenport, Big Data in Big Companies, Statistical Analysis System (SAS) Institute, 2013.

[3] S. Razick, R. Mocnik, L. F. Thomas, E. Ryeng, F. Drabløs, and P. Sætrom, "The eGenVar data management system: Cataloguing and sharing sensitive data and metadata for the life sciences," Database, vol. 2014, p. bau027, 2014.

[4] D. Agrawal, A. El Abbadi, V. Arora, C. Budak, T. Georgiou, H. A. Mahmoud, F. Nawab, C. Sahin, and S. Wang, "A Perspective on the Challenges of Big Data Management and Privacy Concerns," IEEE, 2015.

[5] B. Swanson and G. Gilder, "Estimating the Exaflood," Tech. rep., Discovery Institute, Seattle, Washington, 2008.

[6] issuu.com, Big Data Innovation, Issue 12, Innovation Enterprise.

[7] B. Lakhe, Practical Hadoop Security, Apress, 2014, pp. 151-154.

[8] Z. Zheng, J. Zhu, and M. R. Lyu, "Service-generated Big Data and Big Data as a Service," IEEE International Congress on Big Data, 2013.

[9] J. Dean and S. Ghemawat, "MapReduce: Simplified Data Processing on Large Clusters," Google Inc., pp. 1-13, 2004.

[10] H. Zhu and D. Li, "Research on Digital Signature in Electronic Commerce," The 2008 IAENG International Conference on Internet Computing and Web Services, Hong Kong, 2008, pp. 807-809.

[11] "LPKI: A Lightweight Public Key Infrastructure for the Mobile Environments," Proceedings of the 11th IEEE International Conference on Communication Systems (IEEE ICCS'08), Guangzhou, China, Nov. 2008, pp. 162-166.

[12] W. Diffie and M. E. Hellman, "New Directions in Cryptography," IEEE Transactions on Information Theory, 22 (1976), pp. 644-654.

[13] R. Rivest, A. Shamir, and L. Adleman, "A Method for Obtaining Digital Signatures and Public-Key Cryptosystems," Communications of the ACM, 21 (1978), pp. 120-126.

[14] C. Adams and S. Lloyd, Understanding PKI: Concepts, Standards, and Deployment Considerations, Addison-Wesley Professional, 2003, pp. 11-15, ISBN 978-0-672-32391-1.

[15] Merriam-Webster's Collegiate Dictionary (11th ed.), Merriam-Webster. Retrieved 2008-02-01.

[16] W. Lawrence and S. Sankaranarayanan, "Application of Biometric security in agent based hotel booking system-android environment," International Journal of Information Engineering and Electronic Business, 4(3), 64, 2012.

[17] R. K. Kaur and K. Kaur, "A New Technique for Detection and Prevention of Passive Attacks in Web Usage Mining," International Journal of Wireless and Microwave Technologies (IJWMT), 5(6), 53, 2015.

[18] M. Lasota, S. Deniziak, and A. Chrobot, "An SDDS-based architecture for a real-time data store," International Journal of Information Engineering and Electronic Business, 8(1), 21, 2016.

[19] H. R. Nagesh and S. Prabhu, "High Performance Computation of Big Data: Performance Optimization Approach towards a Parallel Frequent Item Set Mining Algorithm for Transaction Data based on Hadoop MapReduce Framework," International Journal of Intelligent Systems and Applications, 9(1), 75, 2017.

[20] P. Kaur and A. A. Monga, "Managing Big Data: A Step towards Huge Data Security," International Journal of Wireless and Microwave Technologies (IJWMT), 6(2), 10, 2016.

[21] W. J. Maxwell, "Global Privacy Governance: A comparison of regulatory models in the US and Europe, and the emergence of accountability as a global norm," Cahier de prospective, 63, 2014.

[22] D. Greene and K. Shilton, "Platform privacies: Governance, collaboration, and the different meanings of 'privacy' in iOS and Android development," New Media & Society, 1461444817702397, 2017.

[23] H. J. Bhatti and B. B. Rad, "Databases in Cloud Computing: A Literature Review," 2017.

[24] R. M. Alguliyev, R. T. Gasimova, and R. N. Abbasli, "The Obstacles in Big Data Process," International Journal of Modern Education and Computer Science, 9(3), 28, 2017.